LiDAR-Based GNSS Denied Localization for Autonomous Racing Cars

Abstract

1. Introduction

- When taking a tight turn or passing through a narrow corridor, the optimal trajectory runs close to the inside edge of the turn or, more generally, to the track border;

- The optimal speed profile is at the limit of friction, so even small localization errors can lead to divergent behavior.

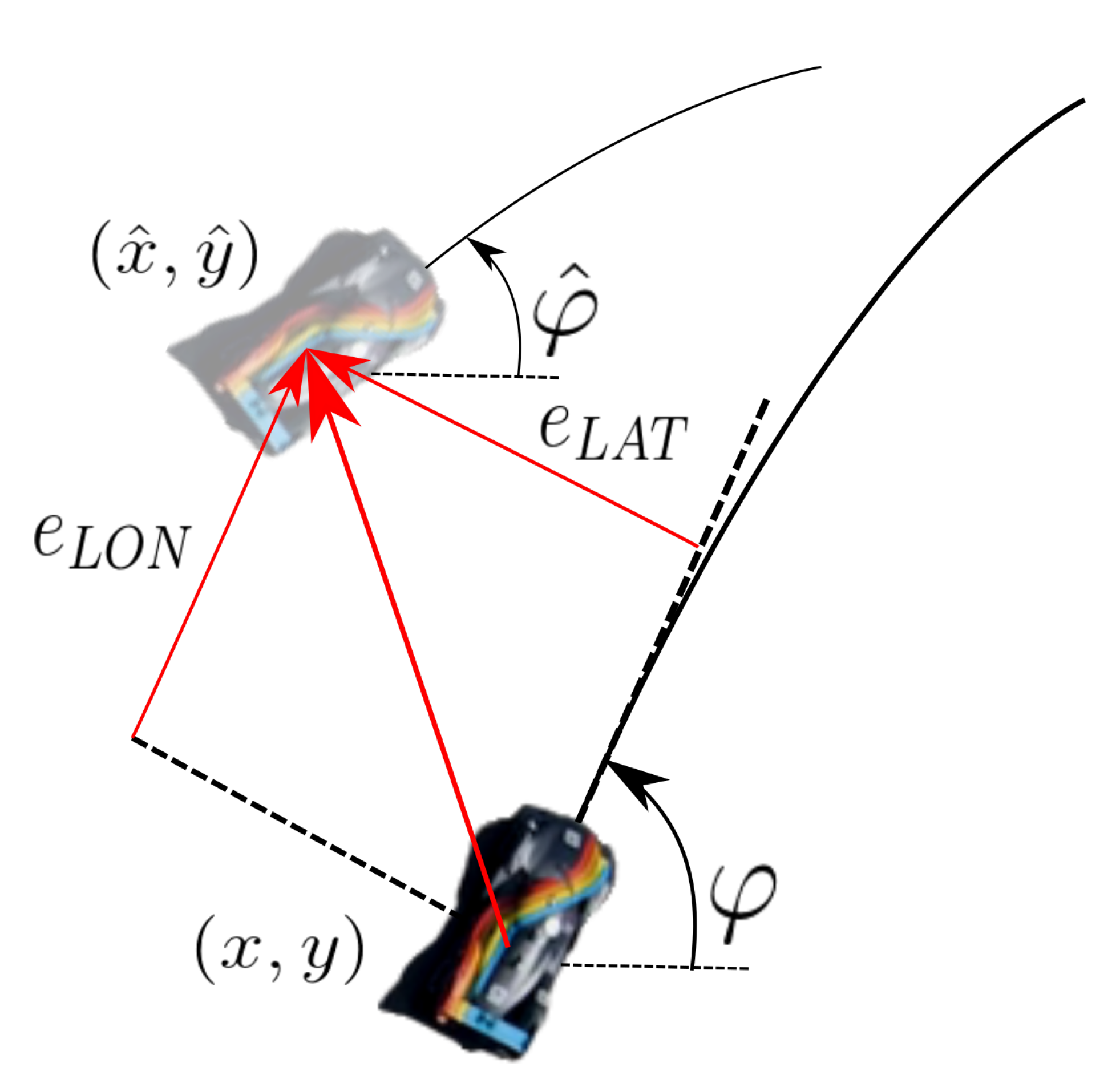

2. Problem Formulation

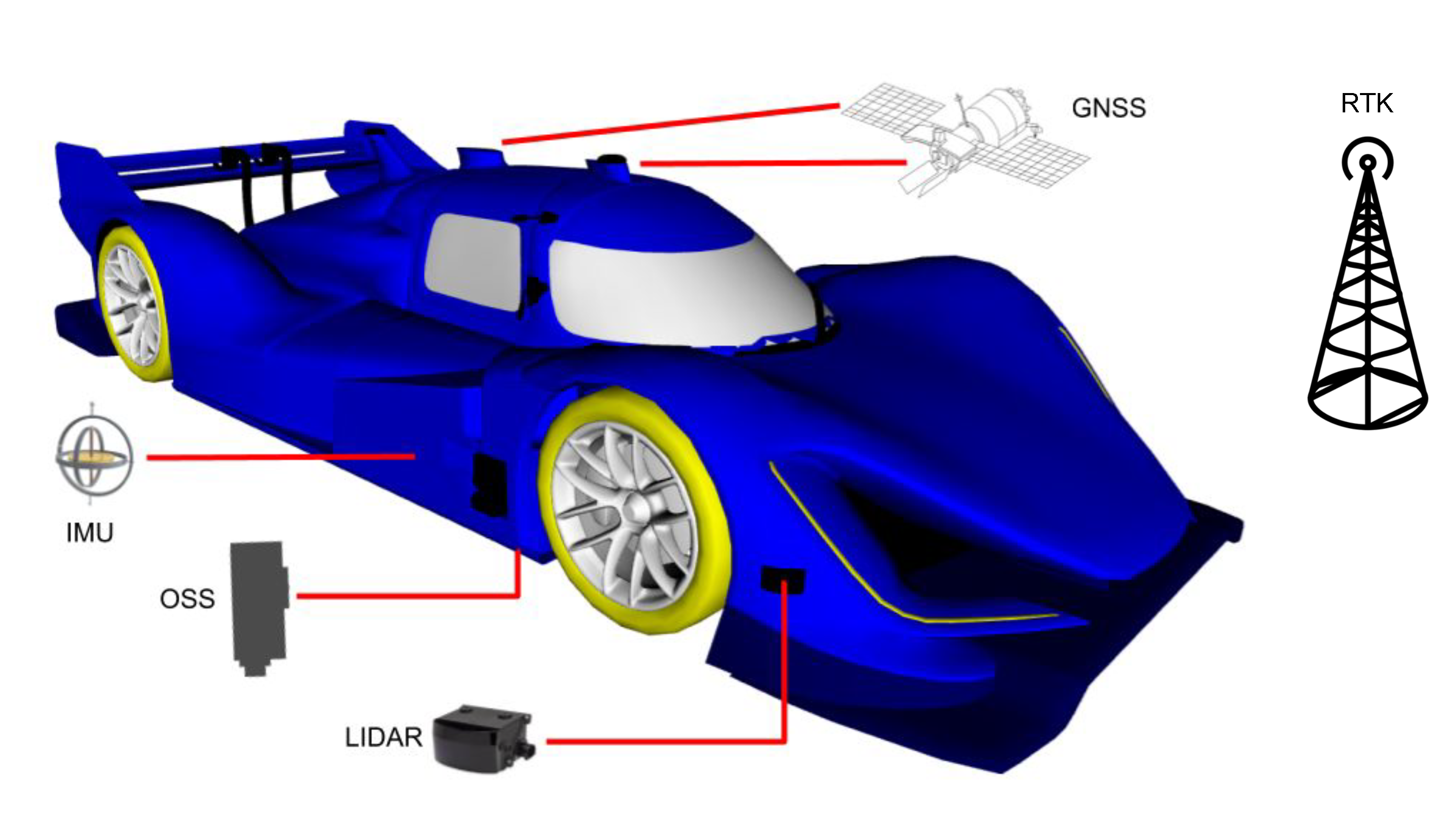

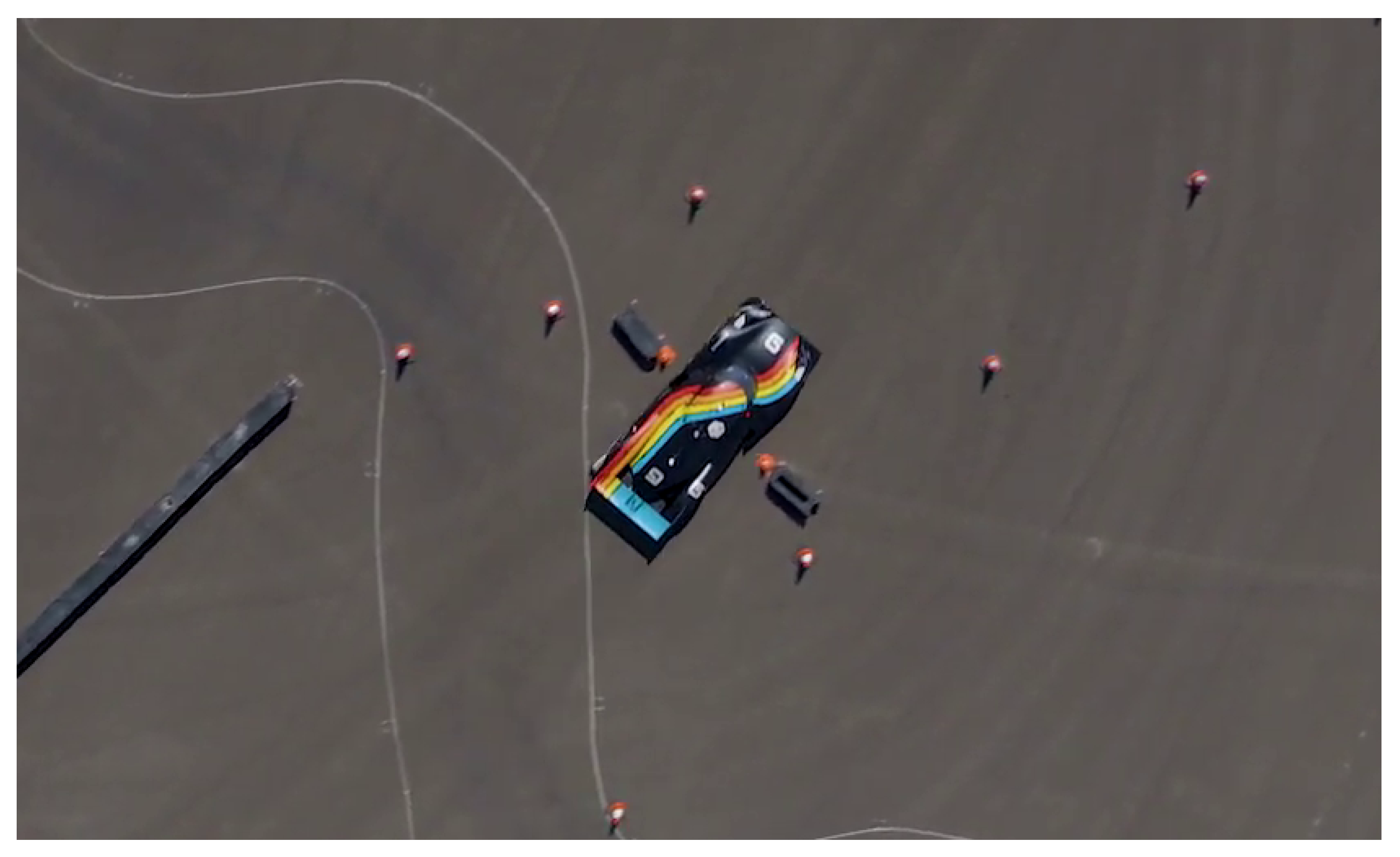

3. Sensors Setup

- OxTS Inertial Navigation System (INS): this commercial module consists of a dual-antenna GNSS and an IMU, which are pre-fused to obtain high-frequency (250 Hz) pose, velocity, and acceleration estimates;

- Ibeo LiDAR range finders: four LiDAR sensors are placed on the corners of the vehicle, each with four vertically stacked layers spaced 0.8 degrees apart. The aggregate point cloud from all the sensors is provided at a rate of 25 Hz;

- Optical Speed Sensor (OSS): this sensor provides direct longitudinal and lateral speed measurements through a Controller Area Network (CAN) interface at 500 Hz, and it is not affected by wheel drift.
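To give a feel for what these update rates mean on track, the short sketch below computes how far the car travels between two consecutive updates of each sensor. The rates are the ones listed above; the speed is a hypothetical value chosen only for illustration and is not taken from the experiments.

```python
def distance_per_update(speed_kmh: float, rate_hz: float) -> float:
    """Distance in metres travelled between two consecutive updates."""
    speed_ms = speed_kmh / 3.6  # km/h -> m/s
    return speed_ms / rate_hz


# Update rates taken from the sensor list above.
RATES_HZ = {"INS": 250.0, "LiDAR": 25.0, "OSS": 500.0}

# Hypothetical speed, used only for illustration.
SPEED_KMH = 90.0

gaps = {name: distance_per_update(SPEED_KMH, hz) for name, hz in RATES_HZ.items()}

for name, gap in gaps.items():
    print(f"{name}: {gap:.3f} m between updates")
```

At the assumed speed, the vehicle moves about one metre between two LiDAR point clouds, which is why a LiDAR-only pose would be too coarse in time for a controller running at a much higher rate.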

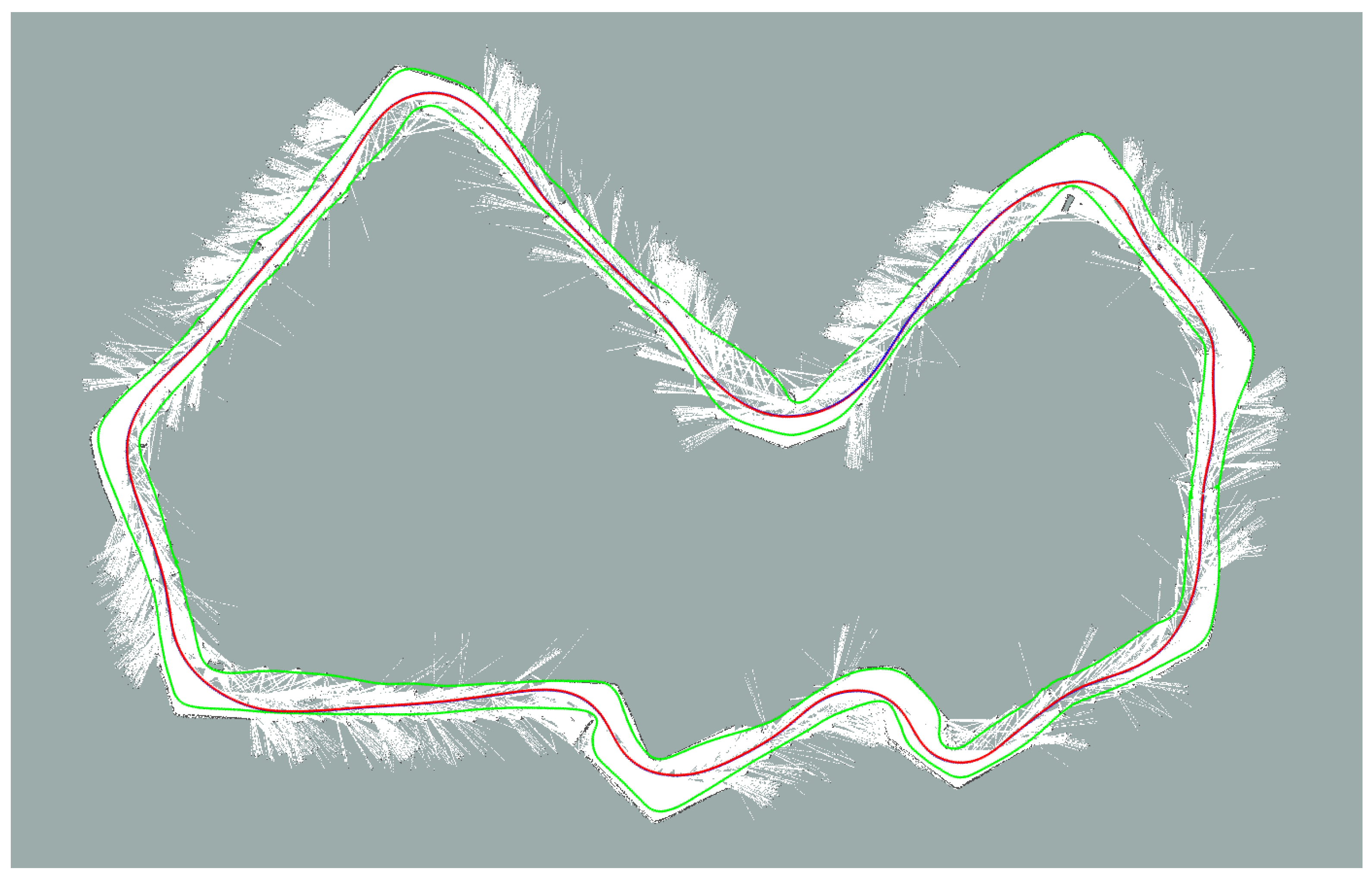

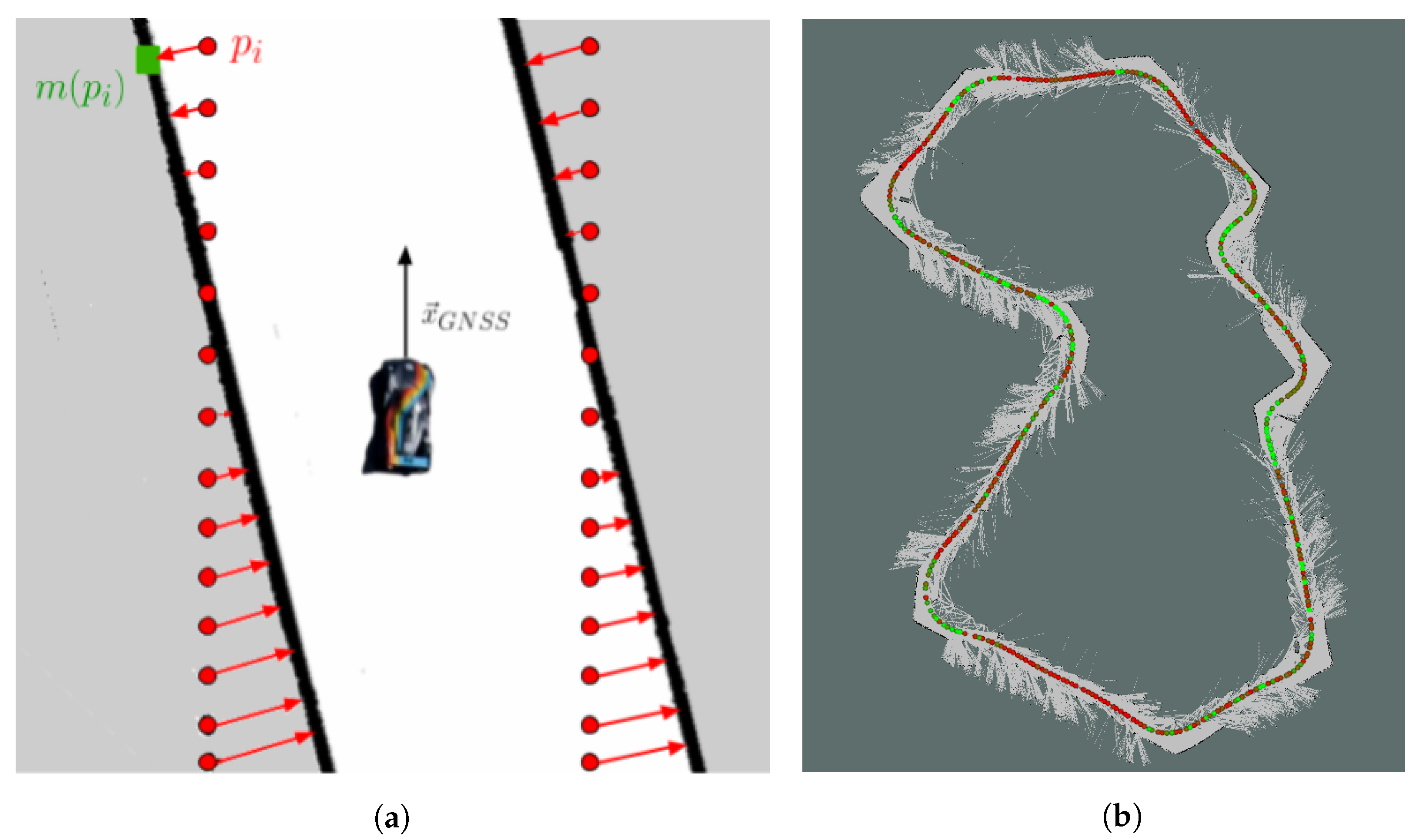

4. Mapping Procedure

Map Quality Assessment

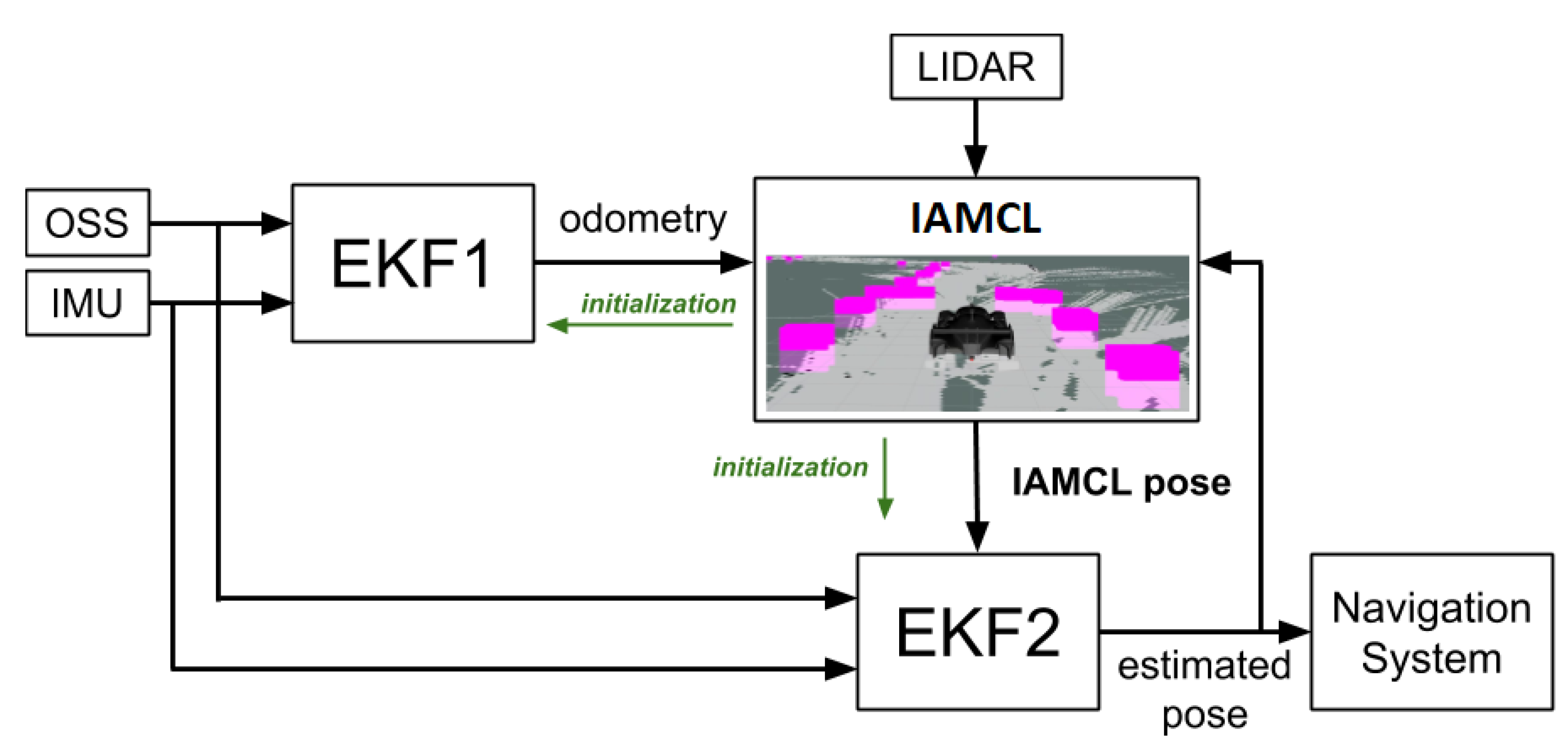

5. State Estimation Algorithm

- Computational burden: the NVIDIA Drive PX2 has a large number of CUDA cores, while its CPU is rather limited; in this paper we propose a CPU implementation, both for simplicity and because the available computing power was sufficient for the particular experimental task;

- Flexibility: the particular race format affects not only strategy but also which sensors are available and what other modules must concurrently run on the board (e.g., interfaces with V2X race control infrastructure, planning software);

- Real-time requirements: the DevBot motion control module runs in real time on a dedicated SpeedGoat board at 250 Hz, whereas no patch was allowed to make the standard Ubuntu kernel real-time compliant. The motion controller therefore needs to receive a pose estimate at high frequency.

5.1. Odometry (EKF1)

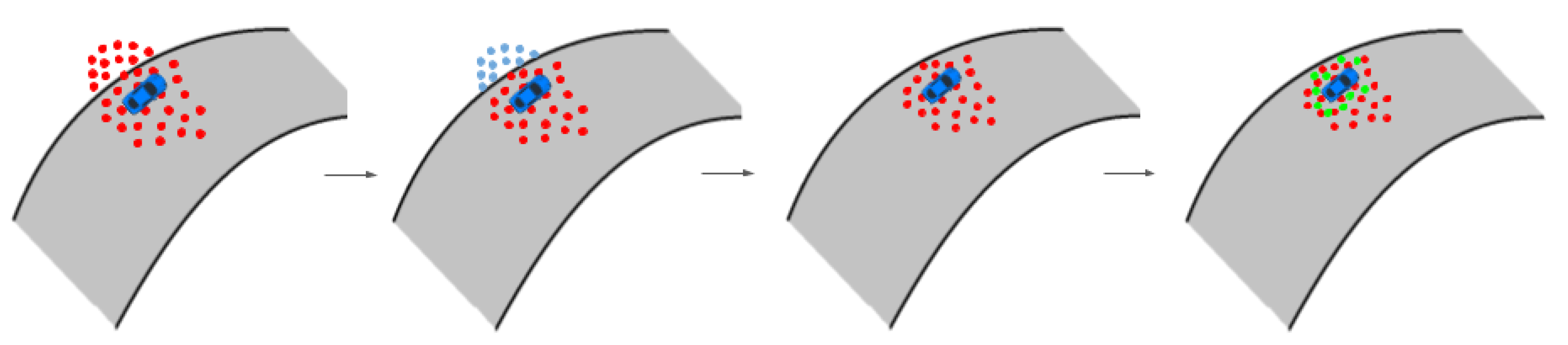

5.2. LiDAR Scan Matching (IAMCL)

- The automatic filter initialization provided by the AMCL ROS package [17] takes too long to converge; in the racing context, however, the initialization must be accurate and must be completed before the car starts driving;

- During the race, many particles are generated outside the track boundaries or with the opposite orientation (with respect to the fixed race direction), which is inefficient;

- Due to unavoidable, even small, map imperfections (mainly false positives in the occupancy grid), the algorithm runs into the kidnapping problem fairly often.
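The second issue in the list above can be made concrete with a small sketch that flags particles lying off the drivable area or pointing against the race direction. This is only an illustration of the check, not the IAMCL implementation: the function name, the boolean `on_track` grid, the grid origin and resolution, and the heading tolerance are all assumptions introduced here.

```python
import numpy as np


def plausible_particles(particles, on_track, resolution, origin,
                        track_heading, max_heading_diff=np.pi / 2):
    """Boolean mask of particles that are on track and roughly aligned.

    particles:     (N, 3) array of x, y, yaw in the map frame
    on_track:      2D boolean grid, on_track[row, col] is True if drivable
    resolution:    cell size in metres
    origin:        (x0, y0) map coordinates of the corner of cell (0, 0)
    track_heading: race direction in radians (scalar or (N,) array)
    """
    cols = np.floor((particles[:, 0] - origin[0]) / resolution).astype(int)
    rows = np.floor((particles[:, 1] - origin[1]) / resolution).astype(int)

    # Particles falling outside the grid are treated as off track.
    inside = ((rows >= 0) & (rows < on_track.shape[0])
              & (cols >= 0) & (cols < on_track.shape[1]))
    drivable = np.zeros(len(particles), dtype=bool)
    drivable[inside] = on_track[rows[inside], cols[inside]]

    # Wrap the heading difference to (-pi, pi] before comparing.
    diff = particles[:, 2] - track_heading
    diff = np.arctan2(np.sin(diff), np.cos(diff))
    aligned = np.abs(diff) < max_heading_diff

    return drivable & aligned


# Toy map: 3 x 5 cells of 1 m, only the middle row is drivable.
on_track = np.zeros((3, 5), dtype=bool)
on_track[1, :] = True

particles = np.array([
    [2.5, 1.5, 0.1],   # on track, aligned with the race direction
    [2.5, 0.5, 0.0],   # off track
    [1.5, 1.5, 3.0],   # on track, but facing backwards
    [-1.0, 1.5, 0.0],  # outside the map
])

mask = plausible_particles(particles, on_track, resolution=1.0,
                           origin=(0.0, 0.0), track_heading=0.0)
print(mask)
```

In a particle filter, a mask like this could be used to avoid spending weight updates and resampling effort on hypotheses that cannot correspond to a car racing in the prescribed direction.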

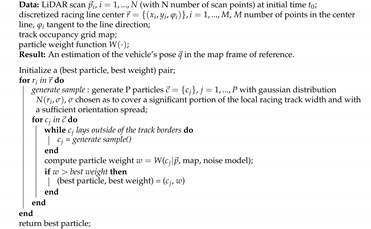

5.2.1. Automatic Initialization Procedure

Algorithm 1: Automatic initialization algorithm (init).

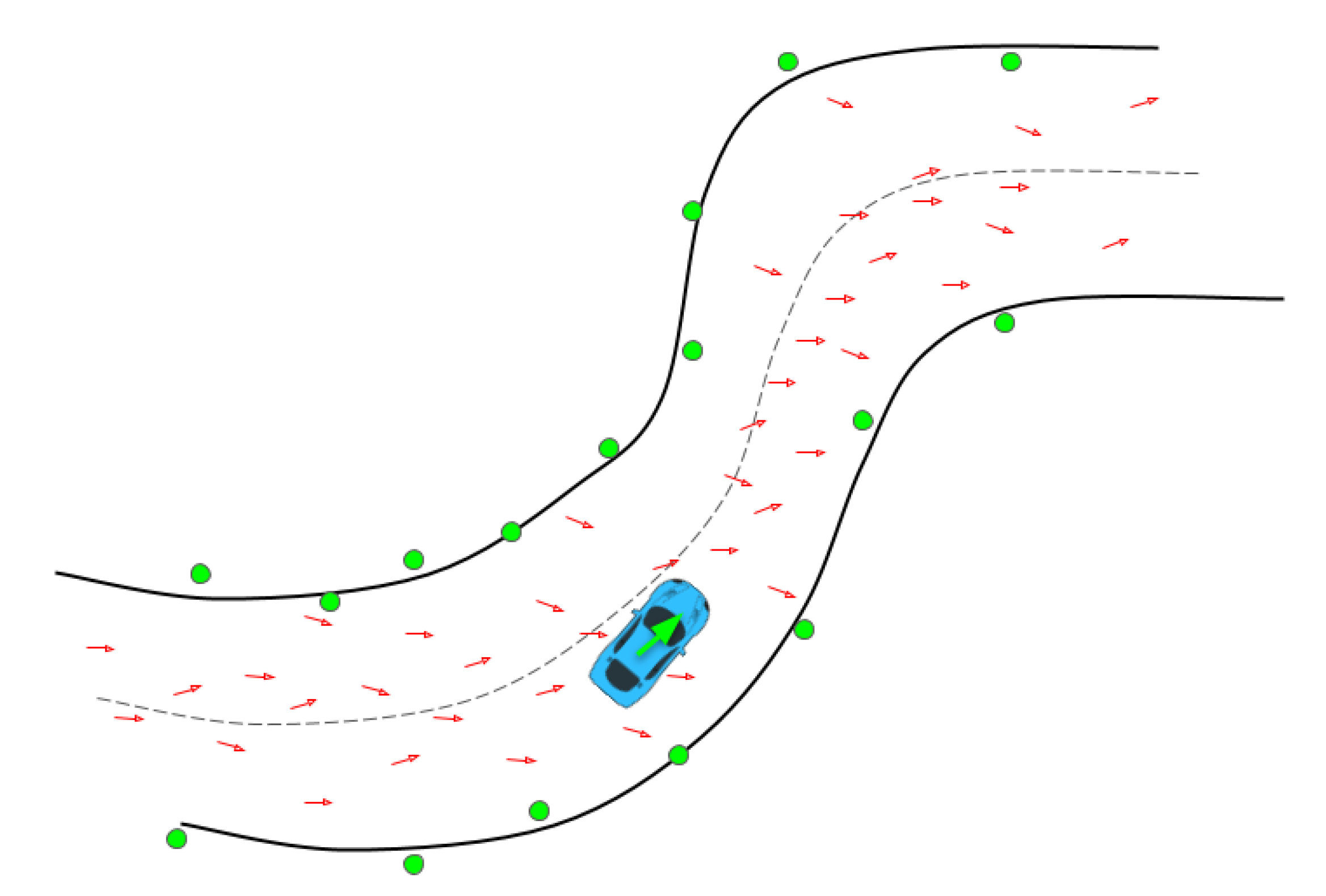

5.2.2. Informed Prediction

5.3. Smoothing Filter (EKF2)

6. Results

6.1. Comparison With State-of-the-Art

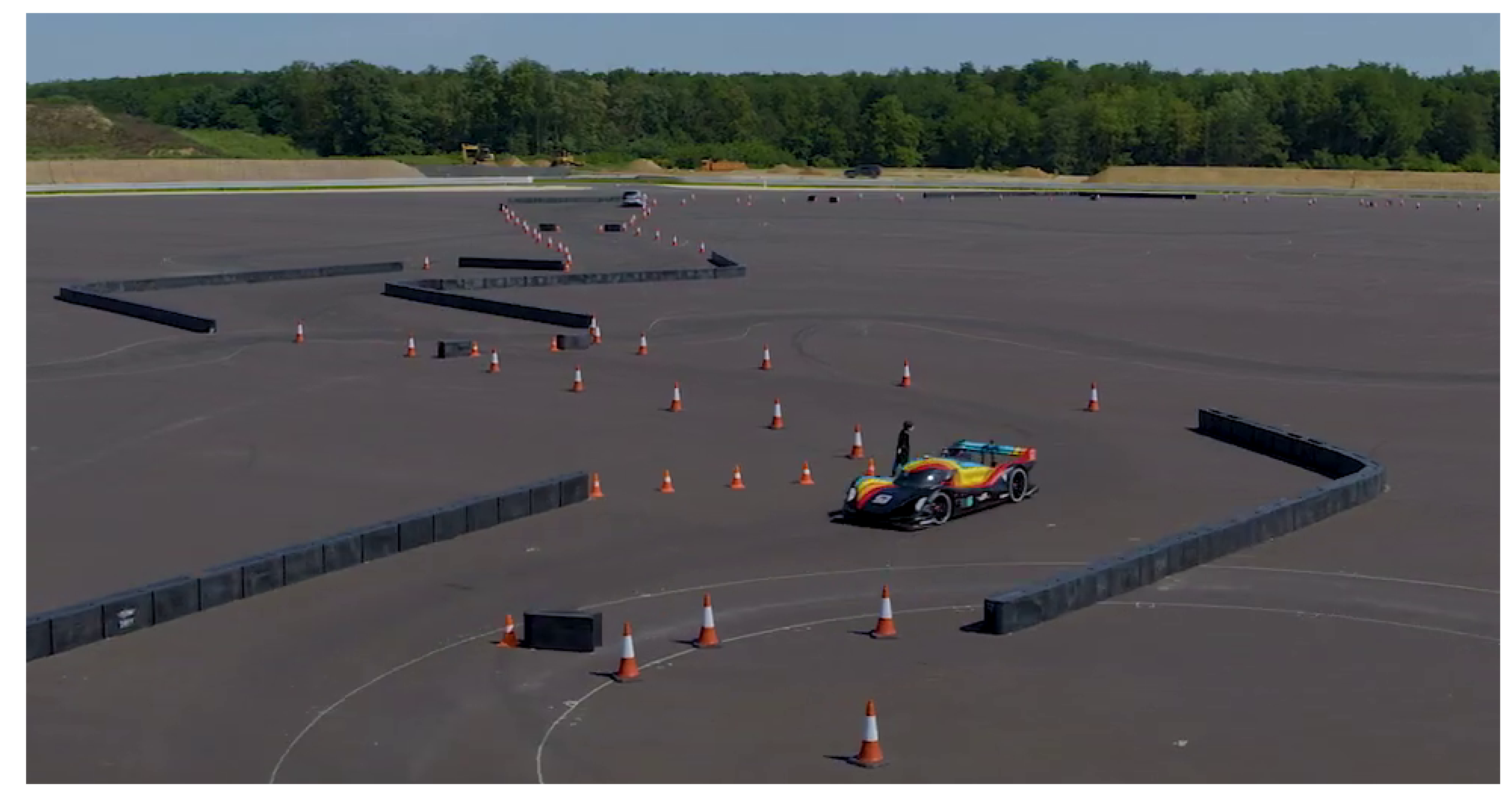

6.2. Experimental Tests

1. A run in autonomous mode at km/h;

2. A run driven by a professional human driver at km/h, the maximum speed allowed by the track;

3. A run in autonomous mode at km/h on the simulator.

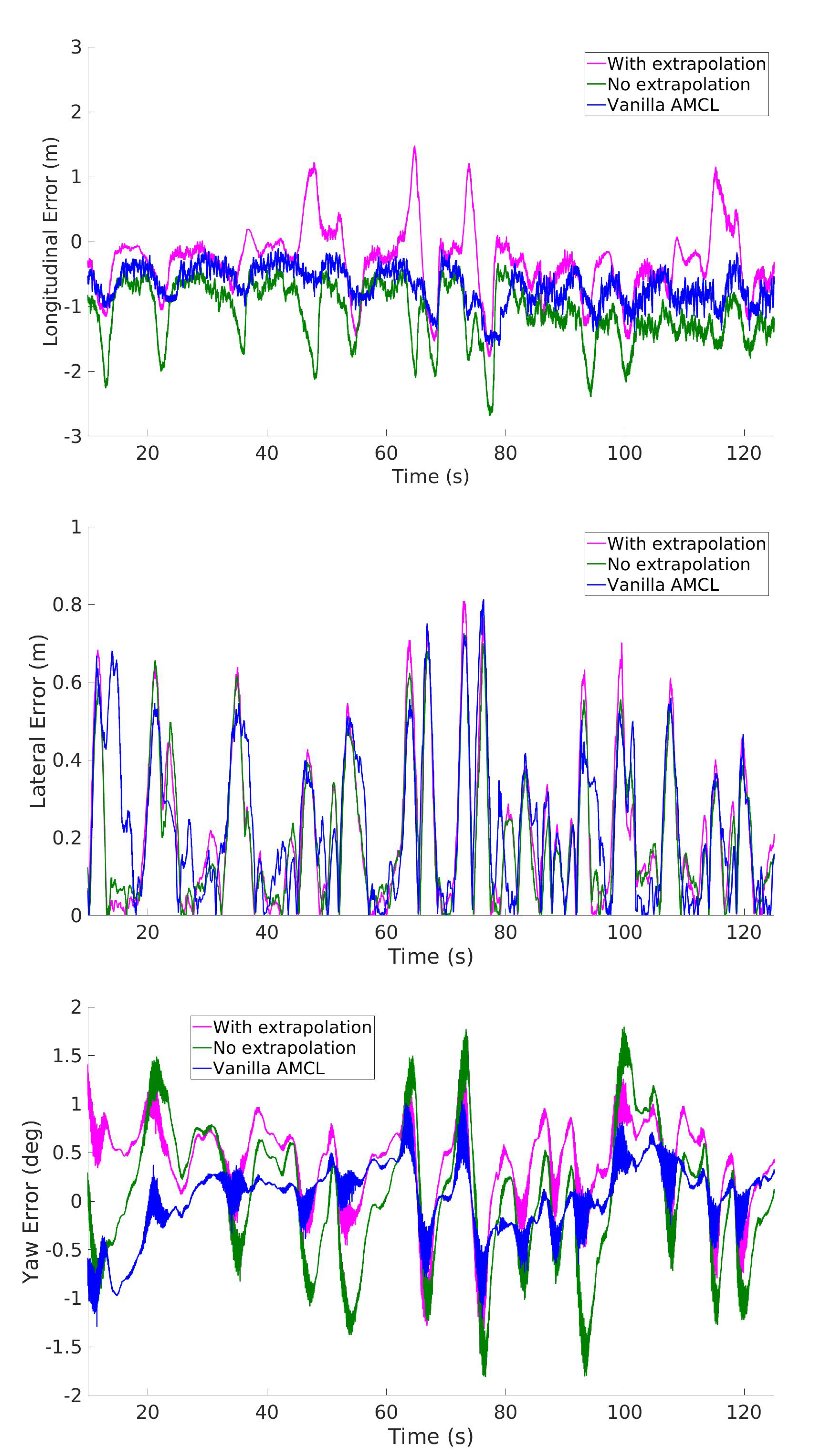

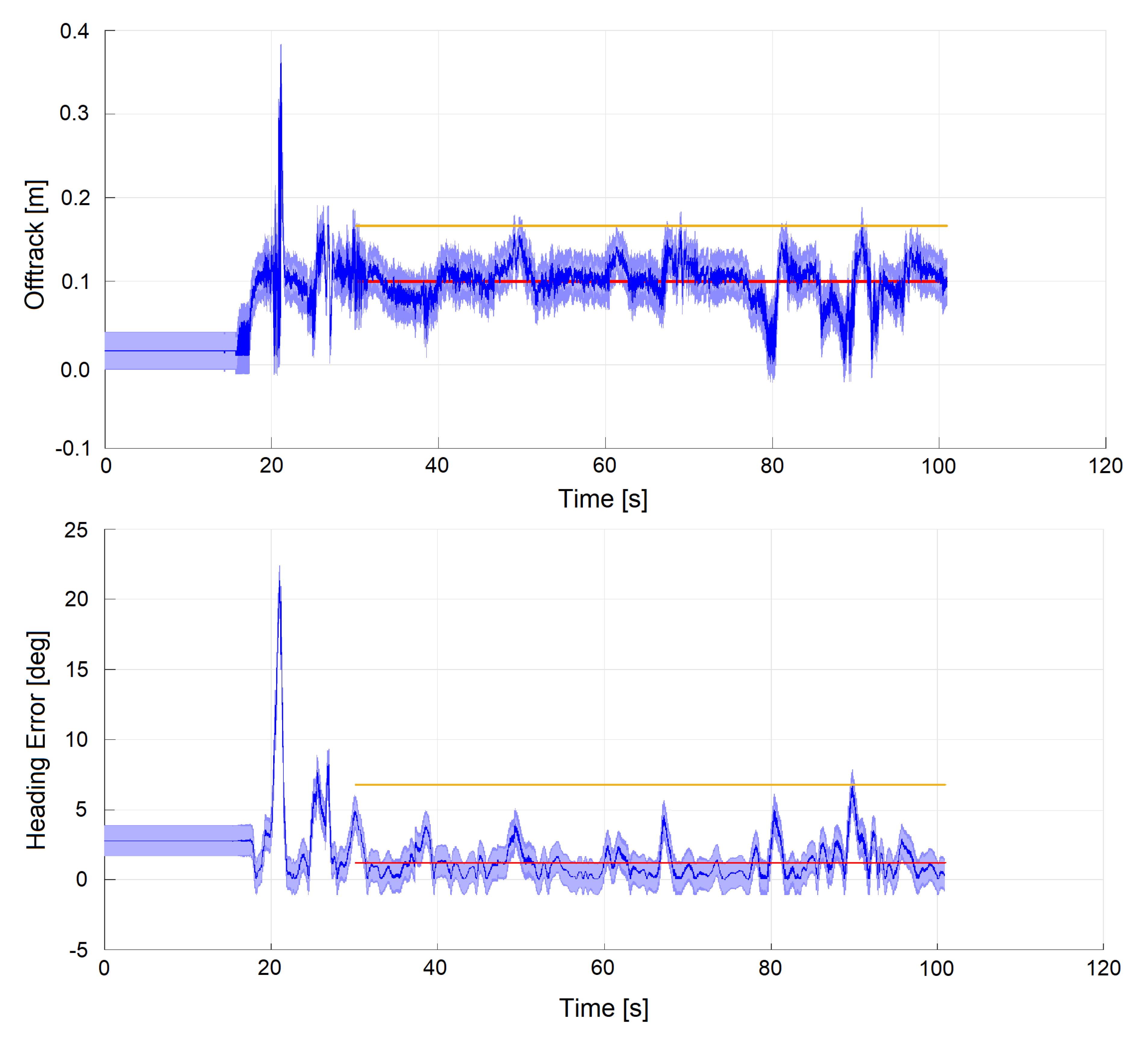

6.3. Dataset 1: Autonomous Mode (Experimental)

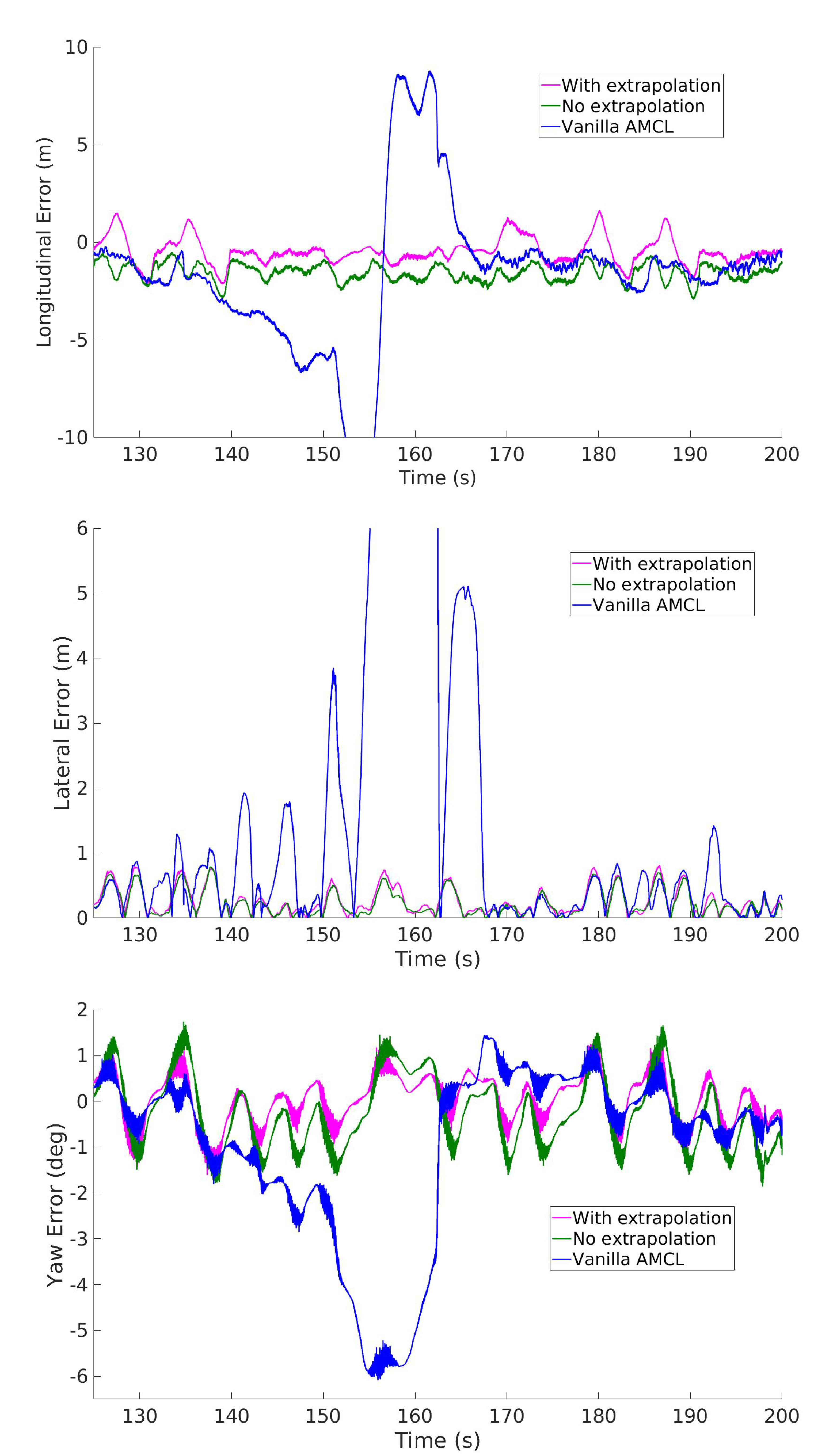

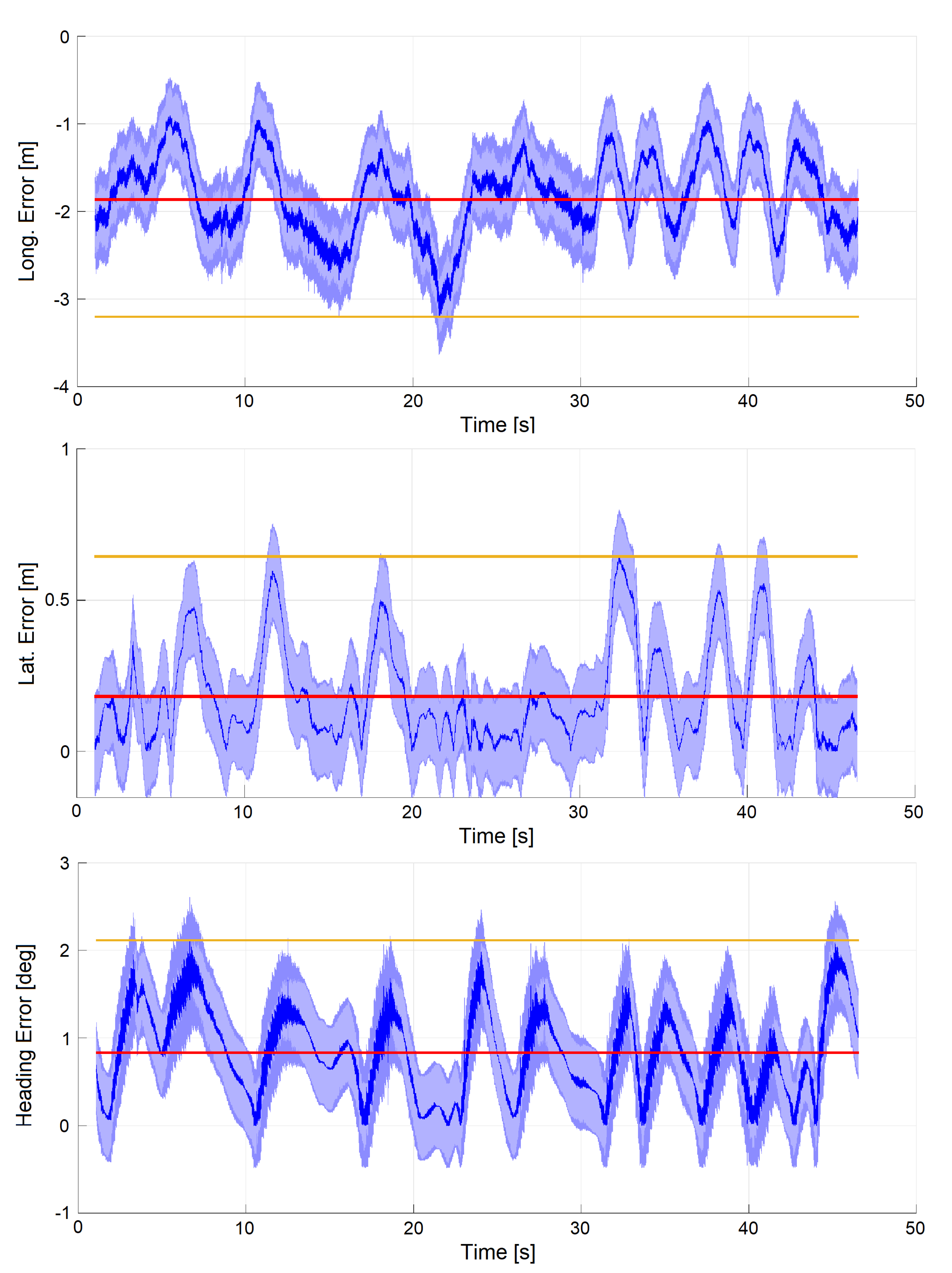

6.4. Dataset 2: Manual Drive Mode (Experimental)

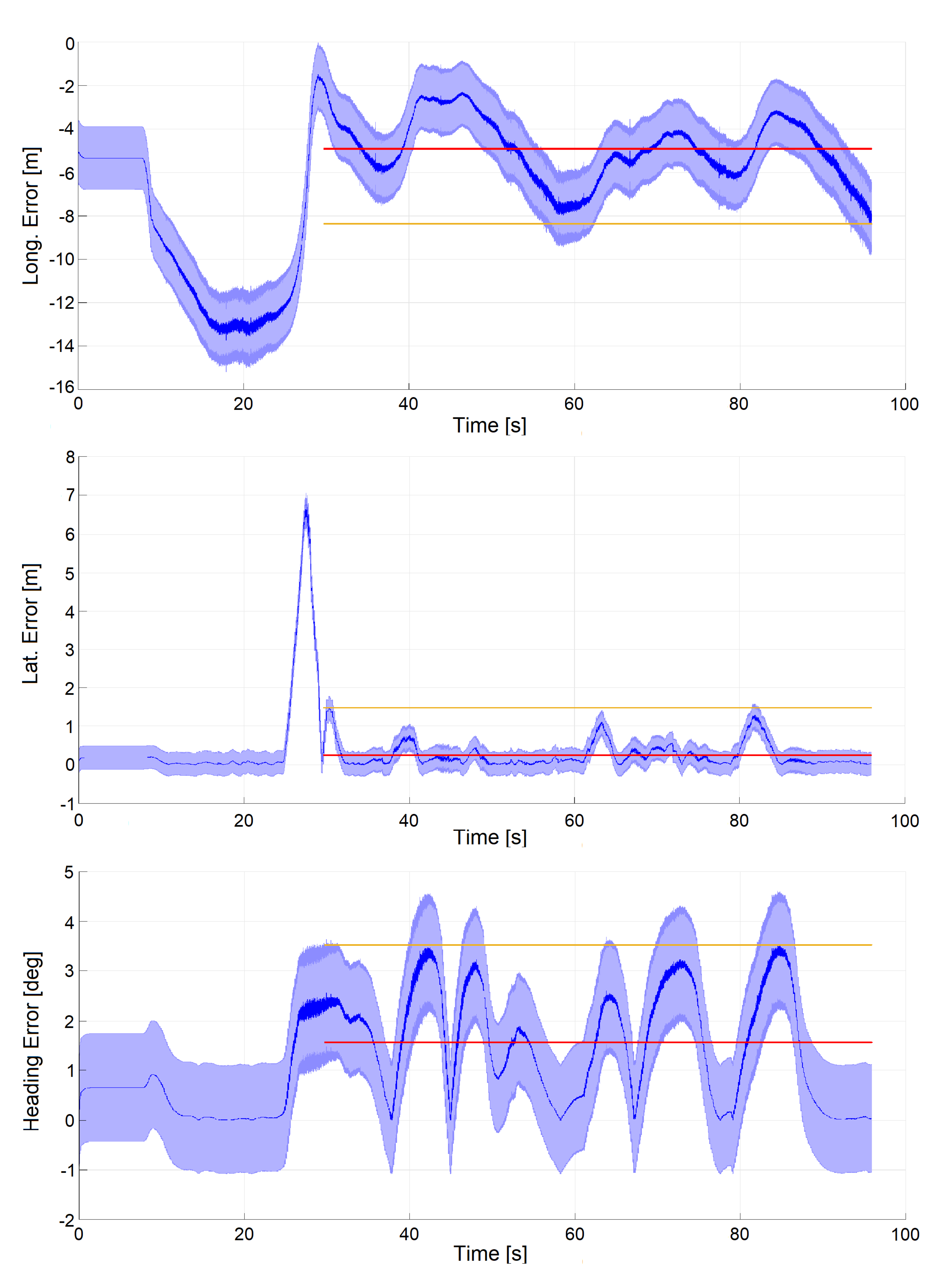

6.5. Dataset 3: Autonomous Mode (Simulation)

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Karlsson, R.; Gustafsson, F. The Future of Automotive Localization Algorithms: Available, reliable, and scalable localization: Anywhere and anytime. IEEE Signal Process. Mag. 2017, 34, 60–69.

2. Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Robot. 2016, 32, 1309–1332.

3. Bresson, G.; Alsayed, Z.; Yu, L.; Glaser, S. Simultaneous Localization and Mapping: A Survey of Current Trends in Autonomous Driving. IEEE Trans. Intell. Veh. 2017, 2, 194–220.

4. Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–110.

5. Bailey, T.; Durrant-Whyte, H. Simultaneous localization and mapping (SLAM): Part II. IEEE Robot. Autom. Mag. 2006, 13, 108–117.

6. Filatov, A.; Filatov, A.; Krinkin, K.; Chen, B.; Molodan, D. 2d slam quality evaluation methods. In Proceedings of the 2017 21st Conference of Open Innovations Association (FRUCT), Helsinki, Finland, 6–10 November 2017; pp. 120–126.

7. Grisetti, G.; Stachniss, C.; Burgard, W. Improving grid-based slam with rao-blackwellized particle filters by adaptive proposals and selective resampling. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005; pp. 2432–2437.

8. Grisetti, G.; Stachniss, C.; Burgard, W. Improved techniques for grid mapping with rao-blackwellized particle filters. IEEE Trans. Robot. 2007, 23, 34.

9. Levinson, J.; Thrun, S. Robust vehicle localization in urban environments using probabilistic maps. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 4372–4378.

10. Levinson, J.; Askeland, J.; Becker, J.; Dolson, J.; Held, D.; Kammel, S.; Kolter, J.Z.; Langer, D.; Pink, O.; Pratt, V.; et al. Towards fully autonomous driving: Systems and algorithms. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium (IV), Baden, Germany, 5–9 June 2011; pp. 163–168.

11. Javanmardi, E.; Javanmardi, M.; Gu, Y.; Kamijo, S. Autonomous vehicle self-localization based on multilayer 2D vector map and multi-channel LiDAR. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 437–442.

12. Meng, X.; Wang, H.; Liu, B. A robust vehicle localization approach based on GNSS/IMU/DMI/LiDAR sensor fusion for autonomous vehicles. Sensors 2017, 17, 2140.

13. Wan, G.; Yang, X.; Cai, R.; Li, H.; Zhou, Y.; Wang, H.; Song, S. Robust and Precise Vehicle Localization Based on Multi-Sensor Fusion in Diverse City Scenes. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 4670–4677.

14. Nobili, S.; Dominguez-Quijada, S.; Garcia, G.; Martinet, P. 6 channels Velodyne versus planar LiDARs based perception system for Large Scale 2D-SLAM. In Proceedings of the 7th Workshop on Planning, Perception and Navigation for Intelligent Vehicles, Hamburg, Germany, 18 September 2015.

15. Deilamsalehy, H.; Havens, T.C. Sensor fused three-dimensional localization using IMU, camera and LiDAR. In Proceedings of the 2016 IEEE SENSORS, Orlando, FL, USA, 30 October–3 November 2016; pp. 1–3.

16. Kuutti, S.; Fallah, S.; Katsaros, K.; Dianati, M.; Mccullough, F.; Mouzakitis, A. A Survey of the State-of-the-Art Localization Techniques and Their Potentials for Autonomous Vehicle Applications. IEEE Internet Things J. 2018, 5, 829–846.

17. AMCL ROS Package. Available online: https://github.com/ros-planning/navigation (accessed on 17 July 2020).

18. Xiao, L.; Wang, J.; Qiu, X.; Rong, Z.; Zou, X. Dynamic-SLAM: Semantic monocular visual localization and mapping based on deep learning in dynamic environment. Robot. Auton. Syst. 2019, 117, 1–16.

19. Akai, N.; Morales, L.Y.; Murase, H. Reliability estimation of vehicle localization result. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 740–747.

20. Xu, S.; Chou, W.; Dong, H. A Robust Indoor Localization System Integrating Visual Localization Aided by CNN-Based Image Retrieval with Monte Carlo Localization. Sensors 2019, 19, 249.

21. Sun, L.; Adolfsson, D.; Magnusson, M.; Andreasson, H.; Posner, I.; Duckett, T. Localising Faster: Efficient and precise lidar-based robot localisation in large-scale environments. arXiv 2020, arXiv:2003.01875.

22. Antonante, P.; Spivak, D.I.; Carlone, L. Monitoring and Diagnosability of Perception Systems. arXiv 2020, arXiv:2005.11816.

23. Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278.

24. Agrawal, P.; Iqbal, A.; Russell, B.; Hazrati, M.K.; Kashyap, V.; Akhbari, F. PCE-SLAM: A real-time simultaneous localization and mapping using LiDAR data. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 1752–1757.

25. Besl, P.J.; McKay, N.D. Method for registration of 3-D shapes. In Sensor Fusion IV: Control Paradigms and Data Structures; International Society for Optics and Photonics: Bellingham, WA, USA, 1992; Volume 1611, pp. 586–606.

26. Daoust, T.; Pomerleau, F.; Barfoot, T.D. Light at the end of the tunnel: High-speed lidar-based train localization in challenging underground environments. In Proceedings of the 2016 13th Conference on Computer and Robot Vision (CRV), Victoria, BC, Canada, 1–3 June 2016; pp. 93–100.

27. Magnusson, M. The Three-Dimensional Normal-Distributions Transform: An Efficient Representation for Registration, Surface Analysis, and Loop Detection. Ph.D. Thesis, Örebro Universitet, Örebro, Sweden, 2009.

28. Woo, A.; Fidan, B.; Melek, W.; Zekavat, S.; Buehrer, R. Localization for Autonomous Driving. In Handbook of Position Location: Theory, Practice, and Advances, 2nd ed.; Wiley: Hoboken, NJ, USA, 2019; pp. 1051–1087.

29. Reid, T.G.R.; Houts, S.E.; Cammarata, R.; Mills, G.; Agarwal, S.; Vora, A.; Pandey, G. Localization Requirements for Autonomous Vehicles. arXiv 2019, arXiv:1906.01061.

30. Yomchinda, T. A method of multirate sensor fusion for target tracking and localization using extended Kalman Filter. In Proceedings of the 2017 Fourth Asian Conference on Defence Technology-Japan (ACDT), Tokyo, Japan, 29 November–1 December 2017; pp. 1–7.

31. Hu, Y.; Duan, Z.; Zhou, D. Estimation Fusion with General Asynchronous Multi-Rate Sensors. IEEE Trans. Aerosp. Electron. Syst. 2010, 46, 2090–2102.

32. Armesto, L.; Tornero, J.; Domenech, L. Improving Self-localization of Mobile Robots Based on Asynchronous Monte-Carlo Localization Method. In Proceedings of the 2006 IEEE Conference on Emerging Technologies and Factory Automation, Prague, Czech Republic, 20–22 September 2006; pp. 1028–1035.

33. Lategahn, H.; Schreiber, M.; Ziegler, J.; Stiller, C. Urban localization with camera and inertial measurement unit. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast, QLD, Australia, 23–26 June 2013; pp. 719–724.

34. Bachrach, A.; Prentice, S.; He, R.; Roy, N. RANGE—Robust autonomous navigation in GPS-denied environments. J. Field Robot. 2011, 28, 644–666.

35. Achtelik, M.; Bachrach, A.; He, R.; Prentice, S.; Roy, N. Stereo vision and laser odometry for autonomous helicopters in GPS-denied indoor environments. In Unmanned Systems Technology XI; Gerhart, G.R., Gage, D.W., Shoemaker, C.M., Eds.; International Society for Optics and Photonics, SPIE: Bellingham, WA, USA, 2009; Volume 7332, pp. 336–345.

36. Caporale, D.; Settimi, A.; Massa, F.; Amerotti, F.; Corti, A.; Fagiolini, A.; Guiggiani, M.; Bicchi, A.; Pallottino, L. Towards the design of robotic drivers for full-scale self-driving racing cars. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 5643–5649.

37. Caporale, D.; Fagiolini, A.; Pallottino, L.; Settimi, A.; Biondo, A.; Amerotti, F.; Massa, F.; De Caro, S.; Corti, A.; Venturini, L. A Planning and Control System for Self-Driving Racing Vehicles. In Proceedings of the 2018 IEEE 4th International Forum on Research and Technology for Society and Industry (RTSI), Palermo, Italy, 10–13 September 2018; pp. 1–6.

38. Madgwick, S. An efficient orientation filter for inertial and inertial/magnetic sensor arrays. Rep. X-Io Univ. Bristol (UK) 2010, 25, 113–118.

39. Paden, B.; Čáp, M.; Yong, S.Z.; Yershov, D.; Frazzoli, E. A survey of motion planning and control techniques for self-driving urban vehicles. IEEE Trans. Intell. Veh. 2016, 1, 33–55.

40. Fox, D.; Burgard, W.; Dellaert, F.; Thrun, S. Monte carlo localization: Efficient position estimation for mobile robots. AAAI/IAAI 1999, 1999, 2.

41. Thrun, S.; Burgard, W.; Fox, D. Probabilistic Robotics (Intelligent Robotics and Autonomous Agents); The MIT Press: Cambridge, MA, USA, 2005.

42. Dellaert, F.; Fox, D.; Burgard, W.; Thrun, S. Monte carlo localization for mobile robots. In Proceedings of the 1999 IEEE International Conference on Robotics and Automation (Cat. No.99CH36288C), Detroit, MI, USA, 10–15 May 1999; Volume 2, pp. 1322–1328.

43. rFpro Website. Available online: http://www.rfpro.com/ (accessed on 17 July 2020).

44. Kapania, N.R.; Gerdes, J.C. Design of a feedback-feedforward steering controller for accurate path tracking and stability at the limits of handling. Veh. Syst. Dyn. 2015, 53, 1687–1704.

| Case | H |
|---|---|
| velocity not available | |
| pose estimate not available | |
| all measurements available | |
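The entries of H did not survive in the table above, so the sketch below only illustrates the general idea of switching the measurement matrix of a Kalman filter according to which measurements are available at a given step. The state layout (three pose components followed by two velocity components), the function name and its parameters are assumptions made for this example; they are not the matrices used in the paper.

```python
import numpy as np


def measurement_matrix(pose_available: bool, velocity_available: bool,
                       n_pose: int = 3, n_vel: int = 2) -> np.ndarray:
    """Build H for an assumed state [pose (n_pose), velocity (n_vel)].

    Each available measurement block contributes the rows that select
    the corresponding part of the state; unavailable blocks are dropped.
    """
    n_state = n_pose + n_vel
    blocks = []
    if pose_available:
        # Rows selecting the pose part of the state.
        blocks.append(np.hstack([np.eye(n_pose), np.zeros((n_pose, n_vel))]))
    if velocity_available:
        # Rows selecting the velocity part of the state.
        blocks.append(np.hstack([np.zeros((n_vel, n_pose)), np.eye(n_vel)]))
    if not blocks:
        # No measurement at this step: the filter would only predict.
        return np.zeros((0, n_state))
    return np.vstack(blocks)


H_no_velocity = measurement_matrix(pose_available=True, velocity_available=False)
H_no_pose = measurement_matrix(pose_available=False, velocity_available=True)
H_all = measurement_matrix(pose_available=True, velocity_available=True)

print(H_no_velocity.shape, H_no_pose.shape, H_all.shape)
```

Dropping rows, rather than keeping a fixed-size H with zero rows, keeps the innovation covariance well conditioned when sensors running at different rates deliver their data at different steps.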

| Metric | IAMCL (w/ extr.) | IAMCL (w/o extr.) | AMCL |
|---|---|---|---|
| Long. error (avg/max) | 0.47/1.78 m | 1.10/2.69 m | 0.68/1.63 m |
| Lat. error (avg/max) | 0.21/0.81 m | 0.20/0.72 m | 0.23/0.81 m |
| Heading error (avg/max) | 0.51/1.39 deg | 0.57/1.81 deg | 0.29/1.29 deg |
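Longitudinal, lateral and heading errors such as those in the table above are commonly obtained by expressing the position error in the frame of the reference vehicle pose. The sketch below shows one way to do this; the function name, the tuple layout and the choice of the reference frame are assumptions, since the exact error definition is not recoverable from this text.

```python
import math


def pose_errors(est, ref):
    """Errors of an estimated pose with respect to a reference pose.

    est, ref: (x, y, yaw) in the map frame, yaw in radians.
    Returns (longitudinal [m], lateral [m], heading [deg]), with the
    position error rotated into the reference vehicle frame.
    """
    dx = est[0] - ref[0]
    dy = est[1] - ref[1]
    c, s = math.cos(ref[2]), math.sin(ref[2])

    longitudinal = c * dx + s * dy   # along the reference heading
    lateral = -s * dx + c * dy       # to the left of the reference heading

    # Wrap the heading difference to (-pi, pi] before converting.
    dyaw = math.atan2(math.sin(est[2] - ref[2]), math.cos(est[2] - ref[2]))
    return longitudinal, lateral, math.degrees(dyaw)


# Reference vehicle heading along +y; estimate is ahead and to the left.
ref_pose = (10.0, 5.0, math.pi / 2)
est_pose = (9.8, 5.5, math.pi / 2 + math.radians(1.0))

lon_err, lat_err, head_err = pose_errors(est_pose, ref_pose)
print(f"long: {lon_err:.2f} m, lat: {lat_err:.2f} m, heading: {head_err:.2f} deg")
```

Average and maximum values like those reported would then follow by taking the mean and the maximum of the absolute errors over all time steps of a run.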

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Massa, F.; Bonamini, L.; Settimi, A.; Pallottino, L.; Caporale, D. LiDAR-Based GNSS Denied Localization for Autonomous Racing Cars. Sensors 2020, 20, 3992. https://doi.org/10.3390/s20143992
