Effective Three-Stage Demosaicking Method for RGBW CFA Images Using The Iterative Error-Compensation Based Approach

Abstract

1. Introduction

1.1. Related Work

1.2. Contributions

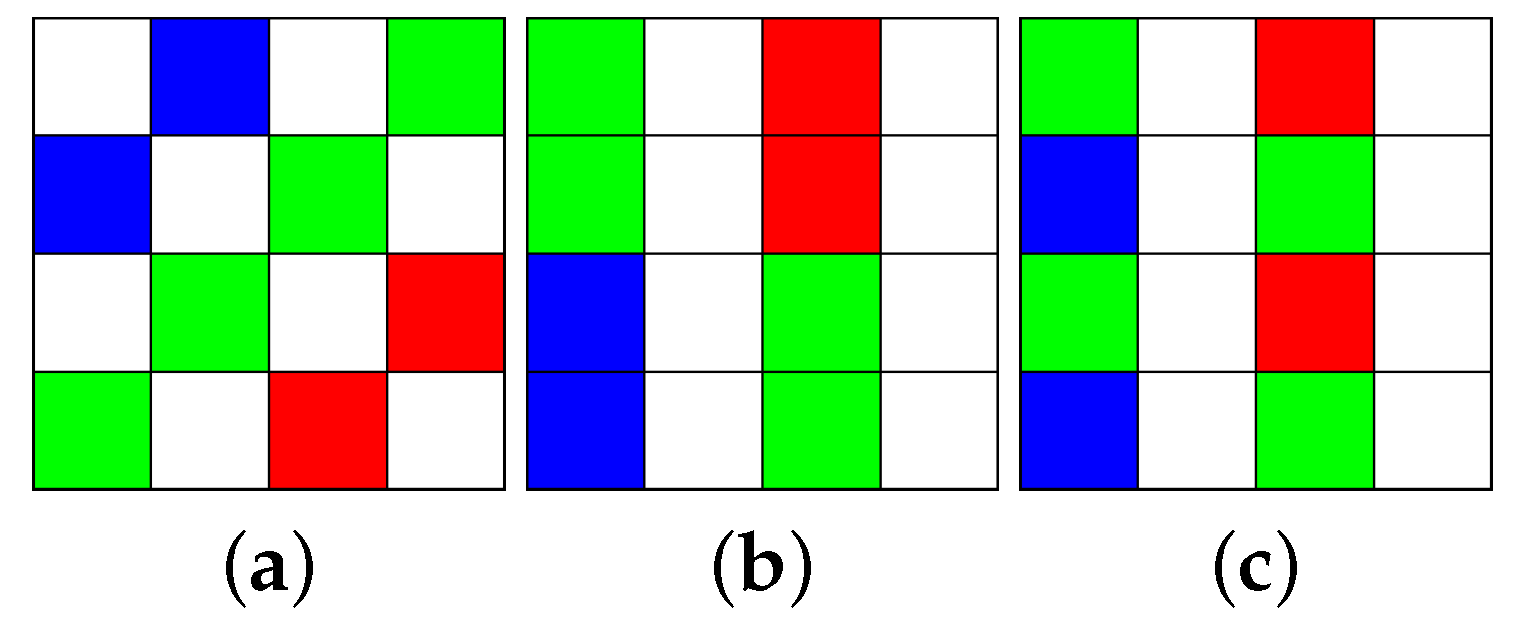

2. The Proposed Three-Stage Demosaicking Method For RGBW CFA Images

2.1. The First Stage: The Cross Shape-Based Color Difference Approach To Construct The Missing W Color Pixels

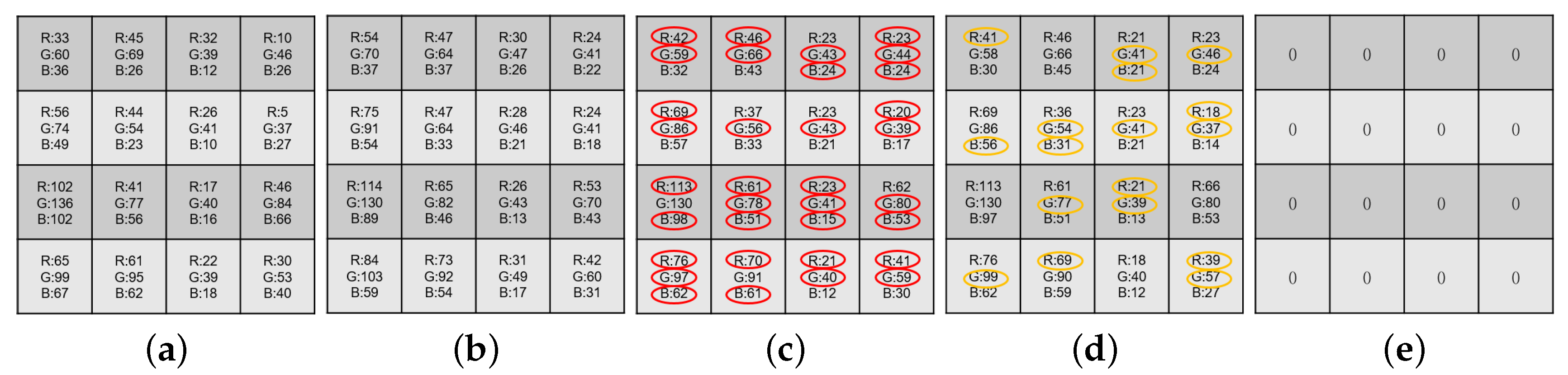

2.2. The Second Stage: The Iterative Error Compensation Approach To Minimize The R, G, B Errors

2.3. The Third Stage: The -Based Refinement Process To Zeroize

3. Experimental Results

3.1. Objective Quality Merit of Our Method

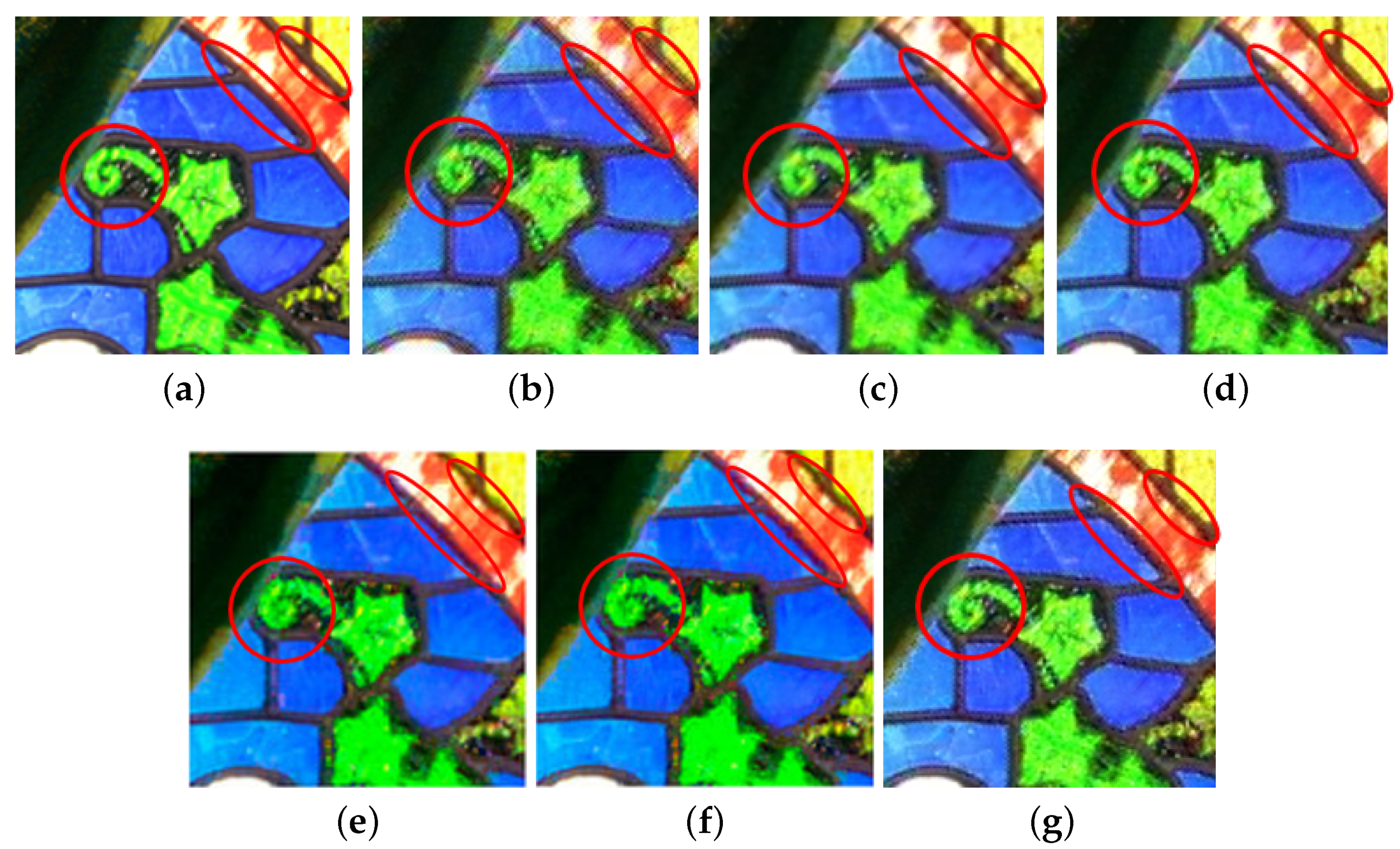

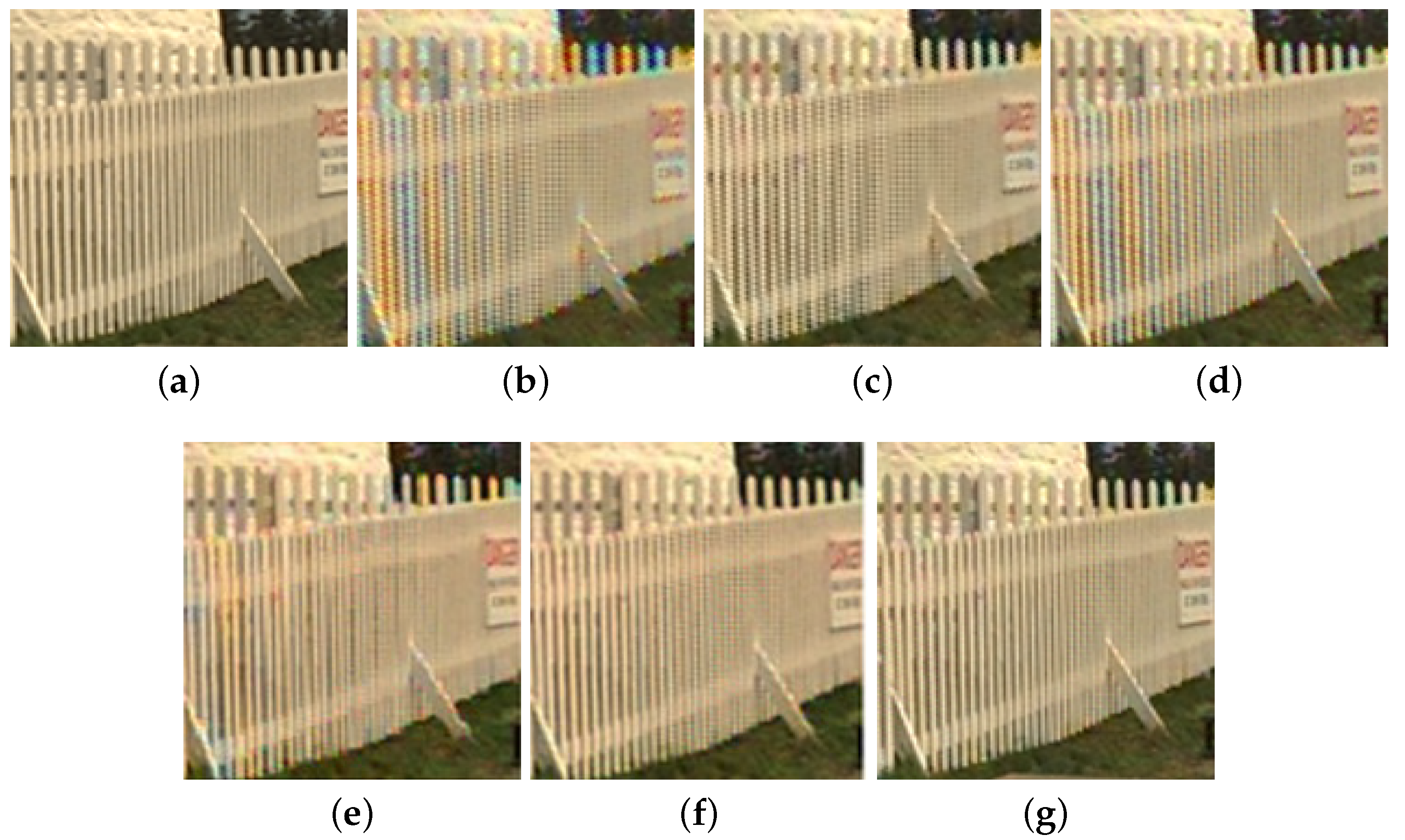

3.2. Perceptual Effect Merit of Our Method

3.3. Actual Time Cost of Our Method

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Bayer, B.E. Color Imaging Array. U.S. Patent 3 971 065, 20 July 1976.

2. Chung, K.L.; Yang, W.J.; Yan, W.M.; Wang, C.C. Demosaicing of color filter array captured images using gradient edge detection masks and adaptive heterogeneity-projection. IEEE Trans. Image Process. 2008, 17, 2356–2367.

3. Kiku, D.; Monno, Y.; Tanaka, M.; Okutomi, M. Residual interpolation for color image demosaicking. In Proceedings of the IEEE International Conference on Image Processing, Melbourne, Australia, 15–18 September 2013; pp. 2304–2308.

4. Li, X.; Gunturk, B.; Zhang, L. Image demosaicing: A systematic survey. In Proceedings of the SPIE-IS&T Electronic Imaging, Visual Communications and Image Processing, San Jose, CA, USA, 27–31 January 2008; Volume 6822, pp. 68221J–68221J15.

5. Menon, D.; Calvagno, G. Color image demosaicking: An overview. Signal Process. Image Commun. 2011, 26, 518–533.

6. Pei, S.C.; Tam, I.K. Effective color interpolation in CCD color filter arrays using signal correlation. IEEE Trans. Circuits Syst. Video Technol. 2003, 13, 503–513.

7. Zhang, L.; Wu, X.; Buades, A.; Li, X. Color demosaicking by local directional interpolation and nonlocal adaptive thresholding. J. Electron. Imaging 2011, 20, 023016.

8. Lee, S.H.; Oh, P.; Kang, M.G. Three dimensional colorization based image/video reconstruction from white-dominant RGBW pattern images. Digit. Signal Process. 2019, 93, 87–101.

9. Ono, S. Image-Capturing Apparatus. U.S. Patent Application No. 10/166, 271, 30 January 2003.

10. Compton, J.T.; Hamilton, J.F. Image Sensor with Improved Light Sensitivity. U.S. Patent 8,139,130,20, 20 March 2012.

11. Hamilton, J.F.; Compton, J.T. Processing Color and Panchromatic Pixels. U.S. Patent 0,024,879,A1, 25 September 2012.

12. Condat, L. A generic variational approach for demosaicking from an arbitrary color filter array. In Proceedings of the IEEE International Conference on Image Processing, Cairo, Egypt, 7–10 November 2009; pp. 1625–1628.

13. Kwan, C.; Chou, B.; Kwan, L.M.; Budavari, B. Debayering RGBW color filter arrays: A pansharpening approach. In Proceedings of the IEEE Ubiquitous Computing, Electronics & Mobile Communication Conference, New York, NY, USA, 19–21 October 2017; pp. 94–100.

14. Zhou, J.; Kwan, C.; Budavari, B. Hyperspectral image superresolution: A hybrid color mapping approach. J. Appl. Remote Sens. 2016, 10, 035024.

15. Gharbi, M.; Chaurasia, G.; Paris, S.; Durand, F. Deep joint demosaicking and denoising. ACM Trans. Graph. 2016, 35, 1–2.

16. Kwan, C.; Chou, B. Further improvement of debayering performance of RGBW color filter arrays using deep learning and pansharpening techniques. J. Imaging 2019, 5, 68.

17. Zhang, C.; Yan, L.; Wang, J.; Hao, P. Universal demosaicking of color filter arrays. IEEE Trans. Image Process. 2016, 25, 5173–5186.

18. Amba, P.; Alleysson, D.; Mermillod, M. Demosaicing using dual layer feedforward neural network. In Proceedings of the Twenty-sixth Color and Imaging Conference, Vancouver, BC, Canada, 12–16 November 2018; pp. 211–218.

19. IMAX True Color Image Collection. Available online: https://www.comp.polyu.edu.hk/~cslzhang/CDM_Dataset.htm (accessed on 30 June 2020).

20. Kodak True Color Image Collection. Available online: http://www.math.purdue.edu/~lucier/PHOTO_CD/BMP_IMAGES/ (accessed on 30 June 2020).

21. Execution Codes of HCbicubic and HCKiku. Available online: Ftp://140.118.175.164/HC/ (accessed on 30 June 2020).

22. Execution Code of VA Method. Available online: https://lcondat.github.io/publications.html (accessed on 20 June 2020).

23. Execution Code of Our Method. Available online: Ftp://140.118.175.164/ours/ (accessed on 30 June 2020).

24. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

| Metric | Dataset | HC [11] | HC [11] | VA [12] | Pansharpening-Based Method [13] | Deep Learning-Based Method [16] | Proposed |
|---|---|---|---|---|---|---|---|
| CPSNR | IMAX | 29.32595 | 31.4849 | 31.05877 | 33.26039 | 33.98561 | 33.47046 |
| CPSNR | Kodak | 28.84192 | 31.7211 | 35.95515 | 33.58083 | 34.85267 | 36.67467 |
| Average CPSNR | | 29.08 | 31.60 | 33.50 | 33.42 | 34.42 | 35.072 |
| Average CPSNR gain | | 5.988635 | 3.46957 | 1.56561 | 1.65196 | 0.65343 | |
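The CPSNR values reported in the tables can be reproduced in spirit with a short sketch. This section does not state the formula, so the code below assumes the conventional definition for 8-bit color images: the mean squared error is pooled over all pixels and all three color channels, and the peak value is 255. The function name `cpsnr` and the nested-list image layout are illustrative choices, not taken from the paper.

```python
import math


def cpsnr(reference, estimate, peak=255.0):
    """Color PSNR (in dB) between two same-sized RGB images.

    Assumed layout: image[row][col] is a sequence of three channel values.
    Assumed definition: 10 * log10(peak^2 / CMSE), where CMSE is the mean
    squared error pooled over all pixels and all three channels.
    """
    squared_error = 0.0
    count = 0
    for ref_row, est_row in zip(reference, estimate):
        for ref_px, est_px in zip(ref_row, est_row):
            for ref_value, est_value in zip(ref_px, est_px):
                squared_error += (ref_value - est_value) ** 2
                count += 1
    if count == 0:
        raise ValueError("images must contain at least one pixel")
    cmse = squared_error / count
    if cmse == 0:
        return math.inf  # identical images: no error to measure
    return 10.0 * math.log10(peak ** 2 / cmse)


# Tiny illustrative images (hypothetical data, not from the test sets).
ground_truth = [[(10, 20, 30), (40, 50, 60)]]
demosaicked = [[(11, 21, 31), (41, 51, 61)]]
score = cpsnr(ground_truth, demosaicked)
```

A higher CPSNR indicates a demosaicked image closer to the ground truth, which is why the gain rows in the tables are reported as positive differences in favor of the proposed method.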

| Metric | Dataset | HC [11] | HC [11] | VA [12] | Proposed |
|---|---|---|---|---|---|
| CPSNR | IMAX | 29.32595 | 31.4849 | 31.05877 | 33.47046 |
| CPSNR | Kodak | 29.26757 | 31.85273 | 35.73768 | 36.57367 |
| Average CPSNR | | 29.29676 | 31.66882 | 33.39823 | 35.02207 |
| Average CPSNR gain | | 5.72531 | 3.353255 | 1.623845 | |
| SSIM | IMAX | 0.8921 | 0.915778 | 0.898775 | 0.933092 |
| SSIM | Kodak | 0.895423 | 0.933169 | 0.973259 | 0.975752 |
| Average SSIM | | 0.8937615 | 0.924474 | 0.936017 | 0.954422 |
| Average SSIM gain | | 0.0606605 | 0.029949 | 0.018405 | |
| E | IMAX | 3.531127 | 2.777121 | 2.966827 | 2.392574 |
| E | Kodak | 3.708511 | 2.598007 | 2.122344 | 2.173272 |
| Average E | | 3.619819 | 2.687564 | 2.544586 | 2.282923 |
| Average E gain | | −1.336896 | −0.40464 | −0.26166 | |
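The "Average" and "gain" rows follow from the per-dataset rows by simple arithmetic: each average is the mean of the IMAX and Kodak values, and each gain is the proposed method's average minus the compared method's average. The sketch below checks this on the CPSNR rows of the second table. The helper name `average_and_gain` and the column labels "HC (first column)" and "HC (second column)" are illustrative, since the table does not distinguish the two HC variants by name.

```python
def average_and_gain(per_dataset, proposed="Proposed"):
    """Return per-method averages and the proposed method's gain over each.

    per_dataset maps a method label to its list of per-dataset scores.
    The gain is computed as (proposed average) - (method average).
    """
    averages = {
        method: sum(scores) / len(scores)
        for method, scores in per_dataset.items()
    }
    gains = {
        method: averages[proposed] - average
        for method, average in averages.items()
        if method != proposed
    }
    return averages, gains


# CPSNR values copied from the table: [IMAX, Kodak] for each method.
cpsnr_rows = {
    "HC (first column)": [29.32595, 29.26757],
    "HC (second column)": [31.4849, 31.85273],
    "VA": [31.05877, 35.73768],
    "Proposed": [33.47046, 36.57367],
}

averages, gains = average_and_gain(cpsnr_rows)
for method, gain in gains.items():
    print(f"{method}: average {averages[method]:.5f}, gain {gain:.5f}")
```

The same computation applies to the SSIM and E rows. For E the resulting differences are negative, which matches the table and indicates that the proposed method has the lower value.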

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chung, K.-L.; Chan, T.-H.; Chen, S.-N. Effective Three-Stage Demosaicking Method for RGBW CFA Images Using The Iterative Error-Compensation Based Approach. Sensors 2020, 20, 3908. https://doi.org/10.3390/s20143908
