Several approaches exist for filtering 3D data. Many rely on statistical properties relating a given point to its neighborhood, while others are based on artificial intelligence. In the following part, we describe our method, the Importance Map Based Median (IMBM) filter, together with several standard methods used for comparison of results: Statistical Outlier Removal (SOR), Radius Outlier Removal (ROR), and PointCleanNet.

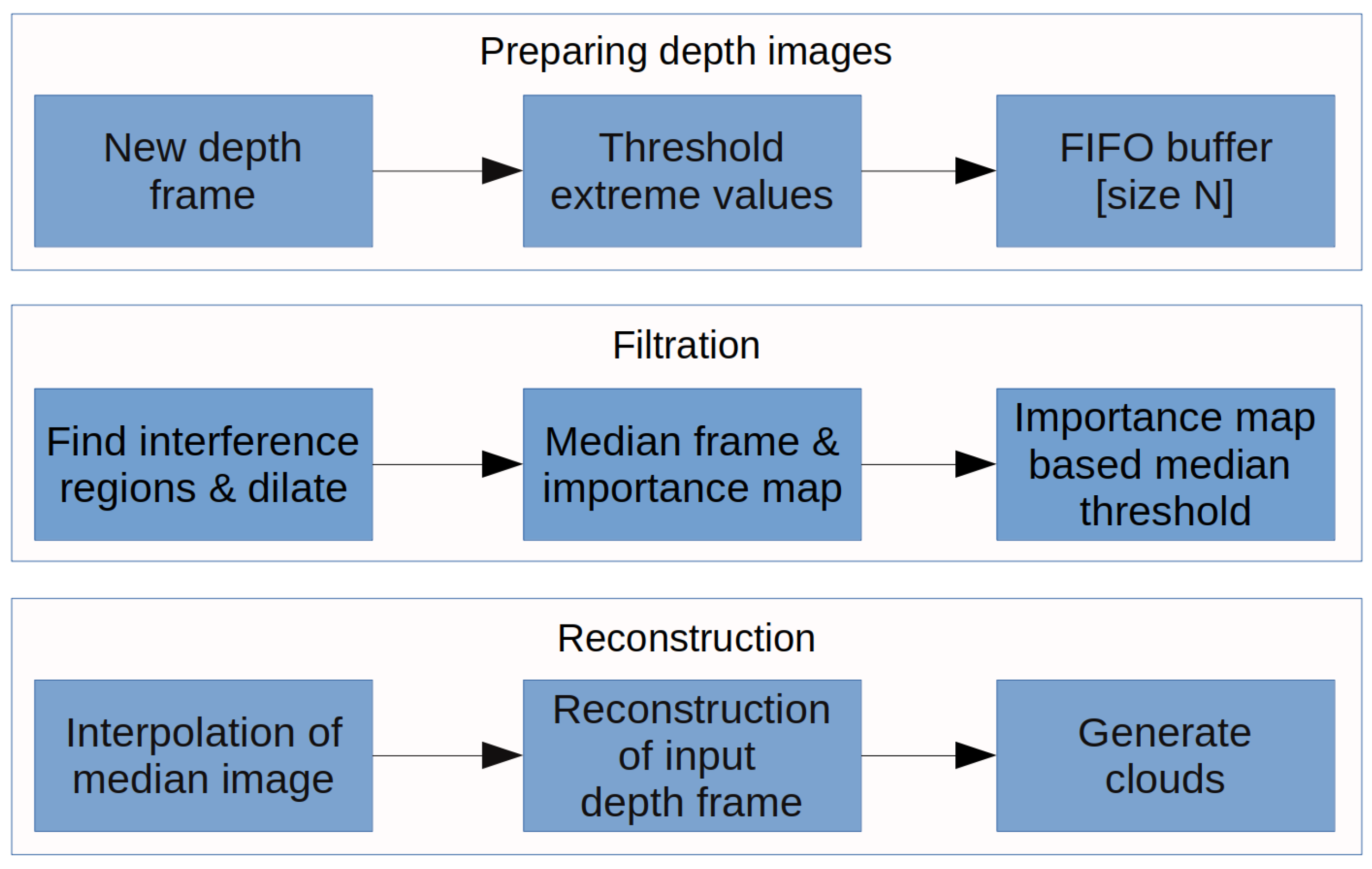

5.2.4. Importance Map Based Median Filter

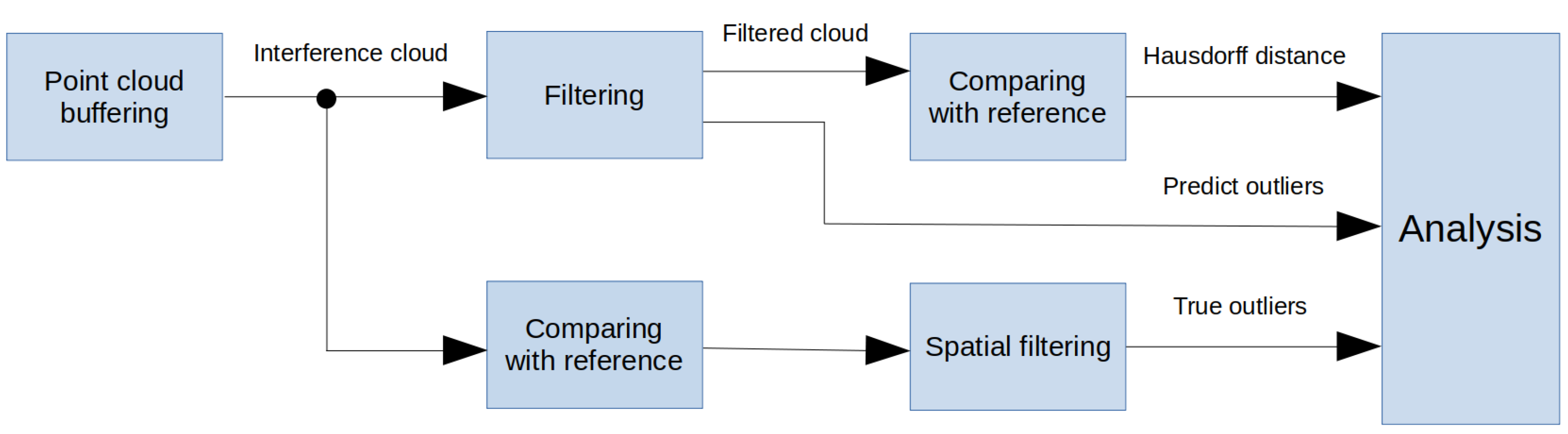

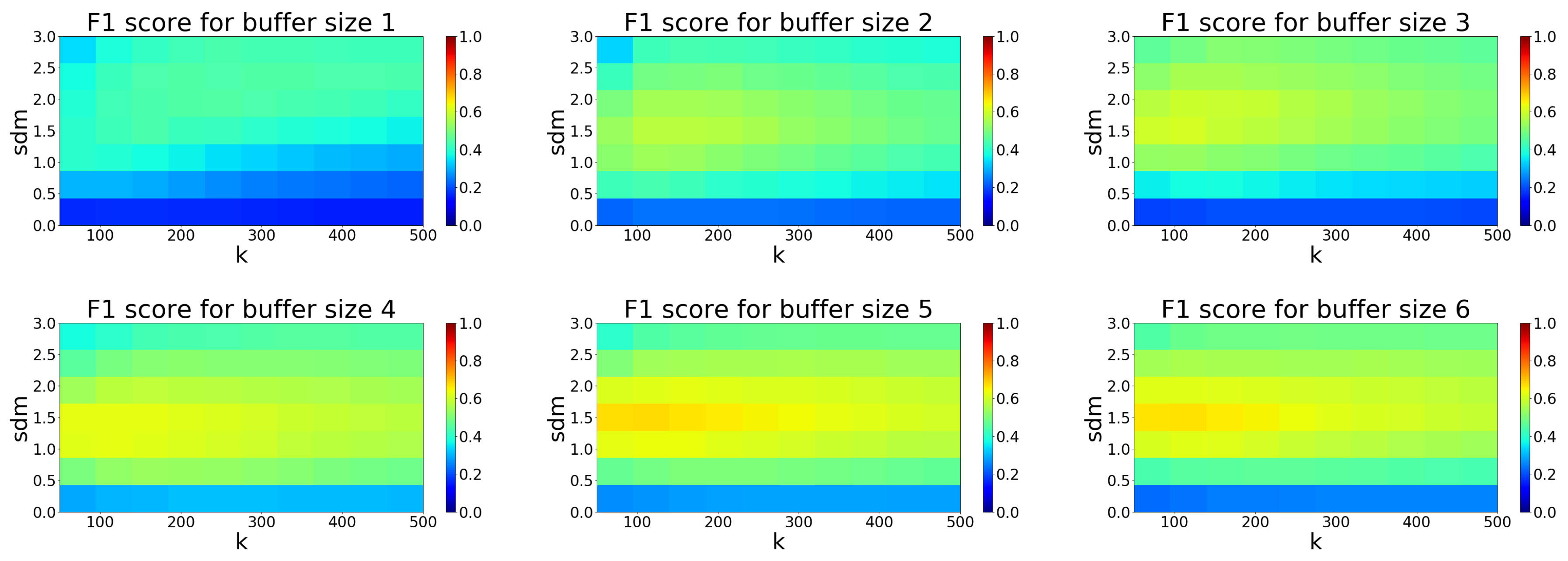

To suppress and filter interference artifacts in the multi-camera system, we propose an algorithm (see Figure 7). The algorithm is based on extracting interference regions and interpolating over them in a series of images obtained from the camera. It was designed mainly for scanning parts of the human body, but is not limited to this application. An important prerequisite for filtering and interpolation is a sufficient number of consecutive depth frames in the buffer. The basic condition is that objects have the same, or very nearly the same, position in every frame in the buffer. This condition is met when the object is static. If the object can move, the buffer size must be minimized to avoid motion artifacts in the resulting depth map.

First, an adequate number of frames for the buffer must be set; this number is discussed in the experimental results. Each frame entering the buffer is thresholded using values determined by the topology and properties of our system.

If $d(x;y)$ is a single pixel of the depth map, thresholding is performed using the following equation:

$$
d(x;y) =
\begin{cases}
d(x;y), & T_{l} \le d(x;y) \le T_{u} \\
0, & \text{otherwise}
\end{cases}
$$

where $d(x;y)$ is a 32-bit float number and $T_{l}$, $T_{u}$ are the lower and upper depth threshold values. In our case they are set to 80 and 1300 (these numbers represent real distance in mm). The scanning cabin is shown in Figure 5d and the dimension of the bottom is … mm.
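As an illustration, the thresholding step can be sketched in plain Python. This is a minimal sketch and not necessarily the implementation used in the system: the function name `threshold_depth`, the representation of a depth map as a nested list, and the inclusive treatment of both bounds are assumptions. Only the limits of 80 mm and 1300 mm come from the text.

```python
def threshold_depth(frame, t_low=80.0, t_high=1300.0):
    """Set every depth value (in mm) outside [t_low, t_high] to zero.

    Inclusive bounds are an assumption; the text only gives the two limits.
    """
    return [
        [d if t_low <= d <= t_high else 0.0 for d in row]
        for row in frame
    ]


# Toy 2x3 depth map in mm; 4.0e9 mimics an extreme interference value.
frame = [
    [50.0, 500.0, 1299.0],
    [1300.0, 4.0e9, 80.0],
]
thresholded = threshold_depth(frame)
```

Background pixels and extreme interference values both end up as zeros, which is why later steps can treat zero as "no valid measurement".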

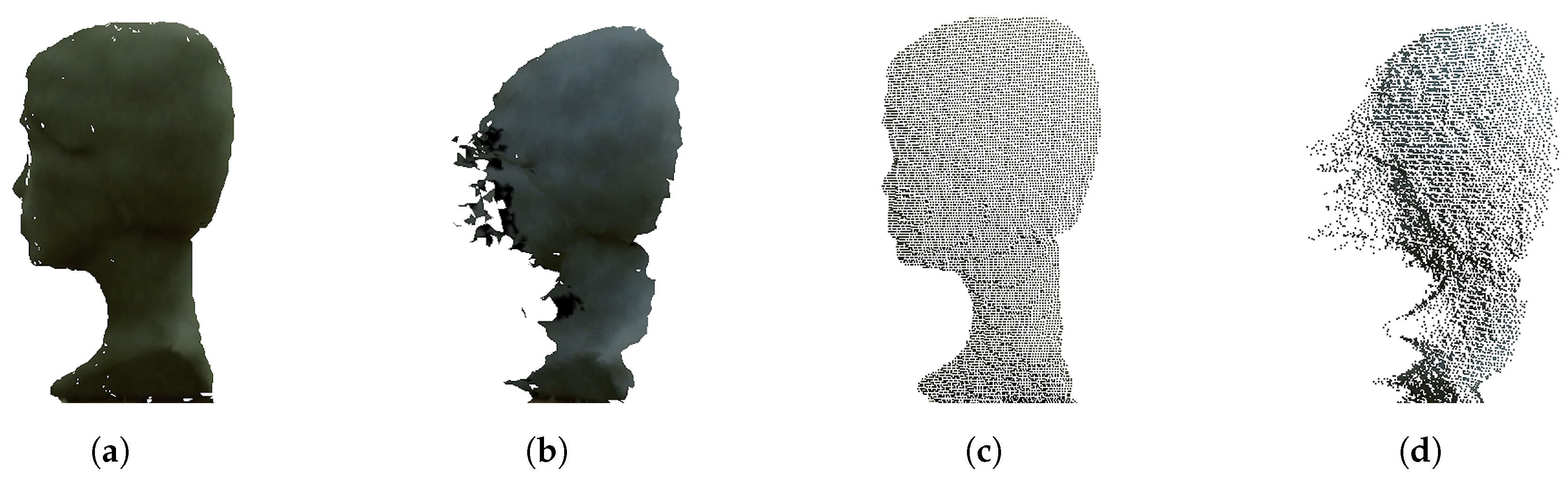

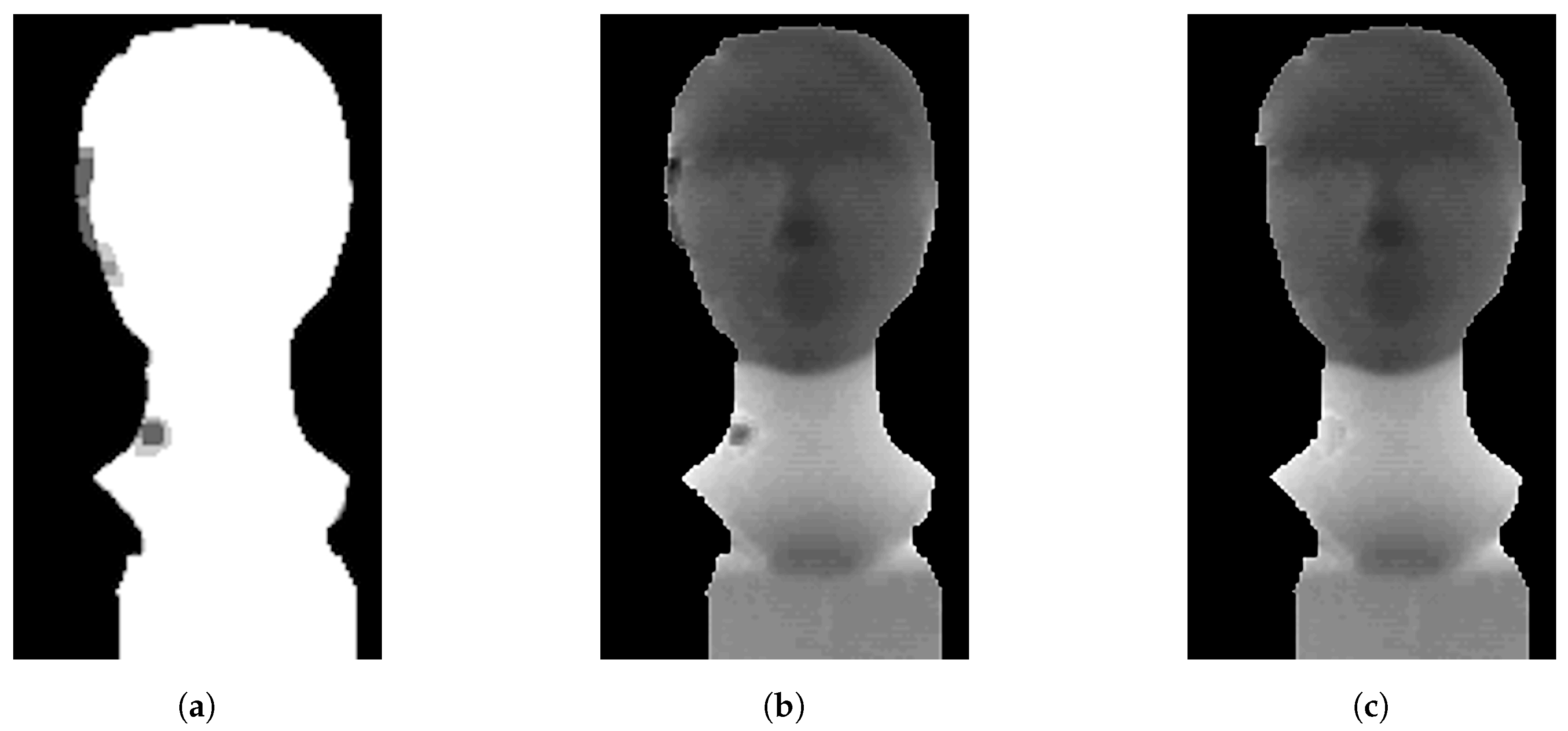

After depth thresholding, the background is removed and the images contain only the object of interest (Figure 8). This simple step is important and effective because it often removes areas with interference, in which pixels tend to take extreme values (overflowing a 32-bit stack variable).

In the next step, the thresholded image with the minimum number of non-zero values is selected from the buffer as the reference image, because it is the image most likely to contain the fewest interfering pixels. A binary map is created from this reference image and each depth image in the buffer is masked with it. In this step, the input images are divided into three pixel regions: background (also including extreme interference regions), interference-region pixels (also including some object pixels), and object pixels (also including interference pixels). Interference regions are dilated with a square structuring element because extreme interference regions are mostly surrounded by regions of lower-intensity interference. In this way, the number of interference pixels is decreased, but the number of object pixels in the interference map increases.
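The reference selection and masking described above can be sketched as follows. This is a simplified illustration under stated assumptions: the helper names `count_nonzero` and `mask_with_reference` are hypothetical, frames are nested lists that have already been thresholded, and the dilation of interference regions is deliberately left out because its exact parameters are not given here.

```python
def count_nonzero(frame):
    """Number of non-zero depth pixels in one frame."""
    return sum(1 for row in frame for d in row if d != 0)


def mask_with_reference(buffer):
    """Pick the frame with the fewest non-zero pixels and mask all frames with it."""
    reference = min(buffer, key=count_nonzero)
    # Binary map: True where the reference frame holds a valid depth value.
    mask = [[d != 0 for d in row] for row in reference]
    masked = [
        [
            [d if keep else 0.0 for d, keep in zip(row, mask_row)]
            for row, mask_row in zip(frame, mask)
        ]
        for frame in buffer
    ]
    return mask, masked


# Three toy 2x2 frames; the second has the fewest non-zero pixels.
buffer = [
    [[900.0, 910.0], [0.0, 1200.0]],
    [[905.0, 0.0], [0.0, 1190.0]],
    [[895.0, 912.0], [300.0, 1195.0]],
]
mask, masked = mask_with_reference(buffer)
```

After masking, every frame is zero wherever the reference frame is zero, so pixels that were valid only in frames with more interference are discarded.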

These morphologically processed frames in the buffer are used to compute the median frame and the importance map. Each pixel of the median frame is computed as the median of the corresponding pixels across all buffer frames, with all zero values excluded from the computation. If all values in the series are zero, the resulting value is zero. This step is analogous to temporal averaging of image data and also reduces noise [24].

Each median value (pixel in the median frame) is annotated with the number of non-zero values used to compute it. This additional information (a 2D array) is called the importance map (Figure 9a).
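The median frame and the importance map can be computed together in one pass, as the following minimal sketch shows. The function name `median_and_importance` and the nested-list frame format are assumptions made for illustration. For an even number of non-zero samples, `statistics.median` averages the two middle values; the text does not specify this case, so that behavior is also an assumption.

```python
from statistics import median


def median_and_importance(buffer):
    """Per-pixel median over non-zero samples, plus the count of those samples."""
    rows, cols = len(buffer[0]), len(buffer[0][0])
    median_frame = [[0.0] * cols for _ in range(rows)]
    importance = [[0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            # Zero means "no valid measurement" and is excluded from the median.
            values = [frame[y][x] for frame in buffer if frame[y][x] != 0]
            importance[y][x] = len(values)
            if values:
                median_frame[y][x] = float(median(values))
            # If every sample is zero, the median pixel stays zero.
    return median_frame, importance


buffer = [
    [[900.0, 0.0], [0.0, 1200.0]],
    [[904.0, 0.0], [0.0, 1190.0]],
    [[896.0, 300.0], [0.0, 0.0]],
]
median_frame, importance = median_and_importance(buffer)
```

In this toy buffer the top-right pixel is valid in only one of three frames, so it receives an importance of 1, which marks it as a likely interference pixel.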

The importance map tells us the significance of each pixel in the median frame. A small importance value for a given pixel means a high probability that interference occurred at that point. For this reason, it is useful to remove such areas by thresholding.

Pixels in the median frame (Figure 9b) with importance higher than $k \cdot N$ are preserved; all other pixels are set to zero. Here $k$ is the importance map threshold and $N$ is the size of the buffer. The coefficient $k$ can take values from 0 to 1.
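A short sketch of this importance thresholding follows. It assumes that the cut-off is the product of k and N, which is how we read the definitions of k and N above, and that the comparison is strict ("higher than"). The function name `apply_importance_threshold` and the sample values are hypothetical.

```python
def apply_importance_threshold(median_frame, importance, k, n):
    """Keep median pixels whose importance exceeds k * n; zero the rest.

    k is the importance map threshold in [0, 1], n is the buffer size.
    The cut-off k * n is an assumption based on the surrounding text.
    """
    limit = k * n
    return [
        [m if imp > limit else 0.0 for m, imp in zip(m_row, i_row)]
        for m_row, i_row in zip(median_frame, importance)
    ]


median_frame = [[900.0, 300.0], [0.0, 1195.0]]
importance = [[3, 1], [0, 2]]
filtered = apply_importance_threshold(median_frame, importance, k=0.5, n=3)
```

With k = 0.5 and N = 3, a pixel must be valid in at least two of the three frames to survive, so the pixel with importance 1 is removed.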

The next phase of the IMBM filter is interpolation. Data removed from the median frame can be reconstructed using, for example, bilinear interpolation. The effect and influence of different interpolation methods are discussed in Reference [25].

Bilinear interpolation can be replaced by another interpolation method (e.g., bicubic or biquadratic). In terms of computation, bilinear interpolation is a good compromise between the nearest-neighbor method and more complex interpolation methods. Interpolation is limited when the depth map contains relatively large holes, and also when holes are located at the mouth, the eye corners, and so forth.

The interpolation method used is adapted for scanning the human head and face. We must avoid false reconstruction in the mentioned areas (mouth, eyes, etc.). Therefore, we do not interpolate over data where the depth varies significantly, over areas between small isolated particles, or over large areas affected by interference. Interpolation can be disabled in the IMBM algorithm or replaced by another interpolation method.

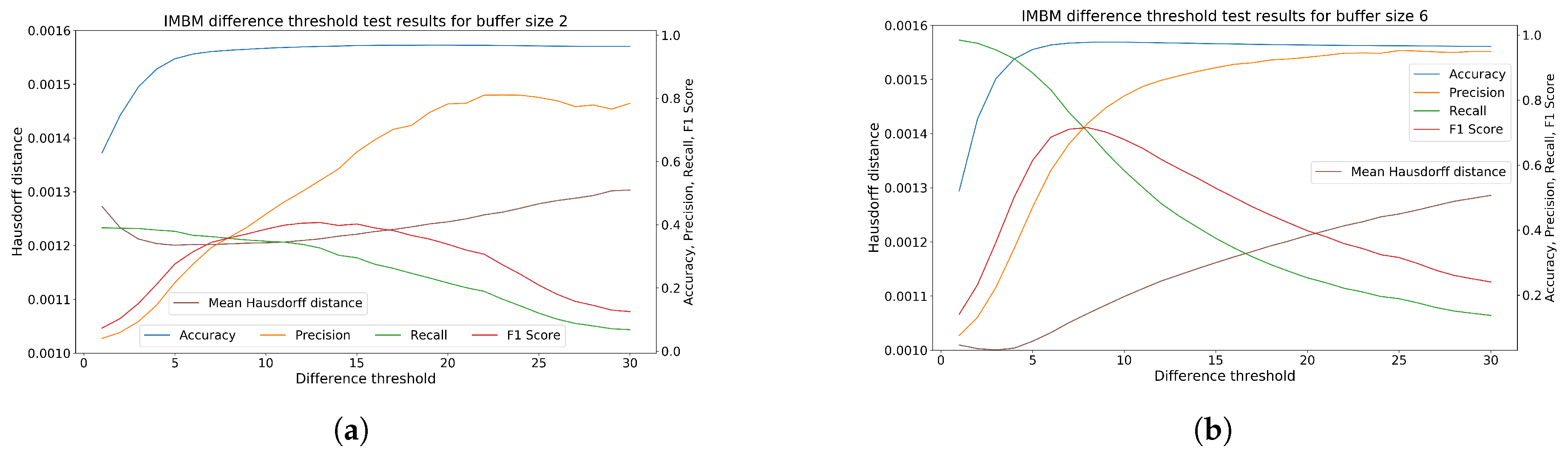

Using the median frame, we can subsequently repair input depth maps affected by interference. In the repair process, the pixels of each depth map are compared with the pixels of the median frame. If the absolute difference is greater than the selected difference threshold (this value is discussed in the experimental part in Section 6.4), the pixel in the original depth map is replaced by the median value (or by zero, depending on the mode of the IMBM method). The value of the difference threshold depends on the camera noise intensity at the given distance. If it is too low, a large number of non-interfering pixels will be replaced by the median value. If it is too high, the filter may preserve a large amount of interference in the original image. The filter output can take the form of the median frame or of the input images with interference removed.
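The repair step can be illustrated with the sketch below. The function name `repair_frame`, the `replace_with_zero` flag standing in for the two modes, and the difference threshold of 10 mm are hypothetical choices for the example; the value actually used is the one discussed in Section 6.4.

```python
def repair_frame(frame, median_frame, diff_threshold, replace_with_zero=False):
    """Replace pixels that deviate from the median frame by more than diff_threshold."""
    repaired = []
    for row, m_row in zip(frame, median_frame):
        new_row = []
        for d, m in zip(row, m_row):
            if abs(d - m) > diff_threshold:
                # Likely interference: substitute the median value or zero.
                new_row.append(0.0 if replace_with_zero else m)
            else:
                new_row.append(d)
        repaired.append(new_row)
    return repaired


frame = [[903.0, 1250.0], [0.0, 700.0]]
median_frame = [[900.0, 910.0], [0.0, 1195.0]]

# Hypothetical threshold of 10 mm, chosen only for this example.
repaired = repair_frame(frame, median_frame, diff_threshold=10.0)
zeroed = repair_frame(frame, median_frame, diff_threshold=10.0, replace_with_zero=True)
```

The pixel that differs from the median by 3 mm is kept as measured, while the two pixels that deviate by hundreds of millimeters are replaced, either by the median value or by zero.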

In general, applying the proposed algorithm improves the depth image (Figure 9c) by reducing flying pixels and noise and by filling holes in the resulting 3D model.