Abstract

To address the low efficiency of teach programming and the poor flexibility of robotic welding for complex box girder structures, a seam trajectory recognition method based on laser scanning displacement sensing was proposed. The method automatically guides the welding torch in the skip welding of spatially intermittent welding seams. First, a laser scanning displacement sensing system with an adaptive measuring angle was developed to detect the corner features of complex structures. Second, a weld trajectory recognition algorithm based on Euclidean distance discrimination was proposed. The algorithm extracts shape features by constructing characteristic triangles along the weld trajectory, and then processes the resulting set of shape features by discrete Fourier analysis to obtain a feature vector that describes the shape. Finally, the class of a test sample is identified from the Euclidean distance between its feature vector and the class matching library, which distinguishes the weld trajectory type. The experimental results show a classification accuracy of 100% for four typical spatially discontinuous welds in complex box girder structures, and the overall processing time for weld trajectory detection and classification does not exceed 65 ms. Based on this method, a field test was completed on a foldable special container production line. The results show that the proposed system can accurately identify discontinuous welds during high-speed metal active gas (MAG) arc welding at a welding speed of 1.2 m/min and guide the welding torch to complete skip welding automatically, which greatly improves welding efficiency and quality stability in the manufacture of complex box girder components.
The method requires neither time-consuming pre-welding teach programming nor calibration of a visual inspection system. It provides a new technical approach for the efficient and flexible welding of discontinuous seams in complex structures and is expected to be applicable to the welding of core components in heavy industries such as port cranes, large logistics transportation equipment, and rail transit.

1. Introduction

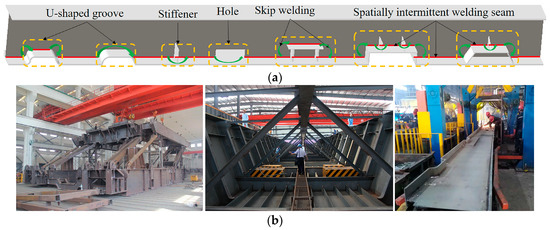

Complex box girders are critical components of equipment used in industries such as heavy machinery, special containers, ships, bridges, and heavy-duty vehicles [1,2,3,4], and welding is the key technology in their manufacture [1,2,3,4]. Because complex box girders contain features such as U-shaped grooves, holes, and reinforcing plates used to reduce welding deformation, a welding seam trajectory is usually an intermittent spatial curve (Figure 1). Such spatially intermittent welding seams usually have to be completed by changing welding positions and by skip welding.

Figure 1.

(a) Schematic diagram of skip welding; (b) typical example of spatial discontinuous welds in complex box girder.

With the development of information sensing and modern manufacturing technology, automated, robotized, flexible, and intelligent welding manufacturing has become an inevitable trend [4,5,6]. Nevertheless, complex box girders are still mainly welded manually or with mechanized welding equipment. Although welding robots have been applied to some box girder components [7,8], the complicated manufacturing process of complex box girders makes assembly positioning accuracy difficult to guarantee, especially in the tailor welding and combination welding of large, heavy components. Robotic welding of such components therefore cannot be realized without extensive teaching. Because of its low programming efficiency and lack of flexibility [9,10,11], robotic welding is difficult to adapt to the efficient, flexible, small-batch production of complex box girders.

To overcome the difficulties that welding robots face in teach and offline programming modes, many studies have explored ways to make robotic welding simpler and more effective [12,13,14,15,16,17,18,19,20,21]. Among these methods, welding seam trajectory recognition, which is used for welding path planning and welding seam tracking, is a significant means of improving the efficiency and flexibility of robotic welding.

Because of its high accuracy and non-contact operation, vision sensing has been widely applied in robotic welding and is considered one of the most promising welding seam trajectory recognition technologies [19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44]. Welding seam detection based on structured light vision sensing has received particular attention [20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37]. Hascoet et al. [23] used a single-line laser vision sensor to detect V-groove shape information, generated a torch path from this information, and proposed a welding strategy for the automated welding of ships. Prasarn et al. [24] developed a robotic seam tracking system based on a crossed structured light vision sensor and tracked butt-jointed seams in the V-grooves of thick plates. Li et al. [25] developed a robotic seam tracking system based on a single-line laser vision sensor for tracking butt-jointed seams in the V-grooves of medium-thick plates during gas metal arc welding. Zeng et al. [26] fused light information with structured light information to identify the positions of multi-layer/multi-pass welding (MLMPW) seams. Zou et al. [27] developed a robotic seam tracking system based on three-line laser vision to track complex curved overlap welding seams; they later used a three-line laser vision sensor to detect the positions and characteristics of welding seams and proposed a real-time position estimation method [28] that improved the adaptability of robotic welding positioning and the quality of complex curved welds. Zeng et al. [29] proposed a 3D path teaching method based on an X-type laser vision sensor and achieved 3D trajectory recognition of narrow butt-jointed seams. Zhang et al. [30] mounted a Keyence laser displacement sensor on an industrial robot to obtain 3D information about a workpiece through multi-segment scanning; they extracted seam characteristics by gray-scale image processing and reconstructed spatially complex curved seams with cubic smoothing splines, thereby detecting the characteristics of spatially complex curved overlap welding seams. Liu et al. [31] reconstructed the three-dimensional surface of the molten pool by projecting a 19 × 19 dot-matrix structured light pattern onto the weld pool area.

Other studies explored welding seam trajectory recognition based on passive vision sensing [38,39,40,41]. Ma et al. [38] proposed a seam tracking method that visually measured the offset between the seam center and the welding gun, achieving the tracking of straight butt-jointed seams. Mitchell et al. [39] applied reliable image matching and triangulation algorithms to identify and locate Z-type and S-type narrow butt-jointed seams robustly. Wang et al. [40] designed a measurement system consisting of a multi-optical magnifier, a camera, and an external light-emitting diode lamp, and devised a seam detection method based on narrow depth of field (NDOF) to detect narrow seams accurately in laser welding.

In some studies, binocular vision sensing was used for the 3D reconstruction of welding seam trajectories. Mitchell et al. [42] installed two color cameras on the welding gun of an industrial robot and used an adaptive linear growth algorithm to identify fillet welding seams robustly. Drago et al. [43] applied a 3D vision system for the online detection of 3D arc welding paths and the automatic identification of arc welding abnormalities. Yang et al. [44] designed a 3D structured light sensor using a DLP projector and two cameras to obtain 3D information about welding seams.

However, these studies focused mainly on the trajectory recognition of continuous welding seams, and their sensors required accurate and complex calibration before welding. In addition, their image processing methods were complicated and time-consuming and showed poor robustness in field environments. There is currently no reported study on the trajectory recognition of spatially intermittent welding seams in complex welded structures.

In this study, a welding seam trajectory recognition method was proposed for the automated skip-welding guidance of spatially intermittent welding seams. First, a laser scanning displacement sensing system with an adaptive measuring angle was designed to detect the corner features of complex structures in real time. Second, a trajectory recognition algorithm based on Euclidean distance discrimination was proposed to identify spatially intermittent welding seams. The algorithm extracts shape features by constructing characteristic triangles along the seam trajectory and then processes the shape feature set by discrete Fourier analysis to obtain a feature vector that describes the shape. Third, the class of a test sample was determined from the Euclidean distance between its feature vector and the class matching library, achieving the online automated recognition of spatially intermittent welding seams. The laser scanning displacement sensing system was used to collect trajectory samples of four typical spatially intermittent welding seams from 200 groups of complex box girder structures to test the classification accuracy of the model, and the effectiveness of the method was verified in a field test on a foldable special container production line.

The rest of the paper is structured as follows. Section 2 provides an overview of the experimental device used in this study, the principle of welding seam detection based on the experimental device, and the approach of recognizing the spatially intermittent welding seams. Section 3 describes the testing of the performance of the proposed method and the field testing of the automated skip welding of the foldable special container beam, and the test results are discussed. The conclusions are presented in Section 4.

2. Experimental Methods

2.1. Experimental Details

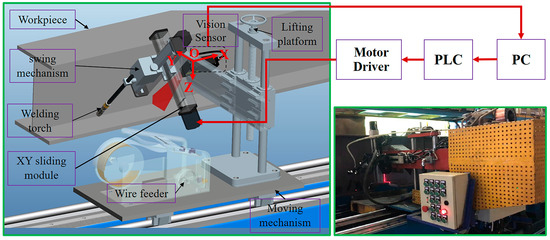

Figure 2 shows the experimental system and devices. The laser scanning displacement sensing system consisted of a laser generator (wavelength: 650 nm; power: 100 mW), a narrow band-pass filter passing 650 nm light, a CCD (TCD1208AP), a stepping motor, and a receiving lens. Rather than being attached to the welding gun, the sensing system was installed a certain distance ahead of it and was mainly used to measure the distance between the coordinate origin and the measurement point on the surface of the welding seam groove. A programmable logic controller (PLC) controlled all operations of the welding gun swing mechanism, the XY sliding module, and the moving mechanism required to complete the welding process. The XY sliding module moved the welding gun forward and backward, and the welding gun swing mechanism, mounted on the XY sliding module, swung the welding gun in a cone-shaped motion. The moving mechanism drove the whole device along the welding direction. The lifting platform was used to compensate for the overall height drop of the large workpiece tooling before welding. The main program algorithm and the computing tasks ran on a PC with an Intel(R) i5-8250 CPU (1.8 GHz main frequency, 8 GB RAM).

Figure 2.

The configuration of the experimental system.

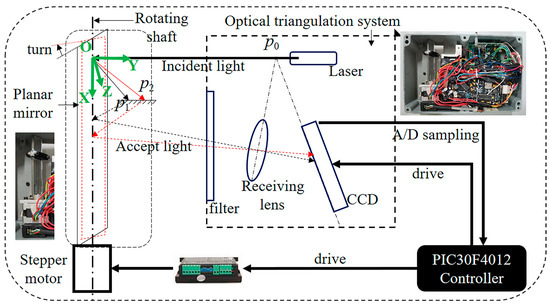

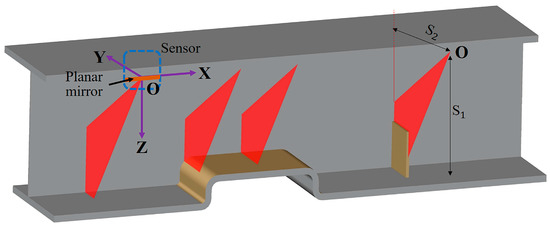

In this study, a laser scanning displacement sensing system was developed in-house by improving a conventional point laser displacement sensor. A rotary mirror device, consisting of a plane mirror and a stepping motor that rotates the mirror about its own axis, was added to the optical triangulation system of the point laser displacement sensor. A conventional point laser triangulation system can measure distance only along the direction of its laser beam, whereas the rotary mirror device changes the measurement direction. The designed system can therefore measure in multiple directions while its physical position and attitude remain constant, which greatly increases the measurement degrees of freedom of the sensor (Figure 3). As shown in Figure 3, the system mainly consisted of a point laser optical triangulation system and the rotary mirror device. The motor and the plane mirror were coaxial, and the axis of the emitted laser was perpendicular (90°) to the rotation axis of the stepping motor. During measurement, the stepping motor deflected the plane mirror, which deflected the incident laser beam and thereby changed the measurement direction of the triangulation system. The measurement principle was as follows: the laser beam emitted by the triangulation system was reflected by the plane mirror onto the workpiece; the laser spot on the workpiece surface was then reflected back by the plane mirror into the triangulation system; finally, the length of the incident laser beam at each deflection angle (e.g., the beam lengths at two different deflection angles) was solved according to the principle of triangulation.
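The two physical ingredients of this design can be illustrated with an idealized model. The sketch below assumes the simplest triangulation geometry, in which the laser axis is parallel to the receiving-lens axis at a baseline b and the spot is imaged at an offset x on the CCD behind a lens of focal length f, so that the range is d = b·f/x. It also uses the plane-mirror property that rotating the mirror by φ turns the reflected beam by 2φ. The baseline, focal length, pixel pitch, mirror offset, and all function names are hypothetical and are not parameters of the sensor described here.

```python
import math

# Hypothetical optical parameters, chosen only for illustration.
BASELINE_MM = 30.0       # offset between the laser axis and the receiving-lens axis
FOCAL_MM = 25.0          # focal length of the receiving lens
PIXEL_PITCH_MM = 0.014   # assumed CCD pixel pitch
MIRROR_OFFSET_MM = 50.0  # assumed optical path from the triangulation head to the mirror


def triangulated_range(spot_pixel: float) -> float:
    """Idealized triangulation: range d = b * f / x, with x the spot offset on the CCD."""
    x_mm = spot_pixel * PIXEL_PITCH_MM
    if x_mm <= 0.0:
        raise ValueError("spot offset must be positive")
    return BASELINE_MM * FOCAL_MM / x_mm


def incident_beam_length(spot_pixel: float) -> float:
    """Beam length from the mirror (origin O) to the workpiece under the assumed offset."""
    return triangulated_range(spot_pixel) - MIRROR_OFFSET_MM


def beam_angle_deg(mirror_rotation_deg: float, start_deg: float = 0.0) -> float:
    """Rotating a plane mirror by phi turns the reflected beam by 2 * phi."""
    return start_deg + 2.0 * mirror_rotation_deg


# A spot imaged 100 pixels from the reference point, and a 0.25 deg mirror step.
print(round(incident_beam_length(100), 2), beam_angle_deg(0.25))
```

Under this model, the range is inversely proportional to the spot offset, and the 0.5° step between adjacent beams used in the experiments would correspond to a 0.25° mirror step.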

Figure 3.

The configuration of the designed laser scanning displacement sensing system.

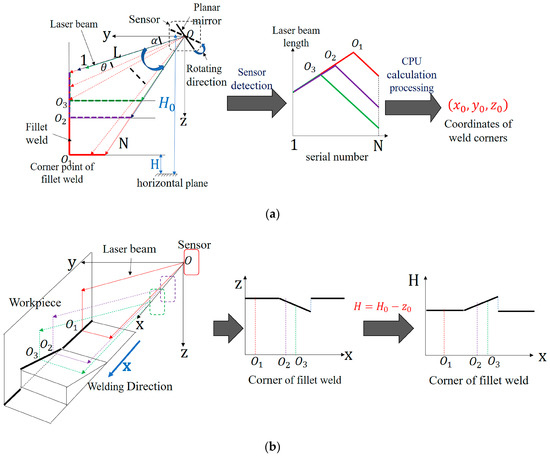

2.2. Corner Position and Trajectory Detection of Fillet Welds

Figure 4 shows a schematic diagram of welding position and trajectory detection. The origin O of the coordinate system was set at the intersection of the plane mirror axis of the laser scanning displacement sensing system (i.e., the motor shaft axis) and the incident laser axis. The plane mirror axis (i.e., the welding direction) was taken as the X axis, and the perpendiculars from the origin to the surfaces of the right-angle welding seam groove were taken as the Y and Z axes. As shown in Figure 4a, the rotary mirror device rotated the measuring beam counterclockwise from the starting angle α, took one measurement after each rotation step of θ, and transmitted the distance of each measurement point to the PC in real time. After N rotations and measurements, the sensing system returned to its original position; no measurement was performed during this reset. During the reset, the PC processed the measured data with the difference method to obtain the distances of the measurement points near the welding seam corner, and the coordinates of the fillet welding seam corner were then calculated from these distances and the corresponding deflection angles. As shown in Figure 4b, as the device moved along the welding direction, the corner trajectory of the right-angle welding seam was detected; the two-dimensional trajectory of this corner in the XZ plane was the object extracted and analyzed in this study. For convenience, this trajectory was characterized by the variation of the horizontal height H, i.e., the height of the welding seam corner above the level ground. As shown in Figure 5, the equation for solving the horizontal height H was

where the quantities involved are the moving length of the system device; the distance from the origin to the measurement point on the surface of the welding seam groove (i.e., the length of the incident laser beam); the reference height H0 from the origin to the level ground; the serial number of the measurement point; the number of measurements N within one scanning cycle; the angle θ between adjacent measurement laser beams; and the angle α between the initial measurement laser beam and the Y axis.
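To make the scanning and corner-detection procedure concrete, the sketch below simulates one scanning cycle over an ideal right-angle groove and locates the corner. It assumes that beam i (counting from 0) makes an angle α + iθ with the Y axis, that the corner is the measurement point where the second difference of the beam lengths has the largest magnitude (a simple stand-in for the difference method), and that the Z axis points toward the level ground, so that H = H0 − z. These conventions and the function names are illustrative assumptions and do not claim to reproduce the equation above. The input values are those given in Section 3.1 (S1 = 400 mm, S2 = 410 mm, H0 = 580 mm, α = 33.024°, θ = 0.5°, N = 40).

```python
import math


def simulate_scan(s1, s2, alpha_deg, theta_deg, n):
    """Beam lengths over an ideal right-angle groove: one wall at y = s1, the other at z = s2."""
    lengths, angles = [], []
    for i in range(n):
        phi = math.radians(alpha_deg + i * theta_deg)  # assumed angle of beam i from the Y axis
        # The beam stops at whichever groove surface it reaches first.
        lengths.append(min(s1 / math.cos(phi), s2 / math.sin(phi)))
        angles.append(phi)
    return lengths, angles


def locate_corner(lengths, angles):
    """Corner = interior point with the largest |second difference| of the beam lengths."""
    i_best = max(range(1, len(lengths) - 1),
                 key=lambda i: abs(lengths[i - 1] - 2.0 * lengths[i] + lengths[i + 1]))
    y = lengths[i_best] * math.cos(angles[i_best])
    z = lengths[i_best] * math.sin(angles[i_best])
    return i_best, y, z


def corner_height(h0, z):
    """Height of the corner above the ground, assuming the Z axis points toward the ground."""
    return h0 - z


lengths, angles = simulate_scan(400.0, 410.0, 33.024, 0.5, 40)
i_corner, y_c, z_c = locate_corner(lengths, angles)
h = corner_height(580.0, z_c)
print(i_corner, round(y_c, 1), round(z_c, 1), round(h, 1))
```

With these settings the selected beam lies within one 0.5° step of the true corner direction, so the estimate is off by a few millimetres; the angular step bounds the resolution of this simple scheme.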

Figure 4.

Schematic diagram of seam position and trajectory detection: (a) weld position detection; (b) weld trajectory detection.

Figure 5.

Definition of characteristic parameters of a seam trajectory.

2.3. Seam Trajectory Features Extraction

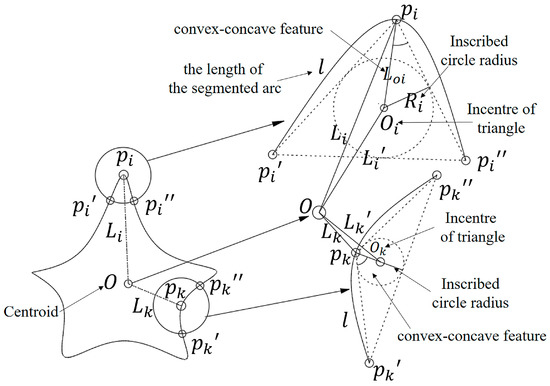

Two shape features of the contour curve of an object were used: the inscribed radius and the concave-convex feature of a characteristic triangle. The characteristic triangle was constructed as follows. The contour curve was first sampled uniformly. Starting from an arbitrary sampling point, two further points were located clockwise and counterclockwise along the contour curve, each at the same arc length from the starting point; this arc length is called the segmented arc length. The starting point and the two located points form the characteristic triangle of the starting point, which describes the shape of the contour curve at that point. Figure 5 shows two arbitrary sampling points on the contour curve, the shape center (centroid) of the contour, the characteristic triangles at the two sampling points, and the incenters of these triangles. It also marks the distances from the centroid to each sampling point, the distances from the centroid to each incenter, the distances from each sampling point to the incenter of its characteristic triangle, and the inscribed circle radii of the two characteristic triangles.
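The construction can be sketched in a few lines, assuming the trajectory is stored as uniformly sampled (x, y) points, so that the segmented arc length corresponds to k sampling intervals and the characteristic triangle at point i uses points i − k, i, and i + k. Clamping the indices at the ends of an open trajectory, taking the contour centroid as the mean of the sampling points, and all function names are assumptions of this sketch. The incenter uses the standard identity that it is the average of the vertices weighted by the lengths of the opposite sides.

```python
import math


def characteristic_triangle(points, i, k):
    """Vertices (P[i-k], P[i], P[i+k]) of a uniformly sampled open curve; indices are clamped."""
    n = len(points)
    return points[max(i - k, 0)], points[i], points[min(i + k, n - 1)]


def incenter(a_pt, b_pt, c_pt):
    """Incenter = (a*A + b*B + c*C) / (a + b + c), where a, b, c are the opposite side lengths."""
    a = math.dist(b_pt, c_pt)
    b = math.dist(c_pt, a_pt)
    c = math.dist(a_pt, b_pt)
    perimeter = a + b + c
    if perimeter == 0.0:
        return a_pt  # all three vertices coincide
    return ((a * a_pt[0] + b * b_pt[0] + c * c_pt[0]) / perimeter,
            (a * a_pt[1] + b * b_pt[1] + c * c_pt[1]) / perimeter)


def centroid(points):
    """Shape center approximated as the mean of the sampling points (an assumption)."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


line = [(float(x), 0.0) for x in range(10)]
tri = characteristic_triangle(line, 5, 2)
print(tri, incenter(*tri))
```

On a straight segment the characteristic triangle degenerates and its incenter coincides with the sampling point, so both shape features vanish there.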

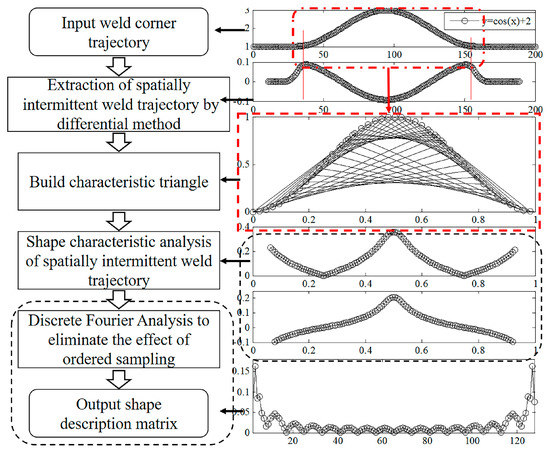

The shape analysis of the spatially intermittent welding seam corner trajectory consisted of three parts. First, the corner trajectory was analyzed with the difference method to extract the data of the spatially intermittent segment. Second, the shape features of this segment were extracted. Third, a Fourier analysis was conducted on the set of shape features to solve the Fourier shape descriptor of the trajectory. Figure 6 shows the flow chart of this shape analysis applied to a cosine curve. After the difference method extracted the trajectory segment, characteristic triangles were constructed along it and two shape features (the inscribed radius and the degree of concavity-convexity) were extracted. To eliminate the influence of the starting point, the two shape features were combined in complex-number form into a shape feature set, which was then processed by discrete Fourier analysis to obtain the feature vector (the Fourier shape descriptor) describing the shape. Finally, the category of a sample was determined from the Euclidean distance between the feature vector of the test sample and the class matching library.

Figure 6.

The processing result of each step of the proposed features extraction algorithm.

Taking the welding seam trajectory as a discrete sequence, the difference between its left (backward) and right (forward) differences at each point could be expressed by Equation (2).

In this equation, the backward and forward differences are taken over a window whose length equals the number of data points traversed by the difference method. An appropriately chosen window length suppresses noise interference.

A difference analysis was performed on the spatially intermittent welding seam corner trajectory with Equation (2), and the first and last extreme points of the result were located. The trajectory interval between these two extreme points was the segment from which the shape features were extracted.
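The procedure can be sketched under one plausible reading of Equation (2): at sample j, the backward difference over a window of n points is f(j) − f(j − n), the forward difference is f(j + n) − f(j), and their difference is the response. Treating the "extreme points" as local maxima of the absolute response that exceed a threshold, the threshold value, and the function names are assumptions of this sketch.

```python
def window_difference(f, n):
    """Backward minus forward difference over a window of n samples (0 where undefined)."""
    out = [0.0] * len(f)
    for j in range(n, len(f) - n):
        backward = f[j] - f[j - n]
        forward = f[j + n] - f[j]
        out[j] = backward - forward
    return out


def feature_interval(f, n, threshold):
    """Indices of the first and last local maxima of |response| above the threshold."""
    d = window_difference(f, n)
    peaks = [j for j in range(1, len(d) - 1)
             if abs(d[j]) >= threshold
             and abs(d[j]) >= abs(d[j - 1]) and abs(d[j]) >= abs(d[j + 1])]
    if not peaks:
        return None  # no feature segment found
    return peaks[0], peaks[-1]


# Synthetic trapezoid-shaped height profile: flat, ramp up, flat top, ramp down, flat.
profile = ([0.0] * 20 + [float(v) for v in range(1, 11)] + [10.0] * 20
           + [float(v) for v in range(9, -1, -1)] + [0.0] * 20)
print(feature_interval(profile, 3, 2.5))
```

On this synthetic profile the four knees give the strongest responses and linear ramps give zero response, so the first and last knees bound the feature segment. A larger window n smooths noise at the cost of blurring closely spaced knees.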

The inscribed radius, defined as the ratio of the area of the characteristic triangle to its half-perimeter, mainly describes the global features of the shape at a sampling point. As shown in Figure 5, Equation (3) for the inscribed radius of the characteristic triangle at a sampling point was derived from Heron's formula:

where the inscribed radius of the characteristic triangle at the sampling point is expressed in terms of the three side lengths of the triangle (the pairwise distances between its three vertices) and its half-perimeter.
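Because the inscribed radius is the area divided by the half-perimeter s, Heron's formula gives r = √((s − a)(s − b)(s − c)/s) for side lengths a, b, and c. A minimal sketch follows; the function name and the choice to return 0 for degenerate (collinear) triangles are assumptions.

```python
import math


def inscribed_radius(a: float, b: float, c: float) -> float:
    """Inscribed radius r = area / s, with the area from Heron's formula and s the half-perimeter."""
    s = (a + b + c) / 2.0
    area_sq = s * (s - a) * (s - b) * (s - c)
    if s == 0.0 or area_sq <= 0.0:
        return 0.0  # degenerate (collinear) triangle, e.g. on a straight segment
    return math.sqrt(area_sq) / s


print(inscribed_radius(3.0, 4.0, 5.0), inscribed_radius(1.0, 1.0, 2.0))
```

A 3-4-5 right triangle has area 6 and half-perimeter 6, so its inscribed radius is 1. Collinear vertices give a radius of 0, which is why straight trajectory segments yield a zero-valued feature.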

The concave-convex feature was the product of the concavity-convexity of the shape and the concave-convex height. As shown in Figure 5, the concavity-convexity was defined as follows. When the distance from the contour centroid to a sampling point was greater than the distance from the centroid to the incenter of the characteristic triangle at that point, the contour at that point was convex with respect to the centroid and the concavity-convexity was positive. Conversely, when the distance from the centroid to the sampling point was smaller than the distance from the centroid to the incenter, the contour at that point was concave with respect to the centroid and the concavity-convexity was negative.

The concave-convex height was defined as the distance between the sampling point and the incenter of its characteristic triangle. The greater this height, the steeper the slope of the shape; the smaller it is, the gentler the slope.

Therefore, the equation for solving the degree of concave-convex feature (CCH) was
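Following the definitions above, the concave-convex feature can be sketched as the signed distance between a sampling point and the incenter of its characteristic triangle, with the sign given by comparing the two centroid distances. The function name and the choice to return 0 when the two centroid distances are equal (a case the text does not address) are assumptions.

```python
import math


def concave_convex_feature(sample_pt, incenter_pt, centroid_pt):
    """Signed concave-convex height: +|P I| if convex w.r.t. the centroid, -|P I| if concave."""
    height = math.dist(sample_pt, incenter_pt)        # concave-convex height
    d_point = math.dist(centroid_pt, sample_pt)       # centroid -> sampling point
    d_incenter = math.dist(centroid_pt, incenter_pt)  # centroid -> incenter
    if d_point > d_incenter:
        return height    # convex with respect to the centroid
    if d_point < d_incenter:
        return -height   # concave with respect to the centroid
    return 0.0           # sign undefined; treated as zero here (assumption)


print(concave_convex_feature((0.0, 10.0), (0.0, 8.0), (0.0, 0.0)))
```
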

To sample the contour curve in order, a starting point had to be selected. Changing the starting point circularly shifts the sampling point set, which changes only the phases of the corresponding Fourier transform coefficients while their amplitudes remain constant. Therefore, if only the amplitudes of the Fourier coefficients are used to describe the final shape feature, the influence of the starting point on the Fourier shape descriptor is eliminated. Accordingly, the inscribed radius, normalized over all sampling points, was combined with the concave-convex feature in complex-number form to obtain a set of complex numbers (hereinafter referred to as the shape feature complex function) that corresponds to the set of contour sampling points and characterizes their shape features. The real part of the complex function describes the global shape feature of the contour curve at each sampling point, and the imaginary part characterizes the local detailed shape features at that point.

A discrete Fourier transform was performed on the shape feature complex function, and the Fourier transform coefficient was calculated using the following equation:

where the sum runs over all sampling points of the shape feature complex function, and the frequency domain variable indexes the resulting Fourier transform coefficients.

The modulus of each Fourier transform coefficient obtained with Equation (5) was then calculated, and the Fourier shape descriptor was solved as

where the Fourier shape descriptor is formed from the moduli of the Fourier transform coefficients, indexed by the frequency domain variable.
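The starting-point argument and the descriptor computation can be checked together on toy values. The sketch below builds the shape feature complex function, circularly shifts its starting point, and compares the moduli of a plain discrete Fourier transform. A circular shift by s samples multiplies each coefficient F(u) by exp(2πjus/N), a unit-modulus factor, so the moduli are unchanged while the phases change. Normalizing the inscribed radius by its maximum, dividing the moduli by N, the optional truncation to the first coefficients, the sample values, and the function names are all assumptions, since these details are not specified here.

```python
import cmath


def dft(z):
    """Plain O(N^2) DFT: F(u) = sum_m z[m] * exp(-2j*pi*u*m/N)."""
    n = len(z)
    return [sum(z[m] * cmath.exp(-2j * cmath.pi * u * m / n) for m in range(n))
            for u in range(n)]


def shape_feature_complex(radii, cch):
    """Normalized inscribed radius as the real part, concave-convex feature as the imaginary part."""
    r_max = max(radii) or 1.0  # max-normalization is an assumption
    return [complex(r / r_max, h) for r, h in zip(radii, cch)]


def fourier_descriptor(z, n_coeffs=None):
    """Moduli |F(u)| divided by N; keeping only the first n_coeffs terms is optional."""
    n = len(z)
    mags = [abs(c) / n for c in dft(z)]
    return mags if n_coeffs is None else mags[:n_coeffs]


z = shape_feature_complex([1.0, 2.0, 3.0, 2.5, 1.5, 0.5],
                          [0.2, -0.1, 0.4, 0.0, -0.3, 0.1])
z_shifted = z[2:] + z[:2]  # same samples, different starting point
d1 = fourier_descriptor(z)
d2 = fourier_descriptor(z_shifted)
print(max(abs(a - b) for a, b in zip(d1, d2)))
```

The plain O(N²) transform keeps the sketch dependency-free; for long trajectories an FFT routine would give the same coefficients more efficiently.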

2.4. Classification Method Based on Euclidean Distance

The category of a test sample was determined by the Euclidean distance between its Fourier shape descriptor and each category in the category matching library. The Euclidean distance served as the similarity measure: the smaller the distance, the greater the similarity. The test sample was therefore assigned to the category in the matching library that was most similar to it. The calculation equation was as follows:

where the distance between the test sample and each category is the square root of the sum, over the frequency domain variable k, of the squared differences between the moduli of the k-th Fourier transform coefficients of the test sample's descriptor and of that category's descriptor in the matching library; the test sample is assigned to the category with the smallest distance.
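The nearest-library rule can be sketched as follows. The descriptor values and category keys in the usage lines are made-up toy numbers, not measured data, and the function names are illustrative.

```python
import math


def euclidean(d1, d2):
    """Euclidean distance between two Fourier shape descriptors of equal length."""
    if len(d1) != len(d2):
        raise ValueError("descriptors must have the same length")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(d1, d2)))


def classify(descriptor, library):
    """Return the category whose library descriptor is nearest, together with all distances."""
    distances = {cat: euclidean(descriptor, ref) for cat, ref in library.items()}
    return min(distances, key=distances.get), distances


# Toy matching library and test descriptor (illustrative values only).
library = {1: [0.50, 0.20, 0.10], 2: [0.40, 0.30, 0.05], 3: [0.60, 0.10, 0.20]}
sample = [0.49, 0.21, 0.10]
category, dists = classify(sample, library)
print(category, {k: round(v, 4) for k, v in dists.items()})
```

Keeping all distances, and not only the winning category, makes it easy to inspect the margin between the nearest and second-nearest categories, which is what the distance thresholds reported in Section 3.2 describe.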

The category matching library was obtained from training samples with the least squares method. First, the Fourier shape descriptors of the training samples were solved with the method described above. The least squares method was then used to calculate the optimal descriptor matching the training samples of a category, and this optimal solution served as the matching library entry for that category. The relevant equations were as follows:

where the squared loss function of the least squares method sums, over all training samples of a category, the squared differences between the Fourier descriptor of each training sample and the candidate matching descriptor, and the optimal solution is the candidate that minimizes this loss.
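If the loss has the form J(D) = Σᵢ ‖Dᵢ − D‖² over M training descriptors Dᵢ, setting its gradient −2 Σᵢ (Dᵢ − D) to zero gives D* = (1/M) Σᵢ Dᵢ. In other words, the least squares matching descriptor is the element-wise mean of the training descriptors. The sketch below assumes this form of the loss, which matches the verbal definition above; the training values and function names are hypothetical.

```python
def squared_loss(candidate, training):
    """J(D) = sum over training descriptors D_i of ||D_i - D||^2."""
    return sum(sum((a - b) ** 2 for a, b in zip(d_i, candidate)) for d_i in training)


def matching_descriptor(training):
    """Least squares optimum of J: the element-wise mean of the training descriptors."""
    m = len(training)
    return [sum(col) / m for col in zip(*training)]


# Three toy training descriptors for one category (illustrative values only).
training = [[0.50, 0.20, 0.10], [0.52, 0.18, 0.11], [0.48, 0.22, 0.09]]
entry = matching_descriptor(training)
print([round(v, 4) for v in entry], round(squared_loss(entry, training), 6))
```

Moving the candidate away from the mean by a vector δ raises the loss by exactly M‖δ‖², so no other candidate can do better.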

3. Results and Discussion

3.1. Results of 3D Trajectory Detection and Corner Trajectory Shape Feature Extraction of Welds

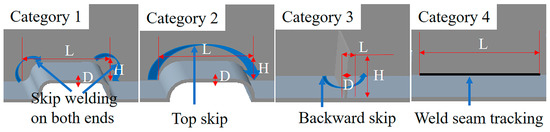

Figure 7 shows the four trajectory shapes studied, Categories 1, 2, 3, and 4, which are the most common categories in welded complex box girder structures. The categories were distinguished by the shape of the welding seam trajectory and by the welding process each requires. As shown in Figure 8, Category 1 was S-shaped at both ends and straight at the top; when welding Category 1, the welding gun skipped the S-shaped ends and welded the straight top. Category 2 was arc-shaped at both ends and straight at the top; when welding Category 2, the welding gun skipped the top and welded the normal welding section behind it. Category 3 was rectangular and is generally used to prevent welding deformation; the welding gun skipped Category 3 and welded the normal welding section behind it. Category 4 was a normal weld, for which seam tracking was required during welding. Considering the welding speed, the measurement range of the laser scanning displacement sensor, and its look-ahead scanning distance, the size parameters of the four categories had to lie within specific applicable ranges (Table 1).

Figure 7.

Schematic diagram of welding treatment for Categories 1, 2, 3, and 4.

Figure 8.

Laser displacement sensor detecting the workpiece.

Table 1.

Table of dimensional parameters for Categories 1, 2, 3, and 4.

As shown in Figure 4 and Figure 8, a coordinate system was established with the intersection point between the rotary mirror axis (i.e., the axis of the motor shaft) and the incident laser axis as the origin O. The plane mirror axis (i.e., the welding direction) was established as the X axis, and the perpendicular lines pointing from the origin to the surface of the right-angle welding seam groove were established as the Y and Z axes. At the starting position detected by the system device, the vertical distances from the origin O to the surface of the fillet welding seams in the Y and Z directions were 400 and 410 mm, respectively, and the horizontal height between the origin O and the level ground was 580 mm. The laser scanning displacement sensor scanned counterclockwise, with the angle between the initial measurement laser beam and the Y axis being 33.024°, the angle between adjacent measurement laser beams being 0.5°, and the number of measurements being 40.
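These scan settings can be checked against the groove geometry with a short calculation. Assuming that beam i (i = 0, ..., N − 1) points at α + iθ from the Y axis and that the corner lies at (S1, S2) = (400 mm, 410 mm) in the YZ plane, the 40 beams sweep from 33.024° to 52.524°, and the corner lies about 45.7° from the Y axis, near the middle of the sweep. The variable names are illustrative.

```python
import math

# Values taken from the text; the beam-indexing convention is an assumption.
S1_MM, S2_MM = 400.0, 410.0  # distances from O to the Y-side and Z-side groove surfaces
ALPHA_DEG, THETA_DEG, N = 33.024, 0.5, 40

first_beam_deg = ALPHA_DEG
last_beam_deg = ALPHA_DEG + (N - 1) * THETA_DEG      # assumes beams at ALPHA + i*THETA, i = 0..N-1
corner_deg = math.degrees(math.atan2(S2_MM, S1_MM))  # direction of the groove corner seen from O
nearest_beam = round((corner_deg - ALPHA_DEG) / THETA_DEG)

print(f"sweep {first_beam_deg:.3f} to {last_beam_deg:.3f} deg; "
      f"corner at {corner_deg:.3f} deg, closest to beam {nearest_beam}")
```

Placing the corner near the middle of the sweep leaves roughly 12° of margin on each side, which tolerates some variation in groove position without losing the corner.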

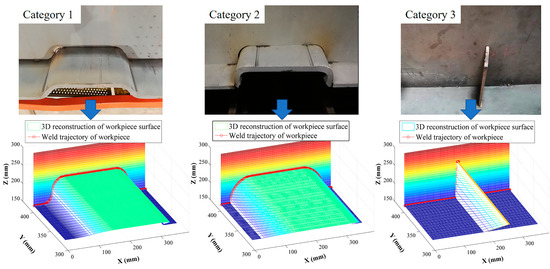

Figure 9 shows the 3D reconstruction results for the system’s detection of the welding seam trajectory in the moving state, for which the moving speed (i.e., the welding speed) was 1.2 m/min, the moving length was 350 mm, and the scanning frequency of the laser scanning displacement sensor was 20 Hz. Category 1 had a size of , Category 2 had a size of , and Category 3 had a size of . Figure 10 shows the welding seam corner trajectories of Categories 1, 2, and 3 that were extracted using the difference method.
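The moving speed and scanning frequency determine the spacing of the corner samples along the weld. The arithmetic below converts 1.2 m/min to 20 mm/s. At 20 Hz this gives one scan every 1 mm (a 50 ms scan period), which is consistent with the 1 mm sampling interval quoted later for the shape features, and 350 scans over the 350 mm moving length. The helper name is illustrative.

```python
def scan_spacing_mm(speed_m_per_min: float, scan_hz: float) -> float:
    """Distance travelled along the weld between two successive scans."""
    speed_mm_per_s = speed_m_per_min * 1000.0 / 60.0
    return speed_mm_per_s / scan_hz


spacing = scan_spacing_mm(1.2, 20.0)             # mm between successive scans
n_scans = round(350.0 / spacing)                 # scans over the 350 mm moving length
duration_s = 350.0 / (1.2 * 1000.0 / 60.0)       # time to travel 350 mm
period_ms = 1000.0 / 20.0                        # duration of one scanning cycle
print(spacing, n_scans, duration_s, period_ms)
```
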

Figure 9.

3D reconstruction results of welding seam.

Figure 10.

Weld corner trajectories of Categories 1, 2, and 3.

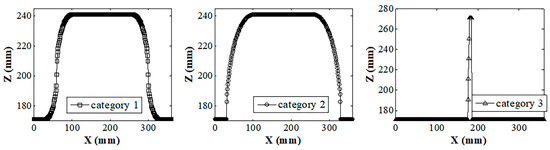

Figure 11 shows the shape features of the welding seam corner trajectory shown in Figure 10 that were extracted using the method mentioned in Section 2, with a segmented arc length of 20 sampling intervals (the sampling interval in this article is 1 mm). Figure 11a shows the shape feature curve represented by an inscribed radius. Figure 11b shows the shape feature curve characterized by the degree of concavity–convexity. Figure 11c shows the Fourier shape descriptor that was solved using the shape feature extraction method. By comparing the T1, T2, and T3 areas in Figure 11a–c, it was found that the extracted shape features could effectively reflect the differences between the welding seam corner trajectories of Categories 1, 2, and 3.

Figure 11.

Shape extraction results for corner trajectories of welds in Categories 1, 2, and 3: (a) inscribed circle radius; (b) concavity and convexity; (c) Fourier shape descriptors.

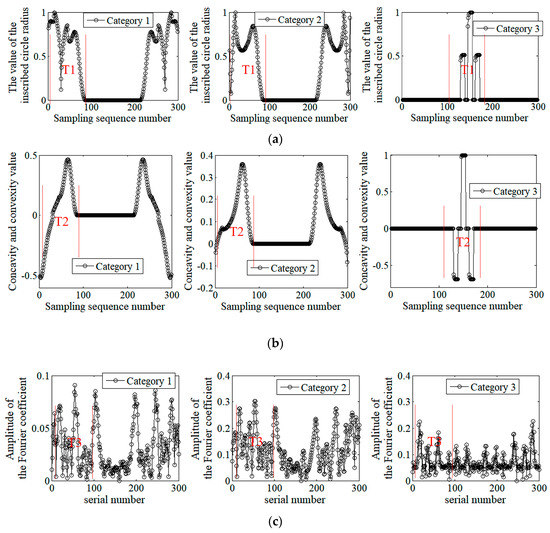

Taking the welding seam corner trajectory of Category 1 in Figure 11a as an example, characteristic triangles for shape feature extraction were constructed with segmented arc lengths of 4, 16, and 32 sampling intervals; the results are shown in Figure 12. In Figure 12, the upper plots show the shape feature curves represented by the inscribed radius of the characteristic triangle, and the lower plots show those represented by the degree of concavity-convexity; area A corresponds to the straight top part of Category 1, and area B to the S-shaped parts on both sides.

Figure 12.

Shape characteristic curves under different segmentation arc lengths.

As shown in Figure 12, with a segmented arc length of four sampling intervals, area B of the shape feature curve fluctuated strongly, while area A was a straight line with a value of 0. With 16 sampling intervals, area B showed small fluctuations and area A was narrower than with four sampling intervals. With 32 sampling intervals, area B barely fluctuated and area A was shorter than with four or 16 sampling intervals. These observations indicate that a short segmented arc makes the shape feature curve reveal mainly local detail, whereas a long segmented arc makes it reflect mainly the global shape. The segmented arc of the characteristic triangle should therefore be neither too long nor too short, so that both local details and global shape features are fully reflected. Considering both the sampling interval and the trajectory scale, the length of the segmented arc of the characteristic triangle was expressed as

where is the length of the segmented arc of the characteristic triangle, denotes the number of sampling points ( is close to the value of ), is the empirical compensation value, and represents the sampling interval.
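
A minimal sketch of the two characteristic-triangle features may help make the effect of the segmented arc length concrete. Because the construction is not detailed in this section, the sketch assumes (hypothetically) that the triangle at sample i joins the samples k intervals behind and ahead of it, where k is the segmented arc length in sampling intervals; the inscribed radius is computed as r = 2S/C from the triangle area S and perimeter C, and the degree of concavity-convexity is reduced to the sign of the signed area. The function name `triangle_features` and the tolerance `eps` are illustrative only.

```python
import math


def triangle_features(points, k, eps=1e-9):
    """Return (inscribed_radius, convexity) for each interior trajectory sample.

    points: list of (y, z) samples along the trajectory.
    k: segmented arc length in sampling intervals (hypothetical parameter name).
    eps: tolerance below which the triangle is treated as degenerate (straight run).
    """
    features = []
    for i in range(k, len(points) - k):
        # Assumed construction: vertices k samples behind, at, and k samples ahead of i.
        (ax, ay), (bx, by), (cx, cy) = points[i - k], points[i], points[i + k]
        # Twice the signed area, from the 2D cross product of (B - A) and (C - A).
        cross = (bx - ax) * (cy - ay) - (by - ay) * (cx - ax)
        area = abs(cross) / 2.0
        perimeter = (math.dist((ax, ay), (bx, by))
                     + math.dist((bx, by), (cx, cy))
                     + math.dist((cx, cy), (ax, ay)))
        # Incircle radius r = area / semiperimeter = 2 * area / perimeter; 0 when collinear.
        radius = 2.0 * area / perimeter if perimeter > 0 else 0.0
        # Concavity-convexity as a sign: +1 left turn, -1 right turn, 0 straight.
        convexity = (cross > eps) - (cross < -eps)
        features.append((radius, convexity))
    return features
```

On a straight run the three vertices are collinear, so the radius is 0, which is consistent with the flat zero-valued area A in Figure 12; increasing k enlarges each triangle and smooths local fluctuations, which is the local-versus-global trade-off described above.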

3.2. Classification Experiment Based on the Euclidean Distance

To verify the effectiveness of the proposed method in recognizing the welding seam trajectories of Categories 1, 2, and 3, classification experiments were carried out. The welding seam trajectory data detected in these experiments served as the test samples for classification. The experimental device performed detection in the coordinate system established in Figure 8, and the experimental parameters are listed in Table 2. In Table 2, S1 and S2 are the vertical distances from the origin O to the surfaces of the welding seam grooves along the Y and Z axes, α is the angle between the initial measurement laser beam and the Y axis, θ is the angle between adjacent measurement laser beams, N is the number of measurements taken by the sensor within one cycle, V is the moving speed of the system device, and F is the scanning frequency of the laser scanning displacement sensor. The laser scanning displacement sensor scanned counterclockwise, and the height H0 of the origin O above the level ground was 580 mm. Category 1 had a size of (mm), Category 2 had a size of (mm), and Category 3 had a size of (mm), and the segmented arc forming the characteristic triangle was set to 22 sampling intervals.

Table 2.

Experimental parameters of Categories 1, 2, and 3 weld trajectory detection.

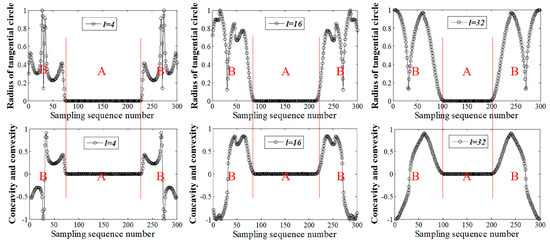

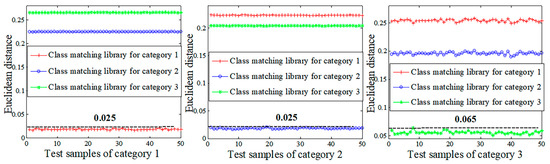

Ten sets of welding seam trajectory data were randomly selected from the trajectory data of Categories 1, 2, and 3 and used as training samples to solve the classification matching library by the least squares method. Fifty sets of welding seam trajectory data per category were then selected as test samples, and the Euclidean distance between each test sample and the matching libraries was calculated. The results are shown in Figure 13. As shown in Figure 13, the Euclidean distances between the test samples of Categories 1 and 2 and their own matching libraries were all less than 0.025, and those between the Category 3 test samples and their matching library were less than 0.065. Every one of the 150 test samples had its smallest Euclidean distance to the matching library of its own class, indicating that the proposed method correctly recognized all 150 test samples (100% accuracy).
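
The classification steps described above can be sketched as follows, under stated assumptions. The sketch assumes (hypothetically) that the feature vector consists of the magnitudes of DFT harmonics 1 to K of a shape feature curve, normalized to unit length; that each class template in the matching library is the least-squares fit to its training vectors (for a single template, the vector minimizing the summed squared error is simply their mean); and that a test sample is assigned to the template at the smallest Euclidean distance. The function names and K = 8 are illustrative choices, not values from the paper.

```python
import cmath
import math


def dft_descriptor(curve, n_harmonics=8):
    """Unit-length magnitudes of DFT harmonics 1..n_harmonics of a shape feature curve."""
    n = len(curve)
    mags = []
    for m in range(1, n_harmonics + 1):
        # Direct DFT coefficient X_m = sum_t x_t * exp(-2*pi*j*m*t/n).
        coeff = sum(x * cmath.exp(-2j * math.pi * m * t / n) for t, x in enumerate(curve))
        mags.append(abs(coeff))
    norm = math.sqrt(sum(v * v for v in mags))
    return [v / norm for v in mags] if norm > 0 else mags


def fit_library(training):
    """training: {label: [feature_vector, ...]} -> {label: template}.

    The least-squares template minimizing sum ||x - c||^2 is the component-wise mean.
    """
    library = {}
    for label, vectors in training.items():
        dim = len(vectors[0])
        library[label] = [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]
    return library


def classify(feature, library):
    """Return (label, distance) of the template nearest to feature in Euclidean distance."""
    label, template = min(library.items(), key=lambda item: math.dist(feature, item[1]))
    return label, math.dist(feature, template)
```

Taking DFT magnitudes makes the descriptor insensitive to a circular shift of the curve's starting sample, and unit-length normalization removes overall scale; both are design choices of this sketch. The returned distance plays the role of the per-sample distances plotted in Figure 13, although the values quoted there depend on the authors' actual feature definition.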

Figure 13.

Classification test results for Categories 1, 2, and 3 test samples.

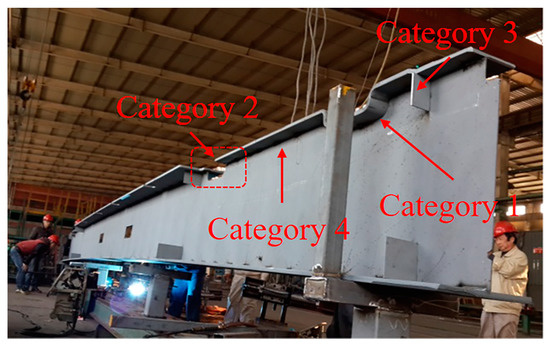

Figure 14.

Example of workpiece of the folding special container girder.

The processing time of the algorithm was measured with MATLAB timing functions. The results were as follows: (1) communication between the sensor and the PC took no more than 15 ms; (2) real-time extraction of the welding seam corner trajectory took no more than 30 ms; and (3) shape analysis and classification of the trajectories took no more than 20 ms. The total time cost therefore did not exceed 65 ms, which satisfies the requirement for online identification of welding seam trajectory types during welding. These experimental results show that the method can accurately detect the positions of 3D welding seams and identify the welding seam corner trajectory in real time while welding complex box girder structures.

3.3. Welding Experiment and Field Test Validation

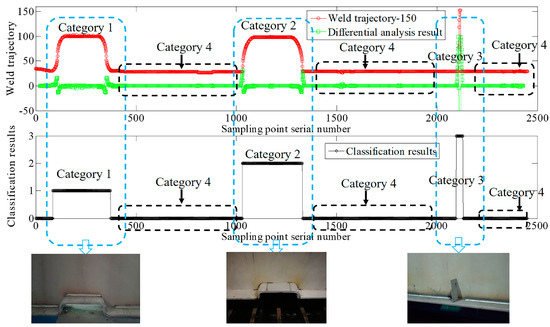

The most common workpiece of the foldable special container was selected as the field test sample. The workpiece was 14,000 mm long, 300 mm wide, and 500 mm high (Figure 14). A coordinate system was established for the experimental device, as shown in Figure 8. In the YZ plane, S2 and S1, the vertical distances from the origin O to the surfaces of the welding seam grooves in the Y and Z directions, were 390 and 400 mm, respectively. The height of the origin O above the level ground was 590 mm, and the distance from the origin O to the welding gun was 500 mm. Along the welding direction, the moving mechanism drove the laser scanning displacement sensor at 1.2 m/min (the welding speed) over a travel length of 2500 mm. The sensor scanned counterclockwise at a scanning frequency of 20 Hz, with the initial measurement laser beam at 32° to the Y axis, an angle of 0.5° between adjacent measurement laser beams, and 40 measurements per cycle. The welding voltage was 30 V, the welding current was 320 A, and the shielding gas was a 1:4 mixture of carbon dioxide and argon. Figure 15 shows the experimental results obtained with these parameters.

Figure 15.

Results of weld trajectory identification and automated skip welding in field tests.

As shown in Figure 15, the laser scanning displacement sensor measured the distance between the origin O and each measuring point on the groove surface of the workpiece's fillet welding seam and sent the measured distances to the PC processor in real time. Once the PC processor had received the distance data for one scanning cycle, it used the difference method to solve the coordinates of the fillet welding seam. In this way, the system device acquired the fillet welding seam corner trajectory in real time during mobile welding and performed real-time differential analysis on it to extract the corner trajectories of Categories 1, 2, and 3. The shape features of each extracted corner trajectory were then analyzed and classified using the method proposed in this study. Based on the online classification results, the PC processor first created sub-welding tasks for the trajectory segment occupied by the identified class (the sub-welding tasks of Categories 1, 2, and 3 are shown in Figure 7), then inserted these sub-tasks into the corresponding guide trajectory of the welding torch, and finally commanded the PLC controller to drive the four-axis robot so that the welding torch performed the skip welding operation. As shown in Figure 15 and Table 3, the proposed method welded the workpiece at a high speed of 1.2 m/min, applying the correct welding process according to the identified fillet welding seam corner trajectory, which verifies the effectiveness of the proposed method in practical application. As required by the on-site manufacturing process, a certain unwelded margin was left at both ends of the Category 2 test samples.
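
The difference method is not spelled out in this section, so the following is only one plausible sketch of how a fillet corner could be located from a single scan cycle. It assumes that beam i leaves the origin O at α + iθ, measured counterclockwise from the Y axis (consistent with the counterclockwise scan), converts each distance to YZ coordinates, and then uses first differences: along the groove face perpendicular to the Y axis the Y coordinate stays constant, and along the face perpendicular to the Z axis the Z coordinate stays constant. All function names are hypothetical, and `simulate_fillet_scan` only generates noise-free synthetic data for checking.

```python
import math
from statistics import median


def scan_to_yz(distances, alpha_deg, theta_deg):
    """Convert one scan cycle of distances (mm) to (y, z) points in the sensor frame.

    Assumption: beam i leaves origin O at alpha + i * theta degrees,
    measured counterclockwise from the Y axis.
    """
    points = []
    for i, d in enumerate(distances):
        phi = math.radians(alpha_deg + i * theta_deg)
        points.append((d * math.cos(phi), d * math.sin(phi)))
    return points


def fillet_corner_by_differences(points):
    """Estimate the fillet corner (y, z) from first differences of the scan points.

    On the face perpendicular to Y, y is constant (|dy| <= |dz|);
    on the face perpendicular to Z, z is constant (|dz| < |dy|).
    """
    y_face, z_face = [], []
    for (y0, z0), (y1, z1) in zip(points, points[1:]):
        if abs(y1 - y0) <= abs(z1 - z0):
            y_face.append(y0)  # step runs along the face y = const
        else:
            z_face.append(z1)  # step runs along the face z = const
    if not y_face or not z_face:
        raise ValueError("scan cycle does not cross the fillet corner")
    # The median limits the effect of occasional noisy steps on each face.
    return median(y_face), median(z_face)


def simulate_fillet_scan(s_y, s_z, alpha_deg, theta_deg, n):
    """Hypothetical noise-free distances to an inside corner with faces y = s_y and z = s_z."""
    distances = []
    for i in range(n):
        phi = math.radians(alpha_deg + i * theta_deg)
        # Each beam stops at whichever face it reaches first.
        distances.append(min(s_y / math.cos(phi), s_z / math.sin(phi)))
    return distances


corner = fillet_corner_by_differences(
    scan_to_yz(simulate_fillet_scan(390.0, 400.0, 32.0, 0.5, 40), 32.0, 0.5))
print(corner)
```

With the field-test values (faces at 390 mm in Y and 400 mm in Z, α = 32°, θ = 0.5°, 40 measurements), the synthetic beams sweep from 32° to 51.5° and cross the corner near 45.7°, so both faces are sampled and the estimate recovers the corner at (390, 400) mm. Real scans would need noise handling beyond the median used here.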

Table 3.

The key parameters and test results of the field test.

Based on the field welding test results, the laser scanning displacement sensing system developed in this study was able to scan the workpiece in real time during welding and guide the welding gun to complete the automated skip welding of spatially intermittent welding seams. Because it requires no calibration and no additional offline or online teaching programming, the system device built on this sensing system greatly improved welding manufacturing efficiency. When the spatial trajectories of large, complex structures are scanned, the welding seam trajectory is likely to move beyond the measurement range of a laser displacement sensor, which places high demands on the sensor's measurement range and degrees of freedom. The laser scanning displacement sensing system developed in this study not only inherits the advantages of the point laser displacement sensing method but can also adaptively change its viewing angle by changing the deflection angle of the rotary mirror, without changing its physical position or posture, which remarkably increases the measurement degrees of freedom of the sensing system. Moreover, in this sensing system, the laser spot on the axis of the laser beam is clearly imaged on the line-array CCD. With conventional filtering and the centroid method, the influence of surface reflection can be eliminated and the imaging position of the laser spot extracted. This is one reason for the strong anti-interference capability of this measurement method.
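
The filtering-plus-centroid step mentioned above can be illustrated with a short sketch for a one-dimensional line-array CCD profile. It assumes a moving-average filter, a threshold set at a fraction of the peak, and an intensity-weighted centroid restricted to the contiguous lobe around the peak, so that a separate reflection lobe does not pull the estimate. The window size and threshold ratio are hypothetical parameters, not the authors' settings.

```python
def spot_centroid(intensity, threshold_ratio=0.5, smooth=3):
    """Sub-pixel laser spot position (in pixels) on a line-array CCD profile.

    threshold_ratio (0 < ratio < 1) and smooth (moving-average window) are
    hypothetical tuning parameters.
    """
    if not 0 < threshold_ratio < 1:
        raise ValueError("threshold_ratio must lie in (0, 1)")
    n = len(intensity)
    half = smooth // 2
    # 1. Moving-average filter to suppress pixel noise.
    filtered = []
    for i in range(n):
        lo, hi = max(0, i - half), min(n, i + half + 1)
        filtered.append(sum(intensity[lo:hi]) / (hi - lo))
    peak = max(range(n), key=filtered.__getitem__)
    if filtered[peak] <= 0:
        raise ValueError("no laser spot in profile")
    level = threshold_ratio * filtered[peak]
    # 2. Grow the lobe containing the peak while pixels stay above the threshold,
    #    so a separate reflection lobe elsewhere on the array is ignored.
    left = right = peak
    while left > 0 and filtered[left - 1] > level:
        left -= 1
    while right < n - 1 and filtered[right + 1] > level:
        right += 1
    # 3. Centroid weighted by the intensity above the threshold.
    idx = range(left, right + 1)
    weights = [filtered[i] - level for i in idx]
    return sum(i * w for i, w in zip(idx, weights)) / sum(weights)
```

Restricting the centroid to the lobe that contains the peak is what separates the direct spot from a reflection elsewhere on the array, and weighting by intensity above the threshold yields a sub-pixel position.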
Compared with the sensing method in this study, the single-line laser vision sensing method has a measurement range and degree of freedom limited by factors such as the depth of field and angle of view of the camera lens. In addition, when highly reflective workpieces (e.g., aluminum plate) are inspected, the laser stripe and the reflection stripe are imaged together by the camera, so a complex algorithm is needed to filter out the reflection artifacts in the image. The single-line laser vision sensing method nevertheless has clear advantages, such as high detection efficiency, a compact structure, and high two-dimensional measurement resolution. Therefore, if an application involves a large measurement range, surface reflection, a low measurement-frequency requirement, and a low cost requirement, the point laser plus rotating mirror method is recommended. If the detection scene involves narrow butt joints or narrow groove welds, or requires groove shape information, single-line laser vision sensing is recommended.

The advance scanning distance of the laser scanning displacement sensor proposed in this study could theoretically be set to 350 mm. Because the on-site manufacturing process required a certain unwelded margin at both ends of the Category 2 test samples, the advance scanning distance was actually set to 500 mm. This distance is determined mainly by two factors: the structural size of the spatially intermittent welding seams and the time consumed by the welding seam trajectory recognition algorithm. If the distance is too long, the system device becomes larger and the real-time storage load on the PC database increases; if it is too short, the welding seam trajectory may be misidentified. In practice, this distance can be adjusted to suit site conditions.
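
The trade-off can be made concrete with simple arithmetic on values reported in this section: a welding speed of 1.2 m/min, a scanning frequency of 20 Hz, 40 measurements per cycle, a total processing time of at most 65 ms, and an advance scanning distance of 500 mm. The helper below is a hypothetical illustration; in particular, it assumes that every scan between the sensor and the torch is buffered, which is only a rough proxy for the storage load mentioned above.

```python
def lookahead_budget(speed_m_per_min, scan_hz, lookahead_mm, processing_ms, points_per_scan):
    """Rough timing and buffering figures for a sensor mounted lookahead_mm ahead of the torch."""
    speed_mm_s = speed_m_per_min * 1000.0 / 60.0      # travel speed in mm/s
    scan_spacing_mm = speed_mm_s / scan_hz            # distance between consecutive scan cycles
    travel_mm = speed_mm_s * processing_ms / 1000.0   # travel while one cycle is processed
    buffered_scans = lookahead_mm / scan_spacing_mm   # assumption: all scans ahead of the torch are kept
    return {
        "speed_mm_s": speed_mm_s,
        "scan_spacing_mm": scan_spacing_mm,
        "travel_during_processing_mm": travel_mm,
        "lead_time_s": lookahead_mm / speed_mm_s,
        "buffered_scans": buffered_scans,
        "buffered_distance_values": buffered_scans * points_per_scan,
        "processed_before_torch_arrives": travel_mm < lookahead_mm,
    }


budget = lookahead_budget(1.2, 20.0, 500.0, 65.0, 40)
for key, value in budget.items():
    print(f"{key}: {value}")
```

For 500 mm this gives a travel speed of 20 mm/s, 1 mm between scans, about 1.3 mm of travel during the 65 ms of processing, a 25 s lead time before the torch reaches a scanned point, and 500 buffered scans (20,000 distance values); the theoretical 350 mm setting reduces the lead time to 17.5 s and the buffer to 350 scans.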

This paper proposes a seam trajectory recognition algorithm based on Euclidean distance discrimination; in this study, the complete detection and classification process took no more than 65 ms. The time consumed by classification grows with the number of classes in the class matching library. In future work, we will therefore try to further improve the classification library model using efficient machine learning algorithms so that it can adapt to more types of complex welded structure products in heavy machinery and other fields.

4. Conclusions

In order to realize the automated skip welding guidance of complex box girder structures, a method for detecting the corner trajectories and identifying the spatially intermittent welding seams was proposed, and a hardware system supporting this method was established.

A laser scanning displacement sensor with an adaptive field of view was proposed. The sensor combines point-laser optical triangulation with a rotating mirror, so it can change the measurement angle while keeping its physical mounting position and attitude fixed, which allows weld corners to be detected across different product types and under complex conditions.

A weld trajectory recognition algorithm based on Euclidean distance discrimination was proposed. The algorithm extracts shape features by constructing characteristic triangles along the weld trajectory and then applies discrete Fourier analysis to the set of shape features to obtain a feature vector that describes the shape. The class of a sample is then identified from the Euclidean distance between its feature vector and the class matching library. The algorithm classified four kinds of spatially intermittent welding seams in common complex box girder structures with 100% accuracy, and the overall processing time for weld trajectory detection and classification does not exceed 65 ms.

The field test was carried out on a special container girder production line. The results show that the proposed system can accurately identify discontinuous welds during high-speed MAG welding at 1.2 m/min and guide the welding torch to automatically complete the “welding-arc extinguishing-obstacle avoidance-arc starting-welding” sequence, which significantly improves the welding manufacturing efficiency and quality stability of complex box girder components. The method requires neither time-consuming pre-weld teaching programming nor visual inspection system calibration. It provides a new technical approach for the efficient and flexible welding of discontinuous welds in complex structures and a key technical basis for a new generation of intelligent robot welding systems.

To further optimize the method and broaden its applicability, future work will improve the intelligent classification library using efficient machine learning algorithms to achieve stable, fast, and accurate online discrimination of more types of weld trajectories. The method is expected to be applied to the welding manufacturing of core components in major industries such as port cranes, large logistics transportation equipment, and rail transit.

Author Contributions

Conceptualization, G.L. and B.H.; Methodology, J.G. and X.L.; Data curation, G.L. and J.G.; Investigation, Y.H., G.L. and B.H.; Writing—Original Draft Preparation, G.L. and Y.H.; Supervision, B.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant Numbers 51575468 and 51605251).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Krausche, T.; Launert, B.; Pasternak, H. A study on the prediction of welding effects in steel box girders. In Proceedings of the European Conference on Steel and Composite Structures (Eurosteel), Copenhagen, Denmark, 13–15 September 2017.

- Saad-Eldeen, S.; Garbatov, Y.; Soares, C.G. Experimental assessment of corroded steel box-girders subjected to uniform bending. Ships Offshore Struct. 2013, 8, 653–662.

- Koller, R.E.; Stoecklin, I.; Weisse, B.; Terrasi, G.P. Strengthening of fatigue critical welds of a steel box girder. Eng. Fail. Anal. 2012, 25, 329–345.

- Bolmsjö, G.; Olsson, M.; Cederberg, P. Robotic arc welding—Trends and developments for higher autonomy. Ind. Robot Int. J. 2002, 29, 98–104.

- Liu, Z.; Bu, W.; Tan, J. Motion navigation for arc welding robots based on feature mapping in a simulation environment. Robot. Comput. Integr. Manuf. 2010, 26, 137–144.

- Chen, S.B.; Lv, N. Research evolution on intelligentized technologies for arc welding process. J. Manuf. Process. 2014, 16, 109–122.

- Moon, S.B.; Hwang, S.H.; Shon, W.H.; Lee, H.G.; Oh, Y.T. Portable robotic system for steel h-beam welding. Ind. Robot 2003, 30, 258–264.

- Tavares, P.; Costa, C.M.; Rocha, L.; Malaca, P.; Costa, P.; Moreira, A.P.; Sousa, A.; Veiga, G. Collaborative Welding System using BIM for robotic reprogramming and spatial augmented reality. Autom. Constr. 2019, 106, 102825.

- Neto, P.; Mendes, N. Direct off-line robot programming via a common cad package. Robot. Auton. Syst. 2013, 61, 896–910.

- Baizid, K.; Ćuković, S.; Iqbal, J.; Yousnadj, A.; Chellali, R.; Meddahi, A.; Devedžićl, G.; Ghionea, I. Irosim: Industrial robotics simulation design planning and optimization platform based on cad and knowledgeware technologies. Robot. Comput. Integr. Manuf. 2016, 42, 121–134.

- Xu, Y.; Lv, N.; Fang, G.; Du, S.; Zhao, W.; Ye, Z.; Chen, S. Welding seam tracking in robotic gas metal arc welding. J. Mater. Process. Technol. 2017, 248, 18–30.

- Rout, A.; Deepak, B.B.V.L.; Biswal, B.B. Advances in weld seam tracking techniques for robotic welding: A review. Robot. Comput. Integr. Manuf. 2019, 56, 12–37.

- Xu, Y.; Fang, G.; Lv, N.; Chen, S.; Zou, J.J. Computer vision technology for seam tracking in robotic gtaw and gmaw. Robot. Comput. Integr. Manuf. 2015, 32, 25–36.

- Soares, L.B.; Weis, A.A.; Rodrigues, R.N.; Drews, P.L.J., Jr.; Guterres, B.; Botelho, S.S.C.; Filho, N.D. Seam tracking and welding bead geometry analysis for autonomous welding robot. In Proceedings of the IEEE Latin American Robotics Symposium, Curitiba, Brazil, 8–11 November 2017.

- Idrobo-Pizo, G.A.; Motta, J.M.S.T.; Sampaio, R.C. A calibration method for a laser triangulation scanner mounted on a robot arm for surface mapping. Sensors 2019, 19, 1783.

- Liu, Y.; Zhang, Y. Toward welding robot with human knowledge: A remotely-controlled approach. IEEE Trans. Autom. Sci. Eng. 2014, 12, 769–774.

- Liu, Y.; Zhang, Y. Iterative local ANFIS-based human welder intelligence modeling and control in pipe GTAW process: A data-driven approach. IEEE/ASME Trans. Mechatron. 2014, 20, 1079–1088.

- Liu, Y.K.; Zhang, Y.M. Model-based predictive control of weld penetration in gas tungsten arc welding. IEEE Trans. Control Syst. Technol. 2013, 22, 955–966.

- Zhang, K.; Chen, Y.; Gui, H.; Li, D.; Li, Z. Identification of the deviation of seam tracking and weld cross type for the derusting of ship hulls using a wall-climbing robot based on three-line laser structural light. J. Manuf. Process. 2018, 35, 295–306.

- Xue, B.; Chang, B.; Peng, G.; Gao, Y.; Tian, Z.; Du, D.; Wang, G. A vision based detection method for narrow butt joints and a robotic seam tracking system. Sensors 2019, 19, 1144.

- Liu, W.; Li, L.; Hong, Y.; Yue, J. Linear mathematical model for seam tracking with an arc sensor in P-GMAW Processes. Sensors 2017, 17, 591.

- Mohd Shah, H.N.; Sulaiman, M.; Shukor, A.Z. Autonomous detection and identification of weld seam path shape position. Int. J. Adv. Manuf. Technol. 2017, 92, 3739–3747.

- Hascoet, J.Y.; Hamilton, K.; Carabin, G.; Rauch, M.; Alonso, M.; Ares, E. Welding torch trajectory generation for hull joining using autonomous welding mobile robot. In Proceedings of the 4th Manufacturing Engineering Society International Conference (MESIC 2011), Cadiz, Spain, 21–23 September 2011.

- Kiddee, P.; Fang, Z.; Tan, M. An automated weld seam tracking system for thick plate using cross mark structured light. Int. J. Adv. Manuf. Technol. 2016, 87, 3589–3603.

- Li, X.; Li, X.; Khyam, M.O.; Ge, S.S. Robust welding seam tracking and recognition. IEEE Sens. J. 2017, 17, 5609–5617.

- Zeng, J.; Chang, C.; Du, D.; Wang, L.; Chang, S.; Peng, G.; Wang, W. A weld position recognition method based on directional and structured light information fusion in multi-layer/multi-pass welding. Sensors 2018, 18, 129.

- Zou, Y.; Wang, Y.; Zhou, W.; Chen, X. Real-time seam tracking control system based on line laser visions. Opt. Lasers Eng. 2018, 103, 182–192.

- Zou, Y.; Chen, J.; Wei, X. Research on a real-time pose estimation method for a seam tracking system. Opt. Lasers Eng. 2020, 127, 105947.

- Zeng, J.; Chang, B.; Du, D.; Peng, G.; Chang, S.; Hong, Y.; Wang, L.; Shan, J. A vision-aided 3d path teaching method before narrow butt joint welding. Sensors 2017, 17, 1099.

- Zhang, K.; Yan, M.; Huang, T.; Zheng, J.; Li, Z. 3d reconstruction of complex spatial weld seam for autonomous welding by laser structured light scanning. J. Manuf. Process. 2019, 39, 200–207.

- Liu, Y.; Zhang, Y. Control of 3D weld pool surface. Control Eng. Pract. 2013, 21, 1469–1480.

- Huang, W.; Kovacevic, R. A laser-based vision system for weld quality inspection. Sensors 2011, 11, 506–521.

- Wei, A.; Chang, B.; Xue, B.; Peng, G.; Du, D.; Han, Z. Research on the weld position detection method for sandwich structures from Face-Panel side based on backscattered X-ray. Sensors 2019, 19, 3198.

- Hong, Y.; Chang, B.; Peng, G.; Yuan, Z.; Hou, X.; Xue, B.; Du, D. In-Process Monitoring of Lack of Fusion in Ultra-Thin Sheets Edge Welding Using Machine Vision. Sensors 2018, 18, 2411.

- Zeng, J.; Cao, G.; Peng, Y.; Huang, S. A weld joint type identification method for visual sensor based on image features and SVM. Sensors 2020, 20, 471.

- Park, J.B.; Lee, S.H.; Lee, I.J. Precise 3d lug pose detection sensor for automatic robot welding using a structured-light vision system. Sensors 2009, 9, 7550–7565.

- Zeng, J.; Cao, G.; Hong, Y.; Chang, S.; Zou, Y. A precise visual method for narrow butt detection in specular reflection workpiece welding. Sensors 2016, 16, 1480.

- Ma, H.; Wei, S.; Sheng, Z.; Lin, T.; Chen, S. Robot welding seam tracking method based on passive vision for thin plate closed-gap butt welding. Int. J. Adv. Manuf. Technol. 2010, 48, 945–953.

- Dinham, M.; Fang, G. Autonomous weld seam identification and localisation using eye-in-hand stereo vision for robotic arc welding. Robot. Comput. Integr. Manuf. 2013, 29, 288–301.

- Wang, P.J.; Shao, W.J.; Gong, S.H.; Jia, P.J.; Li, G. High-precision measurement of weld seam based on narrow depth of field lens in laser welding. Sci. Technol. Weld. Join. 2016, 21, 267–274.

- Nele, L.; Sarno, E.; Keshari, A. An image acquisition system for real-time seam tracking. Int. J. Adv. Manuf. Technol. 2013, 69, 2099–2110.

- Dinham, M.; Fang, G. Detection of fillet weld joints using an adaptive line growing algorithm for robotic arc welding. Robot. Comput. Integr. Manuf. 2014, 30, 229–243.

- Bračun, D.; Sluga, A. Stereo vision based measuring system for online welding path inspection. J. Mater. Process. Technol. 2015, 223, 328–336.

- Yang, L.; Liu, Y. A novel 3D seam extraction method based on multi-functional sensor for V-Type weld seam. IEEE Access 2019, 7, 182415–182424.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).