Towards Optical Imaging for Spine Tracking without Markers in Navigated Spine Surgery

Abstract

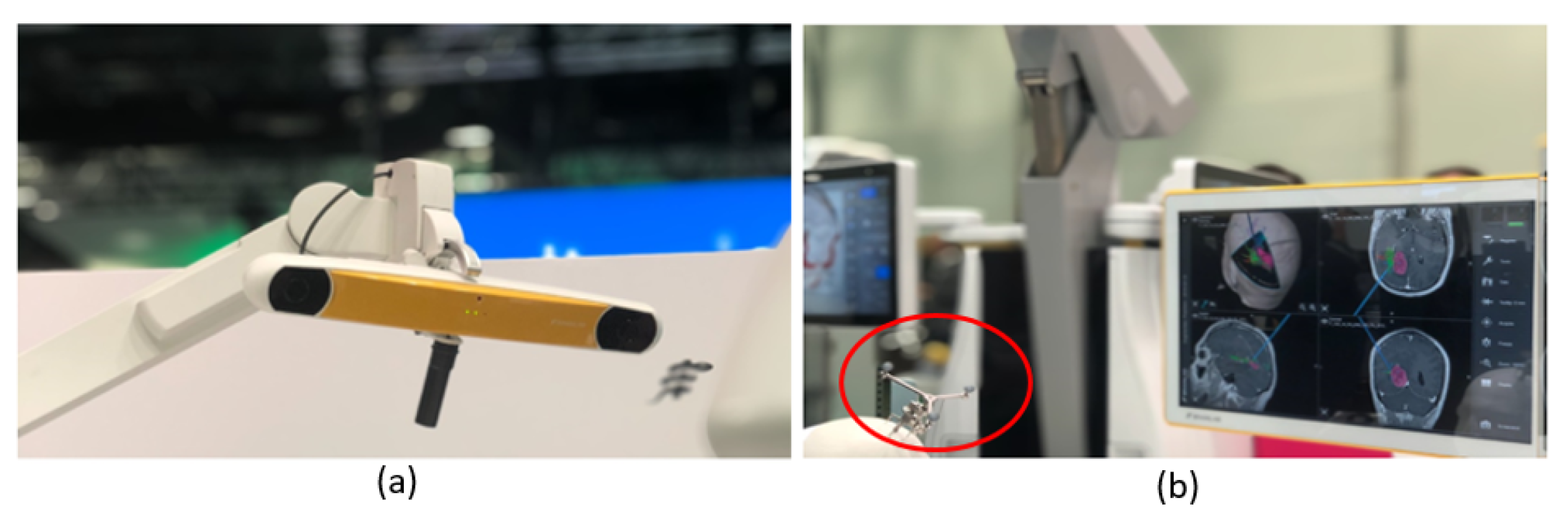

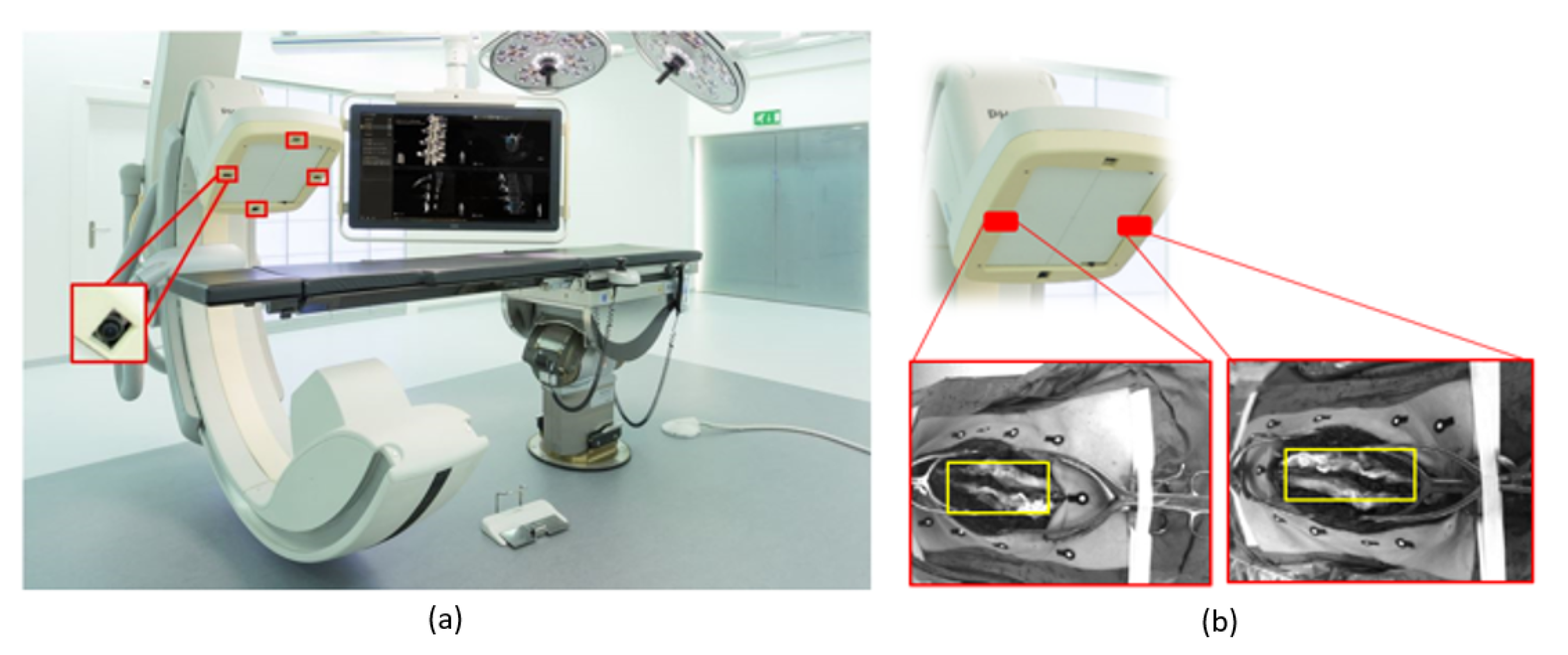

1. Introduction

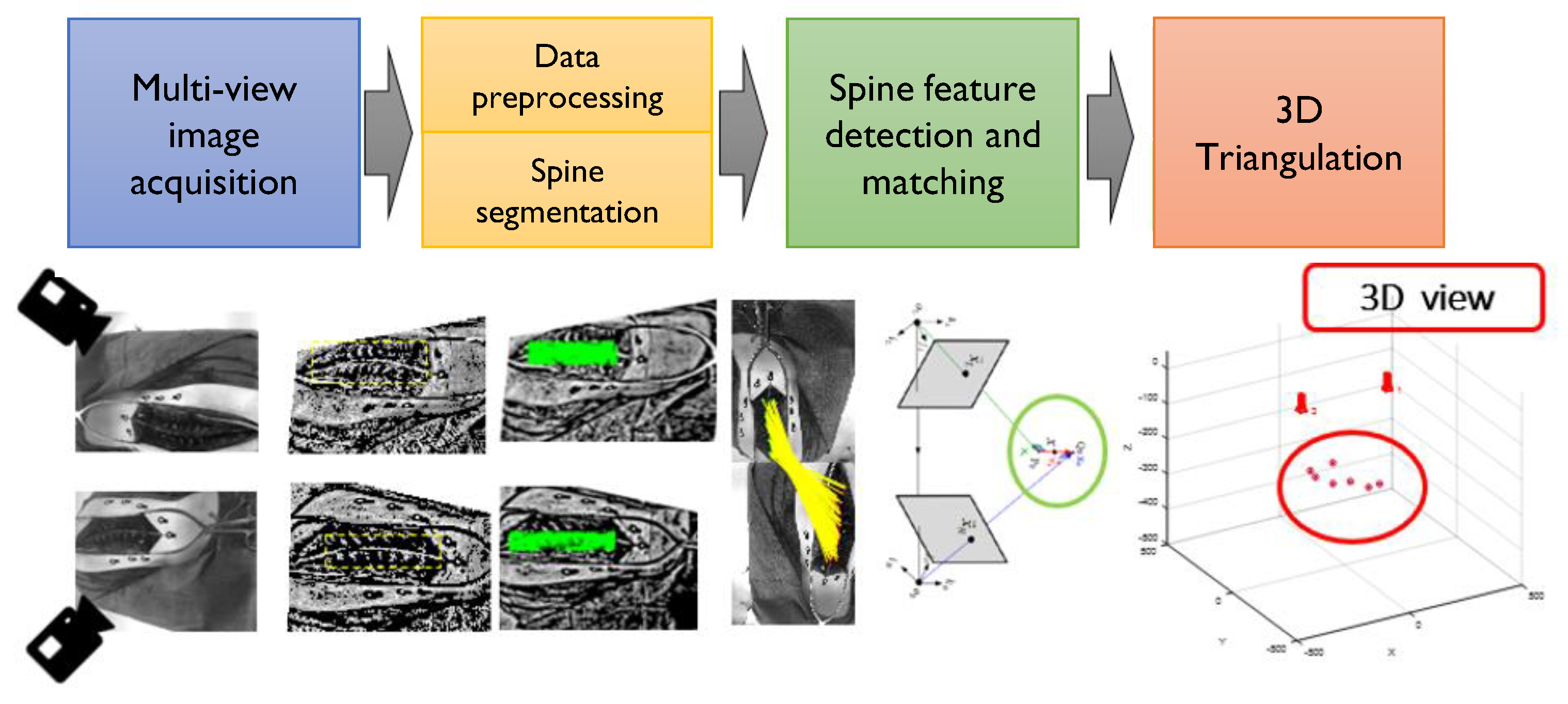

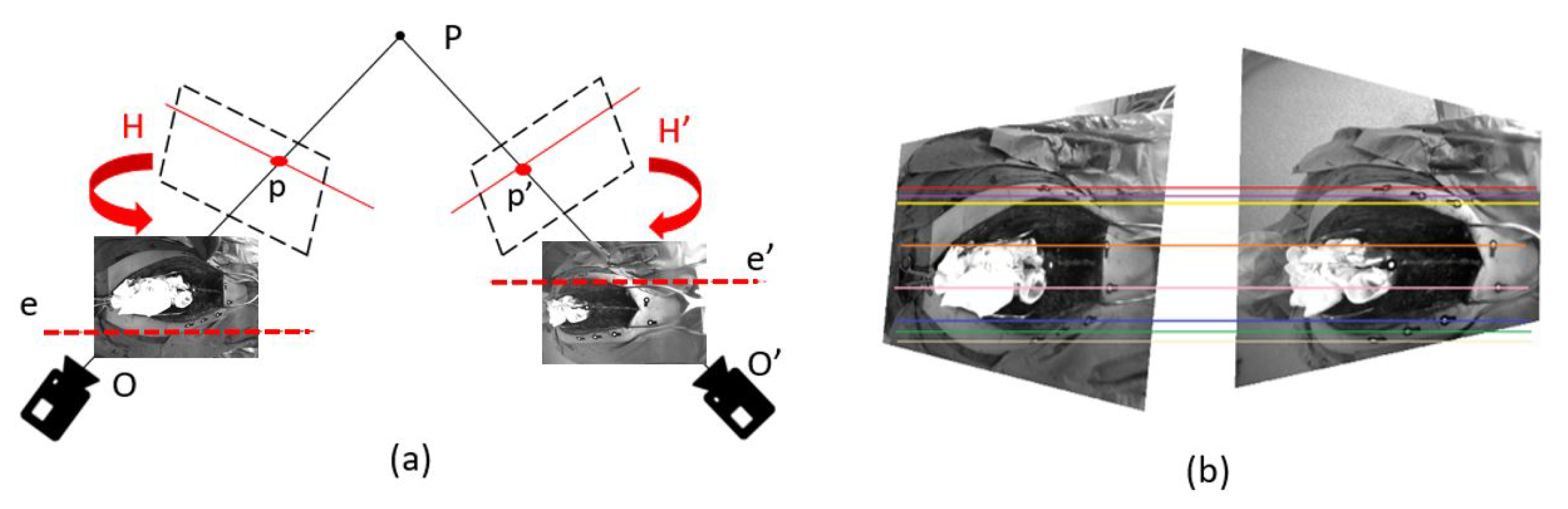

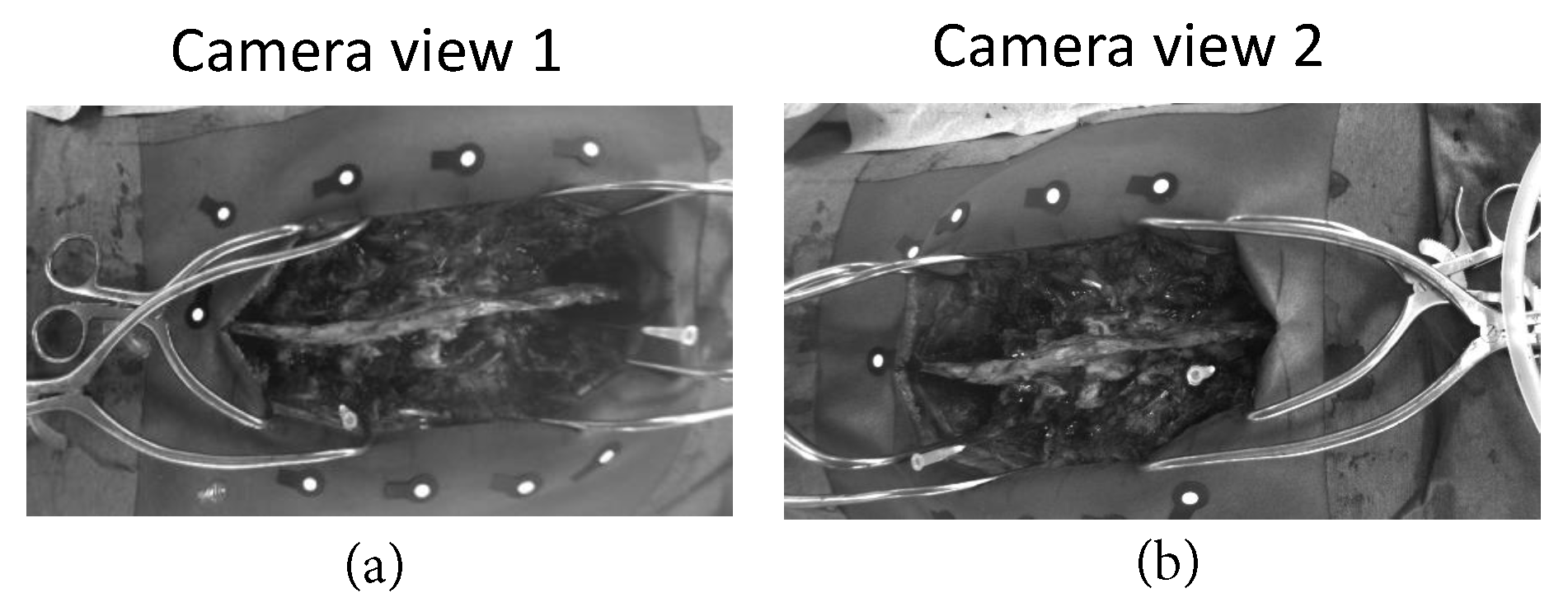

2. Materials and Methods

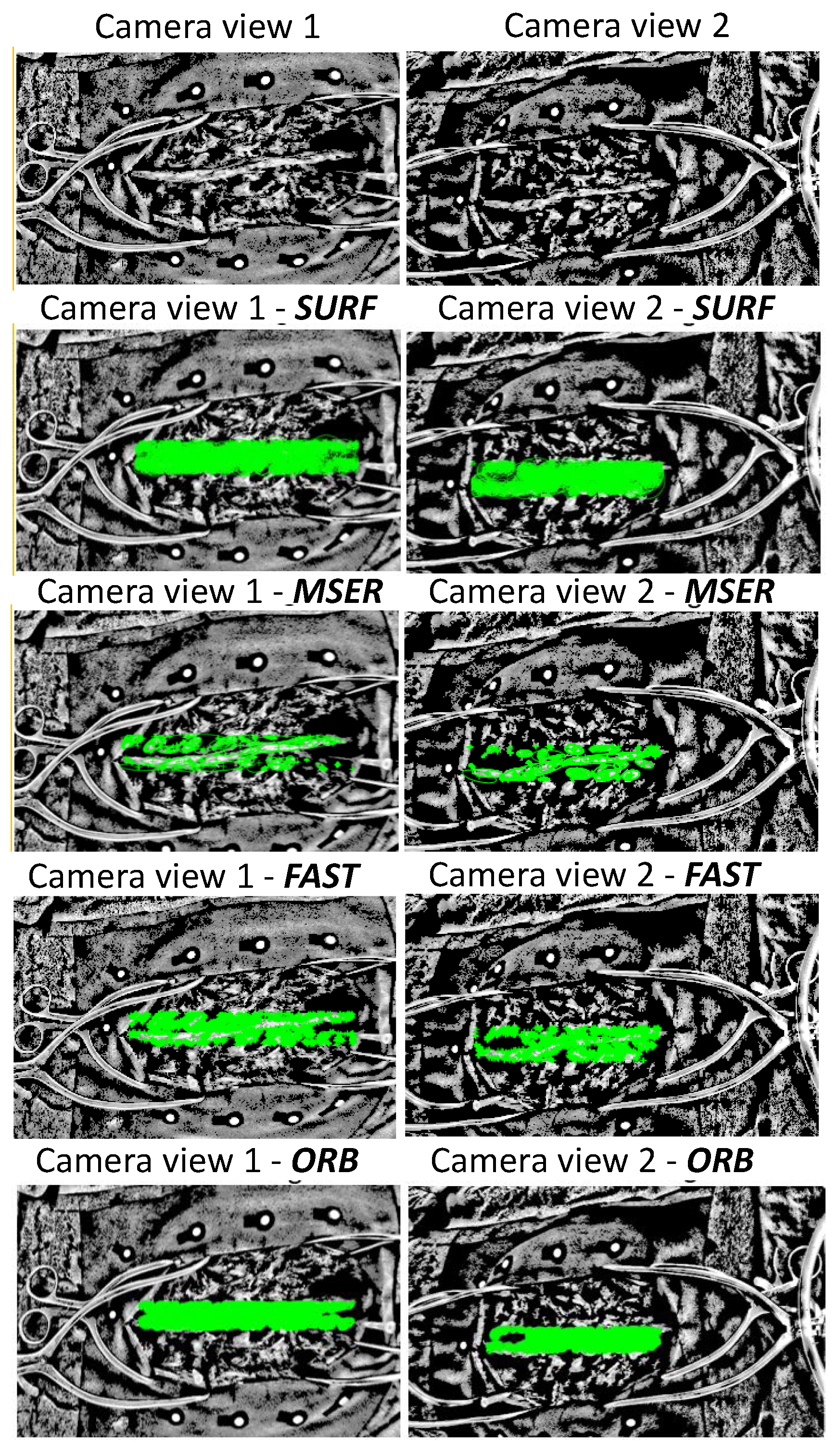

2.1. Image Preprocessing for Improved Feature Matching

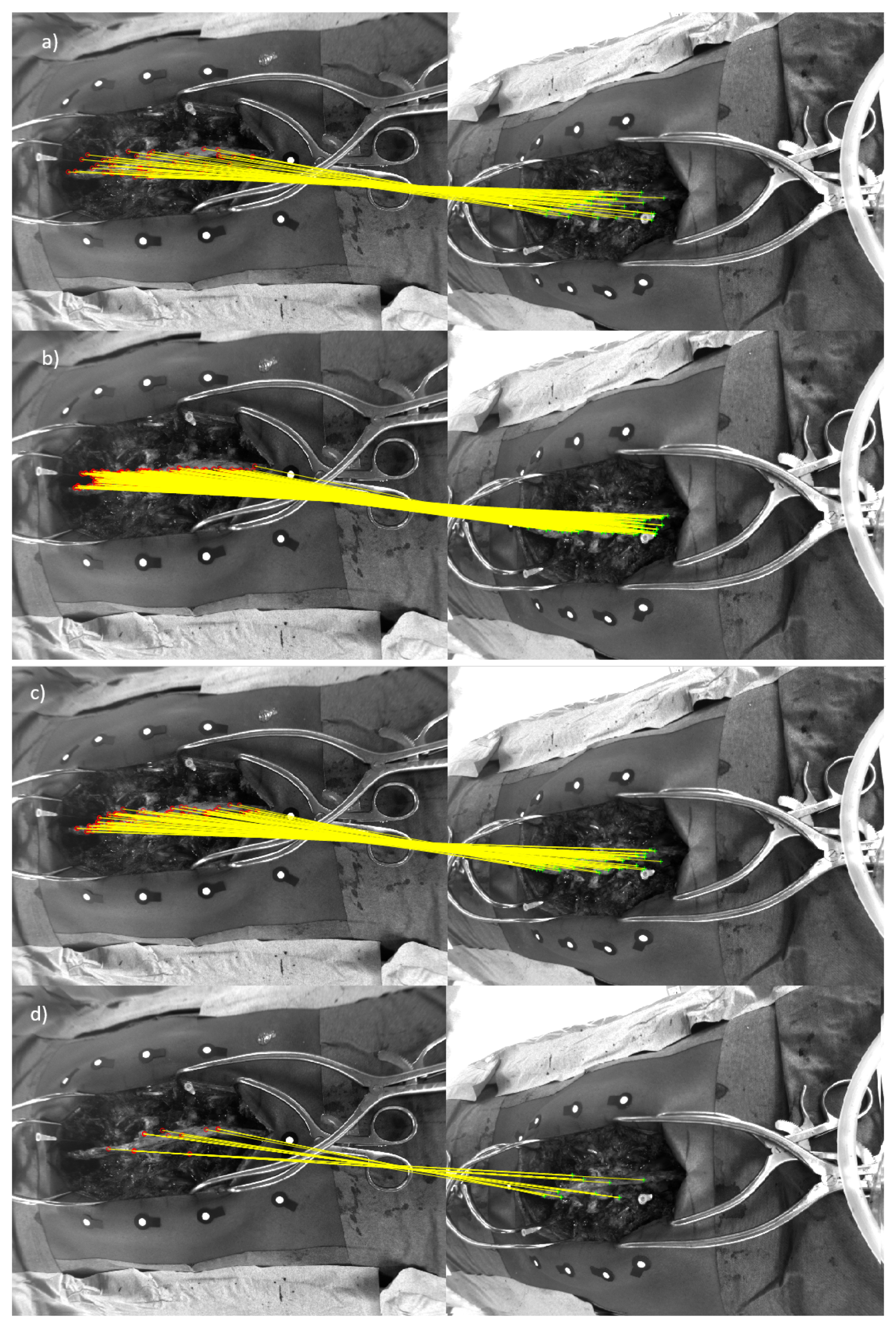
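A minimal sketch of one plausible preprocessing step, assuming contrast-limited histogram equalization in the spirit of CLAHE (Zuiderveld, 1994). It is simplified to a single global tile. Full CLAHE computes one clipped mapping per image tile and blends neighbouring mappings bilinearly, and that part is omitted here. The names `clip_histogram` and `contrast_limited_equalize` and the `clip_factor` parameter are hypothetical. Images are plain lists of rows of integer grey levels.

```python
from typing import List

Image = List[List[int]]


def clip_histogram(hist: List[int], clip_limit: int) -> List[int]:
    """Clip every bin at clip_limit and spread the excess counts over all bins.

    Simplified redistribution: the remainder goes to the first bins, so a few
    bins may end slightly above clip_limit. The total count is preserved.
    """
    excess = sum(max(h - clip_limit, 0) for h in hist)
    clipped = [min(h, clip_limit) for h in hist]
    per_bin, remainder = divmod(excess, len(clipped))
    clipped = [h + per_bin for h in clipped]
    for i in range(remainder):
        clipped[i] += 1
    return clipped


def contrast_limited_equalize(img: Image, clip_factor: float = 2.0, levels: int = 256) -> Image:
    """Global (single-tile) contrast-limited histogram equalization."""
    pixels = [p for row in img for p in row]
    n = len(pixels)
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    # Clip limit expressed as a multiple of the mean bin height n / levels.
    clip_limit = max(1, int(clip_factor * n / levels))
    hist = clip_histogram(hist, clip_limit)
    # Cumulative histogram -> monotonic grey-level lookup table.
    lut, running = [], 0
    for h in hist:
        running += h
        lut.append(round((levels - 1) * running / n))
    return [[lut[p] for p in row] for row in img]
```

Lower `clip_factor` values limit how strongly near-uniform regions are stretched, which is the usual reason for preferring contrast-limited equalization over plain histogram equalization (less noise amplification).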

2.2. Spine Feature Detection
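The detector-parameter table lists FAST among the evaluated detectors. To illustrate what that detector computes, here is a minimal sketch of the FAST segment test (Rosten and Drummond, 2006) on the 16-pixel Bresenham circle of radius 3. Assumptions not taken from the source: intensities are normalized to [0, 1], the table's "Min. intensity = 0.2" is read as the contrast threshold `t`, the required arc length is `n = 9` (FAST-9), and the corner-quality threshold, corner scoring and non-maximum suppression are left out. `is_fast_corner` and `detect_fast` are hypothetical names.

```python
from typing import List, Tuple

Image = List[List[float]]

# (dx, dy) offsets of the 16-pixel Bresenham circle of radius 3, in circular order.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(img: Image, x: int, y: int, t: float = 0.2, n: int = 9) -> bool:
    """Segment test: n contiguous circle pixels all brighter than I_p + t or all darker than I_p - t."""
    ip = img[y][x]
    states = []
    for dx, dy in CIRCLE:
        v = img[y + dy][x + dx]
        states.append(1 if v > ip + t else -1 if v < ip - t else 0)
    # Walk the circle twice so that arcs wrapping past the start are counted.
    for target in (1, -1):
        run = 0
        for s in states + states:
            run = run + 1 if s == target else 0
            if run >= n:
                return True
    return False


def detect_fast(img: Image, t: float = 0.2, n: int = 9) -> List[Tuple[int, int]]:
    """Return (x, y) of all pixels passing the segment test, skipping a 3-pixel border."""
    h, w = len(img), len(img[0])
    return [(x, y) for y in range(3, h - 3) for x in range(3, w - 3)
            if is_fast_corner(img, x, y, t, n)]
```

Only the ring of 16 pixels is read per candidate pixel, which is why FAST is generally regarded as one of the cheapest keypoint detectors. A straight edge covers at most about half of the ring, so it does not pass the 9-pixel arc test.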

2.3. Spine Feature Matching of Multi-View Images

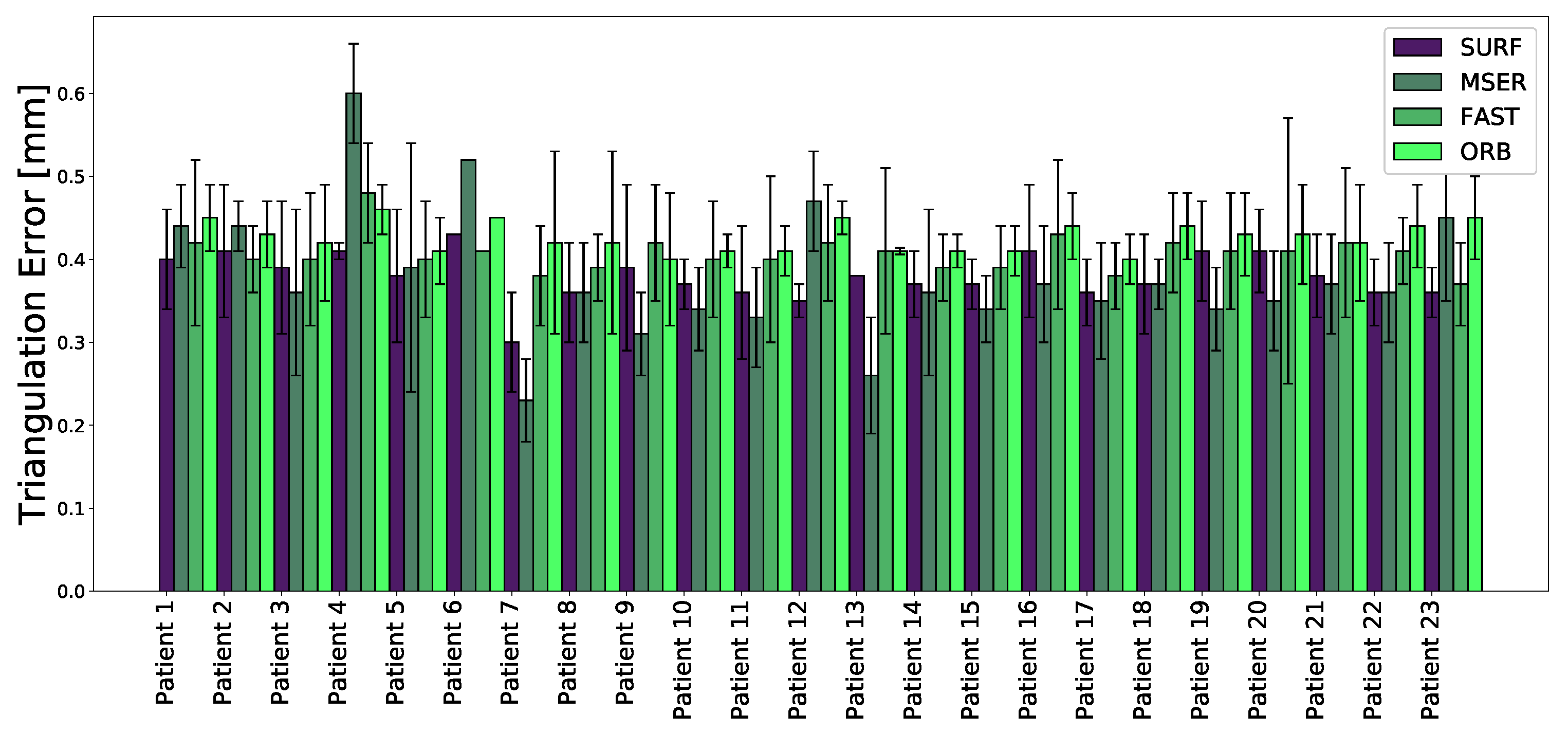
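As an assumed illustration of multi-view matching for binary descriptors such as ORB's, a minimal brute-force matcher can compare descriptors by Hamming distance and keep a match only if it passes Lowe's ratio test and is mutually the best match (cross-check). Descriptors are modelled as Python integers holding the bit string. `match_binary` and the `ratio` default of 0.8 are hypothetical choices, not settings reported for this study.

```python
from typing import List, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_binary(left: List[int], right: List[int], ratio: float = 0.8) -> List[Tuple[int, int, int]]:
    """Brute-force matching with ratio test and cross-check; returns (i_left, j_right, distance)."""
    matches: List[Tuple[int, int, int]] = []
    if not right:
        return matches
    for i, d in enumerate(left):
        ranked = sorted((hamming(d, e), j) for j, e in enumerate(right))
        best, j = ranked[0]
        second = ranked[1][0] if len(ranked) > 1 else float("inf")
        # Ratio test: reject ambiguous matches whose runner-up is almost as close.
        if best >= ratio * second:
            continue
        # Cross-check: descriptor i must also be the closest left descriptor to j.
        back = min(range(len(left)), key=lambda k: hamming(left[k], right[j]))
        if back == i:
            matches.append((i, j, best))
    return matches
```

The ratio test discards descriptors that are nearly equidistant to two candidates, which is common on repetitive texture; the cross-check removes many-to-one assignments.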

2.4. 3D Stereo Triangulation

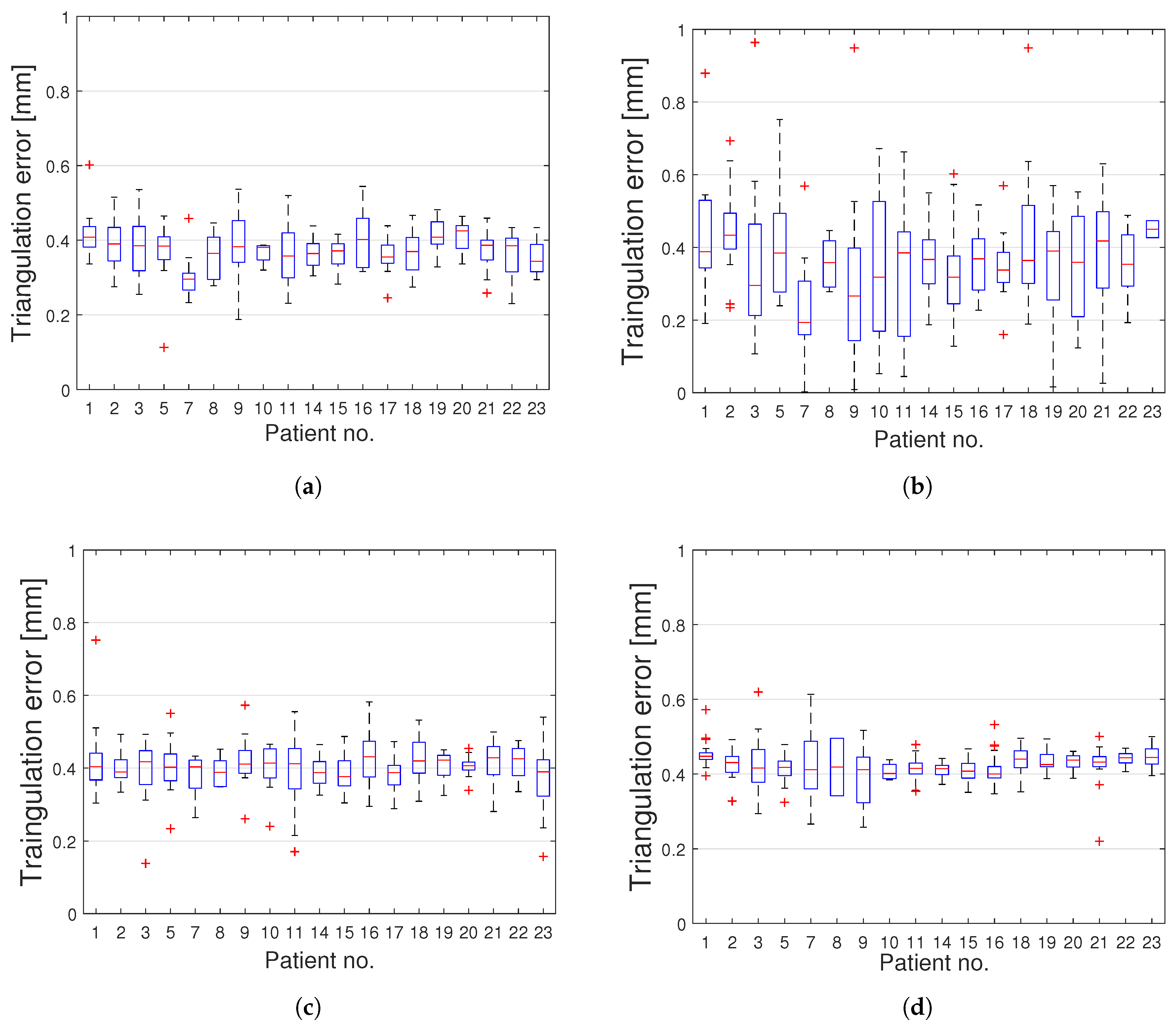
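A minimal sketch of linear triangulation, assuming each camera is described by a calibrated 3x4 pinhole projection matrix P. Each observation (u, v) contributes the rows `u * p3 - p1` and `v * p3 - p2` (p1, p2, p3 being the rows of P) to a linear system A X = 0 (Hartley and Zisserman, Multiple View Geometry). Fixing the homogeneous coordinate to 1 gives the inhomogeneous variant, solved here by least squares through the 3x3 normal equations. It fails for points at infinity, and the homogeneous SVD form is often preferred in practice. `project` and `triangulate` are hypothetical helper names.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def project(P: Matrix, X: Sequence[float]) -> Tuple[float, float]:
    """Project a 3D point with a 3x4 camera matrix P."""
    Xh = (X[0], X[1], X[2], 1.0)
    x, y, w = (sum(P[r][c] * Xh[c] for c in range(4)) for r in range(3))
    return x / w, y / w


def _det3(a: Matrix) -> float:
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))


def triangulate(points: Sequence[Tuple[float, float]],
                cameras: Sequence[Matrix]) -> Tuple[float, float, float]:
    """Least-squares 3D point from two or more views (inhomogeneous linear method)."""
    rows: List[List[float]] = []
    for (u, v), P in zip(points, cameras):
        rows.append([u * P[2][k] - P[0][k] for k in range(4)])
        rows.append([v * P[2][k] - P[1][k] for k in range(4)])
    # With X = (X, Y, Z, 1): A[:, :3] X = -A[:, 3]; form the 3x3 normal equations.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [-sum(r[i] * r[3] for r in rows) for i in range(3)]
    d = _det3(ata)
    if abs(d) < 1e-12:
        raise ValueError("degenerate geometry (e.g. zero baseline)")
    # Cramer's rule: replace column c by the right-hand side.
    solution = []
    for c in range(3):
        m = [[atb[r] if k == c else ata[r][k] for k in range(3)] for r in range(3)]
        solution.append(_det3(m) / d)
    return solution[0], solution[1], solution[2]
```

For example, with two cameras sharing the intrinsics f = 800, (cx, cy) = (320, 240) and a 100-unit horizontal baseline, projecting (10, 20, 500) into both views and triangulating the two pixel positions recovers (10, 20, 500). With noisy matches, the reprojection error computed with `project` is a natural per-point quality check.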

3. Results

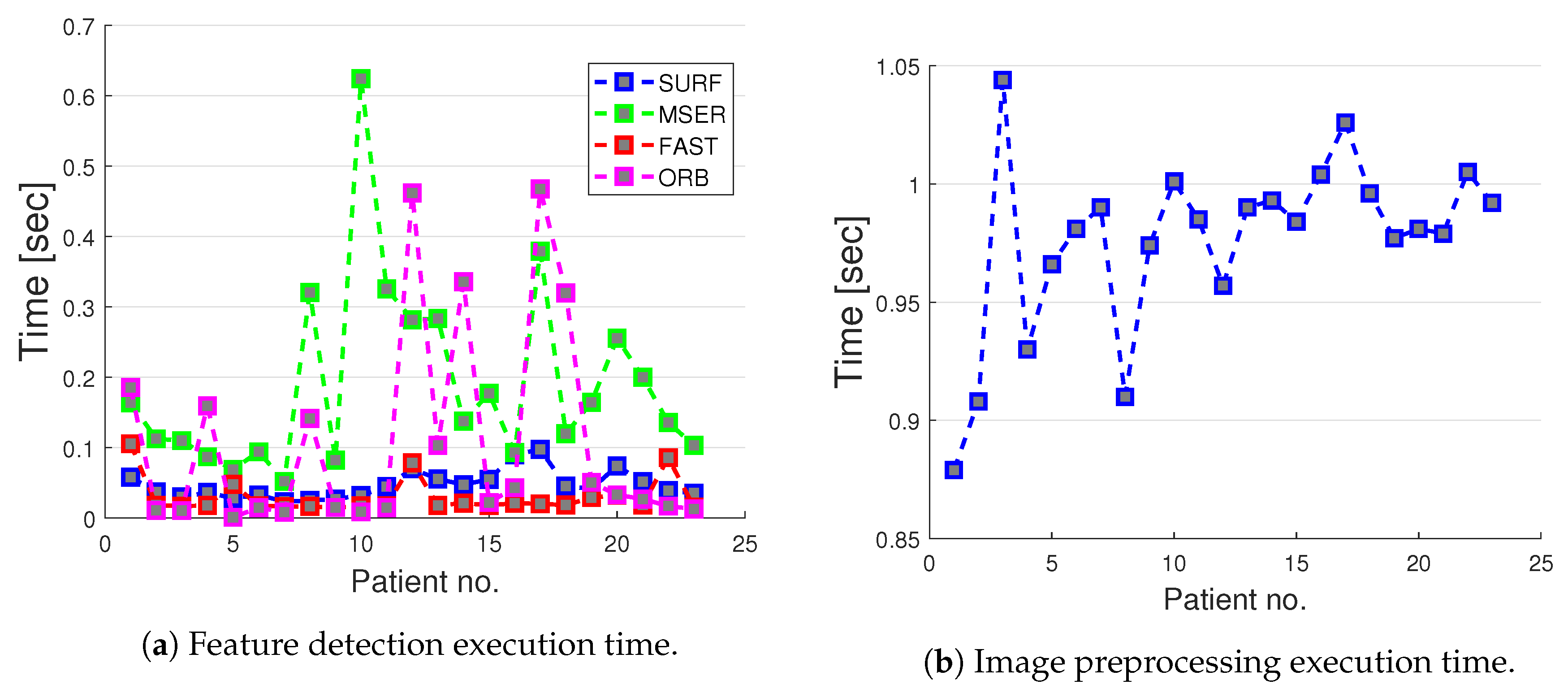
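Inlier counts such as those in the matched-feature table depend on the geometric criterion used to reject wrong matches. A minimal sketch of one standard criterion, assumed here for illustration: the Sampson distance (first-order approximation of the squared geometric error) of a correspondence x <-> x' with respect to a fundamental matrix F, as given by Hartley and Zisserman. F and the threshold are assumed inputs; in practice F is usually estimated robustly, for example with RANSAC. `sampson_distance` and `epipolar_inliers` are hypothetical names.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix3 = Sequence[Sequence[float]]


def sampson_distance(x: Point, x_prime: Point, F: Matrix3) -> float:
    """Sampson error of x <-> x' for the epipolar constraint x'^T F x = 0 (squared pixels)."""
    xh = (x[0], x[1], 1.0)
    xph = (x_prime[0], x_prime[1], 1.0)
    Fx = [sum(F[r][c] * xh[c] for c in range(3)) for r in range(3)]
    Ftxp = [sum(F[r][c] * xph[r] for r in range(3)) for c in range(3)]
    algebraic = sum(xph[r] * Fx[r] for r in range(3))
    denom = Fx[0] ** 2 + Fx[1] ** 2 + Ftxp[0] ** 2 + Ftxp[1] ** 2
    if denom == 0:
        return float("inf")
    return algebraic ** 2 / denom


def epipolar_inliers(matches: List[Tuple[Point, Point]], F: Matrix3,
                     threshold: float = 1.0) -> List[int]:
    """Indices of matches whose Sampson distance is below threshold."""
    return [i for i, (x, xp) in enumerate(matches)
            if sampson_distance(x, xp, F) < threshold]
```

For a rectified pair with identical intrinsics and a horizontal baseline, F is proportional to [[0, 0, 0], [0, 0, -1], [0, 1, 0]]. The constraint then reduces to y = y', and the Sampson distance to (y - y')^2 / 2, independent of the scale of F.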

Computation Times

4. Discussion

5. Limitations

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Method | Parameter 1 | Parameter 2 | Parameter 3 |
|---|---|---|---|
| SURF | Feature threshold = 600 | Number of octaves = 4 | Number of scales = 6 |
| MSER | Step size for threshold = 0.3 | Region size = [100, ..., 800] | Area variation = 0.3 |
| FAST | Min. corner quality = 0.1 | Min. intensity = 0.2 | |
| ORB | Scale factor = 1.2 | Decomposition levels = 8 | |
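The ORB settings in this table (scale factor 1.2, 8 decomposition levels) define an image pyramid. A short sketch of the resulting level sizes, assuming the usual ORB construction in which level k is the input downscaled by 1.2^k for k = 0, ..., 7. `pyramid_sizes` is a hypothetical helper and the 1000 x 800 input is an arbitrary example size.

```python
from typing import List, Tuple


def pyramid_sizes(width: int, height: int, scale_factor: float = 1.2,
                  levels: int = 8) -> List[Tuple[int, int]]:
    """(width, height) of each pyramid level; level k is downscaled by scale_factor**k."""
    sizes = []
    for k in range(levels):
        s = scale_factor ** k
        sizes.append((round(width / s), round(height / s)))
    return sizes


for k, (w, h) in enumerate(pyramid_sizes(1000, 800)):
    print(f"level {k}: {w} x {h}")
```

The coarsest level is 1.2^7 ≈ 3.58 times smaller than the input (279 x 223 for the example), so a keypoint found there corresponds to an image patch about 3.58 times larger than one found at full resolution.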

| Method | Max. Inliers (No.) | Min. Inliers (No.) | Mean Inliers (No.) | Median Inliers (No.) | IQR (No.) |
|---|---|---|---|---|---|
| SURF matched features | 177 | 2 | 40 | 26 | 42.25 |
| MSER matched features | 214 | 1 | 15 | 25 | 30 |
| FAST matched features | 94 | 2 | 28 | 33 | 29 |
| ORB matched features | 732 | 1 | 131 | 181 | 209.5 |
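The statistics in this table can be computed from per-frame inlier counts with the Python standard library. A sketch, assuming one inlier count per processed image pair; `inlier_summary` is a hypothetical helper. Quartile conventions differ between tools (`statistics.quantiles` defaults to the "exclusive" method, and other packages interpolate differently), so the IQR of the same data can differ slightly depending on the software used.

```python
import statistics
from typing import Dict, Sequence


def inlier_summary(counts: Sequence[int]) -> Dict[str, float]:
    """Max, min, mean, median and interquartile range of per-frame inlier counts."""
    if len(counts) < 2:
        raise ValueError("need at least two frames")
    q1, _, q3 = statistics.quantiles(counts, n=4)  # default 'exclusive' method
    return {
        "max": max(counts),
        "min": min(counts),
        "mean": statistics.mean(counts),
        "median": statistics.median(counts),
        "iqr": q3 - q1,
    }
```

For the counts 1 to 8, this gives a mean and median of 4.5, quartiles of 2.25 and 6.75, and therefore an IQR of 4.5.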

Execution time per patient, mean (± std):

| Patient No. | SURF [s] | MSER [s] | FAST [s] | ORB [s] | Preproc. [s] |
|---|---|---|---|---|---|
| 1 | 0.058 (±0.024) | 0.164 (±0.056) | 0.105 (±0.220) | 0.185 (±0.460) | 0.879 (±0.314) |
| 2 | 0.037 (±0.005) | 0.113 (±0.032) | 0.018 (±0.002) | 0.011 (±0.005) | 0.908 (±0.276) |
| 3 | 0.030 (±0.006) | 0.110 (±0.032) | 0.017 (±0.002) | 0.011 (±0.008) | 1.044 (±0.185) |
| 4 | 0.036 (±0.013) | 0.087 (±0.021) | 0.018 (±0.003) | 0.159 (±0.452) | 0.930 (±0.223) |
| 5 | 0.028 (±0.010) | 0.069 (±0.014) | 0.048 (±0.044) | 0.005 (±0.008) | 0.966 (±0.007) |
| 6 | 0.033 (±0.011) | 0.094 (±0.041) | 0.018 (±0.001) | 0.015 (±0.012) | 0.981 (±0.020) |
| 7 | 0.023 (±0.006) | 0.052 (±0.012) | 0.016 (±0.003) | 0.008 (±0.003) | 0.990 (±0.025) |
| 8 | 0.025 (±0.006) | 0.320 (±0.280) | 0.016 (±0.300) | 0.141 (±0.500) | 0.910 (±0.282) |
| 9 | 0.027 (±0.005) | 0.082 (±0.120) | 0.015 (±0.010) | 0.015 (±0.200) | 0.974 (±0.030) |
| 10 | 0.032 (±0.004) | 0.032 (±0.400) | 0.017 (±0.320) | 0.009 (±0.427) | 1.001 (±0.039) |
| 11 | 0.045 (±0.009) | 0.045 (±0.510) | 0.018 (±0.300) | 0.015 (±0.500) | 0.985 (±0.017) |
| 12 | 0.070 (±0.015) | 0.070 (±0.510) | 0.078 (±0.220) | 0.461 (±0.990) | 0.957 (±0.021) |
| 13 | 0.055 (±0.025) | 0.055 (±0.380) | 0.018 (±0.001) | 0.103 (±0.380) | 0.990 (±0.016) |
| 14 | 0.047 (±0.020) | 0.047 (±0.070) | 0.021 (±0.007) | 0.335 (±0.600) | 0.993 (±0.032) |
| 15 | 0.055 (±0.008) | 0.177 (±0.007) | 0.018 (±0.002) | 0.022 (±0.002) | 0.984 (±0.001) |
| 16 | 0.090 (±0.004) | 0.093 (±0.005) | 0.021 (±0.002) | 0.043 (±0.001) | 1.004 (±0.050) |
| 17 | 0.097 (±0.020) | 0.379 (±0.360) | 0.020 (±0.020) | 0.467 (±0.060) | 1.026 (±0.096) |
| 18 | 0.045 (±0.013) | 0.120 (±0.060) | 0.018 (±0.002) | 0.319 (±0.031) | 0.996 (±0.028) |
| 19 | 0.041 (±0.011) | 0.164 (±0.031) | 0.029 (±0.038) | 0.050 (±0.108) | 0.977 (±0.042) |
| 20 | 0.073 (±0.026) | 0.255 (±0.077) | 0.032 (±0.044) | 0.032 (±0.014) | 0.981 (±0.032) |
| 21 | 0.051 (±0.020) | 0.199 (±0.078) | 0.018 (±0.002) | 0.026 (±0.014) | 0.979 (±0.024) |
| 22 | 0.038 (±0.011) | 0.135 (±0.051) | 0.085 (±0.269) | 0.016 (±0.008) | 1.005 (±0.026) |
| 23 | 0.035 (±0.008) | 0.103 (±0.001) | 0.015 (±0.003) | 0.012 (±0.006) | 0.992 (±0.011) |
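A minimal sketch of how per-patient timings like these could be collected and summarized. It assumes one measurement per processed frame, wall-clock timing with `time.perf_counter`, and the sample standard deviation; the source does not state these choices, and `time_calls` and `mean_std` are hypothetical helpers.

```python
import statistics
import time
from typing import Callable, Iterable, List, Tuple


def time_calls(fn: Callable[[object], object], inputs: Iterable[object]) -> List[float]:
    """Wall-clock duration in seconds of fn(x) for each input x."""
    durations = []
    for x in inputs:
        start = time.perf_counter()
        fn(x)
        durations.append(time.perf_counter() - start)
    return durations


def mean_std(durations: List[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation (0.0 for a single measurement)."""
    if not durations:
        raise ValueError("no measurements")
    if len(durations) == 1:
        return durations[0], 0.0
    return statistics.mean(durations), statistics.stdev(durations)
```

Several rows report a standard deviation larger than the mean (for example ORB for patient 1, 0.185 ± 0.460 s), which points to a skewed distribution with occasional slow frames. For such data, the median and IQR may summarize timings more robustly than mean ± std.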

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Manni, F.; Elmi-Terander, A.; Burström, G.; Persson, O.; Edström, E.; Holthuizen, R.; Shan, C.; Zinger, S.; van der Sommen, F.; de With, P.H.N. Towards Optical Imaging for Spine Tracking without Markers in Navigated Spine Surgery. Sensors 2020, 20, 3641. https://doi.org/10.3390/s20133641