Passive Sensing of Prediction of Moment-To-Moment Depressed Mood among Undergraduates with Clinical Levels of Depression Sample Using Smartphones

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Protocol

2.3. Measures

2.3.1. Baseline Depression Severity

2.3.2. Dynamic Depressed Mood

2.3.3. Passive Sensor Data

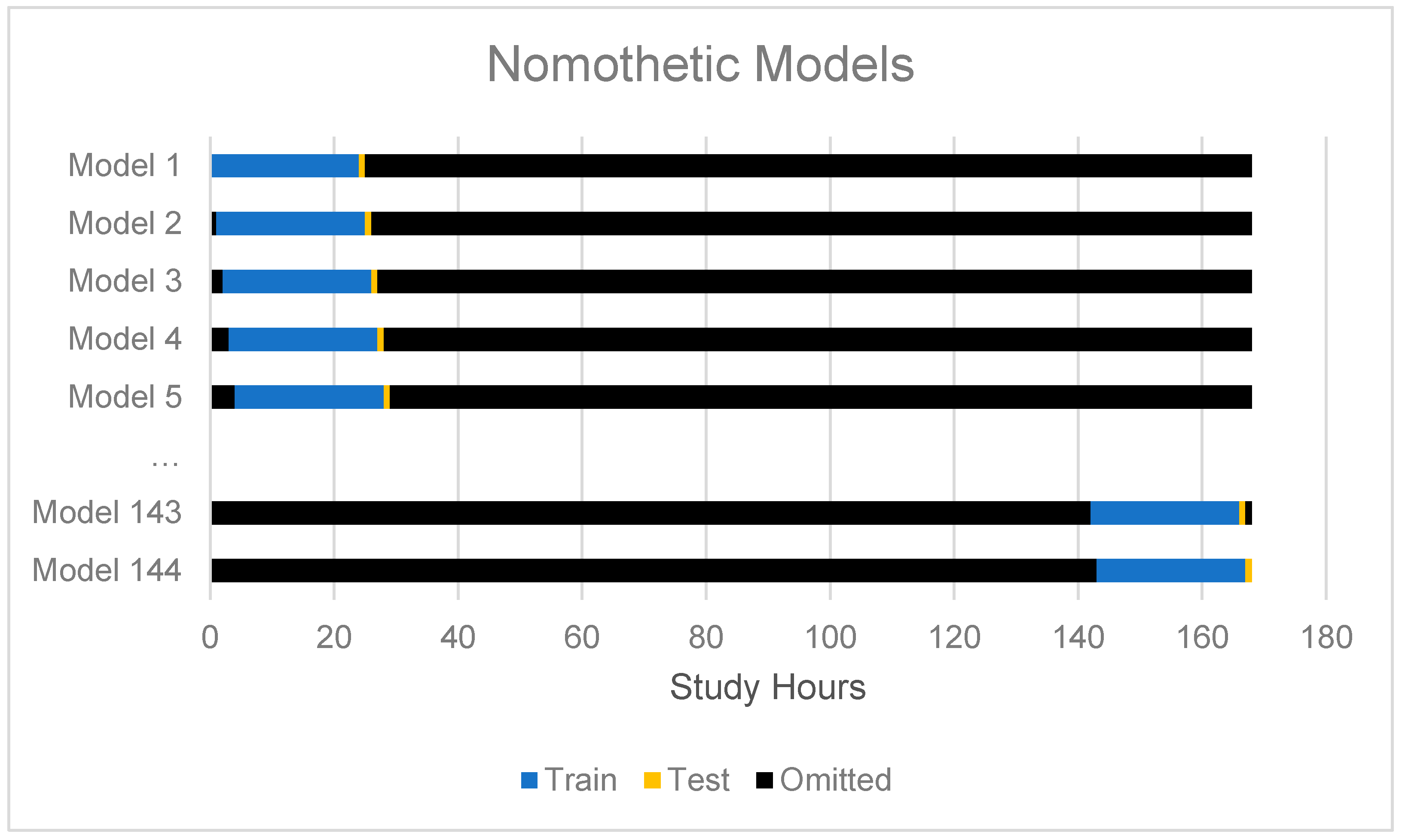

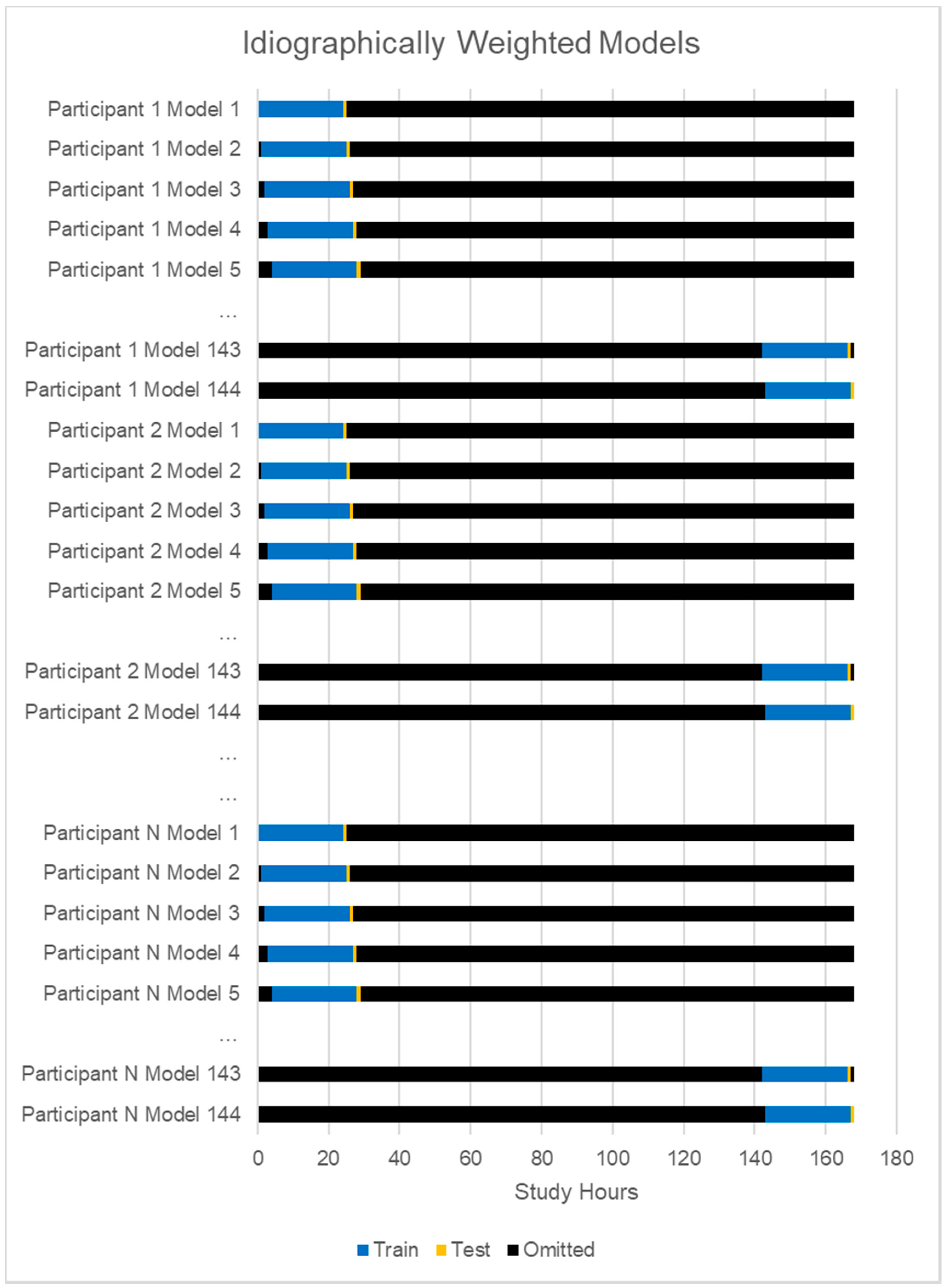

2.4. Planned Analysis

3. Results

3.1. Compliance

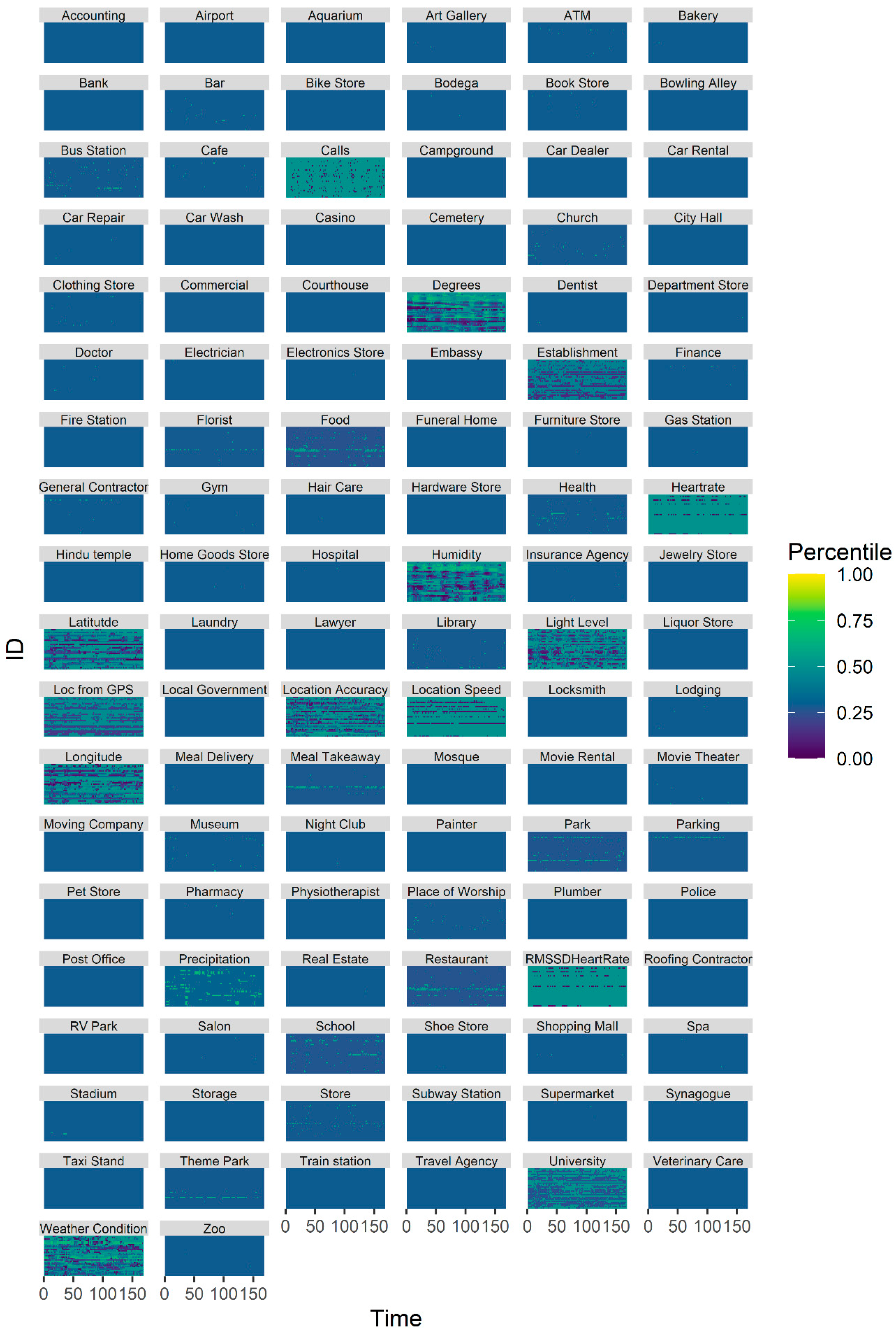

3.2. Sensing Data

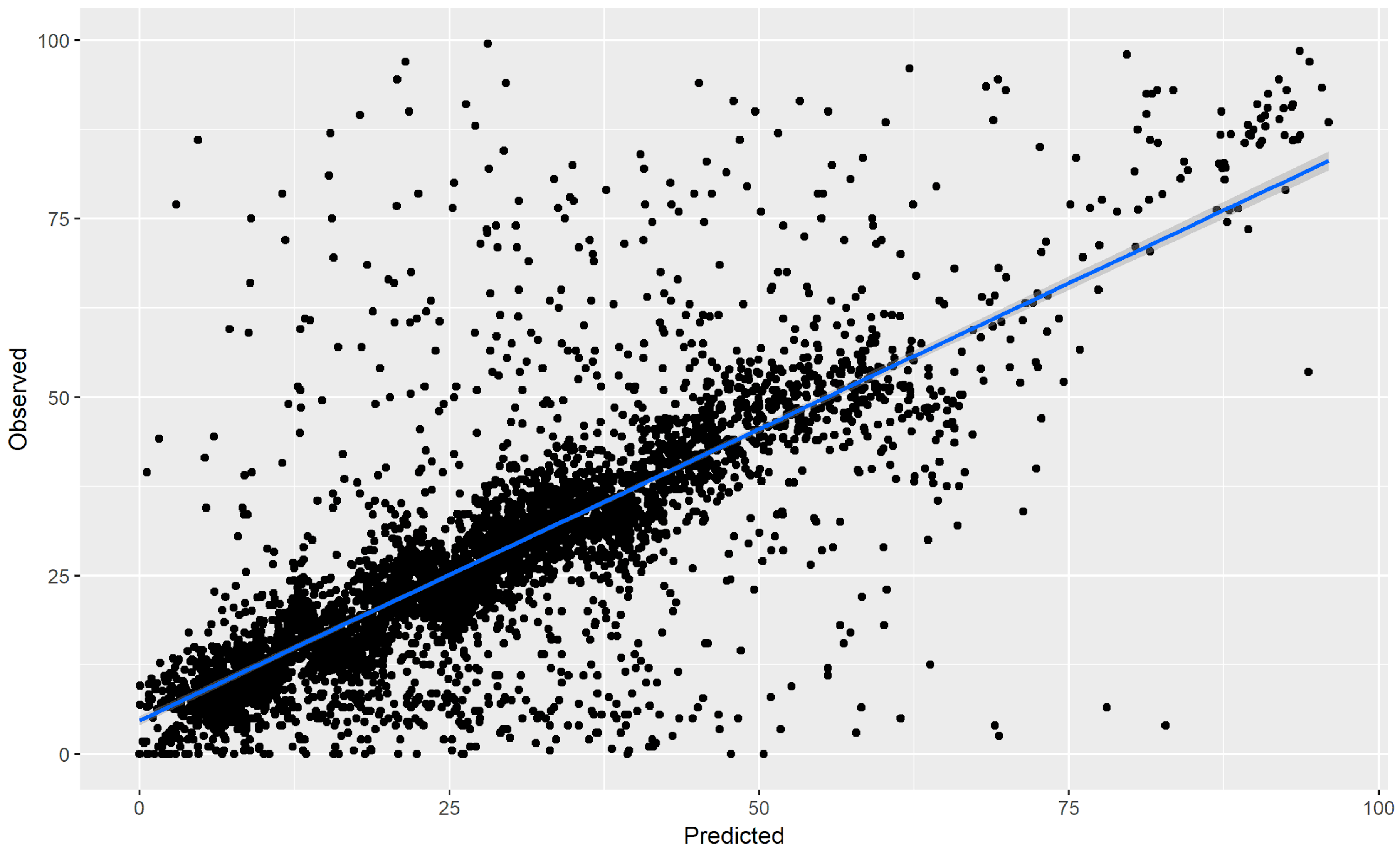

3.3. Predicting Depressed Mood

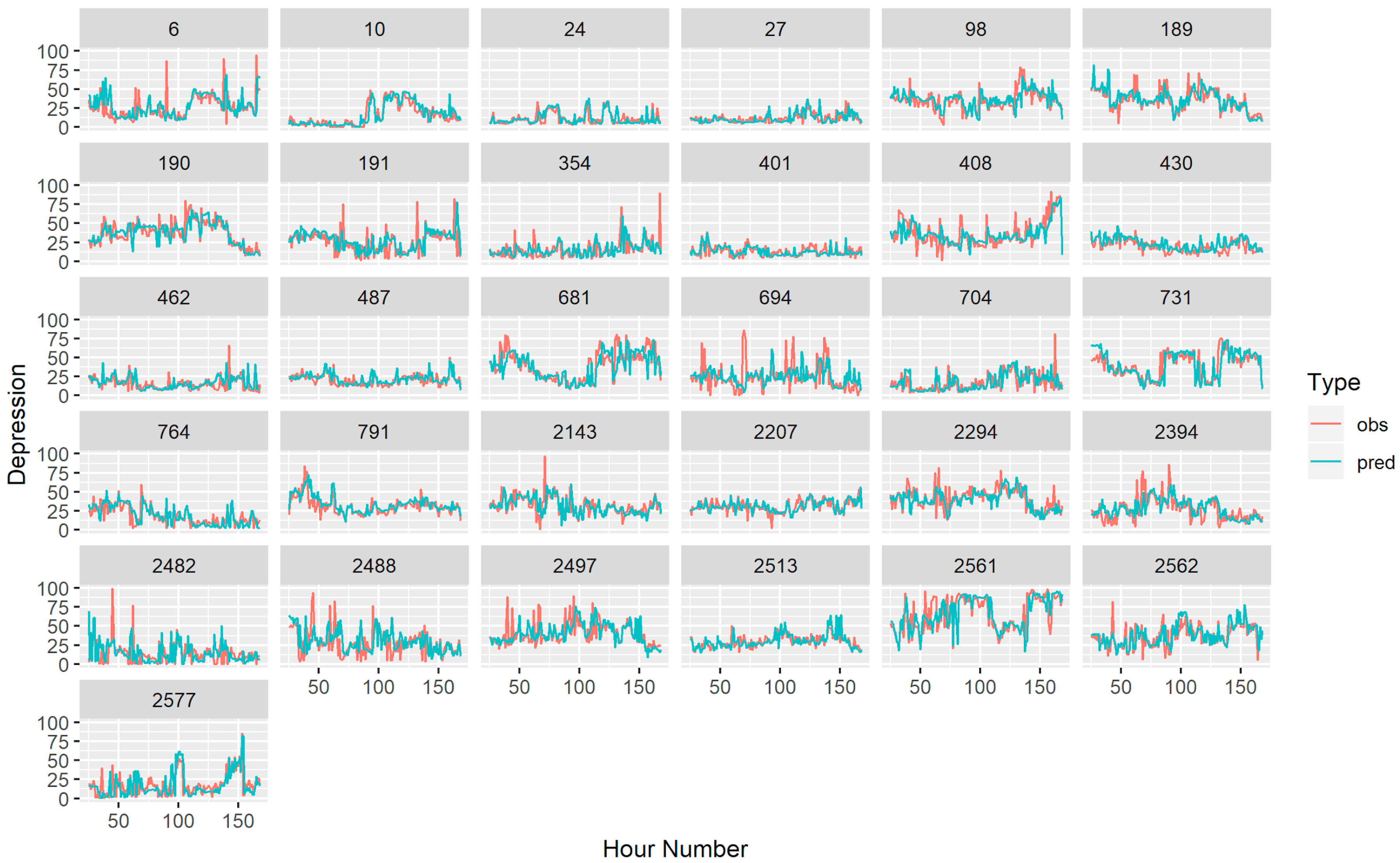

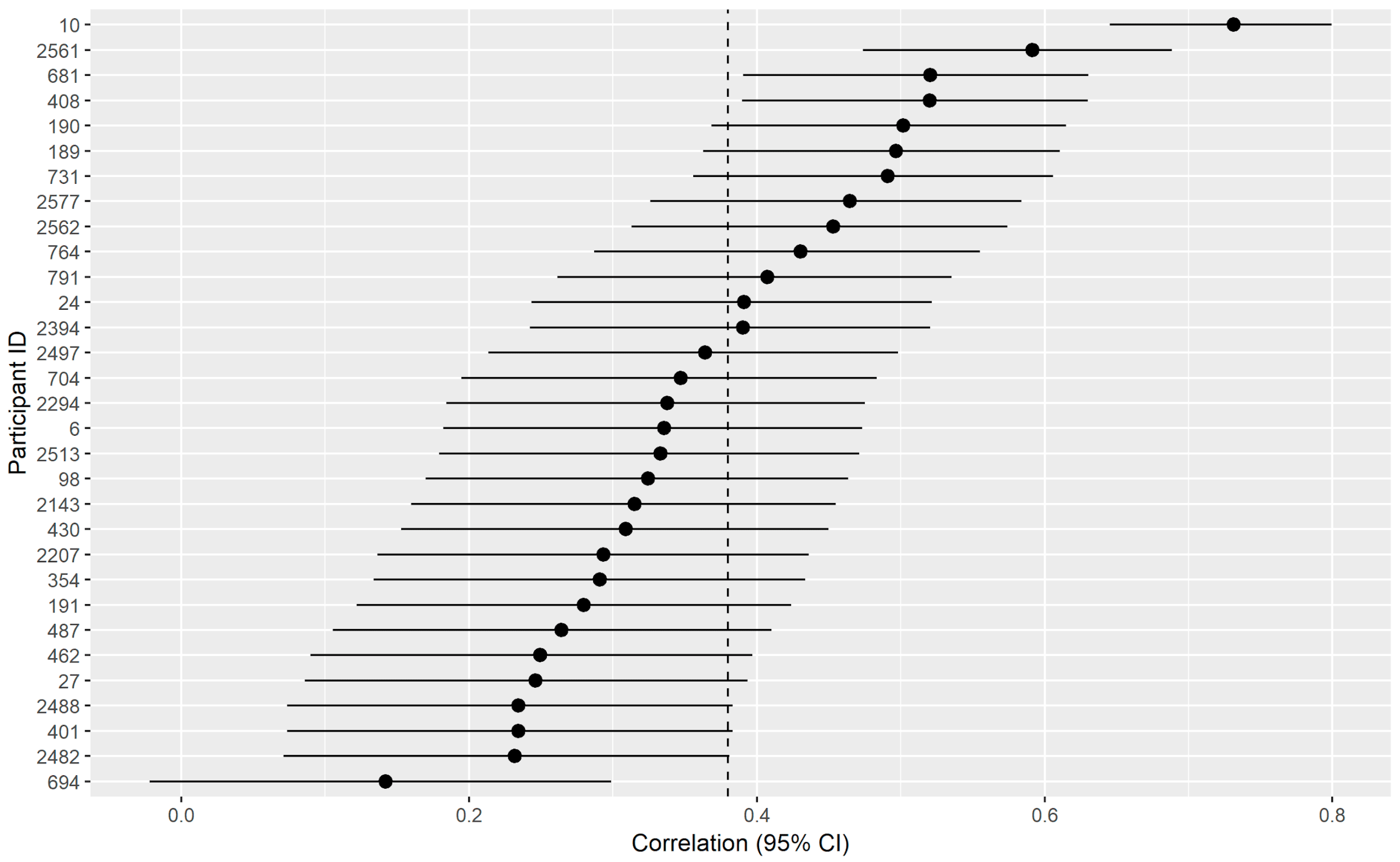

3.4. Idiographic Predictions

3.5. Follow-up Sensitivity Analyses

4. Discussion

Author Contributions

Funding

Conflicts of Interest

References

- Cousins, C.; Servaty-Seib, H.; Lockman, J. College Student Adjustment and Coping for Bereaved and Nonbereaved College Students. OMEGA J. Death Dying 2014, 74.

- Blanco, C.; Okuda, M.; Wright, C.; Hasin, D.S.; Grant, B.F.; Liu, S.-M.; Olfson, M. Mental Health of College Students and Their Non–College-Attending Peers. Arch. Gen. Psychiatr. 2008, 65, 1429–1437.

- Bose, J.; Hedden, S.L.; Lipari, R.N.; Park-Lee, E. Key Substance Use and Mental Health Indicators in the United States: Results From the 2017 National Survey on Drug Use and Health [Internet]. Substance Abuse and Mental Health Services Administration, 2018. Available online: https://www.samhsa.gov/data/report/2017-nsduh-annual-national-report (accessed on 12 June 2019).

- Gallagher, R.P. Thirty Years of the National Survey of Counseling Center Directors: A Personal Account. J. Coll. Stud. Psychother. 2012, 26, 172–184.

- Twenge, J.M.; Gentile, B.; DeWall, C.N.; Ma, D.; Lacefield, K.; Schurtz, D.R. Birth cohort increases in psychopathology among young Americans, 1938–2007: A cross-temporal meta-analysis of the MMPI. Clin. Psychol. Rev. 2010, 30, 145–154.

- Whooley, M.A.; Wong, J.M. Depression and Cardiovascular Disorders. Annu. Rev. Clin. Psychol. 2013, 9, 327–354.

- Bradley, K.L.; Santor, D.A.; Oram, R. A Feasibility Trial of a Novel Approach to Depression Prevention: Targeting Proximal Risk Factors and Application of a Model of Health-Behaviour Change. Can. J. Community Ment. Heal. 2016, 35, 47–61.

- Pew Research Center Staff. Mobile Fact Sheet. Pew Research Center. Available online: https://www.pewinternet.org/fact-sheet/mobile/ (accessed on 12 June 2019).

- Chow, P.I.; Fua, K.; Huang, Y.; Bonelli, W.; Xiong, H.; Barnes, L.E.; Teachman, B.A.; Saeb, S.; Burns, M.; Schueller, S.; et al. Using Mobile Sensing to Test Clinical Models of Depression, Social Anxiety, State Affect, and Social Isolation Among College Students. J. Med. Internet Res. 2017, 19, e62.

- Ben-Zeev, D.; Scherer, E.A.; Wang, R.; Xie, H.; Campbell, A.T. Next-generation psychiatric assessment: Using smartphone sensors to monitor behavior and mental health. Psychiatr. Rehabil. J. 2015, 38, 218–226.

- Saeb, S.; Lattie, E.G.; Kording, K.; Mohr, D.C.; Mosa, A.; Torous, J.; Wahle, F.; Barnes, L.; Faurholt-Jepsen, M.; Paglialonga, A. Mobile Phone Detection of Semantic Location and Its Relationship to Depression and Anxiety. JMIR mHealth uHealth 2017, 5, e112.

- Farhan, A.A.; Lu, J.; Bi, J.; Russell, A.; Wang, B.; Bamis, A. Multi-view Bi-clustering to Identify Smartphone Sensing Features Indicative of Depression. In Proceedings of the 2016 IEEE First International Conference on Connected Health: Applications, Systems and Engineering Technologies (CHASE); Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2016; pp. 264–273.

- Pratap, A.; Atkins, D.C.; Renn, B.N.; Tanana, M.; Mooney, S.D.; Anguera, J.A.; Areán, P.A. The accuracy of passive phone sensors in predicting daily mood. Depress. Anxiety 2019, 36, 72–81.

- Saeb, S.; Lattie, E.G.; Schueller, S.M.; Kording, K.; Mohr, D.C. The relationship between mobile phone location sensor data and depressive symptom severity. PeerJ 2016, 4.

- Ware, S.; Yue, C.; Morillo, R.; Lu, J.; Shang, C.; Kamath, J.; Bamis, A.; Bi, J.; Russell, A.; Wang, B. Large-scale Automatic Depression Screening Using Meta-data from WiFi Infrastructure. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 1–27.

- Jacobson, N.C.; Weingarden, H.; Wilhelm, S. Using Digital Phenotyping to Accurately Detect Depression Severity. J. Nerv. Ment. Dis. 2019, 207, 893–896.

- Jacobson, N.C.; Weingarden, H.; Wilhelm, S. Digital biomarkers of mood disorders and symptom change. NPJ Digit. Med. 2019, 2, 3.

- Wahle, F.; Kowatsch, T.; Fleisch, E.; Rufer, M.; Weidt, S. Mobile Sensing and Support for People with Depression: A Pilot Trial in the Wild. JMIR mHealth uHealth 2016, 4, e111.

- Faurholt-Jepsen, M.; Frost, M.; Vinberg, M.; Christensen, E.M.; Bardram, J.E.; Kessing, L.V. Smartphone data as objective measures of bipolar disorder symptoms. Psychiatr. Res. Neuroimag. 2014, 217, 124–127.

- Faurholt-Jepsen, M.; Vinberg, M.; Frost, M.; Debel, S.; Christensen, E.M.; Bardram, J.E.; Kessing, L.V. Behavioral activities collected through smartphones and the association with illness activity in bipolar disorder. Int. J. Methods Psychiatr. Res. 2016, 25, 309–323.

- Beiwinkel, T.; Kindermann, S.; Maier, A.; Kerl, C.; Moock, J.; Barbian, G.; Rössler, W.; Faurholt-Jepsen, M.; Mayora, O.; Buntrock, C. Using Smartphones to Monitor Bipolar Disorder Symptoms: A Pilot Study. JMIR Ment. Heal. 2016, 3, e2.

- Grünerbl, A.; Muaremi, A.; Osmani, V.; Bahle, G.; Ohler, S.; Tröster, G.; Mayora, O.; Haring, C.; Lukowicz, P.; Troester, G. Smartphone-Based Recognition of States and State Changes in Bipolar Disorder Patients. IEEE J. Biomed. Heal. Inform. 2014, 19, 140–148.

- Gruenerbl, A.; Osmani, V.; Bahle, G.; Carrasco-Jimenez, J.C.; Oehler, S.; Mayora, O.; Haring, C.; Lukowicz, P. Using smart phone mobility traces for the diagnosis of depressive and manic episodes in bipolar patients. In Proceedings of the 5th Augmented Human International Conference; Association for Computing Machinery: New York, NY, USA, 2014; pp. 1–8.

- Lu, J.; Shang, C.; Yue, C.; Morillo, R.; Ware, S.; Kamath, J.; Bamis, A.; Russell, A.; Wang, B.; Bi, J. Joint Modeling of Heterogeneous Sensing Data for Depression Assessment via Multi-task Learning. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 1–21.

- Osmani, V. Smartphones in Mental Health: Detecting Depressive and Manic Episodes. IEEE Pervasive Comput. 2015, 14, 10–13.

- Palmius, N.; Tsanas, A.; Saunders, K.E.; Bilderbeck, A.C.; Geddes, J.R.; Goodwin, G.M.; De Vos, M. Detecting Bipolar Depression from Geographic Location Data. IEEE Trans. Biomed. Eng. 2016, 64, 1761–1771.

- Mehrotra, A.; Hendley, R.; Musolesi, M. Towards multi-modal anticipatory monitoring of depressive states through the analysis of human-smartphone interaction. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing—UbiComp ’16; Association for Computing Machinery: Heidelberg, Germany, 2016; pp. 1132–1138.

- Zulueta, J.; Piscitello, A.; Rasic, M.; Easter, R.; Babu, P.; Langenecker, S.A.; McInnis, M.; Ajilore, O.; Nelson, P.C.; Ryan, K.A.; et al. Predicting Mood Disturbance Severity with Mobile Phone Keystroke Metadata: A BiAffect Digital Phenotyping Study. J. Med. Internet Res. 2018, 20, e241.

- Doryab, A.; Min, J.K.; Wiese, J.; Zimmerman, J.; Hong, J.I. Detection of Behavior Change in People with Depression. 2014. Available online: https://kilthub.cmu.edu/articles/Detection_of_behavior_change_in_people_with_depression/6469988 (accessed on 12 June 2019).

- Canzian, L.; Musolesi, M. Trajectories of depression. In Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2015 ACM International Symposium on Wearable Computers—UbiComp ’15; Association for Computing Machinery (ACM): New York, NY, USA, 2015; pp. 1293–1304.

- Burns, M.; Begale, M.; Duffecy, J.; Gergle, D.; Karr, C.J.; Giangrande, E.; Mohr, D.C.; Proudfoot, J.; Dear, B. Harnessing Context Sensing to Develop a Mobile Intervention for Depression. J. Med. Internet Res. 2011, 13, e55.

- Murray, G. Diurnal mood variation in depression: A signal of disturbed circadian function? J. Affect. Disord. 2007, 102, 47–53.

- Hall, D.P.; Sing, H.C.; Romanoski, A.J. Identification and characterization of greater mood variance in depression. Am. J. Psychiat. 1991, 148, 1341–1345.

- Variability of Activity Patterns Across Mood Disorders and Time of day/BMC Psychiatry/Full Text. Available online: https://bmcpsychiatry.biomedcentral.com/articles/10.1186/s12888-017-1574-x (accessed on 24 August 2019).

- Peeters, F.; Berkhof, J.; Delespaul, P.; Rottenberg, J.; Nicolson, N.A. Diurnal mood variation in major depressive disorder. Emotion 2006, 6, 383–391.

- Krane-Gartiser, K.; Vaaler, A.E.; Fasmer, O.B.; Sørensen, K.; Morken, G.; Scott, J. Variability of activity patterns across mood disorders and time of day. BMC Psychiat. 2017, 17, 404.

- Naragon-Gainey, K. Affective models of depression and anxiety: Extension to within-person processes in daily life. J. Affect. Disord. 2019, 243, 241–248.

- Nahum-Shani, I.; Smith, S.N.; Spring, B.; Collins, L.M.; Witkiewitz, K.; Tewari, A.; Murphy, S.A. Just-in-Time Adaptive Interventions (JITAIs) in Mobile Health: Key Components and Design Principles for Ongoing Health Behavior Support. Ann. Behav. Med. 2017, 52, 446–462.

- Wilhelm, S.; Weingarden, H.; Ladis, I.; Braddick, V.; Shin, J.; Jacobson, N.C. Cognitive-Behavioral Therapy in the Digital Age: Presidential Address. Behav. Ther. 2019, 51, 1–14.

- Fisher, A.J. Toward a Dynamic Model of Psychological Assessment: Implications for Personalized Care. J. Consul. Clin. Psychol. 2015, 83, 825–836.

- Fisher, A.J.; Bosley, H.G.; Fernandez, K.C.; Reeves, J.W.; Soyster, P.D.; Diamond, A.E.; Barkin, J. Open trial of a personalized modular treatment for mood and anxiety. Behav. Res. Ther. 2019, 116, 69–79.

- Fisher, A.J.; Medaglia, J.D.; Jeronimus, B.F. Lack of group-to-individual generalizability is a threat to human subject’s research. Proc. Natl. Acad. Sci. USA 2018, 115, E6106–E6115.

- Fisher, A.J.; Reeves, J.W.; Lawyer, G.; Medaglia, J.D.; Rubel, J.A. Exploring the idiographic dynamics of mood and anxiety via network analysis. J. Abnorm. Psychol. 2017, 126, 1044–1056.

- Abdullah, S.; Matthews, M.; Frank, E.; Doherty, G.; Gay, G.; Choudhury, T. Automatic detection of social rhythms in bipolar disorder. J. Am. Med. Inform. Assoc. 2016, 23, 538–543.

- Robinson, O.C. The Idiographic/Nomothetic Dichotomy: Tracing Historical Origins of Contemporary Confusions. Hist. Philosoph. Psychol. 2011, 32–39.

- DeMatteo, D.; Batastini, A.; Foster, E.; Hunt, E. Individualizing Risk Assessment: Balancing Idiographic and Nomothetic Data. J. Forensic Psychol. Pr. 2010, 10, 360–371.

- Torous, J.; Powell, A.C. Current research and trends in the use of smartphone applications for mood disorders. Internet Interv. 2015, 2, 169–173.

- Muaremi, A.; Gravenhorst, F.; Grünerbl, A.; Arnrich, B.; Tröster, G. Assessing Bipolar Episodes Using Speech Cues Derived from Phone Calls. In Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2014; Volume 100, pp. 103–114.

- Lovibond, S.H.; Lovibond, P.F. Manual for the Depression Anxiety & Stress Scales, 2nd ed.; Psychology Foundation of Australia: Sydney, Australia, 1995.

- Swartz, H.A. Recognition and Treatment of Depression. AMA J. Ethic 2005, 7, 430–434.

- Page, A.C.; Hooke, G.R.; Morrison, D.L.; Reicher, S. Psychometric properties of the Depression Anxiety Stress Scales (DASS) in depressed clinical samples. Br. J. Clin. Psychol. 2007, 46, 283–297.

- Brown, T.A.; Chorpita, B.F.; Korotitsch, W.; Barlow, D.H. Psychometric properties of the Depression Anxiety Stress Scales (DASS) in clinical samples. Behav. Res. Ther. 1997, 35, 79–89.

- Wardenaar, K.J.; Wanders, R.B.K.; Jeronimus, B.F.; de Jonge, P. The Psychometric Properties of an Internet-Administered Version of the Depression Anxiety and Stress Scales (DASS) in a Sample of Dutch Adults. J. Psychopathol. Behav. Assess. 2017, 40, 318–333.

- Cacioppo, J.T.; Hughes, M.E.; Waite, L.J.; Hawkley, L.C.; Thisted, R.A. Loneliness as a specific risk factor for depressive symptoms: Cross-sectional and longitudinal analyses. Psychol. Aging 2006, 21, 140–151.

- Heikkinen, R.-L.; Kauppinen, M. Depressive symptoms in late life: A 10-year follow-up. Arch. Gerontol. Geriatr. 2004, 38, 239–250.

- Ouellet, R.; Joshi, P. Loneliness in Relation to Depression and Self-Esteem. Psychol. Rep. 1986, 58, 821–822.

- van Beljouw, I.M.J.; van Exel, E.; Gierveld, J.D.J.; Comijs, H.; Heerings, M.; Stek, M.L.; van Marwijk, H. “Being all alone makes me sad”: Loneliness in older adults with depressive symptoms. Int. Psychogeriatr. 2014, 26, 1541–1551.

- Reis, J. The Structure of Depression in Community Based Young Adolescent, Older Adolescent, and Adult Mothers. Fam. Relat. 1989, 38, 164.

- Arving, C.; Glimelius, B.; Brandberg, Y. Four weeks of daily assessments of anxiety, depression and activity compared to a point assessment with the Hospital Anxiety and Depression Scale. Qual. Life Res. 2007, 17, 95–104.

- Bolkhovsky, J.B.; Scully, C.G.; Chon, K.H. Statistical analysis of heart rate and heart rate variability monitoring using smart phone cameras. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2012; Volume 2012, pp. 1610–1613.

- Geurts, P.; Ernst, D.; Wehenkel, L. Extremely randomized trees. Mach. Learn. 2006, 63, 3–42.

- Wright, M.N.; Ziegler, A. A Fast Implementation of Random Forests for High Dimensional Data in C++ and R. J. Stat. Softw. 2017, 77.

- Jacobson, N.C.; Summers, B.; Wilhelm, S. Digital Biomarkers of Social Anxiety Severity: Digital Phenotyping Using Passive Smartphone Sensors. J. Med. Internet Res. 2020, 22, e16875.

- Jacobson, N.C.; O’Cleirigh, C. Objective digital phenotypes of worry severity, pain severity and pain chronicity in persons living with HIV. Br. J. Psychiatr. 2019, 1–3.

- Jacobson, N.C.; Lord, K.A.; Newman, M.G. Perceived emotional social support in bereaved spouses mediates the relationship between anxiety and depression. J. Affect. Disord. 2017, 211, 83–91.

- Jacobson, N.C.; Newman, M.G. Perceptions of close and group relationships mediate the relationship between anxiety and depression over a decade later. Depress. Anxiety 2016, 33, 66–74.

- Jacobson, N.C.; Newman, M.G. Anxiety and Depression as Bidirectional Risk Factors for One Another: A Meta-Analysis of Longitudinal Studies. Psychol. Bull. 2017, 143, 1155–1200.

- Jacobson, N.C.; Newman, M.G. Avoidance mediates the relationship between anxiety and depression over a decade later. J. Anxiety Disord. 2014, 28, 437–445.

- Roche, M.J.; Jacobson, N.C. Elections Have Consequences for Student Mental Health: An Accidental Daily Diary Study. Psychol. Rep. 2018, 122, 451–464.

- Jacobson, N.C.; Lekkas, D.; Price, G.; Heinz, M.V.; Song, M.; O’Malley, A.J.; Barr, P.J. Flattening the Mental Health Curve: COVID-19 Stay-at-Home Orders Are Associated With Alterations in Mental Health Search Behavior in the United States. JMIR Ment. Heal. 2020, 7, e19347.

| Study | Timescale | Design Summary | Result |
|---|---|---|---|
| [18] | 8 weeks | smartphone mobile sensing and support | 59.1–60.1% accuracy, 62.3–72.5% sensitivity, 47.3–60.8% specificity |
| [24] | 13 weeks | smartphone sensors and wearable sensors | R² = 0.44, F1 = 0.77 |
| [11] | 3–6 weeks | mobile phone sensors and location data | AUC = 0.88 |
| [30] | 1–14 days later | location data from smartphone sensors | 71–74% sensitivity, 78–80% specificity |
| [13] | Daily | smartphone sensors | median area under the curve (AUC) > 0.50 for 80.6% of persons |
| Current Study | Hourly | smartphone sensors | r = 0.587 across persons, r = 0.376 within persons |
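
The last row of the table reports two different correlations, one across persons and one within persons. The table does not say how they were computed, so the sketch below is only one common convention and should not be read as the authors' procedure: the across-person value correlates person-level means of observed and predicted mood, and the within-person value correlates person-mean-centered observed and predicted scores pooled over everyone. The function names and the toy data are hypothetical.

```python
from collections import defaultdict
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def across_and_within(rows):
    """Split prediction accuracy into across- and within-person parts.

    rows: iterable of (person_id, observed, predicted) tuples.
    Returns (across_r, within_r). This decomposition is an assumed
    convention, not a procedure taken from the source table.
    """
    by_person = defaultdict(list)
    for pid, obs, pred in rows:
        by_person[pid].append((obs, pred))

    mean_obs, mean_pred = [], []   # one entry per person
    dev_obs, dev_pred = [], []     # one entry per observation
    for pairs in by_person.values():
        mo = sum(o for o, _ in pairs) / len(pairs)
        mp = sum(p for _, p in pairs) / len(pairs)
        mean_obs.append(mo)
        mean_pred.append(mp)
        # Centering on each person's own mean removes stable
        # between-person differences from the pooled scores.
        dev_obs.extend(o - mo for o, _ in pairs)
        dev_pred.extend(p - mp for _, p in pairs)

    return pearson(mean_obs, mean_pred), pearson(dev_obs, dev_pred)


# Hypothetical toy data, not taken from the study.
toy = [
    ("a", 1.0, 2.0), ("a", 2.0, 1.0), ("a", 3.0, 3.0),
    ("b", 5.0, 6.0), ("b", 6.0, 5.0), ("b", 7.0, 7.0),
    ("c", 9.0, 8.0), ("c", 10.0, 9.0), ("c", 11.0, 10.0),
]
across_r, within_r = across_and_within(toy)
print(f"across persons r = {across_r:.3f}, within persons r = {within_r:.3f}")
```

In this toy set the predictions track who is more depressed on average much better than they track each person's hour-to-hour changes, which is the same qualitative pattern as a higher across-person than within-person r.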

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jacobson, N.C.; Chung, Y.J. Passive Sensing of Prediction of Moment-To-Moment Depressed Mood among Undergraduates with Clinical Levels of Depression Sample Using Smartphones. Sensors 2020, 20, 3572. https://doi.org/10.3390/s20123572
