Abstract

In this paper, a customizable wearable 3D-printed bionic arm is designed, fabricated, and optimized for a right arm amputee. An experimental test has been conducted for the user, where control of the artificial bionic hand is accomplished successfully using surface electromyography (sEMG) signals acquired by a multi-channel wearable armband. The 3D-printed bionic arm was designed for the low cost of 295 USD, and was lightweight at 428 g. To facilitate a generic control of the bionic arm, sEMG data were collected for a set of gestures (fist, spread fingers, wave-in, wave-out) from a wide range of participants. The collected data were processed and features related to the gestures were extracted for the purpose of training a classifier. In this study, several classifiers based on neural networks, support vector machine, and decision trees were constructed, trained, and statistically compared. The support vector machine classifier was found to exhibit an 89.93% success rate. Real-time testing of the bionic arm with the optimum classifier is demonstrated.

1. Introduction

Research into advanced medical and prosthetic devices has generated significant attention in recent years due to the increasing demand for reliable bionic hands capable of manifesting the patient’s intentions to perform various tasks. In general, gesture recognition techniques have emerged as a key enabling feature for improving both the accuracy and functionality of bionic hands. They give the user control over delicate operations in dangerous situations, help patients with movement disorders and disabilities, and support the rehabilitation training process.

The use of bionic hands is not only limited to medical use, but has also found innumerable applications in industrial settings; artificial bionic hands can perform certain tasks in hazardous or restricted environments while maintaining the user’s level of dexterity and natural response time. Under such circumstances, vision-based gesture recognition using image detection [1,2,3,4,5,6,7] could be sufficient to provide the correct hand motion.

Furthermore, several technologies, such as electrical impedance tomography (EIT), have been used for improving motion detection. Zhang et al. [8] proposed a hand gesture recognition system based on EIT. The EIT system measures the internal electrical impedance and estimates the interior structure by using surface electrodes and high-frequency alternating current (AC). Although the proposed system achieves high accuracy, direct contact with the skin is required for proper performance.

Recently, wearable devices based on surface electromyography (sEMG) have become quite attractive in the human gesture recognition domain, as these devices capture the characteristics of muscle activity. In general, the sEMG signals obtained from a human arm contain sufficient information with respect to the intended and performed hand gestures [9]. Wheeler et al. [10] introduced a gesture-based control system utilizing sEMG signals taken from a forearm, where the proposed system successfully emulated joystick movement for virtual devices. Furthermore, Saponas et al. [9] proposed a technique based on ten sEMG sensors worn in a narrow band around the upper forearm to distinguish the position and pressure of finger presses.

Generally, the feature extraction and pattern recognition stages are very important for the gesture recognition systems to capture gestures well. In the feature extraction stage [11,12], the eigenvalues and the feature vectors for each sEMG sample are selected for classifying the gestures. This procedure can be achieved using several approaches, such as time-domain, frequency-domain, and time–frequency-domain features. On the other hand, the classifier plays an important role in the pattern recognition block, where the most commonly used classifiers are the artificial neural network (ANN), linear discriminant analysis (LDA), and support vector machine (SVM). Li et al. [13] combined force prediction with finger motion recognition, where the time domain and autoregressive methods were both used to extract features. Furthermore, a principal component analysis (PCA) approach was used for further dimensionality reduction, while an artificial neural network classifier was used to evaluate the finger movement. Khezri et al. [14] proposed a system based on the adaptive neuro-fuzzy inference system to recognize six hand gestures. For the feature extractions, the time and frequency domains and their combination were used to extract eigenvectors, and the system provided a recognition rate of 92%. Karlik et al. [15] used the ANN classifier to perform a pattern recognition of six movements of upper limbs. The results were compared with a gesture recognition system based on fuzzy clustering, where the fuzzy clustering neural network has better classification performance than simple ANN algorithms. Similarly, Chu et al. [16] used a wavelet packet transform to extract the feature vectors, and then a dimensional reduction was performed using the PCA algorithm. Finally, a multi-layer ANN is used to classify the gestures. The results show that the eigenvector extraction procedure has more impact on the recognition accuracy than the ability of the classifiers. Huang et al. 
[17] proposed a system for multilimb movements using the Gaussian mixture model (GMM). The obtained results indicated that the GMM algorithm has a good classification recognition rate at a low computational cost. Noce et al. [18] introduced a new approach for neural control of hand prostheses. This approach is based on pattern recognition applied to the envelope of neural signals. In this approach, sEMG signals were simultaneously recorded from one human amputee, and the envelope of the sEMG signals was computed, while a support vector machine (SVM) algorithm was adopted to decode the user’s intention. The results obtained in this study showed that well-known techniques of sEMG pattern recognition can be used to process the neural signal and can pave the way to the application of neural gesture decoding in upper limb prosthetics. Shi et al. [19] proposed a bionic hand controlled by hand gestures, where the gestures were recognized based on surface EMG signals. The proposed approach was based on extracting multiple features, such as mean absolute value, zero crossing, slope sign change, and waveform length, and a simple k-nearest-neighbors (KNN) algorithm was used as a classifier to perform hand posture recognition. The results show that the KNN classifier was able to recognize four different hand postures.

The key contribution of this work is the design and fabrication of a low-cost 3D-printed bionic arm for an amputee. The proposed solution offers a proof-of-concept demonstration of a 428 g bionic arm with a cost of 295 USD. The Myo armband technology was leveraged for gesture recognition and training. A comparison between a variety of algorithms based on a neural network, support vector machine, and decision trees was performed to select the most suitable one for the proposed design. The best classifier was then used for online gesture recognition and the control of different movements of the bionic hand.

2. Bionic Hand

2.1. Methodology

The bionic arm implemented and tested was customized for a specific user to fit his amputation conditions. It was made to provide the user with the ability to perform basic grasping actions and allow the user to effectively participate in his daily activities. The user was born with a small portion of his right arm, as shown in Figure 1. The user is a 24-year-old male with no other significant health issues. He had used a number of prosthetic arms previously, whose components and make are not detailed. He found each of these arms to be insufficiently functional, heavy, uncomfortable, or expensive. The user gave his informed consent for inclusion before he participated in the study. The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the Ethics Committee with reference (SP190402-01).

Figure 1.

Amputation case with the user wearing a Myo armband.

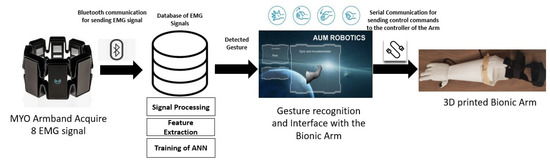

The user’s feedback was taken into consideration when working on the design of a low-cost customized bionic arm. The user was heavily involved in the mechanical design phase of the bionic arm. The prosthetic arm presented in this work is controlled by a multi-channel sEMG sensor that is used to acquire the muscles’ activities. The muscles’ activities represent different gestures, which are used to perform the required action by the hand attached to the arm. The hand has 9 degrees of freedom (DOF), which enable it to perform different accurate actions as per the user’s demand. The schematic chart illustrating the steps required to control the bionic arm is shown in Figure 2. A database of sEMG gestures is created with all of the units associated with signal processing, feature recognition, and machine learning to catalog the signals that are required for movements of the finger actuators in the bionic hand to perform specific hand postures.

Figure 2.

Schematic chart to control the bionic hand.

2.2. Multi-Channel Wearable Armband

Different wearable sensor systems are available in the market for acquiring different bio-signals, such as electromyography (EMG), electroencephalography (EEG), electrodermal activity (EDA), and electrocardiogram (ECG) [20]. The Myo armband is a wearable armband used primarily for Human Computer Interaction (HCI) applications, where humans can deal with computers and systems in an interactive way. The Myo consists of eight EMG sensors and an inertial measurement unit (IMU), which comprises a gyroscope, an accelerometer, and a magnetometer [21]. Due to the design of the multi-channel wearable armband, the user wears it on the small portion of the forearm below the elbow. Myo is used for detecting the different gestures and movements that are applied for sEMG database collection. The database, signal processing, and machine learning techniques described later are used for the accurate detection of four different gestures.

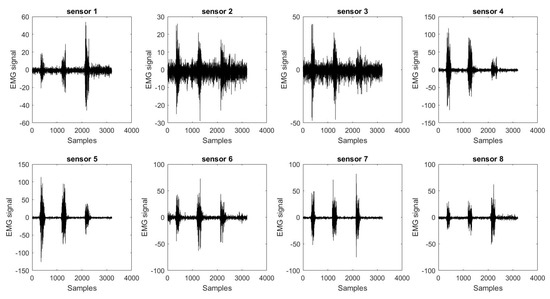

The user was trained to perform the following four gestures: Fist (close), spread fingers (open), wave-in, and wave-out. The detected gestures were displayed on the screen to provide the user with feedback during the training phase [22]. The concept behind the creation of the sEMG database using Myo is to enable a more generic arm design for any amputee with a similar arm amputation. The combination of the 8 different sEMG electrodes of Myo enabled more sEMG signal data for a better gesture recognition system. Figure 3 shows the raw sEMG signals acquired by Myo while the user is performing hand gestures prior to signal processing. The sEMG sensors of the armband are numbered from 1 to 8 to be able to match the signals with the muscles. Sensor 3, as shown in Figure 1, is placed in the area least affected by the surrounding muscles.

Figure 3.

An example of the eight electromyography (EMG) sensors’ raw data collected by the Myo armband.

2.3. Bionic Arm Design

Amputees may be disappointed with particular aspects of the limbs available in the market due to their limitations. A customized design for the user through an individual design process has been undertaken here, which has the capacity to target a design that fulfills the needs of an individual amputee case, particularly in terms of its low cost and light weight. The current devices available in the market range from 4000 up to 20,000 USD [23]. A detailed market analysis of the cost associated with prosthetic limbs was compiled by the authors in [24]. A simple cosmetic arm and hand may cost between 3000 and 5000 USD. A functional prosthetic arm, on the other hand, may cost between 20,000 and 30,000 USD [24]. The main target is to manufacture a cheap and affordable bionic arm for amputees costing around 295 USD. Nowadays, the advancement of and easy access to 3D-printing technology have reduced the cost of manufacturing bionic arms and provide easier solutions for the design of prosthetic arms customized for users [22]. The user’s left arm dimensions were measured to fabricate the right arm with the same dimensions for a symmetric look. A mirrored geometry was assumed using computer-aided design (CAD) software. The dimensions of the affected arm were taken into consideration and embedded in the design to develop a wearable arm with enough room for the Myo armband to fit and be concealed from view.

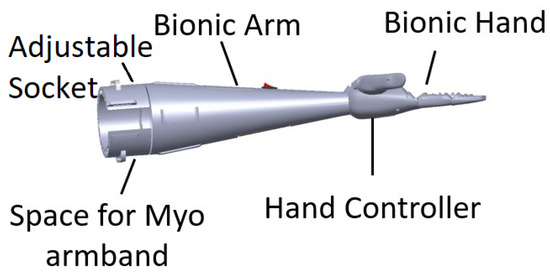

The 3D model design for the bionic arm is shown in Figure 4. The design is based on different criteria, as listed and described below:

Figure 4.

Bionic arm 3D model on computer-aided design (CAD) software.

- Adjustable socket: This is the portion that joins the limb (stump) to the bionic arm. The socket designed for this arm is adjusted by a strap. The user wears the Myo armband at a set location on his arm before adjusting the size of the socket to obtain a tight fit. Designs were iteratively created and tested, and the subject’s feedback was taken into consideration, until an improved design was reached, implemented, and tested. Comfort is one of the most important points considered in the design of the socket, allowing the user to wear the bionic arm for up to four hours with the help of the bicep support.

- Dimensions: Symmetry of arm length is critical for the user to avoid serious muscle asymmetry symptoms and muscle pain caused by imbalance. Consequently, the designed arm was engineered to match the dimensions of the physical left arm.

- Artificial Hand: An assembled 3D model of the open-source Brunel hand was made to ensure the fit between the arm and the hand. The hand has 9 degrees of freedom and 4 degrees of actuation, and it is able to perform complex tasks with precision. The four linear motors are attached to threads along with springs to allow smooth linear motion. These linear actuators provide feedback that allows precise control of the finger positions. The majority of the parts are printed with polylactic acid (PLA) material to provide a strong structure, whereas the outer layer and the joints are printed with thermoplastic polyurethane (TPU) to provide soft cushioning and flexible movement. Small printers were used for the small parts and an industrial-size printer for the larger parts. The complete hand fabrication required less than 2 kg of filament. The total weight of the Brunel hand adds up to just below 350 g.

- Bicep Support: An arm harness made of straps was added to release the pressure off the socket joint with a bicep support made of 25 mm width black nylon strap.

- Myo Integration: The Myo armband is integrated in the bionic arm at the position to ensure correct surface electromyography signal capturing.

- Light Weight: The arm is made to be lightweight by strategically designing the arm to fulfill the design requirements ensuring the strength of the bionic arm at the same time. The total weight of the arm, including the hand with the actuators and excluding the Myo armband, is 428 g.

- Stress Analysis: The constructed prototype was tested using finite element analysis software. The analysis indicates that lifting a 2 kg load is possible with the fixture at the insertion point and the load on the far end, while taking into consideration the 400 g artificial hand. Experimental load tests indicated that the user can carry a maximum load of 4 kg for 10 s or 3 kg for 30 s before feeling stress on his muscles. In one test, the user carried a load of 1.5 kg for 60 s, as shown in Figure 5.

Figure 5. Bionic arm load test.

- Electronics and Battery: To ensure safe and organized assembly, the electronic wiring and cables were concealed, while the battery was placed in the user’s pocket to minimize weight.

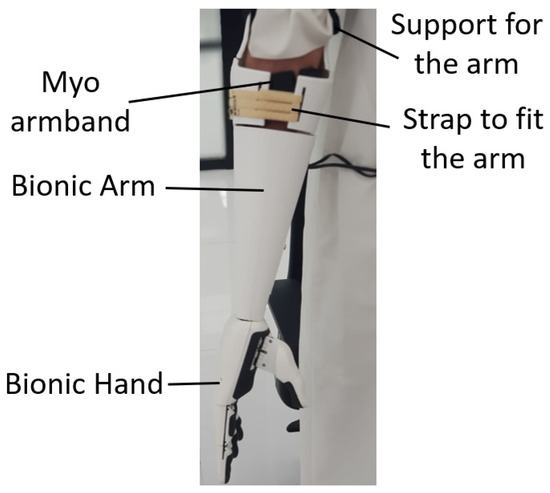

After completion and evaluation of the design, a large-scale industrial 3D printer was used to print the hand parts to be assembled with actuators and electronics. The arm part up to the socket was printed in a single print. The cost estimation for the arm includes the electronics, actuators, and the 3D-printed material used in the hand. The total cost of the whole arm with parts and electronics is around 295 USD, as detailed in Table 1, which is an affordable price compared to commercially available systems on the market. As adapting the proposed arm design to other amputation cases will increase the cost of the arm, the time for measuring, printing, and assembling is also indicated. The final 3D-printed arm is shown in Figure 6.

Table 1.

Detailed cost analysis of the bionic arm.

Figure 6.

Amputee wearing the bionic arm.

2.4. Electronics and Control

The bionic hand actuators are controlled by a Chestnut board placed inside the bionic hand [25], featuring the ARM Cortex M0+ Processor. The Chestnut board is designed to be embedded within robotic hands. It can control up to four motors simultaneously. The mass of the board is 15 g, and its dimensions are small at mm, allowing it to fit inside the bionic hand.

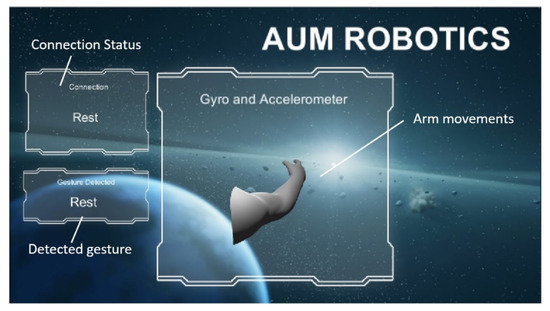

All of the Myo armband data were acquired wirelessly via Bluetooth at a fixed sampling rate of 200 Hz and transmitted serially to a PC. Each transmitted serial datum corresponds to a gesture. These signals are compared with the trained model of gestures. A graphical user interface (GUI) screen for interfacing with the user was developed to indicate the detected gesture, as shown in Figure 7. The gesture detected by the Myo EMG sensors was mapped to a hand movement; for example, closing the hand, opening the hand, or closing one finger or two fingers. These actions are achieved by controlling the motion of the actuators. The control signals are transferred through the Chestnut board to move the actuators of the hand.

Figure 7.

Interface screen on the PC with implemented graphical user interface (GUI).
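As a rough illustration of how a 200 Hz, eight-channel stream might be cut into segments before each segment is compared with the trained model, the sketch below splits a list of samples into overlapping windows. The window length (40 samples, i.e., 200 ms) and the step (20 samples) are assumptions chosen for illustration, since the text does not state them, and `sliding_windows` is a hypothetical helper name.

```python
from typing import List, Sequence

SAMPLING_RATE_HZ = 200  # sEMG sampling rate stated in the text
NUM_CHANNELS = 8        # eight sEMG sensors on the armband


def sliding_windows(samples: Sequence[Sequence[float]],
                    size: int = 40,
                    step: int = 20) -> List[List[Sequence[float]]]:
    """Split a stream of per-sample channel readings into overlapping windows.

    `size` and `step` are illustrative assumptions, not values from the paper.
    """
    if size <= 0 or step <= 0:
        raise ValueError("size and step must be positive")
    windows = []
    for start in range(0, len(samples) - size + 1, step):
        windows.append(list(samples[start:start + size]))
    return windows


# One second of dummy data: 200 samples, each holding 8 channel values.
stream = [[0.0] * NUM_CHANNELS for _ in range(SAMPLING_RATE_HZ)]
windows = sliding_windows(stream)
```

Each window would then be processed and classified independently; a shorter step gives more frequent updates at the cost of more computation.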

Although the bionic arm hardware was customized for a single user, the software was meant to be adaptable for any user. Consequently, sets of gesture data were collected from different participants to enable feature extraction and classification, as detailed in the following section.

3. Feature Extraction and Classification

In this work, a Myo armband was used to collect the data of the selected four gestures from twenty-three participants (twelve males and eleven females with ages ranging from 18 to 45 years). First, the armband was connected wirelessly to the computer, and several numerical algorithms were used to transform the collected data from the official Myo software, called Myo-Connect, to a matrix data format. This procedure simplified the data collection process and allowed visualization of data while recording. Only data used to train and test the offline classifiers were collected using numerical tools, while the online implementation of this project was performed using Python code. There are three distinct phases involved: data collection, data processing and rectification, and feature extraction.

As part of the data collection procedure, participants were instructed to keep an angle of at the elbow joint during data collection. The dataset was collected in several sessions (within a period of two months), and every time the Myo armband was attached at the same location around the forearm of all participants. In the first phase, data were collected from participants in several sessions, where data associated with four hand gestures were recorded: Spread fingers, closed hand, wave-in, and wave-out. The participants were instructed to move their hand from the resting position to perform one of the proposed gestures and then move back to the resting position for around four seconds. The participants repeated this procedure more than 10 times for each single gesture. The same procedure was applied for all four gestures. As a result, a dataset of 2000 files was collected, where each file contains the signals of several gestures. In the second phase, the collected data were processed and rectified in order to simplify the third phase (the feature extraction phase).

3.1. Data Processing

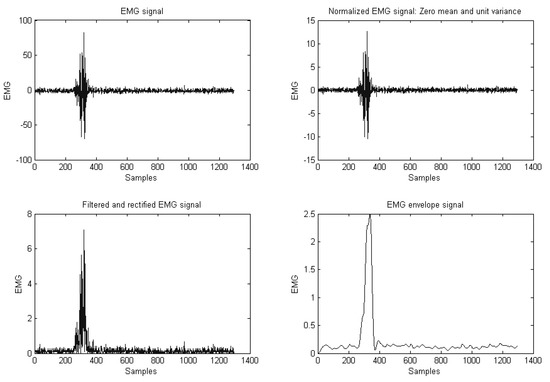

Figure 8 illustrates the second phase of processing raw sEMG signals. First, the raw sEMG signal was modified by removing its mean value, resulting in an AC-coupled signal. Next, a band-pass filter was used to remove distortions and non-EMG effects from the recorded signal. Generally, raw EMG signals have frequency content between 6 and 500 Hz. However, certain fast oscillations, which are caused by unwanted electrical noise, may appear within the signal frequency band. Furthermore, slow oscillations, which are caused by movement artefacts or electrical networks, may also contaminate the EMG signals. These unwanted signals can be removed from the original EMG signal using a band-pass filter with cutoff frequencies of 20 and 450 Hz. The resulting signal is then rectified by taking the absolute value of all EMG values. This step ensures that negative and positive values of the EMG signal do not cancel each other upon further analysis, such as calculating the mean value of the absolute EMG signal or obtaining other features. Finally, the second phase was concluded by capturing the envelope of the filtered and rectified EMG signal, as the obtained shape gives a better reflection of the forces generated by the muscles.

Figure 8.

Filtered and rectified EMG signal.
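The four processing steps (mean removal, band-pass filtering, rectification, and envelope capture) can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the filter type is not given in the text, so a cascade of first-order high-pass and low-pass sections stands in for the band-pass filter, and a trailing moving average stands in for the envelope detector. The 1000 Hz sampling rate is also an assumption, chosen so that the 450 Hz cutoff lies below the Nyquist frequency; for a 200 Hz stream the upper cutoff would have to be lowered.

```python
import math
from typing import List, Tuple


def remove_mean(x: List[float]) -> List[float]:
    """Subtract the mean so the signal is AC coupled."""
    mean = sum(x) / len(x)
    return [v - mean for v in x]


def high_pass(x: List[float], cutoff_hz: float, fs: float) -> List[float]:
    """First-order RC high-pass filter (assumed stand-in for the lower band edge)."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / fs
    a = rc / (rc + dt)
    y = [x[0]]
    for n in range(1, len(x)):
        y.append(a * (y[-1] + x[n] - x[n - 1]))
    return y


def low_pass(x: List[float], cutoff_hz: float, fs: float) -> List[float]:
    """First-order RC low-pass filter (assumed stand-in for the upper band edge)."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / fs
    alpha = dt / (rc + dt)
    y = [alpha * x[0]]
    for n in range(1, len(x)):
        y.append(y[-1] + alpha * (x[n] - y[-1]))
    return y


def moving_average(x: List[float], window: int) -> List[float]:
    """Trailing moving average; early samples use a shorter window."""
    out = []
    for n in range(len(x)):
        lo = max(0, n - window + 1)
        out.append(sum(x[lo:n + 1]) / (n + 1 - lo))
    return out


def envelope(x: List[float], fs: float,
             band: Tuple[float, float] = (20.0, 450.0),
             window: int = 100) -> List[float]:
    """Mean removal -> band-pass -> rectification -> smoothing."""
    centered = remove_mean(x)
    filtered = low_pass(high_pass(centered, band[0], fs), band[1], fs)
    rectified = [abs(v) for v in filtered]
    return moving_average(rectified, window)


# Synthetic test signal: 0.5 s of rest, then a 0.5 s burst of 100 Hz activity,
# both riding on a DC offset of 0.5.
fs = 1000
signal = [0.5] * 500 + [0.5 + math.sin(2 * math.pi * 100 * n / fs) for n in range(500)]
env = envelope(signal, fs)
```

In this synthetic example, the envelope stays near zero during the rest period and rises during the burst, which is the behavior the envelope step is meant to expose.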

3.2. Feature Extraction

In the feature extraction procedure, which is the third phase, the dimensionality of the processed data was reduced in order to simplify the classification step. Generally, sEMG data may contain relevant and irrelevant information, and the irrelevant information can be discarded by mapping sEMG data to another reduced space (reduced dimensionality). This step is known as feature extraction, and the main advantage of this step is the reduction of the dimensionality of the problem, which eventually simplifies the classification process. In this work, a combination of two statistical features, mean absolute value (MAV) and standard deviation (SD), along with the auto-regressive coefficients (AR) approach, is used to extract significant information from the data, which reflects the targeted gestures [26,27,28]. The main idea is to use the sEMG data to fit an auto-regressive model, where the coefficients of the model along with MAV and SD values are then considered as inputs to the classifier for gesture recognition. For each sEMG envelope signal, the AR model is fitted such as:

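$$x(n) = \sum_{i=1}^{m} a_i \, x(n-i) + e(n),$$

where $a_i$, $i = 1, \ldots, m$, are the AR model parameters, $m$ is the order of the model, and $e(n)$ is the error. The parameters $a_i$ are then used to represent the EMG signal. In this work, the model order is $m = 8$, as implied by the classifier input size given below. A vector of size 2 is needed to capture both MAV and SD values. Since eight EMG signals were involved in the collection procedure, each contributing $m + 2 = 10$ features, the classifier inputs are reduced to eighty entries.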
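The feature vector described above can be sketched as follows. This is an illustrative sketch rather than the authors' code: the AR coefficients are estimated here with the Yule-Walker equations solved by the Levinson-Durbin recursion, which is one common fitting method (the text does not say which one was used), the order of 8 is inferred from the eighty-entry input size, and the population form of the standard deviation is an assumption. All function names are hypothetical.

```python
import random
import statistics
from typing import List

AR_ORDER = 8  # inferred: 8 channels x (AR_ORDER + 2) = 80 classifier inputs


def autocorrelation(x: List[float], max_lag: int) -> List[float]:
    """Biased autocorrelation estimates r[0..max_lag]."""
    n = len(x)
    return [sum(x[i] * x[i - k] for i in range(k, n)) / n
            for k in range(max_lag + 1)]


def ar_coefficients(x: List[float], order: int = AR_ORDER) -> List[float]:
    """Yule-Walker AR fit via Levinson-Durbin: x[n] = sum_i a_i * x[n-i] + e[n]."""
    r = autocorrelation(x, order)
    a = [0.0] * (order + 1)  # a[1..order]; a[0] is unused
    err = r[0]
    for k in range(1, order + 1):
        if err <= 0.0:  # degenerate signal: leave remaining coefficients at zero
            break
        acc = r[k] - sum(a[j] * r[k - j] for j in range(1, k))
        refl = acc / err
        prev = a[:]
        a[k] = refl
        for j in range(1, k):
            a[j] = prev[j] - refl * prev[k - j]
        err *= (1.0 - refl * refl)
    return a[1:]


def channel_features(envelope: List[float]) -> List[float]:
    """MAV, SD, and AR coefficients for one channel (10 values)."""
    mav = sum(abs(v) for v in envelope) / len(envelope)
    sd = statistics.pstdev(envelope)  # population SD; an assumption
    return [mav, sd] + ar_coefficients(envelope)


def feature_vector(channels: List[List[float]]) -> List[float]:
    """Concatenate the per-channel features of all channels."""
    features: List[float] = []
    for channel in channels:
        features.extend(channel_features(channel))
    return features


# Dummy envelopes for eight channels (seeded noise, purely illustrative).
rng = random.Random(0)
channels = [[abs(rng.gauss(0.0, 1.0)) for _ in range(200)] for _ in range(8)]
features = feature_vector(channels)
```

With eight channels and ten values per channel, `features` has eighty entries, matching the classifier input size stated in the text.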

3.3. Classification

In this section, the extracted features along with the corresponding known outputs are used as the input data to train a classifier or recognition algorithms. Based on a pre-selected optimization algorithm, the classifier is trained to learn and identify patterns in the data and to respond to the inputs according to the given outputs. After successful training, the reliability of the classifier is tested with a different dataset.

Training and testing classifiers help in validating the results and obtaining an accurate classification model. In this section, three classifiers are investigated, namely the artificial neural network (ANN), support vector machine (SVM), and decision tree (DT) algorithms, in order to identify which classifier is better suited for building the bionic hand.

3.3.1. Artificial Neural Network

Artificial neural networks (ANN), also known as multi-layer perceptrons (MLP), are one of the main pattern recognition techniques; they comprise a large number of neurons, and these neurons are connected in a layered manner. A neural network is trained by optimizing the unknown weights to minimize a pre-selected fitness function. Generally, the neuron architecture can be summarized as follows: a neuron (or node) receives inputs, and the respective weights are applied to these inputs. Then, a bias term is added to the linear combination of the weighted input signals. The resulting combination is mapped through an activation function. Usually, the ANN consists of input and output layers, as well as hidden layers that permit the neural network to learn more complex features. In this work, one of the most recognized ANN algorithms, the feed-forward neural network, is used as a supervised classifier for gesture recognition. The feed-forward classifier is trained with a set of data (called training data); the trained classifier is then tested with a different dataset. Finally, the resulting ANN classifier is used to recognize online input data [29,30,31,32,33].
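The neuron computation summarized above (weighted sum, bias, activation) can be written in a few lines. The sketch below uses the hyperbolic tangent as the activation, which is the function named later in the Results section; the weights, biases, and function names are made-up illustrative values, not trained parameters from the study.

```python
import math
from typing import List, Sequence


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Weighted sum of the inputs plus a bias, mapped through tanh."""
    return math.tanh(sum(w * x for w, x in zip(weights, inputs)) + bias)


def dense_layer(inputs: Sequence[float],
                weight_rows: Sequence[Sequence[float]],
                biases: Sequence[float]) -> List[float]:
    """One fully connected layer: each row of weights defines one neuron."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Two inputs feeding a layer of two neurons (illustrative numbers only).
hidden = dense_layer([1.0, 2.0], [[0.5, -0.25], [0.0, 0.0]], [0.0, 1.0])
```

A feed-forward network chains such layers, passing the output list of one layer as the input of the next.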

3.3.2. Support Vector Machine

A support vector machine (SVM) is a multi-class classifier that has been successfully applied in many disciplines. The SVM algorithm gained its success from its excellent empirical performance in applications with relatively large numbers of features. In this algorithm, the learning task involves selecting the weights and bias values based on given labeled training data. This can be achieved by finding the weights and bias that maximize a quantity known as the margin. Generally, the SVM algorithm was first designed for two-class classification. However, it has been extended to multi-class classification by creating several one-against-all classifiers (in which the algorithm solves K two-class problems, and, each time, a class is selected and classified against the rest of the classes), or by formulating the SVM problem as a one-against-one classification problem (in this case, binary classification problems are solved by considering all classes in pairs) [34,35]. In this work, a multi-class SVM classifier is trained, tested, and used to classify gestures based on online data [36].
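To make the one-against-one scheme concrete, the sketch below enumerates all class pairs for the four gestures and picks the class with the most pairwise wins. The binary decision function here is a toy nearest-centroid rule on a single number, used only as a stand-in for trained two-class SVMs; the centroids and all names are hypothetical.

```python
from collections import Counter
from itertools import combinations
from typing import Callable, Sequence

GESTURES = ("fist", "spread_fingers", "wave_in", "wave_out")


def one_vs_one_predict(x: float,
                       classes: Sequence[str],
                       duel: Callable[[str, str, float], str]) -> str:
    """Run every pairwise classifier and return the class with the most votes."""
    votes = Counter()
    for a, b in combinations(classes, 2):
        votes[duel(a, b, x)] += 1
    return votes.most_common(1)[0][0]


# Toy stand-in for trained binary SVMs: the nearer 1-D centroid wins the duel.
CENTROIDS = {"fist": 0.0, "spread_fingers": 1.0, "wave_in": 2.0, "wave_out": 3.0}


def nearest_centroid_duel(a: str, b: str, x: float) -> str:
    return a if abs(x - CENTROIDS[a]) <= abs(x - CENTROIDS[b]) else b


pairs = list(combinations(GESTURES, 2))  # K(K-1)/2 = 6 pairs for K = 4
prediction = one_vs_one_predict(2.1, GESTURES, nearest_centroid_duel)
```

For K classes, the one-against-one scheme needs K(K-1)/2 binary classifiers, whereas the one-against-all scheme needs K.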

3.3.3. Decision Tree

Recently, decision tree (DT) algorithms have become very attractive in machine learning applications due to their low computational cost [37]. Furthermore, DT approaches are transparent and easy to understand, since the classification process could be visualized as following a tree-like path until a classification answer is obtained. The decision tree algorithm can be summarized as follows: The classification is broken down into a set of choices, where each choice is about a specific feature. Then, the algorithm starts at the tree’s base (root) and keeps progressing to the leaves in order to receive the optimized classification result. The trees are usually easy to comprehend, and can be transformed into a set of if–then rules, which are suitable for simplifying the training procedure of machine learning applications. Generally, decision trees use greedy heuristic approaches to perform search and optimizations, where these algorithms evaluate their possible options at the current learning stage and select the solution that seems optimal at that instant. In this work, a decision tree algorithm is used to train and test a gesture dataset, and the results are compared with the SVM and ANN in order to select the best model for creating the bionic hand.
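The greedy choice at each node can be illustrated with the Gini impurity, which is the split-quality measure reported in the Results section. The sketch below scores a candidate split by the size-weighted impurity of its two children; lower is better. The label lists and function names are made up for illustration.

```python
from collections import Counter
from typing import Sequence


def gini(labels: Sequence[str]) -> float:
    """Gini impurity: 1 - sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def split_impurity(left: Sequence[str], right: Sequence[str]) -> float:
    """Size-weighted Gini impurity of the two children of a candidate split."""
    n = len(left) + len(right)
    return (len(left) * gini(left) + len(right) * gini(right)) / n


# A split that separates the classes perfectly versus one that does not.
pure_split = split_impurity(["fist", "fist"], ["open", "open"])
mixed_split = split_impurity(["fist", "open"], ["fist", "open"])
```

A greedy tree builder would evaluate `split_impurity` for every candidate threshold on every feature and keep the split with the lowest value at the current node.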

4. Results

After selecting three different types of classifiers, the offline procedure was used to train and test these classifiers in order to select the model that will be used for the online recognition procedure. The ANN classifier has two hidden layers, with the number of neurons used in each layer set to 116 and 48, respectively. The tanh function, which is the hyperbolic tangent function, is used as the activation function of the ANN. The training procedure is achieved using an optimizer called the limited-memory Broyden–Fletcher–Goldfarb–Shanno (LBFGS) algorithm. In the case of the decision tree classifier, Gini impurity was used to measure the split’s quality. The minimum number of samples required to split an internal node is set to two, and two samples are required at every leaf node. To obtain an accurate SVM classifier, one should select the right value for the regularization parameter C, which is, in this case, , and the kernel parameter .

The parameter values for the three classifiers were selected after performing a cross-validation process for each classifier. Each classifier was used to train and test the same dataset for a different set of parameters. The best model for each version of the three classifiers was selected based on its performance. Next, a statistical study was used to compare the testing results in order to select the best classifier among the three classifiers (ANN, SVM, and DT classifiers). First, each classifier was run for thirty trials, and the testing accuracy for the classification was stored in a table. The SVM classifier provided the highest classification result with a mean value of the training data equal to % and standard deviation of 1.92%. Furthermore, the SVM classifier provided a testing accuracy equal to 89.93%, and standard deviation of 1.75%. The decision tree algorithm produced a training accuracy of 73.46% with a standard deviation of 4.87%, while the testing results were equal to 70.5% with a standard deviation of 2.5%.

Finally, the ANN classifiers provided a training accuracy of 84.78% with a standard deviation of 4.11%. The ANN accuracy of the testing procedure was equal to 83.91% with a standard deviation of 2.3%. The results are presented in Table 2.

Table 2.

Training and testing results for the three classifiers.
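The mean and standard deviation reported for each classifier summarize the accuracies of the thirty trials. A minimal sketch of that summary is given below; the three accuracy values are placeholders rather than results from the study, and the use of the sample standard deviation is an assumption, since the text does not say which form was used.

```python
import statistics
from typing import Sequence, Tuple


def summarize_trials(accuracies: Sequence[float]) -> Tuple[float, float]:
    """Return the mean and sample standard deviation of per-trial accuracies."""
    return statistics.mean(accuracies), statistics.stdev(accuracies)


# Placeholder accuracies (in percent) for three hypothetical trials.
mean_acc, sd_acc = summarize_trials([90.0, 88.0, 92.0])
```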

The confusion matrices for the training and testing procedures of the SVM classifier are presented in Table 3 and Table 4, respectively. The four gestures presented in the tables are close, open, wave-in, and wave-out, and the reported results represent a classification trial based on the SVM classifier. As observed, the accuracy for both training and testing procedures was higher than 82%. In addition, the results indicate that the mis-classification between gestures is relatively low and mostly happens between the open and close gestures.

Table 3.

Confusion matrix for the support vector machine (SVM) classifier: Training (accuracy: 93.75%).

Table 4.

Confusion matrix for the SVM classifier: Testing (accuracy: 92.62%).
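The accuracy quoted with each confusion matrix is the share of samples on the diagonal. The sketch below computes it, together with the per-gesture recall, for a made-up 4 x 4 matrix; the numbers are illustrative and are not the values of Table 3 or Table 4, and the convention that rows are true classes and columns are predicted classes is an assumption.

```python
from typing import List, Sequence


def accuracy(matrix: Sequence[Sequence[int]]) -> float:
    """Overall accuracy: diagonal sum divided by the total number of samples."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def per_class_recall(matrix: Sequence[Sequence[int]]) -> List[float]:
    """Recall per class, assuming rows hold the true class."""
    return [row[i] / sum(row) for i, row in enumerate(matrix)]


# Hypothetical counts; order: close, open, wave-in, wave-out.
example = [
    [9, 1, 0, 0],
    [1, 8, 1, 0],
    [0, 0, 10, 0],
    [0, 1, 0, 9],
]
overall = accuracy(example)
recalls = per_class_recall(example)
```

Off-diagonal entries show which gestures are confused with each other; in this made-up example, most errors fall between the first two classes.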

Furthermore, the t-test is used to identify whether there is a significant difference between the results of the three classifiers. The obtained p-values were found to be quite small (less than 5%), which indicates that there is a significant difference between the classification results. The Holm approach was then used in the statistical investigation to show that there are statistically significant differences among the results of the three classifiers, and that the SVM classifier provides better accuracy than both the ANN and the DT classifiers. As a result, the SVM classification model is adopted for the online classification.
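The Holm step-down procedure can be sketched as follows: the p-values of the pairwise comparisons are sorted in ascending order, and the smallest is tested against alpha/m, the next against alpha/(m-1), and so on, stopping at the first failure. The p-values below are placeholders, not the values obtained in the study, and `holm` is a hypothetical function name.

```python
from typing import List, Sequence


def holm(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Holm step-down correction; returns a reject flag per hypothesis (input order)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # once one hypothesis is retained, all larger p-values are too
    return reject


# Placeholder p-values for three pairwise comparisons (e.g., SVM-ANN, SVM-DT, ANN-DT).
all_significant = holm([0.001, 0.002, 0.003])
partly_significant = holm([0.01, 0.04, 0.03])
```

In the second call, only the smallest p-value passes its threshold of 0.05/3, so the remaining two comparisons are not declared significant.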

5. Real-Time Implementation

Different testing protocols were proposed to the user for testing the arm design and the EMG signal control with the optimum classifier enabled. The user practiced for one week to learn how to perform the different gestures and control his muscles. After the training phase, the user wore the Myo armband on his forearm and then performed each of the trained gestures (fist (closed), spread fingers (open), wave-in, wave-out) 20 consecutive times using his muscles. Subsequently, the user was asked to perform two different gestures consecutively 20 times to test the daily activities that can be performed by the bionic arm. The gestures were mapped to four different hand postures, as shown in Table 5.

Table 5.

Mapping between the trained gestures and hand actions.

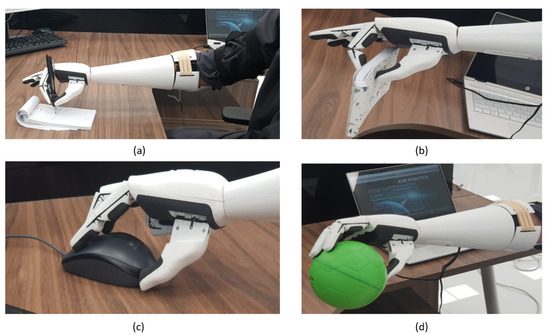
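A gesture-to-action mapping of this kind can be expressed as a simple lookup table. The sketch below is hypothetical: the four gestures and the four hand actions are taken from the text, but the specific pairing of wave-in and wave-out with the one-finger and two-finger actions is an assumption made for illustration, and the names `Gesture`, `GESTURE_TO_ACTION`, and `action_for` are not from the authors' software.

```python
from enum import Enum


class Gesture(Enum):
    """The four gestures recognized by the classifier."""
    FIST = "fist"
    SPREAD = "spread fingers"
    WAVE_IN = "wave-in"
    WAVE_OUT = "wave-out"


# ASSUMED pairing for illustration; the actual mapping is the one given in Table 5.
GESTURE_TO_ACTION = {
    Gesture.FIST: "close all fingers",
    Gesture.SPREAD: "open hand",
    Gesture.WAVE_IN: "close one finger",
    Gesture.WAVE_OUT: "close two fingers",
}


def action_for(label):
    """Look up the hand action for a classifier label; return None if the label is unknown."""
    try:
        return GESTURE_TO_ACTION[Gesture(label)]
    except ValueError:
        return None


if __name__ == "__main__":
    for label in ["fist", "wave-in", "unknown"]:
        print(label, "->", action_for(label))
```

Keeping the mapping in one table means that the pairing can be changed for a given user without touching the classifier.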

The testing scenarios showed the ability of the user to control the bionic hand accurately after the training phase. The bionic hand movements were optimized to allow the user to perform different activities (holding objects, grasping, drinking, and writing). In single-action testing, the user was asked to perform one action at a time. The single actions include making a fist, spreading the fingers, closing one finger, and closing two fingers, as shown in Figure 9.

Figure 9.

(a) Writing with a pen (two fingers closed action); (b) holding a notebook (one finger closed action); (c) using the PC mouse (one finger closed action); (d) holding a ball (fist action).

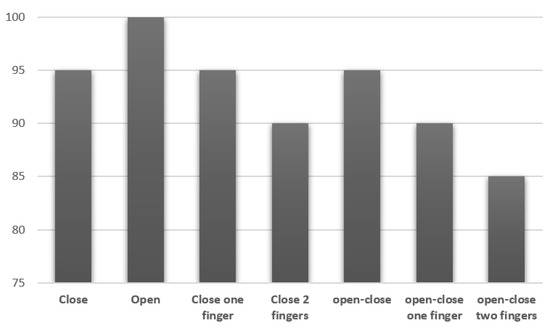

The user performed each action 20 consecutive times. The results of testing each single action show a minimum detection rate of 85%. In the combination of two actions, the user performed opening and closing with a success rate of 95%, opening and closing of one finger with 90%, and opening and closing of two fingers with 85%, as shown in Figure 10.

Figure 10.

Success rate of hand actions.
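The reported success rates can be related to trial counts with simple arithmetic. The sketch below assumes that each combined action was attempted 20 times and that the success rate is the number of successful attempts divided by the number of attempts; the implied counts are derived under that assumption and are not reported in the paper.

```python
TRIALS = 20  # number of repetitions per action, as stated in the text


def success_rate(successes, trials=TRIALS):
    """Success rate in percent for a given number of successful attempts."""
    return 100.0 * successes / trials


def successes_from_rate(rate_percent, trials=TRIALS):
    """Number of successful attempts implied by a reported percentage."""
    return round(rate_percent * trials / 100.0)


# Reported success rates (percent) for the two-action combinations.
reported = {
    "open and close": 95,
    "open and close one finger": 90,
    "open and close two fingers": 85,
}

if __name__ == "__main__":
    for action, rate in reported.items():
        count = successes_from_rate(rate)
        print(f"{action}: {rate}% implies {count} of {TRIALS} successful attempts")
```

Under this assumption, each step of 5 percentage points corresponds to one attempt out of 20.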

6. Conclusions and Recommendations for Future Work

A customized 3D-printed bionic arm was designed, fabricated, and tested for a right arm amputee. The design targeted low cost, comfort, light weight, durability, and appearance. To facilitate a generic control of the bionic arm, EMG data were collected for a set of four gestures (fist, spread fingers, wave-in, wave-out) from a wide range of participants. The collected data were processed and feature extraction was performed for the purpose of training a classifier. The support vector machine classifier was found to outperform the neural network and decision tree classifiers, reaching an average accuracy of 89.93%. Real-time testing of the bionic arm with the associated classifier software enabled the user to perform his daily activities.

Additional features are needed to further improve the bionic arm; for example, a multi-degree-of-freedom wrist joint connector. This can be achieved by using two servo motors with brackets or by utilizing a spherical manipulator. Furthermore, air-ducted adjustable sockets can allow the user to mount and dismount the bionic arm with ease.

Author Contributions

Conceptualization, S.S. and A.N.-a.; methodology, S.S. and M.S.; software, M.S. and I.B.; validation, S.S. and A.N.-a.; formal analysis, S.A.; investigation, S.S.; resources, M.S. and I.B.; data curation, S.S. and I.B.; writing—original draft preparation, A.S.K.; writing—review and editing, A.S.K. and A.N.-a.; supervision, A.N.-a. and S.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

The authors would like to acknowledge the support of the Robotics Research Center at the American University of the Middle East. The authors would like to thank the reviewers for their valuable insight and feedback.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ben-Arie, J.; Wang, Z.; Pandit, P.; Rajaram, S. Human Activity Recognition Using Multidimensional Indexing. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 1091–1104.

- Du, W.; Li, H. Vision based gesture recognition system with single camera. In Proceedings of the International Conference on Signal Processing, Beijing, China, 21–25 August 2000; pp. 1351–1357.

- Kapoor, A.; Picard, R. A Real-Time Head Nod and Shake Detector. In Proceedings of the Workshop Perspective User Interfaces, Orlando, FL, USA, 15–16 November 2001; pp. 1–5.

- Matsumoto, Y.; Zelinsky, A. An algorithm for real-time stereo vision implementation of head pose and gaze direction measurement. In Proceedings of the International Conference on Automatic Face and Gesture Recognition, Grenoble, France, 28–30 March 2000; pp. 499–504.

- Morency, L.; Sidner, C.; Lee, C.; Darrell, T. Contextual Recognition of Head Gestures. In Proceedings of the International Conference on Multimodal Interfaces, Trento, Italy, 4–6 October 2005; pp. 18–24.

- Morimoto, C.; Yacoob, Y.; Davis, L. Recognition of Head Gestures Using Hidden Markov Models. In Proceedings of the International Conference on Pattern Recognition, Vienna, Austria, 25–29 August 1996; pp. 461–465.

- Nickel, K.; Stiefelhagen, R. Pointing Gesture Recognition on 3DTracking of Face Hands and Head Orientation. In Proceedings of the International Conference on Multimodal Interfaces, Vancouver, BC, Canada, 5–7 November 2003; pp. 140–146.

- Zhang, Y.; Xiao, R.; Harrison, C. Advancing hand gesture recognition with high resolution electrical impedance tomography. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, 16–19 October 2016; pp. 843–850.

- Saponas, T.; Tan, S.; Morris, D.; Balakrishnan, R. Demonstrating the feasibility of using forearm electromyography for muscle–computer interfaces. In Proceedings of the 26th SIGCHI Conference on Human Factors in Computing Systems, Florence, Italy, 5–10 April 2008; pp. 515–524.

- Wheeler, K.; Chang, M.; Knuth, K. Gesture-based control and EMG decomposition. IEEE Trans. Syst. Man Cybern. C Appl. Rev. 2006, 36, 503–514.

- Liu, J.; Zhou, P. A novel myoelectric pattern recognition strategy for hand function restoration after incomplete cervical spinal cord injury. IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 21, 96–103.

- Bunderson, N.; Kuiken, T. Quantification of feature space changes with experience during electromyogram pattern recognition control. IEEE Trans. Neural Syst. Rehabil. Eng. 2012, 20, 1804–1807.

- Li, X.; Fu, J.; Xiong, L.; Shi, Y.; Davoodi, R.; Li, Y. Identification of finger force and motion from forearm surface electromyography. In Proceedings of the IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), San Diego, CA, USA, 14–16 September 2015; pp. 316–321.

- Khezrim, M.; Jahed, M. Real-time intelligent pattern recognition algorithm for surface EMG signals. Biomed. Eng. 2007, 6, 1–12.

- Karlik, B.; Tokhi, M.; Alci, M. A fuzzy clustering neural network architecture for multifunction upperlimb prosthesis. IEEE Trans. Biomed. Eng. 2003, 50, 1255–1261.

- Chu, J.; Moon, I.; Mun, M. A real-time EMG pattern recognition system based on linear-nonlinear feature projection for a multifunction myoelectric hand. IEEE Trans. Biomed. Eng. 2006, 53, 2232–2239.

- Huang, Y.; Englehart, K.; Hudgins, B. A Gaussian mixture model based classification scheme for myoelectric control of powered upper limb prostheses. IEEE Trans. Biomed. Eng. 2005, 52, 1801–1811.

- Noce, E.; Bellingegni, A.; Ciancio, A.; Sacchetti, R.; Davalli, A.; Guglielmelli, E.; Zollo, L. EMG and ENG-envelope pattern recognition for prosthetic hand control. J. Neurosci. Methods 2019, 311, 38–46.

- Shi, W.-T.; Lyu, Z.-J.; Tang, S.-T.; Chia, T.-L.; Yang, C.-Y. A bionic hand controlled by hand gesture recognition based on surface EMG signals: A preliminary study. Biocybern. Biomed. Eng. 2018, 38, 126–135.

- Said, S.; Alkork, S.; Beyrouthy, T.; Fayek, M. Wearable Bio-Sensors Bracelet for Driver’s Health Emergency Detection. In Proceedings of the 2017 2nd International Conference on Bio-Engineering for Smart Technologies (BioSMART), Paris, France, 30 August–1 September 2017.

- Rawat, S.; Vats, S.; Kumar, P. Evaluating and Exploring the MYO Armband. In Proceedings of the International Conference on System Modeling & Advancement in Research Trends, Moradabad, India, 25–27 November 2016; pp. 115–120.

- Said, S.; Sheikh, M.; Al-Rashidi, F.; Lakys, Y.; Beyrouthy, T.; Naitali, A. A Customizable Wearable Robust 3D Printed Bionic Arm: Muscle Controlled. In Proceedings of the 3rd International Conference on Bio-Engineering for Smart Technologies (BioSMART), Paris, France, 24–26 April 2019; pp. 1–6.

- Zuniga, J.; Katsavelis, D.; Peck, J.; Stollberg, J.; Petrykowski, M.; Carson, A.; Fernandez, C. Cyborg beast: A low-cost 3d-printed prosthetic hand for children with upper-limb differences. BMC Res. Notes 2015, 8, 1–8.

- McGimpsy, G.; Bradford, T.C. Limb Prosthetics Services and Devices Critical Unmet Need: Market Analysis; Bioengineering Institute Center for Neuroprosthetics, Worcester Polytechnic Institution: Worcester, MA, USA, 2010.

- Available online: https://openbionicslabs.com/shop/chestnut-board (accessed on 15 April 2019).

- Baillie, D.C.; Mathew, J. A comparison of autoregressive modeling techniques for fault diagnosis of rolling element bearings. Mech. Syst. Signal Process. 1996, 10, 1–17.

- Vu, V.; Thomas, M.; Lakis, A.; Marcouiller, L. Operational modal analysis by updating autoregressive model. Mech. Syst. Signal Process. 2011, 25, 1028–1044.

- Akhmadeev, K.; Rampone, E.; Yu, T.; Aoustin, Y.; Le Carpentier, E. A testing system for a real-time gesture classification using surface EMG. IFAC-PapersOnLine 2018, 50, 11498–11503.

- Ahsan, M.R.; Ibrahimy, M.I.; Khalifa, O.O. Electromygraphy (EMG) signal based HGR using artificial neural network (ANN). In Proceedings of the 4th International Conference on Mechatronics (ICOM), Kuala Lumpur, Malaysia, 17–19 May 2011; pp. 1–6.

- Zhang, X.H.; Wang, J.J.; Wang, X.; Ma, X.L. Improvement of Dynamic HGR Based on HMM Algorithm. In Proceedings of the International Conference on Information System and Artificial Intelligence (ISAI) Hong Kong, Hong Kong, China, 24–26 June 2016; pp. 401–406.

- Dai, Y.; Zhou, Z.; Chen, X.; Yang, Y. A novel method for simultaneous gesture segmentation and recognition based on HMM. In Proceedings of the 2017 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Xiamen, China, 6–9 November 2017; pp. 684–688.

- Weston, J.; Watkins, C. Multi-class support vector machines. In Proceedings of the European Symp. Artificial Neural Networks (ESANN), Bruges, Belgium, 21–23 April 1999; pp. 219–224.

- Zhang, Z.; Yang, K.; Qian, J.; Zhang, L. Real-Time Surface EMG Pattern Recognition for Hand Gestures Based on an Artificial Neural Network. Sensors 2019, 14, 3170.

- Fong, S. Using hierarchical time series clustering algorithm and wavelet classifier for biometric voice classification. J. Biomed. Biotechnol. 2012, 2012, 1–12.

- Theodoridis, S. Machine Learning: A Bayesian and Optimization Perspective; Academic Press: Oxford, UK, 2015.

- Zhang, X.; Chen, X.; Li, Y.; Lantz, V.; Wang, K.; Yang, J. A framework for hand gesture recognition based on accelerometer and EMG sensors. IEEE Trans. Syst. Man Cybern. Part Syst. Hum. 2011, 41, 1064–1076.

- Marsland, S. Machine Learning: An Algorithmic Perspective; Chapman and Hall/CRC: Boca Raton, FL, USA, 2014.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).