Hybrid SVM-CNN Classification Technique for Human–Vehicle Targets in an Automotive LFMCW Radar †

Abstract

1. Introduction

2. Signal Model in the LFMCW Radar Sensor

3. Proposed Hybrid SVM-CNN Classification Method

3.1. Data Preprocessing

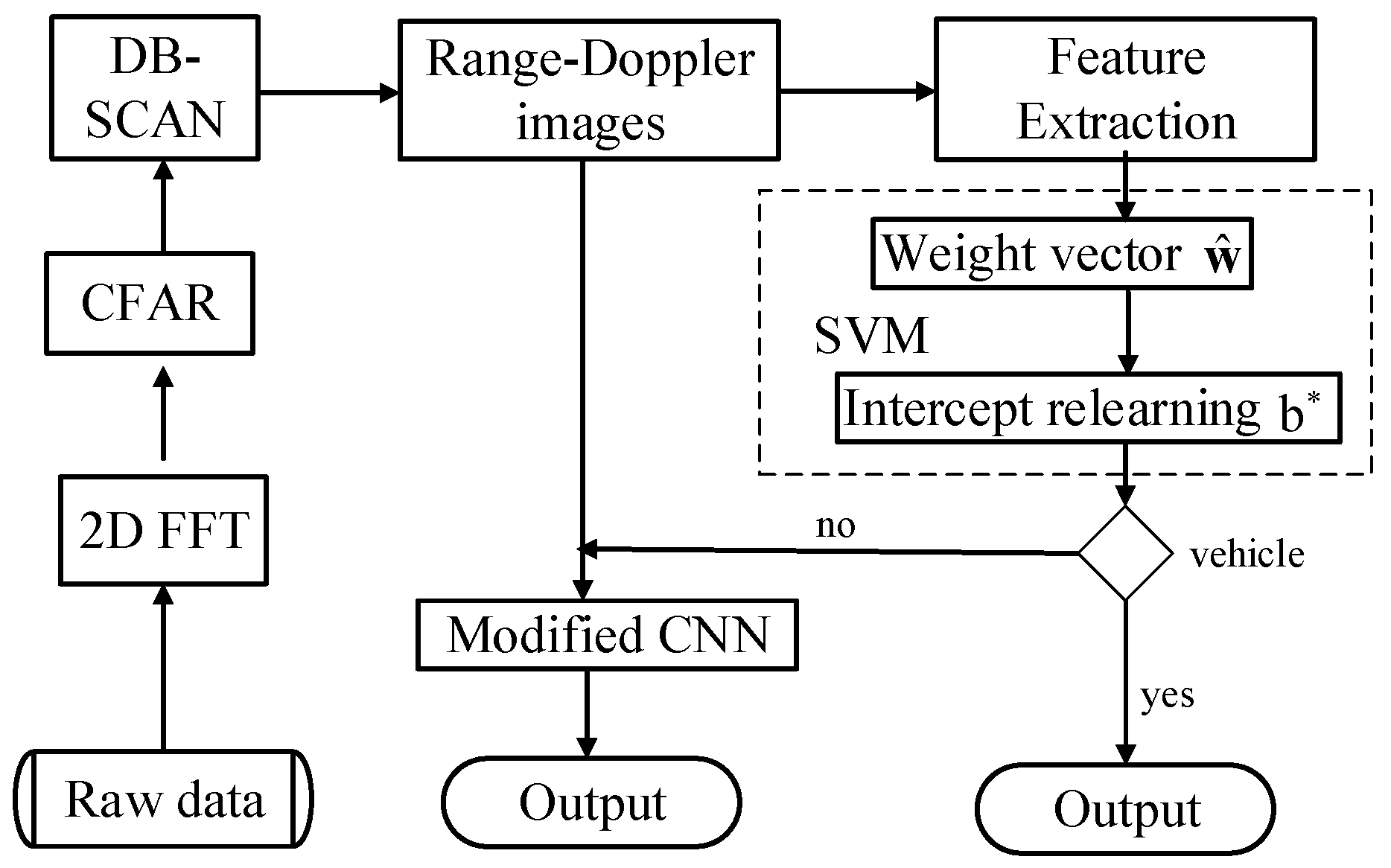

3.2. Hybrid SVM-CNN Classification Method

3.2.1. Modified SVM Approach

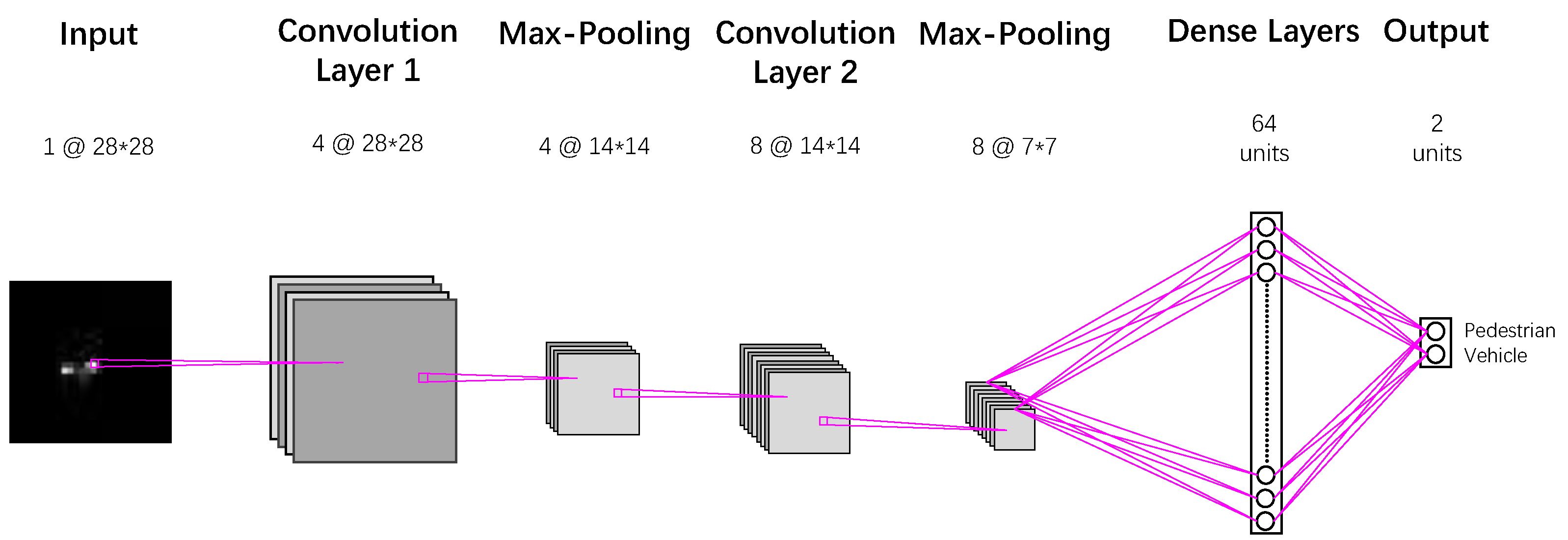

3.2.2. Modified CNN Method

3.3. Summary of Proposed Hybrid SVM-CNN Classification Method

3.4. Analysis of Computational Complexity

4. Experiments

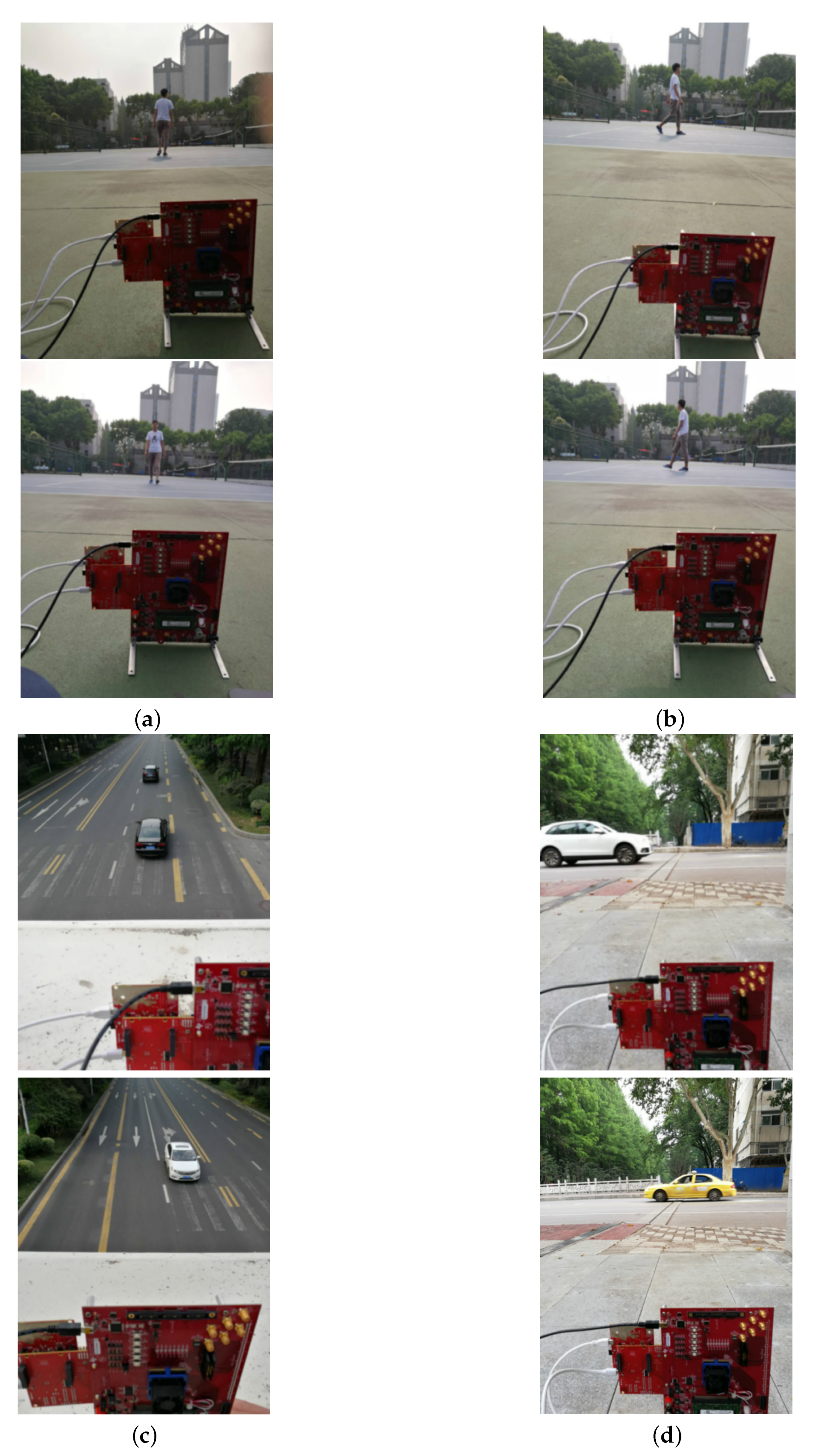

4.1. Datasets and Data Augmentation

4.2. Classification Performance Comparisons

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Dollar, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian detection: An evaluation of the state of the art. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 743–761.

- Enzweiler, M.; Gavrila, D.M. Monocular pedestrian detection: Survey and experiments. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 31, 2179–2195.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Bilik, I.; Tabrikian, J.; Cohen, A. GMM-based target classification for ground surveillance Doppler radar. IEEE Trans. Aerosp. Electron. Syst. 2006, 42, 267–278.

- Heuel, S.; Rohling, H. Two-stage pedestrian classification in automotive radar sensors. In Proceedings of the 12th International Radar Symposium (IRS), Leipzig, Germany, 7–9 September 2011; pp. 39–44.

- Schubert, E.; Meinl, F.; Kunert, M.; Menzel, W. Clustering of high-resolution automotive radar detections and subsequent feature extraction for classification of road users. In Proceedings of the 16th International Radar Symposium (IRS), Dresden, Germany, 24–26 June 2015; pp. 174–179.

- Lee, J.; Kim, D.; Jeong, S.; Ahn, G.C.; Kim, Y. Target classification scheme using phase characteristics for automotive FMCW radar. IET Electron. Lett. 2016, 52, 2061–2063.

- Lee, S.; Yoon, Y.J.; Lee, J.E.; Kim, S.C. Human-vehicle classification using feature-based SVM in 77-GHz automotive FMCW radar. IET Radar Sonar Navig. 2017, 11, 1589–1596.

- Tahmoush, D. Review of micro-Doppler signatures. IET Radar Sonar Navig. 2015, 9, 1140–1146.

- Fioranelli, F.; Ritchie, M.; Griffiths, H. Feature diversity for optimized human micro-Doppler classification using multistatic radar. IEEE Trans. Aerosp. Electron. Syst. 2017, 53, 640–654.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Jokanovic, B.; Amin, M. Fall detection using deep learning in range-Doppler radars. IEEE Trans. Aerosp. Electron. Syst. 2018, 52, 180–189.

- Seyfioglu, M.S.; Gurbuz, S.Z. Deep neural network initialization methods for micro-Doppler classification with low training sample support. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2462–2466.

- Kim, B.K.; Kang, H.; Park, S. Drone classification using convolutional neural networks with merged Doppler images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 38–42.

- Hadhrami, E.A.; Mufti, M.A.; Taha, B.; Werghi, N. Ground moving radar targets classification based on spectrogram images using convolutional neural networks. In Proceedings of the 19th International Radar Symposium (IRS), Bonn, Germany, 20–22 June 2018; pp. 1–9.

- Angelov, A.; Robertson, A.; Murray-Smith, R.; Fioranelli, F. Practical classification of different moving targets using automotive radar and deep neural networks. IET Radar Sonar Navig. 2018, 12, 1082–1089.

- Kim, W.; Cho, H.; Kim, J.; Kim, B.; Lee, S. YOLO-based simultaneous target detection and classification in automotive FMCW radar systems. Sensors 2020, 20, 2897.

- Trommel, R.P.; Harmanny, R.I.A.; Cifola, L.; Driessen, J.N. Multi-target human gait classification using deep convolutional neural networks on micro-Doppler spectrograms. In Proceedings of the European Radar Conference, London, UK, 5–7 October 2016; pp. 81–84.

- Klarenbeek, G.; Harmanny, R.I.A.; Cifola, L. Multi-target human gait classification using LSTM recurrent neural networks applied to micro-Doppler. In Proceedings of the European Radar Conference (EURAD), Nuremberg, Germany, 11–13 October 2017; pp. 167–170.

- Krawczyk, B. Learning from imbalanced data: Open challenges and future directions. Prog. Artif. Intell. 2016, 5, 221–232.

- Hensman, P.; Masko, D. The Impact of Imbalanced Training Data for Convolutional Neural Networks. 2015. Available online: http://urn.kb.se/resolve?urn=urn:nbn:se:kth:diva-166451 (accessed on 17 June 2020).

- Pouyanfar, S.; Tao, Y.; Mohan, A.; Tian, H.; Kasseb, A.S.; Gauen, K.; Dailey, R.; Aghajanzadeh, S.; Lu, Y.-H.; Chen, S.-C.; et al. Dynamic sampling in convolutional neural networks for imbalanced data classification. In Proceedings of the IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), Miami, FL, USA, 10–12 April 2018.

- Buda, M.; Maki, A.; Mazurowski, M.A. A systematic study of the class imbalance problem in convolutional neural networks. Neural Netw. 2018, 106, 249–259.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Wang, H.; Cui, Z.; Chen, Y.; Avidan, M.; Abdallah, A.B.; Kronzer, A. Predicting hospital readmission via cost-sensitive deep learning. IEEE/ACM Trans. Comput. Biol. Bioinf. 2018, 15, 1968–1978.

- Liu, X.; Wu, J.; Zhou, Z. Exploratory undersampling for class-imbalance learning. IEEE Trans. Syst. Man. Cybern. Part B (Cybern.) 2009, 39, 539–550.

- Mease, D.; Wyner, A.J.; Buja, A. Boosted classification trees and class probability quantile estimation. J. Mach. Learn. Res. 2007, 8, 409–439.

- Winkler, V. Range Doppler detection for automotive FMCW radars. In Proceedings of the European Radar Conference, Munich, Germany, 10–12 October 2007; pp. 166–169.

- Carrara, G.W.; Goodman, S.R.; Majewski, M.R. Spotlight Synthetic Aperture Radar: Signal Processing Algorithms; Artech House: Boston, MA, USA, 1995.

- Magaz, B.; Belouchrani, A. Automatic threshold selection in OS-CFAR radar detection using information theoretic criteria. Prog. Electromagn. Res. 2011, 30, 157–175.

- Ester, M.; Kriegel, H.P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In KDD-96 Proceedings; Association for the Advancement of Artificial Intelligence (AAAI) Press: Palo Alto, CA, USA, 1996; pp. 226–231.

- Schubert, E.; Kunert, M.; Menzel, W.; Frischen, A. A multi-reflection-point target model for classification of pedestrians by automotive radar. In Proceedings of the 11th European Radar Conference (EuRAD), Rome, Italy, 8–10 October 2014; pp. 181–184.

- Platt, J. Sequential Minimal Optimization: A Fast Algorithm for Training Support Vector Machines; Microsoft Research Technical Report; Microsoft Research: Redmond, WA, USA, 1998; pp. 1–21.

- He, H.; Garcia, E.A. Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284.

- Kim, Y.; Moon, T. Human detection and activity classification based on micro-Doppler signatures using deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2016, 13, 8–12.

- Jokanovic, B.; Amin, M.G.; Ahmad, F. Effect of data representations on deep learning in fall detection. In Proceedings of the IEEE Sensor Array and Multichannel Signal Processing Workshop (SAM), Rio de Janeiro, Brazil, 10–13 July 2016; pp. 1–5.

- Jokanovic, B.; Amin, M.; Erol, B. Multiple joint-variable domains recognition of human motion. In Proceedings of the IEEE Radar Conference (RadarConf), Seattle, WA, USA, 8–12 May 2017; pp. 0948–0952.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–8 December 2012.

- Leem, S.K.; Khan, F.; Cho, S.H. Detecting mid-air gestures for digit writing with radio sensors and a CNN. IEEE Trans. Instrum. Meas. 2019, 69, 1066–1081.

- Arsalan, M.; Santra, A. Character recognition in air-writing based on network of radars for human-machine interface. IEEE Sens. J. 2019, 19, 8855–8864.

- Khan, F.; Leem, S.K.; Cho, S.H. In-air continuous writing using UWB impulse radar sensors. IEEE Access 2020, 8, 99302–99311.

- Anand, R.; Mehrotra, K.G.; Mohan, C.K.; Ranka, S. An improved algorithm for neural network classification of imbalanced training sets. IEEE Trans. Neural Netw. 1993, 4, 962–969.

- Burges, C.J. A tutorial on support vector machines for pattern recognition. Data Min. Knowl. Discov. 1998, 2, 121–167.

- He, K.; Sun, J. Convolutional neural networks at constrained time cost. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 5353–5360.

- TI IWR1443 Evaluation Board. Available online: http://www.ti.com/tool/IWR1443BOOST (accessed on 17 June 2020).

- He, F.; Huang, X.; Liu, C.; Zhou, Z.; Fan, C. Modeling and simulation study on radar Doppler signatures of pedestrian. In Proceedings of the IEEE Radar Conference, Washington, DC, USA, 10–14 May 2010; pp. 1322–1326.

- Schubert, E.; Kunert, M.; Menzel, W.; Fortuny-Guasch, J.; Chareau, J.M. Human RCS measurements and dummy requirements for the assessment of radar based active pedestrian safety systems. In Proceedings of the 14th International Radar Symposium (IRS), Dresden, Germany, 19–21 June 2013; pp. 752–757.

- Geary, K.; Colburn, J.S.; Bekaryan, A.; Zeng, S.; Litkouhi, B.; Murad, M. Automotive radar target characterization from 22 to 29 GHz and 76 to 81 GHz. In Proceedings of the IEEE Radar Conference (RadarCon13), Ottawa, ON, Canada, 29 April–3 May 2013; pp. 1–6.

| Feature | Description |
|---|---|
| Range extension | |
| Variance estimation in range | |
| Radial velocity | |
| Velocity extension | |
| Variance estimation in velocity | |
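The feature table names five hand-crafted features but does not reproduce their formulas. As a hedged illustration only, the sketch below computes plausible versions of them for one cluster of radar detections, each detection carrying a range and a radial velocity. It assumes the extensions are max minus min, the variances are population variances, and the radial velocity is the cluster mean; these definitions and the helper name `cluster_features` are assumptions, not the paper's exact formulas. How detections are grouped into clusters is outside this sketch.

```python
from statistics import mean, pvariance


def cluster_features(ranges_m, velocities_mps):
    """Return five illustrative features for one cluster of detections.

    ASSUMPTION: spread = max - min, variance = population variance,
    radial velocity = mean Doppler velocity of the cluster.
    """
    if not ranges_m or len(ranges_m) != len(velocities_mps):
        raise ValueError("need equal-length, non-empty range and velocity lists")
    return {
        "range_extension": max(ranges_m) - min(ranges_m),
        "range_variance": pvariance(ranges_m),
        "radial_velocity": mean(velocities_mps),
        "velocity_extension": max(velocities_mps) - min(velocities_mps),
        "velocity_variance": pvariance(velocities_mps),
    }


# Hypothetical three-point cluster: compact in range, spread out in Doppler
# (the kind of micro-Doppler spread a walking pedestrian can produce).
feats = cluster_features([10.0, 10.2, 10.4], [1.0, 1.5, 2.0])
for name, value in feats.items():
    print(f"{name:18s} {value:.4f}")
```

A feature vector built this way could then be fed to any binary classifier such as an SVM; the choice of spread and variance statistics here is only one reasonable option.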

| Parameter | Value |
|---|---|
| Number of samples per chirp | 250 |
| Number of chirps per frame | 128 |
| Chirp bandwidth | 1500 MHz |
| Chirp duration | 100 µs |
| Frequency slope | 30 MHz/µs |
| Carrier frequency | 77 GHz |
| ADC sampling frequency | 10 MHz |
| Transmitter/receiver (TX/RX) channels | 1/4 |
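The radar parameters above fix the sensor's basic resolution limits. The sketch below applies the standard LFMCW relations: range resolution c/(2B), maximum range from the highest beat frequency f_s·c/(2S), velocity resolution λ/(2NT_c) and maximum unambiguous velocity λ/(4T_c) for a single TX channel. Two assumptions are labelled in the code: the 100 µs chirp duration is treated as the chirp repetition interval T_c (the slope and bandwidth imply an active ramp of only B/S = 50 µs, so the rest would be idle time), and the ADC is assumed to deliver complex (I/Q) samples; with real-only sampling the maximum range would halve.

```python
# Sketch: derived LFMCW performance figures from the radar parameter table.
# ASSUMPTIONS: T_C is the chirp repetition interval (ramp + idle time), and
# the ADC delivers complex (I/Q) samples, so beat frequencies up to F_S are usable.
C = 299_792_458.0   # speed of light (m/s)

F_C = 77e9          # carrier frequency (Hz)
B = 1500e6          # chirp bandwidth (Hz)
T_C = 100e-6        # chirp duration, taken here as the repetition interval (s)
S = 30e12           # frequency slope: 30 MHz/us expressed in Hz/s
F_S = 10e6          # ADC sampling frequency (Hz)
N_CHIRPS = 128      # chirps per frame

wavelength = C / F_C                                     # about 3.9 mm at 77 GHz
ramp_time = B / S                                        # active sweep implied by B and S
range_resolution = C / (2 * B)                           # dR = c / (2B)
max_range = F_S * C / (2 * S)                            # beat frequency f_b = 2RS/c must stay below F_S
velocity_resolution = wavelength / (2 * N_CHIRPS * T_C)  # dv = lambda / (2 N T_c)
max_velocity = wavelength / (4 * T_C)                    # unambiguous |v| with one TX channel

print(f"wavelength          = {wavelength * 1e3:.2f} mm")
print(f"ramp time           = {ramp_time * 1e6:.1f} us")
print(f"range resolution    = {range_resolution:.3f} m")
print(f"max range (I/Q)     = {max_range:.1f} m")
print(f"velocity resolution = {velocity_resolution:.3f} m/s")
print(f"max velocity        = +/-{max_velocity:.2f} m/s")
```

Under these assumptions the configuration gives roughly 0.1 m range resolution, a 50 m maximum range, 0.15 m/s velocity resolution and about ±9.7 m/s maximum unambiguous velocity.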

| Dataset | Metric | SVM classifier | Improved SVM classifier |
|---|---|---|---|
| Training set | Number of samples classified as vehicles | 1066 | 483 |
| Training set | Number of vehicle samples classified correctly | 923 | 483 |
| Training set | Precision | 0.87 | 1 |
| Test set | Number of samples classified as vehicles | 268 | 126 |
| Test set | Number of vehicle samples classified correctly | 228 | 126 |
| Test set | Precision | 0.85 | 1 |
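The precision values in the SVM comparison follow directly from the two counts reported for each classifier: every sample labelled "vehicle" is either a true positive or a false positive, so precision = TP/(TP + FP) = (correctly classified vehicles)/(samples classified as vehicles). The short sketch below recomputes the reported values; the helper name `precision` is illustrative. For the standard SVM it also makes the false positives explicit: 1066 - 923 = 143 on the training set and 268 - 228 = 40 on the test set.

```python
def precision(true_positives: int, predicted_positives: int) -> float:
    """Precision = TP / (TP + FP); TP + FP is every sample labelled 'vehicle'."""
    if predicted_positives <= 0:
        raise ValueError("precision is undefined when no sample is predicted positive")
    return true_positives / predicted_positives


# (correctly classified vehicles, samples classified as vehicles), from the table
counts = {
    ("training", "SVM"): (923, 1066),
    ("training", "improved SVM"): (483, 483),
    ("test", "SVM"): (228, 268),
    ("test", "improved SVM"): (126, 126),
}

for (dataset, classifier), (tp, predicted) in counts.items():
    fp = predicted - tp  # samples wrongly labelled 'vehicle'
    print(f"{dataset:8s} {classifier:12s} precision={precision(tp, predicted):.2f} "
          f"false positives={fp}")
```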

| Method | Accuracy | Precision | Recall | F1 score | AUC |
|---|---|---|---|---|---|
| CNN | 0.92 | 1.00 | 0.62 | 0.75 | 0.90 |
| ROS | 0.90 | 0.72 | 0.78 | 0.75 | 0.93 |
| WFE | 0.94 | 0.92 | 0.76 | 0.83 | 0.94 |
| SVM-CNN | 0.96 | 0.92 | 0.88 | 0.90 | 0.99 |
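The score column in this comparison is consistent with the F1 score, the harmonic mean of precision P and recall R. The sketch below, assuming that interpretation, recomputes it for the ROS, WFE and SVM-CNN rows. Because P and R are themselves rounded to two decimals, a value recomputed this way can differ from a reported score in the second decimal place.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall: F1 = 2PR / (P + R)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported score), from the comparison table
rows = {
    "ROS": (0.72, 0.78, 0.75),
    "WFE": (0.92, 0.76, 0.83),
    "SVM-CNN": (0.92, 0.88, 0.90),
}

for method, (p, r, reported) in rows.items():
    print(f"{method:8s} recomputed F1 = {f1_score(p, r):.2f}, reported = {reported:.2f}")
```

The harmonic mean penalises an imbalance between P and R, which is why a method with perfect precision but low recall can still score poorly on F1.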

| Method | CNN | ROS | WFE | SVM-CNN |
|---|---|---|---|---|
| Running time (min) | 15.22 | 23.66 | 16.25 | 18.27 |

| Method | Accuracy | Precision | Recall | F1 score | AUC |
|---|---|---|---|---|---|
| CNN | 0.89 | 1.00 | 0.45 | 0.62 | 0.90 |
| ROS | 0.90 | 0.86 | 0.56 | 0.67 | 0.92 |
| WFE | 0.91 | 0.84 | 0.71 | 0.76 | 0.92 |
| SVM-CNN | 0.95 | 0.95 | 0.78 | 0.86 | 0.98 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wu, Q.; Gao, T.; Lai, Z.; Li, D. Hybrid SVM-CNN Classification Technique for Human–Vehicle Targets in an Automotive LFMCW Radar. Sensors 2020, 20, 3504. https://doi.org/10.3390/s20123504
