1. Introduction

Structural health monitoring (SHM) has become an increasingly important technology for monitoring the structural integrity of composite materials used in the aerospace industry. Because airframes operate under continuous external loads, they are exposed to large deflections that may adversely affect their structural integrity. Critical components, such as the fuselage and wings, should be monitored to ensure a long service life. Although these components are designed to withstand different types of loading conditions, such as bending, torsion, tension, and compression, a robust SHM system for real-time load monitoring would be extremely valuable to the aerospace industry.

Current aircraft maintenance and repair systems used for structural monitoring rely on load monitoring systems that use strain gauges [1,2], optical measurement systems [3,4,5,6,7], and fiber Bragg grating (FBG) sensors [8,9,10,11].

Strain-gauge-based measurements are widely used both in the literature and in industry for aircraft wing deflection measurements because they fit into almost any space and have proven high accuracy [1,2]. However, strain gauges have several limitations: they cannot be attached to every kind of material, they are easily affected by external temperature variations, and physical scratches or cuts can easily damage them. More importantly, because of their small size, a large number of them must be installed to monitor the whole wing.

Besides strain gauges, various optical approaches have been investigated in the literature for measuring wing deflections and the loads acting on wings. Burner et al. [3] presented the theoretical foundations of the videogrammetric model deflection (VMD) measurement technique, which was implemented by the National Aeronautics and Space Administration (NASA) for wind tunnel testing [4]. Afterwards, much research on wing deflection measurement and analysis was motivated by the catastrophic failure of the unmanned aerial vehicle (UAV) Helios [5,6]. Lizotte et al. [7] proposed estimating aircraft structural loads from wing deflection measurements. Their approach is based on installing infrared light-emitting diodes (LEDs) on the wings; however, the deflection measurements are local and do not cover the whole wing structure unless a large number of LEDs are installed.

Motivated by the catastrophic failure of Helios, a realtime in flight wing deflection monitoring of Ikhana and Global Observer UAVs were performed by Richards et al. [

8] by utilizing the spatial resolution and equal spacing of FBGs. Moreover, FBGs have also been used by Alvarenga et al. [

9] for realtime wing deflection measurements on lightweight UAVs. Additionally, chord wise strain distribution measurements that were obtained from a network of FBG sensors were also used by Ciminello et al. [

10] for development of an in flight shape monitoring system as a part of the European Smart Intelligent Aircraft project. More recently, Nicolas et al. [

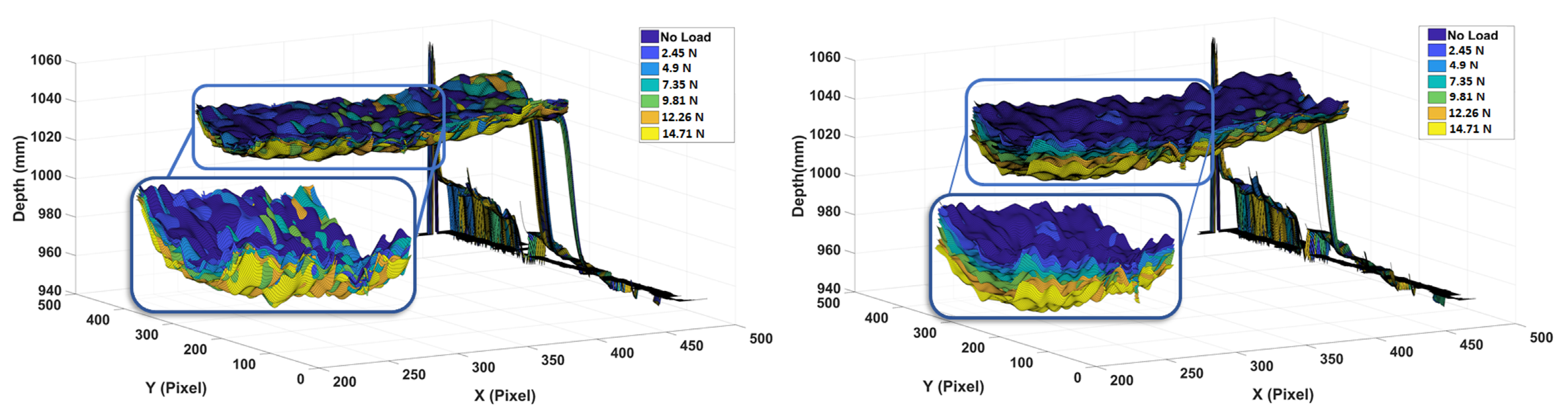

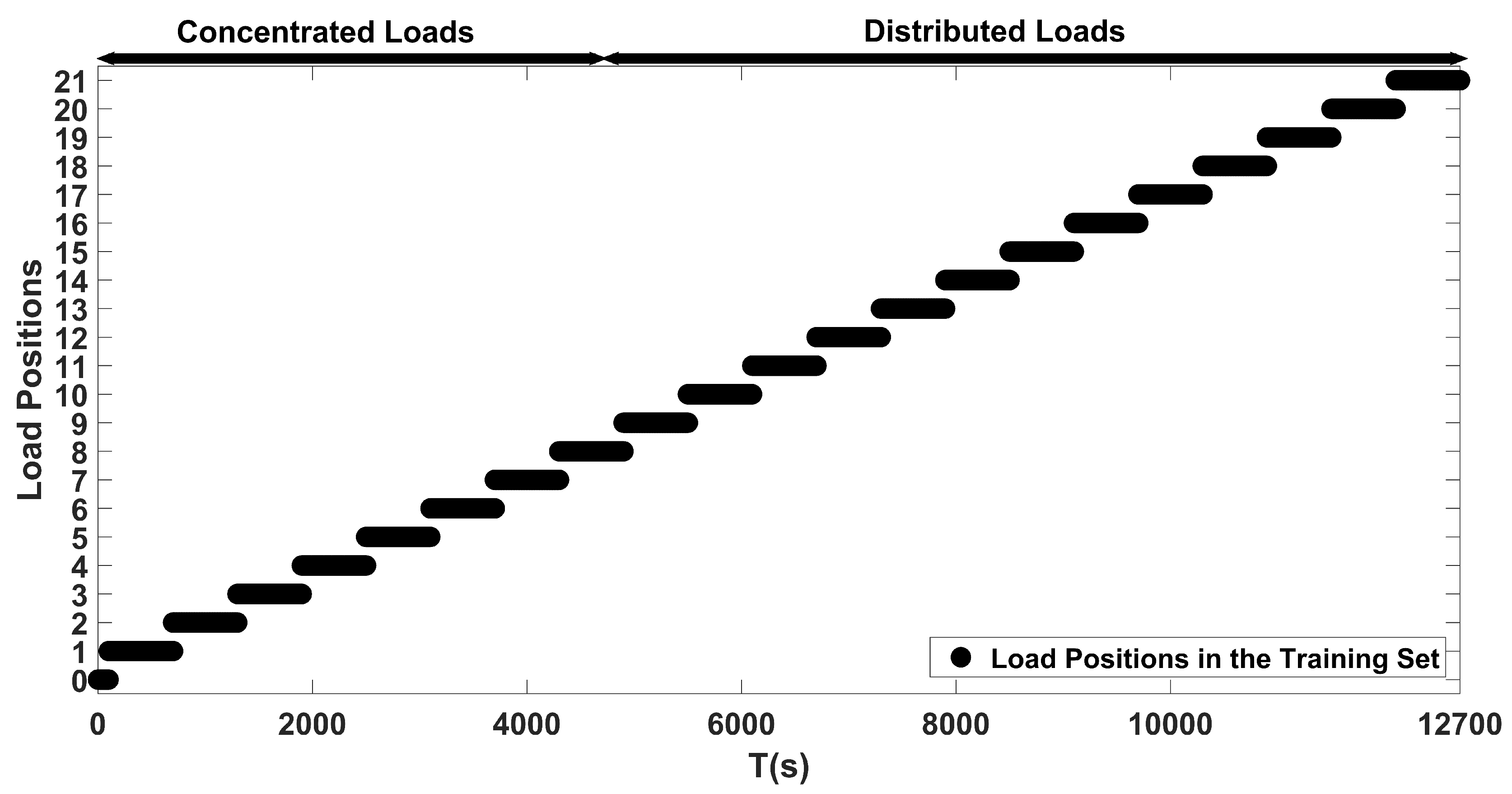

11] proposed the usage of FBG sensors for determination of wing deflection shape as well as the associated out of plane load magnitudes causing such deflections. To simulate in flight loading conditions, both concentrated and distributed loads were applied on the wings each with incrementally increasing loads. They reported that their calculated out of plane displacements and load magnitudes were within 4.2% of the actual measured data by strain gauges. As seen from these works in literature, even though FBGs used for SHM purposes have advantages over conventional sensors, they are still highly affected by temperature changes. Moreover, their installation is not an easy task due to their fragile nature, and special attention must be given to the problems of ingress and egress of the optical fibers [

12].

Although the aforementioned sensors can be used for load monitoring in aerospace vehicles, better technologies are needed to meet the requirements for sensitivity, robustness, and high resolution. The need for a large number of sensors to cover the whole structure is one of the major drawbacks of these sensor technologies. Therefore, a sensor that is capable of full-field load measurement from a single unit with high accuracy and precision can become an important alternative. This would also result in a considerable reduction in costs, especially when a fleet of airframes needs to be inspected and monitored.

From these works in the literature, it is observed that, in general, a mathematical model describing the deflections of an aircraft wing is used to study its behavior under different types of loads. However, obtaining physics-based models of systems can easily become a difficult problem due to system complexity and uncertainties, which effectively decreases their usefulness. This is especially the case in systems where large amounts of data are obtained using different types of sensors, which, in turn, adds more complexity to the system due to inherent sensor noise. In such cases, data-driven modeling techniques have been found to be more effective, since the acquired data already contain all kinds of uncertainties, sensor errors, and sensor noise [13]. Artificial neural networks (ANNs) have proven to be among the most effective data-driven modeling techniques [14]. In this regard, many recent applications of neural networks have emerged in the literature, including monitoring of strains and stresses during load cycles using strain gauges [15], pavement defect segmentation using a deep autoencoder [16], and machine-learning-based continuous deflection detection for bridges using a fiber optic gyroscope [17].

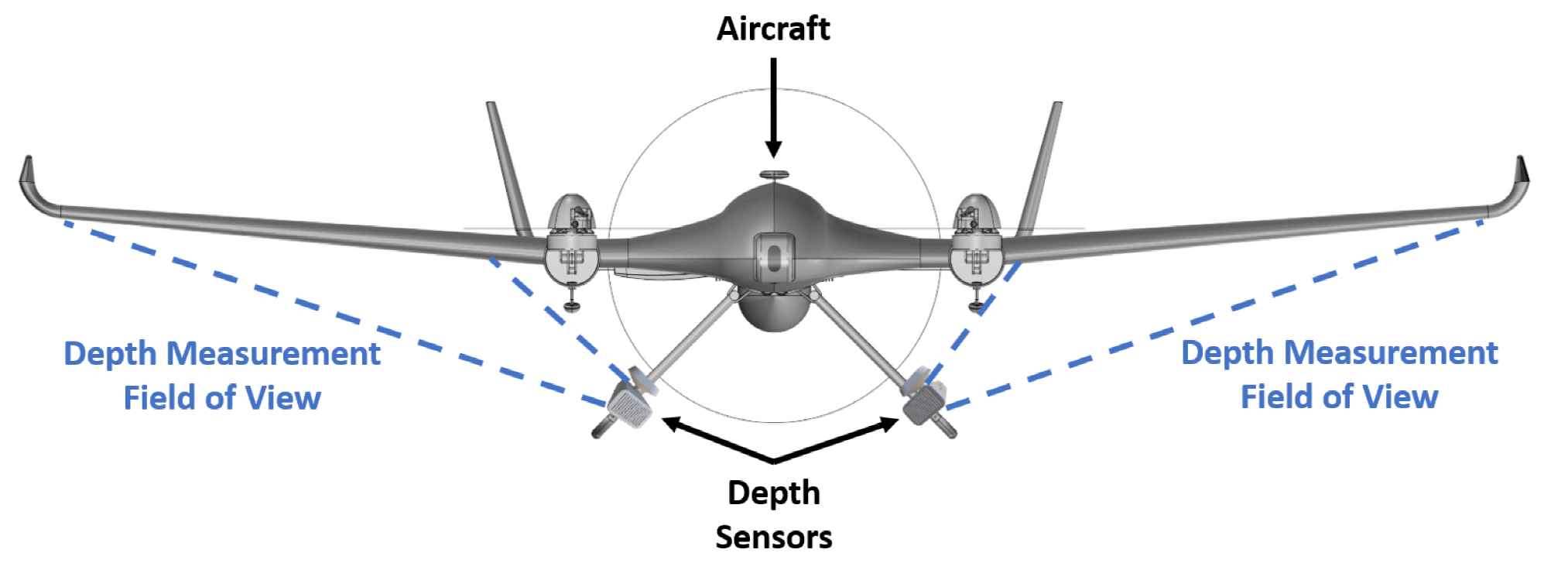

In this work, an ANN-based approach for real-time localization and estimation of loads acting on aircraft wings from full-field depth measurements is proposed. The proposed methodology can work with a single external depth image sensor with full-field measurement capability for a single wing; thus, one sensor is enough for inspection of the whole wing. Moreover, depth cameras do not require any calibration and, because the measurement is optical, can be used directly on any kind of wing regardless of whether it is made of composites. The proposed framework is able to estimate the magnitude of the load causing wing deflections under both bending and twisting loading conditions; therefore, it is not limited to the pure bending case, as was the case in the work of Nicolas et al. [11]. Moreover, the proposed method is not limited to the estimation of load magnitudes; it is also able to estimate the location of the load causing bending and twisting deflections, which makes localization of the loads possible. Load localization can become a very useful tool, especially when one needs to know the nature of the external loads occurring in flight. Using this information, one can estimate the flight conditions and, as such, improve the design of the aircraft based on these new data. More importantly, the proposed framework can operate in real time. To the best of the authors’ knowledge, this is the first work in the literature to address the problems of real-time localization and estimation of bending and twisting loads causing deflections to structures based on depth imaging and ANNs.

The rest of the paper is structured as follows. In Section 2, the proposed method for real-time load monitoring from depth measurements using neural networks is presented. In Section 3, the experimental setup, data collection procedure, and evaluation of the depth sensor used for load monitoring are described. The effectiveness of the proposed approach is validated by an experimental study in Section 4, followed by the conclusion in Section 5.

5. Conclusions

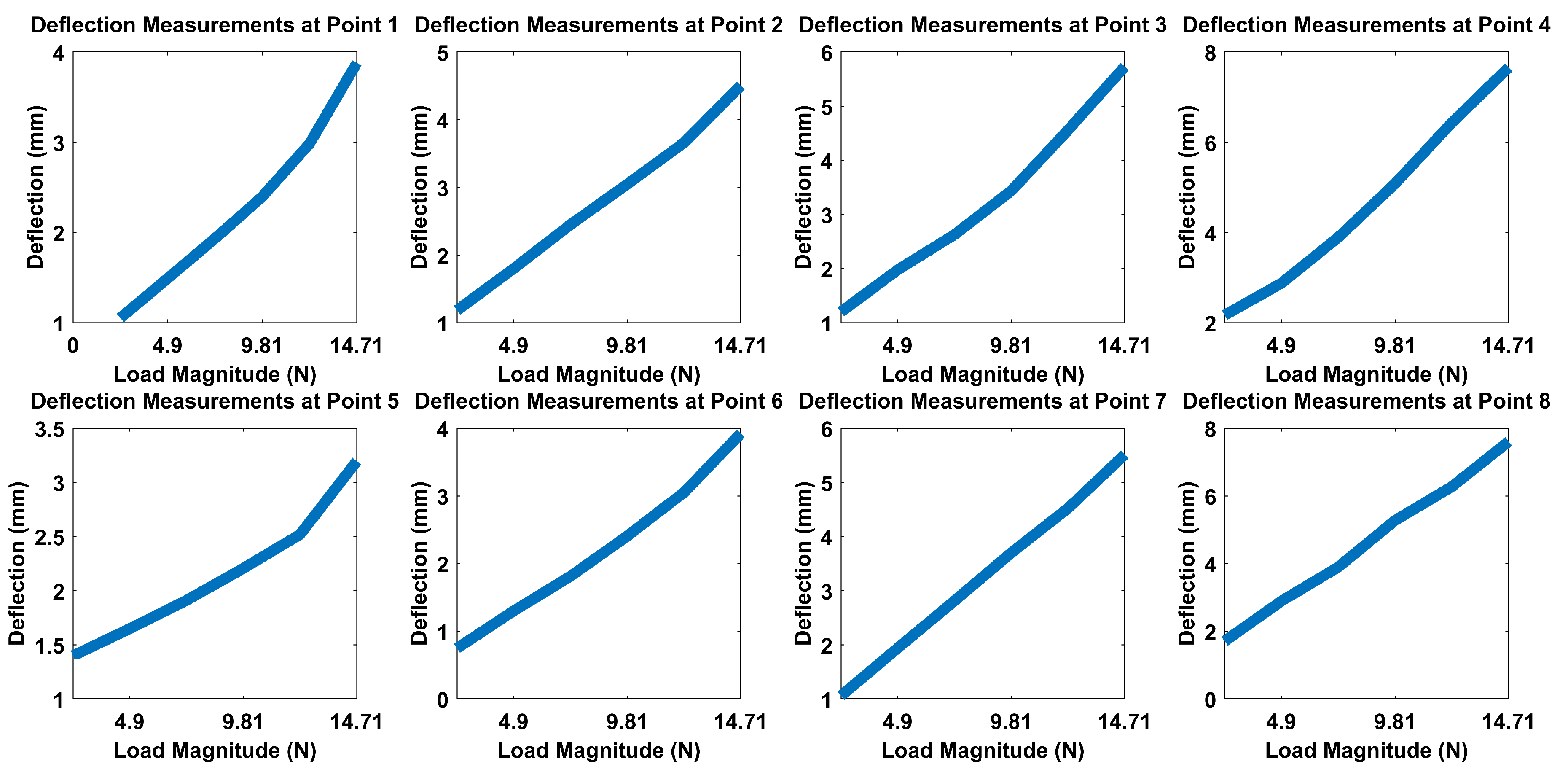

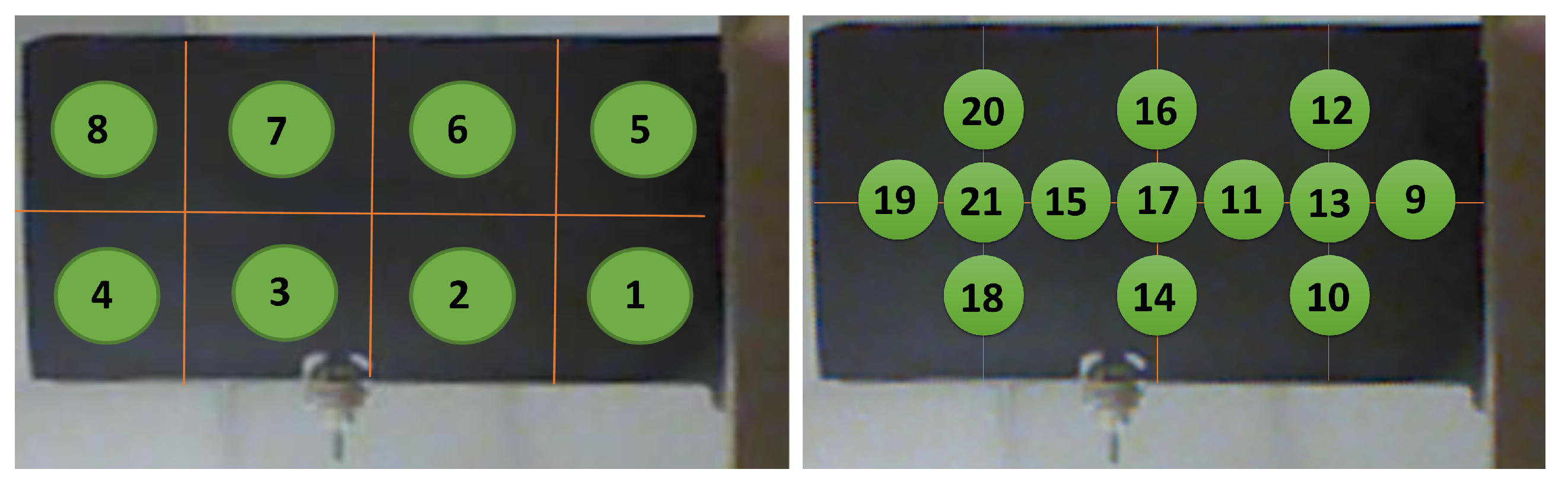

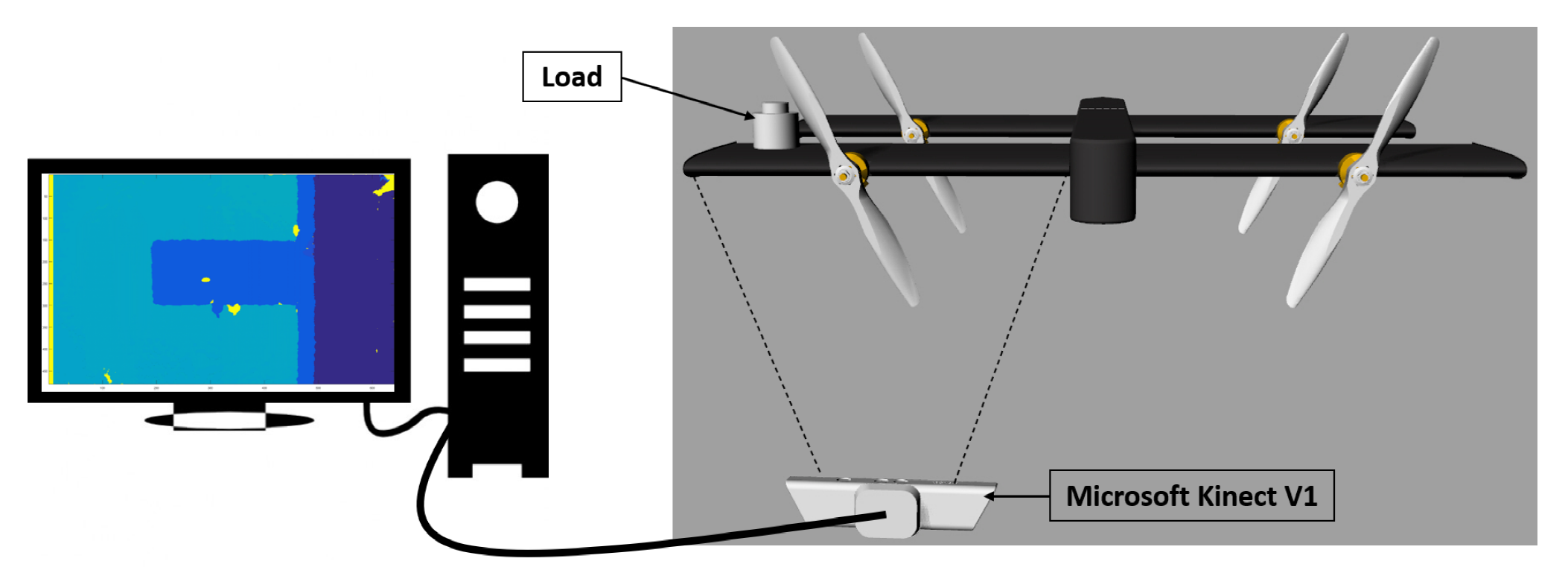

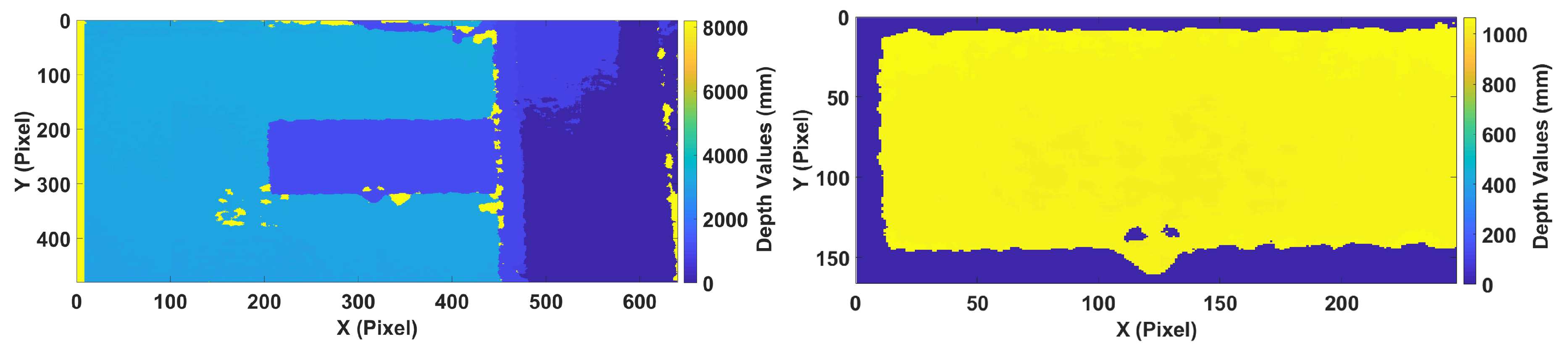

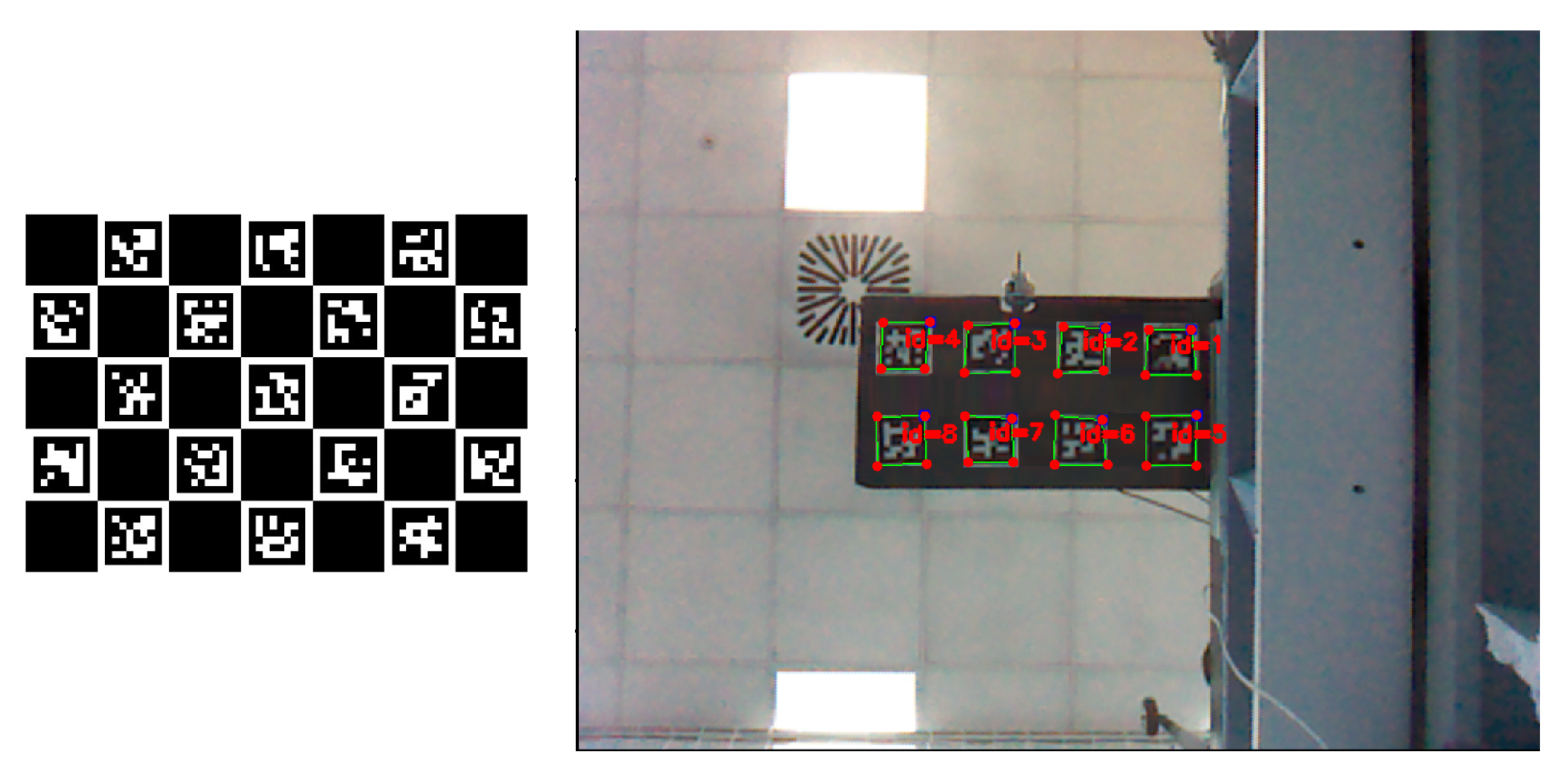

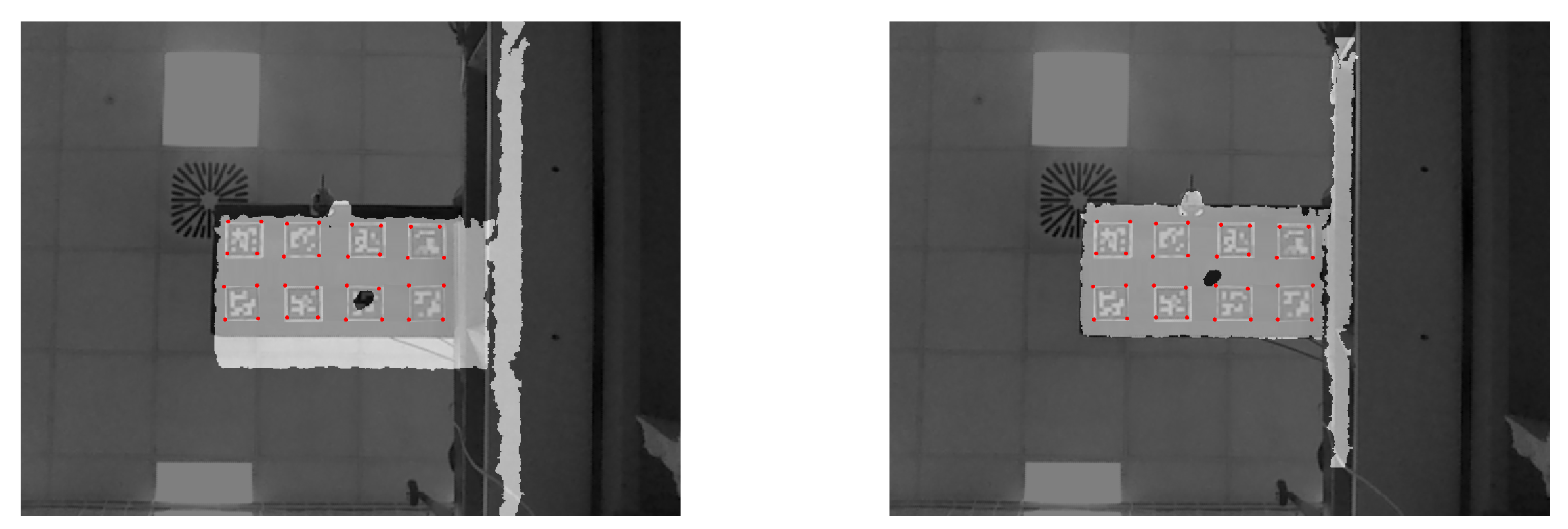

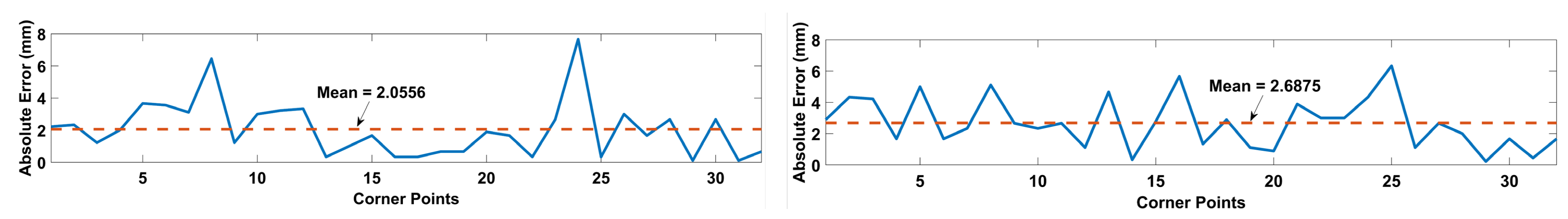

In this work, a robust structural health monitoring system based on depth imaging and artificial neural networks is proposed for real-time localization and estimation of bending and twisting loads acting on an aircraft wing. The proposed framework uses depth images obtained from a depth camera as input features to an autoencoder, and load location or magnitude as output labels of supervisory neural networks placed in series with the autoencoder. Initially, the accuracy and precision of the Microsoft Kinect V1 depth sensor were evaluated for monitoring of aircraft wings by making use of ArUco markers and a Leica DISTO X310 laser meter having an accuracy of ±1 mm. The Kinect V1 proved to be reliable for SHM purposes, since it provided full-field measurements with an accuracy and standard deviation of 2.25 mm and 0.28 mm, respectively, when compared with the single-point measurements provided by the laser meter.
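The sensor evaluation summarized above can be illustrated with a minimal sketch. It assumes that "accuracy" means the mean absolute difference between the depth readings and the laser meter references, and that "standard deviation" is the sample standard deviation of those differences; the exact definitions used in the study are not restated in this section. The function name and the sample readings are hypothetical and are not measurements from the paper.

```python
from statistics import mean, stdev


def accuracy_and_precision(depth_mm, reference_mm):
    """Return (mean absolute error, sample std of errors), both in mm.

    Assumed definitions, not necessarily those of the paper:
    accuracy  = mean |depth - reference|
    precision = sample standard deviation of (depth - reference)
    """
    if len(depth_mm) != len(reference_mm) or len(depth_mm) < 2:
        raise ValueError("need two or more paired readings of equal length")
    errors = [d - r for d, r in zip(depth_mm, reference_mm)]
    mean_abs_error = mean(abs(e) for e in errors)
    spread = stdev(errors)
    return mean_abs_error, spread


# Hypothetical paired readings at three marker positions (mm).
kinect_readings = [1002.0, 1003.0, 1001.0]
laser_readings = [1000.0, 1000.0, 1000.0]
mae_mm, std_mm = accuracy_and_precision(kinect_readings, laser_readings)
```

Keeping the two figures separate matters because a sensor with a constant offset can still have a small spread, which is the property a learned model can most easily compensate for.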

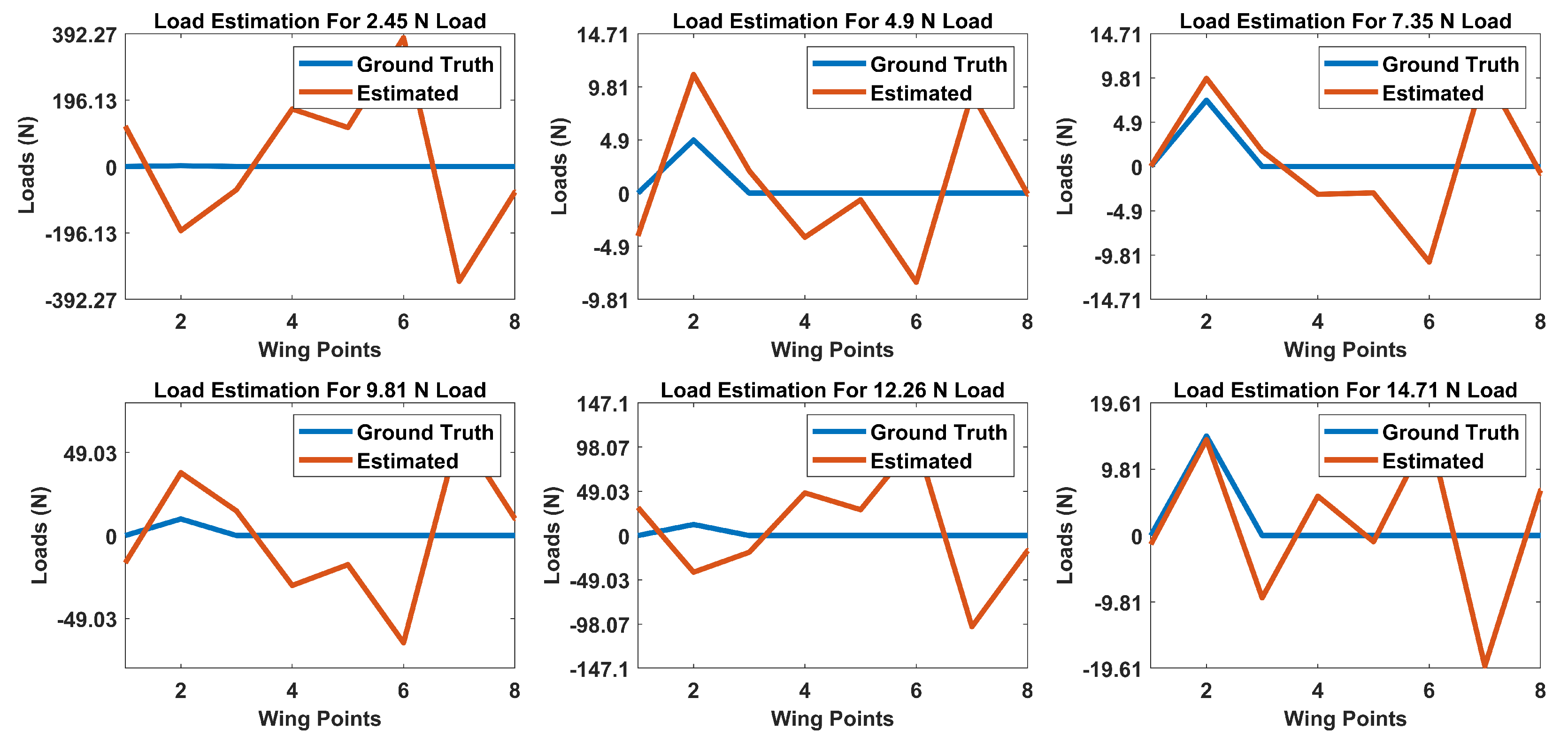

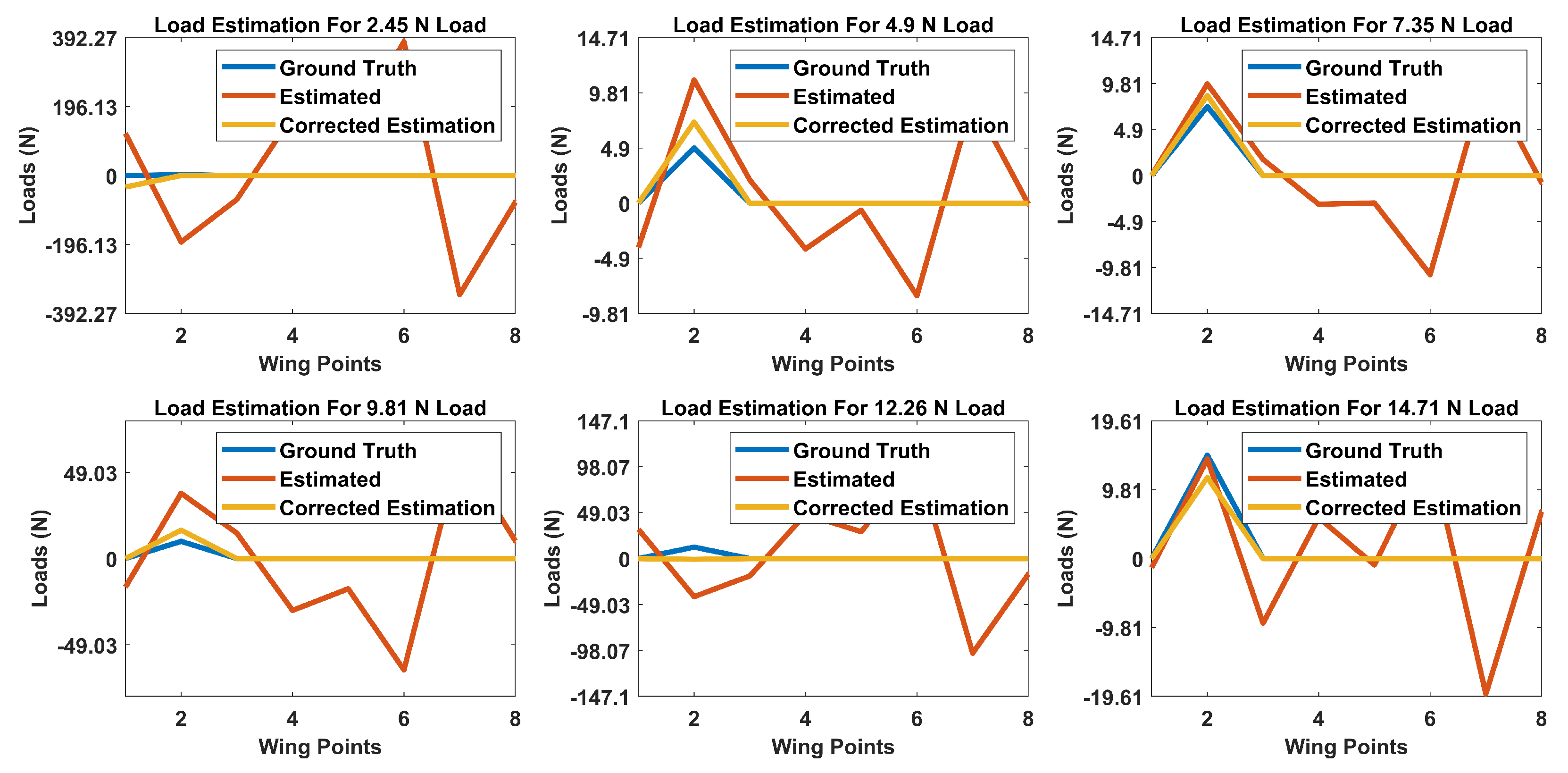

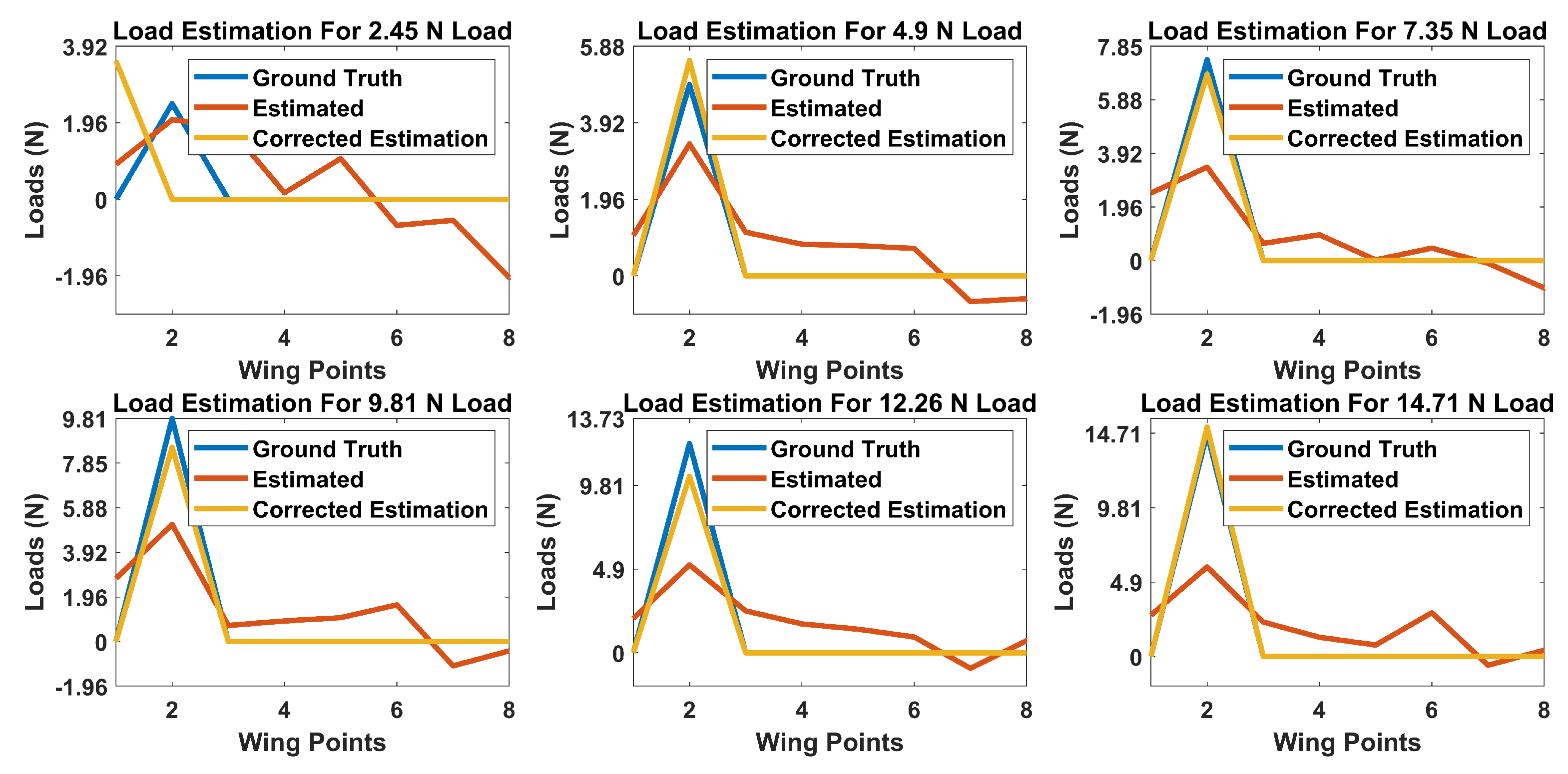

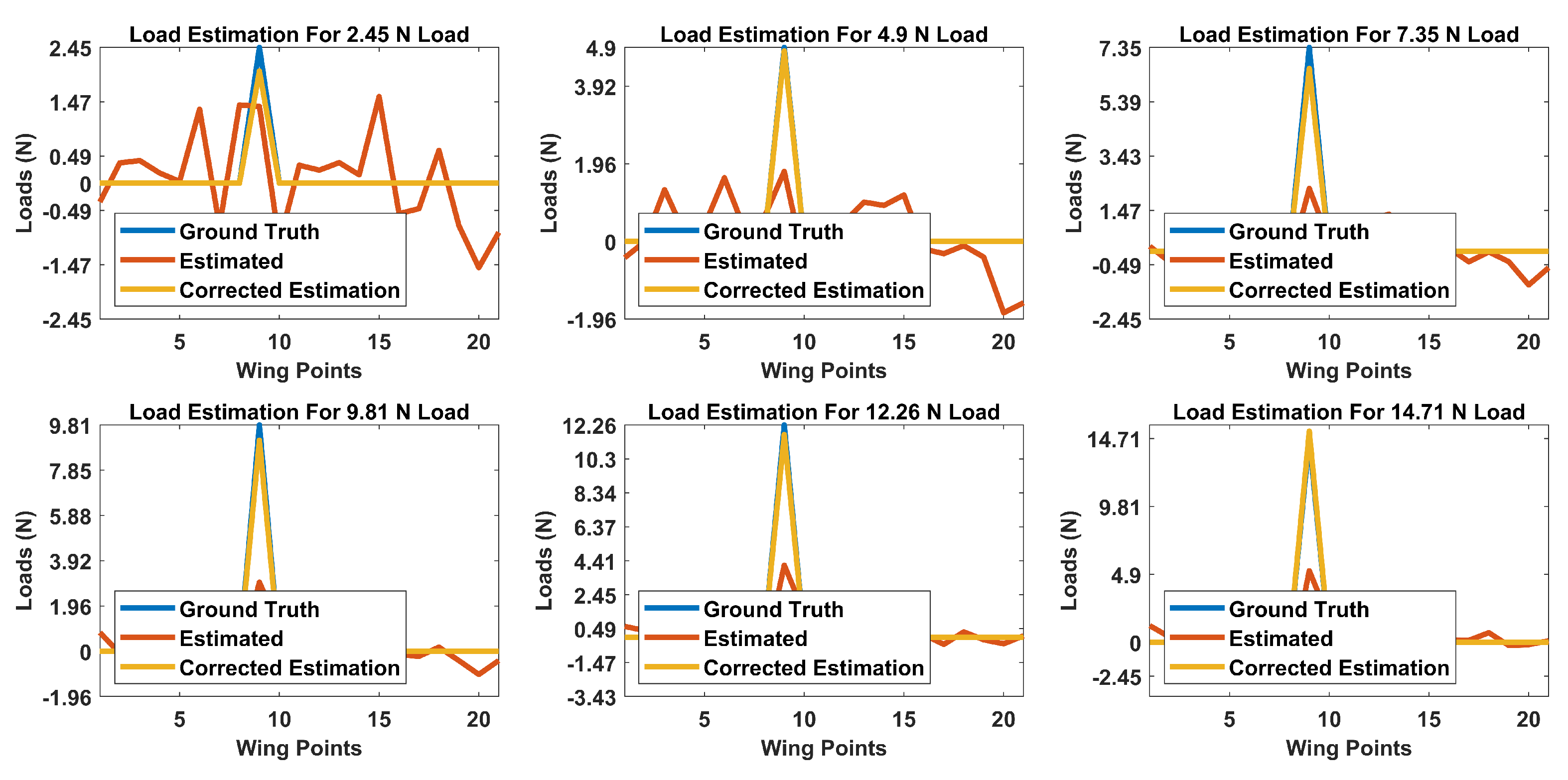

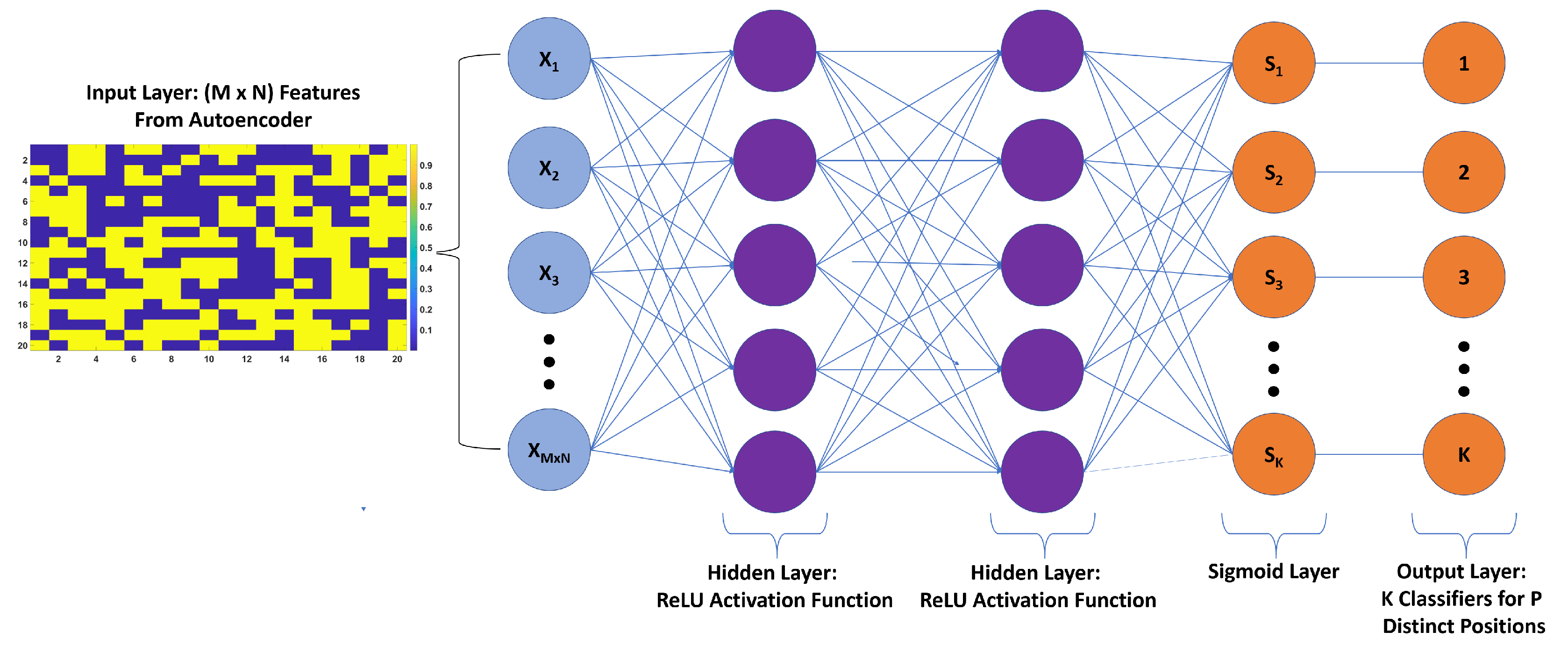

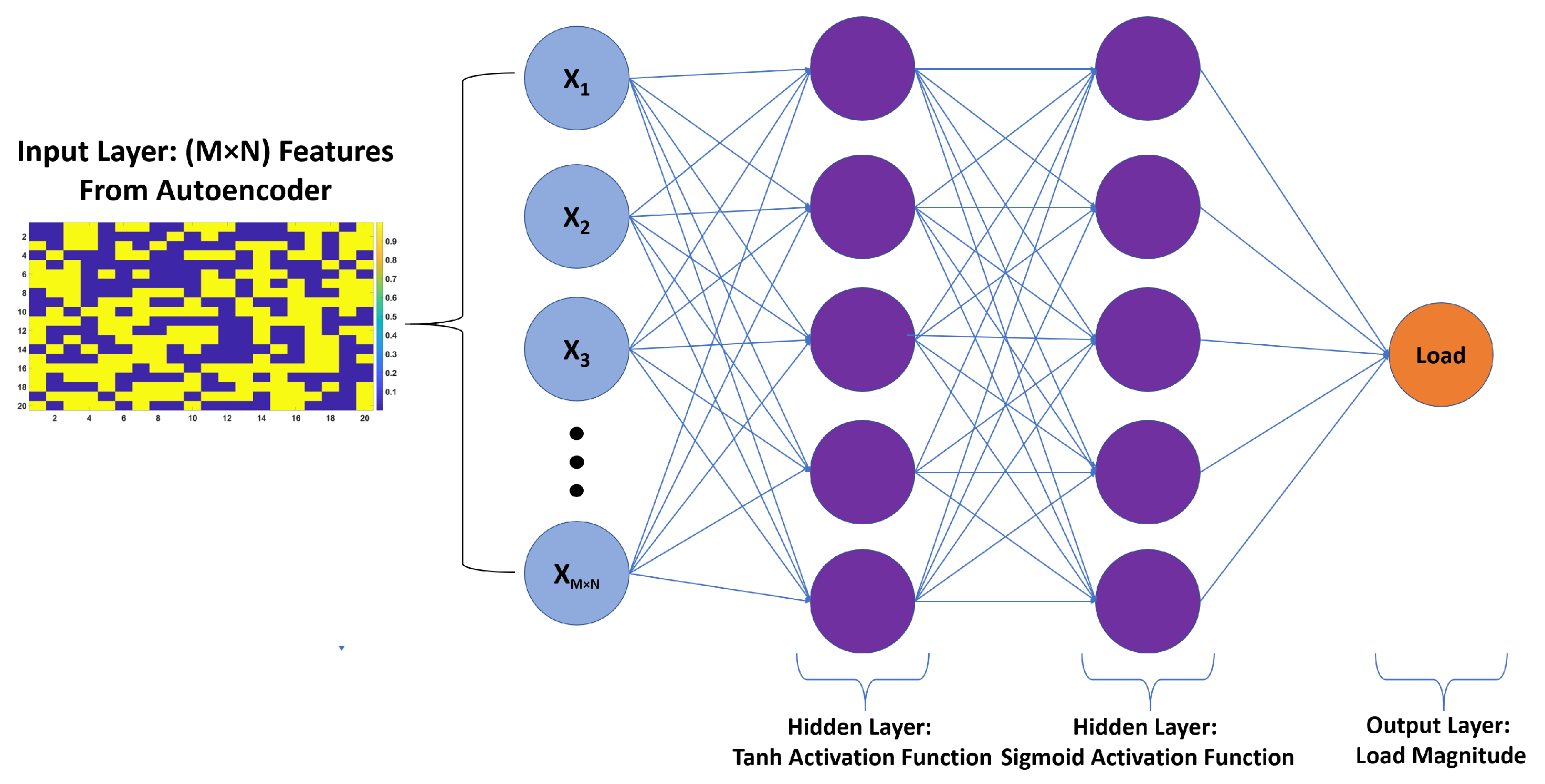

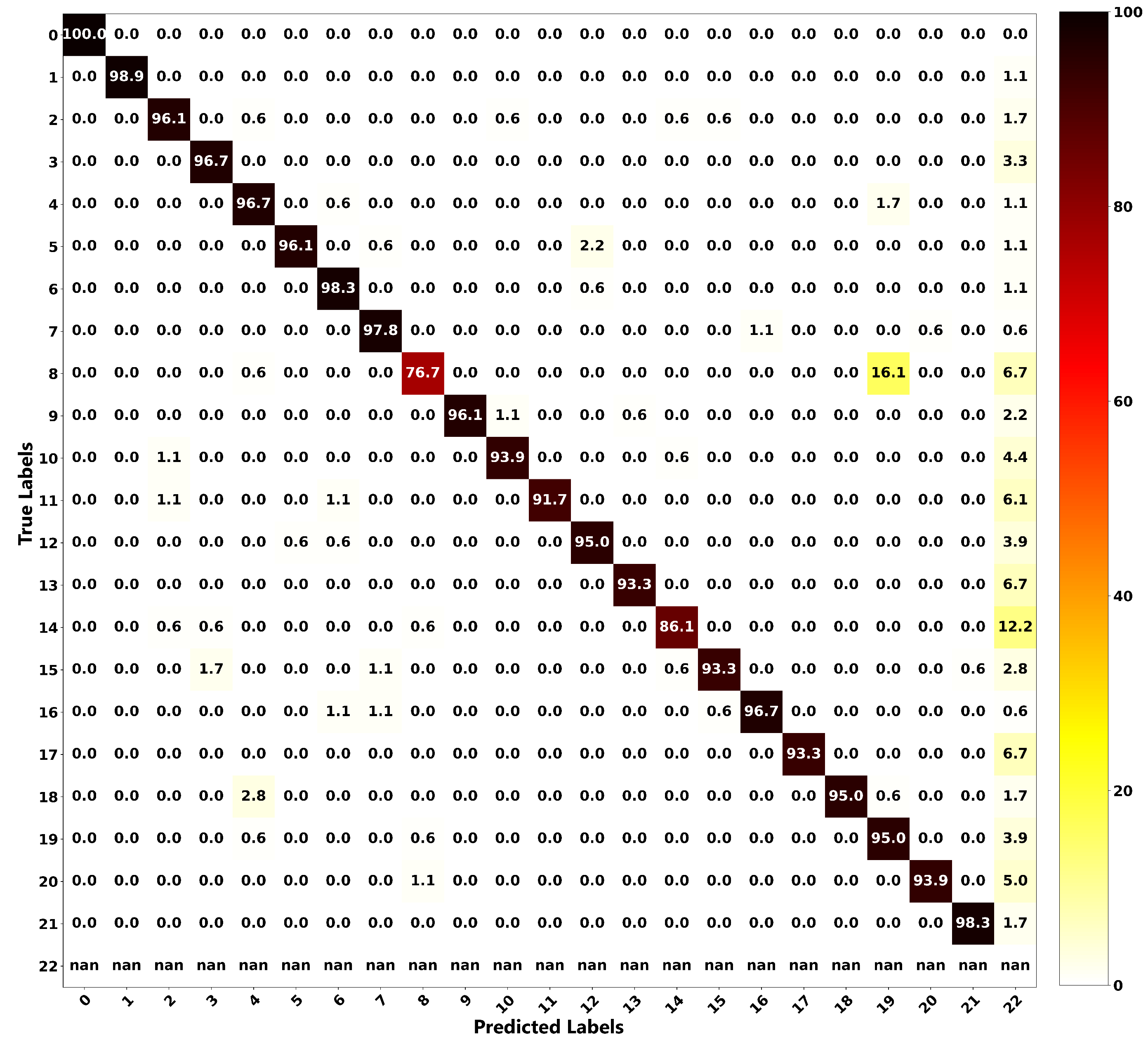

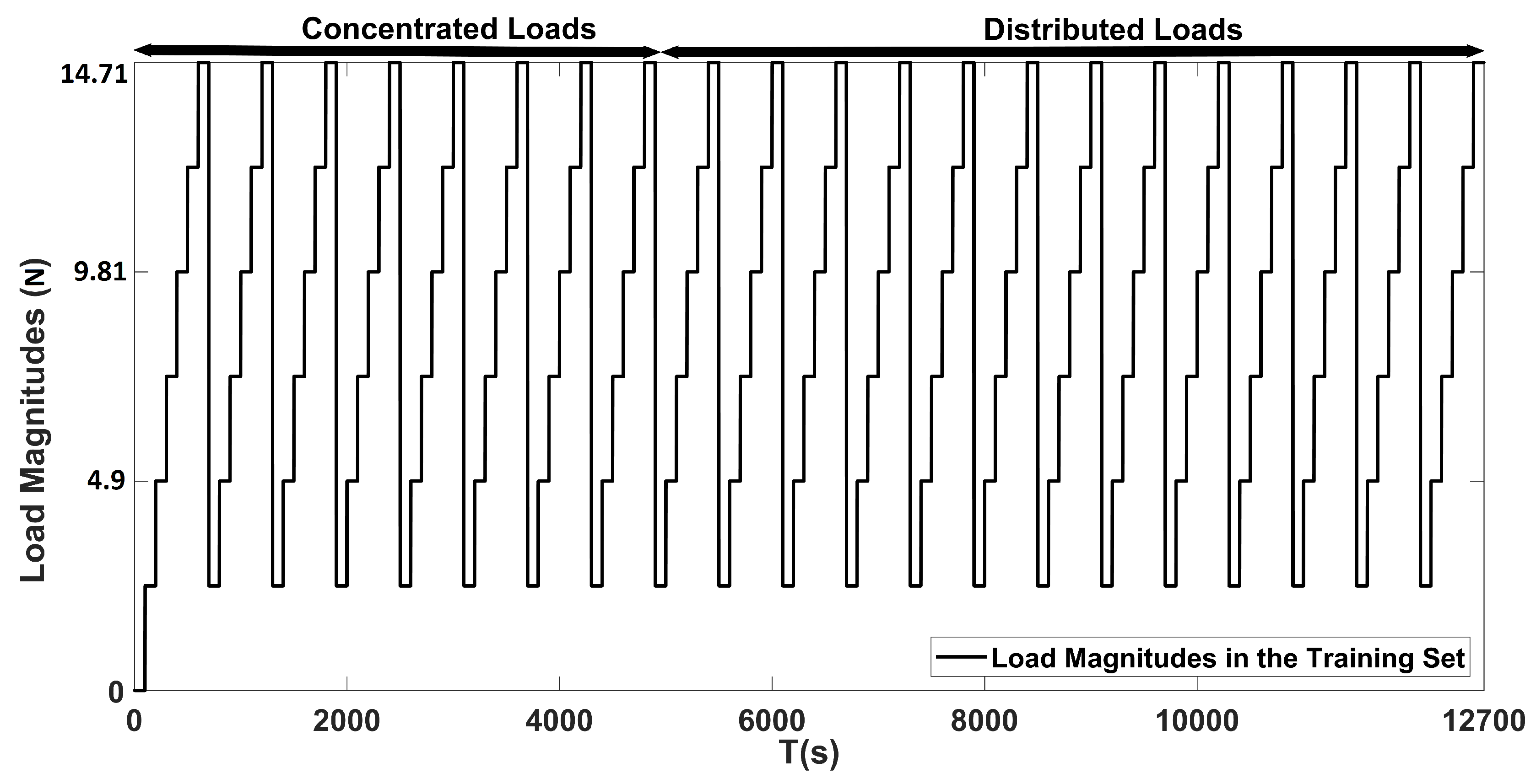

As for the proposed method, first, an ANN consisting of an autoencoder and a classification network of two hidden layers with ReLU activation functions was proposed for estimating the location of loads. Second, an autoencoder and a logistic regression network of two hidden layers with tanh and sigmoid activation functions was proposed for estimating the magnitude of these loads. Both of the proposed networks were trained and validated on an experimental setup in which concentrated and distributed loads were applied to a composite UAV wing.
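The two-network arrangement described above can be sketched as a forward pass in plain Python. This is an illustrative sketch only: the layer sizes, the random untrained weights, the sigmoid encoder activation, the softmax output for localization, the ordering of tanh and sigmoid in the magnitude network, and the idea that only the encoder half of the autoencoder feeds the supervisory networks are all assumptions, not details taken from this section. All names are hypothetical.

```python
import math
import random


def dense(x, weights, biases, activation):
    """Fully connected layer: activation(W x + b) for each output unit."""
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def identity(z):
    return z


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def build(sizes, rng):
    """Random (untrained) weights and zero biases for consecutive layers."""
    return [([[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
              for _ in range(n_out)], [0.0] * n_out)
            for n_in, n_out in zip(sizes, sizes[1:])]


def forward(x, layers, activations):
    for (weights, biases), act in zip(layers, activations):
        x = dense(x, weights, biases, act)
    return x


rng = random.Random(0)
N_PIXELS, N_CODE, N_HIDDEN, N_LOCATIONS = 16, 4, 8, 3  # hypothetical sizes

encoder = build([N_PIXELS, N_CODE], rng)                        # autoencoder, encoder half
locator = build([N_CODE, N_HIDDEN, N_HIDDEN, N_LOCATIONS], rng) # two ReLU hidden layers
estimator = build([N_CODE, N_HIDDEN, N_HIDDEN, 1], rng)         # tanh then sigmoid hidden layers

depth_image = [rng.random() for _ in range(N_PIXELS)]  # stand-in for a flattened depth frame
code = forward(depth_image, encoder, [sigmoid])
location_probs = softmax(forward(code, locator, [relu, relu, identity]))
load_fraction = forward(code, estimator, [math.tanh, sigmoid, sigmoid])[0]
```

Here `location_probs` is a probability over candidate load locations and `load_fraction` is a magnitude normalized to the interval (0, 1); with untrained weights the values are meaningless and only show how data would flow through the two heads.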

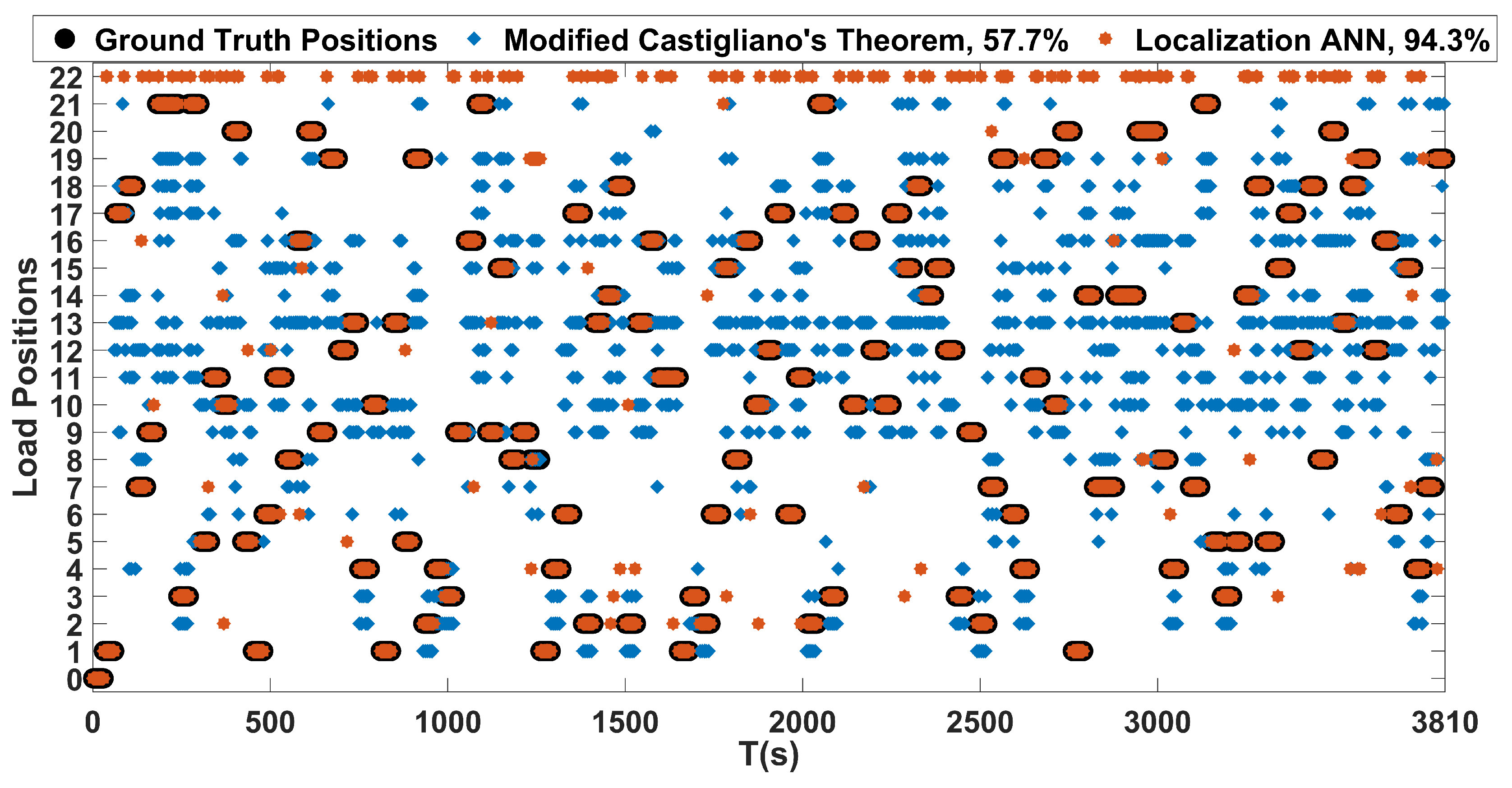

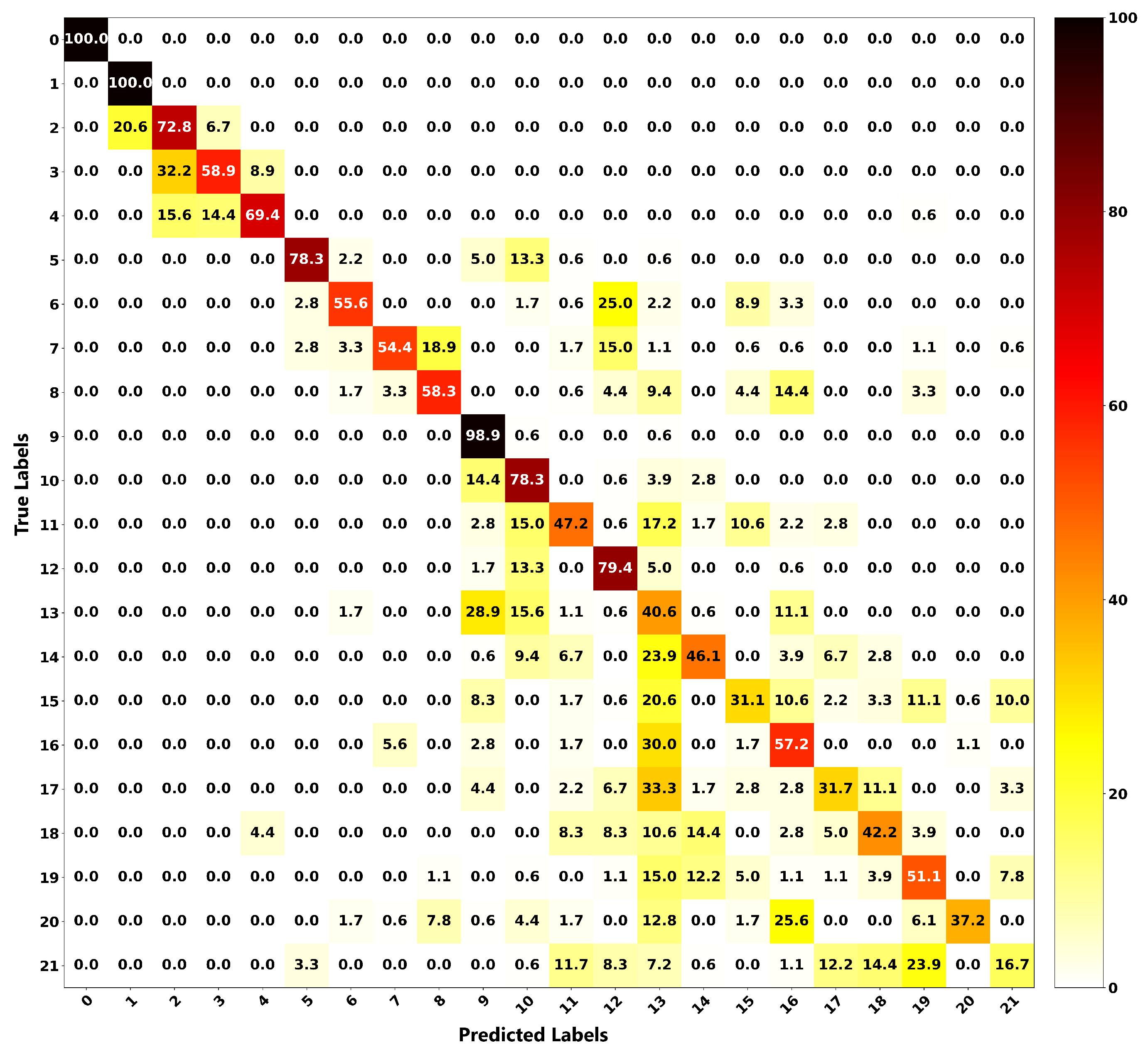

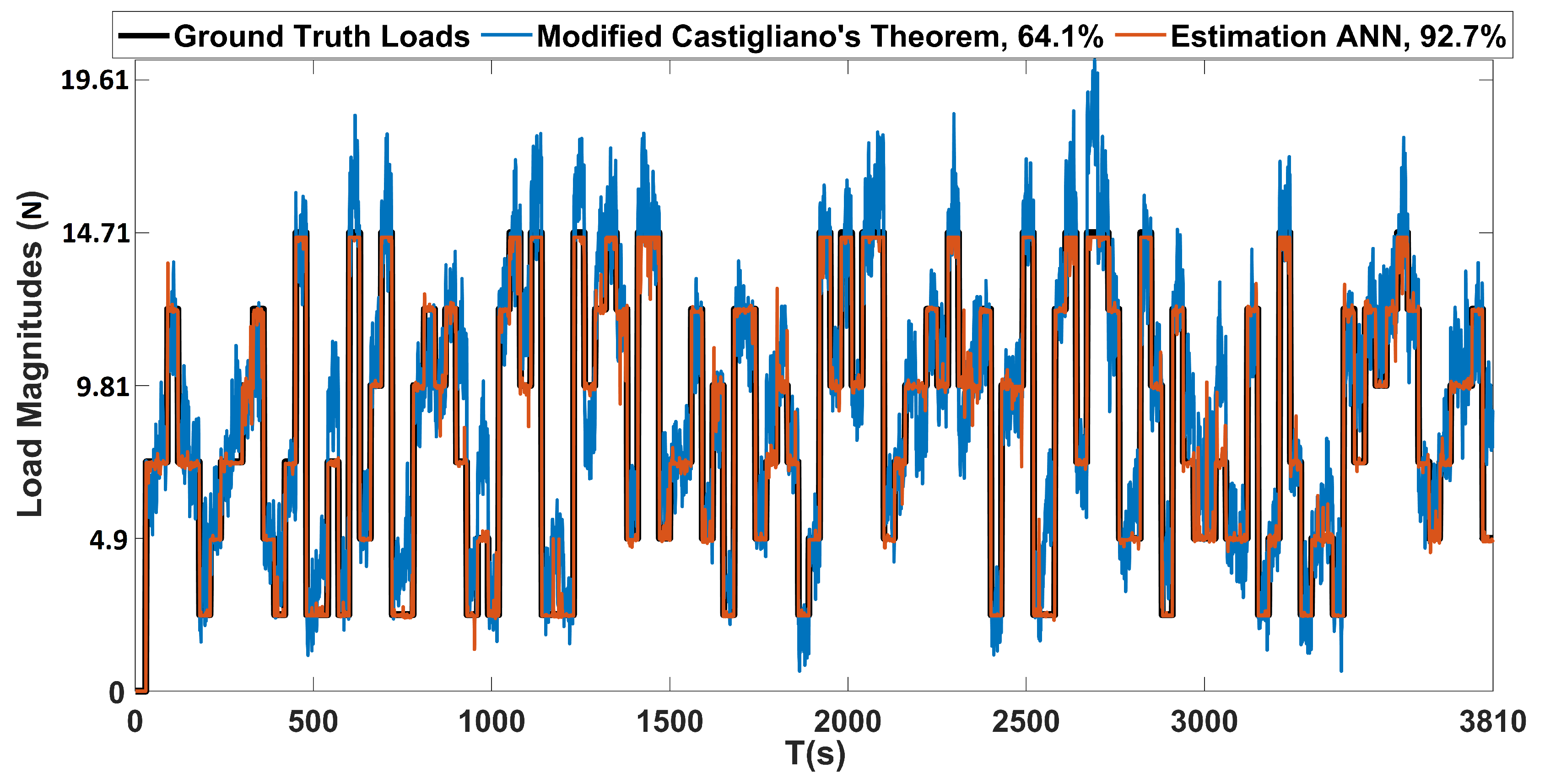

In addition, a comparison with an approach based on Castigliano’s theorem was performed, and the proposed method proved to have superior performance in terms of the localization and estimation of loads. The proposed localization and estimation ANNs achieved accuracies of 94.3% and 92.7%, respectively, while the framework based on Castigliano’s theorem achieved average accuracies of 57.7% and 64.1%, respectively, when both methods were evaluated on a dataset containing randomly applied concentrated and distributed loads. As demonstrated, the proposed ANN-based framework can localize loads acting on aircraft wings and estimate their magnitudes with high accuracy from a single depth sensor in real time.

In the near future, it is planned to extend the current study to the localization and estimation of highly dynamic loads on larger aircraft wings.