Fast CNN Stereo Depth Estimation through Embedded GPU Devices

Abstract

1. Introduction

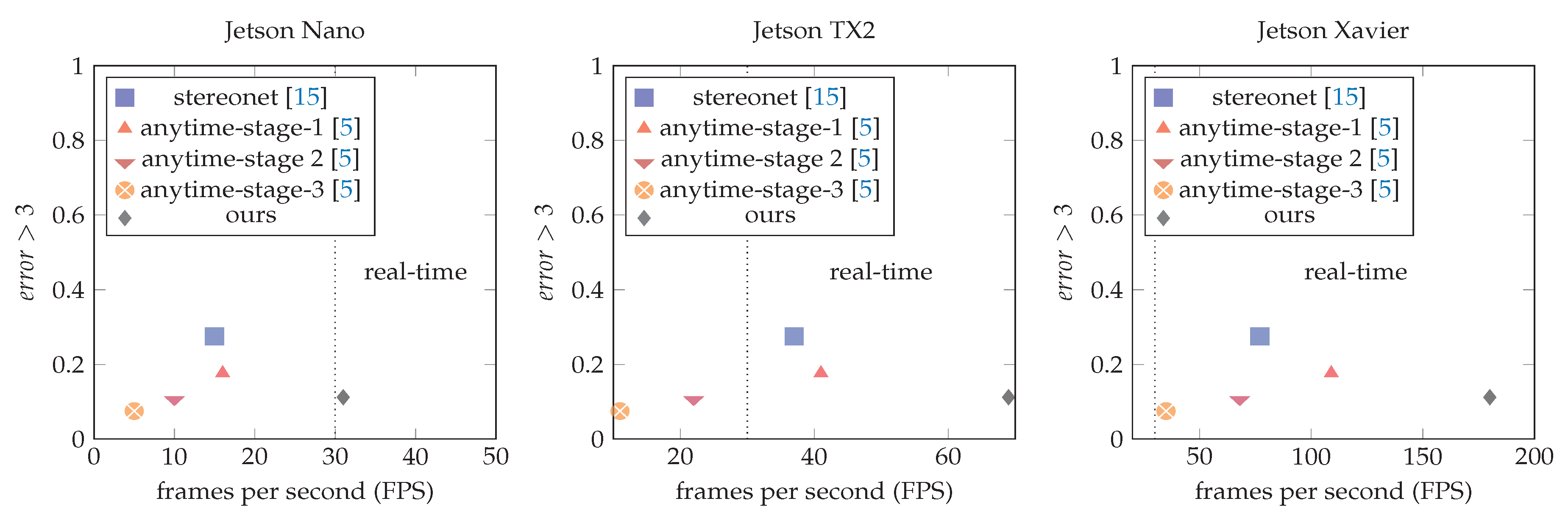

- We assess the performance of two of the fastest state-of-the-art CNN-based models for computing stereo disparity on embedded GPUs, to determine their limitations for real-time applications (e.g., small robotics platforms). To have a common development platform, we selected three recent NVIDIA embedded GPUs, ranging from the cheapest, the Jetson Nano, to the more expensive Jetson Xavier.

- We evaluate the state-of-the-art models using a custom CUDA kernel for cost-volume computation and the TensorRT SDK for network layer inference optimization, which gives a more realistic picture of runtime performance on current embedded GPU devices. Published evaluations usually do not apply model optimization techniques, which leaves the actual potential of current embedded GPU devices unknown.

- We propose the use of a U-Net-like architecture [6] for cost-volume postprocessing, instead of the typical sequence of 3D convolutions. Using a U-Net-like architecture for the postprocessing step has two key benefits: (1) the network can run much faster, since 3D convolutions are costly operations on embedded devices, and (2) a faster inference model allows disparity maps to be computed at a higher resolution, which directly affects the disparity pixel error of the network.

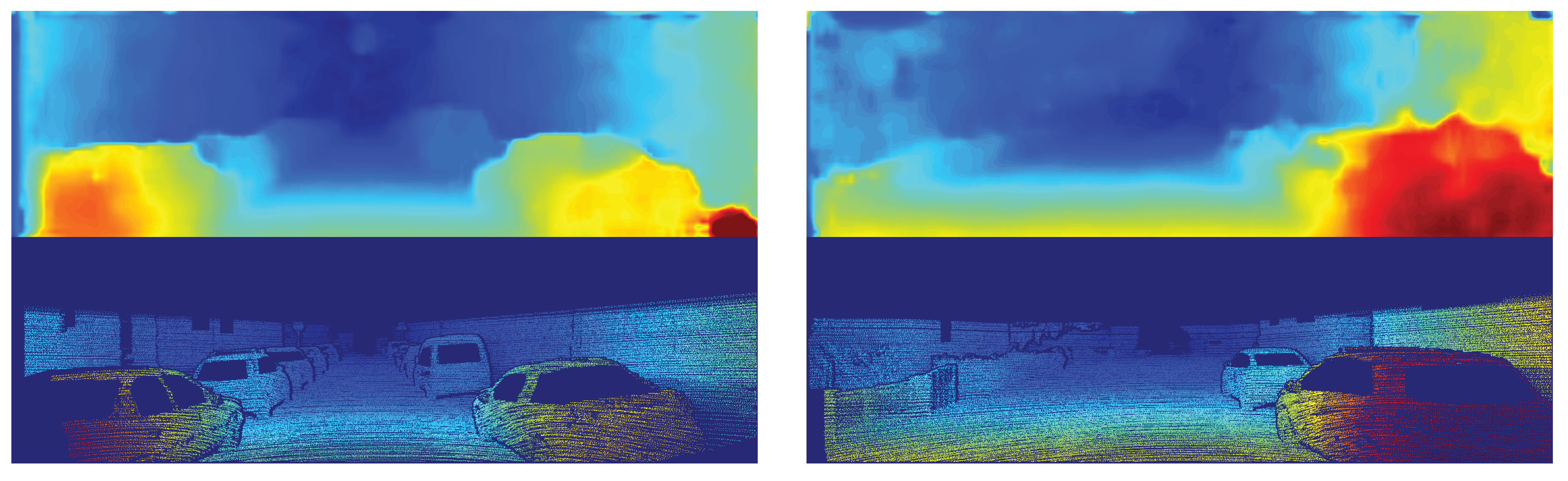

2. Related Works

3. Embedded GPU Devices

4. Disparity Estimation on Embedded GPUs

5. Model Optimization on Embedded GPUs

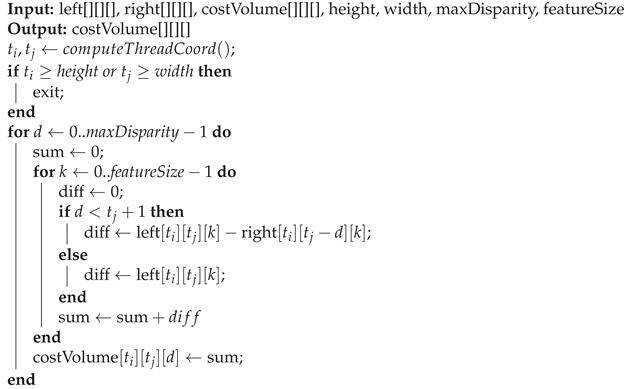

- Custom CUDA kernel for initial cost-volume computation: cost-volume computation is essential for relating information between the left and right images in current stereo disparity models. Essentially, it compares the pixel features at one position in a reference image with the features at all candidate positions in the target image (up to the maximum disparity) using a given metric: in this case, the L1 distance. The cost-volume computation involves multiple nested loops that run slowly in current deep learning frameworks but can be easily accelerated with custom GPU kernels. We follow an implementation similar to [20]. Algorithm 1 contains the pseudocode of our implementation.

Algorithm 1: Pseudocode of cost-volume computation GPU kernel

- TensorRT layer optimization: TensorRT is an NVIDIA SDK for optimizing the inference time of trained models. It offers several features, such as layer fusion, which optimizes GPU memory and bandwidth use by fusing common layer combinations into a single kernel, and weight and precision calibration, which can quantize network weights to a lower precision such as INT8. In this work, we use TensorRT 6.0.1 without reducing the precision of the model. We keep FP32 weights for two reasons. First, the current TensorRT SDK fully supports FP32 and FP16 operations but only partially supports other precision modes (see Table 3, top). Second, lower precision modes are not supported or optimized on all embedded devices, depending on their CUDA architecture (see Table 3, bottom). As a side note, we also tried FP16, without noticing any substantial improvement on the Jetson TX2 and the Jetson Nano.
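The L1 cost-volume computation described in the first item above can be illustrated with a plain CPU reference. This is a minimal sketch and not the authors' CUDA kernel: the function name `l1_cost_volume`, the `[channel][row][col]` nested-list layout, summing (rather than averaging) over channels, and leaving out-of-range positions at zero are all assumptions made for illustration.

```python
def l1_cost_volume(left, right, max_disp):
    """CPU reference for an L1 cost volume (illustrative, not the paper's kernel).

    left, right: feature maps as nested lists indexed [channel][row][col].
    Returns cost[d][row][col] = sum over channels of
    |left[c][row][col] - right[c][row][col - d]|.
    Positions where col - d falls outside the image stay at 0.0
    (an assumption of this sketch).
    """
    channels = len(left)
    height = len(left[0])
    width = len(left[0][0])
    cost = [[[0.0] * width for _ in range(height)] for _ in range(max_disp)]
    for d in range(max_disp):
        for y in range(height):
            # Start at x = d so that x - d is a valid column in the right image.
            for x in range(d, width):
                total = 0.0
                for c in range(channels):
                    total += abs(left[c][y][x] - right[c][y][x - d])
                cost[d][y][x] = total
    return cost


# Toy input: 1 channel, 1 row, 4 columns.
left = [[[1.0, 2.0, 3.0, 4.0]]]
right = [[[1.0, 1.0, 3.0, 5.0]]]
volume = l1_cost_volume(left, right, max_disp=2)
print(volume[0][0])  # disparity 0 -> [0.0, 1.0, 0.0, 1.0]
print(volume[1][0])  # disparity 1 -> [0.0, 1.0, 2.0, 1.0]
```

Every output element `cost[d][y][x]` depends only on its own inputs, so the three outer loops carry no dependencies between iterations. That independence is what makes this step a natural fit for a GPU kernel, where each output element could be assigned to its own thread.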

6. U-Net Like Model for Cost-Volume Postprocessing

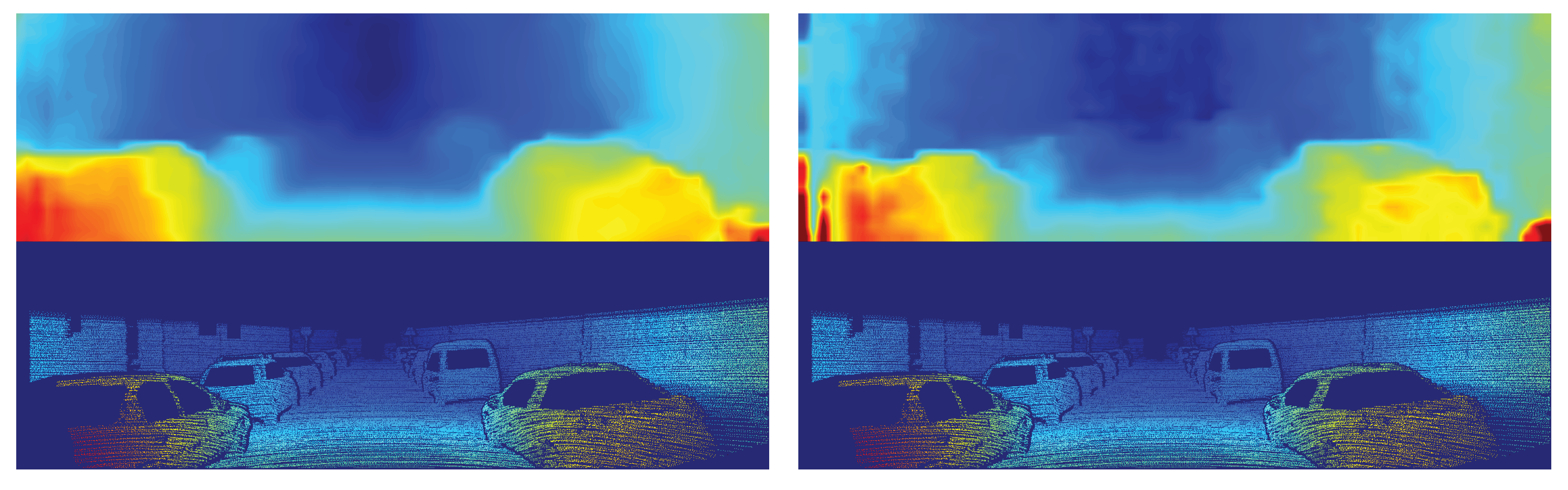

- 3D convolutions are costly operations to run on current embedded GPU devices, at least when compared to 2D convolutions. Hence, it is necessary to look for alternatives to 3D convolutions that offer similar performance and a shorter runtime.

- Reducing the runtime of cost-volume postprocessing can allow current models to compute disparity maps at a higher resolution.

- Many applications require continuous depth estimation, so multistep models need to run over a fixed number of layers. Hence, it is preferable to set each model to its maximum capacity for the given embedded device. Doing so improves the performance of the network, since its loss function does not need to trade off the disparity pixel error across the different steps.
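The cost gap between 3D and 2D convolutions mentioned in the first point can be made concrete by counting multiply-accumulate operations (MACs). The sketch below uses the standard MAC formulas for dense convolutions with stride 1 and "same" padding. The 2D case uses a 24-channel, 23 × 76 feature map like the one processed by the postprocessing layers in Table 6, while the 16-channel 3D layer is purely hypothetical and is not taken from the evaluated baselines.

```python
def conv2d_macs(height, width, c_in, c_out, k=3):
    """MACs for one dense k x k 2D convolution (stride 1, same padding)."""
    return height * width * c_in * c_out * k * k


def conv3d_macs(depth, height, width, c_in, c_out, k=3):
    """MACs for one dense k x k x k 3D convolution (stride 1, same padding)."""
    return depth * height * width * c_in * c_out * k ** 3


# 2D: the 24 disparity levels are treated as channels of a 23 x 76 map.
macs_2d = conv2d_macs(23, 76, c_in=24, c_out=24)

# 3D: the same 24 x 23 x 76 volume, with a hypothetical 16 -> 16 feature channels.
macs_3d = conv3d_macs(24, 23, 76, c_in=16, c_out=16)

print(macs_2d)            # 9061632
print(macs_3d)            # 289972224
print(macs_3d / macs_2d)  # 32.0
```

MAC counts are only a rough proxy for runtime, since memory traffic and kernel support on the device also matter, so the ratio printed here should not be read as a prediction of the measured timings.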

6.1. Model Description

- Feature extraction: The first step is to extract features from both input images using a U-Net [6] architecture. In essence, U-Net is an encoder-decoder network with skip connections between downsampled and upsampled features of the same dimension. In our case, we downsample the input image three times, reducing the original size by a factor of 16 (lines 1–12 in Table 6), and upsample once (lines 13–16 in Table 6). The upsampling process is key, and the number of upsampling steps depends on the resources of the target device. For example, if we upsample once, the number of possible disparities to search becomes 192/8 = 24 (scaled disparity), where 192 is the maximum disparity. If we upsample twice, the number of possible disparities becomes 192/4 = 48, generating a bigger cost-volume matrix (line 17 in Table 6). In our experiments, we choose one upsampling step so that the model can run in real time on the Jetson Nano.

- Cost-volume: This is the second step and consists of relating features from both images, using an L1 distance metric. We use Algorithm 1.

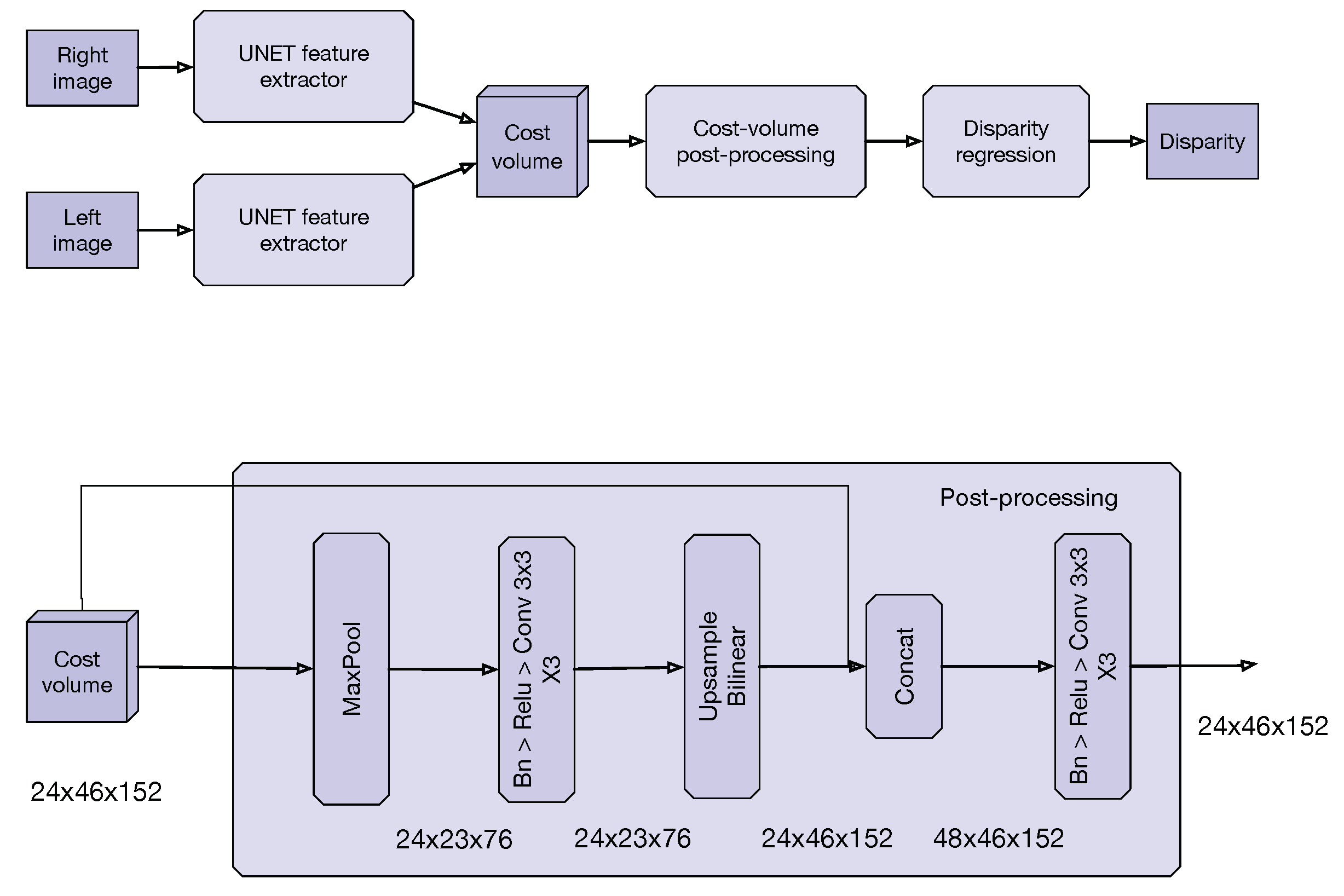

- Cost-volume postprocessing: A U-Net model, like the one used for feature extraction, is used to postprocess the cost-volume instead of the traditional sequence of 3D convolutions. The intuition behind our proposal is that an encoder-decoder model that uses 2D convolutions to postprocess the cost-volume matrix helps to compensate for the volumetric insight that 3D convolutions would otherwise provide (lines 18–26 in Table 6). In our experiments, we downsample and upsample just once, since the resulting cost-volume matrix is small.

- The last step is to upsample the disparity estimation to the original size, using bilinear interpolation (line 28 in Table 6).
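Two pieces of the pipeline above lend themselves to a short numeric sketch: the size of the cost volume as a function of the feature scale, and the soft argmin listed in Table 6 before the final upsampling. The helper names `cost_volume_shape` and `soft_argmin` are hypothetical, and the soft argmin follows the common formulation (a softmax over negated costs, then the expected disparity index), which is assumed here rather than taken from the authors' code.

```python
import math


def cost_volume_shape(height, width, max_disp, scale):
    """Shape (disparities, rows, cols) of a cost volume built at 1/scale resolution."""
    return (max_disp // scale, height // scale, width // scale)


def soft_argmin(costs):
    """Differentiable argmin over one pixel's matching costs.

    Lower cost means a better match, so the softmax is taken over -cost.
    Returns the expected disparity index under that distribution.
    """
    logits = [-c for c in costs]
    peak = max(logits)  # subtract the maximum for numerical stability
    weights = [math.exp(v - peak) for v in logits]
    norm = sum(weights)
    return sum(d * w for d, w in enumerate(weights)) / norm


# One upsampling step (1/8 resolution) versus two (1/4 resolution)
# for a 368 x 1218 input and a maximum disparity of 192.
print(cost_volume_shape(368, 1218, 192, 8))  # (24, 46, 152)
print(cost_volume_shape(368, 1218, 192, 4))  # (48, 92, 304)

# A clear minimum at index 1 gives an estimate close to 1.
print(soft_argmin([5.0, 0.0, 5.0]))
```

The first shape matches the cost-volume row of Table 6. The second shows that a second upsampling step would double the number of disparity levels and quadruple the number of pixels per level, an eightfold increase in cost-volume elements.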

6.2. Evaluation

7. Discussion

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- [1] Du, Y.C.; Muslikhin, M.; Hsieh, T.H.; Wang, M.S. Stereo Vision-Based Object Recognition and Manipulation by Regions with Convolutional Neural Network. Electronics 2020, 9, 210.

- [2] Nalpantidis, L.; Kostavelis, I.; Gasteratos, A. Stereovision-Based Algorithm for Obstacle Avoidance. Intelligent Robotics and Applications; Xie, M., Xiong, Y., Xiong, C., Liu, H., Hu, Z., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 195–204.

- [3] Pon, A.D.; Ku, J.; Li, C.; Waslander, S.L. Object-Centric Stereo Matching for 3D Object Detection. arXiv 2019, arXiv:1909.07566.

- [4] Zhang, Y.; Chen, Y.; Bai, X.; Yu, S.; Yu, K.; Li, Z.; Yang, K. Adaptive Unimodal Cost Volume Filtering for Deep Stereo Matching. arXiv 2020, arXiv:1909.03751.

- [5] Wang, Y.; Lai, Z.; Huang, G.; Wang, B.H.; van der Maaten, L.; Campbell, M.; Weinberger, K.Q. Anytime Stereo Image Depth Estimation on Mobile Devices. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019.

- [6] Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv 2015, arXiv:1505.04597.

- [7] Hirschmuller, H. Stereo processing by semiglobal matching and mutual information. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 30, 328–341.

- [8] Žbontar, J.; LeCun, Y. Stereo Matching by Training a Convolutional Neural Network to Compare Image Patches. J. Mach. Learn. Res. 2016, 17, 2287–2318.

- [9] Luo, W.; Schwing, A.G.; Urtasun, R. Efficient Deep Learning for Stereo Matching. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 5695–5703.

- [10] Chang, J.; Chen, Y. Pyramid Stereo Matching Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–23 June 2018.

- [11] Scharstein, D.; Szeliski, R. A Taxonomy and Evaluation of Dense Two-Frame Stereo Correspondence Algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.

- [12] Jie, Z.; Wang, P.; Ling, Y.; Zhao, B.; Wei, Y.; Feng, J.; Liu, W. Left-Right Comparative Recurrent Model for Stereo Matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2018, Salt Lake City, UT, USA, 18–23 June 2018.

- [13] Batsos, K.; Mordohai, P. RecResNet: A Recurrent Residual CNN Architecture for Disparity Map Enhancement. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 238–247.

- [14] Kendall, A.; Martirosyan, H.; Dasgupta, S.; Henry, P. End-to-End Learning of Geometry and Context for Deep Stereo Regression. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 66–75.

- [15] Khamis, S.; Fanello, S.R.; Rhemann, C.; Kowdle, A.; Valentin, J.P.C.; Izadi, S. StereoNet: Guided Hierarchical Refinement for Real-Time Edge-Aware Depth Prediction. arXiv 2018, arXiv:1807.08865.

- [16] Hernandez-Juarez, D.; Chacón, A.; Espinosa, A.; Vázquez, D.; Moure, J.C.; López, A.M. Embedded Real-time Stereo Estimation via Semi-Global Matching on the GPU. In Proceedings of the International Conference on Computational Science 2016 (ICCS 2016), San Diego, CA, USA, 6–8 June 2016; pp. 143–153.

- [17] Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012.

- [18] Mayer, N.; Ilg, E.; Hausser, P.; Fischer, P.; Cremers, D.; Dosovitskiy, A.; Brox, T. A Large Dataset to Train Convolutional Networks for Disparity, Optical Flow, and Scene Flow Estimation. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

- [19] Prechelt, L. Early Stopping—But When. In Neural Networks: Tricks of the Trade, 2nd ed.; Montavon, G., Orr, G.B., Müller, K.R., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 53–67.

- [20] Smolyanskiy, N.; Kamenev, A.; Birchfield, S. On the Importance of Stereo for Accurate Depth Estimation: An Efficient Semi-Supervised Deep Neural Network Approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Salt Lake City, UT, USA, 18–22 June 2018.

- [21] Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

- [22] Falcon, W.E.A. PyTorch Lightning. Available online: https://github.com/PytorchLightning/pytorch-lightning (accessed on 10 March 2019).

- [23] Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features from Cheap Operations. arXiv 2020, arXiv:1911.11907.

- [24] Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. arXiv 2017, arXiv:1707.01083.

| | Jetson TX2 (2017) | Jetson Xavier (2018) | Jetson Nano (2019) |
|---|---|---|---|
| Architecture | Pascal | Volta | Maxwell |
| SM | 2 | 8 | 1 |
| CUDA cores per SM | 128 | 64 | 128 |
| CPU | 6 (4 + 2) core ARM | 8 core ARM | 4 core ARM |
| Memory | 8 GB 128-bit LPDDR4 | 16 GB 256-bit LPDDR4x | 4 GB 64-bit LPDDR4 |
| Bandwidth | 58.4 GB/s | 137 GB/s | 25.6 GB/s |
| Storage | 32 GB eMMC | 32 GB eMMC | 16 GB eMMC 5.1 Flash |
| Power | 7.5 W/15 W | 10 W/15 W/30 W | 5 W/10 W |

| Model | Err > 3 | Titan XP | Jetson TX2 | Jetson Xavier | Jetson Nano |
|---|---|---|---|---|---|
| Anytime stage 1 | 0.177 | 4 ms | 39 ms | 13 ms | 77 ms |
| Anytime stage 2 | 0.115 | 6 ms | 60 ms | 19 ms | 107 ms |
| Anytime stage 3 | 0.075 | 10 ms | 105 ms | 33 ms | 200 ms |
| Stereonet x16 | 0.275 | 4 ms | 45 ms | 17 ms | 96 ms |

| Available Layers | FP32 | FP16 | INT8 | INT32 | DLA FP16 | DLA INT8 |
|---|---|---|---|---|---|---|
| 2D Conv | Yes | Yes | Yes | No | Yes | Yes |
| 3D Conv | Yes | Yes | No | No | No | No |
| Batchnorm 2D | Yes | Yes | Yes | No | Yes | Yes |
| Batchnorm 3D | Yes | Yes | Yes | No | Yes | Yes |
| ReLU | Yes | Yes | Yes | No | Yes | Yes |

| GPU Devices | CUDA | FP32 | FP16 | INT8 | FP16 Tensor Cores | INT8 Tensor Cores | DLA |
|---|---|---|---|---|---|---|---|
| AGX Xavier | 7.2 | Yes | Yes | Yes | Yes | Yes | Yes |
| TX2 | 6.2 | Yes | Yes | No | No | No | No |
| Nano | 5.3 | Yes | Yes | No | No | No | No |

| Model | Err > 3 | Jetson TX2 | Jetson Xavier | Jetson Nano |
|---|---|---|---|---|
| Anytime stage 1 | 0.177 | 24 ms | 9 ms | 62 ms |
| Anytime stage 2 | 0.115 | 45 ms | 15 ms | 97 ms |
| Anytime stage 3 | 0.075 | 95 ms | 29 ms | 210 ms |
| Stereonet x16 | 0.275 | 27 ms | 13 ms | 65 ms |

| Jetson TX2 | Feature Extraction (Mostly 2D Convs) | Cost-Volume (Matrix Operations) | Cost-Volume Post Processing (Mostly 3D Convs) | Regression (SoftArgMin) |
|---|---|---|---|---|
| Anytime Stage 1 without optimizations | 0.011921 s | 0.011517 s | 0.013466 s | 0.002360 s |
| Anytime Stage 1 with optimizations | 0.009067 s | 0.000346 s | 0.013466 s | 0.001414 s |
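The per-stage timings in the Jetson TX2 breakdown above can be turned into per-stage speedups and end-to-end totals with a few lines of arithmetic. The sketch below only re-uses the numbers from that table; the variable and function names are illustrative.

```python
stages = ["feature extraction", "cost-volume", "postprocessing", "regression"]

# Seconds per stage for Anytime stage 1 on the Jetson TX2, copied from the table.
without_opt = [0.011921, 0.011517, 0.013466, 0.002360]
with_opt = [0.009067, 0.000346, 0.013466, 0.001414]


def total_ms(times):
    """End-to-end time in milliseconds for a list of per-stage seconds."""
    return sum(times) * 1000.0


def speedups(before, after):
    """Per-stage speedup factor (before / after)."""
    return [b / a for b, a in zip(before, after)]


for name, factor in zip(stages, speedups(without_opt, with_opt)):
    print(f"{name}: {factor:.2f}x")

print(f"total without optimizations: {total_ms(without_opt):.1f} ms")
print(f"total with optimizations: {total_ms(with_opt):.1f} ms")
```

The two totals round to 39 ms and 24 ms, in line with the Anytime stage 1 runtimes reported for the Jetson TX2 without and with optimizations. Most of the gain comes from the cost-volume stage, which becomes roughly 33 times faster, while the 3D-convolution postprocessing time is unchanged.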

| # | Layer (type) | Output Shape | # Params |
|---|---|---|---|
| 1 | Conv 2D 3 × 3 | 1 × 368 × 1218 | 28 |
| 2 | BatchNorm 2D | 1 × 368 × 1218 | 2 |
| 3 | ReLU | 1 × 368 × 1218 | 0 |
| 4 | MaxPool 2D | 1 × 92 × 304 | 0 |
| 5 | Bn -> ReLU -> Conv 2D | 2 × 92 × 304 | 20 |
| 6 | Bn -> ReLU -> Conv 2D | 2 × 92 × 304 | 40 |
| 7 | MaxPool 2D | 2 × 46 × 152 | 0 |
| 8 | Bn -> ReLU -> Conv 2D | 4 × 46 × 152 | 76 |
| 9 | Bn -> ReLU -> Conv 2D | 4 × 46 × 152 | 152 |
| 10 | MaxPool 2D | 4 × 23 × 76 | 0 |
| 11 | Bn -> ReLU -> Conv 2D | 8 × 23 × 76 | 296 |
| 12 | Bn -> ReLU -> Conv 2D | 8 × 23 × 76 | 592 |
| 13 | Bilinear upsample | 8 × 46 × 152 | 0 |
| 14 | Concat 13 and 9 | 12 × 46 × 152 | 0 |
| 15 | Bn -> ReLU -> Conv 2D | 8 × 46 × 152 | 888 |
| 16 | Bn -> ReLU -> Conv 2D | 8 × 46 × 152 | 592 |
| 17 | Cost-volume | 24 × 46 × 152 | 0 |
| 18 | MaxPool 2D | 24 × 23 × 76 | 0 |
| 19 | Bn -> ReLU -> Conv 2D | 24 × 23 × 76 | 5232 |
| 20 | Bn -> ReLU -> Conv 2D | 24 × 23 × 76 | 5232 |
| 21 | Bn -> ReLU -> Conv 2D | 24 × 23 × 76 | 5232 |
| 22 | Bilinear upsample | 24 × 46 × 152 | 0 |
| 23 | Concat 22 and 17 | 48 × 46 × 152 | 0 |
| 24 | Bn -> ReLU -> Conv 2D | 24 × 46 × 152 | 10,464 |
| 25 | Bn -> ReLU -> Conv 2D | 24 × 46 × 152 | 5232 |
| 26 | Bn -> ReLU -> Conv 2D | 24 × 46 × 152 | 5232 |
| 27 | Soft argmin | 1 × 46 × 152 | 0 |
| 28 | Bilinear upsample | 1 × 368 × 1218 | 0 |
| Total | | | 39,319 |

| Model | Err > 3 | Jetson TX2 | Jetson Xavier | Jetson Nano |
|---|---|---|---|---|
| Baseline 3D | 0.090 | 162 ms | 46 ms | 439 ms |
| Baseline 2D | 0.210 | 14 ms | 5 ms | 32 ms |
| Proposed model | 0.112 | 14 ms | 5 ms | 32 ms |

| Jetson Nano | Feature Extraction (Mostly 2D Convs) | Cost-Volume (Matrix Operations) | Cost-Volume Post Processing (Mostly 3D Convs) | Regression (SoftArgMin) |
|---|---|---|---|---|
| Baseline 3D | 0.025766 s | 0.000501 s | 0.410672 s | 0.002663 s |
| Baseline 2D | 0.025766 s | 0.000501 s | 0.002911 s | 0.002663 s |
| Proposed model | 0.025766 s | 0.000501 s | 0.003136 s | 0.002663 s |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Aguilera, C.A.; Aguilera, C.; Navarro, C.A.; Sappa, A.D. Fast CNN Stereo Depth Estimation through Embedded GPU Devices. Sensors 2020, 20, 3249. https://doi.org/10.3390/s20113249
