A Machine Learning Method for Vision-Based Unmanned Aerial Vehicle Systems to Understand Unknown Environments

Abstract

1. Introduction

2. Related Work

2.1. Methods for Understanding the Environment

2.2. Detection Ability of UAVs

2.3. Machine Learning Algorithm for Detection

3. Materials and Methods

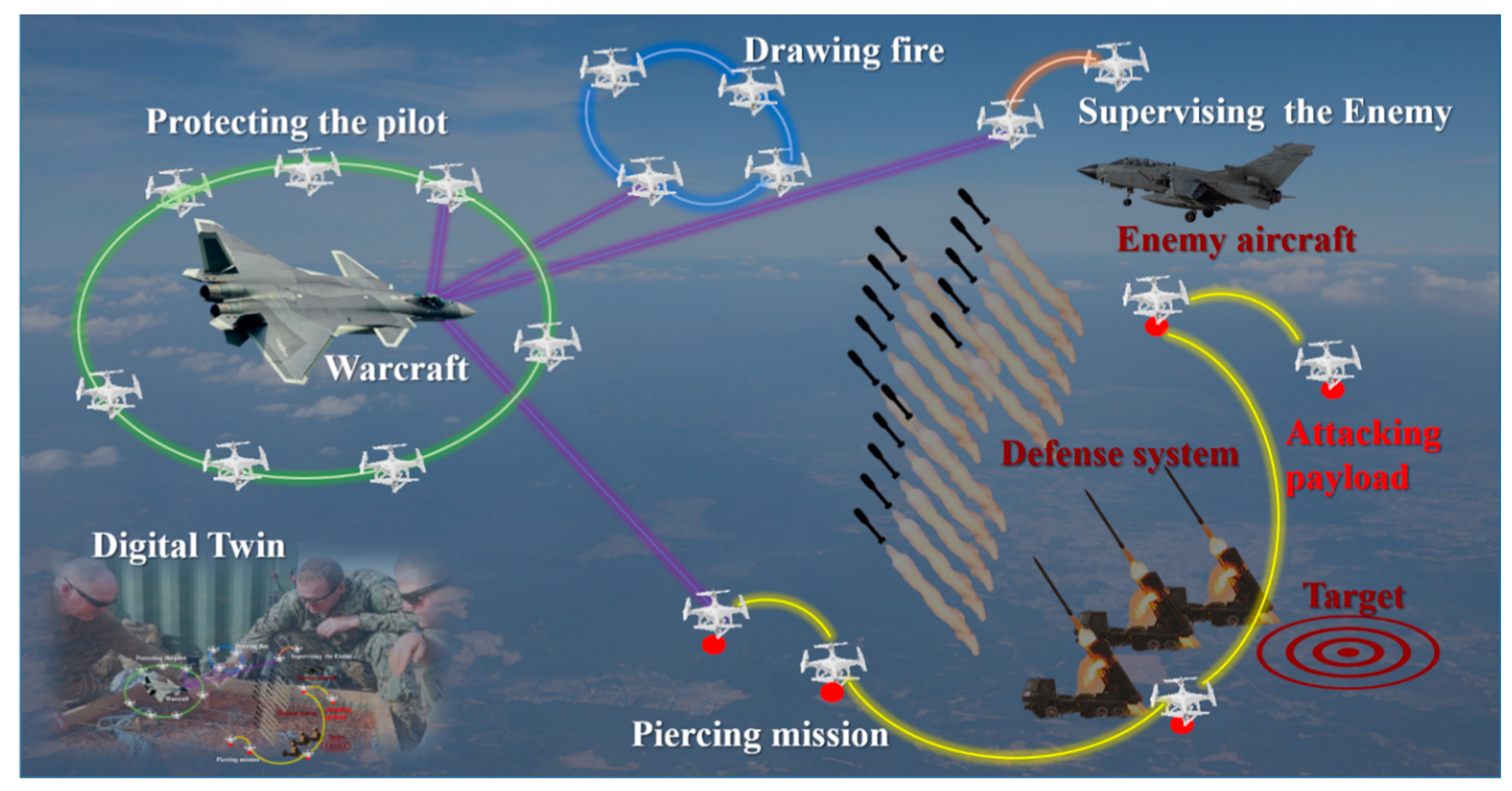

3.1. Problem Formulation

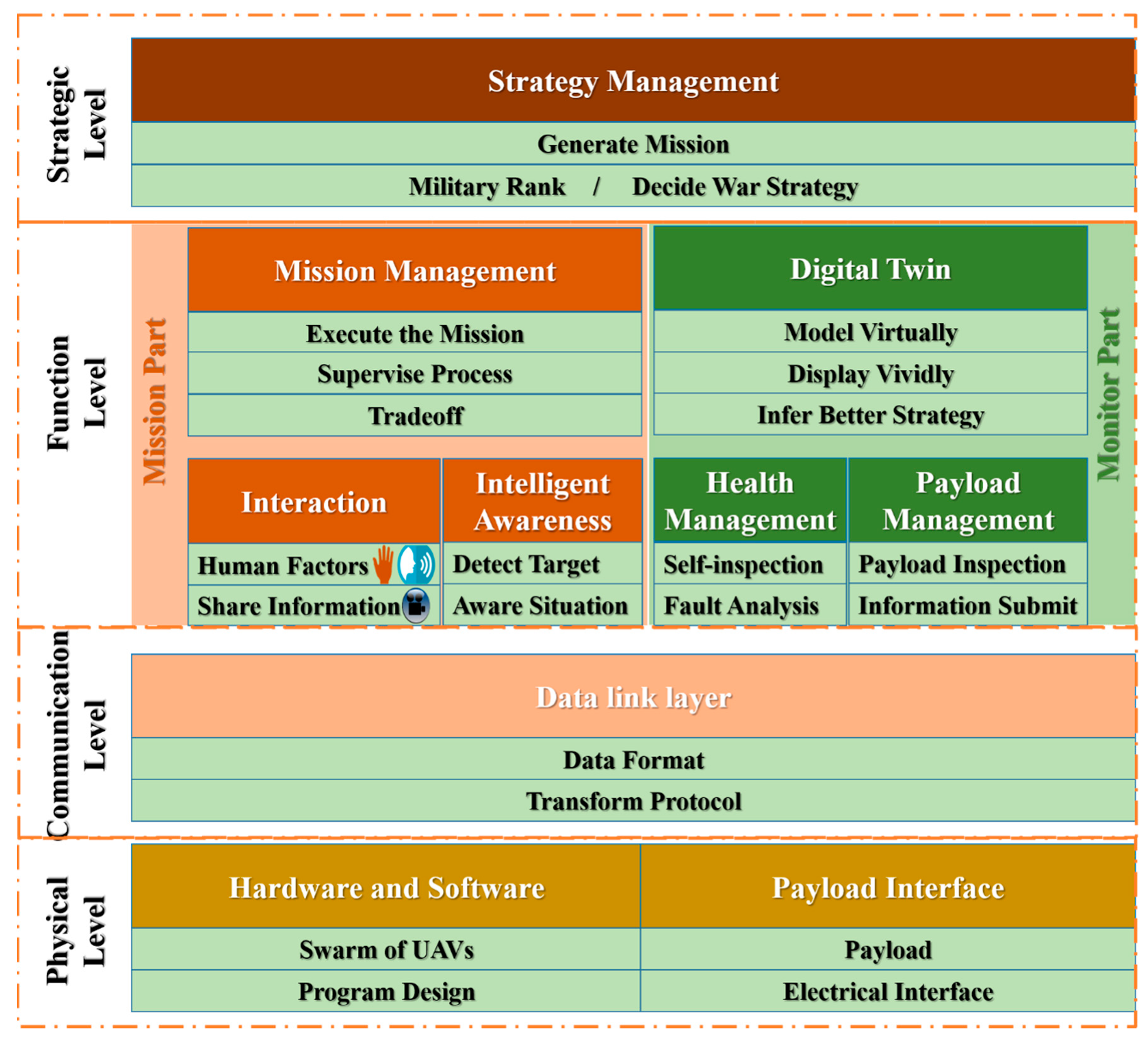

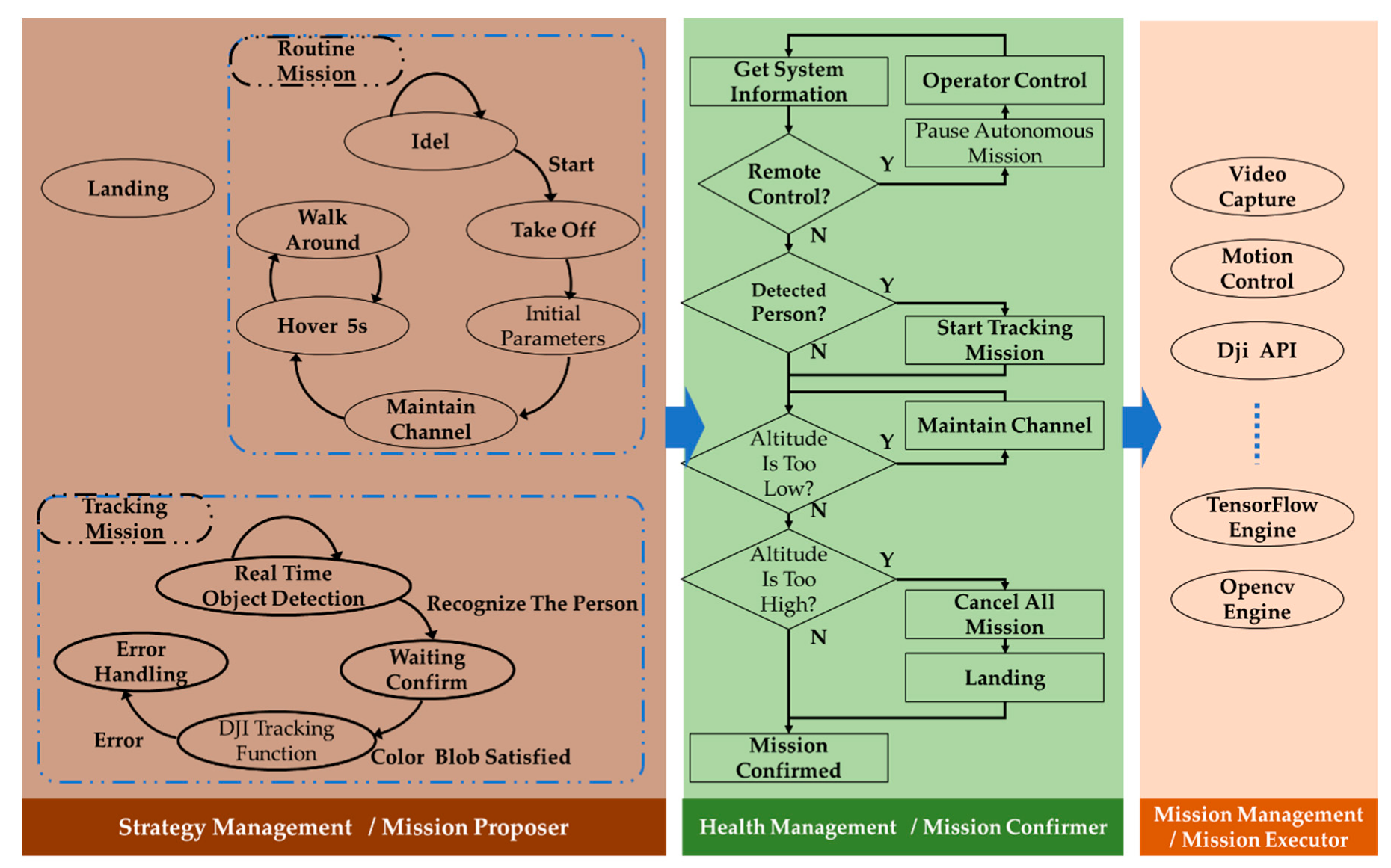

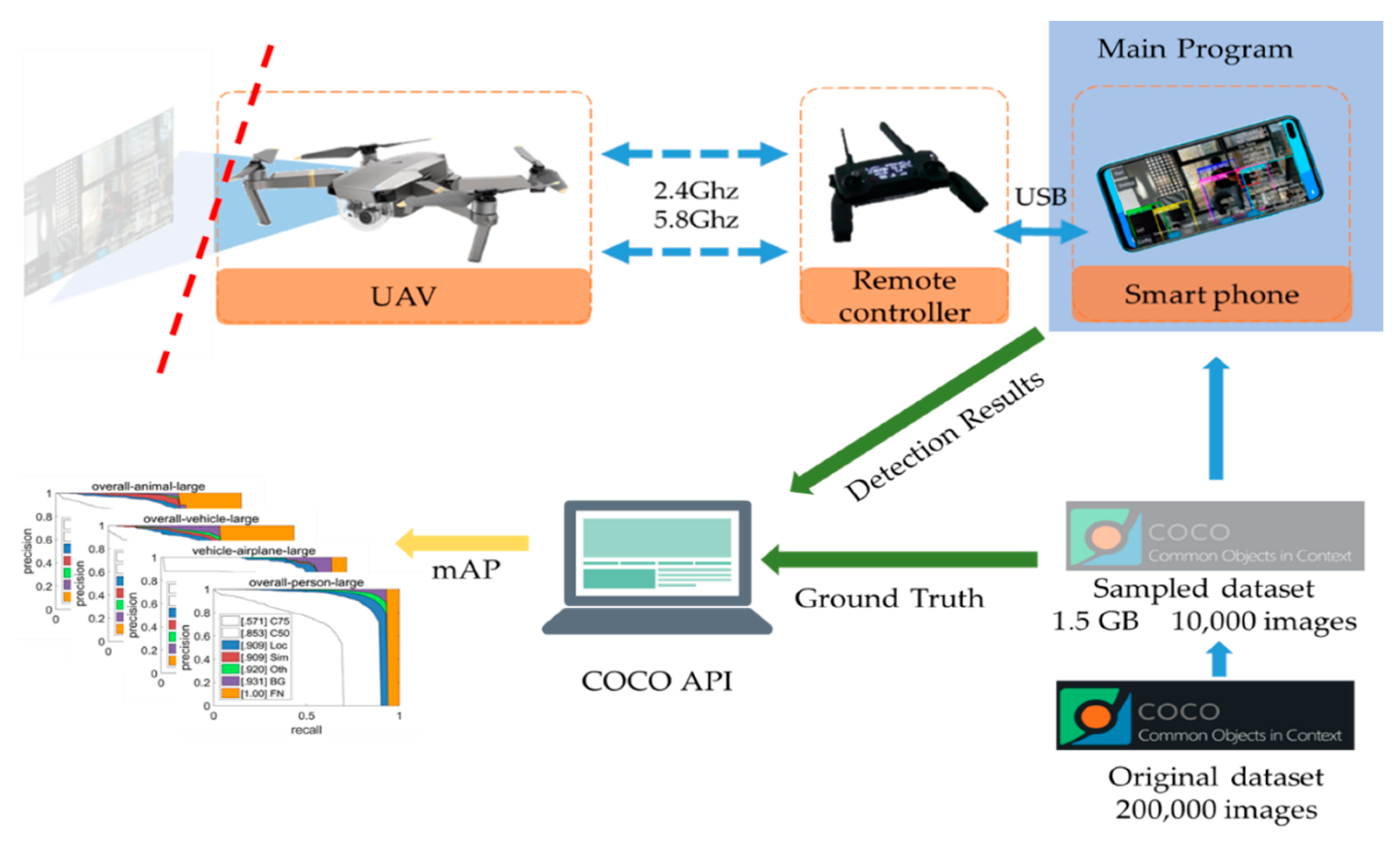

3.2. Architecture of the System

3.3. Data Stream Pipeline

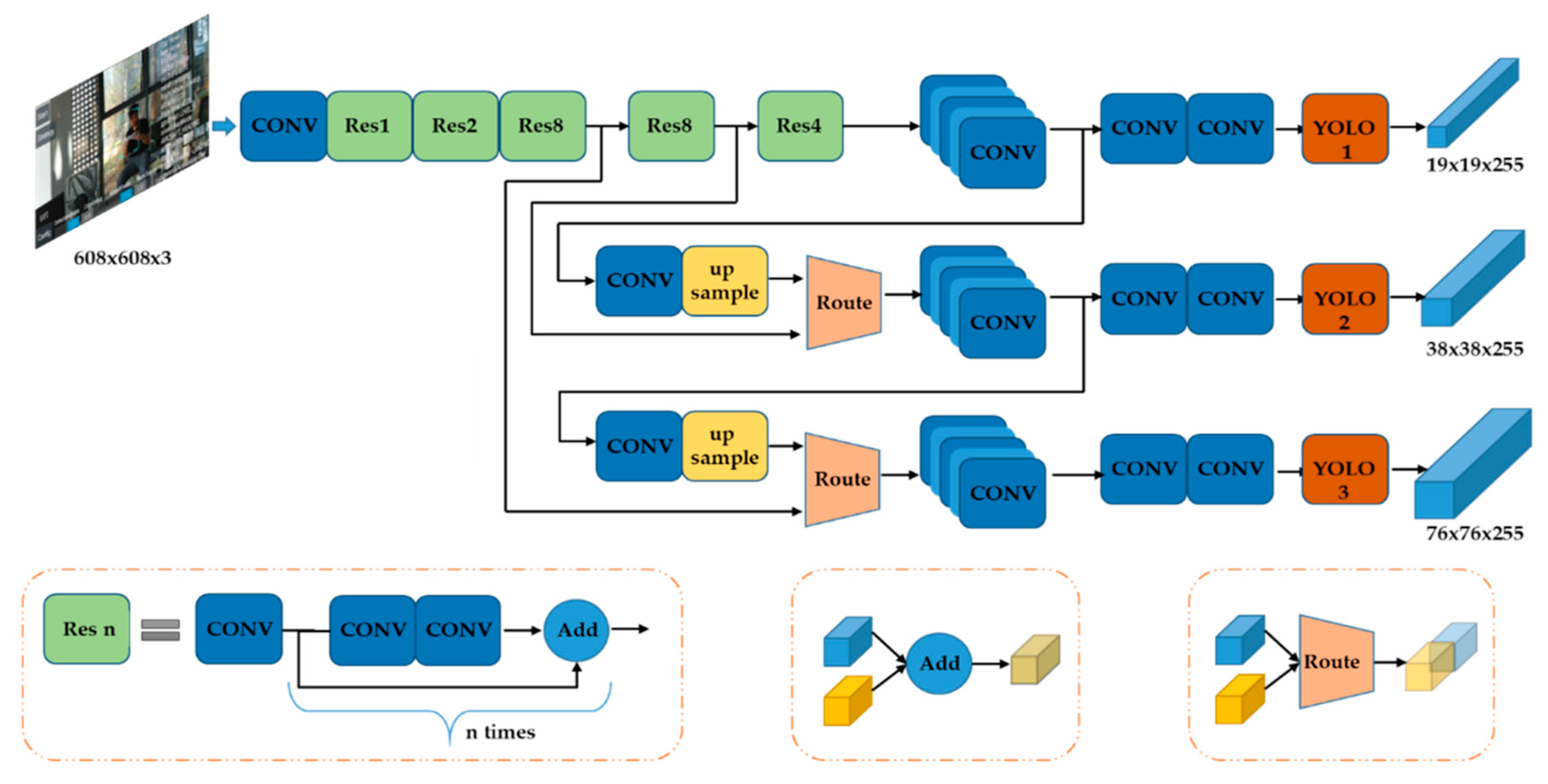

3.4. Understanding Part of the Method

Algorithm 1. Color Blob Detection.

Require: Real-time RGB image; the hue value of the ROI (region of interest); the hue section
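
The inputs listed for Algorithm 1 (an RGB frame, a target hue, and a hue section) suggest a hue-threshold detector. The following is a minimal sketch of that idea, not the authors' implementation: it assumes the "hue section" is a half-width tolerance in degrees around the ROI hue, it adds hypothetical `min_sat` and `min_val` guards so that gray or dark pixels are not matched, and it treats all matching pixels as a single blob instead of separating connected regions. The function name `detect_color_blob` and the nested-list image format are illustrative choices; a practical system would more likely use an image-processing library.

```python
import colorsys


def detect_color_blob(image, roi_hue, hue_section, min_sat=0.3, min_val=0.3):
    """Return centroid, bounding box and pixel count of the hue-matched region.

    image       -- rows of (r, g, b) tuples with channels in 0..255
    roi_hue     -- target hue in degrees, 0..360
    hue_section -- assumed half-width of the accepted hue band, in degrees
    min_sat, min_val -- hypothetical guards that reject gray or dark pixels
    """
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            # Hue is circular, so measure the shorter way around the wheel.
            diff = abs(h * 360.0 - roi_hue) % 360.0
            diff = min(diff, 360.0 - diff)
            if diff <= hue_section and s >= min_sat and v >= min_val:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None  # no pixel fell inside the hue band
    return {
        "centroid": (sum(xs) / len(xs), sum(ys) / len(ys)),
        "bbox": (min(xs), min(ys), max(xs), max(ys)),
        "area": len(xs),
    }


# Tiny synthetic frame: a 2 x 2 red patch on a black background.
BLACK, RED = (0, 0, 0), (255, 0, 0)
frame = [[BLACK] * 4 for _ in range(4)]
for y in (1, 2):
    for x in (2, 3):
        frame[y][x] = RED

blob = detect_color_blob(frame, roi_hue=0, hue_section=10)
```

Because hue wraps around at 360 degrees, the distance is taken the shorter way around the color wheel, so a red target near 355 degrees still matches pixels whose hue is 0.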

3.5. Fusion at the Action Level

4. Experiment Setup

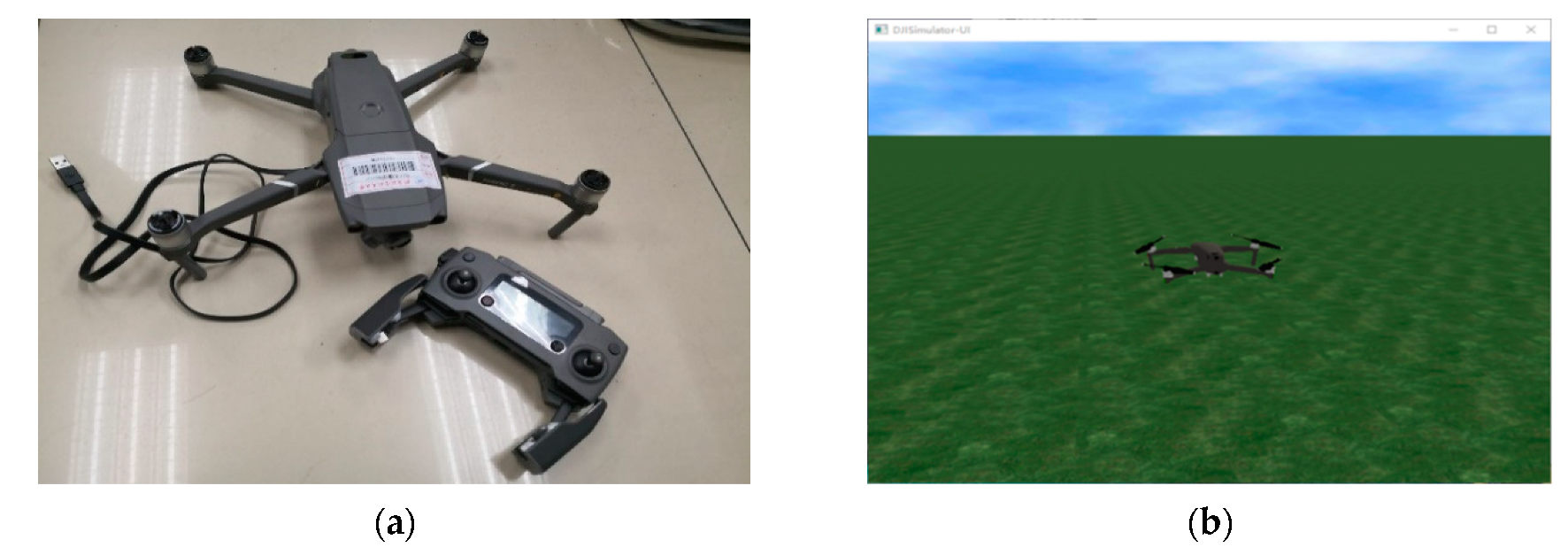

4.1. Experimental Testbed

4.2. Semi-Physical Simulation Testbed

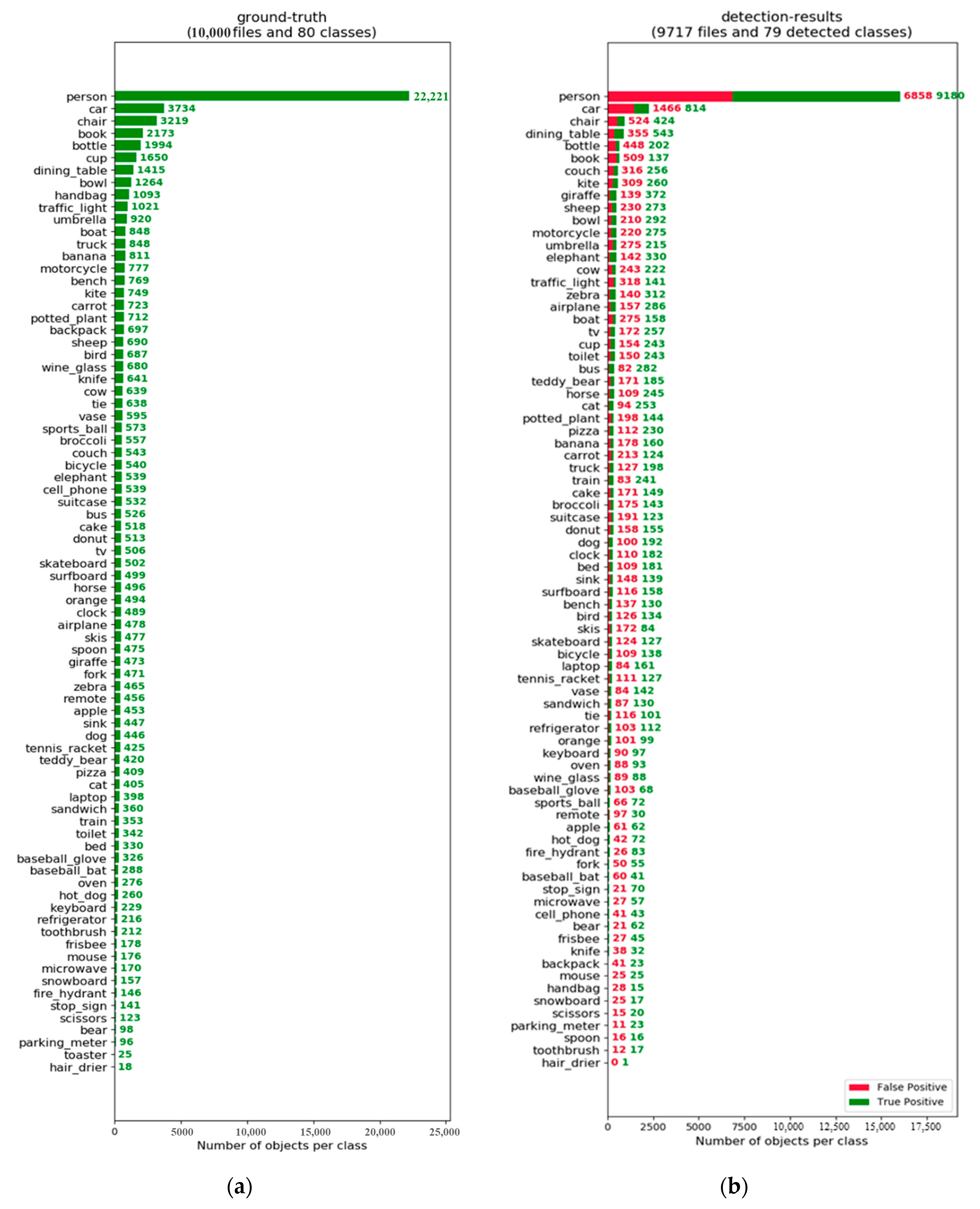

4.3. Detection Evaluation Setup

4.4. Task Setups

5. Results

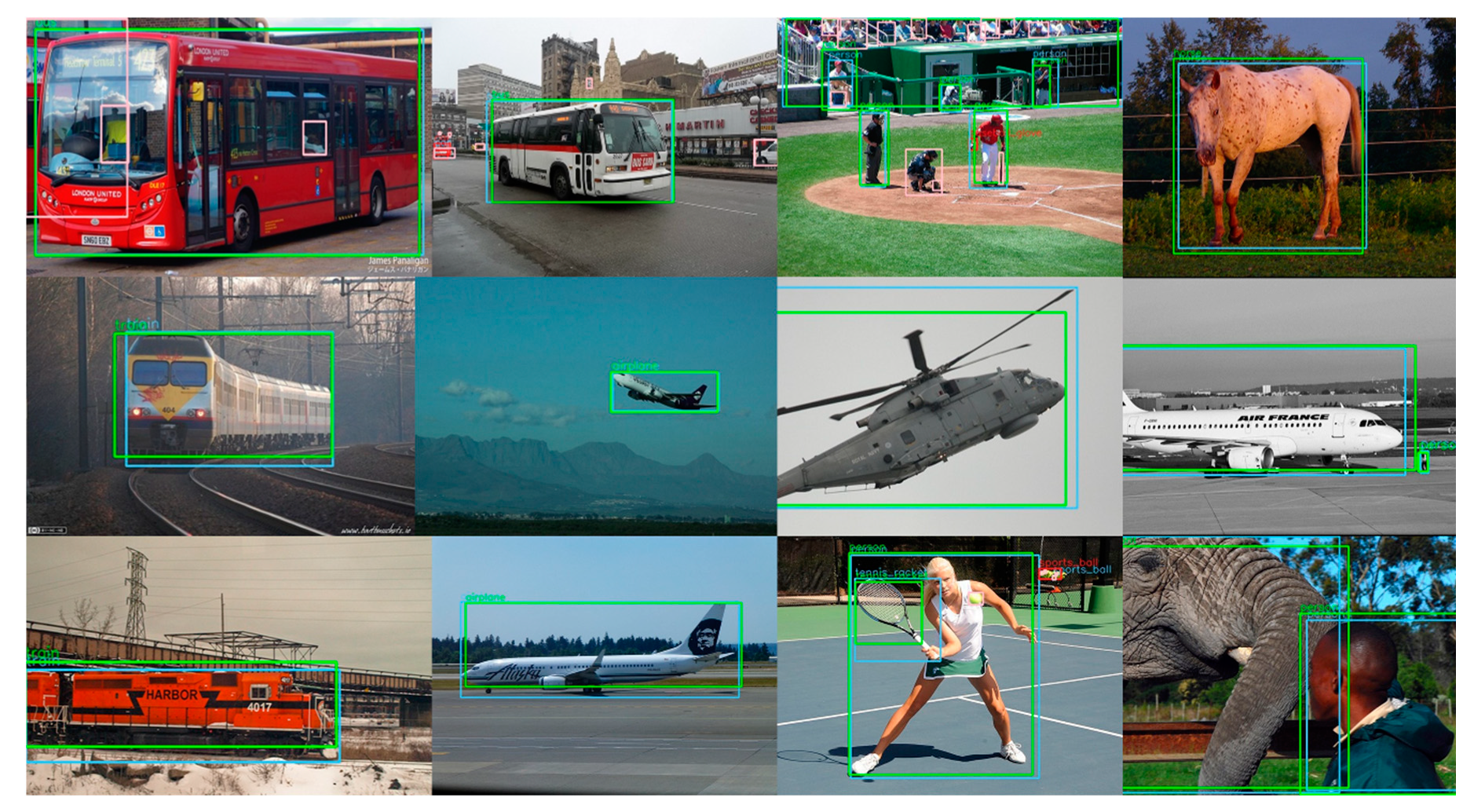

5.1. Quantitative Evaluation

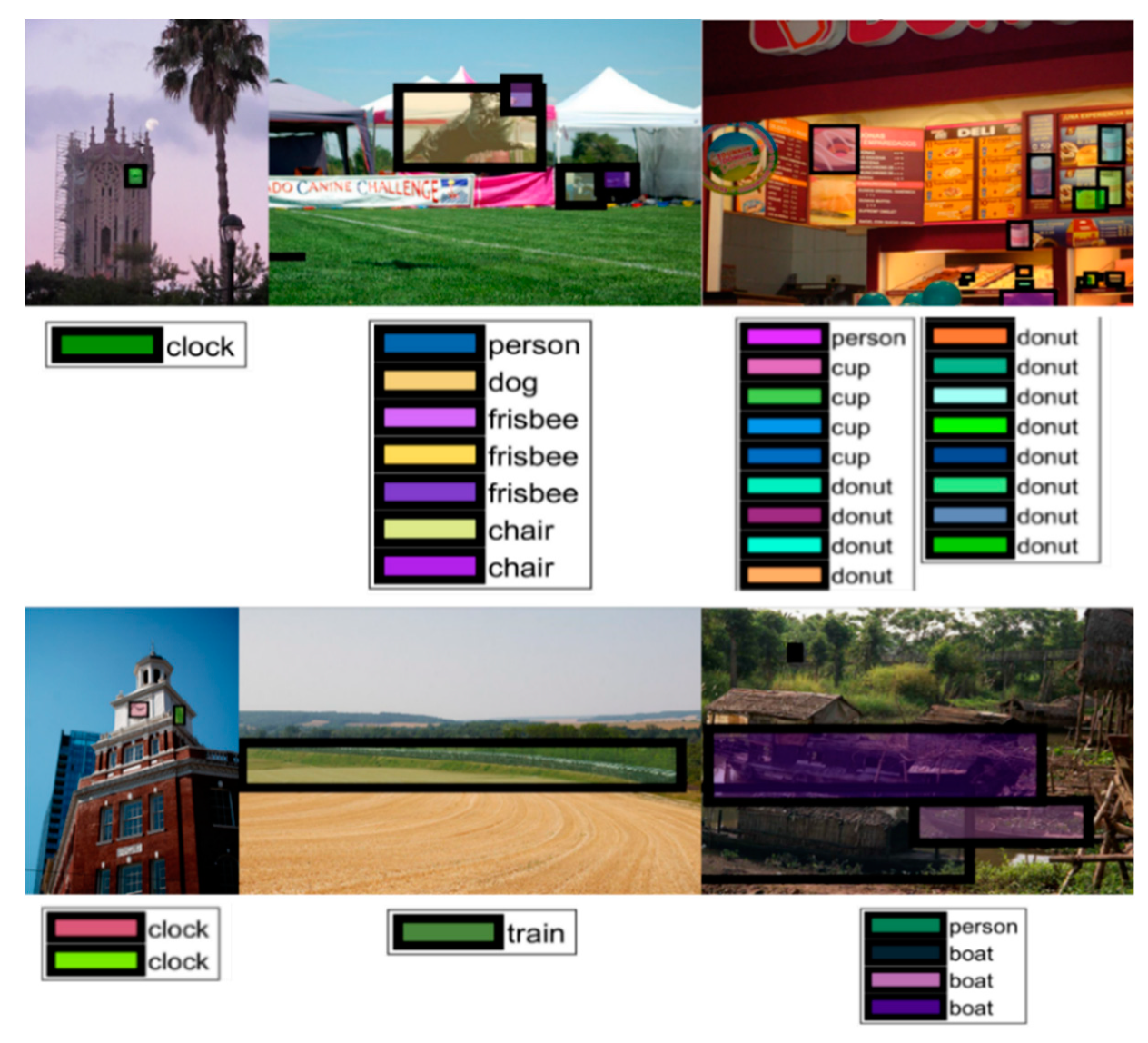

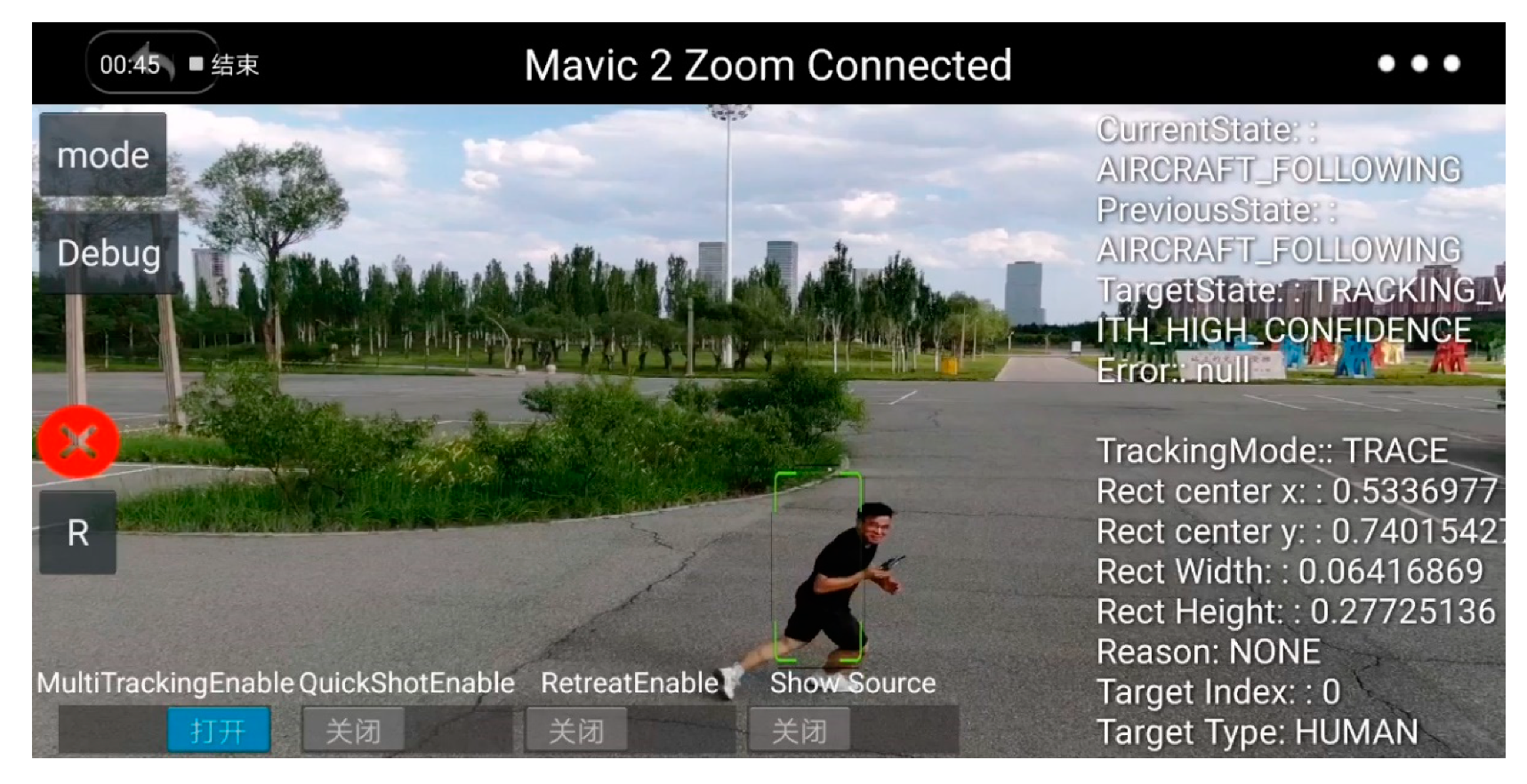

5.1.1. Detection Accuracy Analysis

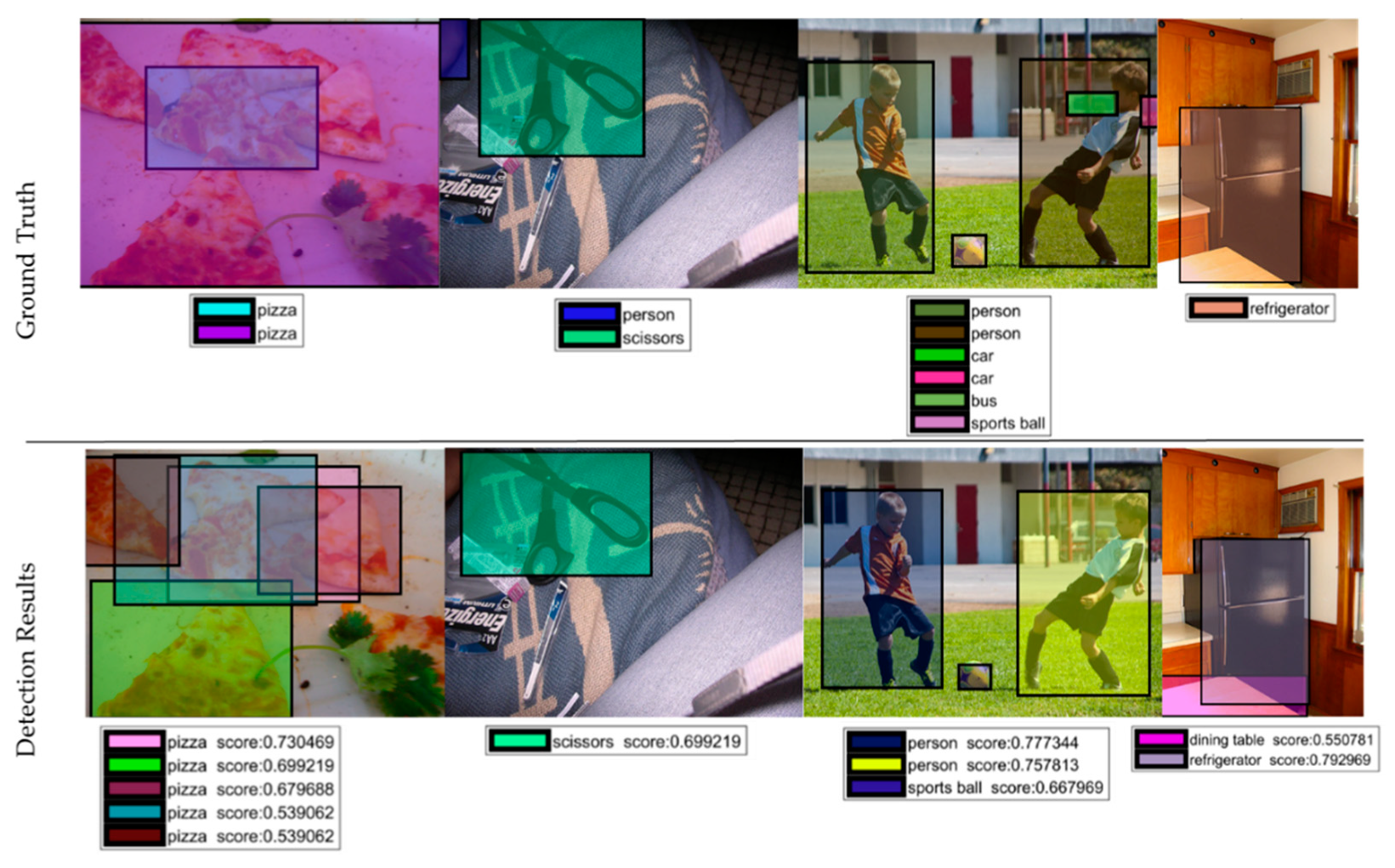

5.1.2. Real-Time Performance Analysis

5.2. System Analysis

5.2.1. Generality

5.2.2. Transportability

5.2.3. Scalability

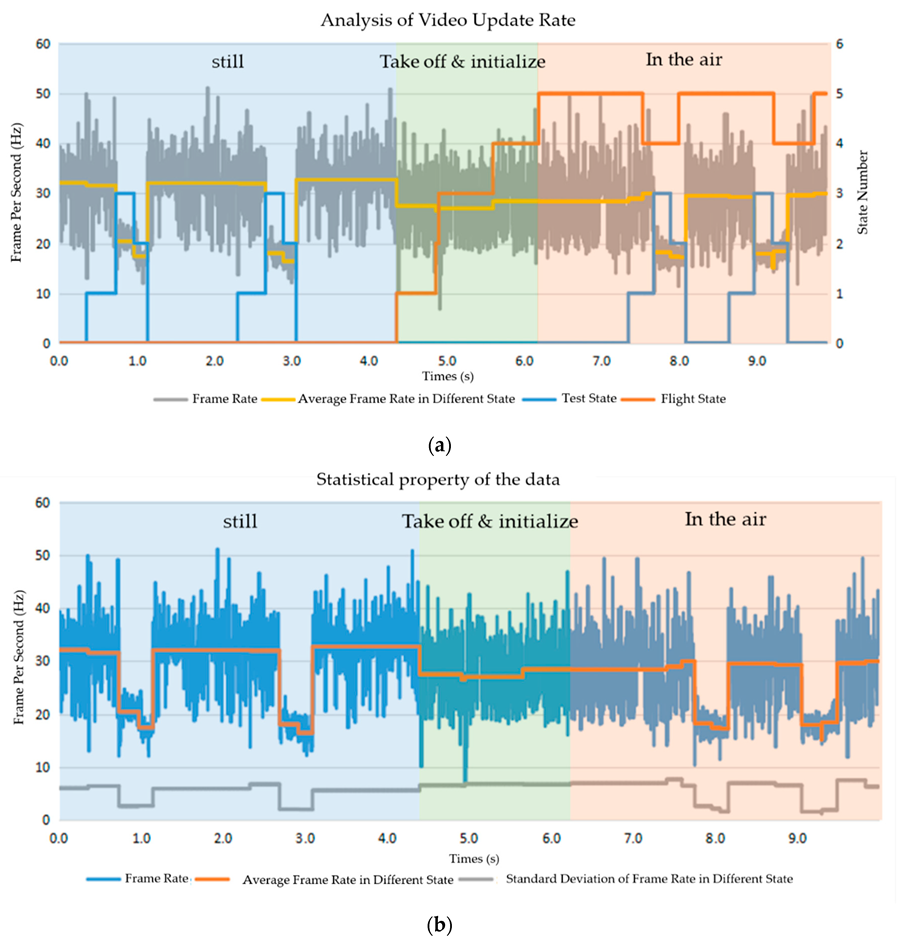

5.2.4. Interactivity

6. Discussion

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A


| Model | Size, Weight | Sensors | MSED 1 | Lifetime | Video |
|---|---|---|---|---|---|
| Mavic Pro | 83 × 83 × 198 mm, 734 g (with battery) | Gyroscope, accelerometer, binocular system, camera with gimbals | FCC: 7000 m; CE: 4000 m; SRRC: 4000 m | 27 min, 3830 mAh | 720p @ 30 fps; 1080p @ 30 fps |
| Mavic Air | 168 × 184 × 64 mm, 430 g (with battery) | Gyroscope, accelerometer, binocular system, camera with gimbals | FCC: 4000 m; CE: 2000 m; SRRC: 2000 m; MIC: 2000 m | 21 min, 2375 mAh | 720p @ 30 fps |

| State Number | Flight State | Test State |
|---|---|---|
| 0 | IDLE | None |
| 1 | Take off | Detect Color Blob |
| 2 | Initial Parameters | Detect Object |
| 3 | Maintain Channel | Detect Color Blob and Object |
| 4 | Hover | - |
| 5 | Walk Around | - |
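
The state numbers in this table can be mirrored in code as two enumerations. The sketch below is only one possible encoding and is not taken from the system itself: the class names `FlightState` and `DetectionMode` and the helper `detectors_for` are hypothetical. It relies on the observation that test state 3 ("Detect Color Blob and Object") equals 1 combined with 2, so the test states can be read as bit flags; whether the original system encodes them that way is an assumption.

```python
from enum import IntEnum, IntFlag


class FlightState(IntEnum):
    """Flight states, numbered as in the table."""
    IDLE = 0
    TAKE_OFF = 1
    INITIAL_PARAMETERS = 2
    MAINTAIN_CHANNEL = 3
    HOVER = 4
    WALK_AROUND = 5


class DetectionMode(IntFlag):
    """Test states 0..3, read here as bit flags (an assumption)."""
    NONE = 0
    COLOR_BLOB = 1
    OBJECT = 2
    COLOR_BLOB_AND_OBJECT = 3  # same bits as COLOR_BLOB | OBJECT


def detectors_for(state_number):
    """List the detectors that a given test state number switches on."""
    mode = DetectionMode(state_number)
    names = []
    if mode & DetectionMode.COLOR_BLOB:
        names.append("color_blob")
    if mode & DetectionMode.OBJECT:
        names.append("object")
    return names


both = DetectionMode.COLOR_BLOB | DetectionMode.OBJECT
```

With this encoding, `detectors_for(3)` enables both detectors while `detectors_for(0)` enables none, which matches the "None" and "Detect Color Blob and Object" rows.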

| Model | Smart Phone | AP 2 | AP50 3 | AP75 4 | APS 5 | APM 6 | APL 7 |
|---|---|---|---|---|---|---|---|
| YOLOv2 1 | - | 21.6 | 44.0 | 19.2 | 5.0 | 22.4 | 35.5 |
| SSD513 1 | - | 31.2 | 50.4 | 33.3 | 10.2 | 34.5 | 49.8 |
| DSSD513 1 | - | 33.2 | 53.3 | 35.2 | 13.0 | 35.4 | 51.1 |
| RetinaNet 1 | - | 40.8 | 61.1 | 44.1 | 24.1 | 44.2 | 51.2 |
| YOLOv3 (608 × 608) 1 | - | 33.0 | 57.9 | 34.4 | 18.3 | 35.4 | 41.9 |
| Ours (320 × 320) | YES | 18.6 | 30.5 | 19.9 | 0.07 | 14.6 | 46.8 |
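
The table's footnotes did not survive, so the following assumes that the AP50 and AP75 columns follow the usual COCO convention, in which a detection counts as correct when its intersection over union (IoU) with a ground-truth box is at least 0.50 or 0.75 respectively. The sketch shows only that matching criterion; a full AP computation also ranks detections by confidence and averages precision over recall. The function names `iou` and `is_match` and the `(x1, y1, x2, y2)` box format are illustrative choices.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) axis-aligned boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, clamped to zero when boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def is_match(pred, truth, threshold):
    """True when the predicted box overlaps the ground truth enough."""
    return iou(pred, truth) >= threshold


truth = (0, 0, 10, 10)
pred = (0, 0, 10, 6)  # covers 60% of the ground-truth box
overlap = iou(pred, truth)
```

In this example the prediction has an IoU of 0.6, so it would count as a hit under the 0.50 threshold but as a miss under the stricter 0.75 threshold, which is why AP75 values are typically lower than AP50 values for the same detector.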

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, T.; Hu, X.; Xiao, J.; Zhang, G. A Machine Learning Method for Vision-Based Unmanned Aerial Vehicle Systems to Understand Unknown Environments. Sensors 2020, 20, 3245. https://doi.org/10.3390/s20113245
