Abstract

A light detection and ranging (LiDAR) sensor can obtain richer and more detailed traffic flow information than traditional traffic detectors, which could be valuable data input for various novel intelligent transportation applications. However, the point cloud generated by LiDAR scanning not only includes road user points but also other surrounding object points. It is necessary to remove the worthless points from the point cloud by using a suitable background filtering algorithm to accelerate the micro-level traffic data extraction. This paper presents a background point filtering algorithm using a slice-based projection filtering (SPF) method. First, a 3-D point cloud is projected to 2-D polar coordinates to reduce the point data dimensions and improve the processing efficiency. Then, the point cloud is classified into four categories in a slice unit: Valuable object points (VOPs), worthless object points (WOPs), abnormal ground points (AGPs), and normal ground points (NGPs). Based on the point cloud classification results, the traffic objects (pedestrians and vehicles) and their surrounding information can be easily identified from an individual frame of the point cloud. We proposed an artificial neuron network (ANN)-based model to improve the adaptability of the algorithm in dealing with the road gradient and LiDAR-employing inclination. The experimental results showed that the algorithm of this paper successfully extracted the valuable points, such as road users and curbstones. Compared to the random sample consensus (RANSAC) algorithm and 3-D density-statistic-filtering (3-D-DSF) algorithm, the proposed algorithm in this paper demonstrated better performance in terms of the run-time and background filtering accuracy.

1. Introduction

As a type of active vision sensor, light detection and ranging (LiDAR) is a 3-D point cloud imaging vision sensor with the advantages of insensitivity to external light changes, strong adaptability to complex environments, wide coverage, and is informative. Currently, the most typical application of LiDAR in intelligent transportation systems is to detect road and traffic information for automatic driving vehicles [1,2,3,4]. Despite the rapid development of technologies related to self-driving cars in recent years, we cannot realize automatic driving by merely using on-board detectors. Fatal accidents may occur if the detectors of an autonomous vehicle make errors. For example, Tesla and Uber have experienced fatal accidents during their self-driving tests [5,6]. Therefore, it is very important for roadside equipment to monitor road traffic in mixed connected and unconnected traffic conditions. Roadside equipment sends road information to road users to form a cooperative vehicle infrastructure system that can improve traffic efficiency and safety [7].

At present, many roadside detection devices collect traffic information from cameras [4,8,9]. The cameras perceive traffic flow and road surface information by obtaining the brightness in combination with red, green and blue (RGB). However, they cannot directly obtain the depth information. Some algorithms were developed to convert the image information into the corresponding depth information [10], which carried out the 3-D detection and trajectory tracking of road users. However, there are some accuracy risks, as the data provided by a camera can be easily affected by light, and the accuracy at night is relatively low. On the contrary, LiDAR can directly obtain the credible depth information without the influence of light and can accurately obtain the location of objects and their 3-D shape features. In this regard, more benefits can be obtained by deploying a roadside LiDAR sensor (also called an infrastructure-based LiDAR sensor).

The LiDAR sensor can obtain richer and more detailed information of traffic flow characteristics and parameters than traditional traffic detectors, such as video, infrared, radar, radio frequency, and geomagnetic sensors. It is possible to extract the high-resolution fine-grained position, direction, speed, and even the trajectory of each vehicle or pedestrian within the LiDAR scanning range. The micro-level traffic data detected by a roadside LiDAR sensor could be a valuable data input for a vehicle–infrastructure cooperation system, connected/autonomous vehicle systems, vehicle-to-pedestrian crash reduction analysis, smart traffic signals, and other various traffic application fields [11].

In addition, compared with the high-channel LiDAR that are currently deployed on autonomous vehicles or other traditional traffic flow sensors, the low-channel LiDAR has already proved its great potential in traffic information collection due to the low cost, high accuracy and reliability, high reusability, and portability [12,13]. For example, a magnetometer is one of the commonly used traffic flow sensors. The price of a magnetometer is about U.S. $400 per unit in the area of traffic engineering in China. Installing a magnetometer in each lane is required to detect traffic flow parameters. Generally, if considering two or three lanes in each direction of the road, four or six magnetometers are usually needed to realize the overall traffic flow detection on the road segment.

However, only one LiDAR sensor is needed to completely detect the traffic flow parameters on a road segment or at an intersection due to the 360-degree detection coverage. In addition, the price of low-channel LiDAR sensors is expected to drop significantly to about U.S.$100 if massive production is realized in the near future. Therefore, the overall cost of the solutions based on low-channel LiDAR sensors will be lower than most current traffic flow detectors. In this regard, the low-channel LiDAR sensor will have a broad application in the fields of traffic flow detection, early safety warnings of people or vehicles in the mixed environment of connected and unconnected traffic, and many others.

Real-time detecting and tracking of fast-moving vehicles is very important for transportation applications. For example, a fast algorithm can give a timely alarm when a vehicle and other objects are about to collide. For another example, if the algorithm can quickly perceive dangerous situations and release safety messages to other vehicles in time when the vehicle suddenly brakes or has fatal faults, it can reduce the occurrence of accidents as much as possible. The point cloud generated by LiDAR scanning is very informative, including buildings, trees, road users, and the ground. The amount of points used to describe road users in a point cloud is usually less than 1/10. The amount of points directly affects the computational speed and operational efficiency in identifying and tracking road users [14]. Therefore, it is necessary to remove worthless points from the point cloud by using a suitable background filtering algorithm.

Academic researchers and industry engineers are developing many background point filtering algorithms for on-board LiDAR data. Asma [15] used an octree-based occupancy grid representation to model the dynamic environment around the vehicle and to detect moving objects based on inconsistencies between scans. Alireza [16] built a voxel-grid model for static and moving obstacles’ detection by using discriminative analysis and ego-motion information. Dewan [17] presented a novel model-free approach for detecting and tracking dynamic objects in 3-D LiDAR scans obtained by a moving sensor. This relied only on motion cues and did not require any prior information regarding the objects. These methods mentioned above can all remove background points to obtain moving object information around the vehicle.

There is some valuable information for the vehicle in static objects, such as curbstones or other ground information. Zhang [18] developed a real-time curb detection method for self-driving based on the road segmentation. The point cloud data were first processed to distinguish on-road and off-road areas. Then, they proposed a sliding-beam method to segment the road by using the off-road data. The curb information formed the foundation of the decision-making and path planning for autonomous driving.

Multiple LiDARs outperform the single LiDAR in terms of object classification [19]. Therefore, Cao [20] proposed the occupancy-rate-based filtering method for filtering point clouds generated by fusing multiple LiDARs. Although numerous background filtering algorithms of on-board LiDAR have been developed, those algorithms cannot be applied to infrastructure-based LiDAR sensors directly as the infrastructure-based LiDAR and on-board LiDAR differ in the data quality, density, and expected application targets. In particular, on-board LiDAR are designed to detect the environment around the vehicle, which needs to work with video cameras, high-resolution 3-D maps, and high-precision global position system (GPS) to provide supportive data. Infrastructure-based LiDAR works individually to detect wild range traffic flow information, such as a whole intersection [17,18].

With a roadside LiDAR sensor, Wu [21] identified ground points by a channel-based filtering algorithm, which is a combination of raster-based clustering, distance-based/neighborhood-based filtering, and a slope-based method. The algorithm worked well for both flat roads and non-flat roads. Vosselman [22] differentiated point clouds into ground points and non-ground points according to the height difference between each. Konolige [23] used the random sample consensus (RANSAC) algorithm to select many points and selected a plane that was the most close to selected points as the ground plane.

Zhang [24] proposed the slope-based algorithm to distinguish ground points and non-ground points by the points’ differences in characteristics. Ground surfaces are relatively continuous and smooth while the shapes of non-ground features, such as trees and buildings, have a sharp change in the slope. However, those methods mentioned above only distinguish ground points and non-ground points. We still need to extract valuable objects (such as road users) from the point cloud. Wu [25] also introduced an automatic background filtering method named 3-D density-statistic-filtering (3-D-DSF). This method can filter both static background and vibrating backgrounds efficiently by the threshold of cube-point-density (TD) for learning automatically.

For the 3-D-DSF method, all static background points were removed from the data clouds, including curbstones, tree trunks, and other fixed background objects. As a result, some valuable points for traffic were also filtered out of the data set. Furthermore, these algorithms were based on multi-frame point cloud data, which are not suitable for on-line traffic object identification and trajectory tracking. In addition, there are other filtering algorithms based on high-density point clouds [26,27,28]. However, it is difficult to deploy those advanced LiDAR sensors (HDL-64 or VLS-128) for infrastructure-based LiDAR due to their expensive costs.

For better fitting of on-line point cloud filtering for low-channel infrastructure-based LiDAR, we proposed a novel slice-based projection filtering (SPF) algorithm. The aim of the method is to retain as much valuable information and remove as much worthless information as possible in a shorter processing time. The main work of this paper focuses on:

- (1)

- A 3-D point cloud is projected into 2-D polar coordinate points, and a slice-based projection filtering algorithm is proposed to reduce the point cloud data processing dimensions and computing time.

- (2)

- The point cloud is classified into four categories in a slice unit: Valuable object points (VOPs), worthless object points (WOPs), abnormal ground points (AGPs), and normal ground points (NGPs). Based on the point cloud classification results, the traffic objects and their surrounding information would be easily identified from an individual frame of point cloud.

- (3)

- A machine learning model based on a backpropagation artificial neural network (BP-ANN) is used to dynamically fit the road gradient or employing the inclination of the LiDAR sensor, as to improve the adaptability of the algorithm in a complex environment.

The rest of the paper is structured as follows: In Section 2, we introduce the LiDAR sensor and the type classification of data points. In Section 3, we give a detailed description of the assumptions and definitions proposed in this paper. In Section 4, we illustrate the detailed methods for background filtering. The experiments and validation analysis are presented in Section 5. In Section 6, we summarize the contributions of our work, as well as the perspectives on future work.

2. LiDAR Sensor and Points Classification

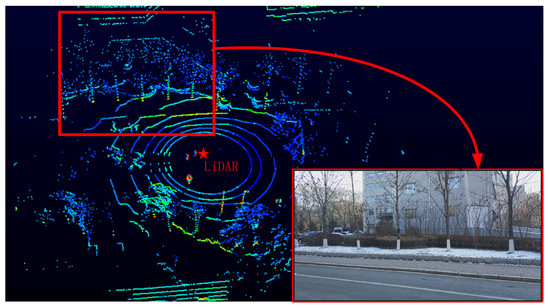

The working principle of LiDAR is to obtain the distance information of objects by firing and receiving laser beams. There are four important parameters: The number of laser channels, rotating frequency, vertical angle resolution, and horizontal angle resolution. These parameters determine the amount of points and the speed of the LiDAR sensor output point cloud. In this paper, we develop the point cloud filtering algorithm based on a 360° Velodyne VLP-16 LiDAR sensor that contains 16 laser channels. This sensor can detect ±15° in the vertical range, and the vertical angle resolution is 2°. We set the rotating frequency of LiDAR to 600 RPM and the corresponding horizontal angle resolution approximately 0.2°. Figure 1 demonstrates a selected frame of a raw 3-D LiDAR point cloud from the VeloView (Velodyne LiDAR, San Jose, CA, USA) software and field photo corresponding to LiDAR firing.

Figure 1.

A frame of a light detection and range (LiDAR) point cloud displayed by the VeloView and the corresponding field photo.

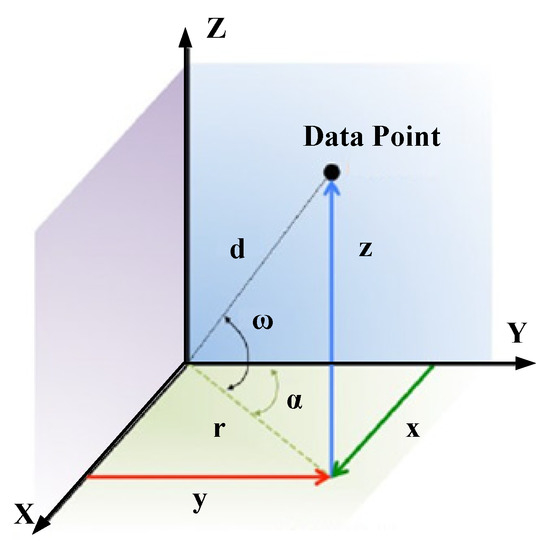

The LiDAR sensor outputs 3-D point cloud in spherical coordinates, which can be expressed as , where is the distance between a data point and the LiDAR, is the vertical angle fixed and given by the Laser ID (identity document number), and is the horizontal rotation angle provided by the data packet of LiDAR. In this paper, we deal with the point cloud after projection in XYZ coordinates. The conversion relationship of the data point from spherical coordinates to XYZ coordinates is shown a s Figure 2 [29].

Figure 2.

The conversion relationship of the data point from spherical coordinates to XYZ coordinates.

The spherical to XYZ conversion formulas are [30]:

A good filtering algorithm should retain as much valuable information as possible and remove as much worthless information as possible. That is, a good filtering algorithm should not simply filter all ground points. Instead, it should also retain all points with useful information for ground points, such as steps and curbs, which can also be used for road users’ path prediction. There are also worthless information points in object points, such as leaves, which have little impact on traffic. Therefore, we divided the points in a point cloud into four categories in this paper: Valuable object points (VOPs), worthless object points (WOPs), abnormal ground points (AGPs), and normal ground points (NGPs).

VOPs contain points that can affect traffic, such as tree trunks, road users, and lighting poles. As for WOPs, they mainly include points that cannot be used to judge objects and have no impact on traffic, such as noise and leaves. For NGPs, road surface points that can be normally used by vehicles are included. The algorithm proposed in this paper aims to remove WOPs and NGPs from the point cloud as much as possible. According to AGPs and VOPs, we can analyze pedestrians, vehicles, and their surrounding information (such as nearness to curbstones, the relative location of the road surface, adjacent position to a light pole), which are valuable inputs for microcosmic traffic behavior analysis, pedestrian zebra-crossing behavior analysis, driver or pedestrian intention prediction, trajectory tracking in traffic accident prevention, and other various intelligent transportation applications.

3. Definitions and Assumptions

3.1. Definition of Slice

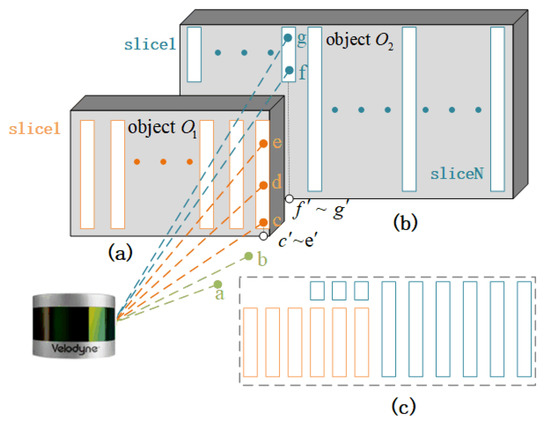

Figure 3 shows the schematic diagram of LiDAR scanning objects in a data frame of the point cloud. In the point cloud, the point and are the points firing on the ground by LiDAR. The point , , and are the points firing on the front object . The point , , and are within the same vertical block and form a slice within object . Point and are the points firing on the rear object . Point and are within the same vertical block and form a slice within object . The point , , , and are the points of the point , , , and mapping to the horizontal plane, respectively. As shown in Figure 3a,b, one object is composed of several slices in the same vertical plane. A vertical plane contains all the objects’ slices, which are in the same direction, as shown in Figure 3c. We define that a slice is a point set containing the points firing on the same object and within the same vertical block.

Figure 3.

The schematic diagram of a LiDAR slice. (a) Points and slices within object . (b) Points and slices within object . (c) Slices and objects within a vertical plane.

is defined as the amount of points contained in a slice, and if . If , this indicates that it is a noise point or a ground point of LiDAR. The slice is an invalid slice that does not have enough information for further processing.

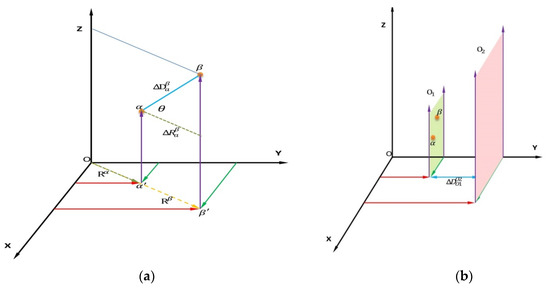

As shown in Figure 4a, the point and are the LiDAR data point in the same slice. The point and are the points of the point and mapping to the horizontal plane, respectively. is the spatial distance between point and . is the distance between point and in the horizontal plane. is the inclination angle of the object to the horizontal plane. and are the distance of point and to the ordinate origin, respectively. Their relationship can be formed as:

Figure 4.

The points’ relationship in a slice and between objects. (a) The points’ relationship in a slice. (b) The points’ relationship between objects.

Generally speaking, objects, such as people, vehicles, and buildings, are vertically aligned with the ground. It means that the object’s inclination angle goes to 90°. Then:

As shown in Figure 4b, is the minimum distance between object and object . There always have a social distance, safety distance, or viewing distance between pedestrians, vehicles, trees, and buildings. It can be deduced that:

Therefore, a threshold value can be found to distinguish whether the point and belong to the same slice:

Furthermore, the threshold value setting of will affect the accuracy of slice extraction within the object, particularly for the object, which is not perpendicular to the ground. For example, a too small may give rise to classification of the point and into different slices, when the point and actively belong to the same slice. On the contrary, a too large will result in the point and being classified into a slice, when the point and should be in different slices. From the experimental experiences, the threshold value of showed high accuracy and no obvious effect on the experiment results within the range of . In this paper, we set the threshold value as the median value of the range , = 0.3 m.

3.2. Definition of Abnormal and Normal Ground Points

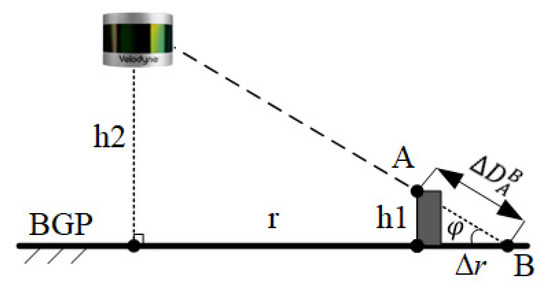

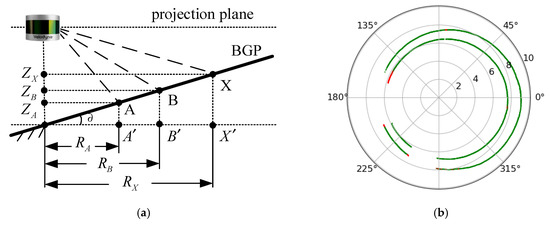

We assumed that there is a base ground plane (BGP), which is a reference plane and absolutely smooth. The curbstones and road obstacles can be regarded as the static objects on the BGP, and a pothole is also a type of object. As shown in Figure 5, if there is a curbstone on the BGP, the LiDAR sensor obtains the laser point as A; if there is no curbstone on the BGP, the LiDAR sensor obtains the laser point as B. According the classification of the point cloud in Section 2, point A belongs to AGPs and point B belongs to NGPs.

Figure 5.

Schematic diagram of distinguishing abnormal ground points (AGPs) and normal ground points (NGPs).

In Figure 5, is the height of the curbstone, is the horizontal distance between the curbstone and LiDAR sensor, and is the installation height of the LiDAR. is the horizontal distance between point A and B in the BGP. According to Equation (2), = .

3.3. Definition of Valuable and Worthless Object Points

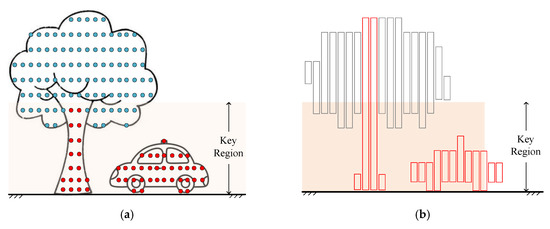

In general, no matter the moving road users or the fixed tree trunks, they are all connected to the ground. We call them valuable objects. Road users are the main body of traffic, and other valuable objects will affect the trajectory of road users. Slices connected with or close to the ground are called valuable slices, and others are worthless slices. The corresponding points in slices are VOPs and WOPs. We define an area from the BGP to a certain height as a key region, as shown in Figure 6. The VOPs in the key region are called key valuable points.

Figure 6.

Schematic diagram of separating valuable object points (VOPs) and worthless object points (WOPs). (a) The schematic diagram showing the key region and key valuable points in the key region; (b) The schematic diagram of obtaining all valuable slices in the point cloud based on the key valuable points in the key region.

In Figure 6a, the orange area is the key region, the red points are the key valuable points, and the blue points are other types of points. In Figure 6b, the red slices are the valuable slices and the gray slices are worthless slices. Most of the points in the key region are key valuable points, which are denser than other points in the point cloud within the key region. Based on this assumption, we can extract all the key valuable points. Then, all the object points can be classified into VOPs and WOPs.

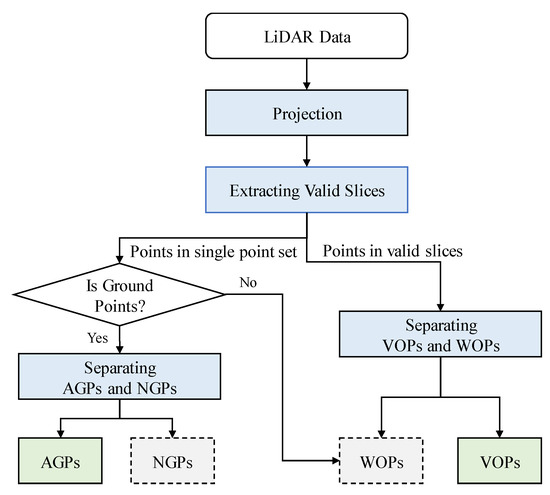

4. Slice-Based Projection Filtering Algorithm

In this section, we propose the detailed steps of the slice-based projection filtering (SPF) algorithm, which makes up four major parts: Projection, extraction of valid slices, AGPs and NGPs separation, and VOPs and WOPs separation. Figure 7 shows the flowchart of the proposed method.

Figure 7.

Flowchart of the slice-based projection filtering algorithm.

4.1. Projection

Compared with the 2-D plane, background filtering using 3-D space coordinates will take advantage of the rich characteristic information of the object. However, it will increase the calculation dimensions and computation complexity in dealing with laser points. For example, in 3-D-DSF background filtering, Wu [25] divided the 3-D space into continuous small cubes with the cube size at . For a scanning range, it needed to be divided into cubes. There is only cells to be analyzed if it taken into the 2-D plane. The loop count of computation with the 2-D plane is 1/80 of the 3-D space method. Therefore, we attempted to project the 3-D point cloud into the 2-D plane. The 2-D point cloud data can be represented by rectangular coordinates and polar coordinates , as shown in Figure 2. These two coordinate systems take the LiDAR sensor as the origin of coordinates. The data point can be expressed as:

where and are the coordinate position within the X-axis and Y-axis, respectively, in rectangular coordinates; is the distance between the point and LiDAR in the horizontal plane; and is the horizontal rotation angle.

The process of projection in rectangular coordinates includes the multiplication of four trigonometric functions, while the projection in the polar coordinate system only requires the multiplication of one trigonometric function. Therefore, projection in polar coordinates can effectively reduce the calculation time and computation resources. Furthermore, the scanning trajectory will be a closed circle or ellipse after being projected when a laser beam in LiDAR is scanned on the ground plane.

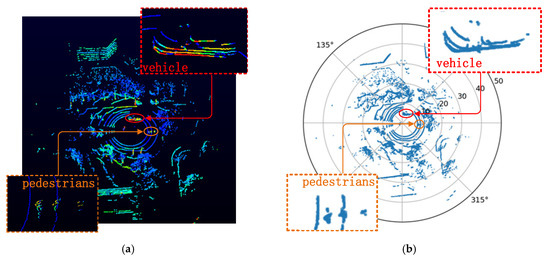

There will be two values on a certain value when rectangular coordinates are used to represent this trajectory curve. If polar coordinates are used, and are a one-by-one correspondence. Hence, polar coordinates can be used to represent the trajectory curve with a simpler equation, which is more in line with the characteristics of the LiDAR data. In this paper, the 3-D point cloud is projected in a polar coordinate plane for analysis, as shown in Figure 8.

Figure 8.

The point cloud display in different coordinates. (a) The original point cloud displayed in the VeloView software. (b) The result of the projecting point cloud.

Figure 8 shows the comparison diagram of the point cloud before (Figure 8a) and after the projection (Figure 8b). We find that the height information of the point cloud is hidden after the projection. However, this will not affect the processing results, as the purpose of the projection in this paper is to extract the valid slices in the point cloud.

4.2. Valid Slices Extraction

The purpose of the valid slice extraction operation is to find the object points in the point cloud based on the properties of the slice. We used the matrix to represent the raw data of the LiDAR rotation output, where , , and represent the column vectors that contain , , and , respectively, and each row corresponds to a point collected by the LiDAR sensor. We used matrix to represent the matrix after projecting matrix . We used to obtain all row vectors in the satisfying the condition of the flag.

Suppose is a certain row of and we need to find other points in the same slice with , it is the simplest way to traverse all points in and calculate the distance from . However, there are many points in the point cloud, and it will take a long time to traverse all points, so the implementation efficiency of this method is very low. For this reason, this paper proposed a solution based on matrix operations to extract effective slices efficiently, as shown in Algorithm 1.

| Algorithm 1 Extracting Valid Slices |

|

According to the definition and attributes of valid slice, the valid slices can be extracted based on two conditions:

Condition 1: If points belong to the same slice, these points have the same horizontal rotation angle.

Condition 2: The horizontal distance of two points in the same slice is less than the threshold value of .

Therefore, we suppose , , and are three points that are all contained in . If and belong to the same slice, and also belong to the same slice, then it is clear that and also belong to the same slice. Thus, we only need to determine the distance between two adjacent laser points within the setting horizontal rotation angle.

We can obtain the matrix , where two adjacent rows are also adjacent in real space after sorting the rows of matrix by and then by . Then, it is easy to get the distance difference of all adjacent points to LiDAR. Finally, we can reasonably set the threshold value of to extract all points in the point cloud that belong to the valid slices. In algorithm 1, matrix is used to mark all points, where true indicates that the point belongs to a certain slice and false indicates that the point is a single point.

There are one or more single points between the different slices. Based on the differences, the slice can be differentiated from and labeled. In the proposed algorithm, we used the matrix to mark different slices. Within the matrix , 0 indicates that the point is a single point; if the number is greater than 0, it indicates the index of the slice that the point belongs to.

By the experiments, the runtime of the proposed algorithm is about 18 ms compared to the traversing method, which needed 1.5 s to extract the valid slice. Therefore, the proposed algorithm of this paper is more efficient.

4.3. Abnormal and Normal Ground Points Separation

The surface, slope, curbstones, road obstacles, and other information of the ground are also very useful for trajectory predictions of road users with the consideration of the edge of different function areas of the road. The purpose of the AGPs and NGPs separation operation is to abstract all the ground points that could impact the road users.

4.3.1. Ground Point Extraction

After extracting the valid slices from the point cloud, the remaining points are a single point set. The single point set is composed of the ground points, noise points, and edge points of objects. In the LiDAR scanning range, the ground points are closer to the BGP. Therefore, we can obtain the ground points by setting the condition as follows:

where is the height of point ; is the coordinates of the point on the -axis, where the horizontal plane is the BGP; and is the threshold of ground points.

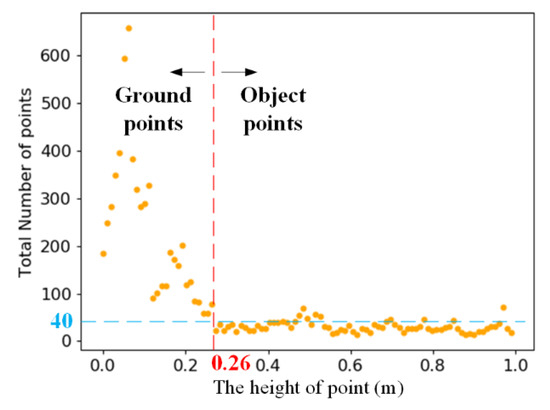

In this paper, the threshold value was based on the height between the point cloud and BGP. We divided 0–1 m into 100 parts on average and counted the amount of points in each part. The height distribution of the point cloud is shown in Figure 9.

Figure 9.

The height distribution of the point cloud.

4.3.2. Separation Method

In the absence of AGPs, the projected NGPs should be a closed curve track, noted as . When the projection plane of the LiDAR point cloud is parallel to the ground, the trajectory is a circle. The trajectory is a curve similar to an ellipse when LiDAR has an angle from the ground. When there is an AGP at a horizontal angle , the point should deviate from the curve . Therefore, we can fit the trajectory curve , and use it to decide whether a point in the ground points belongs to the NGPs or AGPs according to the distance from the curve . The judgement condition is:

where is the actual distance between the projected point and LiDAR; is the distance predicted by the curve L1 at the horizontal roatation angle ; is the absolute function; and is the threshold value to differentiate AGPs and NGPs; the range of is:

where is a coefficient, and ; is the height of the point; and is the installation height of the LiDAR.

We selected the data of laser channel 0 with the smallest vertical angle to train the trajectory curve , as the points where the laser of this channel fell on BGP were the closest to the LiDAR, and contained the most ground information. In addition, we selected a frame of the point cloud including more ground information, so as to fit the curve more accurately. After fitting the curve , the curves can be applied to the point cloud data of all other frames.

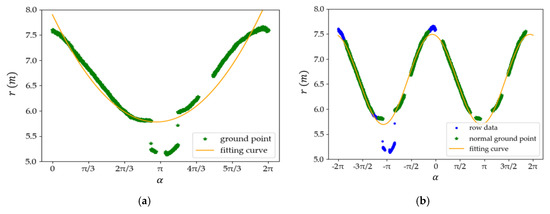

The and of all points obtained by a single channel laser beam in a scanning period are one-by-one corresponding. As shown in Figure 10, with taken as the horizontal axis and as the vertical axis, the processes of the fitting curve can be described as the following steps:

Figure 10.

The processes of the fitting curve . (a) Fitting the curve in step 1; (b) Fitting the final curve in step 3.

Step 1: We used a quadratic equation to fit the ground points detected by the LiDAR laser channel 0 by least squares, firstly:

where is the distance between the point and LiDAR in the horizontal plane as mentioned above; and , , and are regression coefficients, which can be obtained by least squares regression.

As shown in Figure 10a, the green scatter is the ground point detected by the laser channel 0, noted as . The orange curve is the fitting curve of the ground points, noted as . It can be seen that there are some points far below the fitting curve . These are noise points or AGPs in GP0.

Step 2: Base on the fitting curve fitted by the laser channel 0, we used to filter the points detected by LiDAR laser channel 1 to channel 15. With the same horizontal angle , if the points detected by the LiDAR laser channel 1 to channel 15 are far below the corresponding point in , these are removed before stepping into the next step.

Step 3: After filtering out all the noise points or AGPs in step 1 and step 2, the remaining points are the NGPs. We used the remaining points to fit the final curve by the Sinusoid function as:

where is the horizontal rotation angle of the point as mentioned above; and , , , and are the regression coefficients, which can be obtained by least squares regression.

As shown in Figure 10b, the green scatters are the NGPs. The orange curve is the final fitting curve . It can be seen that some blue scatters are far below the fitting curve . These are noise points or AGPs within the raw data. The remaining points nearly around the fitting curve map well with the NGPs. Most of the blue points nearly around the fitting curve overlap with the corresponding NGPs. It means that the fitting curve can effectively distinguish the AGPs and NGPs.

4.3.3. Separation Results

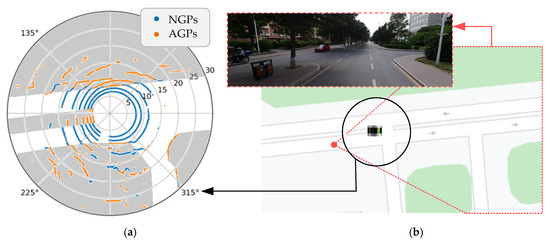

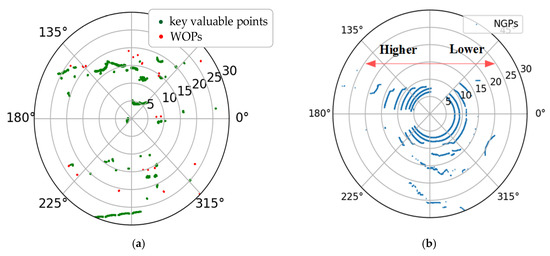

After obtaining the trajectory equations of all laser channels, we can distinguish AGPs and NGPs in all ground points by setting the threshold value reasonably. In practical applications, the values of and are fixed. According Equation (9), the max() will change as the actual distance is changing. As to distinguish the AGPs and NGPs accurately, the threshold value should change as the as the actual distance changes. In the experiment, we set equal to 0.26 m based on the height of the curbstones and equal to 1.8 m based on the installation height of the LiDAR. According in Equation (9), . Thus, . The experiments results showed that a too small threshold value will give rise to recognition of the NGPs as AGPs when the points are near the LiDAR sensor. On the contrary, a too large threshold value will result in the AGPs being identified as NGPs when the points are far away from the LiDAR sensor. In this paper, we used the median value of the range , setting . The experiment result is shown in Figure 11.

Figure 11.

Abnormal ground points (AGPs) and normal ground points (NGPs) separation result. (a) The distribution of AGPs and NGPs in the polar coordinate. (b) The field scene of the LiDAR sensor scanning.

As shown in Figure 11a, the blue scatters are NGPs, and the orange scatters are AGPs. It can be effectively distinguished by the proposed method. If there are a large number of AGPs and a small number of NGPs in a certain area, it can be deduced that this area is a non-driving area due to too many obstacles within this area. The gray areas are non-driving areas as shown in Figure 11a. Figure 11b shows the experimental location in the Baidu Map and the field scene scanned by the LiDAR sensor in the experiment.

4.4. Valuable and Worthless Object Points Separation

We can obtain the object points in the point cloud after extracting the valid slice operation. However, not all object points are useful for traffic behavior analysis. Therefore, the separating VOPs and WOPs operation is required to find the points from all object points, which are useful for traffic behavior analysis.

4.4.1. Key Region Optimization

If the projection plane of the LiDAR point cloud is parallel to the BGP, the information of the point cloud can be directly used to obtain the key region. In fact, there may be a slope in the BGP. In this situation, it is hard to ensure the projection plane remains parallel to the BGP. Therefore, if there is an inclination angle between the BGP and the projection plane, it will be difficult to obtain the accurate key region by using only the information of the point cloud. The error increases with the distance from the LiDAR sensor, as shown in Figure 12.

Figure 12.

Calculating the slope between the base ground plane (BGP) and the projection plane. (a) A mathematical model using LiDAR to calculate the slope; (b) The points used to calculate the slope.

As shown in Figure 12a, point and point are formed by LiDAR laser beams falling on the BGP. The projected points are and , respectively. is the inclination angle between BGP and the projection plane. When another laser beam falls on point on the BGP, the Z-coordinate of point should be:

where , , and are the horizontal distance from the point , , and to the LiDAR sensor, respectively; and , , and are the distance deviation in calculation the Z-coordinate of point , , and , respectively.

Clearly, if there is an inclination angle between the BGP and the projection plane, the is changing for different points within the point cloud, particularly for the object points. It requires at least two channel laser points to calculate the inclination angle between the BGP and the projection plane.

According to the LiDAR user manual and programming guide [29], the laser ID 0 and 2 are the two laser beam closer to the ground. In the experiment, these two laser beams generate more ground points than the other laser beam in LiDAR. In this paper, the NGPs generated by laser ID 0 and 2 are used to calculate the inclination angle between BGP and the projection plane. The NGPs are obtained based on the process results of Section 4.3, as shown in Figure 12b.

In the LiDAR data packet, we can obtain the XYZ coordinates of the point and its horizontal rotation angle. Here, is the coordinate information of point obtained by the laser ID 0, is the coordinate information of point obtained by the laser ID 2, and point A and point B have the same horizontal rotation angle. According to Equation (12) and Figure 12a, the inclination angle between BGP and the projection plane can be calculated as:

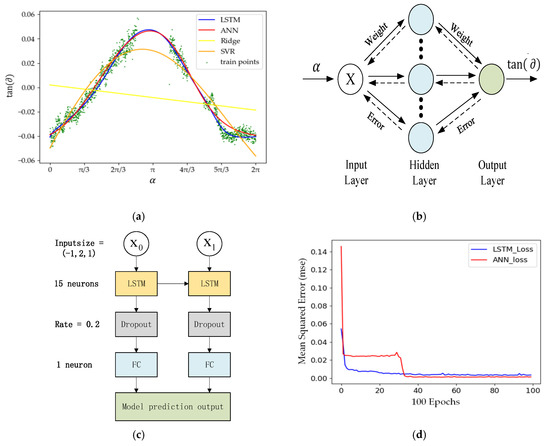

All the point pairs in Figure 12a were used to calculate , and the calculation results are shown in Figure 13a, where the green scatters is the value of at different horizontal rotation angles (0–).

Figure 13.

The inclination angle between the BGP and the projection plane obtained by the machine learning model. (a) The fitting results of the curve by different machine learning models. (b) The schematic diagram of a backpropagation artificial neuron network (BP-ANN) machine learning model. (c) The schematic diagram of a long short-term memory (LSTM) machine learning model; (d) The loss curves generated by the mean square error function with the LSTM model and BP-ANN mode.

As shown in Figure 13a, there is noise in the calculation results, which is caused by the errors of the sensor itself and the uneven ground. The continuous curve of the value cannot be obtained directly as the points used to calculate it are discrete, or there is a shied on the road.

To improve the flexibility of the algorithm, it is necessary to fully consider the inclination angle information between the BGP and the projection plane. In addition, the road surface condition also should be considered. To adapt to different road surface conditions and employ inclination, we attempted to use a machine learning algorithm to obtain accurately the inclination angle between the BGP and the projection plane and identify the key region. A machine learning model based on the backpropagation artificial neuron network (BP-ANN) was developed to fit the scatter of thte calculated to obtain an accurate and continuous actual .

The BP-ANN is a multilayer feedforward neural network composed of an input layer, a hidden layer, and an output layer, as shown in Figure 13b. In practical applications, the road pavement conditions within the scanning range of LiDAR are different. Thus, in the different LiDAR scanning horizontal rotational angles , the inclination angle may be different. In the BP-ANN, we input the horizontal rotational angle to get the corresponding road pavement inclination with the output of by the BP-ANN-based machine learning model. It means that and is obtained by the BP-ANN model. The training data set of and were the points on the BGP with the same horizontal rotational angle , using Equation (12) or Equation (13) to calculate the corresponding . Therefore, the input layer and output layer contained only one neuron each as only one variable was needed for each layer. The variables and were used for the input layer and the output layer, respectively. The number of neurons of the hidden layer was set as 32. We used the activation function for neurons in the hidden layer for the function that could converge faster than the sigmoid function, while the neuron in the output layer did not use any activation function to avoid gradient explosion. During the model training period, the mean square error function was used for the loss function, the optimizer was Adam, and the batch size was set as 32. The fitting curve of is shown as the red line in Figure 13a.

In addition, we also used three other algorithms to fit the curve. A machine learning model based on long short-term memory (LSTM) was used to fit the curve [31]. The complete network model is shown in Figure 13c, in which each LSTM cell contains 15 neurons. The time-step of the model was set to 2, due to the being predicted by keeping the memory of several previous position points when both the LiDAR and the BGP are fixed. Then, the memory of the changing was transferred between two adjacent batches until the internal state of the model was reset. During the model training period, the mean square error function was used for the loss function, the optimizer was Adam, and the batch size was set as 8. After the completion of the model, we set the time-step to 1 for the prediction, and the obtained continuous curve is shown as the blue line in Figure 13a.

The second method was the Ridge regression [32], where the coefficient of the penalty term was set as 0.5. The fitting curve of is shown as the yellow line in Figure 13a. The last method was support vector regression (SVR) [33], where the radial basis was used for the kernel function. The fitting result of the curve is shown as the orange line in Figure 13a.

From the fitting results, we can see that the fitting results based on LSTM are the best in the range of . The fitting curve of based on BP-ANN is similar to LSTM. The loss curve generated by the mean square error function with the LSTM model dropped faster than the BP-ANN model. However, the loss values were almost the same after 100 epochs, as shown in Figure 13d. In practical applications, the LSTM-based machine learning model is much more complex than the BP-ANN machine learning model. Therefore, we chose the simpler BP-ANN machine learning model to obtain the accurate inclination angle between the BGP and the projection plane.

After identification, there is an inclination angle between the BGP and the projection plane, and after obtaining the accurate in the corresponding horizontal rotation angle, it is easy to identify a point within the point cloud that belongs to the key region by:

where is the Z-coordinate output by the LiDAR sensor; is the installation height of the LiDAR sensor; is the height of the key region; and is the distance deviation, which can be calculated according to Equation (12).

In China, the average height of male adults is about 1.71 m, and it is about 1.6 m for female adults [34]. The height of small cars is generally within 1.6 m and 1.8 m. Road users are the main research object in the field of transportation. Therefore, in the experiments, we set the value of as 1.5 m, slightly lower than the general height of road users. Within this height range, most road users can be included, and most roadside leaves can be excluded.

4.4.2. Valuable Object Points Extraction

The key valuable points are more point intensive than other types of points after projecting all points in the key region. Therefore, the DBSCAN (density-based spatial clustering of applications with noise) [35] algorithm can be used to extract the key value points from the key region. With DBSCAN, one object can be joined in the clustering area if the point density is higher than or equal to a pre-defined minimum number of points (), and the distance to the center is less than a pre-defined epsilon (). In the experiments, we set and to cluster the projected points in the key region. The key value point cluster results are shown as Figure 14a.

Figure 14.

The key value points cluster in the key region with an inclination angle between the BGP and the projection plane. (a) The key valuable points in the key region. (b) The normal ground points in the key region.

As shown in Figure 14a, the green scatters are the key valuable points obtained from the point cloud in Figure 8. The red scatters are sparse points that belong to WOPs. According to Algorithm 1, we can obtain the matrix , which contains the index of all valid slices. Therefore, we can find all the index numbers contained in the key valuable points and then find all the VOPs by combining with the matrix .

Figure 14b is generated by the NGPs that were obtained based on the process methods of Section 4.3, and has the same raw point cloud of Figure 14a. According the fitting curve of , we can infer that the height of the left side is higher than the right side in Figure 14. If only the coordinate is used for the identification of the key region, we will obtain less area for the left side and more in the right side.

Table 1 shows the numbers of VOPs obtained in the left and right sides of Figure 14, with or without the fitting of the inclination angle between the BGP and the projection plane.

Table 1.

The number of VOP extraction with or without fitting of the inclination angle between the BGP and the projection plane.

As shown in Table 1, on the left side of the key region, the number of VOPs obtained after fitting the inclination angle was more than that without fitting, and it was an adverse result on the right side. This phenomenon demonstrates that greater accuracy in the key region can be found after fitting the angle. In addition, the number of VOPs with fitting was more than that without fitting, which shows that the greater the accuracy in the key region, the more VOPs that will be obtained.

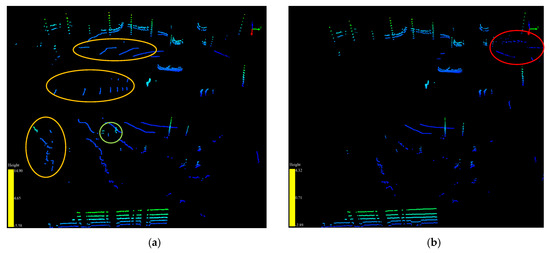

Figure 15 shows the AGPs and VOPs extraction results with or without the fitting of the inclination angle between the BGP and the projection plane.

Figure 15.

The AGPs and VOPs extraction results with and without fitting of the inclination angle between the BGP and the projection plane. (a) The extraction result with fitting of the inclination angle. (b) The extraction result without fitting of the inclination angle.

As shown in Figure 15a, the VOPs within the green ellipse region are missing in Figure 15b. Furthermore, the inclination angle between the BGP and the projection plane not only affects the extraction result of VOPs but also affects the extraction result of AGPs. As shown in Figure 15, the AGPs within the orange ellipse regions in Figure 15a are missing in Figure 15b, which does not have fitting of the inclination angle. This is due to the left side being higher than the right side as discussed above in Figure 14, and a large number of ground points are far from LiDAR on the left side being identified as individual single points, which belong to the objects in Figure 15b. In addition, as shown in Figure 15b, some single points within the red ellipse region, which belong to the objects on the right side, are identified as the AGPs. Therefore, it is necessary to fit the inclination angle to improve the accuracy in the identification of the category of points.

5. Experiments and Validation Analysis

5.1. Filtering Results

The first experiment was designed to illustrate the obtained filtering results. We evaluated the performance of the proposed method of this paper and compared it to a popular RANSAC algorithm by Behley [23] and the 3-D-DSF algorithm provided by Wu [25]. For the 3-D-DSF approach, the side length of the cube was 0.1 m.

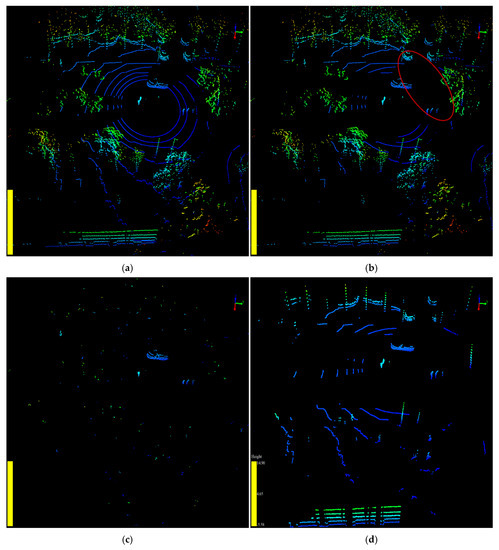

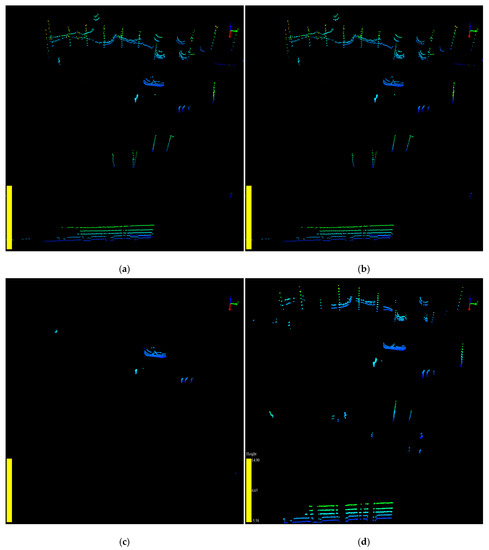

The point cloud data were collected by the Velodyne VLP-16 LiDAR sensor at Central Avenue, Nanling campus, Jilin University. The location of the LiDAR sensor is latitude 43.85 n, longitude 125.33 w. Approximately 1200 frames of point cloud were collected and used as input data in the experiments. The background point filtering results of the three algorithms are shown in Figure 16.

Figure 16.

The point cloud before and after background point filtering by different algorithms are shown in VeloView. (a) The raw point cloud before background point filtering. (b)The point cloud after being filtered by random sample consensus algorithm (RANSAC); (c) The point cloud after being filtered by 3-D-DSF; (d) The point cloud after being filtered by slice-based projection filtering algorithm (SPF).

Figure 16a shows the raw point cloud before processing. Figure 16b shows that the RANSAC algorithm can only filter the ground points from the raw point cloud. More processing stages are required to remove the worthless points from the point cloud by other methods. As shown in Figure 16c, there are still some noise points left after the background point filtering. We needed to remove noise points using clustering methods, such as k-means and DBSCAN, which increases the execution time of the algorithm [36]. Furthermore, the 3-D-DSF algorithm ignores certain useful information generated by fixed objects, such as curbstones and tree trunks, which can affect the trajectory of a road user in summer in tropical areas.

Figure 16d shows the filtering result by the proposed algorithm SPF. Different colors in the figure present different objects clustered by DBSCAN. From the perspective of filtering quality, the proposed algorithm was able to effectively extract useful points in the point cloud, including the AGPs that belong to the ground and the VOPs that belong to the objects. Through AGPs and VOPs, we can not only obtain the information of all road users in the point cloud but also the environment information that can affect the trajectory of the road users. Therefore, analyzing the data after filtering by the proposed algorithm can help to perceive the traffic information more comprehensively, accurately, and quickly.

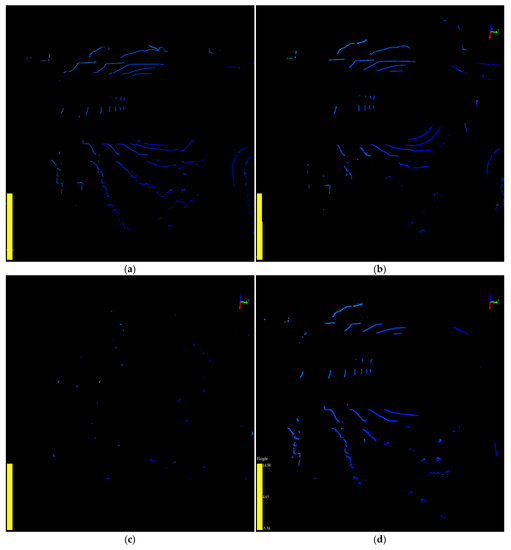

To compare the filtering results in more detail, we used the amount of the point cloud before and after filtering to evaluate the algorithms. The experimental results are compared in Table 2, and Figure 17 and Figure 18. The raw point cloud, raw VOPs, and raw AGPs in the experiments are shown in Figure 16a, Figure 17a, and Figure 18a, respectively.

Table 2.

The background point filtering results by different algorithms.

Figure 17.

The VOPs before and after filtering are shown in VeloView. (a) The raw VOPs before filtering. (b)The VOPs after being filtered by RANSAC; (c) The VOPs after being filtered by 3D-DSF; (d) The VOPs after being filtered by SPF.

Figure 18.

The AGPs before and after filtering are shown in VeloView. (a) The raw AGPs before filtering. (b)The AGPs after being filtered by RANSAC; (c) The AGPs after being filtered by 3D-DSF; (d) The AGPs after being filtered by SPF.

As shown in Table 2, the 3-D-DSF algorithm can filter out 96.7% of the points from the point cloud. The VOPs and AGPs excluded percentages were 84.5% and 96.8%, respectively, as shown in Figure 17c and Figure 18c, respectively. However, the 3-D-DSF algorithm filtered out too much valuable information. Thus, the amount of valuable points (VOPs and AGPs) after background point filtering was only 956, which was the minimum. However, it still contained some noise after the background point filtering by 3-D-DSF. The percentage of noise point in the valuable points was about 4.29%.

The background point filtering percentage of RANSAC was only 15.9% in the raw point cloud filtering. The VOPs and AGPs excluded percentages were 11.7% and 7.0%, respectively, as shown in Figure 17b and Figure 18b, respectively. This showed that the RANSAC algorithm retained too much worthless information. This is why the amount of valuable points after background point filtering was only 6321, which was the maximum. However, it needed to further exclude about 74% of the worthless points after the raw point cloud filtering. The percentage of valuable point was only 26% after background point filtering by RANSAC, which was the minimum.

The SPF algorithm, the proposed method in this paper, filtered out 80.1% of the points from the raw point cloud. The VOPs and AGPs excluded percentages were 20.0% and 14.8%, respectively, as shown in Figure 17d and Figure 18d, respectively. It could exclude all of the worthless points in the raw point cloud background filtering. The percentage of valuable point was almost 100% after background point filtering by SPF in the raw point cloud filtering, which was the maximum.

In terms of the filtering quality and accuracy for further processing in identifying the traffic object, the SPF algorithm was able to retain more valuable information and remove more worthless information for further processing in the stage of traffic object identification and trajectory tracking. In this respect, we concluded that the SPF algorithm performed better than RANSAC and 3-D-DSF.

5.2. Runtime

The execution time of the algorithm plays an important role in practical applications, especially in area traffic behavior and safety analysis. Therefore, the experiment was designed to evaluate the running performance of the three algorithms. The three algorithms were implemented in Python3.5 and were deployed on a desktop computer equipped with an Intel Core i7-7500U CPU (2.70 GHz), with 8 GB of RAM, and with a Windows 10 operating system. The raw point cloud was collected by the Velodyne VLP-16 LiDAR sensor located at Central Avenue, Nanling campus, Jilin University. The raw point cloud including about 1200 data frames was used as the data input of the three algorithms. The performance results are shown in Table 3.

Table 3.

The runtime of the three algorithms.

As shown in Table 3, the average background point filtering time was about 51 ms per frame by the SPF algorithm. The running times of RANSAC and 3-D-DSF were 198 and 113 ms per frame, respectively. In addition, the 3-D-DSF algorithm needed multi-frames to perform the algorithm. It would not be suitable for on-line intelligent transportation applications.

6. Conclusions and Discussion

A LiDAR sensor has advantages in obtaining the high-resolution fine-grained position, direction, speed, and trajectory data of vehicles and pedestrians. However, the point cloud generated by a LiDAR scan contains not only road user points but also buildings, trees, and ground points. To speed up the micro-level traffic data extraction from the LiDAR sensor, removing the background points using a suitable background filtering algorithm is necessary. In this paper, we developed a novel method of background point filtering for low-channel infrastructure-based LiDAR.

First, we projected a 3-D point cloud into the 2-D polar coordinate points for filtering to reduce the data processing dimensions and computing time. Secondly, we divided the points in the point cloud into four categories: AGPs, NGPs, VOPs, and WOPs. Then, we used the slice as a unit to analyze the point attributes, and to separate and extract the VOPs and AGPs from a single frame of the point cloud. To dynamically fit different road conditions and situations, such as different road gradients and different LiDAR scanning angles, a machine learning method based on the artificial neural network was used to improve the adaptability of the algorithm in complex environments.

Finally, compassion was done to verify the efficiency and accuracy of the method in this paper. Compared to the methods of RANSAC and 3-D-DSF, the proposed algorithm of this paper retained more valuable points, removed more worthless points, and required fewer computational sources. In addition, there were less parameters required for calibration in the proposed algorithm. We have the following major findings:

- (1)

- Projecting 3 = D point cloud into 2-D polar coordinates can reduce the computation complexity and memory resource in the point cloud loop processing during the background point filtering. The processing time using 2-D polar coordinates is about one quarter of the processing time using the 3-D point cloud directly.

- (2)

- Using the vertical slice unit, which is different from the horizontal slice unit mentioned in the the state-of-the-art literature, can effectively classify the point cloud into VOPs, WOPs, AGPs, and NGPs. It can further remove the worthless points in the background point filtering. The accurate and excluding efficiency is about 5 times that is not based on the vertical slice unit.

- (3)

- In practical applications, road pavement conditions and LiDAR installation statuses need to be considered, such as the road gradient and LiDAR sensor inclination. Therefore, a BP-ANN machine learning model was proposed to fit the inclination angle between the BGP and the projection plane to improve the flexibility of the background point algorithm in a complex environment.

However, the present study and its methodological approach are not free from limitations. The first limitation is that the slice-based background point filtering algorithm did not consider the object far away from the LiDAR sensor, which can be obtained from only one single point. Based on the vertical slice unit rule, these points presenting the objects are considered as noise in the algorithm and filtered out. Therefore, it needs to identify the effective filtering range of the proposed algorithm. A second limitation is that the non-driving area far away from the LiDAR sensor cannot be detected accurately, due to a lack of reference points, and the threshold used to differentiate point types increases as the distance increases in the present study. However, the non-driving area is important for pedestrian trajectory tracking and intention prediction in intelligent transportation applications. The third limitation is that the present study did not consider the weather conditions. The proposed algorithm only evaluated during the daytime on a sunny day. However, the bright day and the black night have a different impact on the laser refection intensity of the LiDAR sensor. Snow and rain falling on the ground will influence the accuracy of ground point identification. A fourth limitation is the efficiency and accuracy of traffic object identification and traffic object trajectory tracking from the VOPs and AGPs. The effectiveness and necessity to retain AGPs for assistant identification of the traffic object and trajectory should be further evaluated. The final limitation is that the present study used low-density point cloud from the Velodyne VLP-16 low-channel LiDAR sensor to evaluate the efficiency and accuracy of the proposed algorithm. The high-density point cloud data from a higher channel LiDAR sensor, such as Velodyne VLP-32C and HDL-64, or other manufacturers’ LiDAR product models with the same or higher channel, should be used to evaluate the proposed algorithm to test and validate the efficiency, accuracy, and portability of the proposed algorithm in different LiDAR products. For further studies, such problems and factors should be considered and added in the modeling, testing, and validation of the model.

Author Contributions

Conceived and designed the experiments: H.L., C.L., D.W. Performed the experiments: B.G., H.L. Analyzed the data: H.L., C.L. Contributed reagents/materials/analysis tools: H.L., C.L., B.G. Wrote the paper: H.L., C.L., D.W. All authors have read and agreed to the published version of the manuscript.

Funding

The authors acknowledge the National Natural Science Foundation of China (Grant No. 51408257 and 51308248), Youth Scientific Research Fund of Jilin (Grant No. 20180520075JH), and the Science and technology project of Jilin Provincial Education Department (Grant No. JJKH20170810KJ and JJKH20180150KJ) are partly support this work.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Caltagirone, L.; Bellone, M.; Svensson, L.; Wahde, M. LIDAR–camera fusion for road detection using fully convolutional neural networks. Robot. Auton. Syst. 2019, 111, 125–131. [Google Scholar] [CrossRef]

- Hecht, J. Lidar for self-driving cars. Opt. Photonics News 2018, 29, 26–33. [Google Scholar] [CrossRef]

- Javanmardi, E.; Gu, Y.; Javanmardi, M.; Kamijo, S. Autonomous vehicle self-localization based on abstract map and multi-channel LiDAR in urban area. IATSS Res. 2019, 43, 1–13. [Google Scholar] [CrossRef]

- Wang, H.; Wang, B.; Liu, B.; Meng, X.; Yang, G. Pedestrian recognition and tracking using 3D LiDAR for autonomous vehicle. Robot. Auton. Syst. 2017, 88, 71–78. [Google Scholar] [CrossRef]

- Griggs, T.; Wakabayashi, D. How a self-driving Uber killed a Pedestrian in Arizona. New York Times, 19 March 2018. [Google Scholar]

- Vlasic, B.; Boudette, N.E. Self-Driving Tesla Was Involved in Fatal Crash, US Says. New York Times, 30 June 2016. [Google Scholar]

- Wang, Y.; Lu, G.; Yu, H. Traffic Engineering Considering Cooperative Vehicle Infrastructure System. Strateg. Study Chin. Acad. Eng. 2018, 20, 106–110. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [Google Scholar]

- You, X.; Zheng, Y. An accurate and practical calibration method for roadside camera using two vanishing points. Neurocomputing 2016, 204, 222–230. [Google Scholar] [CrossRef]

- Mohamed, Y.S.; Shehata, H.M.; Abdellatif, M.; Awad, T.H. Steel crack depth estimation based on 2D images using artificial neural networks. Alex. Eng. J. 2019, 58, 1167–1174. [Google Scholar] [CrossRef]

- Zhao, J. Exploring the Fundamentals of Using Infrastructure-Based LiDAR Sensors to Develop Connected Intersections. Ph.D. Thesis, Texas Tech University, Lubbock, TX, USA, 2019. [Google Scholar]

- Lee, J.-S.; Jo, J.-H.; Park, T.-H. Segmentation of Vehicles and Roads by a Low-Channel Lidar. IEEE Trans. Intell. Transp. Syst. 2019, 20, 4251–4256. [Google Scholar] [CrossRef]

- Kwon, J.-S.; Kim, D.-S.; Hwang, T.-H.; Park, H.-M. A Development of Effective Object Detection System Using Multi-Device LiDAR Sensor in Vehicle Driving Environment. J. Korea Inst. Electron. Commun. Sci. 2018, 13, 313–320. [Google Scholar]

- Zhao, J.; Xu, H.; Liu, H.; Wu, J.; Zheng, Y.; Wu, D. Detection and tracking of pedestrians and vehicles using roadside LiDAR sensors. Transp. Res. Part C Emerg. Technol. 2019, 100, 68–87. [Google Scholar] [CrossRef]

- Azim, A.; Aycard, O. Detection, classification and tracking of moving objects in a 3D environment. In Proceedings of the 2012 IEEE Intelligent Vehicles Symposium (IV 2012), Alcal de Henares, Madrid, Spain, 3–7 June 2012; pp. 802–807. [Google Scholar]

- Asvadi, A.; Premebida, C.; Peixoto, P.; Nunes, U. 3D Lidar-based static and moving obstacle detection in driving environments: An approach based on voxels and multi-region ground planes. Robot. Auton. Syst. 2016, 83, 299–311. [Google Scholar] [CrossRef]

- Dewan, A.; Caselitz, T.; Tipaldi, G.D.; Burgard, W. Motion-based detection and tracking in 3d lidar scans. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 4508–4513. [Google Scholar]

- Zhang, Y.; Wang, J.; Wang, X.; Dolan, J.M. Road-segmentation-based curb detection method for self-driving via a 3D-LiDAR sensor. IEEE Trans. Intell. Transp. Syst. 2018, 19, 3981–3991. [Google Scholar] [CrossRef]

- Li, Q.; Chen, L.; Li, M.; Shaw, S.-L.; Nüchter, A.J. A sensor-fusion drivable-region and lane-detection system for autonomous vehicle navigation in challenging road scenarios. IEEE Trans. Veh. Technol. 2013, 63, 540–555. [Google Scholar] [CrossRef]

- Cao, M.; Wang, J.J. Obstacle Detection for Autonomous Driving Vehicles with Multi-LiDAR Sensor Fusion. J. Dyn. Syst. Meas. Control 2020, 142, 021007. [Google Scholar] [CrossRef]

- Wu, J.; Tian, Y.; Xu, H.; Yue, R.; Wang, A.; Song, X. Automatic ground points filtering of roadside LiDAR data using a channel-based filtering algorithm. Opt. Laser Technol. 2019, 115, 374–383. [Google Scholar] [CrossRef]

- Vosselman, G.; Dijkman, S. 3D building model reconstruction from point clouds and ground plans. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2001, 34, 37–44. [Google Scholar]

- Konolige, K.; Agrawal, M.; Blas, M.R.; Bolles, R.C.; Gerkey, B.; Sola, J.; Sundaresan, A. Mapping, navigation, and learning for off-road traversal. J. Field Robot. 2009, 26, 88–113. [Google Scholar] [CrossRef]

- Zhang, K.; Whitman, D.; Sensing, R. Comparison of three algorithms for filtering airborne lidar data. Photogramm. Eng. 2005, 71, 313–324. [Google Scholar] [CrossRef]

- Wu, J.; Xu, H.; Sun, Y.; Zheng, J.; Yue, R. Automatic background filtering method for roadside LiDAR data. Transp. Res. Rec. 2018, 2672, 106–114. [Google Scholar] [CrossRef]

- Börcs, A.; Nagy, B.; Benedek, C.J.I.G.; Letters, R.S. Instant object detection in lidar point clouds. IEEE Geosci. Remote Sens. Lett. 2017, 14, 992–996. [Google Scholar] [CrossRef]

- Halterman, R.; Bruch, M. Velodyne HDL-64E lidar for unmanned surface vehicle obstacle detection. In Proceedings of the Unmanned Systems Technology XII, Orlando, FL, USA, 6–9 April 2010; p. 76920D. [Google Scholar]

- Minemura, K.; Liau, H.; Monrroy, A.; Kato, S. LMNet: Real-time Multiclass Object Detection on CPU Using 3D LiDAR. In Proceedings of the 2018 3rd Asia-Pacific Conference on Intelligent Robot Systems (ACIRS), Singapore, 21–23 July 2018; pp. 28–34. [Google Scholar]

- Velodyne. VLP-16 User’s Manual and Programming Guide; Velodyne LiDAR: San Jose, CA, USA, 2016. [Google Scholar]

- Velodyne. VLP-16 User Manual; Velodyne LiDAR: San Jose, CA, USA, 2018. [Google Scholar]

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef] [PubMed]

- Hoerl, A.E.; Kennard, R.W.J.T. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics 1970, 12, 55–67. [Google Scholar] [CrossRef]

- Drucker, H.; Burges, C.J.; Kaufman, L.; Smola, A.J.; Vapnik, V. Support vector regression machines. In Proceedings of the 9th International Conference on Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 1997; pp. 155–161. [Google Scholar]

- General Administration of Sport of China. National Constitution Monitoring Bulletin. Available online: http://www.sport.gov.cn/index.html (accessed on 20 March 2020).

- Ester, M.; Kriegel, H.-P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. Kdd 1996, 96, 226–231. [Google Scholar]

- MacQueen, J. Some methods for classification and analysis of multivariate observations. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability; University of California: Berkeley, CA, USA, 1967; pp. 281–297. [Google Scholar]

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).