Abstract

Joint demosaicing and denoising refers to the task of simultaneously reconstructing and denoising a color image from a patterned image obtained by a monochrome image sensor with a color filter array. Recently, inspired by the success of deep learning in many image processing tasks, convolutional neural networks (CNNs) have been applied to joint demosaicing and denoising. However, such CNNs require large amounts of training data, and work well only for patterned images with the same noise level as the images they were trained on. In this paper, we propose a variational deep image prior network for joint demosaicing and denoising which can be trained on a single patterned image and works for patterned images with different levels of noise. We also propose a new RGB color filter array (CFA) which works better with the proposed network than the conventional Bayer CFA. We also give a mathematical justification of why the variational deep image prior network suits the task of joint demosaicing and denoising, and experimental results verify the performance of the proposed method.

1. Introduction

Nowadays, many digital imaging systems use a single monochrome sensor with a color filter array (CFA) to capture a color image. Without color filters, the monochrome camera sensor would give only brightness or luminance information and could not recover the color of the light that falls on each pixel. To obtain color information, every pixel is covered with a color filter that only lets through a certain color of light: red, green or blue. These sampled red, green and blue channels are then interpolated to fill in the missing information at the pixels for which certain colors could not be sampled. This procedure is called demosaicing, for which many methods have been proposed [1,2,3,4,5,6,7,8,9].

Current image sensors come in CCD (charge-coupled device) or CMOS (complementary metal–oxide–semiconductor) types, both of which are sensitive to thermal noise. Therefore, CFA pattern images taken in low illumination suffer from Poisson-distributed noise. The noise in the CFA pattern image has a large effect on the reconstruction of the color image, as the noise in the noisy pixels spreads to neighboring regions during the demosaicing process. Denoising is also a challenging task, since at least two-thirds of the data are missing. Complex aliasing problems can occur in the demosaicing process if poor denoising is applied beforehand. As most digital camera pipelines are sequential, demosaicing and denoising are quite often performed independently and sequentially. This leads to an irreversible error accumulation, since both demosaicing and denoising are ill-posed problems and the error occurring in one of the procedures cannot be undone in the other. It has been shown that handling the errors of denoising and demosaicing simultaneously has advantages, and some joint demosaicing and denoising methods based on optimization techniques have been developed [10,11,12]. Recently, inspired by the success of the convolutional neural network (CNN) in many image processing tasks, methods which use CNNs for joint demosaicing and denoising have been proposed [13,14,15]. The work of [13] is a first attempt to apply a learning approach to demosaicing, and the work in [14] is a first attempt to use a convolutional network for joint demosaicing and denoising, but it works only on a single noise level. The work in [15] exposes a runtime parameter and trains the network so that it adapts to a wider range of noise levels, but it still works only with relatively low levels of noise. The work of [16] proposes a residue learning neural network structure for the joint demosaicing and denoising problem based on an analysis of the problem using sparsity models, and that of [17] presents a method to learn demosaicing directly from mosaiced images without requiring ground truth RGB data, and shows that a specific burst improves the fine-tuning of the network. Furthermore, the work in [18] proposes a demosaicing network which can be described as an iterative process, and proposes a principled way to design a denoising network architecture. Such CNN-based methods require large amounts of training data and normally work poorly under varying noise levels.

In this paper, we propose a deep image prior based method which needs only the noisy image as the training data for the demosaicing. The input to the proposed network is the sum of a constant noise and a varying noise. We give mathematical justifications as to why the added varying input noise results in the denoising of the demosaiced image. Furthermore, we propose a color filter array which suits the proposed demosaicing method and show experimentally that the proposed method yields good joint demosaicing and denoising results.

2. Related Works

The following works are related to the proposed method. The proposed method can be seen as a variation of the following works fitted to the joint demosaicing and denoising problem.

2.1. Deep Image Prior

Recently, in [19], a deep image prior (DIP) has been proposed for image restoration. The DIP is a type of convolutional neural network which resembles an auto-encoder, but which is trained with a single image $\mathbf{x}_0$; i.e., only with the image to be restored. The original DIP converts a 3D tensor $\mathbf{z}$ into a restored image $f_\theta(\mathbf{z})$, where $f_\theta(\cdot)$ denotes the deep image prior network with parameters $\theta$. The tensor $\mathbf{z}$ is filled with random noise from a uniform distribution. The DIP can be trained to inpaint an image with a loss function as follows:

$$L = D\big(\mathbf{m} \odot f_\theta(\mathbf{z}),\ \mathbf{m} \odot \mathbf{x}_0\big), \qquad (1)$$

where $\mathbf{m}$ is a binary mask with values of zero corresponding to the missing pixels to be inpainted and values of one corresponding to the existing pixels which have to be kept, and $\odot$ is an element-wise multiplication operator. Here, $D$ is a distance measure, which is normally set as the square of the difference operator; i.e., $D(\mathbf{a}, \mathbf{b}) = \|\mathbf{a} - \mathbf{b}\|^2$. The minimization of $L$ in Equation (1) with respect to the parameters $\theta$ of the DIP network has been shown to be capable of inpainting an image; i.e., the minimization results in an inpainted image $f_\theta(\mathbf{z})$. Inpainting and demosaicing are similar in that they try to fill in missing pixels. The difference is that in inpainting the existing pixels have full channel information, i.e., all R, G and B values are available, whereas in demosaicing the existing pixels have only one of the R, G and B values.
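As a small illustration of the masked loss described above, the following NumPy sketch evaluates the squared difference only at the pixels where the mask is one. The function name `masked_inpainting_loss` and the toy arrays are hypothetical and not taken from [19]; the network output is replaced by a fixed array, so this is a sketch of the loss only, not of the training procedure.

```python
import numpy as np

def masked_inpainting_loss(output, target, mask):
    """Squared-error loss evaluated only where mask == 1 (hypothetical helper)."""
    diff = mask * output - mask * target
    return float(np.sum(diff ** 2))

# Toy 2x2 single-channel example: the bottom-right pixel is "missing".
target = np.array([[1.0, 2.0], [3.0, 0.0]])
mask = np.array([[1.0, 1.0], [1.0, 0.0]])
# Stand-in for a network output; the value at the missing pixel is arbitrary.
output = np.array([[1.0, 2.5], [3.0, 9.0]])

loss = masked_inpainting_loss(output, target, mask)
print(loss)
```

Only the mismatch at the known pixel (2.5 versus 2.0) contributes to the loss; the value produced at the masked-out pixel is unconstrained, which is what leaves the network free to fill it in.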

2.2. Variational Auto Encoder

The variational auto-encoder [20] is a stochastic variant of the auto-encoder which consists of an encoder $q_\phi(\mathbf{z}|\mathbf{x})$ and a decoder $p_\theta(\mathbf{x}|\mathbf{z})$, where both the encoder and decoder are neural networks with parameters $\phi$ and $\theta$, respectively. Given an image $\mathbf{x}$ as the input, the encoder outputs the parameters of a Gaussian probability distribution. After that, samples are drawn from this distribution to get a noise input $\mathbf{z}$ to the decoder. The space from which $\mathbf{z}$ is sampled is stochastic and of lower dimension than the space of $\mathbf{x}$. By sampling different samples each time, the variational auto-encoder learns to generate different images. The proposed method also samples noise from a Gaussian distribution, but, unlike in the variational auto-encoder, the noise consists of a constant noise and a varying noise and is the input to the network rather than an intermediate input to the decoder.

3. Variational Deep Image Prior for Joint Demosaicing and Denoising

In this section, we propose a variational deep image prior (DIP) for joint demosaicing and denoising. We denote by $f_\theta$ the DIP network, and use the same network structure as the DIP for inpainting as defined in [19]; i.e., a U-Net type network which is downsampled five times and upsampled five times. The loss function for the variational DIP differs from that of the original DIP as follows:

$$L = D\big(\mathbf{m} \odot f_\theta(\mathbf{z}_c + \mathbf{z}_v),\ \mathbf{m} \odot \mathbf{y}_k\big). \qquad (2)$$

Here, $\mathbf{z}_c$ and $\mathbf{z}_v$ denote the constant noise and the varying noise, respectively, both drawn from a Gaussian distribution, $\mathbf{y}_k$ is the target image at the $k$-th training step, and $\mathbf{m}$ is the binary mask corresponding to the proposed random CFA; i.e., it consists of three channels. Each channel consists of 33% of random pixels with the value one and 66% of random pixels with the value zero. Unlike in the inpainting problem, the positions of the pixels having the value one are different for each channel. The input to $f_\theta$ is the constant noise ($\mathbf{z}_c$) until the $(P-1)$-th training step. Then, after the $(P-1)$-th training step, the input becomes the sum of the constant noise ($\mathbf{z}_c$) and a varying noise ($\mathbf{z}_v$), where the varying noise is newly generated and differs for each training step. The effect of adding this varying noise will be explained later. The target image $\mathbf{y}_k$ also differs for the different iteration steps:
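The input schedule described above can be sketched as follows. This is a minimal NumPy illustration, not the authors' implementation: the function name `network_input`, the tensor shape, and the standard deviation `sigma_v` of the varying noise are assumptions made for the example, and `P` stands for the step at which the varying noise is switched on.

```python
import numpy as np

def network_input(z_c, k, P, sigma_v, rng):
    """Return the network input for training step k (hypothetical helper).

    Before step P the input is the constant noise alone; from step P on,
    a freshly drawn varying noise is added at every step.
    """
    if k < P:
        return z_c
    z_v = rng.normal(0.0, sigma_v, size=z_c.shape)  # new sample each step
    return z_c + z_v

rng = np.random.default_rng(0)
z_c = rng.normal(0.0, 1.0, size=(8, 8, 3))  # constant noise, drawn once

early = network_input(z_c, k=10, P=500, sigma_v=0.05, rng=rng)
late_a = network_input(z_c, k=500, P=500, sigma_v=0.05, rng=rng)
late_b = network_input(z_c, k=501, P=500, sigma_v=0.05, rng=rng)
```

Here `early` is identical to `z_c`, while `late_a` and `late_b` differ from each other because the varying noise is redrawn at each step.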

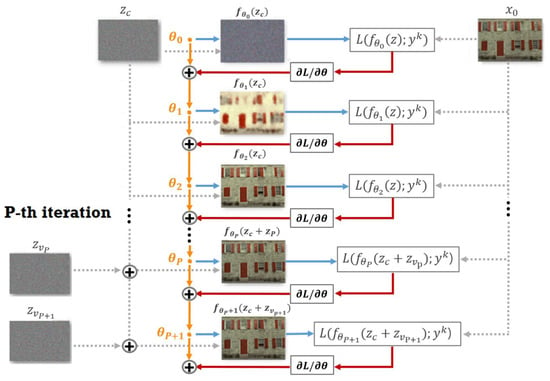

For the steps $k < P$, the target $\mathbf{y}_k = \mathbf{x}_0$ is a three channel image, wherein each channel contains 33% of either the R, G or B intensity values at the pixel positions where the R, G and B values are sensed by the random CFA, respectively, and 66% of zero values at the remaining positions. Furthermore, we assume that $\mathbf{x}_0$ contains noise. Therefore, if $\mathbf{y}_k = \mathbf{x}_0$ for all steps $k$, then the reconstructed image will converge to a noisy demosaiced image. To avoid this, after the step $P$, the target image $\mathbf{y}_k$ becomes a weighted average of the previous target image $\mathbf{y}_{k-1}$, the given noisy image $\mathbf{x}_0$ and the network output for the constant noise alone, $f_\theta(\mathbf{z}_c)$. The weights between these images are controlled by two parameters, which we set to 0.003 and 0.007, respectively, for all images in the experiments. It should be noted that $f_\theta(\mathbf{z}_c)$ differs from the current output $f_\theta(\mathbf{z}_c + \mathbf{z}_v)$. The image $f_\theta(\mathbf{z}_c)$ is a denoised version of $f_\theta(\mathbf{z}_c + \mathbf{z}_v)$, and adding it denoises the target image $\mathbf{y}_k$. Adding $\mathbf{x}_0$ has the effect of adding back the noise, a trick which is widely used in denoising algorithms to restore fine details to the target image. Adding the previous target image $\mathbf{y}_{k-1}$ keeps a record of the denoised target image. By using the improved target image $\mathbf{y}_k$, the network is no longer trained toward the given noisy image, which results in a better denoised output image. Figure 1 shows the workflow of the proposed method. Here, the orange bullets and the solid lines refer to the neural network used in the computation with the specific parameters $\theta_k$, and the dashed lines refer to the inputs to the network. Again, it can be observed that $\mathbf{z}_c$ remains constant, while $\mathbf{z}_v$ and $\mathbf{y}_k$ change over time.

Figure 1.

Diagram of the proposed method. The varying noise $\mathbf{z}_v$ is added after the $(P-1)$-th iteration.

Next, we give mathematical justifications for the joint demosaicing and denoising property of the variational DIP. First, the reason why the DIP performs demosaicing can be found in [21], where it is proven that an auto-encoder with an insufficient number of input channels performs an approximation of the target signal under the Hankel structured low-rank constraint:

$$\min_{f \in \mathbb{R}^{n}} \|f^{*} - f\|^{2} \quad \text{subject to} \quad \operatorname{rank}\,\mathbb{H}_{d|p}(f) \le r < pd, \qquad (4)$$

where $\mathbb{H}_{d|p}(f)$ denotes the Hankel matrix of $f$ with $p$ input channels and a convolution filter of size $d$, and $f^{*}$ is the target signal. The above approximation can be easily extended to the two-dimensional case. Thus, taking $f$ to be the output of the DIP and $f^{*}$ to be the CFA patterned image in the two-dimensional case, we see that we get a low rank approximation of the CFA patterned image. It has already been shown in [22] that a low rank approximation can perform a reconstruction of missing pixels. When applied to the CFA patterned image, this results in a demosaicing. The Hankel structured low-rank approximation in Equation (4) performs a better approximation than the method in [22], since in [22] the low rank approximation is with respect to the Fourier basis, whereas in Equation (4) it is with respect to a learned basis which best reconstructs the given image, and therefore, yields a better demosaicing result.
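To make the Hankel structured low-rank idea concrete, the following NumPy sketch builds a wrap-around Hankel matrix of a one-dimensional signal and applies a truncated SVD. It is only an illustration of the general principle under simplifying assumptions (a single input channel, a single sinusoid as the clean signal, and a fixed rank of 2); the helper names are hypothetical, and no learned basis as in [21] is involved.

```python
import numpy as np

def wraparound_hankel(f, d):
    """Build the n x d Hankel matrix with entries f[(i + j) mod n]."""
    n = len(f)
    idx = (np.arange(n)[:, None] + np.arange(d)[None, :]) % n
    return f[idx]

def low_rank_approx(H, r):
    """Best rank-r approximation of H in the Frobenius norm (truncated SVD)."""
    U, s, Vt = np.linalg.svd(H, full_matrices=False)
    return (U[:, :r] * s[:r]) @ Vt[:r, :]

n, d = 64, 8
t = np.arange(n)
clean = np.cos(2 * np.pi * 3 * t / n)          # one sinusoid
H_clean = wraparound_hankel(clean, d)
rank_clean = np.linalg.matrix_rank(H_clean)    # a single sinusoid gives rank 2

rng = np.random.default_rng(0)
noisy = clean + 0.1 * rng.normal(size=n)
H_noisy = wraparound_hankel(noisy, d)
H_lr = low_rank_approx(H_noisy, r=2)

err_before = np.linalg.norm(H_noisy - H_clean)
err_after = np.linalg.norm(H_lr - H_clean)
print(rank_clean, err_before, err_after)
```

The Hankel matrix of a smooth signal has low rank, whereas noise spreads over all singular directions, so keeping only the dominant singular components brings the matrix closer to that of the clean signal.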

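Now, to consider the effect of adding the varying noise $\mathbf{z}_v$ to the constant noise $\mathbf{z}_c$, we consider a multi-variable vector-valued function $\mathbf{f}: \mathbb{R}^N \to \mathbb{R}^M$ which can be expressed as a set of $M$ multi-variable scalar-valued functions $f_i: \mathbb{R}^N \to \mathbb{R}$:

$$\mathbf{f}(\mathbf{z}) = [f_1(\mathbf{z}), f_2(\mathbf{z}), \ldots, f_M(\mathbf{z})]^T.$$

The Taylor expansion of the $i$-th ($i = 1, \ldots, M$) component is

$$f_i(\mathbf{z}_c + \mathbf{z}_v) = f_i(\mathbf{z}_c) + \mathbf{g}_i^T \mathbf{z}_v + \frac{1}{2}\mathbf{z}_v^T \mathbf{H}_i \mathbf{z}_v + \cdots, \qquad (5)$$

where $\mathbf{z}_v = [z_{v,1}, \ldots, z_{v,N}]^T$ is a vector. The gradient vector $\mathbf{g}_i$ and the Hessian matrix $\mathbf{H}_i$ are the first and second order derivatives of the function $f_i$, evaluated at $\mathbf{z}_c$ and defined as:

$$\mathbf{g}_i = \left[\frac{\partial f_i}{\partial z_1}, \ldots, \frac{\partial f_i}{\partial z_N}\right]^T, \qquad [\mathbf{H}_i]_{mn} = \frac{\partial^2 f_i}{\partial z_m \partial z_n}.$$

We can extend Equation (5) to $\mathbf{f}$ as a vector form. The first two terms can be written as

$$\mathbf{f}(\mathbf{z}_c) + \mathbf{J}(\mathbf{z}_c)\,\mathbf{z}_v,$$

where $\mathbf{J}$ is the Jacobian matrix defined over $\mathbf{f}$. The second order term requires a tensor form to be expressed, which is difficult to write in vector form, and therefore, we replace the second order term with the error term $\mathbf{e}$. Then, we can express $\mathbf{f}(\mathbf{z}_c + \mathbf{z}_v)$ by the Taylor expansion

$$\mathbf{f}(\mathbf{z}_c + \mathbf{z}_v) = \mathbf{f}(\mathbf{z}_c) + \mathbf{J}(\mathbf{z}_c)\,\mathbf{z}_v + \mathbf{e}.$$

For two different varying noises $\mathbf{z}_v^{(1)}$ and $\mathbf{z}_v^{(2)}$ with error terms $\mathbf{e}^{(1)}$ and $\mathbf{e}^{(2)}$, this results in

$$\mathbf{f}(\mathbf{z}_c + \mathbf{z}_v^{(1)}) - \mathbf{f}(\mathbf{z}_c + \mathbf{z}_v^{(2)}) = \mathbf{J}(\mathbf{z}_c)\,\Delta\mathbf{z}_v + \Delta\mathbf{e}, \qquad (6)$$

where

$$\Delta\mathbf{z}_v = \mathbf{z}_v^{(1)} - \mathbf{z}_v^{(2)}, \qquad \Delta\mathbf{e} = \mathbf{e}^{(1)} - \mathbf{e}^{(2)},$$

and $\mathbf{J}(\mathbf{z}_c)$ is the Jacobian matrix defined over the vector function $\mathbf{f}$:

$$\mathbf{J} = \begin{bmatrix} \dfrac{\partial f_1}{\partial z_1} & \cdots & \dfrac{\partial f_1}{\partial z_N} \\ \vdots & \ddots & \vdots \\ \dfrac{\partial f_M}{\partial z_1} & \cdots & \dfrac{\partial f_M}{\partial z_N} \end{bmatrix}. \qquad (7)$$

Equation (6) implies that if $\Delta\mathbf{z}_v \neq \mathbf{0}$ and $\mathbf{J}(\mathbf{z}_c)\,\Delta\mathbf{z}_v \neq -\Delta\mathbf{e}$, then $\mathbf{f}(\mathbf{z}_c + \mathbf{z}_v^{(1)}) \neq \mathbf{f}(\mathbf{z}_c + \mathbf{z}_v^{(2)})$; i.e., the outputs $\mathbf{f}(\mathbf{z}_c + \mathbf{z}_v^{(1)})$ and $\mathbf{f}(\mathbf{z}_c + \mathbf{z}_v^{(2)})$ cannot be the same. This contradicts the loss function in Equation (2), whose minimization forces the outputs to converge to the same image for all different inputs $\mathbf{z}_c + \mathbf{z}_v$. It should be noted that, with very high probability, $\Delta\mathbf{z}_v \neq \mathbf{0}$ and $\mathbf{J}(\mathbf{z}_c)\,\Delta\mathbf{z}_v \neq -\Delta\mathbf{e}$, since $\mathbf{z}_v^{(1)}$ and $\mathbf{z}_v^{(2)}$ are random noises. Therefore, the different inputs $\mathbf{z}_c + \mathbf{z}_v$ act as regularizers which eliminate the components with small norm energy from the output $\mathbf{f}(\mathbf{z}_c + \mathbf{z}_v)$. As the components with small energy will be mostly the noise, this will result in a noise removal of the output.

Furthermore, if we take the expectation of the different outputs with respect to $\mathbf{z}_v$, we get

$$E_{\mathbf{z}_v}\big[\mathbf{f}(\mathbf{z}_c + \mathbf{z}_v)\big] = \mathbf{f}(\mathbf{z}_c) + \mathbf{J}(\mathbf{z}_c)\,E[\mathbf{z}_v] + E[\mathbf{e}] \approx \mathbf{f}(\mathbf{z}_c), \qquad (8)$$

where the approximation holds for a zero-mean $\mathbf{z}_v$ and a small expected error term. This shows that if we put $\mathbf{z}_c$ as the input after the DIP has been trained, the output will be approximately the average of the outputs for different $\mathbf{z}_v$. This averaging has a further denoising effect which will remove the remaining noise.

The fact that different inputs $\mathbf{z}_c + \mathbf{z}_v$ result in different outputs can also be shown by the mean value theorem. According to the mean value theorem, there always exists a point $\mathbf{c}$ on the line segment between $\mathbf{a}$ and $\mathbf{b}$ such that the following equality holds:

$$f_i(\mathbf{b}) - f_i(\mathbf{a}) = \nabla f_i(\mathbf{c})^T (\mathbf{b} - \mathbf{a}). \qquad (9)$$

When $\mathbf{a} \neq \mathbf{b}$, the right-hand side of Equation (9) is, with very high probability, non-zero, since it is very unlikely that $\nabla f_i(\mathbf{c})$ and $\mathbf{b} - \mathbf{a}$ are orthogonal to each other. Therefore, with very high probability,

$$|f_i(\mathbf{b}) - f_i(\mathbf{a})| = \|\nabla f_i(\mathbf{c})\|\,\|\mathbf{b} - \mathbf{a}\|\,|\cos\varphi| > 0, \qquad (10)$$

where $\varphi$ is the angle between $\nabla f_i(\mathbf{c})$ and $\mathbf{b} - \mathbf{a}$. This means that if there is a difference between the inputs, then the outputs of the DIP cannot be the same, so there will be an averaging which removes the noise.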
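The first-order argument above can be checked numerically on a toy function. The sketch below replaces the DIP by a small smooth map $\tanh(\mathbf{W}\mathbf{z})$, which is purely an assumption for illustration; the matrix `W`, the dimensions, and the noise level `sigma_v` are hypothetical. It compares the outputs for many varying noises with the first-order Taylor prediction and with the output for the constant noise alone.

```python
import numpy as np

rng = np.random.default_rng(0)
N, M = 16, 8
W = rng.normal(size=(M, N)) / np.sqrt(N)   # toy stand-in for the network weights

def f(z):
    """Smooth toy map R^N -> R^M used in place of the DIP."""
    return np.tanh(W @ z)

def jacobian(z):
    """Jacobian of tanh(Wz): diag(1 - tanh(Wz)^2) W, shape (M, N)."""
    return (1.0 - np.tanh(W @ z) ** 2)[:, None] * W

z_c = rng.normal(size=N)                       # constant noise
sigma_v = 0.05
z_v = sigma_v * rng.normal(size=(10000, N))    # many zero-mean varying noises

outputs = np.tanh((z_c + z_v) @ W.T)           # f(z_c + z_v), one row per sample
first_order = f(z_c) + z_v @ jacobian(z_c).T   # f(z_c) + J(z_c) z_v

taylor_err = np.max(np.abs(outputs - first_order))        # size of the error term
mean_gap = np.max(np.abs(outputs.mean(axis=0) - f(z_c)))  # average vs. f(z_c)
spread = np.max(np.abs(outputs[0] - outputs[1]))          # two inputs, two outputs
print(taylor_err, mean_gap, spread)
```

In this toy setting, individual outputs differ from one another, while their average stays close to the output for the constant noise, which is the behavior the expectation argument relies on.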

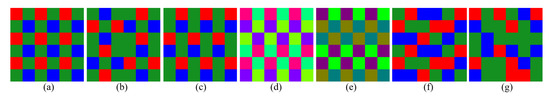

Next, we propose a CFA pattern which we think works well with the proposed demosaicing method. The proposed CFA consists of randomly distributed pixels, where the pixels corresponding to the R, G and B channels each take up 33% of the whole CFA pattern. The design of the proposed CFA is not based on a rigorous analysis, as done in classical CFA designs [23,24,25], but on simple reasoning and experimental results. We reason that if the filters are to learn to generate the R, G and B pixels without any bias for a specific color or a specific position, the best training method would be to train the filters to generate any random color at any random position. For example, if the CFA pattern has 50% green pixels, as in the Bayer format, the convolutional filters will be trained mainly to generate the green pixels from the noise. When trained like this, the same convolutional filters may be less effective in generating the R or B pixels. Therefore, we reason that the amount of information should be the same for all three channels; i.e., the CFA should consist of 33% of R, G and B pixels each. In the same manner, to avoid a bias for a specific position, we propose a pattern with randomly distributed color pixels. Experimental results show that the randomly patterned CFA works better with the proposed demosaicing method than the Bayer pattern or the Fuji X-Trans pattern. Figure 2 shows the different color filter arrays (CFAs) which are used in the experiments, including the proposed CFA.
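A random CFA of this kind can be represented as a three-channel binary mask. The sketch below is one possible construction, assuming that each pixel senses exactly one color and that the color is drawn independently per pixel with the given ratios; the function names and the RGGB layout chosen for the Bayer comparison are assumptions for illustration only.

```python
import numpy as np

def random_cfa_mask(height, width, ratios=(1, 1, 1), seed=0):
    """Return an (H, W, 3) binary mask with exactly one active channel per pixel."""
    rng = np.random.default_rng(seed)
    p = np.asarray(ratios, dtype=float)
    p /= p.sum()
    channel = rng.choice(3, size=(height, width), p=p)  # 0 = R, 1 = G, 2 = B
    return np.eye(3, dtype=np.float32)[channel]         # one-hot along last axis

def bayer_cfa_mask(height, width):
    """Return an (H, W, 3) binary mask for an RGGB Bayer layout."""
    mask = np.zeros((height, width, 3), dtype=np.float32)
    mask[0::2, 0::2, 0] = 1.0  # R
    mask[0::2, 1::2, 1] = 1.0  # G
    mask[1::2, 0::2, 1] = 1.0  # G
    mask[1::2, 1::2, 2] = 1.0  # B
    return mask

random_mask = random_cfa_mask(256, 256)   # 1:1:1 ratio
bayer_mask = bayer_cfa_mask(256, 256)

random_fractions = random_mask.mean(axis=(0, 1))  # close to one third each
bayer_fractions = bayer_mask.mean(axis=(0, 1))    # one quarter, one half, one quarter
print(random_fractions, bayer_fractions)
```

Passing `ratios=(1, 2, 1)` gives a random pattern with the same color proportions as the Bayer layout, which corresponds to the 1:2:1 variant listed in Figure 2.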

Figure 2.

Different color filter arrays (CFAs): (a) Bayer CFA [26]; (b) Fuji X-Trans CFA [27]; (c) Lukac [23]; (d) Hirakawa Pattern-A [25]; (e) Hirakawa Pattern-B [25]; (f) proposed CFA (1:1:1); (g) proposed CFA (1:2:1).

4. Experimental Results

We compared the proposed method with other deep-learning-based demosaicing methods on three different datasets. We added different noises generated from Gaussian distributions with different standard deviations of , and , for the R, G and B channels, respectively. This is due to the fact that the R, G and B filters absorb different amounts of light energy. We compared the proposed method with the ADMM method in [28] as a representative of the non-deep-learning demosaicing methods; the sequential energy minimization (SEM) method [14]; the DemosaicNet [15] with two different CFAs, i.e., the DemosaicNet with the Bayer CFA (DNetB) and the DemosaicNet with the Fuji X-Trans CFA (DNetX); and the plain DIP [19]. We made quantitative comparisons with the PSNR (peak signal-to-noise ratio), the CPSNR (color peak signal-to-noise ratio), the SSIM (structural similarity index), the FSIM (feature similarity index) and the FSIMc (feature similarity index chrominance) measures and summarized the results in Tables 2–4. The values in the tables are the average values for the Kodak images and the McMaster (also known as the IMAX) images, respectively. Furthermore, the values corresponding to the red, green and blue channels are the average values for those particular channels, respectively. The two weighting parameters in Equation (3) were set to 0.003 and 0.007, respectively, throughout all the experiments.
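For reference, the per-channel PSNR and the CPSNR can be computed as in the following NumPy sketch. It assumes 8-bit images with a peak value of 255 and takes the CPSNR as the PSNR of the mean squared error pooled over all pixels and all three color channels, which is a common definition; the exact evaluation code used for the tables is not given in the text, and the function names are hypothetical.

```python
import numpy as np

def psnr(reference, estimate, peak=255.0):
    """PSNR in dB for arrays of the same shape."""
    diff = reference.astype(np.float64) - estimate.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / mse))

def channel_psnr(reference, estimate, peak=255.0):
    """PSNR computed separately for each color channel of an (H, W, 3) image."""
    return [psnr(reference[..., c], estimate[..., c], peak)
            for c in range(reference.shape[-1])]

def cpsnr(reference, estimate, peak=255.0):
    """Color PSNR: the MSE is averaged jointly over pixels and channels."""
    return psnr(reference, estimate, peak)

# Toy example: only the red channel is perturbed, by a constant offset of 5.
reference = np.zeros((4, 4, 3))
estimate = reference.copy()
estimate[..., 0] += 5.0

per_channel = channel_psnr(reference, estimate)
joint = cpsnr(reference, estimate)
print(per_channel, joint)
```

Because the error is confined to one channel, the red-channel PSNR is lower than the CPSNR, while the untouched green and blue channels report an infinite PSNR.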

Table 1 shows the results of the performance comparison when the proposed method was applied to the different CFA patterns; i.e., the Bayer [26], the Fuji X-Trans [27], the Lukac [23], the Hirakawa [25] and the proposed CFA patterns, with different noise levels. For the Hirakawa CFA we used the pattern-A and pattern-B patterns, which have RGB ratios of 1:1:1 and 1:2:1, respectively. Likewise, for the proposed random patterned CFA, we used the Random1 pattern (RGB ratio of 1:1:1) and the Random2 pattern (RGB ratio of 1:2:1). The CPSNR, SSIM and FSIMc values are the average values of the images in the Kodak image dataset. When the noise is low, the Hirakawa pattern-A CFA shows the largest CPSNR and SSIM values. However, when the noise increases, the proposed random pattern shows larger CPSNR, SSIM and FSIMc values. This may be due to the fact that when the noise increases, the tasks of demosaicing and denoising become similar (i.e., the task of removing the random noise and the task of filling in random colors become similar), so finding the parameters which perform the demosaicing and denoising tasks simultaneously becomes easier with the proposed random CFA than with other CFAs. It can be seen that the proposed CFA pattern mostly shows the largest value, especially when the noise is large.

Table 1.

Comparison of the CPSNR, SSIM and FSIMc values for the different CFA patterns used with the proposed method on the Kodak image dataset.

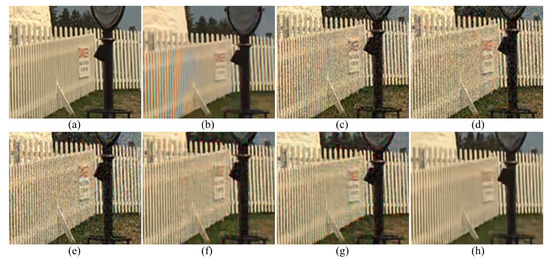

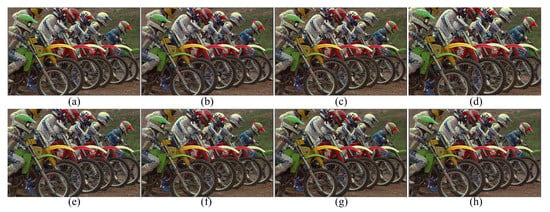

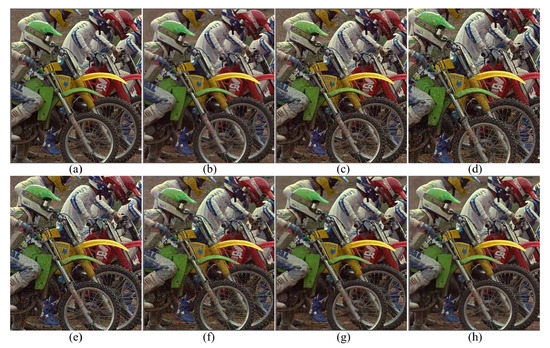

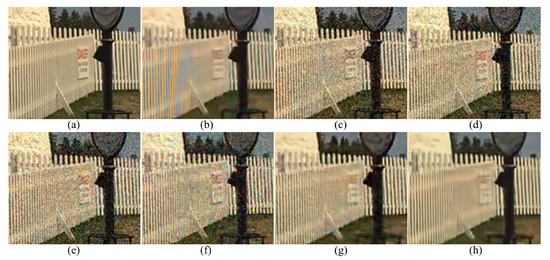

Figure 3 shows the results of the different demosaicing methods on the first dataset with color noise of standard deviations , and for the R, G and B channels, respectively. The parameter P in Equation (3) is set to 1200 for the experiments with this color noise, and to 500 for all the other experiments. When the noise is light, the SEM, the DNetB and the DNetX also produce good joint demosaicing and denoising results. The SEM shows the best quantitative results in the PSNR values for the Kodak dataset, as can be seen in Table 2. However, the proposed method achieves the best results in the SSIM and the FSIM measures for all datasets, and the best PSNR values for the McMaster dataset. Figures 4 and 5 and Tables 2–4 show the results on the dataset with color noise of standard deviations , and . The ADMM method achieved the highest CPSNR value for the McMaster dataset, because it incorporates the powerful BM3D denoising [29] and the total variation minimization into a single framework, which results in a large denoising power. Therefore, we also experimented with a combination of the proposed method and the BM3D. In this case, the proposed training method can focus more on finding the parameters for the demosaicing task, leaving a large part of the denoising to the BM3D, which results in finding effective parameters for demosaicing. The results of the SEM, DNetB and DNetX are those obtained without the external BM3D denoising method. The proposed + BM3D outperforms the other methods on the Kodak dataset with respect to the PSNR and SSIM measures. As the noise increases, the ADMM, SEM, DNetB and DNetX result in severe color artifacts, as can be observed from the fence regions in the enlarged images in Figure 5b–e. However, the DIP and the proposed method overcome such color artifacts due to the inherent rank minimization property. The figures are selected according to the best PSNR values, which is why the figures for the DIP are a little more blurry than the figures for the proposed method. The DIP reconstructs the noise when reconstructing the high frequency components, while the proposed method does not. Finally, Figures 6 and 7 and Tables 2–4 show the results on the dataset with color noise of standard deviations , and . For this dataset, the non-deep-learning ADMM method outperforms all the deep-learning-based methods, including the proposed method, in the quantitative measures. However, the proposed method outperforms all other deep-learning-based methods. Furthermore, while the ADMM shows large aliasing artifacts, as can be seen in Figure 8b, the proposed method is free from such artifacts. Again, it should be taken into account that this is the result of training with the noisy CFA pattern image only. Furthermore, we fixed all the hyper-parameters of the network for all the different noise levels, which indicates that the proposed method is not sensitive to the noise level.

Figure 3.

Reconstruction results for the Kodak number 19 image with noise levels , and . (a) Original, (b) ADMM [28], (c) SEM [14], (d) DNetB [15], (e) DNetX [15], (f) DIP [19], (g) proposed and (h) proposed + BM3D.

Table 2.

Comparison of the PSNR values among the various demosaicing methods on the Kodak and the McMaster image datasets.

Figure 4.

Reconstruction results for the Kodak number 19 image with noise levels , and . (a) Original, (b) ADMM [28], (c) SEM [14], (d) DNetB [15], (e) DNetX [15], (f) DIP [19], (g) proposed and (h) proposed + BM3D.

Figure 5.

Enlarged regions of Figure 4. (a) Original, (b) ADMM [28], (c) SEM [14], (d) DNetB [15], (e) DNetX [15], (f) DIP [19], (g) proposed and (h) proposed + BM3D.

Table 3.

Comparison of the SSIM values among the various demosaicing methods on the Kodak and the McMaster image datasets.

Table 4.

Comparison of the FSIM values among the various demosaicing methods on the Kodak and the McMaster image datasets.

Figure 6.

Reconstruction results for the Kodak number 5 image with noise levels , and . (a) Original, (b) ADMM [28], (c) SEM [14], (d) DNetB [15], (e) DNetX [15], (f) DIP [19], (g) proposed and (h) proposed + BM3D.

Figure 7.

Enlarged regions of Figure 6. (a) Original, (b) ADMM [28], (c) SEM [14], (d) DNetB [15], (e) DNetX [15], (f) DIP [19], (g) proposed and (h) proposed + BM3D.

Figure 8.

Enlarged regions of the denoising results on the Kodak number 19 image with noise levels , and . (a) Original, (b) ADMM [28], (c) SEM [14], (d) DNetB [15], (e) DNetX [15], (f) DIP [19], (g) proposed and (h) proposed + BM3D.

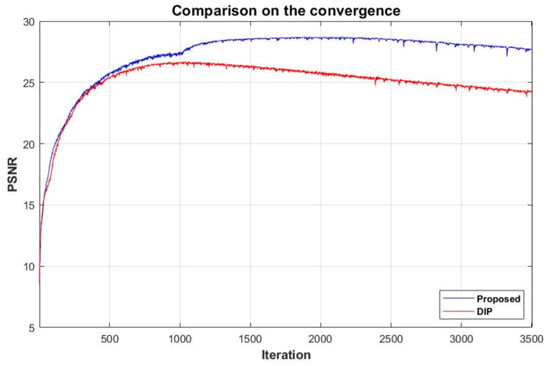

Figure 9 compares the convergence of the PSNR values according to the training iterations of the plain DIP and the proposed variational DIP, respectively. As can be seen, the plain DIP converges to a lower PSNR value as the training step iterates, which is due to the fact that the noise in the target image is reconstructed. In comparison, with the proposed variational DIP, the noise is not reconstructed, due to the reasons explained in the previous section. Therefore, the final output image converges to a joint demosaiced and denoised image, which results in a convergence to a higher PSNR value.

Figure 9.

Comparison of the convergence between the DIP and the proposed variational DIP.

Table 5 shows the computational time costs of the different methods. All the methods have been run on a PC with an Intel Core i9-9900K Processor, NVIDIA GeForce RTX 2080 Ti and 32 GB RAM. The proposed method is the slowest of all the methods, which is due to the fact that the proposed method uses a training step for each incoming CFA image. The computational time can be reduced if the proposed method is combined with the meta learning approach. One of the possible methods would be to initialize the neural network with good initial parameters obtained by some pre-training with many images. This should be one of the major topics of further studies.

Table 5.

Computational time costs of the different joint demosaicing and denoising methods.

5. Conclusions

In this paper, we proposed a variational deep image prior for the joint demosaicing and denoising of the proposed random color filter array. We mathematically explained why the variational model results in a demosaicing and denoising result, and experimentally verified the performance of the proposed method. The experimental results showed that the proposed method is superior to other deep-learning-based methods, including the deep image prior network. How to apply the proposed method on the demosaicing of color filter arrays including channels other than the three primary color channels could be the topic of further studies.

Author Contributions

Conceptualization, Y.P., S.L. and J.Y.; methodology, Y.P. and B.J.; software, Y.P. and S.L.; validation, B.J. and J.Y.; formal analysis, S.L. and J.Y.; investigation, J.Y.; resources, Y.P. and B.J.; writing—original draft preparation, Y.P. and S.L.; writing—review and editing, B.J. and J.Y.; visualization, Y.P.; supervision, J.Y.; project administration, J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

The work of J.Y. was supported in part by the National Research Foundation of Korea under grant NRF-2020R1A2C1A01005894 and the work of S.L. was supported by the Basic Science Research Program through the National Research Foundation of Korea under grant NRF-2019R1I1A3A01060150.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Pekkucuksen, I.; Altunbasak, Y. Multiscale gradients-based color filter array interpolation. IEEE Trans. Image Process. 2013, 22, 157–165.

2. Kiku, D.; Monno, Y.; Tanaka, M.; Okutomi, M. Beyond color difference: Residual interpolation for color image demosaicking. IEEE Trans. Image Process. 2016, 25, 1288–1300.

3. Gunturk, B.K.; Altunbasak, Y.; Mersereau, R.M. Color plane interpolation using alternating projections. IEEE Trans. Image Process. 2002, 11, 997–1013.

4. Alleysson, D.; Süsstrunk, S.; Hérault, J. Linear demosaicing inspired by the human visual system. IEEE Trans. Image Process. 2005, 14, 439–449.

5. Gunturk, B.K.; Glotzbach, J.; Altunbasak, Y.; Schafer, R.W.; Mersereau, R.M. Demosaicking: Color filter array interpolation. IEEE Signal Process. Mag. 2005, 22, 44–54.

6. Kimmel, R. Demosaicing: Image reconstruction from color CCD samples. IEEE Trans. Image Process. 1999, 8, 1221–1228.

7. Pei, S.-C.; Tam, I.-K. Effective color interpolation in CCD color filter arrays using signal correlation. IEEE Trans. Circuits Syst. Video Technol. 2003, 13, 503–513.

8. Menon, D.; Calvagno, G. Color image demosaicking: An overview. Signal Process. Image Commun. 2011, 26, 518–533.

9. Dubois, E. Frequency-domain methods for demosaicking of Bayer-sampled color images. IEEE Signal Process. Lett. 2005, 12, 847–850.

10. Hirakawa, K.; Parks, T.W. Joint demosaicing and denoising. IEEE Trans. Image Process. 2006, 15, 2146–2157.

11. Jeon, G.; Dubois, E. Demosaicking of noisy Bayer sampled color images with least-squares luma-chroma demultiplexing and noise level estimation. IEEE Trans. Image Process. 2013, 22, 146–156.

12. Buades, A.; Duran, J. CFA Video Denoising and Demosaicking Chain via Spatio-Temporal Patch-Based Filtering. IEEE Trans. Circuits Syst. Video Technol. 2019.

13. Khashabi, D.; Nowozin, S.; Jancsary, J.; Fitzgibbon, A.W. Joint demosaicing and denoising via learned nonparametric random fields. IEEE Trans. Image Process. 2014, 23, 4968–4981.

14. Klatzer, T.; Hammernik, K.; Knobelreiter, P.; Pock, T. Learning joint demosaicing and denoising based on sequential energy minimization. In Proceedings of the 2016 IEEE International Conference on Computational Photography (ICCP), Evanston, IL, USA, 13–15 May 2016; pp. 1–11.

15. Gharbi, M.; Chaurasia, G.; Paris, S.; Durand, F. Deep Joint Demosaicking and Denoising. ACM Trans. Graph. (TOG) 2016, 35, 1–12.

16. Huang, T.; Wu, F.; Dong, W.; Shi, G.; Li, X. Lightweight Deep Residue Learning for Joint Color Image Demosaicking and Denoising. In Proceedings of the 2018 International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 127–132.

17. Ehret, T.; Davy, A.; Arias, P.; Facciolo, G. Joint Demosaicking and Denoising by Fine-Tuning of Bursts of Raw Images. In Proceedings of the 2019 International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 8868–8877.

18. Kokkinos, F.; Lefkimmiatis, S. Iterative Joint Image Demosaicking and Denoising Using a Residual Denoising Network. IEEE Trans. Image Process. 2019, 28, 4177–4188.

19. Ulyanov, D.; Vedaldi, A.; Lempitsky, V. Deep Image Prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 9446–9454.

20. Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. In Proceedings of the 2nd International Conference on Learning Representations (ICLR 2014), Banff, AB, Canada, 14–16 April 2014.

21. Ye, J.C.; Han, Y.S.; Cha, E. Deep Convolutional Framelets: A General Deep Learning Framework for Inverse Problems. SIAM J. Imaging Sci. 2018, 11, 991–1048.

22. Zhou, J.; Kwan, C.; Ayhan, B. A High Performance Missing Pixel Reconstruction Algorithm for Hyperspectral Images. In Proceedings of the 2nd International Conference on Applied and Theoretical Information Systems, Taipei, Taiwan, 10–12 February 2012; pp. 1–10.

23. Lukac, R.; Plataniotis, K.N. Color Filter Arrays: Design and Performance Analysis. IEEE Trans. Consum. Electron. 2005, 51, 1260–1267.

24. Vaughn, I.J.; Alenin, A.S.; Tyo, J.S. Focal plane filter array engineering I: Rectangular lattices. Opt. Express 2017, 25, 11954–11968.

25. Hirakawa, K.; Wolfe, P.J. Spatio-Spectral Color Filter Array Design for Optimal Image Recovery. IEEE Trans. Image Process. 2008, 17, 1876–1890.

26. Bayer, B. Color Imaging Array. U.S. Patent 3,971,065, 20 July 1976.

27. Fujifilm X-Pro1. Available online: http://www.fujifilmusa.com/products/digital_cameras/x/fujifilm_x_pro1/features (accessed on 23 May 2020).

28. Tan, H.; Zeng, X.; Lai, S.; Liu, Y.; Zhang, M. Joint demosaicing and denoising of noisy Bayer images with ADMM. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP 2017), Beijing, China, 17–20 September 2017; pp. 2951–2955.

29. Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).