RFI Artefacts Detection in Sentinel-1 Level-1 SLC Data Based On Image Processing Techniques

Abstract

1. Introduction

2. RFI Artefact

3. Materials

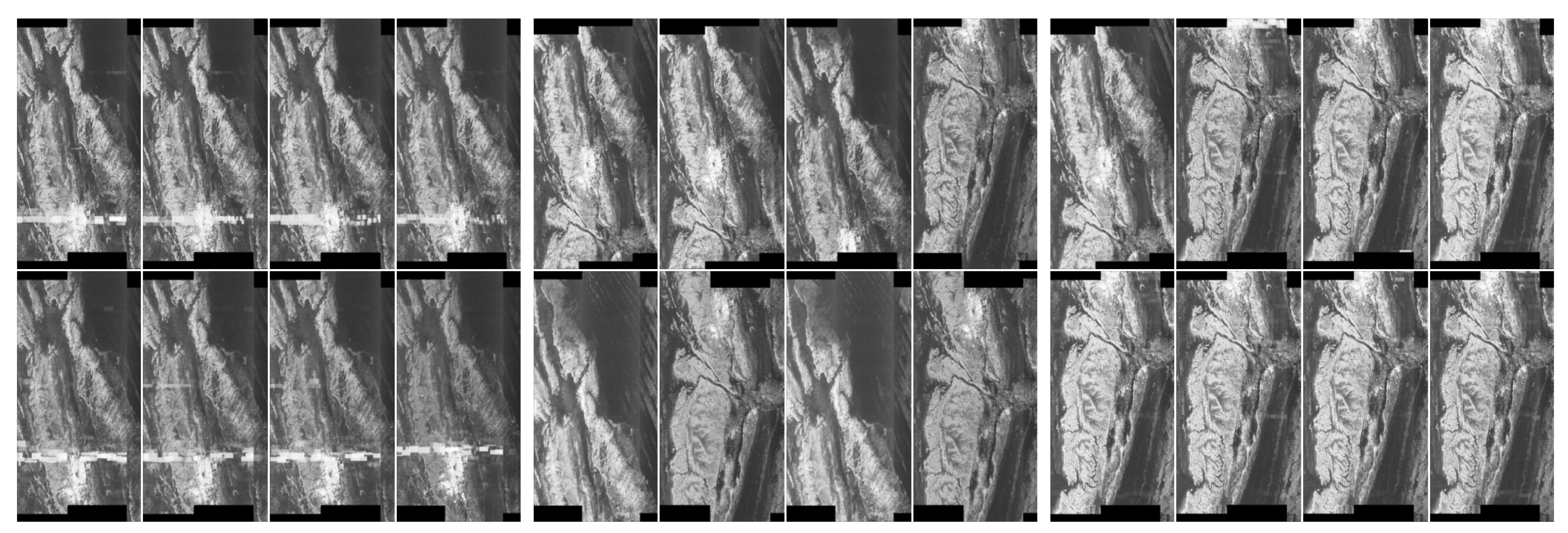

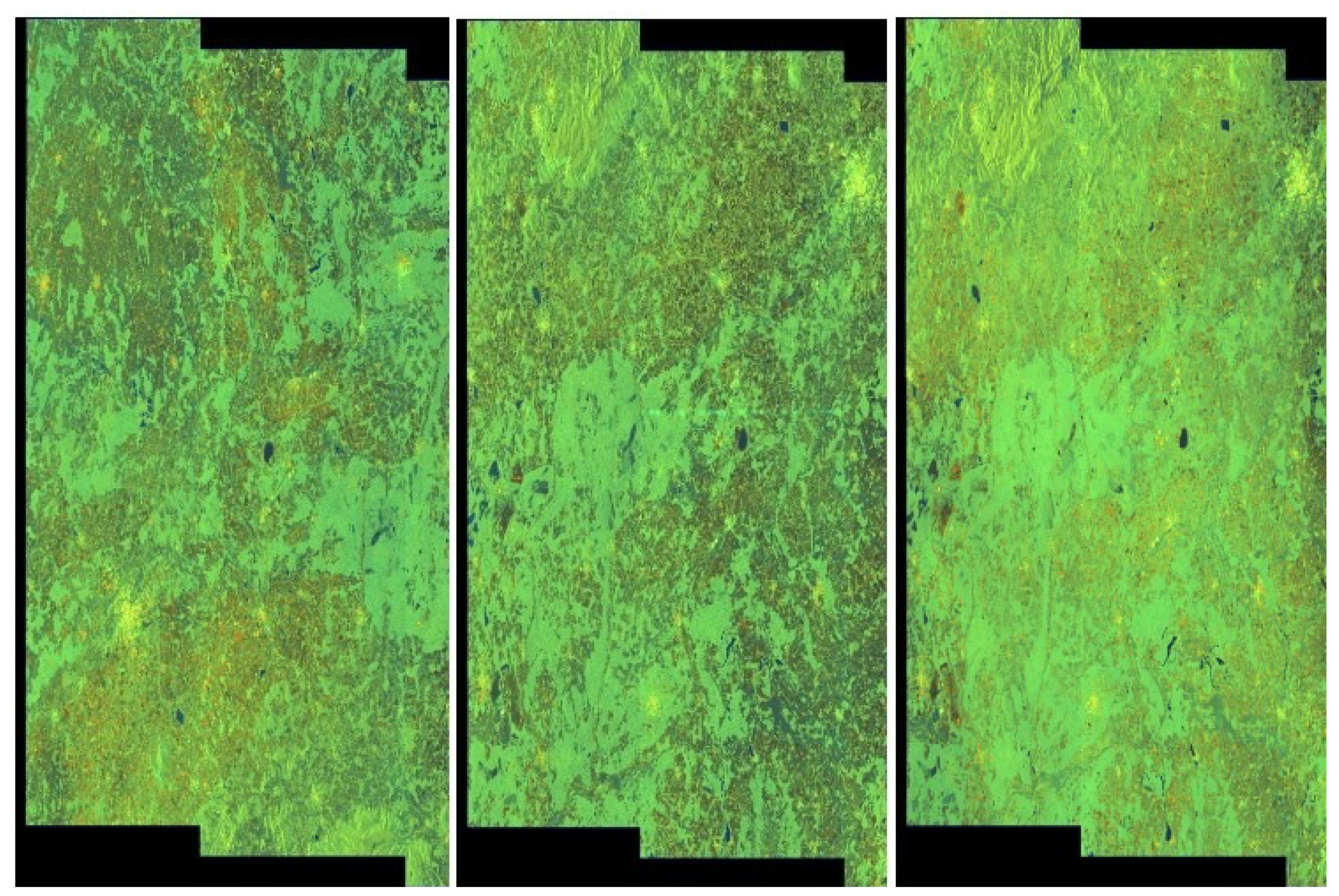

3.1. Source Data

3.2. Experimental Datasets

4. Methodology

4.1. Digital Representation of the Image

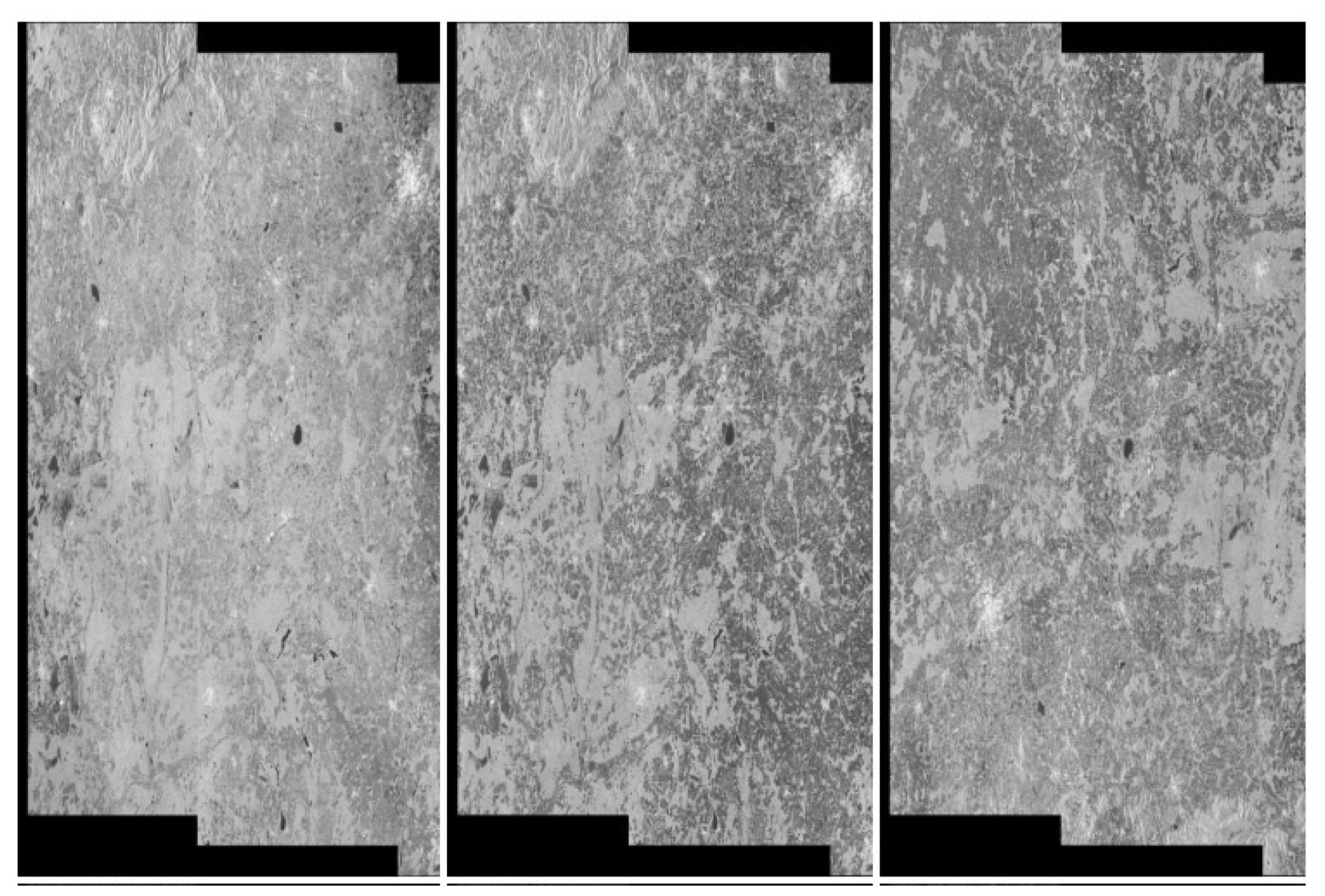

4.2. Conversion from RGB to Greyscale

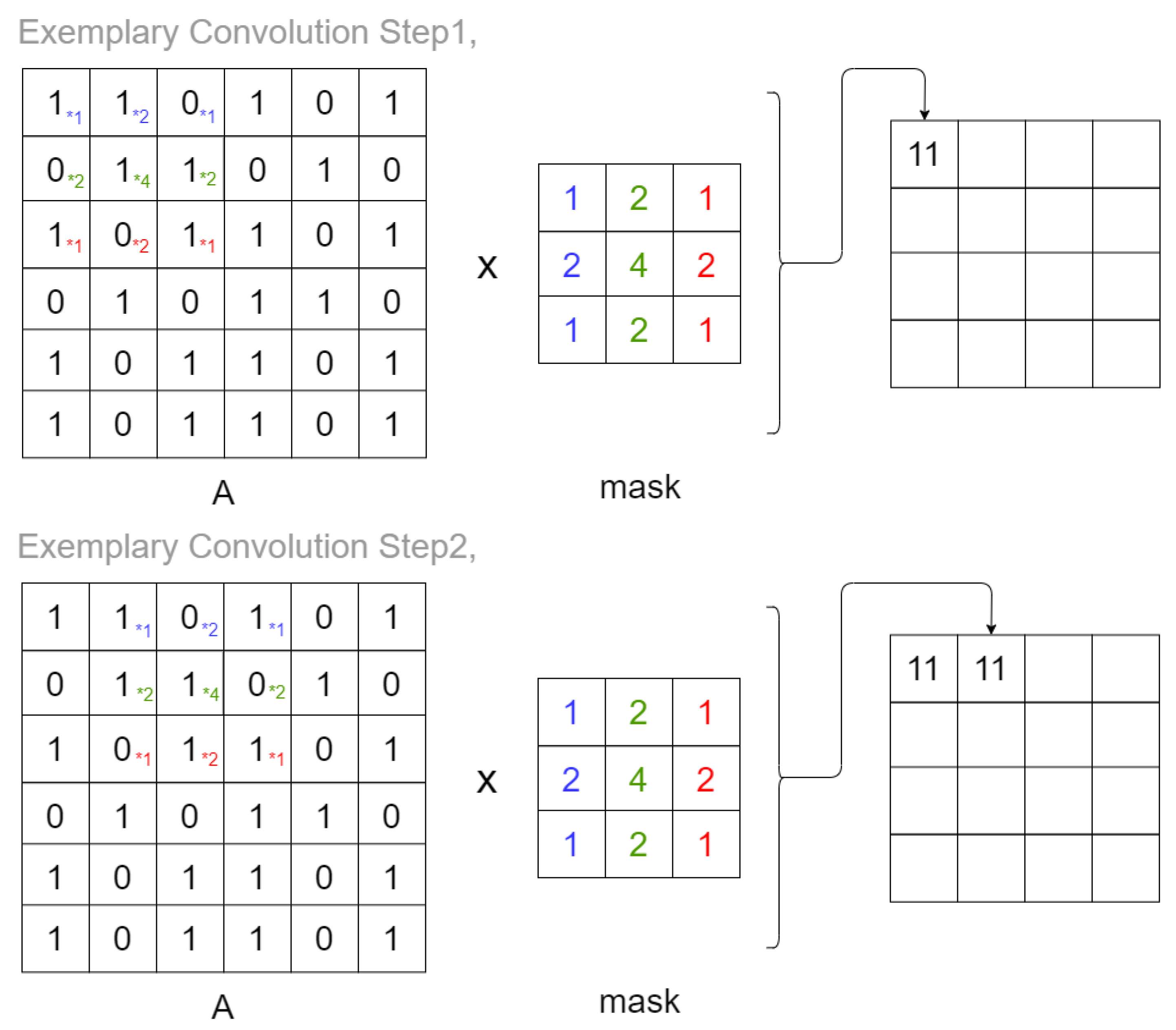

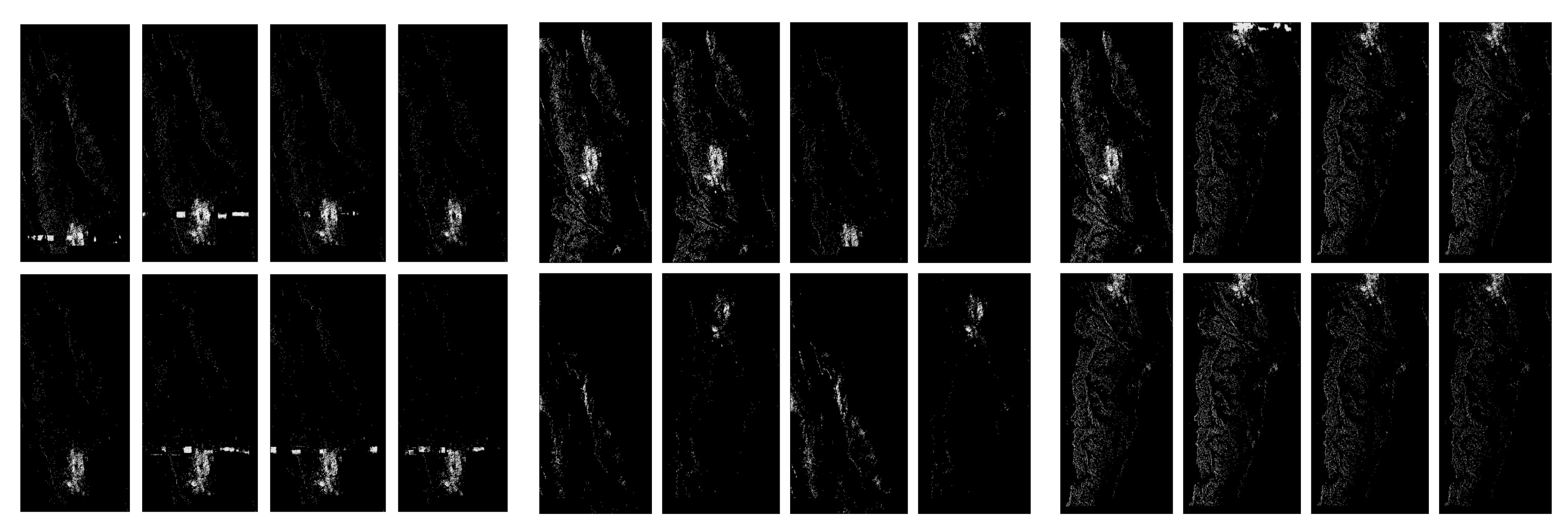

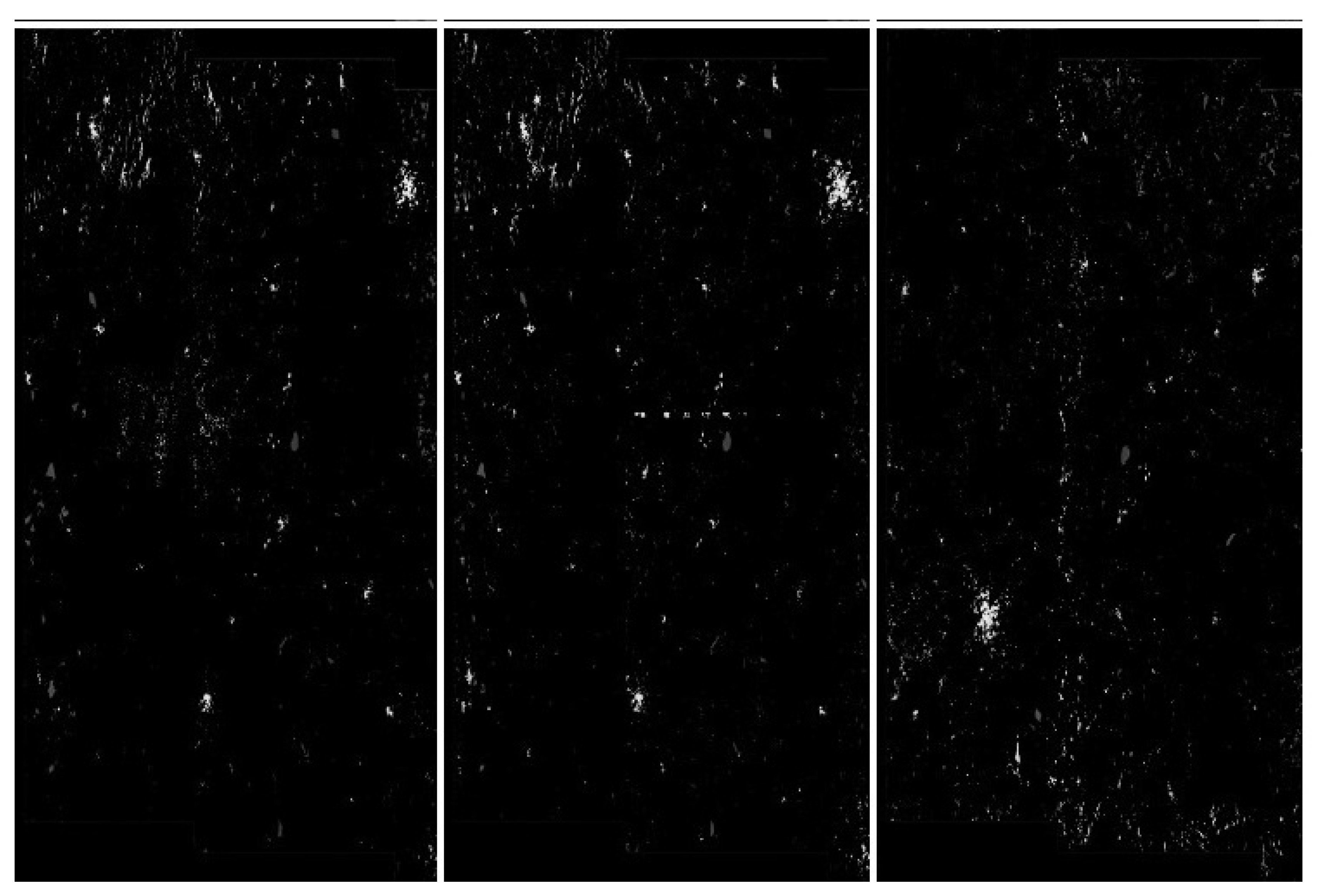
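The body of this section is not reproduced here. A common way to convert RGB to greyscale is a weighted sum of the three channels. The minimal sketch below assumes the ITU-R BT.601 luma weights (0.299, 0.587, 0.114), which may differ from the weighting the authors actually used, and represents an image as a row-major list of (R, G, B) tuples with 8-bit channels.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]

# Assumed ITU-R BT.601 luma weights; the paper's exact weighting is not given here.
WEIGHTS = (0.299, 0.587, 0.114)


def rgb_to_grey(image: List[List[RGB]]) -> List[List[int]]:
    """Convert a row-major RGB image (0-255 channels) to 8-bit greyscale."""
    wr, wg, wb = WEIGHTS
    return [
        # Weighted sum per pixel, rounded and capped at 255.
        [min(255, int(round(wr * r + wg * g + wb * b))) for (r, g, b) in row]
        for row in image
    ]


if __name__ == "__main__":
    img = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]]
    print(rgb_to_grey(img))  # pure red, green, blue and white pixels
```

Because the weights sum to 1, a white pixel stays at 255 and a black pixel at 0; green contributes most to perceived brightness.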

4.3. Feature Extraction Based on Pixel Convolution

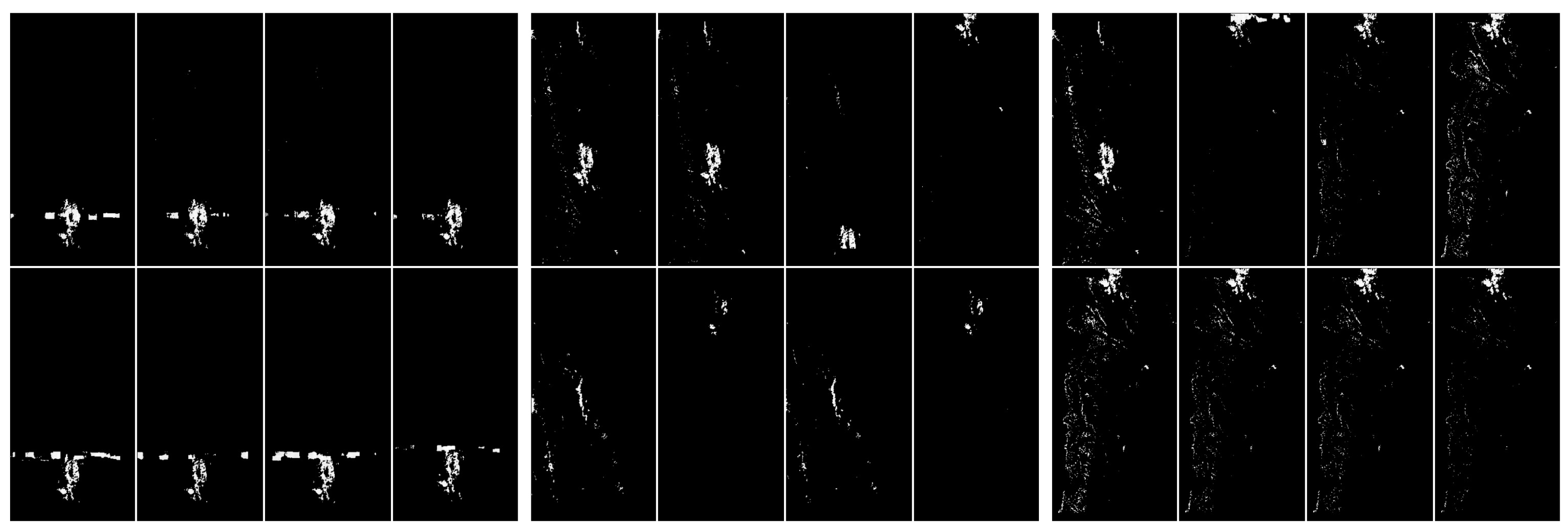
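The body of this section is not reproduced here. As a minimal sketch of convolution-based feature extraction, the code below applies a 3x3 mask to a greyscale image. The horizontal-line mask `HORIZONTAL_LINE` is an assumed example (a textbook line-detection mask), not necessarily one of the detectors the authors used, and the border and clipping rules are illustrative choices.

```python
from typing import List

Image = List[List[int]]
Kernel = List[List[int]]

# Assumed example mask: the classic 3x3 horizontal-line detector.
# It responds strongly to bright, one-pixel-high horizontal structures.
HORIZONTAL_LINE: Kernel = [
    [-1, -1, -1],
    [2, 2, 2],
    [-1, -1, -1],
]


def convolve3x3(image: Image, kernel: Kernel) -> Image:
    """Convolve an 8-bit greyscale image with a 3x3 kernel.

    Border pixels are left at 0. Negative responses (e.g. dark lines) are
    clipped to 0 and strong responses are clipped to 255.
    """
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            acc = 0
            for ky in range(3):
                for kx in range(3):
                    # Kernel indices are flipped, as true convolution requires.
                    acc += kernel[2 - ky][2 - kx] * image[y + ky - 1][x + kx - 1]
            out[y][x] = max(0, min(255, acc))
    return out
```

For a 5x5 image that is zero except for a middle row of value 10, the response is 60 along the interior of that row and 0 everywhere else, so the mask isolates the horizontal structure.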

4.4. Thresholding

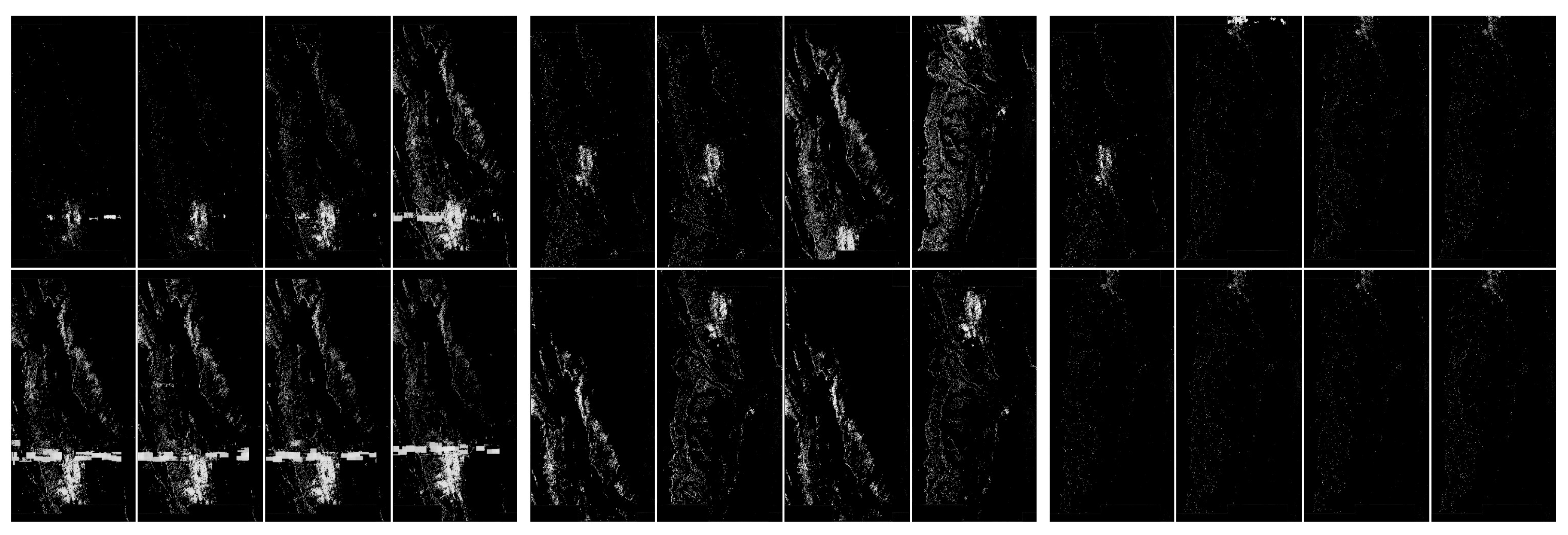
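The body of this section is not reproduced here. Section 5.8 mentions a hybrid, histogram-based threshold with a fixed frequency threshold of 10,000, but the exact rule is not stated. The sketch below shows one hypothetical reading: walk the grey-level histogram down from the brightest level, take the first level whose pixel count reaches the frequency limit as the threshold, and mark every brighter pixel as a candidate artefact. The function names and the selection rule are assumptions, not the authors' method.

```python
from typing import List

Image = List[List[int]]


def histogram(image: Image) -> List[int]:
    """Count how many pixels take each 8-bit grey level."""
    counts = [0] * 256
    for row in image:
        for v in row:
            counts[v] += 1
    return counts


def threshold_from_histogram(counts: List[int], freq_limit: int) -> int:
    """Hypothetical rule: scan from the brightest level downwards and return
    the first level whose pixel count reaches `freq_limit`."""
    for level in range(255, -1, -1):
        if counts[level] >= freq_limit:
            return level
    return 0


def binarize(image: Image, t: int) -> Image:
    """Mark pixels strictly brighter than t as 1 (candidate artefact), else 0."""
    return [[1 if v > t else 0 for v in row] for row in image]
```

With a full-size image, `freq_limit` would be set to a value such as the 10,000 quoted in Section 5.8; in a small test image a much lower limit plays the same role.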

4.5. Nearest Neighbor Structure Filtering

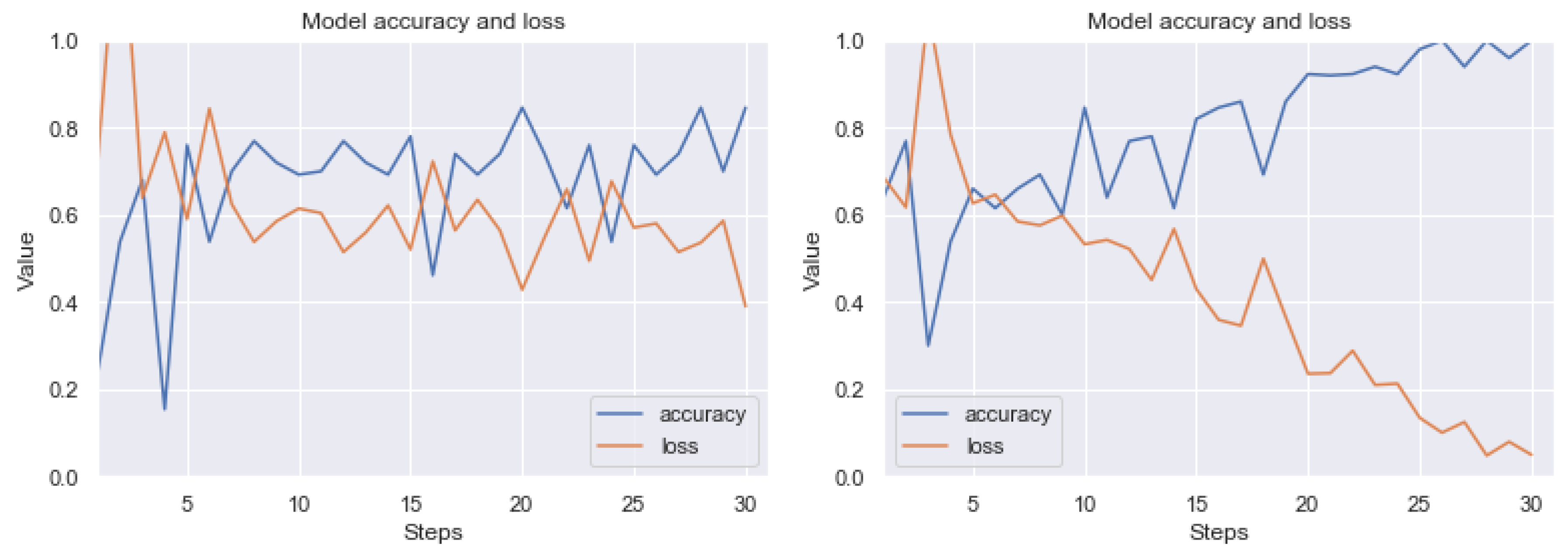
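The body of this section is not reproduced here. As a hedged illustration of nearest-neighbor filtering of a binary mask, assuming that isolated foreground pixels are noise, the sketch below keeps a pixel only if enough other foreground pixels lie within a given Manhattan distance of it. The rule and the parameters `radius` and `min_neighbors` are hypothetical, not the authors' exact procedure.

```python
from typing import List

Mask = List[List[int]]


def neighbor_filter(mask: Mask, radius: int = 1, min_neighbors: int = 1) -> Mask:
    """Keep a foreground pixel only if at least `min_neighbors` other
    foreground pixels lie within Manhattan distance `radius` of it."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            count = 0
            for dy in range(-radius, radius + 1):
                # Manhattan ball: |dx| + |dy| <= radius.
                span = radius - abs(dy)
                for dx in range(-span, span + 1):
                    if dy == 0 and dx == 0:
                        continue  # do not count the pixel itself
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx]:
                        count += 1
            if count >= min_neighbors:
                out[y][x] = 1
    return out
```

With the defaults, an isolated pixel is removed while every pixel of a continuous line survives; a larger `radius` tolerates gaps in a structure at the cost of keeping more noise.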

4.6. Exemplary Deep Neural Network Architecture as a Reference Classifier

5. Experimental Session Details

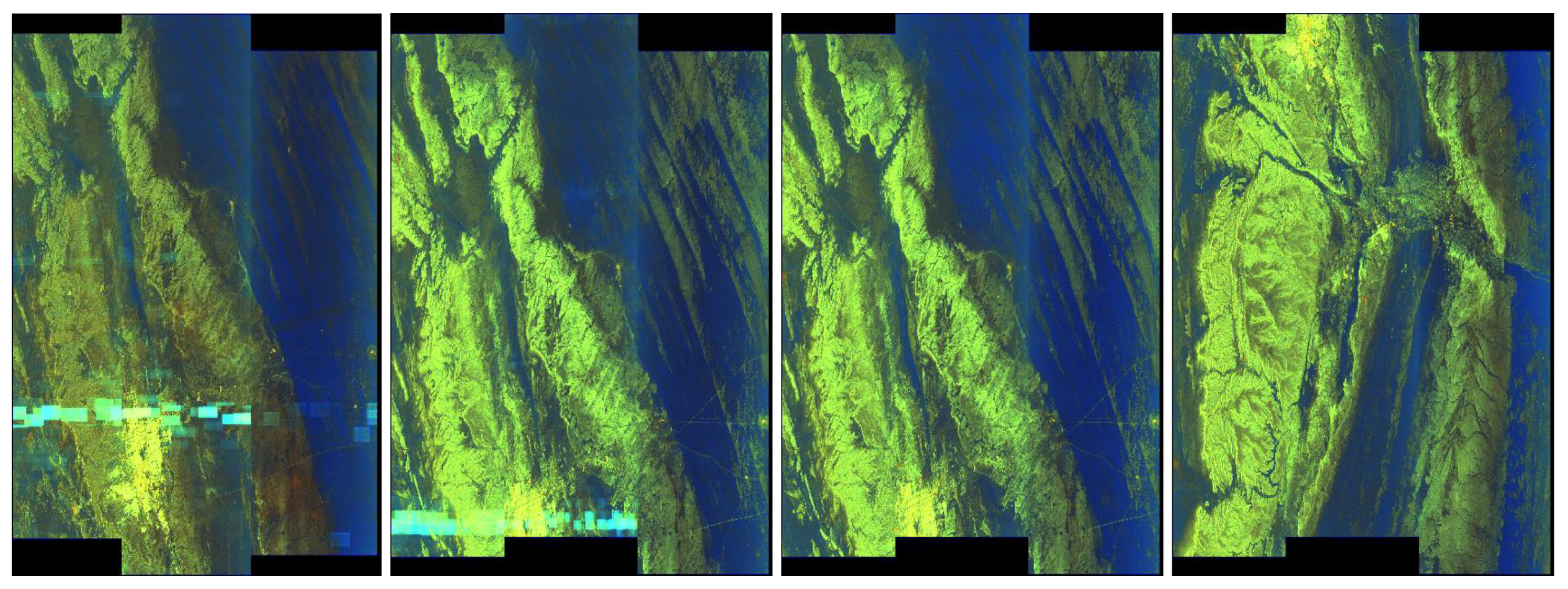

5.1. Artefacts Detection in the RIYADH Dataset

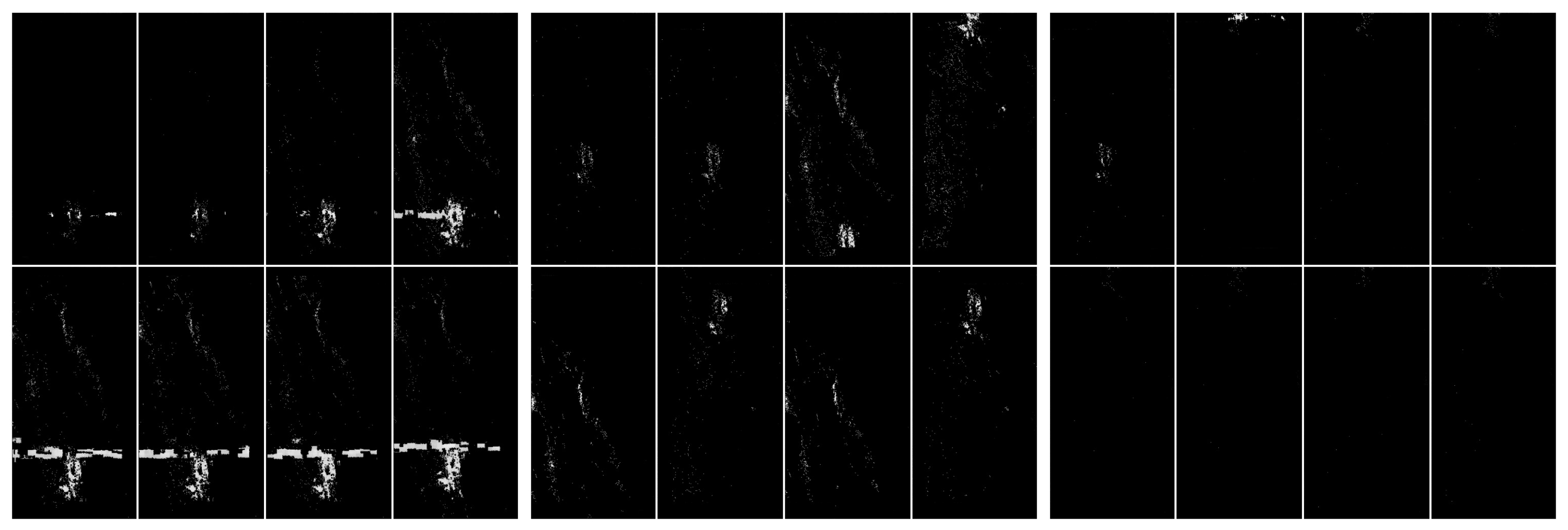

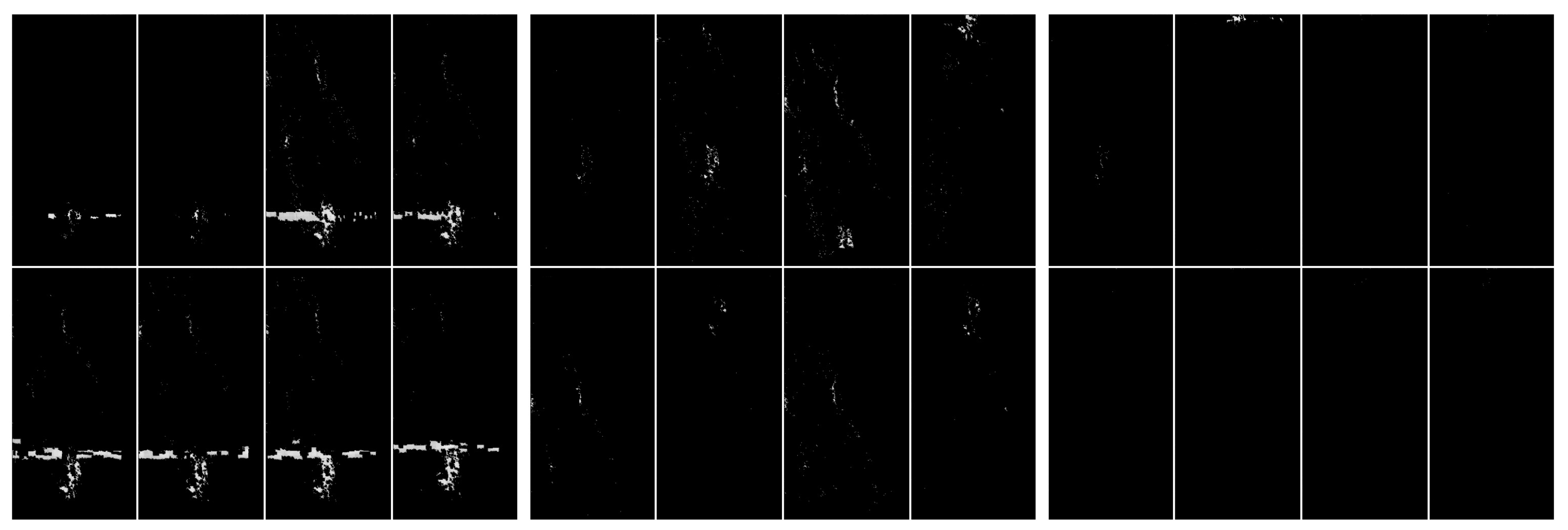

5.2. Overview of Feature Detectors Based on Convolution

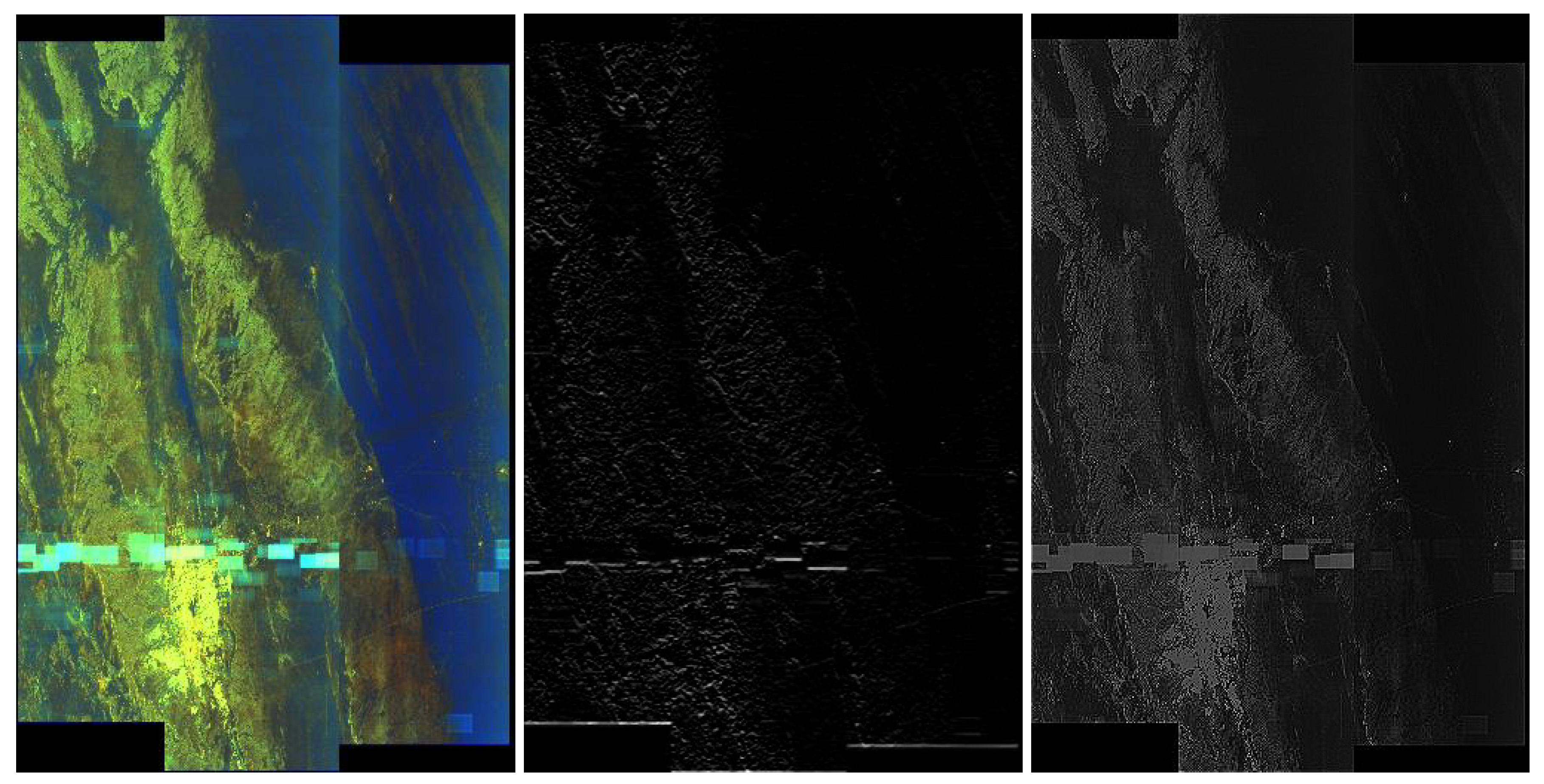

5.3. Application of Thresholding

5.4. Application of Nearest Neighbor Filtering

5.5. Summary of Results for Artefact Detection in the RIYADH Dataset

5.6. Results for MOSCOW Dataset

5.7. Summary of Results for MOSCOW Dataset

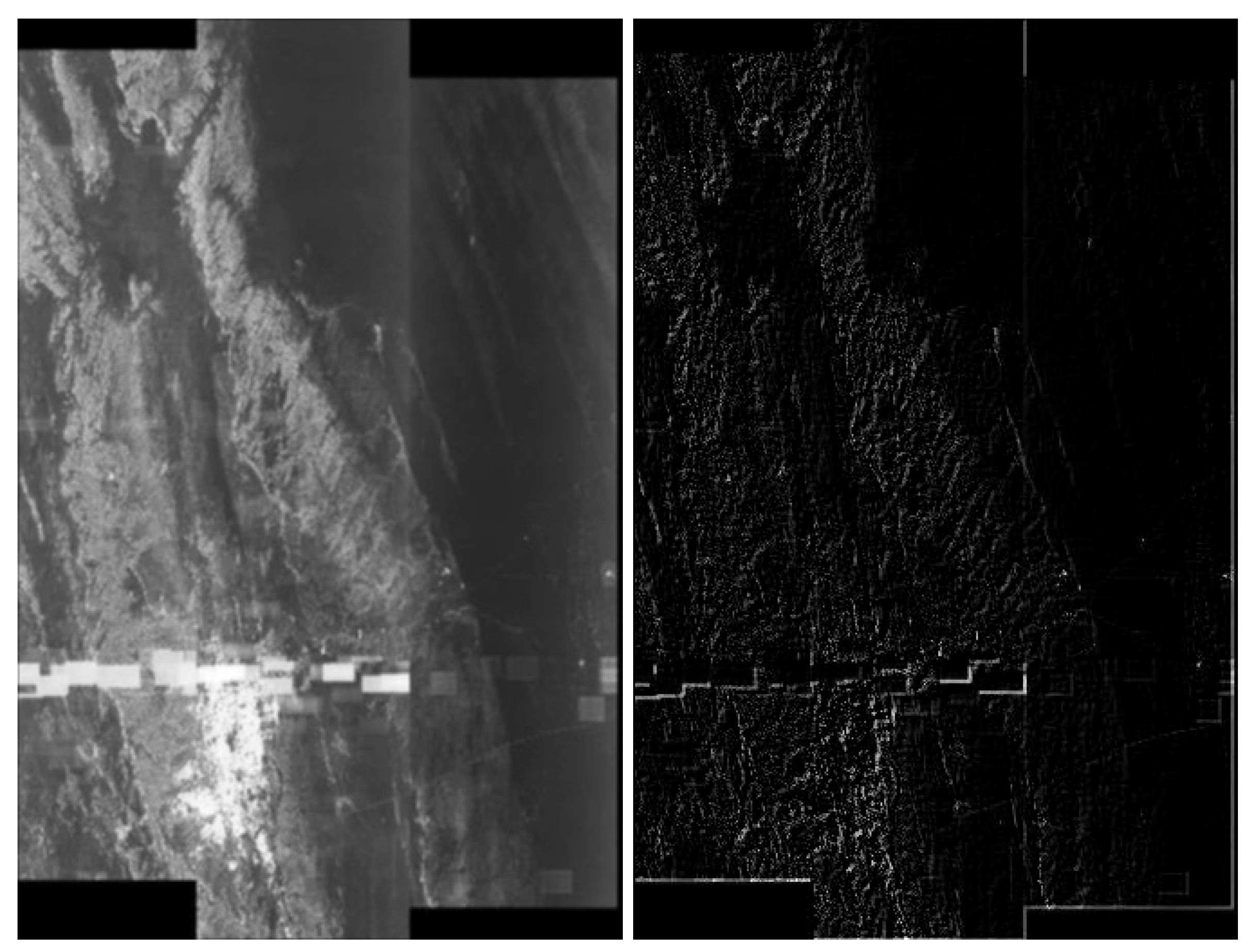

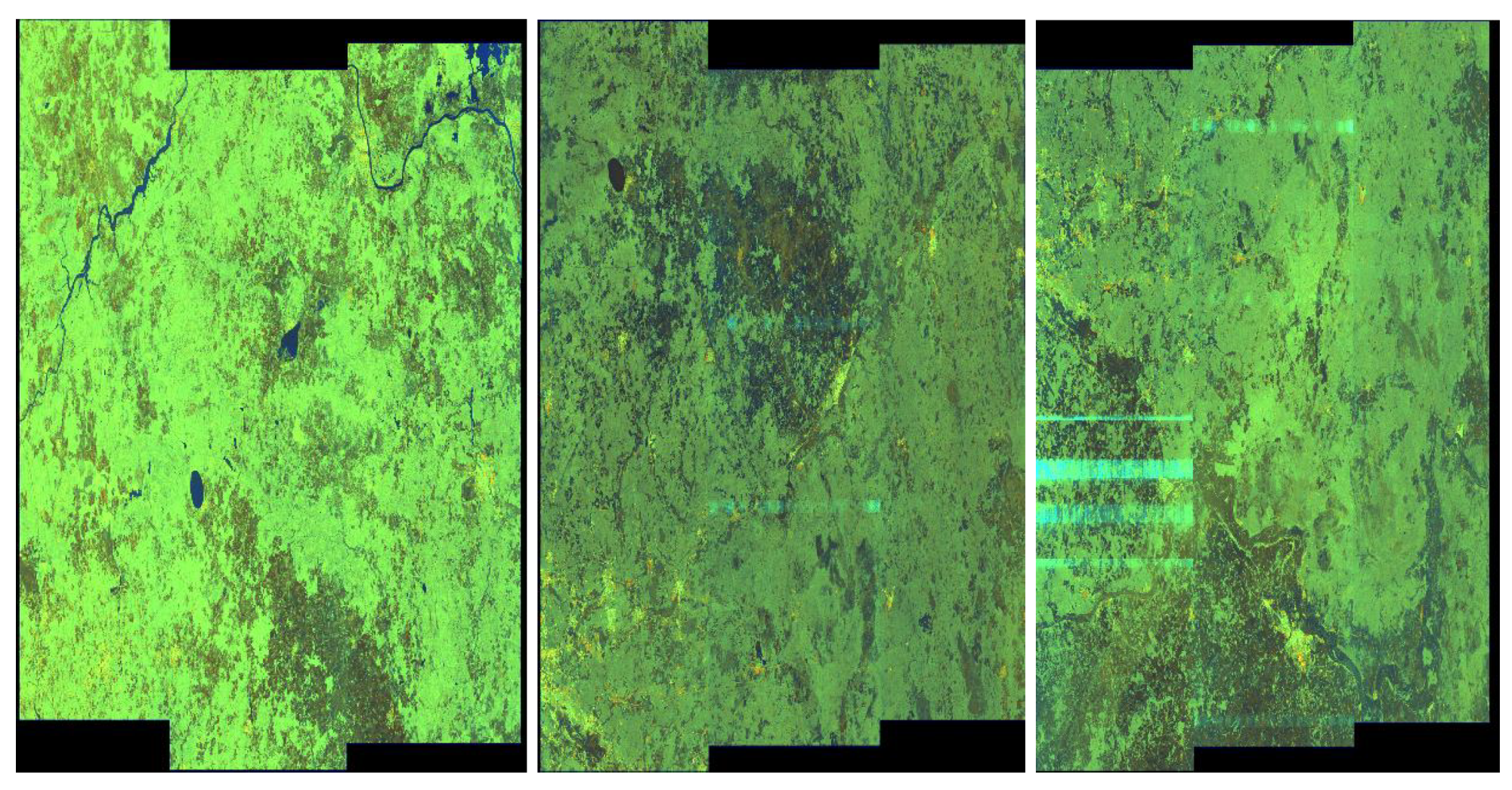

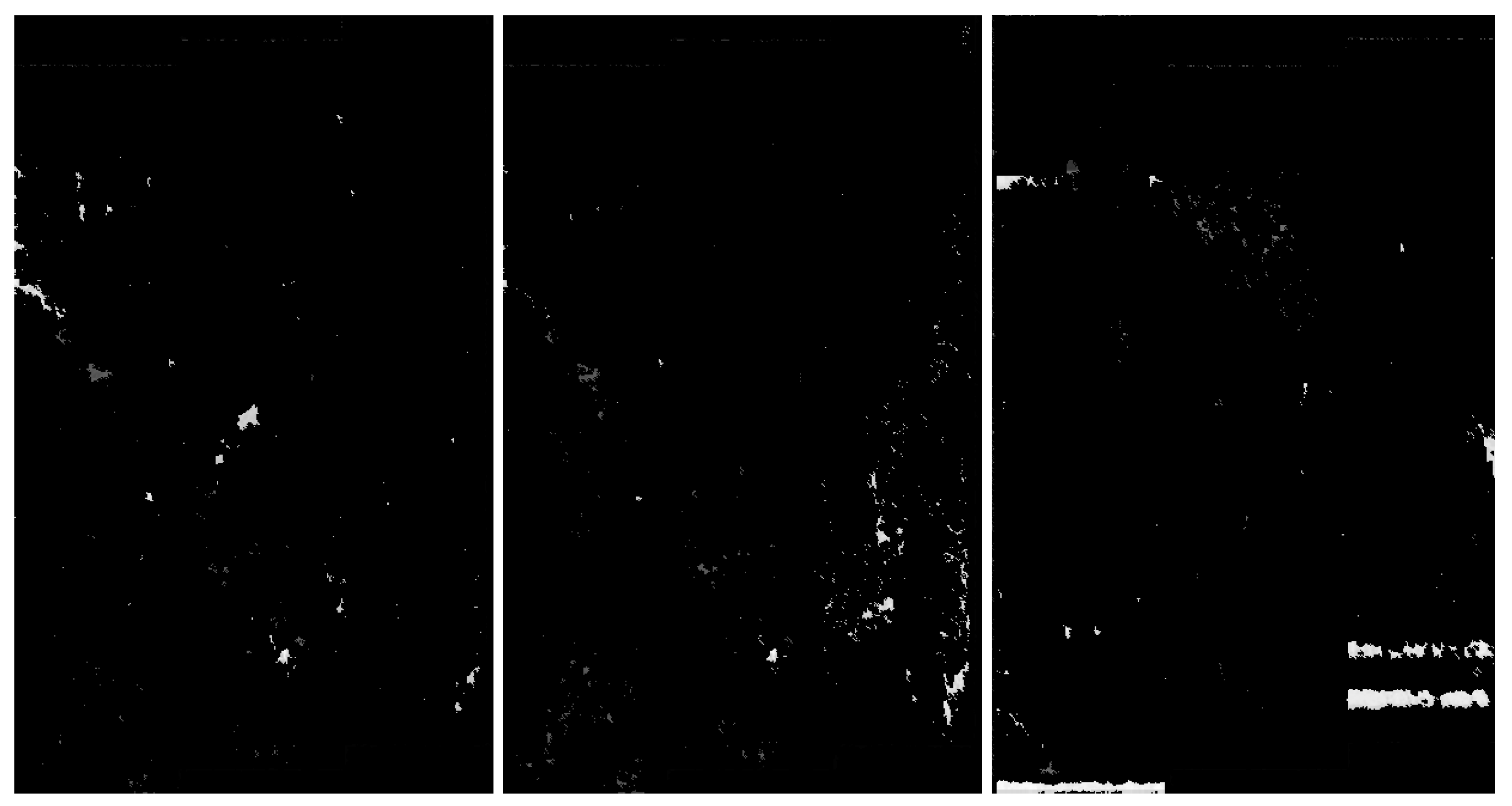

5.8. Results for ASMOW Dataset

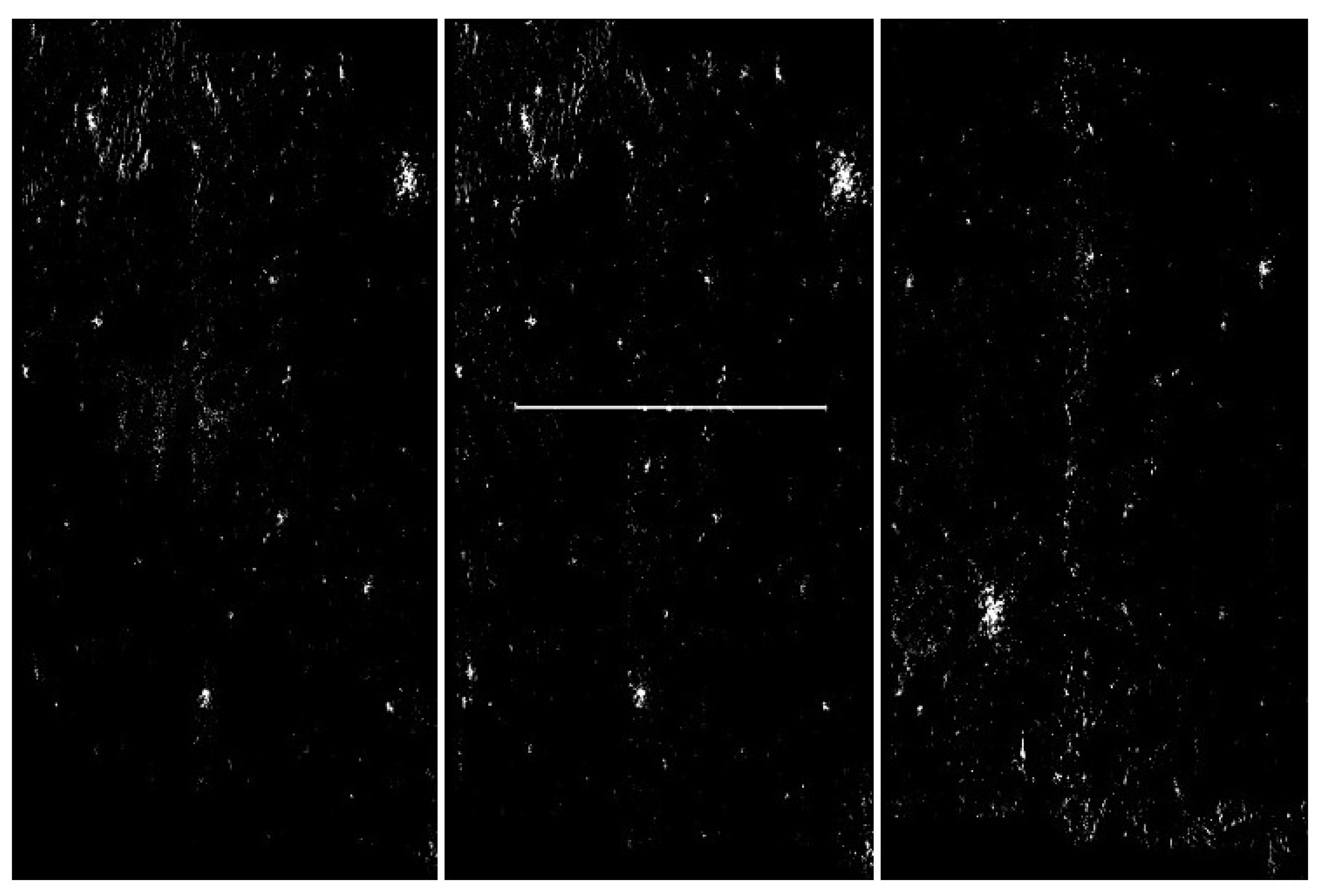

- We applied a hybrid, histogram-based threshold method (with a fixed frequency threshold of 10,000) and used the 1 technique for noise reduction; see Figure 22.

- We then detected the area whose pixels are arranged in a thick straight line; see Figure 24. The thickness was set to 7 vertical pixels. With an expected linear-structure length threshold of 40, the artefact was detected and distinguished from undamaged images, and its area was automatically marked with a line.
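The line-detection step above can be sketched as follows, assuming the input is a binary mask (1 = candidate artefact pixel) produced by the thresholding and noise-reduction steps. Only the thickness of 7 and the length threshold of 40 come from the text; the band-scanning rule, the "any foreground pixel in the column" coverage test, the choice of the densest row as the marked line, and the names `detect_thick_line` and `mark_line` are hypothetical.

```python
from typing import List, Optional, Tuple

Mask = List[List[int]]


def longest_run(bits: List[int]) -> Tuple[int, int]:
    """Return (start, length) of the longest run of 1s in a 0/1 list."""
    best_start, best_len, start = 0, 0, None
    for i, b in enumerate(bits + [0]):  # trailing 0 closes a final run
        if b and start is None:
            start = i
        elif not b and start is not None:
            if i - start > best_len:
                best_start, best_len = start, i - start
            start = None
    return best_start, best_len


def detect_thick_line(mask: Mask, thickness: int = 7,
                      min_length: int = 40) -> Optional[Tuple[int, int, int]]:
    """Scan horizontal bands `thickness` rows high for a run of covered
    columns at least `min_length` long. Returns (row, first_col, last_col)
    for the longest such run, or None if no band qualifies."""
    best: Optional[Tuple[int, int, int]] = None
    best_len = 0
    for top in range(len(mask) - thickness + 1):
        band = mask[top:top + thickness]
        # A column is covered if any pixel of the band is foreground there.
        covered = [int(any(col)) for col in zip(*band)]
        start, length = longest_run(covered)
        if length >= min_length and length > best_len:
            end = start + length - 1
            # Report the densest row of the band within the run as the line row.
            row = max(range(top, top + thickness),
                      key=lambda r: sum(mask[r][start:end + 1]))
            best, best_len = (row, start, end), length
    return best


def mark_line(mask: Mask, detection: Tuple[int, int, int], value: int = 2) -> Mask:
    """Return a copy of `mask` with the detected row drawn between the columns."""
    row, x0, x1 = detection
    out = [r[:] for r in mask]
    for x in range(x0, x1 + 1):
        out[row][x] = value
    return out
```

A 3-pixel-thick, 50-pixel-long horizontal structure passes the length threshold of 40 and is marked, while a 30-pixel segment is rejected, mirroring how the threshold separates the artefact from undamaged images.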

5.9. Summary of Results for ASMOW Dataset

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Test No. | nil.ok.er | ok.er | nil.ok.tr | ok.tr | nil.all | All |
|---|---|---|---|---|---|---|
| 1 | | | | | | |
| 2 | | | | | | |
| 3 | | | | | | |
| 4 | | | | | | |
| 5 | | | | | | |
| avg | | | | | | |
| SD | | | | | | |

| Test No. | nil.0.II | 0.II | nil.0.I | 0.I | nil.all | All |
|---|---|---|---|---|---|---|
| 1 | | | | | | |
| 2 | | | | | | |
| 3 | | | | | | |
| 4 | | | | | | |
| 5 | | | | | | |
| avg | | | | | | |
| SD | | | | | | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chojka, A.; Artiemjew, P.; Rapiński, J. RFI Artefacts Detection in Sentinel-1 Level-1 SLC Data Based On Image Processing Techniques. Sensors 2020, 20, 2919. https://doi.org/10.3390/s20102919
