An Autonomous Fruit and Vegetable Harvester with a Low-Cost Gripper Using a 3D Sensor

Abstract

1. Introduction

2. Related Work

2.1. Harvesting Tools

2.2. Perception

3. System Overview and the Gripper Mechanism

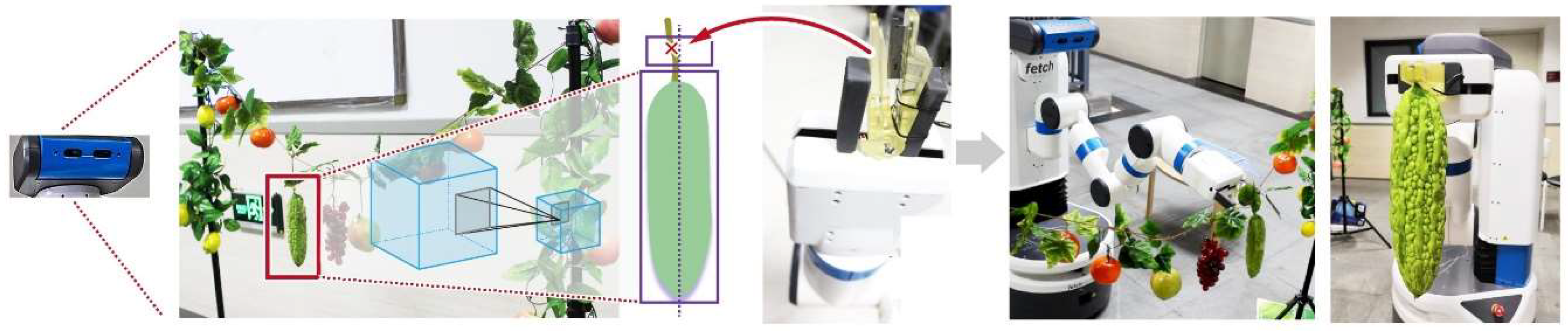

3.1. The Harvesting Robot

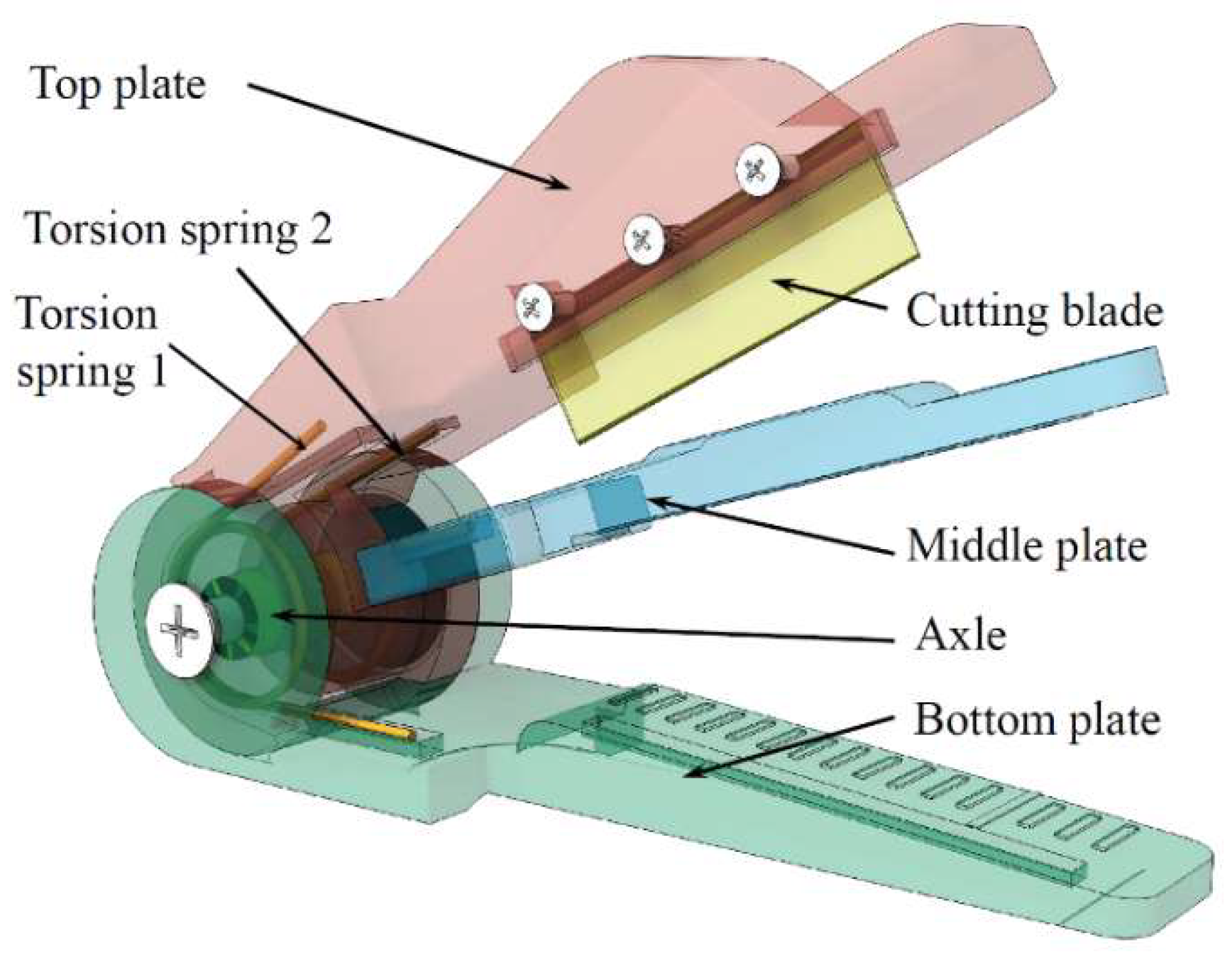

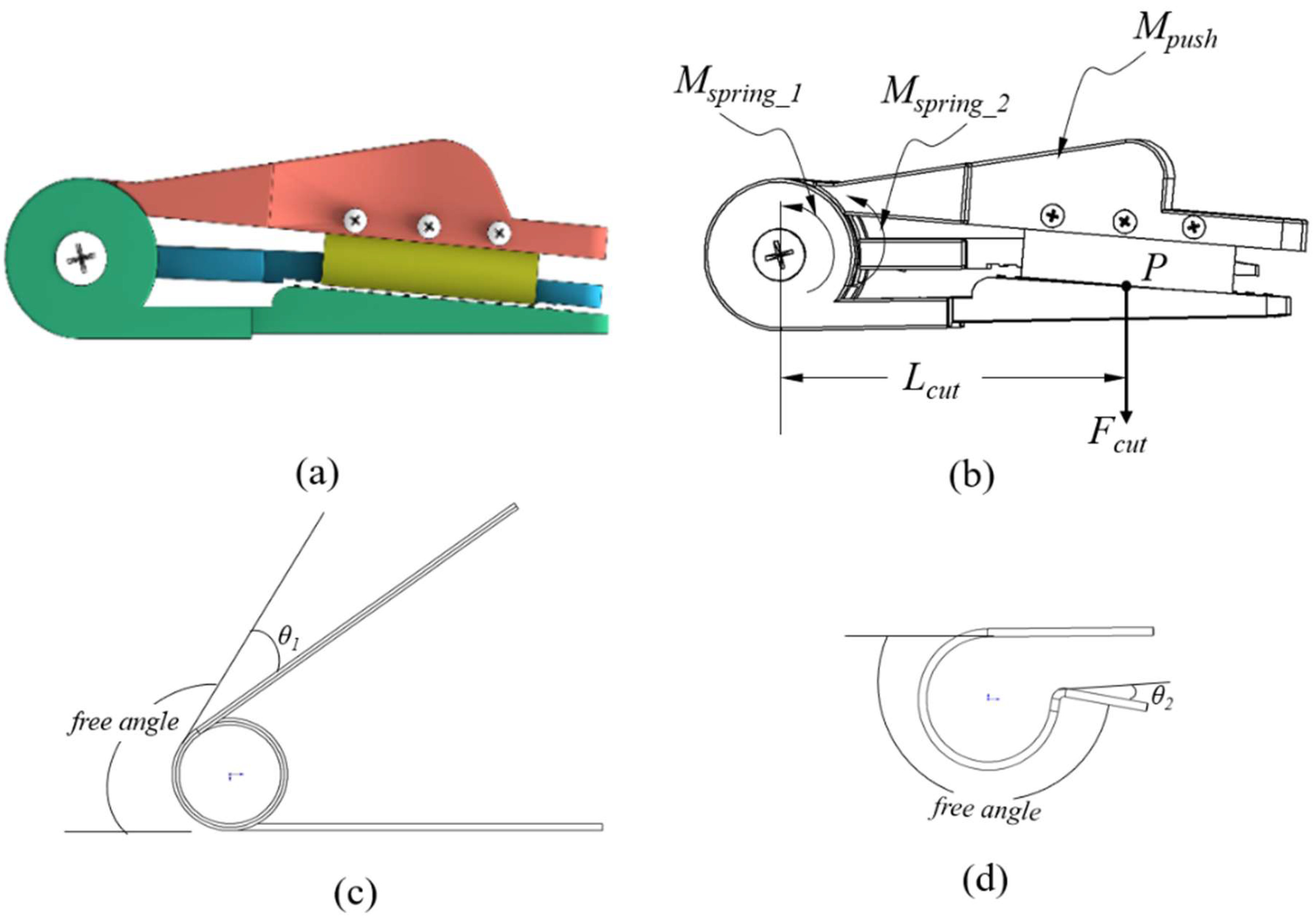

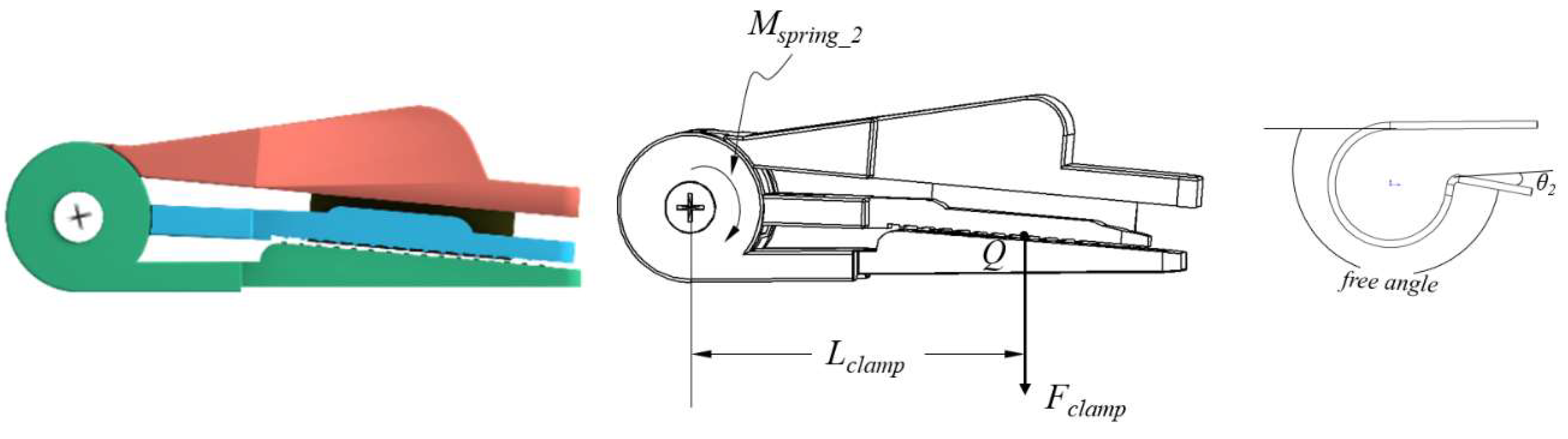

3.2. The Gripper Mechanism

- Clamping the peduncle: when the top plate is pressed, the middle plate is brought into contact with the bottom plate via “torsion spring 2”, clamping the peduncle, as shown in Figure 3b.

- Cutting the peduncle: as the top plate continues to be pressed, the cutting blade reaches the peduncle held on the bottom plate and cuts it, as shown in Figure 3c.
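The two steps above happen within a single pressing stroke: clamping first, then cutting. The Python sketch below illustrates only that ordering. It assumes the stroke can be summarized by one top-plate travel value and two thresholds; the threshold values and the names `GripperStage`, `stage_for_travel` and `simulate_stroke` are hypothetical and are not taken from the gripper design.

```python
from enum import Enum

# Hypothetical top-plate travel thresholds in mm (illustrative, not measured values).
CLAMP_TRAVEL_MM = 4.0  # assumed travel at which the middle plate meets the bottom plate
CUT_TRAVEL_MM = 7.0    # assumed travel at which the blade reaches the peduncle

# Clamping must be reached before cutting within one stroke.
assert CLAMP_TRAVEL_MM < CUT_TRAVEL_MM, "clamp threshold must precede cut threshold"


class GripperStage(Enum):
    OPEN = "open"        # peduncle not yet held
    CLAMPED = "clamped"  # peduncle held between middle and bottom plates
    CUT = "cut"          # blade has cut the peduncle while it is still clamped


def stage_for_travel(travel_mm: float) -> GripperStage:
    """Map top-plate travel to the stage a single pressing stroke has reached."""
    if travel_mm < 0:
        raise ValueError("travel must be non-negative")
    if travel_mm >= CUT_TRAVEL_MM:
        return GripperStage.CUT
    if travel_mm >= CLAMP_TRAVEL_MM:
        return GripperStage.CLAMPED
    return GripperStage.OPEN


def simulate_stroke(travels):
    """Return the distinct stages, in order, seen as the top plate is pressed."""
    stages = []
    for t in travels:
        stage = stage_for_travel(t)
        if not stages or stages[-1] != stage:
            stages.append(stage)
    return stages


if __name__ == "__main__":
    stroke = [0.0, 2.0, 4.5, 6.0, 7.5, 8.0]
    print([s.value for s in simulate_stroke(stroke)])  # ['open', 'clamped', 'cut']
```

Because the cut threshold lies beyond the clamp threshold, any stroke long enough to cut has already passed through the clamped stage, matching the order of the two steps described above.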

3.3. Force Analysis of the Gripper

4. Perception and Control

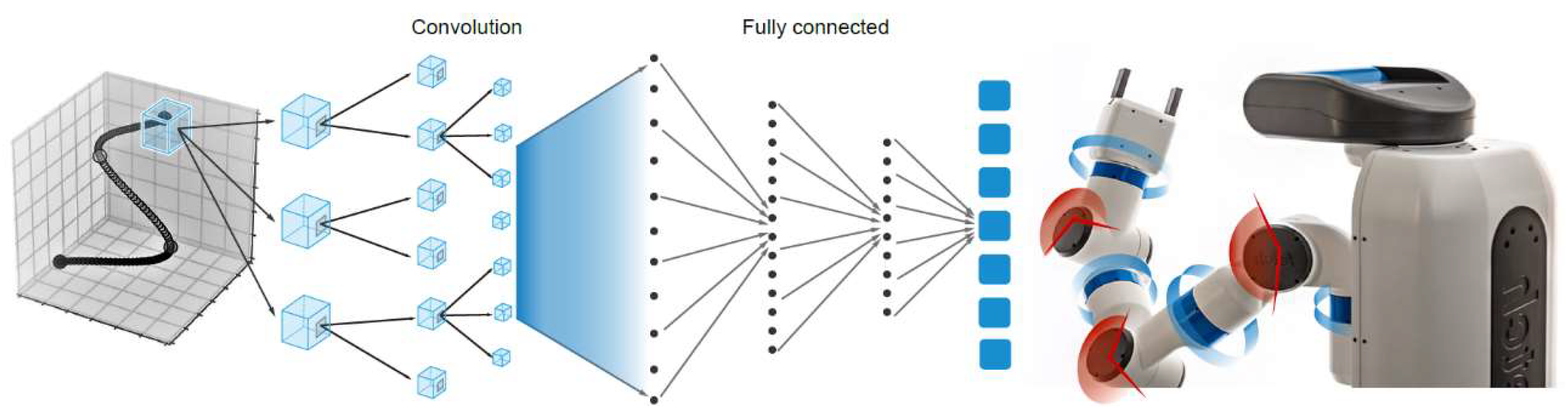

4.1. Cutting-Point Detection

4.2. System Control

5. Hardware Implementation

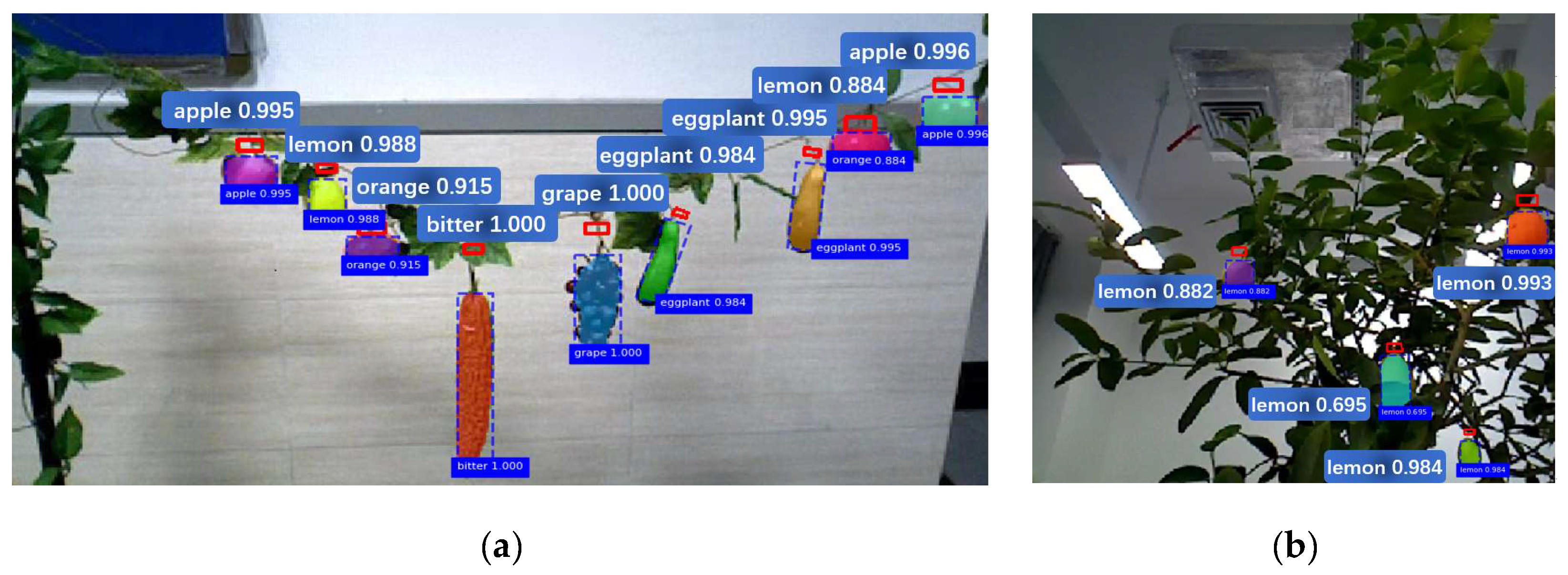

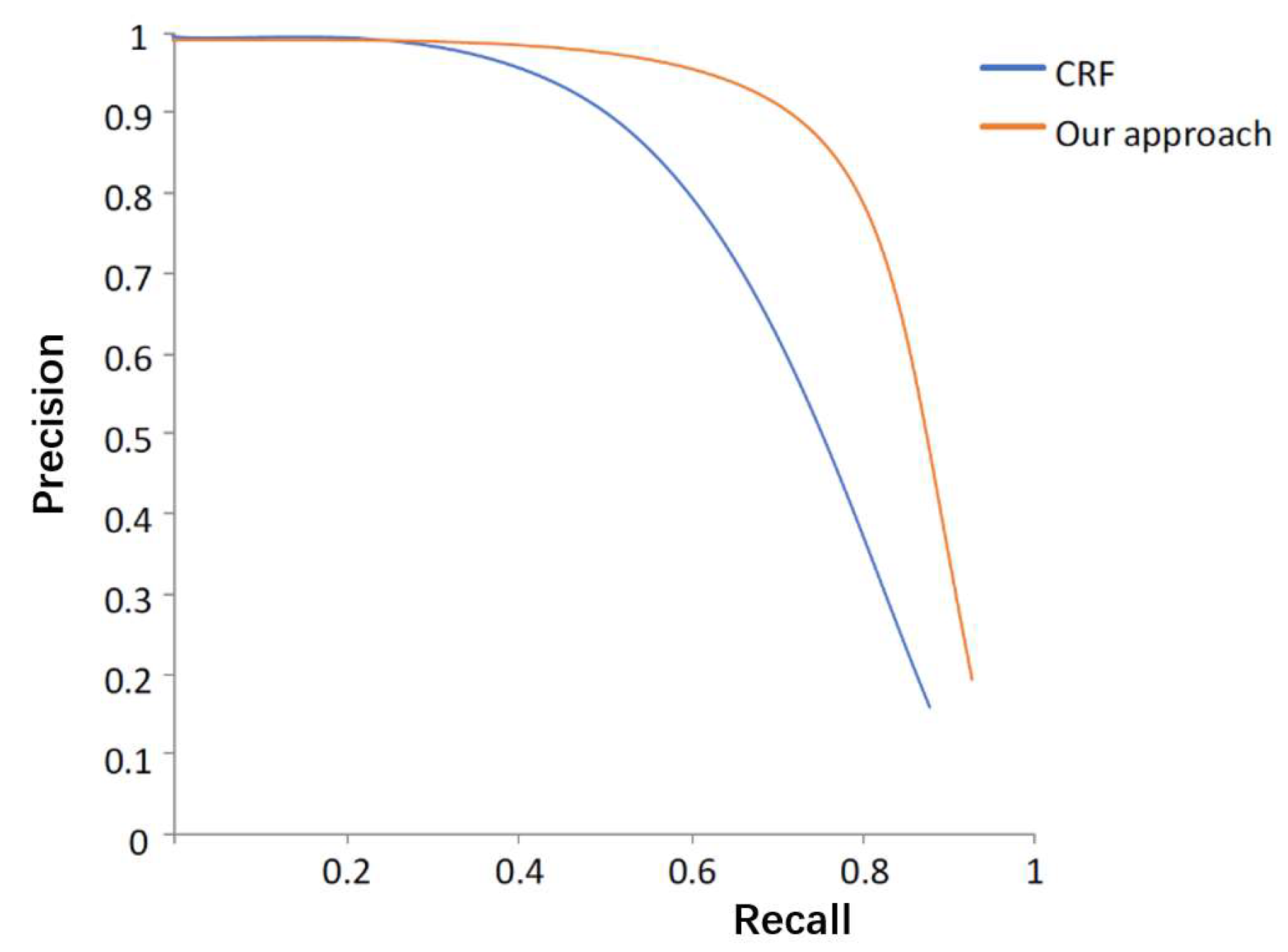

5.1. Detection Results

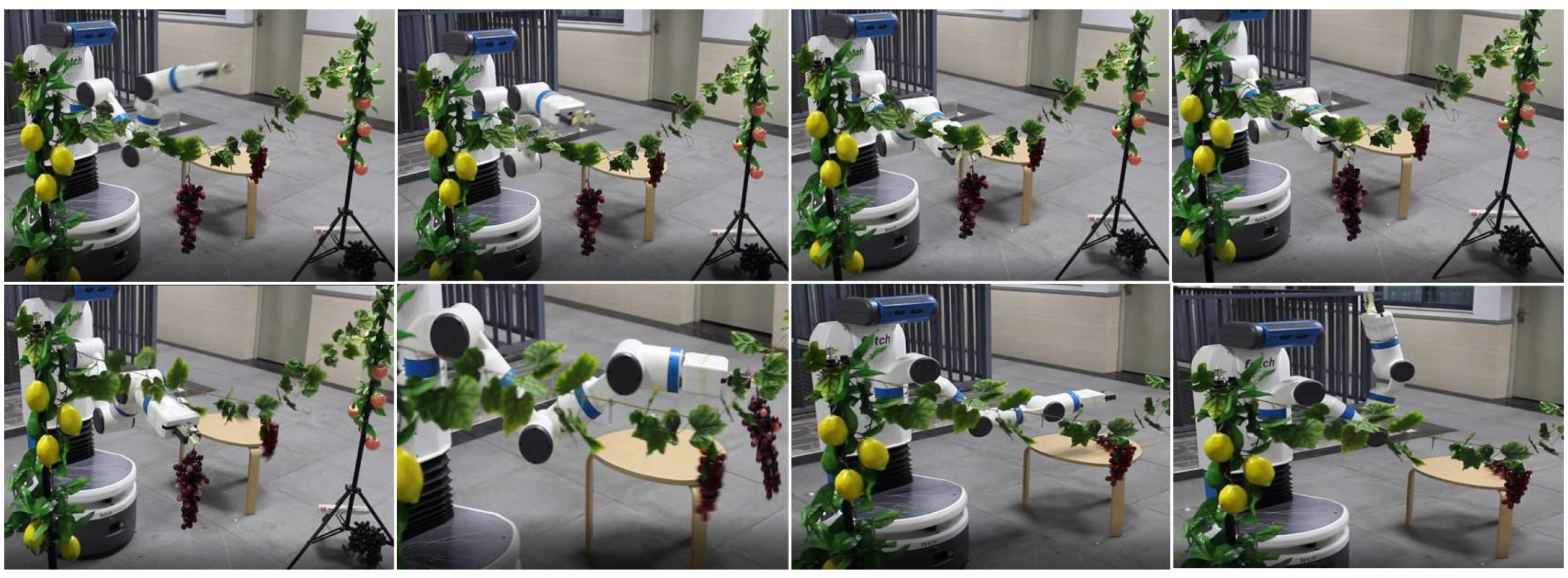

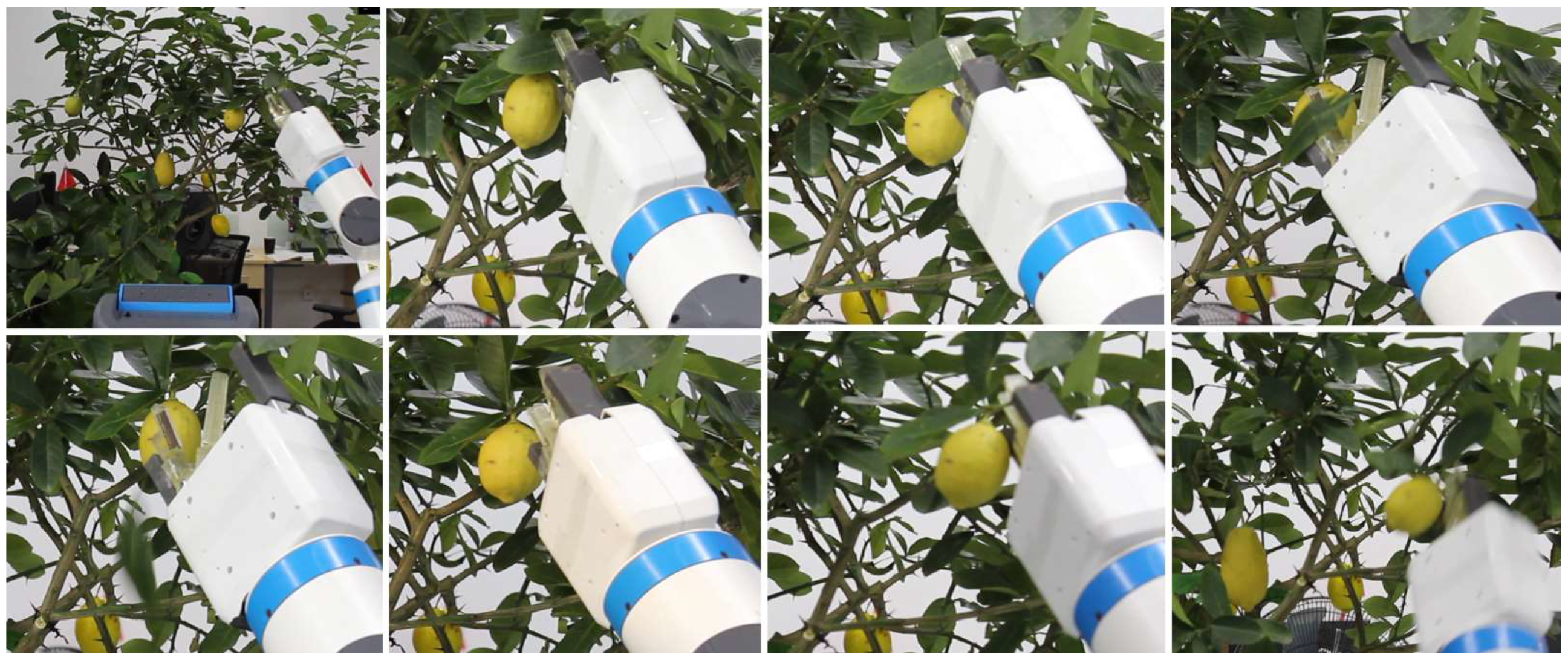

5.2. Autonomous Harvesting

5.3. Discussion

6. Conclusions and Future Work

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest


| Success cases | Plastic: Apple (6) | Plastic: Lemon (3) | Plastic: Orange (6) | Plastic: Bitter melon (3) | Plastic: Grapes (3) | Plastic: Eggplants (6) | Plastic: Total | Real: Grapes (7) | Real: Melons (14) | Real: Common figs (10) | Real: Total |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Attachment | 5 | 2 | 5 | 3 | 2 | 5 | 81% | 5 | 11 | 6 | 71% |
| Detachment | 5 | 2 | 4 | 1 | 2 | 5 | 70% | 4 | 8 | 5 | 55% |
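Reading the parenthesized numbers as trial counts, each Total entry is the summed successes divided by the summed trials for that crop group. The short Python check below recomputes the totals from the per-crop counts in the table; the dictionary layout and the `success_rates` helper are illustrative choices, not part of the original work.

```python
# Per-crop (trials, attachment successes, detachment successes), copied from the table.
PLASTIC = {
    "apple": (6, 5, 5),
    "lemon": (3, 2, 2),
    "orange": (6, 5, 4),
    "bitter melon": (3, 3, 1),
    "grapes": (3, 2, 2),
    "eggplant": (6, 5, 5),
}
REAL = {
    "grapes": (7, 5, 4),
    "melon": (14, 11, 8),
    "common fig": (10, 6, 5),
}


def success_rates(group):
    """Return (attachment %, detachment %) pooled over all trials in a crop group."""
    trials = sum(t for t, _, _ in group.values())
    attached = sum(a for _, a, _ in group.values())
    detached = sum(d for _, _, d in group.values())
    return 100 * attached / trials, 100 * detached / trials


for name, group in (("plastic", PLASTIC), ("real", REAL)):
    att, det = success_rates(group)
    print(f"{name}: attachment {att:.0f}%, detachment {det:.0f}%")
# plastic: attachment 81%, detachment 70%
# real: attachment 71%, detachment 55%
```

The pooled ratios are 22/27 and 19/27 for the plastic crops and 22/31 and 17/31 for the real crops, which round to the reported 81%, 70%, 71% and 55%.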

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, T.; Huang, Z.; You, W.; Lin, J.; Tang, X.; Huang, H. An Autonomous Fruit and Vegetable Harvester with a Low-Cost Gripper Using a 3D Sensor. Sensors 2020, 20, 93. https://doi.org/10.3390/s20010093
