Bearing Fault Diagnosis Based on the Switchable Normalization SSGAN with 1-D Representation of Vibration Signals as Input

Abstract

1. Introduction

2. Theory Background

2.1. A Brief Introduction to GAN

2.2. Deconvolution, Convolution, Normalization and Activation

2.2.1. Deconvolution and Convolution

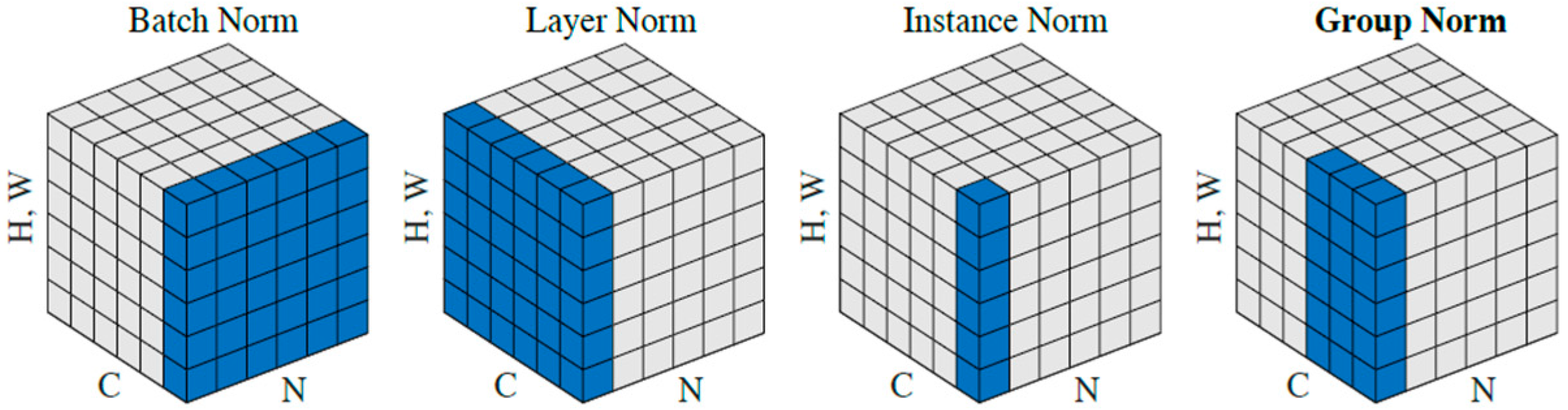

2.2.2. Normalization

2.2.3. Activation Layer

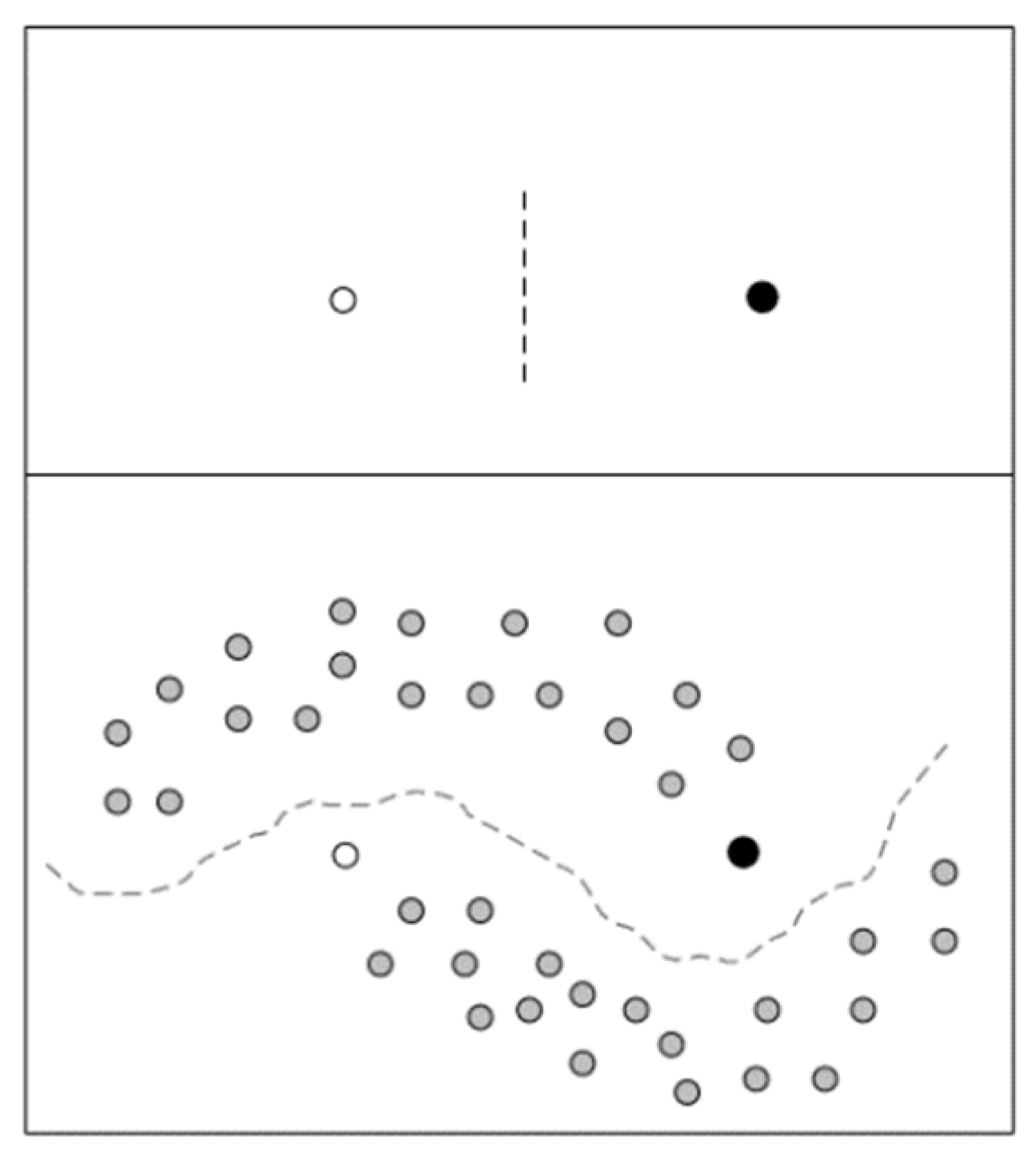

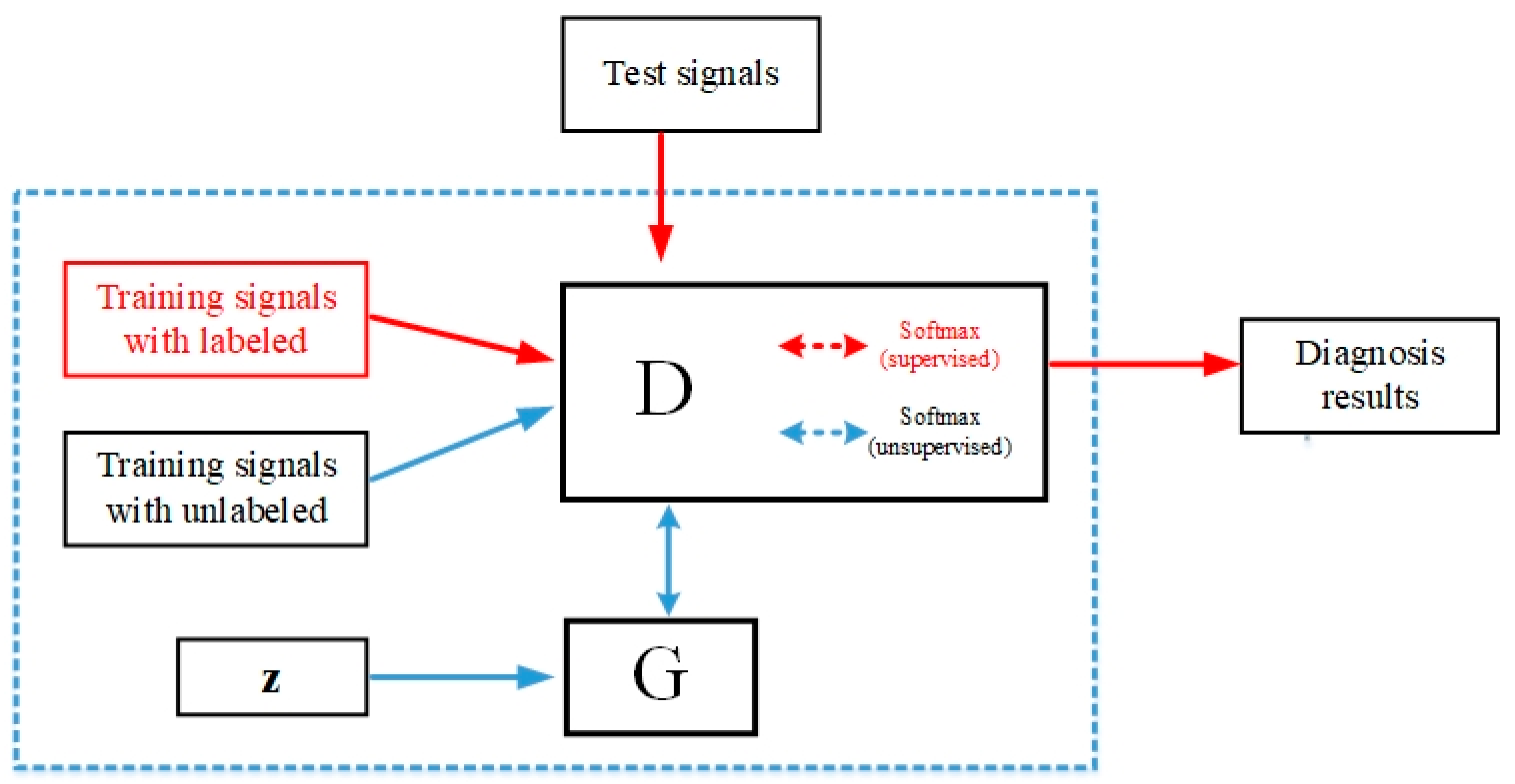

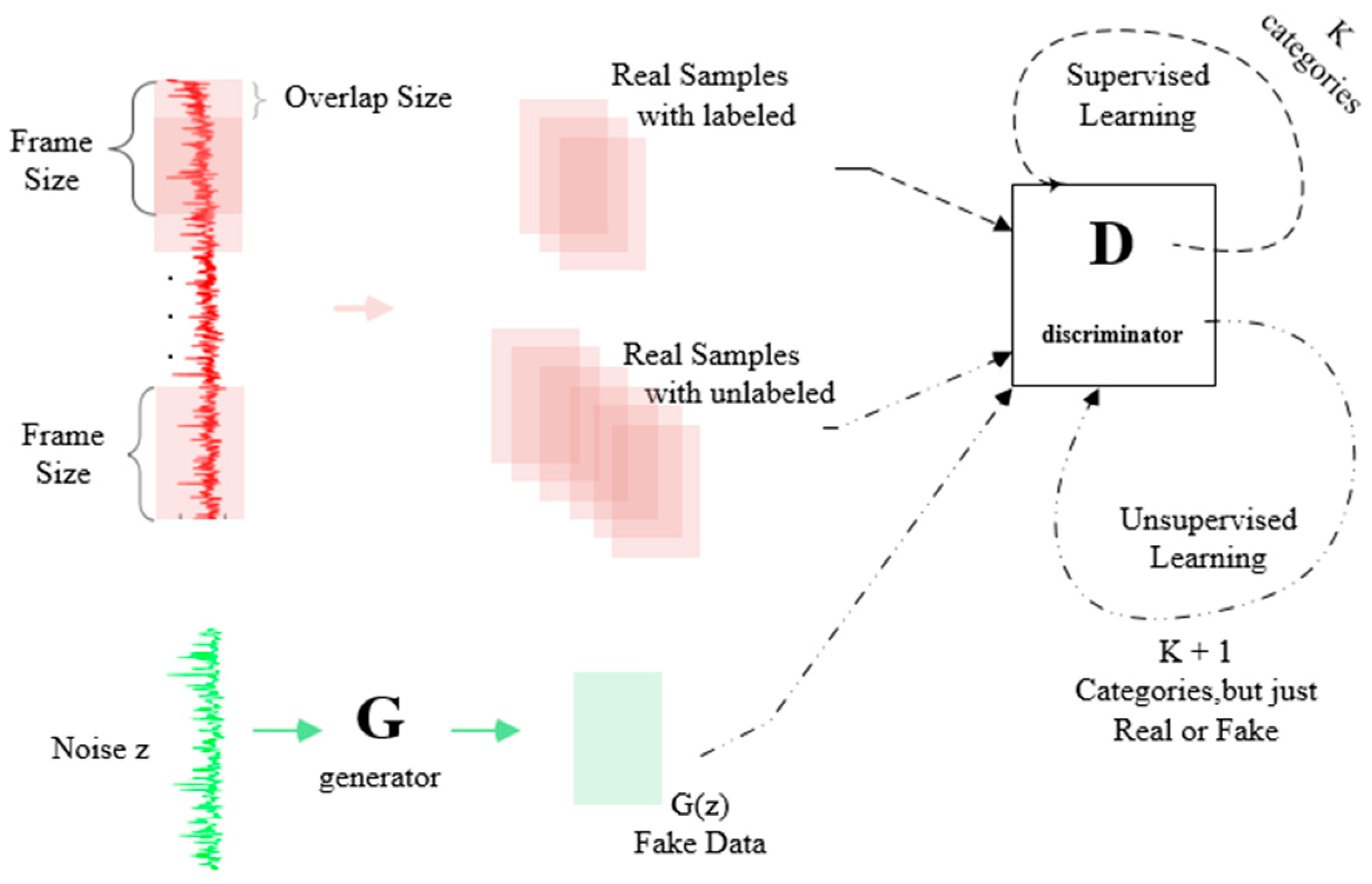

2.3. Architecture of SSGAN

- (1) Labeled real data: drawn from the training data set. During training, the D only needs to classify them into their correct categories.

- (2) Unlabeled real data: drawn from the training data set. During training, the D only needs to regard them as real data and output a probability as close to 1 as possible.

- (3) Unlabeled fake data: generated by the G. During training, the D tries to distinguish them from unlabeled real data by outputting a probability as close to 0 as possible.
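
The three data streams above map onto two loss terms for the D. The following is a minimal NumPy sketch, assuming a commonly used formulation in which the D outputs K class logits and the probability that a sample is real is derived as Z/(Z+1), where Z is the sum of the exponentiated logits. The function names and this specific formulation are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def logsumexp(logits):
    # Numerically stable log(sum(exp(logits))) over the class axis.
    m = logits.max(axis=1, keepdims=True)
    return (m + np.log(np.exp(logits - m).sum(axis=1, keepdims=True))).squeeze(1)

def supervised_loss(logits, labels):
    # Labeled real data: cross-entropy over the K fault categories.
    log_probs = logits - logsumexp(logits)[:, None]
    return float(-log_probs[np.arange(len(labels)), labels].mean())

def prob_real(logits):
    # Assumed real/fake probability: Z / (Z + 1) = sigmoid(logsumexp(logits)).
    return 1.0 / (1.0 + np.exp(-logsumexp(logits)))

def unsupervised_loss(logits_real, logits_fake, eps=1e-8):
    # Unlabeled real data should score close to 1, fake data close to 0.
    p_real = prob_real(logits_real)
    p_fake = prob_real(logits_fake)
    return float(-(np.log(p_real + eps).mean() + np.log(1.0 - p_fake + eps).mean()))
```

The total D loss in this sketch would be the sum of `supervised_loss` on the labeled batch and `unsupervised_loss` on the unlabeled real and fake batches.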

3. Proposed SN-SSGAN Intelligent Diagnosis Method

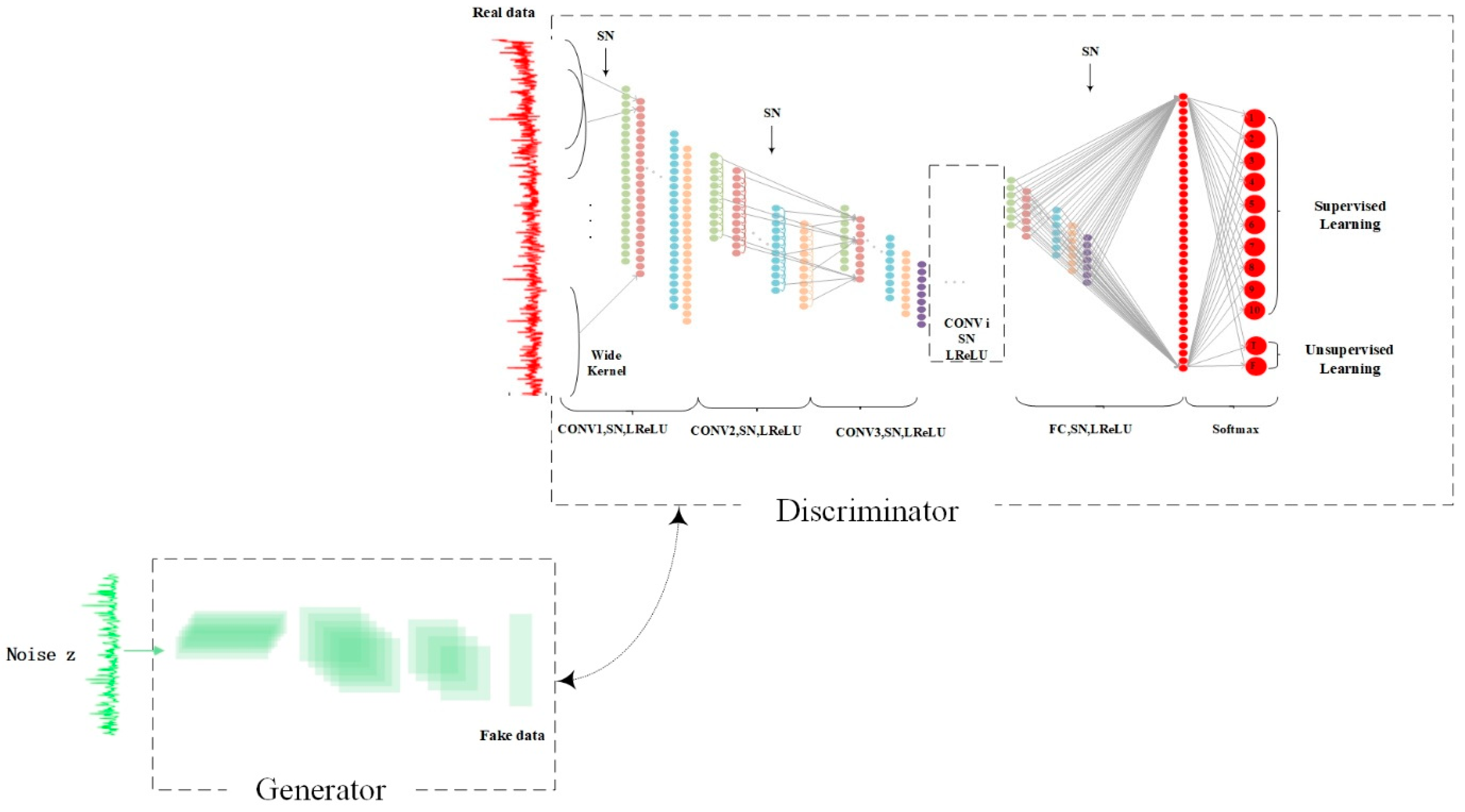

3.1. Architecture of the Proposed SN-SSGAN Model

- Remove all pooling layers. Use fractionally strided convolutions in place of pooling layers in the G network, and strided convolutions in place of pooling layers in the D network.

- Use batch normalization in both D and G.

- ReLU is used as the activation function in the G, except for the last layer, which uses tanh.

- Leaky ReLU is used as the activation function in the D.
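
To make the first guideline concrete, the sketch below computes how the signal length changes when pooling is replaced by strided layers. It assumes "same"-style padding, so that a stride-s convolution yields ceil(L/s) samples and a stride-s fractionally strided (transposed) convolution yields L·s samples. The helper names are hypothetical.

```python
from math import ceil

def strided_conv_length(length, stride):
    # Downsampling in the D: a strided convolution shortens the signal.
    return ceil(length / stride)

def transposed_conv_length(length, stride):
    # Upsampling in the G: a fractionally strided convolution lengthens it.
    return length * stride
```

With a stride of 2, a 2048-point signal is halved to 1024 points in the D, and a 1024-point feature map is doubled to 2048 points in the G.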

3.1.1. The Architectural Parameters of the G

- i. input data: data = [BatchSize, z]

- ii. data = reshape(data)

- iii. data = FC(data)

- iv. num = int(ceil(log2(max(h, w))))

- v. for i from 0 to (num-2):

  - 1. data = deconvolution(data) # the output shape of length/(2^(i+1))

  - 2. data = SwitchableNormalization(data)

  - 3. data = ReLU(data)

- vi. data = deconvolution(data) # the output shape of length/(2^(num-1))

- vii. data = SwitchableNormalization(data)

- viii. output data = tanh(data)
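
The size schedule implied by these steps can be checked with a few lines of Python. The sketch below reproduces the output sizes listed in the generator architecture table for a 2048-point signal. It assumes that each intermediate deconvolution doubles the signal length and halves the channel count, and that the last deconvolution keeps the size. The function name and the (length, channels) tuple layout are hypothetical.

```python
from math import ceil, log2

def generator_output_sizes(length=2048):
    """Return (num, sizes); sizes lists (signal_length, channels) per stage."""
    num = int(ceil(log2(length)))       # step iv with max(h, w) = length
    sizes = [(1, length)]               # after FC, SN, ReLU
    for _ in range(num):
        cur_len, channels = sizes[-1]
        # Assumed stride-2 deconvolution: length doubles, channels halve.
        sizes.append((cur_len * 2, channels // 2))
    sizes.append(sizes[-1])             # last deconvolution keeps the size, then SN and tanh
    return num, sizes

num, sizes = generator_output_sizes(2048)
for stage, (sig_len, channels) in enumerate(sizes, start=1):
    print(stage, [sig_len, 1, channels])
```

For a 2048-point signal this gives num = 11 and 13 stages, ending at a length of 2048 with a single channel.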

3.1.2. The Architectural Parameters of the D

3.1.3. The Loss Optimization

3.2. Training of SSGAN

- (1) Initialization: set the number of iterations and select the labeled samples that participate in supervised training.

- (2) Generation of fake data: the G takes a vector of normally distributed random numbers as input and generates fake data.

- (3) Classification: fake data, unlabeled real data and labeled data are fed into the D to obtain the corresponding discrimination results.

- (4) Computation of the total loss: the supervised loss is computed on labeled data and the unsupervised loss on unlabeled real data and fake data; the G and D losses are then obtained.

- (5) Optimization: with the G fixed, optimize and update the parameters of the D; then, with the D fixed, optimize and update the parameters of the G.

- (6) Decide whether to save the model.

- (7) Iterative training: repeat steps 2–6 until the maximum number of steps is reached, then stop training.
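
The alternating schedule in the steps above can be sketched as a small framework-independent loop. This is only a skeleton under stated assumptions: `d_step` and `g_step` are hypothetical callables that each perform one parameter update and return a loss, `k` is the update rate (D updates per G update, assumed to be at least 1), and `save` is an optional checkpoint callback.

```python
def train(max_steps, d_step, g_step, k=1, save_every=None, save=None):
    """Alternate D and G updates until max_steps is reached."""
    history = []
    for step in range(1, max_steps + 1):
        for _ in range(k):
            d_loss = d_step()      # G fixed: update the parameters of the D
        g_loss = g_step()          # D fixed: update the parameters of the G
        history.append((step, d_loss, g_loss))
        # Optional checkpoint: decide whether to save the model.
        if save is not None and save_every and step % save_every == 0:
            save(step)
    return history
```

With `k=1` the D and the G are updated equally often, which corresponds to the update rate of 1 listed in the experimental setup.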

3.3. Stable Switchable Normalization

- Manually specifying the normalization layer is not a universal approach to practical problems.

- Deep neural networks usually have many layers. Their normalization layers typically all use the same normalization operation, because manually designing an operation for each normalization layer requires a large number of experiments.
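
Switchable normalization addresses both points by letting each layer mix the statistics of instance, layer and batch normalization with softmax-normalized importance weights. The following NumPy sketch illustrates the forward computation for 1-D feature maps of shape (N, C, L). It is a simplified illustration: the importance logits are passed in as fixed arrays instead of being learned, running averages for inference are omitted, and the function and argument names are hypothetical.

```python
import numpy as np

def softmax(v):
    e = np.exp(v - v.max())
    return e / e.sum()

def switchable_norm(x, mean_logits, var_logits, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize x of shape (N, C, L) with a weighted mix of IN, LN and BN statistics."""
    # Instance statistics: per sample and per channel.
    mean_in = x.mean(axis=2, keepdims=True)
    var_in = x.var(axis=2, keepdims=True)
    # Layer statistics: per sample, over channels and positions.
    mean_ln = x.mean(axis=(1, 2), keepdims=True)
    var_ln = x.var(axis=(1, 2), keepdims=True)
    # Batch statistics: per channel, over the batch and positions.
    mean_bn = x.mean(axis=(0, 2), keepdims=True)
    var_bn = x.var(axis=(0, 2), keepdims=True)
    # Importance weights for the means and the variances, order (IN, LN, BN).
    w_mean = softmax(np.asarray(mean_logits, dtype=float))
    w_var = softmax(np.asarray(var_logits, dtype=float))
    mean = w_mean[0] * mean_in + w_mean[1] * mean_ln + w_mean[2] * mean_bn
    var = w_var[0] * var_in + w_var[1] * var_ln + w_var[2] * var_bn
    return gamma * (x - mean) / np.sqrt(var + eps) + beta
```

When one logit dominates, the layer reduces to the corresponding single normalizer; equal logits give an even mix of the three.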

4. Experiment and Discussion

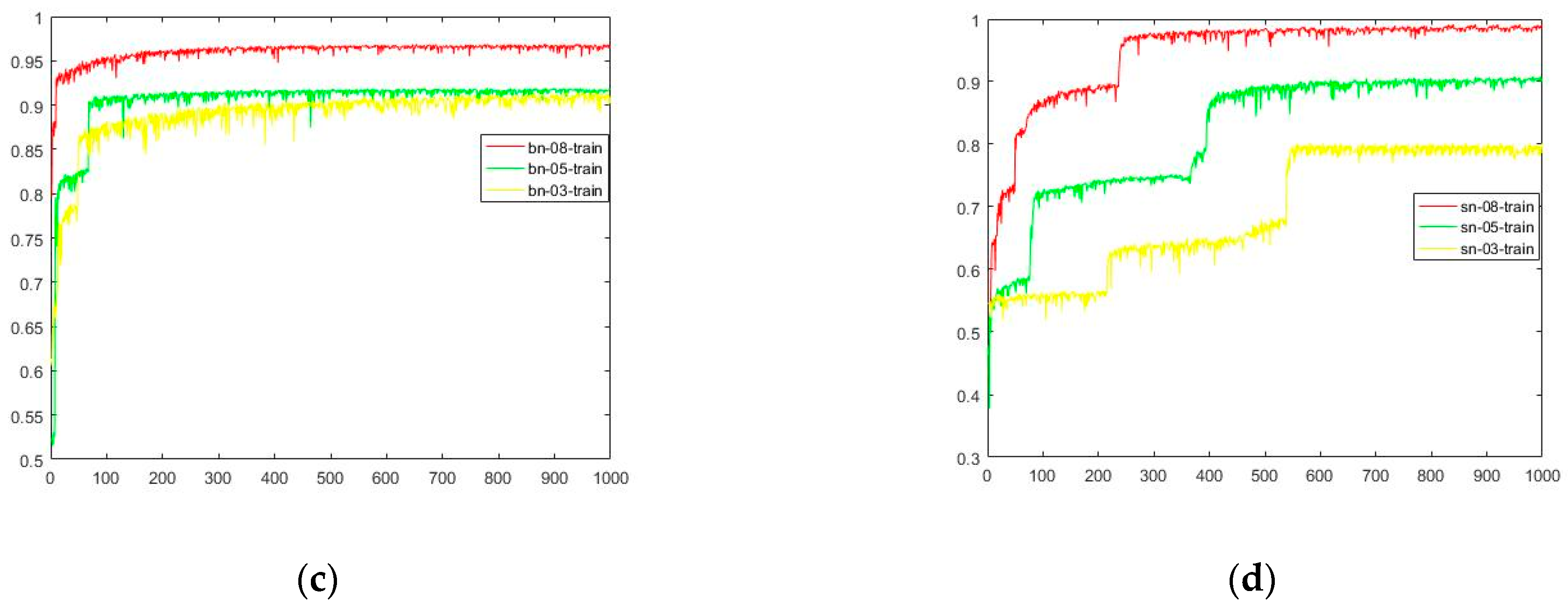

4.1. Data Description

4.2. Experimental Setup

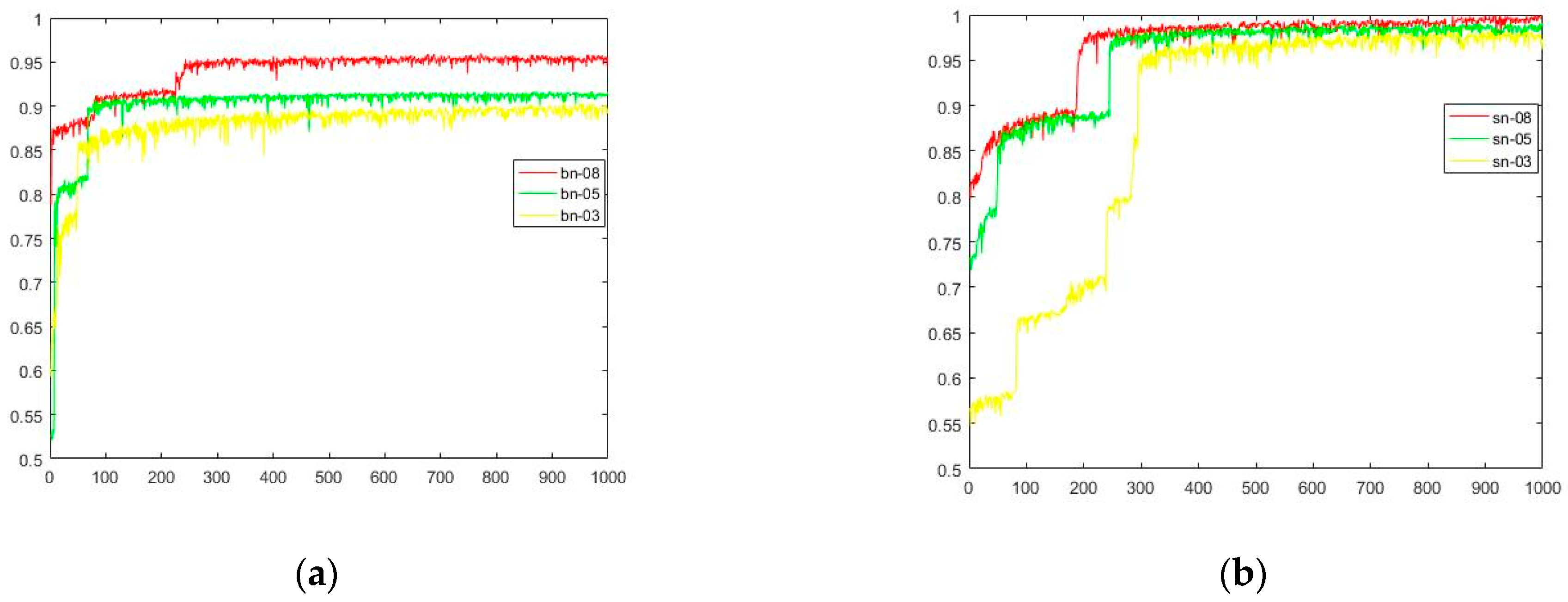

4.3. Experimental Results and Analysis

- The traditional FFT+SVM method performs poorly, reaching at most 85.13%. Both FFT+SVM and WDCNN are strongly affected by the amount of labeled data. When 50% of the samples are labeled, WDCNN reaches only 94.59%, while SN-SSGAN reaches 98.84%. When the proportion of labeled data is 80%, the accuracy of WDCNN reaches 98.79%, while that of SN-SSGAN reaches 99.93%, which indicates that SN-SSGAN performs well in bearing fault diagnosis when spurred by the G. Compared with BN-SSGAN, SN-SSGAN has a clear advantage in accuracy at every labeled proportion in the results; it already reaches 97.45% when only 30% of the data is labeled.

- Although no unlabeled data is listed in Table 6, the lower the proportion of labeled data, the higher the proportion of unlabeled real data. The unlabeled data involved in training also includes fake data generated by the G. The number of fake samples involved in training depends on the ratio of training iterations between the G and the D. In this algorithm, the ratio of samples trained between the G and the D is 1:1. Unlabeled real data plays a positive role in unsupervised learning: even with few labeled samples, good results can still be obtained, which is sufficient to meet the accuracy requirements of bearing diagnosis.

- Fake data generated by the G of SN-SSGAN can alleviate the problem of insufficient data, so that high accuracy is still obtained when little data is available, although SN-SSGAN takes more time than WDCNN and BN-SSGAN (0.39 ms/signal versus 0.28 and 0.31 ms/signal).

5. Conclusions

- (1) Semi-supervised GAN is introduced to boost the accuracy of fault diagnosis when few labeled samples are available for training.

- (2) Switchable normalization determines the appropriate normalization operation for each normalization layer in a deep network.

Author Contributions

Acknowledgments

Conflicts of Interest


| No. | Operation Name | Output Size |
|---|---|---|
| 1 | FC, SN, ReLU | [BatchSize, 1, 1, 2048] |
| 2 | Deconv1, SN, ReLU | [BatchSize, 2, 1, 1024] |
| 3 | Deconv2, SN, ReLU | [BatchSize, 4, 1, 512] |
| 4 | Deconv3, SN, ReLU | [BatchSize, 8, 1, 256] |
| 5 | Deconv4, SN, ReLU | [BatchSize, 16, 1, 128] |
| 6 | Deconv5, SN, ReLU | [BatchSize, 32, 1, 64] |
| 7 | Deconv6, SN, ReLU | [BatchSize, 64, 1, 32] |
| 8 | Deconv7, SN, ReLU | [BatchSize, 128, 1, 16] |
| 9 | Deconv8, SN, ReLU | [BatchSize, 256, 1, 8] |
| 10 | Deconv9, SN, ReLU | [BatchSize, 512, 1, 4] |
| 11 | Deconv10, SN, ReLU | [BatchSize, 1024, 1, 2] |
| 12 | Deconv11, SN, ReLU | [BatchSize, 2048, 1, 1] |
| 13 | Deconv12, SN, tanh | [BatchSize, 2048, 1, 1] |

| No. | Operation Name | Output Size |
|---|---|---|
| 1 | Conv1, SN, LReLU | [BatchSize, 64, 1, 16] |
| 2 | Conv2, SN, LReLU | [BatchSize, 32, 1, 32] |
| 3 | Conv3, SN, LReLU | [BatchSize, 16, 1, 64] |
| 4 | Conv4, SN, LReLU | [BatchSize, 8, 1, 64] |
| 5 | Conv5, SN, LReLU | [BatchSize, 3, 1, 64] |
| 6 | FC, SN, LReLU | [BatchSize, 100] |
| 7 | Softmax | [BatchSize, K] |

| Fault Location | Ball | Ball | Ball | Inner Race | Inner Race | Inner Race | Outer Race | Outer Race | Outer Race | Normal |
|---|---|---|---|---|---|---|---|---|---|---|
| Fault diameter (inch) | 0.007 | 0.014 | 0.021 | 0.007 | 0.014 | 0.021 | 0.007 | 0.014 | 0.021 | 0 |
| Category labels | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
| Training samples | 1410 | 1410 | 1410 | 1410 | 1410 | 1410 | 1410 | 1410 | 1410 | 1410 |
| Test samples | 352 | 352 | 352 | 352 | 352 | 352 | 352 | 352 | 352 | 352 |

| Parameter | Value | Explanation |
|---|---|---|
| Batch size | 32 | Number of training samples per batch |
| Learning rate of G | 0.0001 | Generator’s learning rate |
| Learning rate of D | 0.0001 | Discriminator’s learning rate |
| Update rate | 1 | With an update rate of k, the discriminator is updated k times for each generator update. |
| Size of z | 128 | Length of the randomly generated vector used as the generator’s input |
| Optimizer of the D | Adam | beta1 = 0.5 |
| Optimizer of the G | Adam | beta1 = 0.5 |
| One-sided label smoothing | 0.9 | Labels are changed from 1/0 to 0.9/0.1 in unsupervised learning |
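
The label smoothing entry in this table can be illustrated with a short NumPy sketch. It assumes a binary cross-entropy loss on the real/fake output of the D and uses the 0.9/0.1 targets stated in the table; the helper names are hypothetical.

```python
import numpy as np

def smoothed_targets(is_real, smoothing=0.9):
    # Real samples get target 0.9 and fake samples 0.1 instead of 1 and 0.
    is_real = np.asarray(is_real, dtype=float)
    return is_real * smoothing + (1.0 - is_real) * (1.0 - smoothing)

def binary_cross_entropy(p, target, eps=1e-8):
    # p is the predicted probability that a sample is real.
    p = np.clip(np.asarray(p, dtype=float), eps, 1.0 - eps)
    target = np.asarray(target, dtype=float)
    return float(-(target * np.log(p) + (1.0 - target) * np.log(1.0 - p)).mean())
```

With smoothed targets the loss is smallest when the D predicts 0.9 for real data, which discourages over-confident outputs.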

| Ratio | Fake Data | Real Data (Labeled) | Real Data (Unlabeled) | Test Data |
|---|---|---|---|---|
| 0.3 | 4 | 1.2 | 2.8 | 1 |
| 0.5 | 4 | 2 | 2 | 1 |
| 0.8 | 4 | 3.2 | 0.8 | 1 |
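
These proportions can be converted into sample counts. The sketch below assumes the 14,100 training samples (10 categories × 1410) given in the data description table; `split_counts` is a hypothetical helper that only illustrates the arithmetic.

```python
def split_counts(n_train, labeled_ratio):
    # Number of labeled samples, with the remainder treated as unlabeled real data.
    n_labeled = round(n_train * labeled_ratio)
    return n_labeled, n_train - n_labeled

n_train = 10 * 1410
for ratio in (0.3, 0.5, 0.8):
    print(ratio, split_counts(n_train, ratio))
```

At a ratio of 0.3, for example, 4230 samples are labeled and the remaining 9870 are used as unlabeled real data.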

| Method | Accuracy at labeled-data ratio 0.3 | Accuracy at labeled-data ratio 0.5 | Accuracy at labeled-data ratio 0.8 | Time (ms/signal) |
|---|---|---|---|---|
| FFT+SVM | 68.36% | 81.68% | 85.13% | 0.7 |
| WDCNN | 89.17% | 94.59% | 98.79% | 0.28 |
| BN-SSGAN | 88.92% | 91.15% | 95.26% | 0.31 |
| SN-SSGAN | 97.45% | 98.84% | 99.93% | 0.39 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhao, D.; Liu, F.; Meng, H. Bearing Fault Diagnosis Based on the Switchable Normalization SSGAN with 1-D Representation of Vibration Signals as Input. Sensors 2019, 19, 2000. https://doi.org/10.3390/s19092000
