Effects of Offset Pixel Aperture Width on the Performances of Monochrome CMOS Image Sensors for Depth Extraction

Abstract

1. Introduction

2. Architecture of the Sensor

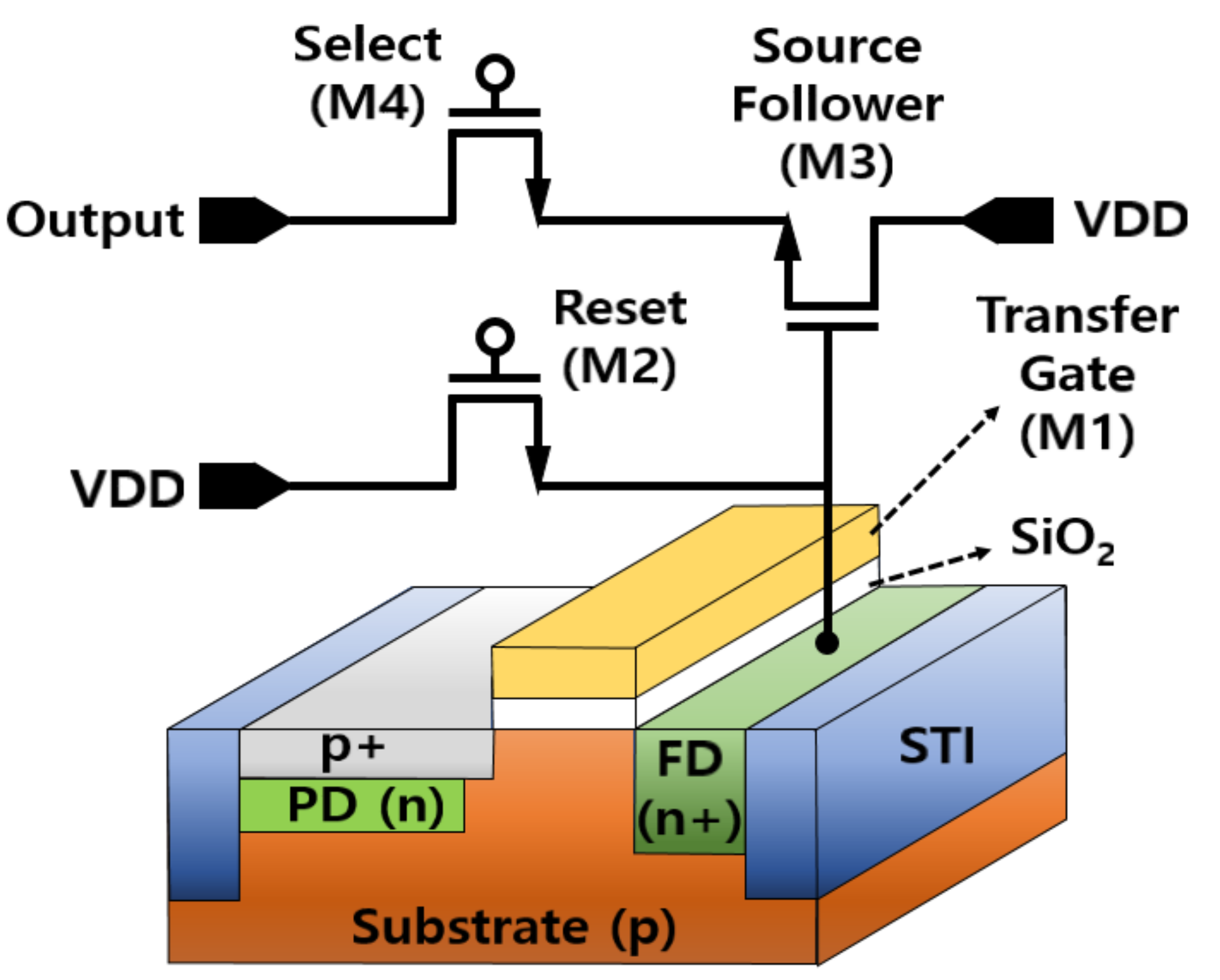

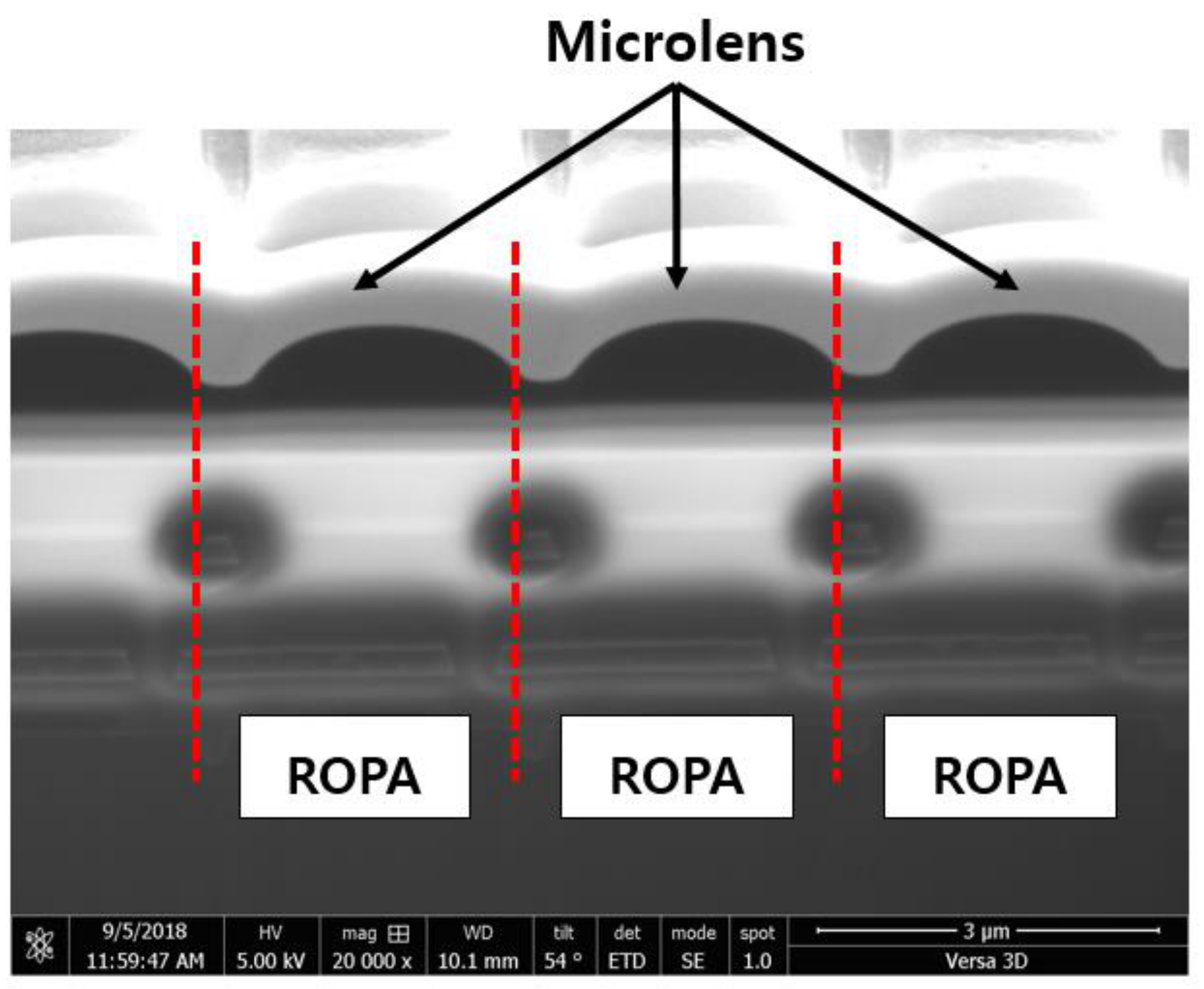

2.1. Pixel Structure

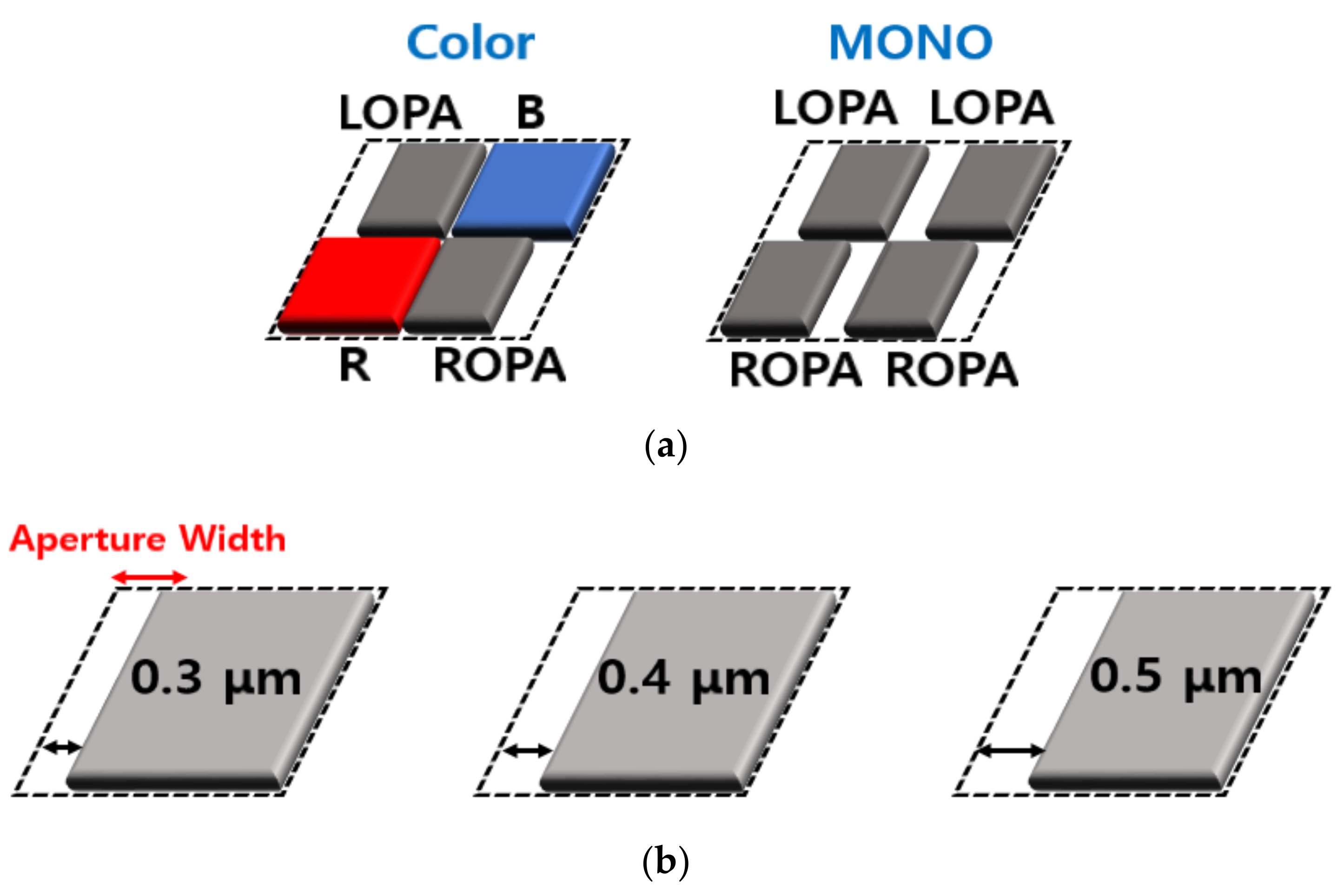

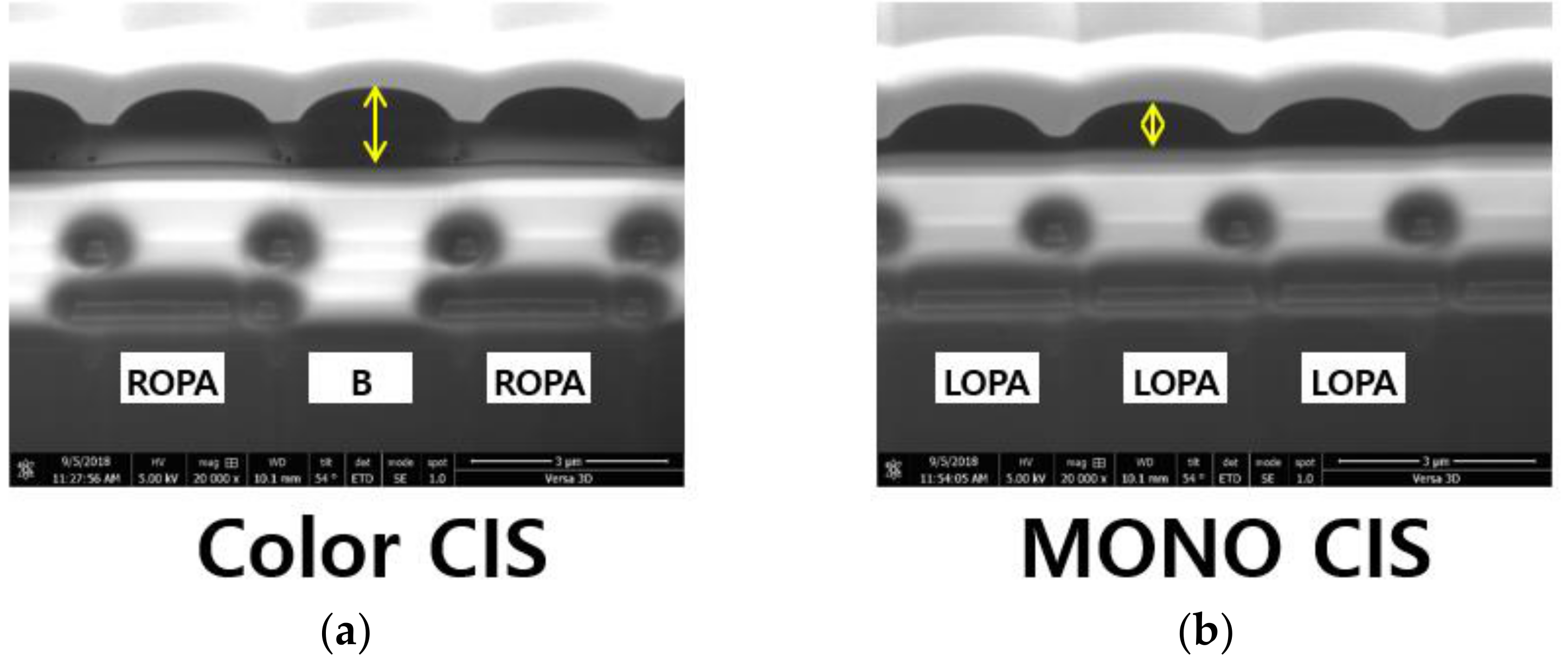

2.2. MONO CIS with OPA Technique

3. Results and Discussions

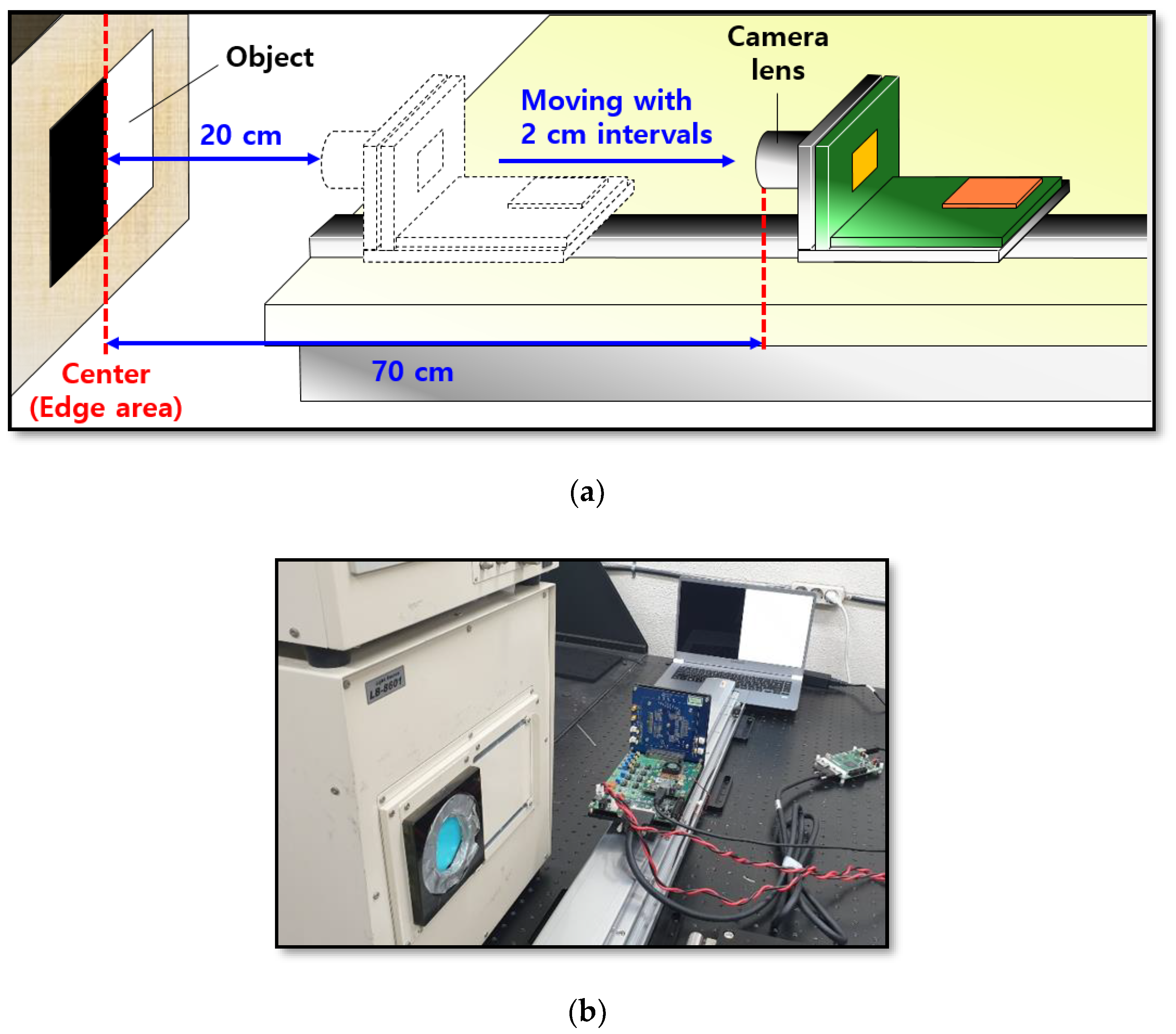

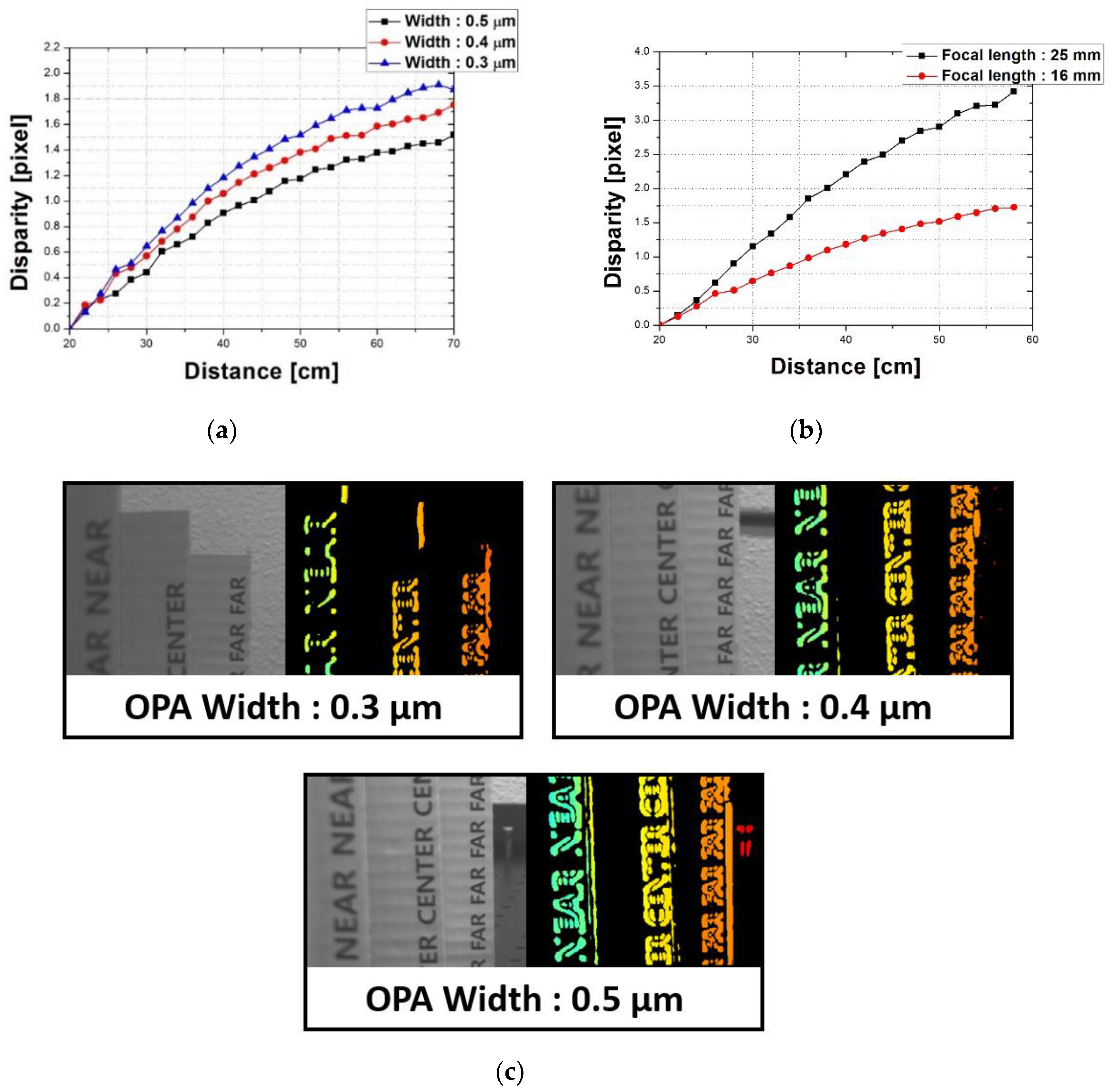

3.1. Measurement of the Disparity Depending on the Distance

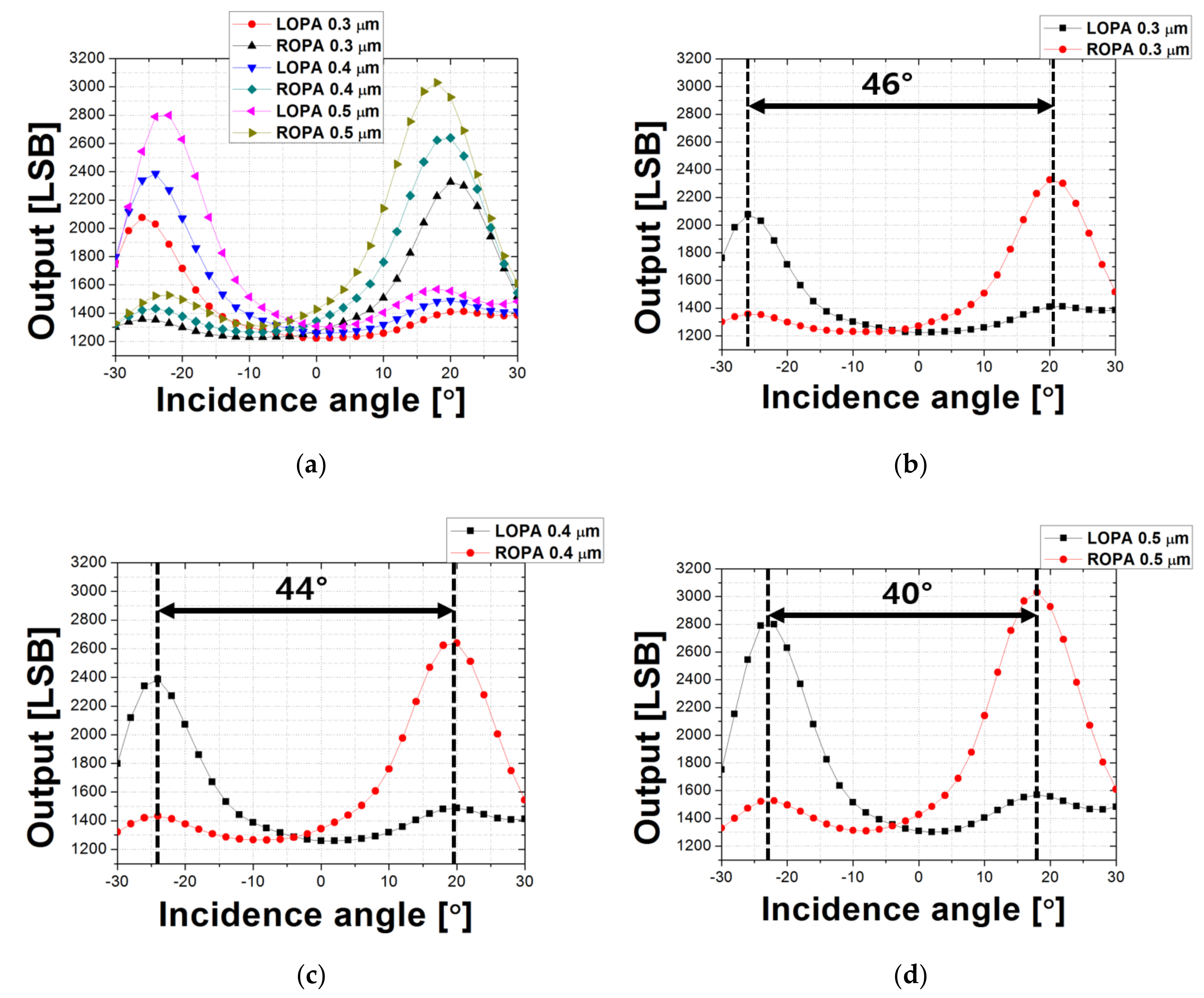

3.2. Characteristics of the Proposed MONO CIS with OPA Depending on the CRA

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Parameter | Value |
|---|---|
| Pixel size | 2.8 × 2.8 μm |
| Pixel array | 486 × 1080 (test element group (TEG) region only) |
| Frame rate | 18 fps |
| Process | 0.11 μm CIS process |
| Color pattern | Monochrome (without color filters) |
| Pixel type | 4-transistor (4-Tr) active pixel sensor (APS) structure |
| Chip size | 7 mm (H) × 10 mm (V) |
| Power supply | Analog: 3.3 V; digital: 1.8 V |
| Disparity | 46° (width 0.3 μm), 44° (width 0.4 μm), 40° (width 0.5 μm) |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lee, J.; Choi, B.-S.; Kim, S.-H.; Lee, J.; Lee, J.; Chang, S.; Park, J.; Lee, S.-J.; Shin, J.-K. Effects of Offset Pixel Aperture Width on the Performances of Monochrome CMOS Image Sensors for Depth Extraction. Sensors 2019, 19, 1823. https://doi.org/10.3390/s19081823
