1. Introduction

In the last decade, 3D scanning devices have spread steadily through industry, mainly for the inspection and quality control of processes that use robotic and machine vision systems, which need motion control within an unknown workspace [1,2]. The main noncontact measurement methods include visual detection [3,4] and laser scanning methods [5].

Up to now, few works have been published about sensor model calibration describing the combination of motion control with the high positioning accuracy of industrial robots (1 mm maximum error tolerance) and 3D noncontact measuring systems [1,2,6,7,8,9,10,11,12,13,14]. A robotic system can perform measurements from different angles and directions, avoiding occlusion, shading problems, and insufficient data from the surface to be measured [15,16].

To achieve an accurate measurement of an object’s pose expressed in a world coordinate system using a vision system mounted on the robot, various components need to be calibrated beforehand [11,17,18]. This includes the robot tool position, expressed in the robot base coordinate system; the camera pose, expressed in the robot tool coordinate system; and the object pose, expressed in the camera coordinate system. In recent years, there have been major research efforts to individually resolve each of the tasks above [19]. For instance, cameras and laser projectors are calibrated to find the intrinsic and extrinsic parameters of the digitizer, or to obtain 2D image coordinates from 3D object coordinates expressed in world coordinate systems [20]. In addition, there is also research focused on robot calibration in order to increase the accuracy of the robot end-effector positioning by using measures expressed in a 3D digitizer coordinate system [21]. Once all system components are individually calibrated, the object position expressed in the robot base reference system can be directly obtained from vision sensor data.

The calibration of the complete robot-vision system can be achieved by calibrating its components or subsystems separately, since the calibration procedure for each component is relatively simple. If the relative position of any one component of the system is modified, the calibration procedure must be repeated only for that component.

Noncontact measurement systems have been analyzed and compared regarding their measurement methodology and accuracy in [22], considering their high sensitivity to various external factors inherent in the measuring process or the optical characteristics of the object. However, in the case of noncontact optical scanning systems, and due to the complexity of assessing the process errors, there is no standardized method to evaluate the measurement uncertainty, as described in ISO/TS 14253-2:1999 and ISO/IEC Guide 98-3:2008, which makes it difficult to establish criteria to evaluate the performance of the measurement equipment. In ISO 10360-7:2011, for example, there is currently no specification of performance requirements for the calibration of laser scanners, fringe projection, or structured light systems.

An experimental procedure has been conceived to calibrate the relative position between the vision sensor coordinate system and the robot base coordinate system, consisting of moving the robot manipulator to different poses for the digitization of a standard sphere of known radius [10]. Through a graphical visualization algorithm, a trajectory could be chosen by the user for the robot tool to follow. The calibration procedure proposed in that work agreed with the specifications of ISO 10360-2 for coordinate measuring machines (CMMs). A similar work is presented in [23].

In this article, a calibration routine is presented to acquire surface 3D maps from a scanner specially built with a vision camera and two laser projectors to transform these coordinates into object coordinates expressed in the robot controller for surface welding. The calibration of the geometric parameters of the vision sensor can be performed by using a flat standard block to acquire several images of the laser light at different angular positions of the mobile laser projector. The image of the fixed sensor is stored to compute the intersection between it and the images of the light projections of the mobile laser projector. The transformation of 3D maps from the sensor coordinates to the robot base coordinates was performed using a method to calibrate the sensor position fixed on the robot arm together with the geometric parameters of the robot. Results have shown that in the application the scanning sensor based on triangulation can generate 3D maps expressed in the robot base coordinates with acceptable accuracy for welding, with the values of positioning errors smaller than 1 mm in the working depth range.

2. The Optical System

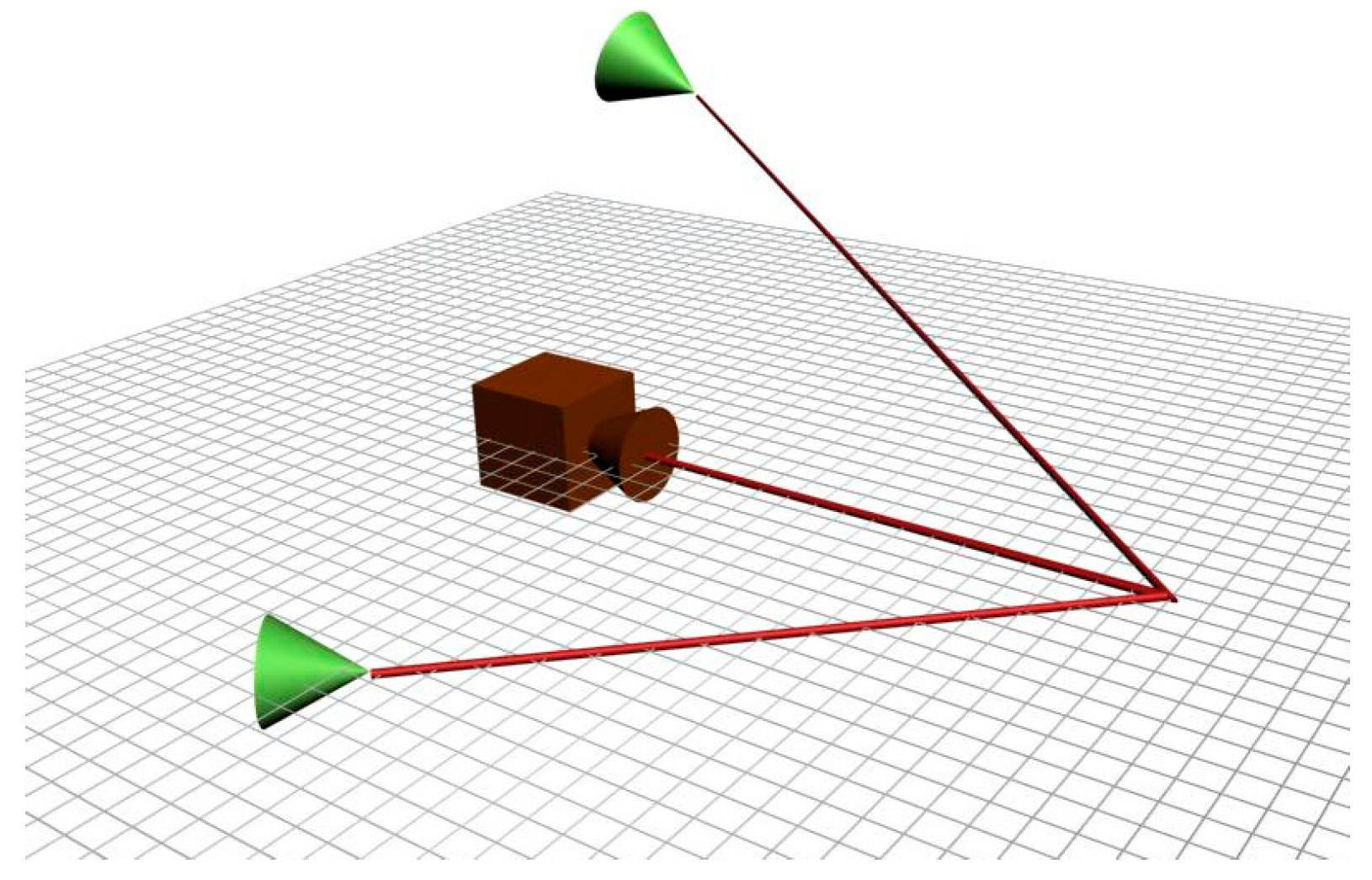

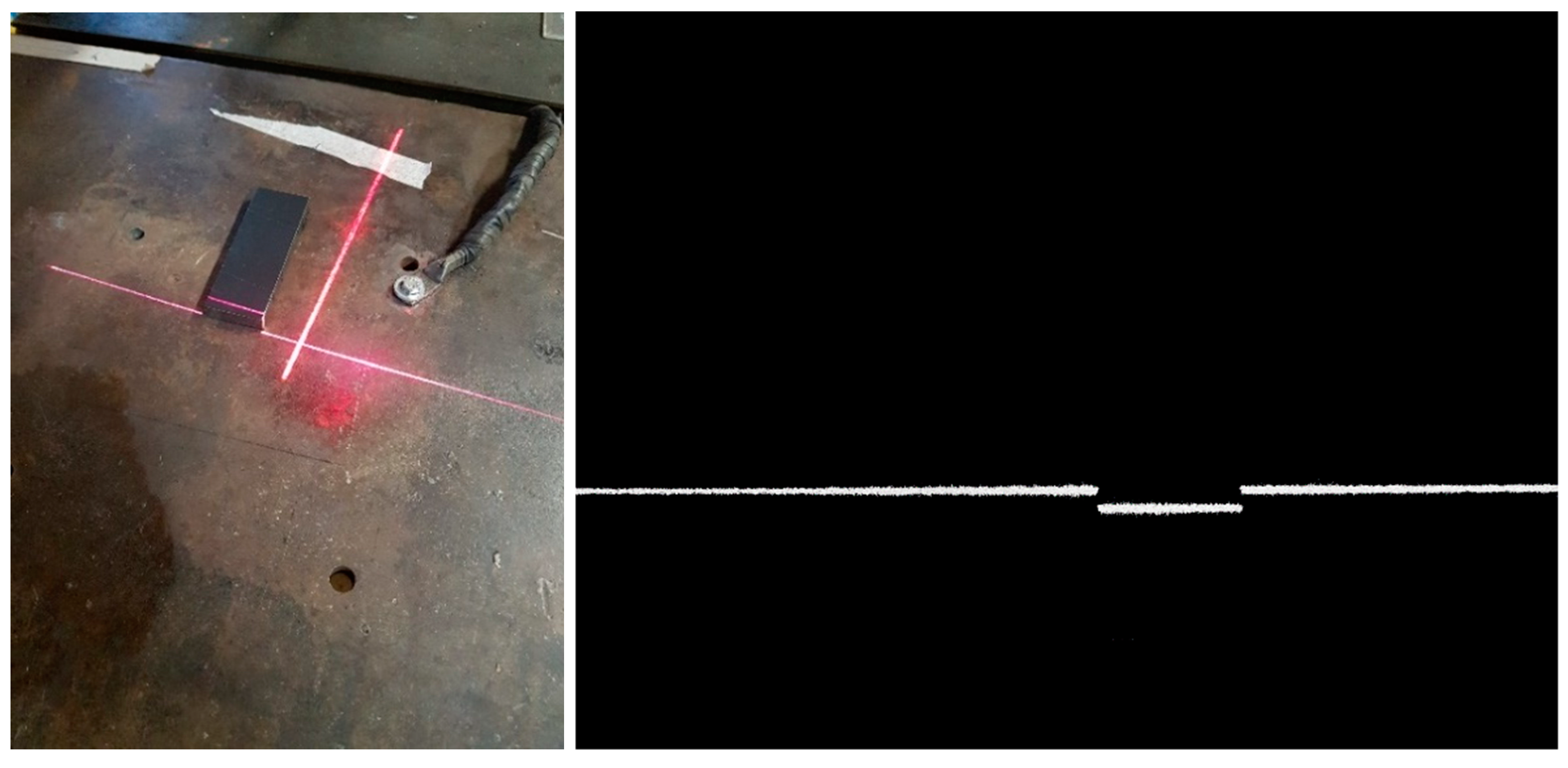

The surface scanning system developed in this research does not depend on positioning sensors to measure the angular displacement of the laser light source. However, the determination of this angular displacement is required for the triangulation process to produce the depth map. The proposed sensor replaces the angular displacement sensor by another laser source, such that the system is composed of two laser projectors and a camera, as shown in the sketch in Figure 1.

In addition to the use of a second laser projector, it is also necessary to include two geometrical restrictions to the mounting system. The first restriction is that the relative position between the two planes of light projected by the lasers must be perpendicular so that the triangulation equations proposed are valid. The second restriction is that one of the laser projectors is fixed. These restrictions will be discussed in detail later.

Each of the laser diodes projects a light plane onto a surface, generating a light curve on it, as shown in Figure 2.

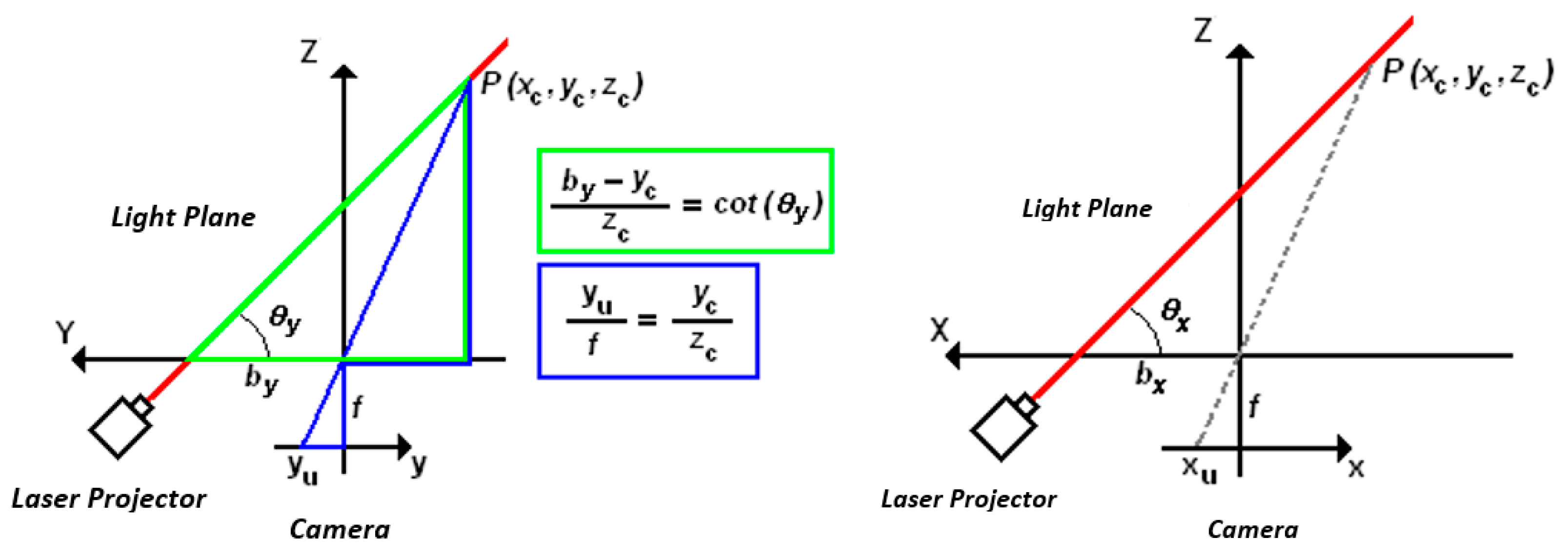

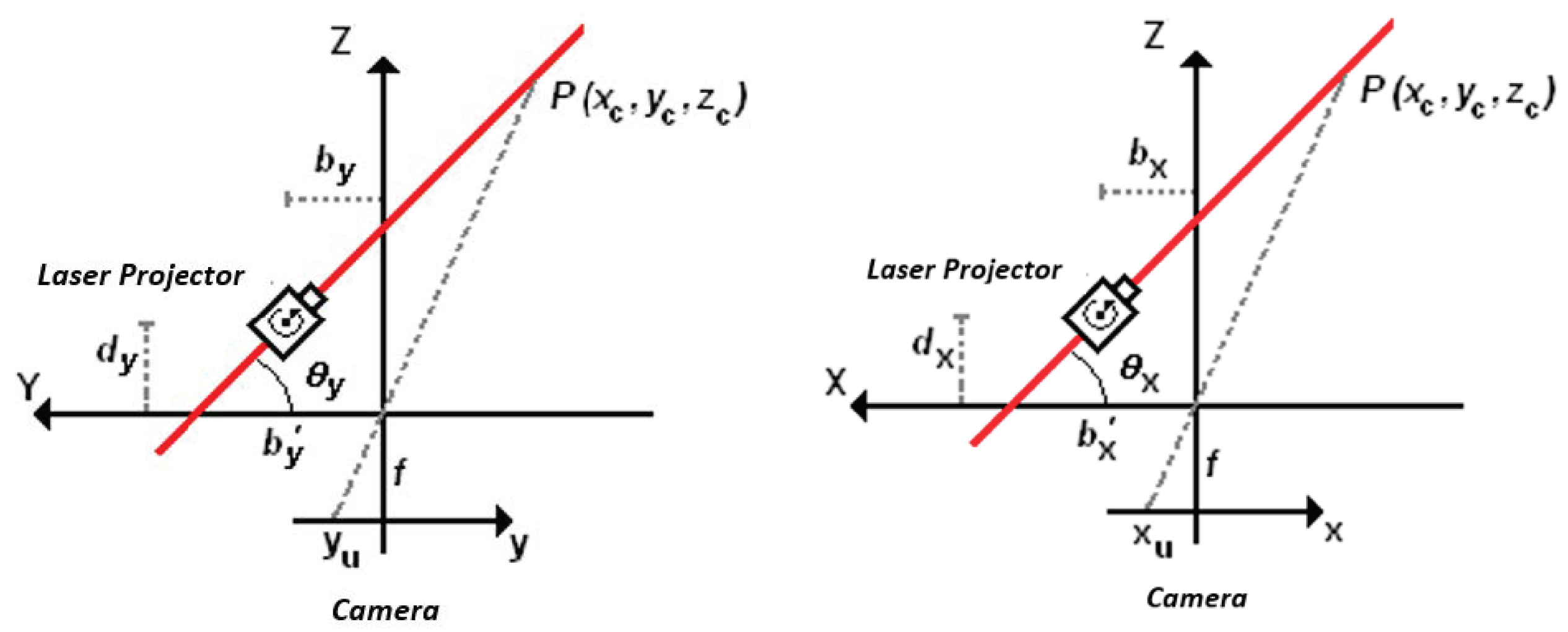

It is considered that the light plane in Figure 2 is parallel to the X-axis of the camera coordinate system. In the Z–Y plane in Figure 3, the image of a single point, P, on the laser line is projected on the camera sensor such that the image formation of P is a projection by the central perspective model.

There are two triangles in green and blue in Figure 3 from which a relationship between the 3D coordinates of point P and the 2D image coordinates can be formulated.

(xc, yc, zc) → 3D coordinates of point P in camera coordinates (mm);

(xu, yu) → image coordinates of point P (mm);

by → distance along the Y-axis between the camera origin and the laser plane parallel to the X-axis;

θy → angle between the Y-axis and the laser plane parallel to the X-axis;

f → camera focal length.

From the perspective equations, xc/xu = yc/yu, it is possible to determine the value of xc from

such that the 3D coordinates of point P are completely defined by the 2D image coordinates by

Due to the restrictions of the mounting system, both laser planes are perpendicular to each other so that the second laser is parallel to the Y-axis of the camera. The equations of the first laser line can be derived from a projection of the X–Z plane shown in Figure 3 such that the image formation of point P on the line can be formulated with the perspective model with Equation (4):

where

From the perspective equations:

such that the 3D coordinates of point P are completely defined by its 2D image coordinates using Equation (6):

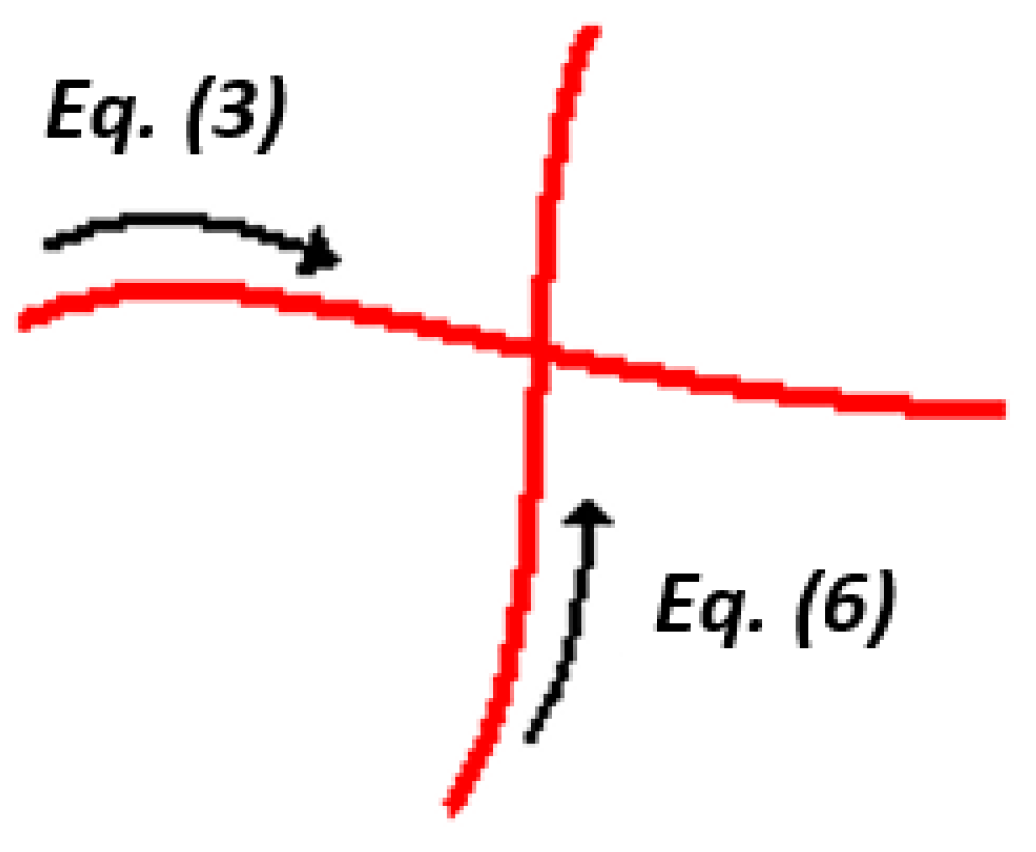

Equations (3) and (6) define a relationship between the 3D coordinates of a point P and its 2D image coordinates, but these equations are not valid for all points of the light projection. Equation (3) is valid only for one of the laser lines, and Equation (6) is valid only for the other, as shown in Figure 4.

However, at the point of intersection Pint between the two lasers’ lines projected on the surface, both equations are valid.
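The single-plane triangulation described above can be illustrated with a minimal sketch. It assumes a pinhole camera (xu = f·xc/zc, yu = f·yc/zc) and a laser plane parallel to the X-axis whose points satisfy yc = by − zc·cot(θy). This sign convention and the function name `triangulate_y_plane` are assumptions made for illustration and may differ from the convention of Figure 3 and Equation (3).

```python
def triangulate_y_plane(xu, yu, f, by, cot_theta_y):
    """Back-project image point (xu, yu) onto the assumed laser plane
    yc = by - zc * cot(theta_y), using the pinhole model."""
    # Substituting yc = yu * zc / f into the plane equation gives zc.
    zc = by * f / (yu + f * cot_theta_y)
    # The perspective equations then give the remaining coordinates.
    xc = xu * zc / f
    yc = yu * zc / f
    return xc, yc, zc


# Example: a point on the plane is projected to the image and recovered.
f, by, cot_ty = 8.0, 100.0, 0.5          # hypothetical values (mm)
xc, zc = 15.0, 120.0
yc = by - zc * cot_ty                    # point lies on the laser plane
xu, yu = f * xc / zc, f * yc / zc        # ideal image coordinates
recovered = triangulate_y_plane(xu, yu, f, by, cot_ty)
```

The same construction applies to the plane parallel to the Y-axis by exchanging the roles of x and y.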

From the image coordinates of the intersection point (xint and yint), the 3D coordinates of Pint can be calculated from both Equations (3) and (6), so a relationship between the angular displacement of both laser diodes, θx and θy, can be obtained as

Since one of the laser diodes has no degree of freedom, either cot(θx) or cot(θy) is constant and previously known, as are the values of bx, by, and f, which are also calibrated previously. Therefore, the remaining term, cot(θx) or cot(θy), of the mobile laser can be obtained from Equation (7). Equation (3) or, alternatively, Equation (6) can then convert the 2D image coordinates into the 3D coordinates of each point on the line projected onto the surface by the mobile laser diode.
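As an illustration of this step, the sketch below recovers the cotangent of the mobile laser angle from the image of the intersection point and then triangulates a point on the mobile laser line. It assumes that the fixed laser is the plane yc = by − zc·cot(θy), that the mobile laser is the plane xc = bx − zc·cot(θx), and that misalignment is neglected. These conventions and all function names are assumptions for illustration only.

```python
def mobile_cot_from_intersection(x_int, y_int, f, bx, by, cot_theta_y):
    """Estimate cot(theta_x) of the mobile laser from the intersection point."""
    # Depth of the intersection point from the fixed (known) laser plane.
    z_int = by * f / (y_int + f * cot_theta_y)
    # The same point must satisfy xc = bx - zc * cot(theta_x).
    return bx / z_int - x_int / f


def triangulate_x_plane(xu, yu, f, bx, cot_theta_x):
    """Back-project an image point onto the mobile laser plane."""
    zc = bx * f / (xu + f * cot_theta_x)
    return xu * zc / f, yu * zc / f, zc


# Example with hypothetical values (mm): true cot(theta_x) is 0.4.
f, bx, by, cot_ty = 8.0, 80.0, 100.0, 0.5
cot_tx = mobile_cot_from_intersection(3.2, 4.0, f, bx, by, cot_ty)
# A second point on the mobile laser line, at depth 150 mm.
point = triangulate_x_plane(8.0 * 20.0 / 150.0, 8.0 * 10.0 / 150.0, f, bx, cot_tx)
```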

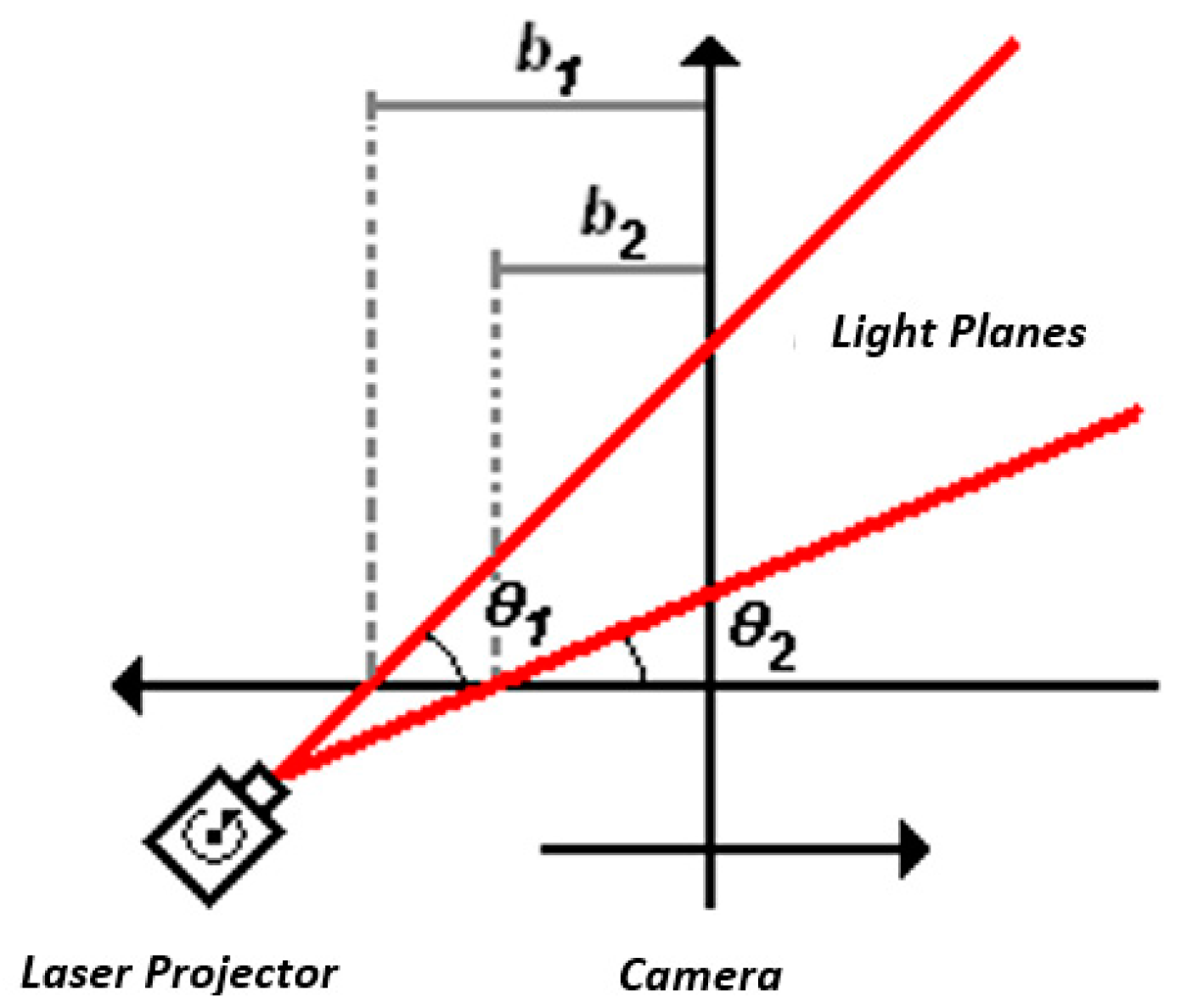

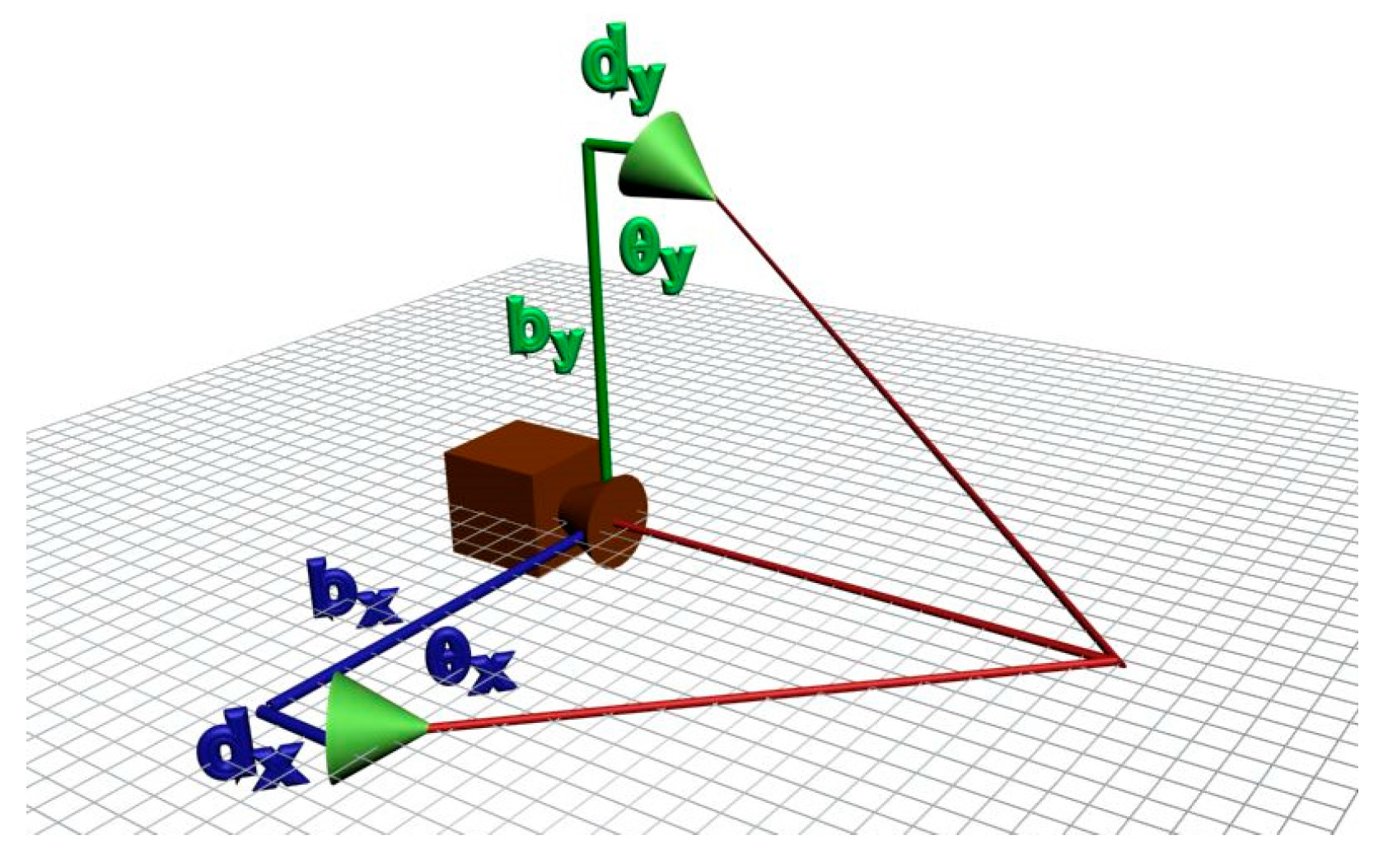

However, when rotating the mobile laser projector, the model described by Equations (3), (6), and (7) cannot describe the system geometry. As can be seen in Figure 5, if the mobile laser diode is not aligned with the camera’s coordinate system, the distance, b, does not remain constant while scanning the surface.

To consider this effect in the digitization equations, it is necessary to include a misalignment parameter, and then it is possible to perform a correction on the base distance of the laser for each angular position according to Equation (8) and Figure 6:

It is important to note that although this misalignment can occur in both diodes, it generates variation only on the base distance of the mobile laser beam. For the fixed laser, regardless of the misalignment, the base distance, b’, remains constant. In other words, after determining this distance, no compensation is necessary due to the variation in the position of the mobile beam.

Rewriting the scanning equations, including the effect of the mobile laser misalignment, yields

where bx’ and by’ are given by Equation (8) and

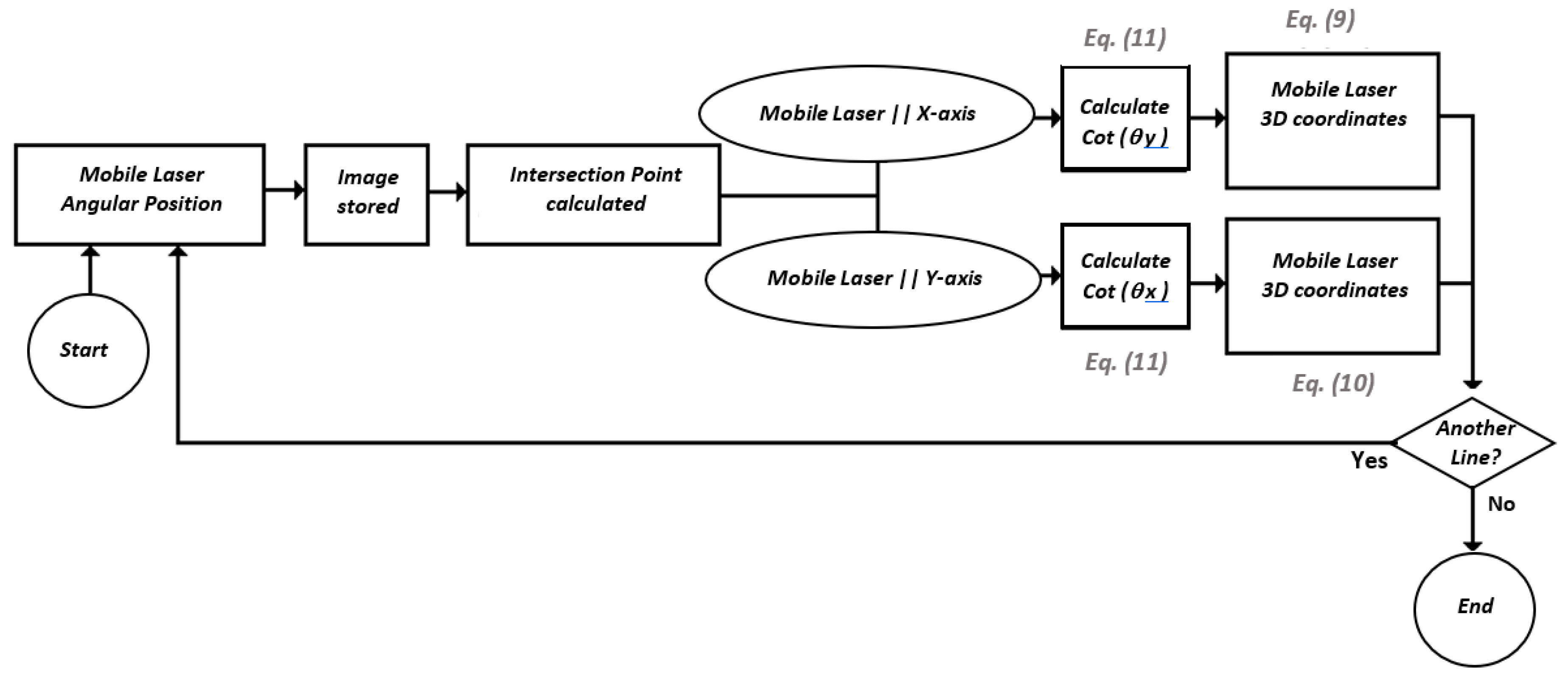

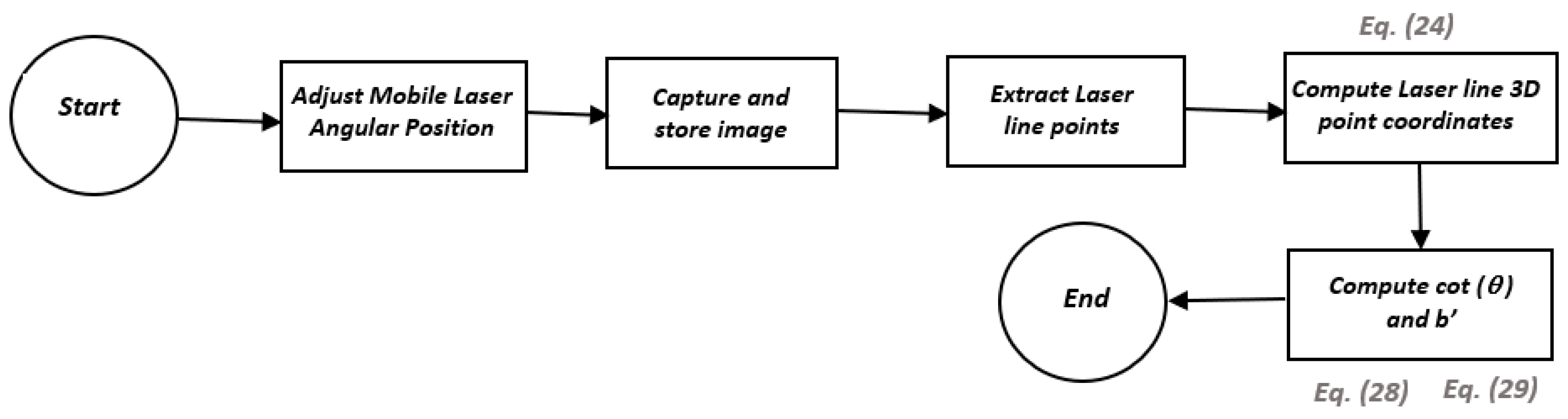

Figure 7 shows a flowchart of the steps for the complete scan of a surface. It is important to note that the camera model and the parameters bx, by, dx, dy, cot(θx), and cot(θy) are previously calibrated. Depending on which laser diode is used as the mobile laser, either Equation (9) or Equation (10) is used.

3. Optical System Calibration

Since the camera is calibrated, all camera intrinsic and extrinsic parameters are completely determined, and the optical system can be calibrated with these parameters.

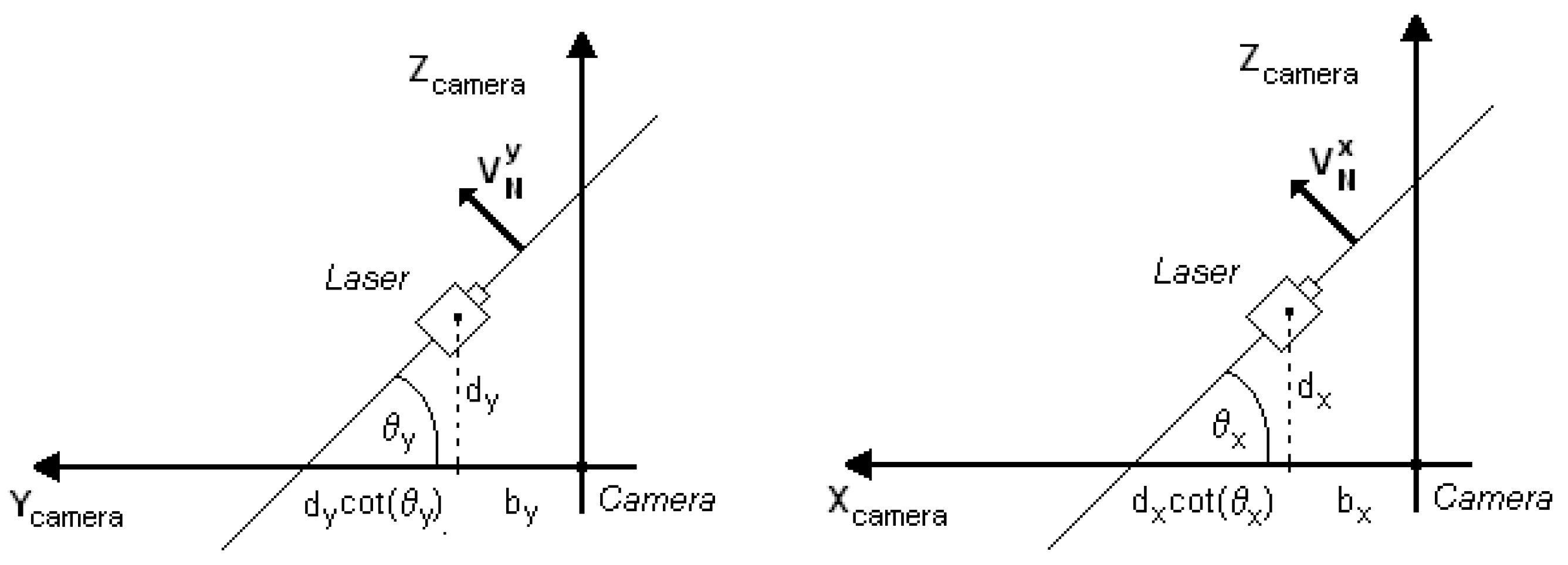

The calibration of the optical system is the process of identifying the real values of the geometric parameters of the optical system described previously. These parameters can be seen in Figure 8.

A point P on the object reference system with coordinates (xw, yw, zw) has its coordinates expressed in the camera coordinate system (xc, yc, zc) by the following equation:

$$\begin{bmatrix} x_c \\ y_c \\ z_c \end{bmatrix} = R \begin{bmatrix} x_w \\ y_w \\ z_w \end{bmatrix} + T$$

where R is a 3 × 3 orthonormal rotation matrix and T is a translation vector representing the spatial coordinates of the origin of the world reference system expressed in the camera coordinate system.

Considering that R and T, defined in Equation (12), perform the transformation of the world reference system to the camera reference system, it is possible to determine the equation of the reference plane in relation to the camera from the transformation below:

where zw = 0.

To transform the normal plane vector to the camera’s coordinate system, one can use

To determine D in Equation (13), and considering that a point [Tx Ty Tz]T belongs to the calibration plane, then

So, the calibration plane in relation to the camera frame is completely defined as

where

,

,

, and

.
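The construction of the calibration plane in the camera frame can be sketched numerically. The sketch assumes that the board is the plane zw = 0, that its normal in the camera frame is the rotated world Z-axis, and that the plane is written as n·p + D = 0. The function name `plane_in_camera` and the numerical values are hypothetical.

```python
import numpy as np


def plane_in_camera(R, T):
    """Return (n, D) of the board plane n . p + D = 0 in camera coordinates."""
    # The board normal is the world Z-axis, rotated into the camera frame.
    n = R @ np.array([0.0, 0.0, 1.0])
    # The world origin, located at T in the camera frame, lies on the plane.
    D = -float(n @ T)
    return n, D


# Example: board tilted 30 degrees about the camera X-axis, 500 mm away.
a = np.deg2rad(30.0)
R = np.array([[1.0, 0.0, 0.0],
              [0.0, np.cos(a), -np.sin(a)],
              [0.0, np.sin(a), np.cos(a)]])
T = np.array([10.0, 20.0, 500.0])
n, D = plane_in_camera(R, T)

# Any board point (zw = 0) mapped to the camera frame satisfies the plane.
p_cam = R @ np.array([5.0, -3.0, 0.0]) + T
residual = float(n @ p_cam + D)
```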

The next step is the determination of the planes generated by each of the laser beams, as shown in Figure 9, which indicates the normal vectors of the generated planes and the positions of the laser diodes relative to the camera.

The equations of these planes are given by

The intersection of these planes with the calibration board can be determined through Equations (16)–(18). These intersections are the projections of the laser light on the board surface and are mathematically described as lines in space.

The plane defined by Equation (17) is

By choosing xc as a free parameter, the solution of the system is given from the parametric equation of the line of intersection between these planes:

Similarly, for the plane of light described by Equation (18):

The existence of the free parameter, t, in the equations of the intersection between the planes is to avoid divisions by zero since it is possible that the values of xc and yc are constant in light planes parallel to the X and Y axes, respectively.
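A generic way to compute such a line of intersection is sketched below. It is not the parametrization used in Equations (20) and (22): instead of fixing one coordinate as the free parameter, it takes the line direction as the cross product of the two normals, which also avoids divisions by zero. The function name and the numerical values are hypothetical.

```python
import numpy as np


def plane_intersection(n1, d1, n2, d2):
    """Intersect planes n1 . p + d1 = 0 and n2 . p + d2 = 0.

    Returns (p0, u) so that the line is p(t) = p0 + t * u.
    """
    n1 = np.asarray(n1, dtype=float)
    n2 = np.asarray(n2, dtype=float)
    u = np.cross(n1, n2)                 # direction of the line
    if np.linalg.norm(u) < 1e-12:
        raise ValueError("planes are parallel")
    # p0 is the point of the line closest to the origin (u . p0 = 0).
    A = np.vstack([n1, n2, u])
    b = np.array([-d1, -d2, 0.0])
    p0 = np.linalg.solve(A, b)
    return p0, u / np.linalg.norm(u)


# Example: board plane z = 500 and laser plane y + 0.5 z - 100 = 0.
p0, u = plane_intersection([0, 0, 1], -500.0, [0, 1, 0.5], -100.0)
```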

Thus, once the image coordinates (xim and yim) of a point on the laser line are obtained, and since this point lies on the plane of the calibration board, the coordinates of this point (xc, yc, and zc) relative to the camera reference system can be obtained using the camera model equations proposed by Tsai [24] and by Lenz and Tsai [25], referred to as the Radial Alignment Constraint (RAC) model, with some modifications proposed by Zhuang and Roth [26], comprising the equations below together with the equation of the calibration board:

where (Cx, Cy) are the coordinates of the image center in pixels, μ = fy/fx, k is the coefficient of image radial distortion, kr² << 1, and fx and fy are the focal lengths in pixels corrected for the pixel dimensions in the X and Y axes, respectively (scale factors sx and sy in Table 1, where fx = f/sx and fy = f/sy).
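The system formed by the camera model and the board plane amounts to intersecting a viewing ray with a plane. A minimal sketch follows, assuming an ideal pinhole model with no radial distortion (k = 0), that is, xim − Cx = fx·xc/zc and yim − Cy = fy·yc/zc. The RAC model with distortion used in this work is more complete, and the function name and values below are hypothetical.

```python
import numpy as np


def backproject_to_plane(x_im, y_im, Cx, Cy, fx, fy, plane):
    """Intersect the viewing ray of pixel (x_im, y_im) with the plane
    A*x + B*y + C*z + D = 0 given in camera coordinates."""
    A, B, C, D = plane
    # Ray direction for zc = 1 (distortion neglected by assumption).
    ray = np.array([(x_im - Cx) / fx, (y_im - Cy) / fy, 1.0])
    denom = A * ray[0] + B * ray[1] + C
    if abs(denom) < 1e-12:
        raise ValueError("viewing ray is parallel to the plane")
    zc = -D / denom
    return zc * ray


# Example: board plane z = 500 mm in front of the camera.
p = backproject_to_plane(400.0, 76.0, 320.0, 240.0, 800.0, 820.0,
                         (0.0, 0.0, 1.0, -500.0))
```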

Solving the system above, the coordinates (xc, yc, and zc) of a point of the laser line can be obtained directly as

where

Therefore, using these obtained coordinates (xc, yc, and zc) and the equation of the projection line of the laser plane in space (Equations (20) and (22)), it is possible to obtain a linear system of equations for the unknown laser parameters:

It is easily seen that Columns 2 and 3 are identical in Equation (27), i.e., regardless of the number of points used the system will always have a rank of 2. Therefore, the misalignment parameters, dx and dy, cannot be obtained directly from these systems.

For the calibration of dx and dy, two or more positions of the mobile laser are used and the values of d·cot(θ) and b are determined at once. The systems of Equation (26) and Equation (27) can be modified to

where

For the solution of these systems, a single point of the laser line is sufficient; however, the use of several points on the laser line and the optimization based on least squares or the singular value decomposition (SVD) can produce more accurate results.

Therefore, with N different positions of the mobile laser, it is possible to determine the actual base distance of the laser diode and its misalignment value through an overdetermined system, calibrating the laser parameters completely:

For the calibration of the fixed laser, the same procedure is performed; however, since the angle of inclination of the fixed laser is constant, the determination of the apparent base distance, b’, is sufficient.
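The overdetermined fit over N laser positions can be illustrated with a least-squares sketch. It assumes that the correction of Equation (8) has the linear form b’ = b + d·cot(θ), so that each laser position contributes one row [1, cot(θi)] to the system. This form, the sign of d, and the function name are assumptions and should be checked against Equation (8).

```python
import numpy as np


def fit_base_and_misalignment(cot_thetas, apparent_bases):
    """Least-squares estimate of (b, d) from b'_i = b + d * cot(theta_i)."""
    c = np.asarray(cot_thetas, dtype=float)
    A = np.column_stack([np.ones_like(c), c])     # one row per laser position
    y = np.asarray(apparent_bases, dtype=float)
    solution, *_ = np.linalg.lstsq(A, y, rcond=None)
    return float(solution[0]), float(solution[1])


# Example with synthetic, noise-free data (b = 80 mm, d = 2.5 mm).
cots = [0.3, 0.5, 0.8, 1.1]
bases = [80.0 + 2.5 * c for c in cots]
b_est, d_est = fit_base_and_misalignment(cots, bases)
```

With at least two distinct angular positions the system has full column rank, in contrast to the single-position case discussed for Equation (27).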

The entire calibration of the optical system can be summarized through the algorithms illustrated in Figure 10 and Figure 11.

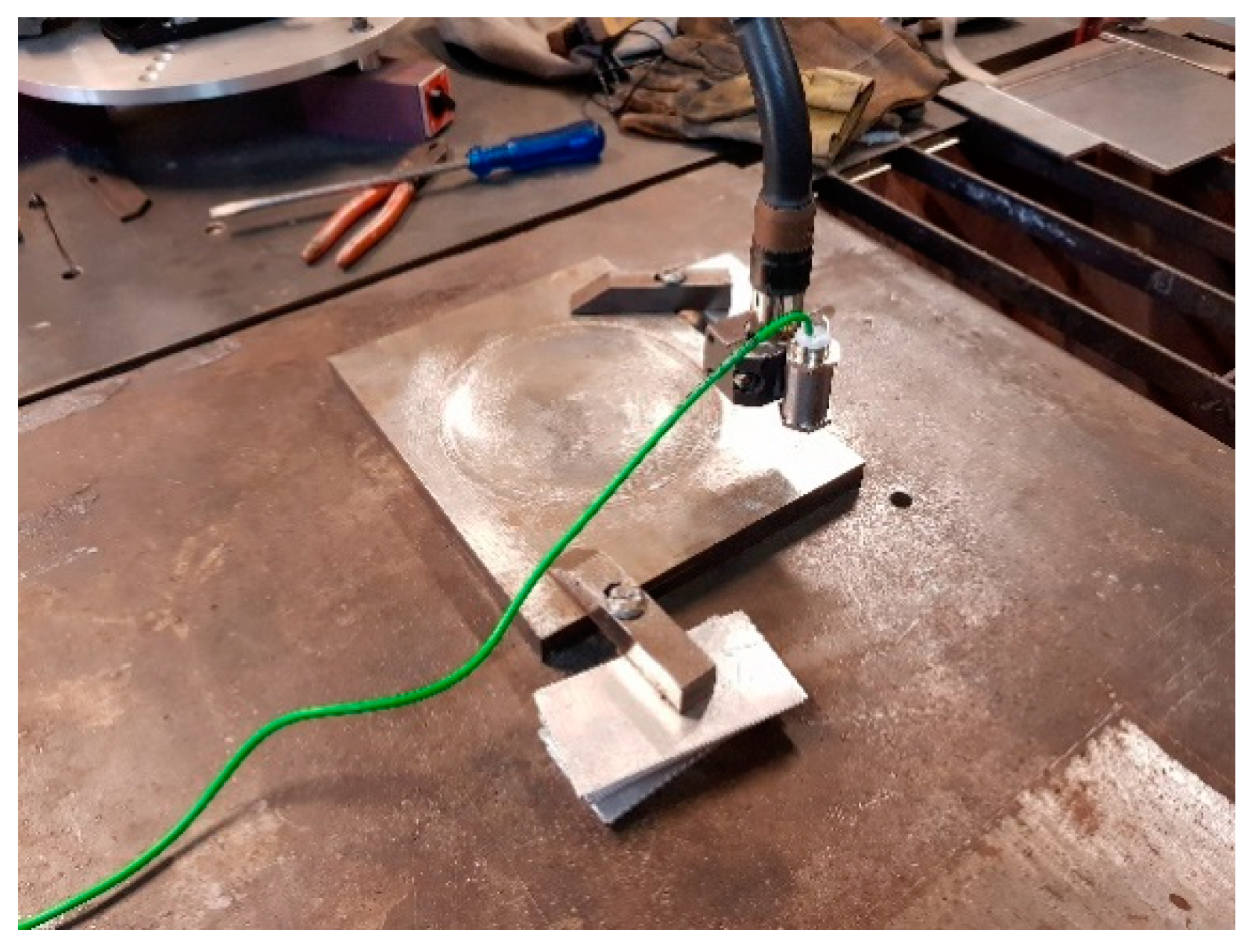

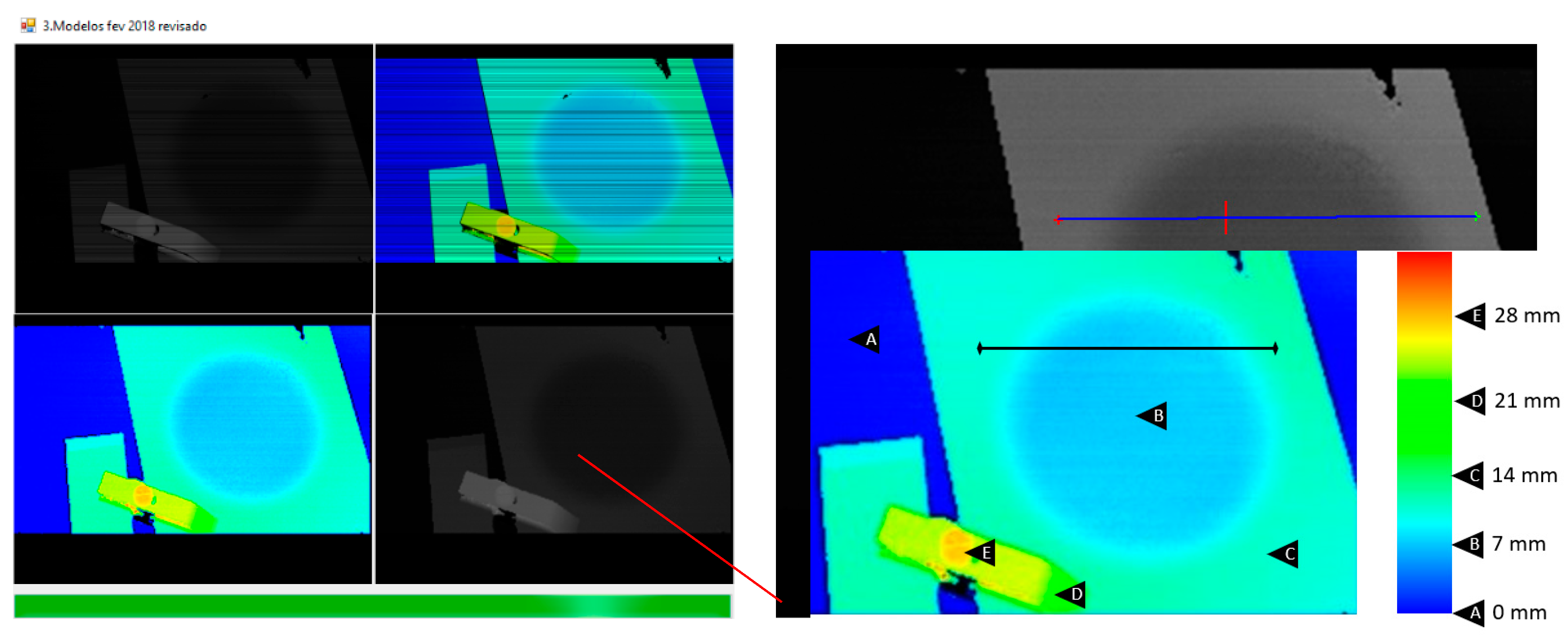

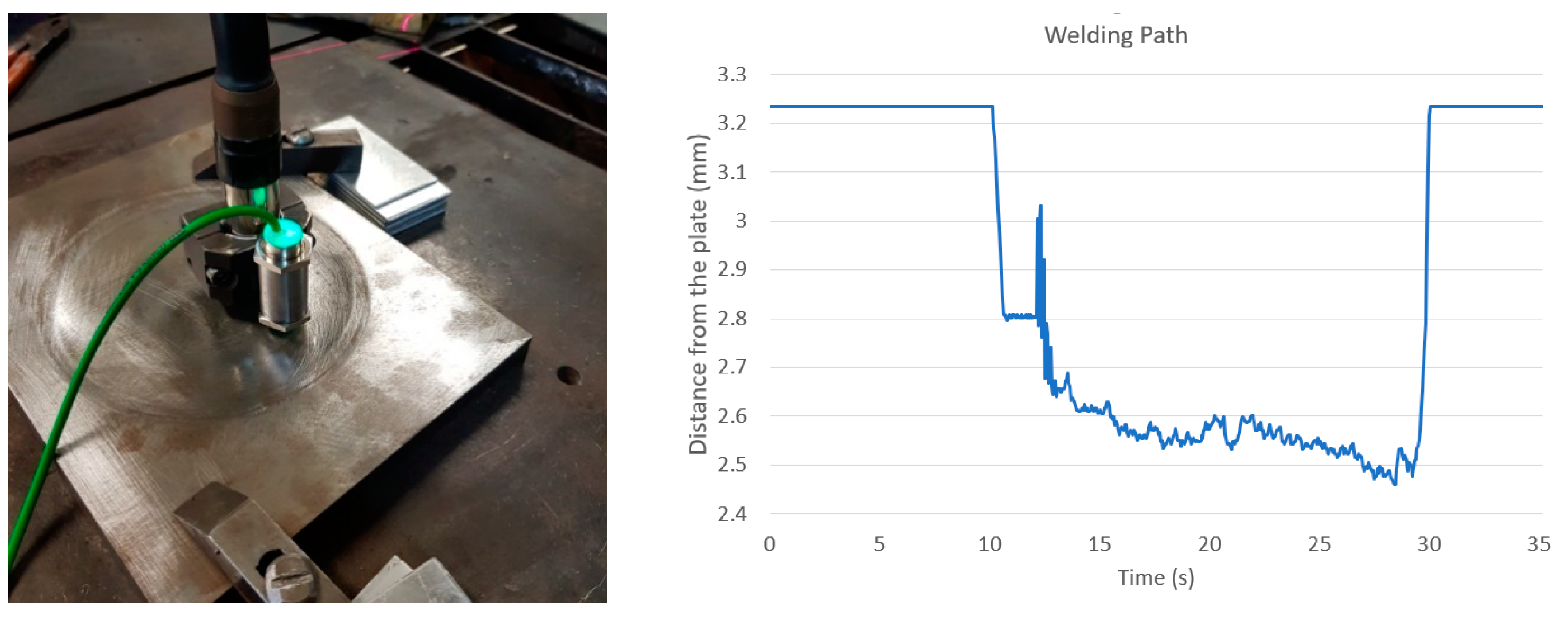

4. Calibration of the Sensor Position

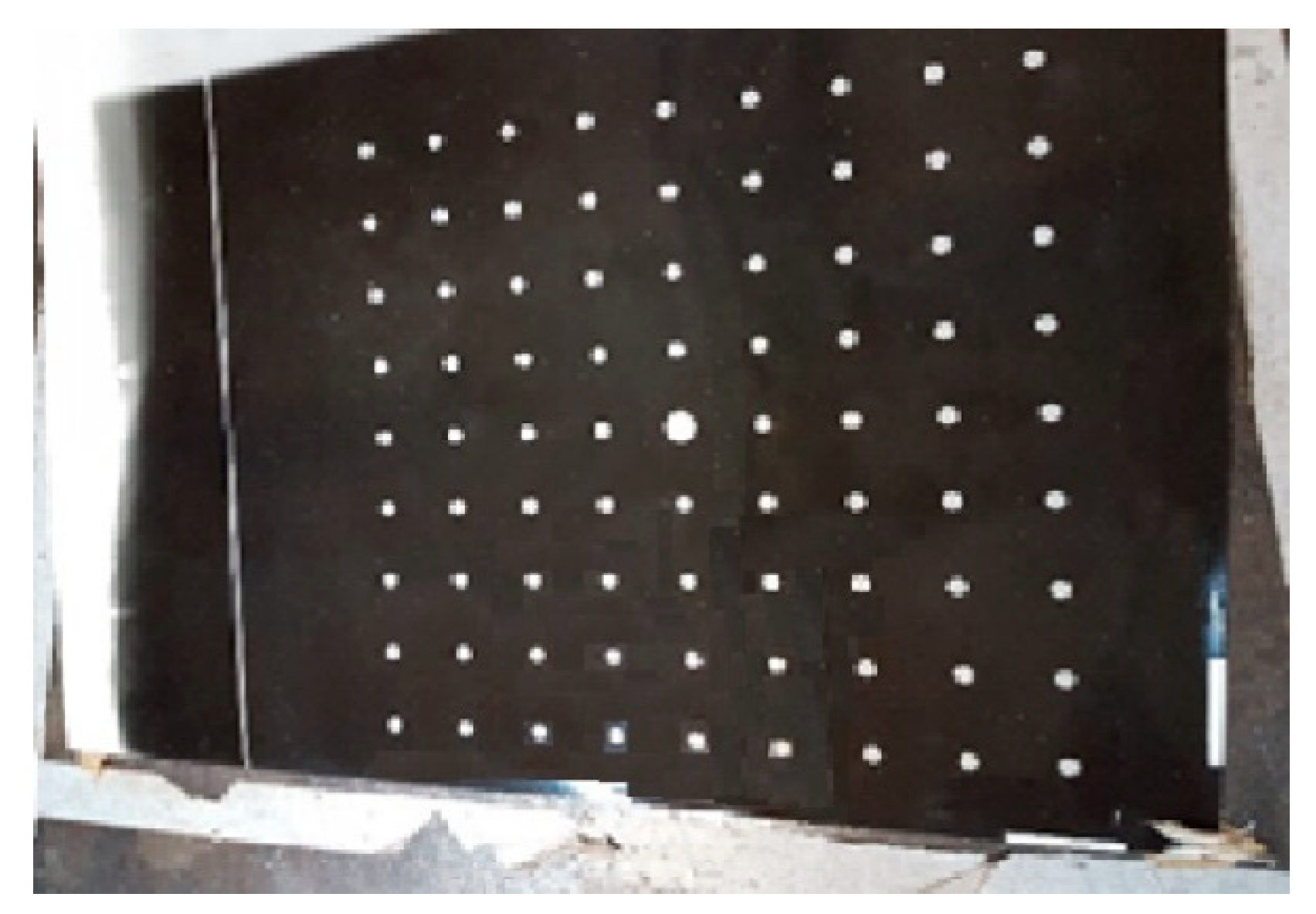

The 3D laser scanning sensor based on triangulation developed in this research is intended to produce 3D maps of the surfaces of hydraulic turbine blades. The sensor must be mounted and fixed on the robot arm to be moved over the surfaces by the robot. Therefore, for the 3D coordinates of the map to be assigned with respect to the controller coordinate system at the robot base, the sensor needs to have its position and orientation expressed in the robot base coordinate system previously determined.

The process to determine the sensor position is accomplished by moving the robotic arm with the sensor attached on it over a gage block of known dimensions, such that the range images represented in the respective 3D camera coordinates are obtained and recorded. Subsequently, the robot has its end-effector (weld torch) positioned at various point positions on the gage block surface and the robot coordinates are recorded and related to the coordinates of the same position point expressed in the camera coordinate system of the map.

From several point positions, the transformation between the camera coordinate system and the robot base coordinate system can be obtained and used in the parameter identification routine described in the next sections.
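For orientation, a closed-form rigid registration between corresponding points is sketched below. This is the SVD-based (Kabsch) method, given only as a simple alternative for obtaining an initial camera-to-base transformation. It is not the parameter identification routine used in this work, and the function name and data are hypothetical.

```python
import numpy as np


def rigid_transform(cam_pts, base_pts):
    """Find R, t minimizing sum ||R @ p_cam + t - p_base||^2 (Kabsch method)."""
    P = np.asarray(cam_pts, dtype=float)
    Q = np.asarray(base_pts, dtype=float)
    pc, qc = P.mean(axis=0), Q.mean(axis=0)
    H = (P - pc).T @ (Q - qc)                    # cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    # Force a proper rotation (determinant +1), not a reflection.
    S = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ S @ U.T
    t = qc - R @ pc
    return R, t


# Example: synthetic correspondences from a known transformation.
a = np.deg2rad(30.0)
R_true = np.array([[np.cos(a), -np.sin(a), 0.0],
                   [np.sin(a), np.cos(a), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([100.0, -50.0, 300.0])
cam = np.array([[0, 0, 0], [10, 0, 0], [0, 20, 0], [0, 0, 30], [5, 5, 5]], float)
base = cam @ R_true.T + t_true
R_est, t_est = rigid_transform(cam, base)
```

At least three non-collinear point pairs are needed for the rotation to be determined.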

4.1. Robot Forward Kinematic Model

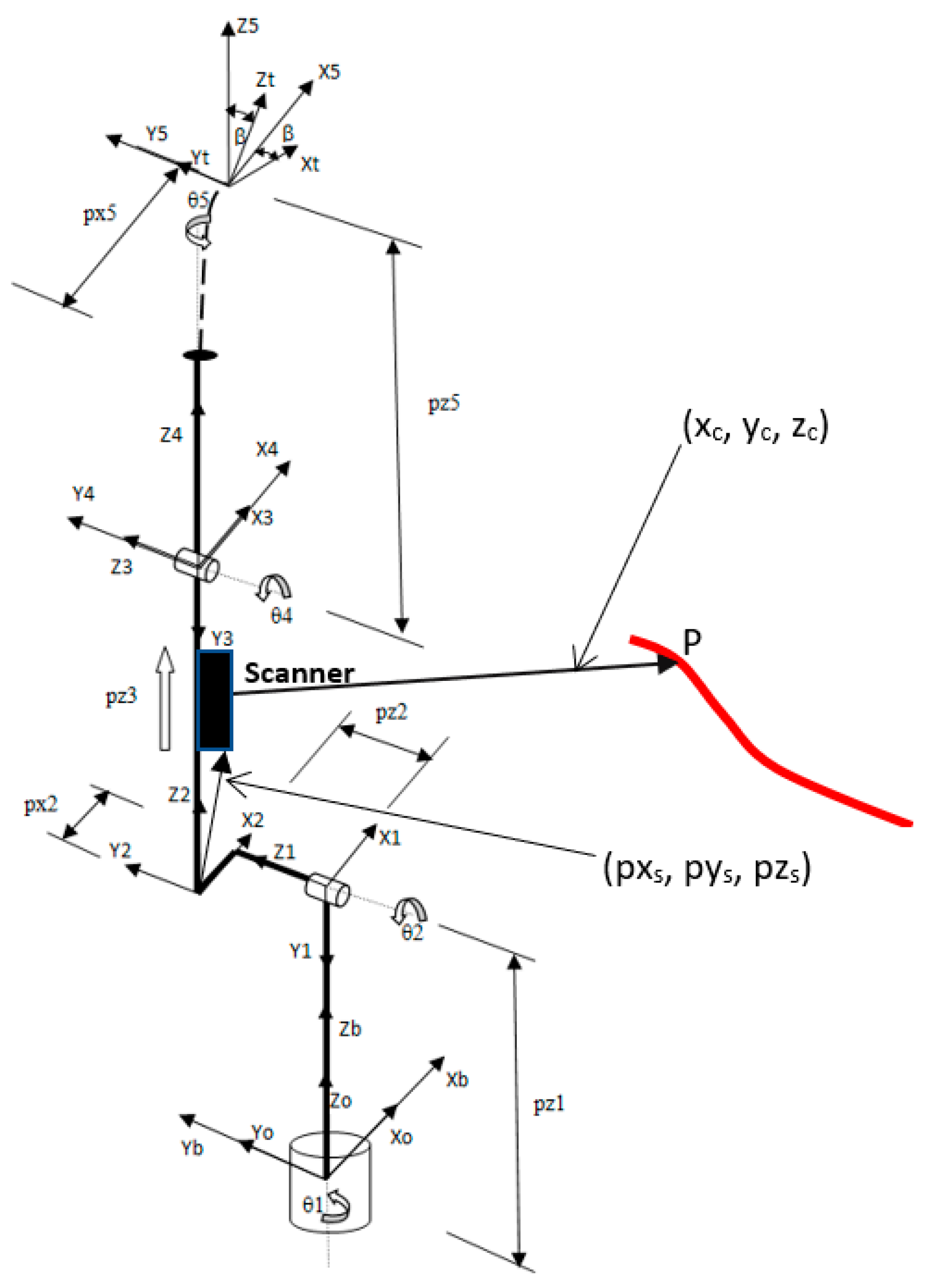

Considering the robot model shown in Figure 12, homogeneous transformation matrices that relate coordinate frames from the robot base (b) to the robot torch/tool (t) can be formulated as follows:

where

is the homogeneous transformation between two successive joint coordinate frames.

The transformations shown in Equation (33) can be formulated with only 4 elementary motions as proposed by the Denavit–Hartenberg (D–H) convention [27] as below:

where θ and α are rotation parameters about the Z and X axes, respectively, and d and l are translation parameters along the Z and X axes, respectively. The application of Equation (34) to each of the consecutive robot joint frames by using the geometric parameters shown in Figure 12 produces the general homogeneous transformation of the manipulator.
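A link transformation built from these four elementary motions can be sketched as follows. The sketch assumes the standard D–H ordering Rot(Z, θ)·Trans(Z, d)·Trans(X, l)·Rot(X, α); the ordering in Equation (34) may differ, and the function names are hypothetical.

```python
import numpy as np


def dh_transform(theta, d, l, alpha):
    """Homogeneous link transform Rot(Z,theta) Trans(Z,d) Trans(X,l) Rot(X,alpha)."""
    ct, st = np.cos(theta), np.sin(theta)
    ca, sa = np.cos(alpha), np.sin(alpha)
    return np.array([
        [ct, -st * ca, st * sa, l * ct],
        [st, ct * ca, -ct * sa, l * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ])


def forward_kinematics(dh_rows):
    """Chain the link transforms; each row is (theta, d, l, alpha) in radians/mm."""
    T = np.eye(4)
    for theta, d, l, alpha in dh_rows:
        T = T @ dh_transform(theta, d, l, alpha)
    return T


# Example: two planar links of unit length with joint angles +90 and -90 degrees.
T_tool = forward_kinematics([(np.pi / 2, 0.0, 1.0, 0.0),
                             (-np.pi / 2, 0.0, 1.0, 0.0)])
```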

The entries of the general manipulator transformation, according to Equation (33) and excluding the rotation of the torch tip coordinate frame by the angle β (Figure 12), are formulated below as the robot forward kinematic equations:

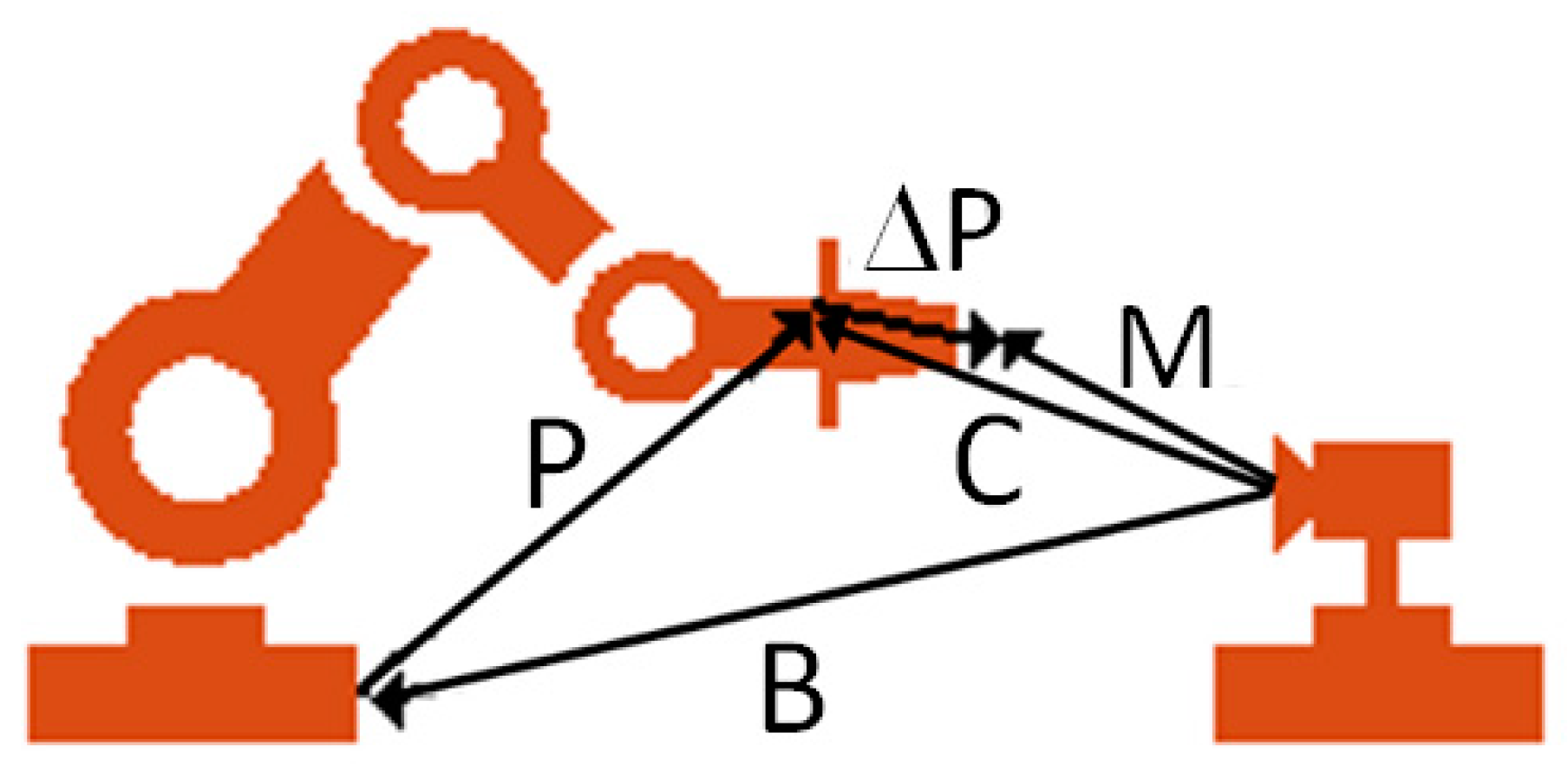

4.2. Parameter Identification Modeling

Robot calibration is the process of fitting a complex nonlinear model, consisting of a parametrized kinematic model with error parameters, to experimental data. The error parameters are identified by minimizing an error function [17].

A robot kinematic model consists of a set of nonlinear functions relating joint variables and link geometric parameters to the robot end-effector pose, such as in

$$P = T_1 \, T_2 \cdots T_n$$

where Ti are the link transformations defined in Equation (34), P is the manipulator transformation, and n is the number of links. If the kinematic model uses a convention of 4 elementary transformations per link, like the D–H convention, the manipulator pose error can be expressed as (from Equation (34))

$$\Delta P = \frac{\partial P}{\partial \theta}\,\Delta\theta + \frac{\partial P}{\partial \alpha}\,\Delta\alpha + \frac{\partial P}{\partial d}\,\Delta d + \frac{\partial P}{\partial l}\,\Delta l$$

where θ, α, d, and l are geometric parameters that relate a robot joint frame to the next joint frame; d and l are translation parameters, and θ and α are rotation parameters about two of the three coordinate axes.

The derivatives shown in Equation (48) characterize the partial contribution of each geometric error parameter of each joint to the total pose error of the robot’s end-effector, which can be measured with proper measuring devices. Considering the measured robot poses (M) and the transformation from the measurement system frame to the robot base (B), ΔP is the vector shown in Figure 13.

The transformation, B, can also be considered as a virtual link belonging to the robot model that must be identified. So, the pose error, ΔP, can be calculated with Equation (49) as [28]

The manipulator transformation, P, is updated each time a new set of geometric error parameters is fitted through an iterative process, and, when the calibration process finishes, P has the minimum deviation from the measured poses.

Equation (48) can be rewritten in a matrix form for m measured poses in the form of a Jacobian matrix comprising the partial derivatives of P, such that Δx is the vector of the model parameter errors as in Equation (49):

The Jacobian matrix size depends on the number of measured poses in the robot workspace (m) and on the number of error parameters in the model (n). The matrix order is ηm × n, such that η is the number of space degrees of freedom (3 position and 3 orientation parameters). Then, the calibration problem can be set as the solution of the nonlinear system J·x = b.

A widely used method to solve this type of system is the Squared Sum Minimization (SSM). Several other methods are discussed extensively with their related algorithms in [22]. A successful method for the solution of nonlinear least squares problems in practice is the Levenberg–Marquardt algorithm. Many versions of this algorithm have proved to be globally convergent. The algorithm is an iterative solution method that modifies the Gauss–Newton method slightly to reduce numerical divergence problems.
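The damped iteration can be illustrated on a toy problem. The sketch below is a basic Levenberg–Marquardt loop (solve (JᵀJ + λI)·Δx = −Jᵀr, accept the step if the squared error decreases, otherwise increase λ) applied to a hypothetical planar two-link arm whose link lengths are identified from tip positions. The arm model, function names, and values are illustrative assumptions and not the robot model of this work.

```python
import numpy as np


def arm_positions(lengths, joints):
    """Tip positions (all x, then all y) of a planar 2-link arm."""
    l1, l2 = lengths
    q1, q2 = joints[:, 0], joints[:, 1]
    x = l1 * np.cos(q1) + l2 * np.cos(q1 + q2)
    y = l1 * np.sin(q1) + l2 * np.sin(q1 + q2)
    return np.concatenate([x, y])


def arm_jacobian(joints):
    """Partial derivatives of the tip positions with respect to (l1, l2)."""
    q1, q2 = joints[:, 0], joints[:, 1]
    return np.vstack([
        np.column_stack([np.cos(q1), np.cos(q1 + q2)]),
        np.column_stack([np.sin(q1), np.sin(q1 + q2)]),
    ])


def levenberg_marquardt(residual, jacobian, x0, lam=1e-3, iters=50):
    """Minimize ||residual(x)||^2 with a simple damping schedule."""
    x = np.asarray(x0, dtype=float)
    r = residual(x)
    cost = float(r @ r)
    for _ in range(iters):
        J = jacobian(x)
        step = np.linalg.solve(J.T @ J + lam * np.eye(x.size), -J.T @ r)
        r_new = residual(x + step)
        cost_new = float(r_new @ r_new)
        if cost_new < cost:      # accept the step and relax the damping
            x, r, cost = x + step, r_new, cost_new
            lam *= 0.1
        else:                    # reject the step and increase the damping
            lam *= 10.0
    return x


# Example: recover link lengths (300.5, 249.2) mm from nominal (300, 250) mm.
joints = np.deg2rad([[0, 30], [45, -60], [90, 45], [-30, 120]])
measured = arm_positions((300.5, 249.2), joints)
fitted = levenberg_marquardt(lambda x: arm_positions(x, joints) - measured,
                             lambda x: arm_jacobian(joints),
                             [300.0, 250.0])
```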

4.3. Algorithm for the Transformation of Coordinates from the Sensor to the Robot Base

Input: The matrix with all the coordinates of the map points scanned by the sensor in a scan, expressed in the sensor coordinate system. Each point coordinate is transformed to coordinates represented in the robot base coordinate system with the homogeneous transformation equations below:

where,

A0P = matrix representing the position of the scanned object point (P) in the robot base coordinate system (0);

A01 = matrix representing the position of Joint 1 (1) in the robot base coordinate system (0);

A12 = matrix representing the position of Joint 2 (2) in the Joint 1 coordinate system (1);

A2S = matrix representing the position of the sensor (S) in the Joint 2 coordinate system (2) (pxs, pys, and pzs) (see Figure 12);

ASP = matrix representing the position of the scanned point (P) in the sensor coordinate system (S) (xc, yc, and zc) (see Figure 12).

The homogeneous transformations are shown below:

where symbols are described in Section 4.1 and:

θ1 = Joint 1 position when scanning, recorded from the robot controller;

θ2 = Joint 2 position when scanning, recorded from the robot controller;

pz3 = Joint 3 position when scanning, recorded from the robot controller;

(xc, yc, zc) = object point coordinates, P, represented in the sensor coordinate system.

The constant parameters were previously determined from a robot calibration process, and details about the calibration process of this robot can be seen in [28]. The pertinent results are listed below:

α1 = −89.827°;

α2 = 90°;

pz1 = 275 mm;

pz2 = 104.718 mm;

pz3 = joint variable position in the controller + 103.677 mm;

px1 = −0.059 mm;

px2 = 33.389 mm;

θ1 = joint variable position in the controller + 0.1097°;

θ2 = joint variable position in the controller + 89.602°.

The parameters to be identified are pxs, pys, and pzs, and the results of the identification routine are presented in the homogeneous transformation A2S:

Output: The matrix with all the object point coordinates of a scan expressed in the robot base coordinate system. These coordinate values must be input into the robot controller so that, through the forward kinematics, the robot torch reaches the programmed trajectory points.
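The input/output structure of this algorithm can be sketched as a batch operation on the scanned map. The sketch assumes that the homogeneous transformations (for example A01, A12, and A2S) are already available as 4 × 4 arrays and that the map is an N × 3 array of sensor coordinates. The function name `to_base` and the example matrices are hypothetical and do not reproduce the calibrated values listed above.

```python
import numpy as np


def to_base(points_sensor, transforms):
    """Map N x 3 sensor-frame points to the base frame.

    `transforms` is the ordered list of 4 x 4 homogeneous matrices from the
    base to the sensor, multiplied left to right.
    """
    T = np.eye(4)
    for A in transforms:
        T = T @ A
    pts = np.asarray(points_sensor, dtype=float)
    homog = np.hstack([pts, np.ones((pts.shape[0], 1))])   # N x 4
    return (homog @ T.T)[:, :3]


# Example: a 275 mm offset along Z followed by a 90 degree rotation about Z.
offset = np.eye(4)
offset[2, 3] = 275.0
rot_z = np.array([[0.0, -1.0, 0.0, 0.0],
                  [1.0, 0.0, 0.0, 0.0],
                  [0.0, 0.0, 1.0, 0.0],
                  [0.0, 0.0, 0.0, 1.0]])
base_points = to_base([[1.0, 0.0, 0.0], [0.0, 2.0, 5.0]], [offset, rot_z])
```

Composing the chain once and applying it to the whole map avoids repeating the matrix products for every scanned point.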