1. Introduction

Micro air vehicles (MAVs) are a type of drone approximately the size of a person’s hand. This property makes them easy to carry and allows them to be flown indoors. One of the fundamental problems of autonomous indoor flight is localization, and the problem is made more severe by the strict size and weight restrictions of MAVs. Thus, how to use low-cost, lightweight sensors to localize MAVs in complex and changing indoor environments is an active and challenging research topic.

Many indoor localization technologies have been developed to achieve indoor localization, such as localization based on ranging sensors [

1,

2,

3], Bluetooth [

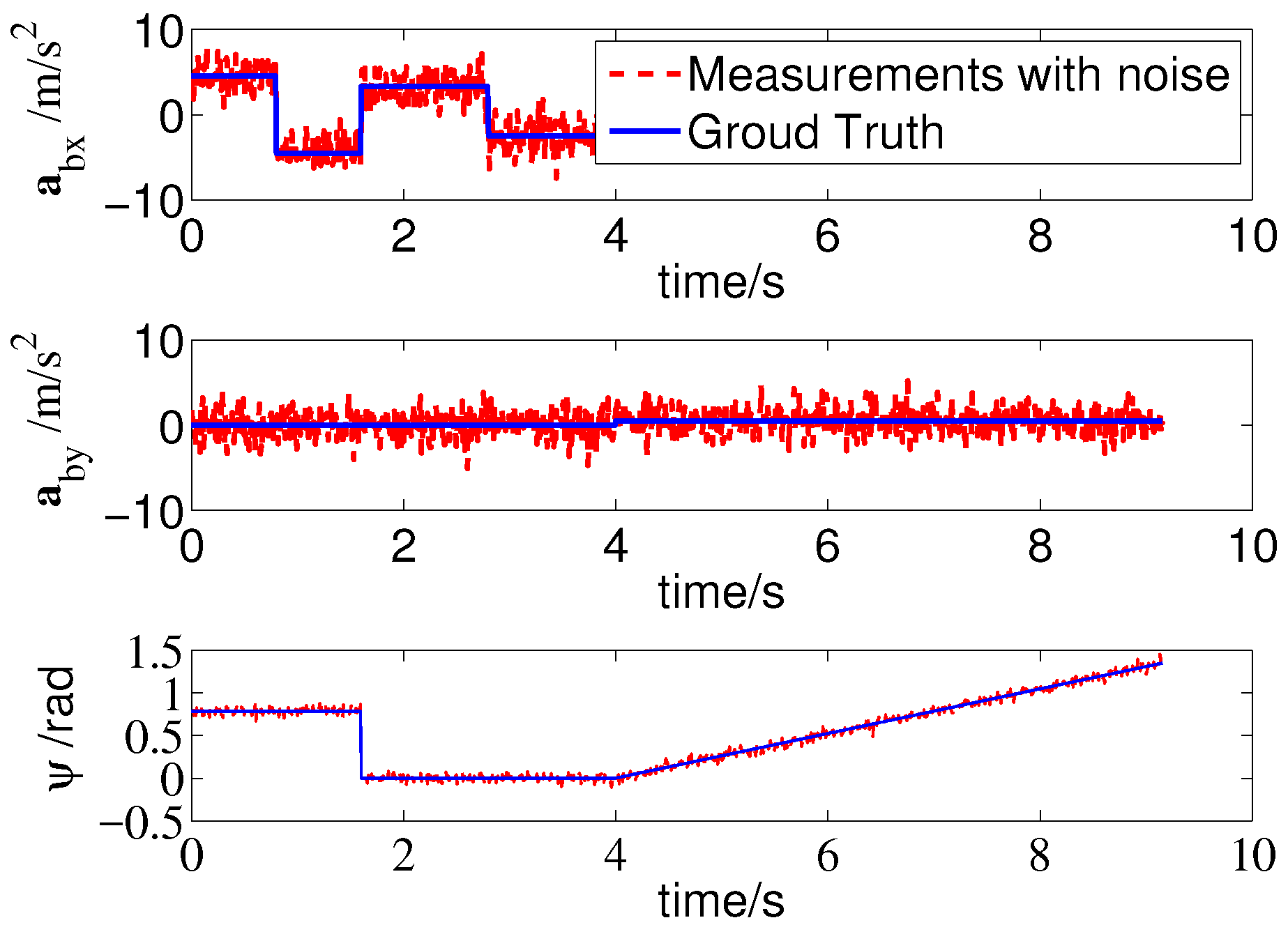

4], inertial measurement units (IMUs), cameras, ultra wide band (UWB) [

5], wireless local area network (WLAN) [

6], ZigBee [

7] and radio frequency sensors [

8]. In this paper, the above approaches can be divided into two types according to whether the main localization sensors are placed on the unmanned aerial vehicle (UAV): onboard-sensor-based approaches and offboard-sensor-based approaches. The offboard-sensor-based approaches, such as Cricket developed by MIT, require some equipment, such as the beacons or motion capture cameras, to be prearranged in the UAV’s flight environment; thus, such approaches have good positioning accuracy in known environments.

Onboard-sensor-based approaches, which do not require the assistance of external devices, can be applied in unknown environments. In [9], IMU and lidar data are used as inputs to an odometry algorithm, and the position of the UAV and the map are estimated simultaneously. In [10], a landmark-based method is introduced in which simply shaped objects, such as walls, corners and edges, are chosen as landmarks; 16 ultrasonic sensors mounted around a mobile robot identify and measure the distance to these landmarks, and the robot’s position is obtained once two geometrical elements have been successfully identified. In [11], scale-invariant feature transform (SIFT) features are extracted and matched to construct a nonlinear least-squares problem, which is solved for the UAV’s pose by the Gauss-Newton method, with an IMU providing the initial estimate. In [12], the Harris corner detector is used to detect corner points, and the corner points of two adjacent images are matched to build an objective function, which is minimized with the Levenberg-Marquardt (LM) algorithm to obtain the pose of the UAV. In [13], a ceiling lamp is used as a landmark; by extracting feature points on the lamp and combining them with the landmark information stored in a database, real-time localization is achieved. In [14], lidar data are segmented using k-d trees, and the point-to-line iterative closest point (PLICP) algorithm is used to match the point sets of two adjacent scans; an error equation is constructed from the distances between the matched points and solved iteratively for the rotation and translation between the two scans, from which the robot’s position is estimated. In [15], planar objects are used for positioning: the laser data are first segmented and fitted with planes, a variant of the hill-climbing algorithm then matches the planes in two adjacent scans, and finally three successfully matched planes are used to calculate the robot’s location from their geometric relationship.

Given the limited size and payload of MAVs, very few of these approaches are applicable to them. Lidar-based and depth-camera-based approaches are too large or too heavy. Although a monocular or binocular camera can be small and light enough for a MAV, the corresponding image processing hardware cannot, at least at present, be accommodated on a MAV. In comparison, ultrasonic range sensors have clear advantages in size and weight, making them one of the best choices for the localization task.

In [16], several well-known ultrasonic localization systems, including Cricket, BUZZ and Dolphin, are surveyed and compared in terms of performance, accuracy and limitations. The accuracies of these systems range from 1.5 cm to 10 cm; however, they require special application conditions, such as transmitters arranged in the environment, time synchronization and powerful computing capabilities, which makes them hard to apply to MAVs. In [3], an ultrasonic-beacon-based approach is proposed to replace GPS; it consists of several stationary beacons and a mobile beacon and achieves a good balance between weight and accuracy. However, it still requires external devices, i.e., the stationary beacons, which may limit its application. Ref. [2] discusses a possible way to map an unknown indoor environment using three ultrasound modules. Ref. [17] summarizes several commonly used sonar models, such as the centerline model, the occupancy grid model, the polygon model and the arc model. In [18], an improved wedge model of the sonar sensor is given, and a probabilistic measurement model that accounts for sonar uncertainties is defined based on experimental characterization; experiments are conducted on a Pioneer 3-DX robot equipped with 16 Polaroid ultrasonic range finders. However, such approaches require a relatively large number of sensors to achieve satisfactory positioning accuracy, which is impractical for a light MAV.

In this paper, a novel beaconless localization approach is proposed, and a multi-ray ultrasonic sensor model is presented that provides a rapid and accurate approximation of the complex beam pattern of ultrasonic sensors. Only four ultrasonic sensors are used for position estimation. The proposed localization approach is suitable for MAVs in terms of both weight and computational load.

This paper is organized as follows. The MAV platform is presented in Section 2. The multi-ray model of ultrasonic sensors is given in Section 3. The MAV system is modeled in Section 4. Section 5 presents the localization algorithm based on the EKF. Simulation and experimental results validating the proposed algorithm are presented in Section 6, and conclusions are drawn in Section 7.

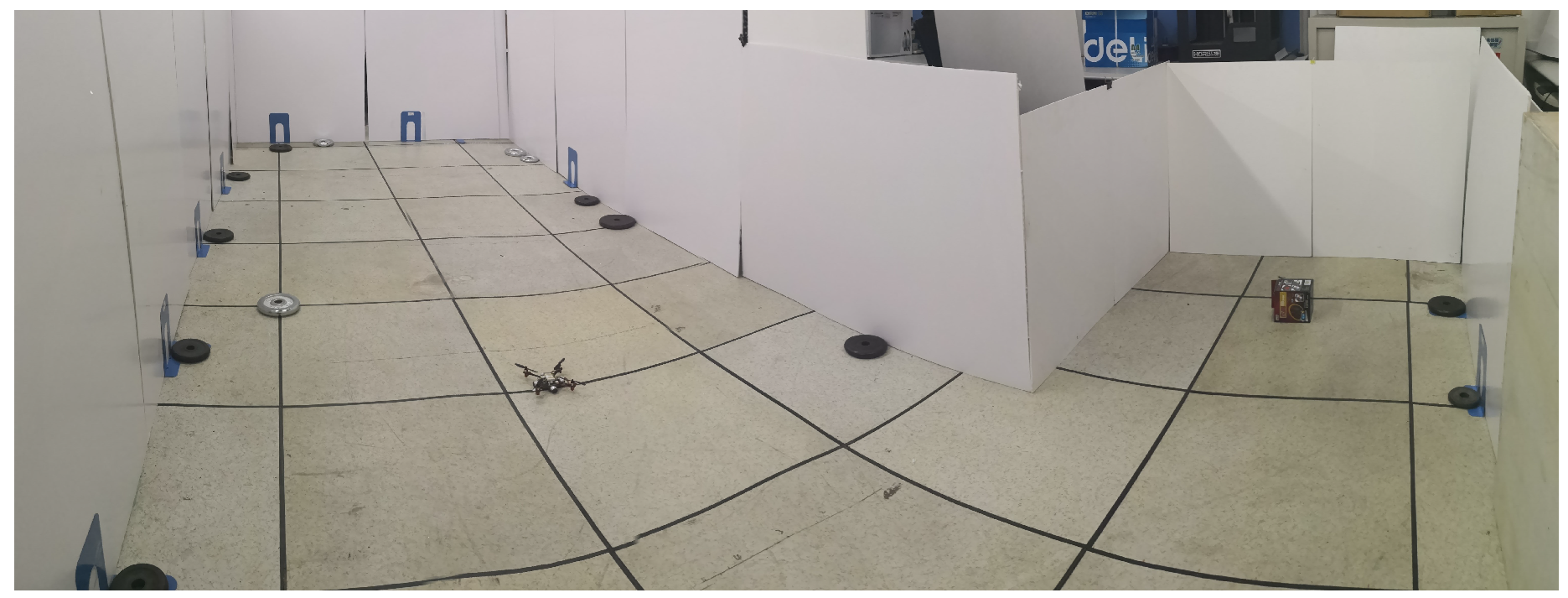

2. The Micro-UAV Platform

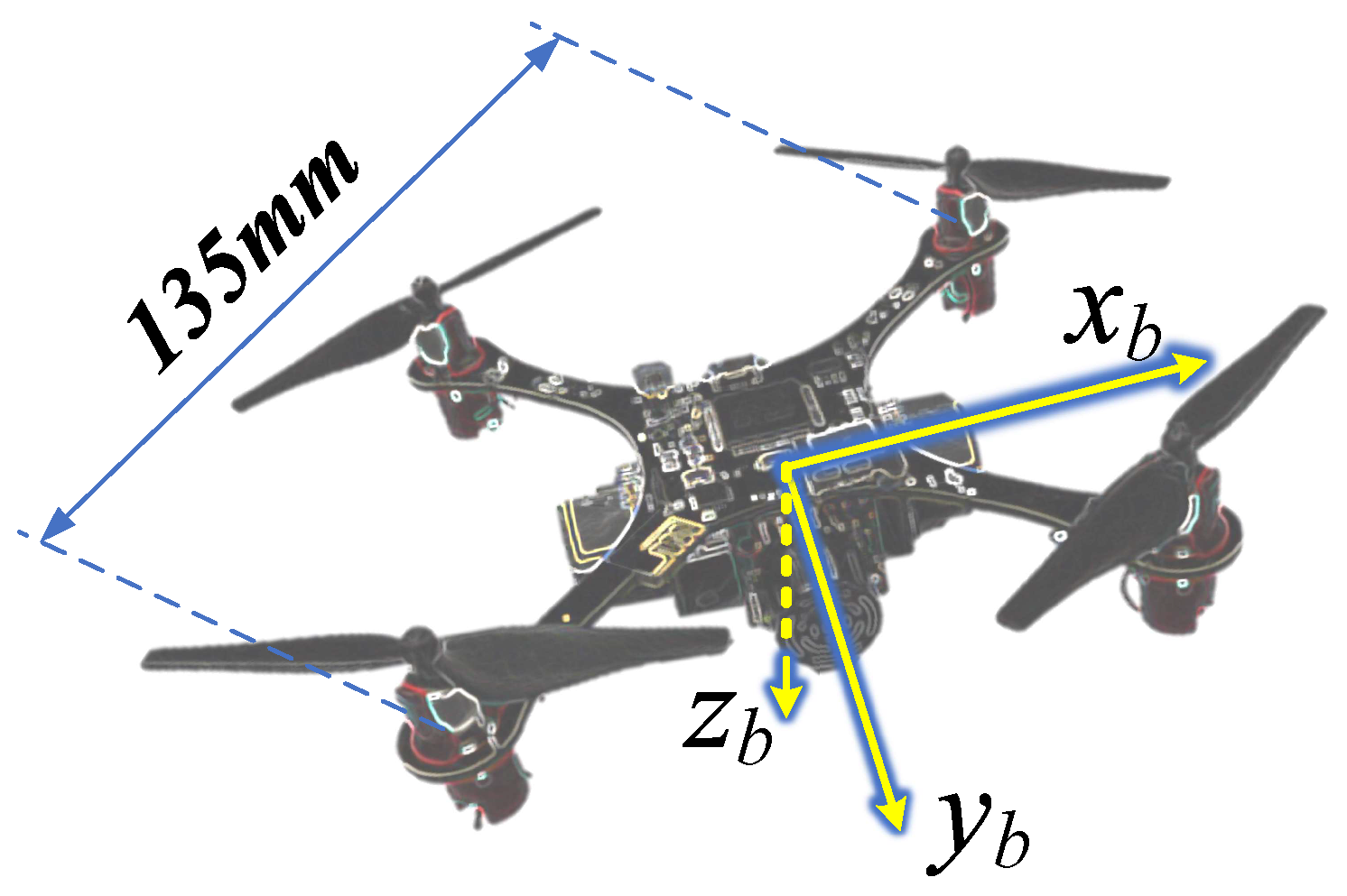

The indoor MAV platform shown in Figure 1 is the second generation of a series developed by our group [19]. The MAV is small and light and can fly for about 4 min on a 400 mAh battery. The platform weighs approximately 75 g, comprising the airframe (15 g), the battery (12 g), four motors and propellers (24 g) and four MB1222 sonar range finders (24 g), and its diagonal (motor-to-motor) length is 135 mm.

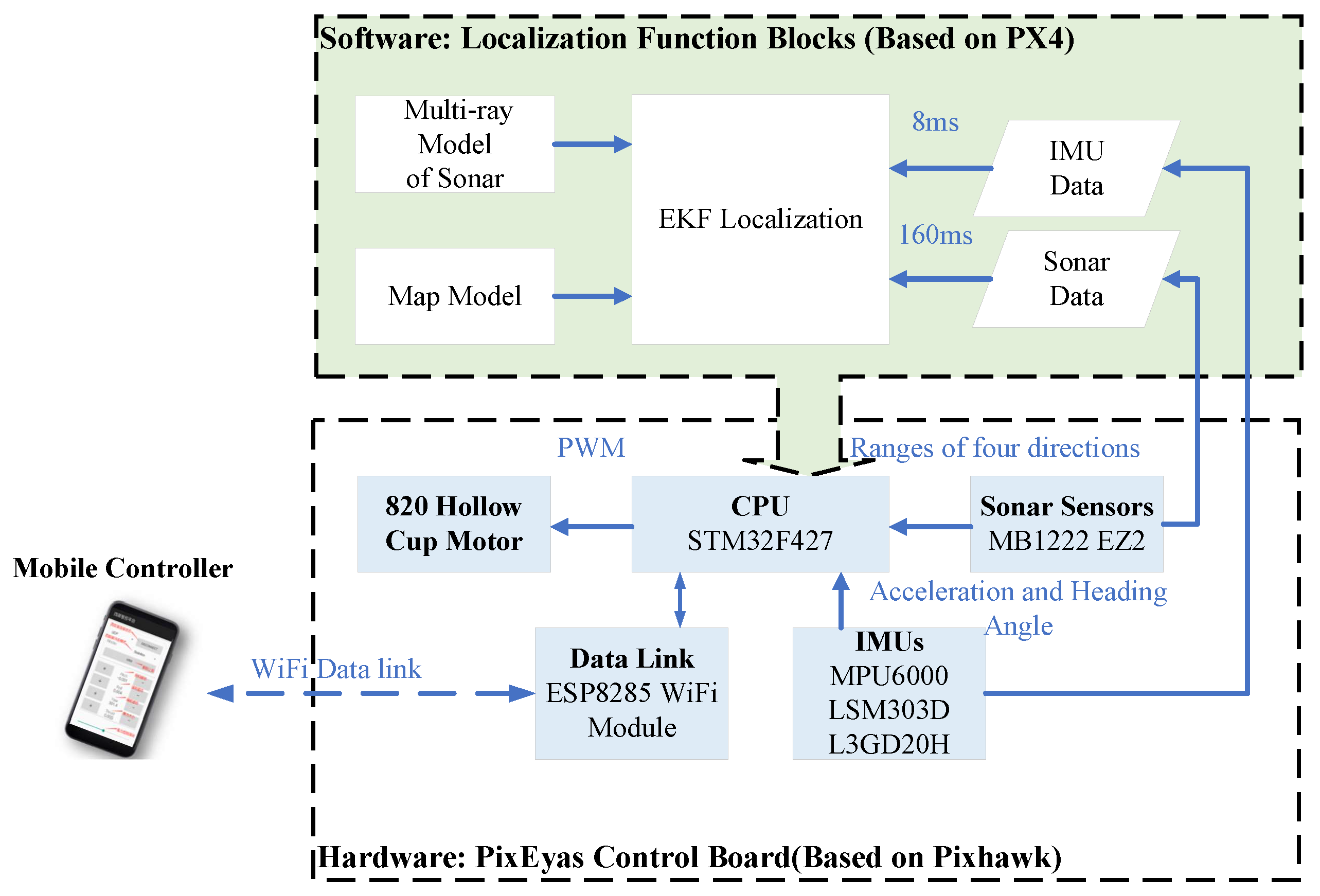

The system architecture of the MAV platform is shown in Figure 2. The lower part of the architecture shows the main hardware components, which are a modified version of the open-source Pixhawk hardware [20]. An ARM STM32F427 microcontroller performs the computation, and an ESP8285 WiFi module communicates with the mobile controller. Four 820 coreless (hollow-cup) motors drive the 55 mm propellers. The angular velocity and acceleration are measured by an MPU6000 IMU, and the heading angle is provided by an LSM303 magnetic sensor; both sensors have a sampling period of 8 ms.

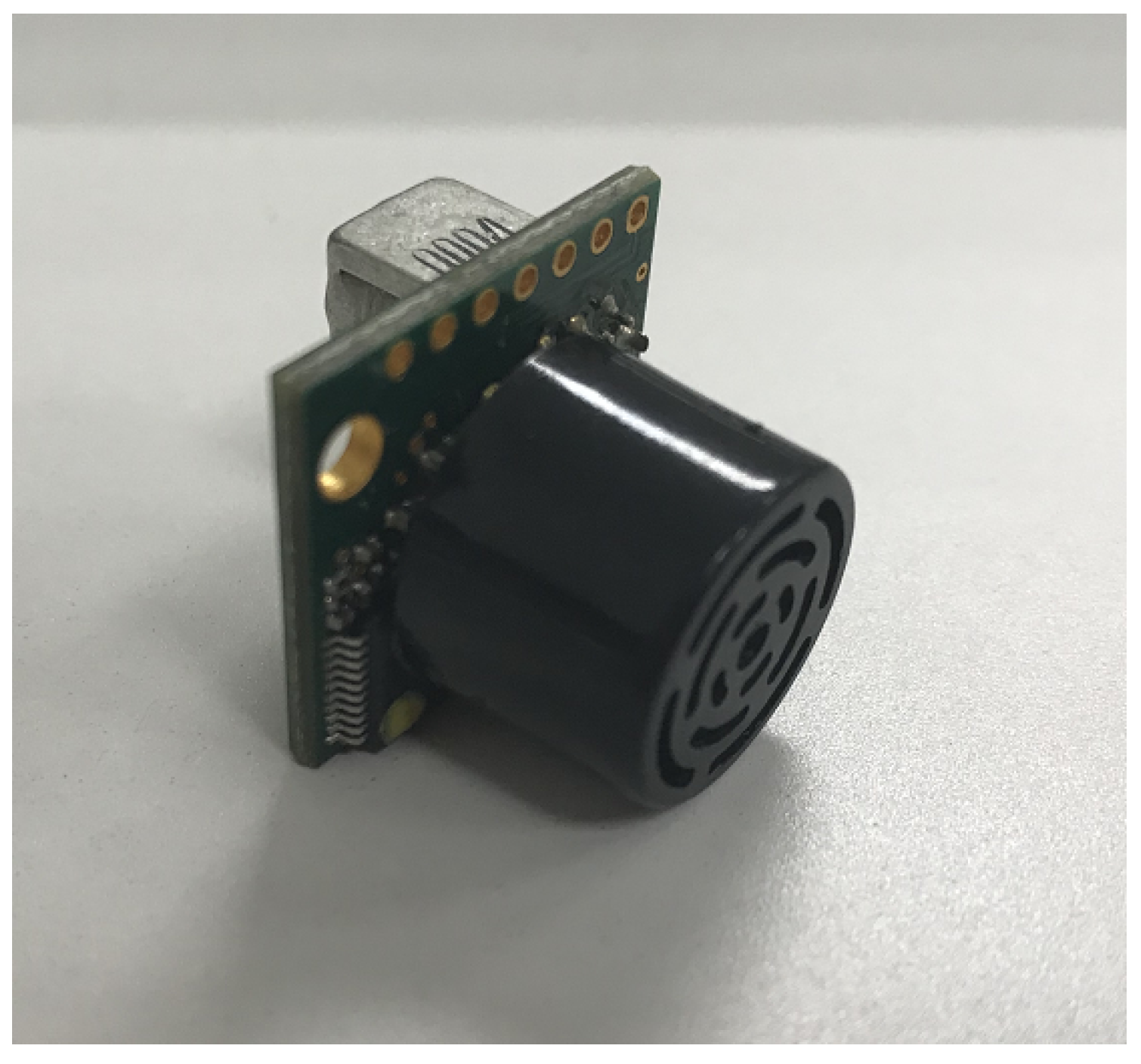

Because of the size and payload limitations, widely used precise distance measurement devices, such as laser range finders and depth cameras, cannot be used on the MAV platform. Instead, four MB1222 I2CXL-MaxSonar-EZ2 range finders are installed on the bottom of the MAV, perpendicular to each other, as shown in Figure 3. Thus, ranges in four directions are provided in a single measurement.

The features of the MB1222 I2CXL-MaxSonar-EZ2 range finder include centimeter resolution, an excellent compromise between sensitivity and side-object rejection, short- to long-distance detection, a measurement range of 20 cm to 765 cm, a read rate of up to 40 Hz and an I2C interface [21]. These features make the sensor well suited to the localization task. The other features of the MAV platform are listed in Table 1.

The flight control board runs the open-source PX4 flight stack, which makes it easy to develop customized tasks and to log all data during flight. The main functions of the proposed localization algorithm are shown in the upper part of Figure 2.

3. Modeling of the Ultrasonic Sensors

Ultrasonic sensors measure distance based on the time of flight and return a range. However, this range is not necessarily the straight-line distance to an obstacle; rather, it is the distance to the point with the strongest reflection, which could be anywhere along the perimeter of the sensor’s beam pattern [17,22]. This makes the modeling of ultrasonic sensors a complex issue, particularly for online computation.

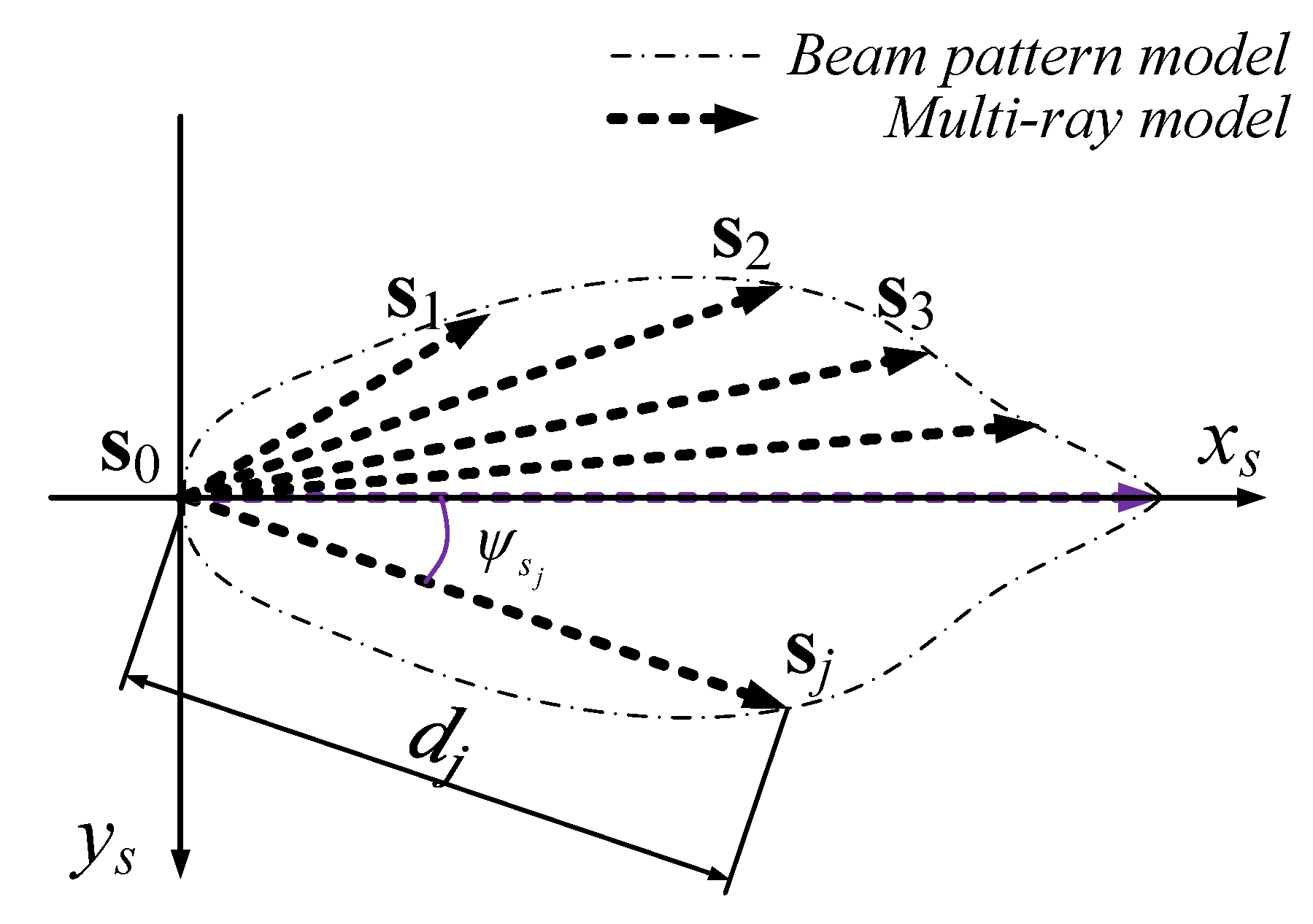

Figure 4 shows the detection area of the MaxSonar MB1222 sonar sensor, which was obtained by placing a plastic plate at predefined grid points in front of the sensor and recording the measurements.

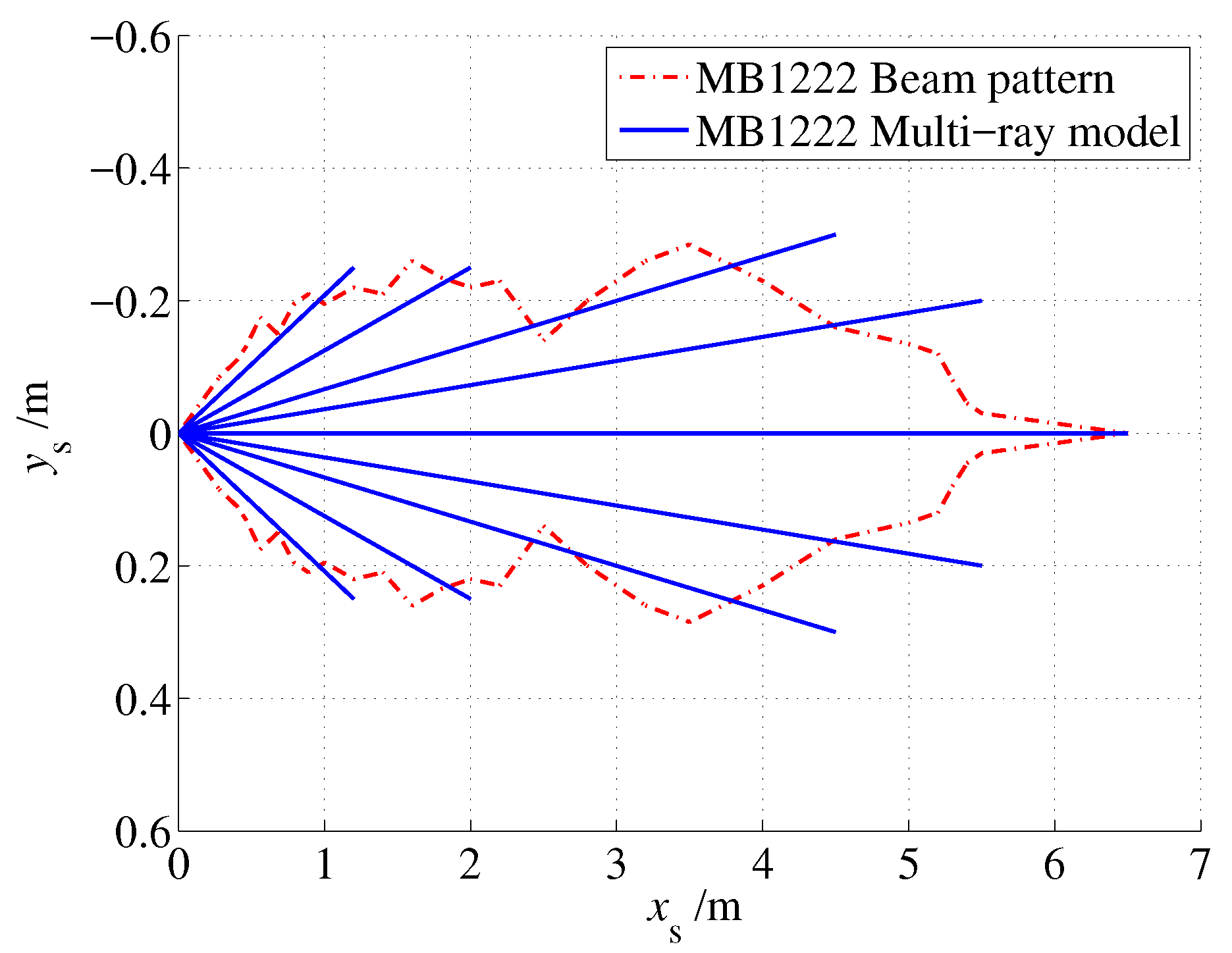

As shown in Figure 4, the 2D beam pattern of the MB1222 sensor was approximated as an irregular polygon. To reduce the computational load of the polygon model, a multi-ray model is proposed, in which the beam pattern is approximated by a group of rays starting from the sensor origin, as shown in Figure 5.

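Then, the ultrasonic 2D multi-ray model $\mathcal{S}$ can be formulated as a ray group:

$$\mathcal{S} = \left\{ \overline{\mathbf{p}_s\mathbf{p}_j} \;\middle|\; j = 1, 2, \ldots, n \right\} \tag{1}$$

where $\mathbf{p}_s$ represents the sonar sensor’s position and $\mathbf{p}_j$ is the end point of the $j$-th ray. Thus, for a known obstacle $\mathcal{O}$, the model output $l$ is obtained through a two-step calculation. First, the set of all intersections of $\mathcal{S}$ and $\mathcal{O}$ is calculated as

$$\mathcal{I} = \mathcal{S} \cap \mathcal{O} \tag{2}$$

and then $l$ is given as

$$l = \begin{cases} \min\limits_{\mathbf{q} \in \mathcal{I}} \lVert \mathbf{q} - \mathbf{p}_s \rVert, & \mathcal{I} \neq \emptyset \\ l_{\max}, & \mathcal{I} = \emptyset \end{cases} \tag{3}$$

Equation (3) follows the principle that the ultrasonic sensor reports the nearest of all detections, and a predefined value $l_{\max}$ is returned if there is no intersection between $\mathcal{S}$ and $\mathcal{O}$.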
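The two-step calculation described above (collect the intersections, then take the nearest one as in Equation (3)) can be sketched in Python as follows. This is a minimal illustration, not the authors’ implementation: obstacles are assumed to be lists of 2D line segments, parallel ray/segment pairs are treated as non-intersecting, and the function names, ray endpoints and `l_max` value in the usage example are hypothetical rather than the MB1222 geometry of Figure 6.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]


def segment_intersection(a: Segment, b: Segment) -> Optional[Point]:
    """Return the intersection point of segments a and b, or None if they do not meet."""
    (x1, y1), (x2, y2) = a
    (x3, y3), (x4, y4) = b
    denom = (x2 - x1) * (y4 - y3) - (y2 - y1) * (x4 - x3)
    if abs(denom) < 1e-12:
        # Parallel or collinear segments are treated as non-intersecting (simplification).
        return None
    t = ((x3 - x1) * (y4 - y3) - (y3 - y1) * (x4 - x3)) / denom
    u = ((x3 - x1) * (y2 - y1) - (y3 - y1) * (x2 - x1)) / denom
    if 0.0 <= t <= 1.0 and 0.0 <= u <= 1.0:
        return (x1 + t * (x2 - x1), y1 + t * (y2 - y1))
    return None


def multi_ray_output(p_s: Point, ray_ends: Sequence[Point],
                     obstacle: Sequence[Segment], l_max: float) -> float:
    """Model output l: distance to the nearest intersection, or l_max if there is none."""
    # Step 1: collect all intersections between the rays and the obstacle segments.
    distances: List[float] = []
    for p_j in ray_ends:
        for seg in obstacle:
            q = segment_intersection((p_s, p_j), seg)
            if q is not None:
                distances.append(math.dist(p_s, q))
    # Step 2: the sensor reports the nearest detection (Equation (3)).
    return min(distances) if distances else l_max


# Usage with a hypothetical three-ray model (2 m rays at -20, 0 and +20 degrees).
rays = [(2.0 * math.cos(math.radians(a)), 2.0 * math.sin(math.radians(a))) for a in (-20, 0, 20)]
wall = [((1.0, -5.0), (1.0, 5.0))]
print(multi_ray_output((0.0, 0.0), rays, wall, l_max=7.65))  # 1.0 (the central ray hits first)
```

Only segment/segment intersection tests are needed, which is why a ray group is cheaper to evaluate online than a general polygon beam model.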

Based on the beam pattern of the MaxSonar MB1222 sonar sensor, the multi-ray model was constructed as shown in Figure 6, with nine rays approximating the detection zone of the MB1222. Note that the far ends of the rays were placed slightly beyond the edge of the zone to obtain better coverage of the detection zone.

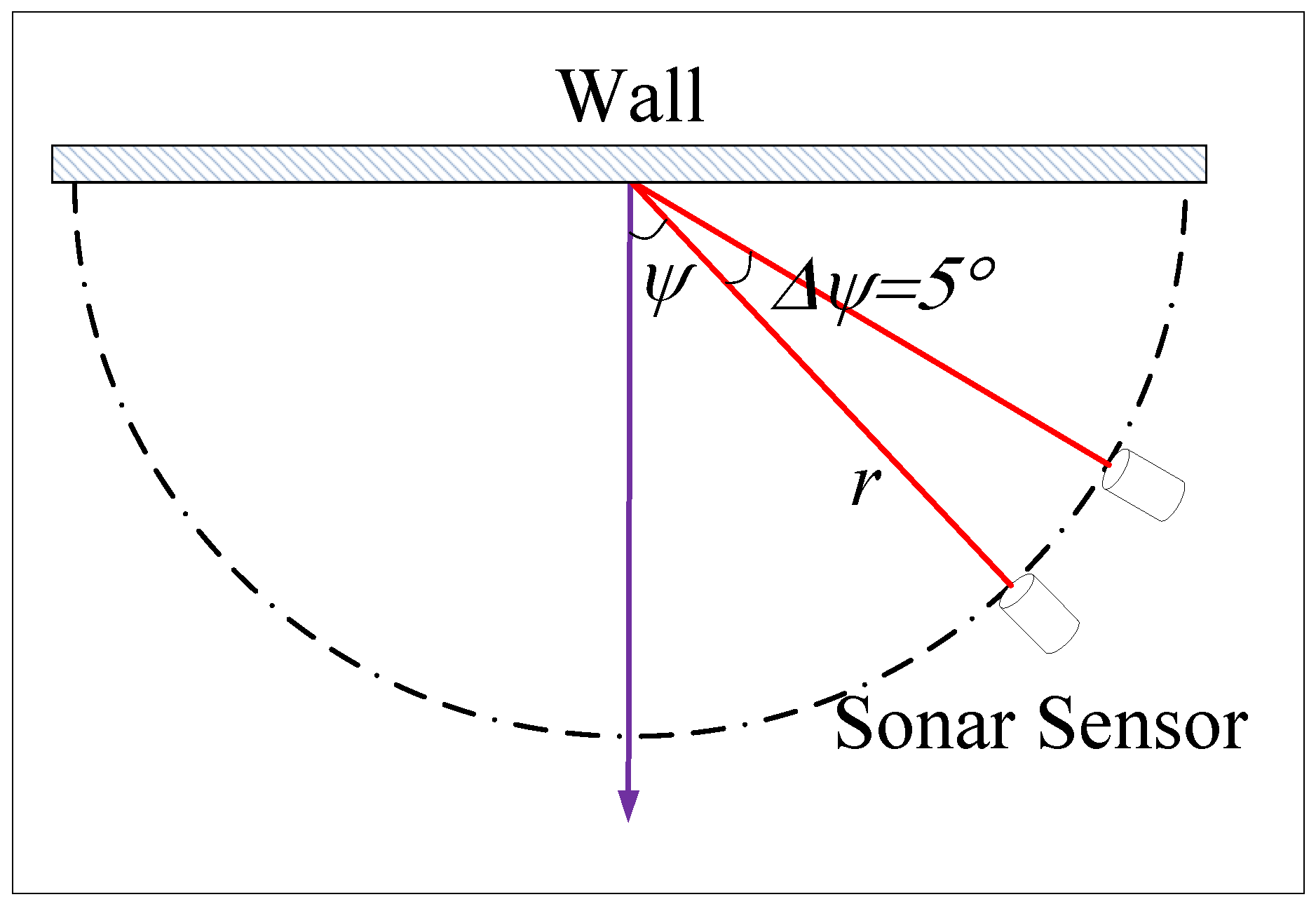

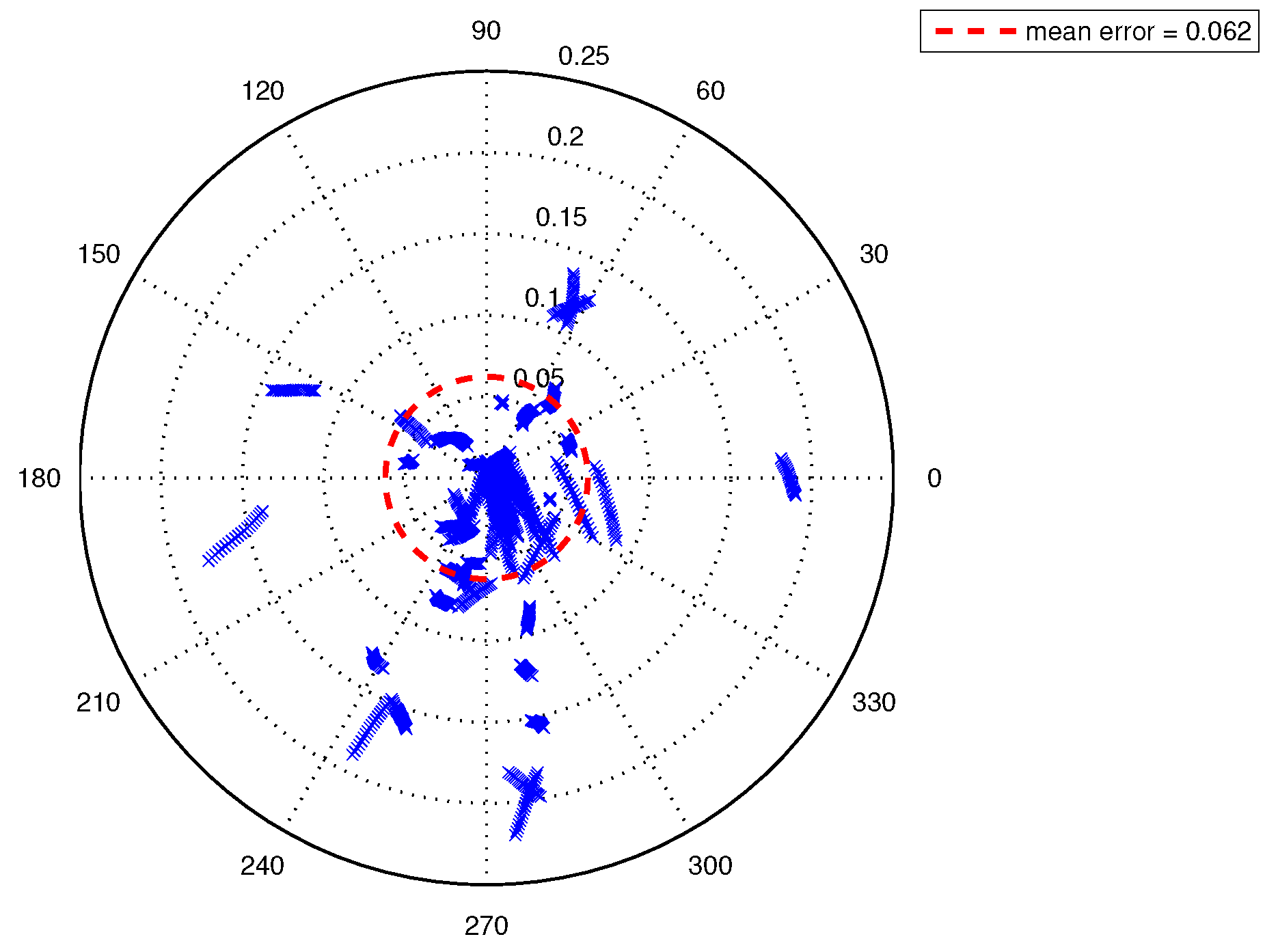

To test how well the multi-ray model fits the actual sensor measurements, a comparative test was performed between the proposed model and the MB1222 sensor, as shown in Figure 7. The sensor was placed on the edge of a semicircle with radius $r$, pointing to the center of the circle, and the angle $\gamma$ was increased in five-degree steps. The actual measurements $l_m$ are shown in Table 2, the corresponding outputs of the multi-ray model $l_s$ are presented in Table 3, and the modeling errors $e$ are presented in Table 4. Two observations can be made from the measurements:

(1) The measurement had a constant offset of approximately 3 cm to 4 cm, even at $\gamma = 0$, i.e., when the sensor is perpendicular to the wall.

(2) The maximum detection angle varied with the distance to the wall: the farther the sensor was from the wall, the narrower the detection angle. The half-side detection angle was close to 0 when the distance exceeded 5.9 m, and it reached approximately 35 degrees when $r$ was less than 1.2 m.

For comparison, the 3 cm offset was taken into account, and the model error was defined as $e = l_m - (l_s + 3)$ cm, as shown in Table 3 and Table 4. In most cases, the model error was less than 1 cm, and the maximum model error was 2 cm. Given that the minimum resolution of the sensor is 1 cm, the proposed model fits the actual sensor well enough for indoor localization.

Note that clear angular constraint characteristics were observed in the measurements of the ultrasonic sensors; however, the angular constraint was not introduced into the proposed model in order to reduce the calculation load. Because the constraint involves calculating the angles between all line segments of $\mathcal{S}$ and $\mathcal{O}$, it may significantly increase the calculation load. Instead, a jump filter is used to address this problem, as presented in Section 5.

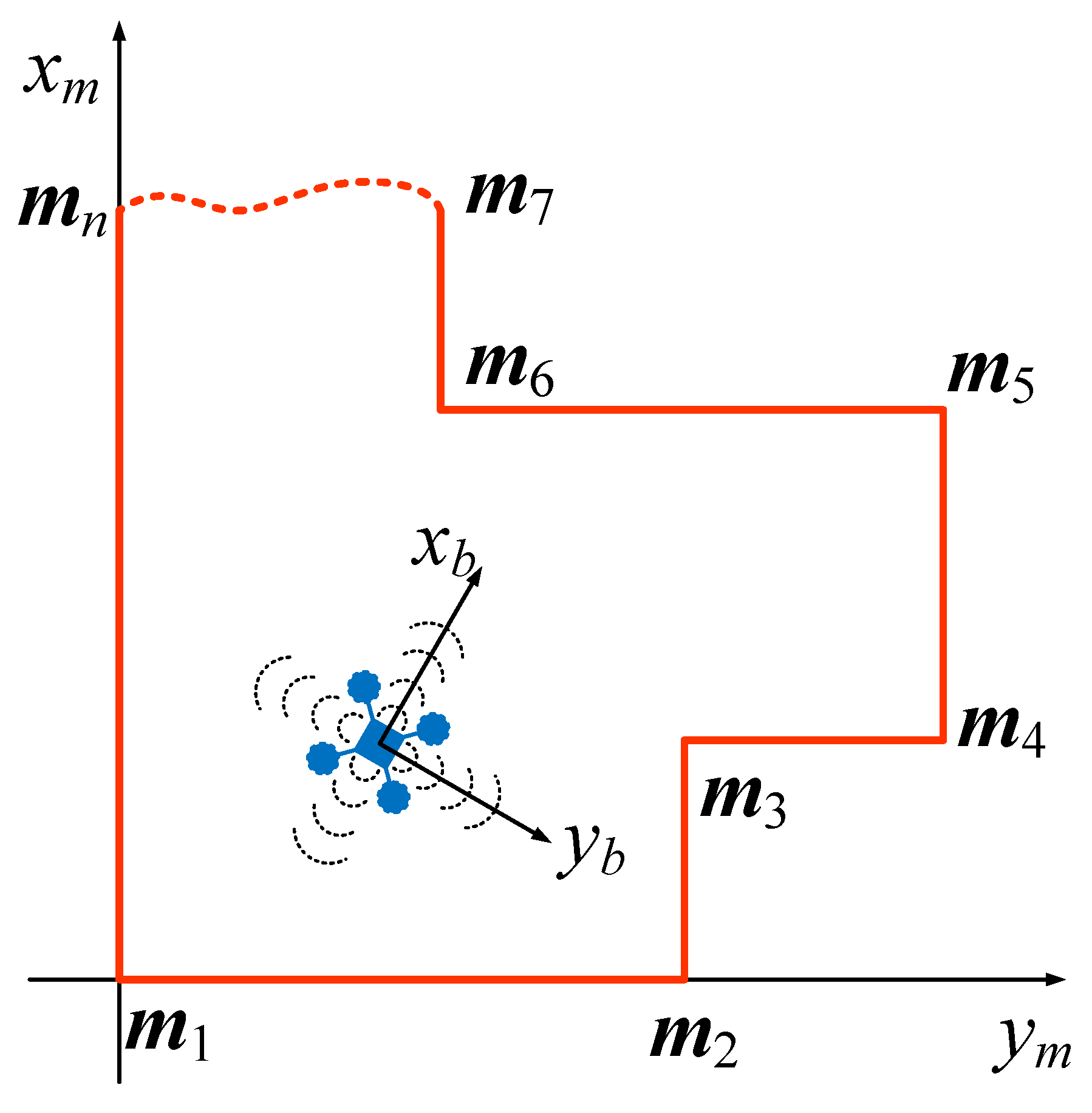

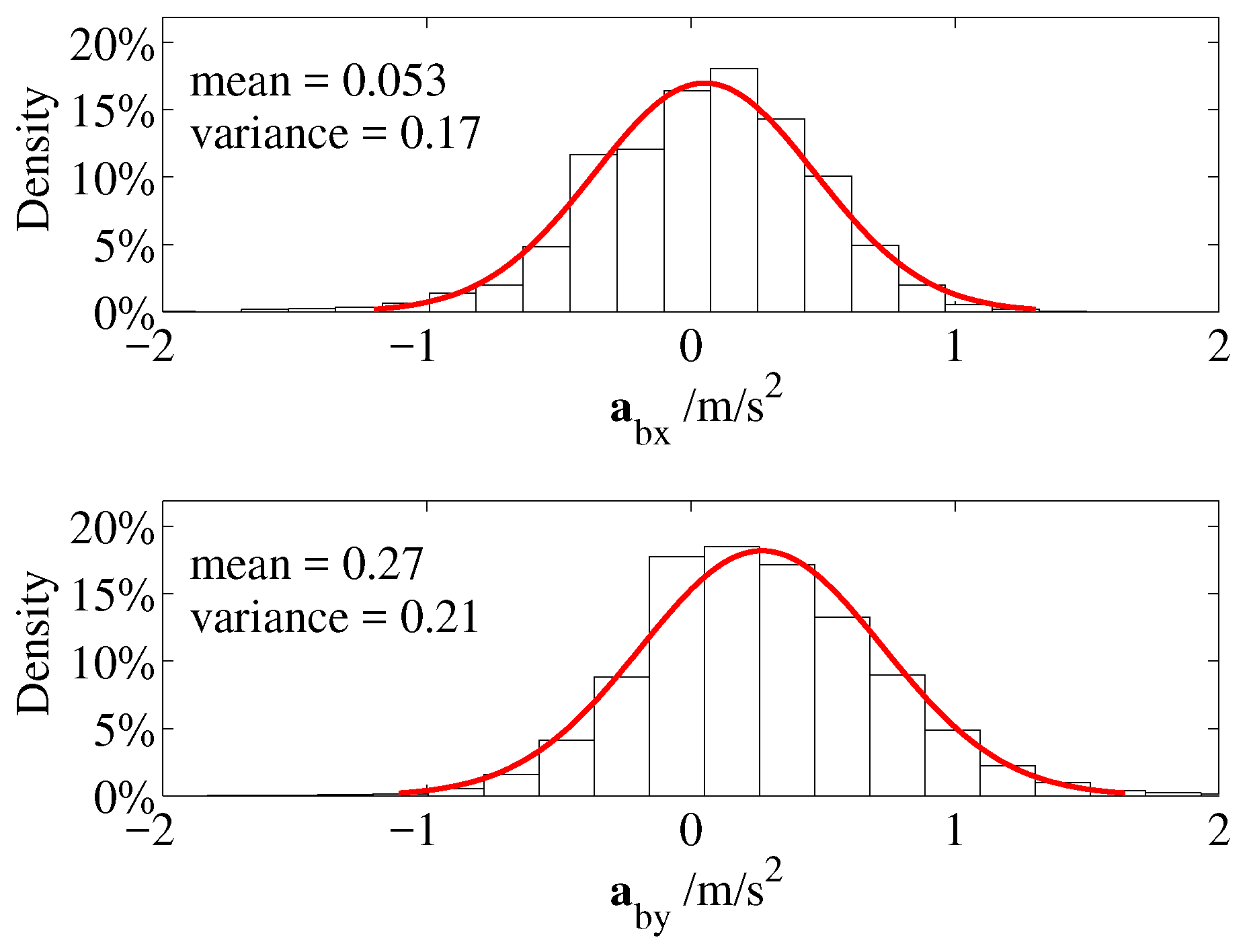

4. Modeling of the MAV System

To describe the motion of the MAV, the map coordinate system $\{m\}$ and the body coordinate system $\{b\}$ were introduced. The map coordinate system $\{m\}$ is fixed to the earth, and its origin is located at the starting corner $\mathbf{c}_1$ of the map $\mathcal{M}$. The body coordinate system $\{b\}$ is fixed to the MAV (in strapdown configuration), as shown in Figure 8.

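The 2D polygonal map $\mathcal{M}$ can be represented as a set of line segments:

$$\mathcal{M} = \left\{ \overline{\mathbf{c}_i\mathbf{c}_{i+1}} \;\middle|\; i = 1, 2, \ldots, N \right\}, \quad \mathbf{c}_{N+1} = \mathbf{c}_1 \tag{4}$$

where $\overline{\mathbf{c}_i\mathbf{c}_{i+1}}$ represents a line segment connecting points $\mathbf{c}_i$ and $\mathbf{c}_{i+1}$, and $\mathbf{c}_i$ is the position of the $i$-th corner in the map coordinate system.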

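The direction cosine matrix (DCM) is used to transform the acceleration from the body frame to the map frame:

$$\mathbf{C}_b^m = \begin{bmatrix} c_\theta c_\psi & s_\phi s_\theta c_\psi - c_\phi s_\psi & c_\phi s_\theta c_\psi + s_\phi s_\psi \\ c_\theta s_\psi & s_\phi s_\theta s_\psi + c_\phi c_\psi & c_\phi s_\theta s_\psi - s_\phi c_\psi \\ -s_\theta & s_\phi c_\theta & c_\phi c_\theta \end{bmatrix} \tag{5}$$

where $\phi$, $\theta$ and $\psi$ are the roll, pitch and yaw angles, respectively, and $c_{(\cdot)}$ and $s_{(\cdot)}$ denote $\cos(\cdot)$ and $\sin(\cdot)$.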
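The body-to-map DCM can be checked numerically with the short sketch below. It assumes the common Z-Y-X (yaw, then pitch, then roll) rotation order; the function names are hypothetical and the sketch is meant only to show how a body-frame vector is rotated into the map frame.

```python
import math
from typing import List

Matrix = List[List[float]]


def dcm_body_to_map(phi: float, theta: float, psi: float) -> Matrix:
    """Direction cosine matrix from body to map frame, assuming Z-Y-X (yaw-pitch-roll) order."""
    cf, sf = math.cos(phi), math.sin(phi)      # roll
    ct, st = math.cos(theta), math.sin(theta)  # pitch
    cp, sp = math.cos(psi), math.sin(psi)      # yaw
    return [
        [ct * cp, sf * st * cp - cf * sp, cf * st * cp + sf * sp],
        [ct * sp, sf * st * sp + cf * cp, cf * st * sp - sf * cp],
        [-st, sf * ct, cf * ct],
    ]


def body_to_map(c: Matrix, v_body: List[float]) -> List[float]:
    """Rotate a body-frame vector into the map frame: v_m = C v_b."""
    return [sum(c[i][k] * v_body[k] for k in range(3)) for i in range(3)]


# A pure 90-degree yaw maps the body x axis onto the map y axis (approximately [0, 1, 0]).
c = dcm_body_to_map(0.0, 0.0, math.pi / 2)
print(body_to_map(c, [1.0, 0.0, 0.0]))
```

Because the matrix is orthonormal, its transpose performs the inverse (map-to-body) rotation, which is a convenient sanity check during implementation.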

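Then, the accelerations in the body frame can be transformed to the map frame by

$$\mathbf{a}_k^m = \mathbf{C}_b^m \mathbf{a}_k^b + \mathbf{g}^m \tag{6}$$

where $\mathbf{g}^m$ is the gravity vector in the map frame. Therefore, the discrete-time state-space model of the MAV is given by

$$\mathbf{v}_{k+1} = \mathbf{v}_k + \mathbf{a}_k^m T \tag{7}$$

$$\mathbf{p}_{k+1} = \mathbf{p}_k + \mathbf{v}_k T + \tfrac{1}{2}\mathbf{a}_k^m T^2 \tag{8}$$

where $T$ represents the sampling period of the IMU and $\mathbf{v}_k$ and $\mathbf{p}_k$ are the velocity vector and position vector in the map frame at step $k$, respectively. The output of the MAV system is the measurement of multiple sonar sensors, which is defined as

$$\mathbf{y}_k = h(\mathbf{p}_k, \psi_k, \mathcal{S}, \mathcal{M}) \tag{9}$$

where $\mathbf{y}_k$ is the measurement vector of the sonar sensors, and $h(\cdot)$ is a nonlinear function of $\mathbf{p}_k$, $\psi_k$, the sonar model $\mathcal{S}$ and the map of the working area $\mathcal{M}$. To obtain the measurements of the sonar sensors, one needs to represent the sonar model $\mathcal{S}$ in the map coordinate system. Since $\mathcal{S}$ is a set of line segments, this transformation can be achieved by representing the endpoints of the line segments as

$$\mathbf{p}_s^m = \mathbf{p}_k + d_s \begin{bmatrix} \cos(\psi_k + \theta_s) \\ \sin(\psi_k + \theta_s) \end{bmatrix} \tag{10}$$

$$\mathbf{p}_j^m = \mathbf{p}_s^m + l_j \begin{bmatrix} \cos(\psi_k + \theta_s + \theta_j) \\ \sin(\psi_k + \theta_s + \theta_j) \end{bmatrix} \tag{11}$$

where $\mathbf{p}_k$ and $\psi_k$ denote the UAV’s position and heading angle in the map coordinate system, respectively, $\theta_s$ is the heading angle of the sonar in the body coordinate system, and $d_s$ is the distance between the origins of the body frame and the sonar frame. Additionally, $l_j$ and $\theta_j$ are the length and the angle of the $j$-th ray in the sonar coordinate system, respectively. Then, the ultrasonic sensor’s measurement $l$ is given by Equations (3) and (11), with the obstacle $\mathcal{O}$ taken as the map $\mathcal{M}$.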
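The transformation of the ray endpoints into the map frame can be sketched as follows. The sketch assumes, as the parameter list above implies, that each sonar sits at distance $d_s$ from the body origin along its own heading $\theta_s$; the function name, the 5 cm offset and the single-ray model in the example are hypothetical. The resulting segments could then be intersected with the map segments to evaluate Equation (3).

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def rays_in_map(p: Point, psi: float, theta_s: float, d_s: float,
                rays: Sequence[Tuple[float, float]]) -> Tuple[Point, List[Point]]:
    """Return the sonar origin and ray end points in the map frame.

    p, psi  : MAV position and heading in the map frame
    theta_s : sonar heading in the body frame
    d_s     : distance from the body origin to the sonar origin (assumed along theta_s)
    rays    : (l_j, theta_j) length and angle of each ray in the sonar frame
    """
    a = psi + theta_s
    p_s = (p[0] + d_s * math.cos(a), p[1] + d_s * math.sin(a))
    ends = [(p_s[0] + l_j * math.cos(a + th_j), p_s[1] + l_j * math.sin(a + th_j))
            for l_j, th_j in rays]
    return p_s, ends


# Four sonars mounted perpendicular to each other (theta_s = 0, 90, 180 and 270 degrees).
mav_pos, mav_yaw = (1.0, 2.0), 0.0
for theta_s in (0.0, math.pi / 2, math.pi, 3 * math.pi / 2):
    origin, ends = rays_in_map(mav_pos, mav_yaw, theta_s, d_s=0.05, rays=[(1.0, 0.0)])
    print([round(v, 3) for v in origin], [[round(v, 3) for v in e] for e in ends])
```

Only the ray endpoints change with the MAV pose, so the per-step cost grows linearly with the number of rays and sensors.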

In particular, among all the intersections, the one that attains the minimum in Equation (3) is defined as the “active intersection” $\mathbf{q}^*$, and the terms “active ray” $r^*$ and “active wall” $w^*$ are introduced to denote the ray and the wall on which the active intersection lies.

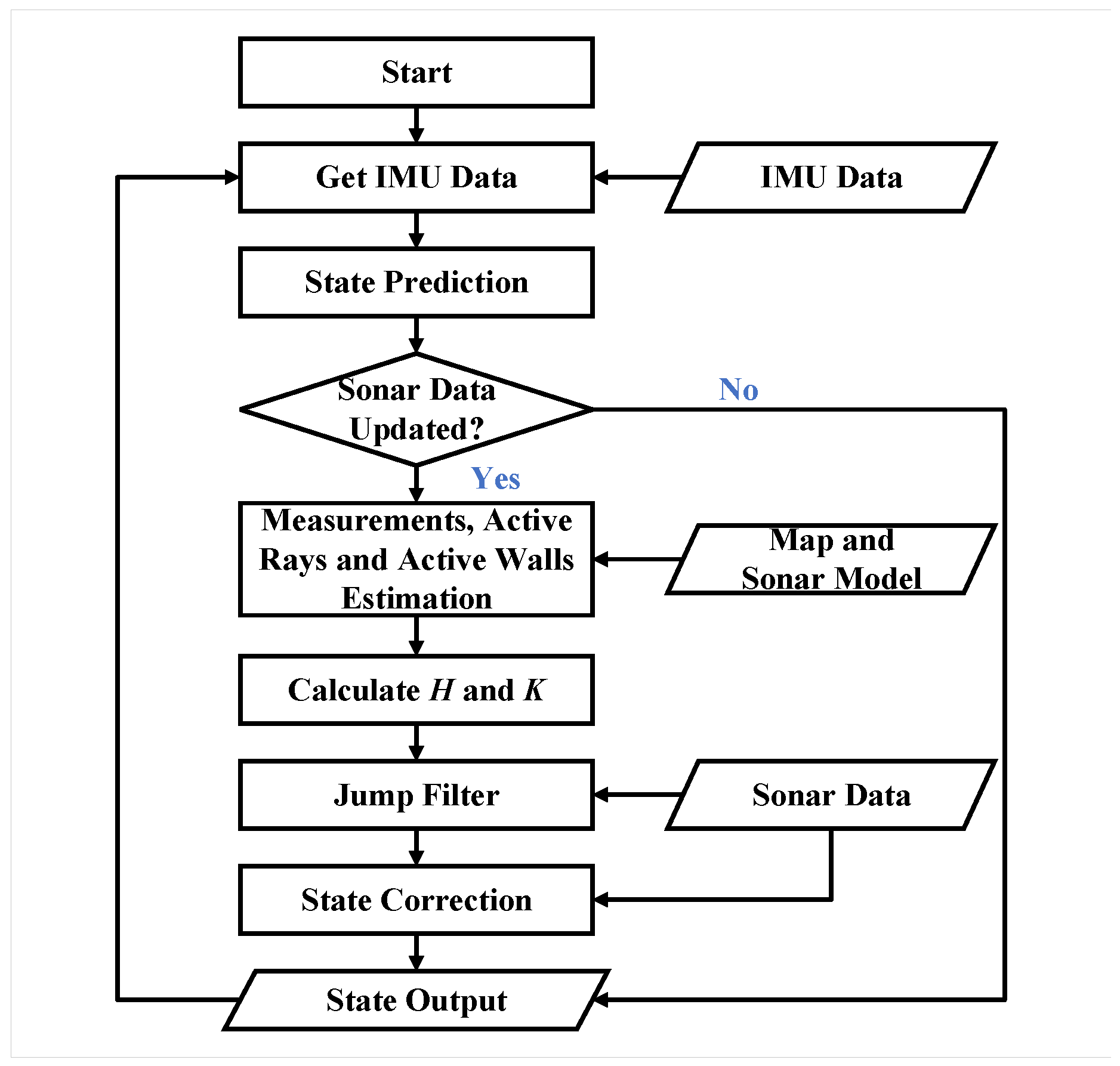

5. Indoor Localization Method Based on the EKF

As shown in Equation (3), the measurement function of the system is nonlinear and discontinuous; thus, the EKF, rather than the standard Kalman filter, is a feasible way to estimate the location of the MAV. The key issue is to compute the Jacobian matrix of Equation (3).

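The gradient matrix of the function $h(\cdot)$ with respect to the state $\mathbf{x} = [\mathbf{p}^\top, \mathbf{v}^\top]^\top$ at step $k$ is given by

$$\mathbf{H}_k = \left.\frac{\partial h}{\partial \mathbf{x}}\right|_{\mathbf{x} = \hat{\mathbf{x}}_{k|k-1}} \tag{12}$$

Based on the multi-ray model, the Jacobian matrix can be calculated by geometric methods. At time $k$, suppose that the relationship between the sonar model and the map is as shown in Figure 9, and assume that the active ray and the active wall remain unchanged. The row of the Jacobian matrix corresponding to the $i$-th ultrasonic sensor can then be given as

$$\mathbf{H}_k^{(i)} = \frac{1}{\sin(\alpha_i - \beta_i)} \begin{bmatrix} \sin\beta_i & -\cos\beta_i & \mathbf{0} \end{bmatrix} \tag{13}$$

where $\alpha_i$ and $\beta_i$ represent the yaw angles of the “active ray” and the “active wall” of the $i$-th ultrasonic sensor in the map frame, and the zero block corresponds to the velocity states. In addition, the entries of $\mathbf{H}_k^{(i)}$ were set to zero if there was no obstacle in the detection range of the $i$-th ultrasonic sensor. Then, the MAV’s position can be obtained through a standard EKF procedure:

$$\begin{aligned}
\hat{\mathbf{x}}_{k|k-1} &= \mathbf{F}\hat{\mathbf{x}}_{k-1} + \mathbf{G}\mathbf{a}_{k-1}^m \\
\mathbf{P}_{k|k-1} &= \mathbf{F}\mathbf{P}_{k-1}\mathbf{F}^\top + \mathbf{Q} \\
\mathbf{K}_k &= \mathbf{P}_{k|k-1}\mathbf{H}_k^\top \left(\mathbf{H}_k\mathbf{P}_{k|k-1}\mathbf{H}_k^\top + \mathbf{R}\right)^{-1} \\
\hat{\mathbf{x}}_k &= \hat{\mathbf{x}}_{k|k-1} + \mathbf{K}_k\left(\mathbf{y}_k - h(\hat{\mathbf{x}}_{k|k-1})\right) \\
\mathbf{P}_k &= (\mathbf{I} - \mathbf{K}_k\mathbf{H}_k)\mathbf{P}_{k|k-1}
\end{aligned} \tag{14}$$

where $\mathbf{F} = \begin{bmatrix} \mathbf{I} & T\mathbf{I} \\ \mathbf{0} & \mathbf{I} \end{bmatrix}$ and $\mathbf{G} = \begin{bmatrix} \tfrac{1}{2}T^2\mathbf{I} \\ T\mathbf{I} \end{bmatrix}$ follow from Equations (7) and (8), $\mathbf{Q}$ and $\mathbf{R}$ are the process and measurement noise covariance matrices, and the dependence of $h(\cdot)$ on $\psi_k$, $\mathcal{S}$ and $\mathcal{M}$ is omitted for brevity.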

Note that Equation (

3) is a piecewise continuous function, and its output may jump in some conditions, such as if

changes,

changes or

and

change simultaneously. In addition, as mentioned in

Section 3, if the angle between

and

exceeds the detection angle constraint, it may also lead to a significant deviation between

and

. Similar results can also occur when the sensor occasionally malfunctions. Considering that the above cases will lead to a significant change in the term

, a jump filter is given to solve this problem as

where

is a predesigned threshold. Therefore, if the measurement

is significantly different from its prediction

, i.e.,

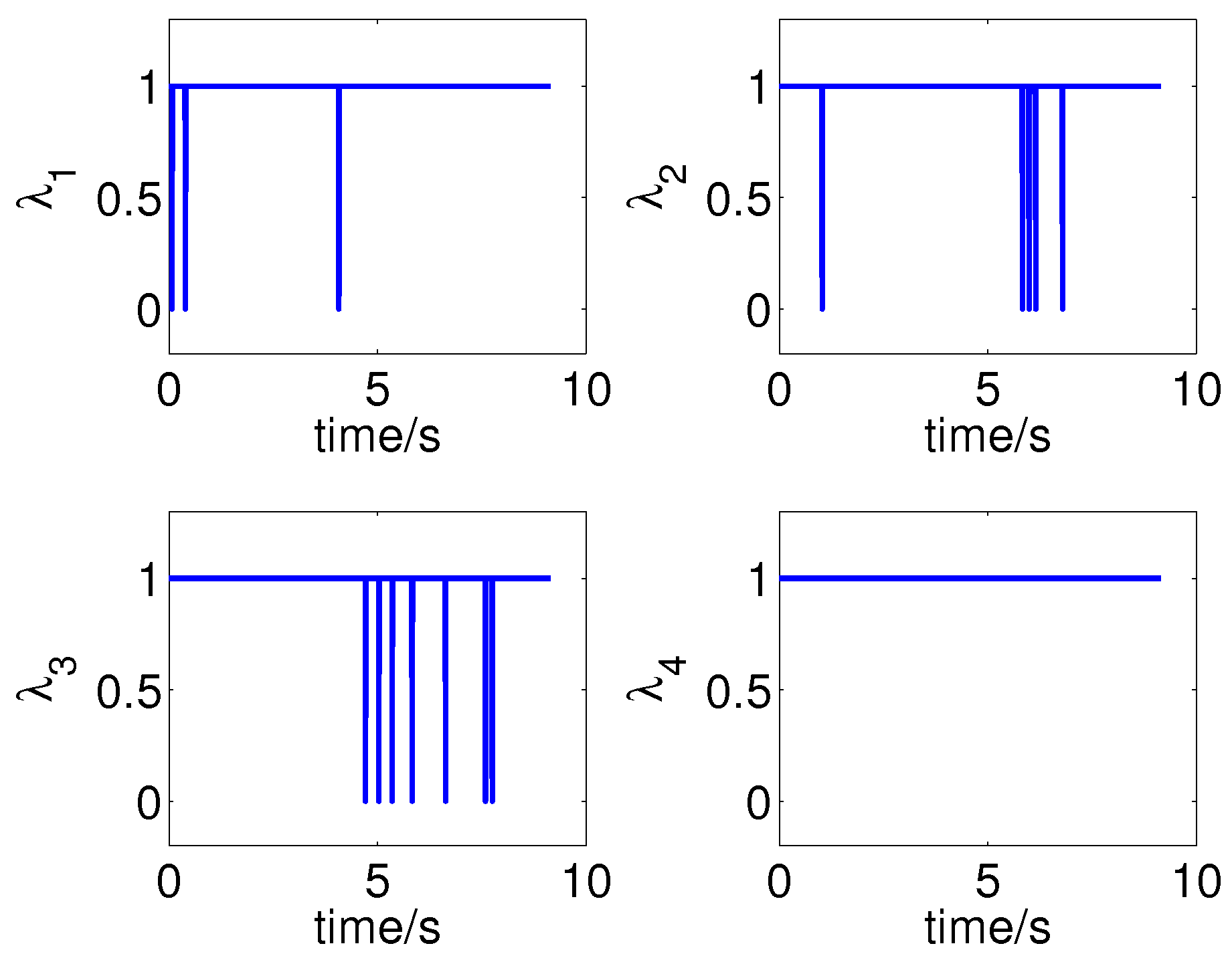

, the corresponding measurement will be filtered out from the estimation.
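The jump filter amounts to a per-sensor threshold test on the innovation. A minimal sketch, assuming the measured ranges and their predictions are available as equal-length lists with one entry per sonar; the function name and the threshold value in the example are hypothetical, since the threshold used on the platform is not stated here.

```python
from typing import List, Sequence


def jump_filter(measured: Sequence[float], predicted: Sequence[float],
                delta: float) -> List[int]:
    """Return indices of sonar readings whose innovation |y - y_hat| does not exceed delta."""
    return [i for i, (y, y_hat) in enumerate(zip(measured, predicted))
            if abs(y - y_hat) <= delta]


# Sonar 1 jumps (e.g., its active wall changed), so it is excluded from this update.
accepted = jump_filter([1.00, 2.50, 0.98, 3.00], [1.02, 1.20, 1.00, 2.95], delta=0.3)
print(accepted)  # [0, 2, 3]
```

Only the accepted indices would then be passed to the EKF measurement update; rejected readings simply do not contribute at that step.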

The flow chart of the indoor localization algorithm is shown in Figure 10.
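For illustration, one predict/update cycle of this loop can be sketched in plain Python. This is not the authors’ code. It assumes a planar state $[p_x, p_y, v_x, v_y]$, a map-frame acceleration already obtained from the IMU, and a Jacobian row built from the active-ray yaw $\alpha$ and active-wall yaw $\beta$ as $[\sin\beta, -\cos\beta, 0, 0]/\sin(\alpha-\beta)$ (derived for a ray hitting a straight wall while the active ray and wall stay unchanged). It also assumes uncorrelated sonar noise, so accepted readings can be processed one at a time as scalar updates, which is equivalent to one batch update in that case. All function names and noise values are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def ekf_predict(x: Vector, P: Matrix, a_map: Tuple[float, float], T: float,
                Q: Matrix) -> Tuple[Vector, Matrix]:
    """Propagate x = [px, py, vx, vy] with constant map-frame acceleration over T."""
    px, py, vx, vy = x
    ax, ay = a_map
    x_pred = [px + vx * T + 0.5 * ax * T * T,
              py + vy * T + 0.5 * ay * T * T,
              vx + ax * T,
              vy + ay * T]
    F = [[1.0, 0.0, T, 0.0], [0.0, 1.0, 0.0, T], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
    FPFt = matmul(matmul(F, P), transpose(F))
    P_pred = [[FPFt[i][j] + Q[i][j] for j in range(4)] for i in range(4)]
    return x_pred, P_pred


def jacobian_row(alpha: float, beta: float) -> Vector:
    """dl/dx for a ray with map yaw alpha hitting a straight wall with map yaw beta."""
    s = math.sin(alpha - beta)
    if abs(s) < 1e-9:  # ray (nearly) parallel to the wall: no usable gradient
        return [0.0, 0.0, 0.0, 0.0]
    return [math.sin(beta) / s, -math.cos(beta) / s, 0.0, 0.0]


def ekf_update_scalar(x: Vector, P: Matrix, z: float, z_pred: float,
                      h: Vector, r: float) -> Tuple[Vector, Matrix]:
    """Scalar EKF update for one sonar with Jacobian row h and noise variance r."""
    Ph = [sum(P[i][k] * h[k] for k in range(4)) for i in range(4)]  # P h^T
    S = sum(h[i] * Ph[i] for i in range(4)) + r                      # innovation variance
    K = [Ph[i] / S for i in range(4)]                                # Kalman gain
    x_new = [x[i] + K[i] * (z - z_pred) for i in range(4)]
    # (I - K h) P, written with P h^T because P is symmetric
    P_new = [[P[i][j] - K[i] * Ph[j] for j in range(4)] for i in range(4)]
    return x_new, P_new


# Example: MAV believed at the origin, a wall along the map x axis at y = 5 m (beta = 0),
# and a sonar pointing along +y (alpha = pi/2), so the predicted range is 5 - p_y.
I4 = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
x, P = ekf_predict([0.0, 0.0, 0.0, 0.0], [[0.1 * v for v in row] for row in I4],
                   a_map=(0.0, 0.0), T=0.008, Q=[[1e-4 * v for v in row] for row in I4])
z_pred = 5.0 - x[1]
x, P = ekf_update_scalar(x, P, z=4.8, z_pred=z_pred, h=jacobian_row(math.pi / 2, 0.0), r=0.01)
print(round(x[1], 3))  # the estimate moves toward p_y = 0.2
```

Processing sonars sequentially avoids any matrix inversion, which keeps the per-step cost small on a microcontroller; readings rejected by the jump filter are simply skipped.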

7. Conclusions

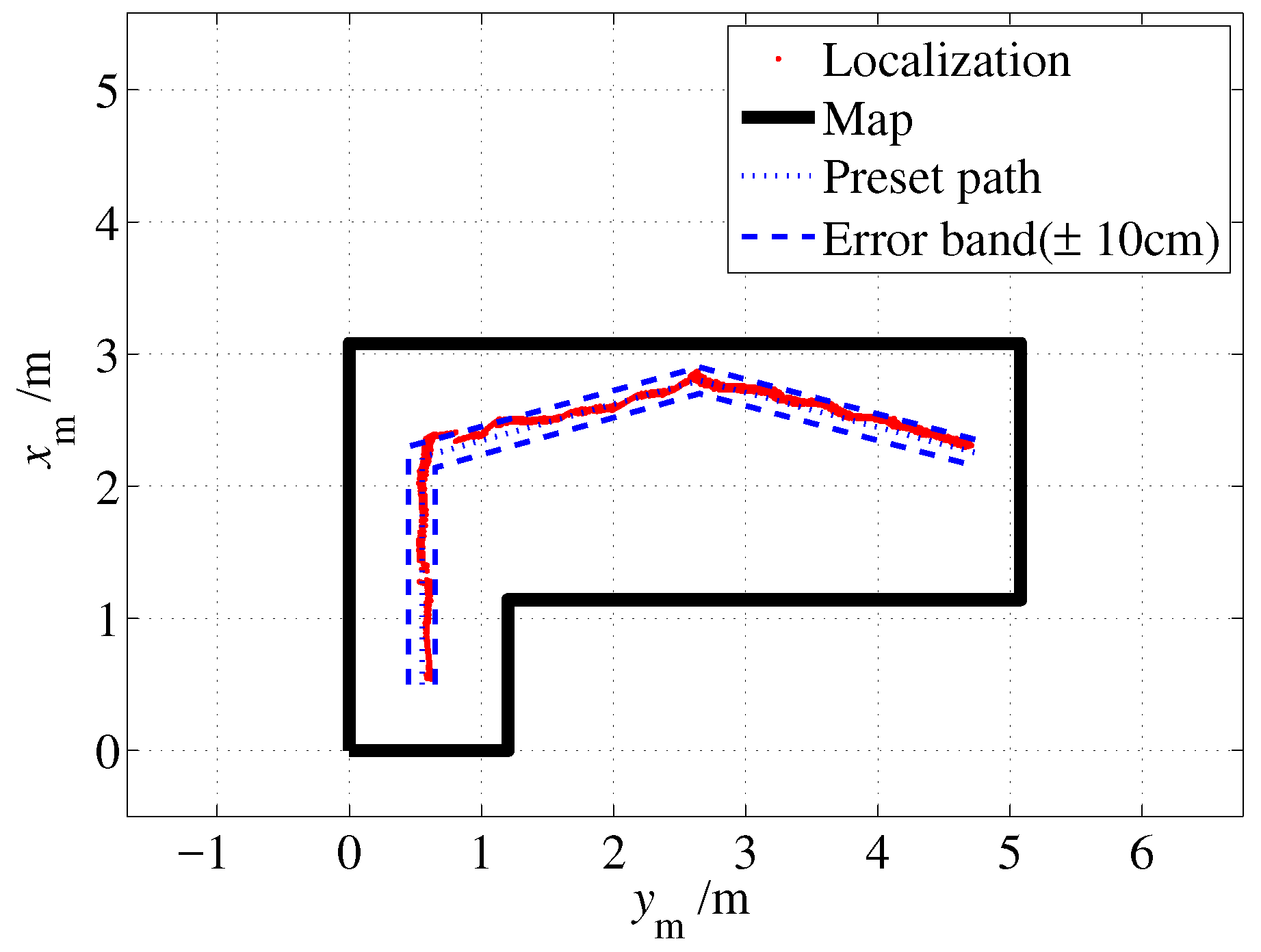

In this paper, a novel beaconless indoor localization approach that relies on onboard ultrasonic sensors and IMU sensors is presented.

A multi-ray model for ultrasonic sensors is proposed. It approximates a beam pattern accurately while maintaining low computational complexity, which makes it suitable for a light MAV. A multi-ray model was then constructed based on the beam pattern of the MaxSonar MB1222 ultrasonic sensor. The comparative test validates that the proposed model fits the actual sensor well for indoor localization.

Based on the multi-ray model, an EKF-based indoor localization method has been presented. The measurements of the sonar sensors and IMU sensors are fused to achieve higher positioning precision. The jump filter is introduced to suppress abnormally large differences between the estimations and the measurements.

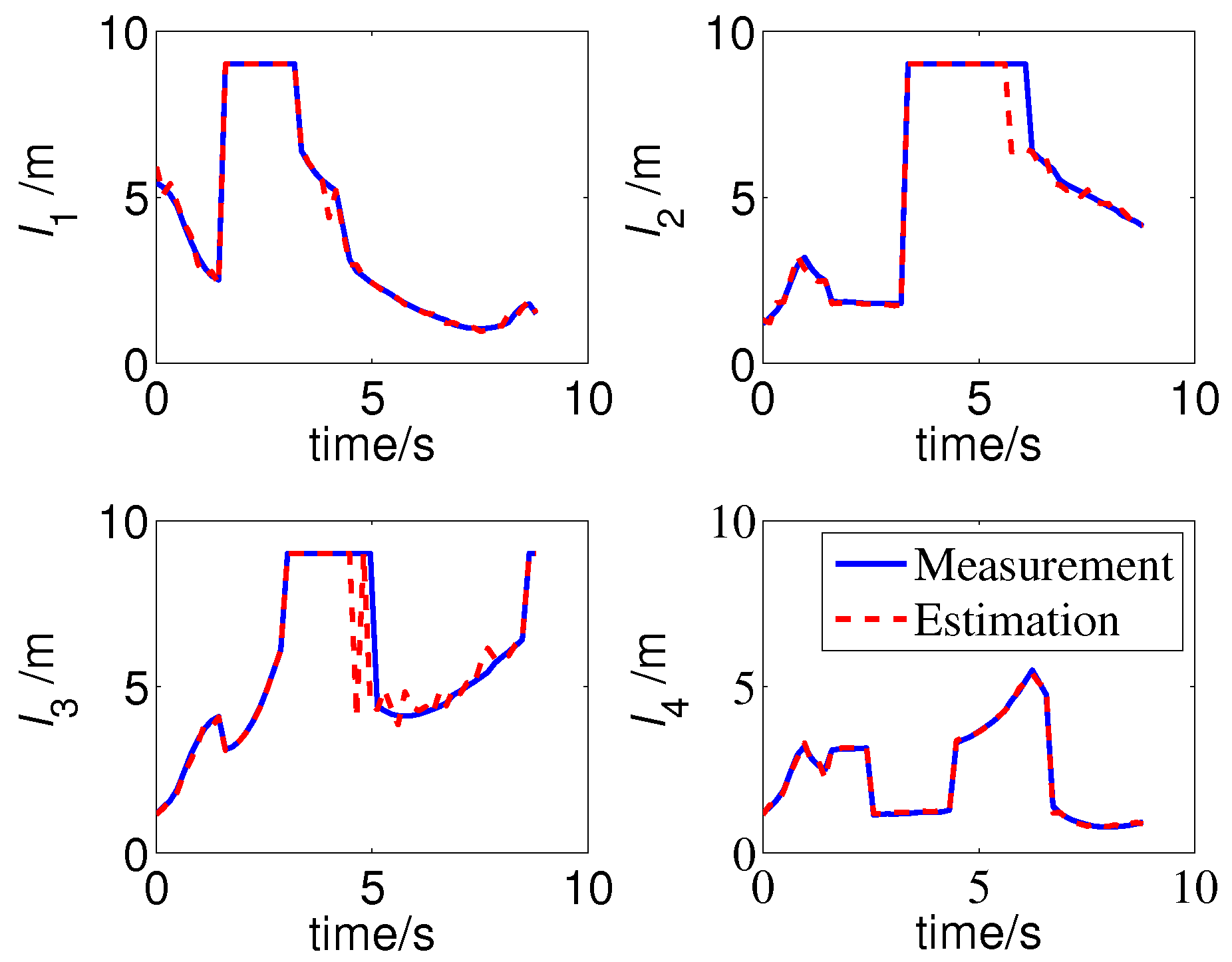

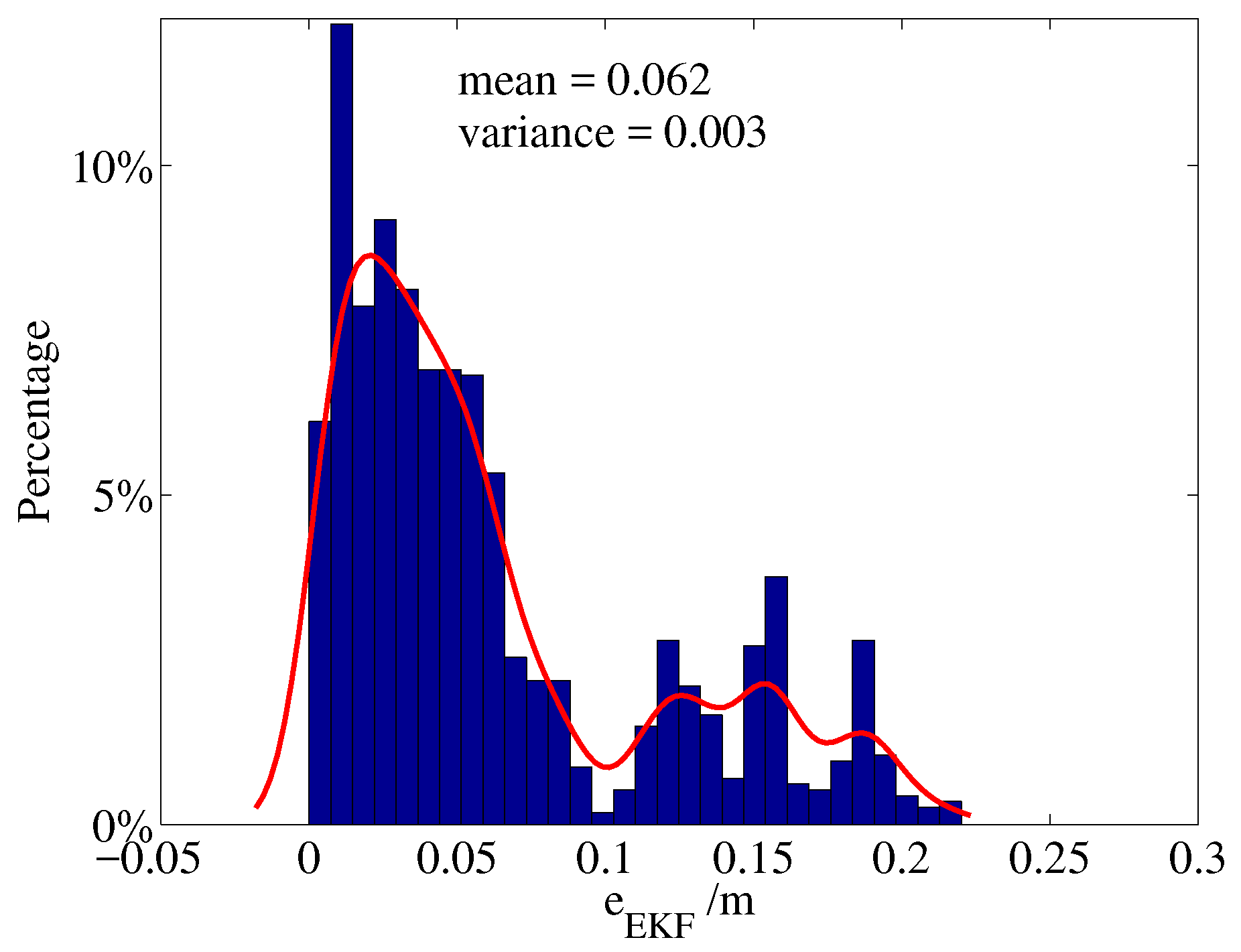

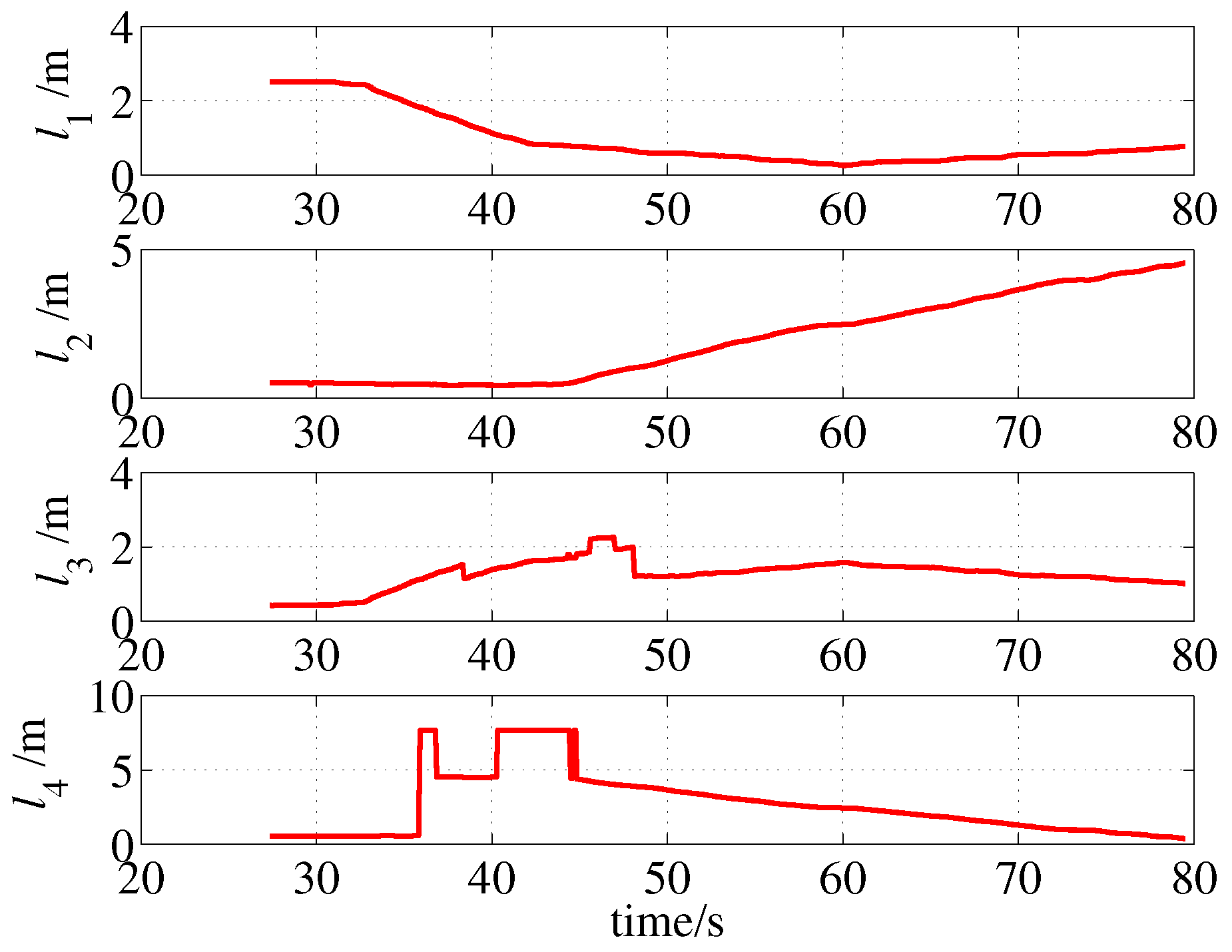

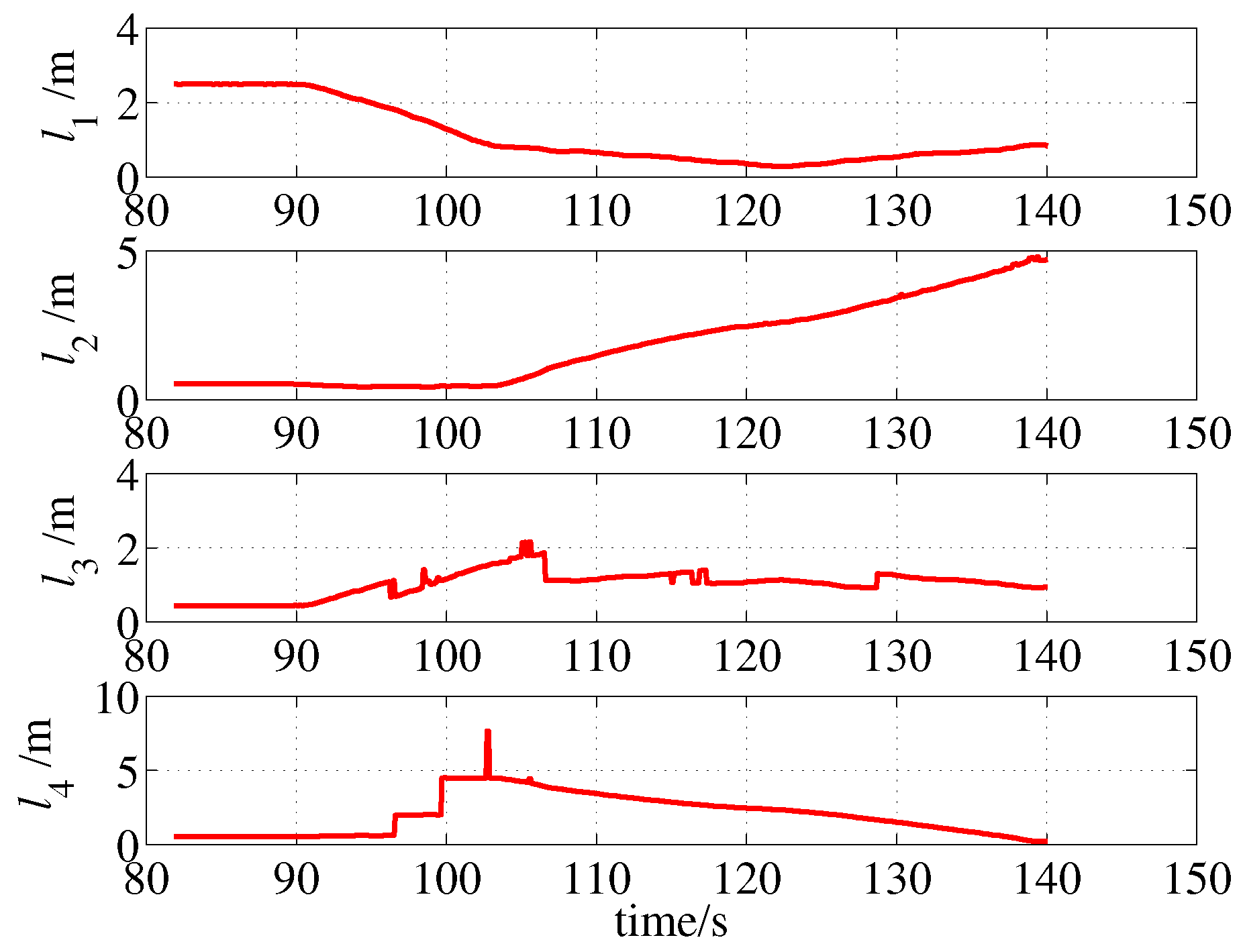

Simulations are presented to validate the proposed methods, and the results show a localization accuracy of approximately 20 cm. Afterwards, the proposed approach was applied to the MAV, a small and lightweight platform. The results illustrate that its computational load is low enough for it to run on the STM32 platform and that its positioning accuracy is also better than 20 cm. An experimental test with an unmodeled obstacle demonstrates the robustness of the proposed method: the localization results were not significantly affected and stayed within the error band.

Future work will improve the algorithm for more complex indoor environments, such as offices with many electrical and electronic devices, which may cause large interference with the measurements of the magnetic compass.