Coarse-Fine Convolutional Deep-Learning Strategy for Human Activity Recognition

Abstract

1. Introduction

2. State of the Art

- Infrared camera, microphone, GPS, gyroscope, accelerometer, proximity sensor, ultrasound sensor, and light sensor: HAR research with these sensors aims to identify the activity of a single person. Several surveys give an overview of the different techniques and terminology [14,20,21,22,23]. We are interested in this emerging research area, which uses smartphone sensors for single-user recognition. The main works and their techniques are described below.

2.1. Machine Learning-Based HAR Methods

2.2. Convolutional Neural Network-Based HAR Methods

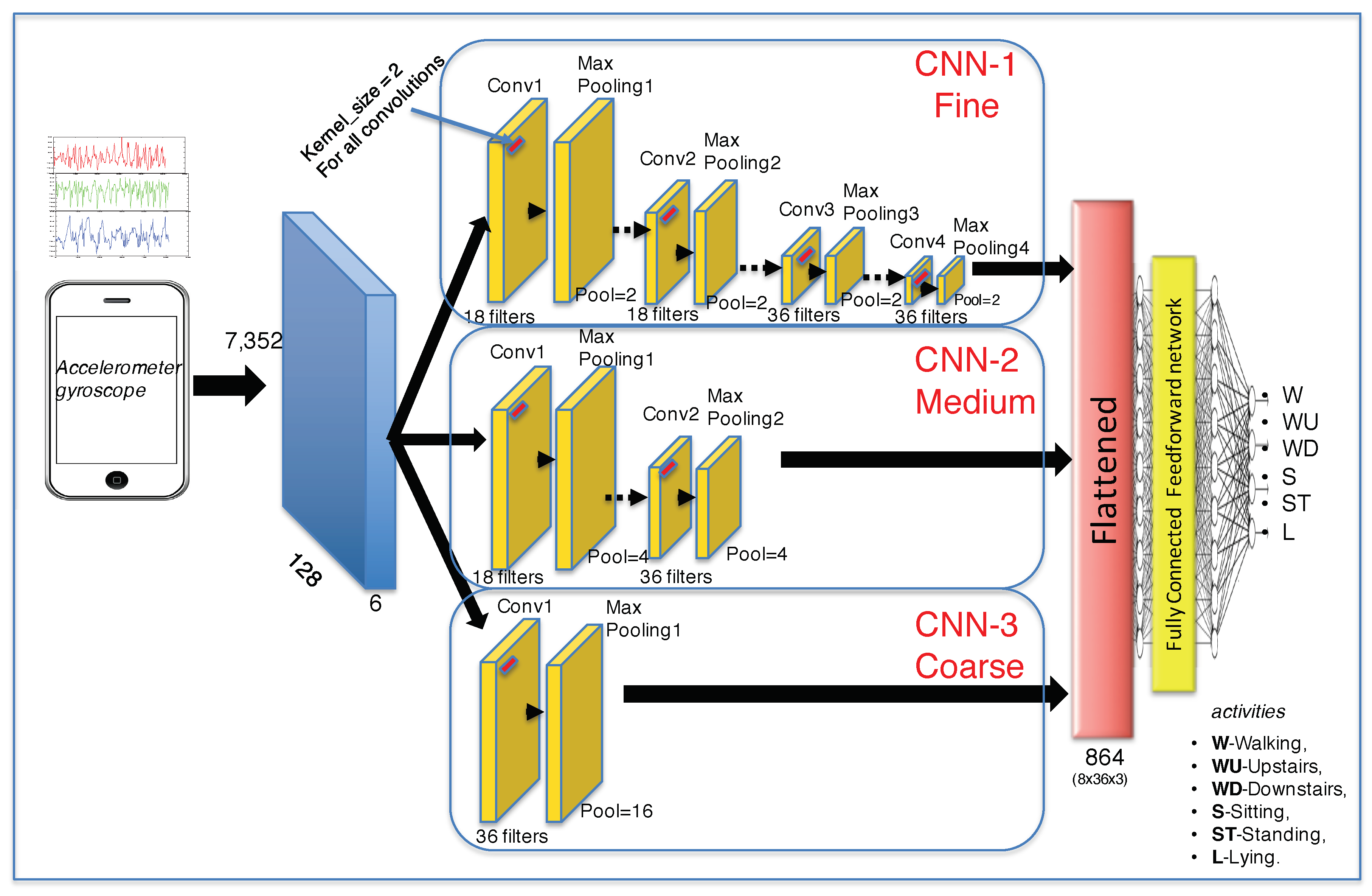

3. Proposal

- Convolutional layer: In the one-dimensional case, the convolution of a signal vector $x$ with a kernel vector $h$ is a vector $c = x * h$, where $*$ represents the convolution operation. In the discrete domain, the convolution is expressed as $c(n) = \sum_{k} x(k)\,h(n-k)$ for each output index $n$. In other words, the reflected vector $h$, which is also called a convolutional filter, slides along the signal $x$; a dot product is computed at each value of $n$, and the concatenated values form the output $c$ of the convolutional layer.

- Activation function: Among the main non-linear activation functions, such as sigmoidal, tangent, hyperbolic tangent, and ReLU, the latter is used in this proposal. The rectified linear unit (ReLU) is defined as $\mathrm{ReLU}(c) = \max(0, c)$. The ReLU function thresholds the convolution output $c$ at zero: positive values of $c$ pass unchanged and negative values are set to zero.

- Pooling layer: The aim of this stage is to reduce and summarize the convolutional output. Two pooling functions are typically used: max pooling and mean pooling. In this proposal, max pooling is used.

- Fully-connected layer: This stage concatenates the outputs of the three partial CNNs: a fine-CNN, a medium-CNN, and a coarse-CNN. The output of each partial CNN is flattened into a one-dimensional vector and used for classification. In this proposal, the fully-connected stage comprises one input layer, one hidden layer, and one output layer.

- Soft-max layer: Finally, the output of the last layer is passed to a soft-max layer that computes the probability distribution over the six predicted human activities: walking, up-stairs, down-stairs, sitting, standing, and laying.
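The stages listed above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the example signal `x`, the kernel `h`, and the pooling window of 2 are all chosen here for the example only.

```python
import math

def conv1d(x, h, stride=1):
    """Unpadded discrete convolution c(n) = sum_k x(k) h(n - k).

    The kernel is reflected once, then slid along x; each output
    value is the dot product of the reflected kernel with a window of x.
    """
    flipped = h[::-1]
    m = len(h)
    return [
        sum(xi * hi for xi, hi in zip(x[start:start + m], flipped))
        for start in range(0, len(x) - m + 1, stride)
    ]

def relu(c):
    """Threshold at zero: negative values become 0."""
    return [max(0.0, v) for v in c]

def max_pool(c, size=2, stride=2):
    """Keep the maximum of each window (window size is an assumption)."""
    return [max(c[i:i + size]) for i in range(0, len(c) - size + 1, stride)]

def softmax(z):
    """Turn scores into a probability distribution (numerically stable)."""
    peak = max(z)
    exps = [math.exp(v - peak) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy signal and kernel, for illustration only.
x = [0.0, 1.0, 2.0, 3.0, 2.0, 1.0, 0.0, -1.0]
h = [1.0, 0.0, -1.0]

c = conv1d(x, h)   # convolutional layer
a = relu(c)        # activation
p = max_pool(a)    # pooling
probs = softmax([1.0, 2.0, 3.0])  # soft-max over made-up class scores
print(c, a, p, probs)
```

In the full network the soft-max would receive six scores, one per activity, from the fully-connected stage; three scores are used here only to keep the example short.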

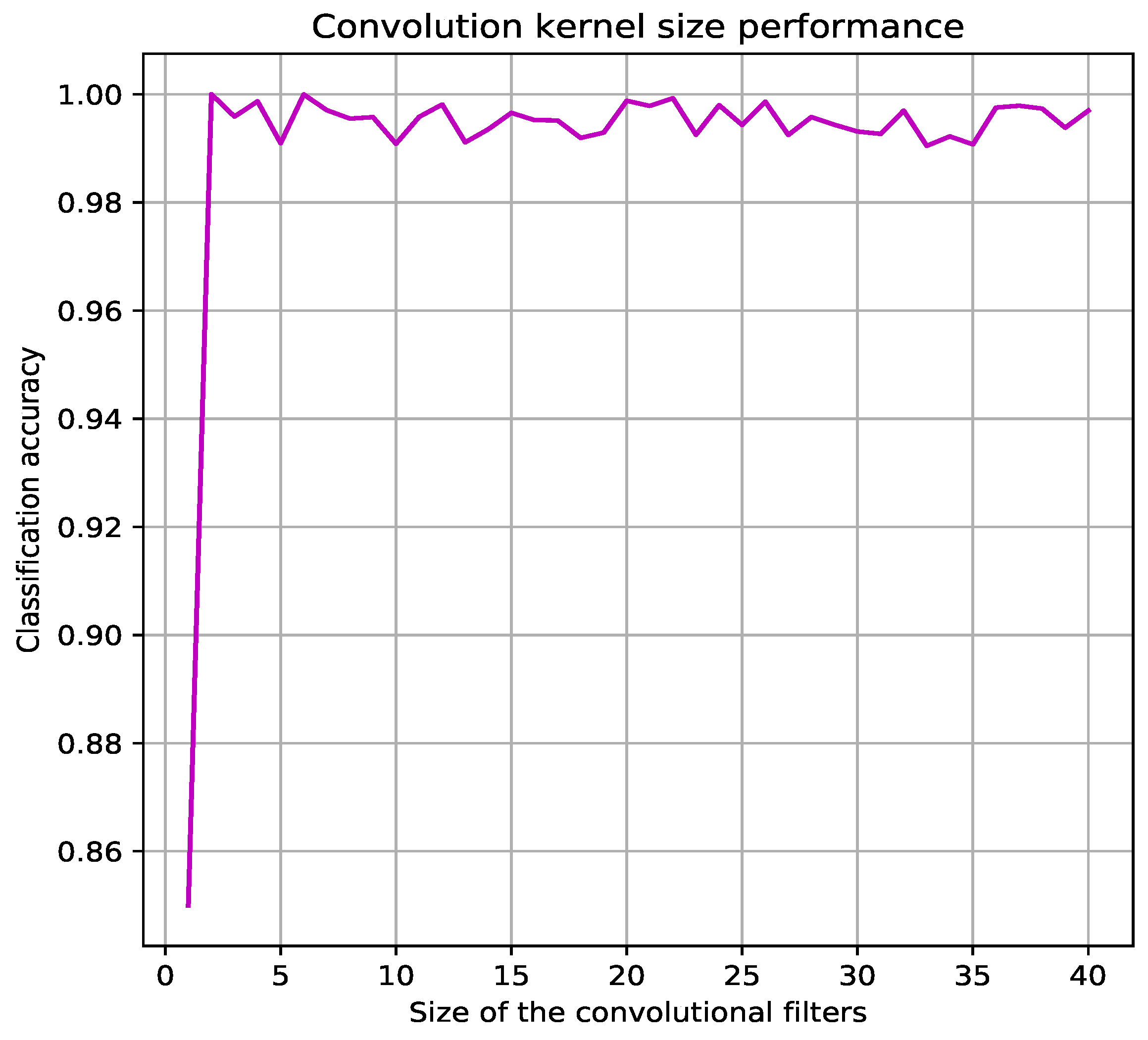

4. System Architecture

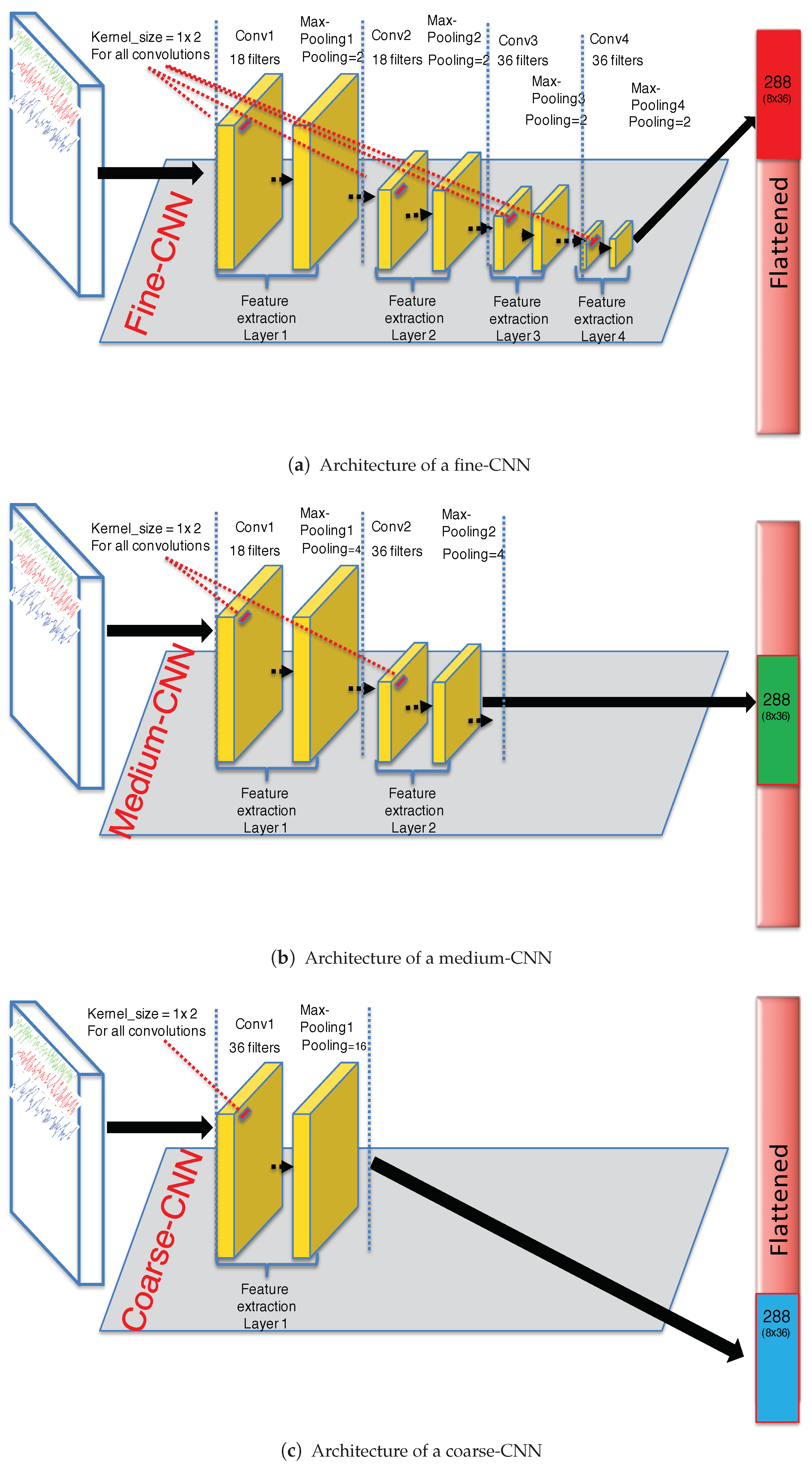

- Fine-CNN (See Figure 2a): The first convolutional layer comprises 18 filters, and the stride of the convolution is 1. A max-pooling layer with a stride of 2 is then applied, and the activation function is ReLU. The second convolutional layer also comprises 18 filters with a convolution stride of 1, followed by a max-pooling layer with a stride of 2 and ReLU activation. The third convolutional layer comprises 36 filters with a convolution stride of 1, followed by a max-pooling layer with a stride of 2 and ReLU activation. Finally, the fourth convolutional layer comprises 36 filters with a convolution stride of 1, followed by a max-pooling layer with a stride of 2 and ReLU activation.

- Medium-CNN (See Figure 2b): For this CNN, the first convolutional layer comprises 18 filters, and the stride of the convolution is 2. A max-pooling layer with a stride of 2 is then applied, and the activation function is ReLU. The second convolutional layer comprises 36 filters with a convolution stride of 3, followed by a max-pooling layer with a stride of 2. The activation function is also ReLU.

- Coarse-CNN (See Figure 2c): The last partial CNN has only one convolutional layer, which comprises 36 filters with a convolution stride of 2. A max-pooling layer with a stride of 2 is then applied, and the activation function is ReLU.
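To see how the three branches shrink a 128-sample window, the layer list above can be traced with a small length calculation. This is only a sketch: the filter counts and strides come from the description, while the kernel size `KERNEL = 3`, the pooling window `POOL = 2`, and the absence of padding are hypothetical placeholders, so the resulting sizes are illustrative rather than the paper's actual figures.

```python
# Sketch only: KERNEL, POOL, and "no padding" are assumptions, not source values.
INPUT_LEN = 128  # samples per window (from the text)
KERNEL = 3       # hypothetical kernel size
POOL = 2         # hypothetical pooling window
POOL_STRIDE = 2  # pooling stride (from the text)

def out_len(length, window, stride):
    """Output length of an unpadded sliding window."""
    return (length - window) // stride + 1

# Each layer is (number of filters, convolution stride), as listed above.
BRANCHES = {
    "fine": [(18, 1), (18, 1), (36, 1), (36, 1)],
    "medium": [(18, 2), (36, 3)],
    "coarse": [(36, 2)],
}

def branch_shape(layers, length=INPUT_LEN):
    """Return (output length, output channels) after all layers of a branch."""
    filters = 0
    for filters, conv_stride in layers:
        length = out_len(length, KERNEL, conv_stride)  # convolution
        length = out_len(length, POOL, POOL_STRIDE)    # max pooling
    return length, filters

shapes = {name: branch_shape(layers) for name, layers in BRANCHES.items()}
flat_sizes = {name: length * filters for name, (length, filters) in shapes.items()}
concat_size = sum(flat_sizes.values())  # input width of the fully-connected stage
print(shapes, flat_sizes, concat_size)
```

Under these assumptions the fine branch, with four convolution and pooling stages, ends with the shortest feature map, and the coarse branch, with a single stage, keeps the longest. This matches the intent of combining fine and coarse views before the fully-connected stage.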

5. Experiments

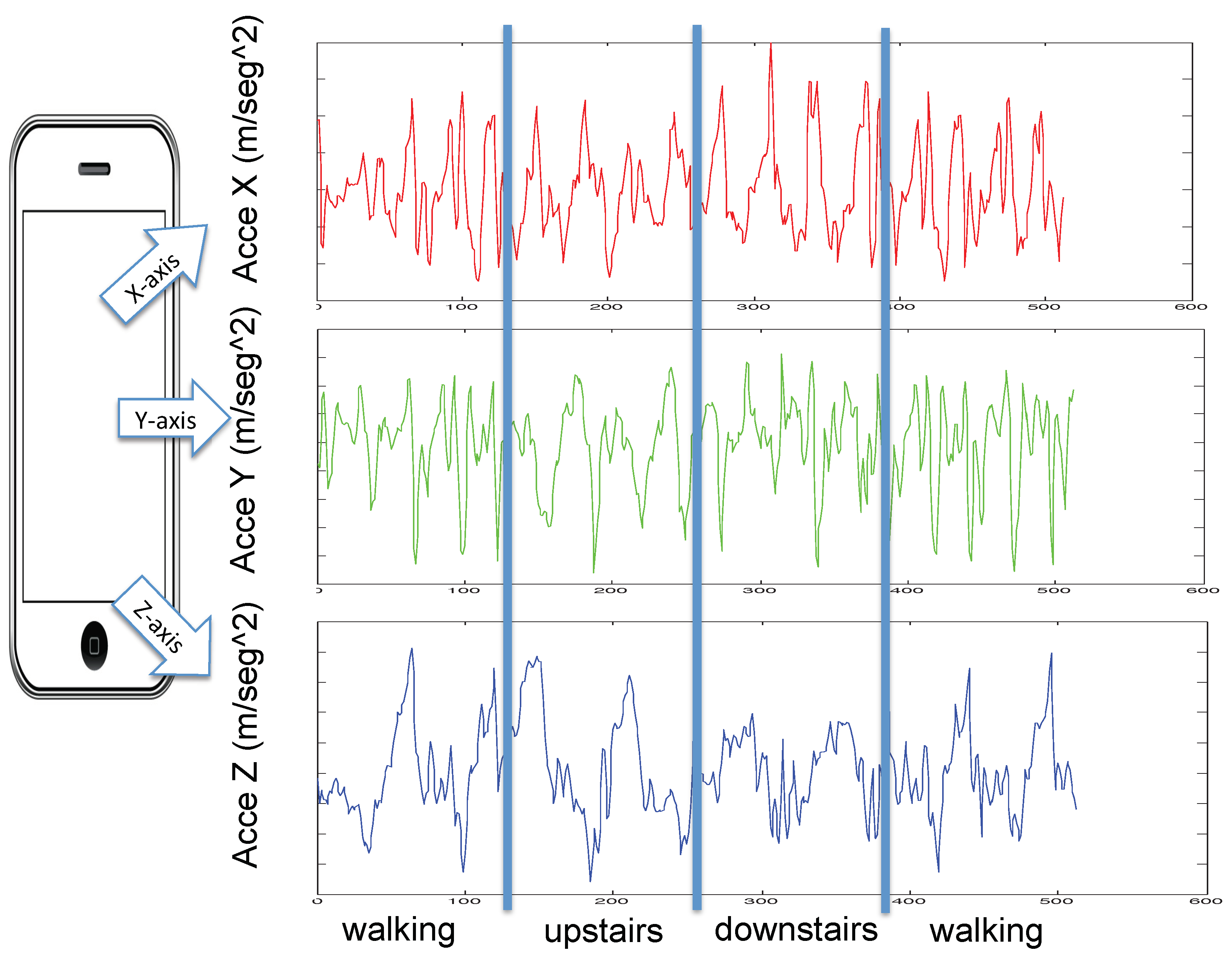

5.1. UCI HAR Dataset

5.2. Data Set

- Subject-Dependent dataset: For the training stage (See second column of Table 1), a full learning database was formed by 7352 trials of 21 volunteers (70% of the volunteers in the whole database). The training dataset is composed of 7352 trials multiplied by 128 samples multiplied by 6 axes (a $7352 \times 128 \times 6$ matrix).

- Subject-Independent dataset: For the testing stage (See third column of Table 1), a database was formed by 2947 trials of 9 volunteers (30% of the volunteers in the whole database). The testing dataset is composed of 2947 trials multiplied by 128 samples multiplied by 6 axes (a $2947 \times 128 \times 6$ matrix).
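The dataset dimensions can be checked from the per-activity trial counts reported in Table 1. The sketch below only does this arithmetic; the variable and function names are illustrative, and it does not load the actual UCI HAR files.

```python
# Per-activity trial counts as reported in Table 1.
TRAIN_COUNTS = {
    "walking": 1226, "walking-upstairs": 1073, "walking-downstairs": 986,
    "sitting": 1286, "standing": 1374, "laying": 1407,
}
TEST_COUNTS = {
    "walking": 496, "walking-upstairs": 471, "walking-downstairs": 420,
    "sitting": 491, "standing": 532, "laying": 537,
}
SAMPLES_PER_TRIAL = 128  # window length
AXES = 6                 # input channels

def tensor_shape(counts):
    """Shape of the (trials, samples, axes) array for one split."""
    return (sum(counts.values()), SAMPLES_PER_TRIAL, AXES)

train_shape = tensor_shape(TRAIN_COUNTS)
test_shape = tensor_shape(TEST_COUNTS)

# Share of trials (not volunteers) that fall in the training split.
train_fraction = train_shape[0] / (train_shape[0] + test_shape[0])
print(train_shape, test_shape, round(train_fraction, 3))
```

The split is made by volunteer, so the share of trials in the training set does not have to equal the share of volunteers exactly.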

6. Evaluation

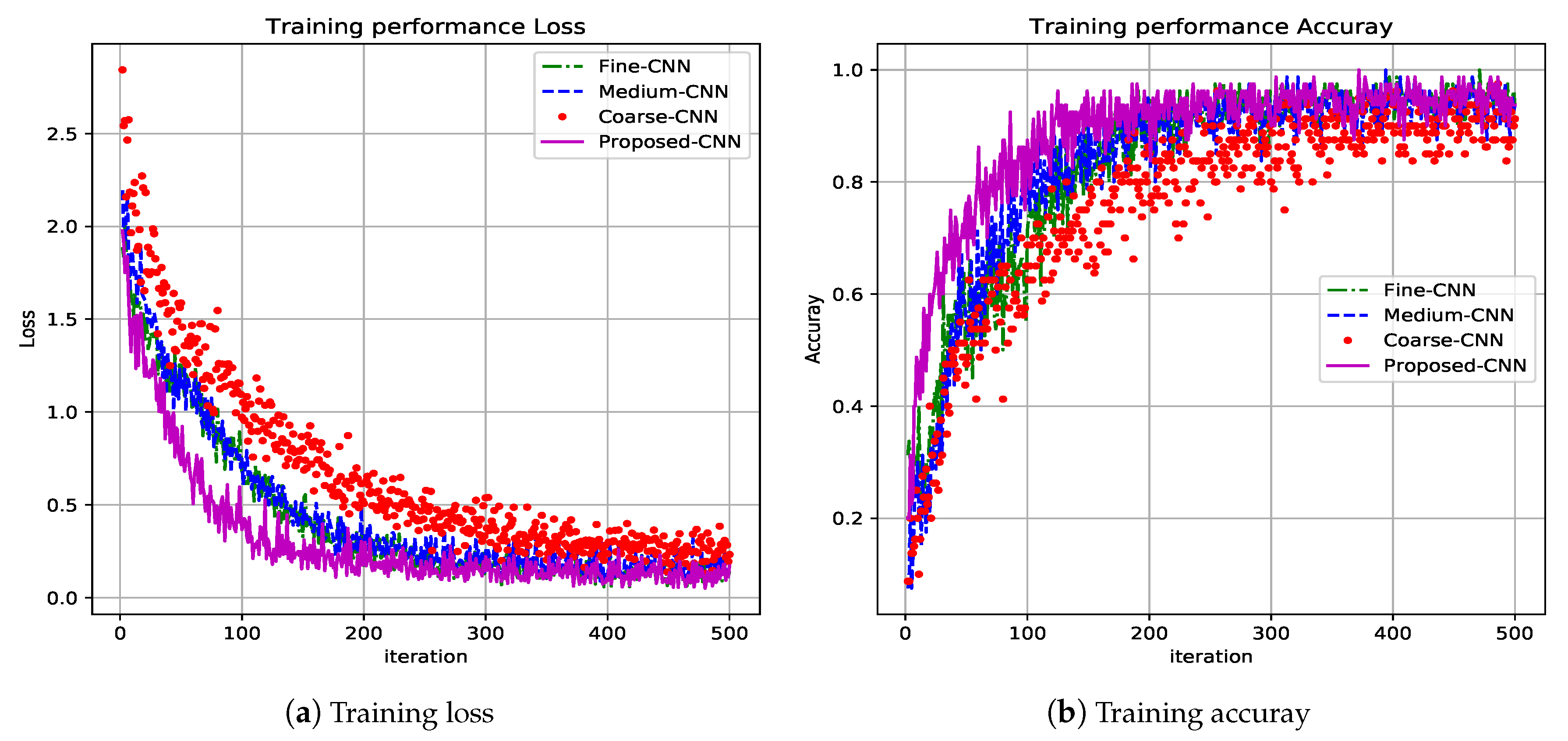

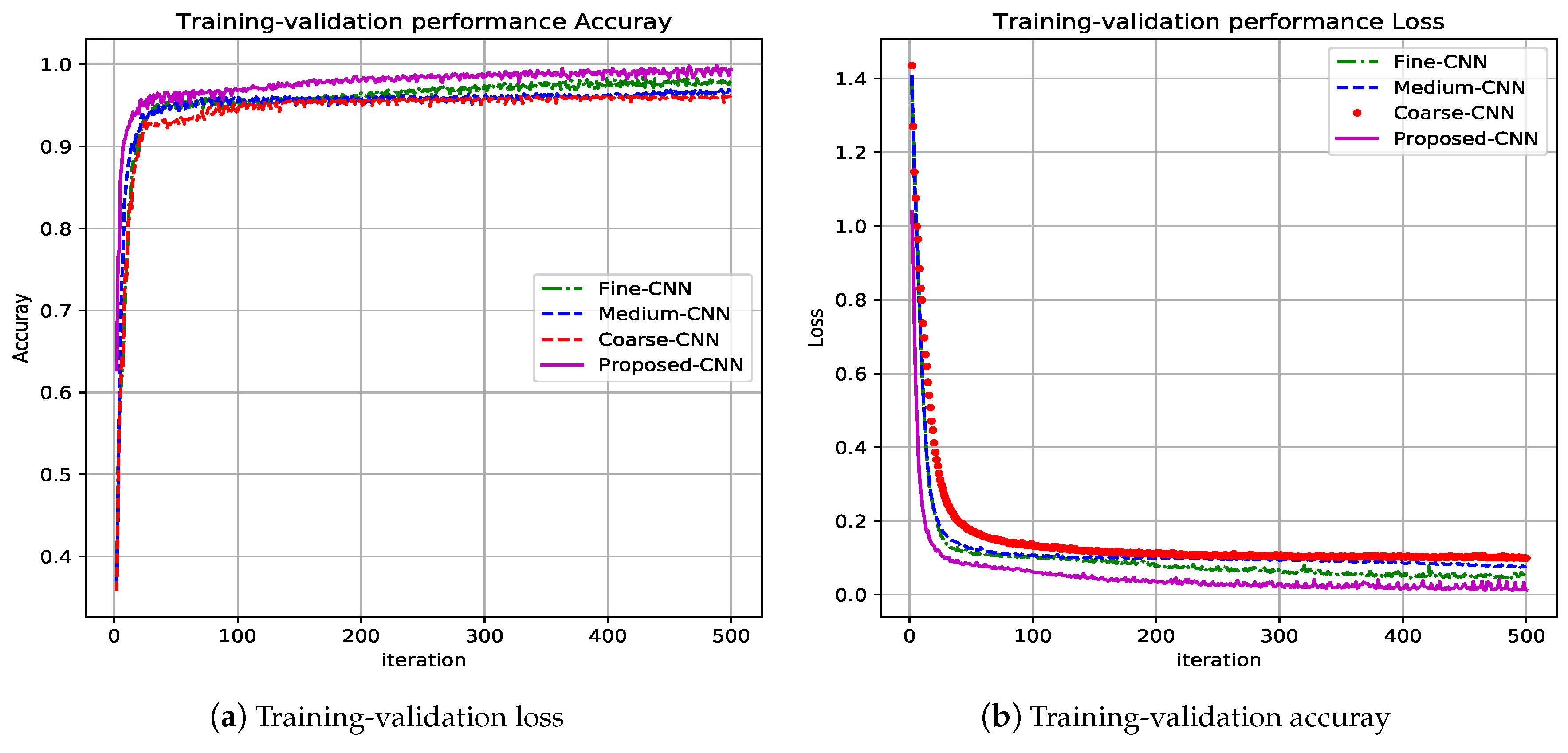

6.1. Learning Evaluation

6.2. Cross-Dataset Evaluation

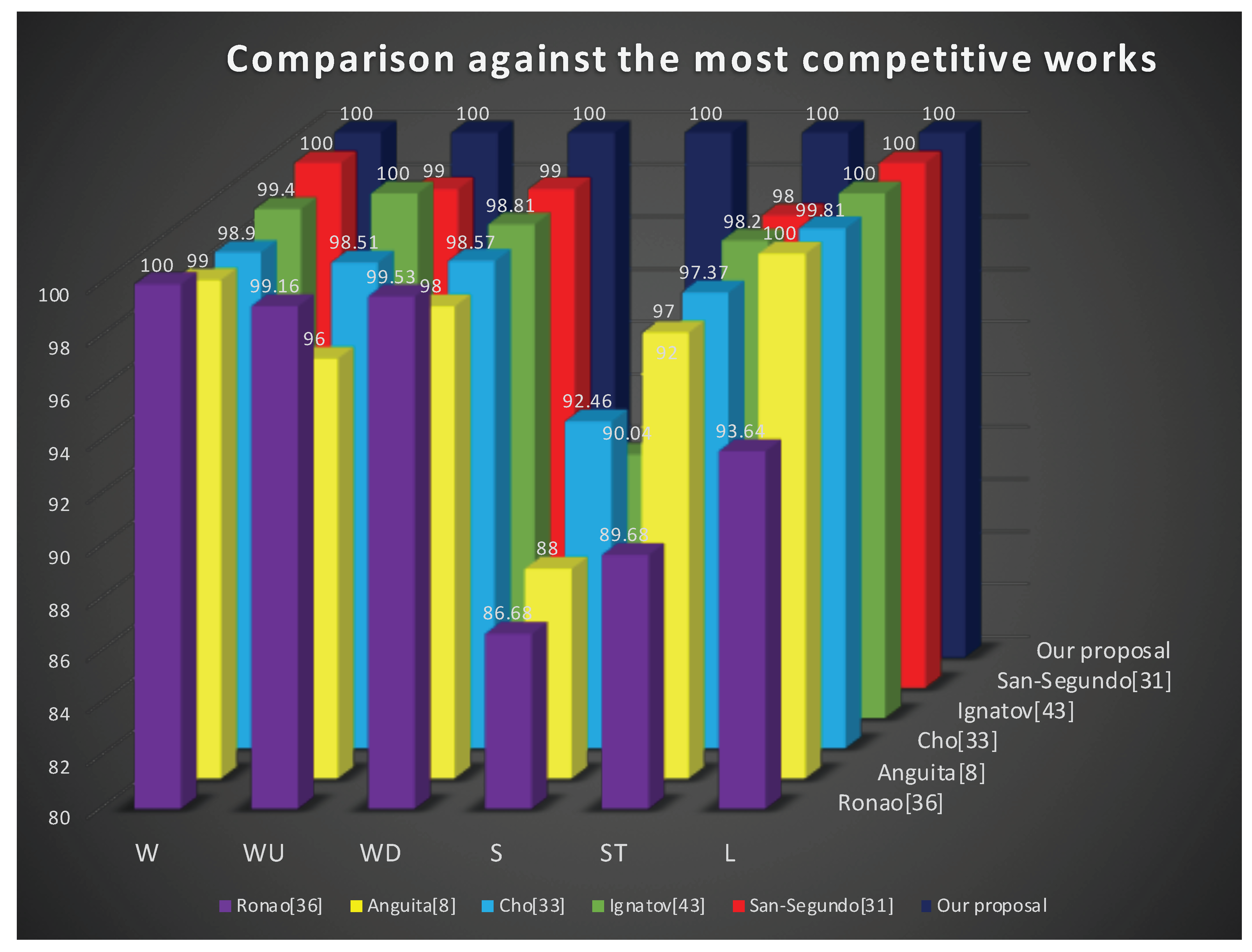

6.3. Comparison to Related Work

7. WISDM Dataset

7.1. Evaluation

7.2. Comparison to Related Work

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Lara, O.; Labrador, M. A survey on human activity recognition using wearable sensors. IEEE Commun. Surv. Tutor. 2013, 15, 1192–1209.

2. Chen, L.; Hoey, J.; Nugent, C.; Cook, D.; Yu, Z. Sensor-based activity recognition. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2012, 42, 790–808.

3. Anguita, D.; Ghio, A.; Oneto, L.; Parra, X.; Reyes-Ortiz, J.L. A Public Domain Dataset for Human Activity Recognition Using Smartphones. In Proceedings of the European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN 2013), Bruges, Belgium, 24–26 April 2013; ISBN 978-2-87419-081-0.

4. Le, T.D.; Nguyen, C.V. Human Activity Recognition by smartphone. In Proceedings of the 2nd National Foundation for Science and Technology Development Conference on Information and Computer Science, Ho Chi Minh City, Vietnam, 16–18 September 2015; pp. 219–224.

5. Liang, Y.; Zhou, X.; Yu, Z.; Guo, B. Energy-Efficient Motion Related Activity Recognition on Mobile Devices for Pervasive Healthcare. Mob. Netw. Appl. 2014, 19, 303–317.

6. Zhang, M.; Sawchuk, A.A. Motion Primitive-based Human Activity Recognition Using a Bag-of-features Approach. In Proceedings of the 2nd ACM SIGHIT International Health Informatics Symposium, Miami, Florida, USA, 28–30 January 2012; pp. 631–640.

7. Lane, N.; Miluzzo, E.; Lu, H.; Peebles, D.; Choudhury, T.; Campbell, A. A Survey of Mobile Phone Sensing. IEEE Commun. Mag. 2010, 48, 140–150.

8. Liu, J.; Wang, Z.; Zhong, L.; Wickramasuriya, J.; Vasudevan, V. Wave: Accelerometer-Based Personalized Gesture Recognition and Its Applications. In Proceedings of the Seventh Annual IEEE International Conference on Pervasive Computing and Communications (PerCom 2009), Galveston, TX, USA, 9–13 March 2009.

9. Zappi, P.; Lombriser, C.; Stiefmeier, T.; Farella, E.; Roggen, D.; Benini, L.; Tröster, G. Activity Recognition from On-Body Sensors: Accuracy-Power Trade-Off by Dynamic Sensor Selection. In Proceedings of the 5th European Conference on Wireless Sensor Networks, Bologna, Italy, 30 January–1 February 2008; Verdone, R., Ed.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 17–33.

10. Roggen, D.; Calatroni, A.; Rossi, M.; Holleczek, T.; Forster, K.; Troster, G.; Lukowicz, P.; Bannach, D.; Pirkl, G.; Ferscha, A.; et al. Collecting complex activity datasets in highly rich networked sensor environments. In Proceedings of the 2010 Seventh International Conference on Networked Sensing Systems (INSS), Kassel, Germany, 15–18 June 2010; pp. 233–240.

11. Lockhart, J.W.; Weiss, G.M.; Xue, J.C.; Gallagher, S.T.; Grosner, A.B.; Pulickal, T.T. Design Considerations for the WISDM Smart Phone-based Sensor Mining Architecture. In Proceedings of the Fifth International Workshop on Knowledge Discovery from Sensor Data (SensorKDD ’11), San Diego, CA, USA, 21 August 2011; ACM: New York, NY, USA, 2011; pp. 25–33.

12. Micucci, D.; Mobilio, M.; Napoletano, P. UniMiB SHAR: A new dataset for human activity recognition using acceleration data from smartphones. Appl. Sci. 2017, 7, 1101.

13. Kwapisz, J.R.; Weiss, G.M.; Moore, S.A. Activity Recognition using Cell Phone Accelerometers. In Proceedings of the Fourth International Workshop on Knowledge Discovery from Sensor Data (at KDD-10), Washington, DC, USA, 25–28 July 2010.

14. Zhang, C.; Yang, X.; Lin, W.; Zhu, J. Recognizing Human Group Behaviors with Multi-group Causalities. In Proceedings of the 2012 IEEE/WIC/ACM International Joint Conferences on Web Intelligence and Intelligent Agent Technology, Volume 03; IEEE Computer Society: Washington, DC, USA, 2012; pp. 44–48.

15. Fan, Y.; Yang, H.; Zheng, S.; Su, H.; Wu, S. Video Sensor-Based Complex Scene Analysis with Granger Causality. Sensors 2013, 13, 13685–13707.

16. Zhou, Z.; Li, K.; He, X. Recognizing Human Activity in Still Images by Integrating Group-Based Contextual Cues. In Proceedings of the 23rd ACM International Conference on Multimedia (MM’15), Brisbane, Australia, 26–30 October 2015; ACM: New York, NY, USA, 2015; pp. 1135–1138.

17. Onofri, L.; Soda, P.; Pechenizkiy, M.; Iannello, G. A Survey on Using Domain and Contextual Knowledge for Human Activity Recognition in Video Streams. Expert Syst. Appl. 2016, 63, 97–111.

18. Zheng, Y.; Yao, H.; Sun, X.; Zhao, S.; Porikli, F. Distinctive action sketch for human action recognition. Signal Process. 2018, 144, 323–332.

19. Ji, X.; Cheng, J.; Feng, W.; Tao, D. Skeleton embedded motion body partition for human action recognition using depth sequences. Signal Process. 2018, 143, 56–68.

20. Ghosh, A.; Riccardi, G. Recognizing Human Activities from Smartphone Sensor Signals. In Proceedings of the 22nd ACM International Conference on Multimedia (MM’14), Orlando, FL, USA, 3–7 November 2014; ACM: New York, NY, USA, 2014; pp. 865–868.

21. Dao, M.S.; Nguyen-Gia, T.A.; Mai, V.C. Daily Human Activities Recognition Using Heterogeneous Sensors from Smartphones. Procedia Comput. Sci. 2017, 111, 323–328.

22. Hui, S.; Zhongmin, W. Compressed sensing method for human activity recognition using tri-axis accelerometer on mobile phone. J. China Univ. Posts Telecommun. 2017, 24, 31–71.

23. Harjanto, F.; Wang, Z.; Lu, S.; Tsoi, A.C.; Feng, D.D. Investigating the impact of frame rate towards robust human action recognition. Signal Process. 2016, 124, 220–232.

24. Lane, N.; Mohammod, M.; Lin, M.; Yang, X.; Lu, H.; Ali, S.; Doryab, A.; Berke, E.; Choudhury, T.; Campbell, A.B. A smartphone application to monitor, model and promote wellbeing. In Proceedings of the 5th International ICST Conference on Pervasive Computing Technologies for Healthcare, Dublin, Ireland, 23–26 May 2011; pp. 23–26.

25. Kose, M.; Incel, O.; Ersoy, C. Online Human Activity Recognition on Smart Phones. In Proceedings of the Workshop on Mobile Sensing: From Smartphones and Wearables to Big Data, Beijing, China, 16–20 April 2012; pp. 11–15.

26. Das, S.; Green, L.; Perez, B.; Murphy, M.; Perring, A. Detecting User Activities Using the Accelerometer on Android Smartphones; Technical Report; Carnegie Mellon University: Pittsburgh, PA, USA, 2010.

27. Thiemjarus, S.; Henpraserttae, A.; Marukatat, S. A study on instance-based learning with reduced training prototypes for device-context-independent activity recognition on a mobile phone. In Proceedings of the 2013 IEEE International Conference on Body Sensor Networks (BSN), Cambridge, MA, USA, 6–9 May 2013; pp. 1–6.

28. Kim, T.; Cho, J.; Kim, J. Mobile Motion Sensor-Based Human Activity Recognition and Energy Expenditure Estimation in Building Environments. Smart Innov. Syst. Technol. 2013, 22, 987–993.

29. Siirtola, P. Recognizing Human Activities User-independently on Smartphones Based on Accelerometer Data. Int. J. Interact. Multimed. Artif. Intell. 2012, 1, 38–45.

30. Zhao, K.; Du, J.; Li, C.; Zhang, C.; Liu, H.; Xu, C. Healthy: A Diary System Based on Activity Recognition Using Smartphone. In Proceedings of the 2013 IEEE 10th International Conference on Mobile Ad-Hoc and Sensor Systems (MASS), Hangzhou, China, 14–16 October 2013; pp. 290–294.

31. Khan, A.; Siddiqi, M.; Lee, S. Exploratory Data Analysis of Acceleration Signals to Select Light-Weight and Accurate Features for Real-Time Activity Recognition on Smartphones. Sensors 2013, 13, 13099–13122.

32. Guiry, J.; van de Ven, P.; Nelson, J. Orientation independent human mobility monitoring with an android smartphone. In Proceedings of the IASTED International Conference on Assistive Technologies, Innsbruck, Austria, 15–17 February 2012; pp. 800–808.

33. San-Segundo-Hernández, R.; Lorenzo-Trueba, J.; Martínez-González, B.; Pardo, J.M. Segmenting human activities based on HMMs using smartphone inertial sensors. Pervasive Mob. Comput. 2016, 30, 84–96.

34. San-Segundo, R.; Montero, J.M.; Barra-Chicote, R.; Fernández, F.; Pardo, J.M. Feature extraction from smartphone inertial signals for human activity segmentation. Signal Process. 2016, 120, 359–372.

35. Cho, H.; Yoon, S.M. Divide and Conquer-Based 1D CNN Human Activity Recognition Using Test Data Sharpening. Sensors 2018, 18, 1055.

36. Ignatov, A. Real-time human activity recognition from accelerometer data using Convolutional Neural Networks. Appl. Soft Comput. 2018, 62, 915–922.

37. Jiang, W.; Yin, Z. Human Activity Recognition Using Wearable Sensors by Deep Convolutional Neural Networks. In Proceedings of the 23rd ACM International Conference on Multimedia (MM’15), Brisbane, Australia, 26–30 October 2015; ACM: New York, NY, USA, 2015; pp. 1307–1310.

38. Ronao, C.A.; Cho, S.B. Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst. Appl. 2016, 59, 235–244.

39. Zeng, M.; Nguyen, L.T.; Yu, B.; Mengshoel, O.J.; Zhu, J.; Wu, P.; Zhang, J. Convolutional Neural Networks for human activity recognition using mobile sensors. In Proceedings of the 6th International Conference on Mobile Computing, Applications and Services, Austin, TX, USA, 6–7 November 2014; pp. 197–205.

40. Yang, J.B.; Nguyen, M.N.; San, P.P.; Li, X.L.; Krishnaswamy, S. Deep Convolutional Neural Networks on Multichannel Time Series for Human Activity Recognition. In Proceedings of the 24th International Conference on Artificial Intelligence (IJCAI’15), Buenos Aires, Argentina, 25 July–31 August 2015; AAAI Press: Menlo Park, CA, USA, 2015; pp. 3995–4001.

41. Inoue, M.; Inoue, S.; Nishida, T. Deep Recurrent Neural Network for Mobile Human Activity Recognition with High Throughput. Artif. Life Robot. 2018, 23, 173–185.

42. Ordóñez, F.J.; Roggen, D. Deep Convolutional and LSTM Recurrent Neural Networks for Multimodal Wearable Activity Recognition. Sensors 2016, 16, 115.

43. Edel, M.; Köppe, E. Binarized-BLSTM-RNN based Human Activity Recognition. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Madrid, Spain, 4–7 October 2016; pp. 1–7.

44. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv, 2014; arXiv:1412.6980.

45. Shakya, S.R.; Zhang, C.; Zhou, Z. Comparative Study of Machine Learning and Deep Learning Architecture for Human Activity Recognition Using Accelerometer Data. Int. J. Mach. Learn. Comput. 2018, 8, 577–582.

| Activity | Training | Testing |
|---|---|---|
| walking | 1226 | 496 |
| walking-upstairs | 1073 | 471 |
| walking-downstairs | 986 | 420 |
| sitting | 1286 | 491 |
| standing | 1374 | 532 |
| laying | 1407 | 537 |
| Total | 7352 | 2947 |

| Parameter | Value |
|---|---|
| The size of input vector | 128 |
| The number of input channels | 6 |
| Filter size | |
| Pooling size | |
| Activation function | ReLU (rectified linear unit) |
| Learning rate | |
| Weight decay | |
| Momentum | 0.5–0.99 |
| The probability of dropout | |
| The size of minibatches | 500 |
| Maximum epochs | 2000 |

| | Walking | Ascending Stairs | Descending Stairs | Sitting | Standing | Laying |
|---|---|---|---|---|---|---|
| walking | 100 | 0 | 0 | 0 | 0 | 0 |
| ascending stairs | 0 | 100 | 0 | 0 | 0 | 0 |
| descending stairs | 0 | 0 | 100 | 0 | 0 | 0 |
| sitting | 0 | 0 | 0 | 100 | 0 | 0 |
| standing | 0 | 0 | 0 | 0 | 100 | 0 |
| laying | 0 | 0 | 0 | 0 | 0 | 100 |
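A per-activity score and its mean can be read off a confusion matrix like the one above by taking the diagonal entry of each row as a share of the row total. The sketch below assumes that each row corresponds to one true activity; the function names and the second, two-class matrix are made up purely for illustration.

```python
def per_class_rate(confusion):
    """Percentage of each row that lies on the diagonal."""
    rates = []
    for i, row in enumerate(confusion):
        total = sum(row)
        rates.append(100.0 * row[i] / total if total else 0.0)
    return rates

def mean_rate(confusion):
    """Unweighted mean of the per-class rates."""
    rates = per_class_rate(confusion)
    return sum(rates) / len(rates)

# Confusion matrix as reported above (rows already in percent).
reported = [
    [100, 0, 0, 0, 0, 0],
    [0, 100, 0, 0, 0, 0],
    [0, 0, 100, 0, 0, 0],
    [0, 0, 0, 100, 0, 0],
    [0, 0, 0, 0, 100, 0],
    [0, 0, 0, 0, 0, 100],
]

# Hypothetical two-class matrix showing a case with errors.
toy = [[90, 10], [20, 80]]

print(per_class_rate(reported), mean_rate(reported))
print(per_class_rate(toy), mean_rate(toy))
```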

| Method | Walking | Ascending Stairs | Descending Stairs | Sitting | Standing | Laying | Mean Average |
|---|---|---|---|---|---|---|---|
| Our proposal | | | | | | | |
| San-Segundo [33] | | | | | | | |
| Ignatov [36] | | | | | | | |
| Cho [35] | | | | | | | |
| Anguita [3] | 100 | | | | | | |
| Ronao [38] | | | | | | | |

| Activity | Number of Samples | Percentage |
|---|---|---|
| Walking | 424,400 | |
| Jogging | 342,177 | |
| Upstairs | 122,869 | |
| Downstairs | 100,427 | |
| Sitting | 59,939 | |
| Standing | 48,397 | |

| | Walking | Jogging | Upstairs | Downstairs | Sitting | Standing |
|---|---|---|---|---|---|---|
| walking | 100 | 0 | 0 | 0 | 0 | 0 |
| jogging | 0 | 100 | 0 | 0 | 0 | 0 |
| upstairs | 0 | 0 | 100 | 0 | 0 | 0 |
| downstairs | 0 | 0 | 0 | 100 | 0 | 0 |
| sitting | 0 | 0 | 0 | 0 | 100 | 0 |
| standing | 0 | 0 | 0 | 0 | 0 | 100 |

| Method | Walking | Jogging | Upstairs | Downstairs | Sitting | Standing | Mean Average |
|---|---|---|---|---|---|---|---|
| Our proposal | | | | | | | |
| Shakya [45] | | | | | | | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Avilés-Cruz, C.; Ferreyra-Ramírez, A.; Zúñiga-López, A.; Villegas-Cortéz, J. Coarse-Fine Convolutional Deep-Learning Strategy for Human Activity Recognition. Sensors 2019, 19, 1556. https://doi.org/10.3390/s19071556
