1. Introduction

In the field of measurement, a single sensor can seldom achieve high-precision measurements by itself. Multi-sensor measurement schemes combine the characteristics of each sensor, leveraging their complementary advantages and improving the accuracy and robustness of the measurement system. As [1] shows, laser range finders (LRFs) provide high-precision distance information, while cameras provide rich image information. The combination of LRFs and cameras has attracted wide attention, with applications in navigation [2], human detection [3] and 3D texture reconstruction [4].

Compared with the current mainstream schemes combining scanning lasers and vision, the more challenging combination of 1D laser ranging and vision has attracted the attention of researchers due to its low cost and wide applicability. The Shuttle Radar Topography Mission (SRTM) [5] realizes high-precision measurements of Interferometric Synthetic Aperture Radar (IFSAR) on long-range cooperative targets. Ordóñez [6] proposed a combination of a camera and a Laser Distance Meter (LDM) to estimate the length of a line segment in an unknown plane. Wu [7] applied this method to a visual odometry (VO) system and demonstrated it in a quasi-planar scene. In our previous work, we further extended this method and constructed a complete SLAM method based on laser-vision fusion [1].

Sensor calibration is the premise of data fusion, and includes the calibration of each sensor’s own parameters and of the relative relationship between the sensors’ data [8]. However, although it is a necessary prerequisite for high-precision measurements, the calibration technology of 1D laser-camera systems has seen little progress. The existing calibration algorithms based on scanning laser ranging cannot be applied, and the traditional one-dimensional laser calibration algorithms require a high-precision manipulator, a laser interferometer and other complex equipment.

In this paper, we propose a simple and feasible high-precision laser-vision calibration algorithm that calibrates the parameters of the laser and camera sensors through only simple data processing. First, the camera parameters and distortion coefficients are determined using a non-iterative method. Then, the coordinates of the laser spot in the camera coordinate system are obtained by back-projecting the laser image points, and the initial values of the camera-laser extrinsic parameters are estimated. Finally, the parameters are optimized through the parameterization of the rotation matrix [9] and the Gröbner basis method [10]. Compared with the existing methods, the main contributions of this paper are as follows:

- (1) The method proposed in this paper has wider applicability. It can be used for joint calibration of vision sensors and LRFs from 1D to 3D.

- (2) Compared with existing 1D laser-vision calibration methods, the proposed method can be realized using a simple chessboard target, without complicated customized targets or high-precision mechanical structures.

- (3) The accuracy and usability of the proposed method are verified by simulation and observation experiments.

This paper is organized as follows: the existing methods related to our work are outlined in Section 2. Section 3 and Section 4 describe the mathematical model and illustrate the proposed algorithm. In Section 5, we evaluate the proposed solution through simulation and observation experiments. Finally, conclusions and future work are provided in Section 6.

2. Related Work

Calibrating the extrinsic parameters between an LRF and a vision sensor makes it possible to combine the high-precision distance information of laser ranging with the high lateral resolution of vision, and thereby to achieve high-precision pose estimation. However, existing methods are mostly used to calibrate 2D or 3D LRFs and cameras.

Vasconcelos [11] calibrated the camera-laser extrinsic parameters by moving a checkerboard freely. This method assumes that the internal parameters are known and accurate, and converts the external parameter calibration problem into a plane-coplanar alignment problem to reach an exact solution. Similar work includes Scaramuzza [12] and Ha [13]. Ranjith [14] realized the correlation and calibration of 3D LiDAR data and image data through feature point retrieval. Zhang [15] used a mobile LRF and a camera to achieve self-calibration of their external parameters through motion constraints. Viejo [16] realized the correlation of the two sets of data by arranging control points, and calibrated the external parameters of a 3D LiDAR and a monocular camera.

However, the above algorithms are mostly used to calibrate the external parameters of 2D or 3D scanning laser and vision systems, and cannot be used for 1D laser ranging without a scanning mechanism due to the lack of constraints. For the calibration of 1D LRFs, traditional methods mostly relate the two sensors by means of a complex manipulator or a specific calibration target. For example, Zhu’s [17] calibration algorithm calibrates the direction and position parameters of a laser range finder based on spherical fitting. The calibration accuracy is high, but the solution is highly customized and not universal. Lu [18] designed a multi-directional calibration block to calibrate the laser beam direction of a point laser probe on the platform of a coordinate measuring machine. Zhou [19] proposed a new calibration algorithm for serial coordinate measuring machines (CMMs) with cylindrical and conical surfaces as calibration objects. Similar calibration methods are used in the implementation of the LRF-camera SLAM method [1], where the relative rotation and translation of the two sensors’ coordinate systems are estimated through a high-precision laser tracker.

Although these methods can achieve high accuracy, they require the sensors to be installed on precision measuring equipment, which makes calibration costly and complex to operate, and they cannot meet the needs of low-cost, rapidly deployed applications such as existing robots. In 2010, Ordóñez [6] proposed a method combining a camera and an LRF to measure short distances in a plane. In another study [20], the author introduces a preliminary calibration method for a digital camera and a laser rangefinder. The experiment involves the manual adjustment of the projection center of the laser pointer, and only two laser projections are used, so both the accuracy and the robustness of the calibration are limited. After that, Wu et al. [7] proposed a two-part calibration method based on the RANSAC scheme, and solved the corresponding linear equation in the image by creating an index table of laser spots. However, this method does not work well when the laser beam is close to the optical axis of the camera, and no final accuracy evaluation criteria are given.

Zhang [21] proposed a simple calibration method for camera intrinsic parameters, where the parameters were determined using a non-linear method, and high accuracy was achieved. Afterwards, based on Zhang’s framework, researchers improved accuracy and extended the applicable scenes by designing different forms of targets [22,23,24] and improving the calibration algorithm [25,26,27]. Hartley [28] introduced the division distortion model to correct imaging distortion. On this basis, Hong [29] further explored the calibration of large-distortion cameras.

Currently, the calibration of omnidirectional cameras has attracted wide attention as a way to improve the user’s degree of freedom and immersion in virtual reality and autonomous driving. Li et al. [30] proposed a multi-phase camera calibration scheme based on a random pattern calibration board. Their method supports the calibration of a camera system which comprises normal pinhole cameras. Gwon Hwan [31] proposed a new intrinsic and extrinsic calibration method for omnidirectional cameras based on the Aruco marker and a Charuco board. The calibration structure and method avoid the need for overly complicated procedures to accurately calibrate multiple cameras.

At the same time, the calibration board also plays an important role in other calibration processes. Liu [32] studied different applications of lasers and cameras, and proposed a method for calibrating multiple cameras without a common field of view by means of a scanning laser rangefinder. In that work, the correlation between laser distance information and camera images is established through a specific calibration plate, so as to realize the relative pose estimation between cameras. Inspired by Liu’s work [32], we establish a constraint between the 1D laser and monocular vision by combining planar and coplanar constraints, so as to determine the related external parameters. Considering that the camera imaging model has a direct impact on the calibration accuracy, we have improved Zhang’s method [21] for camera calibration by replacing the traditional polynomial model with the division distortion model, and, building on Hartley [28] and Hong [29], we replace the iterative optimization with a linear solution obtained using variable least squares. Thus, the problem of falling into local optimal solutions is avoided, and the calibration speed is greatly improved. Combining the above innovations, we realize a convenient method for calibrating the parameters of the camera-laser measurement system, which completes the calibration of the camera internal parameters, distortion coefficients and camera-laser external parameters in one operation.

3. Measurement Model

Previous researchers established relatively mature camera imaging and laser measurement models. We integrate the two mathematical models and construct a complete mathematical description of the coordinate system.

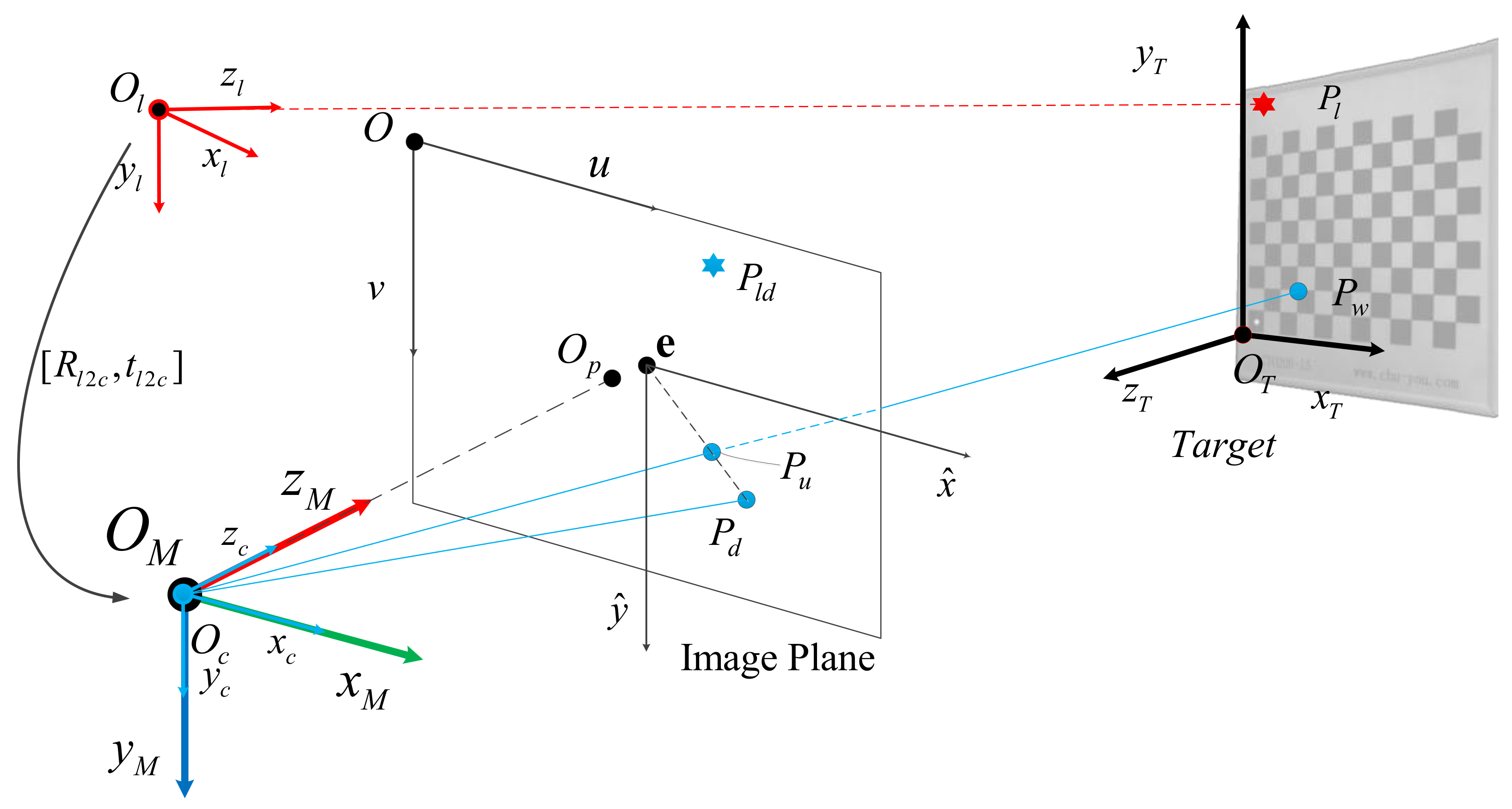

As shown in Figure 1,

is the camera coordinate system,

is the optical axis direction of the camera,

is the image plane of the camera,

is the coordinate system of the target itself, point

is the spatial position of the laser spot, point

is the spatial coordinate of the target control point and

represents the coordinate system of 1D laser ranging. We set the camera coordinate system

as the measurement coordinate system

of the system. In the next part, we introduce the imaging model of the monocular camera and the 1D laser ranging model, and convert and fuse the data through extrinsic parameters

.

3.1. Camera Imaging Model

In order to describe the imaging process of a monocular camera more accurately, we combine the lens distortion model with the pinhole imaging model and introduce the shift of the distortion center

relative to the image center

[

28]. In the camera coordinate system,

is the physical coordinate system of the image plane and

represents the image coordinate system. Image center Op denotes the intersection of the optical axis and the image plane.

The ideal imaging process can be described as the process of transforming a point

(

) in the world coordinate system to the image plane imaging point

(

) through a projection relationship. The mathematical expression is as follows:

where

is a depth scale factor, and the intrinsic matrix

is described by a five-parameter model;

are the focal lengths,

is the coordinate of the image center

, and

is the skew coefficient.

is the transformation matrix relating

to

and it can be expressed as a rotation matrix

combined with the translation vector

.
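As an illustration of the ideal projection described above, the following is a minimal sketch of the standard pinhole model with a five-parameter intrinsic matrix. The names `K`, `R`, `t`, `fx`, `fy`, `u0`, `v0` and `skew`, as well as the numerical values, are assumptions chosen for this example and are not necessarily the notation of Equation (1).

```python
import numpy as np

def project_pinhole(K, R, t, X_w):
    """Project a 3D point given in the target frame to ideal pixel coordinates."""
    X_c = R @ X_w + t      # target frame -> camera frame
    m = K @ X_c            # homogeneous image point, scaled by the depth factor
    return m[:2] / m[2]    # divide out the depth scale factor

# Hypothetical intrinsic parameters (five-parameter model).
fx, fy, u0, v0, skew = 800.0, 820.0, 320.0, 240.0, 0.0
K = np.array([[fx, skew, u0],
              [0.0, fy, v0],
              [0.0, 0.0, 1.0]])

# Hypothetical pose: target plane parallel to the image plane, 2 units away.
R = np.eye(3)
t = np.array([0.0, 0.0, 2.0])

uv = project_pinhole(K, R, t, np.array([0.1, -0.05, 0.0]))
print(uv)
```

The depth scale factor corresponds to the third component of `K @ X_c`, which is why the projection ends with a division by `m[2]`.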

Due to lens design and manufacturing, the actual imaging process is distorted. We introduce a division distortion model to describe this distortion. The mathematical expressions are as follows:

represents the actual position of projection point

, and its coordinates are

;

and

are the distortion coefficients and

represents the distance from point

to the distortion center

, expressed as

.
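The division model can be sketched in a few lines. The version below assumes the common two-coefficient form, in which the offset of a distorted point from the distortion center is divided by a polynomial in the squared radius; the function name, the coefficient names `k1` and `k2`, and the sample values are assumptions for illustration, and the exact form of Equation (2) may differ.

```python
import numpy as np

def undistort_division(p_d, e, k1, k2=0.0):
    """Map a distorted image point p_d to its ideal position.

    Assumed division model: p_u = e + (p_d - e) / (1 + k1*r^2 + k2*r^4),
    where r is the distance from p_d to the distortion center e.
    """
    d = p_d - e
    r2 = d @ d
    return e + d / (1.0 + k1 * r2 + k2 * r2 ** 2)

e = np.array([320.0, 240.0])      # hypothetical distortion center
p_d = np.array([420.0, 240.0])    # distorted point, 100 px from the center
p_u = undistort_division(p_d, e, k1=-1e-6)
print(p_u)
```

A point located exactly at the distortion center is left unchanged, and the correction grows with the distance from that center.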

In order to illustrate the method more clearly, the most important parameters used in this paper and their meaning are shown in

Table 1.

3.2. LRF Model

The mathematical model of the 1D laser ranging module is relatively simple. The laser ranging module outputs single-point laser distance information by observing the reflected signal and calculating the optical path using image coherence [33]. Once the origin coordinate and the laser direction of the laser ranging module have been determined, the coordinate of the laser spot can be computed in the measurement coordinate system. In order to better represent the measurement results in the system measurement coordinate system

, we set up the Euclidean three-dimensional coordinate system

for the LRF module. The laser emission direction is

, the directions of

are perpendicular and parallel to the

plane, and the directions of

are determined by the right-hand rule, as shown in

Figure 1. The measured distance information

represents the distance from the origin

to the laser spot

.
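The LRF model amounts to a point moving along a ray. A minimal sketch, assuming the laser origin and emission direction are already expressed in the camera (measurement) coordinate system; the names `origin_c`, `direction_c` and `distance` are hypothetical.

```python
import numpy as np

def laser_spot_in_camera(origin_c, direction_c, distance):
    """Return the laser spot position: origin + distance * unit direction."""
    d = direction_c / np.linalg.norm(direction_c)   # normalize the direction
    return origin_c + distance * d

origin_c = np.array([0.05, 0.0, 0.0])     # hypothetical laser origin
direction_c = np.array([0.0, 0.0, 2.0])   # direction need not be unit length
spot = laser_spot_in_camera(origin_c, direction_c, 1.5)
print(spot)
```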

In the process of extrinsic parameter calibration, the coordinate origin of the laser ranging coordinate system and the laser emission direction need to be calculated. Finally, the transformation between the camera measurement system and the LRF coordinate system is estimated, and the rotation matrix and the translation vector are determined.

4. Methodology

Calibration of the measurement system is the process of determining the model parameters of the measurement system. For our system, a specific target is measured and imaged, and the model parameters are determined from the correspondence between the coordinates of the control points and their image coordinates. The main parameters are the intrinsic parameters of the camera and the extrinsic parameters between the LRF and the camera.

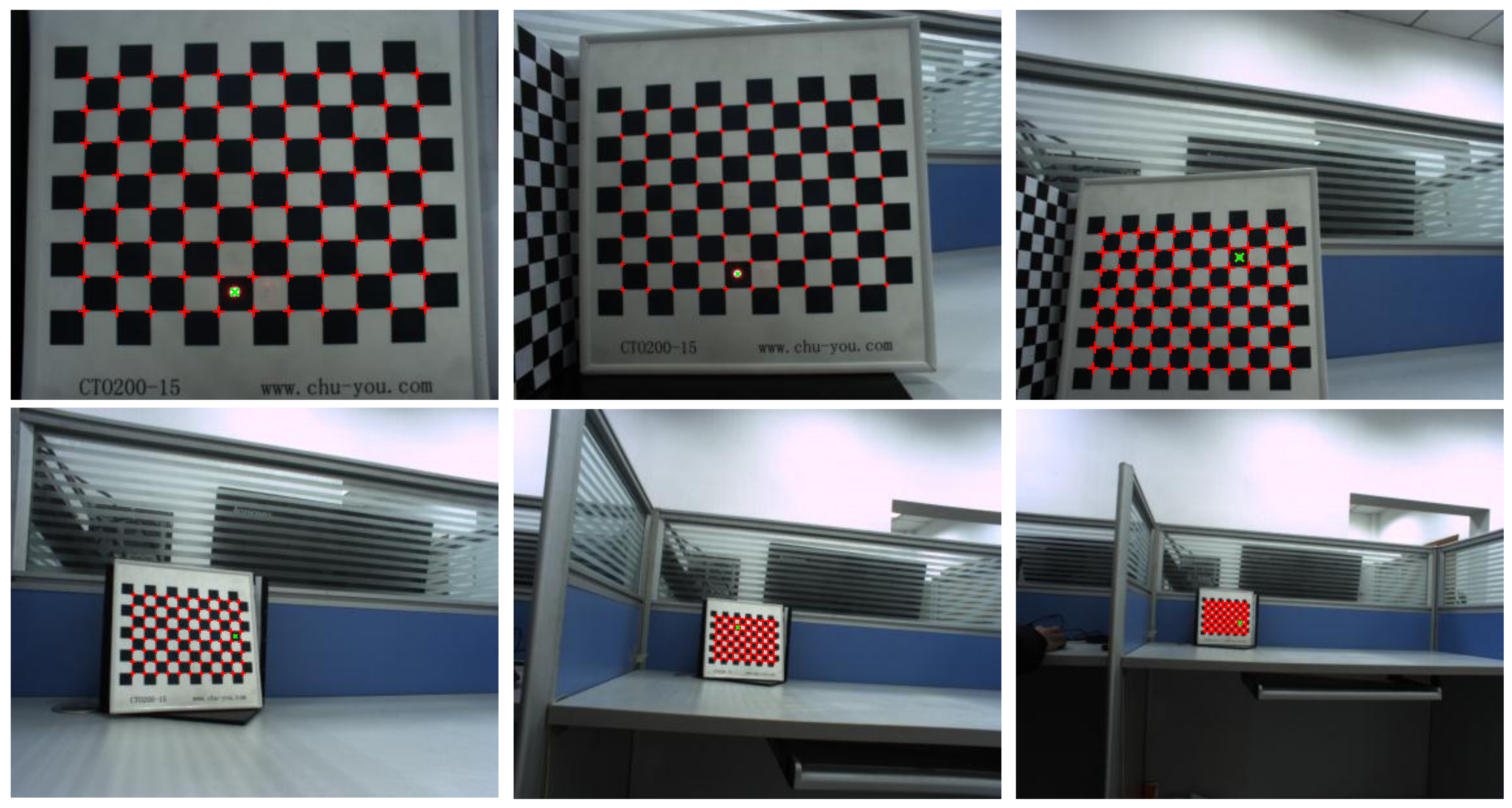

The specific calibration process is divided into four main steps: (1) estimating the camera distortion center; (2) decoupling the intrinsic parameters and distortion coefficients and determining them independently; (3) finding the extrinsic parameters for transforming the laser-vision coordinate system to the measurement coordinate system; (4) determining the optimal solution using the Gröbner basis method. In this section, we elaborate on these steps.

4.1. The Center of Distortion

In many studies, it is usually assumed that the distortion center and the principal point coincide, but Hartley [28] determined experimentally that there is a certain deviation between them. During the calibration process, we use a checkerboard as the calibration object, and extract the corners

of the checkerboard as the control points for camera calibration. Since the corners are distributed on a plane, we set the

in the target coordinate system, in which case the imaging model can be expressed as:

where

is the column vector of rotation matrix

. The above equation can be simplified as:

This matrix is called the homography matrix, and expresses the mapping relation between the corners of the checkerboard and the image points. The coordinates of are abbreviated as .

From the division model of Equation (2), we obtain:

We multiply the left side of the equations by

and combine it with Equation (4). In consideration of

:

We then multiply the left sides of the equations by

and obtain:

Let

.

is called the fundamental matrix of distortion and is expressed as follows:

We can solve the values of the fundamental matrix

using 8 pairs of corresponding corner points. The equation can be formulated as:

where:

The corresponding equations can be solved in the least squares sense when more than 8 points are available. The corresponding distortion center

is the left null vector of

:

So far, we have obtained the image coordinates of the distortion center. The corresponding homography matrix can then be obtained from the fundamental matrix.
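The estimation of the distortion center can be sketched as follows, under stated assumptions. Each corner correspondence contributes one linear equation in the nine entries of the fundamental matrix of distortion; the entries are recovered as the null vector of the stacked system, and the distortion center is the left null vector of the resulting matrix. The function name, the synthetic homography `H_true` and the radial distortion used to generate the test data are assumptions for illustration only.

```python
import numpy as np

def estimate_distortion_center(board_pts, image_pts):
    """Estimate the distortion center from N >= 8 planar correspondences.

    board_pts: (N, 2) corner coordinates on the target plane.
    image_pts: (N, 2) distorted image coordinates of the same corners.
    """
    n = len(board_pts)
    Xb = np.column_stack([board_pts, np.ones(n)])   # homogeneous target points
    xd = np.column_stack([image_pts, np.ones(n)])   # homogeneous image points
    # One equation xd^T F Xb = 0 per correspondence, linear in the entries of F.
    A = np.einsum("ni,nj->nij", xd, Xb).reshape(n, 9)
    _, _, Vt = np.linalg.svd(A)
    F = Vt[-1].reshape(3, 3)                        # least squares null vector
    # The distortion center e satisfies e^T F = 0 (left null vector of F).
    U, _, _ = np.linalg.svd(F)
    e = U[:, -1]
    return e[:2] / e[2], F

# Synthetic data: 7 x 7 grid, hypothetical homography and distortion center.
gx, gy = np.meshgrid(np.linspace(-1, 1, 7), np.linspace(-1, 1, 7))
board = np.column_stack([gx.ravel(), gy.ravel()])
H_true = np.array([[1.0, 0.1, 0.05],
                   [-0.08, 0.9, 0.02],
                   [0.05, 0.03, 1.0]])
e_true = np.array([0.03, -0.02])

proj = np.column_stack([board, np.ones(len(board))]) @ H_true.T
ideal = proj[:, :2] / proj[:, 2:]                   # undistorted image points
offset = ideal - e_true
r2 = np.sum(offset ** 2, axis=1, keepdims=True)
distorted = e_true + offset / (1.0 + 0.2 * r2)      # purely radial distortion

e_est, F_est = estimate_distortion_center(board, distorted)
print(e_est)
```

The sketch works in normalized image units to keep the linear system well conditioned; with pixel coordinates, normalizing the points before building the system would be advisable. The estimate is only well defined when the images actually contain radial distortion.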

4.2. Decoupling Camera Parameters

If the image coordinate origin

is moved to the distortion center

e, the new distortion center after translation is expressed as

. In the new coordinate system, Equation (8) is expressed as:

where

and

represent the transformed image coordinates

and the fundamental matrix

. The transformation relationship is as follows:

From the definition of

:

Equations (15) and (16) are then introduced into Equation (14):

So far, the first two rows

of the homography matrix have been obtained. Referring to Equations (2) and (4), the image distortion after translation can be expressed as follows:

An equation set can be obtained after sorting out:

For Equation (19) and the combined Equation (17), two equations can be obtained for each pair of corner points. When the number of corresponding points

(where

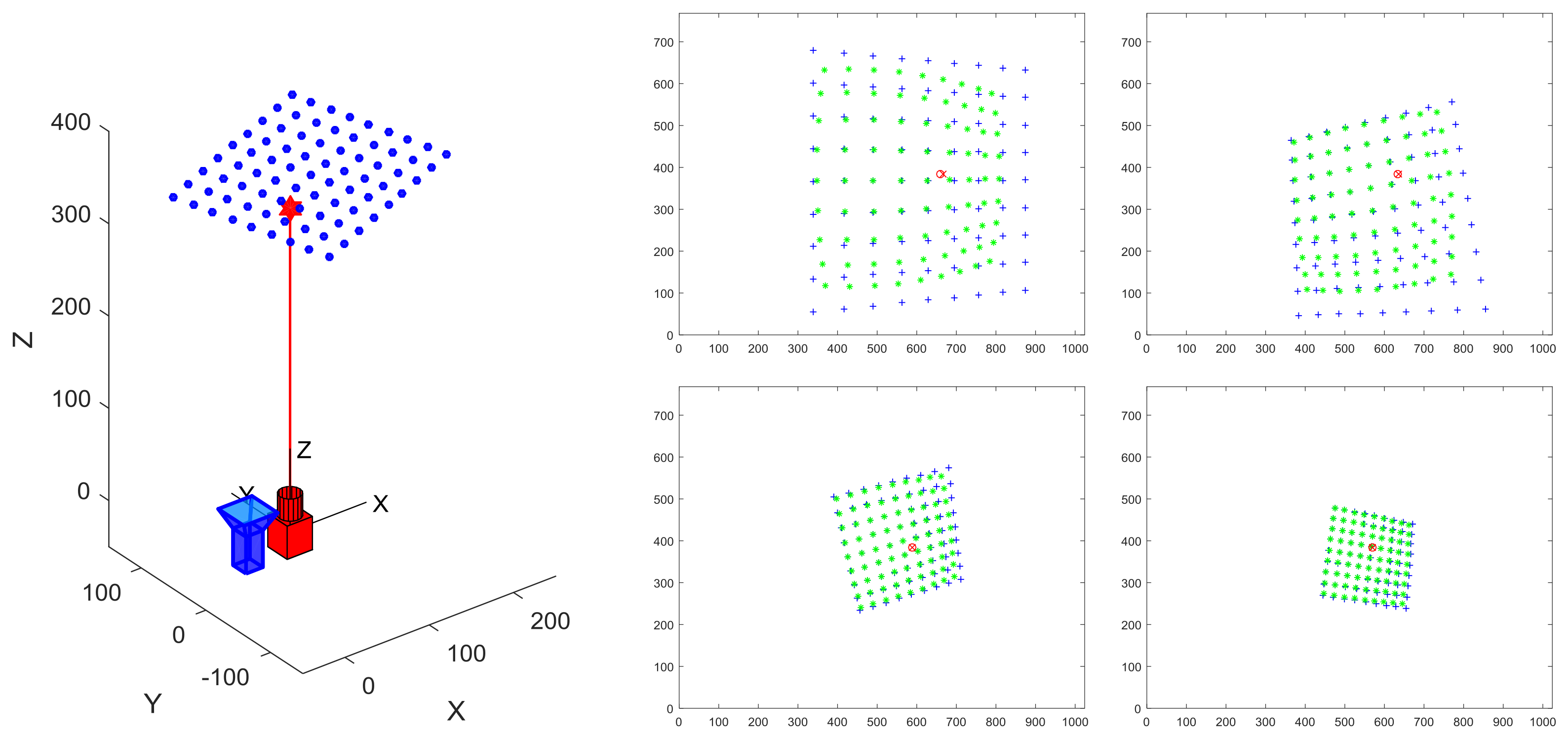

n denotes the number of distortion parameters), an overdetermined equation is obtained. This can be achieved by moving the target, as shown in

Figure 2. The homography matrix

and the distortion coefficients

can be obtained by using the least squares method.

4.3. Parameter Solution

From the perspective projection model, the imaging relationship of the translation sequence can be expressed as follows:

Equation (19) can be solved to obtain

. The initial homography matrix

can be calculated by substituting Equation (21). We set

. By using the orthogonality and normality of rotation matrix

, we obtain:

Since each image provides two constraints, at least three images are needed to find the five unknowns in the camera intrinsic parameter matrix

. If the camera collects

images from different directions for calibration, a set of linear equations containing

constrained equations can be established, which can be written in matrix form as follows:

where

is the coefficient matrix and

is the variable to be solved, with:

The solvable camera intrinsic parameters are:

Similarly, the camera parameters can be obtained:

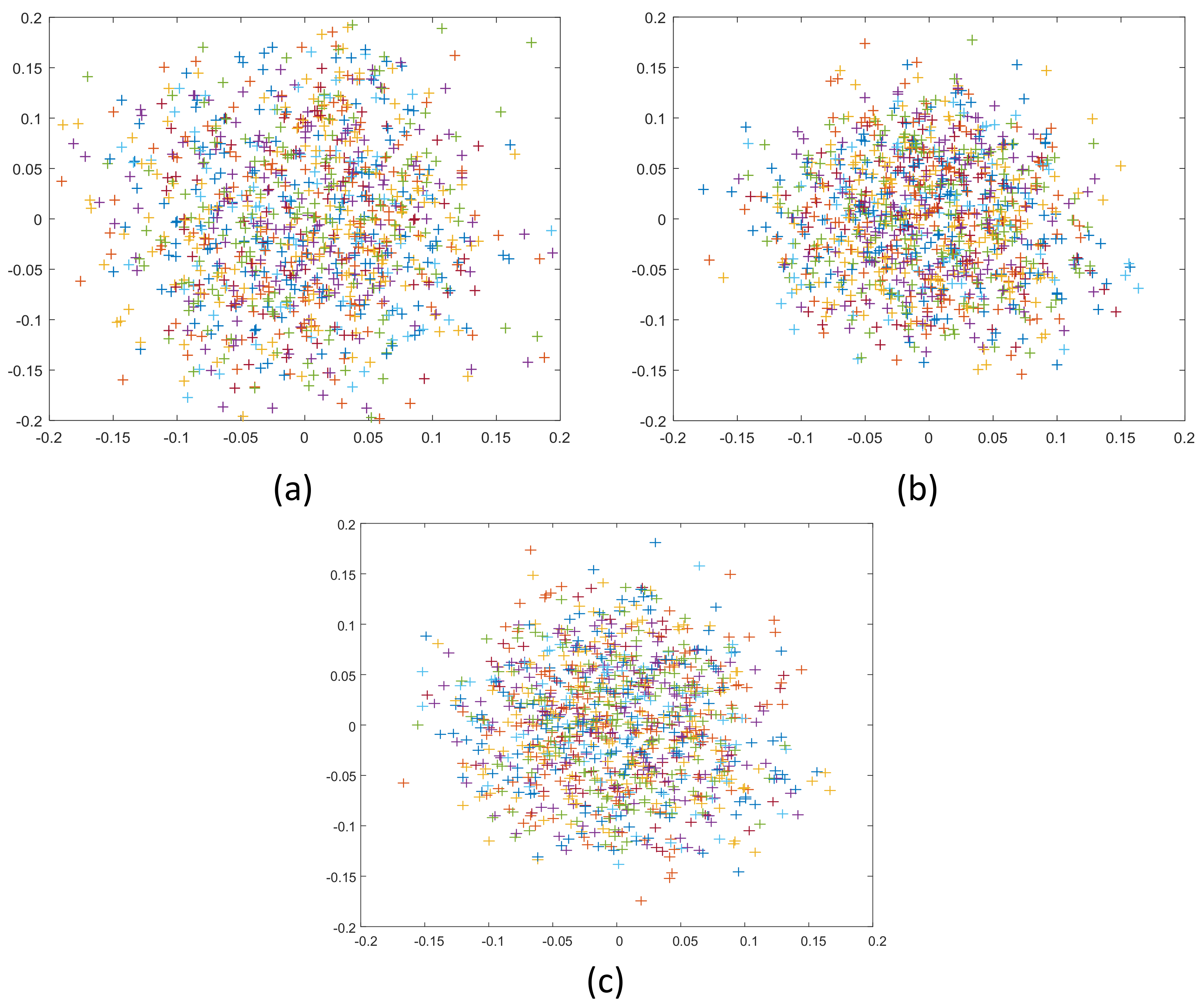
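The linear solution for the intrinsic parameters follows the well-known closed-form approach of Zhang [21]: each homography contributes two linear constraints derived from the orthonormality of the first two rotation columns. The sketch below is one possible implementation and not necessarily the exact formulation used here; the helper names, the Cholesky-based recovery of the intrinsic matrix, the coordinate normalization by `image_size`, and the synthetic poses are all assumptions for illustration.

```python
import numpy as np

def _v(H, i, j):
    """Constraint row such that h_i^T B h_j = _v(H, i, j) @ b."""
    hi, hj = H[:, i], H[:, j]
    return np.array([hi[0] * hj[0],
                     hi[0] * hj[1] + hi[1] * hj[0],
                     hi[1] * hj[1],
                     hi[2] * hj[0] + hi[0] * hj[2],
                     hi[2] * hj[1] + hi[1] * hj[2],
                     hi[2] * hj[2]])

def intrinsics_from_homographies(Hs, image_size):
    """Recover the intrinsic matrix from at least three homographies."""
    w, h = image_size
    s = 0.5 * (w + h)
    # Normalize pixel coordinates to improve numerical conditioning.
    N = np.array([[1 / s, 0, -0.5 * w / s], [0, 1 / s, -0.5 * h / s], [0, 0, 1]])
    rows = []
    for H in Hs:
        Hn = N @ H
        rows.append(_v(Hn, 0, 1))                  # h1^T B h2 = 0
        rows.append(_v(Hn, 0, 0) - _v(Hn, 1, 1))   # h1^T B h1 = h2^T B h2
    _, _, Vt = np.linalg.svd(np.array(rows))
    b = Vt[-1]
    B = np.array([[b[0], b[1], b[3]], [b[1], b[2], b[4]], [b[3], b[4], b[5]]])
    if B[0, 0] < 0:                                # fix the arbitrary sign
        B = -B
    L = np.linalg.cholesky(B)                      # B is proportional to K^-T K^-1
    Kn = np.linalg.inv(L).T
    Kn /= Kn[2, 2]
    return np.linalg.inv(N) @ Kn                   # undo the normalization

def rodrigues(rvec):
    """Rotation matrix from an axis-angle vector (helper for the demo)."""
    theta = np.linalg.norm(rvec)
    k = np.asarray(rvec) / theta
    Kx = np.array([[0, -k[2], k[1]], [k[2], 0, -k[0]], [-k[1], k[0], 0]])
    return np.eye(3) + np.sin(theta) * Kx + (1 - np.cos(theta)) * Kx @ Kx

# Synthetic check with hypothetical intrinsics and four target poses.
K_true = np.array([[800.0, 0.5, 320.0], [0.0, 820.0, 240.0], [0.0, 0.0, 1.0]])
poses = [((0.3, -0.2, 0.1), (0.1, -0.05, 2.0)),
         ((-0.25, 0.35, -0.15), (-0.1, 0.1, 2.5)),
         ((0.1, 0.4, 0.3), (0.05, 0.0, 1.8)),
         ((-0.4, -0.1, 0.2), (0.0, 0.08, 2.2))]
Hs = []
for rvec, t in poses:
    R = rodrigues(rvec)
    Hs.append(K_true @ np.column_stack([R[:, 0], R[:, 1], t]))

K_est = intrinsics_from_homographies(Hs, (640, 480))
print(np.round(K_est, 4))
```

Using a Cholesky factorization avoids writing out the closed-form expressions for each parameter, at the cost of requiring the estimated matrix to be positive definite, which holds for noise-free or mildly noisy data.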

Once the extrinsic parameters of the target have been obtained, the spatial coordinate of the laser spot in the camera coordinate system can be found by intersecting the ray from the camera optical center through the ideal image point with the known target plane. By moving the calibration board along the laser direction, the spatial position of the laser spot can be obtained at different distances over multiple acquisitions. By processing these data, the laser beam can be fitted as a straight line in the camera coordinate system.

Through data processing, the linear equation

of the laser beam in the camera coordinate system can be obtained in the form of Equation (29). By combining the distance information DL obtained through laser ranging, the spatial coordinates of the laser origin in the camera coordinate system can be obtained, and the transformation relationship

between the laser system and camera system can be estimated:

where

,

,

. Combining with Equation (27), we have:

By combining with the imaging model, the equation of the line through the laser spot and the camera optical center can be expressed in two-point form:

Solving Equations (30) and (31) simultaneously gives a unique solution, which is the coordinate of the laser spot on the target in the camera coordinate system.
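The intersection of the viewing ray with the target plane can be sketched as follows. The sketch assumes that the image point has already been corrected for distortion and that the target pose `R`, `t` maps the target frame, whose plane is Z = 0, to the camera frame; all names and values are hypothetical.

```python
import numpy as np

def laser_spot_on_target(K, R, t, uv):
    """Intersect the camera ray through pixel uv with the target plane.

    The plane has normal R[:, 2] and passes through t in the camera frame.
    """
    ray = np.linalg.solve(K, np.array([uv[0], uv[1], 1.0]))  # ray direction
    n = R[:, 2]                                              # plane normal
    lam = (n @ t) / (n @ ray)                                # scale along the ray
    return lam * ray

K = np.array([[800.0, 0.0, 320.0],
              [0.0, 820.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)                       # target parallel to the image plane
t = np.array([0.0, 0.0, 2.0])       # target origin 2 units in front of the camera
P = laser_spot_on_target(K, R, t, (360.0, 219.5))
print(P)
```

The solution is unique as long as the viewing ray is not parallel to the target plane, that is, as long as `n @ ray` is nonzero.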

After multiple measurements, the laser line is sampled by a set of spatial points

, as shown in

Figure 2. Constraints can be applied using a point-line relationship to solve the linear equation

corresponding to laser rays, such as:

Each space point provides two constraints, so at least two space points are needed to estimate the line equation. Finally, the position of the laser origin on the line is determined from the distance information obtained by ranging, and the relative laser-camera transformation matrix is determined according to the coordinate system established above.
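One simple way to carry out this step is a principal-direction line fit, followed by stepping back from the centroid by the mean measured distance. This is a sketch under that assumption and not necessarily the point-line constraint formulation used above; `points_c` (laser spots in the camera frame) and `distances` (the corresponding LRF readings) are hypothetical names.

```python
import numpy as np

def fit_laser_line(points_c, distances):
    """Fit the laser ray to 3D laser spots and locate the laser origin.

    Assumes points_c[i] = origin + distances[i] * direction, up to noise.
    """
    P = np.asarray(points_c, dtype=float)
    D = np.asarray(distances, dtype=float)
    centroid = P.mean(axis=0)
    # The principal direction of the centered points is the line direction.
    _, _, Vt = np.linalg.svd(P - centroid)
    d = Vt[0]
    # Orient the direction so that larger readings lie further along it.
    if (P - centroid) @ d @ (D - D.mean()) < 0:
        d = -d
    origin = centroid - D.mean() * d   # step back by the mean distance
    return origin, d

# Synthetic laser spots at four board positions.
o_true = np.array([0.05, -0.02, 0.0])
d_true = np.array([0.02, 0.01, 1.0])
d_true = d_true / np.linalg.norm(d_true)
D = np.array([1.0, 1.5, 2.0, 3.0])
pts = o_true + D[:, None] * d_true

o_est, d_est = fit_laser_line(pts, D)
print(o_est, d_est)
```

Two points are the minimum for a line; using more board positions spread over a larger depth range makes the direction estimate less sensitive to noise in the individual laser spots.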

4.4. Optimization of Solution

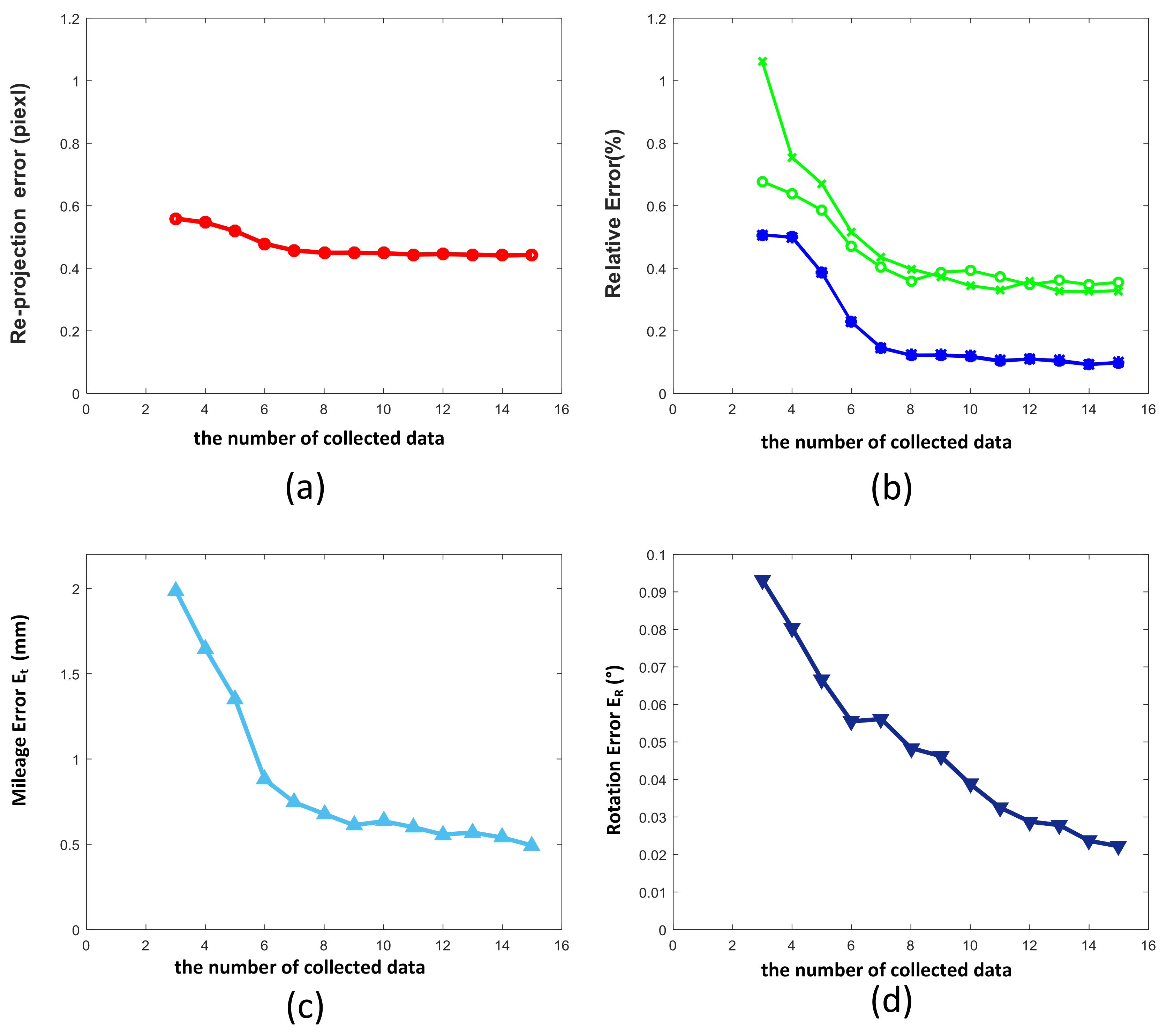

The above process does not involve any iteration. The camera internal parameters and laser-camera external parameters can be found using least squares. The calculation is fast and local minima are effectively avoided. To obtain higher accuracy, the calculated values can be taken as initial values, and the calibration accuracy of the system can be further improved through non-linear optimization.

Given

calibrated images, each image has

corners

and one laser projection point

. The following objective functions are then constructed:

where

and

represent the projection functions of corner points

and laser spot

under the division distortion model, and

is a weight coefficient that balances the contributions of the corner and laser points to the error; generally speaking,

.

Using the Cayley-Gibbs-Rodrigues (CGR) parameters [9] to parameterize the rotation matrix

, the latter can be expressed as a function of the CGR parameters

:

The problem is then transformed into an unconstrained optimization problem. The automatic Gröbner basis method [10] is used to solve Equation (32), and the minimum solution

can be obtained. A nonlinear optimization method is used to further improve the accuracy and stability of the solution.
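For reference, the CGR parameterization expresses a rotation as a rational function of three unconstrained parameters. The sketch below uses the standard form of this parameterization; the symbol `s` for the parameter vector is an assumption, and the sign convention of the skew-symmetric term may differ from the one used in [9].

```python
import numpy as np

def cgr_to_rotation(s):
    """Rotation matrix from Cayley-Gibbs-Rodrigues parameters s (3-vector).

    R = ((1 - s.s) I + 2 [s]x + 2 s s^T) / (1 + s.s),
    where s = tan(theta / 2) * axis for a rotation by theta about axis.
    """
    s = np.asarray(s, dtype=float)
    sx = np.array([[0.0, -s[2], s[1]],
                   [s[2], 0.0, -s[0]],
                   [-s[1], s[0], 0.0]])
    return ((1.0 - s @ s) * np.eye(3) + 2.0 * sx + 2.0 * np.outer(s, s)) / (1.0 + s @ s)

R90 = cgr_to_rotation([0.0, 0.0, 1.0])     # tan(45 deg) about the z axis
R_any = cgr_to_rotation([0.3, -0.2, 0.5])  # arbitrary parameters
print(R90)
```

Because every real parameter vector maps to a valid rotation, no orthogonality constraint has to be enforced during optimization. Rotations by exactly 180 degrees correspond to parameters of infinite norm and cannot be represented.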

In this part, we have completed the estimation of the optimal solution of all parameters, including the camera intrinsic parameter matrix, the distortion coefficients and the laser-camera external parameters.