Abstract

Significant progress has been achieved in the past few years for the challenging task of pedestrian detection. Nevertheless, a major bottleneck of existing state-of-the-art approaches lies in a great drop in performance with reducing resolutions of the detected targets. For the boosting-based detectors which are popular in pedestrian detection literature, a possible cause for this drop is that in their boosting training process, low-resolution samples, which are usually more difficult to be detected due to the missing details, are still treated equally importantly as high-resolution samples, resulting in the false negatives since they are more easily rejected in the early stages and can hardly be recovered in the late stages. To address this problem, we propose in this paper a robust multi-resolution detection approach with a novel group cost-sensitive boosting algorithm, which is derived from the standard AdaBoost algorithm to further explore different costs for different resolution groups of the samples in the boosting process, and to place greater emphasis on low-resolution groups in order to better handle the detection of multi-resolution targets. The effectiveness of the proposed approach is evaluated on the Caltech pedestrian benchmark and KAIST (Korea Advanced Institute of Science and Technology) multispectral pedestrian benchmark, and validated by its promising performance on different resolution-specific test sets of both benchmarks.

1. Introduction

Object detection is a hot and challenging topic in the computer vision and multimedia community [1]. As an important task in this domain, pedestrian detection has received special interest because of its considerable applications in practice, such as video surveillance, crowd understanding, tracking, assistant driving, and robot navigation. Owing to lots of effort and many different detection approaches proposed in the literature, impressive progress has been achieved in the past few years. Nevertheless, it is still difficult to detect multi-resolution pedestrians in images and videos (as shown in Figure 1), and existing approaches suffer from their great performance drop with reducing resolution of the detected targets. For example, state-of-the-art detectors in the literature nowadays can achieve less than 1% of a mean miss rate for the detection of pedestrians taller than 80 pixels in the Caltech pedestrian benchmark [2], while the mean miss rate significantly increases to more than 50% for the detection of pedestrians who are 30–80 pixels high. Meanwhile, it is required to achieve robust detection of low-resolution targets in certain circumstances. For example, accurate detection of low-resolution pedestrians is very important in assistant driving systems so that necessary time can be provided to take the reactions.

Figure 1.

Example images and ground truth annotations in the Caltech pedestrian benchmark. Note that the resolutions of the pedestrians are in a wide range.

Because of both high effectiveness and high efficiency, the boosting-based approaches are popular in pedestrian detection literature for detector training [2]. The main idea is to linearly train a series of weak classifiers and then combine them to construct a strong classifier. In the boosting process, each training sample is assigned with a weight which is used to calculate its corresponding classification cost, and is updated iteratively according to the classification results in each iteration so that the wrongly classified samples can be better emphasized. In the case of pedestrian detection, the truth is compared to the huge number of negative windows, where only small number of positive targets need to be detected. Therefore, the positive samples should possess greater weights during training so that a higher detection rate can be achieved. To that end, the researchers in the community have proposed several cost-sensitive boosting algorithms [3,4,5] where the false negatives are given more penalties than the false positives so that more importance is put on the positive samples. However, these algorithms are not optimal for multi-resolution detection, since they still treat all positive samples equally and ignore their intra-class variations. Due to the missing details of the appearances for low-resolution pedestrians, the features extracted from low-resolution samples are usually less discriminative than that from high-resolution ones, leading to the consequence that low-resolution pedestrians could be regraded as false negatives since they are more easily rejected during boosting in the early stages and can hardly be recovered in the late stages. Consequently, the trained detectors are possibly biased towards high-resolution pedestrians and leads to poorer performance on low-resolution pedestrians.

In order to address this problem, we propose in this paper a new group cost-sensitive boosting algorithm for robust multi-resolution pedestrian detection. In particular, we integrate the proposed algorithm with two representative detection frameworks: Locally Decorrelated Channel Features (LDCF) [6] and Convolutional Channel Features (CCF) [7], and propose a multi-resolution LDCF approach and a multi-resolution CCF approach, respectively. The proposed approaches can explore different costs for different resolution groups of the samples in the boosting process, and put greater importance on low-resolution pedestrians in order to better handle the detection of multi-resolution targets.

The main contributions of this work can be summarized as follows:

- Different from the existing approaches that treat all positive samples equally and ignore their intra-class variations in the boosting process, we propose a new group cost-sensitive boosting algorithm to further explore different costs for different resolution groups in positive set, so that low-resolution pedestrians can be better emphasized in the training process, leading to better detection in multi-resolution cases.

- We integrate the proposed algorithm with two representative detection frameworks: one is based on the classical hand-crafted features (LDCF) and the other is based on the popular deep-learning features (CCF), so that its effectiveness and generalization capability can be better validated.

- We evaluate the proposed approaches on two challenging pedestrian detection benchmarks (the Caltech pedestrian dataset and the KAIST multispectral pedestrian dataset), and the results show their promising performances compared to other state-of-the-art approaches on different resolution-specific test sets.

A preliminary version of this work appeared in [8]. This paper significantly extends it in the following ways. Firstly, we only consider the case of two resolution groups in the proposed boosting algorithm in [8], while in this paper we extend it to a generalized case of N resolution groups, so that the proposed approach can be more easily applied in other specific problems. Secondly, besides the LDCF detection framework as the baseline in [8], we also integrate in this paper the proposed algorithm with the CCF detection framework, which is based on the popular deep-learning features, in order to further improve detection performance and better validate its effectiveness. Thirdly, we add in the Appendix A of this paper a detailed proof of the key solution in the proposed group cost-sensitive boosting algorithm. Finally, besides the Caltech pedestrian benchmark used in [8], we conduct more experimental evaluation on an additional KAIST (Korea Advanced Institute of Science and Technology) multispectral pedestrian benchmark to validate the effectiveness of the proposed approach more extensively.

The remainder of the paper is organized as follows. After reviewing the related work in Section 2, we present the details of the proposed group cost-sensitive boosting algorithm for multi-resolution detection in Section 3. Then Section 4 reports the experimental evaluation for the effectiveness of the proposed approach. Finally, we conclude the paper in Section 5.

2. Related Work

Pedestrian detection has attracted attention for decades and has achieved impressive progress thanks to many effective detection techniques proposed in the literature [2]. However, only limited attention has been paid in the literature [9,10,11,12] on the problem of multi-resolution detection. In [9], a multi-resolution model of pedestrians was proposed consisting of a rigid HOG (Histogram of Oriented Gradient) template used to score low-resolution instances and a deformable part-based model used to score high-resolution instances. The motivation lies in that low-resolution instances usually lose lots of visual detail due to their small scales, meaning that a rigid HOG template is sufficient to characterize their global appearance features. On the contrary, high-resolution instances contain more detailed information; thus, a more complex part-based model could be applied to capture more detailed features from different parts and to improve accuracy. In [10], the authors propose training multiple models for different scales to perform multi-scale detection. Different from the traditional approaches that train N models, each for an individual scale, which is highly computational-cost centered, the key idea of the authors is to reduce the number of models for feature computation by a factor K and to resize images at training time instead of at test time. The computed N/K models will be used at test time to approximate the models in the remaining N-N/K scales. The main focus of this work is on detection speedup more than on detection accuracy. In [11], the authors propose an approach of using scale-independent features and one single classifier for all pedestrian scales. For image representation, HOG, LBP (Local Binary Patterns), and LUV color descriptors are adopted and the codebook maps are calculated based on the bag-of-visual-words model of each descriptor. These maps are then decomposed into channels for each individual word to obtain the proposed word channels feature. For multi-scale detection, one single classifier is trained based on the scale-independent classification features computed on word channels, and is applied on all sliding window scales. The authors in [12] take pedestrian detection in different resolutions as different but related problems, and propose a multi-task model to jointly consider their relations and differences. They first map pedestrians in different resolutions to a common space via resolution-aware transformations, and then train a shared detector in that space to perform multi-scale pedestrian detection. Nevertheless, this method relies on the deformable part-based model, and thus has relatively high computational complexity.

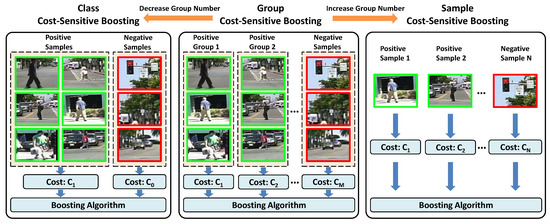

In order to achieve more efficient detection, the boosting-based approaches are popular for training detectors. Several cost-sensitive boosting algorithms have been proposed in the literature to address the problem of sample imbalance, and can be classified into two categories: one is class cost-sensitive boosting (denoted as CCS boosting) such as Asymmetric-AdaBoost [3], AdaCost [13], CSB0-CSB2 [14], AdaC1-AdaC3 [4], and cost-sensitive boosting [5]; and the other is sample cost-sensitive boosting (denoted as SCS boosting) [15]. In CCS boosting, the cost is determined by the type of classification errors, i.e., misclassifying a sample into different classes will lead to different costs. In SCS boosting, the cost is determined by the samples, i.e., different samples will lead to different costs, no matter whether their types of classification errors are the same or not. Nevertheless, these two kinds of methods share the same main idea of putting more costs on the misclassified positive samples by modifying the weight update rules in boosting, so that false negatives are more penalized than false positives. However, although these algorithms distinguish positive samples from negative ones in the boosting process, they still ignore the possible variations inside the positive set. Different from these methods, our proposed approach is based on a new group of cost-sensitive boosting (denoted as GCS boosting) which explores different costs for different resolution groups in the positive set during boosting in order to better handle the detection in multi-resolution situations. Note that the proposed approach is related to both CCS boosting and SCS boosting, as shown in Figure 2, and can be considered as a generalized form of them. In the special case of a decreasing group number where all positive samples are treated as one group, GCS boosting will be simplified to CCS boosting, while in the special case of increasing group numbers to treat each positive sample as an individual group, GCS boosting will scale up to SCS boosting.

Figure 2.

Comparison of different cost-sensitive boosting strategies.

3. Multi-Resolution Detection via Group Cost-Sensitive Boosting with Channel Features

In this section, we present the details of the proposed multi-resolution detection approach with a new group cost-sensitive boosting algorithm, which is derived from the standard AdaBoost algorithm by further exploring different costs for different resolution groups of the samples in the boosting process, so that low-resolution groups can obtain greater importance and more emphasis in order to achieve better detection of multi-resolution targets.

3.1. Baseline Detection Frameworks

We consider in this paper two representative detection frameworks as a baseline: one is Locally Decorrelated Channel Features (LDCF) [6], which is based on the classical hand-crafted HOG and color features, and the other is Convolutional Channel Features (CCF) [7], which is based on the popular deep-learning features.

3.1.1. Locally Decorrelated Channel Features (LDCF)

Given an input image, the LDCF approach calculates several image channels as a feature at first, where each image channel is a per-pixel feature map—in other words, the output pixels are calculated with their corresponding input pixels. Then, by applying a feature transform, the correlations in local image patches are removed. The idea is to replace the expensive oblique splits by the efficient orthogonal splits on locally decorrelated data in decision trees. In total, we calculated 10 feature channels, including one channel of the normalized gradient magnitude, six channels of the histogram of oriented gradients, and three channels of LUV color, and then applied four decorrelating filters for each channel, and finally obtained 40 locally decorrelated channels as features. To train detectors, we adopted the AdaBoost algorithm to train a certain number of decision trees on these channel features and then combined them to construct a strong classifier. More details of the LDCF approach can be referred to [6].

3.1.2. Convolutional Channel Features (CCF)

The CCF approach generally has similar workflow to the traditional channel-feature-based approaches, like the aforementioned LDCF, in that it consists of two components: image feature extraction, and classifier learning via boosting. However, the main difference lies in that CCF takes advantage of the recently developed deep-learning techniques, and replaces the hand-crafted HOG and color features used in the conventional channel-feature-based approaches by the deep-learning-based convolutional features in order to obtain performance improvements by utilizing better image representations. For the feature extraction component, CCF extends multiple channel features to low-level feature maps transferred from the first few layers of a CNN model pre-trained on an ImageNet image dataset. For the classifier learning component, CCF trains an ensemble of decision trees in a boosting manner, with each node in decision trees dependent on one pixel value in the candidate feature maps. To perform the detection, the learned decision tree model is applied on dense image patches and the output of each decision tree is accumulated to get the final result. Specifically, the “conv3-3” layer in the VGG-16 model is adopted as the final feature representation, and a sliding window strategy is applied during detection. More details of the CCF approach is referred to in [7].

3.1.3. Detection via AdaBoost

To facilitate understanding of the following description, a formal definition of the problem of detection via AdaBoost is given as follows: we first list in Table 1 the terms that will appear in the following equations, and describe how they are related to the multi-resolution pedestrian detection problem.

Table 1.

A list of the terms that appear in our approach.

Given a number of samples for detection, where is the feature representation of samples, and is the class label of samples, a detector (or so-called binary classifier) is defined as a function h that can map each feature to its corresponding class label y, and is usually implemented as follows:

where is a predictor, is the sign function which will be 1 if , and will be otherwise. If the detector can minimize the risk , where is a loss function to measure the classification error of the samples, then it will be considered as optimal. Recall that in the baseline LDCF and CCF approaches, the following loss function is adopted in the AdaBoost algorithm:

and a predictor is learned by linearly combining the weak learners as follows:

where is a set of weights for different weak learners and is a set of decision stumps with being a feature response and being a threshold.

Particularly, the predictor can be learned by the gradient descent with respect to the following exponential loss:

and we iteratively select the weak learners so that the classification error is minimized at each iteration:

where

is the total classification error, and is an indicator function, as follows:

We calculated the weight of each weak learner as:

and updated the weight of each sample so that at the next iteration, the importance of the wrongly classified samples was increased, and the importance of the correctly classified samples was decreased:

3.2. Group Cost-Sensitive Boosting Algorithm

Note that the loss function defined in Equation (2) is cost-insensitive because of the same costs of the false positives () and the false negatives () in this function. In order to deal with the multi-resolution detection in a better way, a new group cost-sensitive AdaBoost algorithm is proposed by exploring the different importance of the samples from different resolution groups so that low-resolution samples, which are usually harder to be detected, can have more emphasis in the boosting process.

3.2.1. Group Cost-Sensitive Loss

In order to assign different importance to samples of different resolution, the positive samples were further divided into N groups () according to their different resolutions (here we assume the groups are sorted in a resolution-ascending order, i.e., the samples in had larger resolution than the samples in ). Then, a group cost-sensitive loss function was proposed as follows:

where . In this loss function, different scenarios are respectively considered, including correct detections , false positives (), false negatives (miss detections) of samples in a resolution group (), false negatives of samples in a resolution group (), …, and false negatives of samples in a resolution group (). Note that in the case of , this group cost-sensitive loss will be equivalent to the standard class cost-sensitive loss.

As for the values of the costs and , they are determined based on different specific tasks. For pedestrian detection, our intuition indicates that should be greater than , since miss detections are usually more difficult to be recovered than false positives, and should be greater than , since lower-resolution samples are usually more difficult to be detected than higher-resolution ones, and so on for the case of and . We will choose the optimal values of these costs experimentally via cross-validation. Then, when the values of and are specified, we calculate the group cost-sensitive exponential loss as follows:

where is an indicator function similar to Equation (7) but in an extended form:

3.2.2. Group Cost-Sensitive Adaboost

Given the expected loss in Equation (11), the proposed group cost-sensitive AdaBoost algorithm is then derived by the gradient descent on its empirical estimate. Now we have a set of training samples , the predictor as in Equation (3) and different resolution groups which are defined as follows:

At each iteration m in the boosting process, the selected weak learner consists of an optimal step along the direction of the largest descent of the expected loss in Equation (11), and is expressed as:

The optimal step along the direction g is the solution of the following (Here we apply the gradient descent method to compute it; that is, we consider the output of the classifier for each training sample as a point () in n-dimensional space, where each axis corresponds to a training sample, each weak learner corresponds to a vector of fixed orientation and length, and the goal is to reach the target point () or any region where the value of the loss function is less than the value at that point in the least number of steps. Thus, we can perform the gradient descent optimization method to find with the steepest gradient and choose to minimize test error, and this can be done efficiently with the standard scalar search procedures. See detailed proof in the Appendix A):

with

After calculating the step and the direction g, we can calculate the total loss of the weak learner as follows:

and select the direction of the largest descent so that the minimum loss is obtained:

Finally, we update the weight of each sample at the next iteration according to the following rules:

Briefly speaking, we define the possible descent directions using a set of weak learners , and obtain the optimal step along each direction by solving Equation (15), which can be done efficiently with the standard scalar search procedures. The loss associated with the weak learner is then calculated as in Equation (18) when the step and direction g are given, and the weak learner is selected in Equation (19) as the best one so that the minimum loss is achieved. We present in Algorithm 1 a summary of the proposed group cost-sensitive AdaBoost algorithm.

| Algorithm 1 Group Cost-Sensitive AdaBoost Algorithm |

| Input: Training set where is the feature vector of the sample and is the class label, costs for different groups, the set of weak learners , and the number M of weak learners in the final classifier. |

| Output: Strong classifier for multi-resolution detectors. |

| 1: Initialization: Set of uniformly distributed weights for each group: |

| 2: . |

| 3: for do |

| 4: for do |

| 5: Compute parameter values as in Equations (16), (17) with ; |

| 6: Obtain the value of by solving Equation (15); |

| 7: Calculate the loss of the weak learner as in Equation (18). |

| 8: end for |

| 9: Select the best weak learner with the minimum loss as in Equation (19); |

| 10: Update the weights according to Equation (20). |

| 11: end for |

| 12: return. |

3.3. Multi-Resolution Detectors

By integrating the proposed group cost-sensitive AdaBoost algorithm into the baseline LDCF and CCF frameworks, respectively, i.e., replacing the standard AdaBoost algorithm used in LDCF and CCF by the proposed group cost-sensitive AdaBoost algorithm, a new group cost-sensitive LDCF detector and a new group cost-sensitive CCF detector (denoted as “GCS-LDCF” and “GCS-CCF” in the following experiments, respectively) can be obtained to better handle the detection in multi-resolution conditions. To perform multi-resolution pedestrian detection, we applied the proposed detectors on each test image with a multi-scale sliding window strategy, and adopted non-maximal suppression to merge multiple nearby detections to obtain the final detection results.

4. Experimental Evaluation

To evaluate the proposed approaches, we conducted the experiments on two standard datasets: the Caltech pedestrian detection benchmark [2], and the KAIST multispectral pedestrian detection benchmark [16].

The Caltech benchmark is by far the largest and the most challenging pedestrian dataset, by taking a video around 10 h long (, 30 Hz) from a vehicle driving through regular traffic in an urban environment. This dataset contains a large number of pedestrians, i.e., a total number of 350,000 annotated bounding boxes and 2300 unique pedestrians. However, it is challenging due to realistic occlusion frequency and many low-resolution pedestrians.

The KAIST benchmark is a multispectral pedestrian dataset. Different from the Caltech benchmark that contains only color images, this benchmark captures the additional thermal images and consists of 95 k color-thermal pairs (, 20 Hz) taken from a vehicle. All the pairs are manually annotated (person, people, cyclist) for the total of 103,128 dense annotations and 1182 unique pedestrians. The annotation includes temporal correspondence between bounding boxes which are similar to the Caltech benchmark.

4.1. Experimental Setup

The common experimental setups are followed on each of two benchmarks: For Caltech, its training set (set00–set05) is used to train the detectors, and its test set (set06–set10) is used to obtain the detection results; for KAIST, its training set (set00–set05) is used to train the detectors and the detection results are reported on its test set (set06–set11). For detector training, we chose the image regions labeled as “persons” that were non-occluded with different resolutions as positive samples, and chose the patches of random sizes at random locations in the training images without pedestrians as negative samples.

The important parameters of the proposed approach during training were set as follows: we considered two resolution groups ()—low-resolution samples (30–80 pixels high in Caltech, 30–115 pixels high in KAIST) and high-resolution samples (taller than 80 pixels in Caltech, taller than 115 pixels in KAIST), as defined in each of two benchmarks. As for the optimal value of the costs for different resolution groups, they were selected from , and experimentally by cross-validation. To construct a strong classifier, 4096 weak classifiers were trained and combined via the proposed boosting algorithm, and a pool of random candidate regions from image samples were used to construct the nodes of these decision trees. The multi-scale models were used to increase scale invariance, and three bootstrapping stages were applied with 25,000 additional hard negative samples each time.

To evaluate the results, we used the ground truth annotations and evaluation code available on the website of the Caltech benchmark (www.vision.caltech.edu/Image_Datasets/CaltechPedestrians/) and the KAIST benchmark (https://sites.google.com/site/pedestrianbenchmark/), respectively. For both benchmarks, the same per-image evaluation methodology was adopted—that is, the miss rate vs. FPPI (False-Positive-Per-Image) curves were used to compare the results. In addition, to compare different approaches more conveniently, we also calculated their summarized performances in terms of the log-average miss rate, which is the average of the miss rates at several fixed FPPI points (The mean miss rate at 0.0100, 0.0178, 0.0316, 0.0562, 0.1000, 0.1778, 0.3162, 0.5623 and 1.0000 FPPI). evenly distributed in the log-space from to . Different test subsets are available on two benchmarks to evaluate detectors in different conditions. In order to validate the effectiveness of the proposed approach for multi-resolution detection, we mainly conducted the experiments on several resolution-specific subsets: for Caltech, including the popular “Reasonable” (pedestrians of ≥50 pixels high and less than 35% occluded), “Large-Scale” (pedestrians of ≥100 pixels high and non-occluded), “Near-Scale” (pedestrians of ≥80 pixels high and non-occluded), and “Medium-Scale” (pedestrians of 30–80 pixels high and non-occluded); for KAIST, including the popular “Reasonable All” (pedestrians of ≥55 pixels high and less than 50% occluded), “Near-Scale” (pedestrians of ≥115 pixels high and non-occluded), “Medium-Scale” (pedestrians of 45–115 pixels high and non-occluded), and “Far-Scale” (pedestrians of ≤45 pixels high and non-occluded).

4.2. Comparison with Popular Approaches on the Caltech Benchmark

The proposed approaches are compared on the Caltech benchmark with many popular pedestrian detection approaches in the literature, including (the detailed definitions of the following short forms can be found in www.vision.caltech.edu/Image_Datasets/CaltechPedestrians/) VJ [17], HOG [18], ChnFtrs [19], ConvNet [20], FPDW [21], LatSVM [22], pAUCBoost [23], RandForest [24], SDN [25], DBN-Mut [26], Franken [27], JointDeep [28], InformedHaar [29], LDCF [6], ACF-Caltech+ [6], SpatialPooling [30], SpatialPooling+ [31], Katamari [32], LFOV [33], NAMC [34], DeepCascade [35], SCCPriors [36], Checkerboards [37], DeepParts [38], and CompACT-Deep [39]. The results of these approaches were obtained directly from the same website as the evaluation code. Note that some recent methods, such as AdaptFasterRCNN [40], SA-FastRCNN [41], F-DNN2 [42], TLL-TFA [43], and SDS-RCNN [44] were not considered in comparisons since they require additional external data (e.g., ImageNet, CityPersons, Cityscapes, TudBrussels, ETH) to train their deep models.

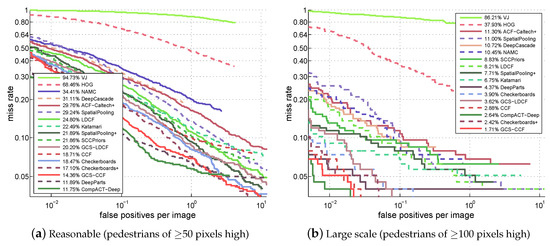

For the results, the miss rate vs. FPPI curves and their corresponding log-average miss rates (reported in the figure legend) of different approaches on four test sets of the Caltech benchmark are shown in Figure 3. Due to the space limitation, only the results of top 15 approaches plus the classic VJ and HOG are presented in the figure. It can be clearly seen that: (1) The proposed GCS-LDCF obviously performs better than its baseline LDCF on four test sets, i.e., 4.60 percentage points better on the “Reasonable” set, 4.59 percentage points better on the “Large-Scale” set, 4.62 percentage points better on the “Near-Scale” set, and 2.29 percentage points better on the “Medium-Scale” set, respectively. (2) The proposed GCS-CCF also clearly outperforms its baseline CCF on four test sets, i.e., 4.35 percentage points better on the “Reasonable” set, 1.17 percentage points better on the “Large-Scale” set, 1.52 percentage points better on the “Near-Scale” set, and 2.60 percentage points better on the “Medium-Scale” set, respectively. (3) These are positive demonstrations that the proposed approaches truly benefit from exploring different costs for the sample groups with different resolutions by the group cost-sensitive AdaBoost algorithm in the training process; (4) according to the miss rate vs. FPPI curves and the log-average miss rates on four test sets, the proposed GCS-CCF approach outperforms most other popular approaches, validating that it is an effective method for pedestrian detection, especially in multi-resolution occasions; (5) note that some well-performing approaches utilize additional motion or context information or multiple-feature combinations to aid detection (e.g., the CompACT-Deep approach [39] combines the ACF, SS, CB, LDA, and CNN features to learn cascades; the Checkerboards+ approach [37] uses the flow-based motion features from [45]), while the proposed approach in this paper focuses on pedestrian detection in static images and does not take such kinds of information into consideration. Nevertheless, utilizing motion and context information or additional features in the proposed approach for further improvement is a potential area for future research.

Figure 3.

Comparison with popular approaches on the Caltech benchmark.

4.3. Comparison with Popular Approaches on the KAIST Benchmark

The proposed approaches were also compared on the KAIST benchmark with some popular pedestrian detection approaches. Since the KAIST benchmark is a recently released pedestrian dataset, the results in the literature are not as many as the Caltech benchmark. Thus, we mainly made comparisons with several baseline approaches proposed in [16]. Also note that, different from the Caltech benchmark, the KAIST benchmark is a multispectral pedestrian dataset (color channels + thermal channel); thus, the baseline approaches extend the popular ACF framework [46] to handle both color and additional thermal channels. To make fair comparisons, we therefore also extend the proposed GCS-LDCF and GCS-CCF approaches to “GCS-LDCF+T+THOG” and “GCS-CCF+T+THOG”, respectively, with additional thermal channels by following the same method as explained in Section 3.2 in [16].

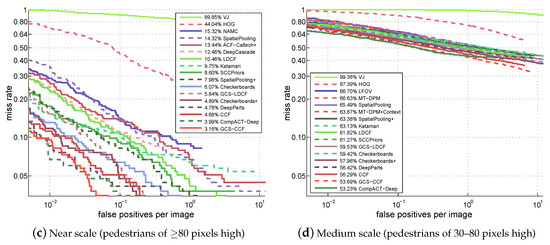

Figure 4 presents the miss rate vs. FPPI curves and their corresponding log-average miss rates (reported in the figure legend) of different approaches on four test sets of the KAIST benchmark. We can observe that: (1) The best-performing approach in [16] is ACF+T+THOG. By replacing the ACF detector with the LDCF detector and the CCF detector, our baseline LDCF+T+THOG and CCF+T+THOG already outperforms the ACF+T+THOG approach on four test sets. (2) The proposed GCS-LDCF+T+THOG approach also performs better than the baseline LDCF+T+THOG on four test sets (4.47 percentage points better on “Reasonable All”, 2.81 percentage points better on the “Near Scale”, 5.49 percentage points better on the “Medium-Scale”, and 2.93 percentage points better on the “Far Scale”, respectively). (3) The proposed GCS-CCF+T+THOG approach further outperforms the baseline CCF+T+THOG on four test sets (3.05 percentage points better on “Reasonable All”, 1.29 percentage points better on the “Near Scale”, 2.76 percentage points better on the “Medium Scale”, and 0.74 percentage points better on the “Far Scale”, respectively). (4) These results validate the effectiveness of the proposed group cost-sensitive boosting algorithm, and show that it also provides an effective way for multi-resolution pedestrian detection in multispectral conditions.

Figure 4.

Comparison with popular approaches on the KAIST benchmark.

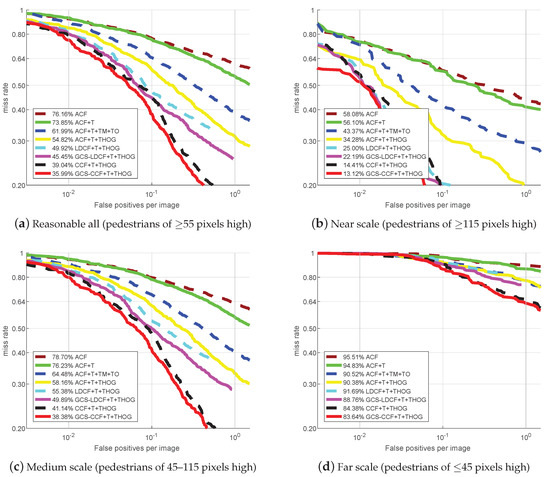

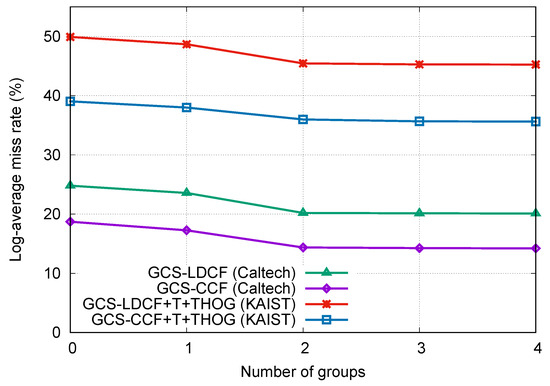

4.4. Discussion: Influence of Group Number

The number of resolution groups is an important factor in the proposed approaches, and there is no prior knowledge about the optimal number of groups. Therefore, we empirically selected the best number of groups on the Caltech and KAIST benchmarks, respectively, by changing the group number (N) from 0 to 4 in the proposed GCS-LDCF and GCS-CCF approaches, and comparing their performances. means that we do not distinguish false negatives from false positives in wrong detections, which equals to the original LDCF and CCF approaches. means that we consider all positive samples as a group and assign different costs for false negatives and false positives, respectively, which equals to the standard cost-sensitive setting. means that we further divide positive samples into different resolution groups and assign different costs for them. Specifically, when , the positive samples are divided into group 1 (30–80 pixels high in Caltech, 30–115 pixels high in KAIST) and group 2 (taller than 80 pixels in Caltech, taller than 115 pixels in KAIST); when , the positive samples are divided into group 1 (30–50 pixels high in Caltech, 30–55 pixels high in KAIST), group 2 (50–80 pixels high in Caltech, 55–115 pixels high in KAIST), and group 3 (taller than 80 pixels in Caltech, taller than 115 pixels in KAIST); and when , the positive samples are divided into group 1 (less than 30 pixels high in Caltech, less than 30 pixels high in KAIST), group 2 (30–50 pixels high in Caltech, 30–55 pixels high in KAIST), group 3 (50–80 pixels high in Caltech, 55–115 pixels high in KAIST), and group 4 (taller than 80 pixels in Caltech, taller than 115 pixels in KAIST).

The results are shown in Figure 5. We can observe that: (1) The performances with one group are better than the performances with no group, indicating the positive effect of distinguishing false negatives from false positives in wrong detections when training. (2) When the group number is increased from 1 to 2, the performances are also clearly improved, validating the effectiveness of the proposed group cost-sensitive boosting algorithm. (3) The performance gains become slight when the group number continues to increase from 2 to 3, and shows no improvement when the group number changes from 3 to 4. We think the main reasons for this may lie in that when we increase the group number, more positive samples with low resolution are divided and considered; however, according to [2], pedestrians less than 50 pixels high are very difficult to recognize, and for pedestrians below 30 pixels, even human annotators have difficulty in identifying them reliably. Moreover, the number of pedestrian samples below 30 pixels in both datasets is small. Therefore, very low-resolution samples (less than 50 pixels high) in Caltech and KAIST are in the minority and naturally difficult to detect, and thus can hardly provide help in the proposed approaches, which is why we chose group number in previous experiments. Overall, we can learn that the optimal number of resolution groups could depend on the specific detection tasks, as well as the data distribution of the specific datasets.

Figure 5.

Influence of group number in GCS-LDCF and GCS-CCF on the Caltech and KAIST benchmarks, respectively.

4.5. Discussion: Performance on Very Low-Resolution Samples

There are reasons why not only the proposed approaches, but also all the other ones perform poorly on very low-resolution pedestrian samples, as shown in Figure 3d and Figure 4d. According to the authors’ claim in [2], pedestrians less than 50 pixels tall in the Caltech benchmark are very difficult to recognize due to the missing appearance details, and for the pedestrians around 30 pixels, even human annotators have difficulty in identifying them reliably. This is also the case in the KAIST benchmark, since it is constructed in a similar way to Caltech. That is why there are “Reasonable” settings in both benchmarks (pedestrians taller than 50 pixels in Caltech and taller than 55 pixels in KAIST), because the pedestrians less than 50 pixels tall are naturally very difficult to detect. Therefore, the detection performances of all the approaches for these samples are far from satisfactory. This also can explain why the performances in Figure 4d are even poorer (>80% mean miss rate) than those in Figure 3d (>50% mean miss rate), since the samples in Figure 4d contain only the pedestrians less than 45 pixels tall, which are naturally very difficult to detect, but the samples in Figure 3d contain the pedestrians between 30 and 80 pixels tall, where the parts which are 30–50 tall are difficult to detect, while the parts which are 50–80 tall are relatively easier to detect.

As for our proposed approaches, according to the results in Figure 3d, GCS-LDCF and GCS-CCF still outperform the baseline LDCF and CCF (2.29 and 2.60 percentage points, respectively, which are relatively clear improvements considering the small performance gap between different approaches), and we believe the benefits come from the pedestrian samples of 50–80 pixels tall which provide actual help in the proposed group cost-sensitive boosting algorithm. However, due to other pedestrian samples that are 30–50 pixels tall which are difficult to detect and thus can hardly provide help in the proposed algorithm, the overall performances of GCS-LDCF and GCS-CCF are still not good enough in this case.

Overall, based on the experimental results, we can say that the proposed approaches could truly provide performance gain on low-resolution pedestrian samples (50–80 pixels tall). But for very low-resolution pedestrian samples (less than 50 pixels tall), since their detection is naturally a hard problem, there are still no good solutions for solving it, and the proposed approaches are clearly not the best solutions, but at least provide an effort to address this problem.

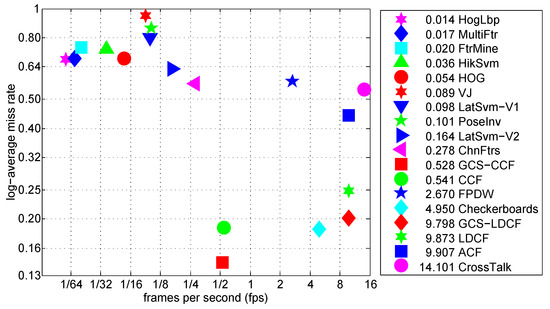

4.6. Runtime Analysis

In this section, we compare the runtime of the proposed approaches with other methods in the literature using video frames from the Caltech benchmark. The frames had a resolution of pixels, and the runtime was measured by averaging the runtime over multiple frames with the “Reasonable” settings. The runtimes of other approaches were obtained from [2], where runtimes of all detectors were normalized to the rate of a single modern machine, so that all times were directly comparable. In Figure 6, we plot log-average miss rate versus runtime for each approach. Note that symbols closer to the bottom-right corner indicate that the corresponding approaches possess both better accuracy and faster runtime speed. We can see that the proposed GCS-LDCF approach runs faster than most other detectors, and runs as fast as the original LDCF approach but improves its accuracy by almost 5 percentage points. As for the proposed GCS-CCF approach, its runtime speed is almost the same as the original CCF approach. Due to the sliding-window mechanism and deep-learning-based feature computation in a huge number of windows, their runtime speed is now around 0.5 fps. However, considering their good detection accuracy compared to other approaches, and the fact that acceleration techniques used in Fast R-CNN [47] are also applicable to CCF and GCS-CCF, it is very valuable and has the possibility of further improving their runtime speed. This will be done in our work in the future.

Figure 6.

Log-average miss rate vs. runtime of different approaches on Caltech “Reasonable” setting (symbols closer to the bottom-right corner indicating that the corresponding approaches possess both better accuracy and faster runtime speed).

5. Conclusions

In this paper, we proposed a new group cost-sensitive boosting algorithm for handling multi-resolution pedestrian detection. Different from the traditional boosting-based approaches where low-resolution samples are treated with equal importance as high-resolution ones, thus resulting in false-negatives since they are more easily rejected in the early stages during boosting, the proposed approach extends the standard AdaBoost algorithm by further exploring different costs for different resolution groups of the samples in the boosting process, and placing greater emphasis on low-resolution samples, which are usually more difficult to be detected, in order to better handle the detection in multi-resolution conditions. The effectiveness of the proposed approach has been validated by its promising performance compared to other popular methods on different resolution-specific test sets of the Caltech pedestrian benchmark and the KAIST multispectral pedestrian benchmark.

Future work includes the extension of the proposed group cost-sensitive boosting algorithm to the application of general object detection, and the utilization of additional motion and context information or other powerful features in the proposed approach for further performance improvement, as well as acceleration of the GCS-CCF approach while keeping its high detection accuracy.

Author Contributions

Conceptualization, C.Z.; Investigation, C.Z.; Methodology, C.Z. and X.-C.Y.; Supervision, X.-C.Y.; Writing—original draft, C.Z.; Writing—review and editing, C.Z. and X.-C.Y.

Funding

This research was funded by Beijing Natural Science Foundation under Grant 4174095, National Natural Science Foundation of China under Grant 61703039, and Fundamental Research Funds for the Central Universities under Grant FRF-TP-16-047A1.

Acknowledgments

The authors thank the editors and the anonymous reviewers for their insightful comments and helpful suggestions, which highly improved the quality of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of the Conclusion in Equation (15)

Given the expected loss as in Equation (11), the indicator function as in Equation (7) and the extended indicator function as in Equation (12), the group-sensitive cost function can be expressed as:

and by adding the weak learner to the predictor , we have:

Since is minimized if and only if the argument of the expectation is minimized for all , the direction of the largest descent and optimal step size can be obtained by:

The expectation can be expressed as follows:

thus the direction of the largest descent and optimal step size can be obtained by:

where

with

are the posterior estimates associated with each sample. Hence, the weak learner with the minimum cost can be obtained by:

Given the definitions in Equations (16) and (17), and by replacing the expectations with the sample averages, we have:

Given the direction of the largest descent , and by setting the derivative with respect to to zero:

thus the optimal step size is the solution of:

References

- Zhang, X.; Yang, Y.; Han, Z.; Wang, H.; Gao, C. Object class detection: A survey. ACM Comput. Surv. 2013, 46, 28–36. [Google Scholar] [CrossRef]

- Dollár, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian Detection: An Evaluation of the State of the Art. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 743–761. [Google Scholar] [CrossRef] [PubMed]

- Viola, P.A.; Jones, M.J. Fast and Robust Classification using Asymmetric AdaBoost and a Detector Cascade. In Proceedings of the 14th International Conference on Neural Information Processing Systems: Natural and Synthetic (NIPS), Vancouver, BC, Canada, 3–8 December 2001; pp. 1311–1318. [Google Scholar]

- Sun, Y.; Kamel, M.S.; Wong, A.K.C.; Wang, Y. Cost-sensitive boosting for classification of imbalanced data. Pattern Recognit. 2007, 40, 3358–3378. [Google Scholar] [CrossRef]

- Masnadi-Shirazi, H.; Vasconcelos, N. Cost-Sensitive Boosting. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 294–309. [Google Scholar] [CrossRef] [PubMed]

- Nam, W.; Dollár, P.; Han, J.H. Local Decorrelation For Improved Pedestrian Detection. In Proceedings of the 27th International Conference on Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 8–13 December 2014; pp. 424–432. [Google Scholar]

- Yang, B.; Yan, J.; Lei, Z.; Li, S.Z. Convolutional Channel Features. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 82–90. [Google Scholar]

- Zhu, C.; Peng, Y. Group Cost-Sensitive Boosting for Multi-Resolution Pedestrian Detection. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 3676–3682. [Google Scholar]

- Park, D.; Ramanan, D.; Fowlkes, C. Multiresolution Models for Object Detection. In Proceedings of the 11th European Conference on Computer Vision: Part IV (ECCV), Heraklion, Greece, 5–11 September 2010; pp. 241–254. [Google Scholar]

- Benenson, R.; Mathias, M.; Timofte, R.; Gool, L.J.V. Pedestrian detection at 100 frames per second. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 2903–2910. [Google Scholar]

- Costea, A.D.; Nedevschi, S. Word Channel Based Multiscale Pedestrian Detection without Image Resizing and Using Only One Classifier. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 2393–2400. [Google Scholar]

- Yan, J.; Zhang, X.; Lei, Z.; Liao, S.; Li, S.Z. Robust Multi-resolution Pedestrian Detection in Traffic Scenes. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013; pp. 3033–3040. [Google Scholar]

- Fan, W.; Stolfo, S.J.; Zhang, J.; Chan, P.K. AdaCost: Misclassification Cost-Sensitive Boosting. In Proceedings of the Seventeenth International Conference on Machine Learning (ICML); Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1999; pp. 97–105. [Google Scholar]

- Ting, K.M. A Comparative Study of Cost-Sensitive Boosting Algorithms. In Proceedings of the Seventeenth International Conference on Machine Learning (ICML); Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2000; pp. 983–990. [Google Scholar]

- Abe, N.; Zadrozny, B.; Langford, J. An iterative method for multi-class cost-sensitive learning. In Proceedings of the Tenth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Seattle, WA, USA, 22–25 August 2004; pp. 3–11. [Google Scholar]

- Hwang, S.; Park, J.; Kim, N.; Choi, Y.; Kweon, I.S. Multispectral pedestrian detection: Benchmark dataset and baseline. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1037–1045. [Google Scholar]

- Viola, P.A.; Jones, M.J.; Snow, D. Detecting Pedestrians Using Patterns of Motion and Appearance. Int. J. Comput. Vis. 2005, 63, 153–161. [Google Scholar] [CrossRef]

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–25 June 2005; pp. 886–893. [Google Scholar]

- Dollár, P.; Tu, Z.; Perona, P.; Belongie, S. Integral Channel Features. In Proceedings of the British Machine Vision Conference; BMVC Press: London, UK, 2009; pp. 1–11. [Google Scholar]

- Sermanet, P.; Kavukcuoglu, K.; Chintala, S.; LeCun, Y. Pedestrian Detection with Unsupervised Multi-stage Feature Learning. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013; pp. 3626–3633. [Google Scholar]

- Dollár, P.; Belongie, S.; Perona, P. The Fastest Pedestrian Detector in the West; BMVC Press: London, UK, 2010; pp. 1–11. [Google Scholar]

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.A.; Ramanan, D. Object Detection with Discriminatively Trained Part-Based Models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645. [Google Scholar] [CrossRef] [PubMed]

- Paisitkriangkrai, S.; Shen, C.; van den Hengel, A. Efficient Pedestrian Detection by Directly Optimizing the Partial Area under the ROC Curve. In Proceedings of the 2013 IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013; pp. 1057–1064. [Google Scholar]

- Marín, J.; Vázquez, D.; López, A.M.; Amores, J.; Leibe, B. Random Forests of Local Experts for Pedestrian Detection. In Proceedings of the 2013 IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013; pp. 2592–2599. [Google Scholar]

- Luo, P.; Tian, Y.; Wang, X.; Tang, X. Switchable Deep Network for Pedestrian Detection. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 899–906. [Google Scholar]

- Ouyang, W.; Zeng, X.; Wang, X. Modeling Mutual Visibility Relationship in Pedestrian Detection. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013; pp. 3222–3229. [Google Scholar]

- Mathias, M.; Benenson, R.; Timofte, R.; Gool, L.J.V. Handling Occlusions with Franken-Classifiers. In Proceedings of the 2013 IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013; pp. 1505–1512. [Google Scholar]

- Ouyang, W.; Wang, X. Joint Deep Learning for Pedestrian Detection. In Proceedings of the 2013 IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013; pp. 2056–2063. [Google Scholar]

- Zhang, S.; Bauckhage, C.; Cremers, A.B. Informed Haar-Like Features Improve Pedestrian Detection. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 947–954. [Google Scholar]

- Paisitkriangkrai, S.; Shen, C.; van den Hengel, A. Strengthening the Effectiveness of Pedestrian Detection with Spatially Pooled Features. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 546–561. [Google Scholar]

- Paisitkriangkrai, S.; Shen, C.; van den Hengel, A. Pedestrian Detection with Spatially Pooled Features and Structured Ensemble Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 1243–1257. [Google Scholar] [CrossRef] [PubMed]

- Benenson, R.; Omran, M.; Hosang, J.H.; Schiele, B. Ten Years of Pedestrian Detection, What Have We Learned? In Computer Vision—ECCV 2014 Workshops; Springer: Cham, Switzerland, 2014; pp. 613–627. [Google Scholar]

- Angelova, A.; Krizhevsky, A.; Vanhoucke, V. Pedestrian detection with a Large-Field-Of-View deep network. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 704–711. [Google Scholar]

- Toca, C.; Ciuc, M.; Patrascu, C. Normalized Autobinomial Markov Channels For Pedestrian Detection; BMVC Press: London, UK, 2015; pp. 1–11. [Google Scholar]

- Angelova, A.; Krizhevsky, A.; Vanhoucke, V.; Ogale, A.; Ferguson, D. Real-Time Pedestrian Detection with Deep Network Cascades; BMVC Press: London, UK, 2015; pp. 1–11. [Google Scholar]

- Yang, Y.; Wang, Z.; Wu, F. Exploring Prior Knowledge for Pedestrian Detection; BMVC Press: London, UK, 2015; pp. 1–11. [Google Scholar]

- Zhang, S.; Benenson, R.; Schiele, B. Filtered channel features for pedestrian detection. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1751–1760. [Google Scholar]

- Tian, Y.; Luo, P.; Wang, X.; Tang, X. Deep Learning Strong Parts for Pedestrian Detection. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1904–1912. [Google Scholar]

- Cai, Z.; Saberian, M.J.; Vasconcelos, N. Learning Complexity-Aware Cascades for Deep Pedestrian Detection. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3361–3369. [Google Scholar]

- Zhang, S.; Benenson, R.; Schiele, B. CityPersons: A Diverse Dataset for Pedestrian Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4457–4465. [Google Scholar]

- Li, J.; Liang, X.; Shen, S.; Xu, T.; Yan, S. Scale-aware Fast R-CNN for Pedestrian Detection. IEEE Trans. Multimed. 2018, 20, 985–996. [Google Scholar] [CrossRef]

- Du, X.; El-Khamy, M.; Morariu, V.I.; Lee, J.; Davis, L.S. Fused Deep Neural Networks for Efficient Pedestrian Detection. arXiv, 2018; arXiv:1805.08688. [Google Scholar]

- Song, T.; Sun, L.; Xie, D.; Sun, H.; Pu, S. Small-scale Pedestrian Detection Based on Somatic Topology Localization and Temporal Feature Aggregation. arXiv, 2018; arXiv:1807.01438. [Google Scholar]

- Brazil, G.; Yin, X.; Liu, X. Illuminating Pedestrians via Simultaneous Detection and Segmentation. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4960–4969. [Google Scholar]

- Park, D.; Zitnick, C.L.; Ramanan, D.; Dollár, P. Exploring Weak Stabilization for Motion Feature Extraction. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013; pp. 2882–2889. [Google Scholar]

- Dollár, P.; Appel, R.; Belongie, S.; Perona, P. Fast Feature Pyramids for Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1532–1545. [Google Scholar] [CrossRef] [PubMed]

- Girshick, R.B. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).