Accurate and Cost-Effective Micro Sun Sensor based on CMOS Black Sun Effect

Abstract

1. Introduction

2. Sun Vector Extraction

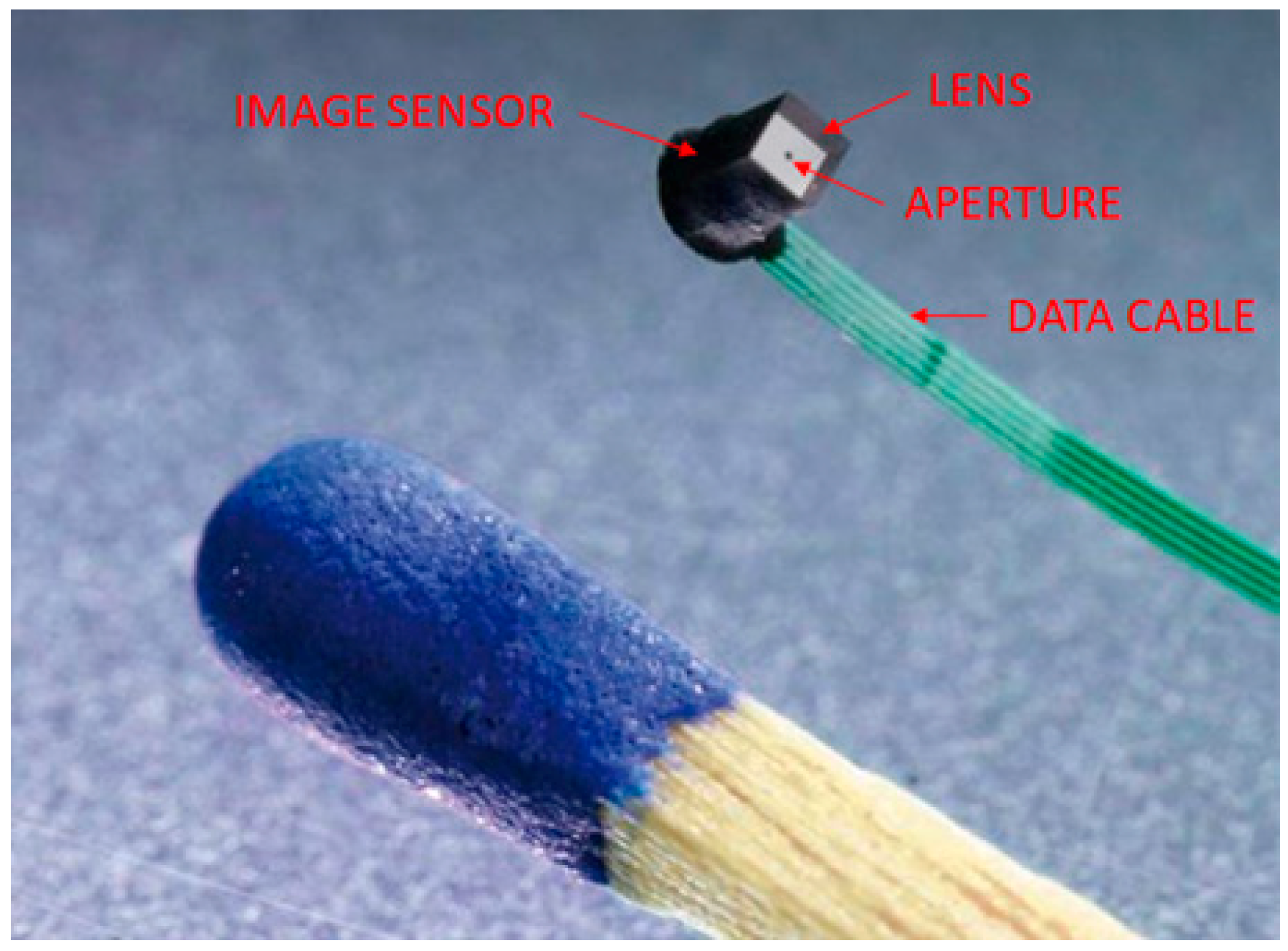

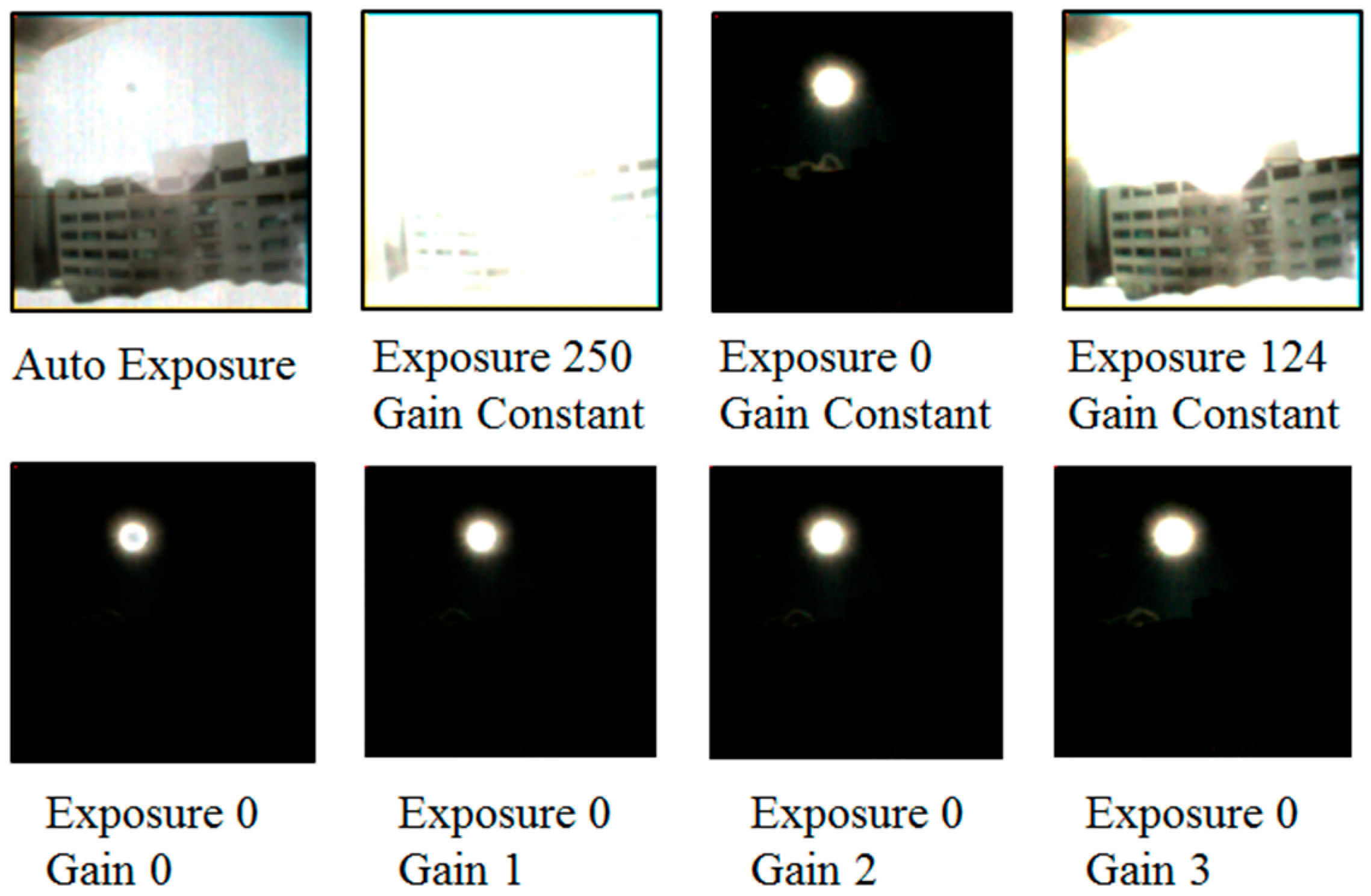

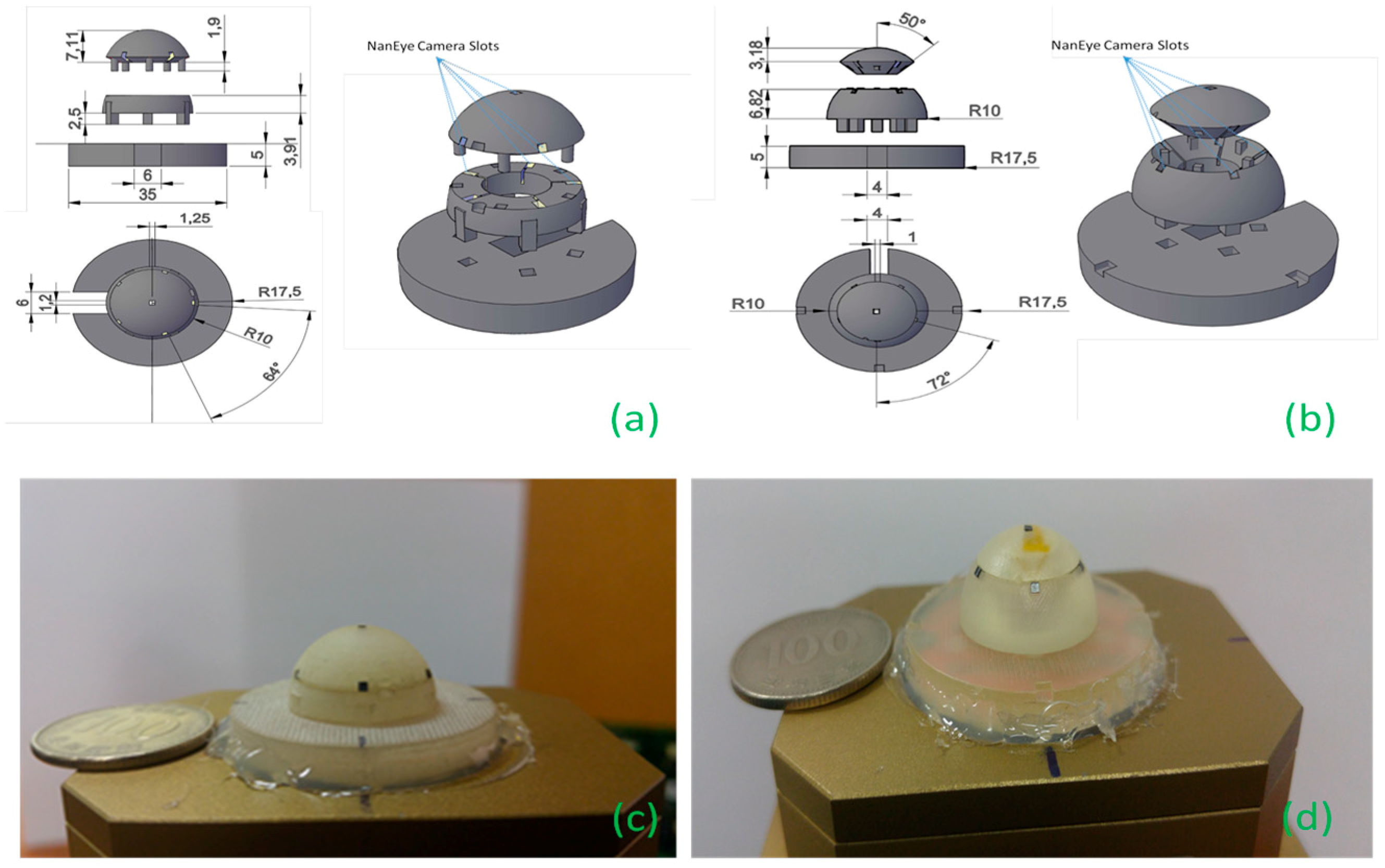

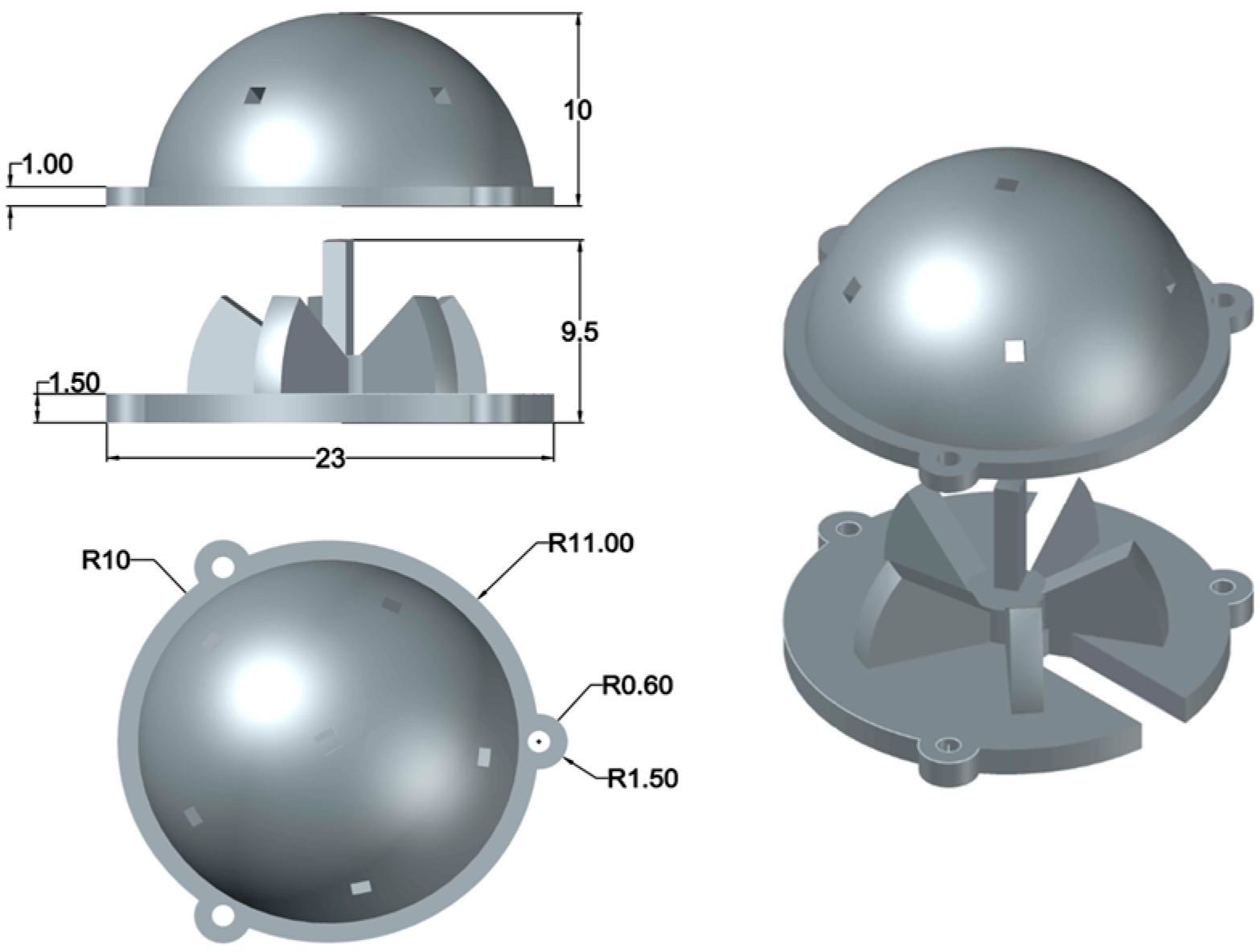

2.1. Camera Selection

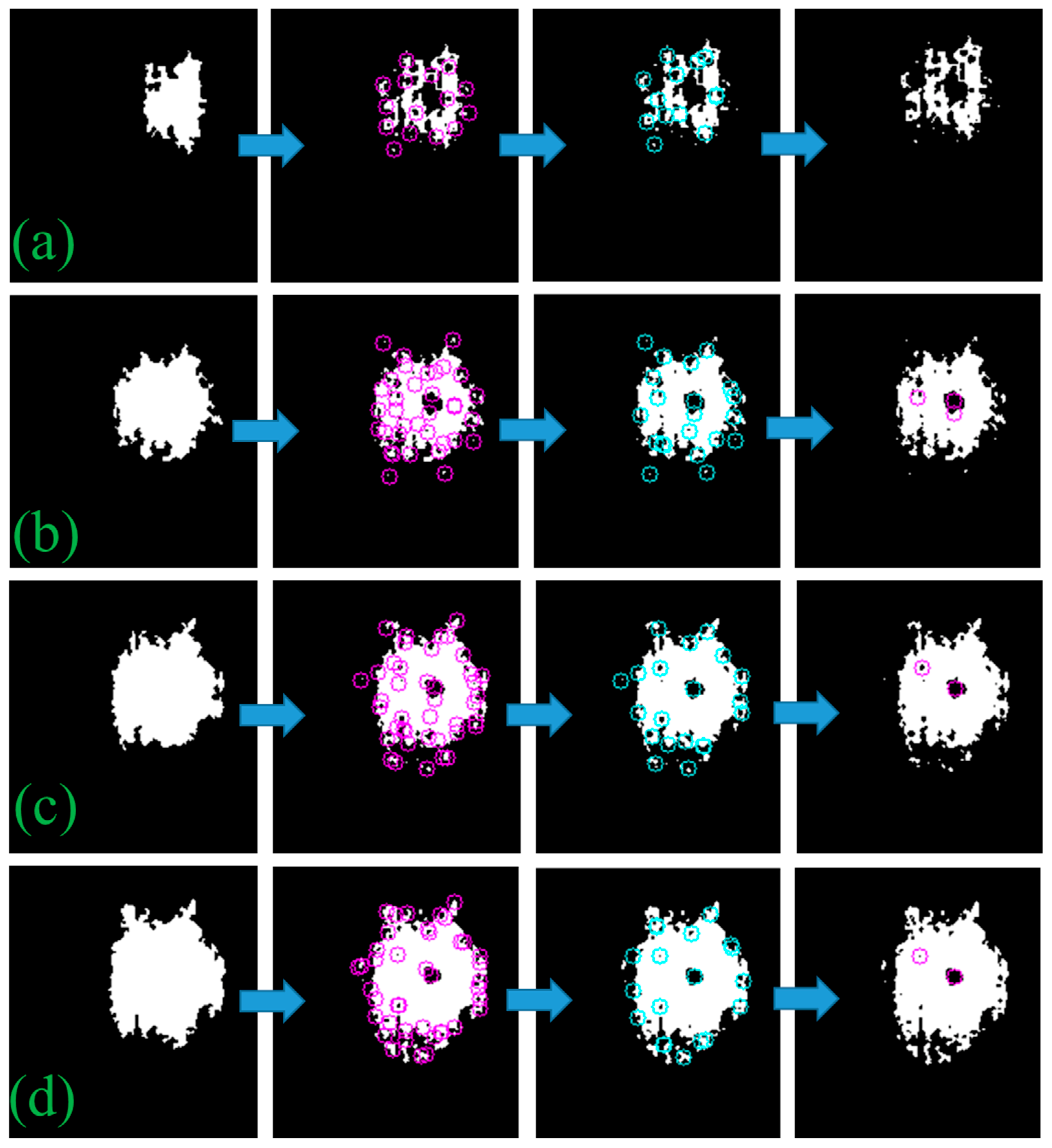

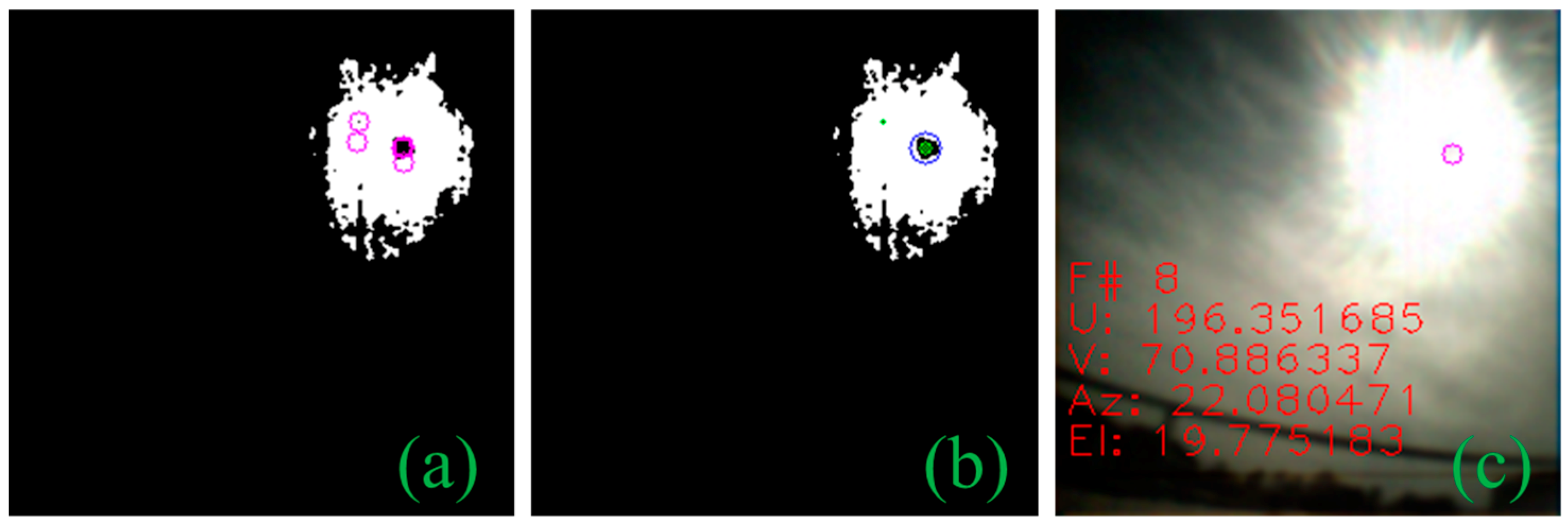

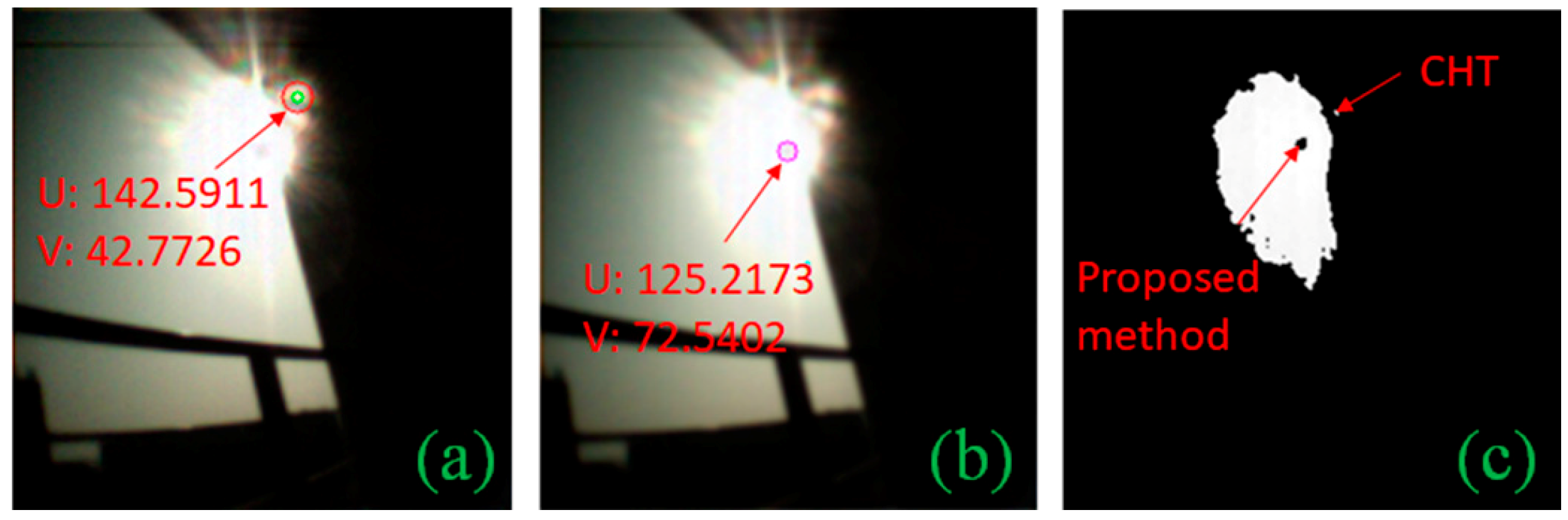

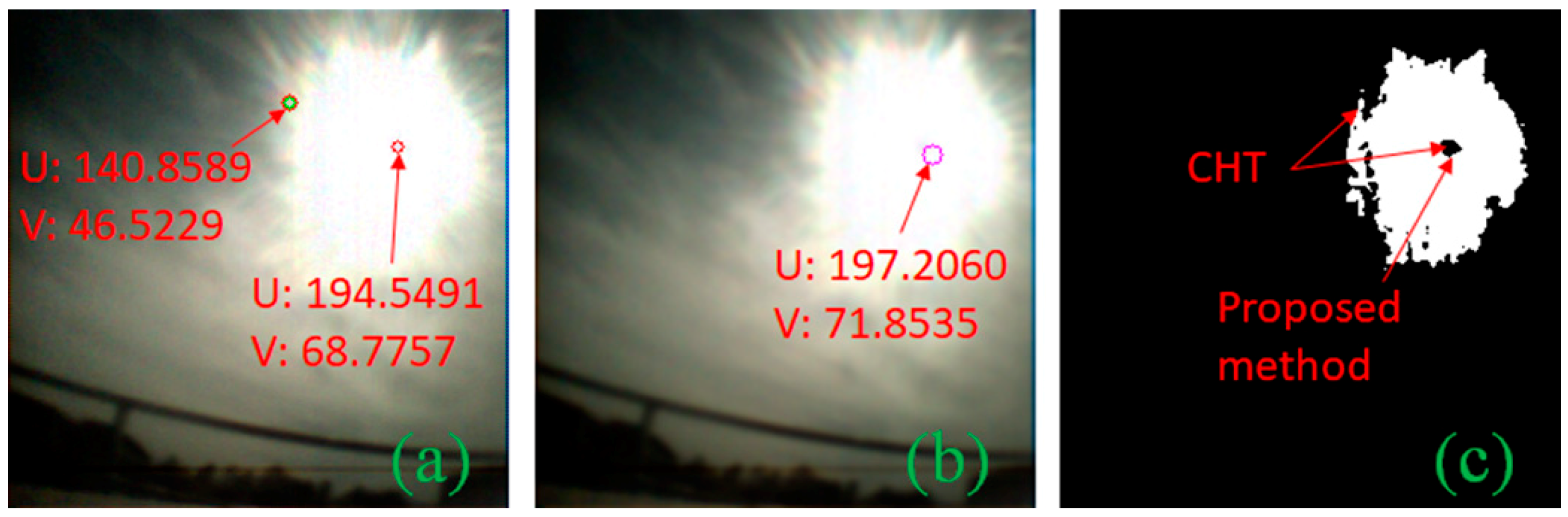

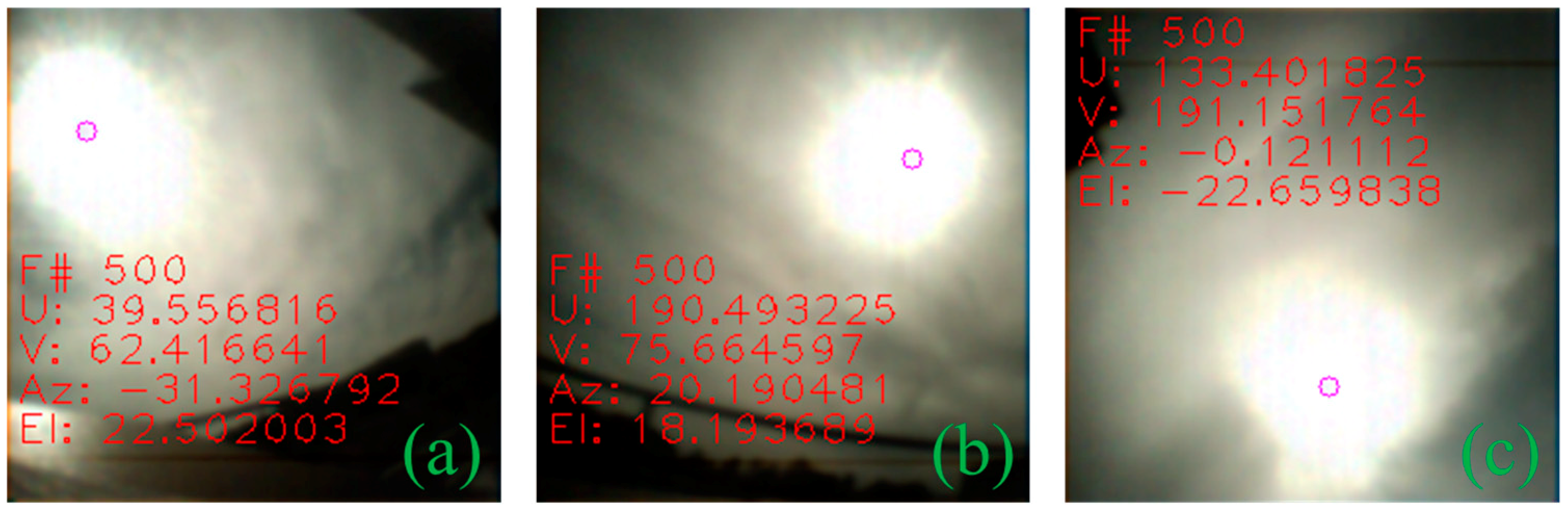

2.2. Centroid Detection

Algorithm 1. Sun centroid detection using black sun detection

Input: Captured image

1. Apply Gaussian blur and convert to grayscale.
2. Set the number of loops f and threshold = maximum pixel intensity - (a user-defined unsigned integer based on empirical sensor performance).
3. While f >= 1:
   - 3-1: Generate a binary mask for pixels with intensity > threshold + f.
   - 3-2: Find contours in the binary mask.
   - 3-3: Find the index of the largest contour.
   - 3-4: Get strong corner points.
   - 3-5: Refine the corner points to subpixel accuracy.
   - 3-6: Save corner points inside the largest contour (with eccentricity < 0.9) and away from the edges.
   - 3-7: Accumulate surviving points between iterations.
   - 3-8: Decrement f.
4. Accumulate corner points.
5. If there are no surviving points:
   - Get the accumulated corner points.
   - Check for the point with the largest radius > minimum radius.

   Otherwise:
   - Get the surviving points.
   - Check for the point with the largest radius > minimum radius.

Output: Black sun centroid coordinates
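The core idea of Algorithm 1 (threshold near the maximum intensity, isolate the largest saturated region, then locate the dark core inside it) can be illustrated with a simplified sketch. This is not the authors' implementation: it uses a single threshold pass and replaces the contour, corner, and subpixel steps with a plain hole search by flood fill, and it skips the Gaussian blur. The names `flood`, `black_sun_centroid`, `synthetic_sun`, and the parameter `delta` are hypothetical and introduced only for illustration.

```python
from collections import deque


def flood(passable, seeds, h, w):
    """Collect 4-connected pixels reachable from seeds where passable(r, c) holds."""
    seen = {s for s in seeds if passable(*s)}
    queue = deque(seen)
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < h and 0 <= nc < w and (nr, nc) not in seen and passable(nr, nc):
                seen.add((nr, nc))
                queue.append((nr, nc))
    return seen


def black_sun_centroid(gray, delta=10):
    """Return (x, y) of the dark core enclosed by the largest bright blob, or None."""
    h, w = len(gray), len(gray[0])
    # Assumed rule: threshold = maximum intensity minus a user-chosen offset.
    threshold = max(max(row) for row in gray) - delta

    def bright(r, c):
        return gray[r][c] > threshold

    # Largest connected bright region (a stand-in for the "largest contour").
    remaining = {(r, c) for r in range(h) for c in range(w) if bright(r, c)}
    largest = set()
    while remaining:
        blob = flood(bright, [next(iter(remaining))], h, w)
        remaining -= blob
        if len(blob) > len(largest):
            largest = blob

    # Non-blob pixels reachable from the image border lie outside the sun.
    def not_blob(r, c):
        return (r, c) not in largest

    border = [(r, c) for r in range(h) for c in (0, w - 1)]
    border += [(r, c) for c in range(w) for r in (0, h - 1)]
    outside = flood(not_blob, border, h, w)

    # Pixels that are neither blob nor outside are enclosed: the black sun.
    hole = [(r, c) for r in range(h) for c in range(w)
            if (r, c) not in largest and (r, c) not in outside]
    if not hole:
        return None
    return (sum(c for _, c in hole) / len(hole),
            sum(r for r, _ in hole) / len(hole))


def synthetic_sun(h=15, w=20, row=7, col=11, r_sun=5, r_core=2):
    """Hypothetical test image: dim sky, saturated disk, black core."""
    img = [[20] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            d2 = (r - row) ** 2 + (c - col) ** 2
            if d2 <= r_core ** 2:
                img[r][c] = 0
            elif d2 <= r_sun ** 2:
                img[r][c] = 255
    return img


print(black_sun_centroid(synthetic_sun()))  # (11.0, 7.0)
```

On the synthetic image the dark core is symmetric about column 11, row 7, so the centroid is recovered exactly. On real images the blur, the repeated thresholds, and the eccentricity and radius checks of Algorithm 1 would be needed to reject noise and partial detections.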

2.3. Performance Comparison

2.4. Sun Vector from Camera Pixels
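A minimal sketch of how a detected centroid pixel could be mapped to a unit sun vector and to azimuth and elevation angles, assuming an ideal pinhole camera without lens distortion. The intrinsics `fx`, `fy`, `cx`, `cy`, the function names, and the angle convention (camera z axis along the optical axis, image y axis pointing down) are assumptions made for illustration; the calibration model used in the paper may differ.

```python
import math


def pixel_to_sun_vector(u, v, fx, fy, cx, cy):
    """Back-project pixel (u, v) to a unit vector in the camera frame (pinhole model)."""
    x = (u - cx) / fx
    y = (v - cy) / fy
    norm = math.sqrt(x * x + y * y + 1.0)
    return (x / norm, y / norm, 1.0 / norm)


def vector_to_az_el(vec):
    """Convert a unit vector to (azimuth, elevation) in degrees.

    Assumed convention: azimuth is measured about the camera y axis from the
    optical axis, and elevation is positive upward (image y points down).
    """
    x, y, z = vec
    azimuth = math.degrees(math.atan2(x, z))
    elevation = math.degrees(math.asin(-y))
    return azimuth, elevation


# Hypothetical intrinsics for a small sensor, in pixels.
fx = fy = 250.0
cx = cy = 125.0

sun = pixel_to_sun_vector(cx + fx, cy, fx, fy, cx, cy)
print(vector_to_az_el(sun))
```

A centroid at the principal point maps to the optical axis, and a centroid offset horizontally by one focal length maps to an azimuth of about 45° in this convention.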

3. Stationary Application

3.1. Approach

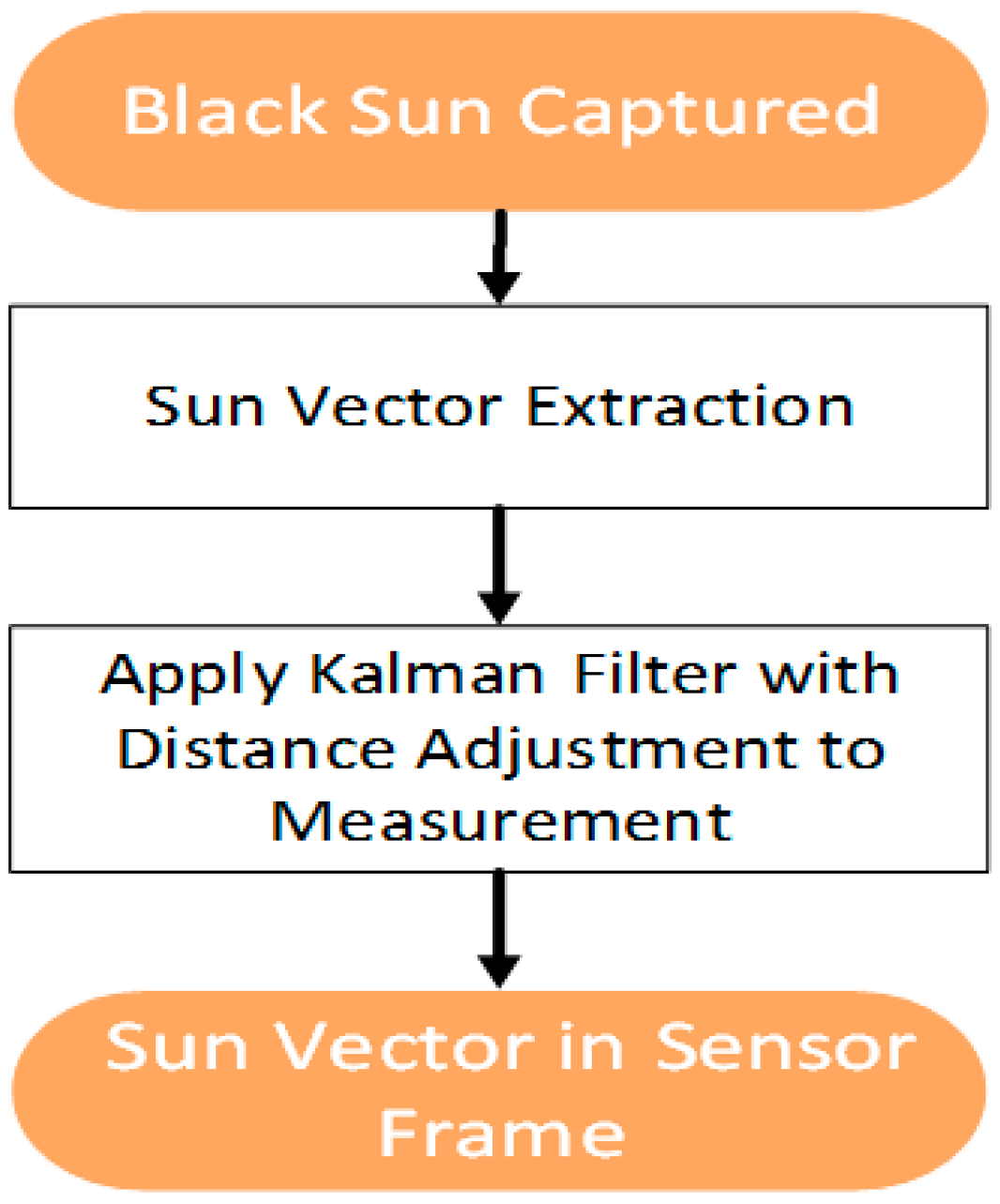

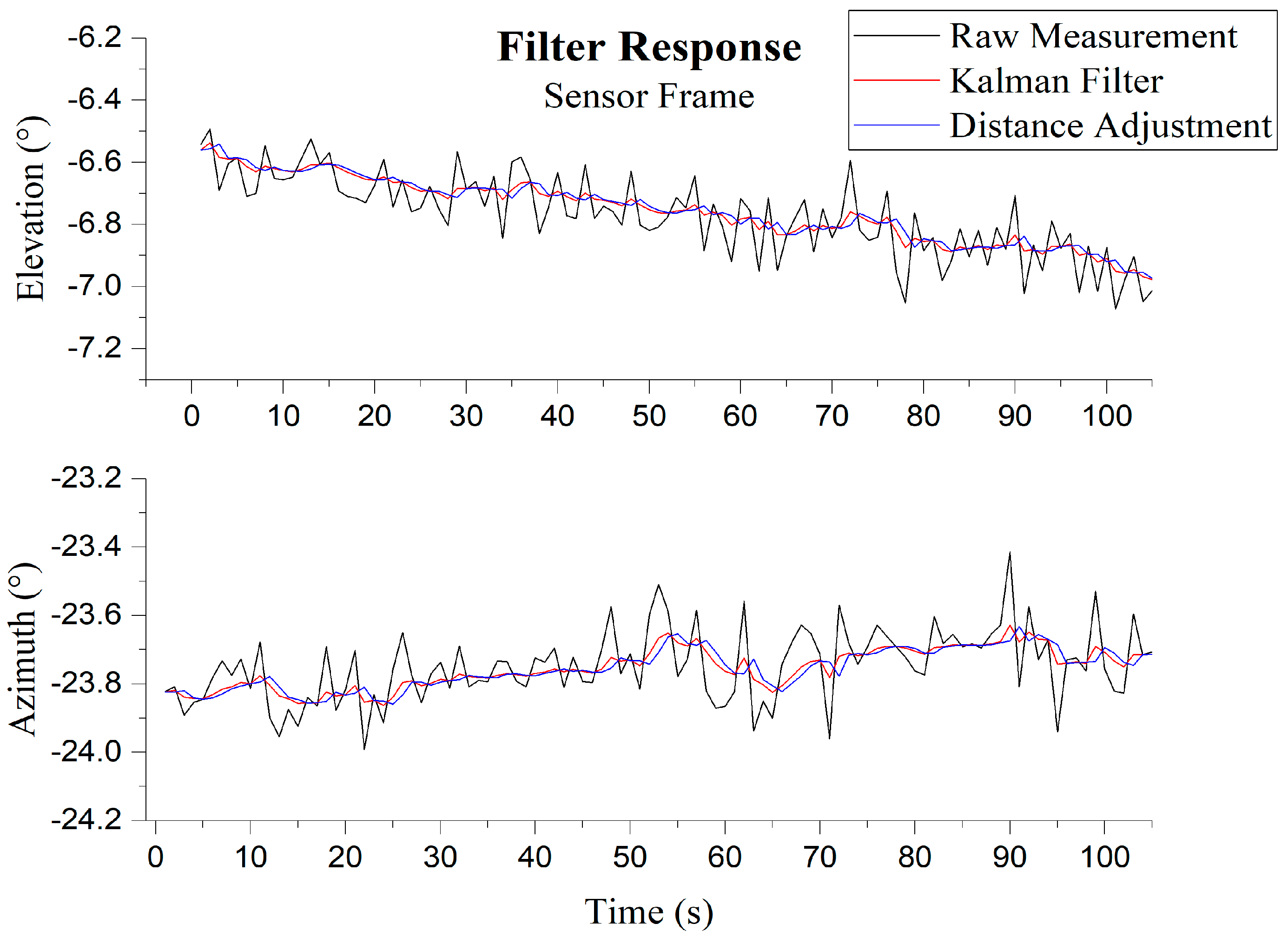

3.2. Filtering of the Sun Vector

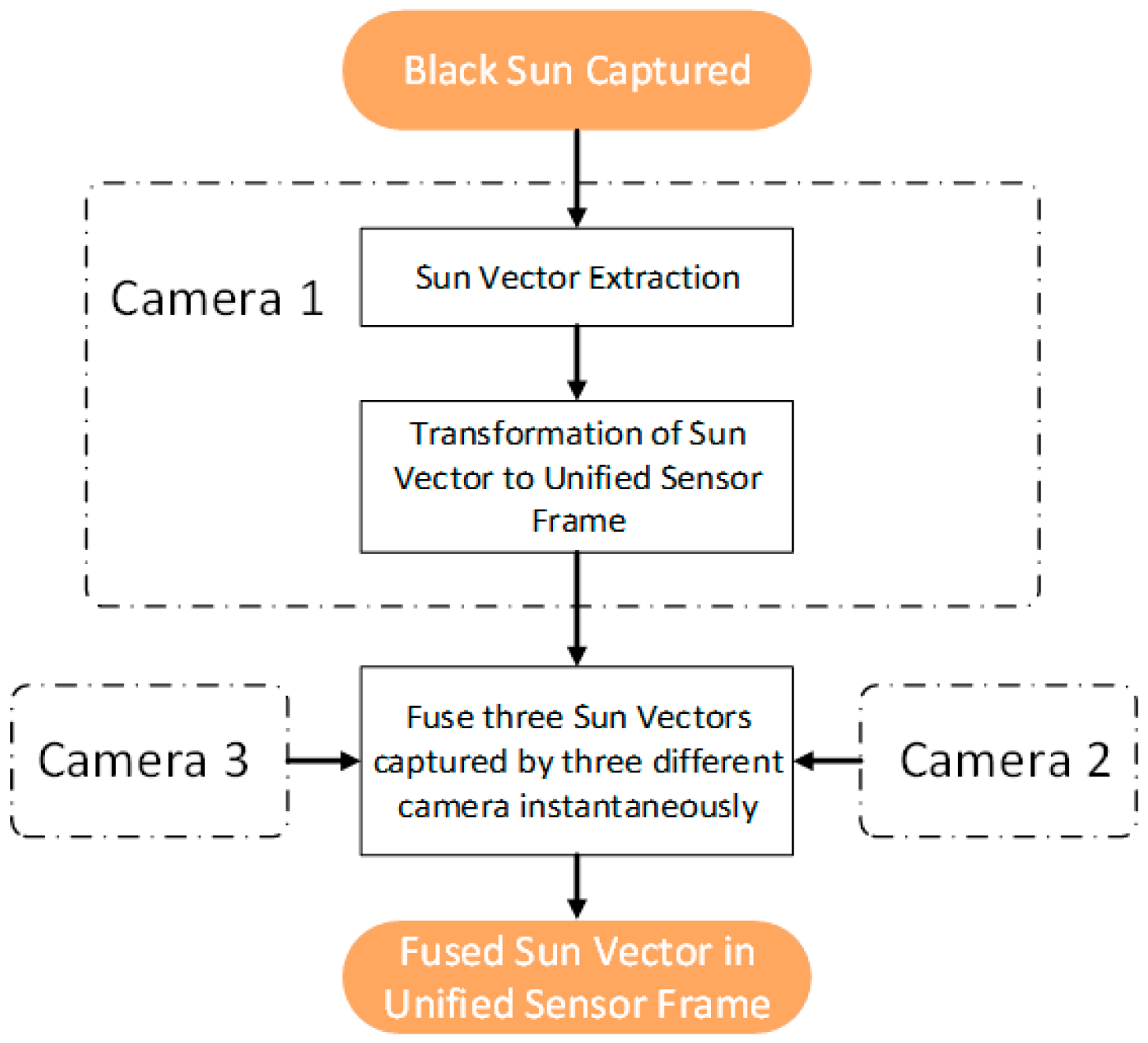

4. Non-Stationary Application

4.1. Approach

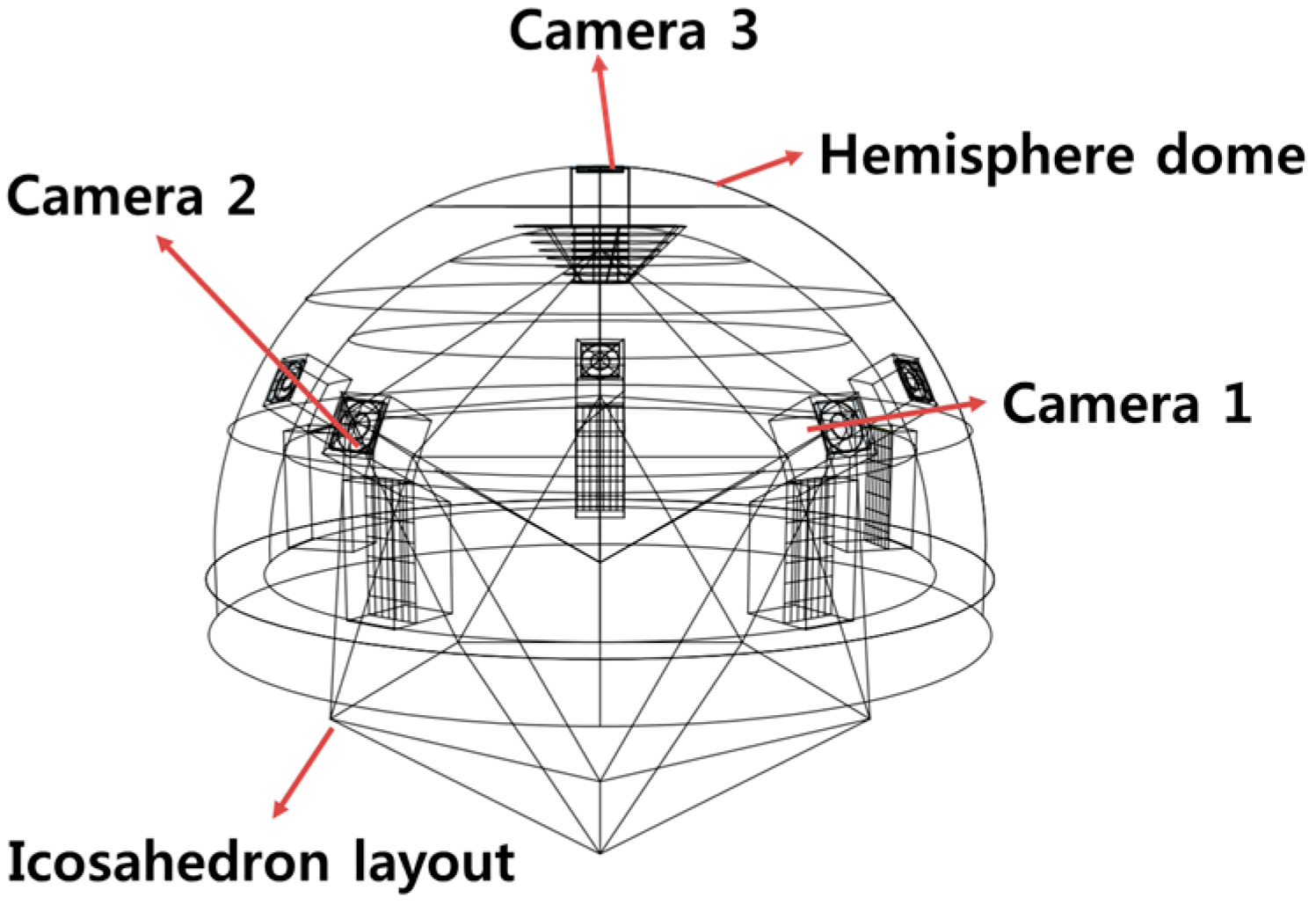

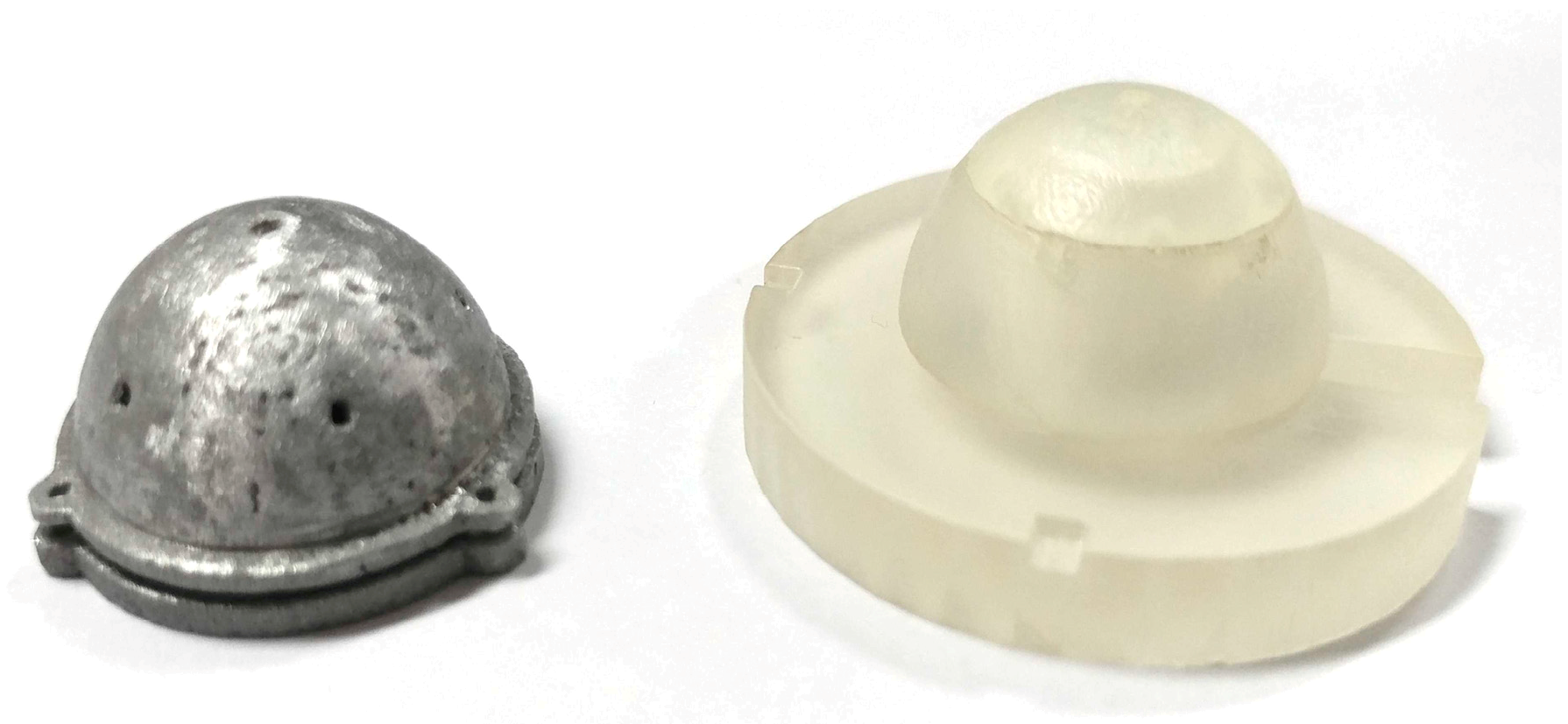

4.2. Icosahedron Design

4.3. Individual Sensor Orientation Estimation

4.4. Sensor Fusion

5. Experimentation

5.1. Black Sun Effect on the Error of Sun Vector Measurement

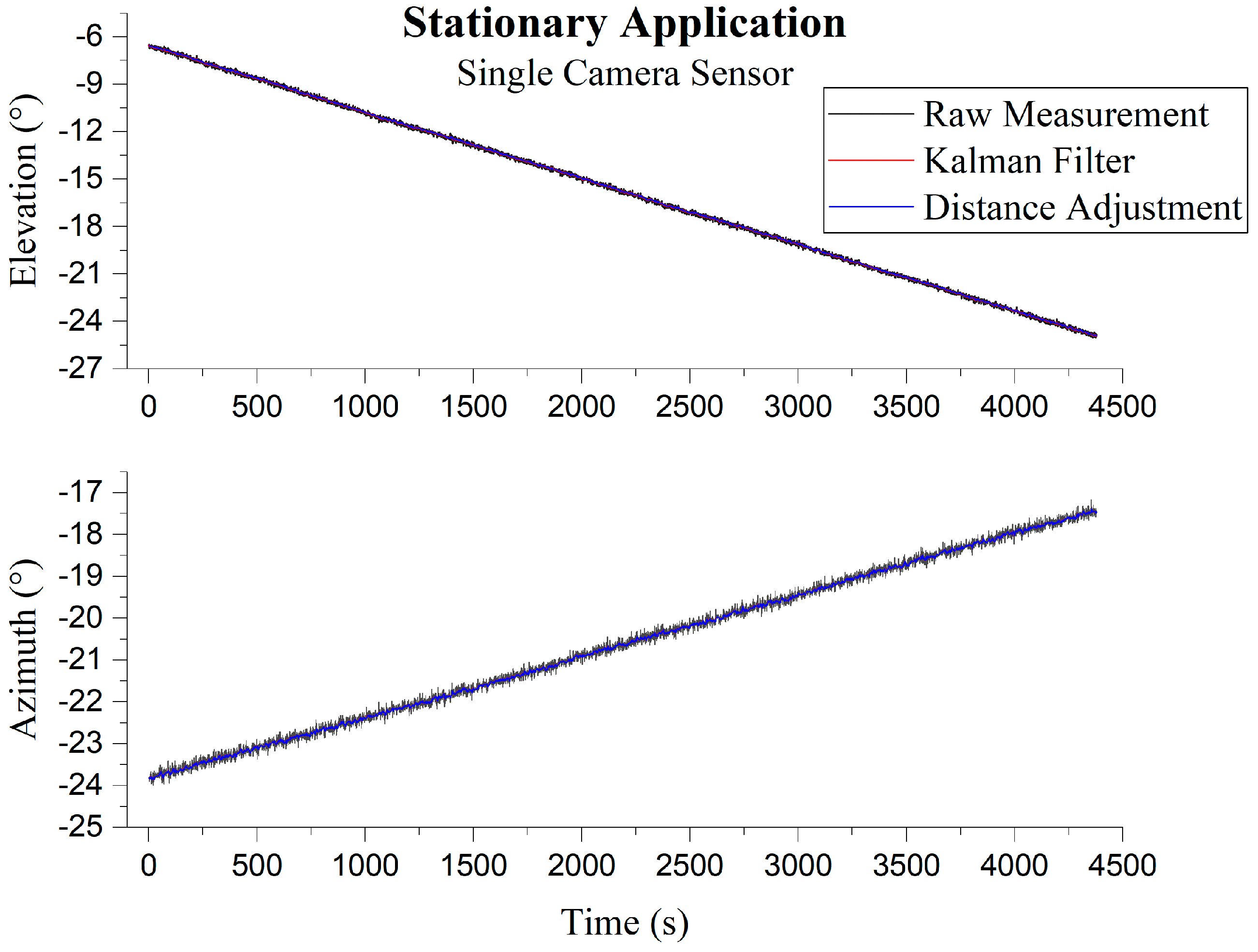

5.2. Stationary Application

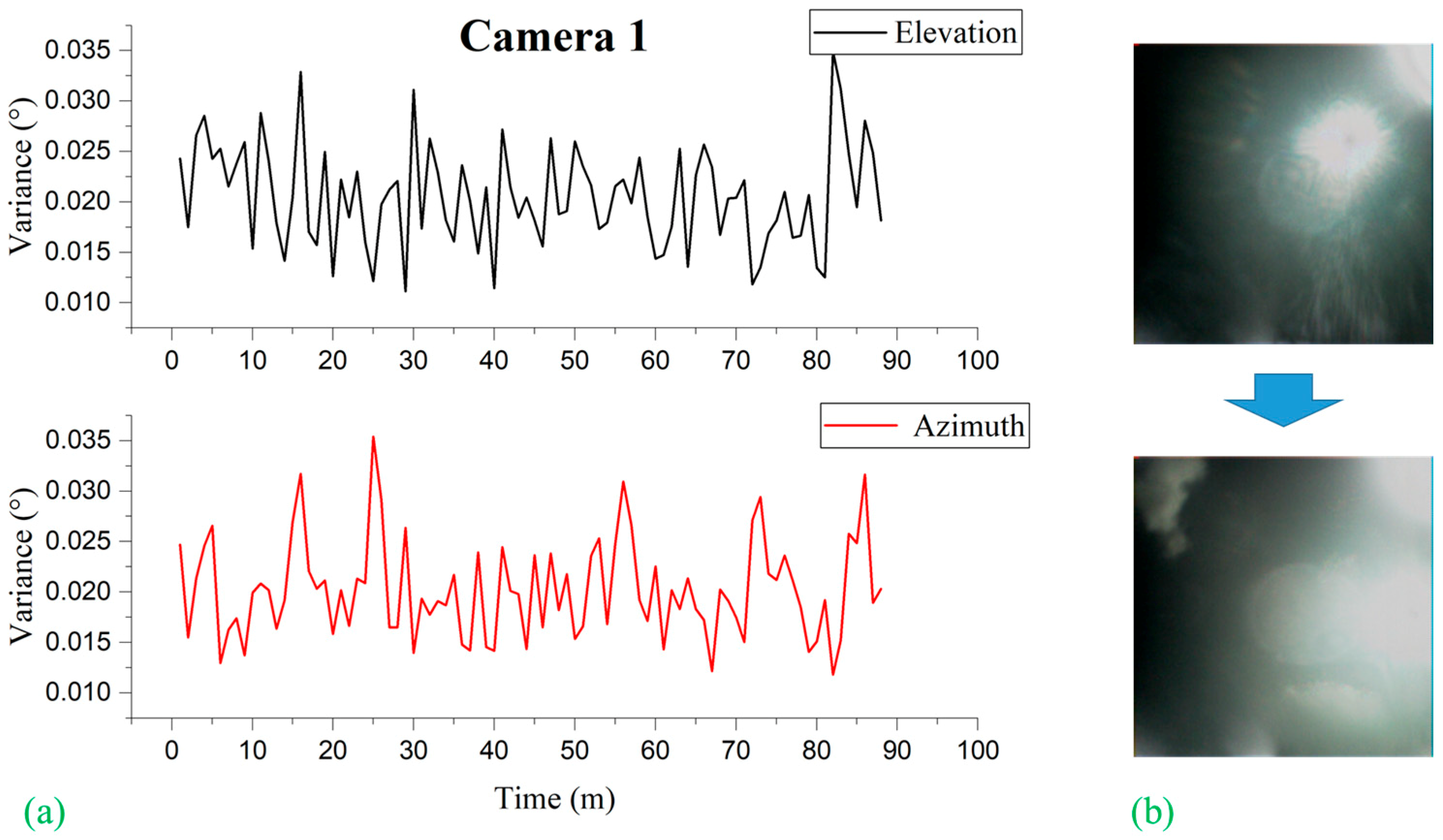

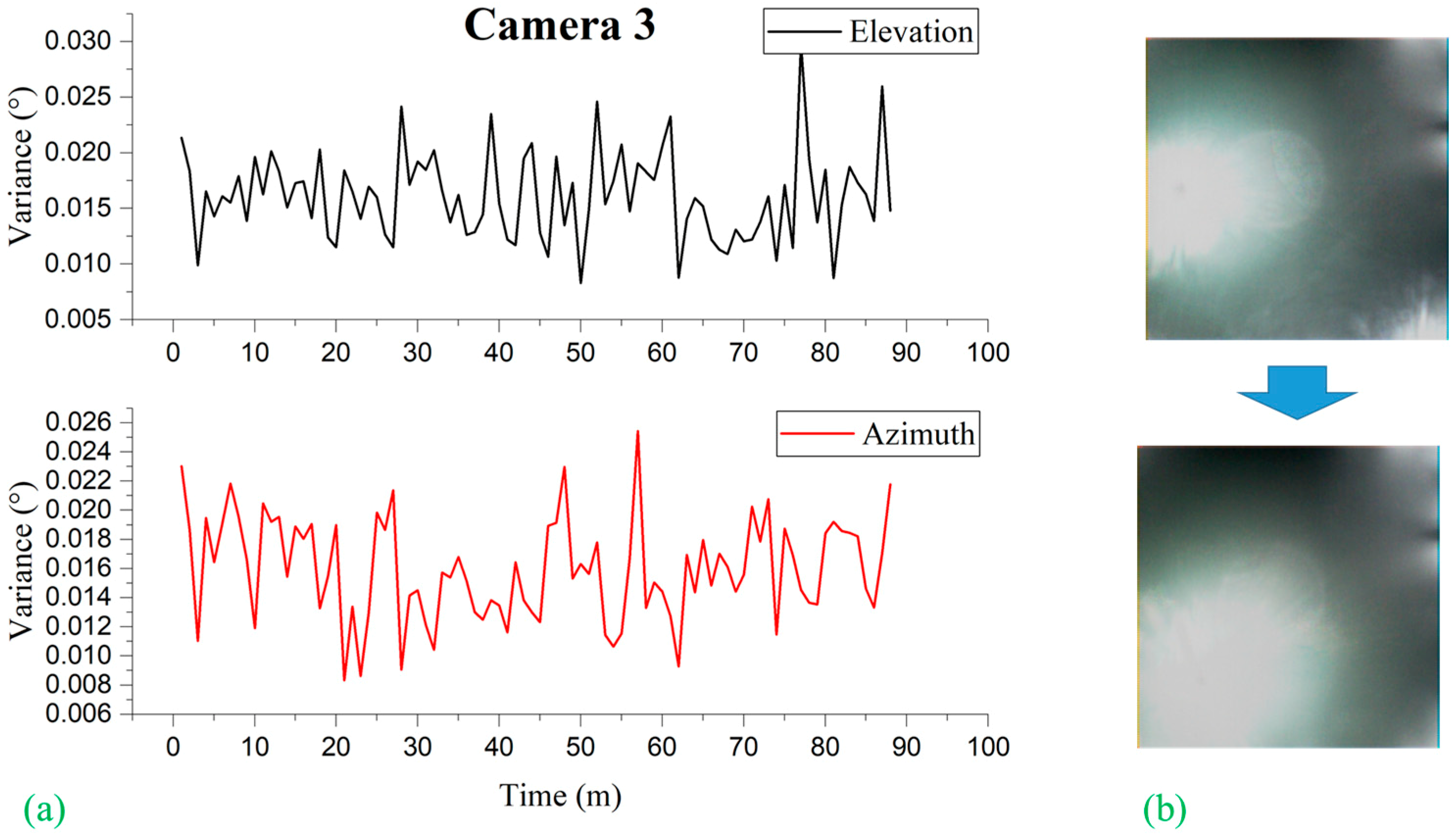

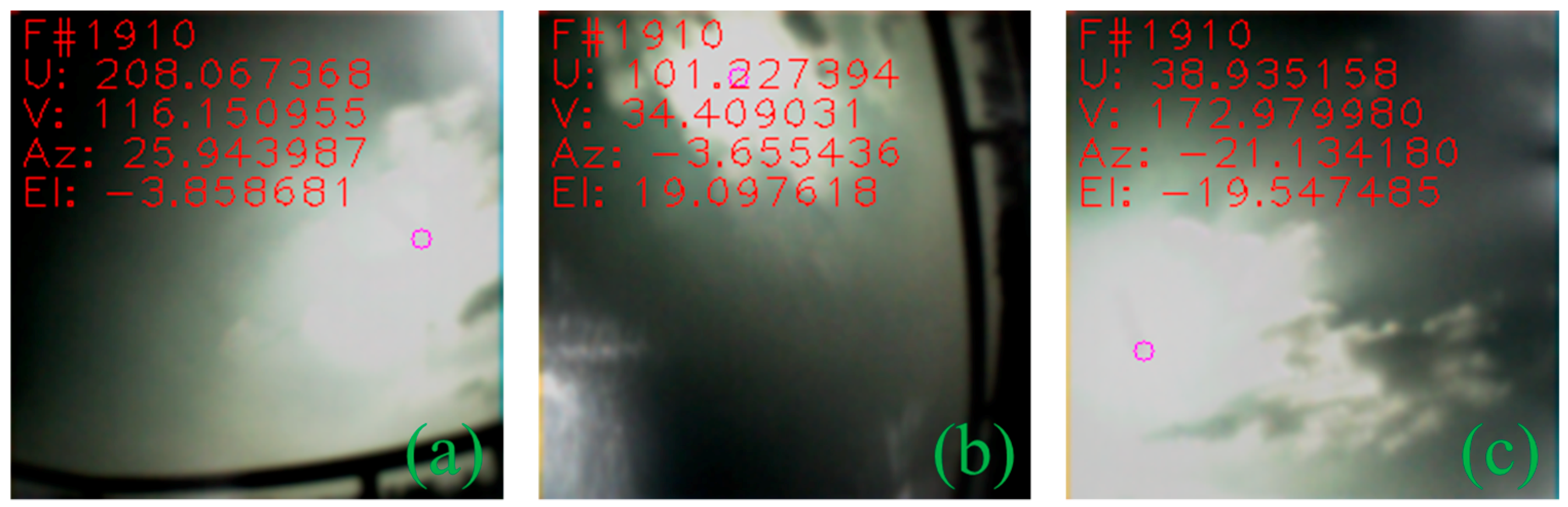

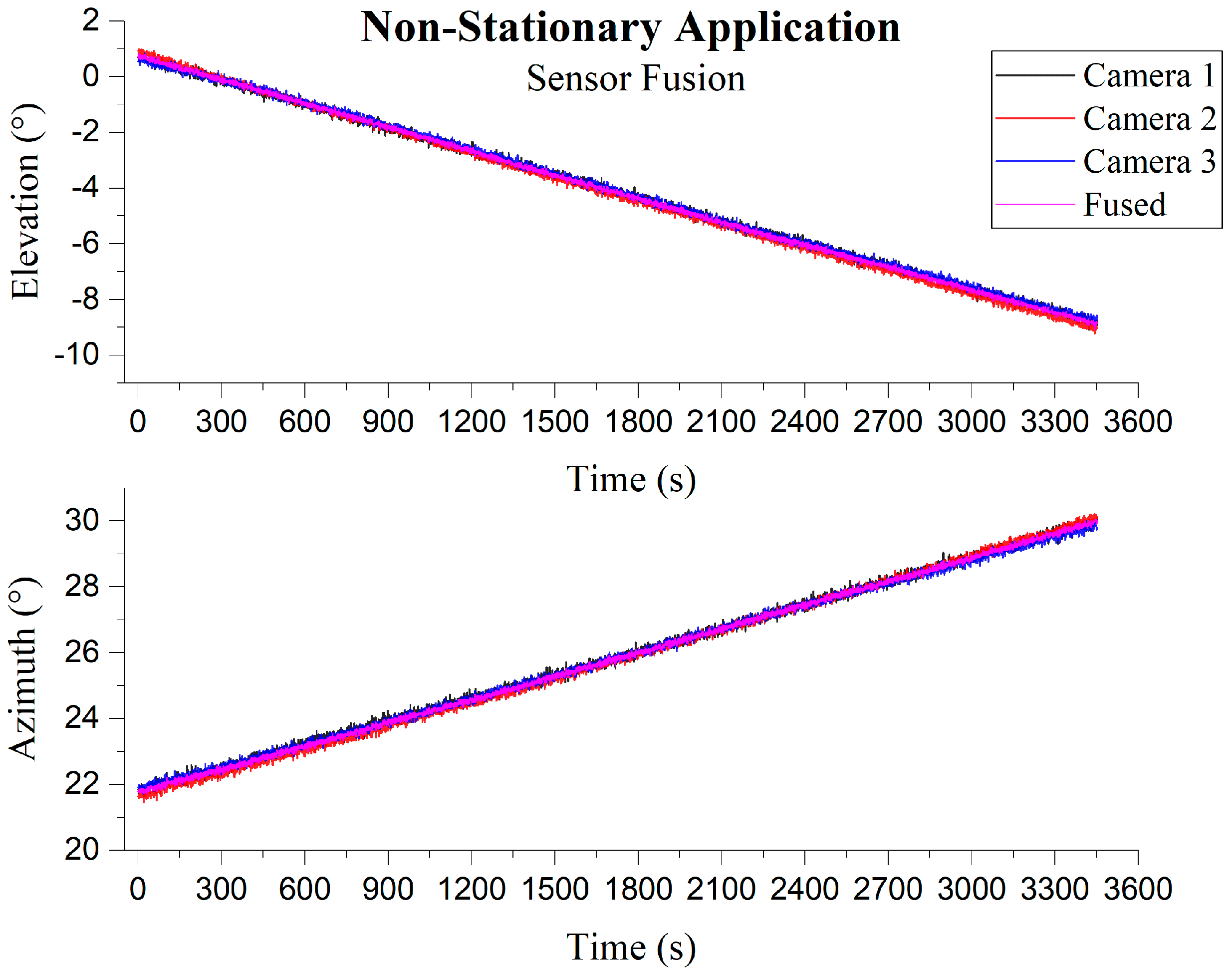

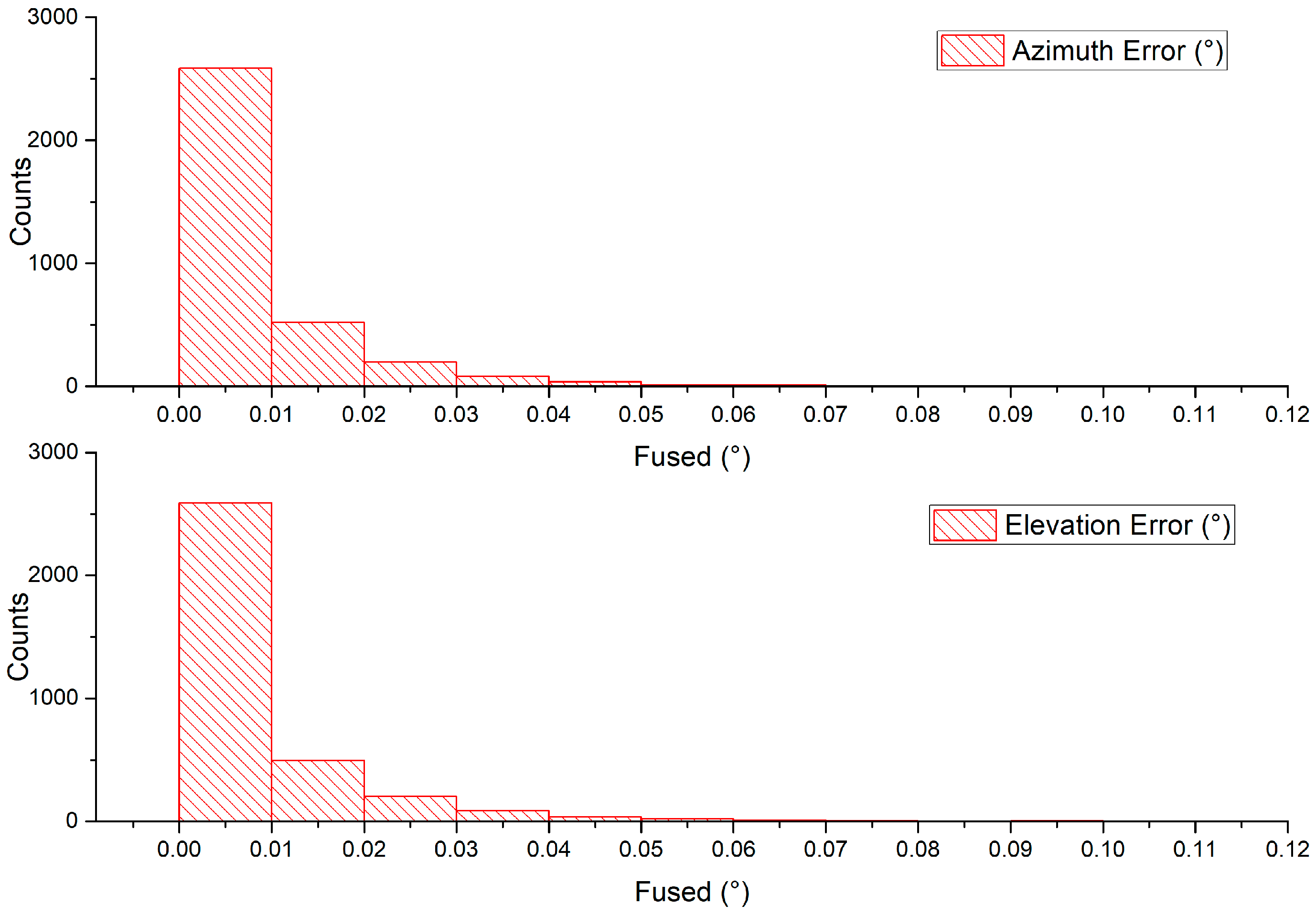

5.3. Non-Stationary Application

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Positive Detection (%) | Circular Hough Transform | Our Method |
|---|---|---|
| Dataset 1 | 9.8501 | 99.7858 |
| Dataset 2 | 16.3339 | 99.8185 |

| RMSE (Standard Error) | Azimuth | Elevation |
|---|---|---|
| Raw Measurement | 0.1250° (0.0884°) | 0.1255° (0.0888°) |
| Kalman Filter | 0.0205° (0.0145°) | 0.0208° (0.0147°) |
| Distance Adjustment | 0.0179° (0.0127°) | 0.0184° (0.0130°) |

| RMSE (Standard Error) | Azimuth | Elevation |
|---|---|---|
| Camera 1 | 0.1429° (0.1010°) | 0.1422° (0.1005°) |
| Camera 2 | 0.1158° (0.0819°) | 0.1095° (0.0775°) |
| Camera 3 | 0.1261° (0.0892°) | 0.1268° (0.0897°) |
| Fused | 0.0713° (0.0504°) | 0.0717° (0.0507°) |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Saleem, R.; Lee, S. Accurate and Cost-Effective Micro Sun Sensor based on CMOS Black Sun Effect. Sensors 2019, 19, 739. https://doi.org/10.3390/s19030739
