Abstract

We present a novel calibration method for a multi-view laser Doppler speed sensing (MLDSS) system. In contrast with the traditional method where only the laser geometry is independently calibrated, the proposed method simultaneously optimizes all the laser parameters and directly associates the parameters with a motion sensing model. By jointly considering the consistency among laser Doppler velocimetry, the laser geometry and a visual marker tracking system, the proposed calibration method further boosts the accuracy of MLDSS. We analyzed the factors influencing the precision, and quantitatively evaluated the efficiency of the proposed method on several data sets.

1. Introduction

Multiview laser Doppler speed sensing (MLDSS) [1] uses several one-dimensional speed measurements to recover the six-degree-of-freedom (6-DOF) motion of an arbitrary rigid body. Such a system has unique advantages, such as the capability to measure untextured and unstructured targets, ultrahigh speed, and ultralow computational cost. An inertial measurement unit [2,3] may have similar characteristics, but would be intrusive since it must be attached to the target. On the other hand, conventional contactless 6-DOF motion-sensing techniques, including computer-vision approaches, usually rely on the target structure [4,5] and texture [6,7], making it difficult to directly reapply them when the scenario changes. In this sense, MLDSS is potentially a more general solution for most motion-sensing tasks, e.g., industrial inspection, user interface [8], and autonomous driving.

It is important to mention that the precision of MLDSS strongly relies on the sensing accuracy of the laser Doppler velocimeter (LDV), the system-layout parameters, and calibration precision. Factors related to the LDV sensing, such as speckle noise [9,10] and laser source drift [11,12] have been well discussed. Hu et al. stated the essential system-layout conditions, and proposed a simple method to calibrate the system parameters [1]. In their approach, each laser was separately calibrated by linearly fitting of the camera-collected 3D laser spot co-ordinates.

A similar calibration principle can be commonly found in the literature for the calibration of galvanoscopic laser systems using various types of sensors [13,14,15]. It makes sense and achieves relatively good accuracy, but it has to be noted that LDV measurements and the system layout are not involved in the process. Hence, we refer to it as “geometric-only calibration”. Although measurement precision is rather sensitive to the accuracy of the calibration parameters, geometric-only calibration minimizes only the error in image-based measurements, but ignores correlation with the motion-reconstruction process.

The inspiration for our approach is derived from the calibration techniques of optical systems and computer vision, in which simultaneously calibrating all elements is rather beneficial in terms of both simplicity and accuracy [16,17]. It is also important to incorporate a system measurement model into the geometrical calibration, as implied in References [18,19]. Based on this idea, we present a novel calibration technique for MLDSS. This is done with a maximum-likelihood estimate (MLE) for the system parameters given the measurements of the LDV, and the motion ground truth provided by an additional 3D tracking vision system. Instead of directly calculating the laser geometry, in this method we sought the best group of laser parameters that minimized motion-estimation error. We validated the proposed method on several different datasets, and an obvious improvement in accuracy was confirmed.

2. MLDSS Parameters

In this section, we briefly describe the basic kinematic formulation in MLDSS [1] and parameterize it for the calibration.

Basic Equations

We discuss a right-handed co-ordinate system with a fixed origin . A rigid body has a 6-DOF speed denoted by , with angular components and linear components .

MLDSS utilizes a simple kinematic model to reconstruct the speed of a target rigid body. When a laser-beam ray hits the target, the one-dimensional velocity along this ray is measured by the Doppler effect. Let the laser be denoted by , where is one point on , and is the direction unit vector. Velocity measurement could then be formulated as:

By combining multiple measurements with the form in Equation (1), the 6-DOF speed could be linearly reconstructed from one-dimensional velocity measurements . The minimum MLDSS implementation with six lasers, as shown in Figure 1, is written as:

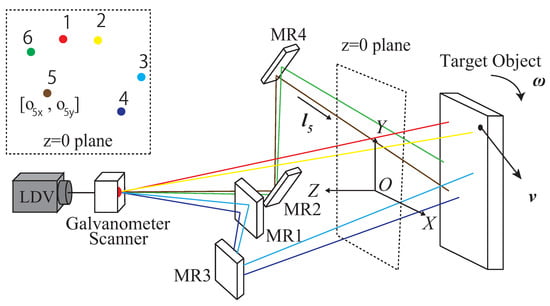

Figure 1.

System to be calibrated and its parameters. Laser beams emitted from the laser Doppler velocimeter (LDV) are reflected by the galvanometer scanner and mirror array MR1~MR4, and finally hit a moving-target object. By scanning the laser on the target surface, the six-degree-of-freedom (6-DOF) speed of the target could be reconstructed. Parameters consist of laser geometry, denoted by the x and y co-ordinates on the z = 0 plane, and the laser-direction angles.

We use A to denote the leftmost matrix, and to denote the rightmost vector in Equation (2). Matrix A fully describes the system, but the dimension of the parameter space is smaller than the number of matrix elements. Consider the row of A, . It represents the measurement process of laser , determined by and . Note that is a point on a straight line, and is a unit vector. Both and have only two DOFs, so that has four DOFs. Under the optical system layout and the specified co-ordinate system illustrated in Figure 1, we let and , without loss of generality. Then, the parameters of the system could be denoted by

where P consists of 24 elements.

3. MLDSS Calibration

3.1. Geometric-Only Calibration

Note that P consists of only the geometrical parameters of the lasers. We can directly calculate each laser’s spatial position, i.e., , in the calibration.

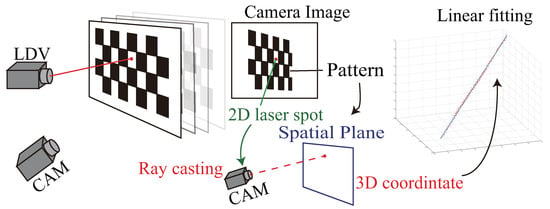

We first briefly describe this geometric-only calibration method [1,14,15]. As illustrated in Figure 2, a planar board with printed pattern such as a chessboard is used as the calibration object. When the laser hits the object, both the pattern and the laser spot are captured by a camera. With no loss of generality, we assume the camera is already calibrated. The position of the pattern can be calculated from the image [20], and the 3D co-ordinates of the laser spot can be computed by casting a ray from the camera to the pattern plane. This procedure is repeated, and the laser parameters are acquired by linear fitting of multiple 3D point co-ordinates. Note that a similar principle can be used with a different sensor, such as a depth camera [13].

Figure 2.

Geometric-only calibration procedures.

Apparently, this method has geometrical errors from sources such as the image processing error and lens distortion. Here, we go one step farther to see the error of motion reconstruction. Consider Equation (2). We can split matrix A into a ground-truth matrix and a distortion matrix . The motion reconstruction error related to the calibration error is then denoted by . Comparing with the error introduced by the LDV measurement denoted by , the calibration error introduces an unbounded error that linearly increases when target speed becomes larger. Hence, in the case of sensing large speed, calibration accuracy could be much more important than LDV measurement accuracy. However, the geometric-only methods only minimize the geometric error (e.g., reprojection error), so that the motion-reconstruction error is prone to larger target speed and systematic errors of the calibration system.

The intuition here, as we claimed in Section 1, is that all laser parameters should be jointly optimized, and motion-reconstruction error should be minimized instead of laser geometry error, so that bounded motion-reconstruction accuracy could be expected.

3.2. Statistical Calibration by Minimizing Motion-Reconstruction Error

Hence, in this paper, the calibration of MLDSS is described as how to find the best set of parameters P that generate the most accurate 6-DOF speed estimation under the current system layout, given LDV measurements .

To simplify the discussion, we divided motion sensing into discrete frames. First, assume that we know the ground-truth position of the target object at each frame k. It is denoted by in the space of Lie algebra, where is the orientation and is the location. Simultaneously, the MLDSS system measures velocities , respectively from each laser.

Note that long-term speed integration would inevitably introduce larger and time-dependent error, a short-time interval may result in undesired peaks in the estimated speed, and interframe subtraction helps to cancel systematic errors in a 3D tracking system. We formulate the problem with interframe motion increments. The pose change between frame k and is thus represented by , where

denotes the conversion from a rotation vector to the corresponding rotation matrix, known as the Rodrigues transform [21].

Knowing that matrix A can be uniquely determined by parameter set P, the 6-DOF speed of the target measured by the MLDSS system at frame k could be calculated by . Let . The interframe pose-change estimation of the MLDSS system is thus written as

We use to denote the error of one frame. With the assumption that is locally linear, and the LDV measurement error follows a Gaussian distribution, the MLE of the MLDSS system is the solution of the following nonlinear least-square problem:

We solve Equation (6) using the Levenberg–Marquardt algorithm [22]. It requires an initial guess of P, which can be provided by the geometric-only method as introduced in Section 3.1.

3.3. System Setup and Nonideal Factors

It has to be mentioned that the description in Section 3.2 omitted some details in order to clearly state the proposed method. In this subsection, we describe the practical system for calibration and reformulate the complete calibration problem.

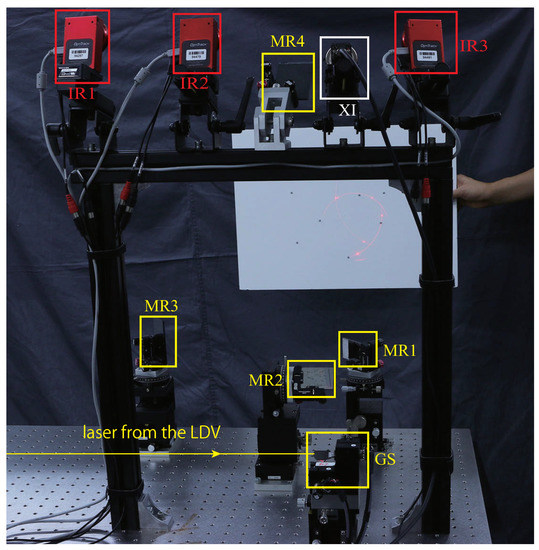

Figure 3 illustrates the system for calibration. It consists of the MLDSS system to be calibrated [1], a marker-based 3D tracking system composed of three IR cameras (Flex 3, Natural Point, Inc., Corvallis, OR, USA), and a monochrome camera (MQ003MG-CM, XIMEA). The 3D tracking system uses active infrared illumination and the stereo method to track the 3D location of retroreflective markers. Four or more asymmetrically placed markers on a rigid body can be tracked, with the 6-DOF pose denoted by Lie Algebra . This pose is taken as the ground truth in our discussion, since most of the systematic error is cancelled by interframe subtraction.

Figure 3.

Experimental setup. Photograph of the system and the experimental condition. MR1~MR4 are the mirrors, and GS is the galvanometer scanner. IR1~IR3 are IR illumination sources and cameras. XI is a monochrome camera. Calibration object was moved manually 1 m away from the system.

The four cameras were calibrated [23,24] beforehand. The IR camera’s trigger signal was inverted each time the LDV measurement was sampled, so that the frame rate of the MLDSS was roughly twice that of the cameras. Throughout the discussion in this paper, the MLDSS system ran at about 200 Hz, and the tracking system ran at 100 Hz. All devices were controlled by a desktop workstation (Dell T7910 workstation with Intel E5-2687W v4 @3GHz). Note that such a high-spec computer is not necessary for this calibration. Both motion reconstruction and optimization in this paper are computationally friendly and can run on common tabletop computers.

We address the nonideal factors in this practical calibration system as follows.

Temporal misalignment. As we described above, the 3D tracking system runs slower than the MLDSS system so that they are temporally misaligned. Using f to denote the frame index of MLDSS, and to denote the corresponding time, we align the MLDSS measurements to the 3D tracking system. Equation (5) is thus reformulated by linearized integration and interpolation:

where

Note that although the two systems are synchronized by hardware connection, unintended temporal misalignment might still exist due to the sampling time of the LDV and the exposure time of the cameras. This misalignment causes an error in and is independent of parameters P that finally influence calibration precision. To solve it, we modeled the temporal misalignment as a fixed offset parameter . Equation (8) is thus rewritten as

Mechanical velocity offset. A galvanometer scanner is used for controlling laser direction. While we assumed the scanner fully stopped when sampling the LDV measurement, the scanner could possibly slightly oscillate and cause error in the speed. Because the control sequence of the galvanometer is fixed and the optical path of each laser is different, this offset can be regarded as systematic to each laser. We thus refined speed reconstruction as

where is the vector composed of the velocity offset for each laser due to the corresponding mechanical oscillation.

Weighing error terms. In solving the multiobject optimization problem denoted by Equation (6), it is important to balance the influence of each error term and data samples in order to not let partial data be dominant. Here, we introduce a diagonal weight matrix W. Error term for each sample k is thus:

Considering the difference in the unit and the distance from the calibration object to the origin of the system, is ad hoc determined as the diagonal elements of W in our experiment, but note that this weight should be adjusted with a change in the co-ordinate system.

Outlier Measurements. Speckle noise [10] is one of the main noise sources of the LDV. It causes undesired short-term peaks in velocity measurements. Such noise can largely be relieved in the low-frequency domain with the use of a tracking filter [1,9]. However, considering signal intensity is low due to potential loss in the laser focus, there occasionally is speckle noise in measurements. In order to reduce its influence on calibration accuracy, a modified Huber kernel [25] is introduced to the problem:

where outlier errors grow linearly instead of quadratically.

Complete maximum likelihood estimation. With the above consideration, we can naturally extend Equation (6) by estimating the complete set of parameters:

where is defined by injecting Equations (7), (9), (10) into Equation (12). Equation (13) is solved with the Levenberg–Marquadt algorithm [22] as well. The initial guess of P is provided by geometric-only calibration. and are simply set to zero.

3.4. Summary

In this section, the calibration of MLDSS with a pratical system is described and formulated. The proposed method states the calibration problem with minimization of the motion error, which is essentially different from conventional geometrical methods [1,14,15]. The procedures of the proposed calibration are summarized as follows:

- Make a calibration object with four or more asymmetric placed markers;

- register the calibration object in the 3D tracking system as a trackable rigid body;

- simultaneously capture target motion and speed with the MLDSS and the 3D tracking system, and build a dataset following the instructions in Section 4;

- estimate initial parameters using the geometric-only method introduced in Section 3.1 (optional); and

- refine all parameters by solving Equation (13).

4. Data Collection

It is straightforward to solve Equation (13) with the collected motion data. However, inappropriate data selection would provide insufficient information, and finally result in an ill-conditioned optimization problem. We study the data collection for our method in this section.

Check the derivatives of Equation (2). Jacobian describes the gradient direction in the parameter space of one data sample. Let the parameter of laser be denoted by , and the column of be . Knowing that A is a function of P, we write the partial Jacobian as:

where is given by Equation (15).

is the stack of for . It can be observed that some submatrices of have only zero elements. It means that a certain kind of motion error would provide no additional constraints for some parameters. Especially from Equation (14), error in the translation components does not provide any constraint for position parameters and , and the error of , provides no constraint for direction angle .

More intuition is provided by Table 1, where we simulated the norms of the submatrices of using real calibration parameters. , , and denote the motion patterns of subtle speed , a rotational speed where , and a translational speed where , respectively. and denote the set of direction parameters and position parameters for all lasers.

Table 1.

∞-norm of submatrices under different motions.

Table 1 demonstrates how the motion pattern influences gradient direction. A large translational motion means a larger gradient in the direction parameters and a smaller gradient in position parameters . Jointly consider this with Equation (14). Translational motion error in one direction cannot fully condition the parameters, but the absolute value of the gradient could be much larger than the rotational motion. This might finally result in overfitting to a partial dataset, while the other part of the calibration data contributes little to the optimization.

Two implications could be derived to help such a situation. First, contribution to the optimization problem from different samples should be balanced considering the corresponding gradient. This is done by reweighing error terms as we introduced in Section 3.3. Second, multiple motion patterns should be included and evenly distributed in the dataset in order to avoid degeneration configuration.

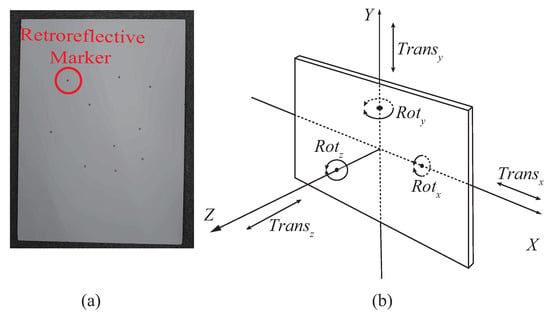

Based on the above discussion, we prepared the dataset for solving Equation (13) with a calibration object as illustrated in Figure 4a In the dataset, the motion of the calibration object was evenly distributed into six different patterns as illustrated in Figure 4b. During data collection, the calibration object was manually moved roughly 1 m away from the measurement systems, as shown in Figure 3.

Figure 4.

(a) Calibration object, a white board with ten randomly but asymmetrically placed retroreflective markers. (b) Six motion patterns of the calibration object in its local co-ordinate system, of which the calibration data are composed.

5. Evaluation

5.1. Cross-Validation

We validated the proposed method with a real dataset, consisting of 4500 sample frames for the calibration, and 1500 frames for the test. Both the calibration set and the test set consisted of six evenly distributed back-and-forward motion patterns, as shown in Figure 4b. This data amount far exceeds the necessary amount for the calibration, but it helps to reduce data noise. Note that the proposed system runs in high speed. Specifically, the marker tracking system runs at 100 Hz, and the MLDSS runs at 200 Hz. Hence, it takes no more than 10 min to collect all the data and finish optimization.

We compared the proposed method with the geometric-only calibration method [1], where the camera-calibration reprojection error was below 0.081 pixel, and the 3D point-to-line root mean square (RMS) distance in the linear fitting was 0.734 mm (100 points for each laser). Note this error is already smaller than that of the state-of-art geometric-only method [13] thanks to the use of a long-focus camera lens. Since in the MLDSS scenario we care most about motion reconstruction, while geometrical error is of minor importance, we compared the correctness of the reconstructed-motion increments in the test set.

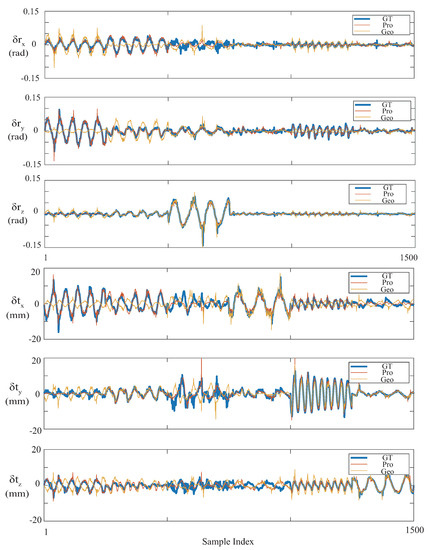

Figure 5 illustrates the six motion components in the reconstruction results of all the samples in the test set. We qualitatively confirmed that motion reconstruction was improved with the proposed method in this figure. While the geometric-only method achieved relatively good accuracy in major motion components, such as in the translational motion, the capability of measuring subtle and rotational motions degraded. With Equation (14), we consider the reason for this as the error in the wrong estimation of positional parameters and , and a joint effect of geometrical errors of multiple lasers. In contrast, the proposed method achieved better accuracy in almost all motion components, as shown in the magnified parts of the figure.

Figure 5.

Motion reconstruction results of the test set. Specifically, this figure includes the rotational components (top three subfigures) and translational components (bottom three subfigures) of the interframe motion increments in the test set. It was confirmed that the proposed method obviously outperforms the geometric-only method.

To quantitatively demonstrate the results, RMS error between the motion-reconstruction result and the ground truth is shown in Table 2. We divided the test data into six parts by major motion pattern, as demonstrated in Figure 4b. From Table 2, it is shown that the proposed method achieved smaller motion-reconstruction error in almost all motion patterns. The RMS errors of both the rotational and translational components across the whole dataset were reduced by more than 50%, which we consider significant improvement of accuracy.

Table 2.

Root mean square (RMS) errors in the test data.

5.2. Sensing Daily Object

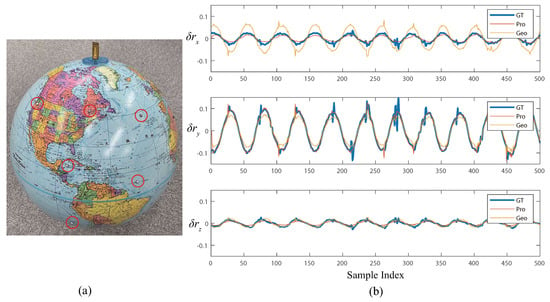

To show that the proposed method is also valid for the speed estimation of daily objects besides the calibration board, we briefly tested the new parameters on measuring a rotating globe as shown in Figure 6a. Ground-truth motion was also measured by the 3D marker tracking system. Figure 6b shows the result. The proposed method achieved a more accurate result than the geometric-only method. Rotational RMS error was 0.0245 rad with the proposed calibration parameters, while that of the conventional method was 0.0388 rad.

Figure 6.

(a) Used test globe. Retroreflective markers (red-circled) were attached on the globe to track its rotation. (b) Motion-reconstruction results (rotational part).

6. Conclusions

In this paper, we described a new calibration method for MLDSS. In contrast with the conventional method where only laser geometry is independently calibrated from images, the proposed method simultaneously optimizes all laser parameters and directly associates them with the motion-sensing model. We discussed in detail the system model and factors related to calibration accuracy, and proposed a maximum-likelihood estimation-based method for solving a calibration problem. Finally, higher accuracy was confirmed by cross-validation from qualitative and quantitative evaluation on a 1500 sample test set compared with the state-of-art method.

Author Contributions

Conceptualization, Y.H. and L.M.; data curation, Y.H.; formal analysis, Y.H.; funding acquisition, Y.W.; investigation, Y.H.; methodology, Y.H.; project administration, L.M. and Y.W.; software, Y.H.; supervision, M.I.; validation, Y.H.; visualization, Y.H.; writing—original draft, Y.H.; writing—review and editing, Y.H., L.M., and Y.W.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hu, Y.; Miyashita, L.; Watanabe, Y.; Ishikawa, M. Robust 6-DOF motion sensing for an arbitrary rigid body by multi-view laser Doppler measurements. Opt. Express 2017, 25, 30371–30387. [Google Scholar] [CrossRef] [PubMed]

- Won, S.P.; Golnaraghi, F.; Melek, W.W. A Fastening Tool Tracking System Using an IMU and a Position Sensor With Kalman Filters and a Fuzzy Expert System. IEEE Trans. Ind. Electron. 2009, 56, 1782–1792. [Google Scholar] [CrossRef]

- Yi, C.; Ma, J.; Guo, H.; Han, J.; Gao, H.; Jiang, F.; Yang, C. Estimating Three-Dimensional Body Orientation Based on an Improved Complementary Filter for Human Motion Tracking. Sensors 2018, 18, 3765. [Google Scholar] [CrossRef] [PubMed]

- Besl, P.J.; McKay, N.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256. [Google Scholar] [CrossRef]

- Chetverikov, D.; Svirko, D.; Stepanov, D.; Krsek, P. The Trimmed Iterative Closest Point algorithm. In Proceedings of the 16th International Conference on Pattern Recognition, Quebec City, QC, Canada, 11–15 August 2002; Volume 3, pp. 545–548. [Google Scholar] [CrossRef]

- Garon, M.; Lalonde, J. Deep 6-DOF Tracking. IEEE Trans. Vis. Comput. Gr. 2017, 23, 2410–2418. [Google Scholar] [CrossRef] [PubMed]

- Engel, J.; Koltun, V.; Cremers, D. Direct Sparse Odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 611–625. [Google Scholar] [CrossRef] [PubMed]

- Hu, Y.; Miyashita, L.; Watanabe, Y.; Ishikawa, M. GLATUI: Non-intrusive Augmentation of Motion-based Interactions Using a GLDV. In Proceedings of the Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems (CHI E ’18), Montreal, QC, Canada, 21–26 April 2018; ACM: New York, NY, USA, 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Vass, J.; Šmíd, R.; Randall, R.; Sovka, P.; Cristalli, C.; Torcianti, B. Avoidance of speckle noise in laser vibrometry by the use of kurtosis ratio: Application to mechanical fault diagnostics. Mech. Syst. Signal Process. 2008, 22, 647–671. [Google Scholar] [CrossRef]

- Rothberg, S. Numerical simulation of speckle noise in laser vibrometry. Appl. Opt. 2006, 45, 4523–4533. [Google Scholar] [CrossRef] [PubMed]

- Chang, Y.H.; Liu, C.S.; Cheng, C.C. Design and Characterisation of a Fast Steering Mirror Compensation System Based on Double Porro Prisms by a Screw-Ray Tracing Method. Sensors 2018, 18, 4046. [Google Scholar] [CrossRef] [PubMed]

- Liu, C.-S.; Lin, K.-W. Numerical and experimental characterization of reducing geometrical fluctuations of laser beam based on rotating optical diffuser. Opt. Eng. 2014, 53, 122408. [Google Scholar] [CrossRef]

- Sels, S.; Bogaerts, B.; Vanlanduit, S.; Penne, R. Extrinsic Calibration of a Laser Galvanometric Setup and a Range Camera. Sensors 2018, 18, 1478. [Google Scholar] [CrossRef] [PubMed]

- Tu, J.; Zhang, L. Effective Data-Driven Calibration for a Galvanometric Laser Scanning System Using Binocular Stereo Vision. Sensors 2018, 18, 197. [Google Scholar] [CrossRef] [PubMed]

- Wissel, T.; Wagner, B.; Stüber, P.; Schweikard, A.; Ernst, F. Data-Driven Learning for Calibrating Galvanometric Laser Scanners. IEEE Sens. J. 2015, 15, 5709–5717. [Google Scholar] [CrossRef]

- Quéau, Y.; Leporcq, F.; Alfalou, A. Design and simplified calibration of a Mueller imaging polarimeter for material classification. Opt. Lett. 2018, 43, 4941–4944. [Google Scholar] [CrossRef] [PubMed]

- Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle Adjustment—A Modern Synthesis. In Vision Algorithms: Theory and Practice; Triggs, B., Zisserman, A., Szeliski, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2000; pp. 298–372. [Google Scholar]

- Vasconcelos, F.; Barreto, J.P.; Nunes, U. A Minimal Solution for the Extrinsic Calibration of a Camera and a Laser-Rangefinder. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2097–2107. [Google Scholar] [CrossRef] [PubMed]

- Scaramuzza, D.; Harati, A.; Siegwart, R. Extrinsic self calibration of a camera and a 3D laser range finder from natural scenes. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS’07), San Diego, CA, USA, 29 October–2 November 2007; pp. 4164–4169. [Google Scholar]

- Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An Accurate O(n) Solution to the PnP Problem. Int. J. Comput. Vis. 2008, 81, 155. [Google Scholar] [CrossRef]

- Trucco, E.; Verri, A. Introductory Techniques for 3-D Computer Vision; Prentice Hall: New Jersey, NJ, USA, 1998; Volume 201. [Google Scholar]

- Marquardt, D. An Algorithm for Least-Squares Estimation of Nonlinear Parameters. J. Soc. Ind. Appl. Math. 1963, 11, 431–441. [Google Scholar] [CrossRef]

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334. [Google Scholar] [CrossRef]

- Heikkila, J.; Silven, O. A four-step camera calibration procedure with implicit image correction. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Juan, PR, USA, 17–19 June 1997; pp. 1106–1112. [Google Scholar] [CrossRef]

- Huber, P.J. Robust Estimation of a Location Parameter. Ann. Math. Stat. 1964, 35, 73–101. [Google Scholar] [CrossRef]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).