Mobile User Indoor-Outdoor Detection through Physical Daily Activities

Abstract

1. Introduction

- (1) An empirical study was performed to introduce a universally available method that uses the built-in inertial measurement unit (IMU) sensors of a smartphone, without any additional infrastructure, to detect the user's indoor/outdoor (IO) state in the wild.

- (2) People use their smartphones in their own ways, and the number of activities a person performs during a day can differ with age and gender [27]. Therefore, the effect of imbalanced data on user IO state recognition is analyzed.

- (3) The sensitivity and discriminability of the features were measured to provide an in-depth understanding of how dimensionality reduction affects the recognition rates of the detectors on each activity dataset.
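The text does not state how feature discriminability was quantified. One common and simple measure is the Fisher score, which divides the between-class scatter of a feature by its within-class scatter. The sketch below computes it with NumPy for a feature matrix `X` (windows × features) and IO labels `y`; the helper name `fisher_scores` and the choice of the Fisher score itself are assumptions for illustration, not the authors' procedure.

```python
import numpy as np


def fisher_scores(X, y):
    """Fisher score per feature column (hypothetical helper, not from the paper).

    score_j = sum_c n_c * (mean_cj - mean_j)^2 / sum_c n_c * var_cj
    Higher scores mean the feature separates the classes (e.g., indoor/outdoor) better.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    overall_mean = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for label in np.unique(y):
        Xc = X[y == label]
        n_c = Xc.shape[0]
        between += n_c * (Xc.mean(axis=0) - overall_mean) ** 2
        within += n_c * Xc.var(axis=0)
    scores = np.zeros(X.shape[1])
    nonzero = within > 0
    scores[nonzero] = between[nonzero] / within[nonzero]
    # Zero within-class spread but nonzero between-class spread: perfectly separating feature.
    scores[~nonzero & (between > 0)] = np.inf
    return scores


if __name__ == "__main__":
    X = [[0.0, 1.0], [0.1, 2.0], [5.0, 1.0], [5.1, 2.0]]
    y = ["indoor", "indoor", "outdoor", "outdoor"]
    scores = fisher_scores(X, y)
    ranking = np.argsort(-scores)  # feature indices, most discriminative first
    print(scores, ranking)
```

Ranking features by such a score and retraining on the top-k subset is one way to probe how sensitive a detector is to dimensionality reduction.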

2. Related Work

- Light and sound approaches: The intensity of sound or light may vary over time and can be affected by various factors, such as the position of the phone (e.g., when the user carries it in a bag or a clothes pocket).

- Cell tower signal strength approaches: The absolute signal strength of a cell tower may differ significantly across places and mobile device models, which makes it difficult to define a single rule or model for indoor/outdoor classification.

- Magnetic variance approach: Magnetometer readings are error-prone without accurate calibration. Furthermore, magnetic detection is only available while the user is moving.

- Wi-Fi signal: The Wi-Fi signal strength can be affected by the shielding effects of surrounding objects or even the human body, which introduces considerable noise into the detection system, and Wi-Fi coverage is poor in many outdoor areas. Therefore, Wi-Fi signals cannot serve as a general approach to user IO state detection.

3. Design Criteria

- (1) Simple, functional, and inclusive coverage: The environment detection process should be fast, easy to use, and stand-alone. It should not depend heavily on specific hardware, so that it can be extended to other smart devices, such as smartwatches and tablets with different operating systems, without installing new infrastructure.

- (2) Trusted: The method should determine the type of environment with high accuracy, and its performance should neither degrade under nor depend on varying environmental conditions.

- (3) Energy consumption: Since the method runs on a mobile platform, it should use the device's resources (battery, memory, and processor) efficiently throughout the day, because the battery of a smart mobile device can be depleted rapidly.

- (4) Instantaneous: The method should be provided as an integrated service component for higher-level applications, so it should respond as quickly as the environment changes. Any latency in the detection phase can degrade the performance of the mobile application.

- (5) Comprehensive usability (general usability): The method should perform detection independently, i.e., without prior knowledge of the environment, additional hardware, or specific user feedback, so that it can serve a wide range of higher-level applications.

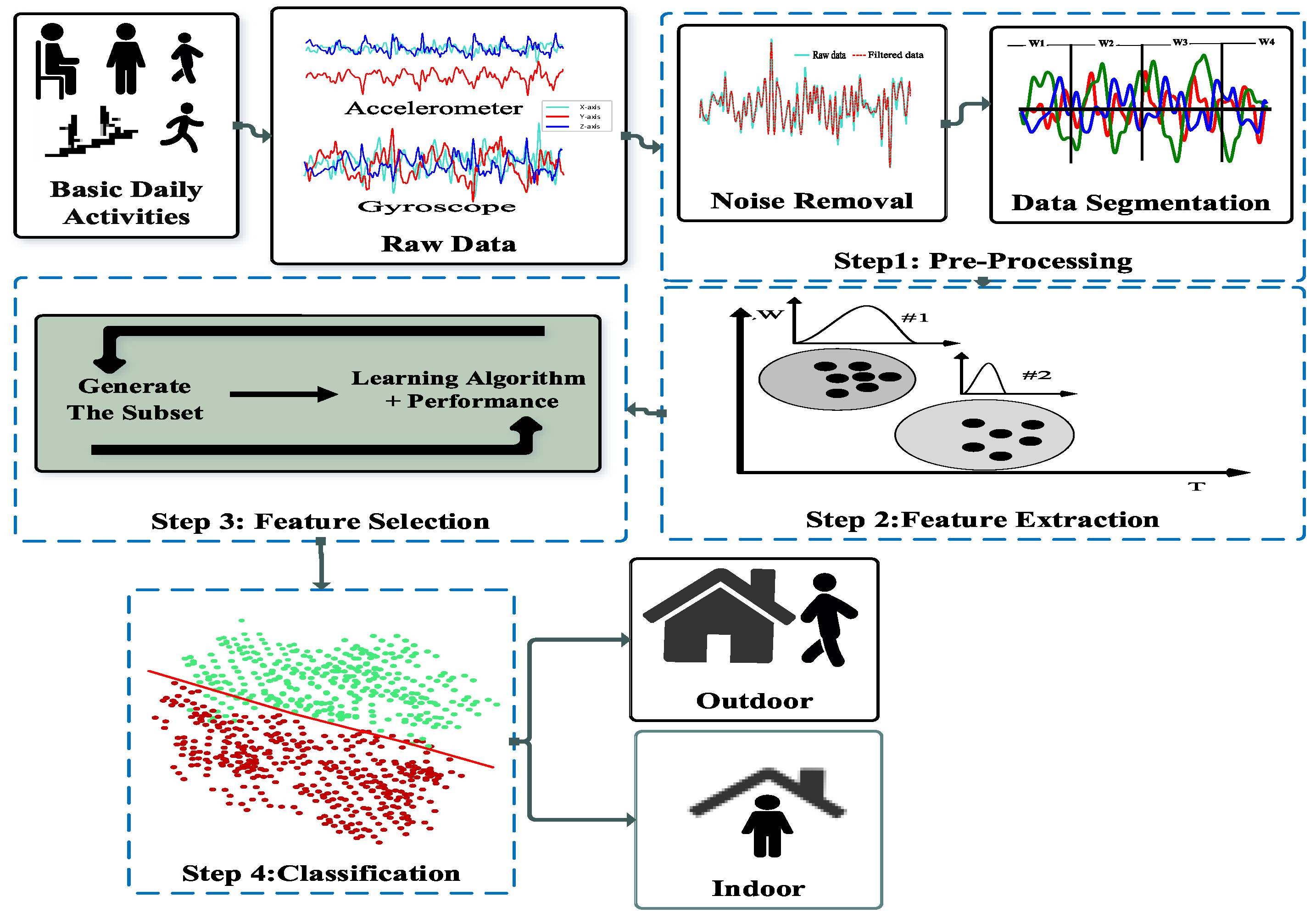

4. Methodology

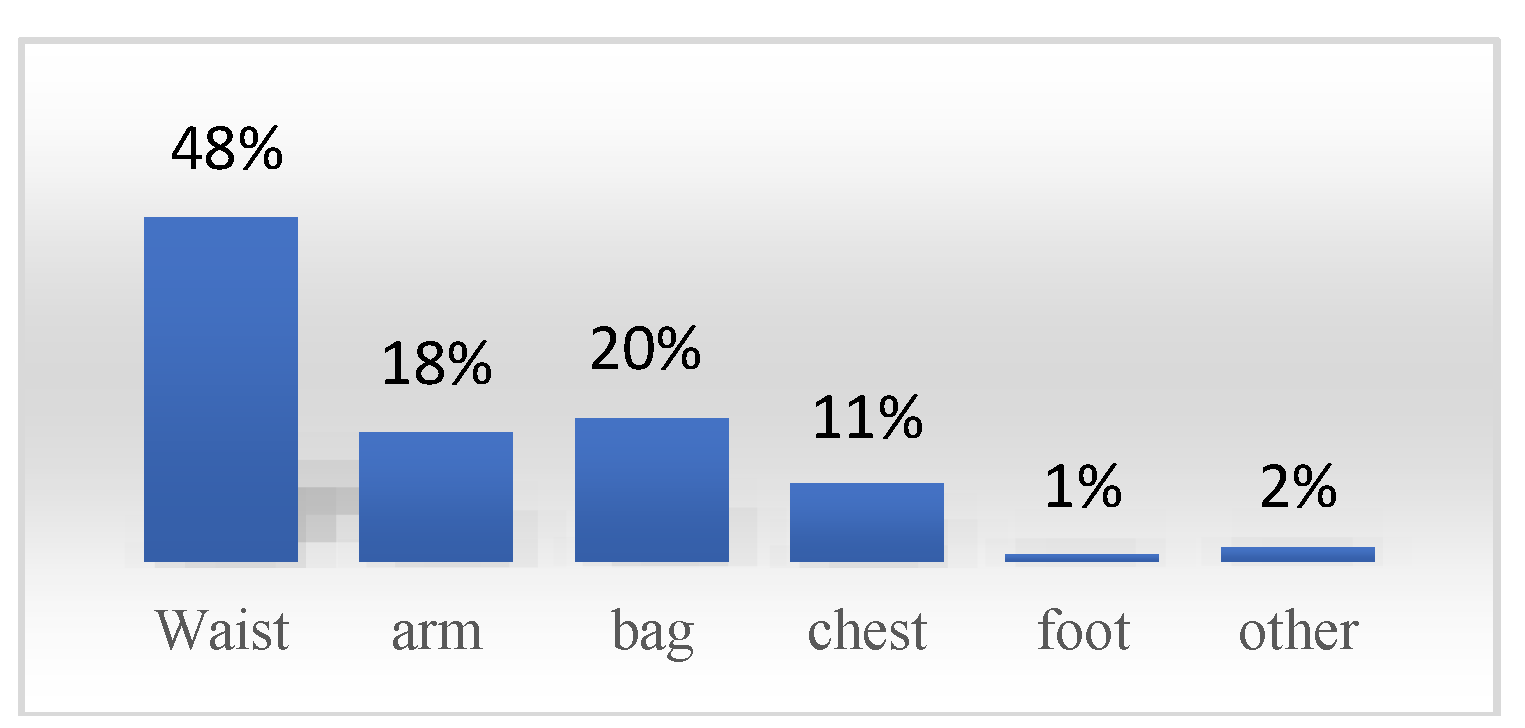

4.1. Dataset

4.2. Data Pre-Processing

4.2.1. Noise Removal

4.2.2. Data Segmentation

4.3. Feature Extraction

4.4. Feature Selection

5. Classification

5.1. Random Forest (RF)

5.2. AdaBoost (ADA)

6. Model Evaluation and Results

6.1. Training and Testing Procedure

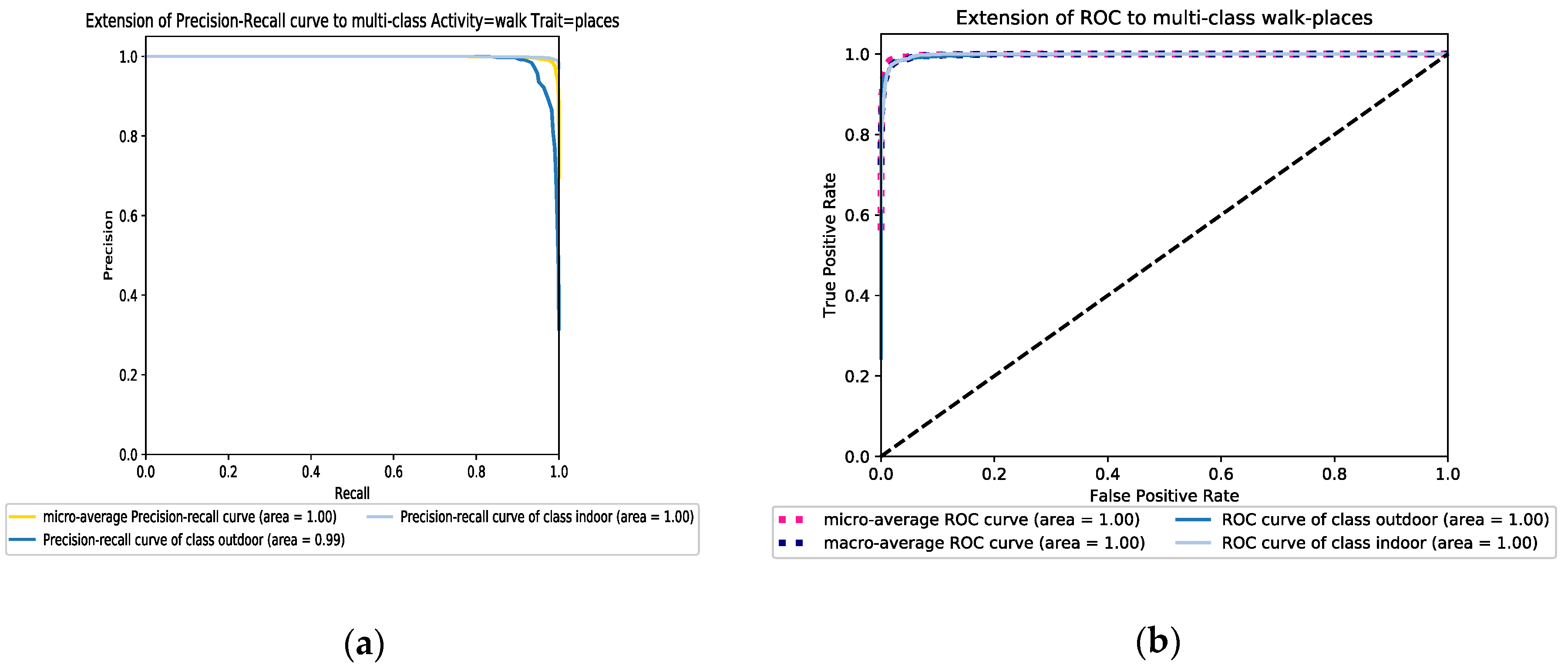

6.2. The Experimental Results

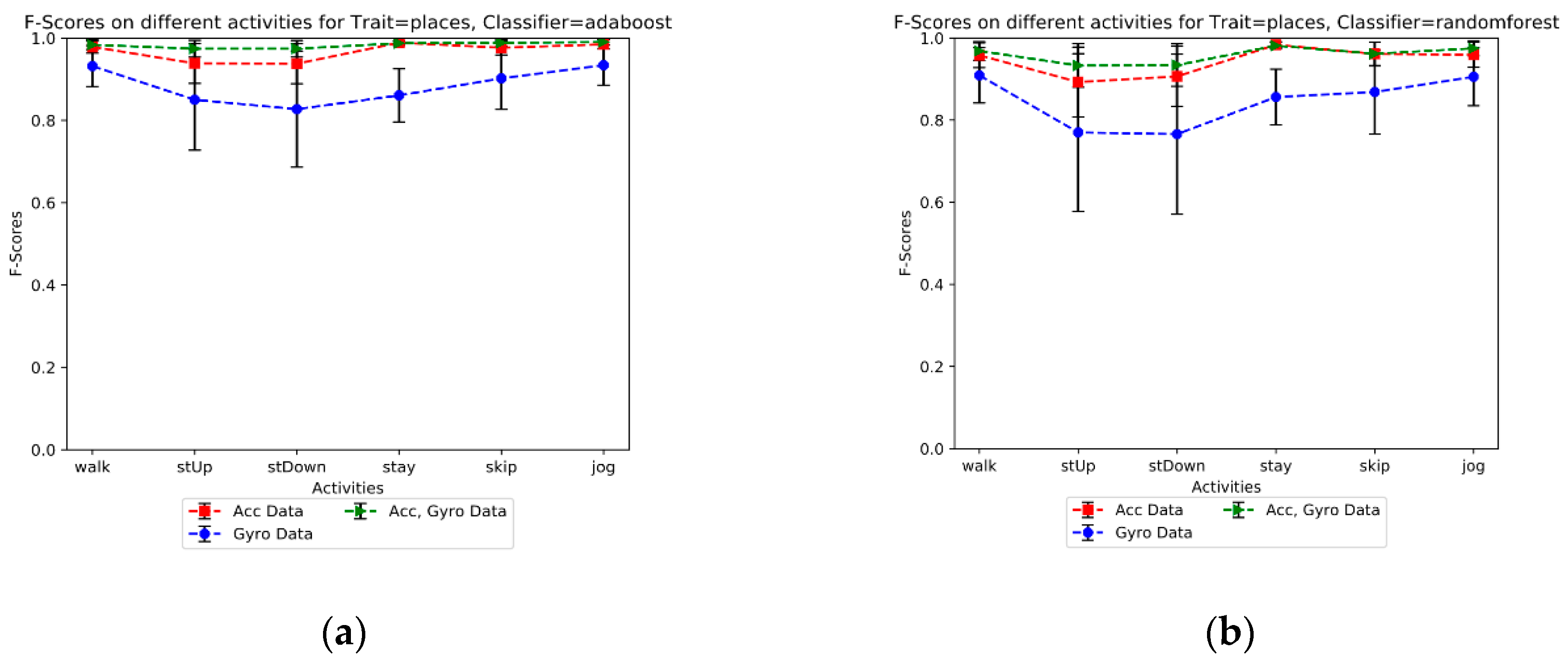

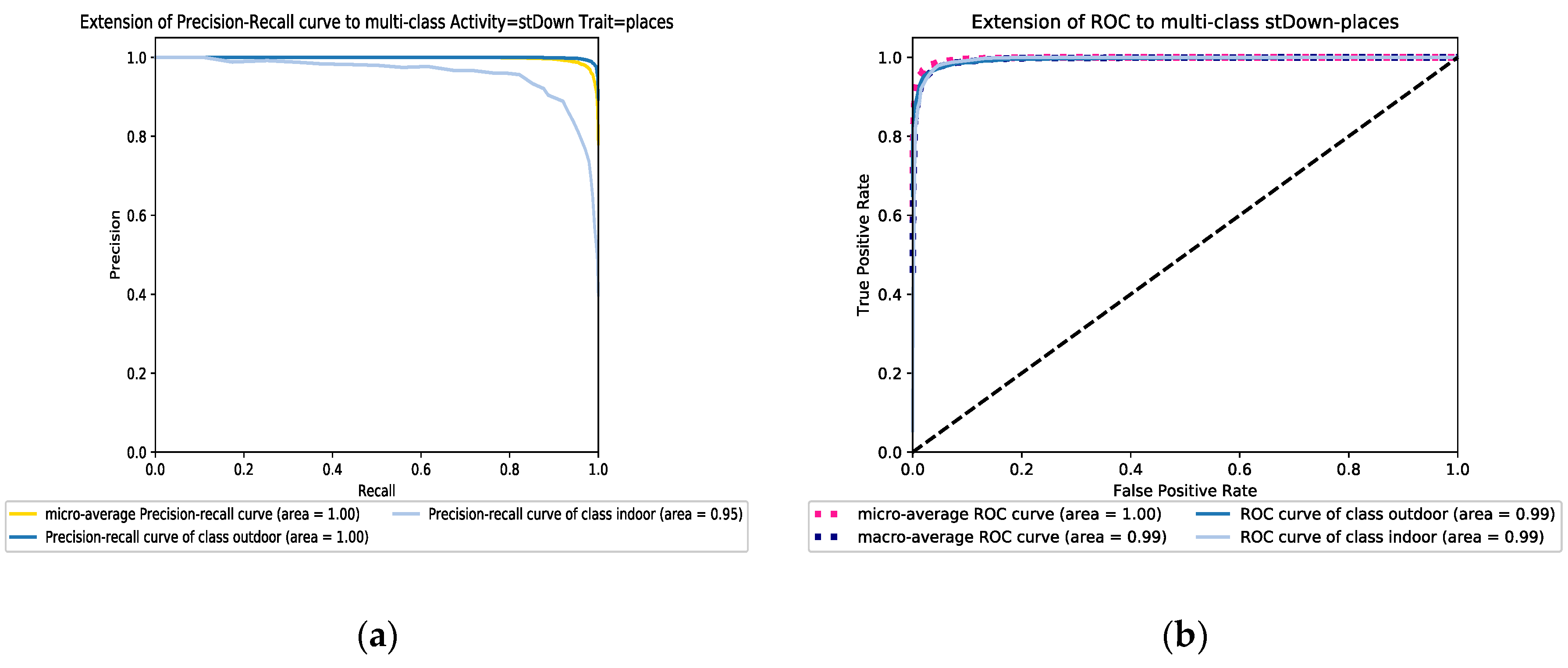

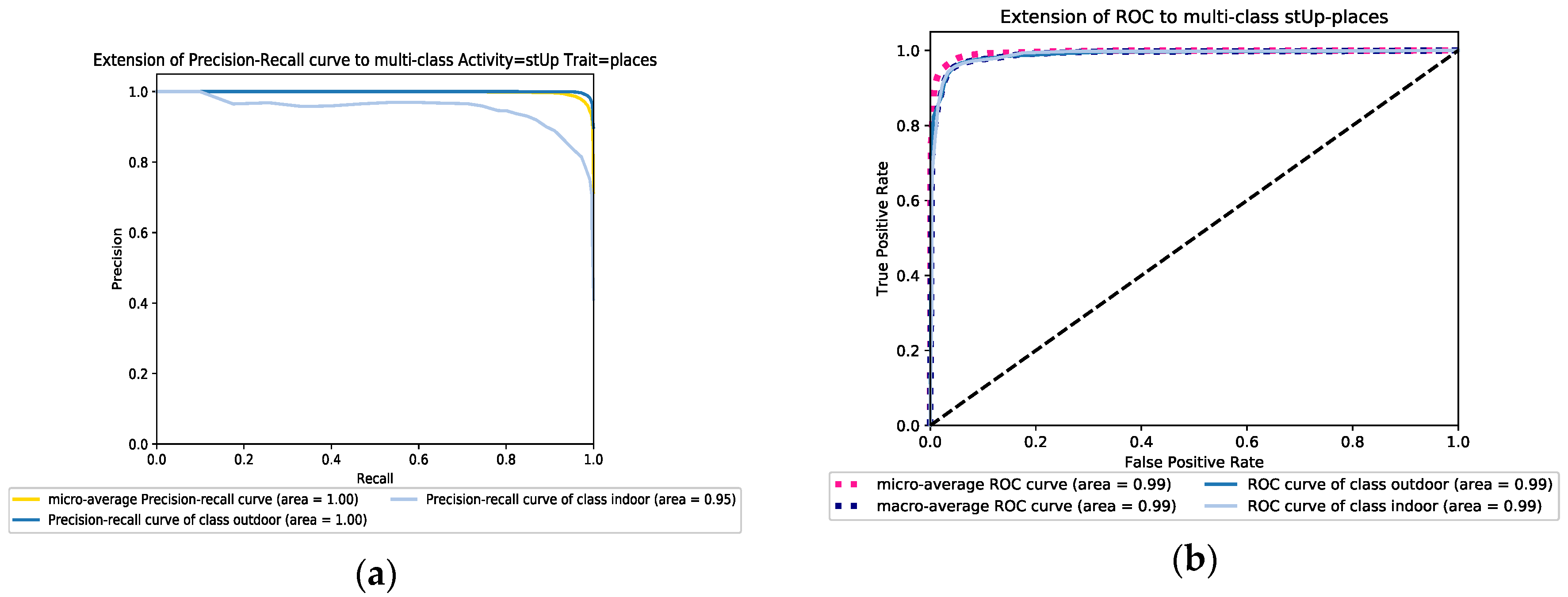

6.2.1. Stability Shift by Sensor Data Fusion

6.2.2. Impact of Unbalanced Data

- (1) The subject must have participated in all activities.

- (2) The subject must have both accelerometer and gyroscope motion sensor data.

- (3) The number of subjects in each class must equal the number of subjects in the minority class.
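These three criteria amount to filtering subjects and then randomly undersampling every class down to the size of the minority class. A minimal plain-Python sketch follows; the `Subject` record, the activity and sensor labels, treating the IO label as the class, and seeded random sampling are all assumptions for illustration rather than the authors' implementation.

```python
import random
from dataclasses import dataclass

# Hypothetical activity and sensor labels; the dataset's own identifiers may differ.
ALL_ACTIVITIES = frozenset({"walk", "jog", "skip", "stay", "stairs_up", "stairs_down"})
REQUIRED_SENSORS = frozenset({"acc", "gyro"})


@dataclass(frozen=True)
class Subject:
    subject_id: str
    io_class: str          # assumed class label, e.g. "indoor" or "outdoor"
    activities: frozenset  # activities this subject recorded
    sensors: frozenset     # motion sensors available for this subject


def balanced_subjects(subjects, seed=0):
    """Apply criteria (1)-(3): filter subjects, then undersample every class."""
    # Criteria (1) and (2): all activities recorded, both motion sensors present.
    eligible = [
        s for s in subjects
        if ALL_ACTIVITIES <= s.activities and REQUIRED_SENSORS <= s.sensors
    ]
    by_class = {}
    for s in eligible:
        by_class.setdefault(s.io_class, []).append(s)
    if not by_class:
        return []
    # Criterion (3): every class keeps as many subjects as the minority class.
    n_min = min(len(group) for group in by_class.values())
    rng = random.Random(seed)  # fixed seed so the selected subset is reproducible
    selected = []
    for label in sorted(by_class):
        group = sorted(by_class[label], key=lambda s: s.subject_id)
        selected.extend(rng.sample(group, n_min))
    return selected
```

Sorting classes and subjects before sampling keeps the result deterministic for a given seed, which makes balanced and unbalanced runs easier to compare.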

6.2.3. Sensitivity to the Feature Selection

7. Discussion

8. Conclusions and Future Works

Supplementary Materials

Author Contributions

Acknowledgments

Conflicts of Interest

Appendix A

| Feature Name | Walk | Jog | Skip | Stay | Stairs up | Stairs down |
|---|---|---|---|---|---|---|
| E_Gyro | ✓ | ✓ | | | | |
| SMA_Gyro | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Avg_Gyro_X | ✓ | | | | | |
| Avg_Gyro_Y | ✓ | ✓ | ✓ | | | |
| Avg_Gyro_Z | ✓ | ✓ | | | | |
| Avg_Gyro_N | ✓ | ✓ | ✓ | ✓ | ✓ | |
| MinMax_Gyro_X | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| MinMax_Gyro_Y | ✓ | ✓ | ✓ | ✓ | ✓ | |
| MinMax_Gyro_Z | ✓ | ✓ | ✓ | | | |
| MinMax_Gyro_N | ✓ | ✓ | ✓ | | | |
| SD_Gyro_X | ✓ | | | | | |
| SD_Gyro_Y | ✓ | | | | | |
| SD_Gyro_Z | ✓ | ✓ | ✓ | ✓ | ✓ | |
| SD_Gyro_N | ✓ | | | | | |
| ME_Gyro_X | ✓ | ✓ | ✓ | ✓ | ✓ | |
| ME_Gyro_Y | ✓ | ✓ | | | | |
| ME_Gyro_Z | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Var_Gyro_X | ✓ | ✓ | | | | |
| Var_Gyro_Y | ✓ | ✓ | ✓ | | | |
| Var_Gyro_Z | ✓ | | | | | |
| MC_Gyro_XY | ✓ | ✓ | ✓ | ✓ | ✓ | |
| MC_Gyro_XZ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| MC_Gyro_YZ | ✓ | ✓ | ✓ | ✓ | | |
| Var_MI_Gyro | ✓ | | | | | |
| SMAMCS_Gyro_X | ✓ | ✓ | ✓ | ✓ | ✓ | |
| SMAMCS_Gyro_Y | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| SMAMCS_Gyro_Z | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Spec2_Gyro_Z | ✓ | | | | | |
| Spec3_Gyro_Y | ✓ | | | | | |
| Spec3_Gyro_X | ✓ | | | | | |
| Spec3_Gyro_Z | ✓ | | | | | |
| Spec5_Gyro_Z | ✓ | | | | | |
| Spec5_Gyro_N | ✓ | | | | | |
| Spec9_Gyro_N | ✓ | | | | | |

| Feature Name | Walk | Jog | Skip | Stay | Stairs up | Stairs down |
|---|---|---|---|---|---|---|
| Avg_Acc_X | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Avg_Acc_Y | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Avg_Acc_Z | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Avg_Acc_N | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| SD_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| SD_Acc_Y | ✓ | ✓ | | | | |
| SD_Acc_Z | ✓ | ✓ | | | | |
| SD_Acc_N | ✓ | ✓ | ✓ | ✓ | | |
| MinMax_Acc_X | ✓ | ✓ | | | | |
| MinMax_Acc_Y | ✓ | ✓ | ✓ | ✓ | | |
| MinMax_Acc_Z | ✓ | ✓ | | | | |
| MinMax_Acc_N | ✓ | ✓ | ✓ | ✓ | | |
| Var_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| Var_Acc_Y | ✓ | | | | | |
| Var_Acc_Z | ✓ | ✓ | ✓ | | | |
| Var_MI_Acc | ✓ | ✓ | ✓ | | | |
| SMA_Acc | ✓ | ✓ | ✓ | ✓ | ✓ | |
| E_Acc | ✓ | ✓ | ✓ | ✓ | | |
| MC_Acc_XY | ✓ | ✓ | | | | |
| MC_Acc_XZ | ✓ | | | | | |
| MC_Acc_YZ | ✓ | ✓ | ✓ | | | |
| ME_Acc_X | ✓ | ✓ | ✓ | ✓ | ✓ | |
| ME_Acc_Y | ✓ | ✓ | ✓ | ✓ | ✓ | |
| ME_Acc_Z | ✓ | ✓ | ✓ | | | |
| MMA_Acc | ✓ | ✓ | ✓ | ✓ | ✓ | |
| MVA_Acc | ✓ | ✓ | ✓ | | | |
| MEA_Acc | ✓ | ✓ | ✓ | | | |
| ME_Acc_XY | ✓ | ✓ | ✓ | ✓ | ✓ | |
| ME_Acc_XZ | ✓ | ✓ | ✓ | | | |
| ME_Acc_YZ | ✓ | ✓ | ✓ | ✓ | ✓ | |
| SMAMCS_Acc_X | ✓ | ✓ | ✓ | ✓ | ✓ | |
| SMAMCS_Acc_Y | ✓ | ✓ | ✓ | ✓ | ✓ | |
| SMAMCS_Acc_Z | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Spec9_Acc_N | ✓ | | | | | |
| Spec5_Acc_N | ✓ | | | | | |
| Spec6_Acc_N | ✓ | | | | | |
| Wavelet_STD_aD2_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| Wavelet_STD_aD2_Acc_Y | ✓ | | | | | |
| Wavelet_STD_aD2_Acc_Z | ✓ | ✓ | | | | |
| Wavelet_STD_aD3_Acc_Y | ✓ | | | | | |
| Wavelet_STD_dA3_Acc_X | ✓ | ✓ | ✓ | | | |
| Wavelet_STD_dA3_Acc_Y | ✓ | ✓ | ✓ | | | |
| Wavelet_STD_dA3_Acc_Z | ✓ | ✓ | ✓ | | | |
| Wavelet_STD_dD3_Acc_X | ✓ | ✓ | ✓ | | | |
| Wavelet_STD_dD3_Acc_Y | ✓ | ✓ | | | | |
| Wavelet_STD_dD3_Acc_Z | ✓ | ✓ | ✓ | | | |
| Wavelet_RMS_aD2_Acc_X | ✓ | ✓ | ✓ | | | |
| Wavelet_RMS_aD2_Acc_Z | ✓ | | | | | |
| Wavelet_RMS_dA3_Acc_X | ✓ | | | | | |
| Wavelet_RMS_dA3_Acc_Z | ✓ | ✓ | ✓ | | | |
| Wavelet_RMS_dD3_Acc_X | ✓ | ✓ | ✓ | | | |
| Wavelet_RMS_dD3_Acc_Z | ✓ | | | | | |

| Feature Name | Walk | Jog | Skip | Stay | Stairs up | Stairs down |
|---|---|---|---|---|---|---|
| Avg_Acc_X | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Avg_Acc_Y | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Avg_Acc_Z | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Avg_Acc_N | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| SD_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| SD_Acc_Y | ✓ | ✓ | | | | |
| SD_Acc_Z | ✓ | ✓ | | | | |
| SD_Acc_N | ✓ | ✓ | ✓ | ✓ | | |
| MinMax_Acc_X | ✓ | ✓ | | | | |
| MinMax_Acc_Y | ✓ | ✓ | ✓ | ✓ | | |
| MinMax_Acc_Z | ✓ | ✓ | | | | |
| MinMax_Acc_N | ✓ | ✓ | ✓ | ✓ | | |
| Var_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| Var_Acc_Y | ✓ | | | | | |
| Var_Acc_Z | ✓ | ✓ | ✓ | | | |
| Var_MI_Acc | ✓ | ✓ | ✓ | | | |
| SMA_Acc | ✓ | ✓ | ✓ | ✓ | ✓ | |
| E_Acc | ✓ | ✓ | ✓ | ✓ | | |
| MC_Acc_XY | ✓ | ✓ | | | | |
| MC_Acc_XZ | ✓ | | | | | |
| MC_Acc_YZ | ✓ | ✓ | ✓ | | | |
| ME_Acc_X | ✓ | ✓ | ✓ | ✓ | ✓ | |
| ME_Acc_Y | ✓ | ✓ | ✓ | ✓ | ✓ | |
| ME_Acc_Z | ✓ | ✓ | ✓ | | | |
| MMA_Acc | ✓ | ✓ | ✓ | ✓ | ✓ | |
| MVA_Acc | ✓ | ✓ | ✓ | | | |
| MEA_Acc | ✓ | ✓ | ✓ | | | |
| ME_Acc_XY | ✓ | ✓ | ✓ | ✓ | ✓ | |
| ME_Acc_XZ | ✓ | ✓ | ✓ | | | |
| ME_Acc_YZ | ✓ | ✓ | ✓ | ✓ | ✓ | |
| SMAMCS_Acc_X | ✓ | ✓ | ✓ | ✓ | ✓ | |
| SMAMCS_Acc_Y | ✓ | ✓ | ✓ | ✓ | ✓ | |
| SMAMCS_Acc_Z | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Spec6_Acc_X | ✓ | | | | | |
| Spec7_Acc_Y | ✓ | | | | | |
| Spec9_Acc_N | ✓ | | | | | |
| Spec4_Gyro_X | ✓ | | | | | |
| Spec6_Gyro_X | ✓ | | | | | |
| Spec8_Gyro_Z | ✓ | | | | | |
| Avg_Gyro_X | | | | | | |
| Avg_Gyro_Y | ✓ | | | | | |
| Avg_Gyro_Z | | | | | | |
| Avg_Gyro_N | ✓ | ✓ | ✓ | ✓ | | |
| SD_Gyro_X | ✓ | ✓ | ✓ | ✓ | | |
| SD_Gyro_Y | ✓ | ✓ | | | | |
| SD_Gyro_Z | ✓ | ✓ | ✓ | | | |
| SD_Gyro_N | | | | | | |
| MinMax_Gyro_X | ✓ | ✓ | | | | |
| MinMax_Gyro_Y | ✓ | ✓ | | | | |
| MinMax_Gyro_Z | | | | | | |
| MinMax_Gyro_N | ✓ | ✓ | | | | |
| Var_Gyro_X | ✓ | ✓ | ✓ | | | |
| Var_Gyro_Y | ✓ | ✓ | | | | |
| Var_Gyro_Z | ✓ | ✓ | ✓ | | | |
| Var_MI_Gyro | ✓ | ✓ | | | | |
| SMA_Gyro | ✓ | ✓ | ✓ | ✓ | | |
| E_Gyro | ✓ | ✓ | ✓ | | | |
| MC_Gyro_XY | ✓ | ✓ | ✓ | ✓ | | |
| MC_Gyro_XZ | ✓ | ✓ | ✓ | ✓ | ✓ | |
| MC_Gyro_YZ | ✓ | ✓ | | | | |
| ME_Gyro_X | ✓ | ✓ | ✓ | ✓ | | |
| ME_Gyro_Y | ✓ | ✓ | ✓ | | | |
| ME_Gyro_Z | ✓ | ✓ | ✓ | ✓ | | |
| SMAMCS_Gyro_X | ✓ | | | | | |
| SMAMCS_Gyro_Y | ✓ | | | | | |
| SMAMCS_Gyro_Z | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Var_MI_Acc_Gyro | ✓ | | | | | |
| SMA_Acc_Gyro | ✓ | | | | | |
| E_Acc_Gyro | ✓ | ✓ | ✓ | | | |
| ME_Acc_Gyro_X | ✓ | ✓ | ✓ | | | |
| ME_Acc_Gyro_Y | ✓ | ✓ | ✓ | | | |
| SMAMCS_Acc_Gyro_Z | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Wavelet_STD_aD2_Acc_X | ✓ | | | | | |
| Wavelet_STD_aD2_Acc_Y | ✓ | ✓ | | | | |
| Wavelet_STD_aD2_Acc_Z | ✓ | ✓ | | | | |
| Wavelet_STD_aD3_Acc_Y | ✓ | | | | | |
| Wavelet_STD_aD4_Acc_Y | ✓ | | | | | |
| Wavelet_STD_aD4_Acc_Z | | | | | | |
| Wavelet_STD_dA3_Acc_X | ✓ | ✓ | ✓ | | | |
| Wavelet_STD_dA3_Acc_Y | ✓ | ✓ | ✓ | | | |
| Wavelet_STD_dA3_Acc_Z | ✓ | ✓ | | | | |
| Wavelet_STD_dD3_Acc_X | ✓ | ✓ | ✓ | | | |
| Wavelet_STD_dD3_Acc_Y | ✓ | ✓ | | | | |
| Wavelet_STD_dD3_Acc_Z | ✓ | ✓ | | | | |
| Wavelet_RMS_aD2_Acc_X | ✓ | ✓ | | | | |
| Wavelet_RMS_aD2_Acc_Z | ✓ | | | | | |
| Wavelet_RMS_dA3_Acc_X | ✓ | ✓ | ✓ | | | |
| Wavelet_RMS_dA3_Acc_Z | ✓ | ✓ | | | | |
| Wavelet_RMS_dD3_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| Wavelet_RMS_dD3_Acc_Z | ✓ | ✓ | ✓ | | | |

| Feature Name | Walk | Jog | Skip | Stay | Stairs up | Stairs down |
|---|---|---|---|---|---|---|
| Avg_Acc_X | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Avg_Acc_Y | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Avg_Acc_Z | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Avg_Acc_N | ✓ | ✓ | ✓ | ✓ | ✓ | |
| MinMax_Acc_X | ✓ | ✓ | | | | |
| MinMax_Acc_Y | ✓ | ✓ | ✓ | | | |
| MinMax_Acc_Z | ✓ | ✓ | | | | |
| MinMax_Acc_N | ✓ | ✓ | ✓ | | | |
| Var_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| Var_Acc_Y | | | | | | |
| Var_Acc_Z | ✓ | | | | | |
| Var_MI_Acc | ✓ | ✓ | ✓ | ✓ | | |
| SMA_Acc | ✓ | ✓ | ✓ | ✓ | | |
| E_Acc | ✓ | ✓ | ✓ | ✓ | | |
| MC_Acc_XY | | | | | | |
| MC_Acc_XZ | | | | | | |
| MC_Acc_YZ | | | | | | |
| ME_Acc_X | ✓ | ✓ | ✓ | ✓ | ✓ | |
| ME_Acc_Y | ✓ | ✓ | ✓ | ✓ | | |
| ME_Acc_Z | ✓ | ✓ | ✓ | | | |
| MEA_Acc | ✓ | ✓ | | | | |
| ME_Acc_XY | ✓ | ✓ | ✓ | ✓ | ✓ | |
| ME_Acc_XZ | ✓ | ✓ | | | | |
| ME_Acc_YZ | ✓ | ✓ | ✓ | ✓ | | |
| SMAMCS_Acc_X | ✓ | ✓ | ✓ | ✓ | ✓ | |
| SMAMCS_Acc_Y | ✓ | ✓ | ✓ | ✓ | ✓ | |
| SMAMCS_Acc_Z | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Avg_Gyro_Y | ✓ | | | | | |
| Avg_Gyro_Z | ✓ | | | | | |
| ME_Acc_Gyro_X | ✓ | ✓ | ✓ | | | |
| ME_Acc_Gyro_Y | ✓ | ✓ | | | | |
| ME_Acc_Gyro_Z | ✓ | ✓ | ✓ | | | |
| SMAMCS_Acc_Gyro_Y | ✓ | ✓ | ✓ | ✓ | | |
| SMAMCS_Acc_Gyro_Z | ✓ | ✓ | ✓ | ✓ | | |
| Wavelet_STD_aD2_Acc_Y | ✓ | | | | | |
| Wavelet_STD_aD3_Acc_Y | ✓ | | | | | |
| Wavelet_STD_dA3_Acc_X | ✓ | ✓ | ✓ | | | |
| Wavelet_STD_dA3_Acc_Y | ✓ | ✓ | ✓ | | | |
| Wavelet_STD_dA3_Acc_Z | ✓ | | | | | |
| SD_Acc_X | ✓ | ✓ | | | | |
| SD_Acc_Y | ✓ | | | | | |
| SD_Acc_Z | ✓ | | | | | |
| SD_Acc_N | ✓ | ✓ | ✓ | ✓ | | |
| Avg_Gyro_N | ✓ | ✓ | ✓ | ✓ | | |
| SD_Gyro_X | ✓ | ✓ | ✓ | ✓ | | |
| SD_Gyro_Y | ✓ | ✓ | | | | |
| SD_Gyro_Z | ✓ | | | | | |
| SD_Gyro_N | ✓ | | | | | |
| MinMax_Gyro_X | ✓ | | | | | |
| MinMax_Gyro_Y | ✓ | | | | | |
| MinMax_Gyro_N | ✓ | | | | | |
| Var_Gyro_X | ✓ | ✓ | | | | |
| Var_Gyro_Y | | | | | | |
| Var_Gyro_Z | ✓ | ✓ | | | | |
| Var_MI_Gyro | ✓ | | | | | |
| SMA_Gyro | ✓ | ✓ | ✓ | ✓ | ✓ | |
| E_Gyro | ✓ | ✓ | | | | |
| MC_Gyro_XY | ✓ | ✓ | | | | |
| MC_Gyro_XZ | ✓ | ✓ | ✓ | ✓ | ✓ | |
| MC_Gyro_YZ | ✓ | | | | | |
| ME_Gyro_X | ✓ | ✓ | ✓ | ✓ | | |
| ME_Gyro_Y | ✓ | ✓ | ✓ | | | |
| ME_Gyro_Z | ✓ | ✓ | ✓ | | | |
| SMAMCS_Gyro_X | ✓ | | | | | |
| SMAMCS_Gyro_Y | ✓ | | | | | |
| SMAMCS_Gyro_Z | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| SMA_Acc_Gyro | ✓ | | | | | |
| E_Acc_Gyro | ✓ | | | | | |
| Wavelet_STD_dD3_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| Wavelet_STD_dD3_Acc_Y | ✓ | ✓ | | | | |
| Wavelet_STD_dD3_Acc_Z | ✓ | ✓ | | | | |
| Wavelet_RMS_aD2_Acc_X | ✓ | ✓ | | | | |
| Wavelet_RMS_aD2_Acc_Z | ✓ | ✓ | | | | |
| Wavelet_RMS_aD4_Acc_Z | ✓ | | | | | |
| Wavelet_RMS_dA3_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| Wavelet_RMS_dA3_Acc_Z | ✓ | | | | | |
| Wavelet_RMS_dD3_Acc_X | ✓ | | | | | |
| Wavelet_RMS_dD3_Acc_Z | ✓ | | | | | |

| Feature Name | Walk | Jog | Skip | Stay | Stairs up | Stairs down |
|---|---|---|---|---|---|---|
| Avg_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| Avg_Acc_Y | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Avg_Acc_Z | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Avg_Acc_N | ✓ | ✓ | ✓ | ✓ | ✓ | |
| SD_Acc_X | ✓ | ✓ | ✓ | | | |
| SD_Acc_Y | ✓ | ✓ | ✓ | | | |
| SD_Acc_Z | ✓ | | | | | |
| SD_Acc_N | ✓ | ✓ | | | | |
| MinMax_Acc_X | ✓ | ✓ | | | | |
| MinMax_Acc_Y | ✓ | ✓ | ✓ | | | |
| MinMax_Acc_Z | ✓ | | | | | |
| MinMax_Acc_N | ✓ | ✓ | ✓ | | | |
| MEA_Acc | ✓ | ✓ | ✓ | | | |
| ME_Acc_XY | ✓ | ✓ | ✓ | | | |
| ME_Acc_XZ | ✓ | ✓ | | | | |
| ME_Acc_YZ | ✓ | ✓ | ✓ | ✓ | ✓ | |
| SMAMCS_Acc_X | ✓ | ✓ | ✓ | | | |
| SMAMCS_Acc_Y | ✓ | ✓ | ✓ | ✓ | | |
| SMAMCS_Acc_Z | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Spec3_Acc_Y | ✓ | | | | | |
| Avg_Gyro_X | ✓ | | | | | |
| Avg_Gyro_Z | ✓ | | | | | |
| Avg_Gyro_N | ✓ | ✓ | ✓ | ✓ | | |
| SD_Gyro_X | ✓ | ✓ | | | | |
| SD_Gyro_Y | ✓ | ✓ | | | | |
| SD_Gyro_Z | ✓ | ✓ | ✓ | | | |
| MinMax_Gyro_X | ✓ | ✓ | ✓ | | | |
| MinMax_Gyro_Y | ✓ | | | | | |
| MinMax_Gyro_Z | | | | | | |
| MinMax_Gyro_N | ✓ | ✓ | | | | |
| Var_Gyro_X | ✓ | ✓ | | | | |
| Var_Gyro_Y | ✓ | | | | | |
| Var_Gyro_Z | ✓ | ✓ | | | | |
| Var_MI_Gyro | ✓ | ✓ | | | | |
| SMA_Gyro | ✓ | ✓ | ✓ | | | |
| E_Gyro | ✓ | ✓ | ✓ | | | |
| Wavelet_RMS_aD2_Acc_X | ✓ | ✓ | ✓ | | | |
| Wavelet_RMS_aD2_Acc_Z | ✓ | | | | | |
| Wavelet_RMS_dA3_Acc_X | ✓ | ✓ | ✓ | | | |
| Var_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| Var_Acc_Y | ✓ | ✓ | | | | |
| Var_Acc_Z | ✓ | | | | | |
| Var_MI_Acc | ✓ | ✓ | ✓ | | | |
| SMA_Acc | ✓ | ✓ | ✓ | ✓ | ✓ | |
| E_Acc | ✓ | ✓ | ✓ | | | |
| MC_Acc_XY | ✓ | | | | | |
| ME_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| ME_Acc_Y | ✓ | ✓ | ✓ | ✓ | | |
| ME_Acc_Z | ✓ | ✓ | ✓ | | | |
| MMA_Acc | ✓ | | | | | |
| MVA_Acc | ✓ | ✓ | | | | |
| MC_Gyro_XY | ✓ | ✓ | | | | |
| MC_Gyro_XZ | ✓ | ✓ | ✓ | ✓ | ✓ | |
| MC_Gyro_YZ | ✓ | | | | | |
| ME_Gyro_X | ✓ | ✓ | ✓ | ✓ | | |
| ME_Gyro_Y | ✓ | ✓ | ✓ | | | |
| ME_Gyro_Z | ✓ | ✓ | ✓ | | | |
| SMAMCS_Gyro_X | ✓ | ✓ | ✓ | | | |
| SMAMCS_Gyro_Y | ✓ | ✓ | | | | |
| SMAMCS_Gyro_Z | ✓ | ✓ | ✓ | ✓ | | |
| SMA_Acc_Gyro | ✓ | | | | | |
| E_Acc_Gyro | ✓ | ✓ | | | | |
| ME_Acc_Gyro_X | ✓ | ✓ | ✓ | | | |
| ME_Acc_Gyro_Y | ✓ | ✓ | | | | |
| ME_Acc_Gyro_Z | ✓ | ✓ | ✓ | | | |
| SMAMCS_Acc_Gyro_X | ✓ | | | | | |
| SMAMCS_Acc_Gyro_Y | ✓ | ✓ | | | | |
| SMAMCS_Acc_Gyro_Z | ✓ | ✓ | ✓ | ✓ | | |
| Wavelet_STD_aD2_Acc_Y | ✓ | | | | | |
| Wavelet_STD_dA3_Acc_X | ✓ | ✓ | | | | |
| Wavelet_STD_dA3_Acc_Y | ✓ | ✓ | ✓ | | | |
| Wavelet_STD_dA3_Acc_Z | ✓ | ✓ | | | | |
| Wavelet_STD_dD3_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| Wavelet_STD_dD3_Acc_Y | ✓ | ✓ | ✓ | | | |
| Wavelet_STD_dD3_Acc_Z | ✓ | | | | | |
| Wavelet_RMS_dA3_Acc_Z | ✓ | | | | | |
| Wavelet_RMS_dD3_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| Wavelet_RMS_dD3_Acc_Z | ✓ | ✓ | | | | |

| Feature Name | Walk | Jog | Skip | Stay | Stairs up | Stairs down |
|---|---|---|---|---|---|---|
| Avg_Acc_X | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Avg_Acc_Y | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Avg_Acc_Z | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Avg_Acc_N | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| SD_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| SD_Acc_Y | ✓ | | | | | |
| SD_Acc_Z | ✓ | ✓ | | | | |
| SD_Acc_N | ✓ | ✓ | ✓ | ✓ | | |
| MinMax_Acc_X | ✓ | ✓ | | | | |
| MinMax_Acc_Y | ✓ | ✓ | ✓ | | | |
| MinMax_Acc_Z | ✓ | ✓ | | | | |
| MinMax_Acc_N | ✓ | ✓ | ✓ | | | |
| Var_Acc_X | ✓ | ✓ | ✓ | | | |
| Var_Acc_Y | ✓ | ✓ | | | | |
| Var_Acc_Z | ✓ | | | | | |
| Var_MI_Acc | ✓ | ✓ | ✓ | | | |
| SMA_Acc | ✓ | ✓ | ✓ | ✓ | | |
| E_Acc | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Avg_Gyro_N | ✓ | ✓ | ✓ | ✓ | | |
| SD_Gyro_X | ✓ | ✓ | | | | |
| SD_Gyro_Y | ✓ | | | | | |
| SD_Gyro_Z | ✓ | ✓ | ✓ | | | |
| SD_Gyro_N | ✓ | | | | | |
| MinMax_Gyro_X | ✓ | | | | | |
| MinMax_Gyro_Y | ✓ | | | | | |
| MinMax_Gyro_Z | ✓ | | | | | |
| MinMax_Gyro_N | ✓ | ✓ | | | | |
| Var_Gyro_X | ✓ | ✓ | ✓ | ✓ | | |
| Var_Gyro_Y | ✓ | ✓ | | | | |
| Var_Gyro_Z | ✓ | | | | | |
| Var_MI_Gyro | ✓ | | | | | |
| SMA_Gyro | ✓ | ✓ | ✓ | ✓ | | |
| E_Gyro | ✓ | ✓ | ✓ | | | |
| MC_Gyro_XY | ✓ | ✓ | | | | |
| MC_Acc_XY | ✓ | | | | | |
| MC_Acc_YZ | ✓ | ✓ | | | | |
| ME_Acc_X | ✓ | ✓ | ✓ | ✓ | ✓ | |
| ME_Acc_Y | ✓ | ✓ | ✓ | ✓ | | |
| ME_Acc_Z | ✓ | ✓ | ✓ | | | |
| MMA_Acc | ✓ | ✓ | ✓ | ✓ | | |
| MVA_Acc | ✓ | ✓ | | | | |
| MEA_Acc | ✓ | ✓ | ✓ | ✓ | | |
| ME_Acc_XY | ✓ | ✓ | ✓ | ✓ | | |
| ME_Acc_XZ | ✓ | ✓ | ✓ | ✓ | | |
| ME_Acc_YZ | ✓ | ✓ | ✓ | ✓ | | |
| SMAMCS_Acc_X | ✓ | ✓ | ✓ | ✓ | ✓ | |
| SMAMCS_Acc_Y | ✓ | ✓ | ✓ | ✓ | | |
| SMAMCS_Acc_Z | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Spec3_Acc_N | ✓ | | | | | |
| Spec5_Acc_Y | ✓ | | | | | |
| Spec9_Gyro_Z | ✓ | | | | | |
| Avg_Gyro_Y | ✓ | | | | | |
| SMA_Acc_Gyro | ✓ | ✓ | | | | |
| E_Acc_Gyro | ✓ | | | | | |
| ME_Acc_Gyro_X | ✓ | ✓ | ✓ | ✓ | ✓ | |
| ME_Acc_Gyro_Y | ✓ | ✓ | ✓ | | | |
| ME_Acc_Gyro_Z | ✓ | ✓ | ✓ | ✓ | | |
| SMAMCS_Acc_Gyro_Y | ✓ | ✓ | ✓ | ✓ | ✓ | |
| SMAMCS_Acc_Gyro_Z | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Wavelet_E_Acc_Z | ✓ | | | | | |
| Wavelet_STD_aD2_Acc_X | ✓ | ✓ | | | | |
| Wavelet_STD_aD2_Acc_Y | ✓ | ✓ | | | | |
| Wavelet_STD_aD2_Acc_Z | ✓ | | | | | |
| Wavelet_STD_dA3_Acc_X | ✓ | ✓ | | | | |
| Wavelet_STD_dA3_Acc_Y | ✓ | ✓ | ✓ | | | |
| Wavelet_STD_dA3_Acc_Z | ✓ | ✓ | ✓ | | | |
| Wavelet_STD_dD3_Acc_X | ✓ | ✓ | ✓ | ✓ | | |
| Wavelet_STD_dD3_Acc_Y | ✓ | ✓ | | | | |
| MC_Gyro_XZ | ✓ | ✓ | ✓ | ✓ | ✓ | |
| MC_Gyro_YZ | ✓ | ✓ | | | | |
| ME_Gyro_X | ✓ | ✓ | ✓ | ✓ | | |
| ME_Gyro_Y | ✓ | ✓ | ✓ | ✓ | ✓ | |
| ME_Gyro_Z | ✓ | ✓ | ✓ | ✓ | ✓ | |
| SMAMCS_Gyro_X | ✓ | | | | | |
| SMAMCS_Gyro_Y | ✓ | | | | | |
| SMAMCS_Gyro_Z | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Var_MI_Acc_Gyro | ✓ | ✓ | | | | |
| Wavelet_STD_dD3_Acc_Z | ✓ | ✓ | | | | |
| Wavelet_RMS_aD2_Acc_X | ✓ | ✓ | | | | |
| Wavelet_RMS_dA3_Acc_X | ✓ | | | | | |
| Wavelet_RMS_aD4_Acc_Z | ✓ | | | | | |
| Wavelet_RMS_dA3_Acc_X | ✓ | ✓ | | | | |
| Wavelet_RMS_dA3_Acc_Z | ✓ | ✓ | | | | |
| Wavelet_RMS_dD3_Acc_X | ✓ | ✓ | | | | |
| Wavelet_RMS_dD3_Acc_Y | | | | | | |
| Wavelet_RMS_dD3_Acc_Z | ✓ | ✓ | | | | |

References

- Smartphone Users Worldwide 2014–2020. Available online: https://www.statista.com/statistics/330695/number-of-smartphone-users-worldwide/ (accessed on 7 November 2018).

- Lane, N.D.; Miluzzo, E.; Lu, H.; Peebles, D.; Choudhury, T.; Campbell, A.T. A survey of mobile phone sensing. IEEE Commun. Mag. 2010, 48, 140–150. [Google Scholar] [CrossRef]

- Wang, J.; Tse, N.C.F.; Poon, T.Y.; Chan, J.Y.C. A Practical Multi-Sensor Cooling Demand Estimation Approach Based on Visual, Indoor and Outdoor Information Sensing. Sensors 2018, 18, 3591. [Google Scholar] [CrossRef] [PubMed]

- Xu, H.; Teo, H.; Tan, B.C.; Agarwal, R. The role of push-pull technology in privacy calculus: The case of location-based services. J. Manag. Inf. Syst. 2009, 26, 135–174. [Google Scholar] [CrossRef]

- van den Berg, J.; Köbben, B.; van der Drift, S.; Wismans, L. Towards a Dynamic Isochrone Map: Adding Spatiotemporal Traffic and Population Data. In Proceedings of the LBS 2018: 14th International Conference on Location Based Services, Zurich, Switzerland, 15–17 January 2018; pp. 195–209. [Google Scholar]

- Chin, W.H.; Fan, Z.; Haines, R.J. Emerging technologies and research challenges for 5G wireless networks. IEEE Wirel. Commun. 2014, 21, 106–112. [Google Scholar] [CrossRef]

- Xu, H.; Wu, M.; Li, P.; Zhu, F.; Wang, R. An RFID Indoor Positioning Algorithm Based on Support Vector Regression. Sensors 2018, 18, 1504. [Google Scholar] [CrossRef] [PubMed]

- Yang, C.; Shao, H. WiFi-based indoor positioning. IEEE Commun. Mag. 2015, 53, 150–157. [Google Scholar] [CrossRef]

- Rana, S.; Prieto, J.; Dey, M.; Dudley, S.; Corchado, J. A Self Regulating and Crowdsourced Indoor Positioning System through Wi-Fi Fingerprinting for Multi Storey Building. Sensors 2018, 18, 3766. [Google Scholar] [CrossRef]

- Gu, Y.; Ren, F. Energy-efficient indoor localization of smart hand-held devices using Bluetooth. IEEE Access 2015, 3, 1450–1461. [Google Scholar] [CrossRef]

- Kang, J.; Seo, J.; Won, Y. Ephemeral ID Beacon-Based Improved Indoor Positioning System. Symmetry 2018, 10, 622. [Google Scholar] [CrossRef]

- Song, J.; Jeong, H.; Hur, S.; Park, Y. Improved indoor position estimation algorithm based on geo-magnetism intensity. In Proceedings of the 2014 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Busan, Korea, 27–30 October 2014; pp. 741–744. [Google Scholar]

- Chintalapudi, K.; Iyer, A.P.; Padmanabhan, V.N. Indoor localization without the pain. In Proceedings of the Sixteenth Annual International Conference on Mobile Computing and Networking, Chicago, IL, USA, 20–24 September 2010; ACM; pp. 173–184. [Google Scholar]

- Capurso, N.; Song, T.; Cheng, W.; Yu, J.; Cheng, X. An Android-based mechanism for energy efficient localization depending on indoor/outdoor context. IEEE Internet Things J. 2017, 4, 299. [Google Scholar] [CrossRef]

- Radu, V.; Katsikouli, P.; Sarkar, R.; Marina, M.K. A semi-supervised learning approach for robust indoor-outdoor detection with smartphones. In Proceedings of the 12th ACM Conference on Embedded Network Sensor Systems, Memphis, TN, USA, 3–6 November 2014; ACM; pp. 280–294. [Google Scholar]

- Dhondge, K.; Choi, B.Y.; Song, S.; Park, H. Optical wireless authentication for smart devices using an onboard ambient light sensor. In Proceedings of the 2014 23rd International Conference on Computer Communication and Networks (ICCCN), Shanghai, China, 4–7 August 2014; IEEE; pp. 1–8. [Google Scholar]

- Spreitzer, R. Pin skimming: Exploiting the ambient-light sensor in mobile devices. In Proceedings of the 4th ACM Workshop on Security and Privacy in Smartphones & Mobile Devices, Scottsdale, AZ, USA, 3–7 November 2014; ACM; pp. 51–62. [Google Scholar]

- Li, M.; Zhou, P.; Zheng, Y.; Li, Z.; Shen, G. IODetector. ACM Trans. Sens. Netw. 2014, 11, 1–29. [Google Scholar] [CrossRef]

- Ashraf, I.; Hur, S.; Park, Y. MagIO: Magnetic Field Strength Based Indoor-Outdoor Detection with a Commercial Smartphone. Micromachines 2018, 9, 534. [Google Scholar] [CrossRef] [PubMed]

- Krumm, J.; Hariharan, R. Tempio: Inside/outside classification with temperature. In Proceedings of the Second International Workshop on Man-Machine Symbiotic Systems, Kyoto, Japan, 23–24 November 2004; pp. 241–250. [Google Scholar]

- Sung, R.; Jung, S.-H.; Han, D. Sound based indoor and outdoor environment detection for seamless positioning handover. ICT Express 2015, 1, 106–109. [Google Scholar] [CrossRef]

- Wang, W.; Chang, Q.; Li, Q.; Shi, Z.; Chen, W. Indoor-outdoor detection using a smart phone sensor. Sensors 2016, 16, 1563. [Google Scholar] [CrossRef] [PubMed]

- Canovas, O.; Lopez-de-Teruel, P.E.; Ruiz, A. Detecting indoor/outdoor places using WiFi signals and AdaBoost. IEEE Sens. J. 2017, 17, 1443–1453. [Google Scholar] [CrossRef]

- Ali, M.; ElBatt, T.; Youssef, M. SenseIO: Realistic Ubiquitous Indoor Outdoor Detection System Using Smartphones. IEEE Sens. J. 2018, 18, 3684–3693. [Google Scholar] [CrossRef]

- Lin, Y.-W.; Lin, C.-Y. An Interactive Real-Time Locating System Based on Bluetooth Low-Energy Beacon Network. Sensors 2018, 18, 1637. [Google Scholar] [CrossRef]

- Liu, Q.; Yang, X.; Deng, L. An IBeacon-Based Location System for Smart Home Control. Sensors 2018, 18, 1897. [Google Scholar] [CrossRef]

- Hwang, J.; Jung, M.-C. Age and sex differences in ranges of motion and motion patterns. Int. J. Occup. Saf. Ergon. 2015, 21, 173–186. [Google Scholar] [CrossRef]

- Walter, D.J.; Groves, P.D.; Mason, R.J.; Harrison, J.; Woodward, J.; Wright, P. Novel environmental features for robust multisensor navigation. In Proceedings of the 26th International Technical Meeting of The Satellite Division of the Institute of Navigation (ION GNSS), Nashville, TN, USA, 16–20 September 2013; pp. 488–504. [Google Scholar]

- Groves, P.D.; Martin, H.; Voutsis, K.; Walter, D.; Wang, L. Context detection, categorization and connectivity for advanced adaptive integrated navigation. In Proceedings of the 26th International Technical Meeting of The Satellite Division of the Institute of Navigation (ION GNSS), Nashville, TN, USA, 16–20 September 2013; pp. 1039–1056. [Google Scholar]

- Ravindranath, L.; Newport, C.; Balakrishnan, H.; Madden, S. Improving wireless network performance using sensor hints. In Proceedings of the 8th USENIX Conference on Networked Systems Design and Implementation, Boston, MA, USA, 30 March–1 April 2011; pp. 281–294. [Google Scholar]

- Santos, A.C.; Cardoso, J.M.; Ferreira, D.R.; Diniz, P.C.; Chaínho, P. Providing user context for mobile and social networking applications. Pervasive Mob. Comput. 2010, 6, 324–341. [Google Scholar] [CrossRef]

- Wu, M.; Pathak, P.H.; Mohapatra, P. Monitoring building door events using barometer sensor in smartphones. In Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Osaka, Japan, 7–11 September 2015; ACM; pp. 319–323. [Google Scholar]

- Ichino, H.; Kaji, K.; Sakurada, K.; Hiroi, K.; Kawaguchi, N. HASC-PAC2016: Large scale human pedestrian activity corpus and its baseline recognition. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct, Heidelberg, Germany, 12–16 September 2016; ACM; pp. 705–714. [Google Scholar]

- Pires, I.; Garcia, N.M.; Pombo, N.; Flórez-Revuelta, F.; Spinsante, S. Approach for the Development of a Framework for the Identification of Activities of Daily Living Using Sensors in Mobile Devices. Sensors 2018, 18, 640. [Google Scholar] [CrossRef] [PubMed]

- Reinsch, C.H. Smoothing by spline functions. Numer. Math. 1967, 10, 177–183. [Google Scholar] [CrossRef]

- Anguita, D.; Ghio, A.; Oneto, L.; Parra, X.; Reyes-Ortiz, J.L. A public domain dataset for human activity recognition using smartphones. In Proceedings of the 21st International European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning, Bruges, Belgium, 24–26 April 2013; pp. 437–442. [Google Scholar]

- Karantonis, D.M.; Narayanan, M.R.; Mathie, M.; Lovell, N.H.; Celler, B.G. Implementation of a real-time human movement classifier using a triaxial accelerometer for ambulatory monitoring. IEEE Trans. Inf. Technol. Biomed. 2006, 10, 156–167. [Google Scholar] [CrossRef] [PubMed]

- Chen, Y.; Shen, C. Performance Analysis of Smartphone-Sensor Behavior for Human Activity Recognition. IEEE Access 2017, 5, 3095–3110. [Google Scholar] [CrossRef]

- Damaševičius, R.; Vasiljevas, M.; Šalkevičius, J.; Woźniak, M. Human Activity Recognition in AAL Environments Using Random Projections. Comput. Math. Methods Med. 2016, 2016, 4073584. [Google Scholar] [CrossRef]

- Pires, I.M.; Garcia, N.M.; Pombo, N.; Flórez-Revuelta, F. From Data Acquisition to Data Fusion: A Comprehensive Review and a Roadmap for the Identification of Activities of Daily Living Using Mobile Devices. Sensors 2016, 16, 184. [Google Scholar] [CrossRef]

- Ehatisham-Ul-Haq, M.; Azam, M.A.; Loo, J.; Shuang, K.; Islam, S.; Naeem, U.; Amin, M. Authentication of Smartphone Users Based on Activity Recognition and Mobile Sensing. Sensors 2017, 17, 2043. [Google Scholar] [CrossRef]

- Gui, J.; Sun, Z.; Ji, S.; Tao, D.; Tan, T. Feature Selection Based on Structured Sparsity: A Comprehensive Study. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 1490–1507. [Google Scholar] [CrossRef]

- Guyon, I.; Weston, J.; Barnhill, S.; Vapnik, V. Gene Selection for Cancer Classification using Support Vector Machines. Mach. Learn. 2002, 46, 389–422. [Google Scholar] [CrossRef]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random Forests for land cover classification. Pattern Recognit. Lett. 2006, 27, 294–300. [Google Scholar] [CrossRef]

- Bauer, E.; Kohavi, R. An empirical comparison of voting classification algorithms: Bagging, boosting, and variants. Mach. Learn. 1999, 36, 105–139. [Google Scholar] [CrossRef]

- Kadavi, P.; Lee, C.-W.; Lee, S. Application of Ensemble-Based Machine Learning Models to Landslide Susceptibility Mapping. Remote Sens. 2018, 10, 1252. [Google Scholar] [CrossRef]

- Bahaghighat, M.K.; Sahba, F.; Tehrani, E. Text dependent Speaker Recognition by Combination of LBG VQ and DTW for Persian language. Int. J. Comput. Appl. 2012, 51, 23–27. [Google Scholar]

| References | Sensor Types | Proposed Method | Overall Accuracy |
|---|---|---|---|
| Tempio [20], UPCASE [31] | Temperature | Environmental temperature measurements are classified using a threshold based on the user’s comfort zone and weather forecasts | ---- |
| IODetector [18] | Light, cell, and magnetometer | The system performance was evaluated with sub-detectors, including light, cell, and magnetism detectors and a hybrid detector | Around 85% |
| Semi-supervised [15] | Light, cell, and sound | Individual modules on diverse phones are used in unfamiliar environments in three different scenarios: cluster-then-label, self-training, and co-training | 92% for unfamiliar places |
| Door Events [32] | Barometer | When the door of a building is opened or closed, the indoor pressure increases, and a smartphone’s barometer can measure this pressure increment | 99% for door event detection |
| Sound [21] | Microphone | A special chirp sound probe is emitted by the mobile device’s speaker and recorded back through the device’s microphone as the input data | Roughly 95% at 46 different known places |
| GSM signal [22] | GSM signal of cell tower | The different GSM signal intensities of four environment types (deep indoors, semi-indoors, semi-outdoors, and outdoors) are classified | 95.3% |
| WIFI Boost [23] | Wi-Fi | The intensity variations of Wi-Fi signals are classified into inside/outside environments, or the number of access points around the device is measured | Around 2.5% mean error rate for familiar places |
| SenseIO [24] | Accelerometer, gyroscope, light, cell, Wi-Fi | A multi-modal approach is built as a framework with four modules (activity recognition, light, Wi-Fi, and GSM) | 92% |

| Label | Description | Equation | Size |
|---|---|---|---|
| Avg_ | Average of samples on each axis separately | | 1 × 8 |
| SD_ | Standard deviation of samples on each axis separately | | 1 × 8 |
| MinMax_ | Difference between the maximum and minimum values of samples on each axis | | 1 × 8 |
| Var_ | Moving variance of samples on the x-, y-, and z-axes | | 1 × 6 |
| SMA_ | Simple moving average of the data | | 1 × 2 |
| E_ | First eigenvalue of the moving covariance between samples | | 1 × 2 |
| ME_ | Moving energy of the sensor signal on each axis | | 1 × 8 |
| MC_ | Moving correlation of sensor data between two axes | | 1 × 6 |
| MMA_ | Moving mean of the orientation vector of the sensor data | | 1 × 2 |
| MVA_ | Moving variance of the orientation vector of the sensor data | | 1 × 2 |
| MEA_ | Moving energy of the orientation vector of the sensor data | | 1 × 2 |
| Spec.No_ | Power spectrum computed from the FFT result, from 0.5 Hz to 5 Hz in 0.5 Hz steps, for the x, y, z, and n components of both the accelerometer and the gyroscope | | 1 × 80 |
| Wavelet_STD_index.D.No._ | Standard deviation of the acceleration signal at wavelet levels 2 to 5 (corresponding to 0.78–18.75 Hz) in three directions (AP/ML/VT) for the x-, y-, and z-axes | | 1 × 36 |
| Wavelet_RMS_index.D.No._ | Root mean square values of the AP and VT acceleration signals for the x-, y-, and z-axes | | 1 × 24 |
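Most of the single-sensor features above are per-window statistics. The sketch below shows one plausible way to compute several of them in pure Python: mean, standard deviation, max-min range, variance, energy, pairwise axis correlation, and the power at 0.5 Hz steps from 0.5 to 5 Hz.

Several details are assumptions, not taken from the table:

- Energy is the mean of the squared samples.
- The "n" component is the per-sample magnitude of x, y, and z.
- The spectrum comes from a direct DFT sum rather than an FFT library.
- All function names are hypothetical.

Under this reading, the table sizes follow directly. Spec.No_ has 10 frequencies × 4 components (x, y, z, n) × 2 sensors = 80 values, which matches its 1 × 80 size. The 1 × 8 features have 4 components × 2 sensors = 8 values.

```python
import math

def sliding_windows(samples, size, step):
    """Yield consecutive windows of `size` samples, advancing by `step` samples."""
    for start in range(0, len(samples) - size + 1, step):
        yield samples[start:start + size]

def magnitude(x, y, z):
    """Per-sample Euclidean norm, used here as the assumed 'n' component."""
    return [math.sqrt(a * a + b * b + c * c) for a, b, c in zip(x, y, z)]

def window_stats(w):
    """Time-domain statistics of one window of one axis."""
    n = len(w)
    mean = sum(w) / n
    var = sum((v - mean) ** 2 for v in w) / n  # population variance
    return {
        "avg": mean,
        "sd": math.sqrt(var),
        "minmax": max(w) - min(w),
        "var": var,
        "energy": sum(v * v for v in w) / n,  # assumed: mean of squared samples
    }

def correlation(a, b):
    """Pearson correlation between two equally long windows (0.0 if one is constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    sa = math.sqrt(sum((p - ma) ** 2 for p in a))
    sb = math.sqrt(sum((q - mb) ** 2 for q in b))
    return cov / (sa * sb) if sa > 0 and sb > 0 else 0.0

def band_powers(w, fs_hz, freqs=tuple(0.5 * k for k in range(1, 11))):
    """|X(f)|^2 / N at each frequency in `freqs` (default 0.5, 1.0, ..., 5.0 Hz),
    evaluated as a direct DFT sum. This equals the FFT bin power when every
    frequency is a multiple of fs_hz / len(w)."""
    n = len(w)
    powers = []
    for f in freqs:
        re = sum(v * math.cos(2 * math.pi * f * t / fs_hz) for t, v in enumerate(w))
        im = -sum(v * math.sin(2 * math.pi * f * t / fs_hz) for t, v in enumerate(w))
        powers.append((re * re + im * im) / n)
    return powers
```

Take a hypothetical 2 s window sampled at 50 Hz. It has 100 samples, so the DFT bin spacing is 50/100 = 0.5 Hz and every requested frequency lands exactly on a bin.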

| Label | Description | Equation | Size |
|---|---|---|---|
| MI_Acc_Gyro_ | Difference between the movement intensity of the accelerometer and gyroscope | | 1 × 1 |
| Var_MI_ | Moving variance of the sample intensity data | | 1 × 3 |
| SMA_Acc_Gyro_ | Simple moving average of the variance between acceleration and gyroscope data | | 1 × 1 |
| E_Acc_Gyro_ | First eigenvalue of the moving covariance of the difference between acceleration and gyroscope data | | 1 × 1 |
| ME_Acc_Gyro_ | Moving energy of acceleration and gyroscope data | | 1 × 4 |
| MMA_Acc_Gyro_ | Moving mean of the orientation vector of the variation between acceleration and gyroscope data | | 1 × 1 |
| MVA_Acc_Gyro_ | Moving variance of the orientation vector of the variance between acceleration and gyroscope data | | 1 × 1 |
| MEA_Acc_Gyro_ | Moving energy of the orientation vector of the variance between acceleration and gyroscope data | | 1 × 1 |
| SMAMCS_Acc_Gyro_ | Moving energy of the orientation vector of sensor data | | 1 × 3 |
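The combined features compare how strongly the accelerometer and the gyroscope respond within the same window. This section does not spell out the movement-intensity (MI) formula. The sketch below therefore assumes the common definition, the per-sample Euclidean norm of the three axes. It then summarizes the per-sample MI difference over a window. The function and dictionary key names are hypothetical.

```python
import math

def movement_intensity(x, y, z):
    """Per-sample Euclidean norm of a 3-axis signal (assumed MI definition)."""
    return [math.sqrt(a * a + b * b + c * c) for a, b, c in zip(x, y, z)]

def acc_gyro_mi_features(acc, gyro):
    """acc, gyro: (x, y, z) tuples of time-aligned, equally long sample lists.
    Returns window statistics of the per-sample MI difference (hypothetical names)."""
    diff = [a - g for a, g in zip(movement_intensity(*acc), movement_intensity(*gyro))]
    n = len(diff)
    mean = sum(diff) / n
    return {
        "mi_diff_mean": mean,
        "mi_diff_var": sum((d - mean) ** 2 for d in diff) / n,
        "mi_diff_energy": sum(d * d for d in diff) / n,
    }
```

The two sensors report different units (m/s² and rad/s). The difference is therefore meaningful only as a classifier input, not as a physical quantity.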

| Scenario | List of Features | Size |
|---|---|---|
| Only accelerometer | | 8 |
| Only gyroscope | | 25 |
| Accelerometer and gyroscope | | 7 |
| Balanced dataset | | 8 |
| Unbalanced dataset | | 5 |
| Selected-features | | 7 |

| Activity | Only Gyro 1 | Only Acc 2 | Acc 2 and Gyro 1 | Balanced Data | Unbalanced Data | Selected Features |
|---|---|---|---|---|---|---|
| Walk | 19 | 25 | 54 | 43 | 39 | 42 |
| Jog | 17 | 36 | 61 | 40 | 44 | 59 |
| Skip | 12 | 34 | 53 | 36 | 51 | 51 |
| Stay | 25 | 8 | 7 | 8 | 5 | 7 |
| Stairs Up | 12 | 31 | 32 | 36 | 40 | 34 |
| Stairs Down | 18 | 32 | 48 | 38 | 24 | 46 |
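The two tables above report how many features were kept for each scenario and activity. They do not reproduce the selection criterion itself. The sketch below illustrates one way to rank features by how well they separate indoor from outdoor windows, using the Fisher score. This is a commonly used criterion swapped in for illustration, not necessarily the one behind these counts. The function names and the 0/1 label encoding are hypothetical.

```python
def fisher_score(values, labels):
    """Between-class scatter over within-class scatter for one feature (higher = more discriminative)."""
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(y, []).append(v)
    overall = sum(values) / len(values)
    between = within = 0.0
    for g in groups.values():
        m = sum(g) / len(g)
        between += len(g) * (m - overall) ** 2
        within += sum((v - m) ** 2 for v in g)
    return between / within if within > 0 else float("inf")

def top_k_features(columns, labels, k):
    """columns: feature name -> list of values (one per window). Returns the k highest-scoring names."""
    return sorted(columns, key=lambda name: fisher_score(columns[name], labels), reverse=True)[:k]
```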

Random Forest

| Activity | Only Accelerometer: Outdoor | Only Accelerometer: Indoor | Only Gyroscope: Outdoor | Only Gyroscope: Indoor | Accelerometer & Gyroscope: Outdoor | Accelerometer & Gyroscope: Indoor |
|---|---|---|---|---|---|---|
| Walk | 98.75% | 92.33% | 97.71% | 84.95% | 99.06% | 94.32% |
| Jog | 98.90% | 92.86% | 97.52% | 83.03% | 99.29% | 95.55% |
| Skip | 98.90% | 92.35% | 96.96% | 76.01% | 99.03% | 93.38% |
| Stay | 97.61% | 99.07% | 79.73% | 92.66% | 97.23% | 98.92% |
| Stairs Up | 81.56% | 97.89% | 61.39% | 96.43% | 88.79% | 98.69% |
| Stairs Down | 82.95% | 97.95% | 59.52% | 96.17% | 87.40% | 98.48% |
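The Outdoor and Indoor columns give one recognition rate per class. One likely reading is per-class recall: the share of truly outdoor (or truly indoor) windows that were labelled correctly. Under that assumption the rates can be computed from predictions as below. The label strings and the function name are hypothetical.

```python
def per_class_rates(y_true, y_pred, classes=("outdoor", "indoor")):
    """Percentage of each true class that was predicted as that class (per-class recall)."""
    rates = {}
    for c in classes:
        idx = [i for i, t in enumerate(y_true) if t == c]
        hits = sum(1 for i in idx if y_pred[i] == c)
        rates[c] = 100.0 * hits / len(idx) if idx else float("nan")
    return rates
```

Read this way, the 81.56% in the accelerometer-only Stairs Up row means about 81.56% of outdoor stair-climbing windows were recognized as outdoor.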

AdaBoost

| Activity | Only Accelerometer: Outdoor | Only Accelerometer: Indoor | Only Gyroscope: Outdoor | Only Gyroscope: Indoor | Accelerometer & Gyroscope: Outdoor | Accelerometer & Gyroscope: Indoor |
|---|---|---|---|---|---|---|
| Walk | 99.38% | 96.32% | 98.26% | 88.94% | 99.51% | 97.10% |
| Jog | 99.59% | 97.43% | 98.37% | 89.15% | 99.76% | 98.49% |
| Skip | 99.36% | 95.72% | 97.69% | 82.44% | 99.72% | 98.15% |
| Stay | 98.18% | 99.29% | 80.51% | 92.89% | 98.18% | 99.29% |
| Stairs Up | 88.64% | 98.67% | 72.09% | 97.21% | 95.81% | 99.49% |
| Stairs Down | 89.06% | 98.66% | 69.75% | 96.92% | 94.86% | 99.34% |
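AdaBoost builds a weighted vote of weak learners. After each round it re-weights the training samples so that later learners concentrate on earlier mistakes. The sketch below is a minimal discrete AdaBoost with one-feature threshold stumps and labels in {-1, +1}.

This section does not give the weak learner, number of rounds, or implementation used in the paper. These choices, and all names in the code, are therefore assumptions.

```python
import math

def stump_predict(row, j, thr, sign):
    """Weak learner: +sign if feature j >= thr, else -sign."""
    return sign if row[j] >= thr else -sign

def fit_stump(X, y, w):
    """Exhaustively pick the (feature, threshold, sign) with the lowest weighted error."""
    best = None
    for j in range(len(X[0])):
        for thr in sorted({row[j] for row in X}):
            for sign in (1, -1):
                err = sum(wi for wi, row, t in zip(w, X, y)
                          if stump_predict(row, j, thr, sign) != t)
                if best is None or err < best[0]:
                    best = (err, j, thr, sign)
    return best

def adaboost_fit(X, y, rounds=10):
    """Discrete AdaBoost; y holds -1 / +1 labels (e.g. -1 = outdoor, +1 = indoor, hypothetical)."""
    n = len(X)
    w = [1.0 / n] * n
    model = []
    for _ in range(rounds):
        err, j, thr, sign = fit_stump(X, y, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # keep log() finite
        alpha = 0.5 * math.log((1 - err) / err)
        model.append((alpha, j, thr, sign))
        # Misclassified samples (t * h = -1) gain weight; correct ones lose it.
        w = [wi * math.exp(-alpha * t * stump_predict(row, j, thr, sign))
             for wi, row, t in zip(w, X, y)]
        total = sum(w)
        w = [wi / total for wi in w]
    return model

def adaboost_predict(model, row):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(row, j, thr, sign) for alpha, j, thr, sign in model)
    return 1 if score >= 0 else -1
```

Because each stump may use either sign, its weighted error never exceeds 0.5, so every alpha is non-negative.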

| Activity | Random Forest, Balanced: Outdoor | Random Forest, Balanced: Indoor | Random Forest, Unbalanced: Outdoor | Random Forest, Unbalanced: Indoor | AdaBoost, Balanced: Outdoor | AdaBoost, Balanced: Indoor | AdaBoost, Unbalanced: Outdoor | AdaBoost, Unbalanced: Indoor |
|---|---|---|---|---|---|---|---|---|
| Walk | 99.03% | 94.15% | 99.19% | 96.10% | 99.48% | 96.94% | 99.63% | 98.25% |
| Jog | 99.40% | 96.18% | 99.09% | 95.11% | 99.77% | 98.57% | 99.70% | 98.41% |
| Skip | 99.15% | 94.26% | 98.99% | 94.78% | 99.76% | 98.40% | 99.62% | 98.12% |
| Stay | 98.11% | 99.26% | 96.28% | 99.35% | 98.79% | 99.53% | 97.55% | 99.57% |
| Stairs up | 88.04% | 98.59% | 83.18% | 99.03% | 94.27% | 99.30% | 93.13% | 99.58% |
| Stairs down | 87.33% | 98.47% | 81.00% | 98.87% | 94.96% | 99.35% | 91.85% | 99.48% |
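This section does not state how the balanced dataset was produced. One simple possibility is random undersampling, in which every class is cut down to the size of the smallest class. The sketch below shows that approach as an assumption only. The function name and seed are hypothetical.

```python
import random
from collections import defaultdict

def undersample(rows, labels, seed=0):
    """Randomly keep, for every class, as many samples as the smallest class has."""
    by_class = defaultdict(list)
    for r, y in zip(rows, labels):
        by_class[y].append(r)
    n_min = min(len(items) for items in by_class.values())
    rng = random.Random(seed)  # fixed seed for a reproducible subset
    out_rows, out_labels = [], []
    for y, items in by_class.items():
        for r in rng.sample(items, n_min):
            out_rows.append(r)
            out_labels.append(y)
    return out_rows, out_labels
```

In the table, balancing mainly helps Random Forest on stairs. The outdoor rate is 88.04% balanced vs 83.18% unbalanced for Stairs up, and 87.33% vs 81.00% for Stairs down. For Walk, Jog, and Skip, the outdoor rates differ by less than 0.5 percentage points.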

| Activity | Random Forest, Selected Features: Outdoor | Random Forest, Selected Features: Indoor | Random Forest, All Features: Outdoor | Random Forest, All Features: Indoor | AdaBoost, Selected Features: Outdoor | AdaBoost, Selected Features: Indoor | AdaBoost, All Features: Outdoor | AdaBoost, All Features: Indoor |
|---|---|---|---|---|---|---|---|---|
| Walk | 99.05% | 94.24% | 98.79% | 92.61% | 99.55% | 97.34% | 99.43% | 96.62% |
| Jog | 99.32% | 95.69% | 99.08% | 94.05% | 99.76% | 98.49% | 99.74% | 98.40% |
| Skip | 99.05% | 93.46% | 98.89% | 92.40% | 99.77% | 98.49% | 99.55% | 97.02% |
| Stay | 97.87% | 99.17% | 94.62% | 97.96% | 98.68% | 99.48% | 98.07% | 99.25% |
| Stairs up | 88.12% | 98.59% | 82.98% | 98.09% | 95.17% | 99.41% | 92.17% | 99.07% |
| Stairs down | 88.03% | 98.52% | 83.02% | 98.01% | 95.30% | 99.39% | 93.55% | 99.19% |
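The effect of feature selection can be read from the table as a percentage-point difference. The short calculation below does this for the Random Forest outdoor rates, using values copied from the table. The variable and function names are illustrative.

```python
# Random Forest outdoor rates (%) from the table: (selected features, all features).
rf_outdoor = {
    "Walk": (99.05, 98.79),
    "Jog": (99.32, 99.08),
    "Skip": (99.05, 98.89),
    "Stay": (97.87, 94.62),
    "Stairs up": (88.12, 82.98),
    "Stairs down": (88.03, 83.02),
}

def selection_gain(table):
    """Percentage-point change when using the selected features instead of all features."""
    return {activity: round(sel - full, 2) for activity, (sel, full) in table.items()}

gains = selection_gain(rf_outdoor)
best = max(gains, key=gains.get)
print(best, gains[best])
```

Every activity gains, from 0.16 points (Skip) up to 5.14 points (Stairs up). The largest gains appear on the stair activities, where the all-features rates were lowest.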

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Esmaeili Kelishomi, A.; Garmabaki, A.H.S.; Bahaghighat, M.; Dong, J. Mobile User Indoor-Outdoor Detection through Physical Daily Activities. Sensors 2019, 19, 511. https://doi.org/10.3390/s19030511