Multi-Factor Operating Condition Recognition Using 1D Convolutional Long Short-Term Network

Abstract

1. Introduction

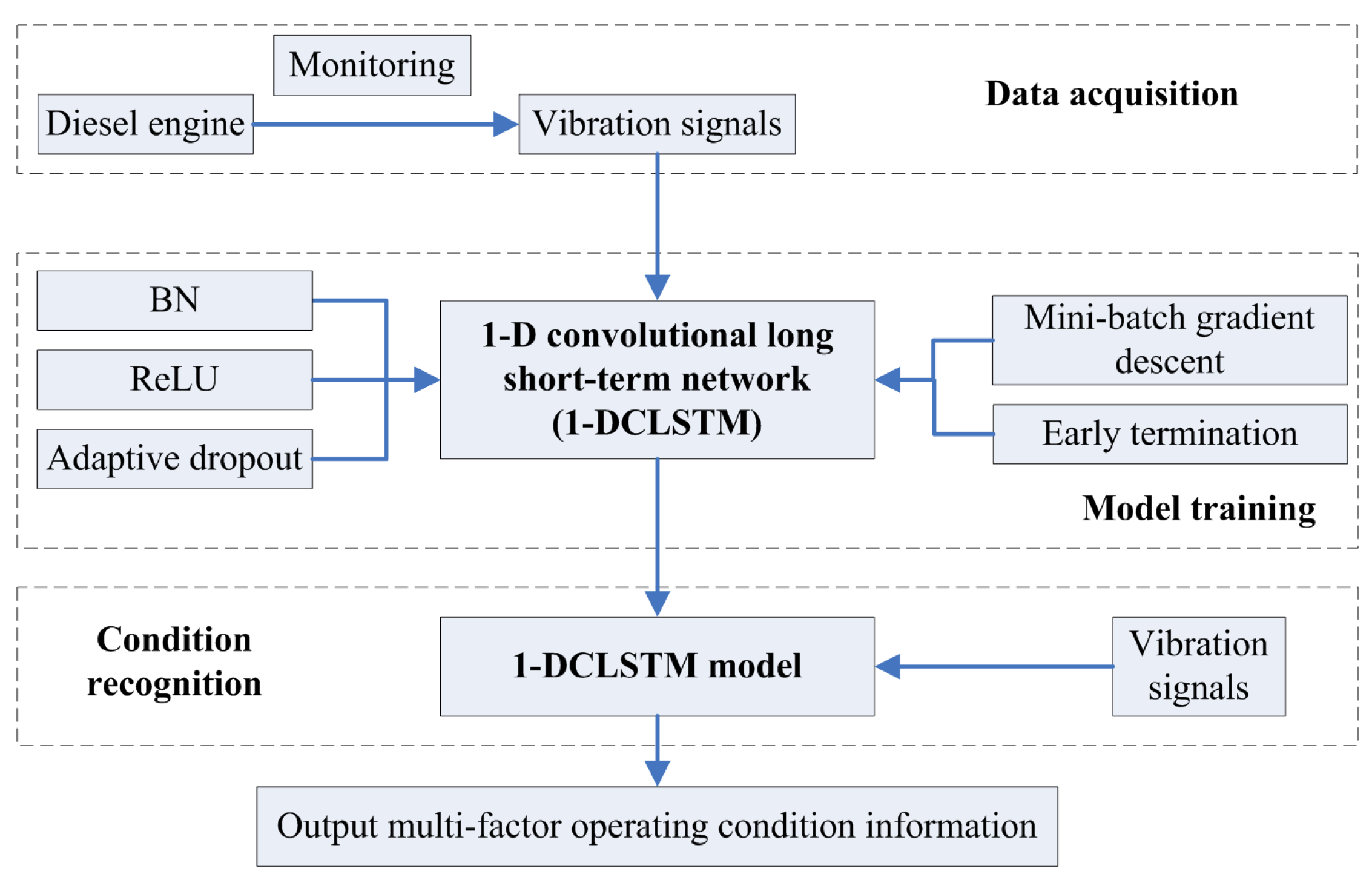

- A multi-factor operating condition recognition method is proposed using a 1D convolutional long short-term network (1D-CLSTM). As far as we know, this is the first study to combine a 1D CNN and LSTM to recognize operating conditions based on a time series of vibration signals;

- To account for the particular characteristics of engine vibration signals, batch normalization (BN) is introduced to regularize layer inputs by fixing the mean and variance of the input signals in each convolutional layer;

- Adaptive dropout is proposed to improve model sparsity and prevent overfitting;

- The designed 1D-CLSTM classifier achieves high generalization accuracy (99.08% in the comparison of learning models) for recognizing multi-factor operating conditions.
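
The batch normalization step named in the second contribution can be illustrated with a minimal sketch. It uses the standard BN transform (normalize one channel to zero mean and unit variance over the mini-batch, then apply a scale and shift). The function name `batch_norm_1d`, the `gamma` and `beta` defaults, and the `eps` value are assumptions for illustration and are not taken from the paper.

```python
import math

def batch_norm_1d(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one channel's activations across a mini-batch.

    `batch` is a list of floats (one activation per sample). gamma, beta and
    eps are illustrative defaults, not values taken from the paper.
    """
    n = len(batch)
    mean = sum(batch) / n
    # Biased (population) variance, as used by BN during training.
    var = sum((x - mean) ** 2 for x in batch) / n
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]

normalized = batch_norm_1d([0.5, 2.0, -1.5, 3.0])
print([round(v, 3) for v in normalized])
```

After normalization the values have a mean of approximately zero and a variance of approximately one, whatever the scale of the raw activations.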

2. Experiment and Vibration Signal

2.1. Test Bench of Diesel Engine

2.2. Experimental Data Acquisition

3. Technical Background

3.1. 1D CNN

3.2. LSTM

4. Methodologies

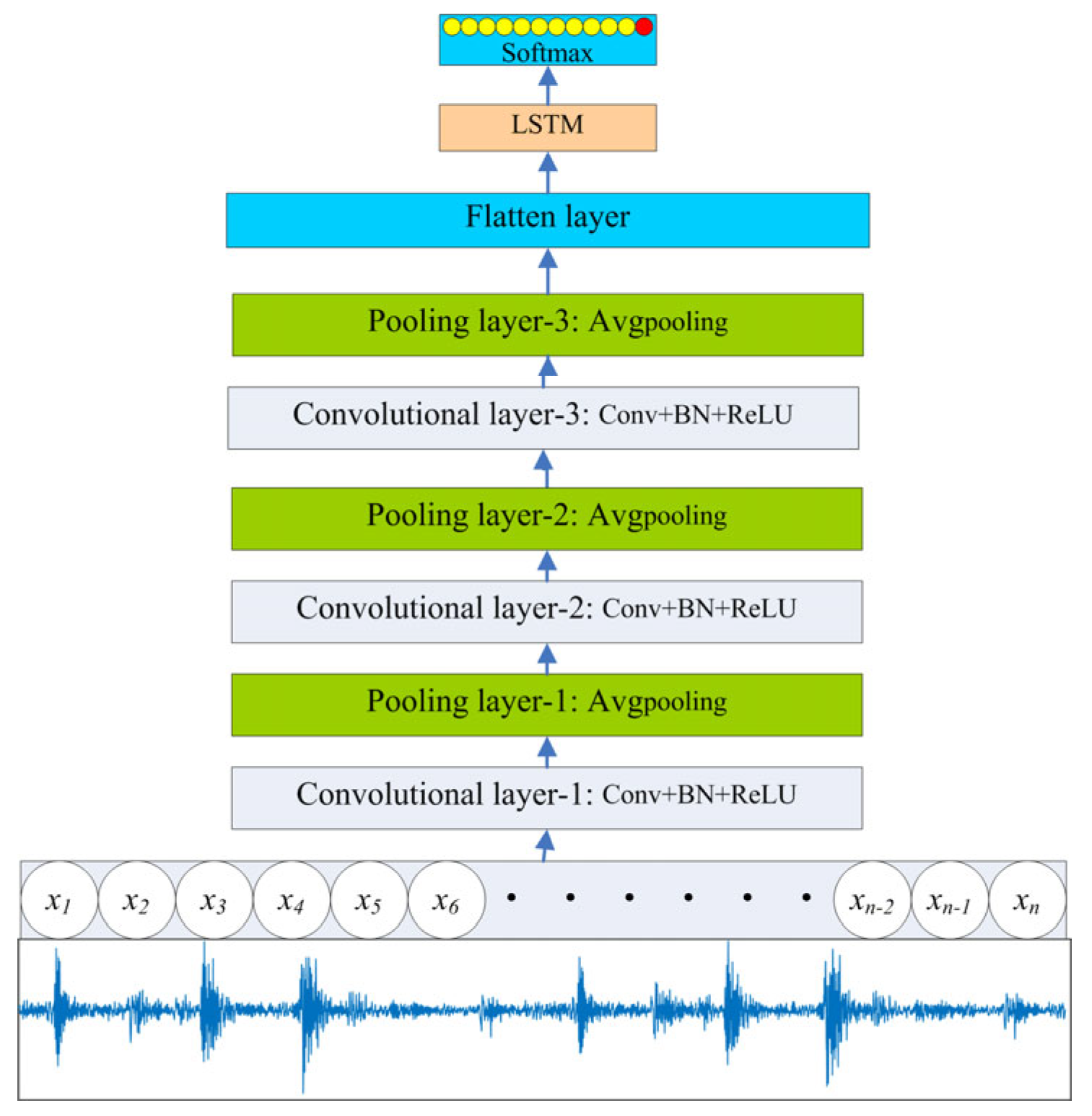

4.1. 1D Convolutional Long Short-Term Network

4.1.1. Overall Architecture

4.1.2. Architecture Design

4.1.3. Adaptive Dropout

4.1.4. Implementation

4.2. Multi-Factor Operating Condition Recognition

5. Experiments

5.1. Training Performance of the Designed 1D-CLSTM

5.2. Comparison of Training Performance with Different Dropout Ratios

5.3. Comparison Analysis

- The k-nearest neighbor (kNN) algorithm, which works with a multi-domain feature set [33]. With this feature set, the kNN algorithm is considered more suitable than other statistical learning methods.

- The support vector machine (SVM), which works with a multi-domain feature set. SVM is a kind of generalized linear classifier that can be used for supervised learning.

- The 1D LeNet-5, which is a convolutional network that has the same network layers as LeNet-5, i.e., two convolutional layers and two fully connected layers. The corresponding structural parameters are listed in Table 4.

- The 1D AlexNet, which is a convolutional network that has the same network layers as AlexNet, i.e., five convolutional layers and three fully connected layers. The corresponding structural parameters are also listed in Table 4.

- The 1D VGG-16, which is a convolutional network that has the same network layers as VGG-16, with 1D convolution kernels adopted. The corresponding structural parameters are also listed in Table 4.

- A traditional LSTM, which has two layers and 32 LSTM units in each layer.
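
As a rough illustration of the feature-based baselines in the list above, the sketch below extracts a few time-domain features from a signal segment and classifies it by a kNN majority vote. The three features (RMS, peak, kurtosis), the toy signals, and the names `time_domain_features` and `knn_predict` are hypothetical; the multi-domain feature set of [33] is not reproduced here.

```python
import math
from collections import Counter

def time_domain_features(signal):
    """Return (RMS, peak, kurtosis) for one segment (illustrative feature choice)."""
    n = len(signal)
    mean = sum(signal) / n
    var = sum((x - mean) ** 2 for x in signal) / n
    rms = math.sqrt(sum(x * x for x in signal) / n)
    peak = max(abs(x) for x in signal)
    kurt = sum((x - mean) ** 4 for x in signal) / (n * var ** 2) if var > 0 else 0.0
    return (rms, peak, kurt)

def knn_predict(train_features, train_labels, query, k=3):
    """Majority vote among the k training samples closest to `query` (Euclidean distance)."""
    ranked = sorted(
        zip(train_features, train_labels),
        key=lambda pair: math.dist(pair[0], query),
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]

# Toy segments: class 1 has small amplitude, class 2 has large amplitude.
train_signals = [
    [0.1, -0.1, 0.2, -0.2], [0.15, -0.1, 0.1, -0.2], [0.2, -0.2, 0.1, -0.1],
    [1.0, -1.2, 0.9, -1.1], [1.1, -0.9, 1.2, -1.0], [0.9, -1.0, 1.1, -1.2],
]
train_labels = [1, 1, 1, 2, 2, 2]
train_features = [time_domain_features(s) for s in train_signals]

predicted = knn_predict(
    train_features, train_labels, time_domain_features([1.0, -1.0, 1.1, -0.9])
)
print(predicted)
```

In practice the features would usually be standardized before computing distances, since kNN is sensitive to differences in feature scale.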

5.4. Generalizability Verification

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Kouremenos, D.A.; Hountalas, D.T. Diagnosis and condition monitoring of medium-speed marine diesel engines. Lubr. Sci. 2010, 4, 63–91.

- Zhiwei, M. Research on Typical Fault Diagnosis and Unstable Condition Monitoring and Evaluation for Piston Engine. Ph.D. Thesis, Beijing University of Chemical Technology, Beijing, China, 2017.

- Porteiro, J.; Collazo, J.; Patiño, D.; Míguez, J.L. Diesel engine condition monitoring using a multi-net neural network system with nonintrusive sensors. Appl. Therm. Eng. 2011, 31, 4097–4105.

- Xu, H.F. New Intelligent Condition Monitoring and Fault Diagnosis System for Diesel Engines Using Embedded System. Appl. Mech. Mater. 2012, 235, 408–412.

- Xu, X.; Yan, X.; Sheng, C.; Yuan, C.; Xu, D.; Yang, J. A Belief Rule-Based Expert System for Fault Diagnosis of Marine Diesel Engines. IEEE Trans. Syst. Man Cybern. Syst. 2017, 99, 1–17.

- Kowalski, J.; Krawczyk, B.; Woźniak, M. Fault diagnosis of marine 4-stroke diesel engines using a one-vs-one extreme learning ensemble. Eng. Appl. Artif. Intell. 2017, 57, 134–141.

- Shen, H.; Zeng, R.; Yang, W.; Zhou, B.; Ma, W.; Zhang, L.; Projecton Equipment Surport Department, Army Military Transportation University. Diesel Engine Fault Diagnosis Based on Polar Coordinate Enhancement of Time-Frequency Diagram. J. Vib. Meas. Diagn. 2018, 38, 27–33.

- Li, Y.; Han, M.; Han, B.; Le, X.; Kanae, S. Fault Diagnosis Method of Diesel Engine Based on Improved Structure Preserving and K-NN Algorithm. In Advances in Neural Networks-ISNN 2018; Springer: Cham, Switzerland, 2018.

- Liu, Y. Research on Fault Diagnosis for Fuel System and Valve Train of Diesel Engine Based on Vibration Analysis. Ph.D. Thesis, Tianjin University, Tianjin, China, 2016.

- Wu, T.; Wu, H.; Du, Y.; Peng, Z. Progress and trend of sensor technology for on-line oil monitoring. Sci. China Technol. Sci. 2013, 56, 2914–2926.

- Tang, G.; Fu, X.; Shao, G.; Chen, N. Application of Improved Grey Model in Prediction of Thermal Parameters for Diesel Engine. Ship Boat 2018, 5, 39–46.

- Li, H.; Zhou, P.; Ma, X. Pattern recognition on diesel engine working condition by using a novel methodology-Hilbert spectrum entropy. J. Mar. Eng. Technol. 2005, 4, 43–48.

- Ji, S.; Cheng, Y.; Wang, X. Cylinder pressure recognition based on frequency characteristic of vibration signal measured from cylinder head. J. Vib. Shock 2008, 27, 133–136.

- Liu, J.; Li, H.; Qiao, X.; Li, X.; Shi, Y. Engine Cylinder Pressure Identification Method Based on Cylinder Head Vibration Signals. Chin. Intern. Combustion Engine Eng. 2013, 34, 32–37.

- Chang, C.; Jia, J.; Zeng, R.; Mei, J.; Wang, G. Recognition of Cylinder Pressure Based on Time-frequency Coherence and RBF Network. Veh. Engine 2016, 5, 87–92.

- Syed, M.S.; Sunghoon, K.; Sungoh, K. Real-Time Classification of Diesel Marine Engine Loads Using Machine Learning. Sensors 2019, 19, 3172.

- Yoo, Y.; Baek, J.G. A Novel Image Feature for the Remaining Useful Lifetime Prediction of Bearings Based on Continuous Wavelet Transform and Convolutional Neural Network. Appl. Sci. 2018, 8, 1102.

- Rui, Z.; Yan, R.; Chen, Z.; Mao, K.; Wang, P.; Gao, R.X. Deep learning and its applications to machine health monitoring. Mech. Syst. Signal Process. 2019, 115, 213–237.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012.

- Zhiqiang, C.; Chuan, L.; Sanchez, R.V. Gearbox Fault Identification and Classification with Convolutional Neural Networks. Shock Vib. 2015, 2015, 1–10.

- Yuan, J.; Han, T.; Tang, J.; An, L. An Approach to Intelligent Fault Diagnosis of Rolling Bearing Using Wavelet Time-Frequency Representations and CNN. Mach. Des. Res. 2017, 2, 101–105.

- Peng, P.; Zhao, X.; Pan, X.; Ye, W. Gas Classification Using Deep Convolutional Neural Networks. Sensors 2018, 18, 157.

- Wu, Y.; Yuan, M.; Dong, S.; Li, L.; Liu, Y. Remaining useful life estimation of engineered systems using vanilla LSTM neural networks. Neurocomputing 2018, 275, 167–179.

- Qing, X.; Niu, Y. Hourly day-ahead solar irradiance prediction using weather forecasts by LSTM. Energy 2018, 148, 461–468.

- Lin, C.; Yuan, Z.; Julie, I.; Muge, C.; Ryan, A.; Jeanne, M.H.; Min, C. Early diagnosis and prediction of sepsis shock by combining static and dynamic information using convolutional-LSTM. In Proceedings of the 2018 IEEE International Conference on Healthcare Informatics (ICHI), New York, NY, USA, 4–7 June 2018; pp. 219–228.

- Andersen, R.S.; Abdolrahman, P.; Sadasivan, P. A deep learning approach for real-time detection of atrial fibrillation. Expert Syst. Appl. 2019, 115, 465–473.

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556v6.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlen, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

- Nitish, S.; Geoffrey, H.; Alex, K.; Ilya, S.; Ruslan, S. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Visa, S.; Ramsay, B.; Ralescu, A.L.; Van Der Knaap, E. Confusion Matrix-Based Feature Selection; MAICS: Metro Manila, Philippines, 2011; pp. 120–127.

- Yan, X.; Jia, M. A novel optimized SVM classification algorithm with multi-domain feature and its application to fault diagnosis of rolling bearing. Neurocomputing 2018, 313, 47–64.

| Item | Parameter |
|---|---|
| Number of cylinders | 12 |
| Shape | V-shaped 60° |
| Firing sequence | B1-A1-B5-A5-B3-A3-B6-A6-B2-A2-B4-A4 |
| Rated speed | 2100 rev/min |
| Rated power | 485 kW |

| No. | Rev (rpm) | Load (N·m) | No. | Rev (rpm) | Load (N·m) |
|---|---|---|---|---|---|
| 1 | 1500 | 700 | 7 | 1800 | 1600 |
| 2 | 1500 | 1000 | 8 | 2100 | 700 |
| 3 | 1500 | 1300 | 9 | 2100 | 1000 |
| 4 | 1800 | 700 | 10 | 2100 | 1300 |
| 5 | 1800 | 1000 | 11 | 2100 | 1600 |
| 6 | 1800 | 1300 | 12 | 2100 | 2200 |
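
Each of the twelve conditions in the table above is a combination of speed and load, so a multi-factor condition can be treated as a single class label. The sketch below builds such a mapping. The names `CONDITIONS`, `CLASS_INDEX`, `condition_to_class`, and `one_hot`, and the zero-based indexing, are hypothetical conventions chosen for illustration.

```python
# (speed in rpm, load in N·m) for conditions No. 1-12, copied from the table.
CONDITIONS = [
    (1500, 700), (1500, 1000), (1500, 1300),
    (1800, 700), (1800, 1000), (1800, 1300), (1800, 1600),
    (2100, 700), (2100, 1000), (2100, 1300), (2100, 1600), (2100, 2200),
]

# Zero-based class index for each (speed, load) pair; index = table No. - 1.
CLASS_INDEX = {cond: i for i, cond in enumerate(CONDITIONS)}

def condition_to_class(rev_rpm, load_nm):
    """Return the class index for a (speed, load) pair; raises KeyError if not in the table."""
    return CLASS_INDEX[(rev_rpm, load_nm)]

def one_hot(class_index, num_classes=len(CONDITIONS)):
    """One-hot target vector matching a 12-way softmax output."""
    return [1.0 if i == class_index else 0.0 for i in range(num_classes)]

print(condition_to_class(1800, 1600))  # table No. 7 -> index 6
```

Encoding the speed and load pair as one label keeps the task a plain 12-class classification, which matches the 12-unit softmax output of the network.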

| No. | Network Layer | Size of Convolution Kernel | Stride | Output Dimension |
|---|---|---|---|---|
| 1 | Input layer | - | - | 4096 × 1 |
| 2 | Convolutional layer-1 | 11 | 1 | 4096 × 32 |
| 3 | Pooling layer-1 | 3 | 2 | 2047 × 32 |
| 4 | Convolutional layer-2 | 13 | 1 | 2047 × 64 |
| 5 | Pooling layer-2 | 3 | 2 | 1023 × 64 |
| 6 | Convolutional layer-3 | 15 | 1 | 1023 × 128 |
| 7 | Pooling layer-3 | 3 | 2 | 511 × 128 |
| 8 | Flatten layer | - | - | 73 × 896 |
| 9 | LSTM (two layers) | - | - | 73 |
| 10 | Softmax | - | - | 12 |
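
The output dimensions in the table above can be checked with a few lines of arithmetic. The sketch assumes that each convolution preserves the sequence length (stride 1 with "same" padding) and that pooling is unpadded, so a pooling output has length floor((L - k) / s) + 1. These padding choices are inferred from the listed dimensions and are not stated in this section.

```python
def conv_same_length(length, stride=1):
    """Output length of a 'same'-padded 1D convolution (assumed padding mode)."""
    return -(-length // stride)  # ceil(length / stride)

def pool_valid_length(length, kernel, stride):
    """Output length of an unpadded 1D pooling layer."""
    return (length - kernel) // stride + 1

length, shapes = 4096, []
for channels in (32, 64, 128):  # filters in convolutional layers 1-3
    length = conv_same_length(length)
    shapes.append((length, channels))
    length = pool_valid_length(length, kernel=3, stride=2)
    shapes.append((length, channels))

print(shapes)
# The last feature map holds 511 * 128 values, the same count as a 73 x 896 array.
print(shapes[-1][0] * shapes[-1][1], 73 * 896)
```

Under these assumptions the computed lengths reproduce rows 2 to 7 of the table, and the element count of the final feature map matches the 73 × 896 shape listed for the flatten layer.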

| 1D LeNet-5 | 1D AlexNet | 1D VGG-16 | 1D VGG-16 (continued) |
|---|---|---|---|
| Conv1 [1,11] × 64, s = 1 | Conv1 [1,11] × 32, s = 1 | Conv1 [1,3] × 16, s = 1 | Conv9 [1,3] × 128, s = 1 |
| AveragePooling1 [1,3], s = 2 | MaxPooling1 [1,3], s = 2 | Conv2 [1,3] × 16, s = 1 | Conv10 [1,3] × 128, s = 1 |
| Conv2 [1,13] × 128, s = 1 | Conv2 [1,5] × 64, s = 1 | MaxPooling1 [1,2], s = 2 | MaxPooling4 [1,2], s = 2 |
| AveragePooling2 [1,3], s = 2 | MaxPooling2 [1,3], s = 2 | Conv3 [1,3] × 32, s = 1 | Conv11 [1,3] × 256, s = 1 |
| FC1 (1024) | Conv3 [1,3] × 128, s = 1 | Conv4 [1,3] × 32, s = 1 | Conv12 [1,3] × 256, s = 1 |
| FC2 (512) | Conv4 [1,3] × 128, s = 1 | MaxPooling2 [1,2], s = 2 | Conv13 [1,3] × 256, s = 1 |
| softmax | Conv5 [1,3] × 128, s = 1 | Conv5 [1,3] × 64, s = 1 | MaxPooling5 [1,2], s = 2 |
| - | MaxPooling3 [1,3], s = 2 | Conv6 [1,3] × 64, s = 1 | FC1 (1024) |
| - | FC1 (1024) | Conv7 [1,3] × 64, s = 1 | FC2 (512) |
| - | FC2 (512) | MaxPooling3 [1,2], s = 2 | softmax |
| - | softmax | Conv8 [1,3] × 128, s = 1 | - |

| Learning Model | Generalization Accuracy (%) |
|---|---|
| 1D-CLSTM | 99.08 |
| LSTM | 74.12 |
| kNN with a multi-domain feature set | 92.18 |
| SVM with a multi-domain feature set | 94.91 |
| 1D LeNet-5 | 94.43 |
| 1D AlexNet | 97.54 |
| 1D VGG-16 | 98.01 |

| No. | Rev (rpm) | Load (kN·m) |
|---|---|---|
| 1 | 600 | 0 |
| 2 | 1100 | 17.7 |
| 3 | 1500 | 22.6 |
| 4 | 1500 | 26.6 |
| 5 | 1500 | 28.3 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jiang, Z.; Lai, Y.; Zhang, J.; Zhao, H.; Mao, Z. Multi-Factor Operating Condition Recognition Using 1D Convolutional Long Short-Term Network. Sensors 2019, 19, 5488. https://doi.org/10.3390/s19245488
