Towards Tangible Vision for the Visually Impaired through 2D Multiarray Braille Display

Abstract

1. Introduction

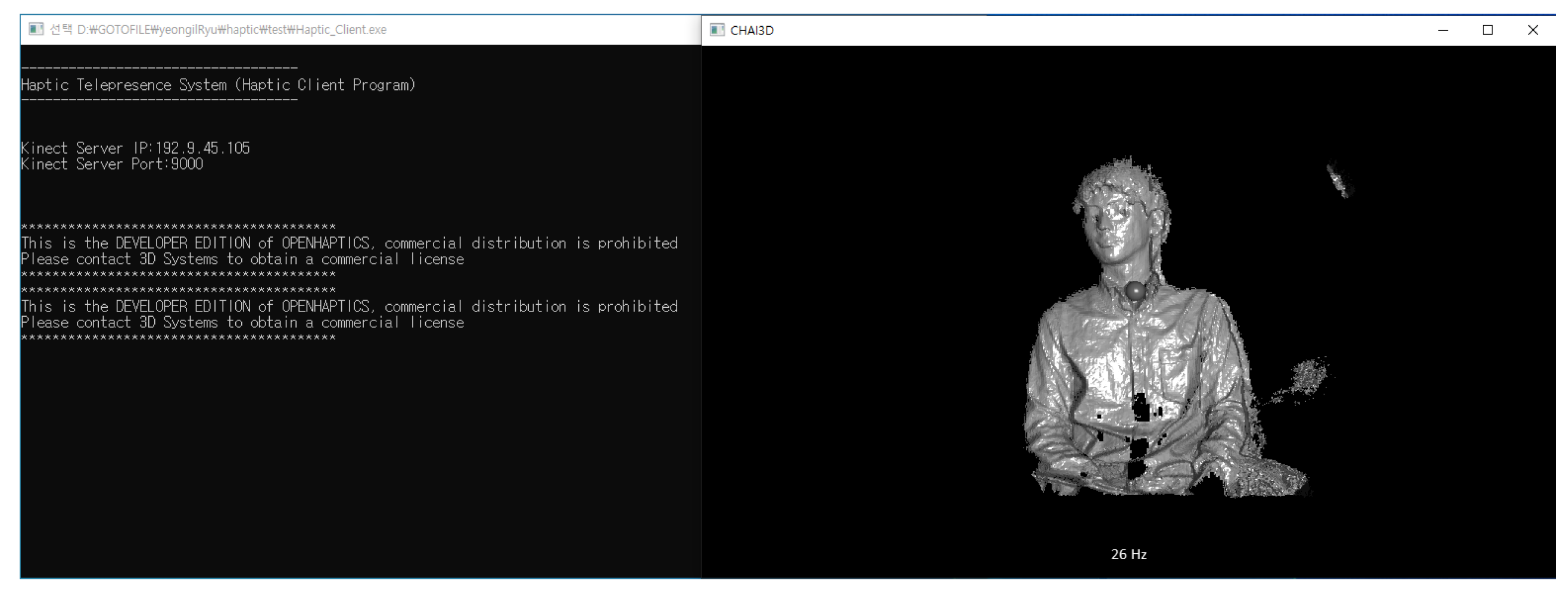

- First, this study presents a haptic telepresence system that visually impaired people can use to feel the shape of a remote object in real time. The server captures a 3D object, encodes a depth video using High Efficiency Video Coding (HEVC, H.265/MPEG-H), and sends it to the client. The 2D+depth video is originally reconstructed at 30 frames per second (fps), but visually impaired users do not need such a high frame rate. The system therefore downsamples the frame rate according to user feedback: the haptic device has a button that tells the system the user has finished exploring the current frame. In this way, the system can express a 3D object to visually impaired people. However, the authors recognized the limitations of this approach, and the focus of the study consequently shifted to research on a next-generation braille display. The limitations of haptic telepresence are discussed in Section 6.

- This study also presents a 2D multiarray braille display. For sharing multimedia content, it presents a braille electronic book (eBook) reader application that can share large amounts of text, figures, and audio content. During the research, several types of tethered 2D multiarray braille display devices were developed to simulate the proposed software solution before a hardware (HW) 2D braille device was built. The solution on the HW device does not need the tethered system.
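The feedback-driven frame-rate downsampling described in the first item can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `select_frames_on_button`, the timestamp representation, and the rule that each button press requests the newest captured frame are all assumptions introduced here.

```python
from bisect import bisect_right


def select_frames_on_button(frame_times, press_times):
    """Return the index of the frame to deliver for each button press.

    frame_times: sorted capture timestamps of the decoded frames.
    press_times: timestamps at which the user pressed the "done" button.
    Assumption: the first frame is delivered immediately, and each press
    requests the newest frame already captured at that moment.
    """
    if not frame_times:
        return []
    selected = [0]  # the user starts by exploring the first frame
    for t in sorted(press_times):
        # Index of the last frame captured at or before time t.
        idx = bisect_right(frame_times, t) - 1
        if idx > selected[-1]:
            selected.append(idx)
    return selected


# 30 fps source for 3 seconds; the user finishes two frames (hypothetical times).
frames = [i / 30 for i in range(90)]
presses = [1.0, 2.2]
print(select_frames_on_button(frames, presses))
```

With this rule the effective frame rate is set by how fast the user explores each frame, so frames captured while the user is still exploring are simply skipped.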

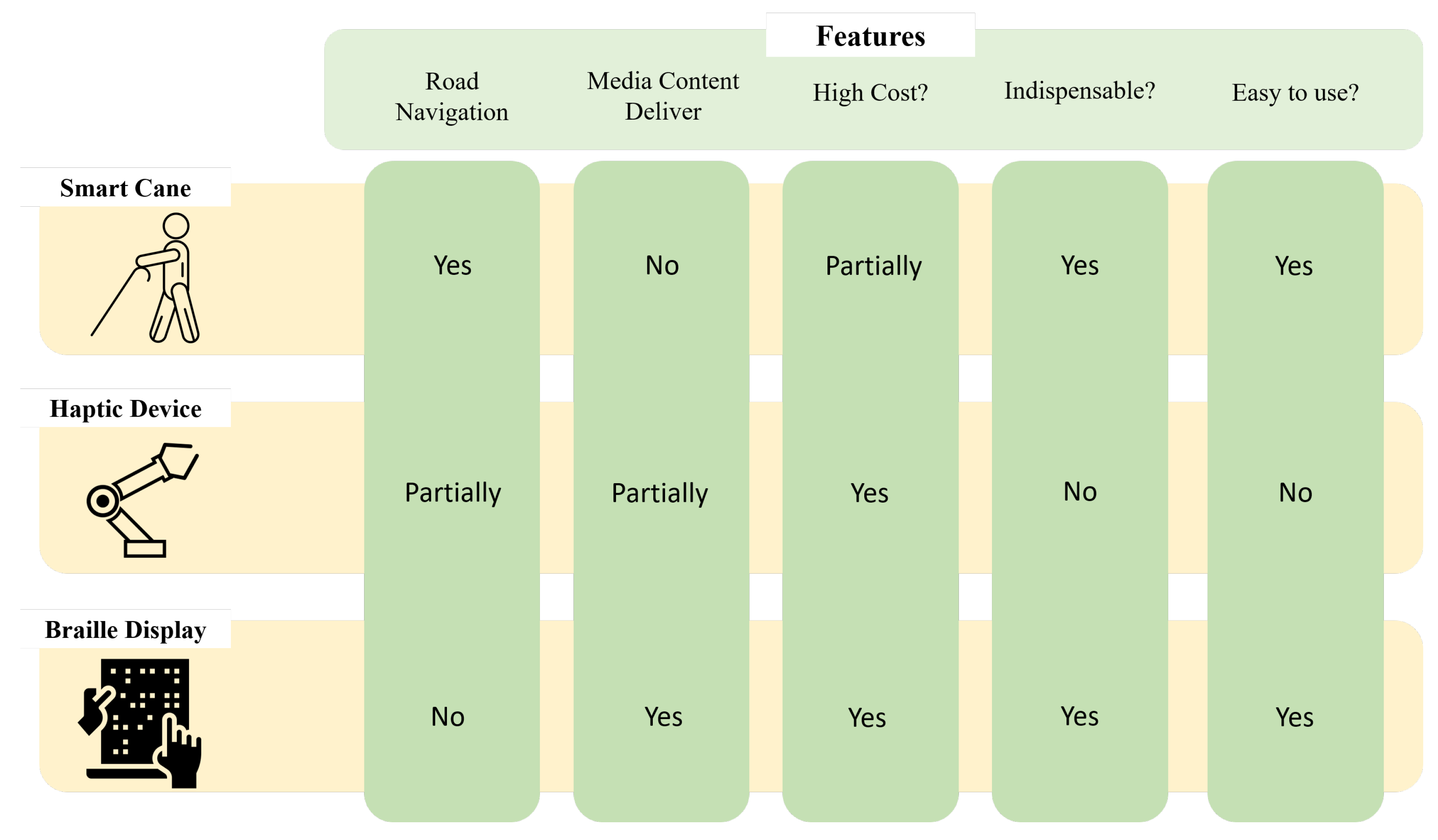

2. Related Work

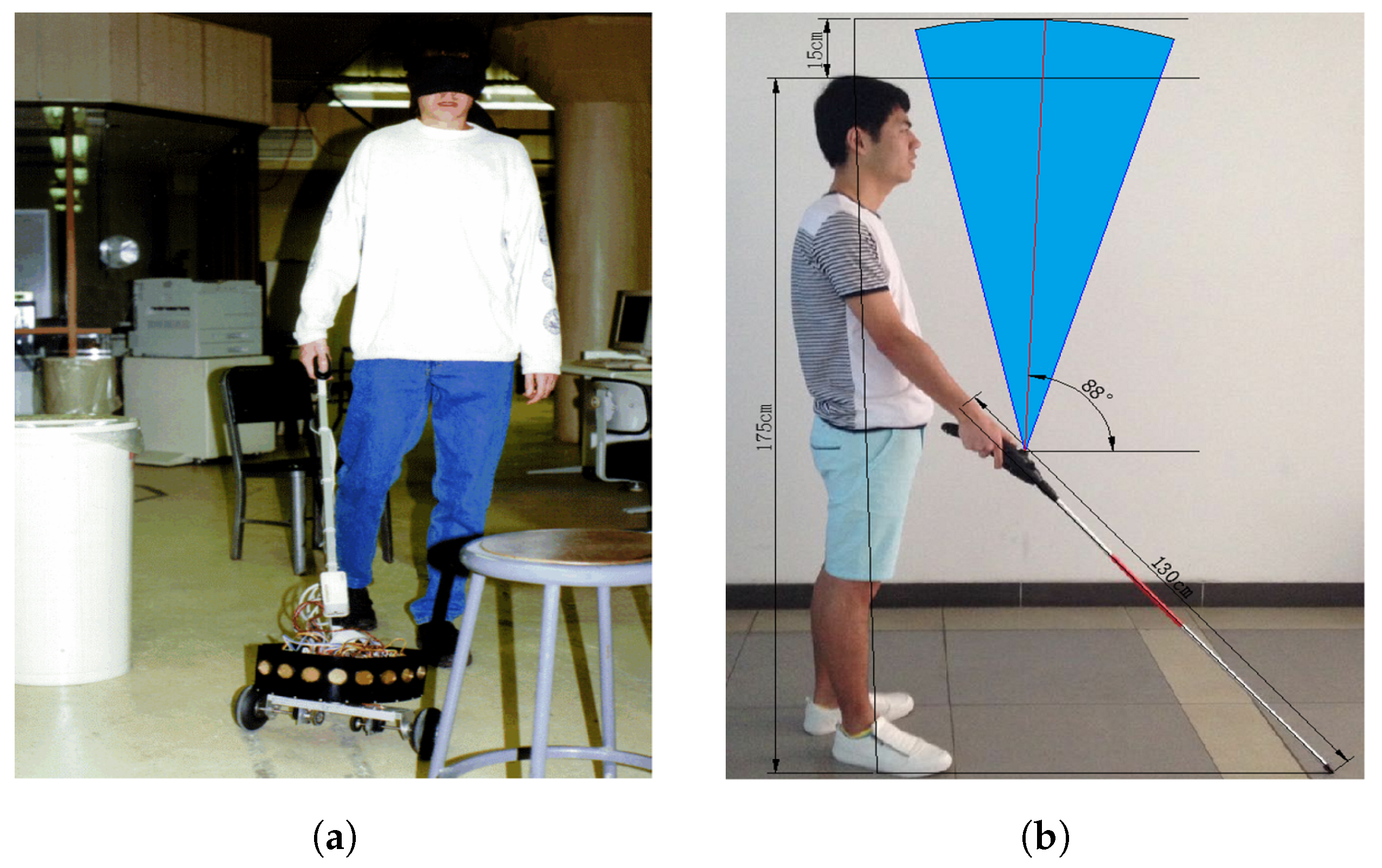

2.1. Smart Canes Using Various Sensors

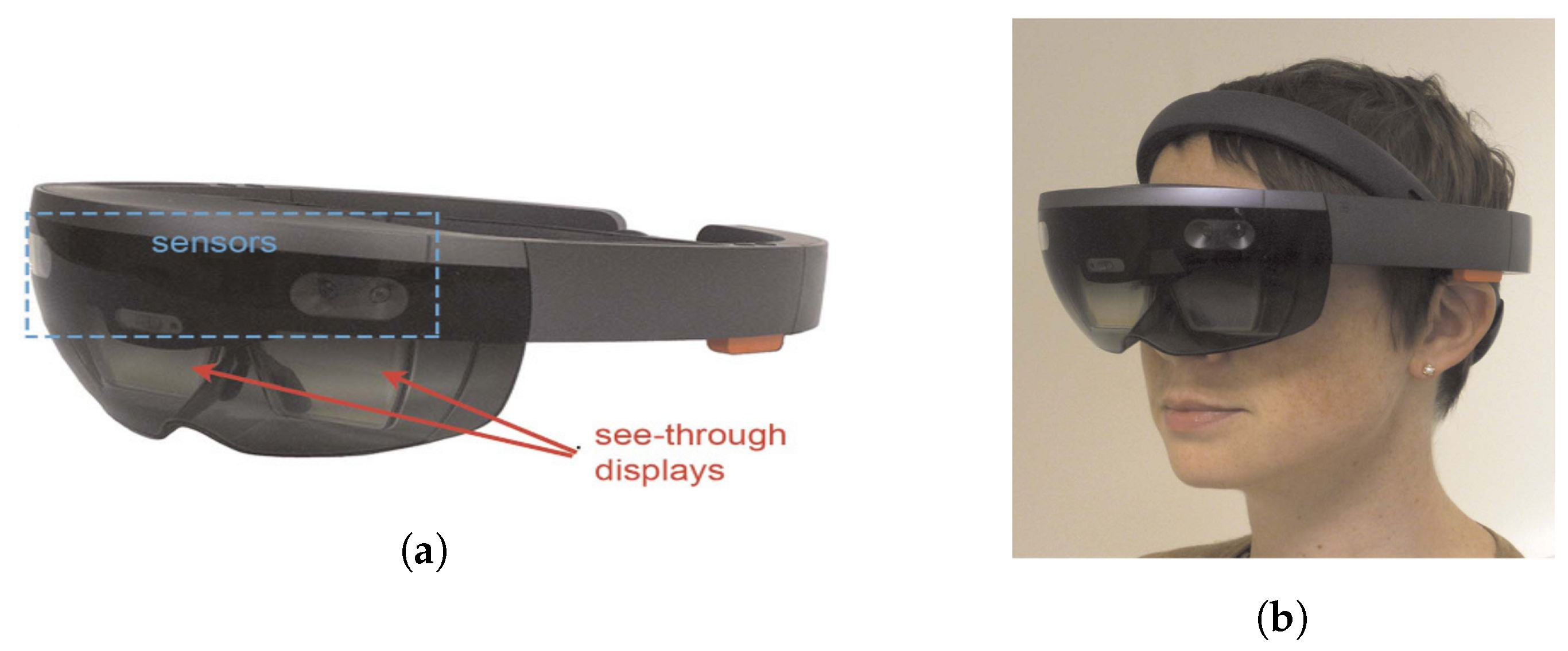

2.2. Communication of 2D or 3D Objects Using Haptic Device or Depth Camera

2.3. Information Transfer Using Braille Device and Assistive Application

3. 3D Haptic Telepresence System

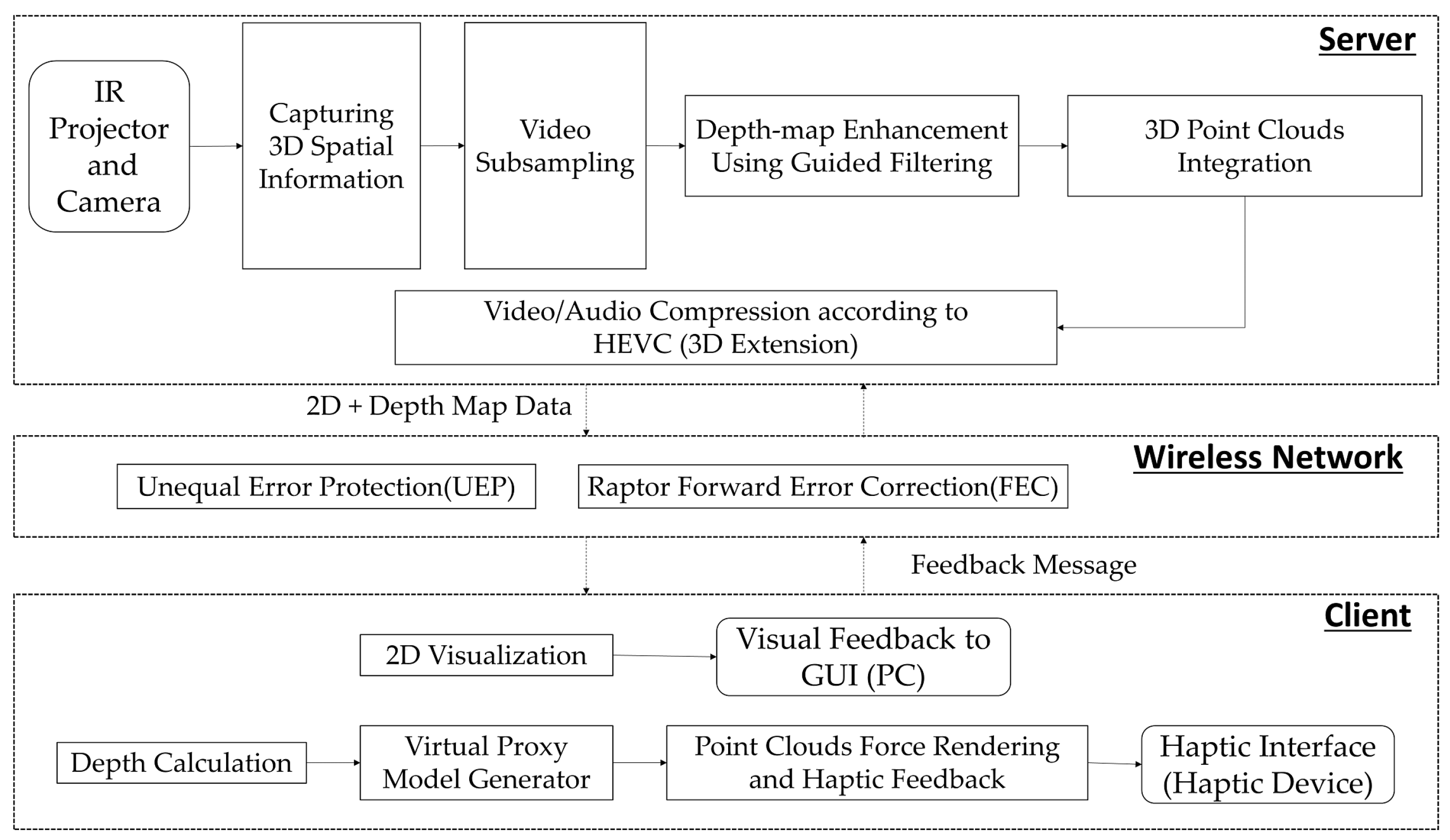

3.1. Architecture of 3D Haptic Telepresence System

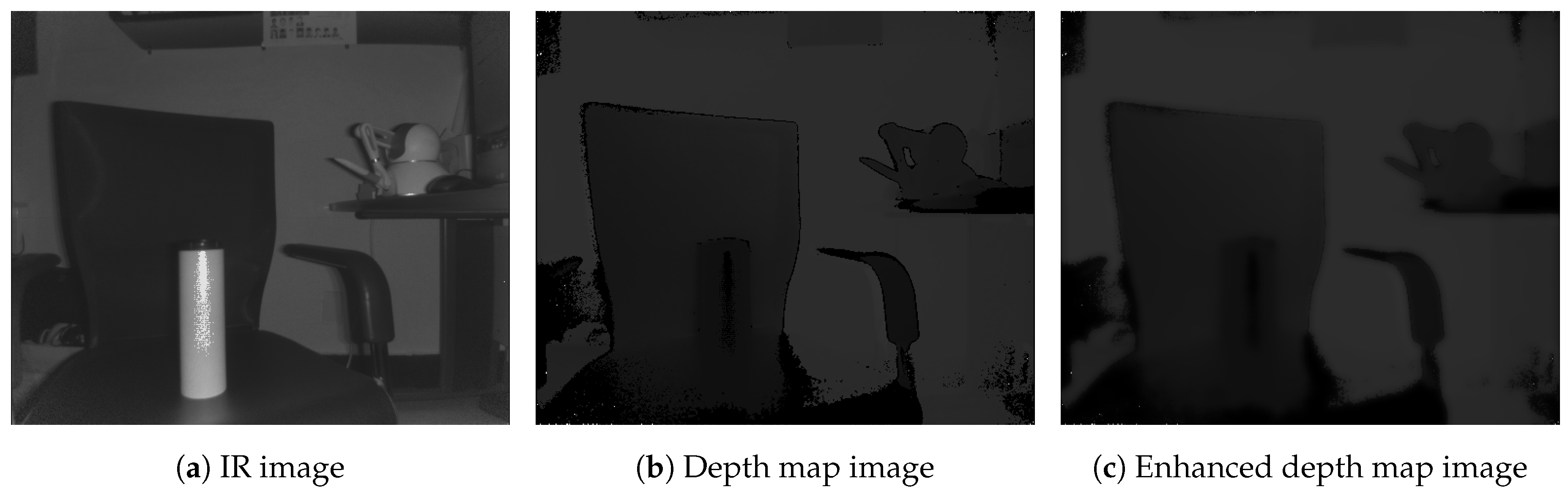

3.2. 3D Spatial Information Capture with Depth Map Using IR Projector and Camera

3.3. Real-Time Video Compression and Transmission to Haptic Device

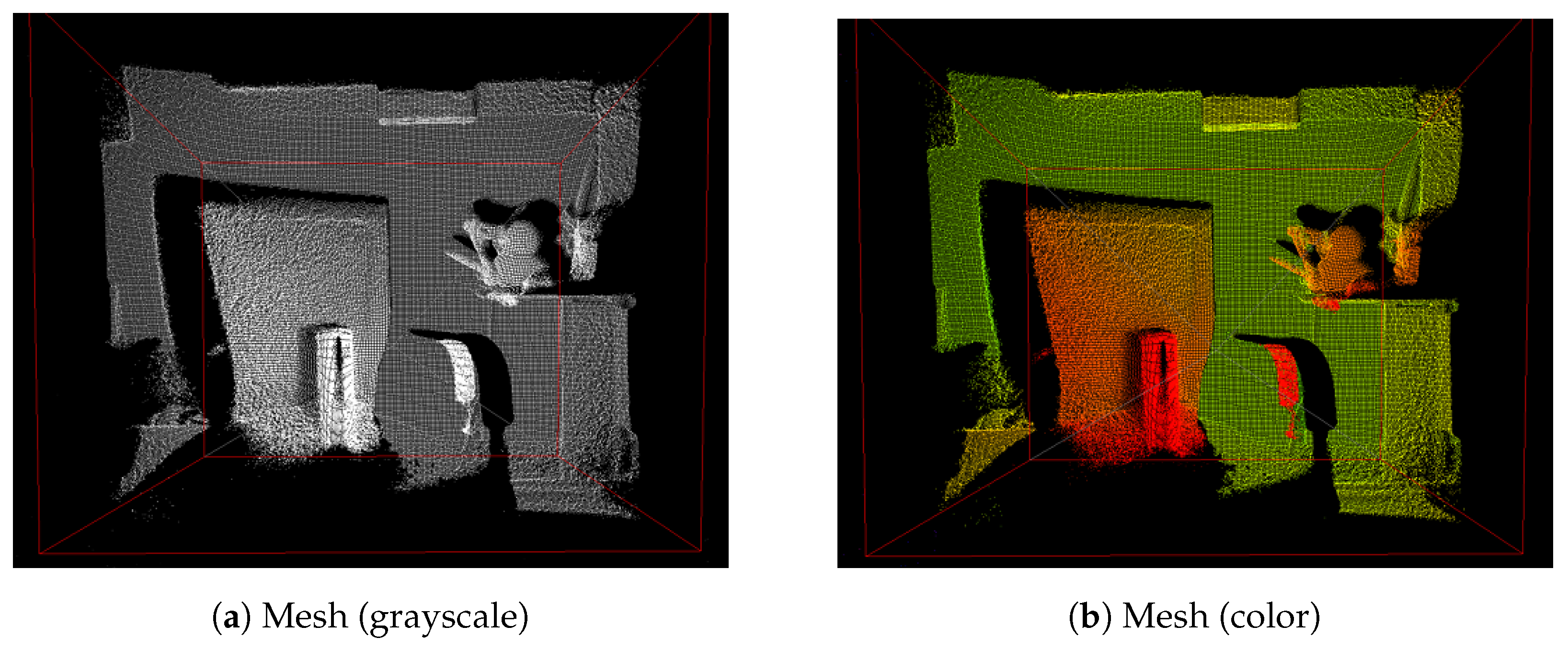

3.4. Real-Time Haptic Interaction Using 2D+ Depth Video

4. Electronic Book (eBook) Reader Application for 2D Braille Display

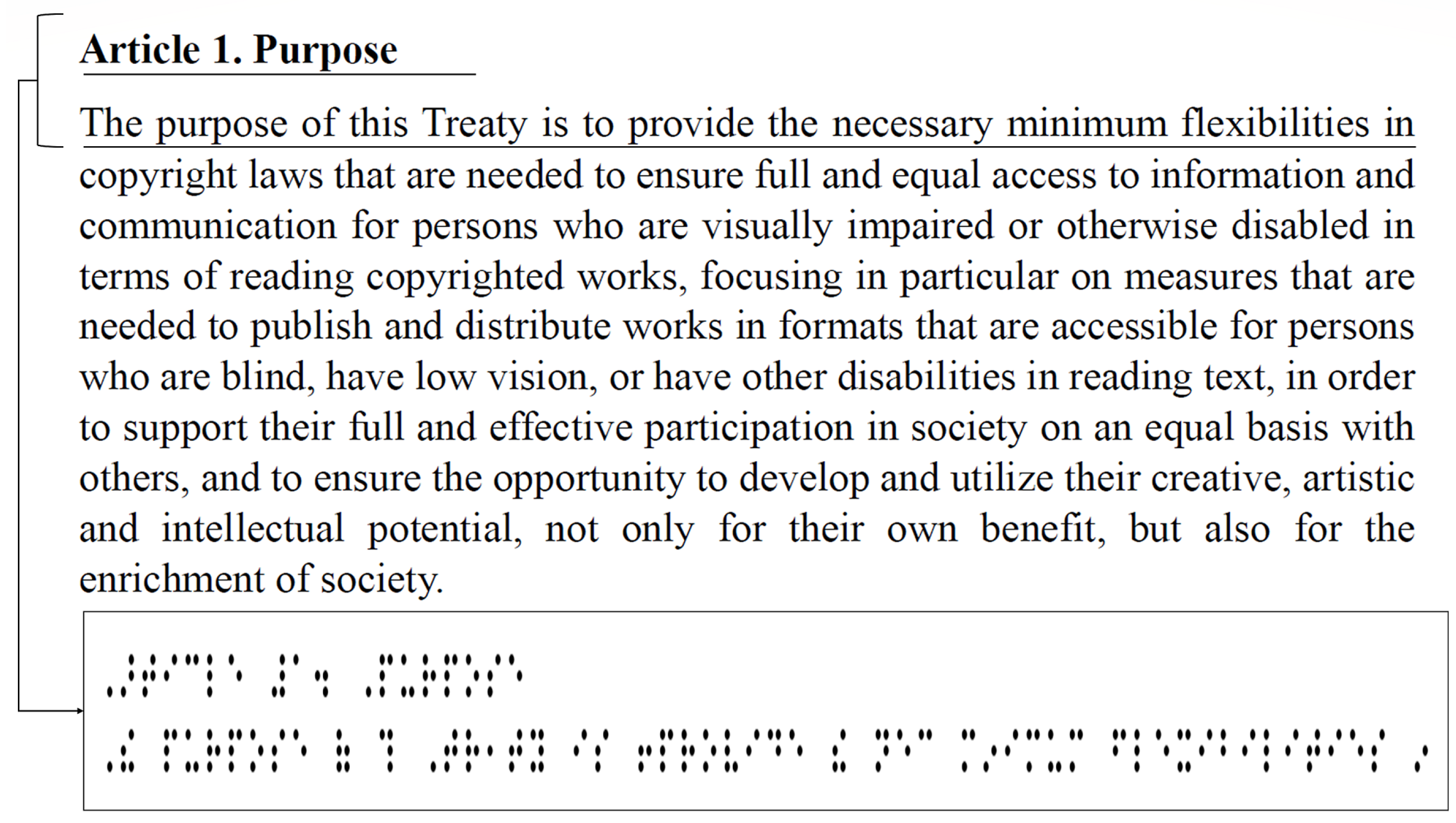

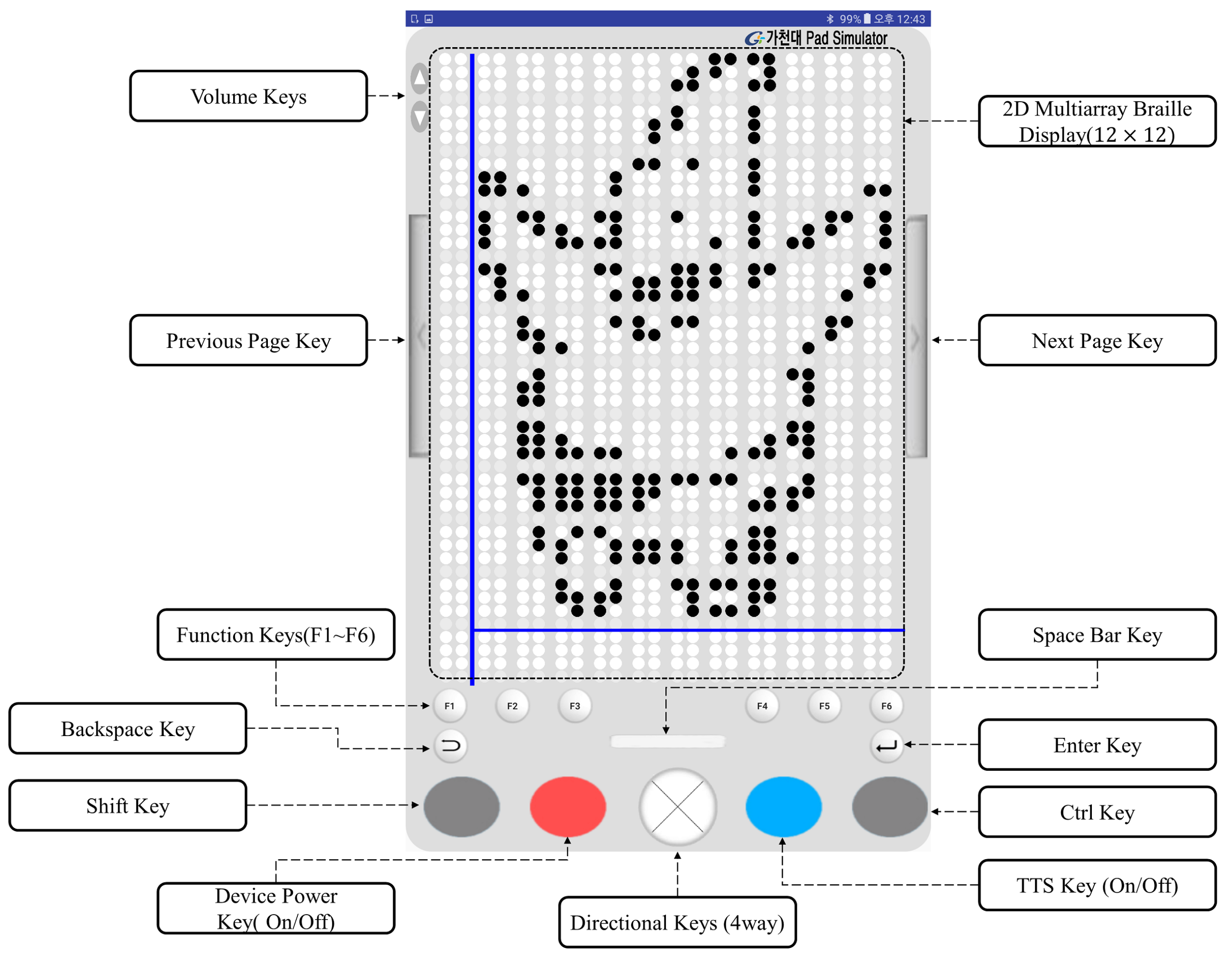

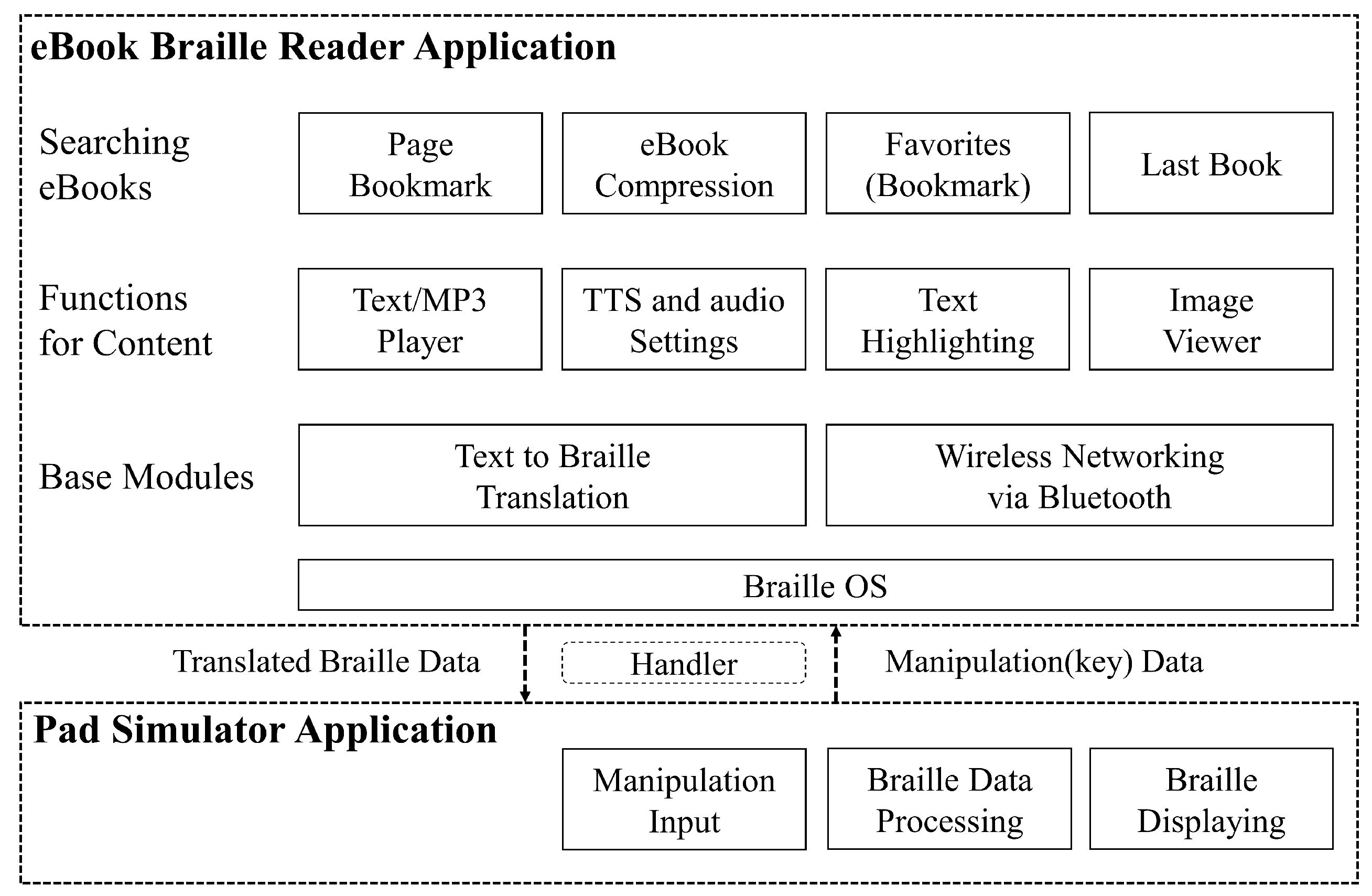

4.1. Design of 2D Multiarray Braille Display and Architecture of Its eBook Reader Application

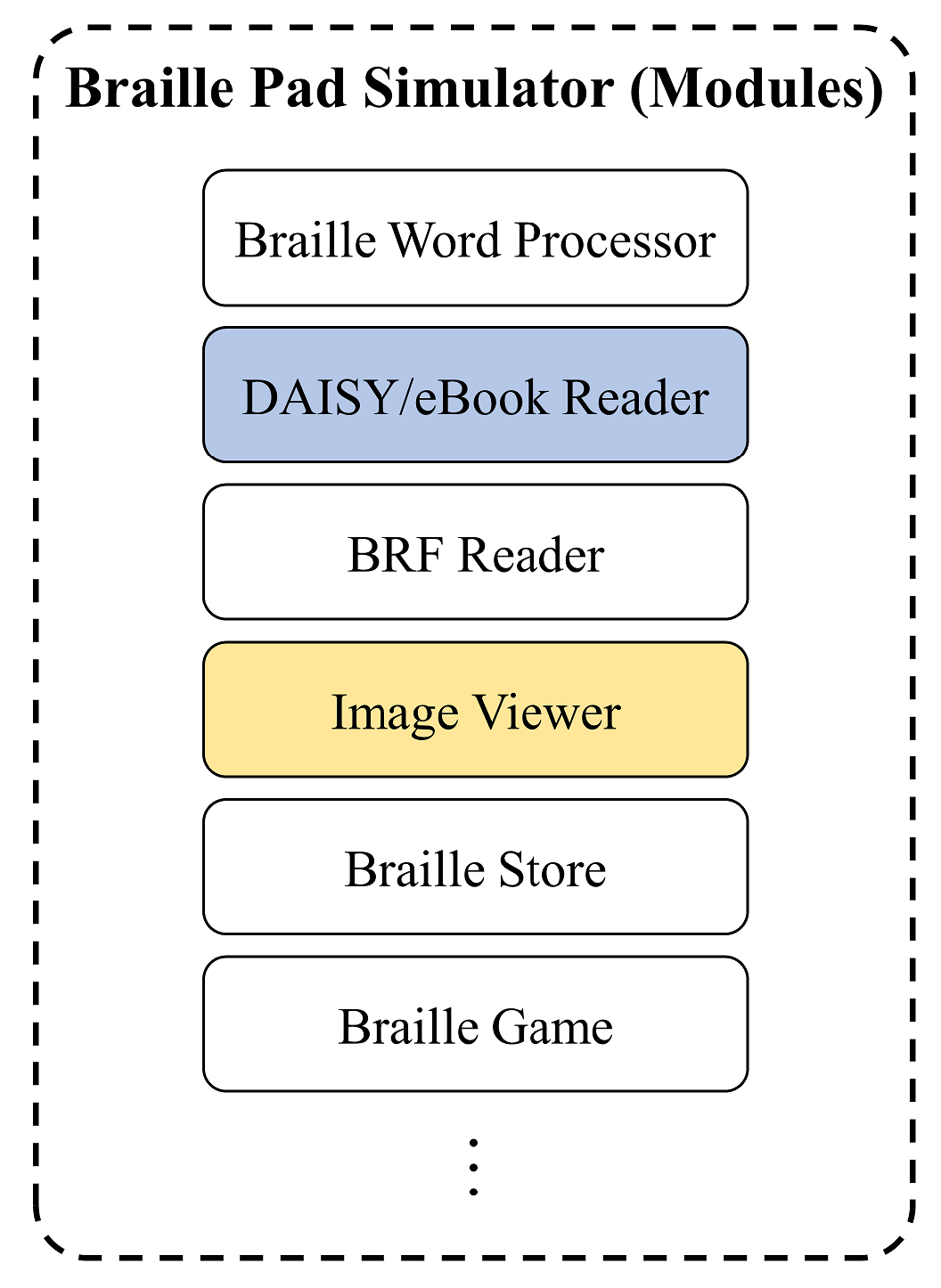

4.2. Modules and Application on Braille OS

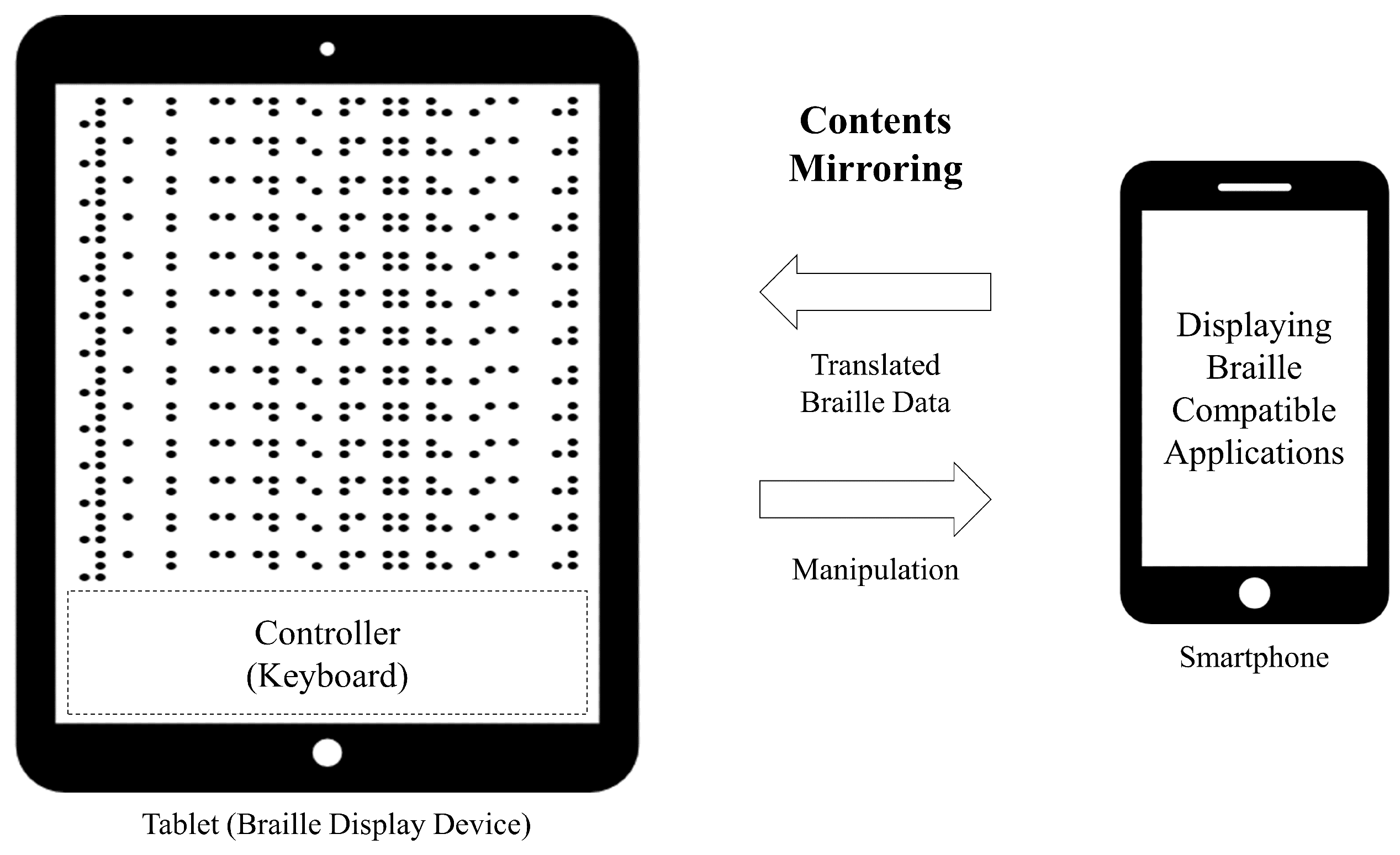

4.3. Wireless Mirroring between Braille Pad and Smartphone

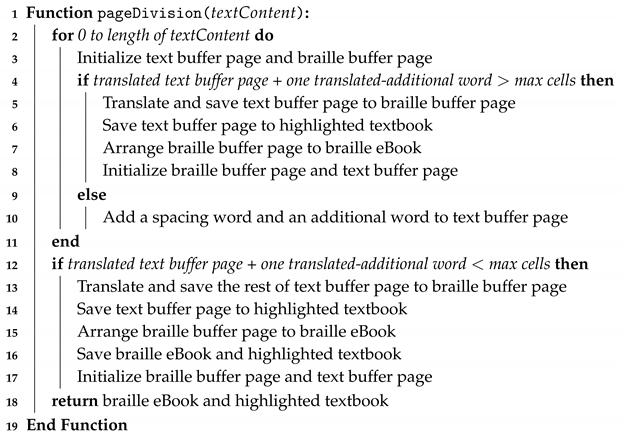

4.4. Extraction and Translation Method for 2D Multiarray Braille Display

| Algorithm 1: Text to braille conversion and text/braille page division for text content of an eBook |
|---|
| Input: Text content of an eBook |
| Output: Braille eBook and highlighted textbook |
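The body of Algorithm 1 is not reproduced here, so the following is only a minimal sketch of the general idea its title names: converting text to braille cells and dividing text and braille into matching pages. It assumes uncontracted English letters mapped one-to-one onto Unicode braille patterns and a hypothetical display of `rows` x `cols` cells. The names `to_braille` and `paginate` are illustrative, and a real translator (contractions, numbers, capital indicators, other languages) would not be one cell per character.

```python
# Dot numbers (1-6) for the 26 English letters in uncontracted braille.
LETTER_DOTS = {
    "a": "1", "b": "12", "c": "14", "d": "145", "e": "15",
    "f": "124", "g": "1245", "h": "125", "i": "24", "j": "245",
    "k": "13", "l": "123", "m": "134", "n": "1345", "o": "135",
    "p": "1234", "q": "12345", "r": "1235", "s": "234", "t": "2345",
    "u": "136", "v": "1236", "w": "2456", "x": "1346", "y": "13456",
    "z": "1356",
}
BLANK_CELL = chr(0x2800)


def to_braille(text):
    """Map each character to one Unicode braille cell (letters only)."""
    cells = []
    for ch in text.lower():
        dots = LETTER_DOTS.get(ch)
        if dots is None:
            cells.append(BLANK_CELL)  # spaces and unsupported characters
        else:
            # Dot n sets bit n-1 of the offset from U+2800.
            offset = sum(1 << (int(d) - 1) for d in dots)
            cells.append(chr(0x2800 + offset))
    return "".join(cells)


def paginate(text, cols, rows):
    """Split text into pages of rows x cols cells, paired with their braille."""
    page_size = cols * rows
    pages = []
    for start in range(0, len(text), page_size):
        chunk = text[start:start + page_size]
        braille = to_braille(chunk)
        lines = [braille[i:i + cols] for i in range(0, len(braille), cols)]
        pages.append({"text": chunk, "braille_lines": lines})
    return pages


for page in paginate("hello world", cols=4, rows=2):
    print(page["text"], page["braille_lines"])
```

Keeping the source text next to each braille page is what would let a companion view highlight the text currently shown on the braille display.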

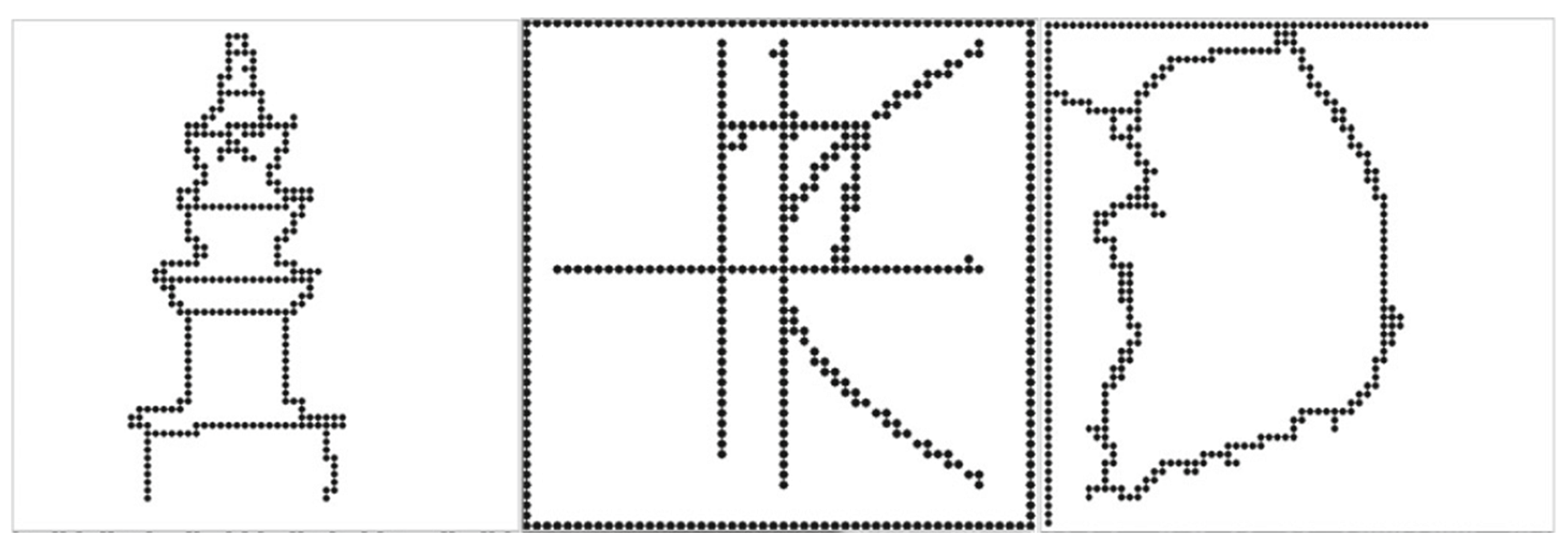

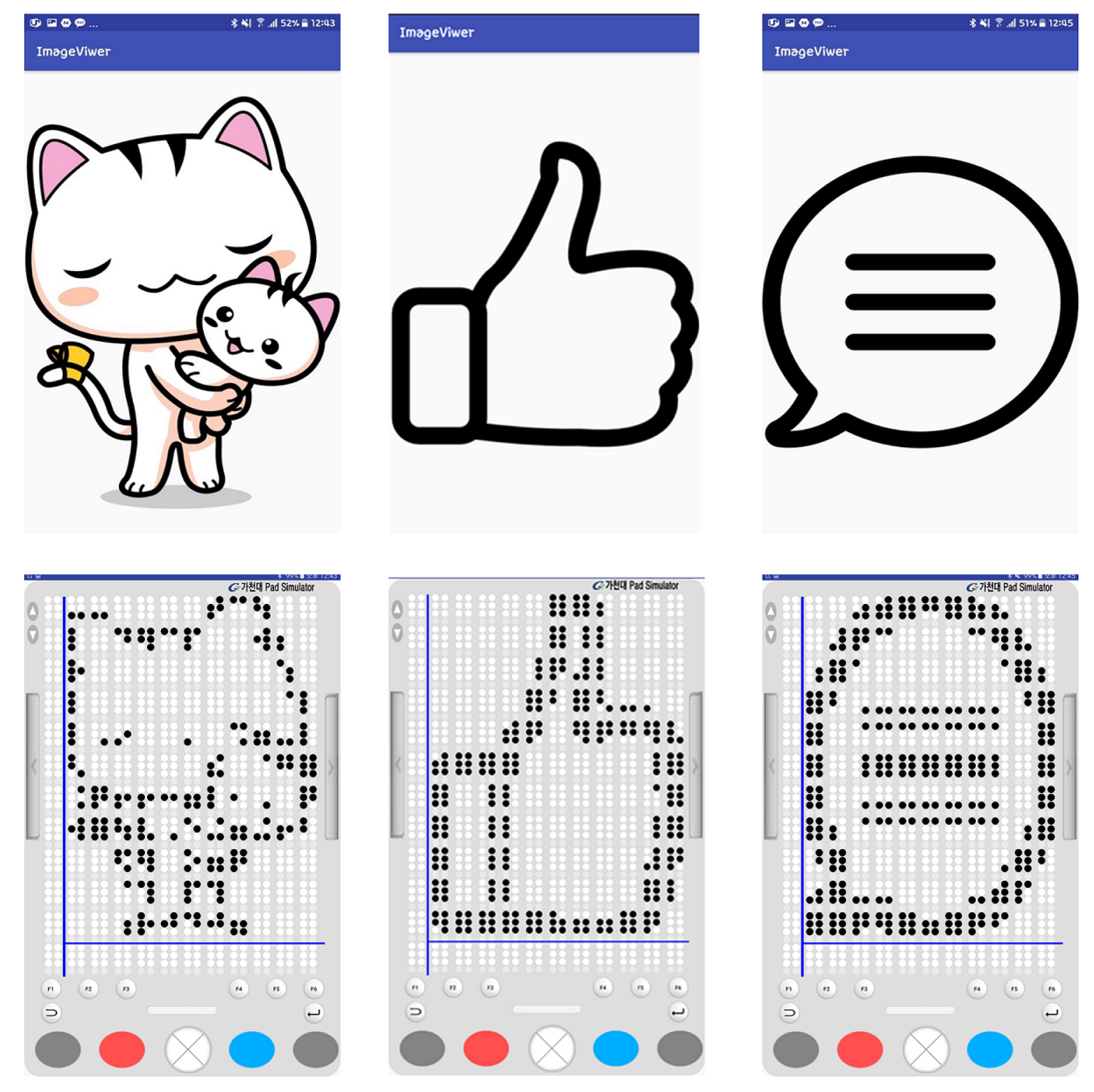

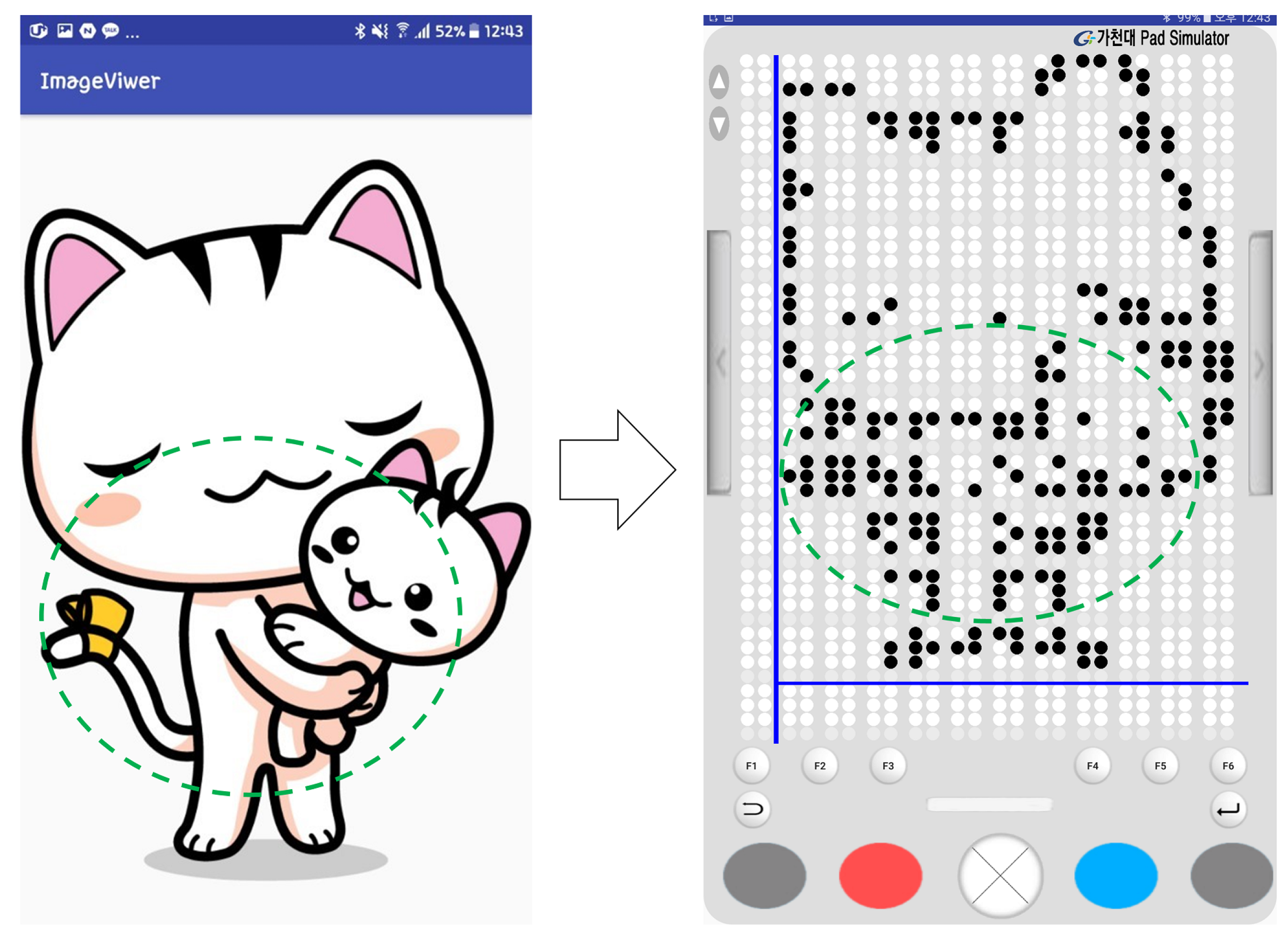

4.5. Braille Image Translation Based on 2D Multiarray Braille Display

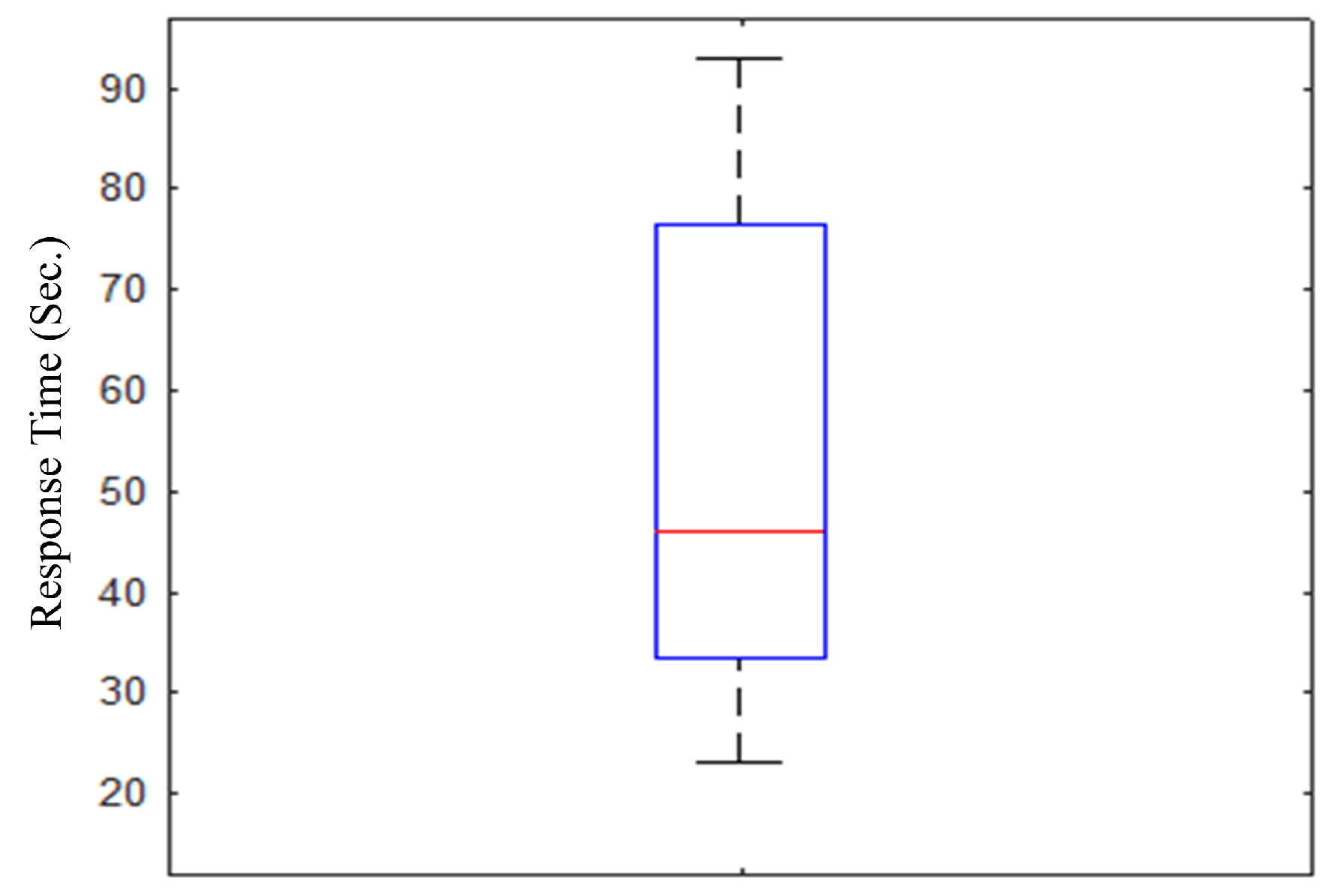

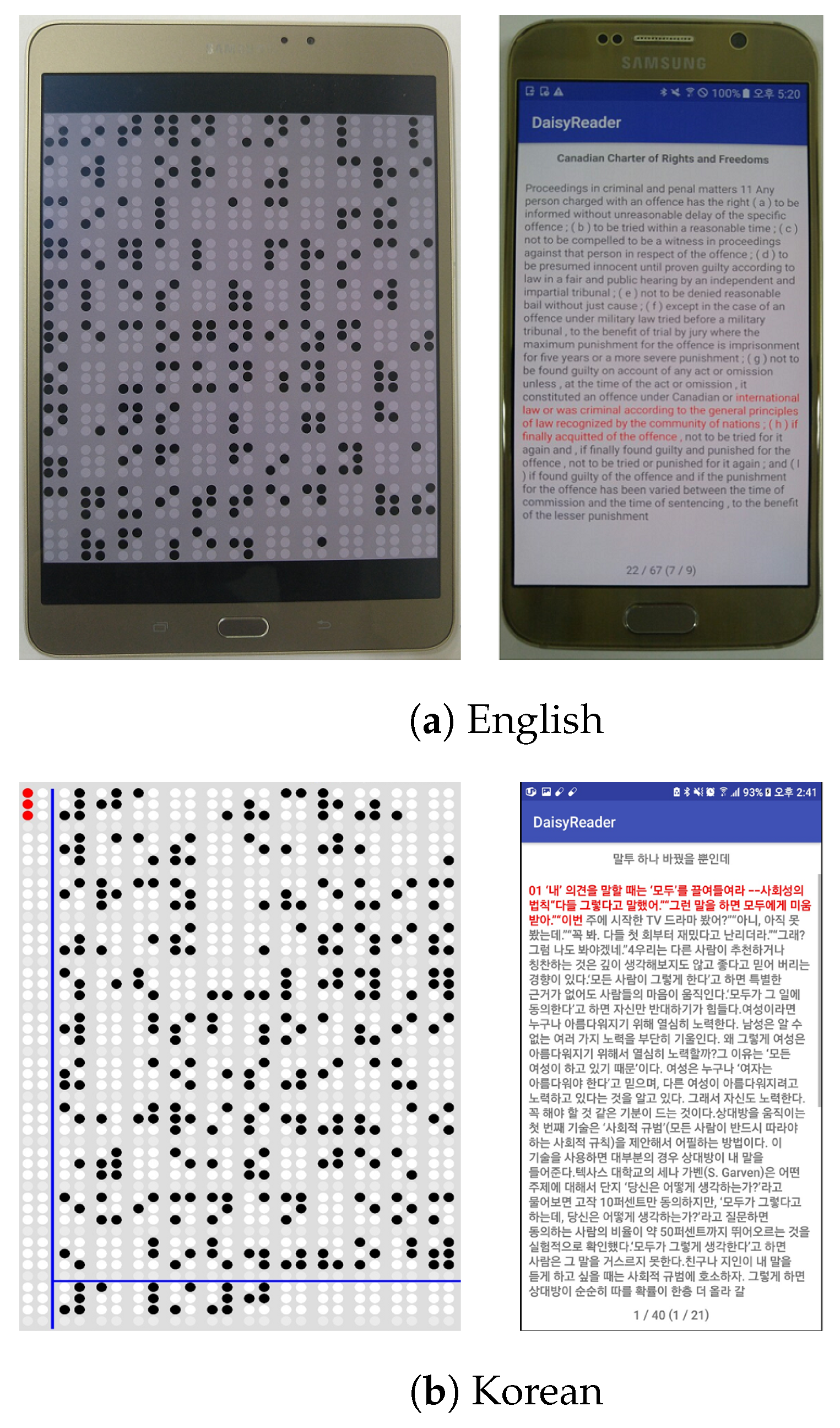

5. Implementation and Results

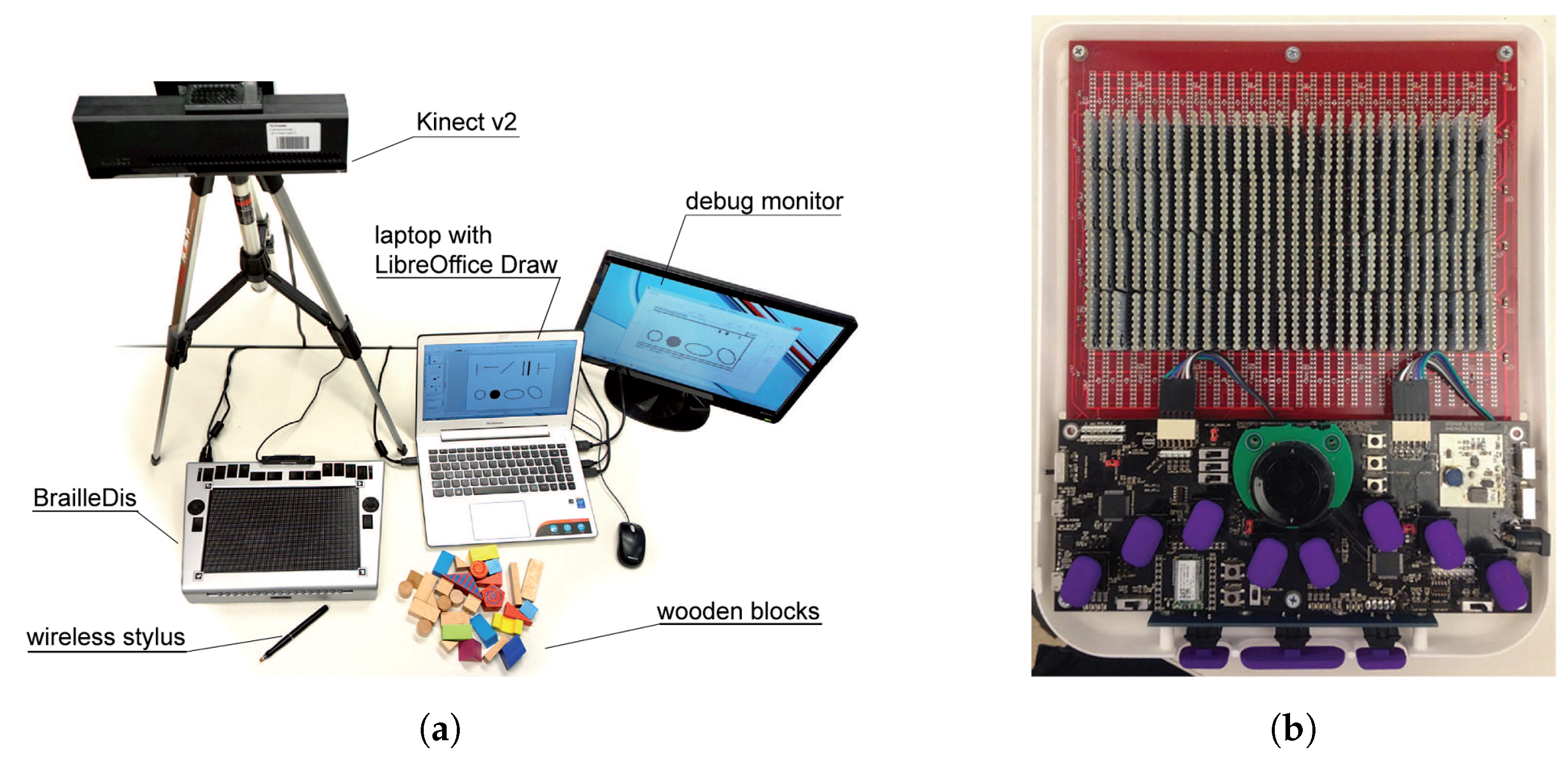

5.1. Haptic Telepresence System

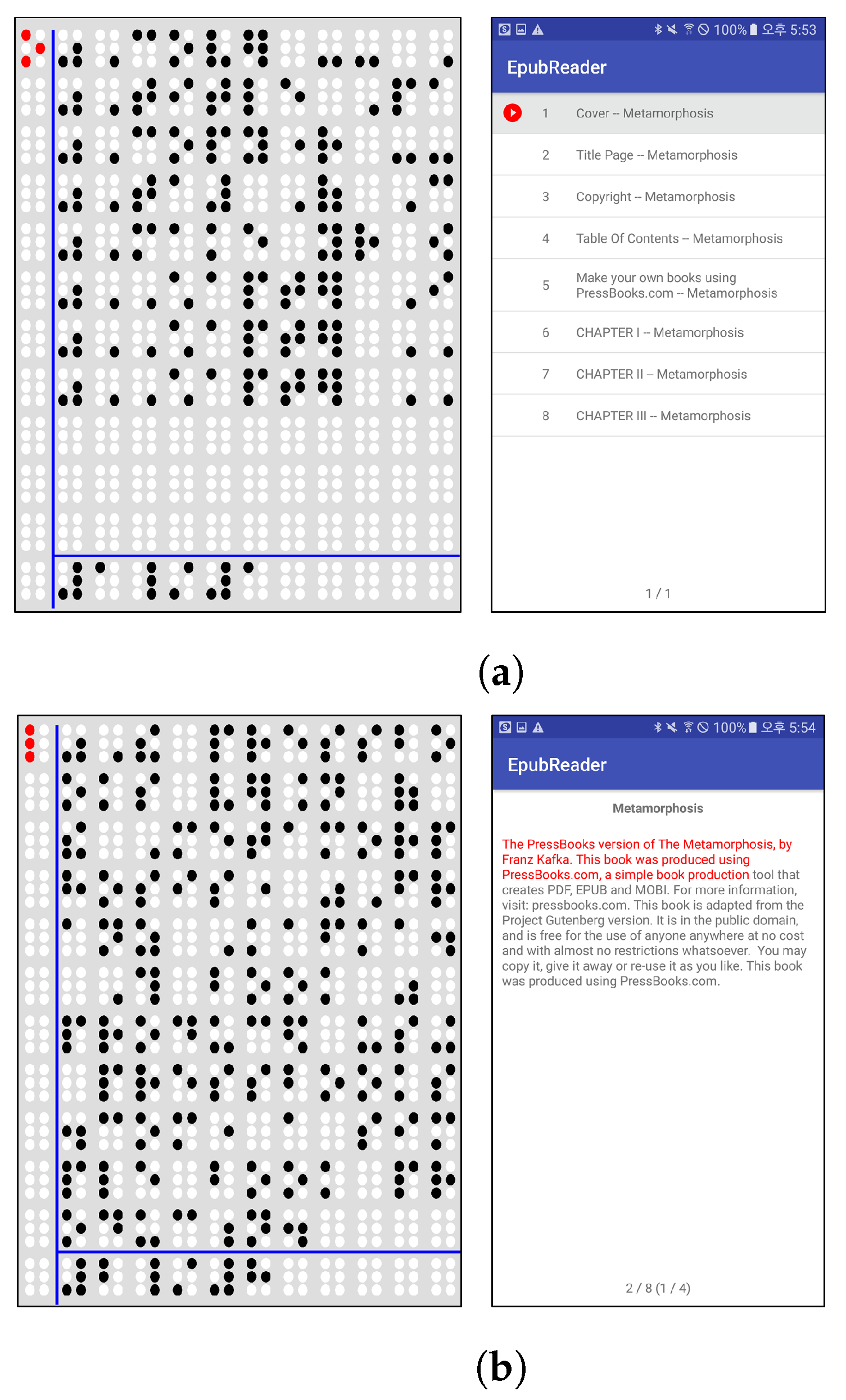

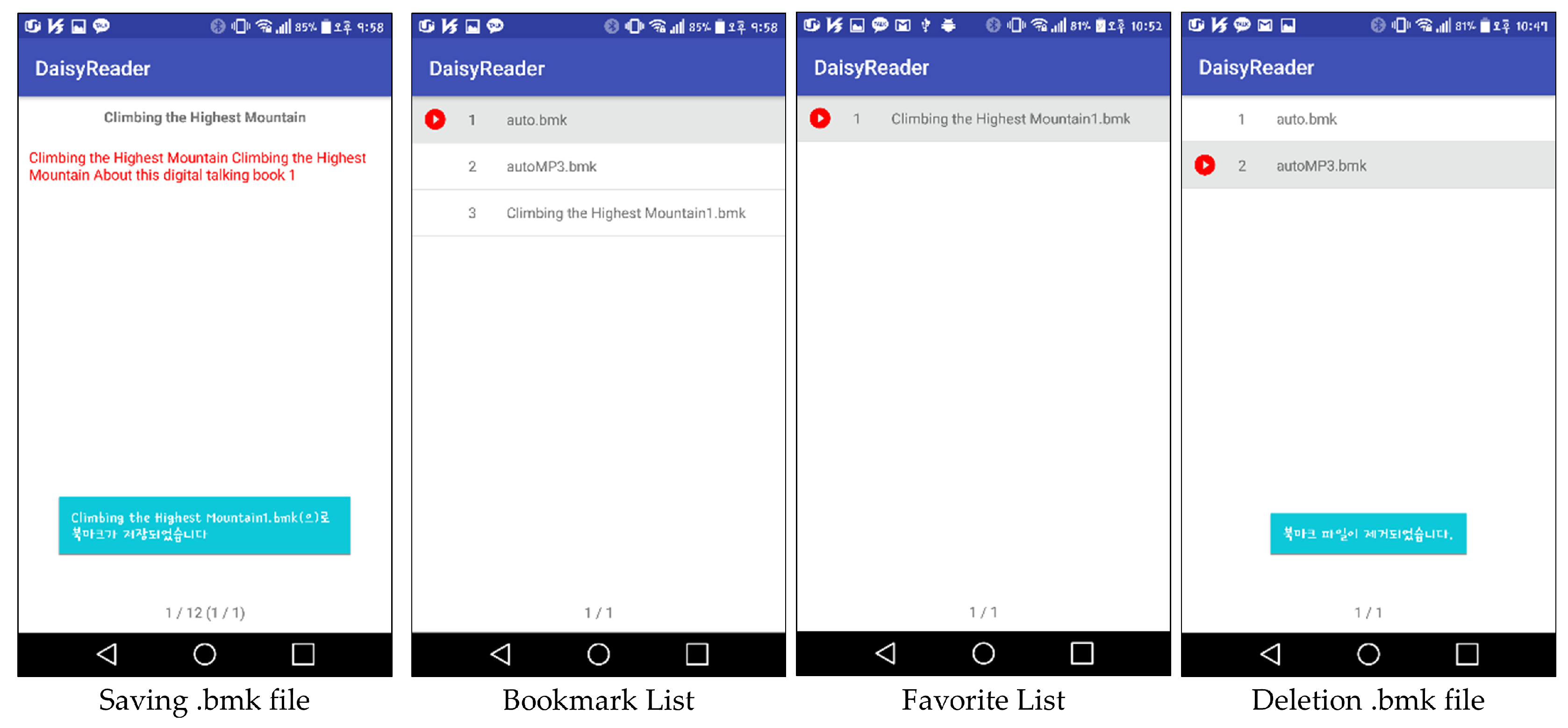

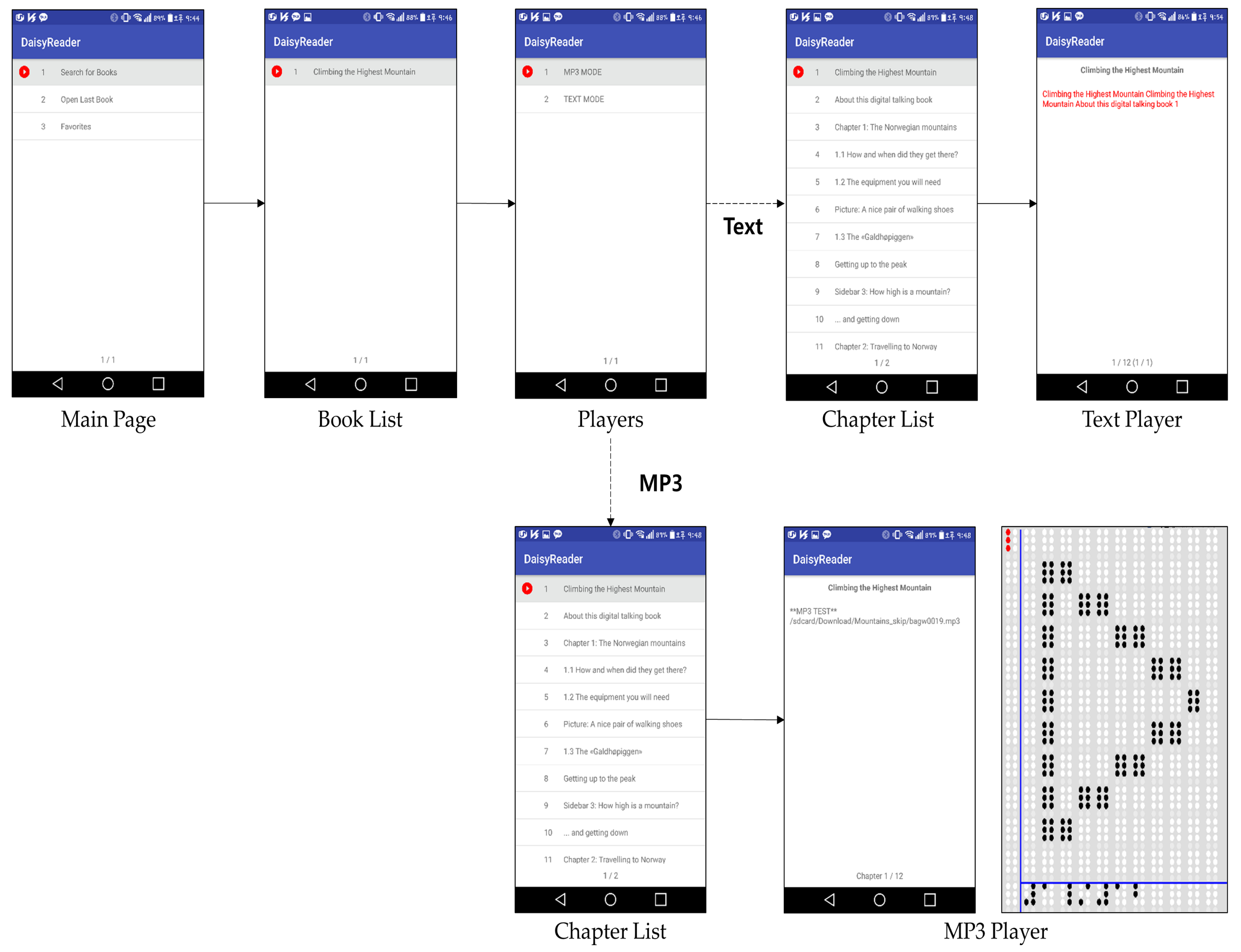

5.2. Braille eBook Reader Application Based on 2D Multiarray Braille Display

6. Limitation and Discussion

6.1. Haptic Telepresence

6.2. Braille eBook Reader Application

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| DAISY | Digital accessible information system |
| HEVC | High efficiency video coding |
| SHVC | Scalable high efficiency video coding |
| LED | Light emitting diode |
| IR | Infrared |
| EPUB | Electronic publication |
| TTS | Text-to-speech |
| NCC | Navigation control center |
| NCX | Navigation control center for extensible markup language |
| XML | Extensible markup language |
| SMIL | Synchronized multimedia integration language |
| HTML | Hyper text markup language |
| SDK | Software development kit |

References

- Colby, S.L.; Ortman, J.M. Projections of the Size and Composition of the US Population: 2014 to 2060: Population Estimates and Projections; US Census Bureau: Washington, DC, USA, 2017.

- He, W.; Larsen, L.J. Older Americans with a Disability, 2008–2012; US Census Bureau: Washington, DC, USA, 2014.

- Bourne, R.R.; Flaxman, S.R.; Braithwaite, T.; Cicinelli, M.V.; Das, A.; Jonas, J.B.; Keeffe, J.; Kempen, J.H.; Leasher, J.; Limburg, H.; et al. Magnitude, temporal trends, and projections of the global prevalence of blindness and distance and near vision impairment: A systematic review and meta-analysis. Lancet Glob. Health 2017, 5, e888–e897.

- Elmannai, W.; Elleithy, K. Sensor-based assistive devices for visually-impaired people: Current status, challenges, and future directions. Sensors 2017, 17, 565.

- Bolgiano, D.; Meeks, E. A laser cane for the blind. IEEE J. Quantum Electron. 1967, 3, 268.

- Borenstein, J.; Ulrich, I. The guidecane-a computerized travel aid for the active guidance of blind pedestrians. In Proceedings of the ICRA, Albuquerque, NM, USA, 21–27 April 1997; pp. 1283–1288.

- Yi, Y.; Dong, L. A design of blind-guide crutch based on multi-sensors. In Proceedings of the 2015 12th International Conference on Fuzzy Systems and Knowledge Discovery (FSKD), Zhangjiajie, China, 15–17 August 2015; pp. 2288–2292.

- Wahab, M.H.A.; Talib, A.A.; Kadir, H.A.; Johari, A.; Noraziah, A.; Sidek, R.M.; Mutalib, A.A. Smart cane: Assistive cane for visually-impaired people. arXiv 2011, arXiv:1110.5156.

- Park, C.H.; Howard, A.M. Robotics-based telepresence using multi-modal interaction for individuals with visual impairments. Int. J. Adapt. Control Signal Process. 2014, 28, 1514–1532.

- Park, C.H.; Ryu, E.S.; Howard, A.M. Telerobotic haptic exploration in art galleries and museums for individuals with visual impairments. IEEE Trans. Haptics 2015, 8, 327–338.

- Park, C.H.; Howard, A.M. Towards real-time haptic exploration using a mobile robot as mediator. In Proceedings of the 2010 IEEE Haptics Symposium, Waltham, MA, USA, 25–26 March 2010; pp. 289–292.

- Park, C.H.; Howard, A.M. Real world haptic exploration for telepresence of the visually impaired. In Proceedings of the Seventh Annual ACM/IEEE International Conference on Human-Robot Interaction, Portland, OR, USA, 2–5 March 2012; pp. 65–72.

- Park, C.H.; Howard, A.M. Real-time haptic rendering and haptic telepresence robotic system for the visually impaired. In Proceedings of the World Haptics Conference (WHC), Daejeon, Korea, 14–17 April 2013; pp. 229–234.

- Hicks, S.L.; Wilson, I.; Muhammed, L.; Worsfold, J.; Downes, S.M.; Kennard, C. A depth-based head-mounted visual display to aid navigation in partially sighted individuals. PLoS ONE 2013, 8, e67695.

- Hong, D.; Kimmel, S.; Boehling, R.; Camoriano, N.; Cardwell, W.; Jannaman, G.; Purcell, A.; Ross, D.; Russel, E. Development of a semi-autonomous vehicle operable by the visually-impaired. In Proceedings of the IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems, Daegu, Korea, 16–18 November 2008; pp. 539–544.

- Kinateder, M.; Gualtieri, J.; Dunn, M.J.; Jarosz, W.; Yang, X.D.; Cooper, E.A. Using an Augmented Reality Device as a Distance-based Vision Aid—Promise and Limitations. Optom. Vis. Sci. 2018, 95, 727.

- Oliveira, J.; Guerreiro, T.; Nicolau, H.; Jorge, J.; Gonçalves, D. BrailleType: Unleashing braille over touch screen mobile phones. In Proceedings of the IFIP Conference on Human-Computer Interaction, Lisbon, Portugal, 5–9 September 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 100–107.

- Velázquez, R.; Preza, E.; Hernández, H. Making eBooks accessible to blind Braille readers. In Proceedings of the IEEE International Workshop on Haptic Audio visual Environments and Games, Ottawa, ON, Canada, 18–19 October 2008; pp. 25–29.

- Goncu, C.; Marriott, K. Creating ebooks with accessible graphics content. In Proceedings of the 2015 ACM Symposium on Document Engineering, Lausanne, Switzerland, 8–11 September 2015; pp. 89–92.

- Bornschein, J.; Bornschein, D.; Weber, G. Comparing computer-based drawing methods for blind people with real-time tactile feedback. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; p. 115.

- Bornschein, J.; Prescher, D.; Weber, G. Collaborative creation of digital tactile graphics. In Proceedings of the 17th International ACM SIGACCESS Conference on Computers & Accessibility, Lisbon, Portugal, 26–28 October 2015; pp. 117–126.

- Byrd, G. Tactile Digital Braille Display. Computer 2016, 49, 88–90.

- Bae, K.J. A Study on the DAISY Service Interface for the Print-Disabled. J. Korean Biblia Soc. Libr. Inf. Sci. 2011, 22, 173–188.

- Jihyeon, W.; Hyerina, L.; Tae-Eun, K.; Jongwoo, L. An Implementation of an Android Mobile E-book Player for Disabled People; Korea Multimedia Society: Busan, Korea, 2010; pp. 361–364.

- Kim, T.E.; Lee, J.; Lim, S.B. A Design and Implementation of DAISY3 compliant Mobile E-book Viewer. J. Digit. Contents Soc. 2011, 12, 291–298.

- Harty, J.; LogiGearTeam; Holdt, H.C.; Coppola, A. Android-Daisy-Epub-Reader. 2013. Available online: https://code.google.com/archive/p/android-daisy-epub-reader (accessed on 6 October 2018).

- Mahule, A. Daisy3-Reader. 2011. Available online: https://github.com/amahule/Daisy3-Reader (accessed on 6 October 2018).

- BLITAB Technology. Blitab. 2019. Available online: http://blitab.com/ (accessed on 10 January 2019).

- HIMS International. BrailleSense Polaris and U2. 2018. Available online: http://himsintl.com/blindness/ (accessed on 18 October 2018).

- Humanware Store. BrailleNote Touch 32 Braille Notetaker. 2017. Available online: https://store.humanware.com/asia/braillenote-touch-32.html (accessed on 10 January 2019).

- Humanware. Brailliant B 80 Braille Display (New Generation). 2019. Available online: https://store.humanware.com/asia/brailliant-b-80-new-generation.html (accessed on 8 June 2019).

- Humanware. Brailliant BI Braille Display User Guide; Humanware Inc.: Jensen Beach, FL, USA, 2011.

- Park, T.; Jung, J.; Cho, J. A method for automatically translating print books into electronic Braille books. Sci. China Inf. Sci. 2016, 59, 072101.

- Kim, S.; Roh, H.J.; Ryu, Y.; Ryu, E.S. Daisy/EPUB-based Braille Conversion Software Development for 2D Braille Information Terminal. In Proceedings of the 2017 Korea Computer Congress of the Korean Institute of Information Scientists and Engineers, Seoul, Korea, 18–20 June 2017; pp. 1975–1977.

- Park, E.S.; Kim, S.D.; Ryu, Y.; Roh, H.J.; Koo, J.; Ryu, E.S. Design and Implementation of Daisy 3 Viewer for 2D Braille Device. In Proceedings of the 2018 Winter Conference of the Korean Institute of Communications and Information Sciences, Seoul, Korea, 17–19 January 2018; pp. 826–827.

- Ryu, Y.; Ryu, E.S. Haptic Telepresence System for Individuals with Visual Impairments. Sens. Mater. 2017, 29, 1061–1067.

- Kim, S.; Park, E.S.; Ryu, E.S. Multimedia Vision for the Visually Impaired through 2D Multiarray Braille Display. Appl. Sci. 2019, 9, 878.

- Microsoft. Kinect for Windows. 2018. Available online: https://developer.microsoft.com/en-us/windows/kinect (accessed on 3 October 2018).

- Microsoft. Setting up Kinect for Windows. 2018. Available online: https://support.xbox.com/en-US/xboxon-windows/accessories/kinect-for-windows-setup (accessed on 4 October 2018).

- Kaiming, H.; Jian, S.; Xiaoou, T. Guided Image Filtering. In Proceedings of the Computer Vision—ECCV 2010; Daniilidis, K., Maragos, P., Paragios, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 1–14.

- Atılım Çetin. Guided Filter for OpenCV. 2014. Available online: https://github.com/atilimcetin/guidedfilter (accessed on 25 May 2019).

- CHAI3D. CHAI3D: CHAI3D Documentation. 2018. Available online: http://www.chai3d.org/download/doc/html/wrapper-overview.html (accessed on 5 October 2018).

- 3D Systems, Inc. OpenHaptics: Geomagic® OpenHaptics® Toolkit. 2018. Available online: https://www.3dsystems.com/haptics-devices/openhaptics?utm_source=geomagic.com&utm_medium=301 (accessed on 5 October 2018).

- Sullivan, G.J.; Ohm, J.R.; Han, W.J.; Wiegand, T. Overview of the high efficiency video coding (HEVC) standard. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1649–1668.

- Roh, H.J.; Han, S.W.; Ryu, E.S. Prediction complexity-based HEVC parallel processing for asymmetric multicores. Multimed. Tools Appl. 2017, 76, 25271–25284.

- Ryu, Y.; Ryu, E.S. Video on Mobile CPU: UHD Video Parallel Decoding for Asymmetric Multicores. In Proceedings of the 8th ACM on Multimedia Systems Conference, Taipei, Taiwan, 20–23 June 2017; pp. 229–232.

- Sullivan, G.J.; Boyce, J.M.; Chen, Y.; Ohm, J.R.; Segall, C.A.; Vetro, A. Standardized extensions of high efficiency video coding (HEVC). IEEE J. Sel. Top. Signal Process. 2013, 7, 1001–1016.

- Zhang, Q.; Chen, M.; Huang, X.; Li, N.; Gan, Y. Low-complexity depth map compression in HEVC-based 3D video coding. EURASIP J. Image Video Process. 2015, 2015, 2.

- Fraunhofer.; HHI. 3D HEVC Extension. 2019. Available online: https://www.hhi.fraunhofer.de/en/departments/vca/research-groups/image-video-coding/research-topics/3d-hevc-extension.html (accessed on 16 October 2019).

- Shokrollahi, A. Raptor codes. IEEE/ACM Trans. Netw. (TON) 2006, 14, 2551–2567.

- Ryu, E.S.; Jayant, N. Home gateway for three-screen TV using H.264 SVC and raptor FEC. IEEE Trans. Consum. Electron. 2011, 57.

- Canadian Assistive Technologies Ltd. What to Know before You Buy a Braille Display. 2018. Available online: https://canasstech.com/blogs/news/what-to-know-before-you-buy-a-braille-display (accessed on 10 January 2019).

- Consortium, T.D. Daisy Consortium Homepage. 2018. Available online: http://www.daisy.org/home (accessed on 6 October 2018).

- IDPF (International Digital Publishing Forum). EPUB Official Homepage. 2018. Available online: http://idpf.org/epub (accessed on 6 October 2018).

- Google Developers. Documentation for Android Developers—Message. 2018. Available online: https://developer.android.com/reference/android/os/Message (accessed on 7 October 2018).

- Google Developers. Documentation for Android Developers—Activity. 2018. Available online: https://developer.android.com/reference/android/app/Activity (accessed on 7 October 2018).

- Bray, T.; Paoli, J.; Sperberg-McQueen, C.M.; Maler, E.; Yergeau, F. Extensible Markup Language (XML) 1.0. Available online: http://ctt.sbras.ru/docs/rfc/rec-xml.htm (accessed on 3 December 2019).

- Daisy Consortium. DAISY 2.02 Specification. 2018. Available online: http://www.daisy.org/z3986/specifications/daisy_202.html (accessed on 7 October 2018).

- Daisy Consortium. Part I: Introduction to Structured Markup—Daisy 3 Structure Guidelines. 2018. Available online: http://www.daisy.org/z3986/structure/SG-DAISY3/part1.html (accessed on 7 October 2018).

- Paul Siegmann. EPUBLIB—A Java EPUB Library. 2018. Available online: http://www.siegmann.nl/epublib (accessed on 18 October 2018).

- Siegmann, P. Epublib for Android OS. 2018. Available online: http://www.siegmann.nl/epublib/android (accessed on 6 October 2018).

- Hedley, J. Jsoup: Java HTML Parser. 2018. Available online: https://jsoup.org/ (accessed on 7 October 2018).

- Jung, J.; Kim, H.G.; Cho, J.S. Design and implementation of a real-time education assistive technology system based on haptic display to improve education environment of total blindness people. J. Korea Contents Assoc. 2011, 11, 94–102.

- Jung, J.; Hongchan, Y.; Hyelim, L.; Jinsoo, C. Graphic haptic electronic board-based education assistive technology system for blind people. In Proceedings of the 2015 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 9–12 January 2015; pp. 364–365.

- Shi, J.; Tomasi, C. Good Features to Track; Technical Report; Cornell University: Ithaca, NY, USA, 1993.

- Daisy Consortium. Daisy Sample Books. 2018. Available online: http://www.daisy.org/sample-content (accessed on 10 October 2018).

- PRESSBOOKS. EPUB Sample Books. 2018. Available online: https://pressbooks.com/sample-books/ (accessed on 8 October 2018).

- Jeong, I.; Ahn, E.; Seo, Y.; Lee, S.; Jung, J.; Cho, J. Design of Electronic Braille Learning Tool System for low vision people and Blind People. In Proceedings of the 2018 Summer Conference of the Korean Institute of Information Scientists and Engineers, Seoul, Korea, 20–22 June 2018; pp. 1502–1503.

- Seo, Y.S.; Joo, H.J.; Jung, J.I.; Cho, J.S. Implementation of Improved Functional Router Using Embedded Linux System. In Proceedings of the 2016 IEIE Summer Conference, Seoul, Korea, 22–24 June 2016; pp. 831–832.

- Park, J.; Sung, K.K.; Cho, J.; Choi, J. Layout Design and Implementation for Information Output of Mobile Devices based on Multi-array Braille Terminal. In Proceedings of the 2016 Winter Korean Institute of Information Scientists and Engineers, Seoul, Korea, 21–23 December 2016; pp. 66–68.

- Goyang-City. Cat character illustration by Goyang City. 2014. Available online: http://www.goyang.go.kr/www/user/bbs/BD_selectBbsList.do?q_bbsCode=1054 (accessed on 16 October 2019).

- Repo, P. Like PNG Icon. 2019. Available online: https://www.pngrepo.com/svg/111221/like (accessed on 16 October 2019).

- FLATICON. Chat Icon. 2019. Available online: https://www.flaticon.com/free-icon/chat_126500#term=chat&page=1&position=9 (accessed on 16 October 2019).

- NV Access. About NVDA. 2019. Available online: https://www.nvaccess.org/ (accessed on 12 March 2019).

| Sex and Age (Years) | World Population (Millions) | Blind: Prevalence (%) | Blind: Number (Millions) | Moderate and Severe Vision Impairment: Prevalence (%) | Moderate and Severe Vision Impairment: Number (Millions) | Mild Vision Impairment: Prevalence (%) | Mild Vision Impairment: Number (Millions) |
|---|---|---|---|---|---|---|---|
| Men | | | | | | | |
| Over 70 | 169 | 4.55 | 7.72 | 20.33 | 34.53 | 14.05 | 23.85 |
| 50–69 | 613 | 0.93 | 5.69 | 6.78 | 41.57 | 6.46 | 39.65 |
| 0–49 | 2920 | 0.08 | 2.46 | 0.74 | 21.66 | 0.81 | 23.61 |
| Women | | | | | | | |
| Over 70 | 222 | 4.97 | 11.06 | 21.87 | 48.71 | 14.57 | 32.45 |
| 50–69 | 634 | 1.03 | 6.52 | 7.48 | 47.46 | 6.99 | 44.35 |
| 0–49 | 2780 | 0.09 | 2.56 | 0.82 | 22.68 | 0.89 | 24.64 |
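The "Number" columns in the table above relate to the "Prevalence" columns through a simple product with the population. The short sketch below shows that arithmetic; the function name `affected_millions` is introduced here for illustration only. Recomputing from the rounded table values gives numbers close to, but not always identical with, the published ones (for men over 70, roughly 7.69 against the reported 7.72), presumably because the published figures were derived from unrounded inputs.

```python
def affected_millions(population_millions, prevalence_percent):
    """Estimate the number of affected people (millions) from prevalence."""
    return population_millions * prevalence_percent / 100


# Men over 70: 169 million people, 4.55% blind (values from the table).
print(round(affected_millions(169, 4.55), 2))
# Men aged 50-69: 613 million people, 0.93% blind.
print(round(affected_millions(613, 0.93), 2))
```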

| Product | Special Feature | Media Support | Braille Cells | Cost (USD) | OS | Release (Year) |
|---|---|---|---|---|---|---|
| Blitab [28] | Displaying braille image | Image and audio | Unknown | Unknown | Android | Unknown |
| BrailleSense Polaris [29] | Office and school-friendly | Audio-only | 32 | 5795.00 | Android | 2017 |
| BrailleSense U2 [29] | Office and school-friendly | Audio-only | 32 | 5595.00 | Windows CE 6.0 | 2012 |
| BrailleNote Touch 32 braille Notetaker [30] | Smart touchscreen keyboard | Audio-only | 40 | 5495.00 | Android | 2016 |
| Brailliant B 80 braille display (new gen.) [31] | Compatibility with other devices | Text-only | 80 | 7985.00 | Mac/iOS/Windows | 2011 |

| Standard | DAISY v2.02 | DAISY v3.0 |
|---|---|---|
| Document | Based on HTML | Based on XML |
| Configuration | Media files, SMIL, and NCC | Media files, SMIL, NCX, XML, and OPF |
| Reference file | NCC file | OPF file |
| Metadata Tag | `<title>`, `<meta>` | `<metadata>` |
| Context Tag | `<h>` | `<level1>` |
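The reference-file row of the comparison above suggests a simple way for a reader application to tell the two DAISY versions apart before parsing. The sketch below is an assumption-laden illustration, not the authors' code: the function name `guess_daisy_version` is hypothetical, it relies on the DAISY 2.02 convention that the NCC file is named `ncc.html`, and it treats the presence of both an OPF and an NCX file as a sign of DAISY 3. EPUB 2 packages also contain OPF and NCX files, so a real reader would need further checks.

```python
from pathlib import PurePosixPath


def guess_daisy_version(filenames):
    """Guess the DAISY version of a book from its file list.

    Assumption: a DAISY 2.02 book is identified by its NCC file
    (conventionally named ncc.html), and a DAISY 3 book by the presence
    of both an OPF package file and an NCX navigation file.
    Returns "2.02", "3.0", or None when neither pattern matches.
    """
    names = [PurePosixPath(f).name.lower() for f in filenames]
    suffixes = {PurePosixPath(n).suffix for n in names}
    if "ncc.html" in names:
        return "2.02"
    if ".opf" in suffixes and ".ncx" in suffixes:
        return "3.0"
    return None


# Hypothetical file lists for two books.
print(guess_daisy_version(["book/ncc.html", "book/chapter1.smil"]))
print(guess_daisy_version(["book/package.opf", "book/nav.ncx", "book/text.xml"]))
```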

| Item | Recognition Characteristics |
|---|---|
| Image details | Expressing excessive detail can cause confusion in determining the direction and intersection of image outlines. Therefore, the outline in both low- and high-complexity images should be expressed as simply as possible to increase the information recognition capabilities. |
| High-complexity image with a central object | For an image containing a primary object, the background and surrounding data should be removed and only the outline of the primary object should be provided to increase recognition capabilities. |
| High-complexity image without a central object | For an image without a primary object, such as a landscape, translating the outline does not usually enable the visually impaired to recognize the essential information. |
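The guideline that outlines should be kept as simple as possible can be illustrated with a small sketch that reduces a binary outline image to a coarse grid of raised and lowered pins. This is not the translation method used in the paper. The function name `to_pin_grid`, the block-averaging rule, and the default threshold are assumptions chosen only to show how sparse detail can be dropped when mapping an image onto a low-resolution 2D braille display.

```python
def to_pin_grid(image, pin_rows, pin_cols, threshold=0.25):
    """Reduce a binary outline image to a pin_rows x pin_cols grid of booleans.

    image: 2D list of 0/1 values where 1 marks an outline pixel.
    A pin is raised when the fraction of outline pixels in its block
    reaches the threshold; a higher threshold drops sparse detail.
    """
    height, width = len(image), len(image[0])
    grid = []
    for r in range(pin_rows):
        r0, r1 = r * height // pin_rows, (r + 1) * height // pin_rows
        row = []
        for c in range(pin_cols):
            c0, c1 = c * width // pin_cols, (c + 1) * width // pin_cols
            block = [image[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            row.append(bool(block) and sum(block) / len(block) >= threshold)
        grid.append(row)
    return grid


# A 4 x 4 image with a solid corner and one stray pixel (hypothetical data).
image = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 1],
]
for row in to_pin_grid(image, 2, 2, threshold=0.5):
    print("".join("o" if pin else "." for pin in row))
```

Raising the threshold removes the stray pixel while keeping the solid region, which mirrors the table's advice to suppress excessive detail.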

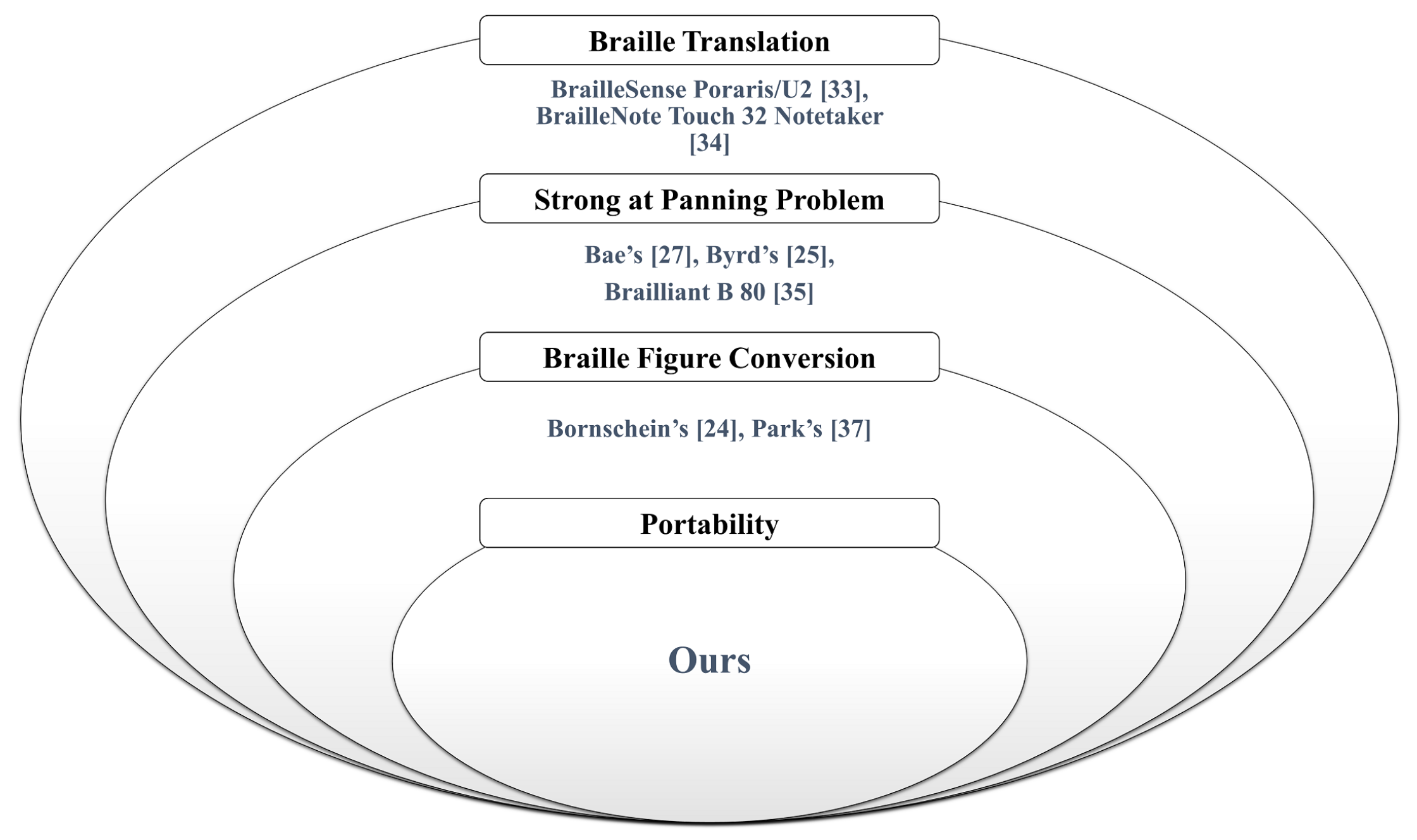

| Research | eBook Support (DAISY/EPUB) | Portability | Braille Translation | Braille Figure Conversion | Panning Problem | OS |
|---|---|---|---|---|---|---|
| Application | | | | | | |
| Bae’s [23] | DAISY | Weak | ∘ | × | Strong | Windows |
| Kim’s [24,25] | DAISY | Strong | × | × | - | Android |
| Bornschein’s [21] | Unknown | Weak | ∘ | ∘ | Strong | Windows |
| Bornschein’s [20] | Unknown | Weak | ∘ | ∘ | Strong | Windows |
| Goncu’s [19] | EPUB | Strong | × | × | - | iOS |
| Harty’s [26] | DAISY (v2.02) | Strong | × | × | - | Android |
| Mahule’s [27] | DAISY (v3.0) | Strong | × | × | - | Android |
| Braille device | | | | | | |
| Byrd’s [22] | Unknown | Strong | ∘ | × | Strong | Windows (NVDA) |
| Park’s [33] | Both | Weak | ∘ | ∘ | Strong | Windows |
| BrailleSense Polaris [29] | Both | Strong | ∘ | × | Weak | Android |
| BrailleSense U2 [29] | Both | Strong | ∘ | × | Weak | Windows CE 6.0 |
| BrailleNote Touch 32 Braille Notetaker [30] | Both | Strong | ∘ | × | Weak | Android |
| Brailliant B 80 (new gen.) [31] | Both | Strong | ∘ | × | Strong | - |
| Ours | Both | Strong | ∘ | ∘ | Strong | Android |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, S.; Ryu, Y.; Cho, J.; Ryu, E.-S. Towards Tangible Vision for the Visually Impaired through 2D Multiarray Braille Display. Sensors 2019, 19, 5319. https://doi.org/10.3390/s19235319
