1. Introduction

Advanced Driver Assistance Systems (ADAS) that focus on maintaining a safe distance from a leading vehicle, such as Forward-Collision Warning (FCW), use a combination of cameras, radar, and laser sensors to detect whether the distance between vehicles is safe and alert the driver when it is not.

The Adaptive Cruise Control (ACC) ADAS uses radar or cameras to detect traffic and automatically adjusts the vehicle’s speed to maintain a safe distance from the leading vehicle by applying longitudinal control algorithms to the throttle and/or the brake [1]. Cooperative ADAS (coADAS) that are based on Vehicle-to-Vehicle (V2V) communication, such as cooperative ACC, can measure the velocity of other vehicles very precisely and determine whether a vehicle ahead has decelerated. A penetration rate of just 40% of vehicles with V2V communication capabilities already suffices to prevent accidents in rear-end collision scenarios [2].

Although ACC systems have some restrictions, such as a limited response to stopped or slow traffic when the vehicle is driving at high speed, or the need for driver input in some traffic conditions below a certain speed threshold, vehicles with ADAS remain much more accurate than human drivers. These systems not only help to prevent accidents, but have also been shown to benefit the environment and traffic flow.

It has been argued that almost every automobile company manufactures vehicles with on-board ACC systems that enable automatic vehicle following in the longitudinal direction [3]. However, although automakers are making efforts to include ADAS features in more than just their premium cars, these systems are still offered only as optional equipment in many economy and low-priced models. For example, according to a Bosch evaluation based on the 2014 registration statistics, only 8% of the nearly three million registered passenger cars in Germany were equipped with ACC systems [4].

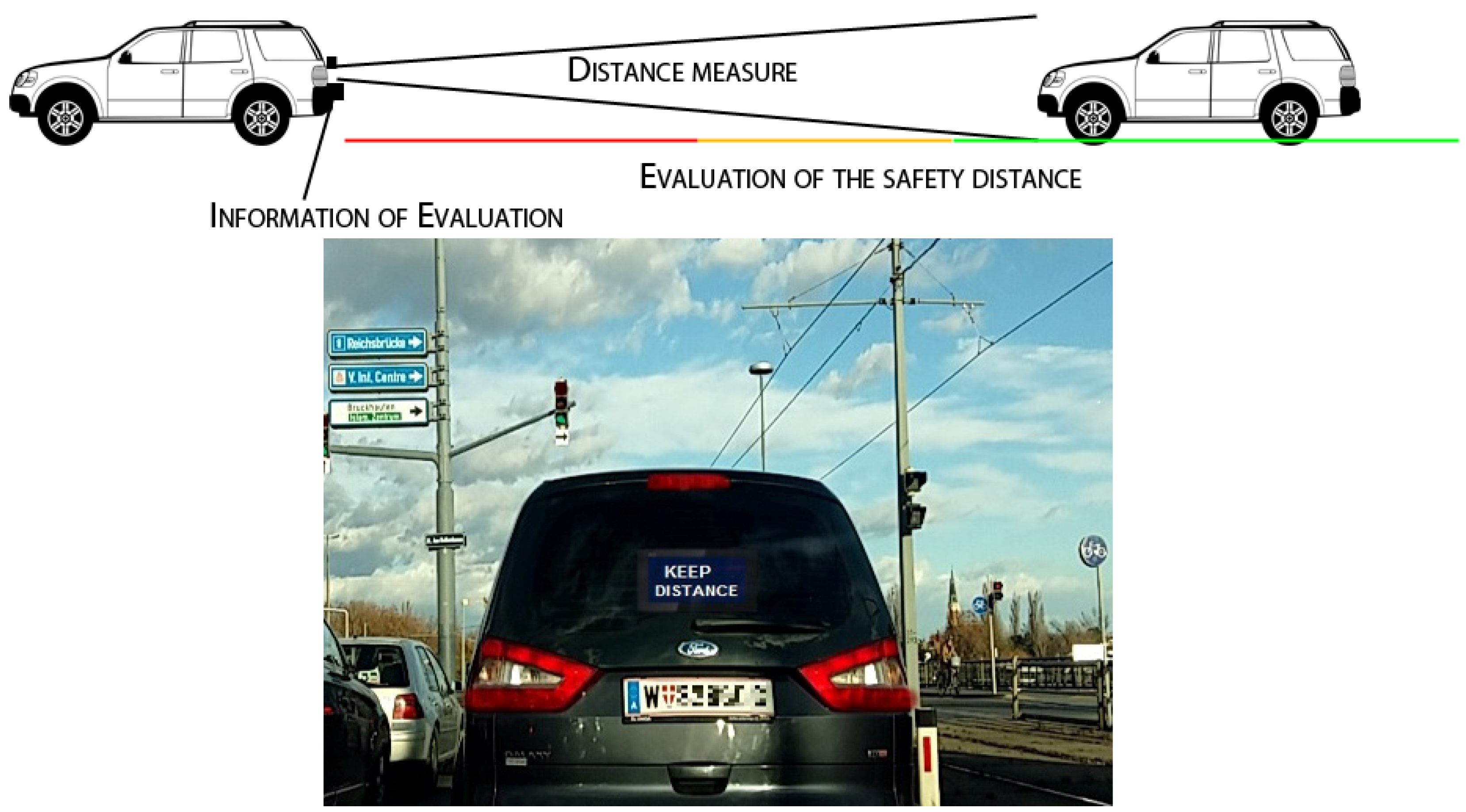

To contribute to active road safety and to prevent or mitigate road crashes, we propose in this work a low-cost FCW system that gathers information through the stereoscopic capture and processing of images by rear cameras. The cameras are located in the rear part of the leading vehicle, and the system discourages tailgating by the vehicle driving behind through a displayed message (see Figure 1).

It has been argued that the fluency of communication between users and systems is determined by the kind of information provided to the driver [5]. In line with this statement, our Tailigator system seeks to reduce road fatalities and damage by issuing real-time warnings that alert drivers when the longitudinal safety distance between two vehicles is not maintained and poses a danger to both [6,7]. This kind of visual information might improve safety by making drivers more mindful of potential tailgating behavior, as it emphasizes the interpersonal, collaborative nature of driving as a means of improving road safety, rather than relying on sensor-equipped vehicles alone.

As an extension of the work presented in [8], which provided a detailed error analysis of the distance calculation in our solution based on several measurement procedures and roadway geometry, in this work we implement a new software architecture. We rely on multithreading via third-party libraries such as Qt to investigate whether the 0.2 s time difference between the stereoscopic cameras, which was caused by limitations of the previous implementation, can be reduced by integrating real-time capabilities into the system.

Our contribution consists of a novel visualization of messages that is independent of the communication capabilities of the following vehicle and is therefore applicable, for example, in scenarios with low penetration rates of connected and autonomous vehicles [8].

2. Related Work

ADAS such as ACC adjust the vehicle’s speed based on information acquired from a forward-looking radar installed in the front part of the vehicle [9]. This approach contrasts sharply with our proposed system, which uses information collected by camera sensors mounted in the rear part of the leading vehicle. This sensor location allows us to target driving behavior in situations in which the leading vehicle is forced to increase speed and disregard speed limits as a result of aggressive tailgating by a following vehicle.

Much research on object detection for rear-end collision avoidance systems has been performed in recent years. One example combines images from several cameras to increase the driver’s visual perception by mapping two simultaneous images using parameters such as the camera shot angle, the camera focal length, and the virtual square of the area of interest [10].

Along the same line of research, a comprehensive analysis of the mathematical models used to measure distances based on stereoscopic pictures was provided in [11]. Detection of tailgating behavior has been investigated in several other works, for example through algorithm frameworks based on video data from urban road junctions [12]. Approaches to discourage tailgating include feedback systems such as those described in [13,14]. In these works, the authors assessed the impact of several headway feedback systems based on visual and auditory cues on behavior change. In addition, using individual vehicle records and multivariate linear regression to study the effect of certain messages on the amount of close and aggressive driving, the authors in [15] found significant differences in behavior depending on the messages used to characterize an unsafe distance to a leading vehicle.

In [16], the authors addressed challenges in video analysis for detecting tailgating behavior, such as background variations and pose uncertainty, by using an improved Gaussian Mixture Model (IGMM) for background modeling. They also combined a Deterministic Nonmodel-Based approach with a Gaussian Mixture Shadow Model (GMSM) to remove shadows, and finally established a tracking strategy based on computing the similarity of color histograms. A different approach was presented in [17], where optical stereoscopy was applied in a stationary environment, with a 3D camera providing the two images for processing. The authors concluded that, even though the camera did not meet the required accuracy specifications, the results of a phase-only correlation method were successful.

An implementation comparable to our approach is the rear-end collision warning system from [18], which also uses the rear-view camera of the vehicle. The vehicle’s surroundings are analyzed based on measurements of distance, speed, and acceleration, all relative to the following vehicle. A warning is issued as soon as the approaching vehicle comes within an unsafe distance. The main idea behind this system was to prevent accidents caused by inattentive drivers. Off-line video tests were successful, and the system has potential application in warning the driver of the leading vehicle.

Along the same line of research, but focusing on eliciting a behavior change from the driver of the following (rear) vehicle, the works in [6,8] used the stereoscopic capture and processing of images by rear cameras to calculate the distance between a leading and a following vehicle in real time.

If the distance between both vehicles fell below a certain threshold value, the leading vehicle displayed a message in its rear part reminding tailgaters to be rational, in case the tailgating was intentional on the part of the rear driver.

Additionally, [14] compared an in-vehicle system relying on communication between the two vehicles with the developed rear-mounted distance warning system under lab-controlled conditions, in terms of their impact on driver response. Results showed that both systems influenced drivers to keep a time gap of two seconds.

We contribute in this work to the state of the art and extend the technological approach of the last three referenced papers by integrating real-time capabilities in the system. We then assess its performance in a field test as described in the next sections.

3. System Implementation

The original system described in [6] was based on a Raspberry Pi 1 Model B+ and was designed and implemented from scratch with the aim of achieving stable, real-time performance. Several shortcomings (e.g., a 0.2 s time difference between the cameras, an iteration duration between 3 and 6 s, and unstable frame capture) had to be tackled for the system to be applicable on the road. An improved version of the approach was described in [8], which provided a detailed error analysis of the distance calculation based on a measurement procedure and roadway geometry.

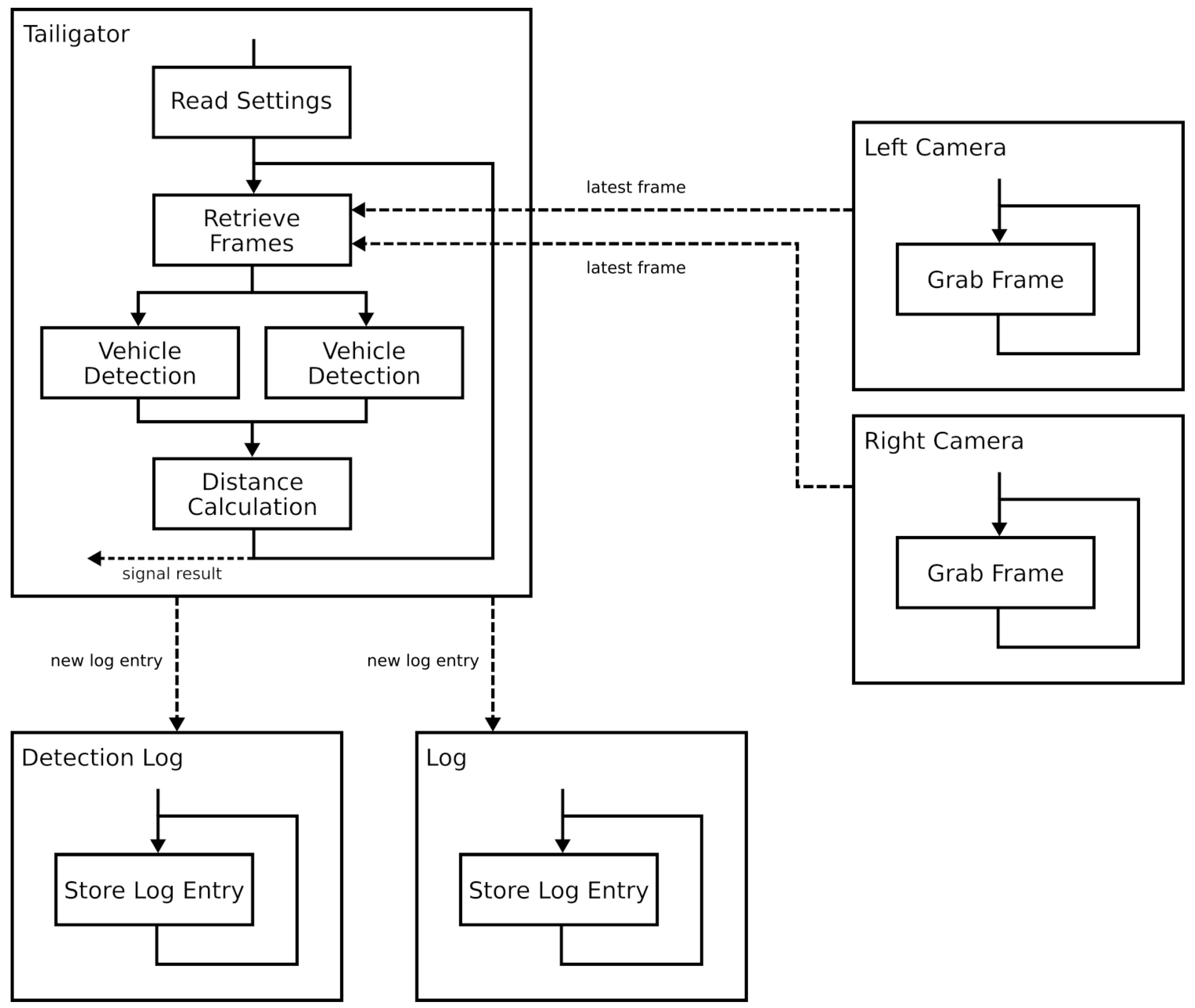

In the work presented in this paper, we upgraded the original Raspberry Pi 1 Model B+ to a Raspberry Pi 3 Model B board with Logitech C270 web cameras. The upgrade was possible because the multithreaded design of the system makes it well suited to modern multi-core processors such as the one integrated in the Raspberry Pi 3. The software was written in C++ using the Qt framework with its signal/slot mechanism and the OpenCV 2.4 library. A graphical overview of the software architecture is shown in Figure 2.

As depicted in the figure, the Tailigator software first reads its settings from a file in JSON format. The settings file contains, among other data, the resolution, frame rate, log directory path, and camera device paths. The next step is retrieving the latest frames from the cameras. Each camera is handled by a dedicated thread that continuously captures frames and keeps the most recent one in memory, so that the Tailigator thread can retrieve it when needed. Once the frames are retrieved, two vehicle detection threads are created and each is passed one frame. These two threads detect the vehicles in parallel, effectively halving the detection time. After detection, the distance is calculated and signaled to any connected listener. The Tailigator thread then retrieves the latest pair of frames again. With this approach, we increased the resolution from 640 × 480 to 864 × 480 and the frame rate from 5 fps to 15 fps, while reducing the time difference between the cameras from 0.2 s to 0.067 s. Furthermore, the iteration duration was reduced to approximately 0.8 s.
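The capture-and-detect cycle described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the Tailigator source: it uses standard C++ threads (`std::thread`, `std::async`) in place of Qt's signal/slot mechanism, and `Frame`, `LatestFrameBuffer`, `captureLoop`, `detectVehicle`, and `runIterations` are hypothetical names, with `Frame` standing in for an OpenCV image and `detectVehicle` for the real detector.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <functional>
#include <future>
#include <mutex>
#include <optional>
#include <thread>

// Hypothetical placeholder for a captured image (an OpenCV matrix in a real system).
struct Frame {
    int id = -1;
    std::chrono::steady_clock::time_point stamp{};
};

// Keeps only the most recent frame produced by one camera thread.
class LatestFrameBuffer {
public:
    void store(const Frame& f) {
        std::lock_guard<std::mutex> lock(mutex_);
        latest_ = f;  // overwrite: older frames are never queued
    }
    std::optional<Frame> latest() const {
        std::lock_guard<std::mutex> lock(mutex_);
        return latest_;
    }
private:
    mutable std::mutex mutex_;
    std::optional<Frame> latest_;
};

// Simulated capture thread; a real one would grab frames from its camera.
void captureLoop(LatestFrameBuffer& buffer, std::atomic<bool>& running) {
    int id = 0;
    while (running.load()) {
        buffer.store(Frame{id++, std::chrono::steady_clock::now()});
        std::this_thread::sleep_for(std::chrono::milliseconds(5));
    }
}

// Hypothetical vehicle detector (always succeeds for a valid frame here).
bool detectVehicle(const Frame& f) { return f.id >= 0; }

// Main loop: fetch the latest frame pair, then detect in both frames in parallel.
int runIterations(int iterations) {
    assert(iterations >= 0);
    LatestFrameBuffer left, right;
    std::atomic<bool> running{true};
    std::thread leftCam(captureLoop, std::ref(left), std::ref(running));
    std::thread rightCam(captureLoop, std::ref(right), std::ref(running));

    int detectedPairs = 0;
    for (int i = 0; i < iterations; ++i) {
        std::optional<Frame> l, r;
        // Wait until both cameras have delivered at least one frame.
        while (!(l = left.latest()) || !(r = right.latest())) {
            std::this_thread::sleep_for(std::chrono::milliseconds(1));
        }
        // Two detection tasks run concurrently, one per frame.
        auto leftTask = std::async(std::launch::async, detectVehicle, *l);
        auto rightTask = std::async(std::launch::async, detectVehicle, *r);
        const bool inLeft = leftTask.get();
        const bool inRight = rightTask.get();
        if (inLeft && inRight) ++detectedPairs;  // the distance would be computed here
    }
    running = false;
    leftCam.join();
    rightCam.join();
    return detectedPairs;
}
```

Keeping only the latest frame per camera, rather than queuing frames, means the consumer never works on stale images; the trade-off is that intermediate frames are simply discarded.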

4. Field Test Evaluation of the System

To analyze the performance of the Tailigator system against the predicted error models, we performed an empirical evaluation of the measured distance on curved and straight road sections.

The distance $D$ to an object placed in front of the cameras was measured following the method presented in [8], using Equation (1) from [11] with the following parameters: the distance between the cameras $B$, the horizontal field of view $\varphi_0$, the horizontal pixel resolution (number of pixels) $x_0$, and the horizontal pixel difference $x_L - x_R$ between the positions of the same object in both pictures:

$$D = \frac{B\,x_0}{2\tan\left(\frac{\varphi_0}{2}\right)\left(x_L - x_R\right)} \tag{1}$$
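Equation (1) of [11], D = B·x0 / (2·tan(φ0/2)·(xL − xR)), can be written as a small function. This is a sketch only: the function and parameter names are illustrative assumptions, and the numbers in the example are arbitrary values, not the calibration of the Tailigator cameras.

```cpp
#include <cassert>
#include <cmath>

// Stereo distance D = B * x0 / (2 * tan(phi0 / 2) * (xL - xR)).
// baseline_m   : distance between the cameras B, in meters
// fov_rad      : horizontal field of view phi0, in radians
// width_px     : horizontal resolution x0, in pixels
// disparity_px : horizontal pixel difference xL - xR of the same object
double stereoDistance(double baseline_m, double fov_rad, double width_px,
                      double disparity_px) {
    assert(disparity_px > 0.0 && fov_rad > 0.0 && width_px > 0.0);
    // Focal length expressed in pixels for a pinhole camera model.
    const double focal_px = width_px / (2.0 * std::tan(fov_rad / 2.0));
    return baseline_m * focal_px / disparity_px;
}
```

For example, with B = 0.5 m, φ0 = 90°, x0 = 800 pixels, and a disparity of 20 pixels, the function returns 10 m; halving the disparity doubles the distance.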

4.1. Curved Road Sections

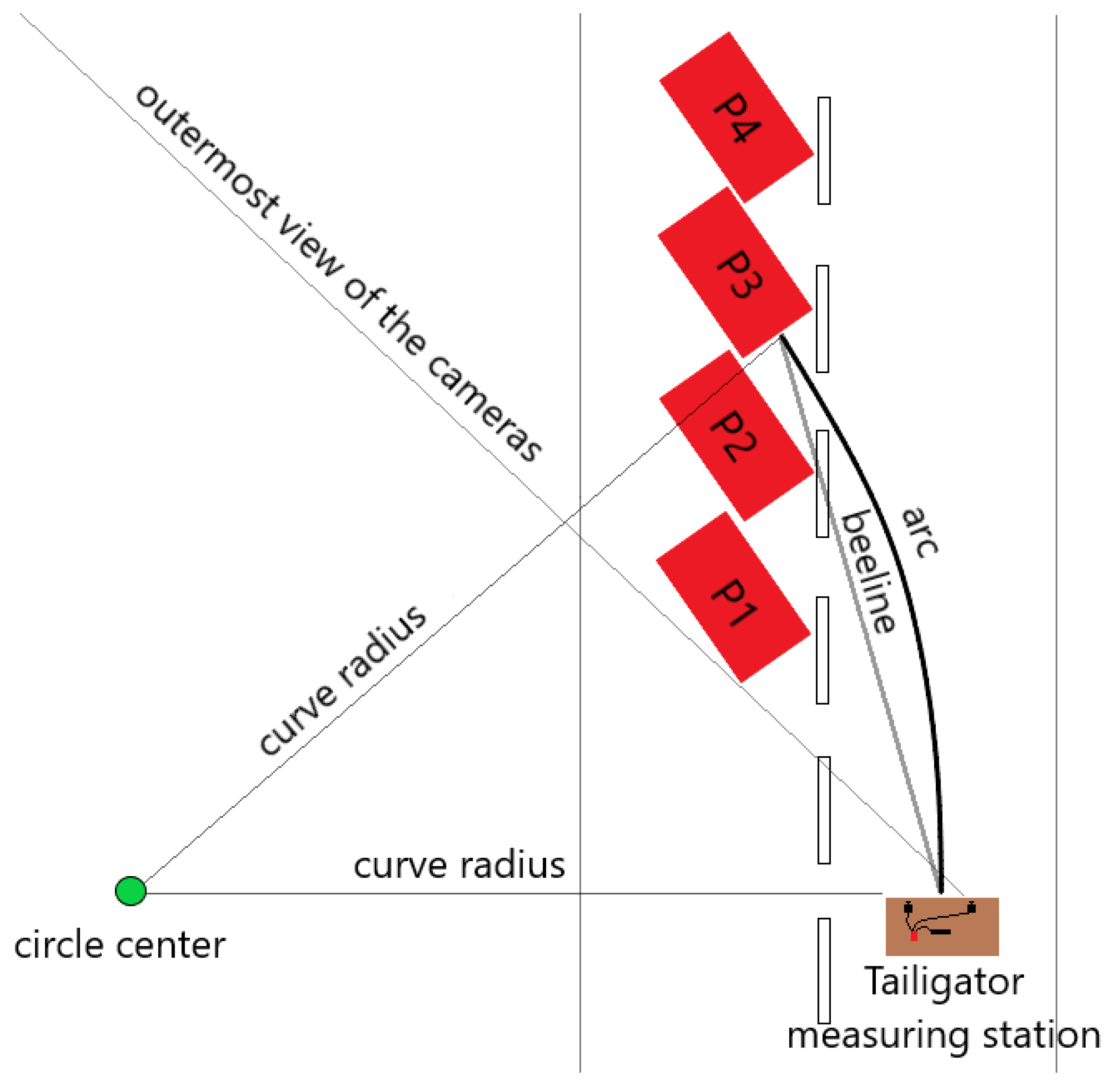

For the measurements in curved road sections, the rear vehicle was positioned so that the right edge of its bumper could still be detected by both cameras. The measurements were carried out for four curve radii. A graphical overview of the measurement setup in the curve is provided in Figure 3.

As explained in [8], the effective driving distance between the leading and following vehicles in a circular curve of radius R is given by Equation (2), where the angle is calculated as shown in Equation (3), which yields a negative value. The deviation between the length of the circle segment and the distance measured by the system is given by Equation (4).
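Equations (2) to (4) from [8] are not reproduced in this text. As a rough illustration only, the sketch below assumes that the system measures the straight-line (chord) distance d between the two vehicles, while the effective driving distance is the arc length s = 2R·arcsin(d/(2R)) on a circle of radius R, so that the deviation is s − d. This geometry, its sign convention, and the function names are assumptions and may differ from the formulation in [8].

```cpp
#include <cassert>
#include <cmath>

// Assumption: the cameras measure the chord d between two points on a circle
// of radius R, while the vehicles actually travel along the arc between them.
// Arc length subtended by a chord d: s = 2 R asin(d / (2 R)).
double arcLength(double radius_m, double chord_m) {
    assert(radius_m > 0.0 && chord_m >= 0.0 && chord_m <= 2.0 * radius_m);
    return 2.0 * radius_m * std::asin(chord_m / (2.0 * radius_m));
}

// Deviation between the arc (driving) distance and the measured chord.
double arcChordDeviation(double radius_m, double chord_m) {
    return arcLength(radius_m, chord_m) - chord_m;
}
```

Under this assumption, a chord of 10 m on a 10 m radius corresponds to an arc of about 10.47 m, whereas on a 1000 m radius the deviation shrinks to a few hundredths of a millimeter.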

4.2. Straight Road Section

To perform the measurements on a straight road section, the distance between the leading and the rear vehicle was varied between 3 and 30 m. To test whether the results were independent of the car model, we performed the experiments with two different vehicles, named Testcar1 and Testcar2. At every distance between both vehicles, several measurements were performed.

The image recognition procedure of the Tailigator aimed to detect the vehicles in the two camera frames recorded in parallel. If the vehicle could not be recognized in both camera frames at the same time, the distance calculation failed and an event was logged. If the Tailigator detected a vehicle in both camera frames, the distance was calculated and recorded. The deviation was then analyzed as the difference between the measured distance and the real distance.
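The measurement protocol for the straight road section can be sketched as follows, assuming that a distance is only produced when the vehicle is found in both frames and that the deviation is defined as measured minus real distance. The function names, the event text, and the sign convention are hypothetical.

```cpp
#include <cassert>
#include <functional>
#include <optional>
#include <string>
#include <vector>

// Computes a distance only when the vehicle was detected in both camera frames;
// otherwise logs a failure event and returns std::nullopt.
std::optional<double> measureDistance(bool detectedLeft, bool detectedRight,
                                      const std::function<double()>& computeDistance,
                                      std::vector<std::string>& eventLog) {
    if (!(detectedLeft && detectedRight)) {
        eventLog.push_back("distance calculation failed: vehicle not found in both frames");
        return std::nullopt;
    }
    return computeDistance();
}

// Mean deviation (measured - real) over repeated measurements at one position.
// Failed measurements are skipped; returns std::nullopt if none succeeded.
std::optional<double> meanDeviation(const std::vector<std::optional<double>>& measured,
                                    double real_m) {
    assert(real_m > 0.0);
    double sum = 0.0;
    int count = 0;
    for (const auto& m : measured) {
        if (m) {
            sum += *m - real_m;
            ++count;
        }
    }
    if (count == 0) return std::nullopt;
    return sum / count;
}
```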

5. Evaluation Results

5.1. Distance on Curved Road Sections

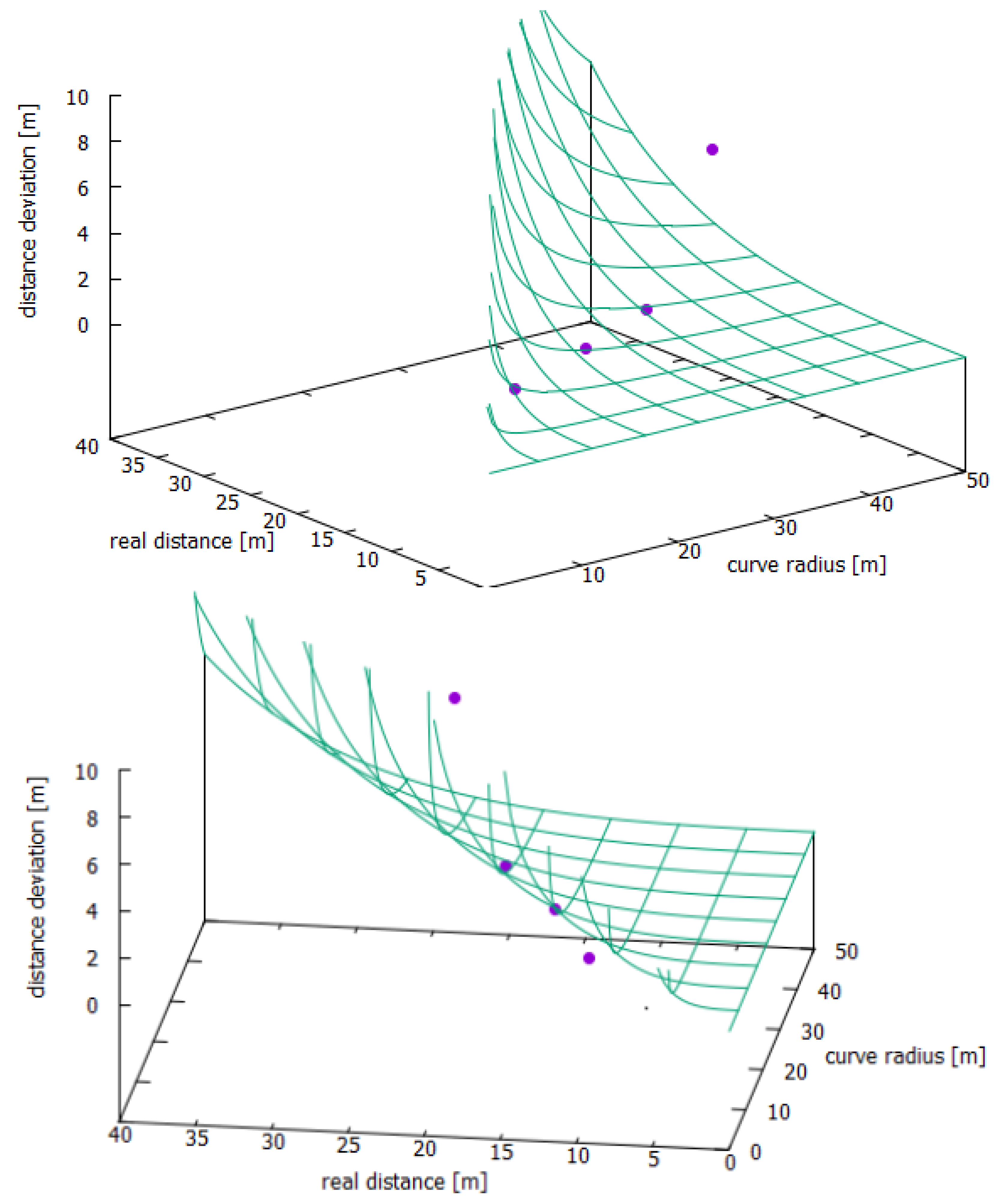

The results of the measurements in the curved road sections in Table 1 show a consistent pattern of measurement errors that increase with distance.

This is shown graphically in Figure 4 together with the theoretical error curve provided by [6]. As shown in the graph, the mean values of the measured distances at the four positions are consistent with the calculated theoretical error curve, even though the error bars do not reach the real distance curve.
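Why the error grows with distance can be seen by differentiating Equation (1) of [11], D = B·f/d with f = x0/(2·tan(φ0/2)) and disparity d: a disparity error Δd produces a distance error of roughly ΔD ≈ D²·Δd/(B·f). The sketch below implements this standard first-order approximation; it is not necessarily the theoretical error curve of [6], and the function name and parameter values are illustrative.

```cpp
#include <cassert>
#include <cmath>

// First-order depth uncertainty of a stereo pair, from differentiating
// D = B * f / d with respect to the disparity d:  |dD| ~ D^2 / (B * f) * |dd|,
// where f = x0 / (2 tan(phi0 / 2)) is the focal length in pixels.
double depthUncertainty(double distance_m, double baseline_m, double fov_rad,
                        double width_px, double disparityError_px) {
    assert(baseline_m > 0.0 && width_px > 0.0 && fov_rad > 0.0);
    const double focal_px = width_px / (2.0 * std::tan(fov_rad / 2.0));
    return distance_m * distance_m * disparityError_px / (baseline_m * focal_px);
}
```

With B = 0.5 m, φ0 = 90°, x0 = 800 pixels, and a one-pixel disparity error, the estimate is about 0.5 m at 10 m but about 2 m at 20 m, illustrating the quadratic growth of the error with distance.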

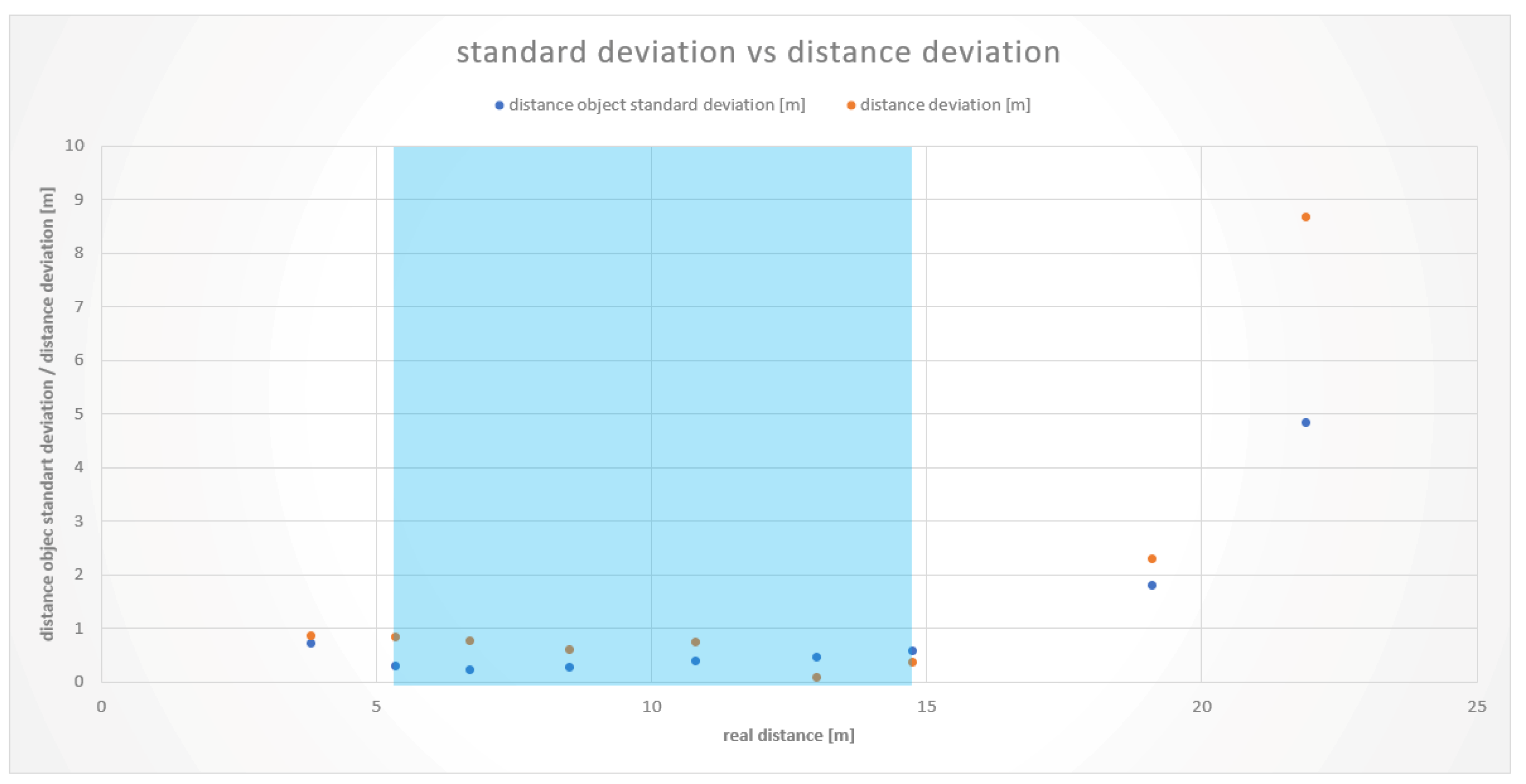

The measurements showed similar characteristics on the straight road section and in the curve. At close distances, between 3 and 5 m, the values deviated more strongly from the real value. At medium distances, around 10 to 15 m, the Tailigator achieved the best results. Beyond 20 m, the deviations increased steadily with distance.

5.2. Distance on Straight Road Sections

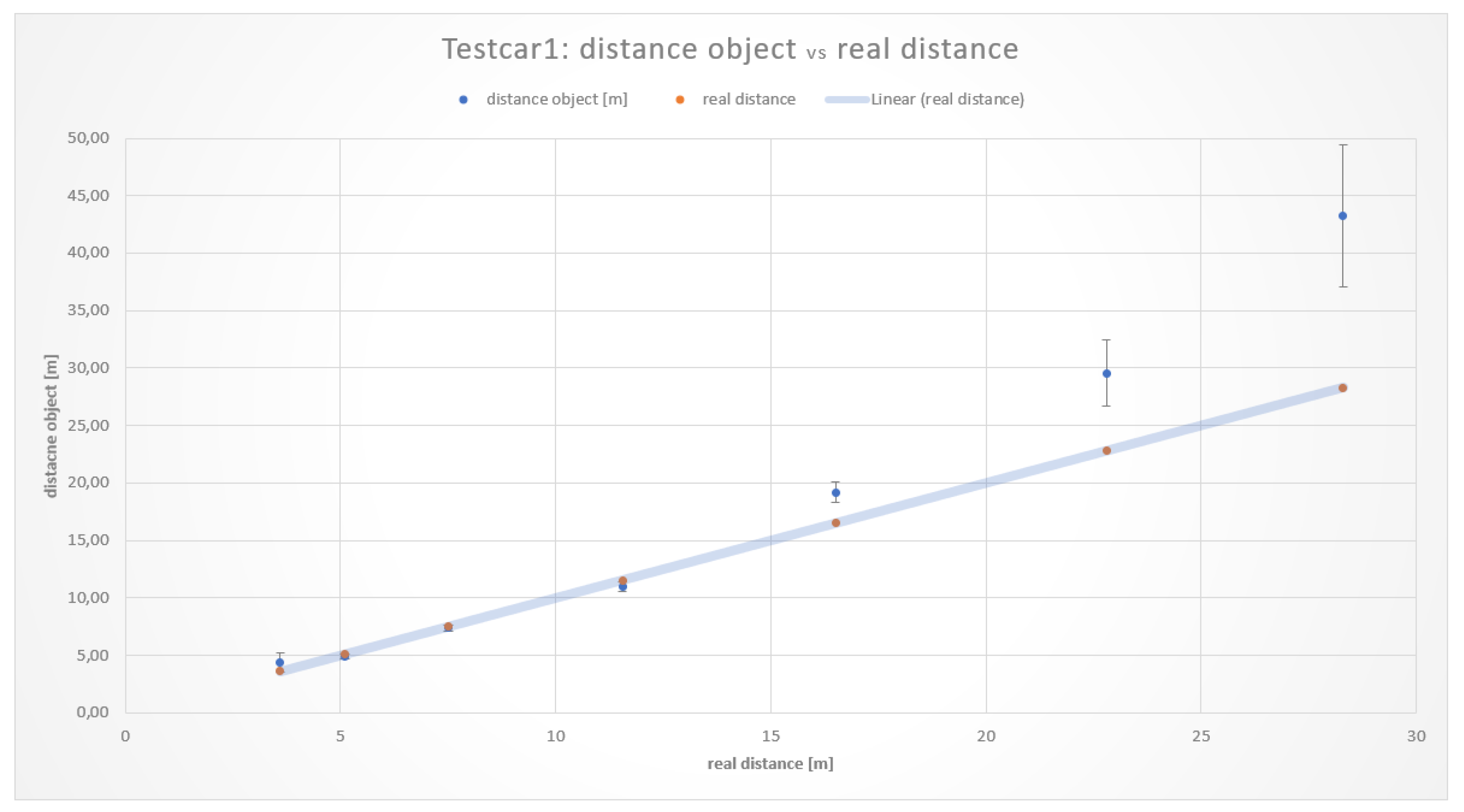

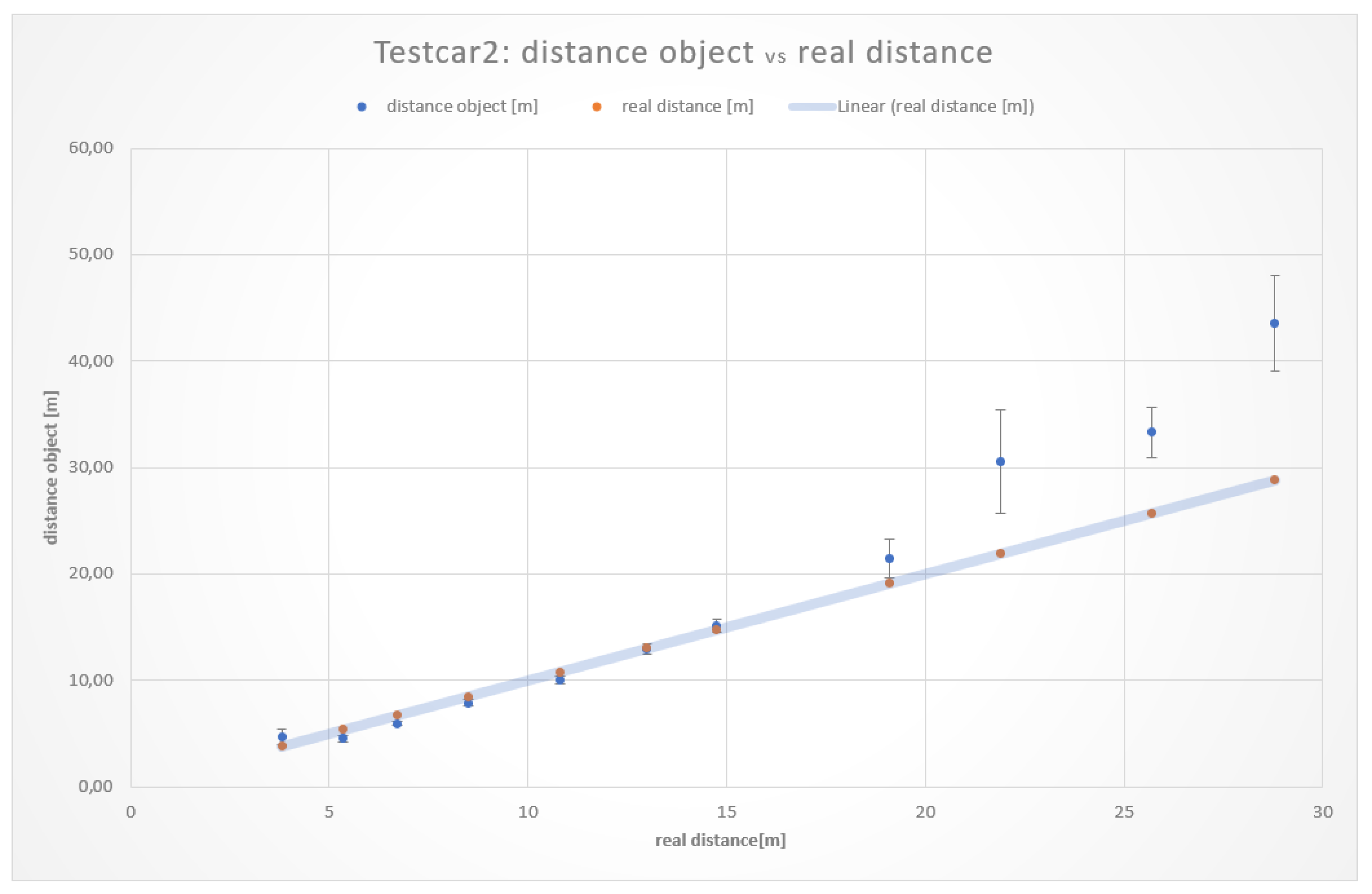

The measurements of the distance on a straight road section for Testcar1 and Testcar2 are listed in Table 2 and Table 3, respectively. Graphical comparisons of the real distance with the measured distance are depicted in Figure 5 and Figure 6.

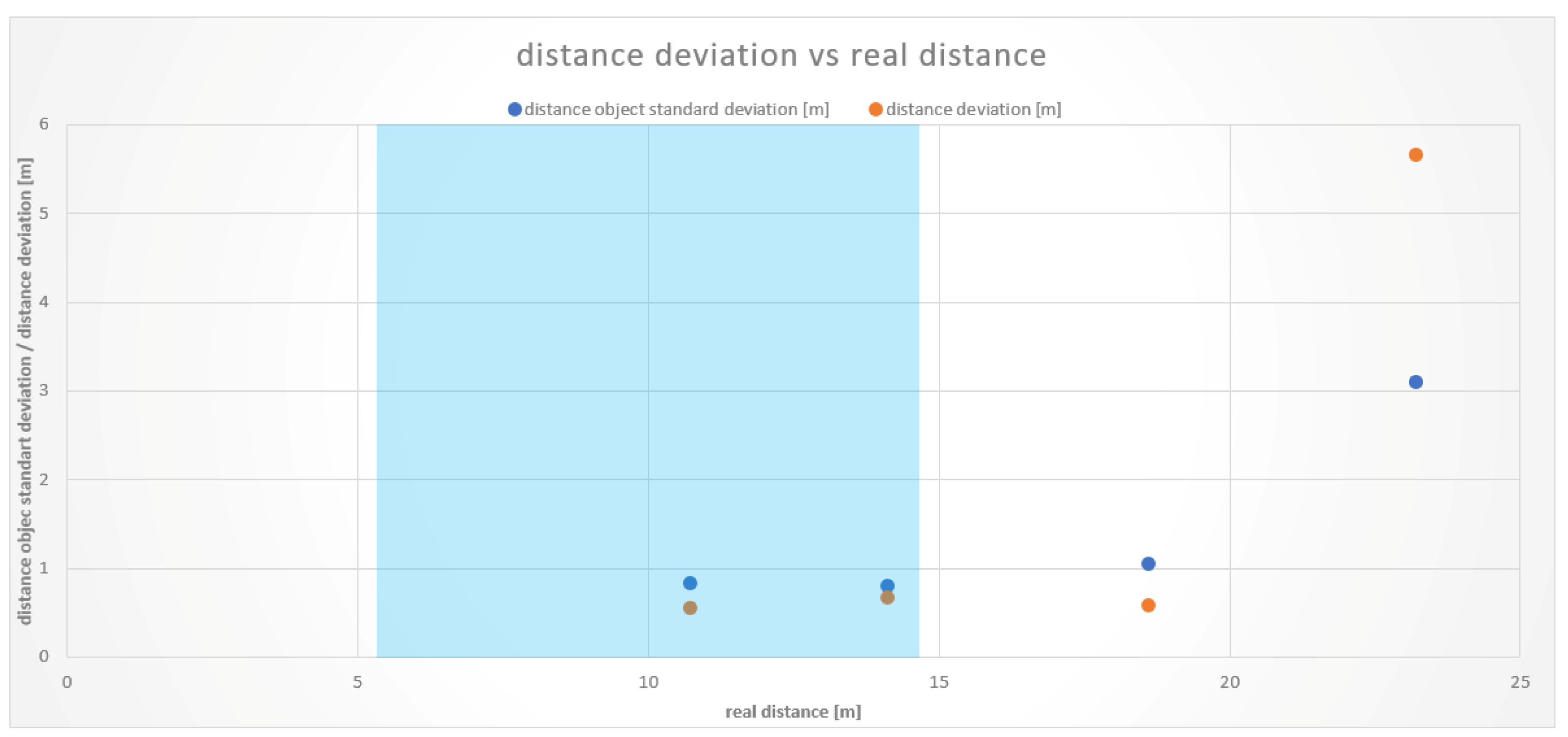

As can be seen, the averages at shorter distances were closer to the real values than those at longer distances. This behavior is expected: the pixel disparity between the two images shrinks as the distance grows, so a fixed pixel error translates into a larger distance error. For both vehicles used in the experiments, the measurement values for distances in the range of 5 to 15 m were the most accurate and the most consistent with each other. Beyond 15 m, the measurement error increased significantly.

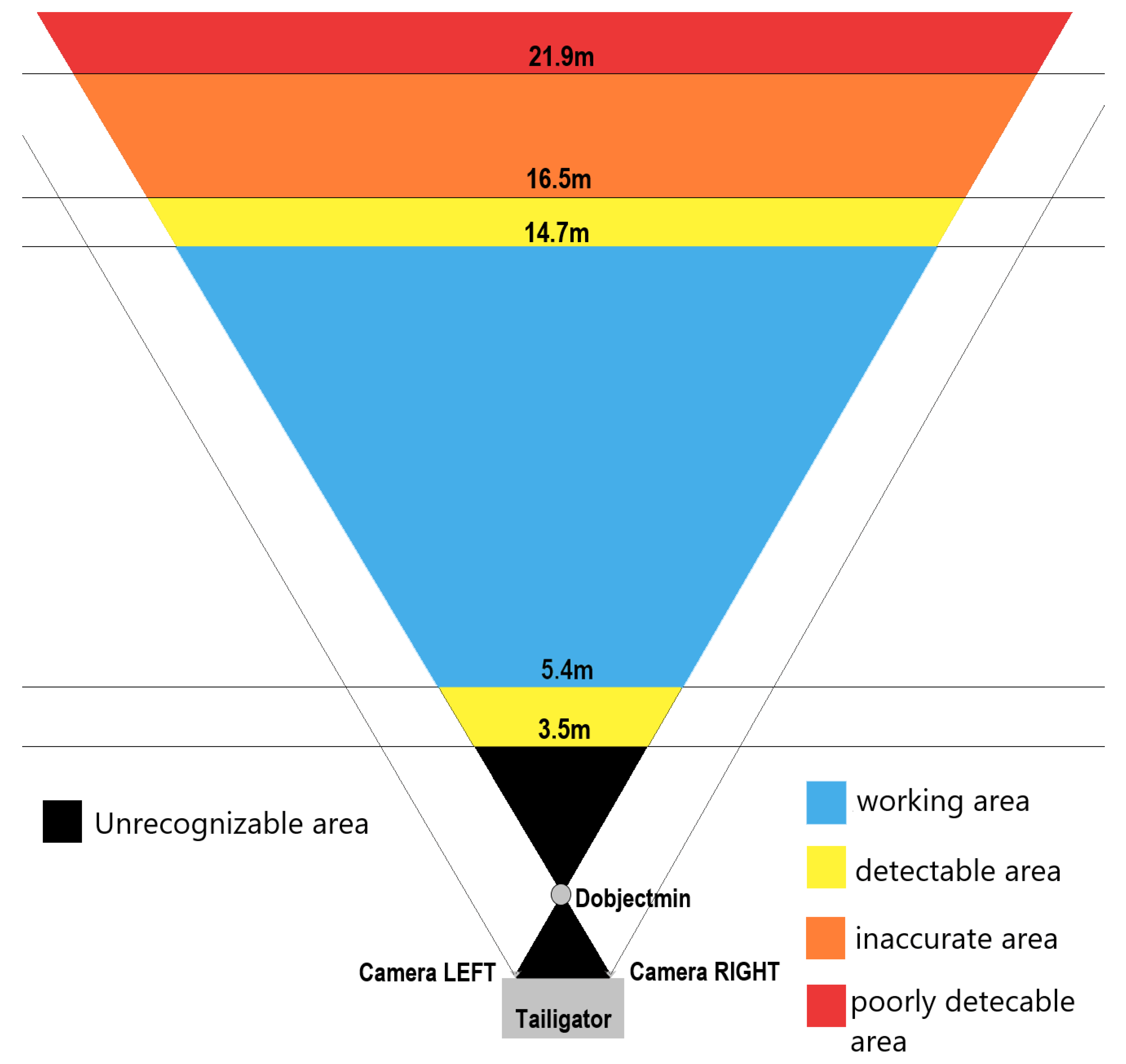

Using stereoscopic capture and processing of images, the Tailigator system calculated the safe distance without errors and with accurate results in a range of approximately 5 to 16 m. Measurements below 5 m were made to determine the exact lower bound of the working range.

The measurements at 3.6 and 3.8 m showed larger deviations from the real values as well as problems with vehicle identification. The lower limit of the Tailigator's working range therefore lies between 3.8 and 5.1 m.

Figure 7 illustrates the working area of the Tailigator on a straight road section (blue marked area).

At the upper bound of the working range, measurements on a straight line and in a curve gave comparable results. For the first test vehicle, a distance of 16.5 m resulted in an average distance deviation of 2.7 m. The measurements with the second test vehicle showed smaller but consistent distance deviations: at a distance of 14.7 m, the average deviation was 0.36 m, and at 19.1 m, the mean difference was 2.3 m. These values and the resulting working area for the second test vehicle are depicted in Figure 8.

Results from the measurements in the curved road sections showed a large distance deviation from 23.2 m onward, because the rear vehicle could no longer be detected clearly by the cameras. The vehicle recognition rate was significantly higher below 18.8 m, which defines the working area in both straight and curved road sections. The boundaries of the Tailigator's working area are shown graphically in Figure 9.

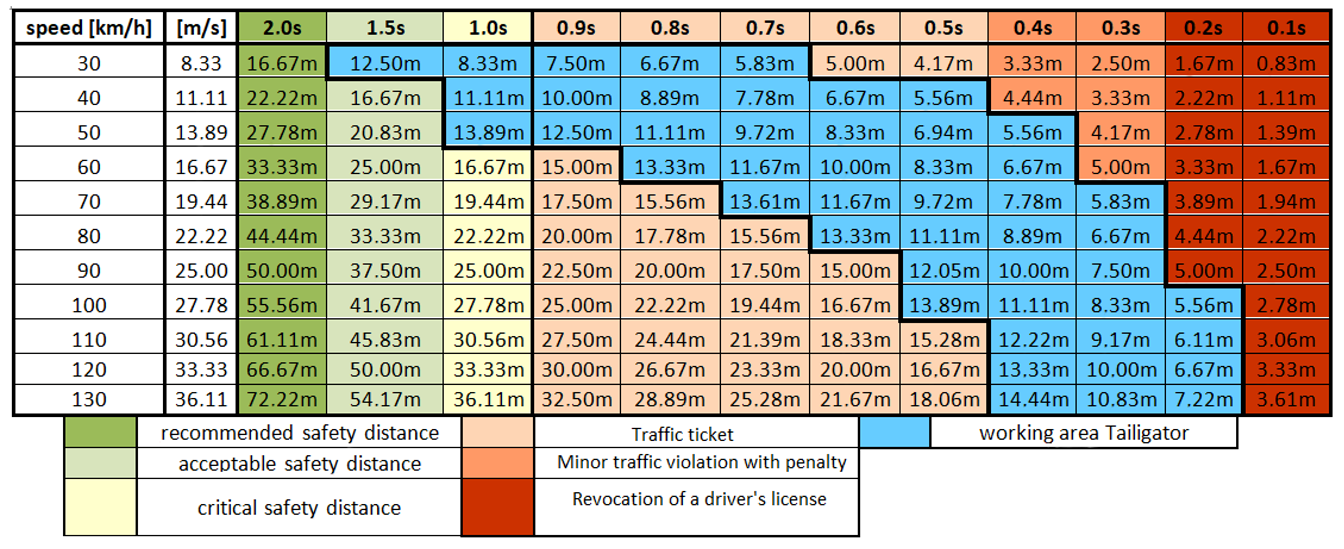

The working area of the Tailigator is related to the speed-dependent critical safety distance. The blue area in Table 4 identifies the working area of the Tailigator in this context.

6. System Redesign on the Basis of the Resulting Evaluation

Based on the results of the empirical evaluation, we increased the frame rate to 30 fps, as well as the number of iterations per second and the frame resolution. This was achieved by using an optimized build of the OpenCV 3.2 library and by replacing the Raspberry Pi with the UP Board.

The UP Board is the same size as the Raspberry Pi 3 Model B and has the same 40-pin header, but it provides double the processing power of the Raspberry Pi and three independent USB 2.0 ports, whereas the USB ports on the Raspberry Pi share a single bus. These independent USB ports are especially important for a stable frame rate of 30 fps at higher resolutions. For practical purposes, it should be noted that the UP Board costs nearly three times as much as the Raspberry Pi 3 Model B.

The OpenCV 3.2 library was compiled with architecture-dependent optimizations to get more performance out of each processor. For the Raspberry Pi we used the option -mfpu=neon-fp-armv8, and for the UP Board the options -msse, -msse2, -msse3, -mssse3, -msse4.1, and -msse4.2.

Since minimizing the time difference between the cameras was a requirement, only the results for 30 fps are presented. At lower frame rates, the iterations per second (IPS) were slightly higher (about 10% higher at 15 fps). The Raspberry Pi 3 can be used for resolutions up to 864 × 480; at higher resolutions, the USB bandwidth is insufficient, resulting in slowdowns, corrupted images, or both.

The independent USB ports of the UP Board, on the other hand, allow the use of much higher resolutions. Therefore, the UP Board was able to process an iteration in less than a second at a resolution of 1280 × 960.

Table 5 summarizes the results of the tests at 30 fps.

In summary, the time difference between the cameras was reduced from 0.067 s to 0.034 s, and the iteration duration at 864 × 480 was significantly reduced from 0.8 s to less than 0.27 s (UP Board) and 0.56 s (Raspberry Pi 3).
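The reported time differences are consistent with a bound of one frame period between two unsynchronized cameras (1/15 s ≈ 0.067 s, 1/30 s ≈ 0.033 s). Assuming that bound, the sketch below estimates how much the gap between the vehicles can change between the two captures at a given closing speed. The function names and the closing-speed example are hypothetical.

```cpp
#include <cassert>

// If two free-running cameras are not hardware-synchronized, the capture
// instants of their latest frames can differ by up to one frame period.
double maxCameraOffset_s(double fps) {
    assert(fps > 0.0);
    return 1.0 / fps;
}

// How far the gap between the vehicles can change between the two captures,
// for a given closing speed in km/h.
double displacementDuringOffset_m(double closingSpeed_kmh, double fps) {
    return closingSpeed_kmh / 3.6 * maxCameraOffset_s(fps);
}
```

At a closing speed of 36 km/h, for instance, this is about 0.67 m at 15 fps and about 0.33 m at 30 fps.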

7. Conclusions and Future Work

The empirical analysis presented in this work supports the theoretical error model presented in [8]. Relevant system modifications have been made to achieve a low-cost rear-end collision assistant while improving the temporal and optical resolution of the system.

Based on the evaluation results, the current version of the Tailigator can be used at speeds up to 50 km/h without any restrictions, since the critical safety distance lies fully within the working range. Above 50 km/h, the critical safety distance would exceed the working range of the Tailigator. To keep the critical safety distance within the working area of the Tailigator over the entire range of permitted speeds in Austria, the Tailigator would need a working range of up to 55 m. However, the purpose of the Tailigator system is to inform and alert the driver of the rear vehicle about their tailgating behavior; therefore, such a long range is not required, and the visibility of the tailgating warning sign to rear drivers (see Figure 1) at such distances is questionable.

Future work will include analyzing the potential for transferring the approach to the thermal infrared spectrum in order to extend its use to any road condition and scenario, as well as implementing a systematic error model, derived from the evaluation presented in this work, to assess the system.

Author Contributions

Conceptualization, G.C.K. and C.O.-M.; methodology, G.C.K., C.O.-M., R.H., L.B.; software, R.H.; validation, G.C.K.; formal analysis, L.B.; investigation, G.C.K., C.O.-M., R.H., L.B.; writing—original draft preparation, G.C.K. and C.O.-M.; writing—review and editing, G.C.K. and C.O.-M.; visualization, G.C.K., C.O.-M., R.H., L.B.; supervision, G.C.K. and C.O.-M.; project administration, G.C.K. and C.O.-M.; funding acquisition, G.C.K. and C.O.-M.

Funding

This research was funded by the “BMVIT Endowed Professorship and Chair Sustainable Transport Logistics 4.0., FFG grant number 852791” and the “Photonics: Foundations and industrial applications” Project, funded by national funds through the MA 23, Urban Administration for Economy, Work and Statistics, Vienna, Austria. Open Access Funding by the University of Applied Sciences Technikum Wien.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Xiao, L.; Gao, F. A comprehensive review of the development of adaptive cruise control systems. Veh. Syst. Dyn. 2010, 48, 1167–1192.

- Validi, A.; Ludwig, T.; Hussein, A.; Olaverri-Monreal, C. Examining the Impact on Road Safety of Different Penetration Rates of Vehicle-to-Vehicle Communication and Adaptive Cruise Control. IEEE Intell. Transp. Syst. Mag. 2018, 10, 24–34.

- Ioannou, P.A.; Stefanovic, M. Evaluation of ACC vehicles in mixed traffic: Lane change effects and sensitivity analysis. IEEE Trans. Intell. Transp. Syst. 2005, 6, 79–89.

- Green Car Congress. 2nd Annual Bosch Analysis of ADAS Finds Emergency Braking and Lane Assist Systems on the Rise. Available online: https://www.greencarcongress.com/2015/12/20151221-bosch.html/ (accessed on 8 August 2019).

- Olaverri-Monreal, C.; Draxler, C.; Bengler, K.J. Variable menus for the local adaptation of graphical user interfaces. In Proceedings of the 6th Iberian Conference on Information Systems and Technologies (CISTI 2011), Chaves, Portugal, 15–18 June 2011; pp. 1–6.

- Olaverri-Monreal, C.; Lorenz, R.; Michaeler, F.; Krizek, G.C.; Pichler, M. Tailigator: Cooperative System for Safety Distance Observance. In Proceedings of the 2016 International Conference on Collaboration Technologies and Systems (CTS), Orlando, FL, USA, 31 October–4 November 2016; pp. 392–397.

- Olaverri-Monreal, C.; Jizba, T. Human Factors in the Design of Human–Machine Interaction: An Overview Emphasizing V2X Communication. IEEE Trans. Intell. Veh. 2016, 1, 302–313.

- Olaverri-Monreal, C.; Krizek, G.C.; Michaeler, F.; Lorenz, R.; Pichler, M. Collaborative approach for a safe driving distance using stereoscopic image processing. Future Gener. Comput. Syst. 2019, 95, 880–889.

- European Road Safety Observatory. Advanced Driver Assistance Systems. Available online: https://ec.europa.eu/transport/road_safety/sites/roadsafety/files/ersosynthesis2016-adas15_en.pdf (accessed on 27 May 2019).

- Zheng, X.; Zhang, Y. An image-based object detection method using two cameras. In Proceedings of the 2010 International Conference on Logistics Engineering and Intelligent Transportation Systems, Wuhan, China, 26–28 November 2010; pp. 1–5.

- Mrovlje, J.; Vrancic, D. Distance measuring based on stereoscopic pictures. In Proceedings of the 9th International PhD Workshop on Systems and Control: Young Generation Viewpoint, Izola, Slovenia, 1–3 October 2008; Volume 2, pp. 1–6.

- Jie, C.; Qiang, Z. Video Based Vehicle Tailgate Behaviour Detection in Urban Road Junction. In Proceedings of the 2011 International Conference on Future Computer Sciences and Application (ICFCSA), Hong Kong, China, 18–19 June 2011; pp. 19–24.

- May, A.; Carter, C.; Smith, F.; Fairclough, S. An evaluation of an in-vehicle headway feedback system with a visual and auditory interface. In Proceedings of the IEEE Colloquium on Design of the Driver Interface, London, UK, 19 January 1995; pp. 5/1–5/3.

- Olaverri-Monreal, C.; Gvozdic, M.; Muthurajan, B. Effect on driving performance of two visualization paradigms for rear-end collision avoidance. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; pp. 77–82.

- Hayden, J.; Castle, J.; Lee, G. Use of MIDAS individual vehicle records data to investigate driver following behaviour. In Proceedings of the Road Transport Information and Control Conference 2014 (RTIC 2014), London, UK, 6–7 October 2014; pp. 1–6.

- Zhang, Y.; Chen, Q.; Liu, Y. A Real-time Tracking System for Tailgating Behavior Detection. In Proceedings of the Fourth International Conference on Computer Vision Theory and Applications, Lisboa, Portugal, 5–8 February 2009; pp. 398–402.

- Zhang, L.; Li, Y.; Lu, H.; Serikawa, S. Distance Measurement with a General 3D Camera by Using a Modified Phase Only Correlation Method. In Proceedings of the 2013 14th ACIS International Conference on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing (SNPD), Honolulu, HI, USA, 1–3 July 2013; pp. 173–178.

- Chang, T.H.; Chou, C.J. Rear-end collision warning system on account of a rear-end monitoring camera. In Proceedings of the 2009 IEEE Intelligent Vehicles Symposium, Xi’an, China, 3–5 June 2009; pp. 913–917.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).