Combined Fully Contactless Finger and Hand Vein Capturing Device with a Corresponding Dataset

Abstract

1. Introduction

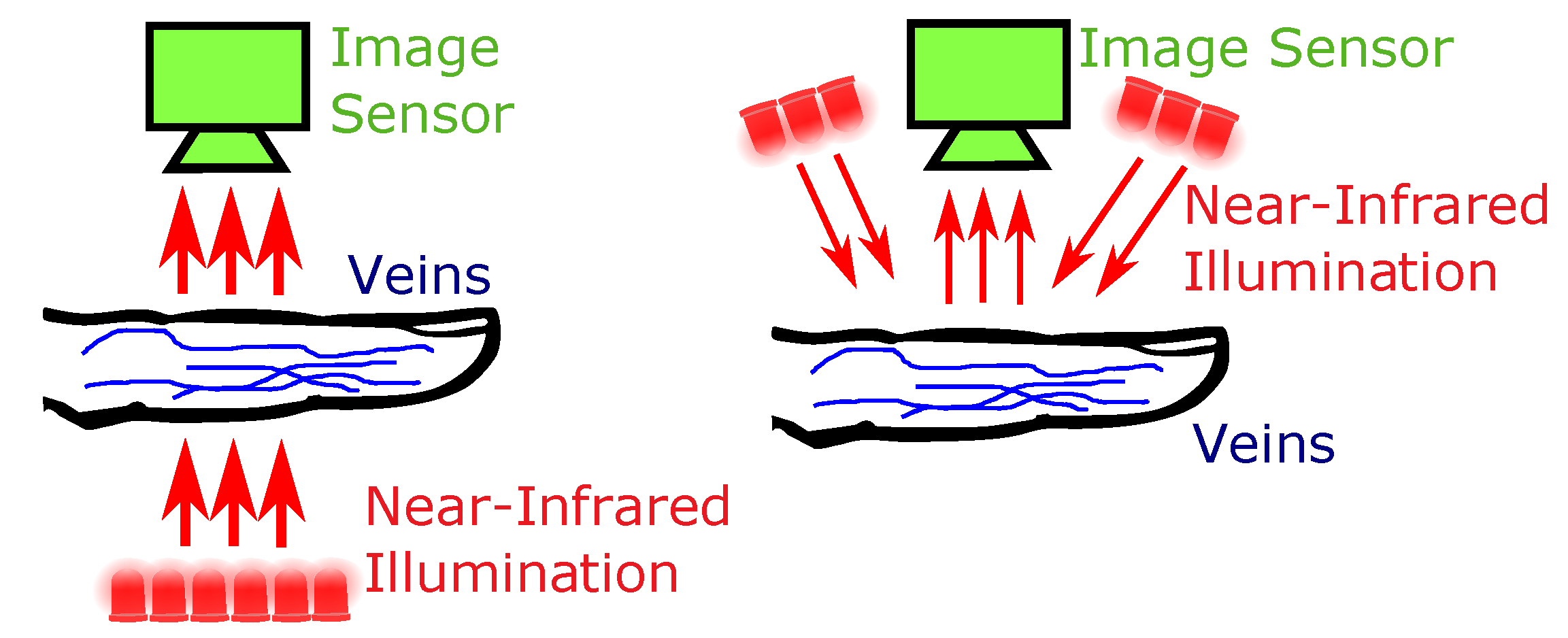

1.1. Acquisition Principle and Capturing Devices

1.2. Related Work

1.3. Main Contributions

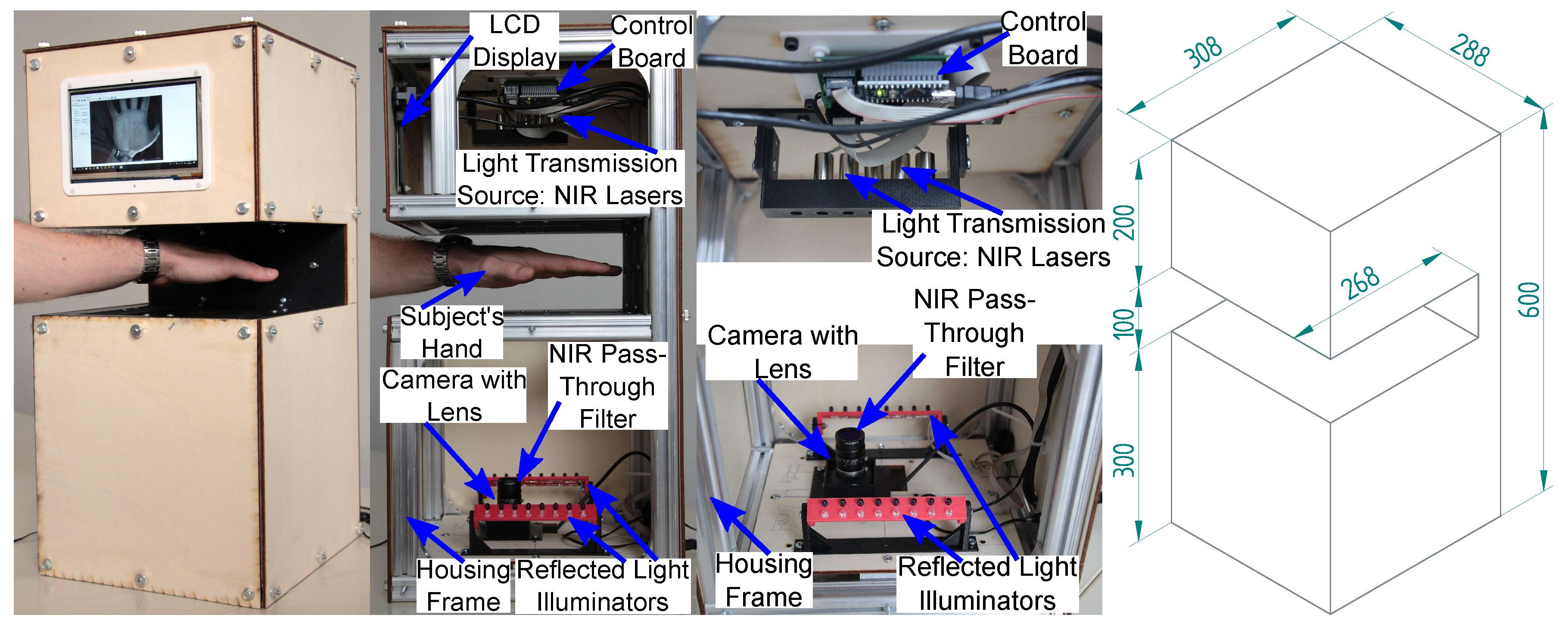

- Design of a novel, fully contactless, combined finger and hand vein capturing device featuring NIR laser modules instead of NIR LEDs for the light transmission illumination; a special NIR-enhanced industrial camera with an additional NIR pass-through filter to achieve the best possible image quality; an optimal lens and distance between the finger/hand and the camera to minimise image distortions; and an automated illumination control that provides uniform illumination across the finger/hand surface and yields the best possible contrast and image quality.

- Publication of all major technical details of the capturing device design: in this work we describe all major components of the proposed capturing device. Further technical details are available on request, making our design easy to reproduce.

- Public finger and hand vein image database established with the proposed capturing device: together with this paper we publish the finger and hand vein datasets acquired with the proposed device. These datasets are available free of charge for research purposes, and the finger vein dataset is the first publicly available contactless finger vein recognition dataset. Owing to the contactless acquisition, the datasets are challenging, as they contain various types of finger/hand misplacement.

- Evaluation of the acquired database in terms of image quality and biometric recognition performance: the images acquired with our sensor are evaluated using several image quality assessment schemes. Furthermore, several well-established vein recognition methods, implemented in our existing open-source vein recognition framework, are used to evaluate the finger and hand vein datasets, which ensures full reproducibility of the published results. The recognition performance achieved in our evaluation is competitive with that of other state-of-the-art finger and hand vein acquisition devices, which validates the advantages of the proposed design and confirms the good image quality of our capturing device.

2. Materials and Methods

2.1. Contactless Finger and Hand Vein Acquisition Device

- Reflected light as well as light transmission: it is the first acquisition device of its kind that can acquire both reflected light and light transmission images. This extends its range of possible uses and speeds up the acquisition process if both illumination set-ups are to be investigated.

- Suitable for finger as well as hand vein images: palmar finger vein and hand vein images can be acquired with the same device. Again, it is the first capturing device able to acquire both. In the default configuration, finger vein images are captured using light transmission and hand vein images using reflected light, but this can be changed in the set-up, which gives high flexibility in terms of acquisition configurations.

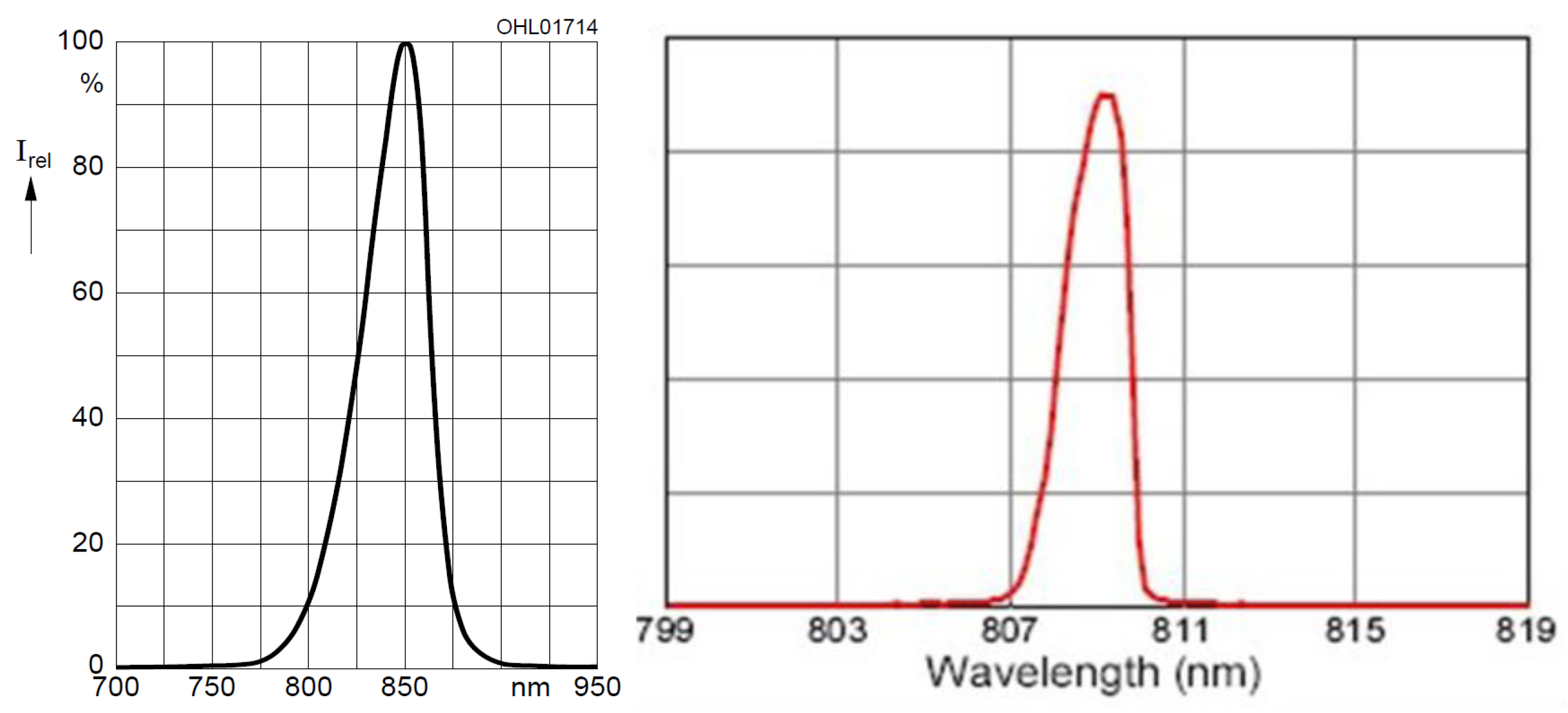

- NIR laser modules for light transmission illumination: NIR laser modules have so far been uncommon in finger vein recognition. In a contactless acquisition set-up, however, laser modules have several advantages over LEDs, especially a larger tolerated range of finger/hand movement as well as better illumination and image contrast [27]. Hence, we equipped our capturing device with NIR laser modules.

- Illumination control board and automated brightness control algorithm: the integrated illumination control board handles the illumination intensity of both the light transmission and the reflected light illuminators. The brightness of each laser module in the light transmission illuminator can be controlled separately and independently of the others. Combined with our automated brightness control algorithm, this enables optimal image contrast without the operator having to adjust any settings manually.

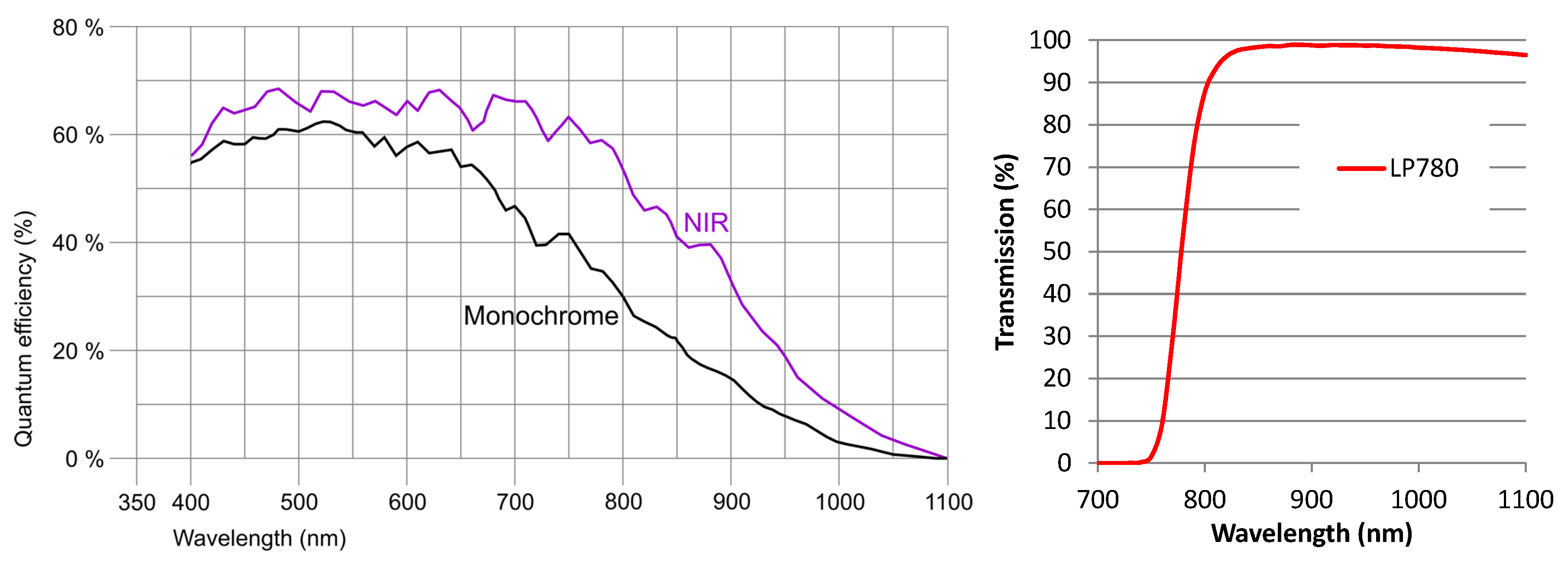

- Special NIR-enhanced industrial camera: our capturing device uses a special NIR-enhanced industrial camera. In contrast to modified visible light cameras (with the NIR blocking filter removed), such NIR-enhanced cameras have an increased quantum efficiency in the NIR spectrum, which leads to higher image contrast and quality than cheap, modified visible light cameras achieve.

- Optimal lens set-up and distance between camera and finger/hand: in contrast to many other devices, which are mostly smaller in physical size, we decided to use a lens with a focal length of 9 mm. This keeps image distortions minimal across the whole image area, especially at the image borders, at the cost of a larger distance between the camera and the finger/hand. Hence, our capturing device is rather large compared to others.

- Easy-to-reproduce design: in contrast to most other proposed capturing devices, for which very few details are available, we provide references to the data sheets and technical details of all of the capturing device's parts. Furthermore, the 3D models and technical drawings of the frame parts and the 3D-printed parts are available on request. Hence, our proposed capturing device design is easy to reproduce.

- Fast data acquisition: thanks to the automated brightness control and the automated acquisition process, sample acquisition is fast. Once the data subject has placed their finger/hand, capturing a hand vein image takes less than a second and capturing a finger vein image takes between 2 and 4 s.

- Ease of use during data acquisition: in contrast to other available vein capturing devices, the data subjects do not need to align their fingers/hands with a contact surface or pegs. This is one of the main advantages of our contactless design, as it makes data acquisition easier for the data subjects as well as for the operators. The automated illumination control algorithm and the intuitive graphical capturing software further contribute to a smooth acquisition process. Moreover, the integrated touchscreen display assists the data subjects by indicating which finger/hand to place at the sensor and how to place it, and by signalling potential misplacements.

- Biometric fusion can be employed to increase the recognition performance: our proposed capturing device acquires finger vein images as well as hand vein images, the latter at two different illumination wavelengths (850 nm and 950 nm). Hence, the recognition performance can easily be increased by applying biometric fusion at sensor level with different fusion combinations. Selected combinations are evaluated in Section 3.3.

2.1.1. Camera, Lens and Filter
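The lens trade-off stated in the feature list of Section 2.1 (a 9 mm focal length for low distortion, at the cost of a larger camera-to-finger/hand distance) can be illustrated with the pinhole camera approximation, in which object width over distance equals sensor width over focal length. The sketch below is illustrative only: the sensor width, the object width and the 4 mm comparison lens are assumed values, not taken from this paper, and real lenses deviate from the pinhole model at close range.

```python
def required_distance_mm(object_width_mm: float, focal_length_mm: float,
                         sensor_width_mm: float) -> float:
    """Camera-to-object distance at which an object just fills the sensor width.

    Pinhole approximation: object_width / distance = sensor_width / focal_length.
    Only meaningful when the distance is much larger than the focal length.
    """
    if min(object_width_mm, focal_length_mm, sensor_width_mm) <= 0:
        raise ValueError("all dimensions must be positive")
    return object_width_mm * focal_length_mm / sensor_width_mm


if __name__ == "__main__":
    SENSOR_WIDTH_MM = 6.8    # assumed sensor width, illustration only
    OBJECT_WIDTH_MM = 120.0  # hypothetical field of view needed for a hand
    for focal_mm in (4.0, 9.0):  # hypothetical short lens vs. the 9 mm lens
        d = required_distance_mm(OBJECT_WIDTH_MM, focal_mm, SENSOR_WIDTH_MM)
        print(f"f = {focal_mm:.0f} mm -> distance approx. {d:.0f} mm")
```

Because the distance scales linearly with the focal length, the 9 mm lens needs 2.25 times the distance of a hypothetical 4 mm lens for the same field of view, which is consistent with the device being comparatively large.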

2.1.2. Light Sources—Reflected Light and Light Transmission

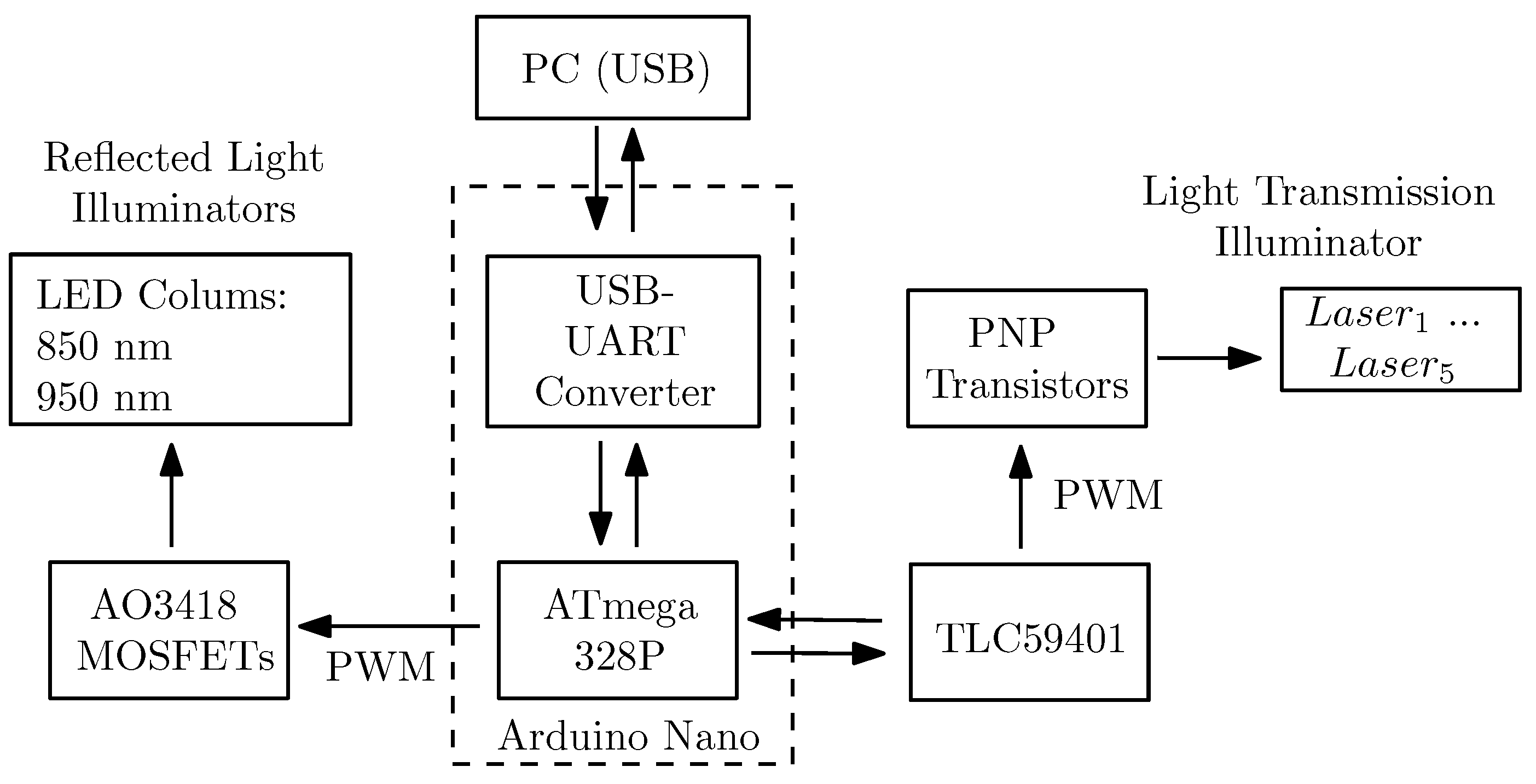

2.1.3. Illumination Control Board and Brightness Control Algorithm
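The feature list in Section 2.1 states that the brightness of each laser module is controlled separately by an automated algorithm. The following is only a hypothetical sketch of one simple way such a per-module controller could work, not the authors' algorithm. It assumes that the mean grey value of the image region lit by a module increases monotonically with its drive level and that modules do not affect each other's regions, and it binary-searches each module's drive level until the measured brightness lies within a tolerance of a target value. The `measure` callables stand in for capturing an image and averaging the relevant region; the 8-bit drive range and the target grey value are illustrative assumptions.

```python
from typing import Callable, List, Sequence

Measure = Callable[[int], float]  # drive level -> mean grey value of the module's region


def control_module(measure: Measure, target: float, max_level: int = 255,
                   tol: float = 2.0) -> int:
    """Binary search for a drive level whose brightness is within tol of target.

    Assumes brightness increases monotonically with the drive level. If no level
    reaches the tolerance, the level with the smallest observed error is returned.
    """
    lo, hi = 0, max_level
    best_level, best_err = lo, abs(measure(lo) - target)
    while lo <= hi:
        mid = (lo + hi) // 2
        value = measure(mid)
        err = abs(value - target)
        if err < best_err:
            best_level, best_err = mid, err
        if err <= tol:
            return mid
        if value < target:
            lo = mid + 1  # too dark: increase the drive level
        else:
            hi = mid - 1  # too bright: decrease the drive level
    return best_level


def control_all(measures: Sequence[Measure], target: float, **kwargs) -> List[int]:
    """Adjust every module independently (ignores overlap between lit regions)."""
    return [control_module(m, target, **kwargs) for m in measures]


if __name__ == "__main__":
    # Simulated modules with different efficiencies; brightness saturates at 255.
    gains = [0.5, 1.0, 2.0]
    measures = [lambda level, g=g: min(255.0, g * level) for g in gains]
    print(control_all(measures, target=128.0))
```

For the three simulated modules this yields the drive levels 253, 127 and 63. A real controller would also have to deal with overlapping illumination between neighbouring modules and with saturation, which this sketch deliberately ignores.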

2.1.4. Frame, Housing and Touchscreen

2.2. PLUSVein-Contactless Finger and Hand Vein Data Set

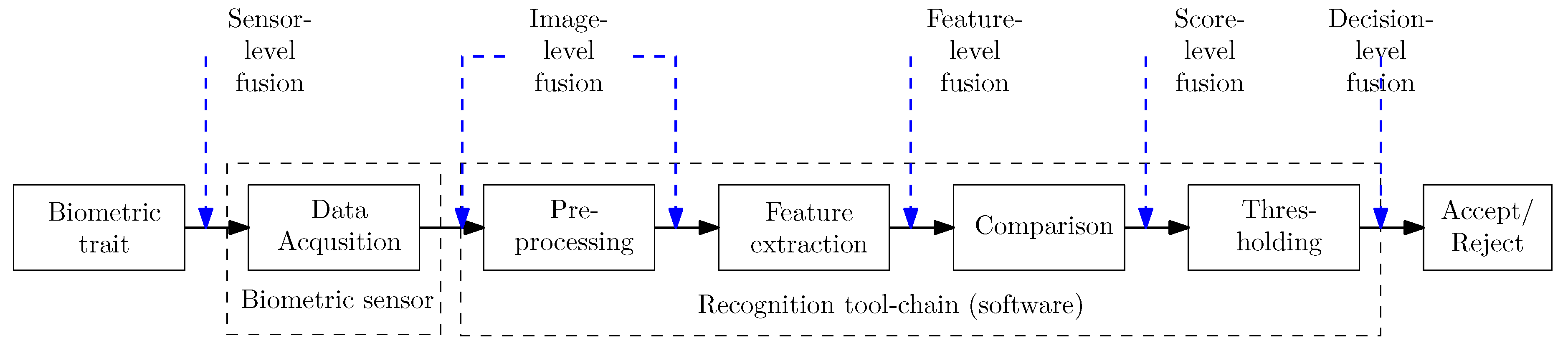

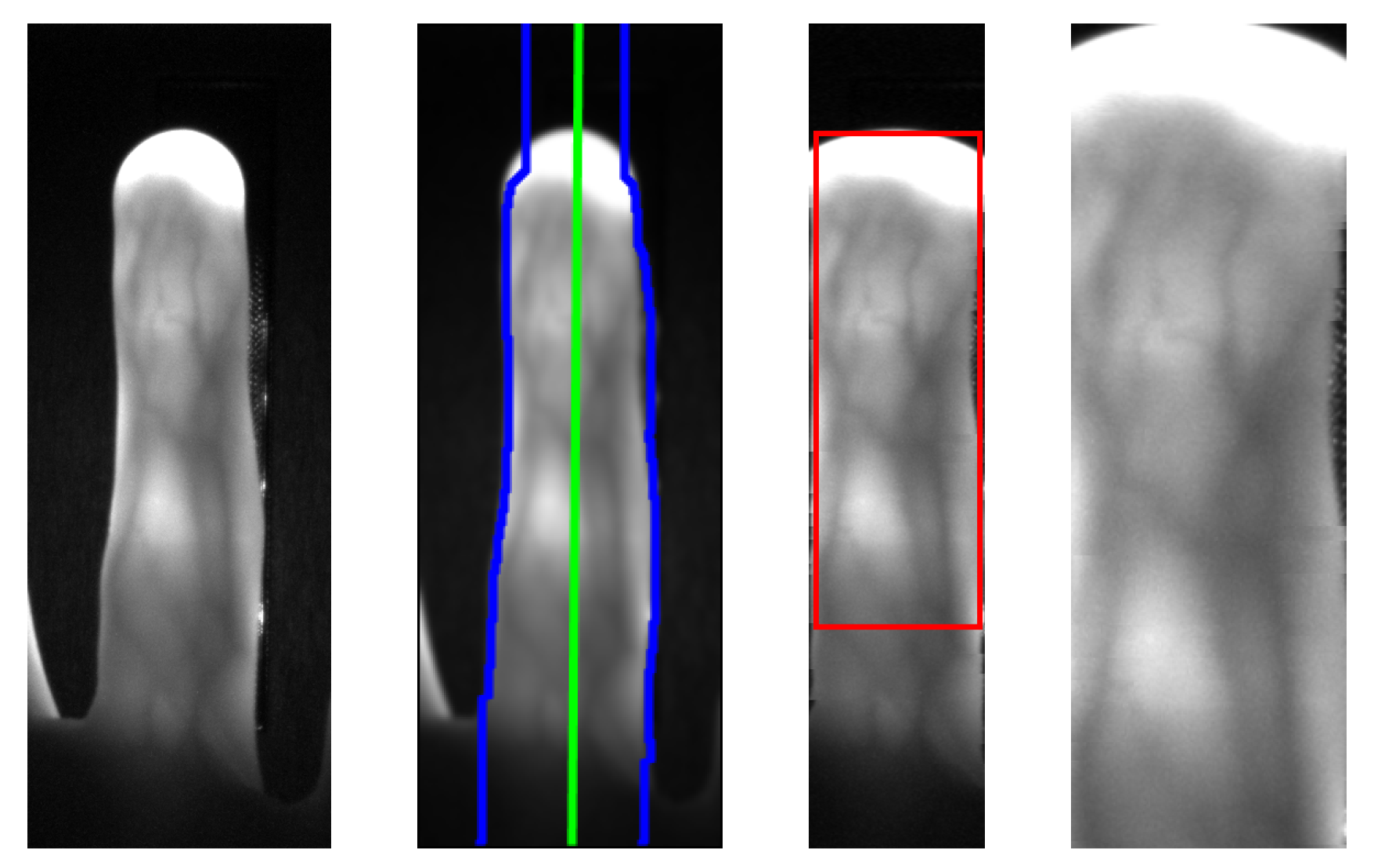

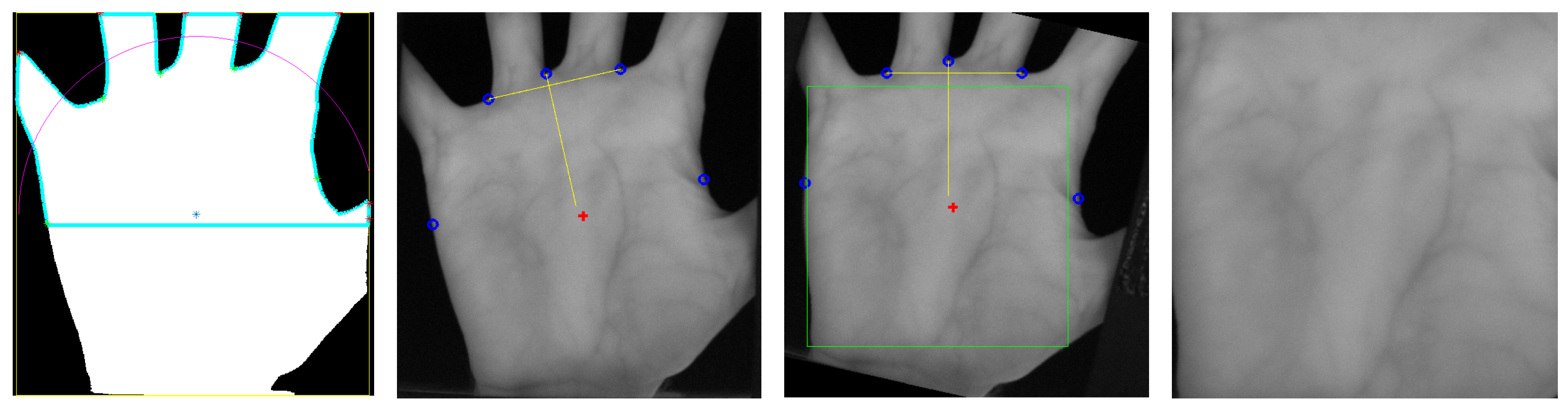

2.3. Finger and Hand Vein Recognition Tool-Chain

- Hand veins 850 nm + hand veins 950 nm

- Hand veins 850 nm + finger veins

- Hand veins 950 nm + finger veins

- Hand veins 850 nm + hand veins 950 nm + finger veins
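The combinations listed above fuse information from several sources. Score-level fusion is one of the fusion levels discussed in ISO/IEC TR 24722 [57]. As a generic illustration only, and not necessarily the fusion scheme behind the reported results, the sketch below applies min-max normalisation followed by the sum rule (averaged over the sources). It assumes that each source provides similarity scores for the same ordered list of comparisons; the source names and scores are hypothetical.

```python
from typing import Dict, List, Sequence


def min_max_normalise(scores: Sequence[float]) -> List[float]:
    """Map scores linearly to [0, 1]; constant inputs map to 0."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0] * len(scores)
    return [(s - lo) / (hi - lo) for s in scores]


def sum_rule_fusion(score_sets: Dict[str, Sequence[float]]) -> List[float]:
    """Sum rule on min-max normalised similarity scores, divided by the number of sources.

    All score lists must refer to the same comparisons in the same order.
    """
    if not score_sets:
        raise ValueError("no score sets given")
    normalised = [min_max_normalise(s) for s in score_sets.values()]
    n = len(normalised[0])
    if any(len(s) != n for s in normalised):
        raise ValueError("all score lists must cover the same comparisons")
    return [sum(vals) / len(normalised) for vals in zip(*normalised)]


if __name__ == "__main__":
    fused = sum_rule_fusion({
        "hand_850": [0.25, 0.75, 0.5],   # hypothetical similarity scores
        "hand_950": [10.0, 20.0, 30.0],  # different score range, same comparisons
    })
    print(fused)
```

Normalising first matters because different feature extraction methods and wavelengths produce scores on different scales; without it, the source with the widest range would dominate the sum.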

2.4. Experimental Setup and Evaluation Protocol

3. Results

3.1. Image Quality Assessment

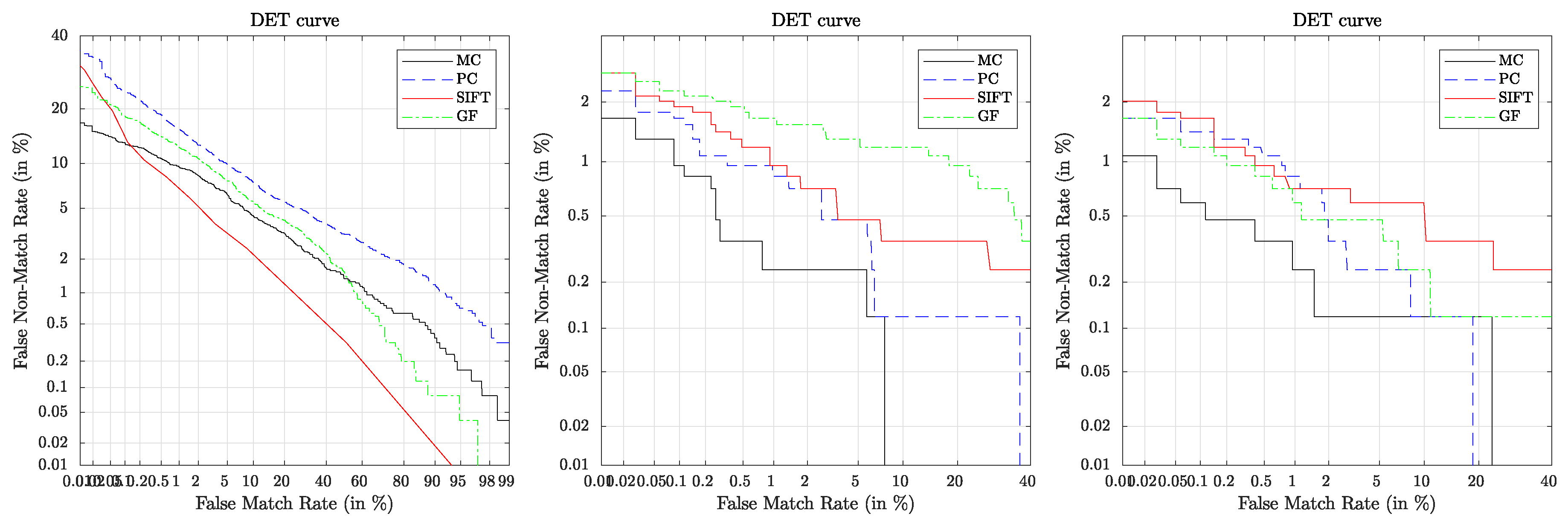

3.2. Recognition Performance

3.3. Biometric Fusion Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ATM | Automated Teller Machine |
| CCD | Charge Coupled Device |
| CGF | Circular Gabor Filter |
| CLAHE | Contrast Limited Adaptive Histogram Equalisation |
| CMOS | Complementary Metal Oxide Semiconductor |
| COTS | Commercial Off The Shelf |
| DET | Detection Error Tradeoff |
| EER | Equal Error Rate |
| FMR | False Match Rate |
| FNMR | False Non Match Rate |
| HFEF | High Frequency Emphasis Filtering |
| LED | Light Emitting Diode |
| NFIQ | NIST Fingerprint Image Quality |
| NIR | Near Infrared |
| NIST | National Institute of Standards and Technology |
| PTFE | Polytetrafluoroethylene |
| PWM | Pulse Width Modulation |
| ROI | Region Of Interest |

References

- Fujitsu Laboratories Ltd. Fujitsu Develops Technology for World’s First Contactless Palm Vein Pattern Biometric Authentication System. Available online: https://www.fujitsu.com/global/about/resources/news/press-releases/2003/0331-05.html (accessed on 6 October 2019).

- Hitachi-Omron Terminal Solutions, Corp. Taiwan’s CTBC Bank Adopts Finger Vein Authentication Solution for ATMs—Hitachi News. Available online: http://www.hitachi-omron-ts.com/news/pdf/201607-001.pdf (accessed on 20 June 2018).

- Hitachi Group, Corp. Finger Vein Technology for Bank BPH (Poland)—Hitachi Europe News. Available online: http://www.hitachi.eu/en-gb/case-studies/finger-vein-technology-bank-bph-poland (accessed on 20 June 2018).

- Kumar, A.; Zhou, Y. Human identification using finger images. IEEE Trans. Image Process. 2012, 21, 2228–2244.

- Mofiria Corp. Mofiria FVA-U3SXE Finger Vein Reader Data Sheet. Available online: https://www.mofiria.com/wp/wp-content/uploads/2017/08/FVA-U3SXE.pdf (accessed on 20 June 2018).

- Mofiria Corp. Mofiria FVA-U4BT Finger Vein Reader Data Sheet (FVA-U4ST Is the Same Device Except for the USB Instead of Bluetooth Connection). Available online: https://www.mofiria.com/wp/wp-content/uploads/2017/08/FVA-U4BT_E.pdf (accessed on 20 June 2018).

- Prommegger, B.; Kauba, C.; Linortner, M.; Uhl, A. Longitudinal Finger Rotation—Deformation Detection and Correction. IEEE Trans. Biom. Behav. Identity Sci. 2019, 1–17.

- Hitachi Group, Corp. Hitachi H-1 Finger-Vein Scanner Product Page. Available online: http://www.hitachi.co.jp/products/it/veinid/global/products/embedded_devices_u.html (accessed on 20 June 2018).

- Sierro, A.; Ferrez, P.; Roduit, P. Contact-less palm/finger vein biometrics. In Proceedings of the 2015 International Conference of the Biometrics Special Interest Group (BIOSIG), Darmstadt, Germany, 9–11 September 2015; pp. 1–12.

- Kim, J.; Kong, H.J.; Park, S.; Noh, S.; Lee, S.R.; Kim, T.; Kim, H.C. Non-contact finger vein acquisition system using NIR laser. Proc. SPIE 2009, 7249, 72490Y-1–72490Y-8.

- Raghavendra, R.; Raja, K.B.; Surbiryala, J.; Busch, C. A low-cost multimodal biometric sensor to capture finger vein and fingerprint. In Proceedings of the 2014 IEEE International Joint Conference on Biometrics (IJCB), Clearwater, FL, USA, 29 September–2 October 2014; pp. 1–7.

- Matsuda, Y.; Miura, N.; Nonomura, Y.; Nagasaka, A.; Miyatake, T. Walkthrough-style multi-finger vein authentication. In Proceedings of the 2017 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 8–10 January 2017; pp. 438–441.

- Hitachi Group, Corp. Making Society Safe and Convenient with High-Precision Walkthrough Finger Vein Authentication. Available online: https://www.hitachi.com/rd/portal/contents/story/fingervein/index.html (accessed on 6 October 2019).

- Zhang, D.; Kong, W.K.; You, J.; Wong, M. Online palmprint identification. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1041–1050.

- Zhang, Y.B.; Li, Q.; You, J.; Bhattacharya, P. Palm vein extraction and matching for personal authentication. In Proceedings of the International Conference on Advances in Visual Information Systems, Shanghai, China, 28–29 June 2007; pp. 154–164.

- Badawi, A.M. Hand Vein Biometric Verification Prototype: A Testing Performance and Patterns Similarity. IPCV 2006, 14, 3–9.

- Distler, M.; Jensen, S.; Myrtue, N.G.; Petitimbert, C.; Nasrollahi, K.; Moeslund, T.B. Low-cost hand vein pattern recognition. In Proceedings of the IEEE International Conference on Signal and Information Processing (CSIP), Shanghai, China, 28 October 2011; pp. 1–4.

- Kabacinski, R.; Kowalski, M. Vein pattern database and benchmark results. Electron. Lett. 2011, 47, 1127–1128.

- Fujitsu Limited. Fujitsu Identity Management and PalmSecure Whitepaper. 2015. Available online: https://www.fujitsu.com/nz/Images/PalmSecure_white_paper-eu-en.pdf (accessed on 6 October 2019).

- Fujitsu Limited. Fujitsu PalmSecure Datasheet. Available online: https://www.fujitsu.com/global/Images/PalmSecure_Datasheet.pdf (accessed on 6 October 2019).

- Chinese Academy of Sciences’ Institute of Automation (CASIA). CASIA Multispectral Palmprint V1.0. Available online: http://biometrics.idealtest.org/dbDetailForUser.do?id=6 (accessed on 6 October 2019).

- Michael, G.K.O.; Connie, T.; Teoh, A.B.J. A contactless biometric system using palm print and palm vein features. In Advanced Biometric Technologies; IntechOpen: London, UK, 2011.

- Zhang, Q.; Zhou, Y.; Wang, D.; Hu, X. Personal authentication using hand vein and knuckle shape point cloud matching. In Proceedings of the 2013 IEEE Sixth International Conference on Biometrics: Theory, Applications and Systems (BTAS), Arlington, VA, USA, 29 September–2 October 2013; pp. 1–6.

- Fletcher, R.R.; Raghavan, V.; Zha, R.; Haverkamp, M.; Hibberd, P.L. Development of mobile-based hand vein biometrics for global health patient identification. In Proceedings of the IEEE Global Humanitarian Technology Conference (GHTC 2014), San Jose, CA, USA, 10–13 October 2014; pp. 541–547.

- Debiasi, L.; Kauba, C.; Prommegger, B.; Uhl, A. Near-Infrared Illumination Add-On for Mobile Hand-Vein Acquisition. In Proceedings of the IEEE 9th International Conference on Biometrics: Theory, Applications, and Systems (BTAS2018), Redondo Beach, CA, USA, 22–25 October 2018; pp. 1–9.

- University of Reading. PROTECT Multimodal DB Dataset. 2017. Available online: http://projectprotect.eu/dataset/ (accessed on 6 October 2019).

- Kauba, C.; Prommegger, B.; Uhl, A. Focussing the Beam—A New Laser Illumination Based dataset Providing Insights to Finger-Vein Recognition. In Proceedings of the IEEE 9th International Conference on Biometrics: Theory, Applications, and Systems (BTAS2018), Los Angeles, CA, USA, 22–25 October 2018; pp. 1–9.

- IDS Imaging Development Systems GmbH. UI-ML3240-NIR NIR-Enhanced Industrial Camera Data Sheet. Available online: https://en.ids-imaging.com/IDS/datasheet_pdf.php?sku=AB00442 (accessed on 6 October 2019).

- Fujifilm Corp. Fujifilm HF9HA-1B Product Page. Available online: http://www.fujifilmusa.com/products/optical_devices/machine-vision/2-3-15/hf9ha-1b/index.html (accessed on 20 June 2018).

- MIDOPT Corp. MIDOPT LP780 NIR Pass-Through Filter Product Page. Available online: http://midopt.com/filters/lp780/ (accessed on 20 June 2018).

- Aliexpress. TO-18 300 mW 808 nm NIR Laser Diode Product Page. Available online: https://www.aliexpress.com/item/5Pcs-lot-High-Quality-808nm-300mW-High-Power-Burning-Infrared-Laser-Diode-Lab/32272128336.html?spm=a2g0s.9042311.0.0.27424c4drx8E2d (accessed on 20 June 2018).

- Aliexpress. Double IC Two Road ACC Circuit Laser Dode Driver Board 650nm 2.8-5v Adjustable Constant Current 0-390mA 780nm 808nm 980nm Laser Product Page. Available online: https://www.aliexpress.com/item/Double-IC-Two-Road-ACC-Circuit-laser-Dode-Driver-Board-650nm-2-8-5v-Adjustable-Constant/32818824875.html?spm=a2g0s.9042311.0.0.27424c4drx8E2d (accessed on 20 June 2018).

- Aliexpress. 10x Focusable 1230 Metal Housing w Lens for TO-18 5.6mm Laser Diode LD Product Page. Available online: https://www.aliexpress.com/item/10x-Focusable-1230-Metal-Housing-w-Lens-for-TO-18-5-6mm-Laser-Diode-LD/32665828682.html?spm=a2g0s.9042311.0.0.27424c4drx8E2d (accessed on 20 June 2018).

- Osram Opto Semiconductors AG. Osram SFH-4550 850 nm High Power Infrared LED Data Sheet. Available online: https://dammedia.osram.info/media/resource/hires/osram-dam-5580407/SFH%204550_EN.pdf (accessed on 20 June 2018).

- Vishay Semiconductors. TSUS540 Series Infrared Emitting Diode, 950 nm, GaAs Data Sheet. Available online: https://www.vishay.com/docs/81056/tsus5400.pdf (accessed on 20 June 2018).

- Arduino LLC. Arduino Nano Manual. Available online: https://www.arduino.cc/en/uploads/Main/ArduinoNanoManual23.pdf (accessed on 20 June 2018).

- Texas Instruments Corporation. Texas Instruments TLC59401 16-Channel LED Driver with Dot Correction and Greyscale PWM Control Data Sheet. Available online: http://www.ti.com/lit/ds/sbvs137/sbvs137.pdf (accessed on 20 June 2018).

- Microchip Corp. Microchip AVR ATmega328P 8-Bit Microcontroller Product Page. Available online: https://www.microchip.com/wwwproducts/en/ATmega328P (accessed on 20 June 2018).

- Microchip Corp. Microchip AVR ATmega328P 8-Bit Microcontroller Full Data Sheet. Available online: http://ww1.microchip.com/downloads/en/DeviceDoc/Atmel-7810-Automotive-Microcontrollers-ATmega328P_Datasheet.pdf (accessed on 20 June 2018).

- ON Semiconductor. BC808 PNP SMD General Purpose Transistor Data Sheet. Available online: http://www.onsemi.com/pub/Collateral/BC808-25LT1-D.PDF (accessed on 20 June 2018).

- Alpha&Omega Semiconductor. AO3418 30V N-Channel MOSFET SMD Data Sheet. Available online: http://aosmd.com/pdfs/datasheet/AO3418.pdf (accessed on 20 June 2018).

- alfer aluminium GmbH. Combitech Coaxis Online Product Catalog. Available online: https://products.alfer.com/out/media/97010.pdf (accessed on 6 October 2019).

- Waveshare. Waveshare 7inch HDMI LCD (C) Wiki Page. Available online: http://www.waveshare.net/wiki/7inch_HDMI_LCD_(C) (accessed on 6 October 2019).

- Lu, Y.; Xie, S.J.; Yoon, S.; Wang, Z.; Park, D.S. An available database for the research of finger vein recognition. In Proceedings of the 2013 6th International Congress on Image and Signal Processing (CISP), Hangzhou, China, 16–18 December 2013; Volume 1, pp. 410–415.

- Zhou, Y.; Kumar, A. Human identification using palm-vein images. IEEE Trans. Inf. Forensics Secur. 2011, 6, 1259–1274.

- Zuiderveld, K. Contrast Limited Adaptive Histogram Equalization. In Graphics Gems IV; Heckbert, P.S., Ed.; Morgan Kaufmann: Burlington, MA, USA, 1994; pp. 474–485.

- Zhao, J.; Tian, H.; Xu, W.; Li, X. A New Approach to Hand Vein Image Enhancement. In Proceedings of the Second International Conference on Intelligent Computation Technology and Automation, ICICTA’09, Zhangjiajie, China, 10–11 October 2009; Volume 1, pp. 499–501.

- Zhang, J.; Yang, J. Finger-vein image enhancement based on combination of gray-level grouping and circular Gabor filter. In Proceedings of the International Conference on Information Engineering and Computer Science, Wuhan, China, 19–20 December 2009; pp. 1–4.

- Miura, N.; Nagasaka, A.; Miyatake, T. Extraction of finger-vein patterns using maximum curvature points in image profiles. IEICE Trans. Inf. Syst. 2007, 90, 1185–1194.

- Choi, J.H.; Song, W.; Kim, T.; Lee, S.R.; Kim, H.C. Finger vein extraction using gradient normalization and principal curvature. Proc. SPIE 2009, 7251, 9.

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision (ICCV’99), Kerkyra, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157.

- Kauba, C.; Reissig, J.; Uhl, A. Pre-processing cascades and fusion in finger vein recognition. In Proceedings of the International Conference of the Biometrics Special Interest Group (BIOSIG’14), Darmstadt, Germany, 10–12 September 2014; pp. 1–6.

- Tabassi, E.; Wilson, C.; Watson, C. NIST Fingerprint Image Quality. NIST Res. Rep. NISTIR7151 2004, 5. Available online: https://www.nist.gov/sites/default/files/documents/2016/12/12/tabassi-image-quality.pdf (accessed on 16 November 2019).

- Matkovic, K.; Neumann, L.; Neumann, A.; Psik, T.; Purgathofer, W. Global Contrast Factor - a New Approach to Image Contrast. Comput. Aesthet. 2005, 2005, 159–168.

- Wang, C.; Zeng, X.; Sun, X.; Dong, W.; Zhu, Z. Quality assessment on near infrared palm vein image. In Proceedings of the 2017 32nd Youth Academic Annual Conference of Chinese Association of Automation (YAC), Hefei, China, 19–21 May 2017; pp. 1127–1130.

- Ma, H.; Cui, F.P.; Oluwatoyin, P. A Non-Contact Finger Vein Image Quality Assessment Method. Appl. Mech. Mater. 2013, 239, 986–989.

- ISO/IEC JTC 1/SC 37. Information Technology – Biometrics – Multimodal and Other Multibiometric Fusion. ISO/IEC TR 24722:2015, 2015. Available online: https://www.iso.org/standard/64061.html (accessed on 16 November 2019).

- Brümmer, N.; de Villiers, E. The BOSARIS toolkit. arXiv 2013, arXiv:1304.2865.

- Maio, D.; Maltoni, D.; Cappelli, R.; Wayman, J.L.; Jain, A.K. FVC2004: Third Fingerprint Verification Competition; Springer: Berlin/Heidelberg, Germany, 2004; Volume 3072, pp. 1–7.

- Yin, Y.; Liu, L.; Sun, X. SDUMLA-HMT: A multimodal biometric database. In Biometric Recognition; Springer: Berlin/Heidelberg, Germany, 2011; pp. 260–268.

- Ton, B.; Veldhuis, R. A high quality finger vascular pattern dataset collected using a custom designed capturing device. In Proceedings of the International Conference on Biometrics, ICB 2013, Madrid, Spain, 4–7 June 2013; pp. 1–5.

- Asaari, M.S.M.; Suandi, S.A.; Rosdi, B.A. Fusion of band limited phase only correlation and width centroid contour distance for finger based biometrics. Expert Syst. Appl. 2014, 41, 3367–3382.

- Bogazici University. Bosphorus Hand Database. Available online: http://bosphorus.ee.boun.edu.tr/hand/Home.aspx (accessed on 6 October 2019).

- Faundez-Zanuy, M.; Mekyska, J.; Font-Aragonès, X. A new hand image database simultaneously acquired in visible, near-infrared and thermal spectrums. Cogn. Comput. 2014, 6, 230–240.

- Tome, P.; Marcel, S. On the Vulnerability of Palm Vein Recognition to Spoofing Attacks. In Proceedings of the 8th IAPR International Conference on Biometrics (ICB), New Delhi, India, 29 March–1 April 2015; pp. 319–325.

- Kauba, C.; Uhl, A. Shedding Light on the Veins—Reflected Light or Transillumination in Hand-Vein Recognition. In Proceedings of the 11th IAPR/IEEE International Conference on Biometrics (ICB’18), Gold Coast, Australia, 20–23 February 2018; pp. 1–8.

- Li, G.; Yang, B.; Busch, C. Autocorrelation and DCT based quality metrics for fingerprint samples generated by smartphones. In Proceedings of the 2013 18th International Conference on Digital Signal Processing (DSP), Fira, Greece, 1–3 July 2013; pp. 1–5.

- Yang, B.; Li, G.; Busch, C. Qualifying fingerprint samples captured by smartphone cameras. In Proceedings of the 2013 IEEE International Conference on Image Processing, Melbourne, Australia, 15–18 September 2013; pp. 4161–4165.

- Hämmerle-Uhl, J.; Pober, M.; Uhl, A. Systematic evaluation methodology for fingerprint-image quality assessment techniques. In Proceedings of the 2014 37th International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 26–30 May 2014; pp. 1315–1319.

- Hämmerle-Uhl, J.; Pober, M.; Uhl, A. General purpose bivariate quality-metrics for fingerprint-image assessment revisited. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 4957–4961.

- Prommegger, B.; Kauba, C.; Uhl, A. On the Extent of Longitudinal Finger Rotation in Publicly Available Finger Vein datasets. In Proceedings of the 12th IAPR/IEEE International Conference on Biometrics (ICB’19), Crete, Greece, 4–7 June 2019; pp. 1–8.

- Huang, B.; Dai, Y.; Li, R.; Tang, D.; Li, W. Finger-vein authentication based on wide line detector and pattern normalization. In Proceedings of the 2010 20th International Conference on Pattern Recognition (ICPR), Istanbul, Turkey, 23–26 August 2010; pp. 1269–1272.

- Yang, W.; Yu, X.; Liao, Q. Personal authentication using finger vein pattern and finger-dorsa texture fusion. In Proceedings of the 17th ACM international conference on Multimedia, Beijing, China, 19–24 October 2009; pp. 905–908.

- Ong, T.S.; Teng, J.H.; Muthu, K.S.; Teoh, A.B.J. Multi-instance finger vein recognition using minutiae matching. In Proceedings of the 2013 6th International Congress on Image and Signal Processing (CISP), Hangzhou, China, 16–18 December 2013; Volume 3, pp. 1730–1735.

- Zhang, C.; Li, X.; Liu, Z.; Zhao, Q.; Xu, H.; Su, F. The CFVD reflection-type finger-vein image database with evaluation baseline. In Biometric Recognition; Springer: Berlin/Heidelberg, Germany, 2013; pp. 282–287.

- Tome, P.; Vanoni, M.; Marcel, S. On the Vulnerability of Finger Vein Recognition to Spoofing. In Proceedings of the IEEE International Conference of the Biometrics Special Interest Group (BIOSIG), Darmstadt, Germany, 10–12 September 2014; pp. 1–10.

- Vanoni, M.; Tome, P.; El Shafey, L.; Marcel, S. Cross-database evaluation using an open finger vein sensor. In Proceedings of the 2014 IEEE Workshop on Biometric Measurements and Systems for Security and Medical Applications (BIOMS) Proceedings, Rome, Italy, 17 October 2014; pp. 30–35.

- Shahin, M.; Badawi, A.; Kamel, M. Biometric authentication using fast correlation of near infrared hand vein patterns. Int. J. Biol. Med. Sci. 2007, 2, 141–148.

- Yuksel, A.; Akarun, L.; Sankur, B. Hand vein biometry based on geometry and appearance methods. IET Comput. Vis. 2011, 5, 398–406.

- Mirmohamadsadeghi, L.; Drygajlo, A. Palm vein recognition with local binary patterns and local derivative patterns. In Proceedings of the 2011 International Joint Conference on Biometrics (IJCB), Washington, DC, USA, 11–13 October 2011; pp. 1–6.

- Mirmohamadsadeghi, L.; Drygajlo, A. Palm vein recognition with local texture patterns. IET Biom. 2014, 3, 198–206.

| Modality | Dataset | GCF | Wang17 | HSNR |
|---|---|---|---|---|
| Finger Vein | Finger Vein | 1.72 | 0.256 | 92.16 |
| | SDUMLA-HMT [60] | 0.986 | 0.165 | 80.32 |
| | HKPU-FID [4] | 1.46 | 0.166 | 88.13 |
| | UTFVP [61] | 1.47 | 0.356 | 87.15 |
| | MMCBNU_6000 [44] | 1.52 | 0.121 | 87.39 |
| | FV-USM [62] | 0.69 | 0.136 | 83.35 |
| | PLUSVein-FV3 [27] | 1.48 | 0.306 | 89.78 |
| Hand Vein | Hand Vein 850 nm | 1.42 | 0.682 | 90.43 |
| | Hand Vein 950 nm | 1.87 | 0.656 | 91.76 |
| | Bosphorus Hand Vein [63] | 2.69 | 0.329 | 86.12 |
| | Tecnocampus Hand Image [64] | 2.31 | 0.373 | 54.33 |
| | Vera Palm Vein [65] | 1.31 | 0.43 | 85.09 |
| | PROTECT HandVein [66] | 2.8 | 0.563 | 82.43 |

| Modality | Metric | MC | PC | GF | SIFT |
|---|---|---|---|---|---|
| Finger Vein | EER [%] | 5.61 | 8.22 | 6.63 | 3.66 |
| | FMR1000 [%] | 13.12 | 23.99 | 18.39 | 16.61 |
| | ZeroFMR [%] | 18.75 | 42.19 | 28.76 | 36.11 |
| Hand Vein 850 nm | EER [%] | 0.35 | 0.95 | 1.55 | 0.95 |
| | FMR1000 [%] | 0.95 | 1.67 | 2.26 | 1.9 |
| | ZeroFMR [%] | 1.67 | 2.26 | 2.74 | 2.74 |
| Hand Vein 950 nm | EER [%] | 0.38 | 0.83 | 0.72 | 0.82 |
| | FMR1000 [%] | 0.59 | 1.43 | 1.19 | 1.67 |
| | ZeroFMR [%] | 1.07 | 1.67 | 1.67 | 2.02 |
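The recognition performance table reports the EER together with FMR1000 and ZeroFMR. Following the usual definitions, e.g., those used in the FVC2004 competition [59], FMR1000 is the lowest FNMR at which the FMR is at most 0.1%, and ZeroFMR is the lowest FNMR at which no impostor comparison is accepted. The sketch below computes these figures from lists of genuine and impostor similarity scores, assuming that higher scores mean greater similarity and that a comparison is accepted when its score is at least the threshold. The EER is taken at the threshold where FMR and FNMR are closest, which is a simple approximation; evaluation tools may interpolate differently. The score values are hypothetical.

```python
from typing import Dict, Sequence, Tuple


def rates_at(genuine: Sequence[float], impostor: Sequence[float],
             threshold: float) -> Tuple[float, float]:
    """Return (FMR, FNMR) when scores >= threshold are accepted as matches."""
    fmr = sum(s >= threshold for s in impostor) / len(impostor)
    fnmr = sum(s < threshold for s in genuine) / len(genuine)
    return fmr, fnmr


def performance_figures(genuine: Sequence[float],
                        impostor: Sequence[float]) -> Dict[str, float]:
    """Approximate EER, FMR1000 and ZeroFMR as fractions (multiply by 100 for %)."""
    # Every observed score is a candidate threshold; +inf rejects everything,
    # so a point with FMR == 0 always exists.
    thresholds = sorted(set(genuine) | set(impostor)) + [float("inf")]
    points = [rates_at(genuine, impostor, t) for t in thresholds]
    fmr_eer, fnmr_eer = min(points, key=lambda p: abs(p[0] - p[1]))
    return {
        "EER": (fmr_eer + fnmr_eer) / 2,
        "FMR1000": min(fnmr for fmr, fnmr in points if fmr <= 0.001),
        "ZeroFMR": min(fnmr for fmr, fnmr in points if fmr == 0.0),
    }


if __name__ == "__main__":
    genuine = [0.9, 0.8, 0.7, 0.4]   # hypothetical mated comparison scores
    impostor = [0.1, 0.2, 0.3, 0.5]  # hypothetical non-mated comparison scores
    print(performance_figures(genuine, impostor))
```

With only a handful of impostor scores, FMR can only take coarse values, so FMR1000 and ZeroFMR coincide in this toy example; they only separate once the number of impostor comparisons is well above 1000.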

| No. | Combination | Metric | MC | RPI | PC | RPI | GF | RPI | SIFT | RPI |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Hand 850 + Hand 950 | EER [%] | 0.24 | 44% | 0.16 | 405% | 0.60 | 19% | 0.37 | 123% |
| | | FMR1000 [%] | 0.36 | 162% | 0.21 | 586% | 0.77 | 54% | 0.65 | 155% |
| | | ZeroFMR [%] | 0.70 | 139% | 4.90 | −66% | 0.92 | 82% | 1.49 | 35% |
| 2 | Hand 850 + Middle Finger | EER [%] | 0.03 | 1183% | 0.57 | 65% | 0.64 | 144% | 0.48 | 98% |
| | | FMR1000 [%] | 0.01 | 7862% | 0.97 | 71% | 1.19 | 90% | 0.52 | 268% |
| | | ZeroFMR [%] | 0.14 | 1112% | 1.32 | 72% | 1.70 | 61% | 0.79 | 246% |
| 3 | Hand 950 + Middle Finger | EER [%] | 0.14 | 171% | 0.37 | 122% | 0.48 | 50% | 0.26 | 218% |
| | | FMR1000 [%] | 0.14 | 333% | 0.71 | 102% | 0.74 | 61% | 0.37 | 352% |
| | | ZeroFMR [%] | 0.28 | 289% | 1.28 | 30% | 1.11 | 51% | 2.35 | −14% |
| 4 | Hand 850 + Hand 950 + Middle Finger | EER [%] | 0.04 | 849% | 0.22 | 272% | 0.57 | 26% | 0.20 | 311% |
| | | FMR1000 [%] | 0.00 | - | 0.36 | 298% | 0.62 | 91% | 0.30 | 449% |
| | | ZeroFMR [%] | 0.17 | 861% | 11.47 | −85% | 0.74 | 127% | 2.08 | −3% |
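The RPI columns of the fusion results table are not defined in this part of the text. The tabulated values appear consistent, up to rounding of the reported rates, with the relative improvement of the fused error rate over the best single-source error rate, RPI = (best single − fused) / fused × 100%. For example, for PC and ZeroFMR in combination 1, the single-source values 2.26% and 1.67% and the fused value 4.90% give about −66%, as reported. This reading is an inference from the table, not a definition taken from the paper; the small sketch below simply encodes it.

```python
from typing import Optional, Sequence


def relative_performance_improvement(single_rates: Sequence[float],
                                     fused_rate: float) -> Optional[float]:
    """Inferred RPI in percent: (best single-source rate - fused rate) / fused rate.

    Error rates are "lower is better", so the best single source is the minimum.
    Returns None when the fused rate is zero (shown as "-" in the table).
    Negative values mean the fusion performed worse than the best single source.
    """
    if fused_rate == 0:
        return None
    best_single = min(single_rates)
    return (best_single - fused_rate) / fused_rate * 100.0


if __name__ == "__main__":
    # Combination 1, PC, ZeroFMR [%]: single sources 2.26 and 1.67, fused 4.90
    print(round(relative_performance_improvement([2.26, 1.67], 4.90)))
```

Dividing by the fused rate rather than the single-source rate explains why the RPI values can exceed 100% and why a fused rate of 0.00 produces no value.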

| Name and Reference | Images/Subjects | Contactless | Feature Type | Performance (EER) | Year |
|---|---|---|---|---|---|
| PKU [72] | 50,700/5208 | no | WLD [72] | 0.87% | 2008 |
| THU-FVFDT [73] | 6540/610 | no | MLD [73] | 98.3% ident. rate | 2009 |
| SDUMLA-HMT [60] | 3816/106 | no | Minutia [74] | 98.5% recogn. rate | 2010 |
| HKPU-FID [4] | 6264/156 | no | Gabor Filter [4] | 0.43% (veins only) | 2011 |
| UTFVP [61] | 1440/60 | no | MC [49] | 0.4% | 2013 |
| MMCBNU_6000 [44] | 6000/100 | no | - | - | 2013 |
| CFVD [75] | 1345/13 | - | - | - | 2013 |
| FV-USM [62] | 5940/123 | no | POC and CD [62] | 3.05% | 2013 |
| VERA FV-Spoof [76,77] | 440/110 | no | MC [49] | 6.2% | 2014 |
| PMMDB-FV [26] | 240/20 | no | MC [49] | 9.75% | 2017 |
| PLUSVein-FV3 [27] | 3600/60 | no | MC [49] | 0.06% | 2018 |
| Contactless FingerVein | 840/42 | yes | SIFT [51] | 3.66% | 2019 |

| Name and Reference | Images/Subjects | Contactless | Feature Type | Performance (EER) | Year |
|---|---|---|---|---|---|
| CIE [18] | 2400/50 | no | Thresholding [78] | 1.1% | 2011 |
| Bosphorus Hand Vein [63] | 1575/100 | no | Geometry [79] | 2.25% | 2011 |
| CASIA Multispectral [21] | 7200/100 | no | LBP/LDP [80] | 0.09% | 2011 |
| Tecnocampus Hand Image [64] | 6000/100 | no | BDM [64] | 98% recogn. rate | 2013 |
| Vera Palm Vein [65] | 2200/110 | no | LBP [81] | 3.75% | 2015 |
| PROTECT HandVein [66] | 2400/40 | no | SIFT [51] | 0.093% | 2018 |
| PROTECT Mobile HandVein [25] | 920/31 | yes | MC [49] | 4.13% | 2018 |
| Contactless HandVein | 420/42 | yes | MC [49] | 0.35% | 2019 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kauba, C.; Prommegger, B.; Uhl, A. Combined Fully Contactless Finger and Hand Vein Capturing Device with a Corresponding Dataset. Sensors 2019, 19, 5014. https://doi.org/10.3390/s19225014
