Abstract

Photometric moments are global descriptors of an image that can be used to recover motion information. This paper uses spherical photometric moments for a closed form estimation of 3D rotations from images. Since the used descriptors are global and not of the geometrical kind, they allow to avoid image processing as features extraction, matching, and tracking. The proposed scheme based on spherical projection can be used for the different vision sensors obeying the central unified model: conventional, fisheye, and catadioptric. Experimental results using both synthetic data and real images in different scenarios are provided to show the efficiency of the proposed method.

1. Introduction

Rotation estimation from images is important for many application as for motion estimation and registration in image processing [1], pattern recognition [2], localization and control of ground/aerial vehicles [3], and computer vision [4]. Feature based and direct (appearance based) are the two main approach categories proposed in the literature. The first method type uses Epipolar geometry applied to geometrical features as as points [5], lines [6], or contours [7] to estimate the fundamental matrix (uncalibrated vision sensors), the essential matrix, or homography matrix for the calibrated case. Examples of such methods using conventional cameras are Agrawal et al. [8] and Malis et al. [9] and using omnidirectional vision sensors is [10,11,12]. The direct approaches, on the other hand, avoid geometric features extraction by using grey level information of the whole image to define the descriptors to be used. Those methods are referred to in the literature as dense, direct, or global. Methods within this category can avoid the need of some higher-level image processing steps such as feature extraction, matching, and tracking. For instance, Reference [13] exploits optical flow estimation. However, a consequence of using optical flow in References [13,14] is to be limited to small camera displacements. To deal with larger motion, in Reference [4], Makadia and Daniilidis proposed a method using correlations between the two sets of spherical harmonic coefficients.

To address even larger camera displacements, motion estimation techniques based on global image representation is utilized in the literature. Makadia and Daniilidis [4] represented the spherical omnidirectional images in the frequency domain to recover rotation using the maximum correlation between the two sets of spherical harmonic coefficients. In Reference [15], the authors proposed to estimate the rotation based on 3D-mesh representation of spherical image intensities. However, these methods are not often computationally efficient due to the computation load of spherical harmonic coefficients.

The moments as descriptors provide useful characteristics such as the center of gravity of an object, orientation, disparity, and volume. As Fourier descriptors, they can be used as a compact representation of the image. In the case of 2D images, in addition to the classical geometrical moments, other kinds of moments have been defined in the literature as Zernike Moments [16] or complex moments [17]. Invariant characteristics to some transformations that an object can undergo, commonly called moment invariants, found many applications such as for pattern recognition. Thanks to their decoupling properties, they also have been used to define a minimal number of features for visual servoing and pose estimation as in References [18,19] and for visual servoing as in References [20,21].

Spherical projections can be obtained from images if the used camera obeys the unified central model. By using such projections, the authors in Reference [22] proposed a decoupled visual servoing scheme using spherical moments computed for a set of matched points. The proposed features allow to control the translational motions independently from the rotational ones. The current work exploits the spherical projection to estimate 3D rotations using spherical moments. The proposed scheme provides an analytical solution to rotation. An early version of this work was published in Reference [23]. In the next section, the central camera model and the definition of spherical moments are first recalled. The proposed approach is subsequently presented. In Section 3, experimental results of rotation estimation using synthetic data and real images acquired from a catadioptric camera mounted on a manipulator robot (Staubli) and a mobile robot (Pioneer) are presented to validate our approach.

2. Direct Estimation of Rotation Using Spherical Moments

2.1. Spherical Moments

The projection onto a unitary sphere t of a 3D point is defined by the following:

Actually, the projection onto the unit sphere can be obtained using the unified central model for conventional, fisheye, or catadioptric [24]. This makes the proposed scheme in this work valid for a wide range of cameras.

From a spherical image (or Gaussian sphere [25]) of the environment, the photometric moments can be defined by the following:

where S is defined as the sphere surface. Let be a rotation matrix and be the instantaneous rotational speed applied to the sensor frame. First, it can be easily shown that a rotational motion defined by a matrix on 3D point (, where is the same 3D point after rotation) induces the same motion on its projection onto the unit sphere (). In tangent space, the effect of a rotation speed on a projected point on the sphere is given by its time derivative :

where is the interaction matrix and defines the skew-symmetric matrix of a vector. On the other side, by taking the time derivative of Equation (2), the time variation of the moment is linked to rotational speed by the following [26]:

In the following, a scheme is proposed to define triples that behave as a projected point onto the sphere with respect to rotations.

2.2. Closed-Form Solution of Rotation Estimation

From moments of order 1 and by using Equation (4), the interaction matrix that links time derivatives of the triple to the rotational velocities is obtained by the following:

As it can be seen, the interaction matrix given by Equation (5) has an identical form to the one obtained for a projected point onto the sphere. This implies a 3D rotation results in the same motion on . Let us now consider triples defined from moments of orders larger than 1. In order to have , the interaction matrix related to must have the same form as Equation (3), which means the following:

Under the assumption of constant speed (i.e., is a constant matrix), solving for Equation (6) leads to the following:

where and are respectively the initial value and at the value time t of the triple (which defines the relation for a couple of images). Any rotational motion between two images can be obtained by applying a constant speed during time t. This means Equation (6) implies for any couple of images.

Let us now explain the procedure to obtain those triples using as an example: the moment vector . The latter is composed of all the possible products between moments of order 2 and those of order 3. There are 6 possible moments of order equal to 2 and 10 of order equal to 3. This implies that is of size equal to 60. Let us define triples , where , , and are vectors of coefficients that define the triple coordinates as linear combinations of the entries of . Our goal is to find the conditions on those vectors of coefficients such that the time derivative is of the same form as Equation (6).

As an example, let us consider the time derivative of the moment product , which is a component of . Using Equation (4), we have the following:

As it can be also concluded from Equation (4), the time variation of a moment is a function of moments of the same order and the rotational speed. From Equation (8), we obtain the time derivative of the product by the following:

Note that the coefficients of , , and are nothing but linear combinations of products between moments of orders 2 and 3. Actually, the time derivative of any component of the vector will be a linear combination of the other components. Based on this fact, can be written under the following form:

where , and are matrices. Using Equation (10), the time derivative of the triple is obtained:

The 9 constraints given by Equation (12) must be valid for any value of . Therefore, they can be simplified as follows:

The constraint set of Equation (13) can be written under the following form:

where and are respectively the identity and zero matrices of size . In practice, , and are very sparse integer matrices. Solving Equation (14) for the case of the moment vector leads to three solutions for the triple (refer to Equations (A1)–(A3) in the Appendix A). Such conditions can be solved using a symbolic computation software as Matlab Symbolic Toolbox.

The procedure used to obtain triples from can be extended straightforwardly to a different moment vector as follows:

- Define the moment vector similarly as for . The vector can be built by moment products of two different orders or more. For instance, products such as which combine moments of order 2, 3, and 4 can be also used. the vector has to be built from moment products of the same nature and has to also include all of them.

- Using Equation (4), compute the matrices , , and such that we obtain the following:

- Solve the following system:where and are respectively the identity and zero matrices of the same size as , and .

In practice, two different triples are enough to obtain a closed-form solution of the rotational motion since they can define an orthonormal basis. Let us consider, for instance, the two triples and computed from the moments of a first image using Equations (A1) and (A2) and and , their corresponding ones, computed from the image after rotation. Therefore, we have and , where is the rotation matrix. Let us define the following base:

where and . First, it can be shown that the vectors , and form an orthonormal base (i.e., and ). Second, let us now consider a second base , and computed from and using Equation (17). Since and , it can be proven using Equation (17) that , and . The last equations can be written under the following form:

where and . Finally, we obtain:

where the rotation matrix can be estimated straightforwardly. Solving Equation (16) ensures that all possible triples are obtained from a given moment vector. Obtaining a mathematical proof on the existence and their number is out of the the scope of this contribution.

2.3. Rotation Estimation and Scene Symmetry

As explained in the previous paragraph, an orthonormal base can be built from the triples and to estimate the rotation matrix. In practice, if the scene has no symmetry, extensive tests (not included in the paper for brevity) show that using the triples and could be enough. If the scene has a symmetry with respect to a plane (for instance plane), the z-coordinates of the triples are null. This means that all will belong to the plane. If the scene is symmetrical with respect to and planes, for instance, then the triples will form a line in the direction of the x-axis.

3. Validation Results

In the following section, simulation results and real experiments are shown. For all the validation results, the spherical moments are computed directly using the pixel’s grey levels without warping the images to the sphere space. The used formulas are given in References [26,27] for the perspective and fisheye cases respectively:

where is the distortion parameter and are the coordinates of a point in the metric image. The formulas of , , and as functions of can be found in Reference [28]. Using Matlab with a computer equipped with a processor Intel(R) Core(TM) i5-7600 CPU @ 3.50 GHz 3.50 GHz and 16,0 Go RAM, the whole process including the computation of spherical moments of an image of size pixels until the rotation estimation takes around 20 ms. This leaves room for reducing the time cost using other programming tools than Matlab to few milliseconds.

3.1. Simulation Results

The objective of this part is threefold:

- To show the validity of the proposed method to cameras obeying unified model, two different kinds of camera model are used to compute the images. The first corresponds to a simulated fisheye camera with the parameters chosen as focal scaling factors pixels, coordinates of the principal point and pixels, and distortion parameter . The second model corresponds a conventional camera with focal scaling factors pixels and coordinates of the principal point and ;

- To Validate our approach for large rotational motion;

- To test the effect of translational motion on the accuracy of the estimated rotations.

The Matlab code used for those simulations results can be downloaded online (http://aramis.iup.univ-evry.fr:8080/~hadj-abdelkader/Sensors/Sim_ForSensors.zip).

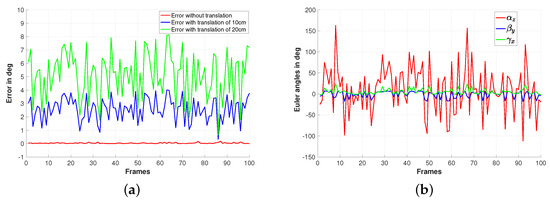

In the first results, a fisheye camera model is used to generate the images. More precisely, from the reference image shown on Figure 1a, three sets of 100 new images are computed based on randomly generated displacements. An example of the used images is shown on Figure 1b. All the three image sets were obtained with the same random rotations shown on Figure 2b but with translational motion of norms equal to 0 for the first set and 10 cm and 20 cm for the two others. For the two last sets, the same random translation directions are used but with different norms equal to 10 cm and 20 cm, respectively. To assess the estimated rotations, the norm of the vector composed by the errors on those Euler angles is used. The obtained accuracy results are shown on Figure 2a. It can be seen that, for the case where the camera displacement does not include translational motion, the rotation motion is well estimated (refer to the red plot of the Figure). The results obtained in the case where the camera displacement involves translational motion of norm 10 cm and 20 cm are shown respectively by the blue and the green plots of the Figure 2a. From the obtained plots, it can be seen that a maximum error of (respectively ) and an average error less then (respectively ) are obtained in the case of translation norm equal to 10 cm (respectively 20 cm).

Figure 1.

Results using a fisheye camera model: (a) the reference image and (b) example of rotated images.

Figure 2.

Simulation results using a fisheye camera model: (a) Estimation error of the rotation and (b) rotation ground truth expressed by Euler angles.

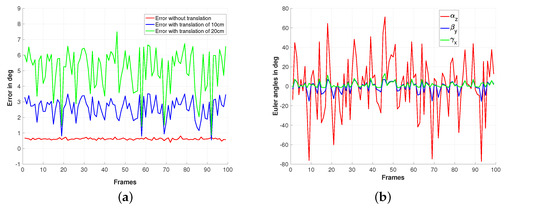

The second simulation results are based on the same previous setup, but this time, it is a conventional perspective camera that is used to generate the images. As for the first simulations, we consider three sets of 100 images corresponding to transnational motions of different norms (0 cm, 10 cm, and 20 cm). Figure 3a,b shows the reference image and an example of the used rotated image for which the rotation has to be estimated. The ground truth of the rotational motions is shown on Figure 4b, while the accuracy of the rotation estimation is depicted on Figure 4a. From this Figure, it can be seen that the rotations are well estimated in the case where pure rotational motions are considered (refer to the red plot). It can also be noticed from the same figure (the blue and the green plots) that maximum rotation errors around and are observed respectively for the cases where the camera displacement involves a translational motion of norms equal to 10 cm and 20 cm, respectively.

Figure 3.

Results using conventional camera model: (a) the reference image and (b) example of the rotated images.

Figure 4.

Simulation results using a perspective camera model: (a) Estimation error of the rotation and (b) rotation ground truth expressed.

3.2. Real Experiments

The following experimental results use the OVMIS (Omnidirectional Vision Dataset of the MIS laboratory) dataset (http://mis.u-picardie.fr/~g-caron/pub/data/OVMIS_dataset.zip). Based on the same database, the preliminary work [23] has provided several experimental results where the proposed method is compared to the SPHARM (SPherical HARmonic analyses) method [15] and to the phase correlation (COR) and photometric (PHO) methods [29]. In the following, the proposed method is validated on a larger set of scenarios from the dataset. Moreover, we compare the performance of the proposed method using the spherical moments computed from the image intensities and from its gradient. We also test the effect of introducing the weighting function on the spherical moment computation of image acquired by catadioptric camera. The used weighting is a function of the pixel coordinates of the following form:

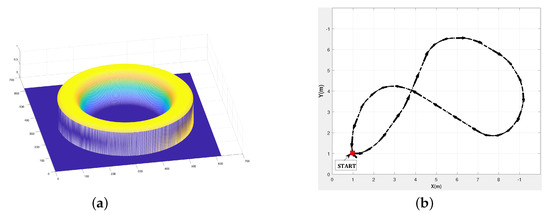

where is the distance of the pixel to the principal point and is a scalar that tunes the wide of the image zone to be used. Note that has its maximum value 1 for . Figure 5a shows the shape of the used weight function for this validation results. As can be seen from weighting function shape, the points close to the image border or to the blind zone in the mage center are given small weights to decrease the effect of appearance/disappearance of image parts. In all these experimental parts, the rotation motion is obtained by accumulating the estimated rotations between each two consecutive images.

Figure 5.

(a)Weight function and (b) Scenario 3: Eight trajectories of the pioneer robot.

The first validation result shown in the video (Supplementary Materials) is based on Dataset 1 of OVMIS with the Staubli robot arm. The considered sequence composed of 144 images has been acquired by applying regular pure rotations around the optical axis by a catadioptric camera mounted on the end effector of a Staubli in an indoor environment which corresponds to a rotation of between two consecutive images. First, the proposed method is tested by considering all the image sequences. Then, one image from 10 and from 20 are considered, which corresponds to rotations of and , respectively, between tow consecutive images. As it can be seen from the video that the obtained results show that the proposed method estimates accurately the rotations in the three considered cases.

The second validation result shown as well in the video (Supplementary Materials) is based on the Scenario 1 of the Pioneer database acquired using a catadioptric camera. A similar setup to the previous case is used: a pure rotational motion around the z-axis in an indoor environment. The proposed method is tested by considering all the image sequences, one image from 10 and one from 20. The obtained results show again that the proposed method estimates accurately the rotations in the three considered cases.

The last experiment tests the proposed method on the more challenging Scenario 3 of the OVMIS dataset of a pioneer robot moving according to the trajectory described in Figure 5b. The achieved path is of length 25.27 m, in the form of 8 motions that include both rotational and translation motions. It has been achieved by the mobile robot in 160 s, which corresponds to 0.187 m/s average speed. The ground truth is obtained by incorporating gyroscopic corrections into the odometry data based on wheel encoders. From the video (Supplementary Materials), it can be seen that the mobile robot has traversed uneven terrain, with changing illumination conditions due to moving cast shadows of the trees and with appearance/disappearance of some image parts.

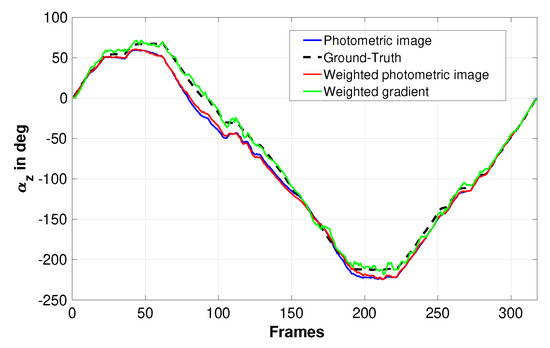

Figure 6 compares the estimated rotation with/without using weighting function and based on moments computed from the image grey level or its gradient. From the obtained plots, it can be seen that using weighting function improves slightly the results obtained using the moment computed from the image intensities (compare the blue curve to the red one). On the other hand, it can be seen that the most accurate rotation estimation is obtained using the image gradient spherical moments and weighting function (refer to the green plot). The last experiments of the video (Supplementary Materials) describes the obtained results using the image gradient spherical moments by considering all the image sequences, one image from 10 and one from 20. From the obtained results, it can be seen that the rotation estimation is satisfactory for camera motion involving transnational and rotational motions.

Figure 6.

Estimated rotation of the pioneer robot around the Z-axis (Scenario 3).

3.3. Discussion

The triples computed from moment vectors provide a closed-form estimation to rotational motion. Compared to the classical method based on the alignment of principal axis, the proposed solution has no ambiguities on rotation formula. Recall that the alignment of principal axis method usually uses moments of higher orders to remove the ambiguities. If the estimated rotation is used as a feature in visual servoing or in active vision, the closed-form formula has a second advantage. For instance, as it has been proposed in References [30,31], the rotation vector is computed from the rotation matrix and used to control the rotational speed of a camera. For accurate visual servoing and active vision, most strategies are based on the analytical form of the visual features dynamics. Independently from the used method to estimate the rotation matrix, the relation between the time variation of a rotation vector and the rotational speed keeps the same form. On the other hand, its dynamic with respect to the translational speed becomes complex to obtain without a closed-form formula. Using the proposed solution in this paper, the dynamics of the rotation vector with respect to the whole degree can be obtained from those of the spherical moments [26,30,31].

A natural question arises about which are the best two triples to be used for building an orthonormal base. For instance, a base can be built from the two triples from to as well as by using two others from to . Although it is well accepted that the sensitivity to noise increases with the moment orders, there is not much difference between results obtained using moments of orders 2 and 3 and those obtained using moments of orders 4 and 3. Moreover, an orthonormal base can be obtained using more than only two triples as it is achieved in Reference [31]. Finally, recall also that the rotation matrix can be obtained using all the triples as a generalized solution of the orthogonal Procrustes problem [32].

4. Conclusions

In this paper, a new method to estimate rotational motions using photometric moments has been provided. The obtained theoretical results have been validated using simulations and real experiments using onboard omnidirectional cameras and two different robots (robot arm and ground mobile robot). The proposed method has been also tested in different scenarios with appearance/disappearance of some image parts and lightening changes. Future works will be devoted to using photometric spherical moments for the control of the 6 degrees of freedom of a camera.

Supplementary Materials

The following are available online at https://www.mdpi.com/1424-8220/19/22/4958/s1.

Author Contributions

Conceptualization, H.H.-A., O.T. and H.-E.B.; methodology, H.H.-A. and O.T.; validation, H.H.-A., O.T. and H.-E.B.; writing—original draft preparation, H.H.-A. and O.T.; writing—review and editing, H.H.-A. and O.T.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

• Triples computed from moments of order 2 and moments of order 3.

References

- De Castro, E.; Morandi, C. Registration of Translated and Rotated Images Using Finite Fourier Transforms. IEEE Trans. Pattern Anal. Mach. Intell. 1987, PAMI-9, 700–703. [Google Scholar] [CrossRef]

- Althloothi, S.; Mahoor, M.; Voyles, R. A Robust Method for Rotation Estimation Using Spherical Harmonics Representation. IEEE Trans. Image Process. 2013, 22, 2306–2316. [Google Scholar] [CrossRef] [PubMed]

- Bazin, J.C.; Demonceaux, C.; Vasseur, P.; Kweon, I. Rotation estimation and vanishing point extraction by omnidirectional vision in urban environment. Int. J. Robot. Res. 2012, 31, 63–81. [Google Scholar] [CrossRef]

- Makadia, A.; Daniilidis, K. Rotation recovery from spherical images without correspondences. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1170–1175. [Google Scholar] [CrossRef] [PubMed]

- Nister, D.; Naroditsky, O.; Bergen, J. Visual odometry. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; Volume 1, pp. I-652–I-659. [Google Scholar]

- Hadj-Abdelkader, H.; Mezouar, Y.; Martinet, P.; Chaumette, F. Catadioptric Visual Servoing from 3-D Straight Lines. IEEE Trans. Robot. 2008, 24, 652–665. [Google Scholar] [CrossRef]

- Safaee-Rad, R.; Tchoukanov, I.; Smith, K.C.; Benhabib, B. Three-dimensional location estimation of circular features for machine vision. IEEE Trans. Robot. Autom. 1992, 8, 624–640. [Google Scholar] [CrossRef]

- Agrawal, M.; Konolige, K. Real-time Localization in Outdoor Environments using Stereo Vision and Inexpensive GPS. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 3, pp. 1063–1068. [Google Scholar]

- Malis, E.; Marchand, E. Experiments with robust estimation techniques in real-time robot vision. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS’06), Beijing, China, 9–15 October 2006; pp. 223–228. [Google Scholar]

- Micusik, B.; Pajdla, T. Structure from motion with wide circular field of view cameras. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1135–1149. [Google Scholar] [CrossRef] [PubMed]

- Hadj-Abdelkader, H.; Malis, E.; Rives, P. Spherical Image Processing for Accurate Visual Odometry with Omnidirectional Cameras. In Proceedings of the 8th Workshop on Omnidirectional Vision, Camera Networks and Non-classical Cameras—OMNIVIS, Marseille, France, 17 October 2008. [Google Scholar]

- Lim, J.; Barnes, N.; Li, H. Estimating Relative Camera Motion from the Antipodal-Epipolar Constraint. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1907–1914. [Google Scholar] [CrossRef] [PubMed]

- Horn, B.K.; Schunck, B.G. Determining Optical Flow. Artif. Intell. 1981, 17, 185–203. [Google Scholar] [CrossRef]

- Gluckman, J.; Nayar, S.K. Ego-motion and omnidirectional cameras. In Proceedings of the Sixth International Conference on Computer Vision (IEEE Cat. No.98CH36271), Bombay, India, 4–7 January 1998; pp. 999–1005. [Google Scholar]

- Benseddik, H.E.; Hadj-Abdelkader, H.; Cherki, B.; Bouchafa, S. Direct method for rotation estimation from spherical images using 3D mesh surfaces with SPHARM representation. J. Vis. Commun. Image Represent. 2016, 40, 708–720. [Google Scholar] [CrossRef]

- Teague, M.R. Image analysis via the general theory of moments. JOSA 1980, 70, 920–930. [Google Scholar] [CrossRef]

- Abu-Mostafa, Y.S.; Psaltis, D. Image normalization by complex moments. IEEE Trans. Pattern Anal. Mach. Intell. 1985, PAMI-7, 46–55. [Google Scholar] [CrossRef]

- Tahri, O.; Araujo, H.; Mezouar, Y.; Chaumette, F. Efficient decoupled pose estimation from a set of points. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS’2013), Tokyo, Japan, 3–7 November 2013; pp. 1608–1613. [Google Scholar]

- Tahri, O.; Chaumette, F. Complex objects pose estimation based on image moment invariants. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005; pp. 436–441. [Google Scholar]

- Tahri, O.; Tamtsia, A.Y.; Mezouar, Y.; Demonceaux, C. Visual servoing based on shifted moments. IEEE Trans. Robot. 2015, 31, 798–804. [Google Scholar] [CrossRef]

- Bakthavatchalam, M.; Tahri, O.; Chaumette, F. A Direct Dense Visual Servoing Approach using Photometric Moments. IEEE Trans. Robot. 2018. [Google Scholar] [CrossRef]

- Tahri, O.; Mezouar, Y.; Chaumette, F.; Corke, P. Decoupled image-based visual servoing for cameras obeying the unified projection model. IEEE Trans. Robot. 2010, 26, 684–697. [Google Scholar] [CrossRef]

- Hadj-Abdelkader, H.; Tahri, O.; Benseddik, H.E. Closed form solution for Rotation Estimation using Photometric Spherical Moments. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 627–634. [Google Scholar]

- Courbon, J.; Mezouar, Y.; Eckt, L.; Martinet, P. A generic fisheye camera model for robotic applications. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 1683–1688. [Google Scholar]

- Barnard, S.T. Interpreting Perspective Images. Artif. Intell. 1983, 21, 435–462. [Google Scholar] [CrossRef]

- Tahri, O. Application des Moments à L’asservissement Visuel et au Calcul de Pose. Ph.D. Thesis, Université de Rennes, Rennes, France, 2004. [Google Scholar]

- Tatsambon Fomena, R. Asservissement Visuel par Projection Sphérique. Ph.D. Thesis, University of Rennes 1, Rennes, France, 2008. [Google Scholar]

- Hadj-Abdelkader, H.; Mezouar, Y.; Martinet, P. Decoupled Visual Servoing Based on the Spherical Projection of Points. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA’09), Kobe, Japan, 12–17 May 2009; pp. 731–736. [Google Scholar]

- Morbidi, F.; Caron, G. Phase Correlation for Dense Visual Compass from Omnidirectional Camera-Robot Images. IEEE Robot. Autom. Lett. 2017, 2, 688–695. [Google Scholar] [CrossRef]

- Fomena, R.T.; Tahri, O.; Chaumette, F. Distance-based and orientation-based visual servoing from three points. IEEE Trans. Robot. 2011, 27, 256–267. [Google Scholar] [CrossRef]

- Tahri, O.; Araujo, H.; Chaumette, F.; Mezouar, Y. Robust image-based visual servoing using invariant visual information. Robot. Auton. Syst. 2013, 61, 1588–1600. [Google Scholar] [CrossRef]

- Schönemann, P.H. A generalized solution of the orthogonal Procrustes problem. Psychometrika 1966, 31, 1–10. [Google Scholar] [CrossRef]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).