A Study on the Gaze Range Calculation Method During an Actual Car Driving Using Eyeball Angle and Head Angle Information

Abstract

1. Introduction

2. Previous Studies on Gaze Estimation Methods for Car Driving

3. Measurement System

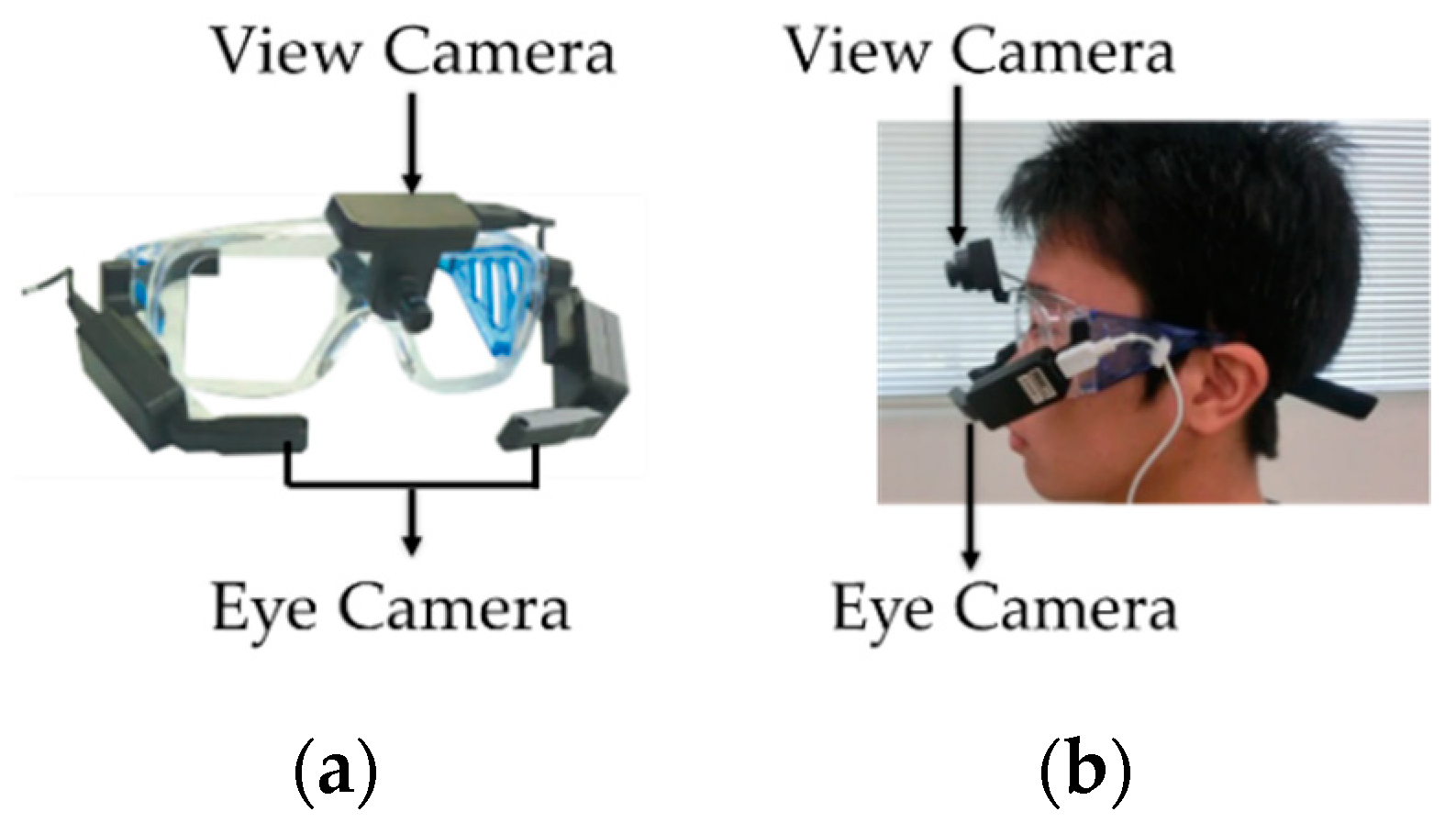

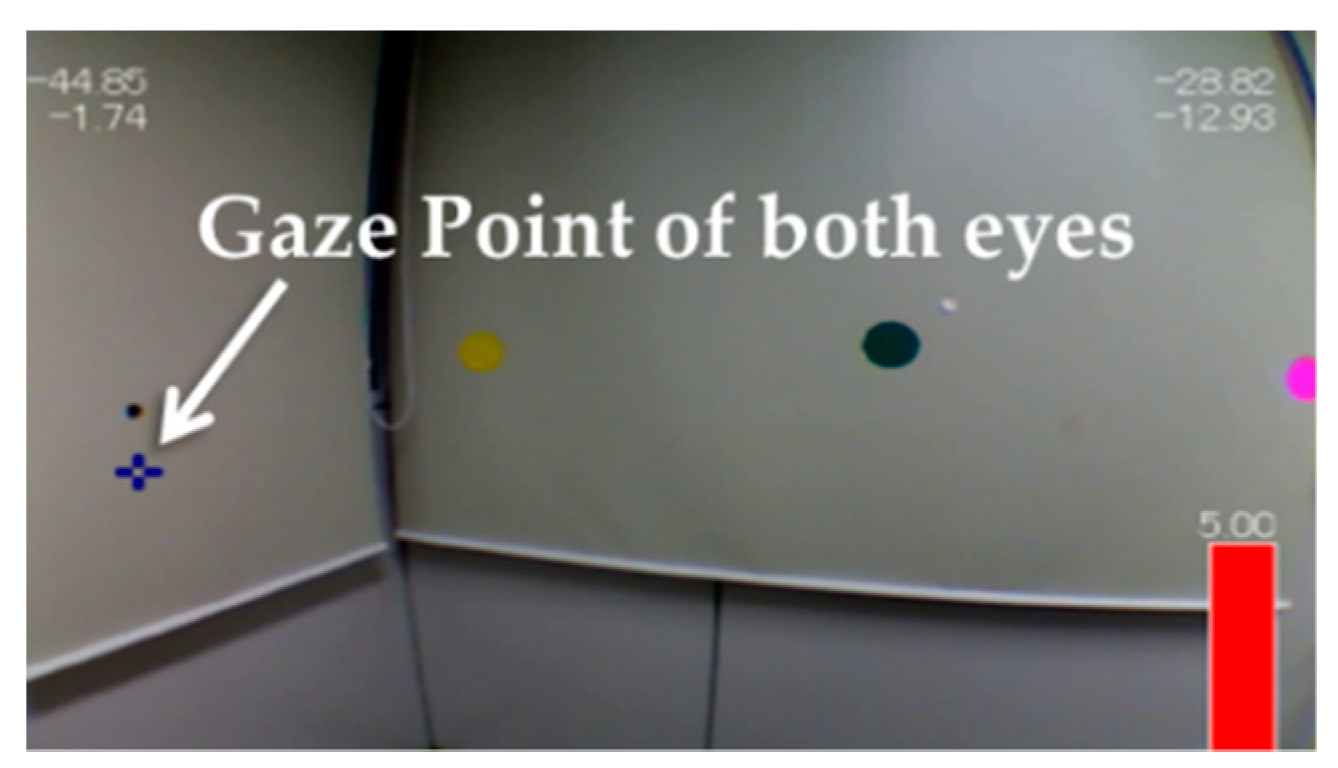

3.1. Measurement System Using TalkEyeLite

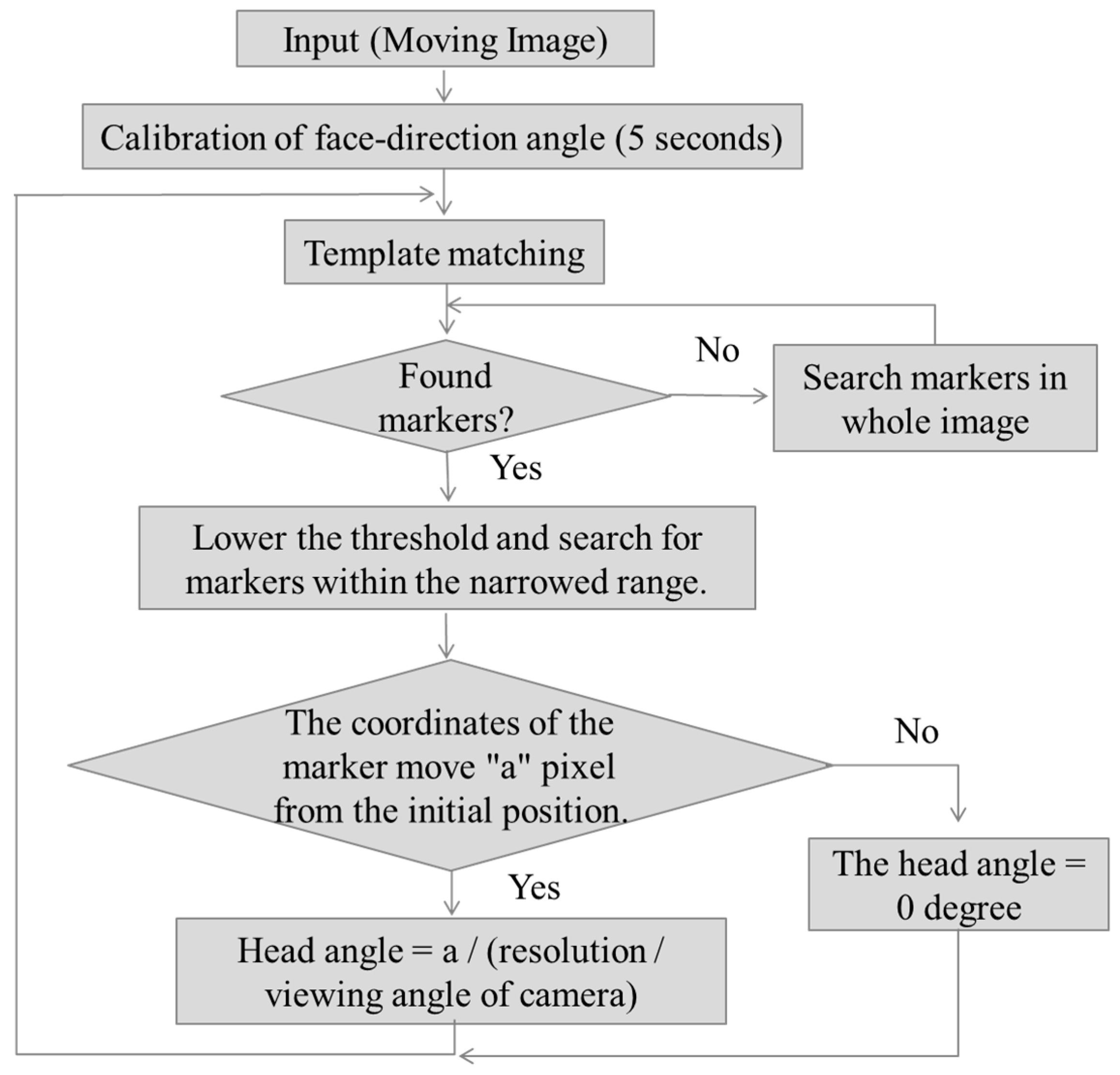

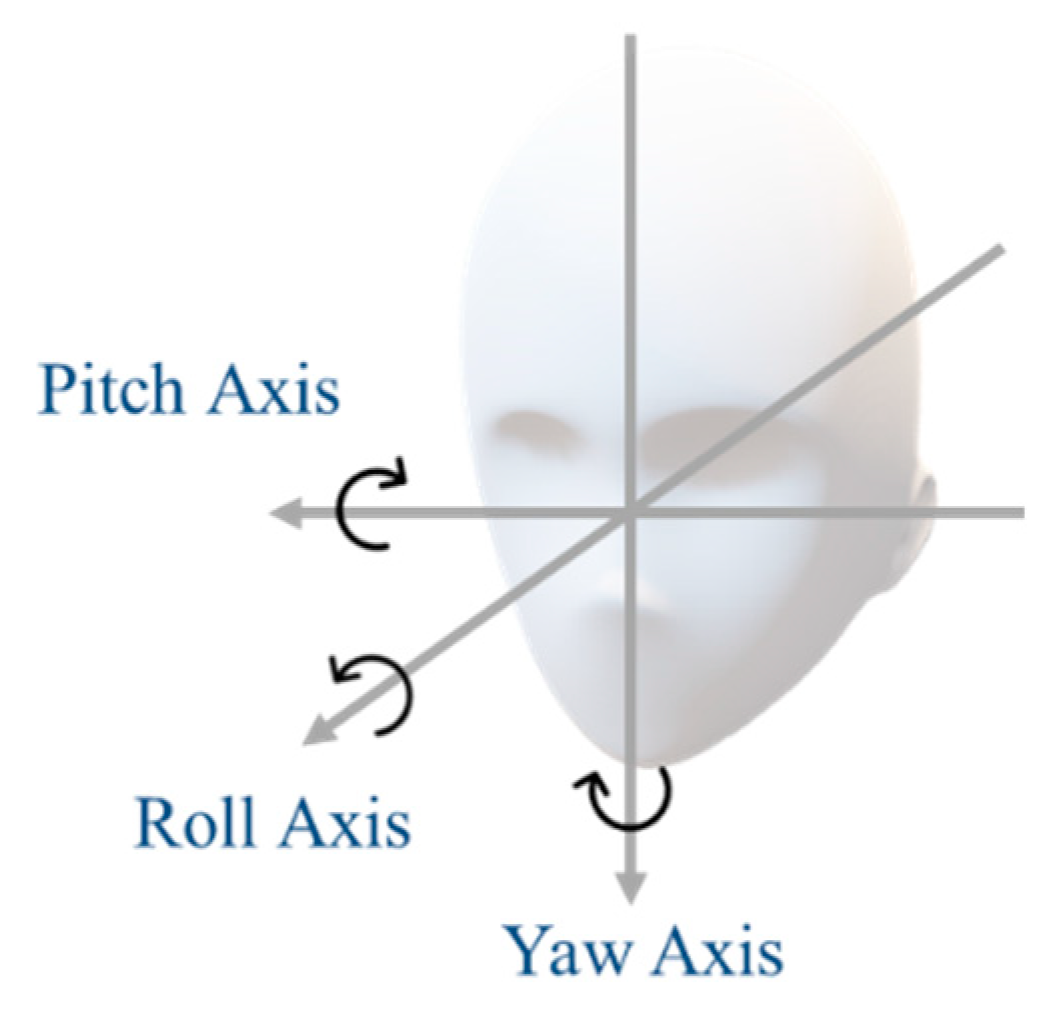

3.2. The Head Angle Estimation Method Using Template Matching

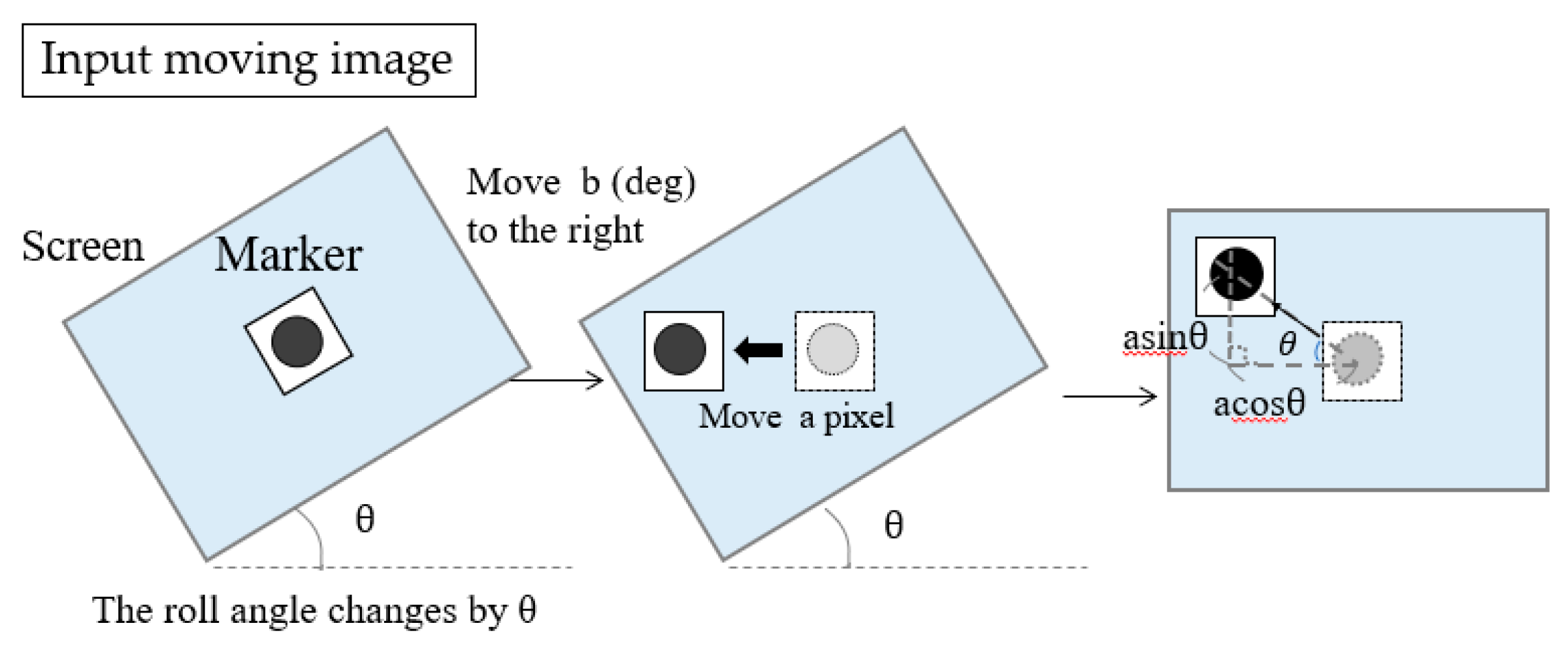

Problems Caused by Inclination of the Head

4. Improved Measurement Method

- The gaze estimation angle range is narrow (about 42 degrees).

- The processing time is long.

4.1. Expansion of the Gaze Estimated Range

4.2. The Method of Reducing Processing Time

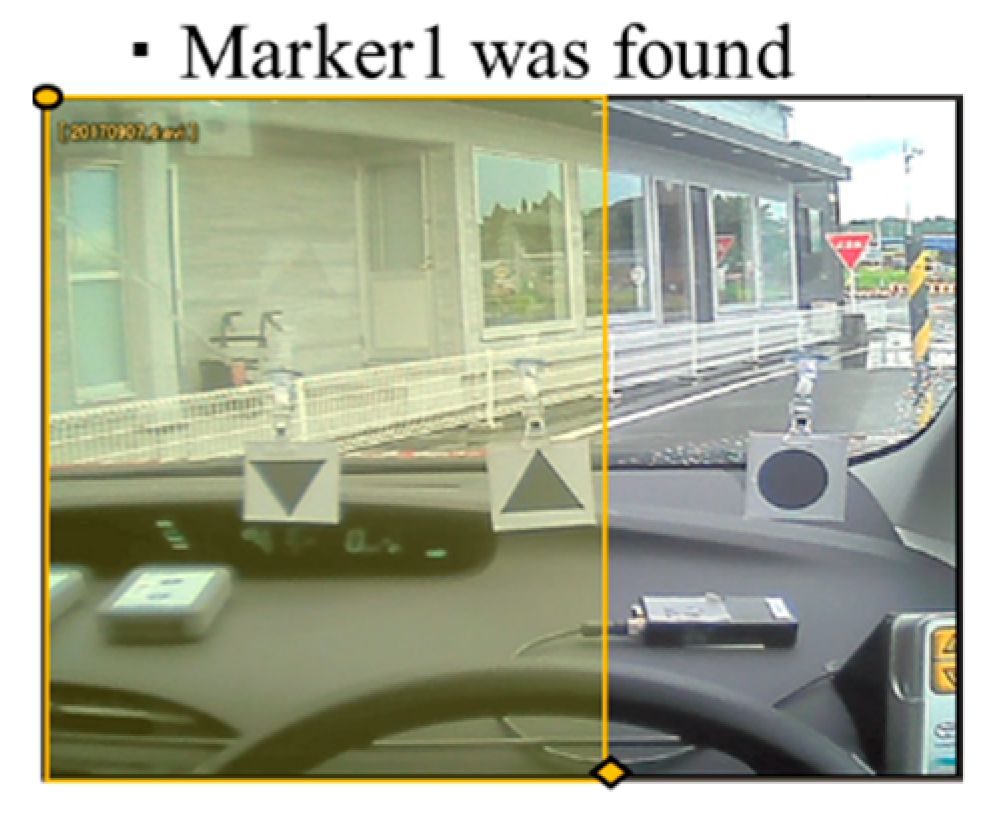

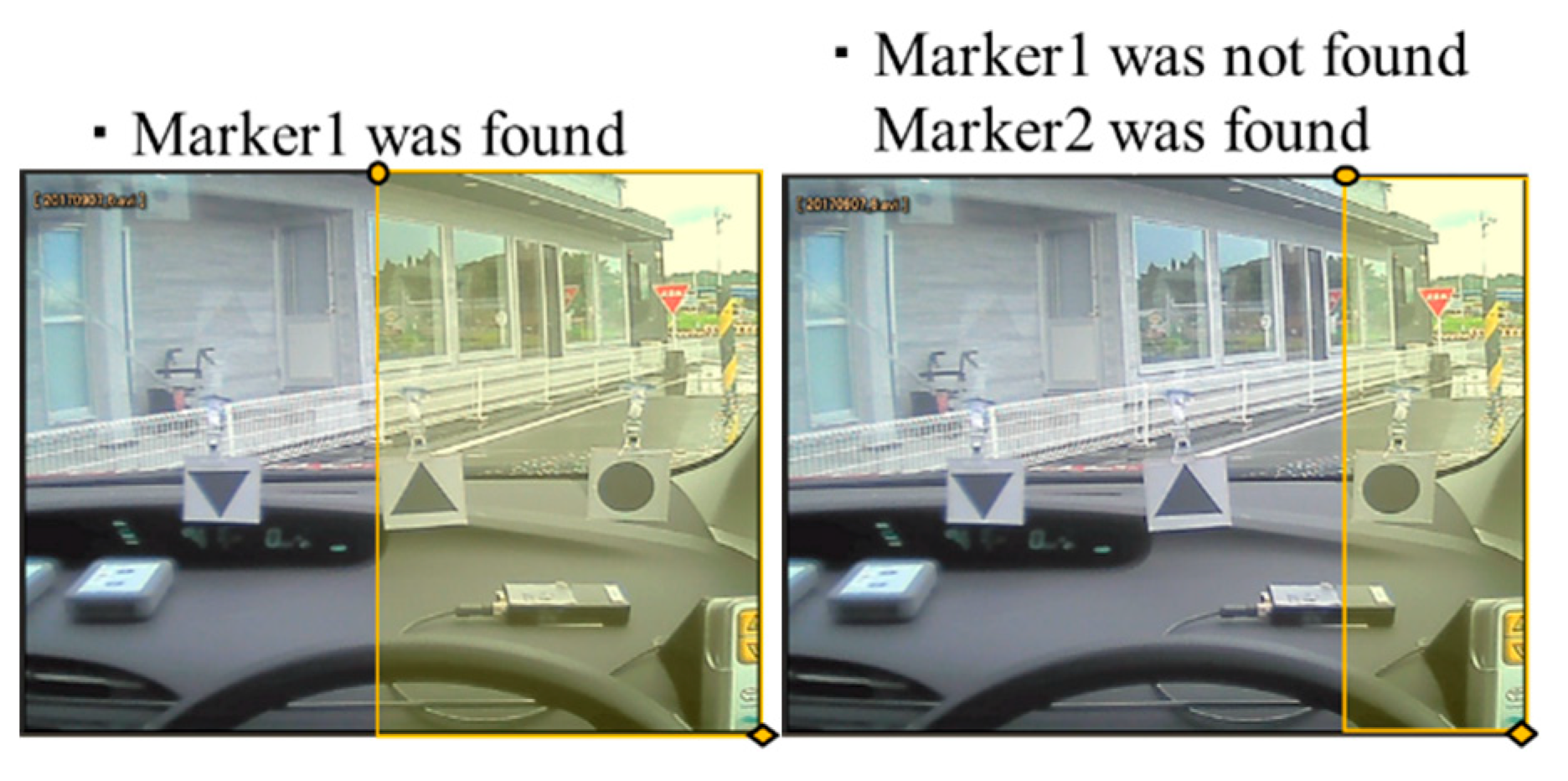

4.2.1. Positional Relation of Markers to Reduce Search Range

- Marker2 lies to the right of Marker1.

- Marker3 lies to the right of both Marker1 and Marker2.

- Marker4 does not appear in the same frame as Marker1 and Marker2.

- Marker4 lies to the right of Marker3.
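The positional rules above can be turned into a horizontal search window for each marker. The following is a minimal sketch rather than the authors' implementation: the function name `search_range`, its signature, and the use of pixel x coordinates are assumptions made for illustration. It also assumes one particular reading of the third rule, namely that Marker4 is never visible together with either Marker1 or Marker2.

```python
# Hypothetical helper: marker IDs 1-4 follow the left-to-right order stated
# in the list above; the name and signature are assumptions, not the paper's.

def search_range(marker, found, frame_width):
    """Return the (x_min, x_max) columns to search for `marker`, or None to skip it.

    `found` maps already-located marker IDs to their x coordinates in this frame.
    """
    # Assumed reading of the third rule: Marker4 is never visible together
    # with Marker1 or Marker2, so finding one side excludes the other.
    if marker == 4 and (1 in found or 2 in found):
        return None
    if marker in (1, 2) and 4 in found:
        return None

    x_min, x_max = 0, frame_width
    for other, x in found.items():
        if other < marker:      # lower-numbered markers lie to the left
            x_min = max(x_min, x)
        elif other > marker:    # higher-numbered markers lie to the right
            x_max = min(x_max, x)
    return (x_min, x_max) if x_min < x_max else None


print(search_range(3, {1: 100, 2: 250}, 640))  # (250, 640)
print(search_range(4, {1: 100}, 640))          # None
```

Restricting template matching to the returned window, or skipping the marker entirely when the result is `None`, is one way such rules could reduce the amount of image area that has to be scanned per frame.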

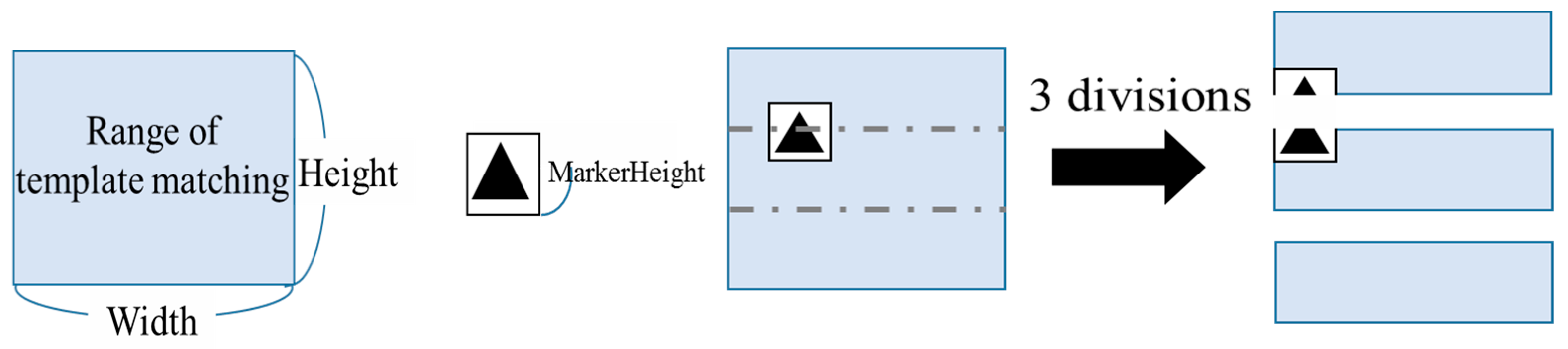

4.2.2. Dividing the Search Range

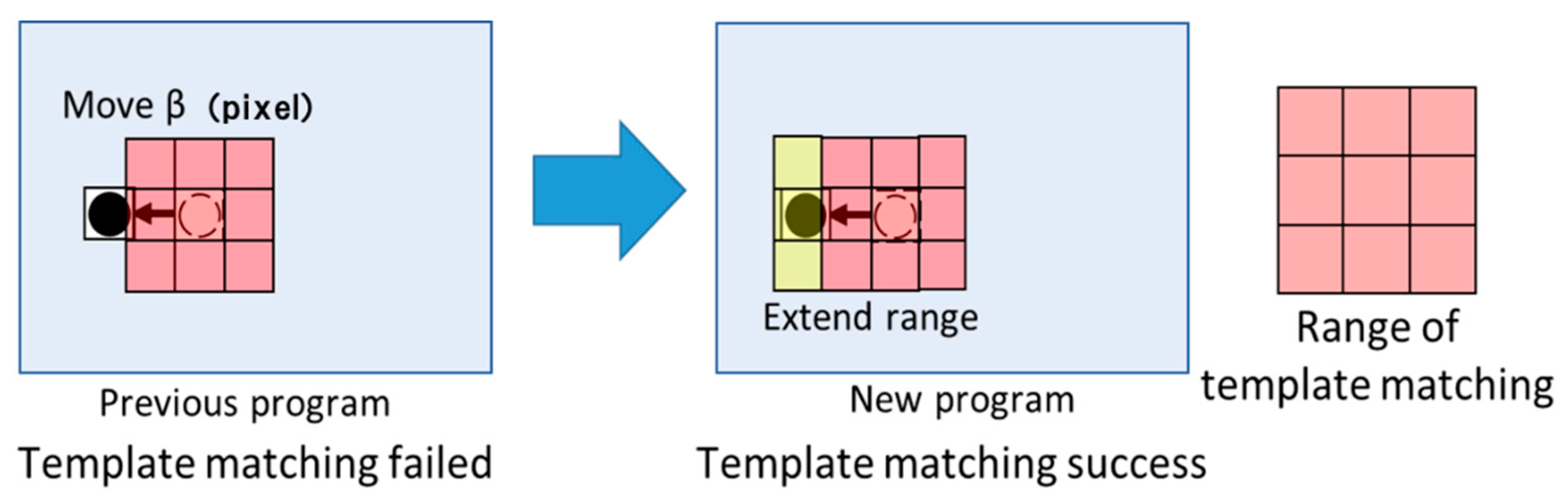

4.2.3. Extending the Search Range

5. Verification of the Accuracy of the Proposed Method

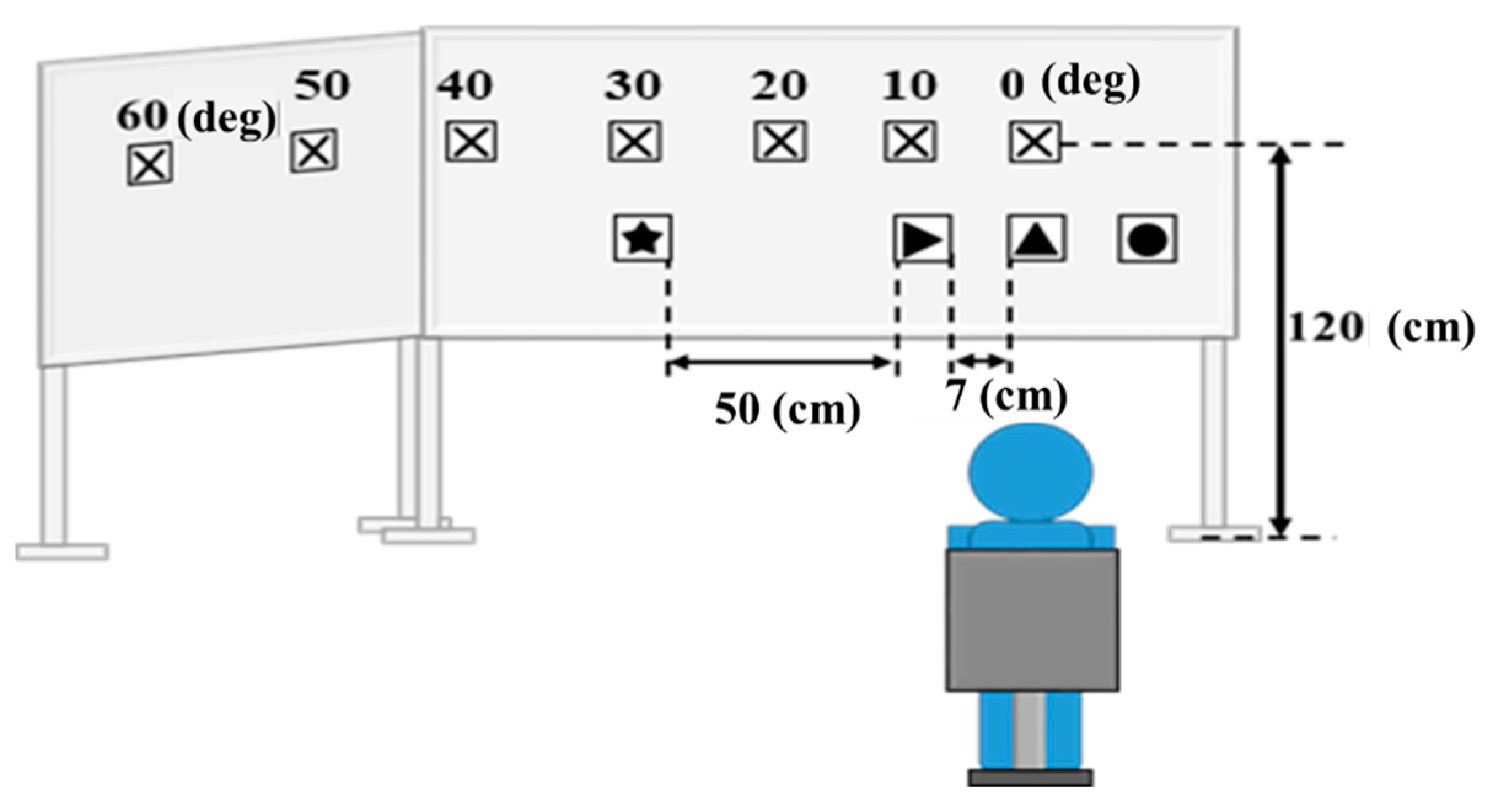

5.1. Indoor Experiments: Conditions and the Results

- f1(t): the eye movement angle;

- f2(t): the head angle;

- t: the sample number (index of the sampled data point).
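The equation that combines these quantities is not present in this text. As a hedged illustration, the sketch below assumes that the gaze angle at sample t is the plain sum f1(t) + f2(t). This assumption agrees with rows 1 and 2 of the driving results table in this article, where X_gaze equals X_eye plus X_head. The function name `gaze_angle` is hypothetical.

```python
def gaze_angle(eye_deg, head_deg):
    """Gaze angle in degrees, assuming gaze(t) = f1(t) + f2(t)."""
    return eye_deg + head_deg


# Horizontal angles from rows 1 and 2 of the driving results table (deg)
eye = [-30.8, 28.9]    # f1(t): eye movement angle
head = [-25.3, 9.8]    # f2(t): head angle

gaze = [round(gaze_angle(e, h), 1) for e, h in zip(eye, head)]
print(gaze)  # [-56.1, 38.7]
```

Both results match the X_gaze values reported for those rows (−56.1 and 38.7 degrees).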

5.2. Outdoor Experiments (in an Actual Car): Conditions and the Results

6. Proposed Driving Evaluation Method

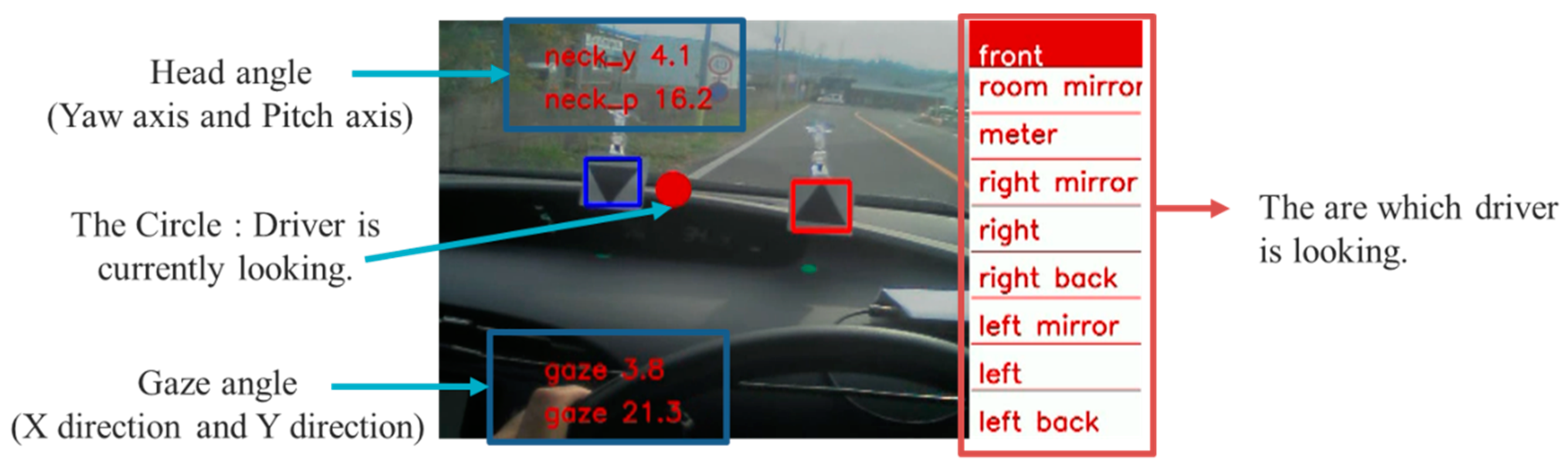

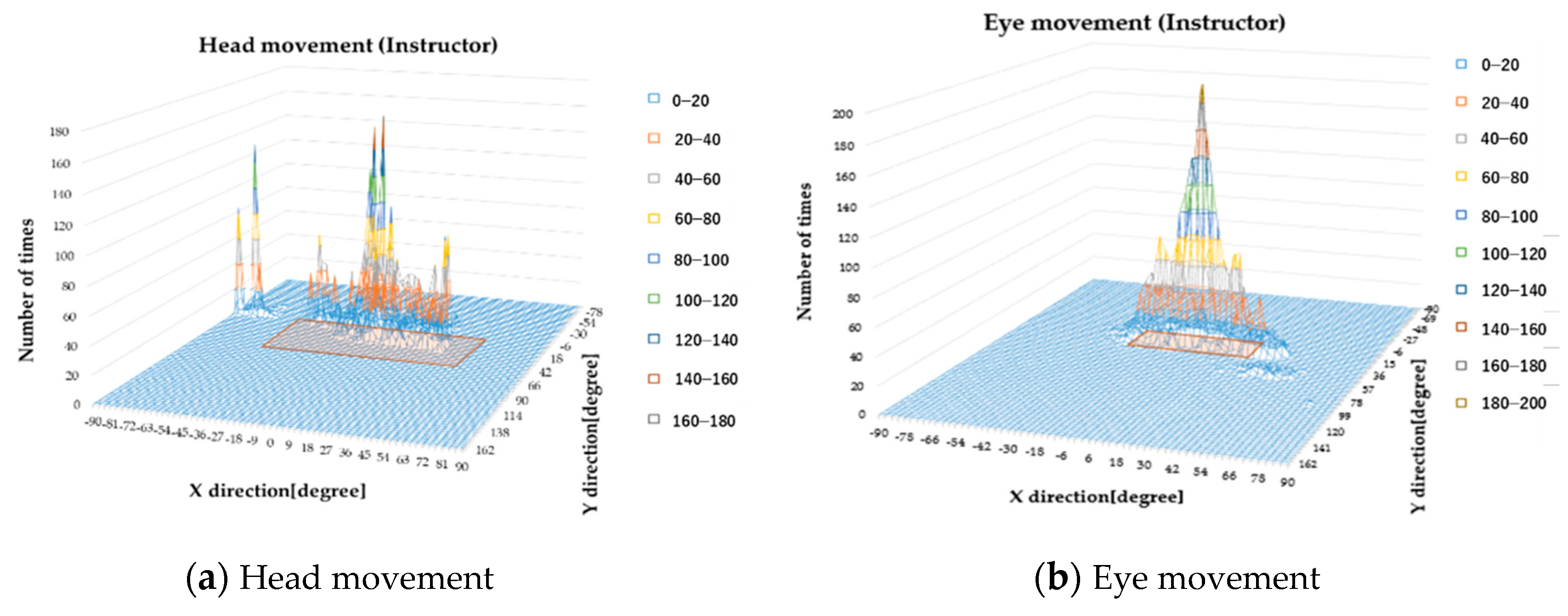

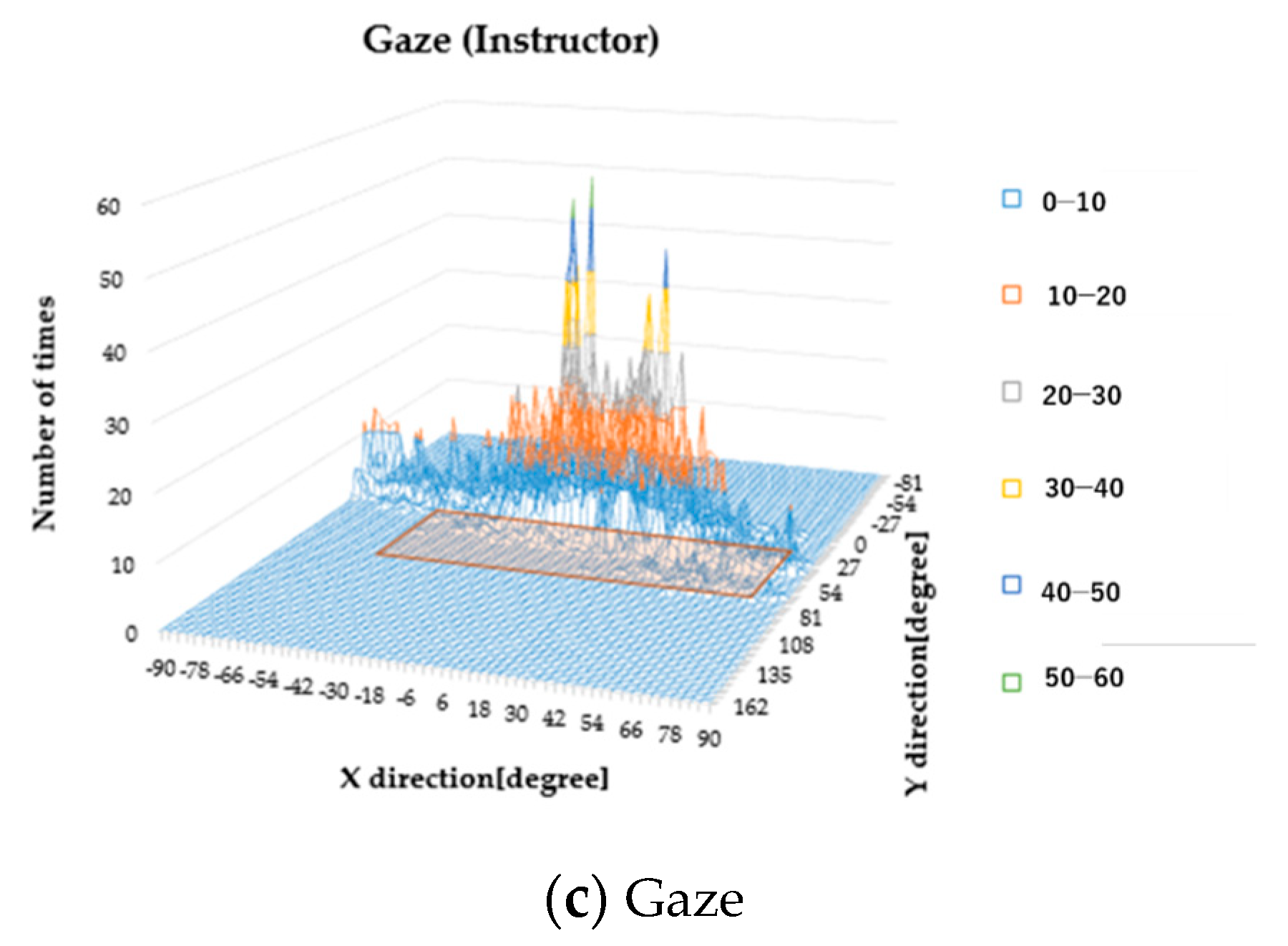

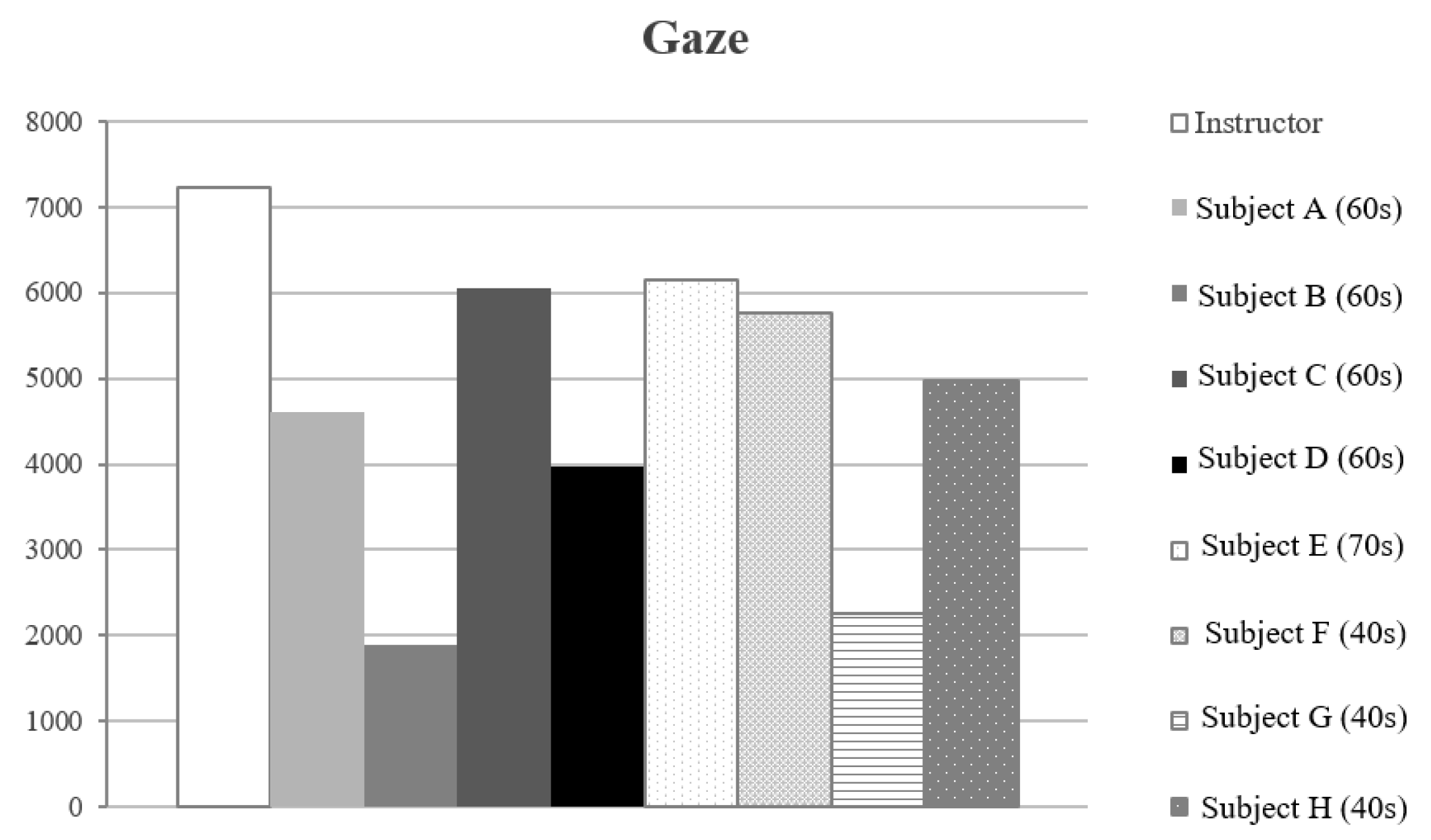

6.1. The Performance of Gaze Estimation (During Actual Car Driving)

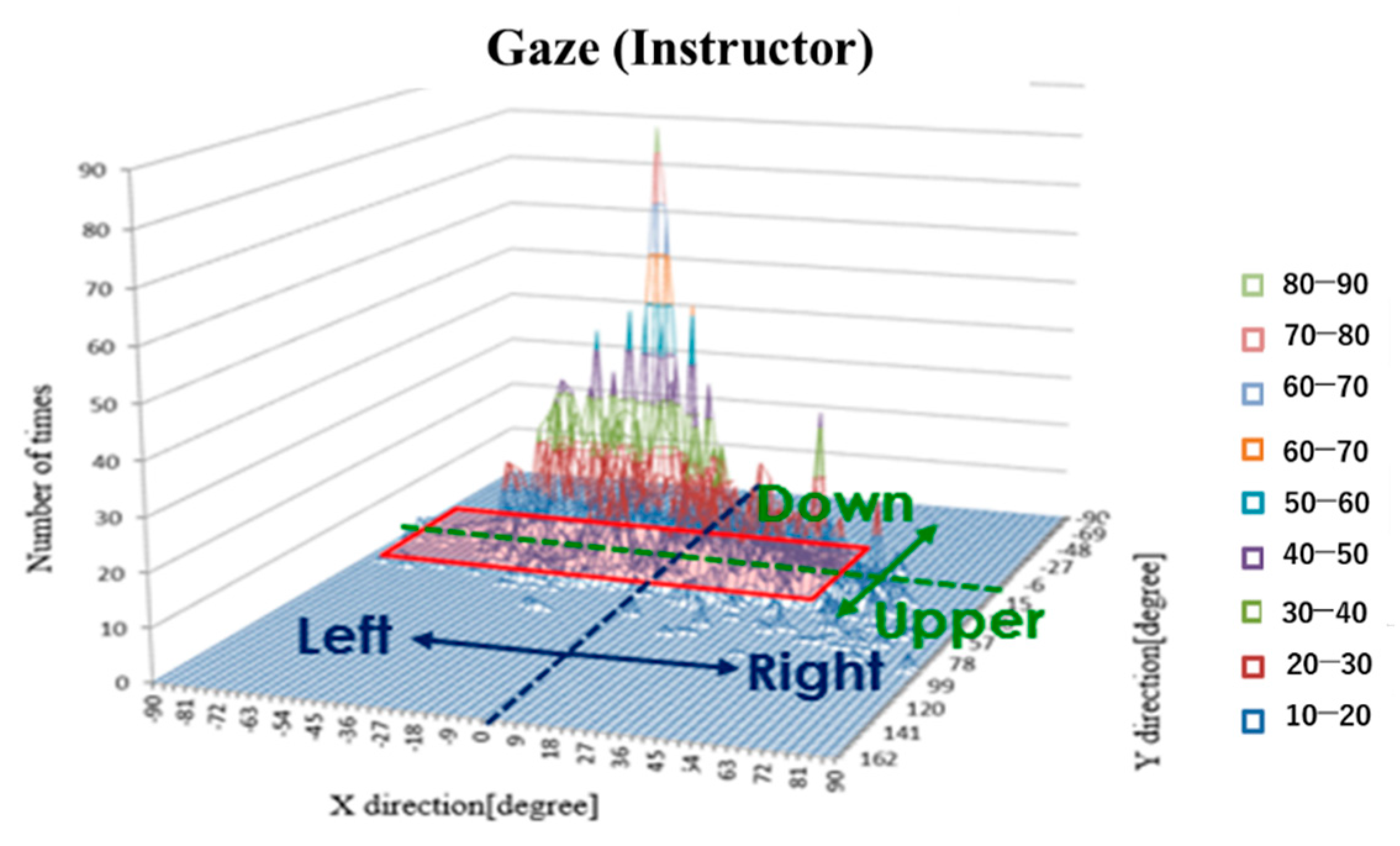

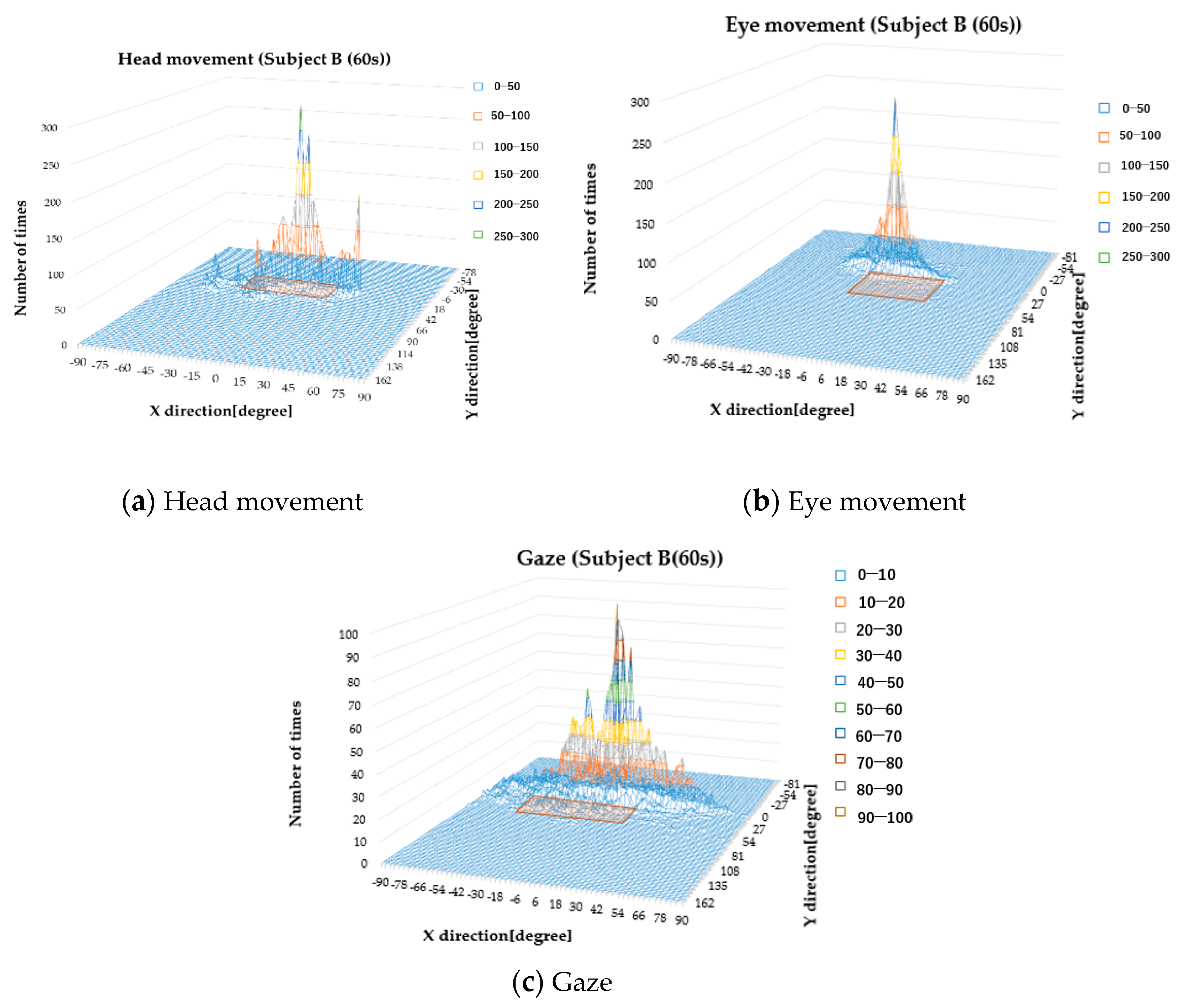

6.2. Evaluation of the Gaze Range

7. Conclusions

- (1) To extend the range over which the head angle can be estimated, we proposed a head angle estimation method using markers and evaluated its accuracy;

- (2) To shorten the processing time of template matching, we restricted the range over which template matching is performed;

- (3) The gaze range during driving was compared between eight general subjects and one driving school instructor. As a result, we found that the gaze ranges of two subjects were extremely narrow.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Schultheis, M.; Deluca, J.; Chute, D. Handbook for the Assessment of Driving Capacity; Academic Press: San Diego, CA, USA, 2009.

2. Regger, M.A.; Welsh, R.K.; Watson, G.; Cholerton, B.; Baker, L.D.; Craft, S. The relationship between neuropsychological functioning and driving ability in dementia: A meta-analysis. Neuropsychology 2004, 18, 85–93.

3. Hartman, E. Driver Vision Requirements; Society of Automotive Engineers, Technical Paper Series, 700392; Erlbaum: Hillsdale, NJ, USA, 1970; pp. 629–630.

4. Owsley, C.; Ball, K.; Sloane, M.; Roenker, D.L.; Bruni, J.R. Visual/cognitive correlates of vehicle accidents in older drivers. Psychol. Aging 1991, 6, 403–415.

5. Collins, C.J.S.; Barnes, G.R. Independent control of head and gaze movements during head-free pursuit in humans. J. Physiol. 1999, 515, 299–314.

6. Ron, S.; Berthoz, A. Eye and head coupled and dissociated movements during orientation to a double step visual target displacement. Exp. Brain Res. 1991, 85, 196–207.

7. Barnes, G.R. Vestibulo-ocular function during co-ordinated head and eye movements to acquire visual target. J. Physiol. 1979, 287, 127–147.

8. Tawari, A.; Chen, K.H.; Trivedi, M.M. Where is the driver looking: Analysis of Head, Eye and Iris for Robust Gaze Zone Estimation. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, Qingdao, China, 8–11 October 2014; pp. 988–994.

9. Dodge, R. The latent time of compensatory eye movements. J. Exp. Psychol. 1921, 4, 247–269.

10. Chung, M.G.; Park, J.; Dong, J. A Simple Method for Facial Pose Detection. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. 2004, 87, 2585–2590.

11. Sakurai, K.; Yan, M.; Inami, K.; Tamura, H.; Tanno, K. A study on human interface system using the direction of eyes and face. Artif. Life Robot. 2015, 20, 291–298.

12. Sakurai, K.; Yan, M.; Tamura, H.; Tanno, K. Comparison of two techniques for gaze estimation system using the direction of eyes and head. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016.

13. Takei Scientific Instruments Cooperation. Available online: http://www.takei-si.co.jp/en/ (accessed on 10 September 2019).

14. Krajewski, J.; Trutschel, U.; Golz, M.; Sommer, D.; Edwards, D. Estimating fatigue from predetermined speech samples transmitted by operator communication systems. In Proceedings of the Fifth International Driving Symposium on Human Factors in Driver Assessment, Training and Vehicle Design, Big Sky, Montana, 22–25 June 2009.

15. Daza, I.G.; Hernandez, N.; Bergasa, L.M.; Parra, I.; Yebes, J.J.; Gavilan, M.; Quintero, R.; Llorca, D.F.; Sotelo, M.A. Drowsiness monitoring based on driver and driving data fusion. In Proceedings of the 14th International IEEE Conference on Intelligent Transportation Systems (ITSC), Washington, DC, USA, 5–7 October 2011; pp. 1199–1204.

16. Martin, S.; Vora, S.; Yuen, K.; Trivedi, M.M. Dynamics of Driver’s Gaze: Explorations in Behavior Modeling and Maneuver Prediction. IEEE Trans. Intell. Veh. 2018, 3, 141–150.

17. Jimenez, P.; Bergasa, L.M.; Nuevo, K.; Hernandez, N.; Daza, I.G. Gaze Fixation System for the Evaluation of Driver Distractions Induced by IVIS. IEEE Trans. Intell. Transp. Syst. 2012, 13, 1167–1178.

18. Vicente, F.; Huang, Z.; Xiong, X.; De la Torre, F.; Zhang, W.; Levi, D. Driver Gaze Tracking and Eyes Off the Road Detection System. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2014–2027.

19. Hansen, D.W.; Ji, Q. In the eye of the beholder: A survey of models for eyes and gaze. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 478–500.

20. Zhang, H.; Smith, M.; Dufour, R. A Final Report of Safety Vehicles Using Adaptive Interface Technology: Visual Distraction. Available online: http://www.volpe.dot.gov/coi/hfrsa/work/roadway/saveit/docs.html (accessed on 10 September 2019).

21. Doshi, A.; Trivedi, M.M. Tactical driver behavior prediction and intent inference: A review. In Proceedings of the IEEE International Conference on Intelligent Transportation Systems, Washington, DC, USA, 5–7 October 2011; pp. 1892–1897.

22. Doshi, A.; Cheng, S.Y.; Trivedi, M. A novel active heads-up display for driver assistance. IEEE Trans. Syst. Man Cybern. 2009, 39, 85–93.

23. Doshi, A.; Morris, B.T.; Trivedi, M.M. On-road prediction of driver’s intent with multimodal sensory cues. IEEE Pervasive Comput. 2011, 10, 22–34.

24. Huang, K.S.; Trivedi, M.M.; Gandhi, T. Driver’s view and vehicle surround estimation using omnidirectional video stream. In Proceedings of the IEEE Intelligent Vehicles Symposium, Columbus, OH, USA, 9–11 June 2003; pp. 444–449.

25. Ng, J.; Gong, S. Composite Support Vector Machines for Detection of Faces Across Views and Pose Estimation. Image Vis. Comput. 2002, 20, 359–368.

26. Voit, M.; Nickel, K.; Stiefelhagen, R. Head Pose Estimation in Single- and Multi-View Environments Results on the CLEAR’07 Benchmarks. In Proceedings of the International Workshop on Classification of Events, Activities and Relationships, Baltimore, MD, USA, 8–9 May 2007.

27. Yan, S.; Zhang, Z.; Fu, Y.; Hu, Y.; Tu, J.; Huang, T. Learning a Person-Independent Representation for Precise 3D Pose Estimation. In Proceedings of the International Workshop on Classification of Events, Activities and Relationships, Baltimore, MD, USA, 8–9 May 2007.

28. Wu, Y.; Toyama, K. Wide-Range, Person- and Illumination-Insensitive Head Orientation Estimation. In Proceedings of the IEEE International Conference on Automatic Face and Gesture Recognition, Grenoble, France, 28–30 March 2000; pp. 183–188.

29. Ba, S.; Odobez, J.-M. From Camera Head Pose to 3D Global Room Head Pose Using Multiple Camera Views. In Proceedings of the International Workshop on Classification of Events, Activities and Relationships, Baltimore, MD, USA, 8–9 May 2007.

30. Sakurai, K.; Yan, M.; Tamura, H.; Tanno, K. A Study on Eyes Tracking Method using Analysis of Electrooculogram Signals. In Proceedings of the 22nd International Symposium on Artificial Life and Robotics 2017 (AROB 22nd 2017), Beppu, Japan, 19–21 January 2017.

31. Gibaldi, A.; Vanegas, M.; Bex, P.J.; Maiello, G. Evaluation of the Tobii EyeX eye tracking controller and Matlab toolkit for research. Behav. Res. Methods 2017.

32. Owsley, C.; Ball, K.; McGwin, G.; Sloane, M.E. Visual processing impairment and risk of motor vehicle crash among older adults. J. Am. Med. Assoc. 1998, 279, 1083–1088.

33. Sekuler, A.B.; Bennett, P.J.; Mamelak, M. Effects of aging on the useful field of view. Exp. Aging Res. 2000, 26, 103–120.

| True Value (deg) | Estimated Angle Error (deg) |
|---|---|
| 10 | 1.9 |
| 20 | 4.3 |
| 30 | 6.1 |
| 40 | 7.3 |
| 50 | 4.1 |
| 60 | 0.9 |
| Average | 4.1 |

| Marker | Percentage |
|---|---|
| Marker1 (Upward Triangle) | 93.9% |
| Marker2 (Circle) | 87.5% |
| Marker3 (Downward Triangle) | 91.4% |
| Marker4 (Star) | 88.2% |

| Approach | Paper | Mean Error (deg) |
|---|---|---|
| Appearance Template Methods ■ Image Comparison | J. Ng and S. Gong [25] | 9.6 |
| Nonlinear Regression Methods ■ Neural Network | M. Voit et al. [26] | 10.5 |
| Manifold Embedding Method ■ Nonlinear Subspaces | S. Yan et al. [27] | 7.8 |
| Tracking Methods ■ Model Tracking | Y. Wu and K. Toyama [28] | 24.5 |
| Hybrid Methods | S. Ba and J.-M. Odobez [29] | 9.4 |
| Proposed Method ■ Template Matching | | 4.1 |

| Marker | Before | After |
|---|---|---|
| Marker1 (Upward Triangle) | 93.2% | 93.7% |
| Marker2 (Circle) | 85.1% | 89.8% |
| Marker3 (Downward Triangle) | 90.8% | 91.1% |
| Marker4 (Star) | 83.7% | 82.0% |

| Video | Before | After |
|---|---|---|
| Video1 (7 min 35 s) | 15 min 1 s | 10 min 48 s |
| Video2 (7 min 45 s) | 15 min 20 s | 10 min 59 s |
| Video3 (7 min 48 s) | 16 min 1 s | 11 min 33 s |
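The relative saving in processing time follows directly from the Before and After columns. The short calculation below is a sketch added for illustration; the helper names `to_seconds` and `reduction_percent` are hypothetical, and the only inputs are the times copied from the table.

```python
def to_seconds(minutes, seconds):
    """Convert a (min, s) pair to seconds."""
    return 60 * minutes + seconds


def reduction_percent(before_s, after_s):
    """Relative reduction in processing time, in percent."""
    return 100.0 * (before_s - after_s) / before_s


# (before, after) processing times as (min, s), copied from the table above
times = {
    "Video1": ((15, 1), (10, 48)),
    "Video2": ((15, 20), (10, 59)),
    "Video3": ((16, 1), (11, 33)),
}

reductions = {
    name: round(reduction_percent(to_seconds(*before), to_seconds(*after)), 1)
    for name, (before, after) in times.items()
}
print(reductions)  # {'Video1': 28.1, 'Video2': 28.4, 'Video3': 27.9}
```

For all three videos the processing time falls by roughly 28%, although it remains longer than the duration of the video itself.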

| Number | Situation | Time | X_eye (deg) | Y_eye (deg) | X_head (deg) | Y_head (deg) | X_gaze (deg) | Y_gaze (deg) | Judgement Flag |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Left | 01:28 | −30.8 | 0.6 | −25.3 | −20.2 | −56.1 | −19.6 | Left |
| 2 | Right | 02:05 | 28.9 | 14.4 | 9.8 | 3.6 | 38.7 | 18 | Right |
| 3 | Right | 02:26 | 7.4 | 4.0 | 32.4 | 30.1 | 39.8 | 34.1 | Right |
| 4 | Right | 02:50 | 40.7 | 46.8 | 21.4 | 24.0 | 62.1 | 70.8 | Right |
| 5 | Left | 02:52 | −11.5 | 9.0 | −32.6 | −18.8 | −44.1 | −9.8 | Left |
| 6 | Left | 03:13 | −10.5 | −3.4 | −33.8 | −16.2 | −44.3 | −19.6 | Left |
| 7 | Left | 03:22 | 3.4 | 2.1 | −123.9 | −12.4 | −120.5 | −10.3 | Left |
| 8 | Left | 03:38 | −28.6 | 4.6 | −112.4 | −18.7 | −141 | −14.1 | Left |
| 9 | Left | 03:54 | 6.3 | 4.2 | −96.6 | −16.7 | −90.3 | −12.5 | Left |
| 10 | Left | 03:56 | 5.2 | 2.1 | 14.6 | 6.8 | −29.5 | 16.8 | Right |
| 11 | Left | 04:27 | −41.9 | 8.9 | −35.0 | −15.7 | −76.9 | −6.7 | Left |
| 12 | Front | 04:41 | −5.4 | 6.8 | 33.9 | 11.5 | −31.2 | 18.8 | Left |
| 13 | Straight | 04:45 | −5.6 | 8.7 | 4.8 | −20.4 | −0.8 | −11.6 | Front |
| 14 | Left | 05:26 | 8.7 | 3.7 | −95.6 | −21.9 | −86.9 | −18.2 | Left |
| 15 | Left | 05:50 | −21.6 | −0.5 | −40.8 | −17.8 | −62.4 | −18.3 | Left |
| 16 | Left | 05:51 | −21.3 | 1.8 | −40.8 | −17.8 | −62.1 | −16 | Left |
| 17 | Right | 06:46 | 33.7 | −20.8 | −1.4 | −11.2 | 32.3 | −32.1 | Right |
| 18 | Left | 06:51 | −24.6 | 0.4 | −29.9 | −13.8 | −54.5 | −13.3 | Left |
| 19 | Right | 06:56 | 22.8 | −1.4 | 34.6 | 15.8 | 57.4 | 14.4 | Right |
| 20 | Straight | 07:03 | 2.0 | −4.0 | −9.3 | −17.4 | −7.4 | −21.4 | Front |
| 21 | Left | 07:07 | −5.9 | −5.6 | −121.4 | −13.9 | −127.3 | −19.5 | Left |
| 22 | Left | 07:48 | −19.8 | −4.2 | −22.1 | −25.9 | −41.9 | −30.1 | Left |
| 23 | Left | 08:28 | 2.4 | 0.9 | −119.7 | −14.8 | −117.3 | −13.9 | Left |
| 24 | Right | 09:15 | 24.3 | 3.4 | 5.3 | 18.8 | 29.6 | 22.2 | Right |
| 25 | Right | 09:50 | 59.8 | 50.7 | −3.1 | −12.7 | 56.7 | 38 | Right |
| 26 | Straight | 10:13 | −0.1 | 5.0 | 5.3 | 15.9 | 5.2 | 20.9 | Front |
| 27 | Left | 10:20 | 2.2 | −1.9 | −40.4 | −7.7 | −38.2 | −9.5 | Left |
| 28 | Right | 10:23 | 17.7 | 8.8 | 25.0 | 14.7 | 42.6 | 23.4 | Right |
| 29 | Straight | 10:30 | −5.1 | −2.4 | 18.6 | 12.8 | 13.5 | 10.4 | Front |
| 30 | Right | 10:35 | 10.0 | 4.2 | 33.3 | 16.3 | 43.2 | 20.4 | Right |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sakurai, K.; Tamura, H. A Study on the Gaze Range Calculation Method During an Actual Car Driving Using Eyeball Angle and Head Angle Information. Sensors 2019, 19, 4774. https://doi.org/10.3390/s19214774
