Infrared and Visible Image Fusion through Details Preservation

Abstract

1. Introduction

- (1) Tiny details cannot be decomposed into the detail part, which results in uneven texture and poor visibility in the fused image.

- (2) These methods cannot extract the different features of the source images, so various features are lost in the fused image.

- (3) The extracted features cannot be fully utilized, which causes blurring of the fused image.

- (1)

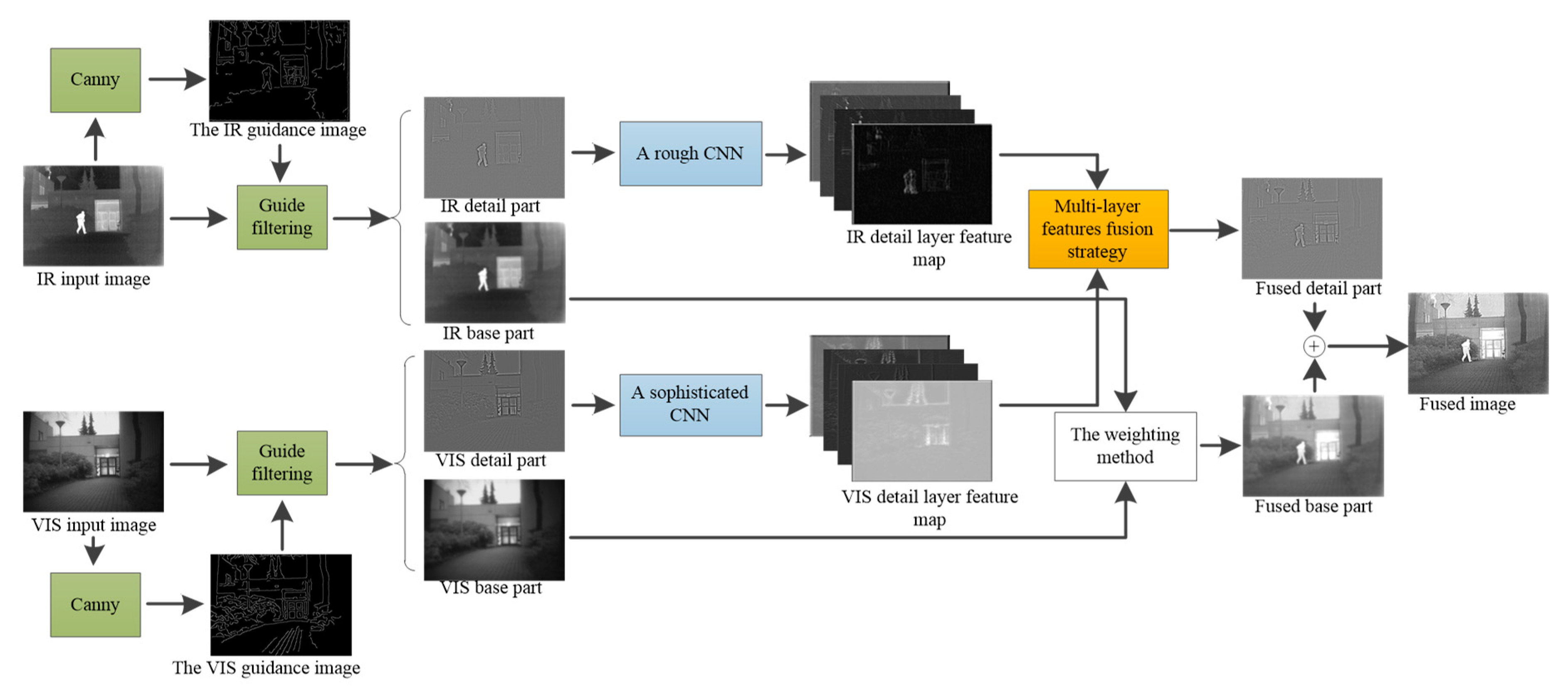

- For the first problem, our method takes the advantage of guided filter to get the detail part, with the image containing only the strong edge of the source image as the guidance image and the source images as the input images. In this way, rich tiny details can be decomposed into the detail part.

- (2)

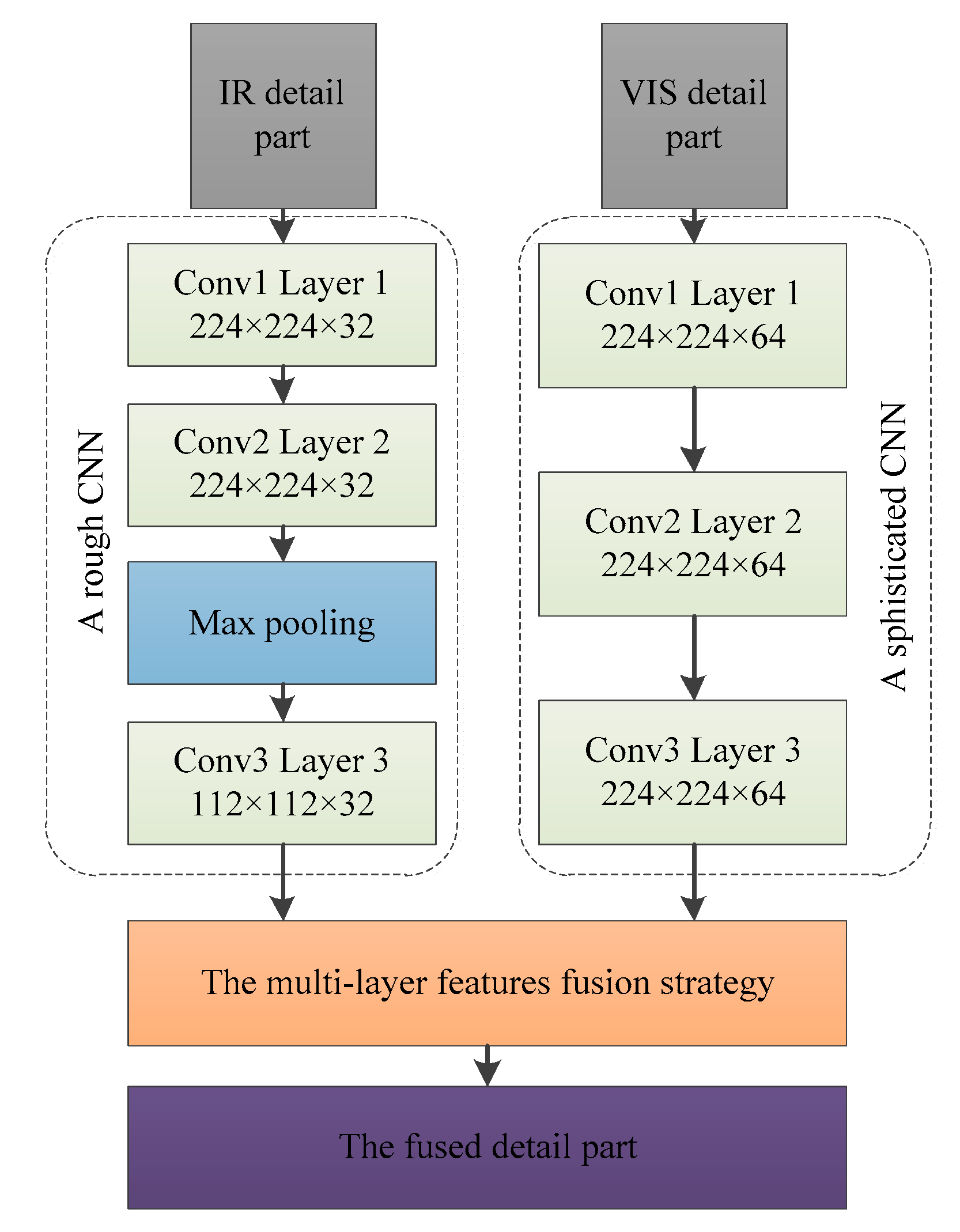

- For the second problem, a rough CNN and a sophisticated CNN are designed to extract various features of the infrared and visible images respectively.

- (3)

- For the third problem, a multi-layer features fusion strategy is proposed, which combines the advantages of DCT. In this way, the significant features can be highlighted and the details can be enhanced.

2. Image Fusion Method through Details Preservation

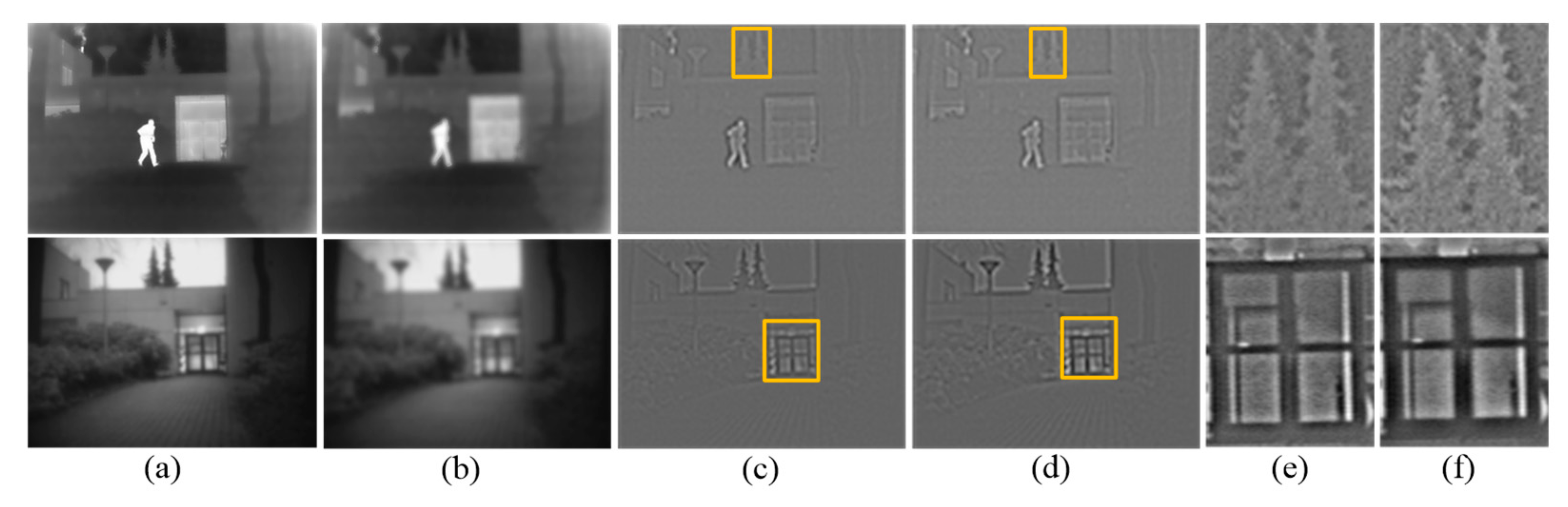

2.1. Image Decomposition by Guided Filter

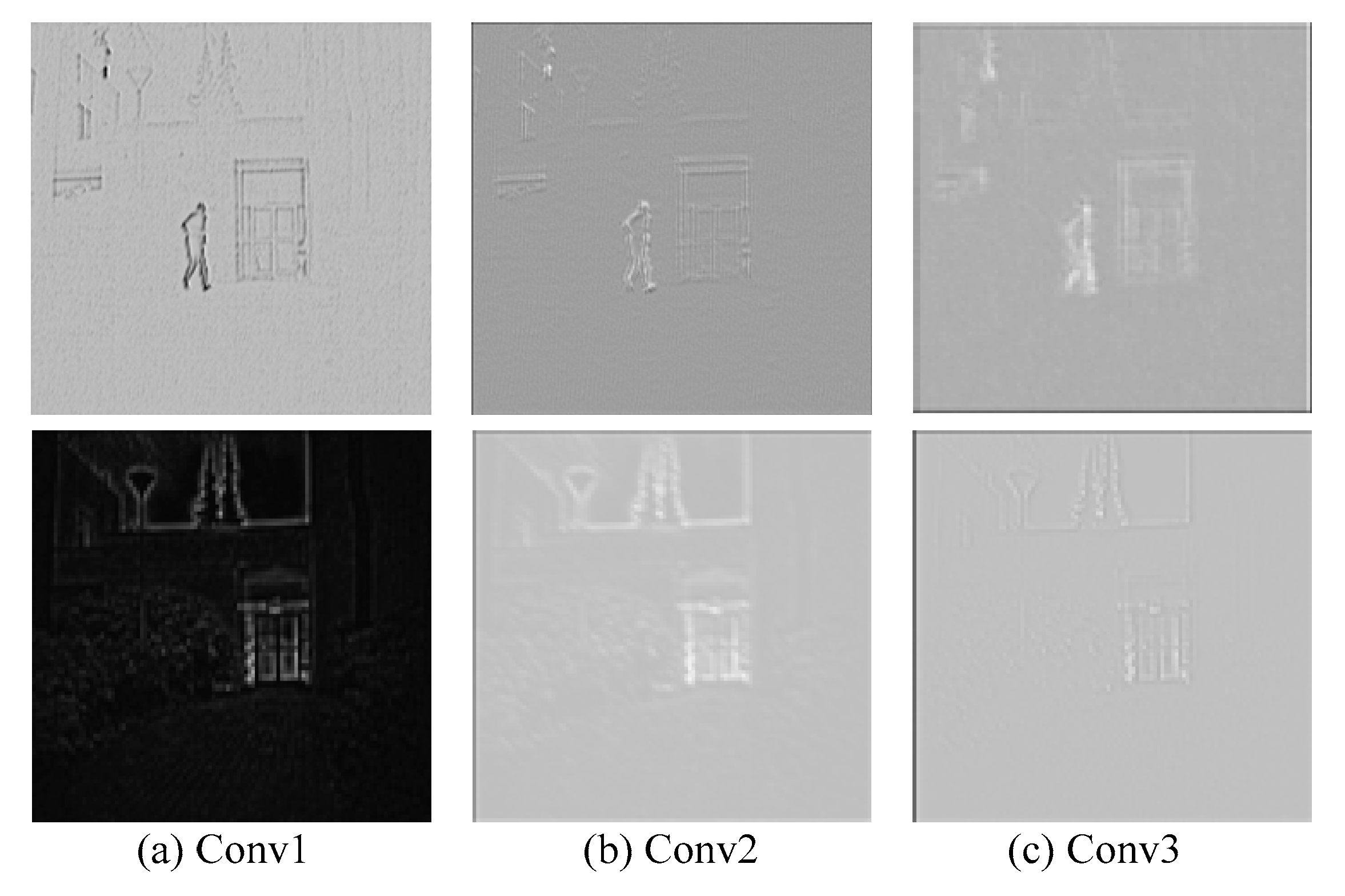
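The decomposition described in the introduction filters each source image with a guided filter whose guidance image contains only the strong edges. The NumPy sketch below shows that two-scale split under stated assumptions: it uses the standard gray-scale guided filter of He et al., takes the filter output as the base part and the residual as the detail part, and treats the radius `r`, the regularizer `eps`, and the strong-edge image itself as hypothetical inputs rather than the paper's settings.

```python
import numpy as np


def box_mean(img: np.ndarray, r: int) -> np.ndarray:
    """Mean over a (2r+1) x (2r+1) window via an integral image (edge padding at borders)."""
    k = 2 * r + 1
    h, w = img.shape
    padded = np.pad(img.astype(float), r, mode="edge")
    # Integral image with a leading row and column of zeros.
    s = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    total = s[k:k + h, k:k + w] - s[:h, k:k + w] - s[k:k + h, :w] + s[:h, :w]
    return total / (k * k)


def guided_filter(guide: np.ndarray, src: np.ndarray, r: int = 8, eps: float = 1e-2) -> np.ndarray:
    """Gray-scale guided filter: in each window, src is fitted as a * guide + b."""
    mean_g = box_mean(guide, r)
    mean_s = box_mean(src, r)
    cov_gs = box_mean(guide * src, r) - mean_g * mean_s
    var_g = box_mean(guide * guide, r) - mean_g * mean_g
    a = cov_gs / (var_g + eps)
    b = mean_s - a * mean_g
    return box_mean(a, r) * guide + box_mean(b, r)


def two_scale_decompose(src: np.ndarray, strong_edges: np.ndarray, r: int = 8, eps: float = 1e-2):
    """Assumed split: base = filter output (keeps only edges present in the guide),
    detail = src - base (holds the tiny details the guide does not contain)."""
    base = guided_filter(strong_edges, src, r, eps)
    return base, src - base


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    edges = np.zeros((64, 64))
    edges[:, 32:] = 1.0  # hypothetical strong-edge guidance image
    src = 0.8 * edges + 0.05 * rng.standard_normal((64, 64))  # step edge plus fine texture
    base, detail = two_scale_decompose(src, edges)
    print(float(np.abs(detail).mean()))
```

Where the guide is flat, the filter reduces to a local mean of the source, so fine texture ends up in the detail part; near a strong edge the guide's variance dominates `eps` and the edge stays in the base part.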

2.2. Fusion of Detail Parts Based on CNN and DCT

2.2.1. Infrared Detail Layer Feature Extraction by Rough CNN

2.2.2. Visible Detail Layer Feature Extraction by Sophisticated CNN
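The method uses a rough CNN for the infrared detail layer and a sophisticated CNN for the visible detail layer, and keeps the feature maps of several layers for the later multi-layer fusion. The architectures are not given in this copy, so the sketch below is only an illustration of multi-layer convolutional feature extraction: it assumes 3×3 convolution plus ReLU layers, assumes the two branches differ only in depth and width (`ROUGH_CHANNELS` and `SOPHISTICATED_CHANNELS` are hypothetical), and uses random weights in place of the trained ones from Section 2.2.3.

```python
import numpy as np


def conv3x3_relu(x: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """3x3 convolution (cross-correlation, as in CNNs) with zero 'same' padding, then ReLU.

    x: (C_in, H, W); weights: (C_out, C_in, 3, 3).
    """
    _, h, w = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((weights.shape[0], h, w))
    for dy in range(3):
        for dx in range(3):
            patch = xp[:, dy:dy + h, dx:dx + w]  # (C_in, H, W) shifted view
            out += np.einsum("oc,chw->ohw", weights[:, :, dy, dx], patch)
    return np.maximum(out, 0.0)


def extract_features(detail: np.ndarray, channels: list, seed: int = 0) -> list:
    """Apply len(channels) conv+ReLU layers and return every layer's feature maps.

    Random He-style weights stand in for trained ones (illustration only).
    """
    rng = np.random.default_rng(seed)
    x = detail[np.newaxis].astype(float)  # (1, H, W)
    features = []
    for c_out in channels:
        c_in = x.shape[0]
        w = rng.standard_normal((c_out, c_in, 3, 3)) * np.sqrt(2.0 / (9 * c_in))
        x = conv3x3_relu(x, w)
        features.append(x)
    return features


# Hypothetical layer widths: a shallow branch for infrared, a deeper one for visible.
ROUGH_CHANNELS = [16, 16]
SOPHISTICATED_CHANNELS = [16, 32, 32, 64]
```

For example, `extract_features(ir_detail, ROUGH_CHANNELS)` returns two stacks of 16 feature maps at the input resolution, while the visible branch returns four stacks ending in 64 maps; each list is what a multi-layer fusion rule would consume.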

2.2.3. Training

2.2.4. Design of Multi-Layer Feature Fusion Strategy by DCT

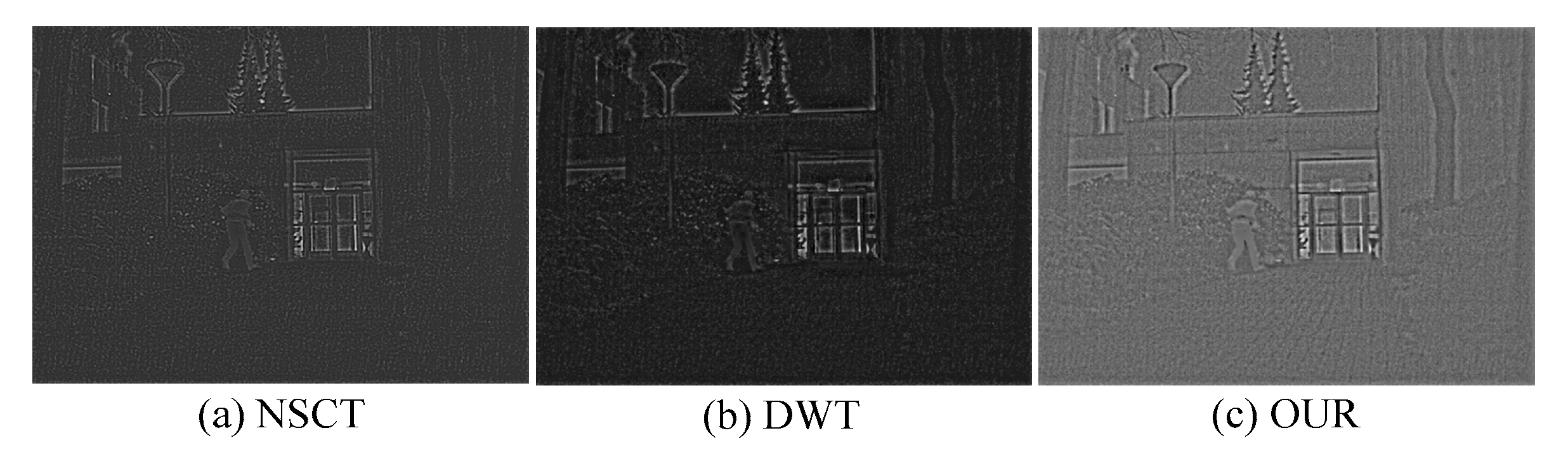
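The multi-layer feature fusion strategy is built around the DCT, but its exact rule is not reproduced in this copy. To show the DCT machinery such a strategy relies on, the sketch below swaps in a generic, widely used DCT-domain rule: both detail layers are transformed block by block with an orthonormal 2D DCT-II, the coefficient with the larger magnitude is kept at each position, and the result is inverse-transformed. The block size of 8 is an assumption, and this is not the paper's CNN-feature-driven strategy.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix C: the 2D DCT of block X is C @ X @ C.T."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c


def fuse_details_dct(d_ir: np.ndarray, d_vis: np.ndarray, block: int = 8) -> np.ndarray:
    """Generic rule (not the paper's): keep the larger-magnitude DCT coefficient per position."""
    if d_ir.shape != d_vis.shape:
        raise ValueError("detail layers must have the same shape")
    h, w = d_ir.shape
    if h % block or w % block:
        raise ValueError("pad the images to a multiple of the block size first")
    c = dct_matrix(block)
    fused = np.empty((h, w))
    for y in range(0, h, block):
        for x in range(0, w, block):
            a = c @ d_ir[y:y + block, x:x + block] @ c.T   # forward 2D DCT
            b = c @ d_vis[y:y + block, x:x + block] @ c.T
            f = np.where(np.abs(a) >= np.abs(b), a, b)     # max-magnitude selection
            fused[y:y + block, x:x + block] = c.T @ f @ c  # inverse 2D DCT
    return fused
```

Because the DCT matrix is orthonormal, the inverse is just its transpose, and fusing a layer with itself or with an all-zero layer returns that layer unchanged.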

2.3. Fusion of Base Parts by Weighting Method
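The base parts are fused with a weighting method whose weights are not given in this copy. A minimal sketch, assuming a convex combination of the infrared and visible base parts with a hypothetical weight `w_ir` (a scalar, defaulting to 0.5, or a per-pixel map in [0, 1]):

```python
import numpy as np


def fuse_base(b_ir: np.ndarray, b_vis: np.ndarray, w_ir=0.5) -> np.ndarray:
    """Assumed weighting: w_ir * B_ir + (1 - w_ir) * B_vis, with w_ir scalar or per-pixel."""
    w = np.asarray(w_ir, dtype=float)
    if np.any((w < 0.0) | (w > 1.0)):
        raise ValueError("weights must lie in [0, 1]")
    return w * b_ir + (1.0 - w) * b_vis
```

With the default weight, base parts of 0.2 and 0.6 fuse to 0.4; a per-pixel map lets a saliency-style weighting favor infrared targets locally, if such a map is available.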

2.4. Two-Scale Image Reconstruction

- Step 1: As described in Section 2.2, CNNs extract the features of the detail parts, and a multi-layer feature fusion strategy built on the DCT produces the fused detail part.

- Step 2: As described in Section 2.3, the fused base part is obtained by the weighting method.

- Step 3: The fused image is obtained as shown in (10):
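Equation (10) itself is missing from this copy. Assuming the usual two-scale reconstruction, which inverts a split of the form detail = source − base, the fused image is the sum of the fused base part and the fused detail part. The optional clipping in this sketch is a further assumption that intensities are normalized to [0, 1].

```python
import numpy as np


def reconstruct(fused_base: np.ndarray, fused_detail: np.ndarray, clip: bool = True) -> np.ndarray:
    """Assumed two-scale reconstruction: fused image = fused base part + fused detail part."""
    fused = fused_base + fused_detail
    # Clipping assumes intensities normalized to [0, 1]; pass clip=False for other ranges.
    return np.clip(fused, 0.0, 1.0) if clip else fused
```

Applied to a single image's own base and detail parts, this sum returns the image exactly, so any change in the fused result comes from the two fusion rules rather than from the decomposition.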

3. Experimental Results

3.1. Experimental Settings

3.1.1. Image Sets

3.1.2. Compared Methods

3.1.3. Computation Platform

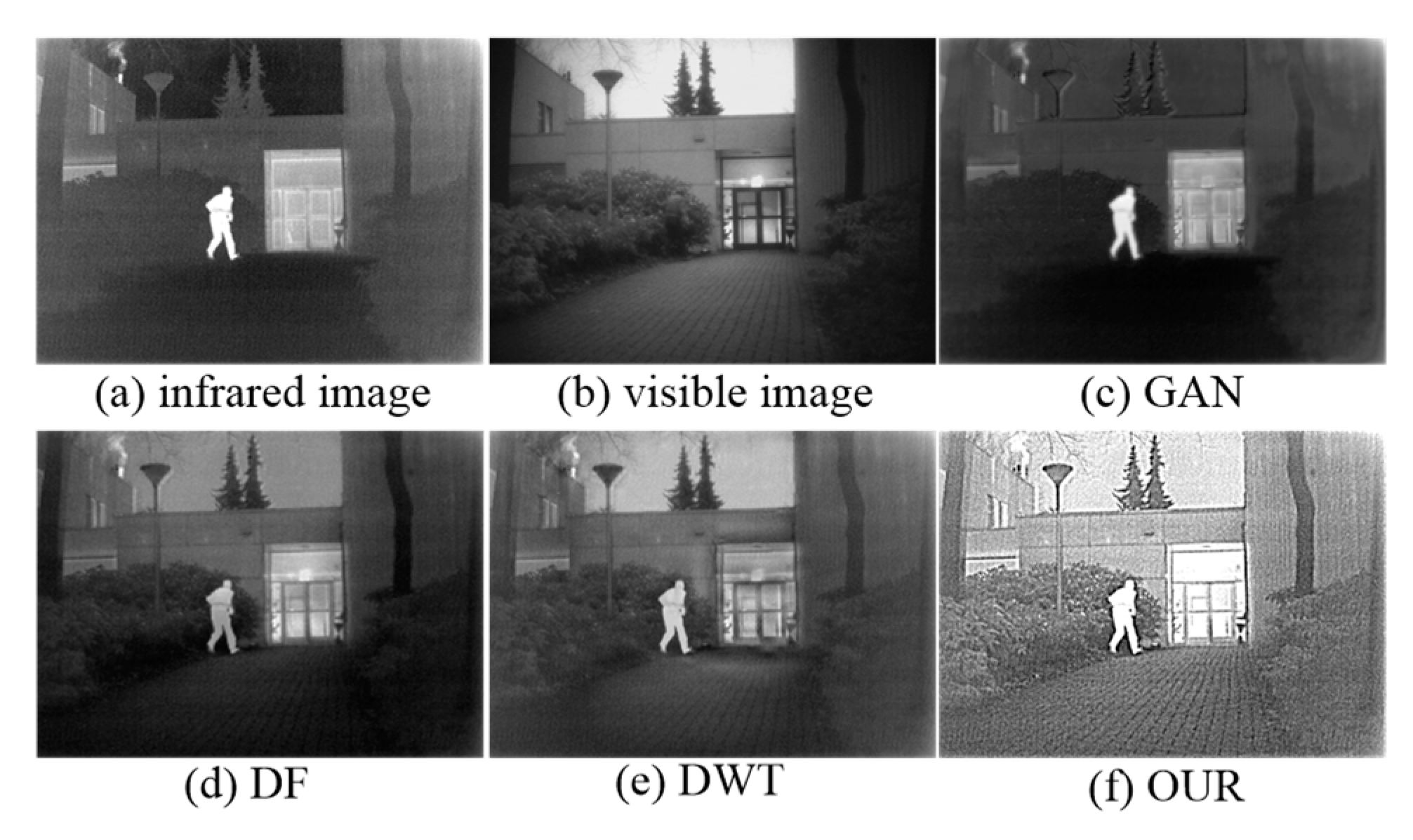

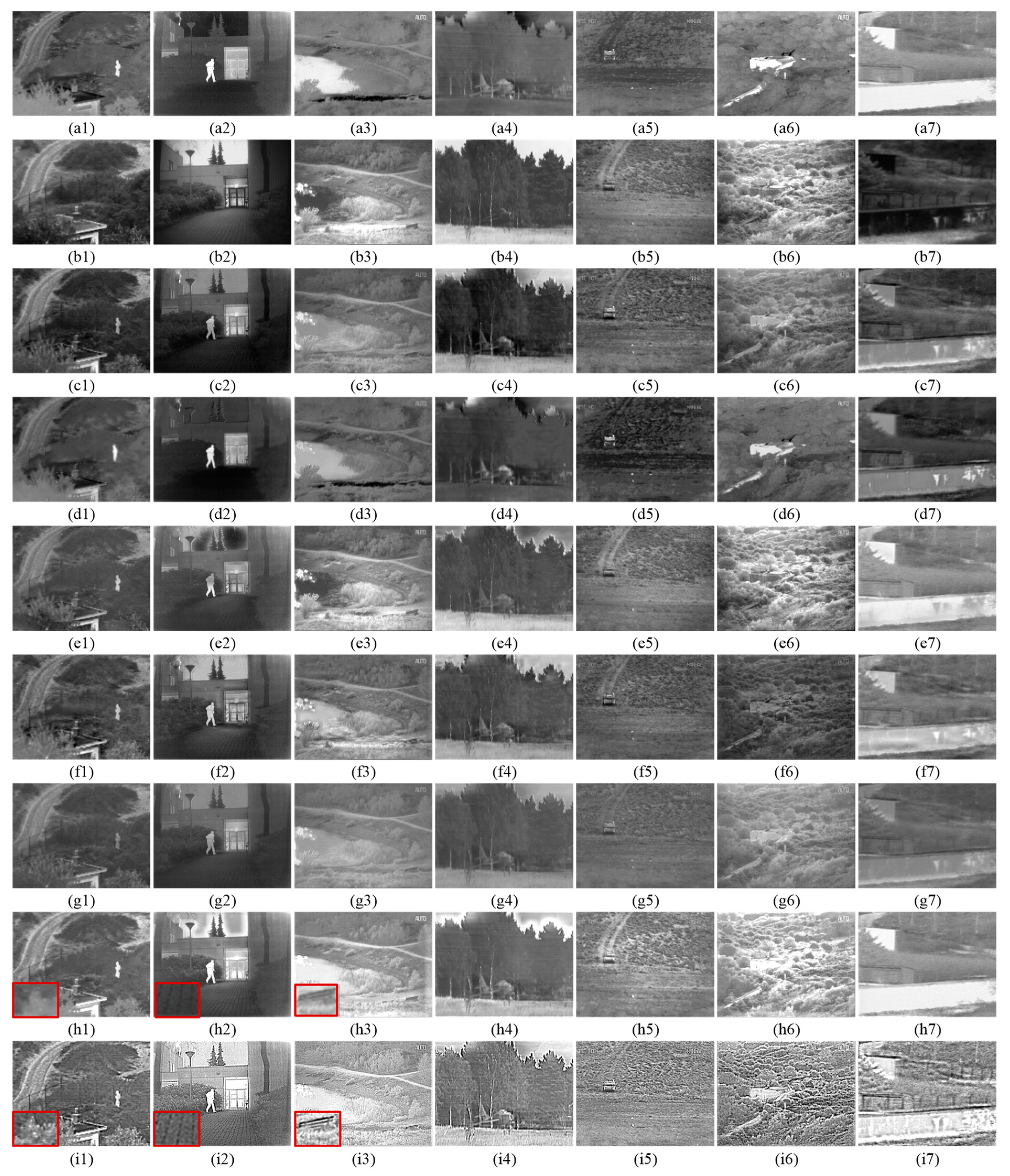

3.2. Subjective Performance Evaluation

3.3. Objective Performance Evaluation

3.4. Computational Costs

4. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest


| Source Image | Index | DF | GAN | GFF | QBI | DWT | DCT | OUR |
|---|---|---|---|---|---|---|---|---|
| Camp | EN | 6.8369 | 6.5921 | 6.3337 | 6.7693 | 6.7044 | 6.3127 | 7.0462 |
| | MG | 5.6419 | 3.1139 | 4.2137 | 5.0931 | 5.0542 | 3.3768 | 11.7701 |
| | MI | 13.6737 | 13.1841 | 12.6675 | 13.5386 | 13.4087 | 12.6255 | 14.0923 |
| | SD | 34.4917 | 25.8433 | 25.3713 | 33.7623 | 27.5241 | 24.6854 | 36.8892 |
| | SF | 11.1869 | 6.3977 | 9.0002 | 10.8049 | 9.9757 | 6.7636 | 22.9205 |
| | VIF | 0.5707 | 0.3215 | 0.5882 | 0.6896 | 0.5539 | 0.5873 | 0.6758 |
| Kaptein | EN | 6.9733 | 6.7229 | 6.8564 | 7.1692 | 6.9279 | 6.5259 | 7.3641 |
| | MG | 6.0872 | 1.9692 | 3.5963 | 4.7491 | 4.3282 | 2.8373 | 18.1836 |
| | MI | 13.9466 | 13.4458 | 13.7128 | 14.3384 | 13.8557 | 13.0519 | 14.4281 |
| | SD | 43.6383 | 34.9727 | 33.2555 | 49.5339 | 41.3485 | 31.5369 | 54.9976 |
| | SF | 12.3499 | 4.3892 | 7.0715 | 9.2726 | 8.0446 | 5.2636 | 32.0286 |
| | VIF | 0.5716 | 0.3975 | 0.7058 | 0.9552 | 0.7783 | 0.7079 | 1.0037 |
| Laker | EN | 6.8733 | 6.5194 | 7.3661 | 7.0391 | 6.9733 | 6.5385 | 6.9747 |
| | MG | 6.0659 | 2.8229 | 4.6354 | 4.8585 | 4.4619 | 2.7834 | 19.1483 |
| | MI | 13.7466 | 13.0388 | 14.7323 | 14.0781 | 13.9525 | 13.0771 | 14.5495 |
| | SD | 30.4503 | 30.2064 | 43.9306 | 40.484 | 35.5537 | 24.1975 | 44.0385 |
| | SF | 12.2313 | 6.2371 | 11.4587 | 10.4112 | 9.9456 | 6.2536 | 35.7208 |
| | VIF | 0.4825 | 0.5303 | 0.8905 | 0.8532 | 0.7118 | 0.6406 | 0.8816 |
| Airplane | EN | 7.0575 | 6.0773 | 6.4477 | 6.7303 | 6.6552 | 6.1811 | 7.2813 |
| | MG | 4.6496 | 1.4983 | 2.2827 | 2.7531 | 2.4319 | 1.5607 | 9.4955 |
| | MI | 14.1151 | 12.1546 | 12.8955 | 13.4606 | 13.3103 | 12.3621 | 14.5661 |
| | SD | 55.9542 | 20.9171 | 44.6439 | 47.9522 | 41.3763 | 30.4096 | 46.9162 |
| | SF | 11.0442 | 3.3018 | 5.2581 | 7.1919 | 5.4297 | 3.3898 | 20.1914 |
| | VIF | 0.8279 | 0.5059 | 0.7515 | 0.8799 | 0.7828 | 0.6889 | 1.1583 |
| Fennek | EN | 6.2403 | 6.1625 | 6.3859 | 6.6034 | 5.9661 | 5.3997 | 7.3157 |
| | MG | 9.4777 | 4.1883 | 4.3086 | 8.4251 | 4.9379 | 3.2229 | 26.3613 |
| | MI | 12.4807 | 12.3251 | 12.7719 | 13.2068 | 11.9321 | 10.7994 | 14.1314 |
| | SD | 19.1077 | 18.6671 | 20.4052 | 25.2119 | 15.4756 | 10.4274 | 39.9587 |
| | SF | 16.2758 | 7.1355 | 7.4061 | 14.3185 | 8.3821 | 5.4628 | 43.5421 |
| | VIF | 0.4431 | 0.4852 | 0.8333 | 1.1037 | 0.5843 | 0.5822 | 0.7964 |
| Bunker | EN | 7.0003 | 6.4505 | 7.4894 | 6.9885 | 6.3587 | 6.7014 | 7.1975 |
| | MG | 8.7679 | 3.7572 | 7.0454 | 8.2489 | 4.2099 | 4.1189 | 23.0965 |
| | MI | 14.0061 | 12.9011 | 14.9787 | 13.9771 | 12.7175 | 13.4028 | 14.5949 |
| | SD | 31.6247 | 26.4067 | 45.271 | 39.6577 | 20.4897 | 25.5854 | 47.9166 |
| | SF | 15.5211 | 6.9616 | 13.3655 | 15.1211 | 7.6618 | 7.5333 | 40.4068 |
| | VIF | 0.3636 | 0.3651 | 0.8586 | 0.7384 | 0.5103 | 0.5241 | 0.6131 |
| Wall | EN | 7.1508 | 6.7714 | 6.9342 | 6.6606 | 6.8639 | 6.3696 | 7.2837 |
| | MG | 4.9838 | 3.9107 | 4.0493 | 4.4726 | 4.2235 | 2.7378 | 16.4612 |
| | MI | 14.3016 | 13.5428 | 13.8684 | 13.3211 | 13.7277 | 12.7393 | 14.5674 |
| | SD | 41.5795 | 36.4328 | 41.3634 | 45.7268 | 38.3868 | 24.0783 | 46.3583 |
| | SF | 9.3014 | 7.2743 | 8.0142 | 8.7172 | 7.7863 | 5.0341 | 29.1331 |
| | VIF | 0.7201 | 0.2913 | 0.7068 | 0.8944 | 0.6593 | 0.6471 | 0.8073 |
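The objective evaluation reports EN, MG, MI, SD, SF and VIF. The sketch below implements commonly used definitions of the first five for 8-bit gray images (values in [0, 255]). The paper's exact formulas, normalizations and MI variant are not given in this copy, so this sketch is not guaranteed to reproduce the numbers in the table; VIF is omitted because it needs a full visual information fidelity model.

```python
import numpy as np


def entropy(img: np.ndarray) -> float:
    """EN: Shannon entropy (bits) of the 256-bin gray-level histogram."""
    hist, _ = np.histogram(img, bins=256, range=(0, 256))
    p = hist[hist > 0] / hist.sum()
    return float(-np.sum(p * np.log2(p)))


def std_dev(img: np.ndarray) -> float:
    """SD: standard deviation of the intensities."""
    return float(np.std(img))


def spatial_frequency(img: np.ndarray) -> float:
    """SF: sqrt(RF^2 + CF^2) from horizontal and vertical first differences."""
    f = img.astype(float)
    rf2 = np.mean(np.diff(f, axis=1) ** 2)  # row frequency, squared
    cf2 = np.mean(np.diff(f, axis=0) ** 2)  # column frequency, squared
    return float(np.sqrt(rf2 + cf2))


def mean_gradient(img: np.ndarray) -> float:
    """MG (average gradient): mean of sqrt((dx^2 + dy^2) / 2) over the image."""
    f = img.astype(float)
    dx = np.diff(f, axis=1)[:-1, :]  # crop both to (H-1, W-1)
    dy = np.diff(f, axis=0)[:, :-1]
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))


def mutual_information(a: np.ndarray, b: np.ndarray) -> float:
    """MI between two images from their joint 256x256 gray-level histogram (bits)."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=256, range=[[0, 256], [0, 256]])
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)
    py = pxy.sum(axis=0, keepdims=True)
    nz = pxy > 0
    return float(np.sum(pxy[nz] * np.log2(pxy[nz] / (px @ py)[nz])))


def fusion_mi(ir: np.ndarray, vis: np.ndarray, fused: np.ndarray) -> float:
    """A common fusion MI metric: MI(IR, F) + MI(VIS, F)."""
    return mutual_information(ir, fused) + mutual_information(vis, fused)
```

Higher values of all five indicate more information, contrast or sharpness in the fused image under these definitions.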

| Method | Camp | Kaptein | Lake | Airplane | Fennek | Bunker | Wall |
|---|---|---|---|---|---|---|---|
| DF | 2.074 | 2.171 | 2.189 | 2.196 | 2.122 | 2.139 | 2.191 |
| GAN | 0.154 | 0.171 | 0.253 | 0.184 | 0.102 | 0.137 | 0.146 |
| GFF | 0.556 | 0.486 | 0.583 | 0.421 | 0.305 | 0.587 | 0.561 |
| QBI | 0.038 | 0.051 | 0.082 | 0.081 | 0.041 | 0.062 | 0.051 |
| DWT | 0.581 | 0.537 | 0.638 | 0.473 | 0.412 | 0.638 | 0.605 |
| DCT | 0.013 | 0.056 | 0.090 | 0.059 | 0.007 | 0.095 | 0.153 |
| OUR | 1.914 | 1.963 | 2.121 | 1.831 | 1.675 | 1.598 | 2.098 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, Y.; Dong, L.; Ji, Y.; Xu, W. Infrared and Visible Image Fusion through Details Preservation. Sensors 2019, 19, 4556. https://doi.org/10.3390/s19204556
