Abstract

Observing animal movements enables us to understand animal behavior changes, such as migration, interaction, foraging, and nesting. Based on spatiotemporal changes in weather and season, animals instinctively change their position for foraging, nesting, or breeding. It is known that moving patterns are closely related to their traits. Analyzing and predicting animals’ movement patterns according to spatiotemporal change offers an opportunity to understand their unique traits and acquire ecological insights into animals. Hence, in this paper, we propose an animal movement prediction scheme using a predictive recurrent neural network architecture. To do that, we first collect and investigate geo records of animals and conduct pattern refinement by using random forest interpolation. Then, we generate animal movement patterns using the kernel density estimation and build a predictive recurrent neural network model to consider the spatiotemporal changes. In the experiment, we perform various predictions using 14 K long-billed curlew locations that contain their five-year movements of the breeding, non-breeding, pre-breeding, and post-breeding seasons. The experimental results confirm that our predictive model based on recurrent neural networks can be effectively used to predict animal movement.

1. Introduction

Analyzing animal movements is the first step toward understanding the ecosystem. Animal movements provide potential information for obtaining ecological insights on topics such as habitat selection, population dynamics, and group behavior. Over the decades, many studies have been carried out based on the awareness of this importance [1,2,3,4]. Since the 1990s, the development of telemetry technologies such as the global positioning system (GPS) and advanced research and global observation satellite (ARGOS) has accelerated various studies to model animal movements. Furthermore, with the availability of remote sensing technology, diverse meteorological and geographical sensing data can be continuously acquired, and the amount is sufficient to carry out reasonable modeling. Many scientists have emphasized the need to use modeling processes to understand animal movements and the factors correlated with such movements [5,6,7,8].

Recently, as the versatility of machine learning methodologies has been observed in various domains, many efforts have been made to model animal movements using machine learning [9,10,11,12]. For instance, Patterson et al. proposed a two-phase prediction scheme to classify two animal behavior states: transient and resident. They used the maximum likelihood and monte carlo (MC) methods, and a hidden markov model (HMM) was used to predict the potential state of behavior change [13]. MoveHMM [14], which is a representative modeling tool based on hidden markov processes, showed that probability-based prediction of state switching is possible by using features such as animal step length and turning angle. More recently, the deep neural network (DNN) has become a major tool in animal movement modeling. For example, Hirakawa et al. focused on filling in the observation gap that frequently occurred while tracking wild animals periodically [15]. They found that previous interpolation methods (e.g., linear, curvilinear, random walk) are not sufficient to recover large spatial gaps. For this reason, they used inverse reinforcement learning (IRL) to recover the trajectory by estimating the gap as a reward function. Zhiping et al. utilized a six-layer convolution neural network (CNN) to distinguish fish shapes and track the zebrafish trajectory [16]. Browning et al. proposed a deep architecture to predict the diving behavior of seabirds associated with foraging. They combined the GPS and time depth record (TDR) from 108 individuals and trained deep learning models for predicting the behavior of European shags, common guillemots, and razorbills. An optimized model achieved 94% and 80% prediction accuracy of non-diving and diving behavior, respectively [17].

Despite the great effort to build animal movement models using geo records (e.g., GPS, ARGOS), the results have been limited to the identification of animal movement state or the analysis of correlations with environmental conditions. The key function that should be implemented in such a model is the prediction of animal movements according to changes in various spatiotemporal factors, which is possible by considering historical movement patterns. Such a model could be used for a variety of purposes, such as understanding animal behavior or protecting animal habitats, but to the best of our knowledge, there are very few reports on this topic.

Therefore, in this paper, we propose an animal movement prediction scheme based on predictive recurrent neural networks (RNNs). To improve the prediction accuracy, we focus on three issues, which are our main contributions. (1) To fill the observation gap, we propose an optimized random forest-based interpolation scheme that considers weather information and movement-related vectors. In fact, interpolating the animal pathway is a very important task for predicting animal movement when considering realistic conditions. (2) We propose a method to construct an image sequence of representative animal movements by using the kernel density estimation (KDE) algorithm. The generated image sequence indicates a reasonable movement range of the animal. (3) We construct a predictive RNN model for movement prediction and evaluate its performance.

2. Related Works

To date, many researchers have attempted to figure out the pattern of animal movement using various machine learning technologies. For instance, Jonsen et al. [18] proposed a meta-analysis method, which combines state-space models (SSM) and Bayesian approach for individual movement inference. They analyzed the relationship between leatherback turtle movement and environmental changes such as sea surface temperature. De Groeve et al. [19] developed an extracting method of spatiotemporal patterns in animal trajectories. They conducted a tree-based approach to identify the pattern of habitat selection and visualize the exploring temporal pattern of habitat use through hierarchical trees. Spigel et al. [20] proposed a conceptual simulation model of animal personality which includes foraging search performance, habitat preference, home ranging utilization pattern, social network and animal population progress. They argued that considering personality-dependent movement can generate far-reaching predictions about the spatial pattern in ecology. Wang [21] inferred animal behavior using machine learning methods such as state-space models, hidden Markov models, random forests, and support vector machines. Through various experiments, they confirmed that machine learning methods can be applied for quantifying the spatial and temporal distributions of specific behavior patterns.

Predictions of long-short term animal movement and population shift have ecological importance because they are directly related to significant factors such as spatiotemporal changes of weather and habitat. Very few studies have predicted movement using long-short term tracking animal data. Li et al. [22] proposed a periodic movement analysis algorithm named ‘Periodica’. They focused on finding periods in complex animal movement and developed an optimal method of periodic animal behaviors analysis. The algorithm contains Fourier transform, autocorrelation, and hierarchical clustering for retrieving periods and formulating the periodic behaviors. Bar-David et al. [23] presented a simple recursion analysis model that simulates the movements of large-herbivore groups. They defined the movement pattern, which includes clockwise and counter-clockwise pattern and simply formulated the African buffalo trajectories using furrier transform. In 2010, Fink et al. [24] developed spatiotemporal exploratory models (STEM) using broad-scale survey data. They focused on the population change of animal distribution and proposed a flexible framework for analyzing the dynamic pattern of species occurrence and abundance from broad-scale data. To construct STEM, they utilized an ensemble model of decision trees. Through various experiments, they emphasized that STEM is a useful analytical tool of animal exploratory. Three years later, Fink et al. [25] presented an adaptive spatiotemporal exploratory model (AdaSTEM), which can exploit variation in the density of observations while adapting to multiple scales in space and time. The AdaSTEM utilized the crowdsourcing data of animal observation for building estimation model and provided an ecological insight that indicates population movement of long-range migration animals. Through AdaSTEM, they estimated the spatiotemporal distribution of Barn Swallow across the western hemisphere during the breeding season, fall migration and winter migration.

To summarize, previous studies on movement prediction models have mainly focused on the behavior state changes such as ‘breeding’ to ‘migrating’ or ‘roaming’ to ‘resting’. As far as we know, few works have been done to predict long-short term animal movement or population shift especially by using continuous tracking data to construct a reasonable prediction model. In this paper, we propose an animal movement prediction model to predict the aggregate movements of animals over short-long term periods by considering continuous tracking data of individuals which are effected by spatiotemporal changes such as weather and movement characteristics.

3. Methods

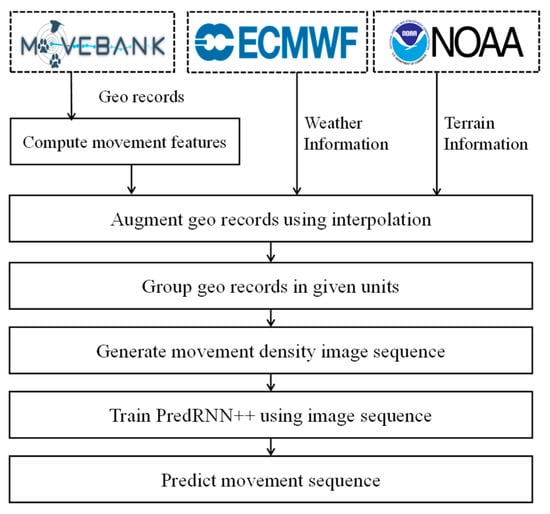

Figure 1 presents the overall flow of our animal movement prediction scheme. First, we collect and investigate the spatiotemporal data closely related to animal movements, such as geo records of animals, weather, and terrain data. In order to refine the movement pattern, we perform random forest interpolation by considering collected data as independent feature values. Then, we uniformly split the geo records and generate the movement density sequence that represents the valid range of animal movement. To build a prediction model, we train a predictive RNN using movement density sequences. Lastly, we evaluate the prediction performance of the model based on various accuracy metrics and visualize the prediction results.

Figure 1.

Overall flow of animal movement prediction.

3.1. Dataset

For the collection of animal movement data, we used geo records of animals from the Movebank website [26]. Movebank is an online database of animal tracking data. In particular, we used the “Long-billed curlew migration from Idaho” dataset, which contains 64 trajectories of long-billed curlews. The dataset was collected over six years, from 2013 to 2019, and it consists of ARGOS latitude, ARGOS longitude, timestamp, and tag local identifier. According to the dataset description, the number of deployed locations is 148,983, and there are no outliers. The coordinate system follows the WGS84 reference format.

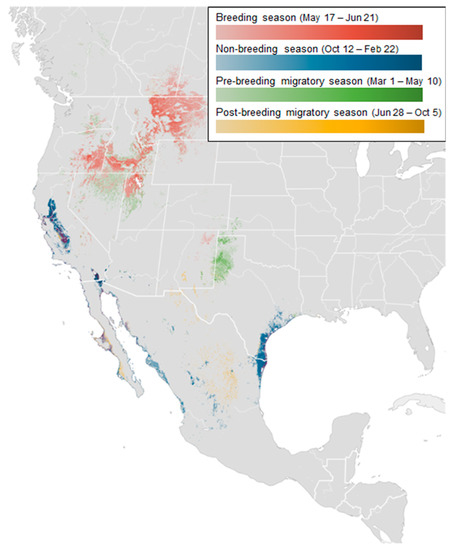

To represent the movement range of long-billed curlews, we use the abundance map [27]. Figure 2 shows the abundance distribution map of long-billed curlews. In the map, the four seasons are represented by different colors, and the distribution density is expressed by the contrast of color. The distribution itself is calculated based on geo records from individuals that have moved more than one kilometer over one hour. For instance, the dark red indicates high-density areas during the breeding season (e.g., Montana, Idaho). During the non-breeding season, San Francisco and California show high density.

Figure 2.

Seasonally averaged abundance map of long-billed curlews.

Animal migration routes are closely related to weather and terrain. Hence, for prediction, we collected weather and terrain data from the European Centre for Medium-Range Weather Forecasts (ECMWF) and the US National Oceanic and Atmospheric Administration (NOAA) and selected eight features for our feature set: Humidity, vertical wind speed, horizontal wind speed, temperature, relative humidity, cloud coverage, land classification label (GlobCover), and land–sea mask.

In this paper, we use four typical features to represent animal movements: Heading, speed, acceleration, and moving distance. Such movement-related features can be easily calculated from the collected time-location records by using the “move” package of R statistics [28].

3.2. Movement Interpolation

Despite the high quality of tracking data, GPS location accuracy and positioning success (ratio between the observed and expected number of locations) are negatively influenced by a number of factors, including the terrestrial atmosphere, satellite constellation, environment of the transmitters (habitat, topography, and weather), and behavior pattern and movement intensity of the tagged animal [29]. When analyzing animal trajectories, lack of observation or erroneous geo records lead to inaccurate results. Hence, it is essential to estimate missing or erroneous animal locations based on correct geo records, which is called movement interpolation. Various interpolation methods, such as linear, cubic spline, and polynomial interpolation, have been widely used in studies on animal movement. Table 1 shows the major interpolation methods.

Table 1.

Comparison of major interpolation methods.

All these methods perform interpolation using a few observation points. Since they do not consider the environmental features such as weather and terrain, the interpolation accuracy could be lowered. To alleviate this problem, we use the random forest method, which can consider both observation points and environmental factors. The random forest method works by constructing several weak decision trees, which are trained by a random subset of features, and producing the result by averaging or voting all individual trees. The basic principle of random forest is bootstrap aggregating (Bagging) to reduce the variance of predicted values. Bagging selects the specific number of training set randomly and fits for optimizing tree construction. For this reason, the random forest has excellent performance in classification and regression problems.

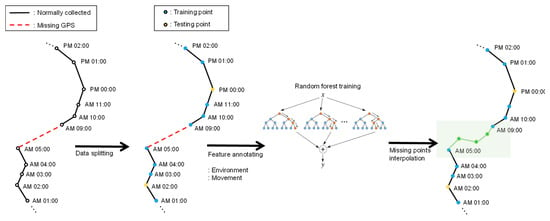

Figure 3 shows an example of movement interpolation process. Two normally observed points are connected by a solid line. If there are any missing points, then they are connected by a red dotted line. For instance, in the figure, we can see that observation records are missing from 6:00 am to 8:00 am. To interpolate these missing records, we first split the geo records into training sets and test sets. Then, we generate a feature tuple for each observation record by augmenting environmental data and movement data as we described in Section 3.1. Each feature tuple is in the form of . For a time sequence of length i, we can make a feature set P of . Basically, we select missing time points at random in the interpolation. Also, we assume that the points of test set are equal to missing time points. Our random forest model interpolates missing bivariate location (latitude and longitude) using a feature tuple of previous missing time points. For example, if a missing location exists a certain time t ( P), we predict the missing bivariate location at time t using the feature tuple at time t-1 as independent variables. The random forest training process follows bagging algorithm, which randomly selects a sample of size k from the feature set P and fits the decision trees. During the tree evolution, each node of the tree chooses the best split size given a randomly selected sample. Each tree is grown to the maximum size until it has no longer split. After tree evolution, prediction for missing coordinate can be calculated by averaging the predictions from all trees. Once the training is over, we are given an optimized random forest model, which can be used for interpolating missing observation points. After training, we perform inbuilt cross-validation and calculate the prediction accuracy using mean absolute percentage error (MAPE) and root mean square error (RMSE) to obtain an optimal random forest. In particular, we used the grid search method to find optimal parameters for the random forest. To construct and train the model, we used scikit-learn [34], a python machine learning package.

Figure 3.

Movement interpolation using the random forest method.

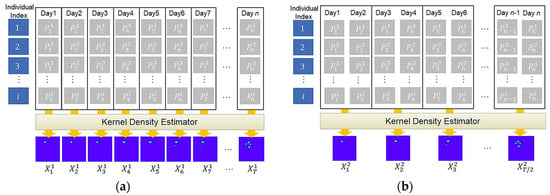

3.3. Movement Density Sequence Generation

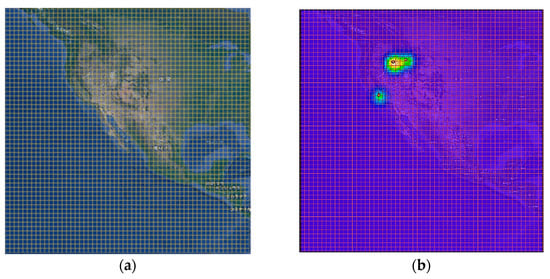

After movement interpolation, we generate the movement density sequence that eventually indicates the movement range of animals. The movement density gives information which represents the spatial distribution of the moving animals. First, we construct a grid map of I × J considering the region of interest. I and J indicate the image width and height, respectively. Figure 4a shows an example of I × J grid map.

Figure 4.

Example of movement density image construction—(a) grid generation; (b) kernel density generation.

To describe animal movements effectively, we use the KDE method. This method has been widely used in movement ecology and detection of animal habitat [35,36,37]. It is one of non-parametric density estimation methods and improves the non-zero probability problem of the histogram method by using the kernel function. A kernel function K is controlled by the bandwidth parameter h. According to the kernel definition, we calculate the probability density function f for the input geo records using the following equation.

here, n and xi indicate the number of geo records and geo record at time i, respectively, and y indicates estimated density value. In particular, to reduce the complexity of computation, we use the Gaussian kernel for generating density map. In addition, we use the bandwidth parameter of 5 and normalize the kernel values to a range of 0 to 1. Figure 4b shows an example of animal movement density map using KDE.

3.4. Movement Prediction

In this section, we describe how to construct a movement prediction model using predictive RNNs. The predictive RNN structure is known to give better accuracy for sequence-based prediction than single or shallow structures. Predictive RNN structure such as convolutional long short-term memory (LSTM) has a stack of learning and memory units, which is effective in solving sequence prediction problems [38,39]. For instance, Xingjian et al. introduced a convolutional LSTM network approach for precipitation nowcasting. Using the Radar Echo dataset, which has a sequence of weather satellite images, they constructed a prediction model using the continuous stack of convolution LSTMs [40].

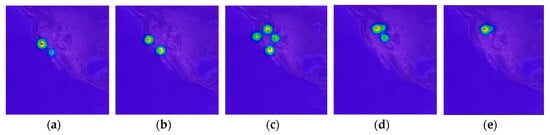

Likewise, we consider the movement prediction problem as a sequence-to-sequence prediction. For the animal movement observation period k, we can define a sequence of movement densities of size T defined by the input size and k by . Usually, each has a centroid of movement ranges, and the centroid shows the maximum density value of KDE. Generally, for a given input sequence , our model predicts its movement density sequence . Figure 5 illustrates an example of where the observation period k is one month.

Figure 5.

Examples of movement density sequence–(a) 12 Dec. 2013 to 12 Jan. 2014; (b) 12 Jan. 2013 to 12 Feb. 2014; (c) 12 Feb. 2014 to 12 Mar. 2014; (d) 12 Apr. 2014 to 12 May 2014; (e) 12 May 2014 to 12 Jun. 2014.

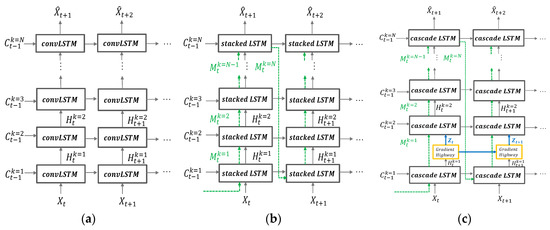

To predict animal movements, we use the PredRNN++ model [41]. RNN architecture has been widely used in the sequence data prediction. However, standard RNN can’t handle long-term temporal dependency because its loss gradient deteriorates exponentially over time. Despite convolutional LSTM being suggested as an alternative to standard RNN, it showed the vanishing gradient problem. PredRNN++ differs from the convolutional LSTM in terms of internal structure and mechanism of gradient transition to solve the back propagation problem. Figure 6 compares the structural differences of convolutional stacking LSTM, PredRNN [42], and PredRNN++.

Figure 6.

Structural differences of (a) convolutional stacking LSTM; (b) PredRNN; (c) PredRNN++.

PredRNN and PredRNN++ have in common that they use a spatiotemporal memory transient concept, but PredRNN++ differs from PredRNN in that it has unique structures of increasing recurrent depth. PredRNN++ has a cascade spatiotemporal memory that is effective in analyzing spatial correlation. Its cascade LSTM unit has a dual memory form of temporal memory and spatial memory. The spatial memory, depends on from the transient path, which is represented by dotted lines in Figure 6c. The temporal memory depends on previous state and is adjusted by current forget, input and input modulation gate. Here, indicates the vertical depth of the layer. Another difference is the existence of the gradient highway unit (GHU), which prevents quick vanishing and can send the gradient information to the deeper layer. The advantages of GHU were confirmed through various experiments [41].

Figure 7 shows the structural comparison of convolutional LSTM, stacked LSTM, and cascade LSTM. The green, blue, and red boxes indicate standard convolutional LSTM, stacked LSTM, and cascade LSTM, respectively. The element notations of structures are as follows: The sequence input at time (which denotes , output state , hidden state , spatiotemporal memory input gates , forget gates , input modulation gate , and output gate . As shown in Figure 7, to obtain effectiveness of sequence modeling, the cascade LSTM adds non-linear hyperbolic tangent layers to recurrent transitions more than convolutional LSTM and stacked LSTM. This has the effect of increasing the network depth, so it can efficiently predict images or video where sudden changes occur. The green-marked , blue marked and red are desired output of convolutional LSTM, stacked LSTM, and cascade LSTM respectively. The final output of cascade LSTM, , is more suitable for non-linearity data processing and prediction than convolutional LSTM and stacked LSTM outputs. Since most animal movements have non-linearity, the structure of the cascade LSTM is useful for predicting animal movements.

Figure 7.

Structural differences of convolutional LSTM, stacked LSTM, and cascade LSTM.

Equations (2)–(7) present the operating of cascade LSTM, where and indicates the operator of the convolution and the Hadamard product, is the Sigmoid function. The square brackets present concatenation of each tensor. The PredRNN++ used convolutional filters , where and has one by on convolution filer. The basic equations derived from standard LSTM [43] and the difference of standard LSTM and PredRNN++ is number of hyperbolic tangent layers and existing spatiotemporal memory cells. Equations (2) and (3) present standard LSTM gates operating equations, and Equation (4) is spatiotemporal gates update equation regulated by previous spatiotemporal memory, , and current state, . Equations (5) and (6) shows current spatiotemporal memory, , update operation. Final output, , is computed based on dual memory states and .

3.5. Model Construction

In this section, we describe how to construct our prediction model using PredRNN++. To train PredRNN++, we first set the resolution of the input sequence to 224 × 224 based on prediction accuracy and computation time. In addition, we use a 5-layer cascade LSTM with 128, 128, 64, 64, and 64 channels, which is known to give the best result. Here, the second layer corresponds to GHU in Figure 6. In addition, we use ADAM optimizer with learning rate and convolution filter size of 5. Following the training strategy of the predictive neural network [41,42], we use the scheduled sampling strategy. The sampling strategy conditionally selects true sequence and predicted values of the model as training input. It prevents initial learning problem and cover the inconsistency between prediction and training. For training, we used 80% of the complete dataset which were recorded from 10 April 2013 to 15 November 2017 as training and validation set, and the remaining 20% which were recorded from 16 November 2017 to 14 May 2019 as test set.

4. Experiments

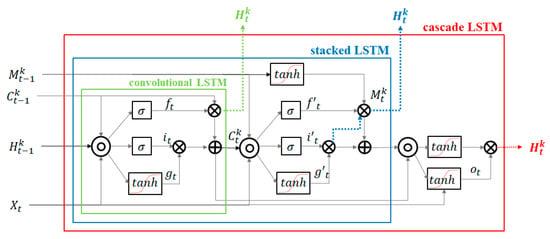

4.1. Experiment Designs

To predict animal movement over time, we first need to generate a sequence of kernel density images from collected geo records. In the experiment, we considered 10 different cases depending on how to group geo records, how many days to consider as input, and how many days to predict as output. For instance, in Figure 8, represents one collected geo record and indicates an individual of a particular animal species, E. For each group of geo records, the KDE generates one density image. For instance, Figure 8a,b shows a sequence of density images generated for a sequence of daily and two-day geo records, respectively. To see the effect of grouping size, we consider five different grouping sizes, which are 1, 2, 3, 7, and 15. After generating movement density images, we use them as an input for our predictive RNN. Table 2 shows an outline of the experiments.

Figure 8.

Examples of input sequence generation–(a) example of case 1; (b) example of case 2.

Table 2.

Experiment outline.

The density image has 224 × 224 resolution and corresponds to the rectangle area on the map according to WGS84 system with coordinates (–82,56), (–138,56), (–82,0), and (–138,0). For the generation of movement density sequence, we used the “SpatialEco” package [44], which is implemented in the R Studio software. Using the generated density images, we trained the PredRNN++.

4.2. Random Forest Interpolation

To compare the performances of interpolation methods, we measure the MAPE and RMSE. In statistics, MAPE is a popular metric for estimating prediction accuracy. MAPE and RMSE are defined by Equations (8) and (9), respectively. Here, is the number of geo records, and , and are the actual and predicted values, respectively.

We consider four interpolation methods as baseline, which are linear, Bezier, cubic spline, and kinematic, and compare them with our random forest method. In the test for comparing them, we first selected 15 representative individuals that include typical movement patterns such as resting, foraging and migration. Then, we built 15 random forest interpolation models for each individual. To construct such random forests, we selected 80% of individual geo records as training set and 20% of individual geo records as test set. When measuring the MAPE and RMSE, we considered actual geo records in the test set as missing and evaluated how multivariate random forest model recover well the missing values. Response variables are longitude and latitude at time i which are estimated from i-1 feature tuples. Table 3 and Table 4 show the MAPE of longitudes and latitudes. Table 5 and Table 6 show the RMSE of longitudes and latitudes. In the tables, the best MAPE and RMSE values are marked in bold for each individual. In most cases, our random forest method performed well and the average accuracy was the best.

Table 3.

Comparison of MAPEs for missing longitude records interpolation.

Table 4.

Comparison of MAPEs for missing latitude records interpolation.

Table 5.

Comparison of RMSEs for missing longitude records interpolation.

Table 6.

Comparison of RMSEs for missing latitude records interpolation.

4.3. Movement Prediction

To evaluate the prediction performance of animal movements, we used three different metrics: mean square error (MSE), structural similarity index (SSIM), and Gaussian mixture centroid distance. MSE is a standard method for evaluating the prediction quality in statistics; it measures the average square difference between pixel values of ground truth image G and pixel values of predicted image P by using Equation (10). In the equation, N × M is the total number of pixels and (i,j) represents a pixel of image.

On the other hand, SSIM is a method for measuring the similarity between two images and is designed to improve on traditional methods such as peak signal-to-noise ratio (PSNR) and MSE. SSIM is calculated using Equation (11), where μ, σ, and σGP are the average of pixels, variance, and covariance of G and P, respectively. By setting the range of SSIM from −1 and 1, we consider that, for the two images, there exists higher structural similarity, as their SSIM values are close to 1.

In the ecology research field, centroid analysis is widely used for evaluating animal dynamics [45,46,47]. Since our movement prediction model produce a movement sequence as prediction, we can calculate the density of movements and their centroids. We measure the centroid distance between the input image, G, and predicted image P, which indicates how accurate our model is in the prediction of animal movements. For measuring centroid distance, we first apply Gaussian mixture fitting [48] to extract centroids from each image and calculate their real coordinates. Then, we calculate their distance using the real coordinates. As mentioned above, we consider ten different cases in the experiment by grouping unit, input days, and output days and calculated the average for each metric. Table 7 shows the results, in which our prediction model showed the best performance from case 1.

Table 7.

MSE, RMSE, SSIM, and centroid distance measure results.

To evaluate the performance of our model, we compare it with vector auto regressive model (VAR), which is known to be useful for time-series prediction. In the comparison, we calculate the movement centroids using the Gaussian mixture fitting and use them as input values of the VAR. To construct VAR, we used the R package ‘VARS’ and utilized the ‘VARselect’ function to find out the most appropriate setting for VAR fitting. The comparison results are as follows.

From these Table 8, Table 9 and Table 10, we can see that our model outperforms VAR in most cases. In particular, our model, when trained using movement patterns of short observation period, showed better performance. In summary, our model can cover long range and short range predictions and hence can be used to predict not only seasonal movements such as strong migration but also typical movements such as foraging and roaming.

Table 8.

Comparison results of Case 1, Case 6 and VAR.

Table 9.

Comparison results of Case 4, Case 9 and VAR.

Table 10.

Comparison results of Case 5, Case 10 and VAR.

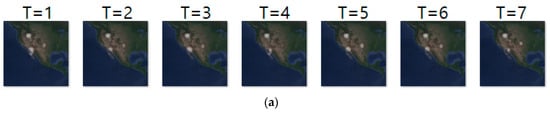

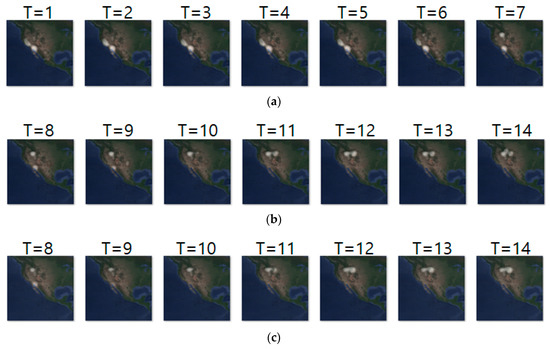

Figure 9 shows an example of movement prediction. In the figure, for an input sequence in (a), (b) and (c) show the ground truth sequence and predicted sequence, respectively. The input and ground truth sequences represent the short-distance migration movement of long-billed curlews from 10 May 2018 to 23 May 2018. The short-distance migration occurs between pre-breeding season and breeding season. This figure demonstrates that our model predicts the density location of animal movements after one-day (T = 1) with an error range of approximately 23 km. That is, based on the density images of the last seven days, our prediction model generated the density images of next seven days. To analyze the prediction accuracy in more detail, we conducted daily comparison of RMSE, SSIM, and centroid distance results are shown in Table 11. In the table, we can see that overall prediction results are quite reasonable and that prediction for the first day is the best.

Figure 9.

Movement prediction result in case 1–(a) input sequence; (b) ground truth; (c) predicted sequence.

Table 11.

Daily comparison of RMSE, SSIM, and centroid distance of case 1.

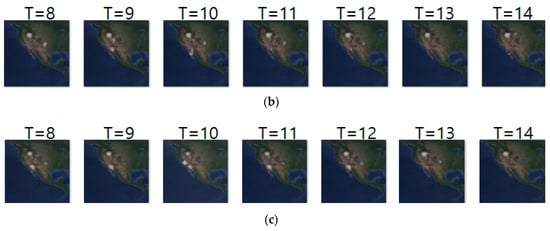

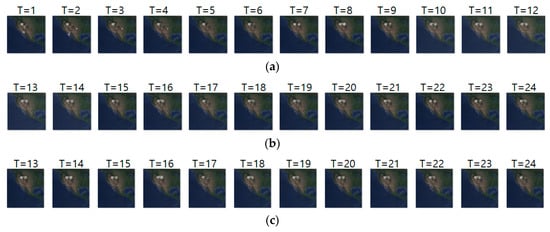

Figure 10 shows another prediction example for case 2, where we analyze geo records over two days. The input and ground truth sequences represent non-breeding season movements of long-billed curlews from 13 October 2018 to 8 November 2018. Generally, long-billed curlews have a habit of not deviating greatly from the habitat at the non-breeding season. Density images in Figure 10 shows such movements at the non-breeding season. Table 12 shows their prediction result. Compared with Table 11, most metrics gave worse results since case 2 considered geo-records using a longer period (of two days).

Figure 10.

Movement prediction result–(a) input sequence; (b) ground truth; (c) predicted sequence.

Table 12.

Daily comparison of RMSE, SSIM, and centroid distance of case 2.

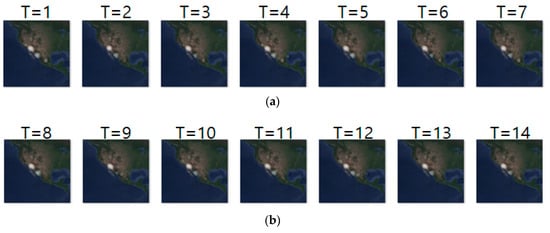

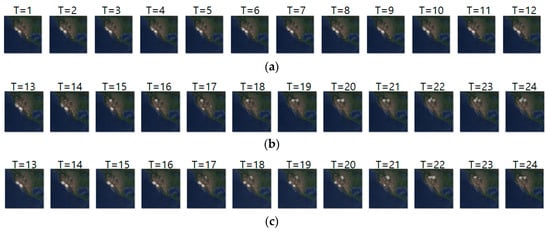

Likewise, Figure 11a–c shows the input sequence, ground truth sequence and predicted sequence of case 4, respectively. The input and ground truth sequences indicate the migration movement of long-billed curlews from 7 February 2018 to 9 May 2018. In the case 4, we generated one movement density image for geo records of seven days. In the figure, we can see three states of migration: non-breeding season movement shown at T = 1 to 6, migration beginning shown at T = 7 to 10 and migration ending shown at T = 11 to 14. Table 13 shows the daily comparison of RMSE, SSIM, and centroid distance.

Figure 11.

Movement prediction result–(a) input sequence; (b) ground truth; (c) predicted sequence.

Table 13.

Daily comparison of RMSE, SSIM, and centroid distance of case 4.

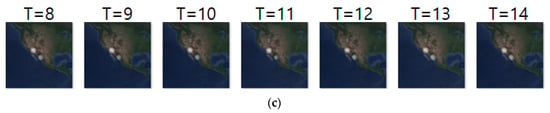

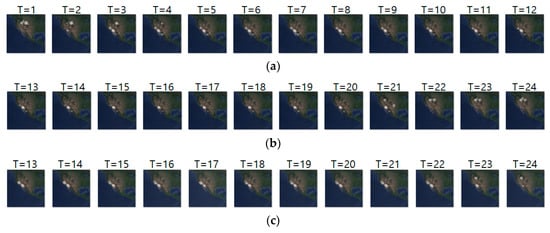

Figure 12a–c shows the input sequence, ground truth and predicted sequence of case 6, respectively. The data we used for case 6 are migration movements of long-billed curlews from 7 March 2018 to 30 March 2018. As we showed in the Figure 12, long-billed curlews undertook pre-breeding and breeding migration. We can see that the image at T = 1 shows the density at the pre-breeding season, the next seven images show the migration beginning movements, and the remaining images show migration ending movements. Table 14 shows daily comparison of RMSE, SSIM, and centroid distance in case 6. Notably, when the animals showed static movements, our model showed a stable prediction performance of less than 90 km error.

Figure 12.

Movement prediction result–(a) input sequence; (b) ground truth; (c) predicted sequence.

Table 14.

Daily comparison of RMSE, SSIM, and centroid distance of case 6.

Figure 13a–c shows the input sequence, ground truth, and predicted sequence of case 9, respectively. The input and ground truth sequences show the migration movement of long-billed curlew from 16 November 2017 to 3 May 2018. In Figure 13a,b, we can see the starting migration movement at T = 14, pre-breeding migratory and migration for breeding at T = 15 to 18, and migration ending at T = 19 to 24. Table 15 shows daily comparison of RMSE, SSIM, and centroid distance in case 9. In this experiment, the input sequence showed a static movement and the ground truth sequence showed a dynamic movement pattern from T = 15 to 18. However, our model did not predict such dynamic movement precisely.

Figure 13.

Movement prediction result–(a) input sequence; (b) ground truth; (c) predicted sequence.

Table 15.

Daily comparison of RMSE, SSIM, and centroid distance of case 9.

Figure 14a–c shows the input, ground truth, and predicted sequences of case 10, respectively. The input and ground truth sequences are the migration movements of long-billed curlews from 19 May 2018 to 14 May 2019. We used the 15-day geo records to make a one-movement density image and as a sequence to predict the migration pattern for one year. As shown in Figure 14a,b, we observe that non-breeding season migration was shown at T = 1 to 3 and T = 21 to 24, and breeding season movement was shown at T = 4 to 20. Table 16 shows the daily comparison of RMSE, SSIM, and centroid distance of case 10. The result shows that there are relatively low distance errors at T = 13 to 20, but as migration starts at T = 21 to 33, we can see relatively high distance errors.

Figure 14.

Movement prediction result–(a) input sequence; (b) ground truth; (c) predicted sequence.

Table 16.

Daily comparison of RMSE, SSIM, and centroid distance of case 10.

5. Conclusions

In this paper, we proposed a novel approach for predicting animal movements using a predictive RNN. For accurate movement prediction, we first showed how to compensate for any missing geo records using random forest regression. To interpolate the missing geo records, we used random forest based on animal movement features and environmental features such as terrain and weather. Compared with other popular interpolation methods, our proposed model achieved higher interpolation accuracy. After augmenting geo records, we grouped geo records by various units and created a sequence of movement density images, which represent movement trends of animals. Then, we built a movement prediction model by training PredRNN++ using these sequence data. We evaluated the model’s performance through various experiments. The results showed that our model is quite effective for animal movement prediction.

Author Contributions

Conceptualization, J.R.; investigation, J.R. and S.J.; methodology, J.R. and S.P.; software, J.R. and Y.C.; visualization, J.R.; writing—original draft preparation, J.R.; writing—review and editing, E.H.; supervision, E.H.

Funding

This work was supported by Korea Environment Industry & Technology Institute (KEITI) through Public Technology Program based on Environmental Policy, funded by Korea Ministry of Environment (MOE) (2017000210001).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Morales, J.M.; Moorcroft, P.R.; Matthiopoulos, J.; Frair, J.L.; Kie, J.G.; Powell, R.A.; Merrill, E.H.; Haydon, D.T. Building the bridge between animal movement and population dynamics. Philos. Trans. R. Soc. B 2010, 365, 2289–2301. [Google Scholar] [CrossRef] [PubMed]

- Valletta, J.J.; Torney, C.; Kings, M.; Thornton, A.; Madden, J. Applications of Machine Learning in Animal Behaviour Studies. Anim. Behav. 2017, 124, 203–220. [Google Scholar] [CrossRef]

- Van Meerbeek, K.; Ottoy, S.; de Andrés García, M.; Muys, B.; Hermy, M. The bioenergy potential of Natura 2000—A synergy between climate change mitigation and biodiversity protection. Front. Ecol. Environ. 2016, 14, 473–478. [Google Scholar] [CrossRef]

- Van Moorter, B.; Rolandsen, C.M.; Basille, M.; Gaillard, J.M. Movement is the glue connecting home ranges and habitat selection. J. Anim. Ecol. 2016, 85, 21–31. [Google Scholar] [CrossRef] [PubMed]

- Tremblay, Y.; Robinson, P.W.; Costa, D.P. A parsimonious approach to modeling animal movement data. PLoS ONE 2009, 4, e4711. [Google Scholar] [CrossRef] [PubMed]

- Kranstauber, B.; Cameron, A.; Weinzerl, R.; Fountain, T.; Tilak, S.; Wikelski, M.; Kays, R. The Movebank data model for animal tracking. Environ. Model. Softw. 2011, 26, 834–835. [Google Scholar] [CrossRef]

- Kays, R.; Crofoot, M.C.; Jetz, W.; Wikelski, M. Terrestrial animal tracking as an eye on life and planet. Science 2015, 348, aaa2478. [Google Scholar] [CrossRef]

- Dragon, A.C.; Bar-Hen, A.; Monestiez, P.; Guinet, C. Comparative analysis of methods for inferring successful foraging areas from Argos and GPS tracking data. Mar. Ecol. Prog. Ser. 2012, 452, 253–267. [Google Scholar] [CrossRef][Green Version]

- Thiebot, J.B.; Pinaud, D. Quantitative method to estimate species habitat use from light-based geolocation data. Endanger. Species Res. 2010, 10, 341–353. [Google Scholar] [CrossRef]

- Rahman, N.M.F.; Baten, M.A. Forecasting area and production of black gram pulse in Bangladesh using ARIMA models. Pak. J. Agric. Sci. 2016, 53, 759–765. [Google Scholar] [CrossRef]

- Knudsen, E.; Lindén, A.; Ergon, T.; Jonzén, N.; Vik, J.O.; Knape, J.; Røer, J.E.; Stenseth, N.C. Characterizing bird migration phenology using data from standardized monitoring at bird observatories. Clim. Res. 2007, 35, 59–77. [Google Scholar] [CrossRef]

- Bildstein, K.L.; Mandel, J.T.; Barber, D.R.; Houston, C.S.; Bohrer, G.; Winkler, D.W. Migration path annotation: Cross-continental study of migration-flight response to environmental conditions. Ecol. Appl. 2011, 21, 2258–2268. [Google Scholar] [CrossRef]

- Patterson, T.A.; Thomas, L.; Wilcox, C.; Ovaskainen, O.; Matthiopoulos, J. State-space models of individual animal movement. Trends Ecol. Evol. 2008, 23, 87–94. [Google Scholar] [CrossRef] [PubMed]

- Michelot, T.; Langrock, R.; Patterson, T.A. MoveHMM: An R package for the statistical modelling of animal movement data using hidden Markov models. Methods Ecol. Evol. 2016, 7, 1308–1315. [Google Scholar] [CrossRef]

- Hirakawa, T.; Yamashita, T.; Tamaki, T.; Fujiyoshi, H.; Umezu, Y.; Takeuchi, I.; Matsumoto, S.; Yoda, K. Can AI predict animal movements? Filling gaps in animal trajectories using inverse reinforcement learning. Ecosphere 2018, 9. [Google Scholar] [CrossRef]

- Xu, Z.; Cheng, X.E. Zebrafish tracking using convolutional neural networks. Sci. Rep. 2017, 7, 1–11. [Google Scholar] [CrossRef]

- Browning, E.; Bolton, M.; Owen, E.; Shoji, A.; Guilford, T.; Freeman, R. Predicting animal behaviour using deep learning: GPS data alone accurately predict diving in seabirds. Methods Ecol. Evol. 2018, 9, 681–692. [Google Scholar] [CrossRef]

- Jonsen, I.D.; Myers, R.A.; Flemming, J.M. Meta-Analysis of Animal Movement Using State-Space Models. Ecology 2003, 84, 3055–3063. [Google Scholar] [CrossRef]

- De Groeve, J.; Van de Weghe, N.; Ranc, N.; Neutens, T.; Ometto, L.; Rota-Stabelli, O.; Cagnacci, F. Extracting Spatio-Temporal Patterns in Animal Trajectories: An Ecological Application of Sequence Analysis Methods. Methods Ecol. Evol. 2016, 7, 369–379. [Google Scholar] [CrossRef]

- Spiegel, O.; Leu, S.T.; Bull, C.M.; Sih, A. What’s Your Move? Movement as a Link between Personality and Spatial Dynamics in Animal Populations. Ecol. Lett. 2017, 20, 3–18. [Google Scholar] [CrossRef]

- Wang, G. Machine Learning for Inferring Animal Behavior from Location and Movement Data. Ecol. Inform. 2019, 49, 69–76. [Google Scholar] [CrossRef]

- Li, Z.; Han, J.; Ding, B.; Kays, R. Mining Periodic Behaviors of Object Movements for Animal and Biological Sustainability Studies. Data Min. Knowl. Discov. 2012, 24, 355–386. [Google Scholar] [CrossRef]

- Bar-David, S.; Bar-David, I.; Cross, P.C.; Ryan, S.J.; Knechtel, C.U.; Getz, W.M. Methods for Assessing Movement Path Recursion with Application to African Buffalo in South Africa. Ecology 2009, 90, 2467–2479. [Google Scholar] [CrossRef] [PubMed]

- Fink, D.; Hochachka, W.M.; Zuckerberg, B.; Winkler, D.W.; Shaby, B.; Munson, M.A.; Hooker, G.; Riedewald, M.; Sheldon, D.; Kelling, S. Spatiotemporal Exploratory Models for Broad-Scale Survey Data. Ecol. Appl. 2010, 20, 2131–2147. [Google Scholar] [CrossRef] [PubMed]

- Fink, D.; Damoulas, T.; Bruns, N.E.; La Sorte, F.A.; Hochachka, W.M.; Gomes, C.P.; Kelling, S. Crowdsourcing Meets Ecology: Hemisphere-Wide Spatiotemporal Species Distribution Models. AI Mag. 2014, 35, 19–30. [Google Scholar] [CrossRef]

- Movebank. Available online: https://www.movebank.org (accessed on 12 July 2019).

- Birds of North America. Available online: https://birdsna.org/Species-Account/bna/home (accessed on 12 July 2019).

- Move: Visualizing and Analyzing Animal Track Data. Available online: https://cran.r-project.org/web/packages/move/ (accessed on 12 July 2019).

- Liu, D.; Chen, L.; Wang, Y.; Lu, J.; Huang, S. How much can we trust gps wildlife tracking? Anassessment in semi-free-ranging crested ibis Nipponia Nippon. PeerJ 2018, 6, e5320. [Google Scholar] [CrossRef] [PubMed]

- Wentz, E.A.; Campbell, A.F.; Houston, R. A comparison of two methods to create tracks of moving objects: Linear weighted distance and constrained random walk. Int. J. Geogr. Inf. Sci. 2003, 17, 623–645. [Google Scholar] [CrossRef]

- Yu, B.; Bailey, T.; Gamboa, R. Curve-based representation of moving object trajectories. In Proceedings of the International Database Engineering and Applications Symposium (IDEAS’04), Coimbra, Portugal, 9 July 2004; pp. 419–425. [Google Scholar]

- Tremblay, Y. Interpolation of animal tracking data in a fluid environment. J. Exp. Biol. 2005, 209, 128–140. [Google Scholar] [CrossRef][Green Version]

- Long, J.A. Kinematic interpolation of movement data. Int. J. Geogr. Inf. Sci. 2016, 30, 854–868. [Google Scholar] [CrossRef]

- Scikit-Learn: Random Forest Regressor. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestRegressor.html (accessed on 12 July 2019).

- Calabrese, J.M.; Fleming, C.H.; Gurarie, E. Ctmm: An R package for analyzing animal relocation data as a continuous-time stochastic process. Methods Ecol. Evol. 2016, 7, 1124–1132. [Google Scholar] [CrossRef]

- Park, N.Z.; Royal, F. A new autocorrelated kernel density estimator. Ecology 2015, 96, 1182–1188. [Google Scholar]

- Fleming, C.H.; Calabrese, J.M. A new kernel density estimator for accurate home-range and species-range area estimation. Methods Ecol. Evol. 2017, 8, 571–579. [Google Scholar] [CrossRef]

- Gkioxari, G.; Toshev, A.; Jaitly, N. Chained predictions using convolutional neural networks. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 728–743. [Google Scholar]

- Villegas, R.; Yang, J.; Zou, Y.; Sohn, S.; Lin, X.; Lee, H. Learning to generate long-term future via hierarchical prediction. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017. [Google Scholar]

- Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.; Woo, W. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. In Proceedings of the Conference of Neural Information Processing Systems 2015, Montreal, QC, Canada, 7–12 December 2015; pp. 802–810. [Google Scholar]

- Wang, Y.; Gao, Z.; Long, M.; Wang, J.; Yu, P.S. PredRNN++: Towards a Resolution of the Deep-in-Time Dilemma in Spatiotemporal Predictive Learning. arXiv 2018, arXiv:1804.06300. [Google Scholar]

- Wang, Y.; Long, M.; Wang, J.; Gao, Z.; Yu, P.S. PredRNN: Recurrent neural networks for predictive learning using spatiotemporal LSTMs. In Proceedings of the Conference of Neural Information Processing Systems 2017, Long Beach, CA, USA, 4–9 December 2017; pp. 879–888. [Google Scholar]

- Sepp, H.; Jurgen, S. Long short-term memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar]

- SpatialEco: Spatial Analysis and Modeling Utilities. Available online: https://cran.r-project.org/web/packages/spatialEco/index.html (accessed on 12 July 2019).

- Langrock, R.; Hopcraft, J.G.C.; Blackwell, P.G.; Goodall, V.; King, R.; Niu, M.; Patterson, T.A.; Pedersen, M.W.; Skarin, A.; Schick, R.S. Modelling group dynamic animal movement. Methods Ecol. Evol. 2014, 5, 190–199. [Google Scholar] [CrossRef]

- Latombe, G.; Parrott, L.; Basille, M.; Fortin, D. Uniting statistical and individual-based approaches for animal movement modelling. PLoS ONE 2014, 9, e99938. [Google Scholar] [CrossRef] [PubMed]

- Ma, M.; Luo, Q.; Zhou, Y.; Chen, X.; Li, L. An improved animal migration optimization algorithm for clustering analysis. Discret. Dyn. Nat. Soc. 2015, 2015, 194792. [Google Scholar] [CrossRef]

- Scikit-Learn: Gaussian Mixture Models. Available online: https://scikit-learn.org/stable/modules/mixture.html (accessed on 12 July 2019).

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).