Unique 4-DOF Relative Pose Estimation with Six Distances for UWB/V-SLAM-Based Devices

Abstract

1. Introduction

1.1. Related Work

1.2. Contributions

1.3. Outline

2. Methods

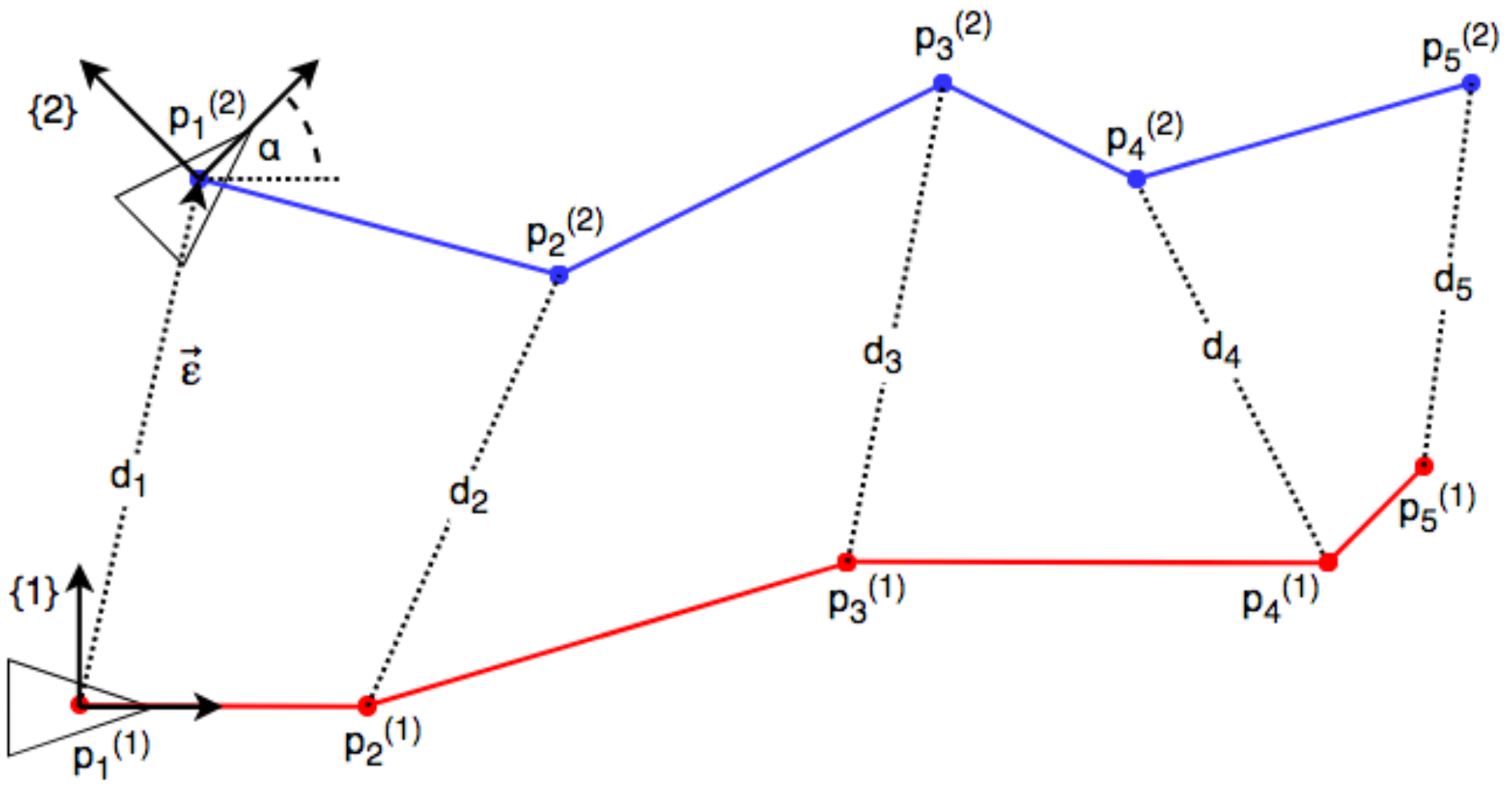

2.1. Problem Formulation and Notation

2.2. Cooperative Localization in 2D

2.2.1. Solution of an Overdetermined System

2.2.2. Unique Solution with Five Distance Measurements

2.3. Cooperative Localization in 3D

2.3.1. Solution of an Overdetermined System

2.3.2. Unique Solution with Six Distance Measurements

3. Simulation Results

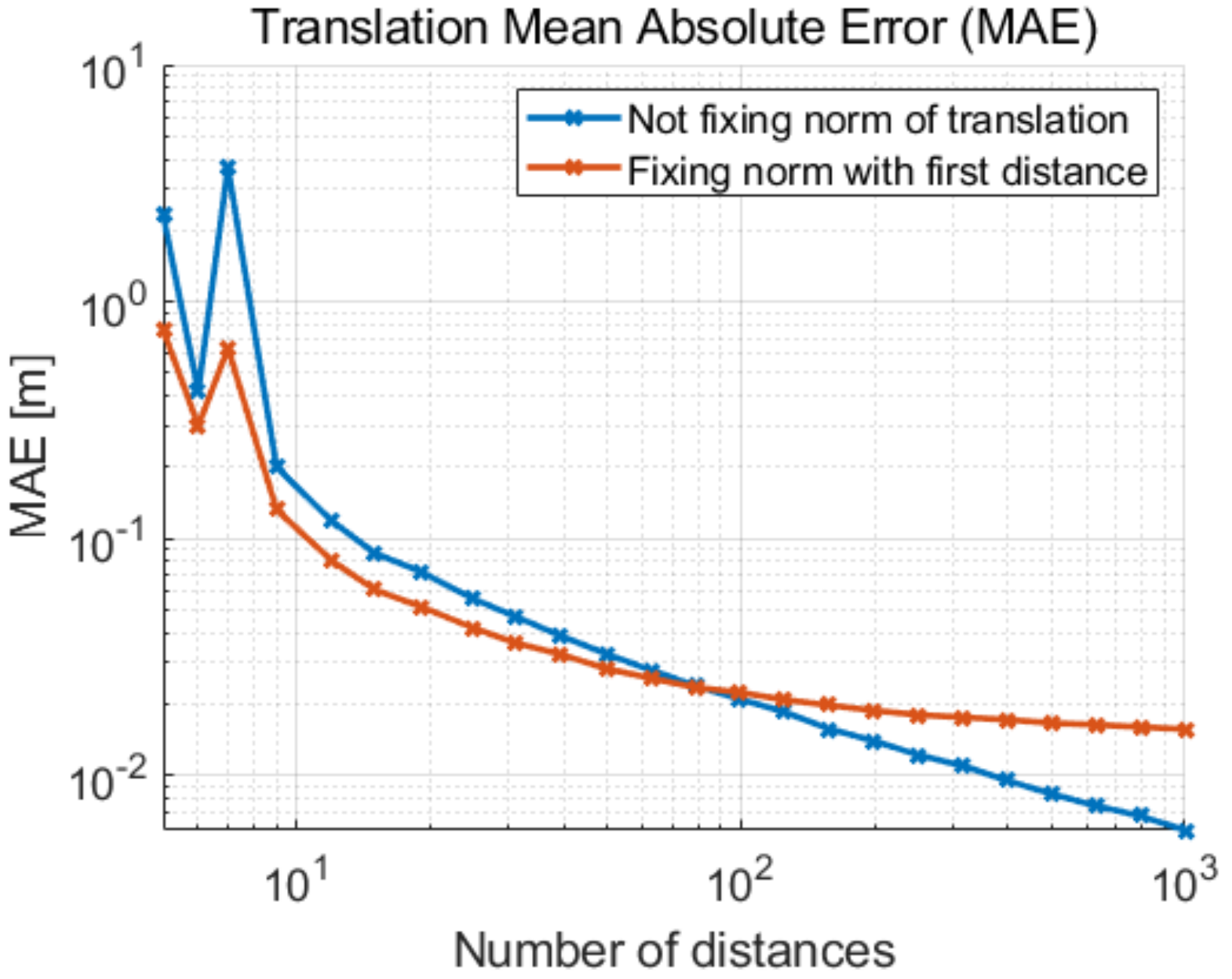

3.1. Cooperative Localization in 2D

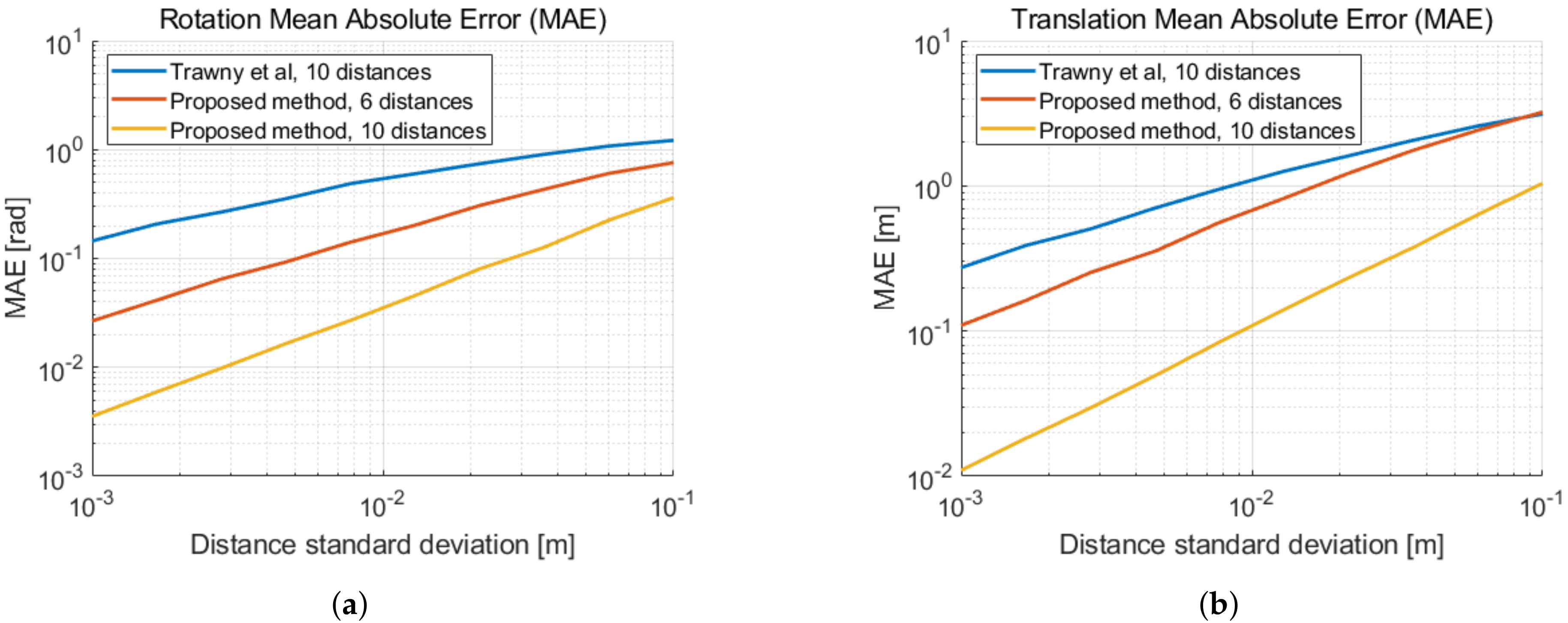

3.2. Cooperative Localization in 3D

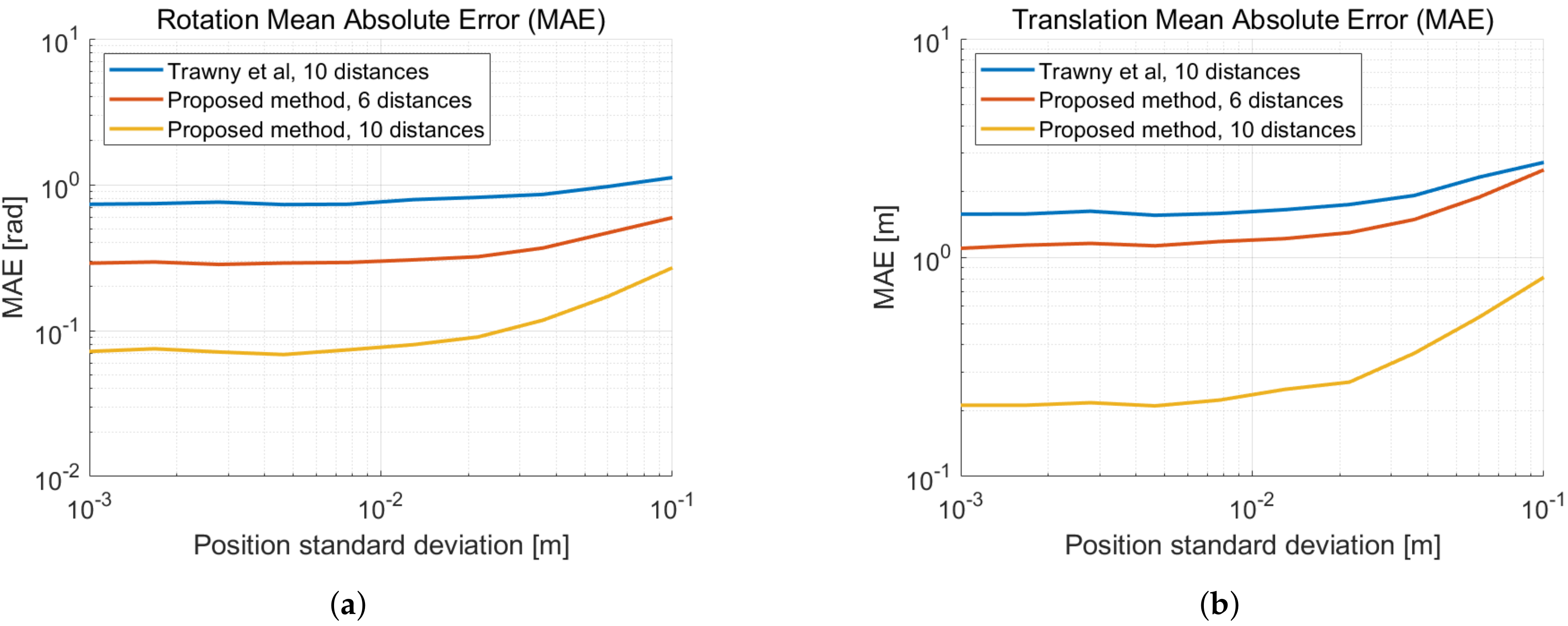

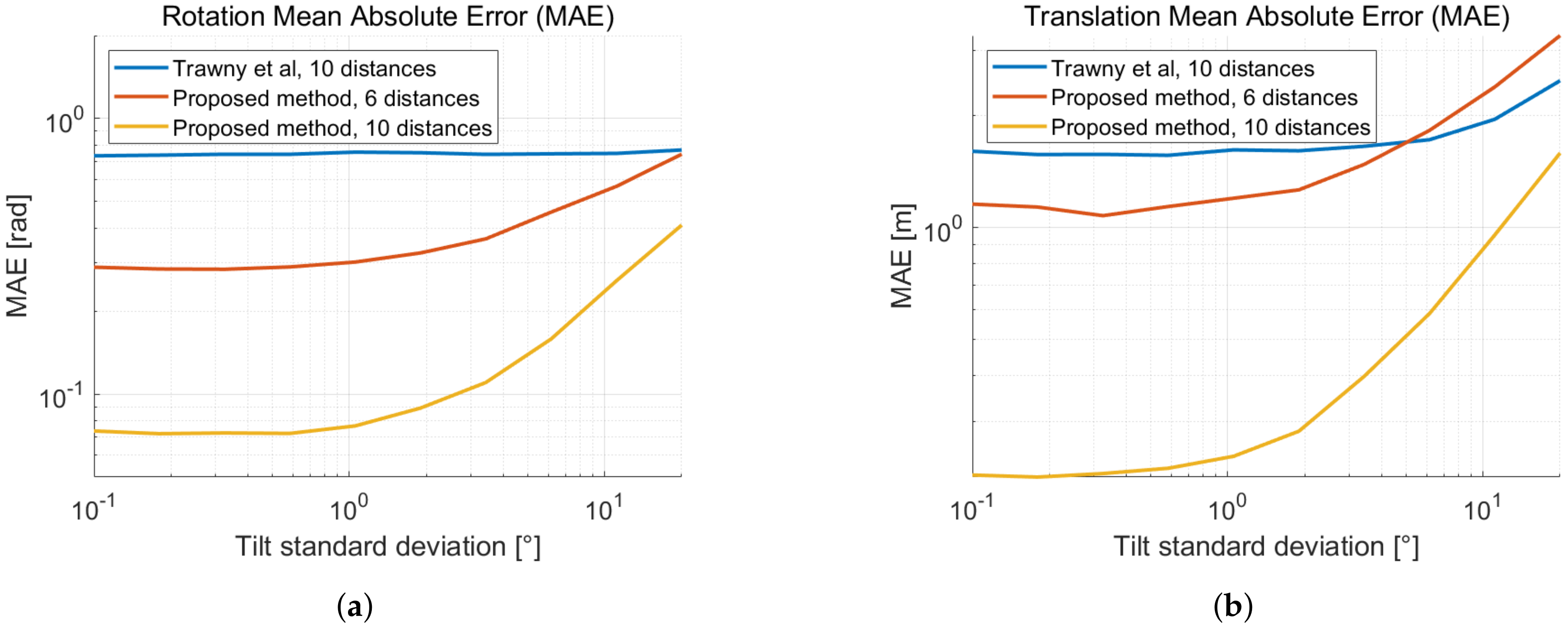

Tilt Error Effect on the 4-DOF Relative Pose Estimation

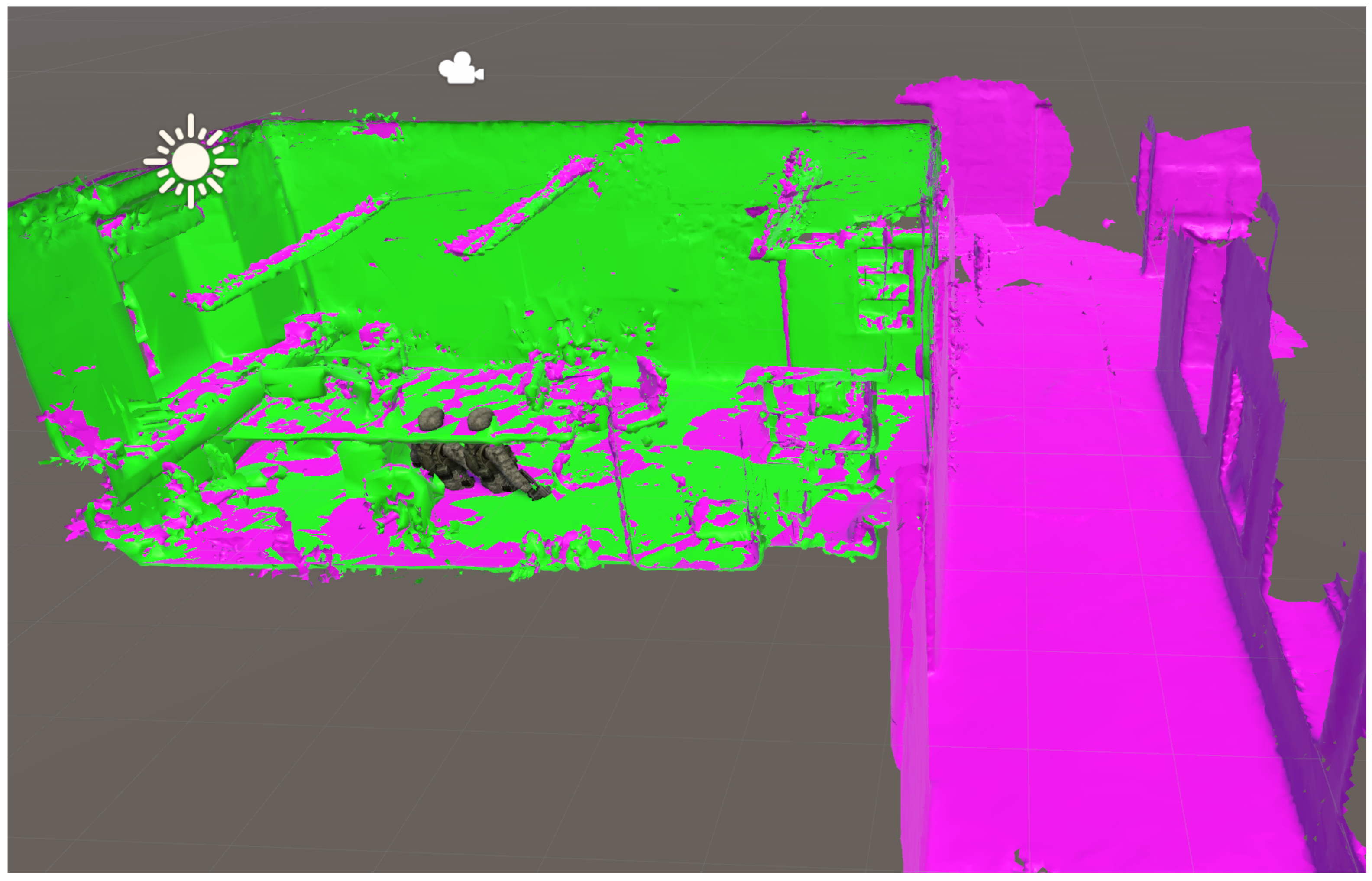

4. Experimental Results

5. Conclusions

6. Patents

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AR | Augmented Reality |
| DOF | degrees of freedom |
| EKF | extended Kalman filter |
| IC | integrated circuit |
| LOS | line-of-sight |
| NLOS | non-line-of-sight |
| MLE | maximum likelihood estimation |
| RF | radio frequency |
| SLAM | Simultaneous Localization and Mapping |
| C-SLAM | cooperative SLAM |
| V-SLAM | visual SLAM |
| TOA | time of arrival |
| UWB | ultra-wideband |
| UAV | unmanned aerial vehicle |

References

- Thrun, S.; Burgard, W.; Fox, D. Probabilistic Robotics (Intelligent Robotics and Autonomous Agents); The MIT Press: Cambridge, MA, USA, 2005; ISBN 0262201623.

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Rob. 2016, 32, 1309–1332.

- LaValle, S.M.; Yershova, A.; Katsev, M.; Antonov, M. Head tracking for the Oculus Rift. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 187–194.

- Thrun, S. A probabilistic on-line mapping algorithm for teams of mobile robots. Int. J. Rob. Res. 2001, 20, 335–363.

- Kim, B.; Kaess, M.; Fletcher, L.; Leonard, J.; Bachrach, A.; Roy, N.; Teller, S. Multiple relative pose graphs for robust cooperative mapping. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation (ICRA), Anchorage, AK, USA, 3–7 May 2010; pp. 3185–3192.

- Fox, D.; Ko, J.; Konolige, K.; Limketkai, B.; Schulz, D.; Stewart, B. Distributed multirobot exploration and mapping. Proc. IEEE 2006, 94, 1325–1339.

- Howard, A. Multi-robot simultaneous localization and mapping using particle filters. Int. J. Rob. Res. 2006, 25, 1243–1256.

- Saeedi, S.; Trentini, M.; Seto, M.; Li, H. Multiple-robot simultaneous localization and mapping: A review. J. Field Rob. 2016, 33, 33–46.

- Kuzmin, M. Review, Classification and Comparison of the Existing SLAM Methods for Groups of Robots. In Proceedings of the 22nd Conference of Open Innovations Association (FRUCT), Jyväskylä, Finland, 15–18 May 2018; pp. 115–120.

- Lee, H.C.; Lee, S.H.; Lee, T.S.; Kim, D.J.; Lee, B.H. A survey of map merging techniques for cooperative-SLAM. In Proceedings of the 9th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Daejeon, Korea, 26–28 November 2012; pp. 285–287.

- Rone, W.; Ben-Tzvi, P. Mapping, localization and motion planning in mobile multi-robotic systems. Robotica 2013, 31, 1–23.

- Zhou, X.S.; Roumeliotis, S.I. Multi-robot SLAM with unknown initial correspondence: The robot rendezvous case. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Beijing, China, 9–15 October 2006; pp. 1785–1792.

- Rekleitis, I.M.; Dudek, G.; Milios, E.E. Multi-robot cooperative localization: A study of trade-offs between efficiency and accuracy. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Lausanne, Switzerland, 30 September–4 October 2002; pp. 2690–2695.

- Howard, A.; Matarić, M.J.; Sukhatme, G.S. Localization for mobile robot teams using maximum likelihood estimation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Lausanne, Switzerland, 30 September–4 October 2002; pp. 434–439.

- Martinelli, A.; Pont, F.; Siegwart, R. Multi-robot localization using relative observations. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation (ICRA), Barcelona, Spain, 18–22 April 2005; pp. 2797–2802.

- Martinelli, A.; Renzaglia, A. Cooperative visual-inertial sensor fusion: Fundamental equations. In Proceedings of the 2017 International Symposium on Multi-Robot and Multi-Agent Systems (MRS), Los Angeles, CA, USA, 4–5 December 2017; pp. 24–31.

- Zhou, X.S.; Roumeliotis, S.I. Determining the robot-to-robot relative pose using range-only measurements. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation (ICRA), Roma, Italy, 10–14 April 2007; pp. 4025–4031.

- Zhou, X.S.; Roumeliotis, S.I. Robot-to-robot relative pose estimation from range measurements. IEEE Trans. Rob. 2008, 6, 1379–1393.

- Trawny, N.; Zhou, X.S.; Zhou, K.X.; Roumeliotis, S.I. 3D relative pose estimation from distance-only measurements. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), San Diego, CA, USA, 29 October–2 November 2007; pp. 1071–1078.

- Trawny, N.; Zhou, X.S.; Roumeliotis, S.I. 3D Relative Pose Estimation from Six Distances. Available online: https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.148.4386&rep=rep1&type=pdf (accessed on 7 October 2019).

- Shames, I.; Fidan, B.; Anderson, B.D.; Hmam, H. Cooperative self-localization of mobile agents. IEEE Trans. Aerosp. Electron. Syst. 2011, 47, 1926–1947.

- Prorok, A.; Martinoli, A. Accurate indoor localization with ultra-wideband using spatial models and collaboration. Int. J. Rob. Res. 2014, 33, 547–568.

- Guo, K.; Qiu, Z.; Meng, W.; Xie, L.; Teo, R. Ultrawideband based cooperative relative localization algorithm and experiments for multiple unmanned aerial vehicles in GPS denied environments. Int. J. Micro Air Veh. 2017, 9, 169–186.

| Vector/Points | x-Axis | y-Axis | z-Axis |
|---|---|---|---|
| u | v | w |

| Method | Translation MAE | Rotation MAE |
|---|---|---|
| Trawny et al., 10 distances | 3.42 m | 27.2° |
| Own method, 6 distances | 1.0 m | 15.94° |
| Own method, 10 distances | 0.26 m | 2.68° |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Molina Martel, F.; Sidorenko, J.; Bodensteiner, C.; Arens, M.; Hugentobler, U. Unique 4-DOF Relative Pose Estimation with Six Distances for UWB/V-SLAM-Based Devices. Sensors 2019, 19, 4366. https://doi.org/10.3390/s19204366
