An Improved Recognition Approach for Noisy Multispectral Palmprint by Robust L2 Sparse Representation with a Tensor-Based Extreme Learning Machine

Abstract

1. Introduction

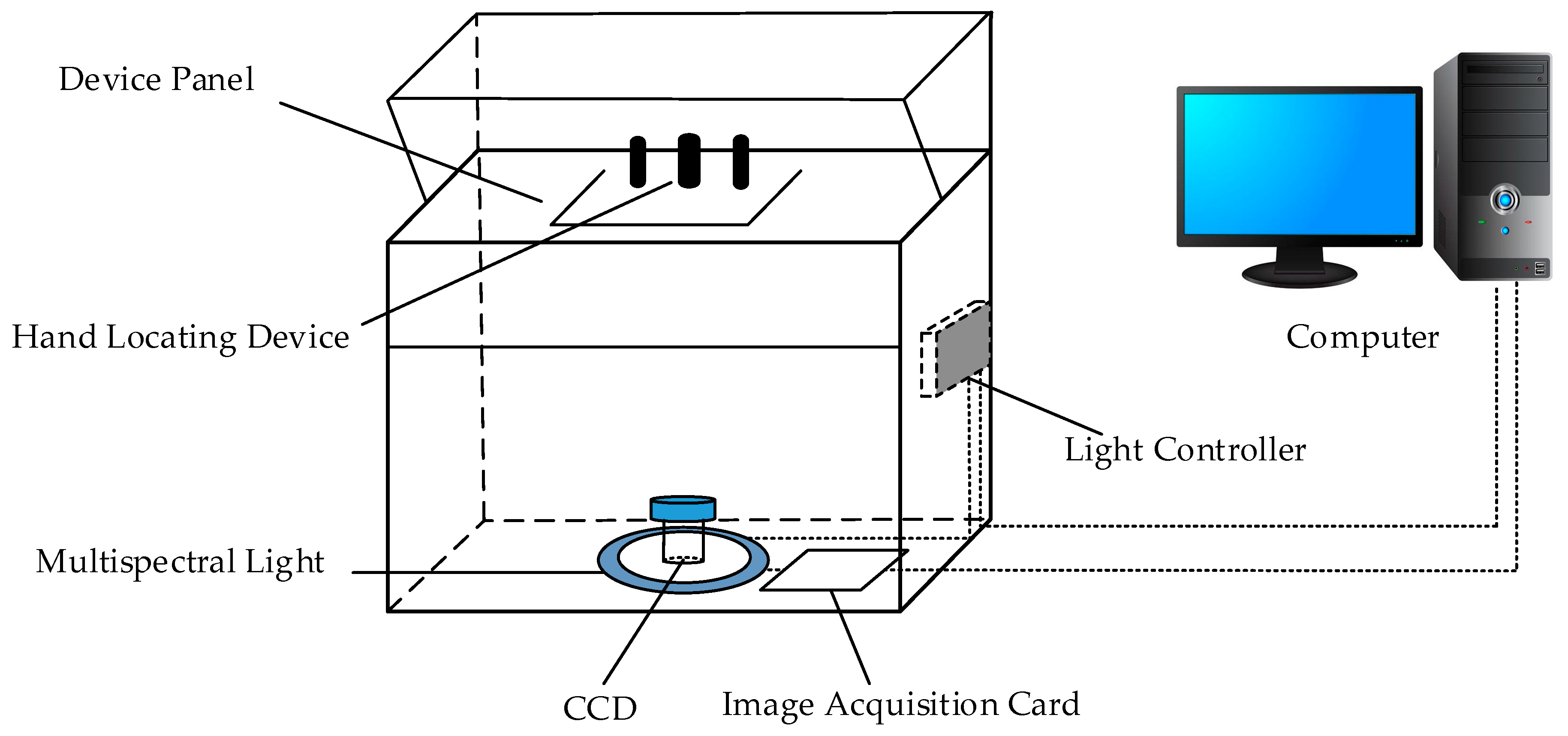

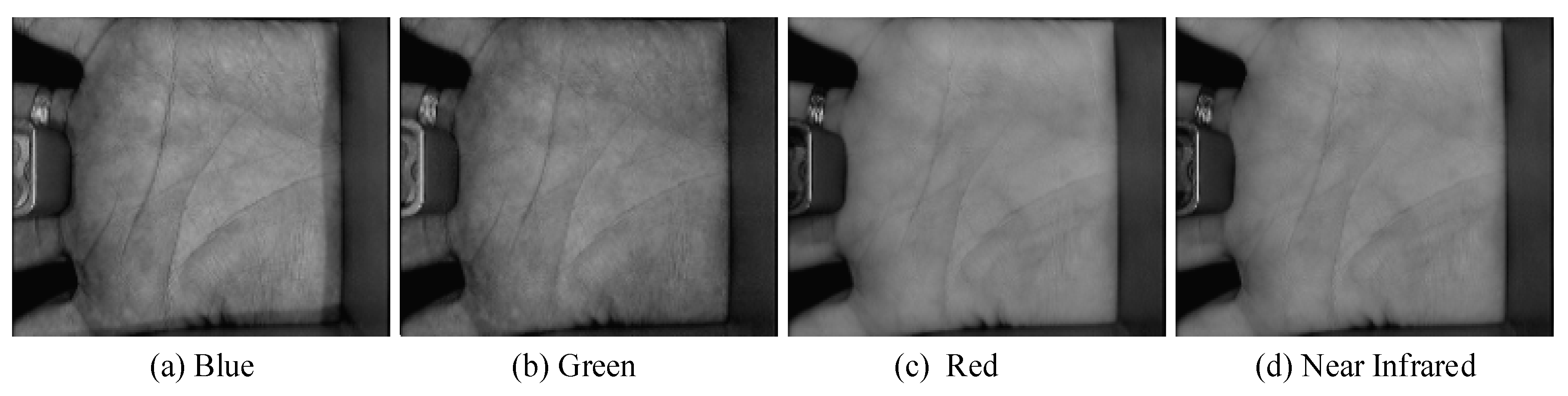

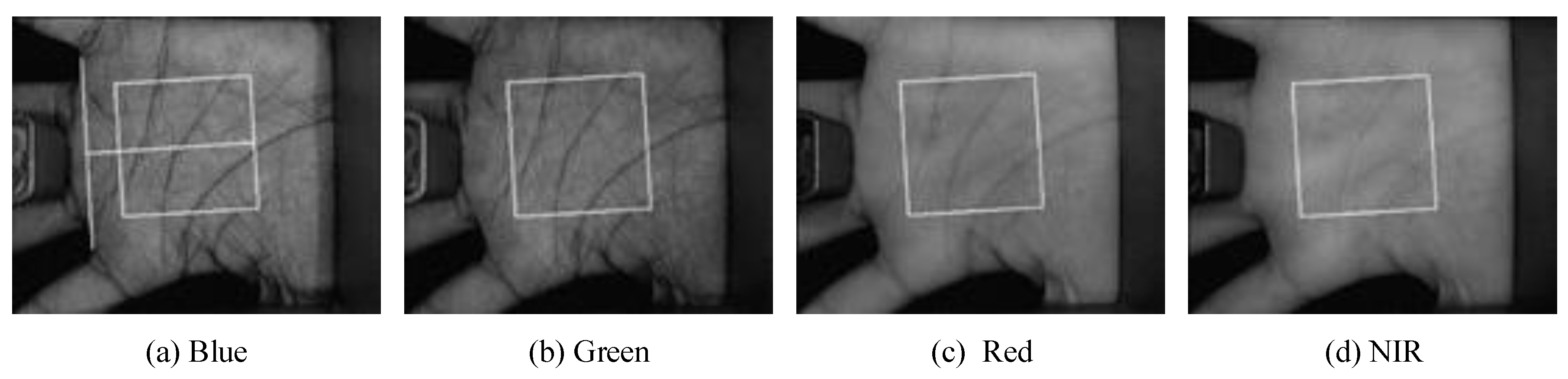

2. Acquisition Device of Multispectral Palmprint Images

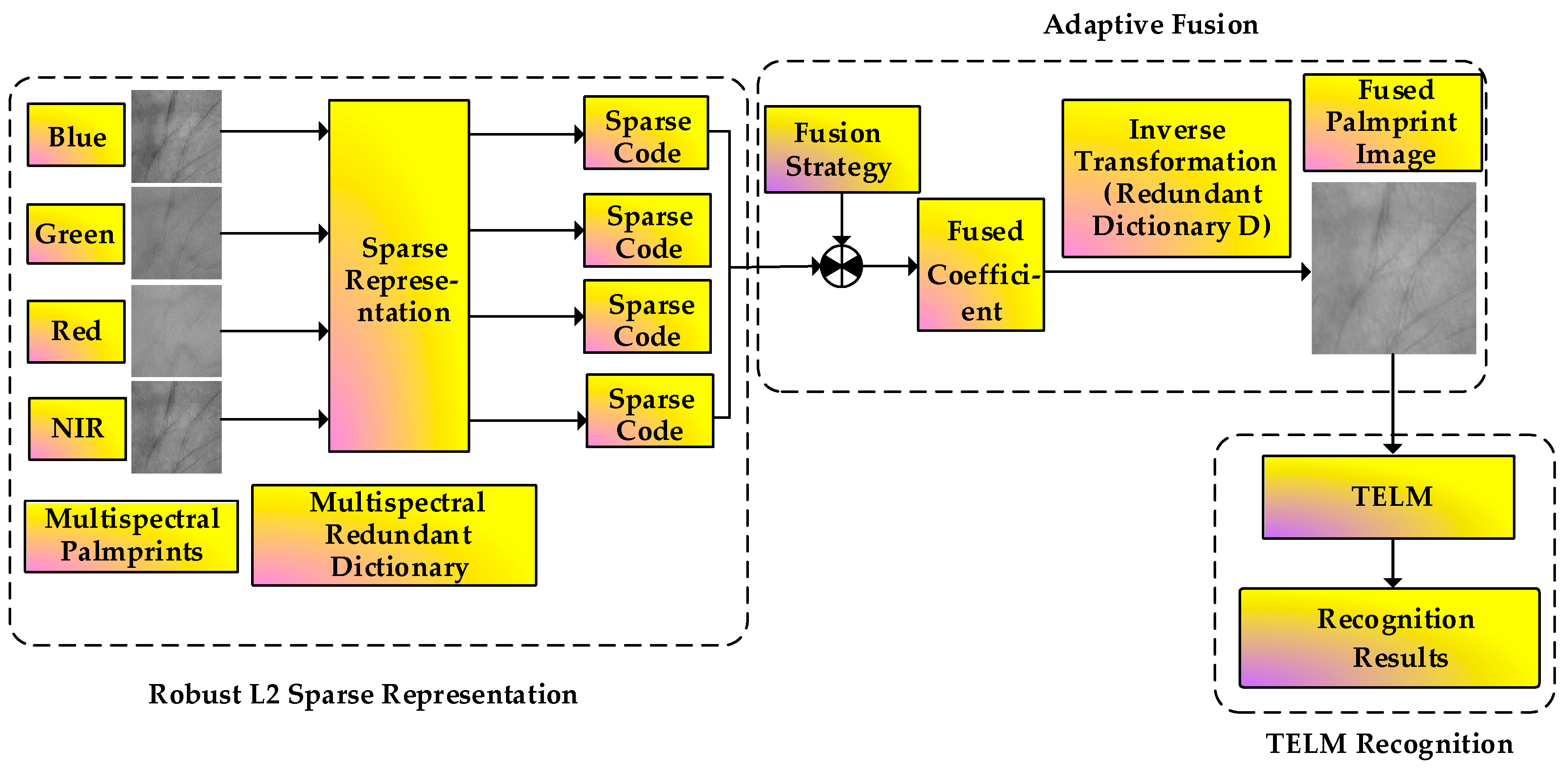

3. Proposed Algorithm

3.1. Robust L2 Sparse Representation Method

3.1.1. SRC Model

3.1.2. Robust L2 Sparse Representation Method

3.2. Image Fusion Based on Adaptive Weighted Method

3.3. Principle of Tensor-Based ELM

3.3.1. ELM

3.3.2. Tensor-Based ELM

4. Experiments

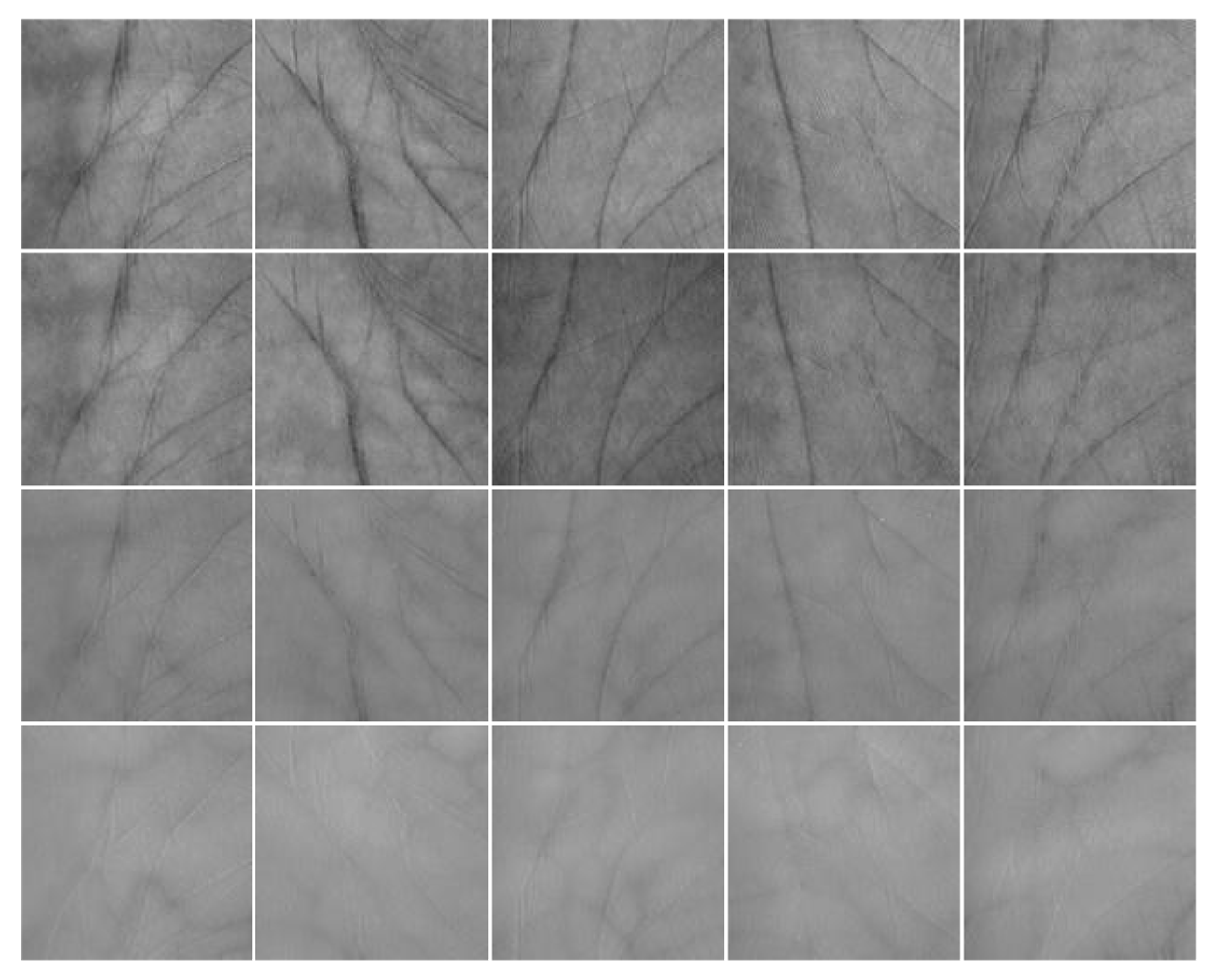

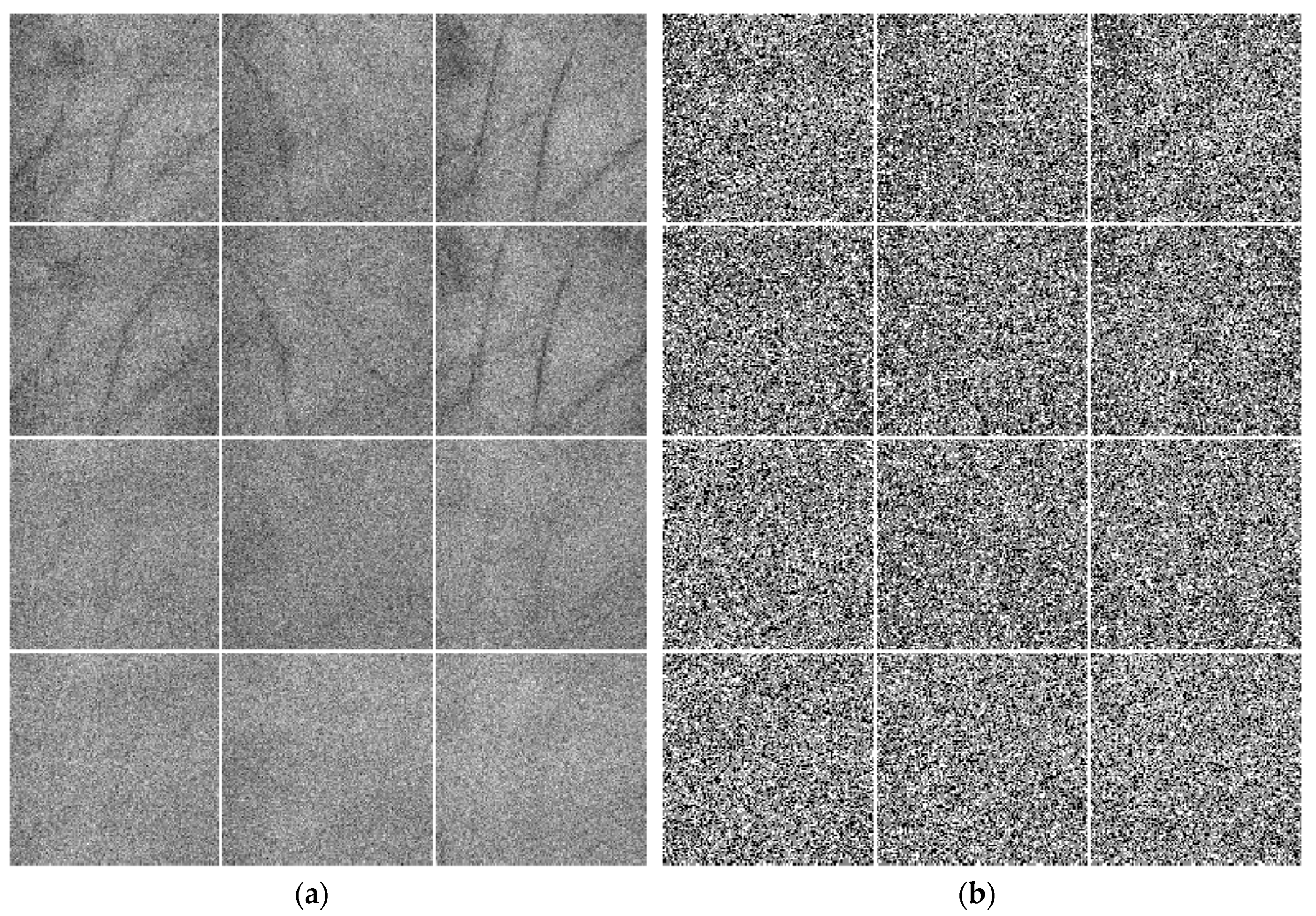

4.1. The PolyU Multispectral Palmprint Database

4.2. Parameter Selection

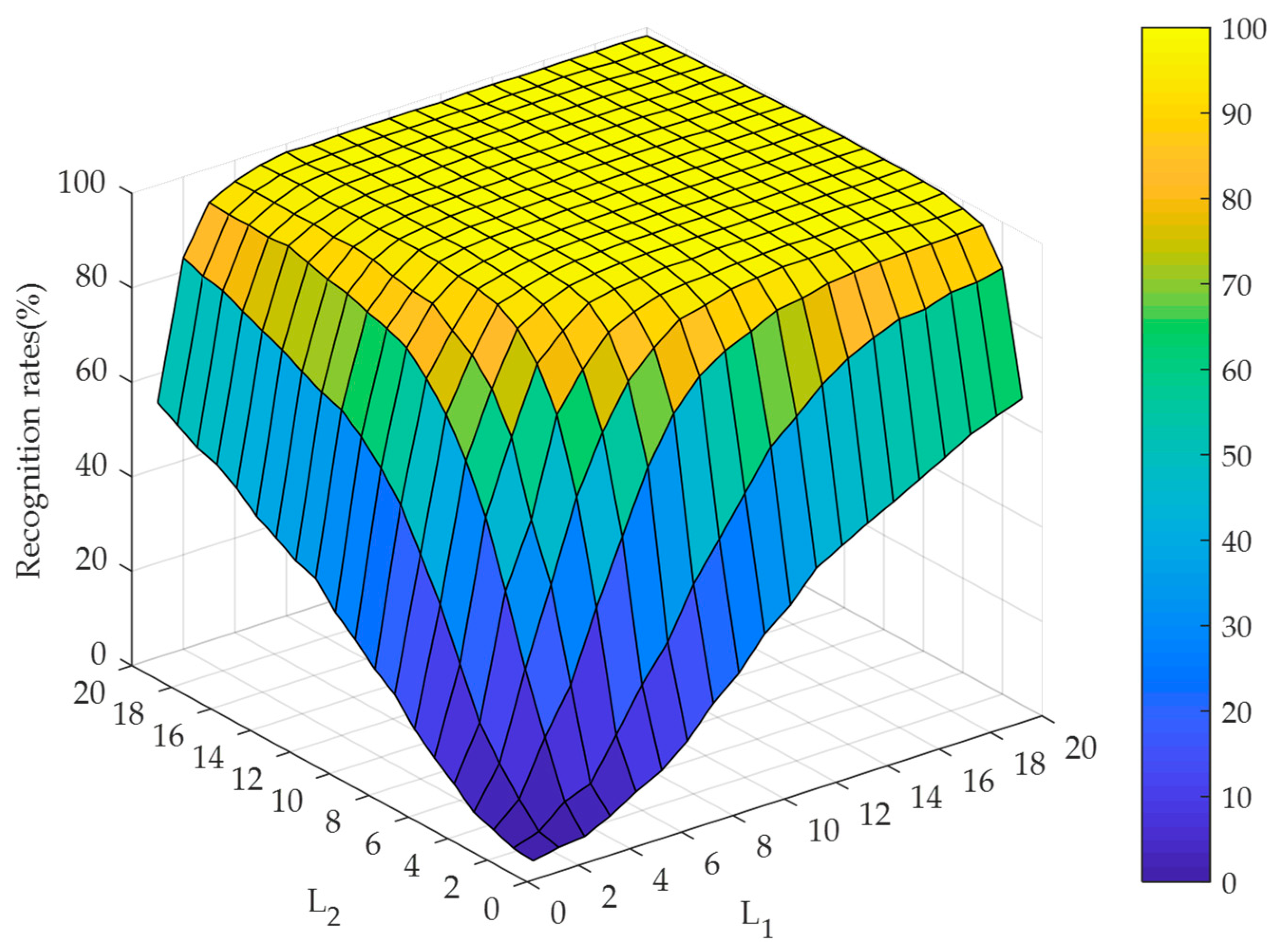

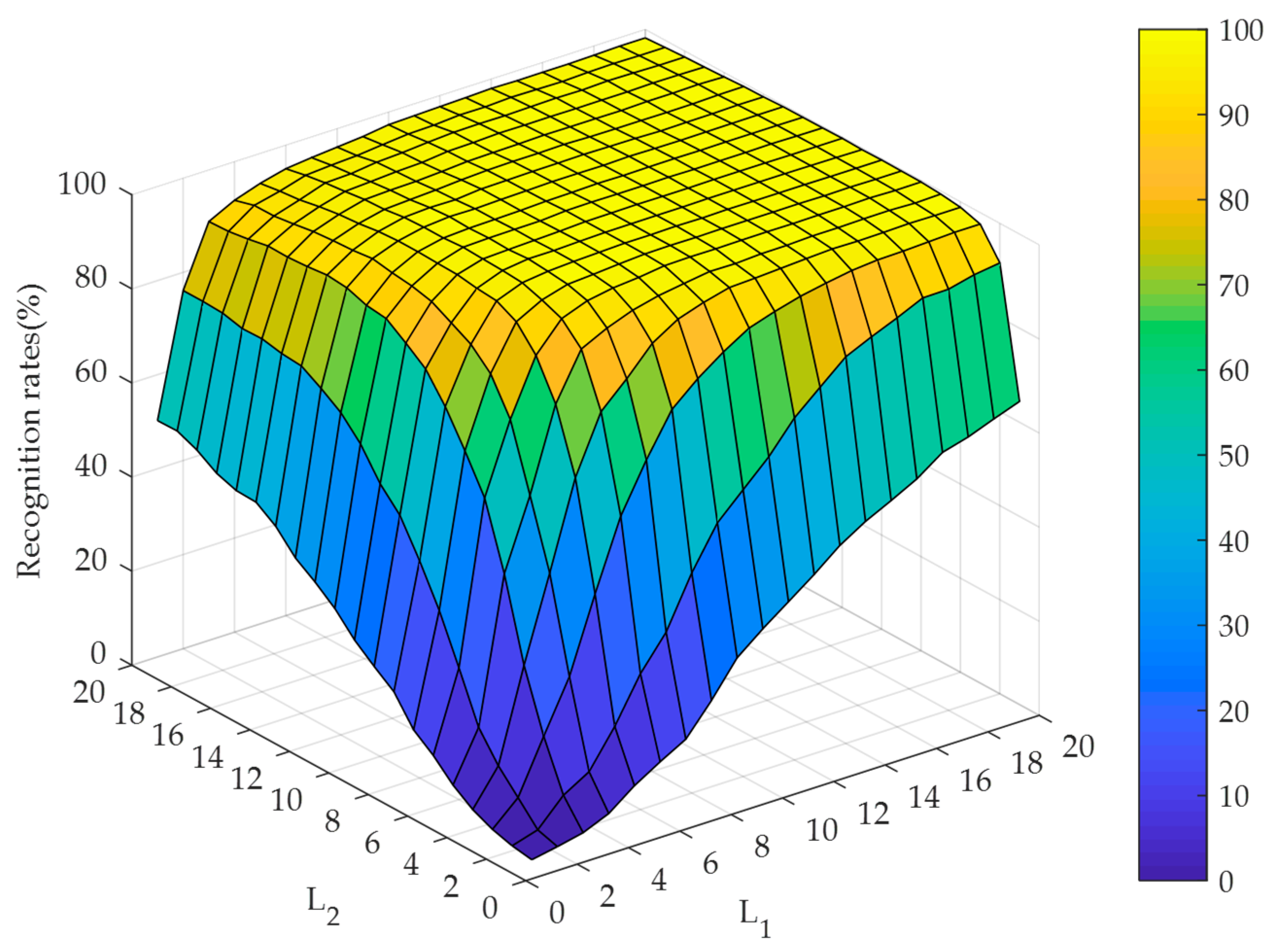

4.2.1. Selection of and for Residual Function

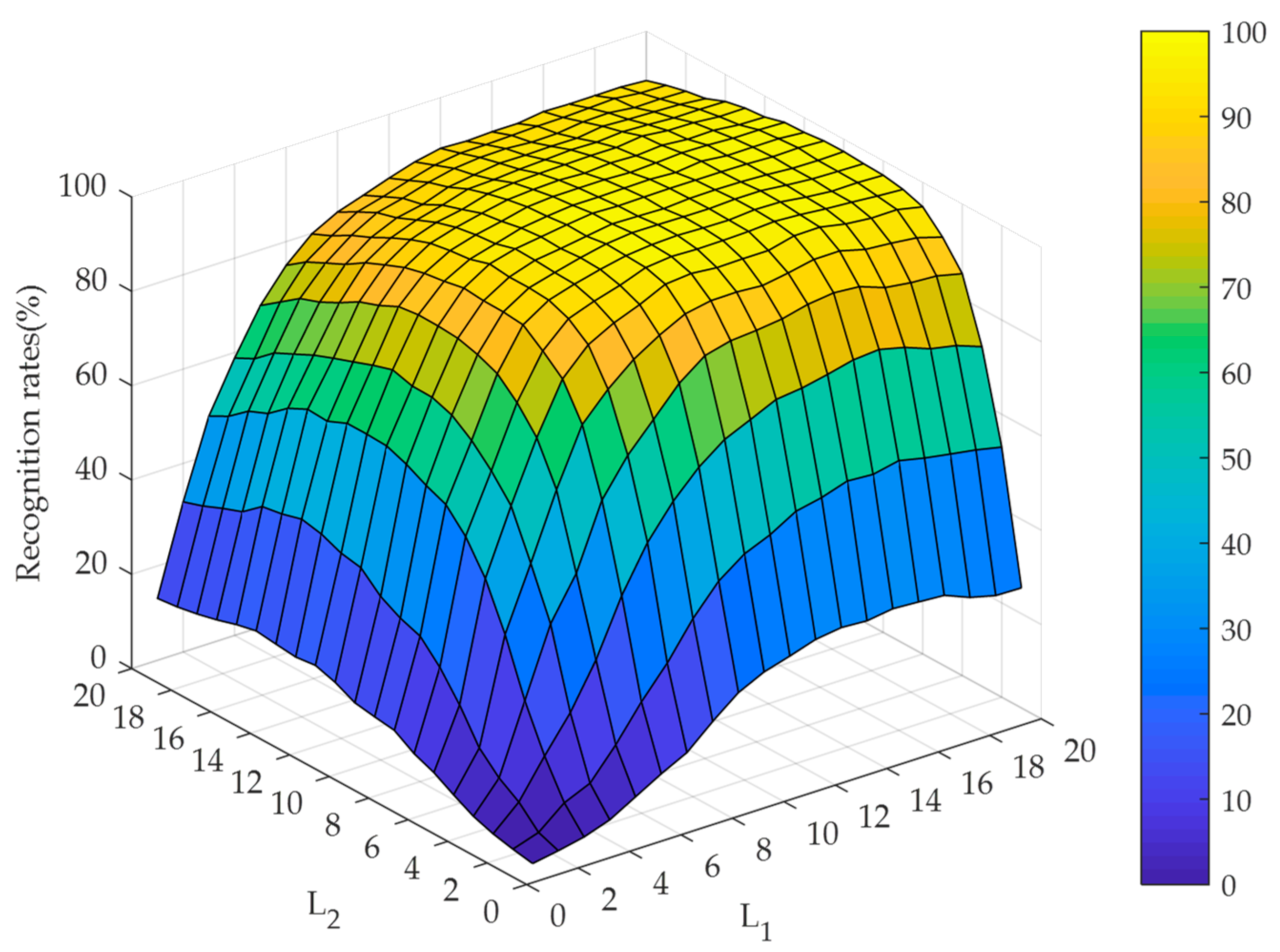

4.2.2. Selection of the Hidden Node Numbers Along the Directions of TELM

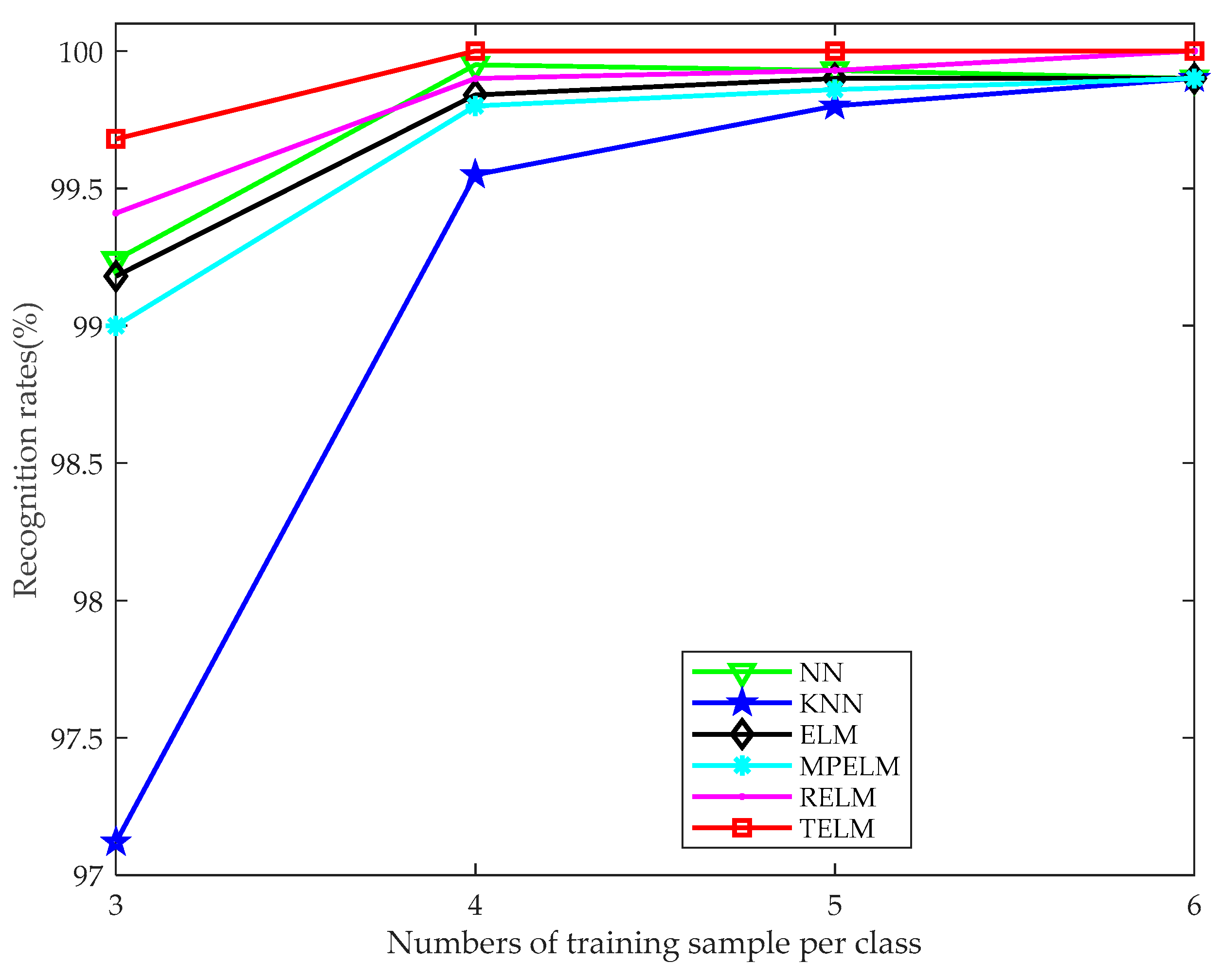

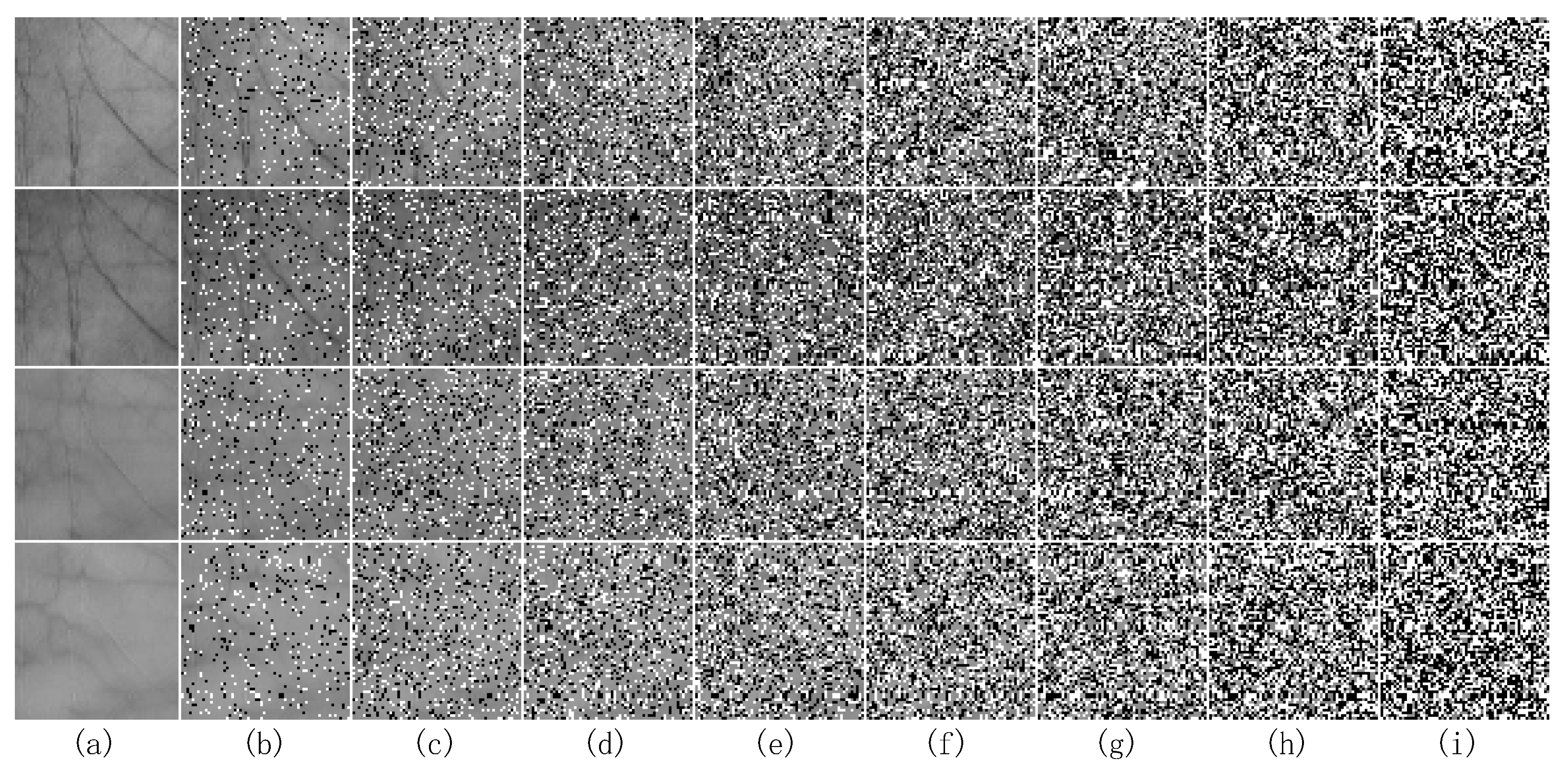

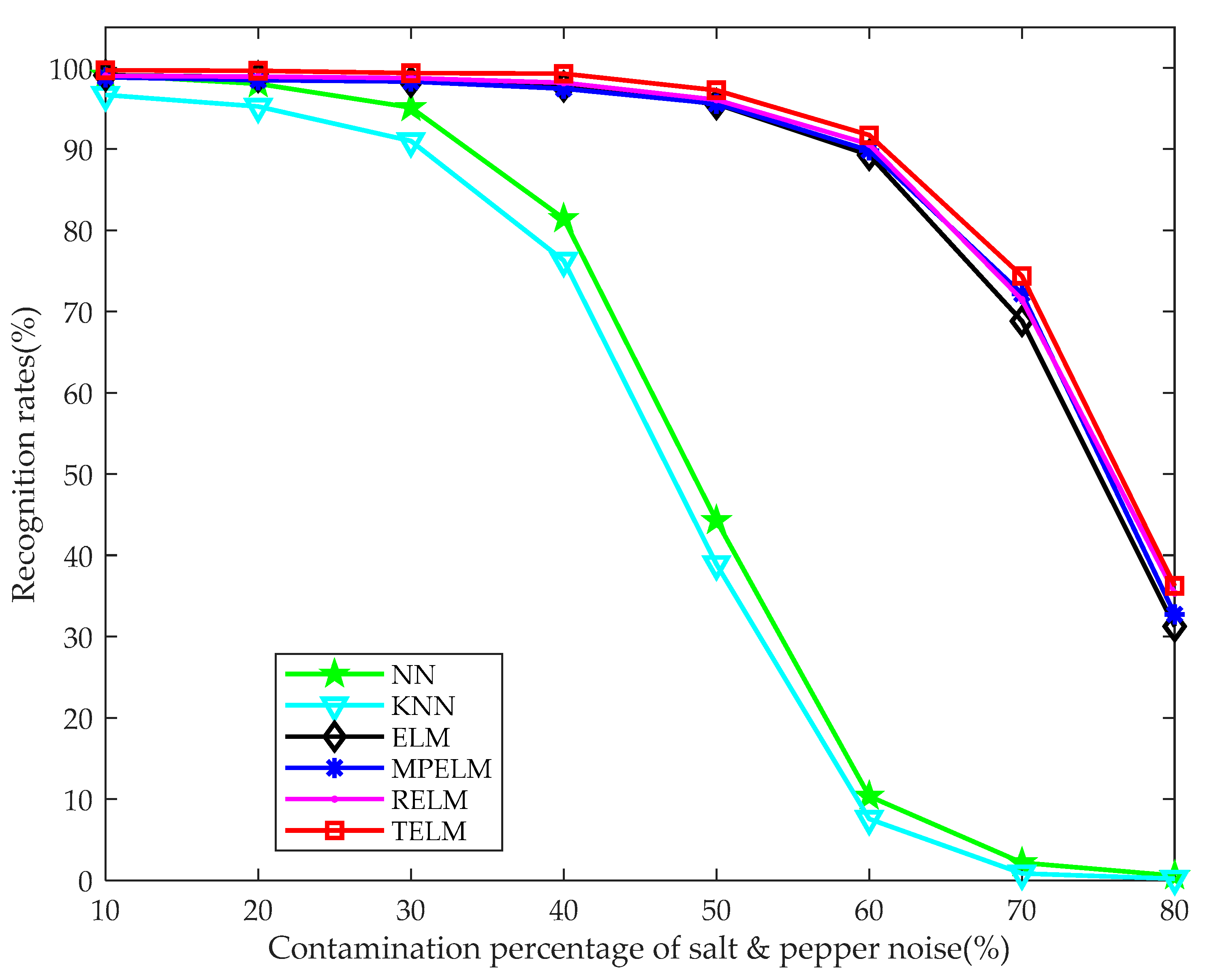

4.3. Experiment Results and Analysis

5. Conclusions

Author Contributions

Funding

Acknowledgements

Conflicts of Interest

References

- Cui, J.R. 2D and 3D Palmprint fusion and recognition using PCA plus TPTSR method. Neural Comput. Appl. 2014, 24, 497–502.

- Lu, G.M.; Zhang, D.; Wang, K.Q. Palmprint recognition using eigenpalms features. Pattern Recogn. Lett. 2003, 24, 1463–1467.

- Bai, X.F.; Gao, N.; Zhang, Z.H.; Zhang, D. 3D palmprint identification combining blocked ST and PCA. Pattern Recogn. Lett. 2017, 100, 89–95.

- Zuo, W.M.; Zhang, H.Z.; Zhang, D.; Wang, K.Q. Post-processed LDA for face and palmprint recognition: What is the rationale. Signal Process. 2010, 90, 2344–2352.

- Rida, I.; Herault, R.; Marcialis, G.L.; Gasso, G. Palmprint recognition with an efficient data driven ensemble classifier. Pattern Recogn. Lett. In press.

- Rida, I.; Al Maadeed, S.; Jiang, X.; Lunke, F.; Bensrhair, A. An ensemble learning method based on random subspace sampling for palmprint identification. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 2047–2051.

- Shang, L.; Huang, D.S.; Du, J.X.; Zheng, C.H. Palmprint recognition using FastICA algorithm and radial basis probabilistic neural network. Neurocomputing 2006, 69, 1782–1786.

- Pan, X.; Ruan, Q.Q. Palmprint recognition using Gabor feature-based (2D)2PCA. Neurocomputing 2008, 71, 3032–3036.

- Ekinci, M.; Aykut, M. Gabor-based kernel PCA for palmprint recognition. Electron. Lett. 2007, 43, 1077–1079.

- Ekinci, M.; Aykut, M. Palmprint recognition by applying wavelet-based kernel PCA. J. Comput. Sci. Technol. 2008, 23, 851–861.

- Fei, L.; Zhang, B.; Xu, Y.; Yan, L.P. Palmprint recognition using neighboring direction indicator. IEEE Trans. Hum. Mach. Syst. 2016, 46, 787–798.

- Zheng, Q.; Kumar, A.; Pan, G. A 3D feature descriptor recovered from a single 2D palmprint image. IEEE Trans. Pattern Anal. 2016, 38, 1272–1279.

- Younesi, A.; Amirani, M.C. Gabor filter and texture based features for palmprint recognition. Procedia Comput. Sci. 2017, 108, 2488–2495.

- Fei, L.K.; Xu, Y.; Tang, W.L.; Zhang, D. Double-orientation code and nonlinear matching scheme for palmprint recognition. Pattern Recogn. 2016, 49, 89–101.

- Gumaei, A.; Sammouda, R.; Al-Salman, A.M.; Alsanad, A. An effective palmprint recognition approach for visible and multispectral sensor images. Sensors 2018, 18, 1575.

- Tabejamaat, M.; Mousavi, A. Concavity-orientation coding for palmprint recognition. Multimed. Tools Appl. 2017, 76, 9387–9403.

- Chen, H.P. An efficient palmprint recognition method based on block dominant orientation code. Optik 2015, 126, 2869–2875.

- Minaee, S.; Wang, Y. Palmprint recognition using deep scattering convolutional network. In Proceedings of the 2017 IEEE International Symposium on Circuits and Systems (ISCAS), Baltimore, MD, USA, 28–31 May 2017; pp. 1–4.

- Tamrakar, D.; Khanna, P. Kernel discriminant analysis of block-wise Gaussian derivative phase pattern histogram for palmprint recognition. J. Vis. Commun. Image Represent. 2016, 40, 432–448.

- Li, G.; Kim, J. Palmprint recognition with local micro-structure tetra pattern. Pattern Recogn. 2017, 61, 29–46.

- Luo, Y.T.; Zhao, L.Y.; Zhang, B.; Jia, W.; Xue, F.; Lu, J.T.; Zhu, Y.H.; Xu, B.Q. Local line directional pattern for palmprint recognition. Pattern Recogn. 2016, 50, 26–44.

- Jia, W.; Hu, R.X.; Lei, Y.K.; Zhao, Y.; Gui, J. Histogram of oriented lines for palmprint recognition. IEEE Trans. Syst. Man Cybern. Syst. 2014, 44, 385–395.

- Zhang, S.W.; Wang, H.X.; Huang, W.Z.; Zhang, C.L. Combining modified LBP and weighted SRC for palmprint recognition. Signal Image Video Process. 2018, 12, 1035–1042.

- Guo, X.M.; Zhou, W.D.; Zhang, Y.L. Collaborative representation with HM-LBP features for palmprint recognition. Mach. Vis. Appl. 2017, 28, 283–291.

- Wright, J.; Yang, A.Y.; Ganesh, A.; Sastry, S.S.; Ma, Y. Robust face recognition via sparse representation. IEEE Trans. Pattern Anal. 2009, 31, 210–227.

- Maadeed, S.A.; Jiang, X.D.; Rida, I.; Bouridane, A. Palmprint identification using sparse and dense hybrid representation. Multimed. Tools Appl. 2018, 1–15.

- Tabejamaat, M.; Mousavi, A. Manifold sparsity preserving projection for face and palmprint recognition. Multimed. Tools Appl. 2017, 77, 12233–12258.

- Zuo, W.M.; Lin, Z.C.; Guo, Z.H.; Zhang, D. The multiscale competitive code via sparse representation for palmprint verification. In Proceedings of the 2010 International IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 2265–2272.

- Xu, Y.; Fan, Z.Z.; Qiu, M.N.; Zhang, D.; Yang, J.Y. A sparse representation method of bimodal biometrics and palmprint recognition experiments. Neurocomputing 2013, 103, 164–171.

- Rida, I.; Al Maadeed, N.; Al Maadeed, S. A novel efficient classwise sparse and collaborative representation for holistic palmprint recognition. In Proceedings of the 2018 IEEE NASA/ESA Conference on Adaptive Hardware and Systems (AHS), Edinburgh, UK, 6–9 August 2018; pp. 156–161.

- Rida, I.; Maadeed, S.A.; Mahmood, A.; Bouridane, A.; Bakshi, S. Palmprint identification using an ensemble of sparse representations. IEEE Access 2018, 6, 3241–3248.

- Han, D.; Guo, Z.H.; Zhang, D. Multispectral palmprint recognition using wavelet-based image fusion. In Proceedings of the IEEE International Conference on Signal Processing (ICSP), Beijing, China, 26–29 October 2008; pp. 2074–2077.

- Aberni, Y.; Boubchir, L.; Daachi, B. Multispectral palmprint recognition: A state-of-the-art review. In Proceedings of the IEEE International Conference on Telecommunications and Signal Processing, Barcelona, Spain, 5–7 July 2017; pp. 793–797.

- Bounneche, M.D.; Boubchir, L.; Bouridane, A.; Nekhoul, B.; Cherif, A.A. Multi-spectral palmprint recognition based on oriented multiscale log-Gabor filters. Neurocomputing 2016, 205, 274–286.

- Hong, D.F.; Liu, W.Q.; Su, J.; Pan, Z.K.; Wang, G.D. A novel hierarchical approach for multispectral palmprint recognition. Neurocomputing 2015, 151, 511–521.

- Raghavendra, R.; Busch, C. Novel image fusion scheme based on dependency measure for robust multispectral palmprint recognition. Pattern Recogn. 2014, 47, 2205–2221.

- Xu, X.P.; Guo, Z.H.; Song, C.J.; Li, Y.F. Multispectral palmprint recognition using a quaternion matrix. Sensors 2012, 12, 4633–4647.

- Gumaei, A.; Sammouda, R.; Al-Salman, A.M.; Alsanad, A. An improved multispectral palmprint recognition system using autoencoder with regularized extreme learning machine. Comput. Intell. Neurosci. 2018, 2018, 8041069.

- Xu, X.B.; Lu, L.B.; Zhang, X.M.; Lu, H.M.; Deng, W.Y. Multispectral palmprint recognition using multiclass projection extreme learning machine and digital shearlet transform. Neural Comput. Appl. 2016, 27, 143–153.

- El-Tarhouni, W.; Boubchir, L.; Elbendak, M.; Bouridane, A. Multispectral palmprint recognition using Pascal coefficients-based LBP and PHOG descriptors with random sampling. Neural Comput. Appl. 2017, 1–11.

- Zhang, D.; Guo, Z.H.; Lu, G.M.; Zhang, L.; Zuo, W.M. An online system of multispectral palmprint verification. IEEE Trans. Instrum. Meas. 2010, 59, 480–490.

- Minaee, S.; Abdolrashidi, A.A. Multispectral palmprint recognition using textural features. In Proceedings of the 2014 IEEE Signal Processing in Medicine and Biology Symposium (SPMB), Philadelphia, PA, USA, 13 December 2014; pp. 1–5.

- Minaee, S.; Abdolrashidi, A.A. On the power of joint wavelet-DCT features for multispectral palmprint recognition. In Proceedings of the 2015 49th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 8–11 November 2015; pp. 1593–1597.

- Li, C.; Benezeth, Y.; Nakamura, K.; Gomez, R.; Yang, F. A robust multispectral palmprint matching algorithm and its evaluation for FPGA applications. J. Syst. Archit. 2018, 88, 43–53.

- Huang, G.B.; Zhu, Q.Y.; Siew, C.K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.

- Zhang, D.; Kong, W.K.; You, J.; Wong, M. Online palmprint identification. IEEE Trans. Pattern Anal. 2003, 25, 1041–1050.

- Donoho, D. For most large underdetermined systems of linear equations the minimal 𝓁1-norm solution is also the sparsest solution. Commun. Pur. Appl. Math. 2006, 59, 797–829.

- Yang, M.; Zhang, L.; Yang, J.; Zhang, D. Robust sparse coding for face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 625–632.

- Xu, Y.; Zhong, Z.F.; Jang, J.; You, J.; Zhang, D. A new discriminative sparse representation method for robust face recognition via 𝓁2 regularization. IEEE Trans. Neural Netw. Learn Syst. 2017, 28, 2233–2242.

- l1_ls: Simple MATLAB Solver for l1-Regularized Least Squares Problems. Available online: http://web.stanford.edu/~boyd/l1_ls/ (accessed on 15 May 2008).

- Yang, A.Y.; Zhou, Z.H.; Balasubramanian, A.G.; Sastry, S.S.; Ma, Y. Fast 𝓁1-minimization algorithms for robust face recognition. IEEE Trans. Image Process. 2013, 22, 3234–3246.

- Zhang, L.; Yang, M.; Feng, X.C. Sparse representation or collaborative representation: Which helps face recognition? In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 471–478.

- Cortes, C. Support vector network. Mach. Learn. 1995, 20, 273–297.

- Kolda, T.G.; Bader, B.W. Tensor decompositions and applications. SIAM Rev. 2009, 51, 455–500.

Input: testing sample , training sample matrix , initialize the residual function matrix .

Output: linear representation coefficient .

While not convergent, do

1. Calculate the collaborative representation code by solving
2. Calculate the residual by employing
3. Calculate the residual function by using
4. Update by utilizing
5. Calculate

End while

6. For each spectral testing sample , calculate by using
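The equations behind steps 1 to 5 did not survive in this copy, so the following is only a minimal sketch of an iteratively reweighted, L2-regularized coding loop of the same general shape. Everything specific in it is an assumption rather than the authors' formulation: the ridge-style weighted least-squares solve, the logistic weight function (in the spirit of robust sparse coding [48]), the median-based default threshold, and the names `robust_l2_code`, `lam`, `mu` and `delta`. Step 6 (per-spectrum evaluation) is not covered.

```python
import numpy as np


def robust_l2_code(y, X, lam=1e-3, mu=8.0, delta=None, max_iter=20, tol=1e-4):
    """Iteratively reweighted L2-regularized coding (illustrative sketch).

    y : (d,) test sample, X : (d, n) training matrix.
    lam, mu, delta are hypothetical parameter names, not taken from the paper.
    Returns the coefficient vector and the final per-pixel weights.
    """
    d, n = X.shape
    w = np.ones(d)          # initial residual weights: trust every pixel
    alpha = np.zeros(n)
    for _ in range(max_iter):
        # Step 1 (assumed form): weighted ridge regression,
        # alpha = (X^T W X + lam I)^{-1} X^T W y with W = diag(w).
        Xw = X * w[:, None]
        alpha = np.linalg.solve(X.T @ Xw + lam * np.eye(n), Xw.T @ y)

        # Step 2: squared residual of the current reconstruction.
        e2 = (y - X @ alpha) ** 2

        # Step 3 (assumed form): logistic weight, close to 1 for small
        # residuals and close to 0 for large ones. The threshold defaults
        # to the median squared residual, which is an assumption.
        thr = np.median(e2) if delta is None else delta
        z = mu * (e2 - thr) / (thr + 1e-12)
        w_new = 1.0 / (1.0 + np.exp(np.clip(z, -50.0, 50.0)))

        # Steps 4-5: update the weights and test for convergence.
        converged = np.linalg.norm(w_new - w) <= tol * max(np.linalg.norm(w), 1e-12)
        w = w_new
        if converged:
            break
    return alpha, w
```

The design point this illustrates is that each iteration stays a closed-form linear solve, so pixels with large residuals (for example salt & pepper corruption) are progressively down-weighted without resorting to an L1 solver.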

| Representation Method | Recognition Rate (%): Noise-Free | Recognition Rate (%): White Gaussian Noise | Recognition Rate (%): Salt & Pepper Noise |
|---|---|---|---|
| SRC | 99.64 | 97.84 | 94.28 |
| CRC | 99.44 | 98.76 | 96.68 |
| DSRM | 97.96 | 96.68 | 96.28 |
| RL2SR | 99.68 | 99.20 | 97.24 |

| Fusion Method | Noise Contamination Case | Recognition Rate (%): 2 | Recognition Rate (%): 3 | Recognition Rate (%): 4 |
|---|---|---|---|---|
| Sum fusion | Noise-free | 97.50 | 99.56 | 99.90 |
| Sum fusion | White Gaussian noise | 96.70 | 99.44 | 99.65 |
| Sum fusion | Salt & pepper noise | 89.63 | 96.56 | 98.55 |
| Min-max fusion | Noise-free | 92.83 | 97.68 | 99.25 |
| Min-max fusion | White Gaussian noise | 92.67 | 97.44 | 99.20 |
| Min-max fusion | Salt & pepper noise | 72.53 | 82.16 | 85.85 |
| Our adaptive fusion | Noise-free | 97.73 | 99.68 | 100.00 |
| Our adaptive fusion | White Gaussian noise | 97.47 | 99.20 | 99.95 |
| Our adaptive fusion | Salt & pepper noise | 92.27 | 97.24 | 99.05 |

| Classifier | Recognition Rate (%): Noise-Free | Recognition Rate (%): White Gaussian Noise | Recognition Rate (%): Salt & Pepper Noise |
|---|---|---|---|
| NN | 99.24 | 96.48 | 44.24 |
| KNN | 97.12 | 93.32 | 38.92 |
| ELM | 99.18 | 99.16 | 95.55 |
| MPELM | 99.00 | 98.80 | 95.60 |
| RELM | 99.41 | 98.96 | 96.07 |
| TELM | 99.68 | 99.20 | 97.24 |

| Classifier | Classification Time (s) |
|---|---|
| NN | 7.76 |
| KNN | 5.17 |
| ELM | 1.51 |
| MPELM | 1.82 |
| RELM | 1.67 |
| TELM | 1.59 |

| Spectral Combination | Recognition Rate (%): Noise-Free | Recognition Rate (%): White Gaussian Noise | Recognition Rate (%): Salt & Pepper Noise |
|---|---|---|---|
| Blue | 99.55 | 98.65 | 80.90 |
| Green | 99.50 | 99.25 | 87.65 |
| Red | 99.45 | 99.15 | 83.10 |
| NIR | 98.65 | 94.50 | 76.75 |
| Blue, Green | 100.00 | 99.80 | 95.80 |
| Blue, Red | 99.95 | 99.80 | 93.30 |
| Blue, NIR | 100.00 | 99.85 | 90.70 |
| Green, Red | 99.75 | 99.50 | 96.15 |
| Green, NIR | 100.00 | 99.80 | 95.80 |
| Red, NIR | 99.90 | 99.90 | 96.60 |
| Blue, Green, Red | 100.00 | 99.85 | 98.65 |
| Blue, Green, NIR | 100.00 | 99.90 | 97.60 |
| Blue, Red, NIR | 100.00 | 99.90 | 97.15 |
| Green, Red, NIR | 99.95 | 99.85 | 98.35 |
| Blue, Green, Red, NIR | 100.00 | 99.95 | 99.05 |

| Algorithm | Recognition Rate (%): 3 Training Samples | Recognition Rate (%): 4 Training Samples | Recognition Rate (%): 5 Training Samples | Recognition Rate (%): 6 Training Samples |
|---|---|---|---|---|
| Deep scattering network method [18] | 100 | 100 | 100 | 100 |
| Texture feature based method [42] | - | 99.96 | 99.99 | 100 |
| DCT-based features method [43] | - | 99.97 | 100 | 100 |
| Our proposed RL2SR-TELM | 99.68 | 100 | 100 | 100 |

| Algorithm | Recognition Rate (%): Noise-Free | Recognition Rate (%): White Gaussian Noise | Recognition Rate (%): Salt & Pepper Noise |
|---|---|---|---|
| Matching score-level fusion by LOC [41] | 99.43 | 99.23 | 96.48 |
| DST-MPELM [39] | 99.47 | 98.30 | 89.98 |
| AE-RELM [38] | 99.16 | 98.48 | 95.76 |
| QPCA + QDWT [37] | 98.83 | 93.33 | 90.16 |
| Image-level fusion by DWT [32] | 99.03 | 96.23 | 82.75 |
| Our proposed RL2SR-TELM | 99.68 | 99.20 | 97.24 |

| Procedure | RL2SR and Adaptive Fusion | TELM | Total Time |
|---|---|---|---|
| Average time (s) | 0.10892 | 0.00053 | 0.10945 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Cheng, D.; Zhang, X.; Xu, X. An Improved Recognition Approach for Noisy Multispectral Palmprint by Robust L2 Sparse Representation with a Tensor-Based Extreme Learning Machine. Sensors 2019, 19, 235. https://doi.org/10.3390/s19020235
