AR Enabled IoT for a Smart and Interactive Environment: A Survey and Future Directions

Abstract

1. Introduction

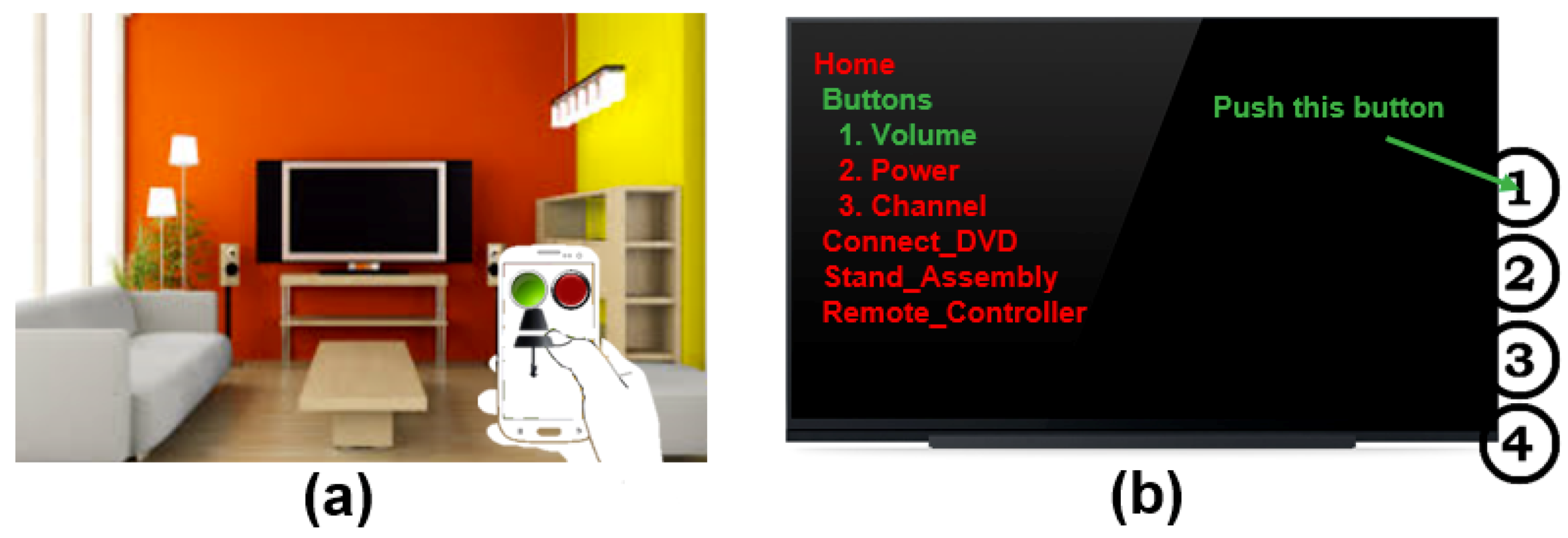

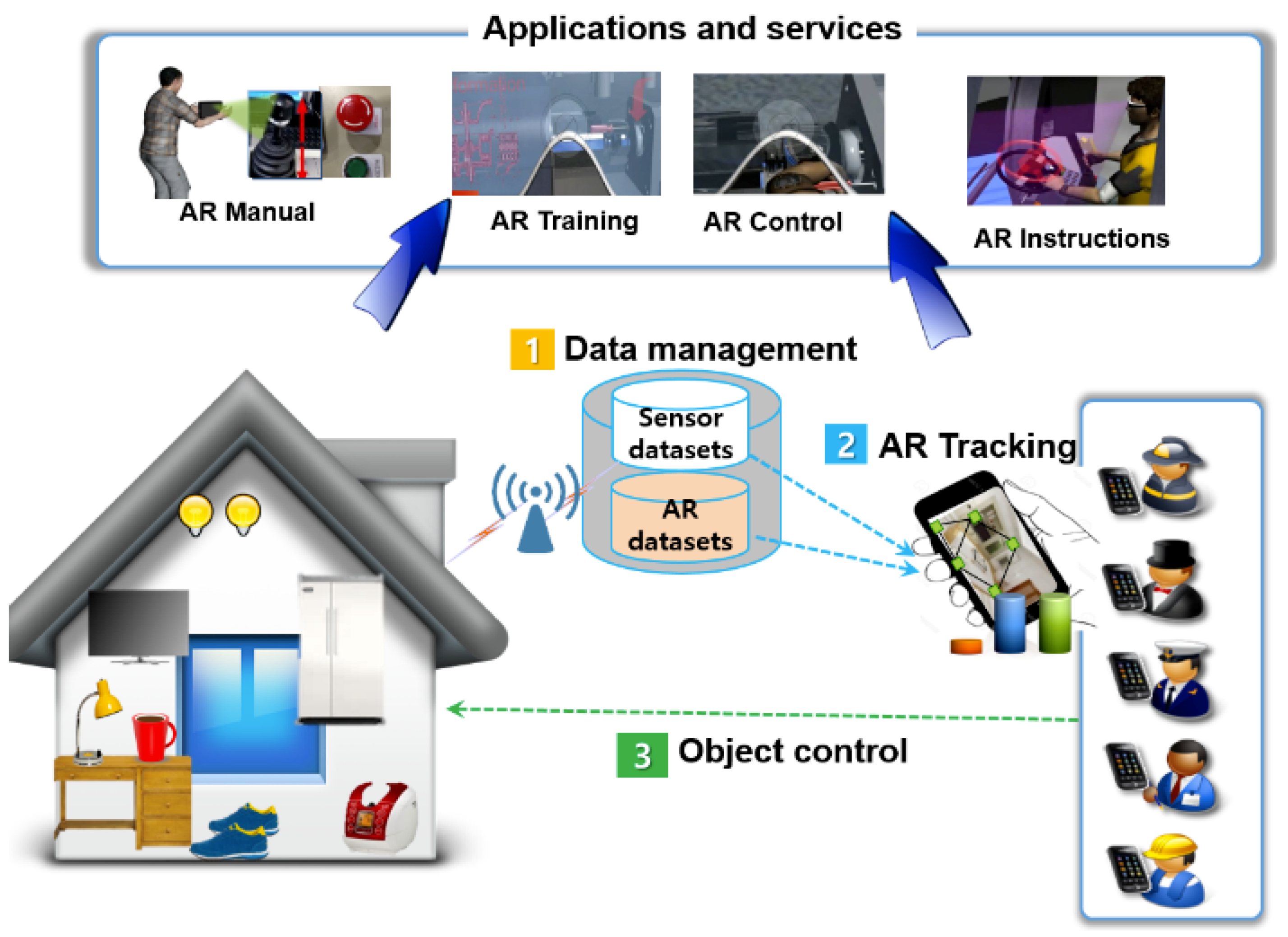

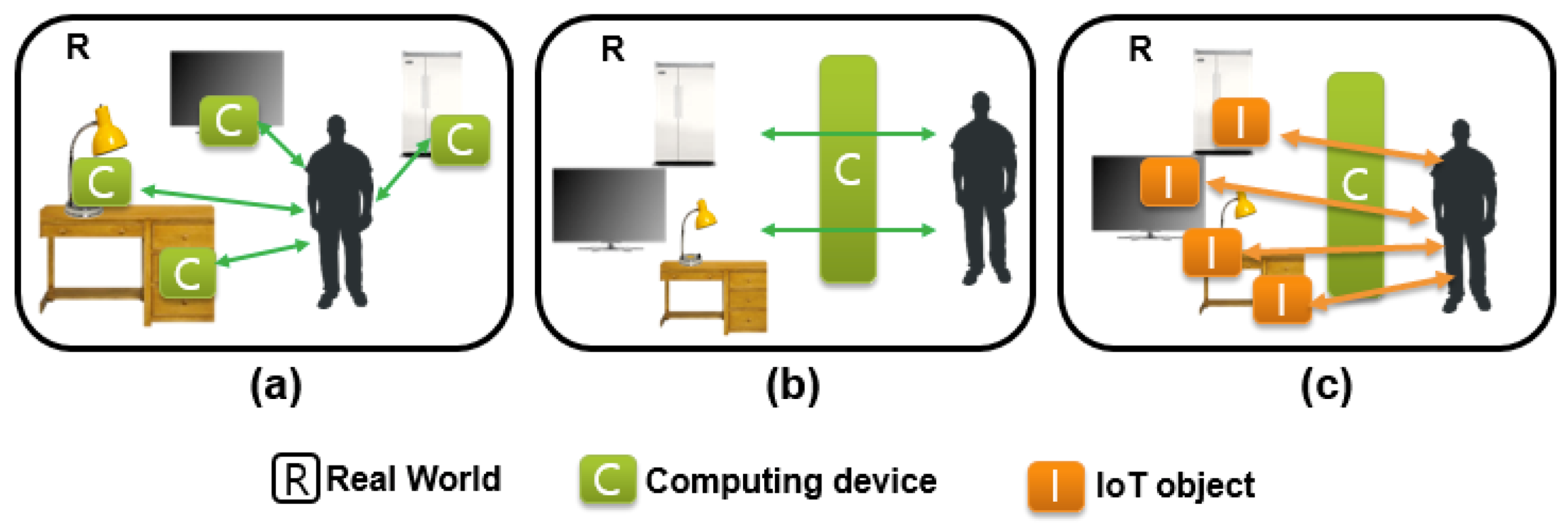

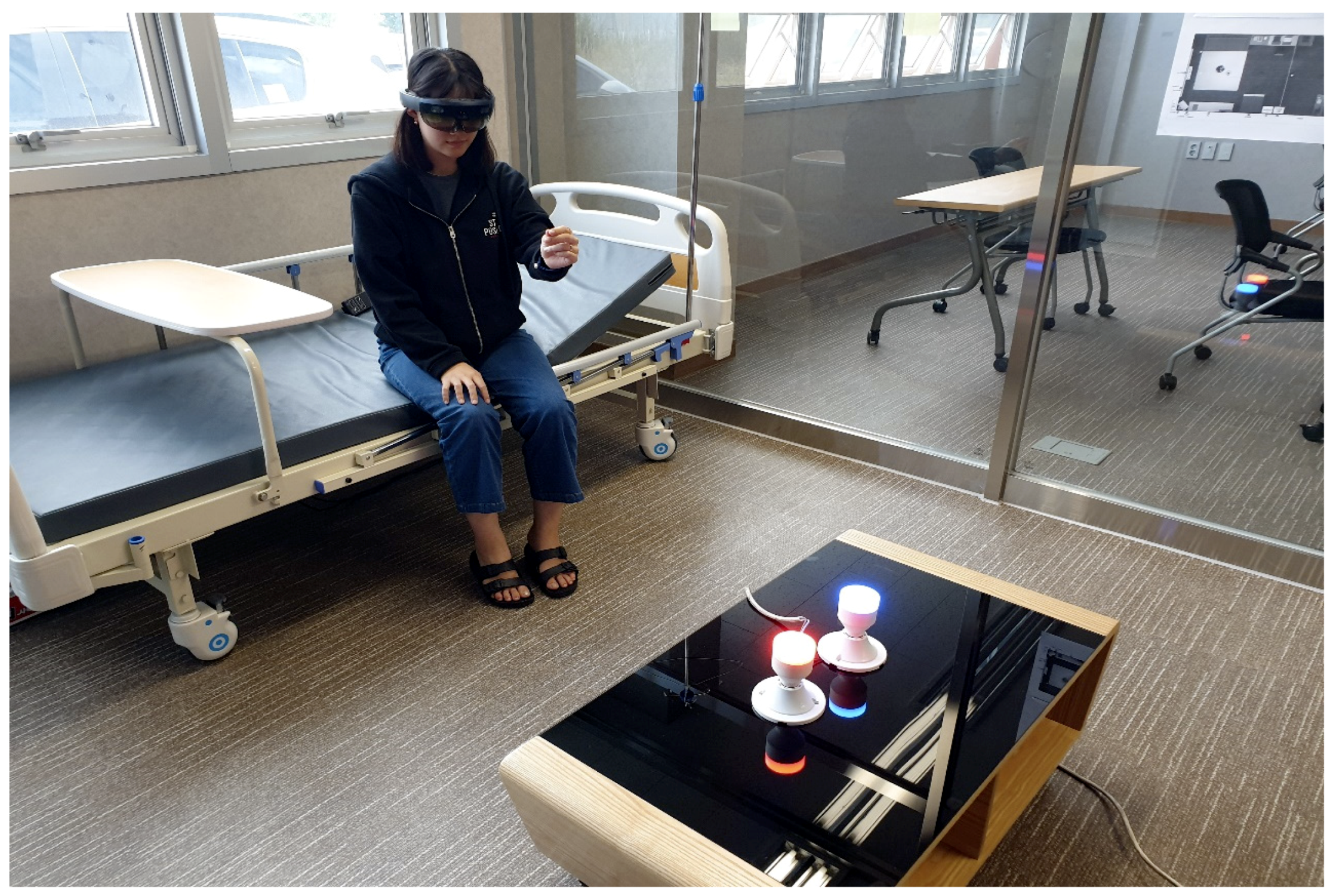

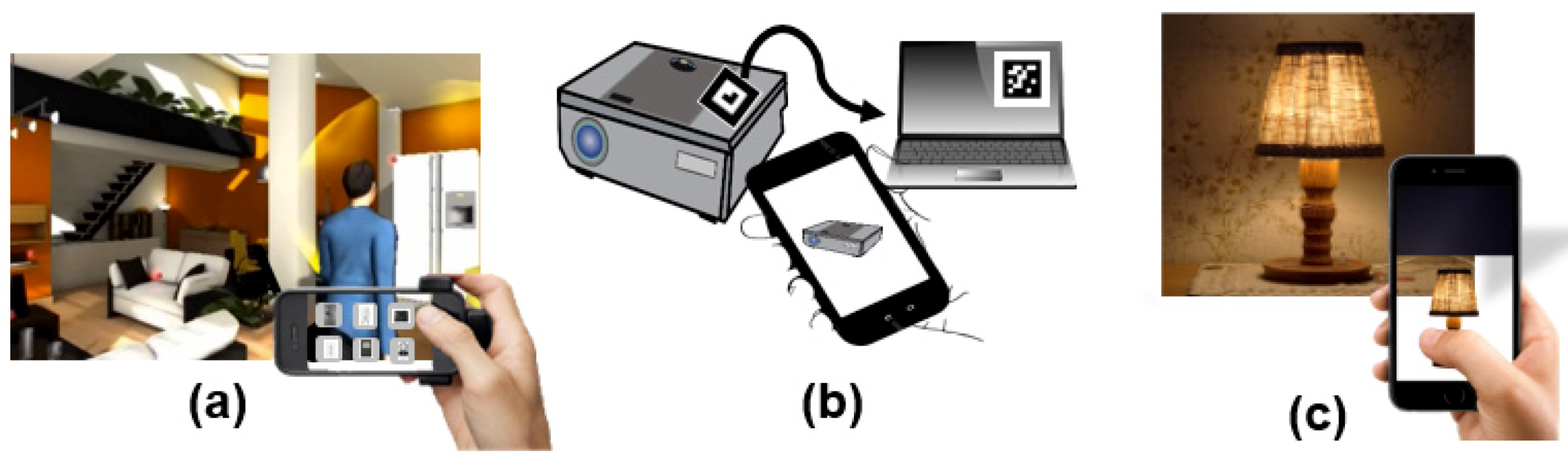

2. Use Case Scenarios

3. AR Enabled IoT Platform for a Smart and Interactive Environment

4. Data Management for Physical Objects

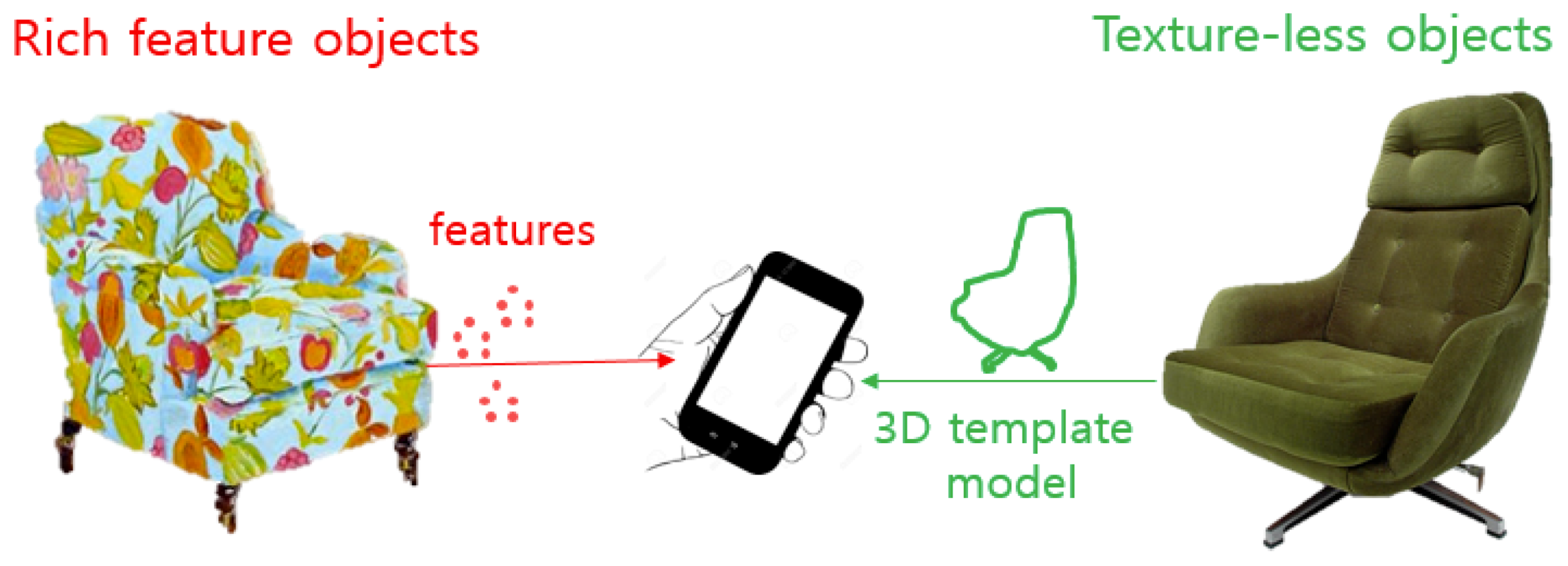

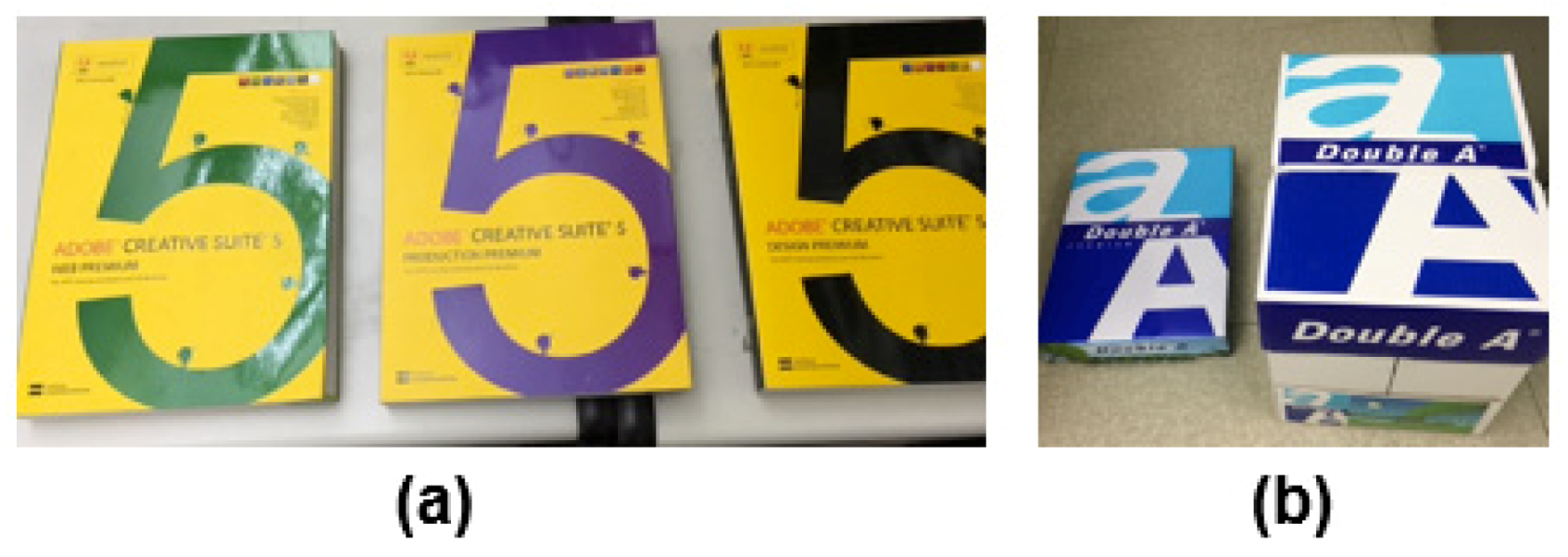

5. Scalable AR Recognition and Tracking for “Every” IoT Object

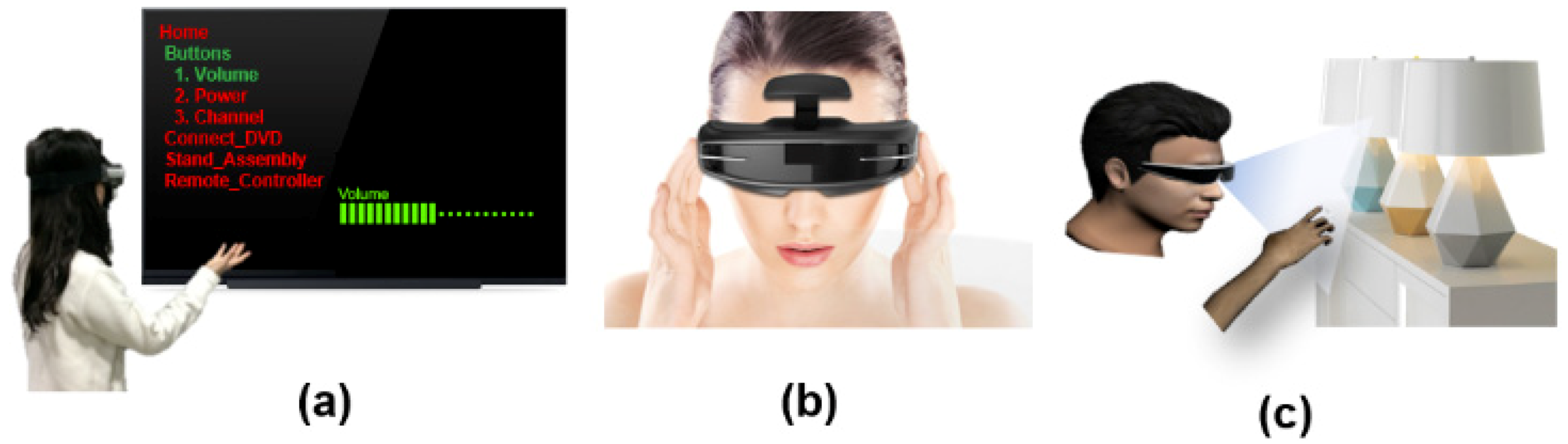

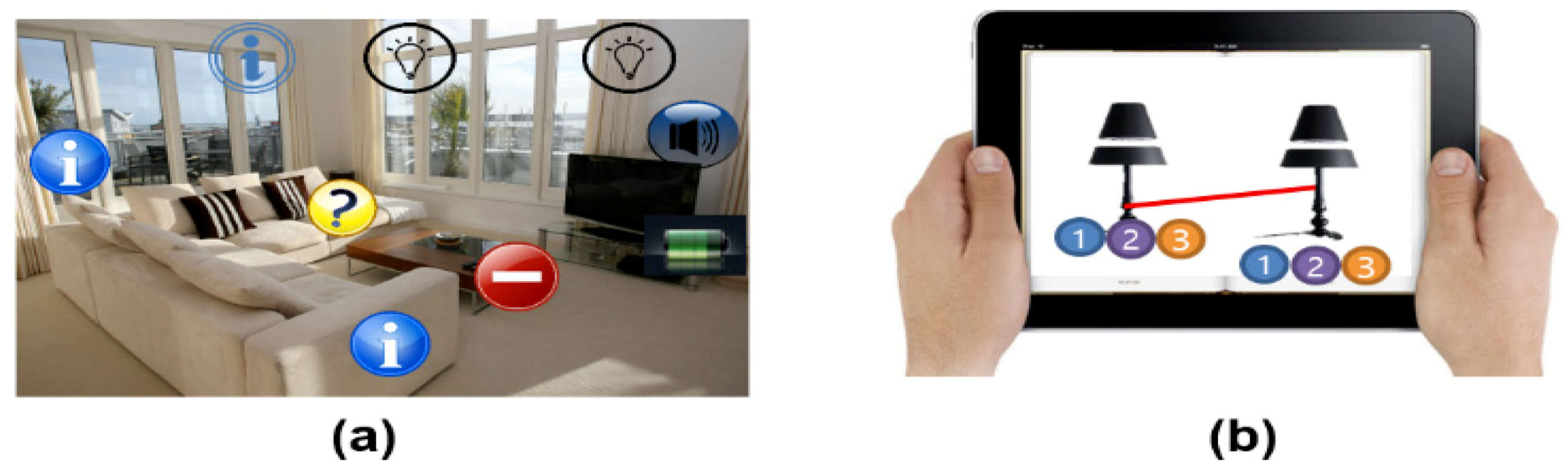

6. IoT Object Control with Scalable AR Interaction

7. Future Research Directions

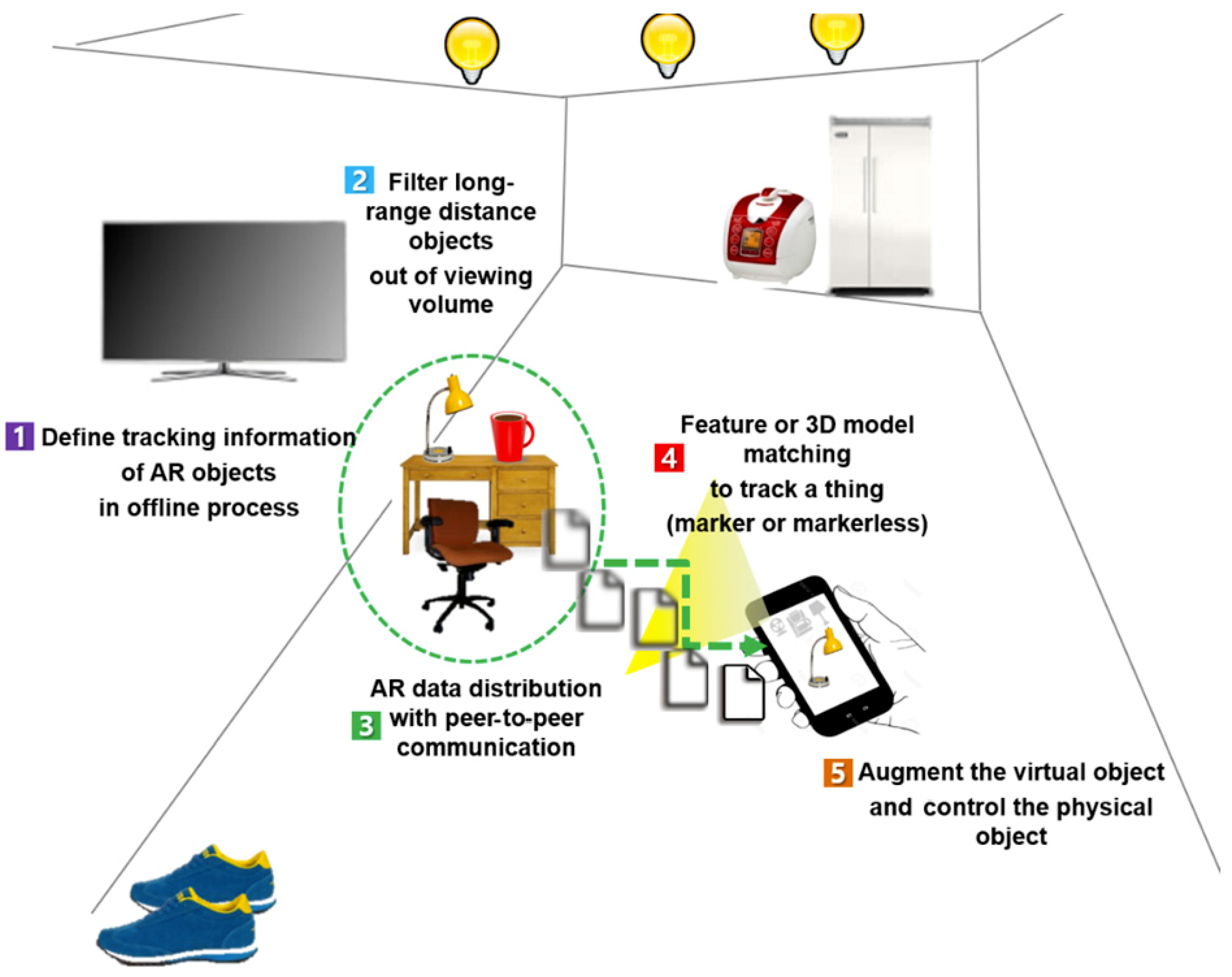

7.1. Proposal: Data Distribution and Peer-to-Peer Communication

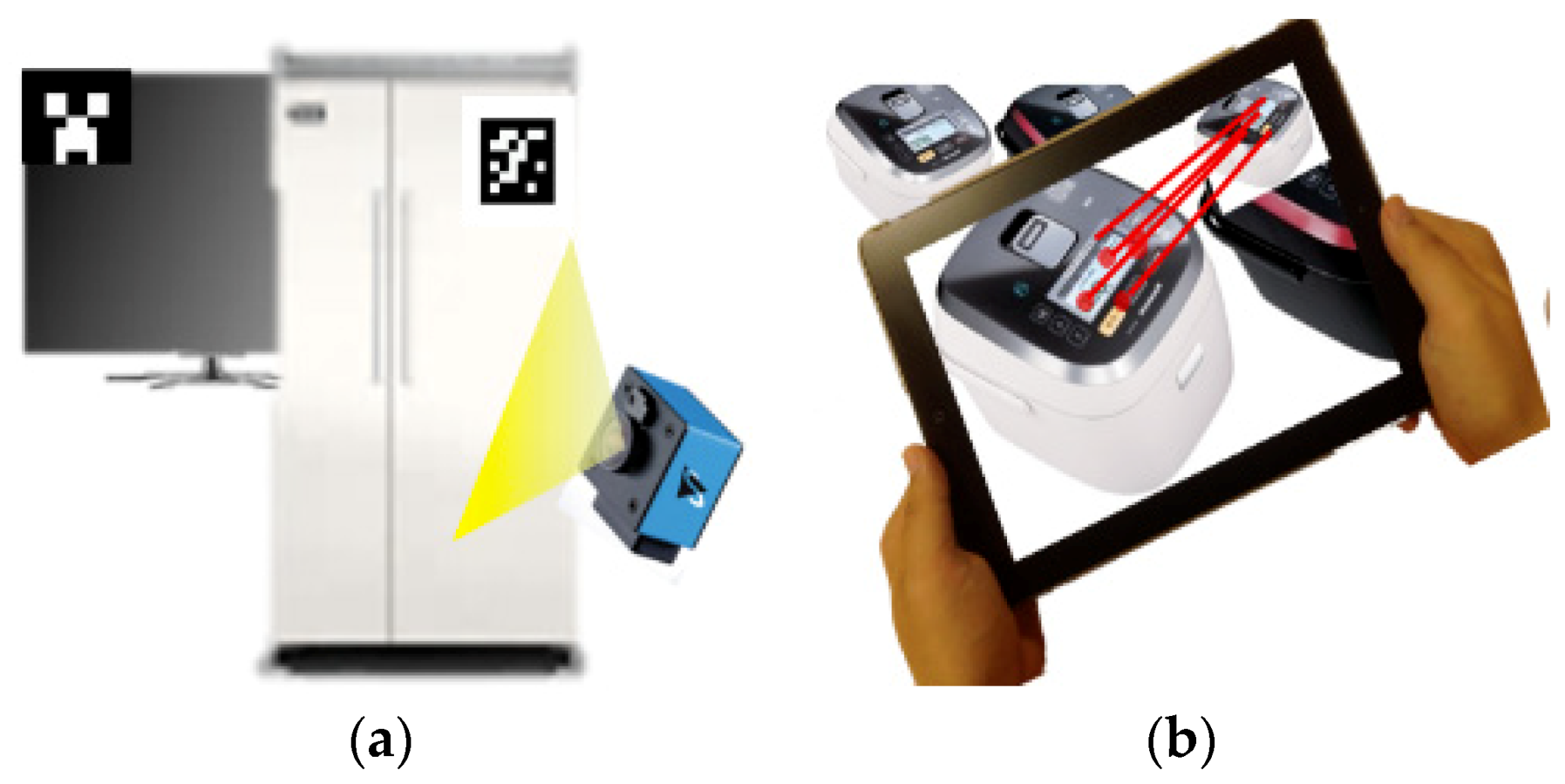

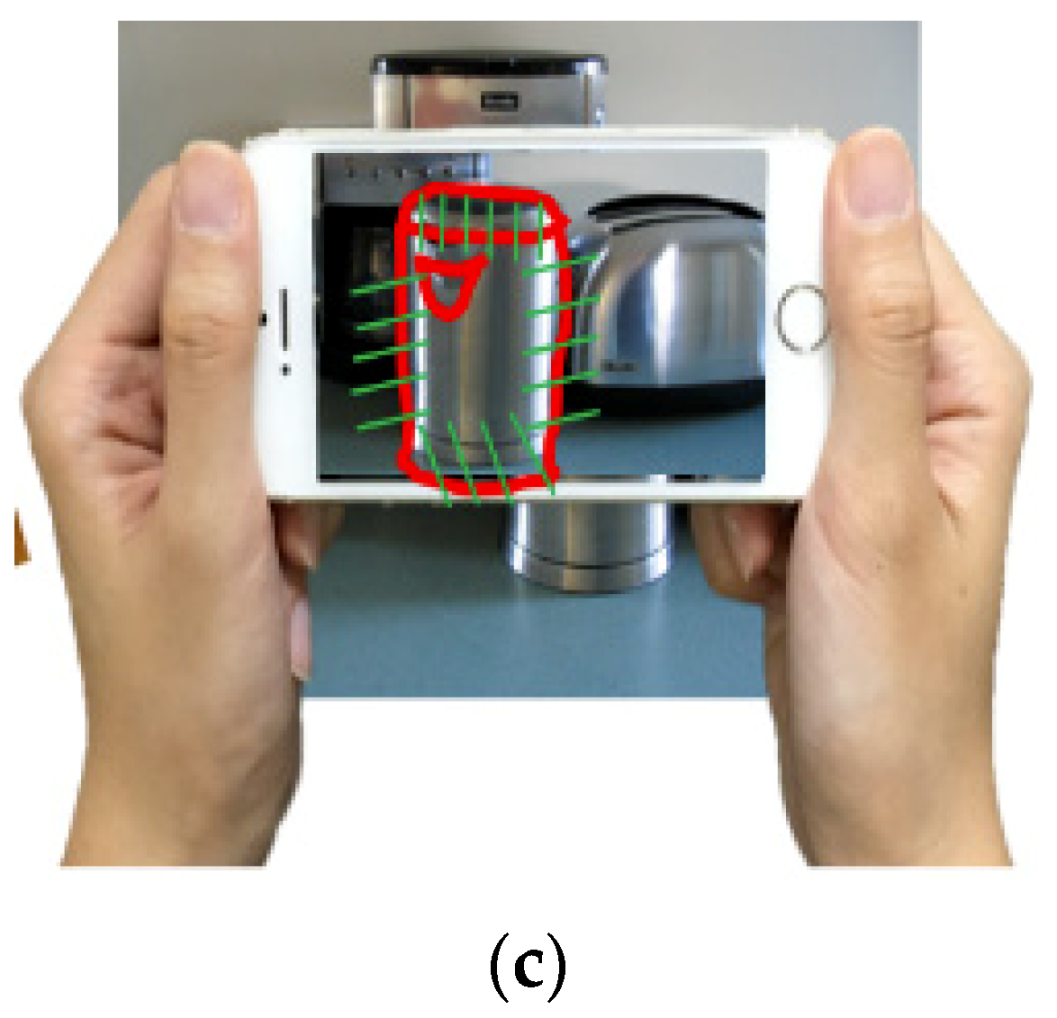

7.2. Proposal: Object-Centric Guided Tracking

7.3. Proposal: AR Interaction Framework for Object Class

8. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Jo, D.; Kim, G.J. Local context based recognition + Internet of Things: Complementary infrastructures for future generic mixed reality space. In Proceedings of the 21st ACM Symposium on Virtual Reality Software and Technology (VRST 2015), Beijing, China, 13–15 November 2015; p. 196.

- White, G.; Cabrera, C.; Palade, A.; Clarke, S. Augmented reality in IoT. In Proceedings of the 8th International Workshop on Context-Aware and IoT Services, Hangzhou, China, 12–15 November 2018.

- Phupattanasilp, P.; Tong, S. Augmented reality in the integrative Internet of Things (IoT): Application for precision farming. Sustainability 2019, 11, 2658.

- Gimenez, R.; Pous, M. Augmented reality as an enabling factor for the Internet of Things. In Proceedings of the W3C Workshop: Augmented Reality on the Web, Barcelona, Spain, 15–16 June 2010.

- Brooks, F.P. The computer scientist as toolsmith. Commun. ACM 1996, 39, 61–68.

- Radkowski, R. Augmented reality to supplement work instructions. In Proceedings of the Model-Based Enterprise Summit, Gaithersburg, MD, USA, 18–19 December 2013.

- Perera, C.; Zaslavsky, A.; Christen, P.; Georgakopoulos, D. Sensing as a service model for smart cities supported by Internet of Things. Trans. Emerg. Telecommun. Technol. 2014, 25, 81–93.

- Zhang, H.; Zhang, Z.; Zhang, L.; Yang, Y.; Kang, Q.; Sun, D. Object tracking for a smart city using IoT and edge computing. Sensors 2019, 19, 1987.

- Srimathi, B.; Janani, E.; Shanthi, N.; Thirumoorthy, P. Augmented reality based IoT concept for smart environment. Int. J. Intellect. Adv. Res. Eng. Comput. 2019, 5, 809–812.

- Badouch, A.; Krit, S.; Kabrane, M.; Karimi, K. Augmented reality service implemented within smart cities, based on an Internet of Things infrastructure, concepts and challenges: An overview. In Proceedings of the Fourth International Conference on Engineering & MIS, Istanbul, Turkey, 19–20 June 2018.

- Jo, D.; Kim, G.J. IoT+AR: Pervasive and augmented environments for Digi-log shopping experience. Hum.-Centric Comput. Inf. Sci. 2019, 9, 1.

- Lee, S.; Lee, G.; Choi, G.; Roh, B.; Kang, J. Integration of oneM2M-based IoT service platform and mixed reality device. In Proceedings of the IEEE International Conference on Consumer Electronics, Las Vegas, NV, USA, 11–13 January 2019.

- Kasahara, S.; Heun, V.; Lee, A.S.; Ishii, H. Second surface: Multi-user spatial collaboration system based on augmented reality. In Proceedings of the SIGGRAPH Asia Emerging Technologies, Singapore, 28 November–1 December 2012; pp. 1–4.

- Suh, Y.; Park, Y.; Yoon, H.; Chang, Y.; Woo, W. Context-aware mobile AR system for personalization, selective sharing, and interaction of contents in ubiquitous computing environments. Hum.-Comput. Interact. 2007, 4551, 966–974.

- Michalakis, K.; Aliprantis, J.; Caridakis, G. Visualizing the Internet of Things: Naturalizing human-computer interaction by incorporating AR features. IEEE Consum. Electron. Mag. 2018, 7, 64–72.

- Bonomi, F.; Milito, R.; Zhu, J.; Addepalli, S. Fog computing and its role in the Internet of Things. In Proceedings of the First Edition of the MCC Workshop on Mobile Cloud Computing, Helsinki, Finland, 17 August 2012; pp. 13–16.

- Jesudian, S.; Jabez, J. An Integration of IoT and Mixed Reality Using Fog Computing Concepts. Master's Thesis, Texas State University, San Marcos, TX, USA, 2018.

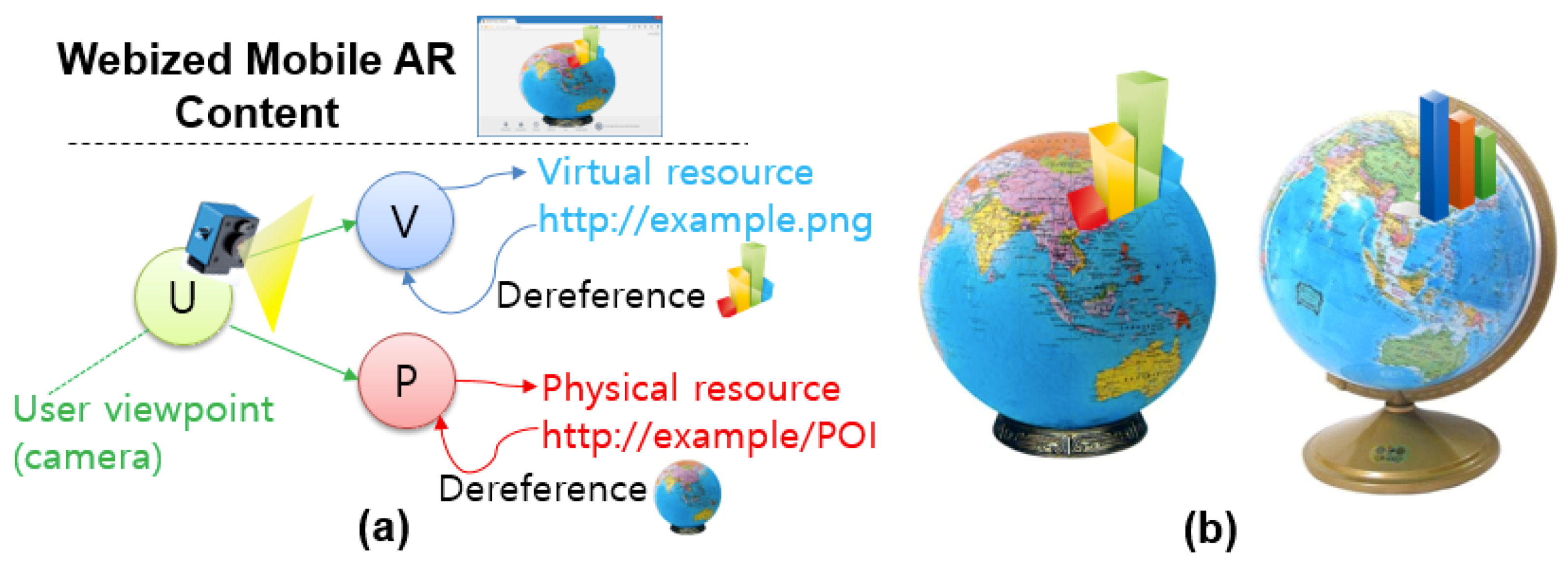

- Ahn, S.C.; Go, H.D.; Yoo, B.H. Webizing mobile augmented reality content. New Rev. Hypermedia Multimed. 2014, 20, 79–100.

- Kruijff, E.; Swan, E.; Feiner, S. Perceptual issues in augmented reality revisited. In Proceedings of the International Symposium on Mixed and Augmented Reality (ISMAR), Seoul, Korea, 13–16 October 2010.

- Campana, F.; Dominguez, F. Ariotivity: Provisioning smart objects with augmented reality and open standards. In Proceedings of the IEEE Third Ecuador Technical Chapters Meeting, Cuenca, Ecuador, 15–19 October 2018.

- Diao, P.; Shih, N. MARINS: A mobile smartphone AR system for pathfinding in a dark environment. Sensors 2018, 18, 3442.

- Newman, J.; Wagner, M.; Bauer, M.; Williams, A.M.; Pintaric, T.; Beyer, D.; Pustka, D.; Strasser, F.; Schmalstieg, D.; Klinker, G. Ubiquitous tracking for augmented reality. In Proceedings of the 3rd IEEE/ACM International Symposium on Mixed and Augmented Reality, Arlington, VA, USA, 5 November 2004; pp. 192–201.

- Negara, G.P.K.; Teck, F.W.; Yiqun, L. Hybrid feature and template-based tracking for augmented reality application. In ACCV 2014 Workshops; Springer: Cham, Switzerland, 2014.

- Jeong, Y.; Joo, H.; Hong, G.; Shin, D.; Lee, S. AVIoT: Web-based interactive authoring and visualization of indoor Internet of Things. IEEE Trans. Consum. Electron. 2015, 61, 295–301.

- Jackson, B.J.C.; Watten, P.L.; Newbury, P.F.; Lister, P.F. Rapid authoring for VR-based simulations of pervasive computing applications. In Proceedings of the 1st International Workshop on Methods & Tools for Designing VR Applications (MeTo-VR) in conjunction with the 11th International Conference on Virtual Systems and Multimedia, Flanders Expo, Ghent, Belgium, 3–7 October 2005.

- Heun, V.; Hobin, J.; Maes, P. Reality Editor: Programming smarter objects. In Proceedings of the 2013 ACM Conference on Pervasive and Ubiquitous Computing Adjunct Publication, Zurich, Switzerland, 8–12 September 2013; pp. 307–310.

- Choi, M.; Park, L.; Lee, S.; Hwang, J.; Park, S. Design and implementation of hyper-connected IoT-VR platform for customizable and intuitive remote services. In Proceedings of the IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 8–10 January 2017.

- Kim, M.; Choi, S.; Park, K.; Lee, J. User interactions for augmented reality smart glasses: A comparative evaluation of visual contexts and interaction gestures. Appl. Sci. 2019, 9, 3171.

- Mihara, S.; Sakamoto, A.; Shimada, H.; Sato, K. Augmented reality marker for operating home appliances. In Proceedings of the International Conference on Embedded and Ubiquitous Computing (EUC), Melbourne, VIC, Australia, 24–26 October 2011; pp. 372–377.

- Lu, C.-H. IoT-enhanced and bidirectionally interactive information visualization for context-aware home energy savings. In Proceedings of the Mixed and Augmented Reality—Media, Art, Social Science, Humanities and Design (ISMAR), Fukuoka, Japan, 29 September–3 October 2015; pp. 15–20.

- Rekimoto, J.; Nagao, K. The world through the computer: Computer augmented interaction with real world environments. In Proceedings of the Symposium on User Interface Software and Technology (UIST), Pittsburgh, PA, USA, 15–17 November 1995.

- Iglesias, J.; Bernardos, A.; Casar, J. An evidential and context-aware recommendation strategy to enhance interactions with smart spaces. In Hybrid Artificial Intelligent Systems; Springer: Berlin/Heidelberg, Germany, 2013; pp. 242–251.

- Ajanki, A.; Billinghurst, M.; Gamper, H.; Jarvenpaa, T.; Kandemir, M.; Kaski, S.; Koskela, M.; Kurimo, M.; Laaksonen, J.; Puolamaki, K.; et al. An augmented reality interface to contextual information. Virtual Real. 2011, 15, 161–173.

- Huang, B.-R.; Lin, C.H.; Lee, C.-H. Mobile augmented reality based on cloud computing. In Proceedings of the International Conference on Anti-Counterfeiting, Security and Identification (ASID), Taipei, Taiwan, 24–26 August 2012.

- Ghobaei-Arani, M.; Souri, A.; Rahmanian, A. Resource management approaches in fog computing: A comprehensive review. J. Grid Comput. 2019, 1–42.

- Muller, L.; Aslan, I.; Krußen, L. GuideMe: A mobile augmented reality system to display user manuals for home appliances. In Advances in Computer Entertainment (ACE); Springer: Cham, Switzerland, 2013.

- The Physical Web. Available online: https://google.github.io/physical-web/ (accessed on 14 August 2019).

- Kim, J.; Lee, J.; Yoo, B.; Ahn, S.; Go, H. View management for webized mobile AR contents. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 10–12 September 2014; pp. 271–272.

- Greg, T. Mediating Mediums. Available online: http://www.inventinginteractive.com/2011/08/22/mediating-mediums/ (accessed on 13 September 2019).

- Claros, D.; Seron, M.; Dominguez, M.; Trazegnies, C. Augmented reality visualization interface for biometric wireless sensor networks. In Proceedings of the 9th International Work-Conference on Artificial Neural Networks (IWANN); Springer: Berlin/Heidelberg, Germany, 2007; pp. 17–21.

- Uchiyama, H.; Marchand, E. Object detection and pose tracking for augmented reality: Recent approaches. In Proceedings of the 18th Korea-Japan Joint Workshop on Frontiers of Computer Vision (FCV), Kawasaki, Japan, 2–4 February 2012.

- Park, Y.; Yun, S.; Kim, K. When IoT met augmented reality: Visualizing the source of the wireless signal in AR view. In Proceedings of the 17th Annual International Conference on Mobile Systems, Applications, and Services, Seoul, Korea, 17–21 June 2019; pp. 117–129.

- Lifton, J.; Paradiso, J.A. Dual reality: Merging the real and virtual. In Proceedings of the 1st International Conference on Facets of Virtual Environments (FAVE), Berlin, Germany, 27–29 July 2009; pp. 27–29.

- Zhu, Z.; Branzoi, V.; Wolverton, M. AR-Mentor: Augmented reality based mentoring system. In Proceedings of the 13th IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 10–12 September 2014; pp. 17–22.

- Rekimoto, J.; Ayatsuka, Y. CyberCode: Designing augmented reality environments with visual tags. Proc. DARE 2000, 1–10.

- Rolland, J.P.; Fuchs, H. Optical versus video see-through head-mounted displays in medical visualization. Presence 2000, 9, 287–309.

- WebGL. Available online: https://www.khronos.org/webgl/ (accessed on 14 August 2019).

- Seo, B.-K.; Park, H.; Park, J.; Hinterstoisser, S.; Ilic, S. Optimal local searching for fast and robust textureless 3D object tracking in highly cluttered backgrounds. IEEE Trans. Vis. Comput. Graph. 2014, 20, 99–110.

- Tatzgern, M.; Kalkofen, D.; Grasset, R.; Schmalstieg, D. Hedgehog Labeling: View management techniques for external labels in 3D space. In Proceedings of the IEEE VR, Minneapolis, MN, USA, 29 March–2 April 2014; pp. 27–32.

- Madsen, J.; Tatzgern, M.; Madsen, C.; Schmalstieg, D.; Kalkofen, D. Temporal coherence strategies for augmented reality labeling. IEEE Trans. Vis. Comput. Graph. 2016, 22, 1415–1423.

- Lee, T.; Hollerer, T. Handy AR: Markerless inspection of augmented reality objects using fingertip tracking. In Proceedings of the 2007 11th IEEE International Symposium on Wearable Computers, Boston, MA, USA, 11–13 October 2007; pp. 1–8.

- Jang, Y.; Noh, S.-T.; Chang, H.J.; Kim, T.-K.; Woo, W. 3D Finger CAPE: Clicking action and position Estimation under Self-Occlusions in Egocentric Viewpoint. IEEE Trans. Vis. Comput. Graph. 2015, 21, 501–510.

- Heun, V.; Kasahara, S.; Maes, P. Smarter Objects: Using AR technology to program physical objects and their interactions. In Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI), Paris, France, 27 April–2 May 2013.

| Issues | Technology | Problems | References |
|---|---|---|---|
| Data management | AR | prebuilt 3D virtual object datasets, prestored AR content | Jo et al. [11] |
| | | context awareness (having accurate information about the surrounding environment) | Jo et al. [1], Jo et al. [11], Suh et al. [14] |
| | IoT | access to distributed sensor data (filtering), scalability (IoT perception) | Michalakis et al. [15], Bonomi et al. [16], Jesudian et al. [17] |
| | | quality of service, customizing the system according to the user's needs | White et al. [2], Michalakis et al. [15], Gimenez et al. [4], Ahn et al. [18] |
| | | object relationships, exchange of resources among objects | Kruijff et al. [19], Campana et al. [20], Kasahara et al. [13] |
| | | low interoperability | White et al. [2] |
| Viewer and display device | AR | AR registration ambiguity, registration errors, significant latency, augmentation method, occlusion, tracking in dark environments, field of view, the need for abundant markers | Kruijff et al. [19], Michalakis et al. [15], Diao et al. [21], Newman et al. [22], Negara et al. [23] |
| | | viewing predominantly through a specific AR device (e.g., head-worn display, handheld mobile device, projector–camera system) | Kruijff et al. [19] |
| | IoT | unintuitive context information, undirected viewing | Michalakis et al. [15] |
| | | result display with physical characteristics | Phupattanasilp et al. [3] |
| | | authoring for IoT | Jeong et al. [24], Jackson et al. [25], Heun et al. [26] |
| Interfaces and interaction methods | AR | fixed interaction method | Choi et al. [27], Kim et al. [28] |
| | IoT | undirected interaction, limitations on intuitively communicating services | Jo et al. [11], Mihara et al. [29], Lu et al. [30] |
| | | response time of the application | White et al. [2] |

| Key Components | Technology | Generic Features of AR and IoT | Potential Features to Combine AR with IoT in the Future |
|---|---|---|---|
| AR data management | AR | Visualization with prebuilt 3D virtual object datasets and prestored AR content, requiring a substantial amount of storage | Scalable AR dataset management and services able to directly access and immediately exchange contextual information of everyday objects for efficient AR representation; context-aware services with accurate information about the surrounding environment (filtering); customization of AR systems according to the user's needs in terms of IoT devices; exchange of resources and datasets among IoT objects |
| | IoT | Descriptions of and access to distributed sensor data (for example, context information) | |
| Object-guided tracking | AR | AR registration in only one way (for example, feature detection or model-based) | AR tracking methods based on binding between real space and the virtual object to be superimposed over the real IoT object in real time; robust object-optimized tracking that considers the tracking characteristics of each object; accessorial tracking information in limited environments (e.g., dark lighting); intuitive visualization with the physical characteristics of IoT devices; AR authoring for IoT devices |
| | IoT | Monitoring of resources collected from sensor-embedded objects (not treated as virtual imagery to be superimposed on a real IoT object) | |
| AR-based object control and interface | AR | Interaction to manipulate virtual objects through a fixed method | Object class-wise interaction customization and optimization; an intuitive AR interface for manipulating objects while directly viewing their response in terms of the functionality of the physical objects |
| | IoT | Control used to operate IoT sensors with an indirect viewing interface (for example, GUI menu-based IoT object control) | |
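One potential feature listed in the table above is object class-wise interaction customization: instead of one fixed interaction method, each class of IoT object carries its own set of AR interactions. The following is a minimal sketch under stated assumptions, not the authors' framework. The names `IoTObject`, `InteractionRegistry`, the gesture labels, and the two handlers are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


# Hypothetical object model: an IoT object identified by ID and class.
@dataclass
class IoTObject:
    object_id: str
    object_class: str  # e.g., "lamp", "thermostat" (illustrative classes)
    state: Dict[str, object] = field(default_factory=dict)


# A handler receives the object and a gesture value and returns the new state.
Handler = Callable[[IoTObject, float], Dict[str, object]]


class InteractionRegistry:
    """Hypothetical registry mapping an object class to the AR gestures
    it supports, so every object of that class shares one interaction set."""

    def __init__(self) -> None:
        self._by_class: Dict[str, Dict[str, Handler]] = {}

    def register(self, object_class: str, gesture: str, handler: Handler) -> None:
        self._by_class.setdefault(object_class, {})[gesture] = handler

    def gestures_for(self, obj: IoTObject) -> List[str]:
        # Gestures an AR view could offer when this object is selected.
        return sorted(self._by_class.get(obj.object_class, {}))

    def dispatch(self, obj: IoTObject, gesture: str, value: float) -> Dict[str, object]:
        handlers = self._by_class.get(obj.object_class, {})
        if gesture not in handlers:
            raise KeyError(f"{obj.object_class!r} has no interaction {gesture!r}")
        obj.state = handlers[gesture](obj, value)
        return obj.state


def set_brightness(obj: IoTObject, value: float) -> Dict[str, object]:
    # Clamp to [0, 1] so an over-extended pinch cannot overshoot.
    return {**obj.state, "brightness": max(0.0, min(1.0, value))}


def set_target_temperature(obj: IoTObject, value: float) -> Dict[str, object]:
    # Round the rotation-derived value to one decimal place (degrees Celsius).
    return {**obj.state, "target_c": round(value, 1)}


registry = InteractionRegistry()
registry.register("lamp", "pinch", set_brightness)
registry.register("thermostat", "rotate", set_target_temperature)

lamp = IoTObject("lamp-01", "lamp", {"brightness": 0.2})
print(registry.gestures_for(lamp))             # ['pinch']
print(registry.dispatch(lamp, "pinch", 1.4))   # {'brightness': 1.0}
```

Keying handlers by class rather than by individual device means a newly discovered object of a known class gets its AR interactions without per-device setup; objects of an unregistered class simply expose no gestures.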

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jo, D.; Kim, G.J. AR Enabled IoT for a Smart and Interactive Environment: A Survey and Future Directions. Sensors 2019, 19, 4330. https://doi.org/10.3390/s19194330
