Continuous Distant Measurement of the User’s Heart Rate in Human-Computer Interaction Applications

Abstract

1. Introduction

- To our knowledge, we are the first to systematically study the impact of human activities during various HCI scenarios (e.g., reading text, playing games) on the accuracy of the HR algorithm,

- As far as we know, we are the first to propose the use of a new image representation (excess green, ExG), which provides acceptable accuracy and at the same time is much faster to compute than other state-of-the-art methods (e.g., blind source separation with ICA),

- We used a state-of-the-art real-time face detection and tracking algorithm, and evaluated four signal extraction methods (preprocessing) and three different pulse rate estimation algorithms,

- To our knowledge, we are the first to propose a method of correcting the information delay introduced by the algorithm when comparing results with reference data.

2. Materials and Methods

2.1. Experimental Setup

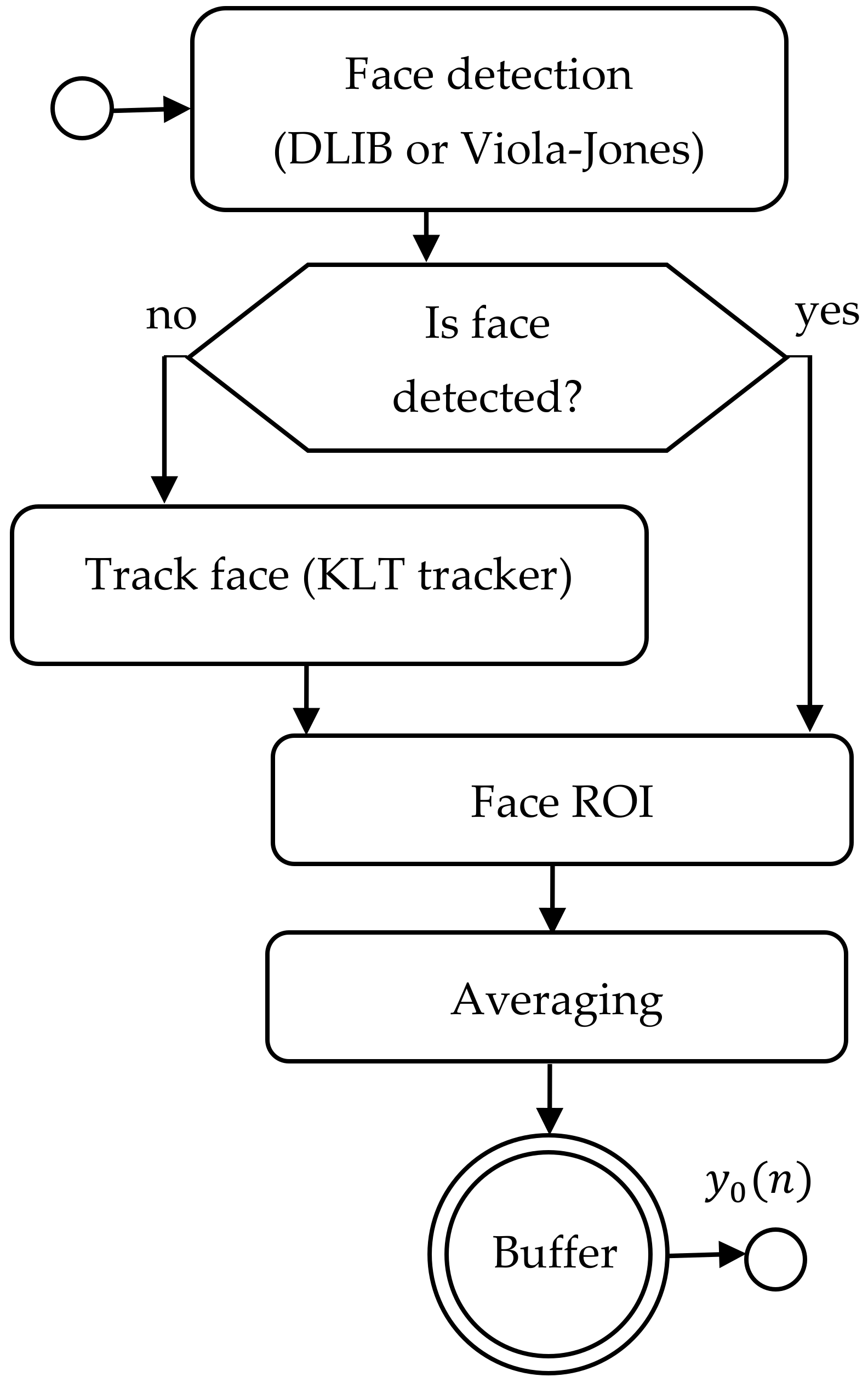

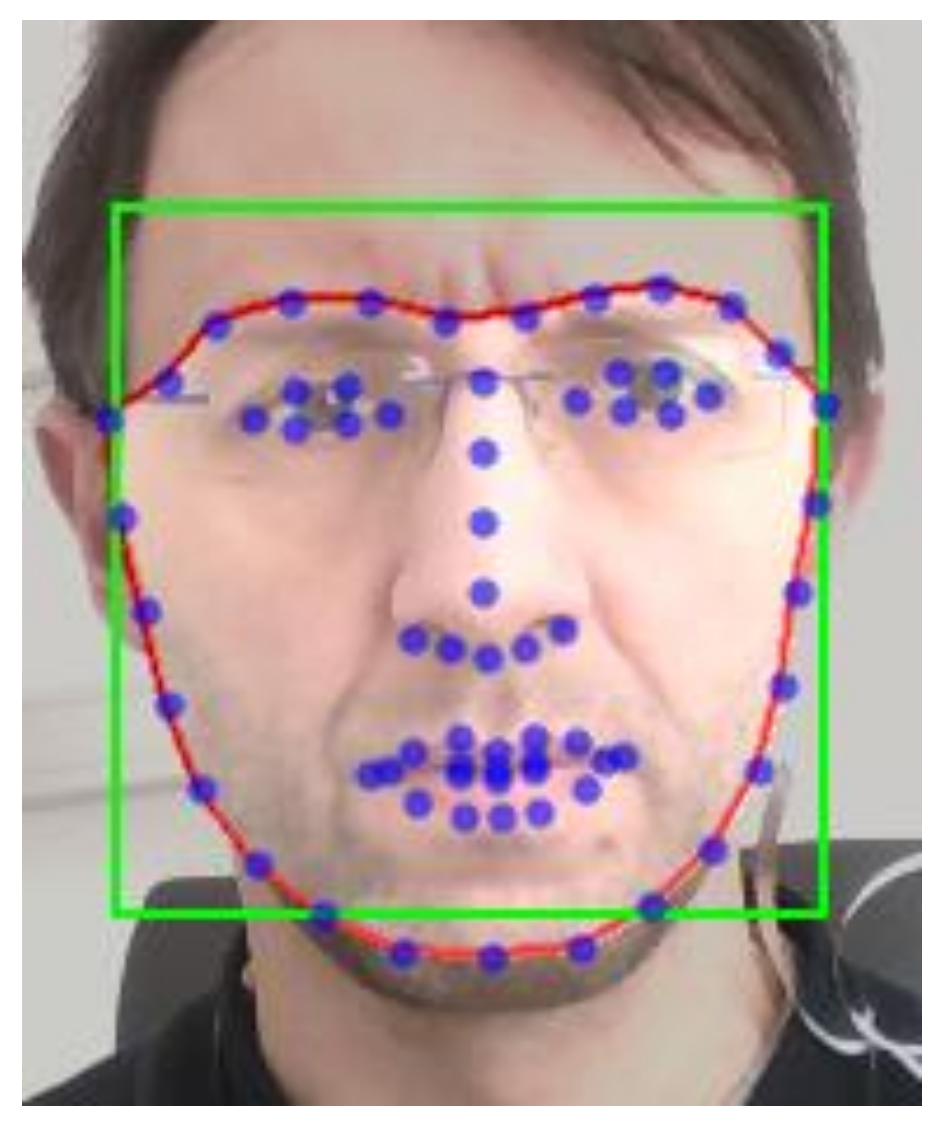

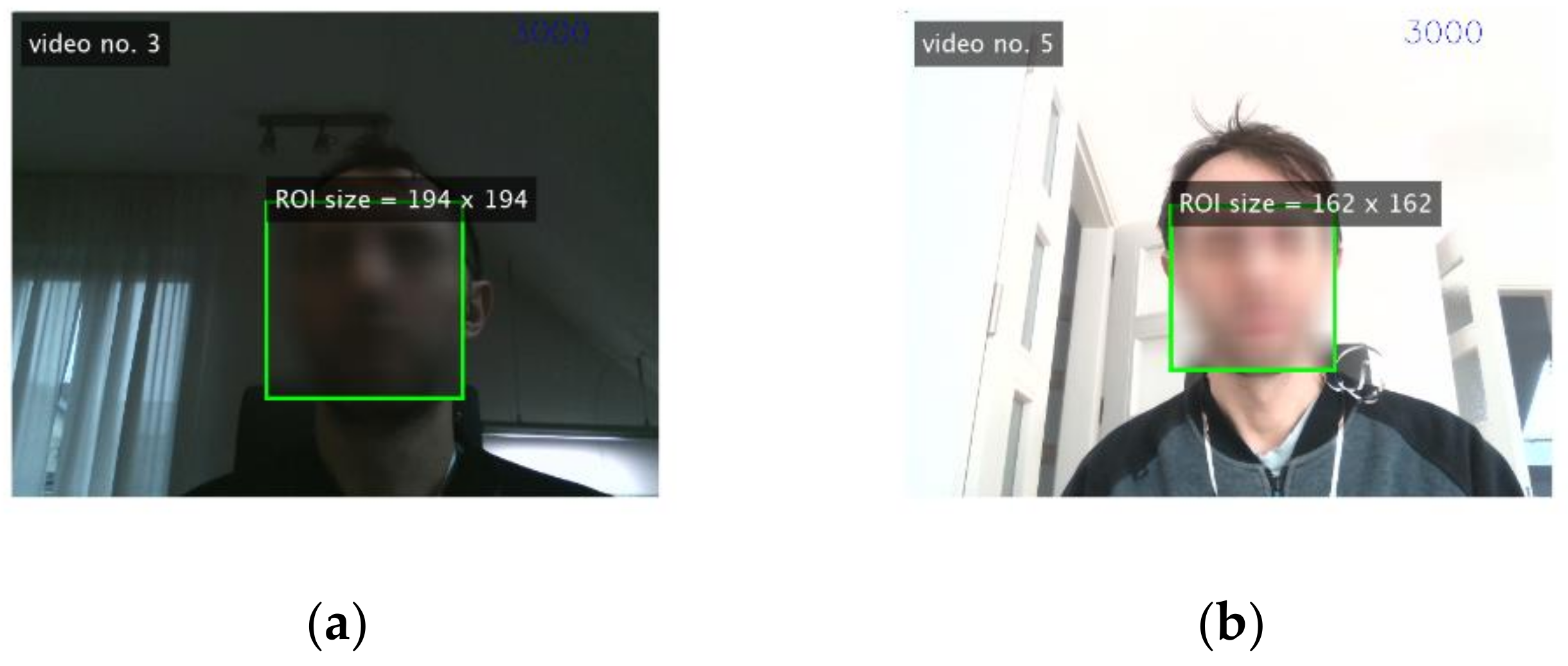

2.2. Region of Interest (ROI) Selection and Tracking

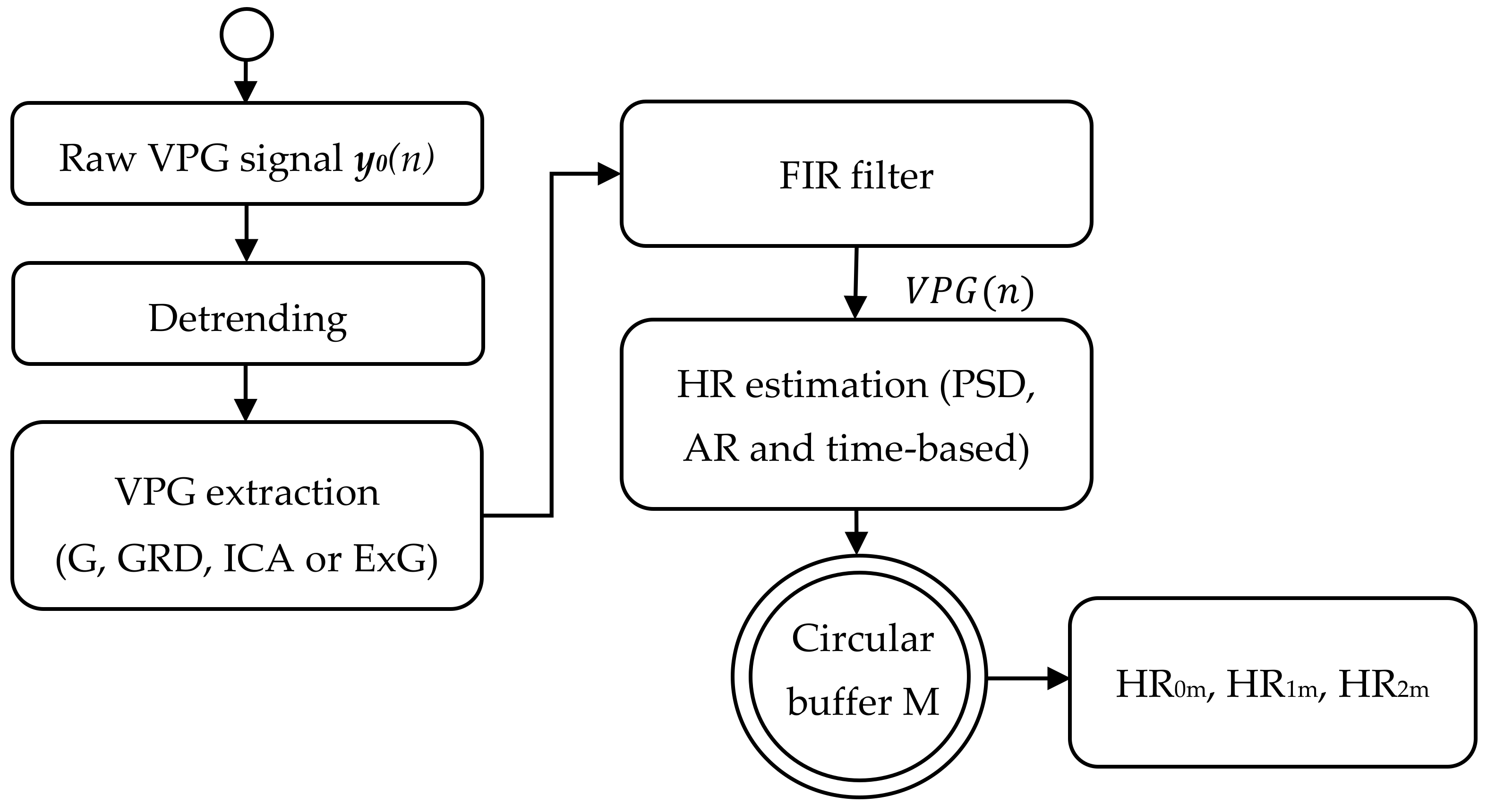

2.3. Preprocessing and VPG Signal Extraction
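
As a rough illustration of the color-based representations compared in this paper (G, GRD and ExG), the sketch below reduces one ROI frame to a single sample per representation. It assumes the commonly used definitions: G is the mean green value, GRD is the green-red difference G − R, and ExG is the excess green index 2g − r − b computed on chromaticity-normalized channels. The exact normalization used in the paper is not given in this section, and the function name `extract_samples` and the pixel layout are hypothetical.

```python
from statistics import fmean

def extract_samples(roi_pixels):
    """Reduce one ROI frame to (G, GRD, ExG) samples.

    roi_pixels: iterable of (r, g, b) tuples, e.g. 8-bit pixels from the
    tracked face region (this layout is an assumption).
    """
    pixels = list(roi_pixels)
    if not pixels:
        raise ValueError("ROI is empty")
    # Spatial averaging over the ROI suppresses per-pixel camera noise.
    r = fmean(p[0] for p in pixels)
    g = fmean(p[1] for p in pixels)
    b = fmean(p[2] for p in pixels)

    total = r + g + b
    if total == 0:
        raise ValueError("ROI is completely black")
    # Chromatic coordinates reduce sensitivity to overall brightness.
    rn, gn, bn = r / total, g / total, b / total

    return {
        "G": g,                   # plain green channel
        "GRD": g - r,             # green-red difference (assumed definition)
        "ExG": 2 * gn - rn - bn,  # excess green on normalized channels
    }

# One sample per video frame; stacking them over time gives the VPG signal.
frame = [(180, 120, 100), (200, 140, 110), (190, 130, 105)]
samples = extract_samples(frame)
```

Each representation needs only a few arithmetic operations per frame, which is consistent with the claim that ExG is much cheaper to compute than an ICA decomposition.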

2.4. Heart Rate Estimation Algorithm
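
A minimal sketch of a time-domain estimate in the spirit of the peak-detection approach (Algorithm No. 3 in Section 2.6). It assumes an already band-pass-filtered VPG buffer and a known sampling rate. The simple local-maximum rule, the `min_distance_s` refractory parameter and the function name `hr_from_peaks` are illustrative assumptions, not the paper's exact procedure.

```python
import math

def hr_from_peaks(signal, fs, min_distance_s=0.3):
    """Estimate heart rate [bpm] from the mean interval between peaks.

    signal: sequence of VPG samples (assumed already band-pass filtered)
    fs: sampling rate in Hz
    min_distance_s: hypothetical refractory period between accepted peaks
    """
    min_gap = int(min_distance_s * fs)
    mean = sum(signal) / len(signal)
    peaks = []
    for i in range(1, len(signal) - 1):
        is_local_max = signal[i - 1] < signal[i] >= signal[i + 1]
        if is_local_max and signal[i] > mean:
            # Skip peaks that follow the previous one too closely.
            if not peaks or i - peaks[-1] >= min_gap:
                peaks.append(i)
    if len(peaks) < 2:
        return None  # not enough beats in the buffer
    # Mean inter-beat interval in seconds, converted to beats per minute.
    mean_ibi = (peaks[-1] - peaks[0]) / (len(peaks) - 1) / fs
    return 60.0 / mean_ibi

# Synthetic 10 s buffer (600 samples at an assumed 60 Hz) with a 1.2 Hz pulse.
fs = 60.0
buffer = [math.cos(2 * math.pi * 1.2 * n / fs) for n in range(600)]
hr = hr_from_peaks(buffer, fs)
```

On this noise-free test signal the estimate is approximately 72 bpm, the rate that corresponds to 1.2 Hz. Real VPG buffers would need more careful peak validation.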

2.5. Evaluation Methodology
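
The result tables report two metrics, RMSE [bpm] and sRate [%]. The sketch below shows one way such metrics could be computed from paired HR series. RMSE follows its standard definition. The definition of the success rate is an assumption made here (the share of estimates whose absolute error stays within a tolerance), and the tolerance value in the usage lines is arbitrary, not taken from the paper.

```python
import math

def rmse(estimates, reference):
    """Root-mean-square error [bpm] between paired HR series."""
    if len(estimates) != len(reference) or not estimates:
        raise ValueError("series must be non-empty and of equal length")
    squared = sum((e - r) ** 2 for e, r in zip(estimates, reference))
    return math.sqrt(squared / len(estimates))

def success_rate(estimates, reference, tol_bpm):
    """Share of estimates within tol_bpm of the reference, in percent.

    Assumption: "success" means |estimate - reference| <= tol_bpm.
    """
    if len(estimates) != len(reference) or not estimates:
        raise ValueError("series must be non-empty and of equal length")
    hits = sum(abs(e - r) <= tol_bpm for e, r in zip(estimates, reference))
    return 100.0 * hits / len(estimates)

# Toy data: one outlier dominates the RMSE but costs only one "miss".
est = [70.0, 72.0, 90.0, 74.0]
ref = [71.0, 72.0, 73.0, 74.0]
error = rmse(est, ref)
rate = success_rate(est, ref, tol_bpm=5.0)  # tolerance chosen arbitrarily
```

The toy data illustrate why the two metrics are complementary: RMSE is sensitive to the size of occasional large errors, while a success rate only counts how often the estimate is usable.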

2.6. Details of Experiments

- Algorithm No. 1 (PSD, Welch’s estimator): the window length was N = 1024 samples (which gives a frequency resolution of 3.52 bpm/bin and a temporal buffer window of length 21 s),

- Algorithm No. 2 (AR modelling): the order of the AR model was 128, the AR model frequency response was computed for an FFT length of 1024, and the window length was N = 600 samples (which gives a frequency resolution of 3.52 bpm/bin and a temporal buffer window of length 10 s),

- Algorithm No. 3 (time-based peak detection, depicted as TIME): the buffer length N = 600 samples (which gives a temporal buffer window of length 10 s).
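
The resolution and buffer figures in the list above follow from simple arithmetic. The sketch below assumes a sampling rate of 60 Hz, which is inferred here from the stated pairing of 600 samples with a 10 s buffer rather than quoted from the text. The helper names are hypothetical.

```python
def bin_width_bpm(fs_hz, n_fft):
    """Spacing of spectral bins, expressed in beats per minute."""
    return 60.0 * fs_hz / n_fft

def buffer_seconds(n_samples, fs_hz):
    """Time span covered by a buffer of n_samples."""
    return n_samples / fs_hz

FS = 60.0  # assumed frame rate, inferred from 600 samples <-> 10 s

# Both the PSD window and the AR frequency response use 1024 points.
psd_bin = bin_width_bpm(FS, 1024)
# The AR and TIME algorithms share the 600-sample buffer.
short_buf = buffer_seconds(600, FS)
```

With these inputs the bin width rounds to the 3.52 bpm/bin quoted for both spectral algorithms. A single 1024-sample segment at the assumed rate spans about 17 s, so the 21 s buffer quoted for Algorithm No. 1 presumably covers more than one Welch segment. That reading is an assumption, not something stated in this section.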

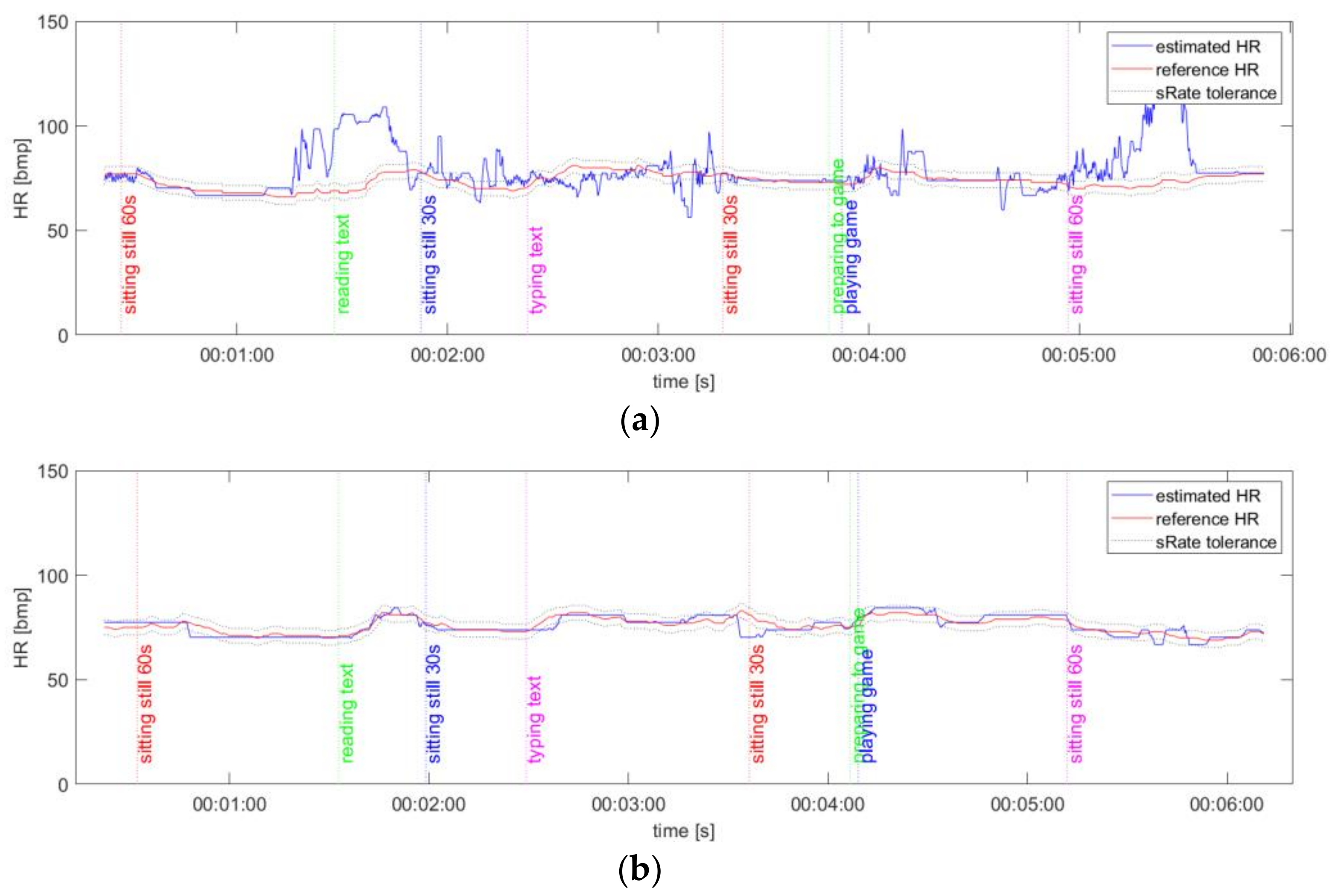

- Part 1: the participant sits still (60 s) without head movements and with minimal facial actions,

- Part 2: the participant reads text (short jokes) displayed on the computer screen in front of them and can express emotions,

- Part 3: the participant sits still (30 s),

- Part 4: the participant rewrites text from a sheet of paper located on the left or right side of the desk using the keyboard (which results in head movements),

- Part 5: the participant sits still (30 s),

- Part 6: after a short mental preparation, the participant plays the Arkanoid game using the mouse and the keyboard,

- Part 7: the participant sits still (60 s).

3. Results

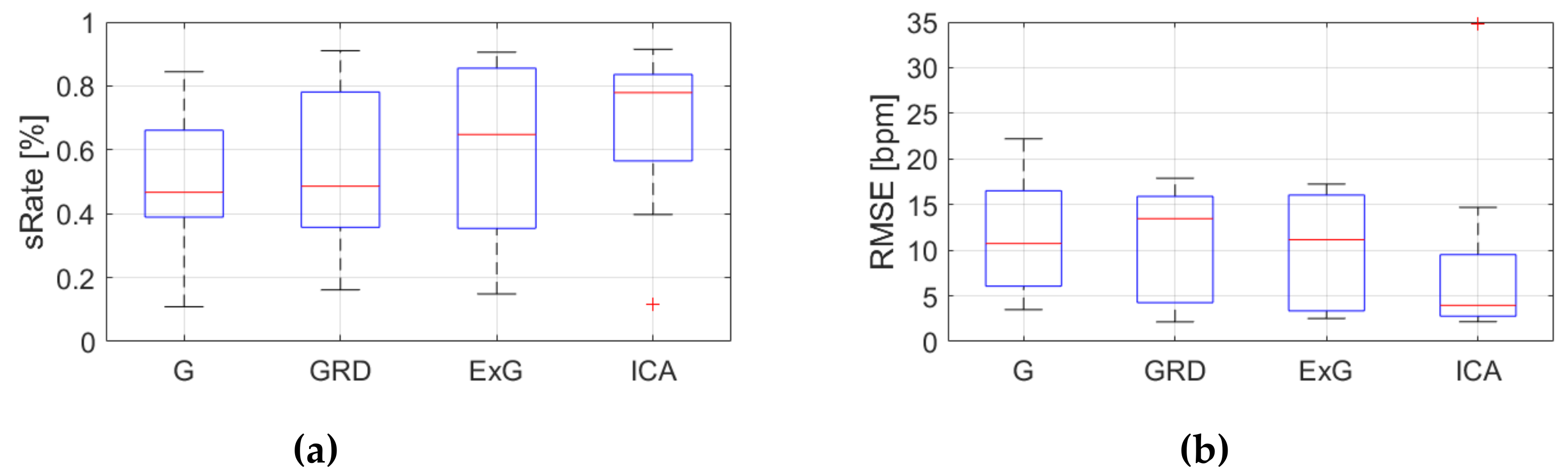

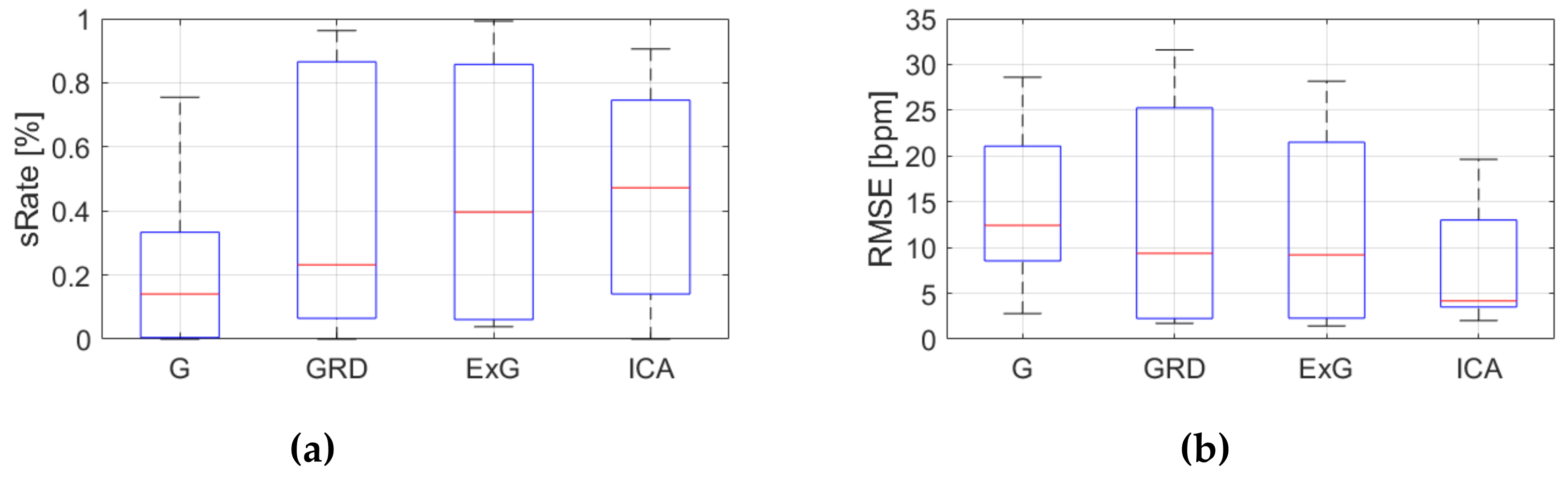

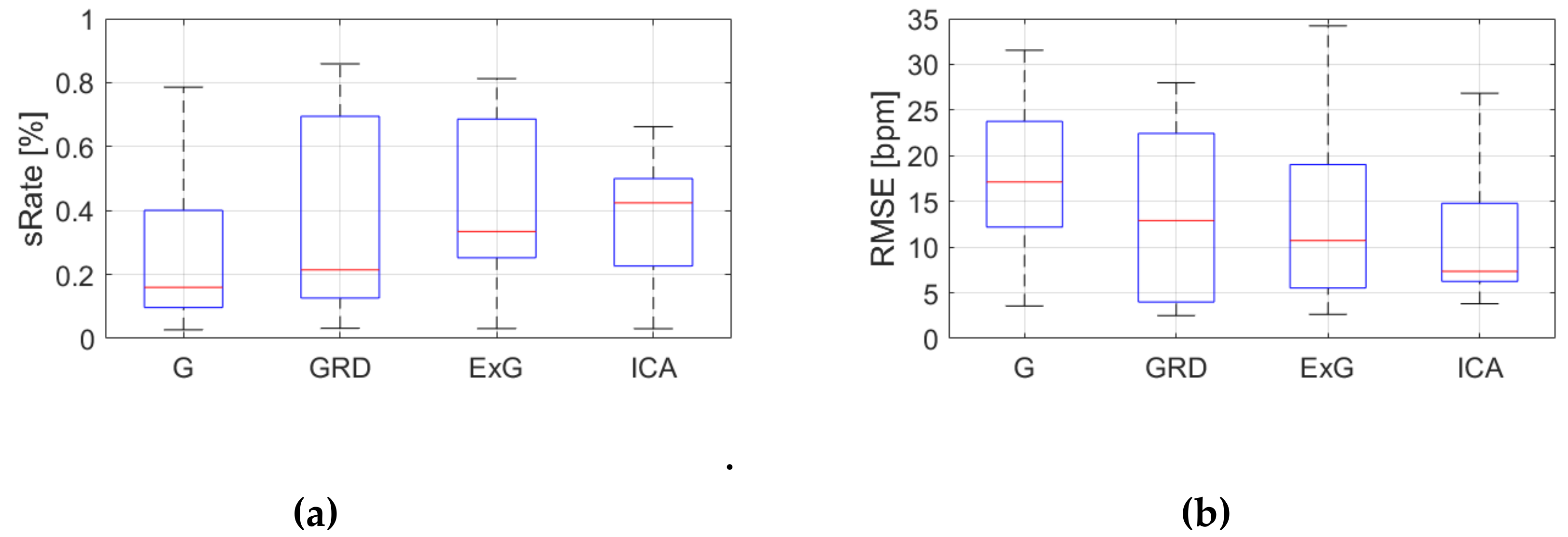

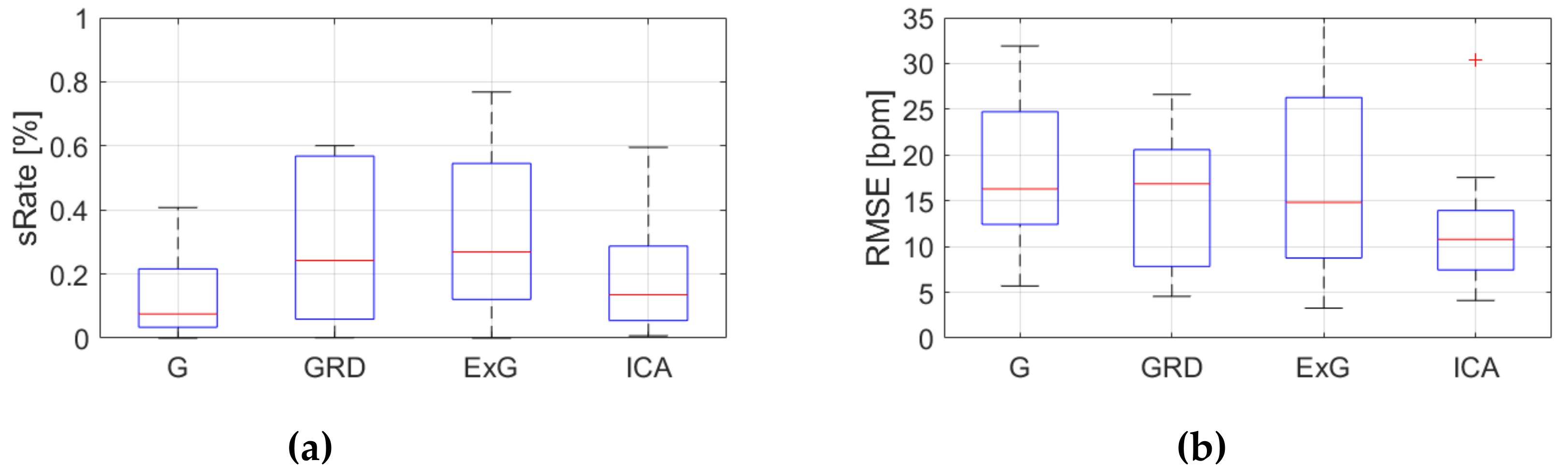

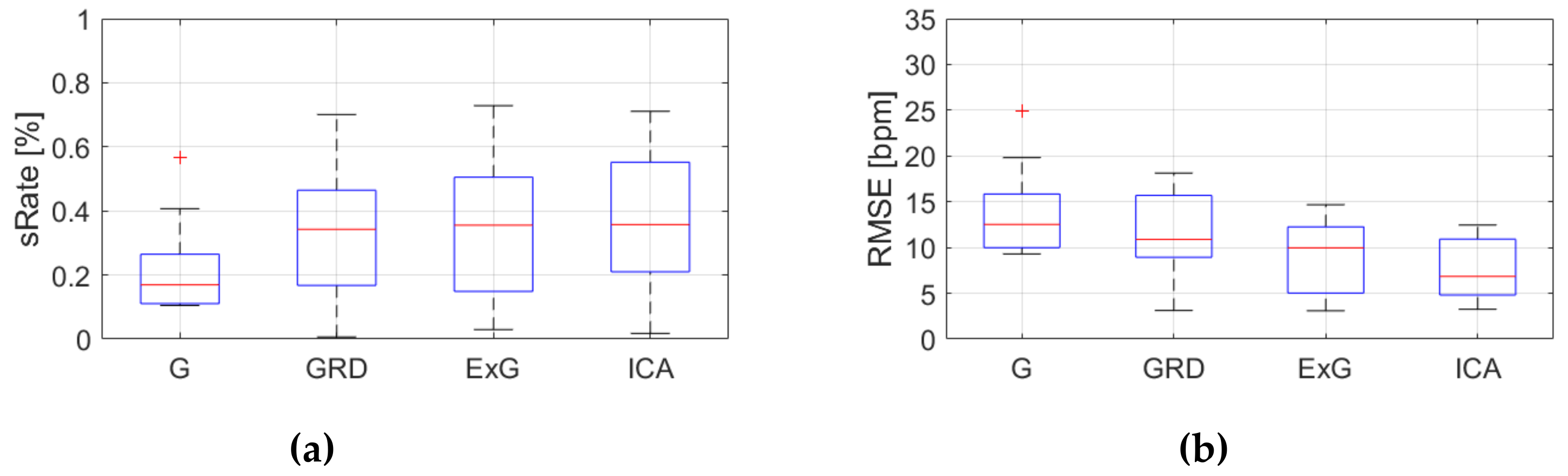

3.1. Comparison of the VPG Signal Extraction Methods (G, GRD, ICA and ExG)

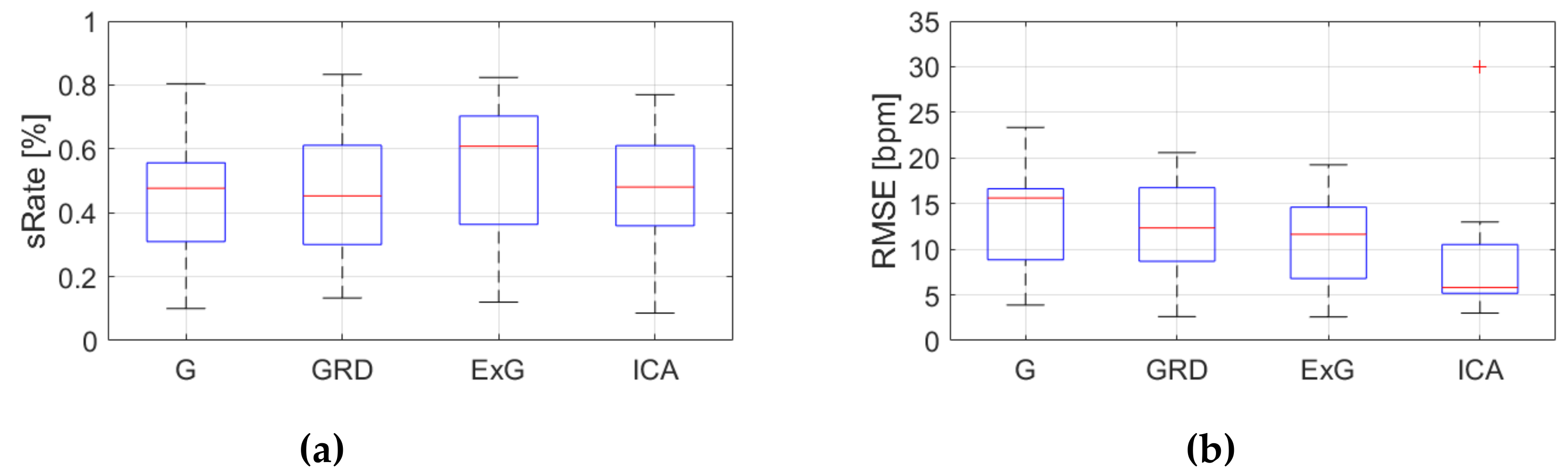

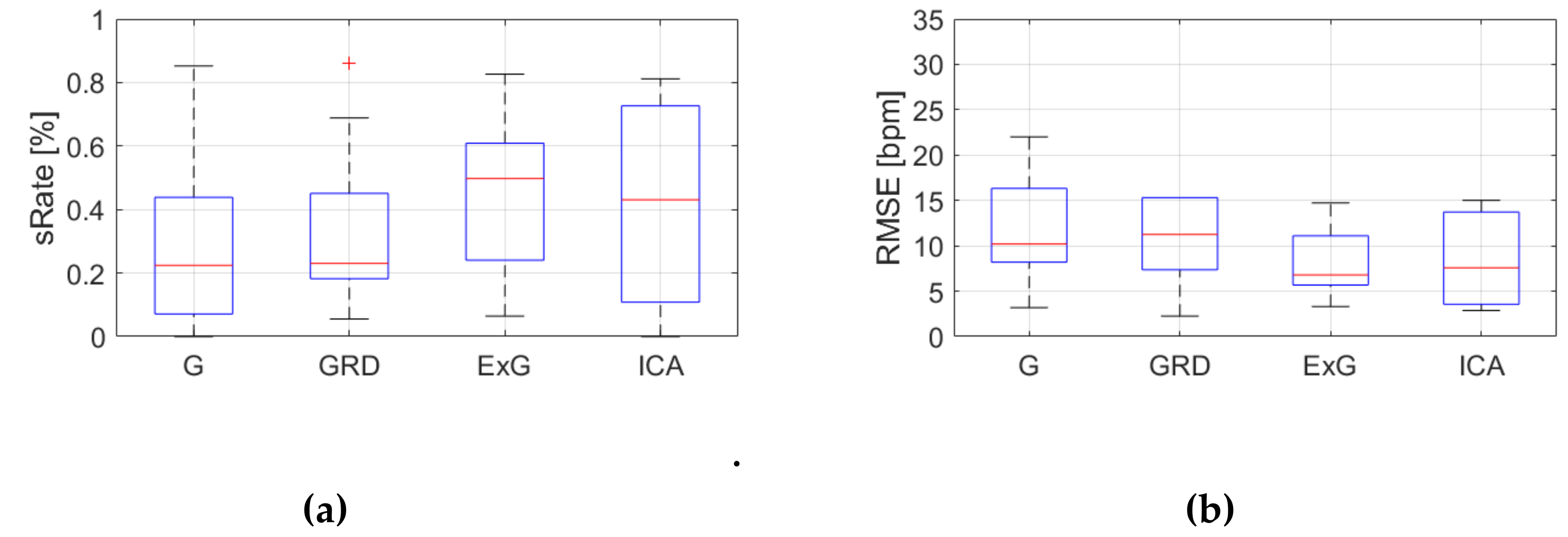

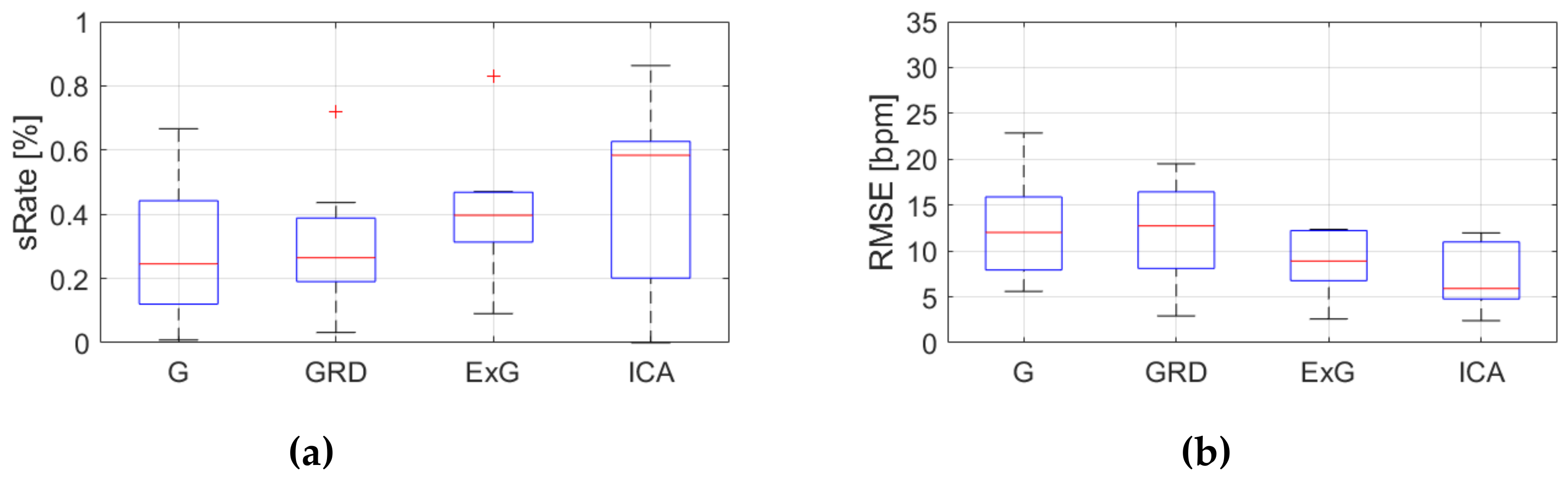

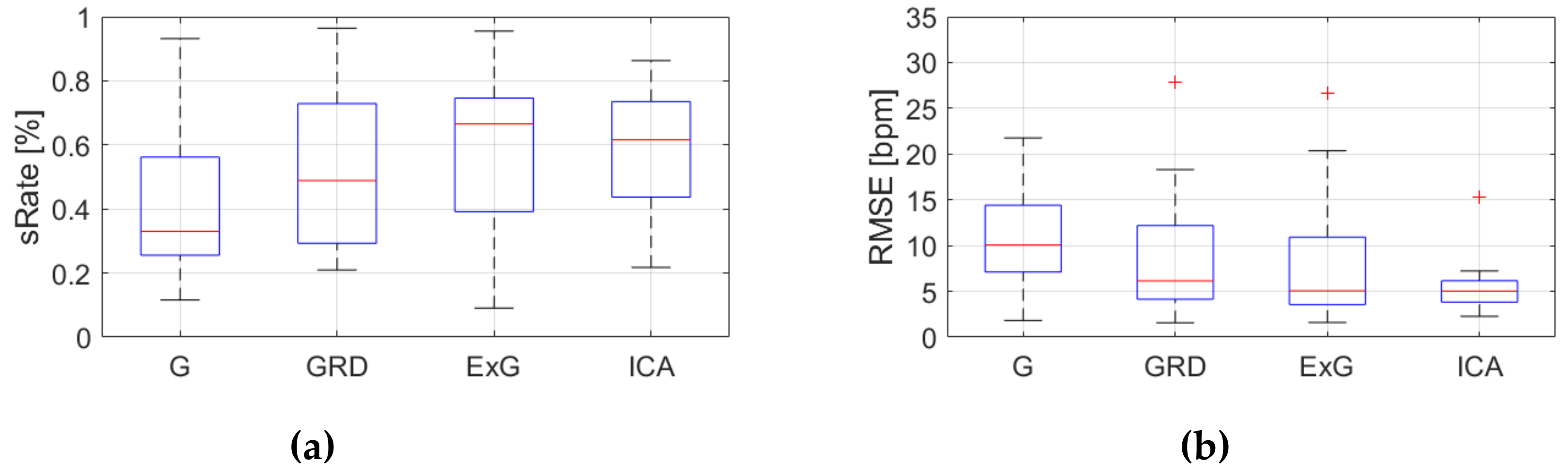

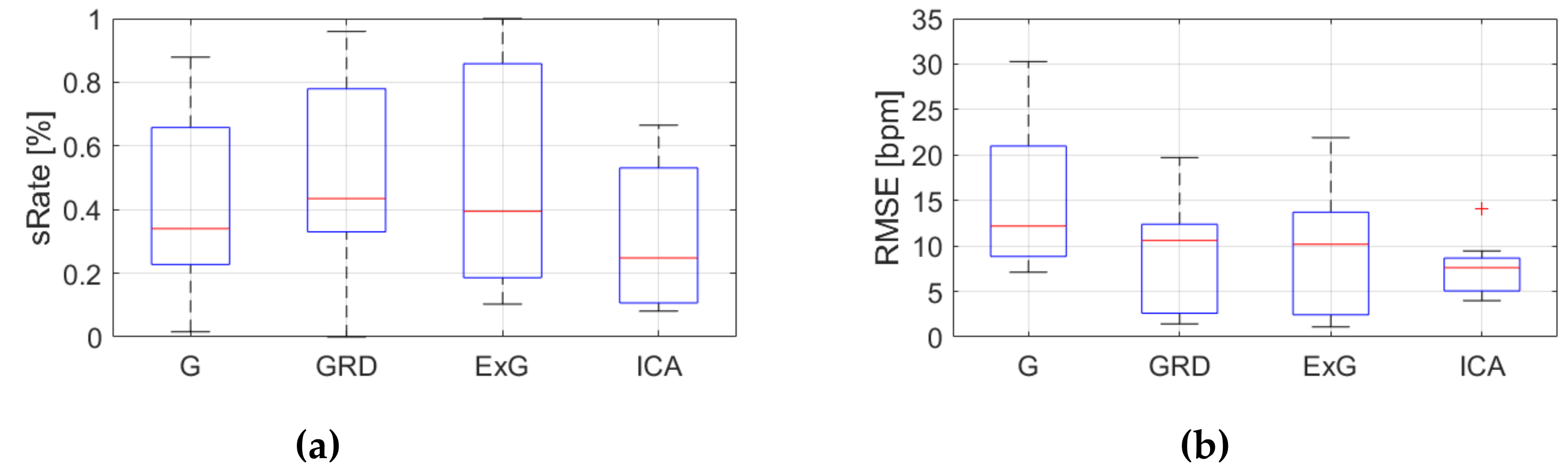

3.2. Comparison of the VPG Signal Extraction Methods for Various Activities

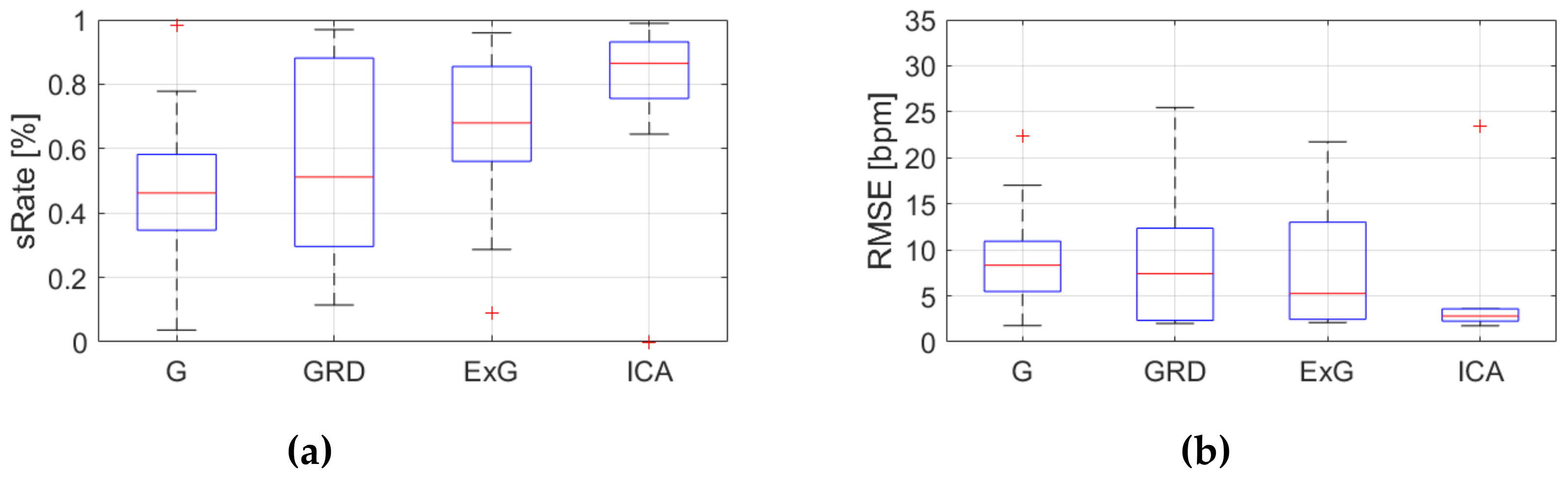

- part 1 (the participant sits still for a minimum of 60 seconds),

- part 2 (the participant reads text),

- part 4 (the participant rewrites text using the keyboard and the mouse),

- and part 6 (the participant plays a game).

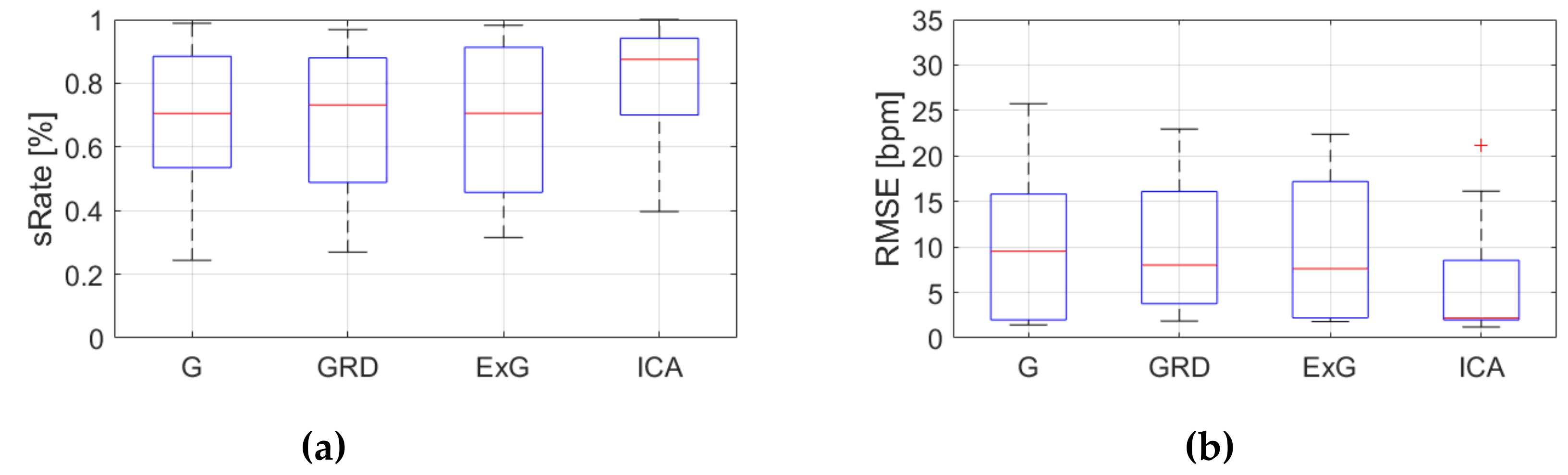

3.3. Comparison of the Different Algorithms and Activities

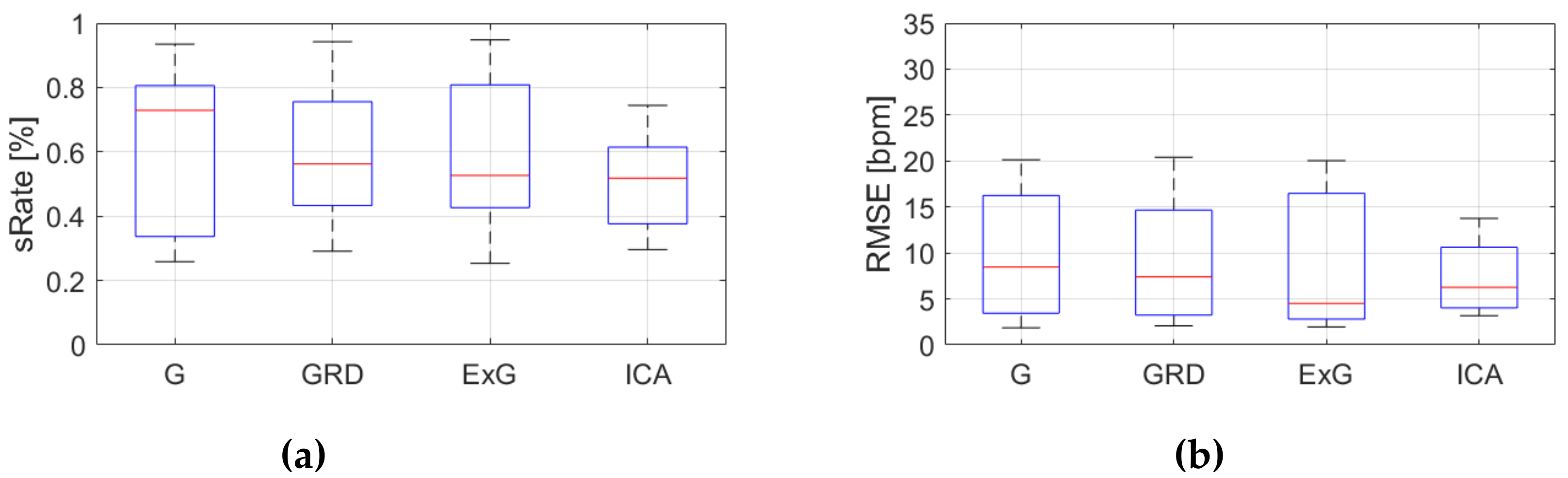

3.4. Analysis of the Impact of Average Lighting and User’s Movement on the Results of Pulse Detection

- algorithm No. 1 (PSD) with GRD and ExG,

- algorithm No. 2 (AR) with GRD,

- algorithm No. 3 (TIME) with all methods except ICA.

4. Discussion

- Further analysis of which external or internal factors influence the results of HR estimation (e.g., image parameters such as saturation and hue, type of user’s movements, ROI size),

- Evaluation of selected algorithms on a larger amount of data,

- Development of a metric to detect moments when the measurement is correct and reliable,

- Evaluating whether the use of depth and IR channels (provided by the Intel RealSense SR300 camera) as additional sources of pulse signal information increases accuracy.

5. Conclusions

Funding

Conflicts of Interest

Appendix A

| Video No. | Entire Video | Part 1 | Part 2 | Part 4 | Part 6 |

|---|---|---|---|---|---|

| video 1 | 05:33 | 01:00 | 00:25 | 00:32 | 01:01 |

| video 2 | 04:53 | 00:50 | 00:19 | 00:31 | 01:01 |

| video 3 | 05:30 | 00:55 | 00:24 | 00:55 | 01:04 |

| video 4 | 05:19 | 00:48 | 00:18 | 00:58 | 01:02 |

| video 5 | 05:48 | 01:00 | 00:26 | 01:07 | 01:02 |

| video 6 | 05:46 | 01:00 | 00:28 | 01:02 | 01:03 |

| video 7 | 05:56 | 00:57 | 00:32 | 01:07 | 01:01 |

| video 8 | 05:56 | 01:00 | 00:20 | 01:02 | 01:01 |

| video 9 | 05:44 | 01:01 | 00:34 | 01:12 | 00:20 |

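| Video No. | Average Illumination [lux], Entire Video | Average Illumination [lux], Part 1 | Average Illumination [lux], Part 2 | Average Illumination [lux], Part 4 | Average Illumination [lux], Part 6 | std of Accelerations [G], Entire Video | std of Accelerations [G], Part 1 | std of Accelerations [G], Part 2 | std of Accelerations [G], Part 4 | std of Accelerations [G], Part 6 |

|---|---|---|---|---|---|---|---|---|---|---|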

| video 1 | 72 | 78 | 77 | 62 | 69 | 0.134 | 0.021 | 0.010 | 0.011 | 0.006 |

| video 2 | 86 | 93 | 91 | 63 | 76 | 0.123 | 0.007 | 0.006 | 0.007 | 0.007 |

| video 3 | 40 | 44 | 43 | 39 | 35 | 0.112 | 0.011 | 0.010 | 0.009 | 0.008 |

| video 4 | 54 | 60 | 59 | 58 | 46 | 0.109 | 0.010 | 0.008 | 0.008 | 0.007 |

| video 5 | 950 | 1271 | 1048 | 806 | 816 | 0.118 | 0.007 | 0.008 | 0.008 | 0.008 |

| video 6 | 27 | 27 | 30 | 23 | 25 | 0.110 | 0.007 | 0.009 | 0.008 | 0.007 |

| video 7 | 152 | 146 | 140 | 113 | 170 | 0.125 | 0.013 | 0.018 | 0.011 | 0.008 |

| video 8 | 49 | 57 | 56 | 53 | 38 | 0.099 | 0.008 | 0.007 | 0.008 | 0.009 |

| video 9 | 106 | 108 | 107 | 103 | 99 | 0.102 | 0.009 | 0.011 | 0.017 | 0.011 |

| Video No. | RMSE [bpm], G | RMSE [bpm], GRD | RMSE [bpm], ExG | RMSE [bpm], ICA | sRate [%], G | sRate [%], GRD | sRate [%], ExG | sRate [%], ICA |

|---|---|---|---|---|---|---|---|---|

| video 1 | 10.7 | 4.5 | 3.2 | 7.8 | 48% | 76% | 85% | 71% |

| video 2 | 16.5 | 15.2 | 15.7 | 14.7 | 27% | 41% | 39% | 40% |

| video 3 | 16.5 | 13.9 | 12.9 | 2.8 | 47% | 49% | 57% | 83% |

| video 4 | 10.1 | 11.6 | 7.3 | 3.2 | 45% | 55% | 65% | 78% |

| video 5 | 3.9 | 2.2 | 2.5 | 2.8 | 84% | 91% | 91% | 87% |

| video 6 | 14.1 | 13.5 | 11.1 | 7.2 | 43% | 42% | 65% | 62% |

| video 7 | 3.5 | 3.7 | 3.4 | 2.2 | 80% | 84% | 86% | 91% |

| video 8 | 6.8 | 17.9 | 17.2 | 4.0 | 61% | 19% | 25% | 79% |

| video 9 | 22.2 | 36.7 | 35.8 | 34.8 | 11% | 16% | 15% | 12% |

| Video No. | RMSE [bpm], G | RMSE [bpm], GRD | RMSE [bpm], ExG | RMSE [bpm], ICA | sRate [%], G | sRate [%], GRD | sRate [%], ExG | sRate [%], ICA |

|---|---|---|---|---|---|---|---|---|

| video 1 | 9.5 | 4.6 | 3.5 | 6.3 | 45% | 62% | 74% | 55% |

| video 2 | 17.0 | 11.1 | 11.6 | 13.0 | 30% | 45% | 44% | 37% |

| video 3 | 15.6 | 15.3 | 12.2 | 5.0 | 48% | 45% | 61% | 68% |

| video 4 | 8.6 | 12.3 | 7.0 | 5.7 | 57% | 57% | 69% | 47% |

| video 5 | 3.9 | 2.6 | 2.6 | 3.0 | 80% | 83% | 82% | 77% |

| video 6 | 19.4 | 17.2 | 15.4 | 11.7 | 23% | 27% | 46% | 35% |

| video 7 | 5.4 | 7.9 | 6.7 | 5.8 | 76% | 73% | 71% | 48% |

| video 8 | 15.6 | 20.6 | 19.3 | 4.8 | 53% | 28% | 34% | 63% |

| video 9 | 23.3 | 35.3 | 35.5 | 30.0 | 10% | 13% | 12% | 9% |

| Video No. | RMSE [bpm], G | RMSE [bpm], GRD | RMSE [bpm], ExG | RMSE [bpm], ICA | sRate [%], G | sRate [%], GRD | sRate [%], ExG | sRate [%], ICA |

|---|---|---|---|---|---|---|---|---|

| video 1 | 16.8 | 4.3 | 2.8 | 7.4 | 49% | 70% | 83% | 51% |

| video 2 | 21.3 | 11.3 | 10.9 | 12.2 | 38% | 47% | 53% | 30% |

| video 3 | 20.7 | 18.4 | 17.7 | 6.3 | 28% | 25% | 36% | 43% |

| video 4 | 14.2 | 13.1 | 10.4 | 8.6 | 35% | 43% | 45% | 21% |

| video 5 | 16.7 | 2.5 | 2.6 | 5.5 | 51% | 88% | 89% | 52% |

| video 6 | 20.0 | 21.4 | 17.8 | 12.2 | 22% | 21% | 22% | 26% |

| video 7 | 9.1 | 9.6 | 10.9 | 7.8 | 50% | 47% | 48% | 18% |

| video 8 | 21.4 | 20.9 | 21.2 | 9.7 | 14% | 18% | 20% | 23% |

| video 9 | 16.2 | 34.0 | 34.5 | 29.1 | 18% | 13% | 11% | 14% |

| Comparison | RMSE p-Value, PSD | RMSE p-Value, AR | RMSE p-Value, TIME | sRate p-Value, PSD | sRate p-Value, AR | sRate p-Value, TIME |

|---|---|---|---|---|---|---|

| G vs GRD | 1.00 | 0.93 | 0.49 | 0.93 | 1.00 | 0.93 |

| G vs ExG | 0.73 | 0.49 | 0.34 | 0.39 | 0.55 | 0.49 |

| G vs ICA | 0.14 | 0.19 | 0.01 | 0.16 | 0.86 | 0.86 |

| GRD vs ExG | 0.67 | 0.67 | 0.80 | 0.67 | 0.60 | 0.73 |

| GRD vs ICA | 0.22 | 0.30 | 0.34 | 0.34 | 0.86 | 0.67 |

| ExG vs ICA | 0.30 | 0.39 | 0.39 | 0.55 | 0.60 | 0.34 |

| Comparison | RMSE p-Value, Part 1 | RMSE p-Value, Part 2 | RMSE p-Value, Part 4 | RMSE p-Value, Part 6 | sRate p-Value, Part 1 | sRate p-Value, Part 2 | sRate p-Value, Part 4 | sRate p-Value, Part 6 |

|---|---|---|---|---|---|---|---|---|

| G vs GRD | 0.80 | 0.49 | 1.00 | 0.93 | 1.00 | 0.44 | 0.60 | 0.80 |

| G vs ExG | 0.93 | 0.39 | 0.30 | 0.60 | 0.93 | 0.25 | 0.16 | 0.22 |

| G vs ICA | 0.44 | 0.09 | 0.34 | 0.05 | 0.30 | 0.16 | 0.45 | 0.04 |

| GRD vs ExG | 0.67 | 0.86 | 0.44 | 1.00 | 0.80 | 1.00 | 0.30 | 0.86 |

| GRD vs ICA | 0.22 | 0.73 | 0.39 | 0.22 | 0.26 | 0.75 | 0.67 | 0.26 |

| ExG vs ICA | 0.30 | 0.73 | 0.86 | 0.19 | 0.45 | 0.93 | 1.00 | 0.30 |

| Comparison | RMSE p-Value, Part 1 | RMSE p-Value, Part 2 | RMSE p-Value, Part 4 | RMSE p-Value, Part 6 | sRate p-Value, Part 1 | sRate p-Value, Part 2 | sRate p-Value, Part 4 | sRate p-Value, Part 6 |

|---|---|---|---|---|---|---|---|---|

| G vs GRD | 1.00 | 0.44 | 0.80 | 0.39 | 0.93 | 0.55 | 0.73 | 0.55 |

| G vs ExG | 1.00 | 0.34 | 0.39 | 0.22 | 1.00 | 0.19 | 0.22 | 0.26 |

| G vs ICA | 0.67 | 0.22 | 0.09 | 0.05 | 0.30 | 0.30 | 0.26 | 0.14 |

| GRD vs ExG | 0.93 | 0.73 | 0.30 | 0.67 | 0.86 | 0.49 | 0.14 | 0.60 |

| GRD vs ICA | 0.73 | 0.67 | 0.14 | 0.44 | 0.44 | 0.73 | 0.34 | 0.49 |

| ExG vs ICA | 1.00 | 0.80 | 0.26 | 0.73 | 0.34 | 0.73 | 0.60 | 1.00 |

| Comparison | RMSE p-Value, Part 1 | RMSE p-Value, Part 2 | RMSE p-Value, Part 4 | RMSE p-Value, Part 6 | sRate p-Value, Part 1 | sRate p-Value, Part 2 | sRate p-Value, Part 4 | sRate p-Value, Part 6 |

|---|---|---|---|---|---|---|---|---|

| G vs GRD | 0.30 | 0.49 | 0.67 | 0.49 | 0.49 | 0.14 | 0.22 | 0.55 |

| G vs ExG | 0.34 | 0.80 | 0.22 | 0.49 | 0.60 | 0.17 | 0.39 | 0.34 |

| G vs ICA | 0.01 | 0.08 | 0.03 | 0.06 | 0.44 | 0.67 | 0.22 | 0.67 |

| GRD vs ExG | 0.86 | 0.80 | 0.55 | 0.80 | 0.86 | 0.93 | 1.00 | 0.73 |

| GRD vs ICA | 0.49 | 0.44 | 0.22 | 0.60 | 0.22 | 0.49 | 0.80 | 0.93 |

| ExG vs ICA | 0.44 | 0.30 | 0.60 | 0.86 | 0.22 | 0.30 | 0.86 | 0.55 |

| Comparison | RMSE p-Value, G | RMSE p-Value, GRD | RMSE p-Value, ExG | RMSE p-Value, ICA | sRate p-Value, G | sRate p-Value, GRD | sRate p-Value, ExG | sRate p-Value, ICA |

|---|---|---|---|---|---|---|---|---|

| PSD vs AR | 0.67 | 0.80 | 0.93 | 0.44 | 0.93 | 0.80 | 0.73 | 0.05 |

| PSD vs TIME | 0.05 | 0.73 | 0.67 | 0.11 | 0.26 | 0.44 | 0.26 | 0.01 |

| AR vs TIME | 0.16 | 0.80 | 0.86 | 0.26 | 0.22 | 0.49 | 0.55 | 0.06 |

| Algorithm | Correlation Value, G | Correlation Value, GRD | Correlation Value, ExG | Correlation Value, ICA | p-Value, G | p-Value, GRD | p-Value, ExG | p-Value, ICA |

|---|---|---|---|---|---|---|---|---|

| PSD | 0.57 | 0.57 | 0.45 | 0.27 | 0.11 | 0.11 | 0.23 | 0.49 |

| AR | 0.56 | 0.61 | 0.46 | 0.47 | 0.11 | 0.08 | 0.22 | 0.20 |

| TIME | 0.50 | 0.71 | 0.62 | 0.51 | 0.17 | 0.03 | 0.08 | 0.16 |

| Algorithm | Correlation Value, G | Correlation Value, GRD | Correlation Value, ExG | Correlation Value, ICA | p-Value, G | p-Value, GRD | p-Value, ExG | p-Value, ICA |

|---|---|---|---|---|---|---|---|---|

| PSD | 0.27 | 0.75 | 0.70 | 0.24 | 0.49 | 0.02 | 0.04 | 0.53 |

| AR | 0.32 | 0.68 | 0.66 | 0.22 | 0.41 | 0.04 | 0.06 | 0.56 |

| TIME | 0.87 | 0.72 | 0.78 | 0.53 | 0.00 | 0.03 | 0.01 | 0.14 |

| Algorithm | t0 [s] | t1 [s] | t2 = t0 − t1 [s] |

|---|---|---|---|

| No.1 (PSD) | 13.4 | 3 | 10.4 |

| No.2 (AR) | 6.6 | 3 | 3.6 |

| No.3 (TIME) | 5.1 | 3 | 2.1 |
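
The delay table above can be turned into a simple time-axis correction. The sketch below computes t2 = t0 − t1 for each algorithm and shifts the estimate timestamps by that amount before comparison with the reference. It assumes that t2 is the net lag of the estimate relative to the reference data; the meaning of t0 and t1 is not spelled out in this section, and the names `net_delay` and `align` are hypothetical.

```python
# Delays from the table above, in seconds: (t0, t1).
DELAYS = {"PSD": (13.4, 3.0), "AR": (6.6, 3.0), "TIME": (5.1, 3.0)}

def net_delay(algorithm):
    """Return t2 = t0 - t1 for the given algorithm."""
    t0, t1 = DELAYS[algorithm]
    return t0 - t1

def align(estimates, algorithm):
    """Shift (timestamp, hr) estimates earlier by the net delay t2.

    Assumption: t2 is the lag of the estimate relative to the reference,
    so subtracting it puts both series on a common time axis.
    """
    t2 = net_delay(algorithm)
    return [(t - t2, hr) for t, hr in estimates]

# Two PSD estimates produced at 20 s and 21 s of video time.
aligned = align([(20.0, 71.0), (21.0, 72.0)], "PSD")
```

After the shift, each estimate is compared with the reference value recorded t2 seconds earlier, so a slowly reacting algorithm is not penalized for its buffering delay alone.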

| Video No. | Room Settings | Participant’s Details | Camera Parameters |

|---|---|---|---|

| 1 | room 1: artificial ceiling fluorescent light + natural light (dusk, medium lighting) from one window on the left side + light from one computer screen | participant 1: male, ~34 years old | camera-to-face distance ~50 cm, gain = 128, white balance off |

| 2 | room 1: artificial ceiling fluorescent light + natural light (dusk, medium lighting) from one window on the left side + light from one computer screen | participant 2: male, ~22 years old | camera-to-face distance ~50 cm, gain = 128, white balance off |

| 3 | room 2: daylight (cloudy, poor lighting): one roof window on the left and a second window in the back on the right + fluorescent lamps in the back (2 m) + ceiling fluorescent lamps + right-side table lamp + light from two computer screens | participant 3: male, ~44 years old | camera-to-face distance ~50 cm, gain = 128, white balance off |

| 4 | room 2: daylight (cloudy, medium lighting): one roof window on the left and a second window in the back on the right + fluorescent lamps in the back (2 m) + ceiling fluorescent lamps + light from two computer screens | participant 3: male, ~44 years old | camera-to-face distance ~50 cm, gain = 128, white balance on |

| 5 | room 3: daylight (sunny, strong lighting): one window in the front + light from one computer screen | participant 3: male, ~44 years old | camera-to-face distance ~60 cm (computer screen slightly lower, so the user has to gaze slightly downwards), gain = 100, white balance on |

| 6 | room 4: nighttime, artificial light only (ceiling lamps, table lamps, LED curtain lamps + light from one computer screen) | participant 3: male, ~44 years old | camera-to-face distance ~50 cm (computer screen slightly lower, so the user has to gaze slightly downwards), gain = 128, white balance on |

| 7 | room 3: daylight (cloudy, medium lighting): one window in the front + light from one computer screen | participant 4: female, ~42 years old | camera-to-face distance ~60 cm (computer screen slightly lower, so the user has to gaze slightly downwards), gain = 128, white balance on |

| 8 | room 2: daylight (cloudy, poor lighting): one roof window on the left and a second window in the back on the right + fluorescent lamps in the back (2 m) + light from two computer screens | participant 3: male, ~44 years old | camera-to-face distance ~50 cm, gain = 100, white balance off |

| 9 | room 5: artificial ceiling fluorescent light + natural light (dusk, medium lighting) from one window on the right side + right-side bulb lamp + light from one computer screen | participant 5: male, ~23 years old | camera-to-face distance ~60 cm, gain = 128, white balance on |

| Algorithm | RMSE [bpm], G | RMSE [bpm], GRD | RMSE [bpm], ExG | RMSE [bpm], ICA | sRate [%], G | sRate [%], GRD | sRate [%], ExG | sRate [%], ICA |

|---|---|---|---|---|---|---|---|---|

| PSD | 10.7 | 13.5 | 11.1 | 4.0 | 47% | 49% | 65% | 78% |

| AR | 15.6 | 12.3 | 11.6 | 5.8 | 48% | 45% | 61% | 48% |

| TIME | 16.8 | 13.1 | 10.9 | 8.6 | 35% | 43% | 45% | 26% |

| average | 14.4 | 13.0 | 11.2 | 6.1 | 43% | 46% | 57% | 51% |
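
The PSD, AR and TIME rows of the summary table above coincide with the column-wise medians over the nine videos in the three per-video RMSE/sRate tables of Appendix A, and the "average" row with the mean of those three rows. This is an observation drawn from the numbers, not a statement in the text, and the assignment of the three per-video tables to PSD, AR and TIME is inferred from that match. The sketch below checks it for the RMSE of the G representation.

```python
from statistics import fmean, median

# RMSE [bpm] for the G representation, videos 1-9, copied from the three
# per-video tables (their mapping to PSD/AR/TIME is inferred, see above).
rmse_g = {
    "PSD": [10.7, 16.5, 16.5, 10.1, 3.9, 14.1, 3.5, 6.8, 22.2],
    "AR": [9.5, 17.0, 15.6, 8.6, 3.9, 19.4, 5.4, 15.6, 23.3],
    "TIME": [16.8, 21.3, 20.7, 14.2, 16.7, 20.0, 9.1, 21.4, 16.2],
}

# The median is robust to single failed recordings such as video 9.
medians = {name: median(values) for name, values in rmse_g.items()}

# Mean of the three per-algorithm medians, rounded as in the table.
average = round(fmean(medians.values()), 1)
```

The resulting medians (10.7, 15.6 and 16.8 bpm) and their mean (14.4 bpm) reproduce the G column of the summary table.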

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Przybyło, J. Continuous Distant Measurement of the User’s Heart Rate in Human-Computer Interaction Applications. Sensors 2019, 19, 4205. https://doi.org/10.3390/s19194205
