Fast 3D Surface Measurement with Wrapped Phase and Pseudorandom Image

Abstract

1. Introduction

2. New Methodology

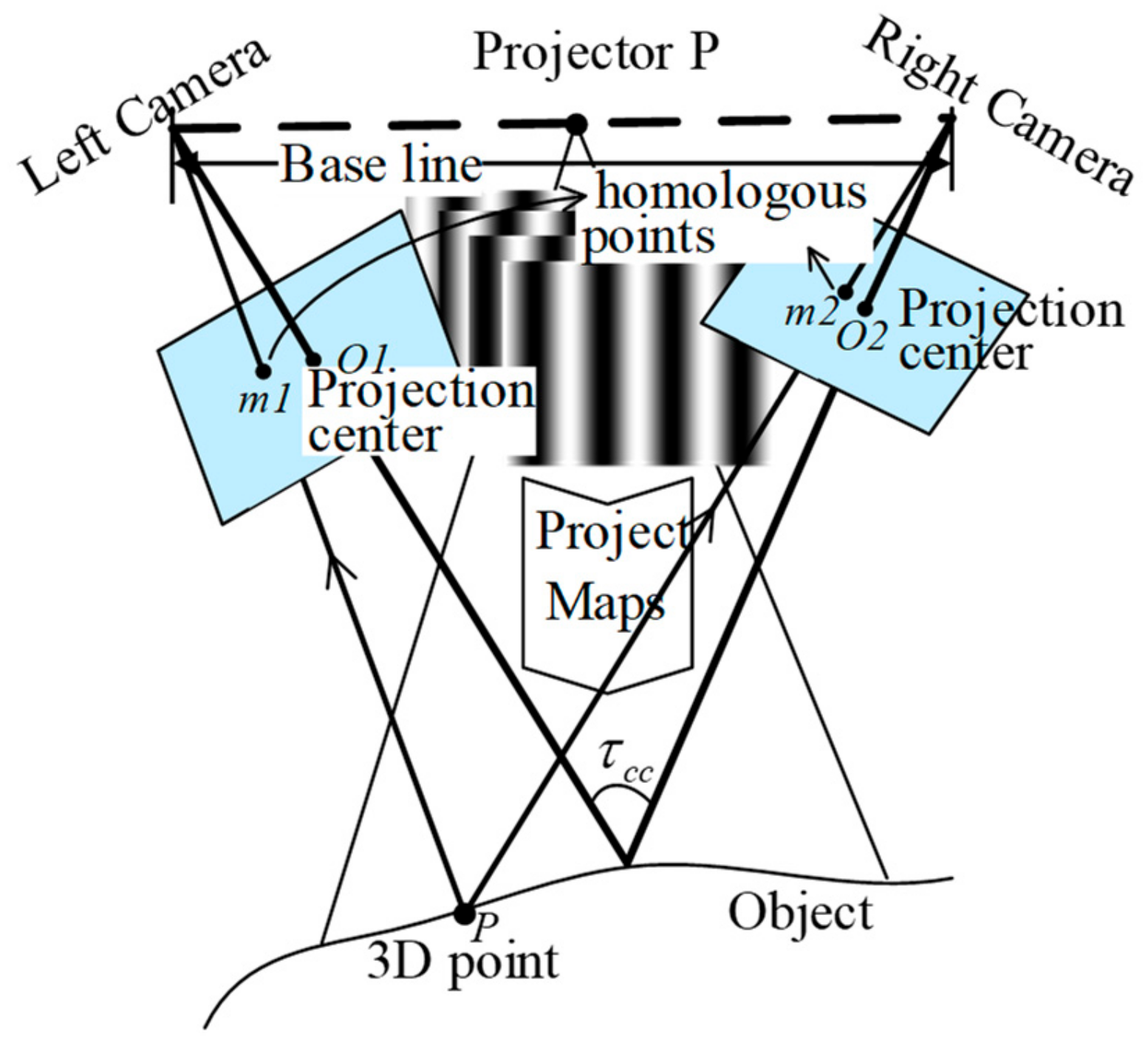

2.1. Fringe Projection Profilometry of Novel Active Stereovision

2.2. Steps to Obtain Candidate 3D Points

2.3. 3D Reconstruction Algorithm

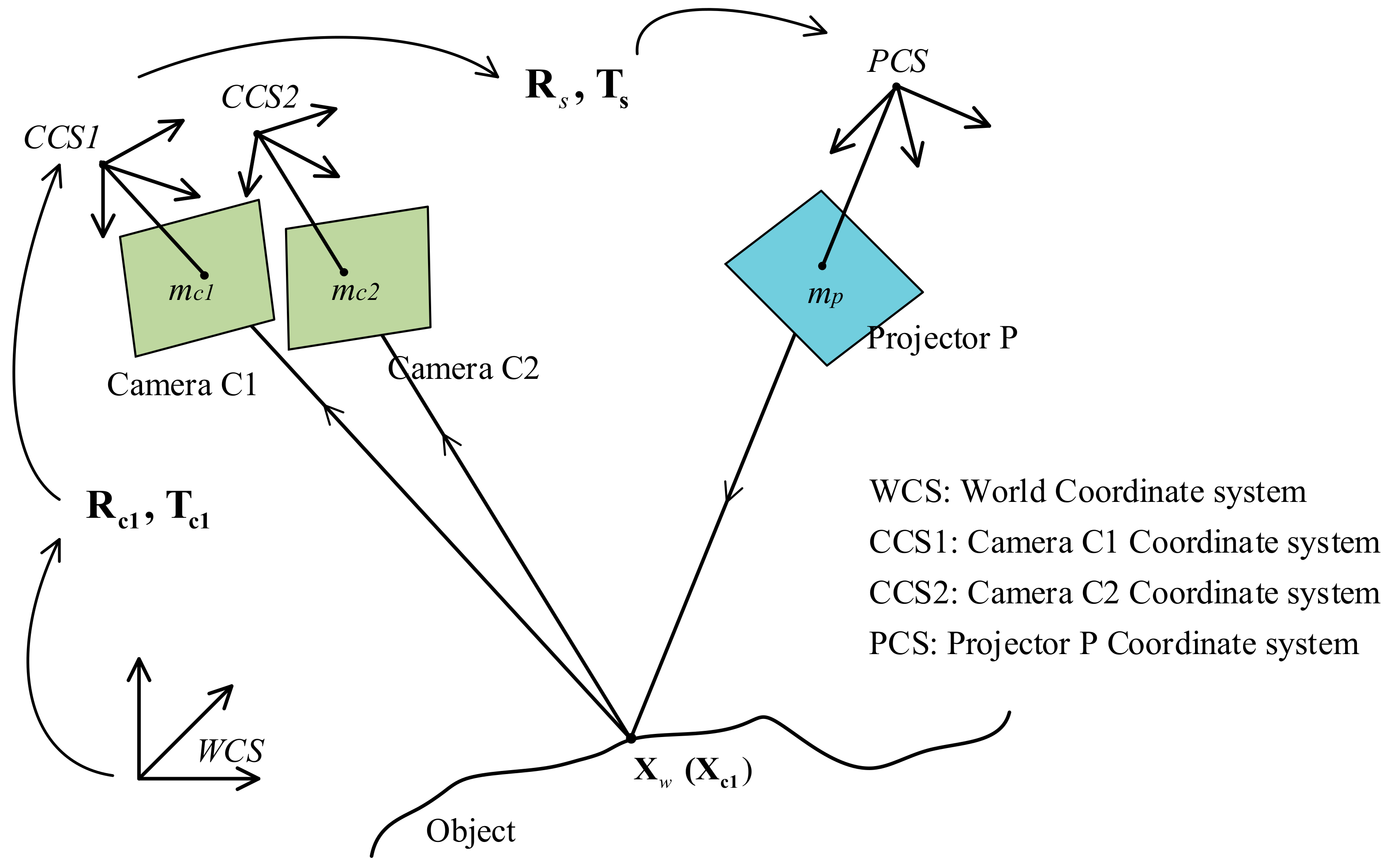

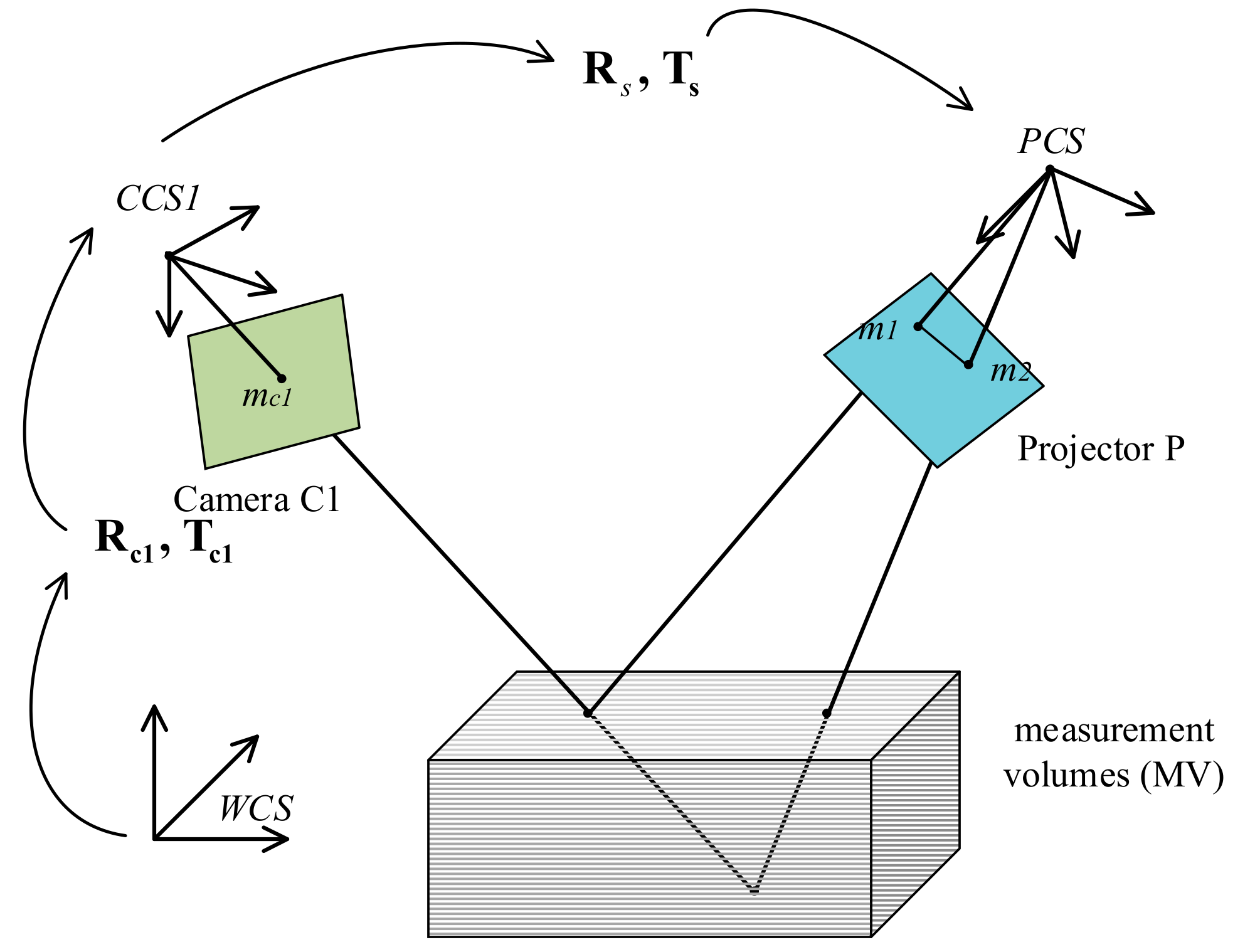

- Calibrate the system. Arrange the 3D sensing device as shown in Figure 2. Camera C1 and projector P form the active stereovision pair, and camera C2 is added to identify the true 3D point among the candidate 3D points. After calibrating the active stereovision pair and camera C2 with the bundle adjustment (BA) strategy [14], use Equation (8) to build the coefficients of the polynomials that map phase value to 3D coordinates.

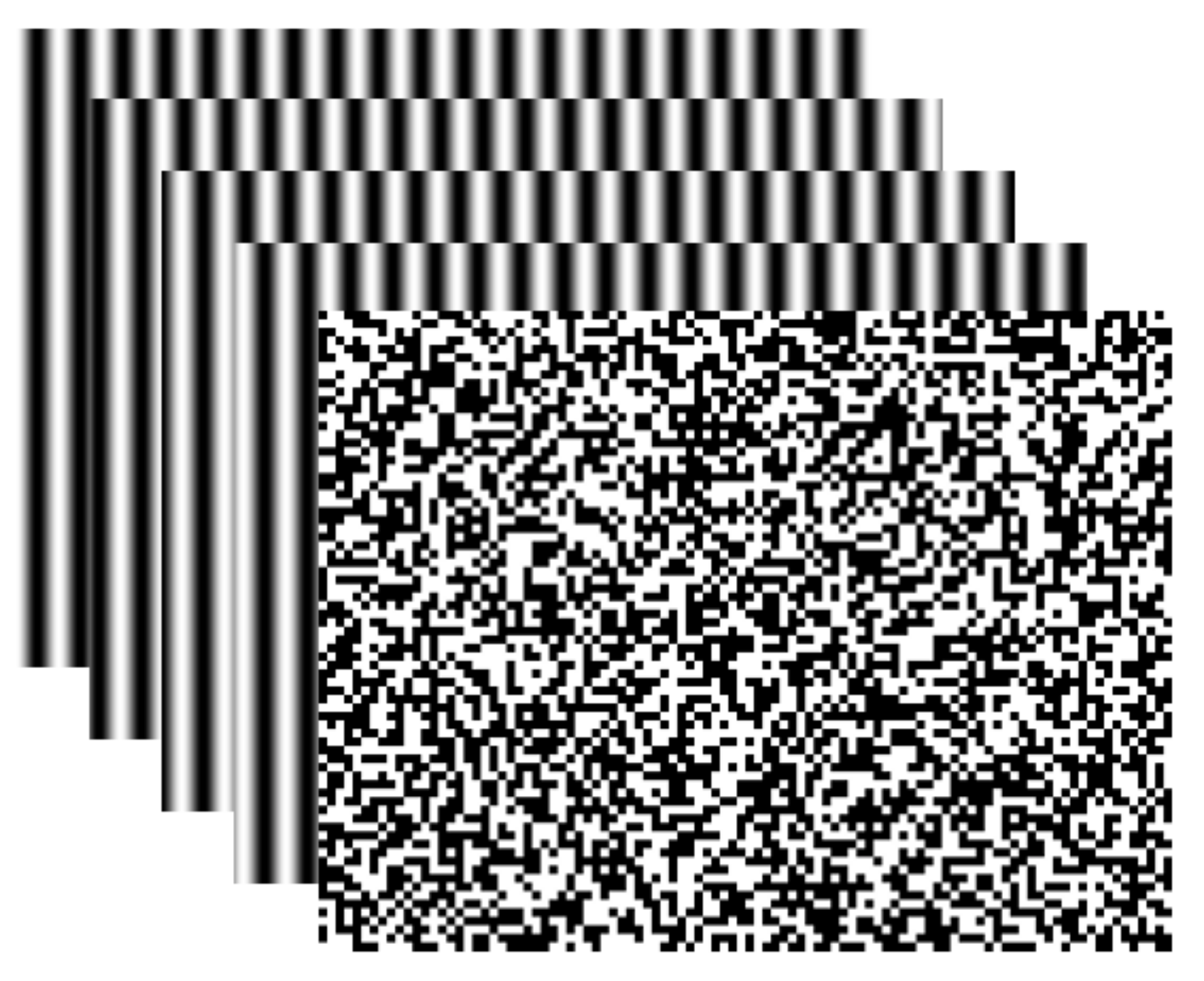

- Determine the projection and acquisition image sequence. The projector casts the four-step phase-shifted (FSPS) patterns and a pseudorandom pattern onto the surface of the measured object. Camera C1 acquires the phase-shifted images reflected off the object, and cameras C1 and C2 simultaneously capture the pseudorandom image. The five projected patterns are computer generated, as shown in Figure 5.

- Estimate several candidate 3D points. Apply the phase-shifting technique in Equation (4) to the FSPS images to obtain the wrapped phase map of camera C1. For each valid pixel of camera C1, use the relationship between phase value and pixel position (Equations (5) and (6)) to enumerate every possible unwrapped phase, then substitute each one into the LUT of Equation (8) to reconstruct several candidate 3D points.

- Select the true 3D points. Project all candidate 3D points onto the imaging plane of camera C2 to obtain candidate corresponding point pairs. For each point pair, evaluate the correlation between the corresponding regions with the NCC method (Equation (7)), and select the pair with the maximum NCC value, provided that the correlation is significant. The 3D point of that pair is the true one.
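The phase and selection steps above can be illustrated with a minimal Python sketch. It is not the authors' implementation: Equations (4) to (8) are not reproduced in this section, so the sketch assumes the common four-step convention with phase shifts of 0, π/2, π, and 3π/2, a standard zero-mean NCC, and a hypothetical significance threshold of 0.8. The function names (`wrapped_phase`, `candidate_phases`, `ncc`, `select_candidate`) and the parameter `n_periods` are illustrative only. The polynomial mapping from phase to 3D coordinates and the projection into camera C2 depend on the calibration and are left out.

```python
import numpy as np


def wrapped_phase(i0, i1, i2, i3):
    """Wrapped phase from four phase-shifted images.

    Assumes I_k = A + B*cos(phi + k*pi/2), k = 0..3 (an assumed convention),
    so that I3 - I1 = 2B*sin(phi) and I0 - I2 = 2B*cos(phi).
    The result lies in (-pi, pi].
    """
    return np.arctan2(i3 - i1, i0 - i2)


def candidate_phases(phi_wrapped, n_periods):
    """Every possible unwrapped phase for one pixel: phi + 2*pi*k, k = 0..n_periods-1.

    n_periods (number of fringe periods across the pattern) is an assumed input.
    """
    k = np.arange(n_periods)
    return phi_wrapped + 2.0 * np.pi * k


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equally sized patches."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    # A constant patch has no texture to correlate; report zero correlation.
    return 0.0 if denom == 0.0 else float((a * b).sum() / denom)


def select_candidate(patch_c1, patches_c2, threshold=0.8):
    """Pick the candidate whose C2 patch correlates best with the C1 patch.

    Returns (index, scores). The index is None when even the best score is
    below the threshold; the threshold value is an assumption, since the text
    only asks for a "significant" correlation.
    """
    scores = np.array([ncc(patch_c1, p) for p in patches_c2])
    best = int(np.argmax(scores))
    return (best if scores[best] >= threshold else None), scores
```

In use, each entry of `candidate_phases` would be converted to a candidate 3D point with the calibrated polynomials, projected into camera C2, and the pseudorandom-image patch around each projection passed to `select_candidate` together with the patch around the C1 pixel.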

3. Experiments and Results

3.1. Precision Test

3.2. Using the Method for Human Body Measurement

4. Summary

Author Contributions

Funding

Conflicts of Interest

References

- Gorthi, S.S.; Rastogi, P. Fringe Projection Techniques: Whither We Are? Opt. Laser. Eng. J. 2010, 48, 133–140.

- Peng, X.; Liu, X.; Yin, Y.; Li, A. Optical Measurement Network for Large-Scale and Shell-Like Objects. Opt. Lett. J. 2011, 36, 157–159.

- Rianmora, S.; Koomsap, P. The Application of Fringe Projection Concept for Selective Data Acquisition. In Proceedings of the International Conference on Computer and Automation Engineering, Bangkok, Thailand, 8–10 March 2009; pp. 131–134.

- Sitnik, R.; Mączkowski, G.; Krzesłowski, J. Integrated Shape, Color, and Reflectivity Measurement Method for 3D Digitization of Cultural Heritage Objects. In Proceedings of the SPIE-The International Society for Optical Engineering, San Jose, CA, USA, 17–21 January 2010; p. 75260Q.

- Chen, X.; Sun, J.; Xi, J.; Xiong, Y.; Qiu, J.; Gu, X. Development of a 3D Optical Measurement System Based on Fringe Projection for Facial Prosthesis. In Proceedings of the Instrumentation and Measurement Technology Conference (I2MTC), Binjiang, Hangzhou, China, 10–12 May 2011; pp. 1–5.

- Wu, D.; Xie, H.; Li, C.; Rong, W. Application of the Digital Phase-Shifting Method in 3D Deformation Measurement at Micro-Scale by SEM. Meas. Sci. Technol. J. 2014, 25, 8.

- Zhang, Q.; Su, X.; Xiang, L.; Sun, X. 3-D Shape Measurement Based on Complementary Gray-Code Light. Opt. Laser. Eng. J. 2012, 50, 574–579.

- Zhang, C.W.; Zhao, H.; Gu, F.F.; Ma, Y. Phase Unwrapping Algorithm Based on Multi-Frequency Fringe Projection and Fringe Background for Fringe Projection Profilometry. Meas. Sci. Technol. J. 2015, 26, 10.

- Huang, L.; Asundi, A.K. Phase Invalidity Identification Framework with the Temporal Phase Unwrapping Method. Meas. Sci. Technol. J. 2011, 22, 5.

- Ishiyama, R.; Sakamoto, S.; Tajima, J.; Okatani, T.; Deguchi, K. Absolute Phase Measurements Using Geometric Constraints between Multiple Cameras and Projectors. Appl. Opt. J. 2007, 46, 3528–3538.

- Bräuer-Burchardt, C.; Kühmstedt, P.; Notni, G. Phase Unwrapping Using Geometric Constraints for High-Speed Fringe Projection Based 3D Measurements. In Proceedings of the SPIE-The International Society for Optical Engineering, Munich, Germany, 13–16 May 2013; pp. 878906–878911.

- Guan, Y.; Yin, Y.; Li, A.; Liu, X.; Peng, X. Dynamic 3D Imaging Based on Acousto-Optic Heterodyne Fringe Interferometry. Opt. Lett. J. 2014, 39, 3678–3681.

- Zhong, K.; Li, Z.; Shi, Y.; Wang, C.; Lei, Y. Fast Phase Measurement Profilometry for Arbitrary Shape Objects without Phase Unwrapping. Opt. Laser. Eng. J. 2013, 51, 1213–1222.

- Pribanic, T.; Obradovic, N.; Salvi, J. Stereo Computation Combining Structured Light and Passive Stereo Matching. Opt. Commun. J. 2012, 285, 1017–1022.

- Lohry, W.; Chen, V.; Zhang, S. Absolute Three-Dimensional Shape Measurement Using Coded Fringe Patterns without Phase Unwrapping or Projector Calibration. Opt. Express J. 2014, 22, 1287–1301.

- Lohry, W.; Zhang, S. High-Speed Absolute Three-Dimensional Shape Measurement Using Three Binary Dithered Patterns. Opt. Express J. 2014, 22, 26752–26762.

- Bräuer-Burchardt, C.; Möller, M.; Munkelt, C.; Heinze, M.; Kühmstedt, P.; Notni, G. On the Accuracy of Point Correspondence Methods in Three-Dimensional Measurement Systems Using Fringe Projection. OPTICE J. 2013, 52, 063601.

- Bräuer-Burchardt, C.; Munkelt, C.; Heinze, M.; Kühmstedt, P.; Notni, G. Using Geometric Constraints to Solve the Point Correspondence Problem in Fringe Projection Based 3D Measuring Systems. In Proceedings of the Image Analysis and Processing-ICIAP, Ravenna, Italy, 14–16 September 2011; pp. 265–274.

- Yin, Y.; Peng, X.; Li, A.; Liu, X.; Gao, B.Z. Calibration of Fringe Projection Profilometry with Bundle Adjustment Strategy. Opt. Lett. J. 2012, 37, 542–544.

| No. | Nominal Value of Radius (mm) | Experimental Error: Mean | Experimental Error: Std. | Experimental Error: Max. | Experimental Error: Min. |
|---|---|---|---|---|---|
| 1 | 25.3897 | 0.0103 | 0.0053 | 0.0174 | 0.0007 |
| 2 | 25.3921 | 0.0048 | 0.0045 | 0.0133 | −0.0012 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, X.; He, D.; Hu, H.; Liu, L. Fast 3D Surface Measurement with Wrapped Phase and Pseudorandom Image. Sensors 2019, 19, 4185. https://doi.org/10.3390/s19194185