Visual Measurement of Water Level under Complex Illumination Conditions

Abstract

1. Introduction

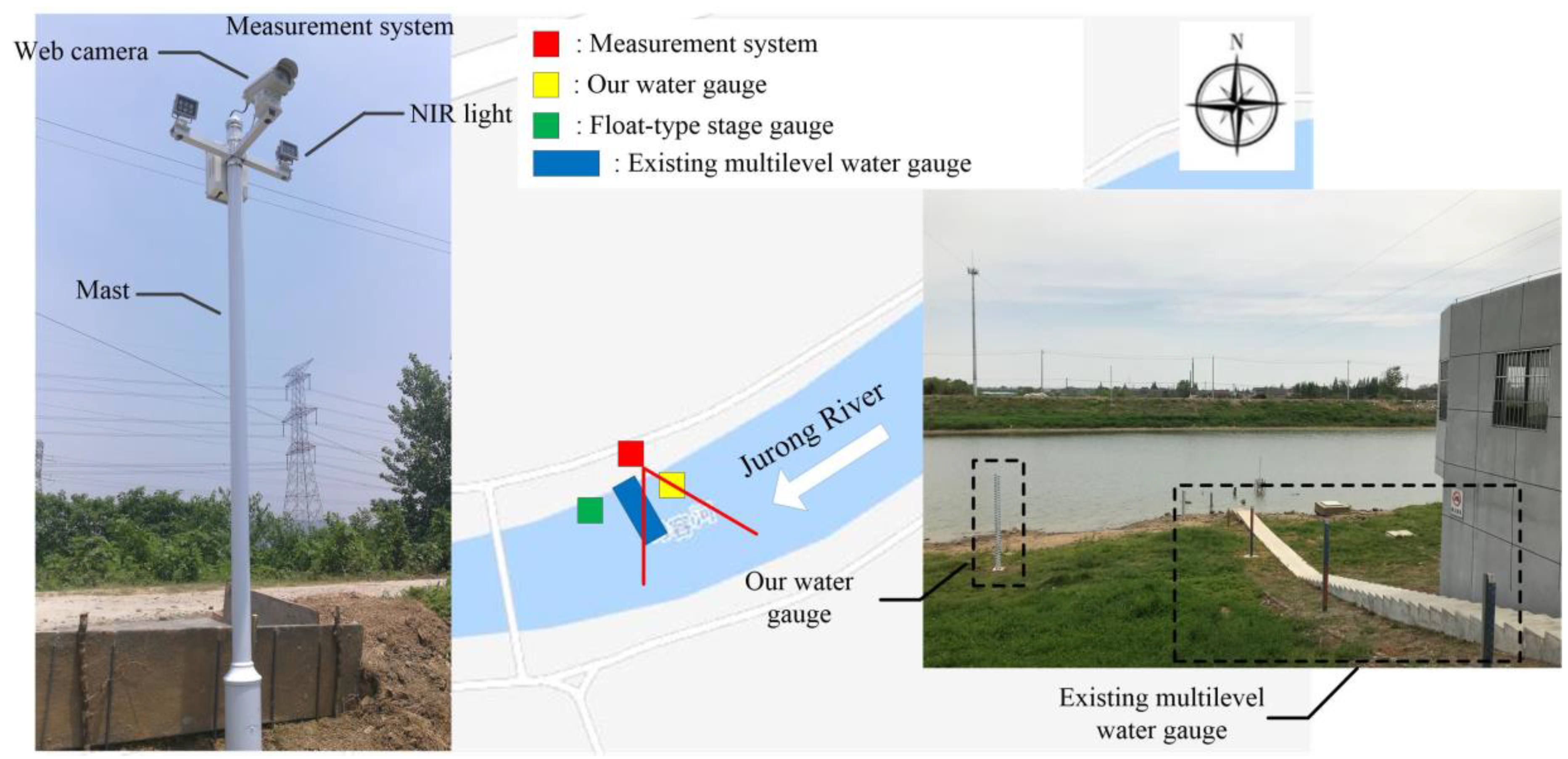

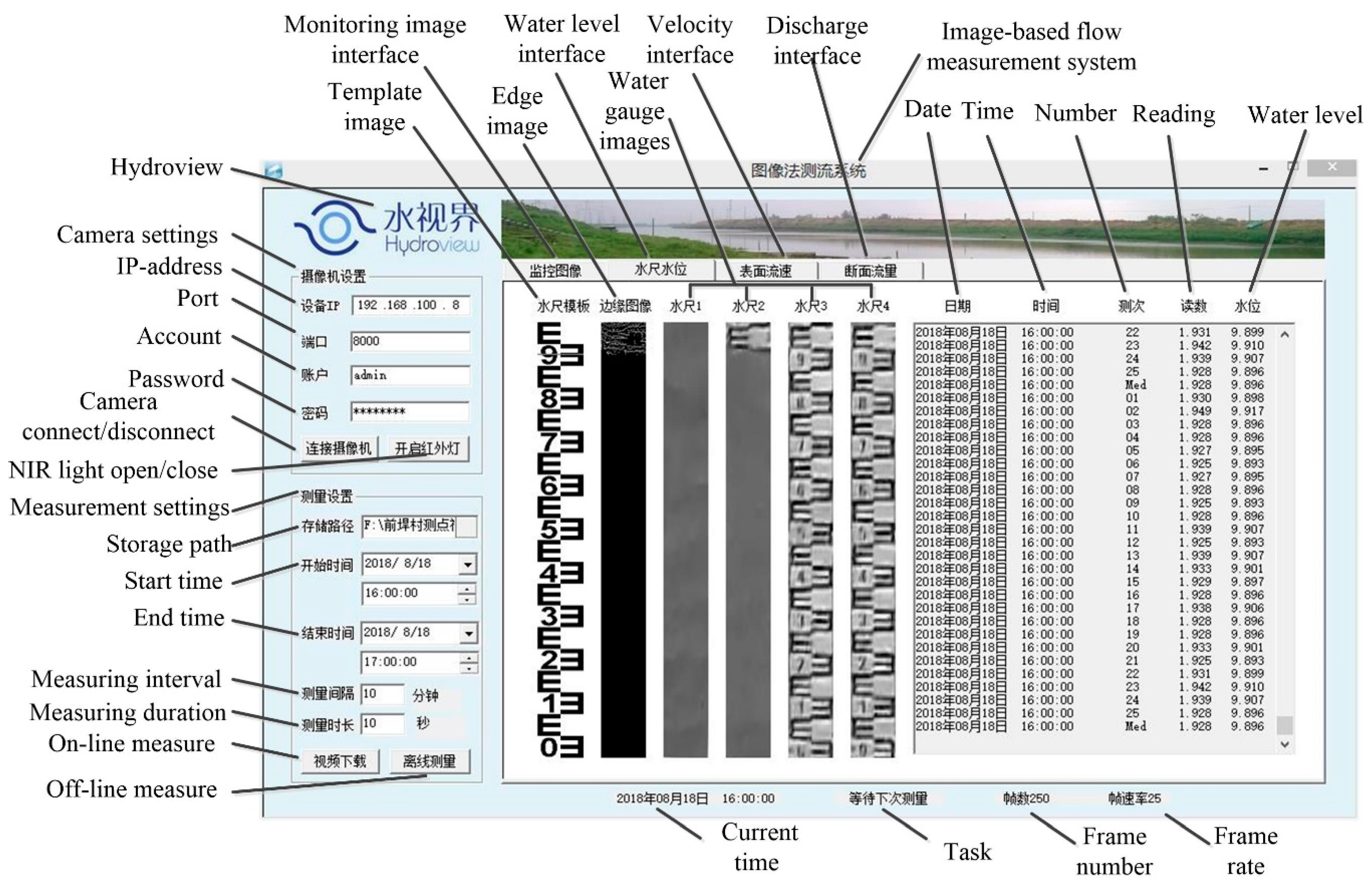

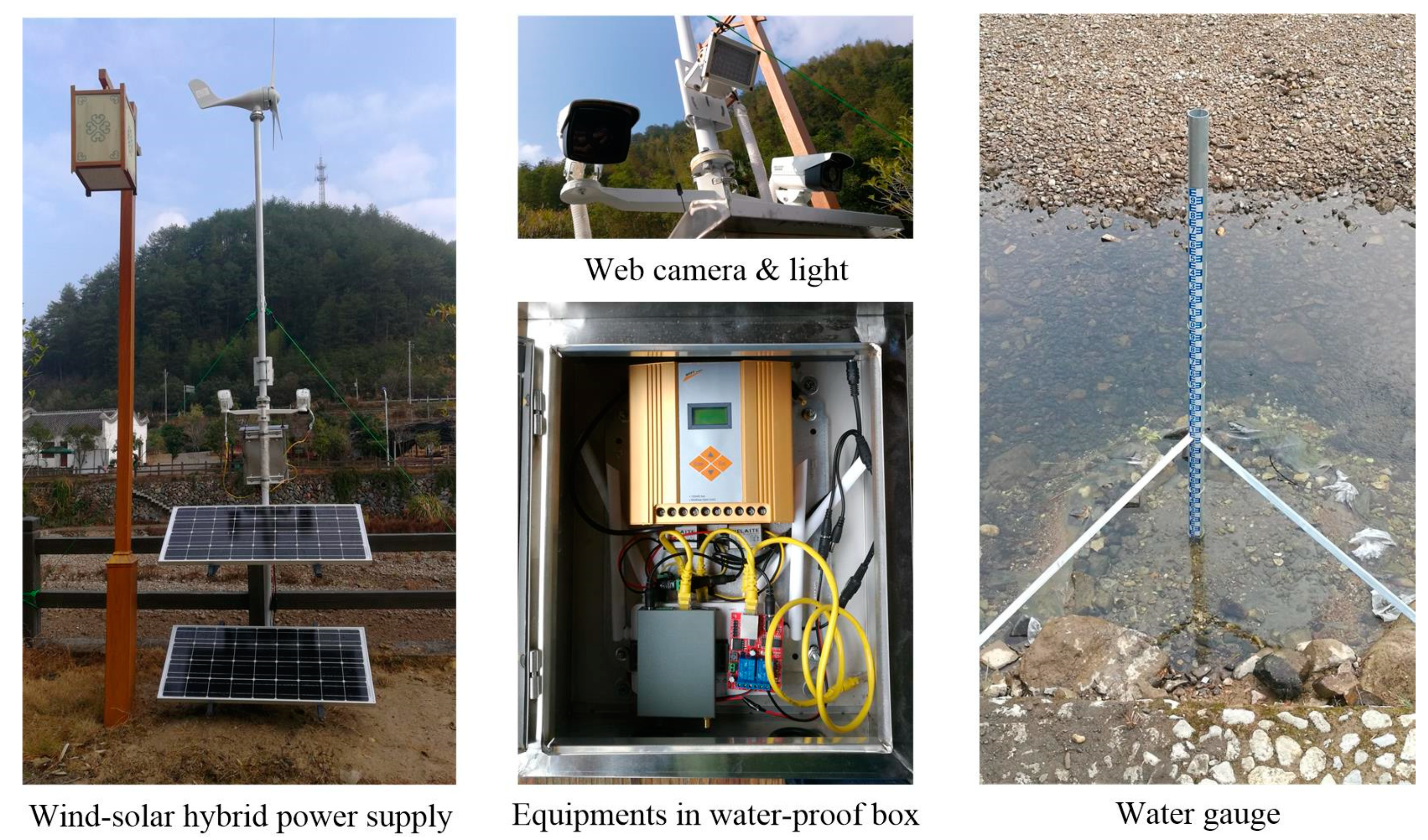

2. Measurement Site and System

2.1. Measurement Site 1

2.2. Measurement Site 2

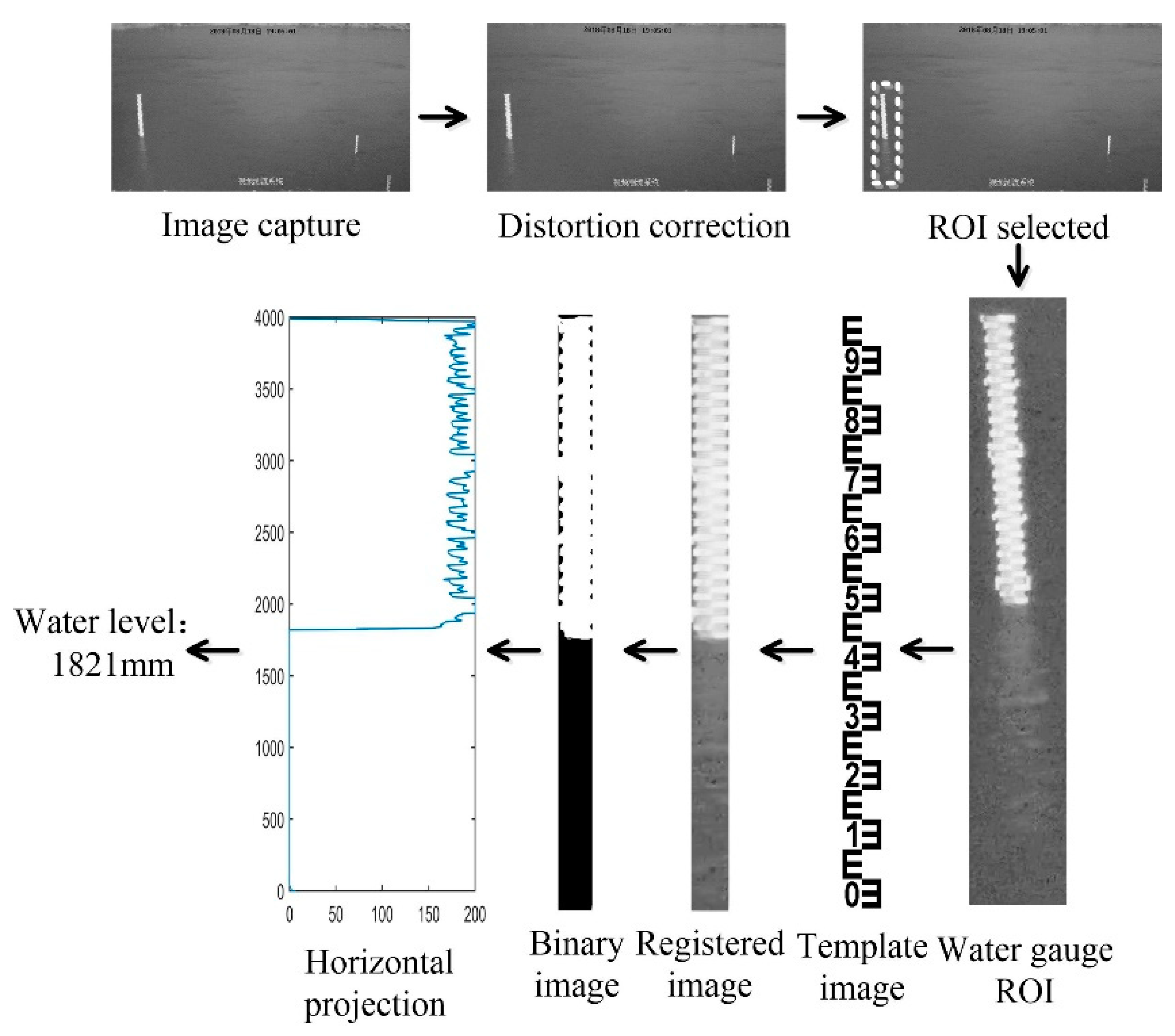

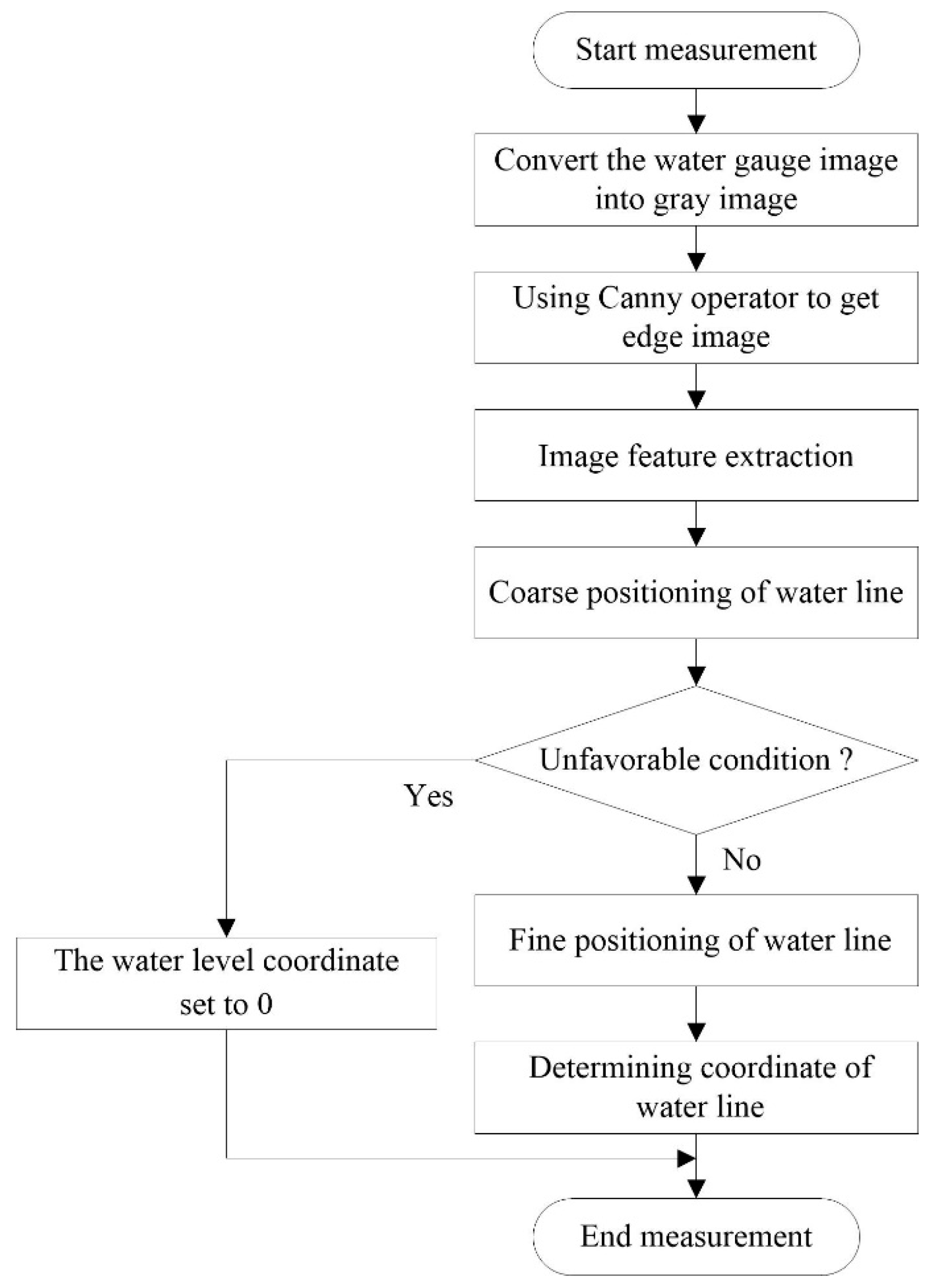

3. Water-Level Measurement

3.1. Overview

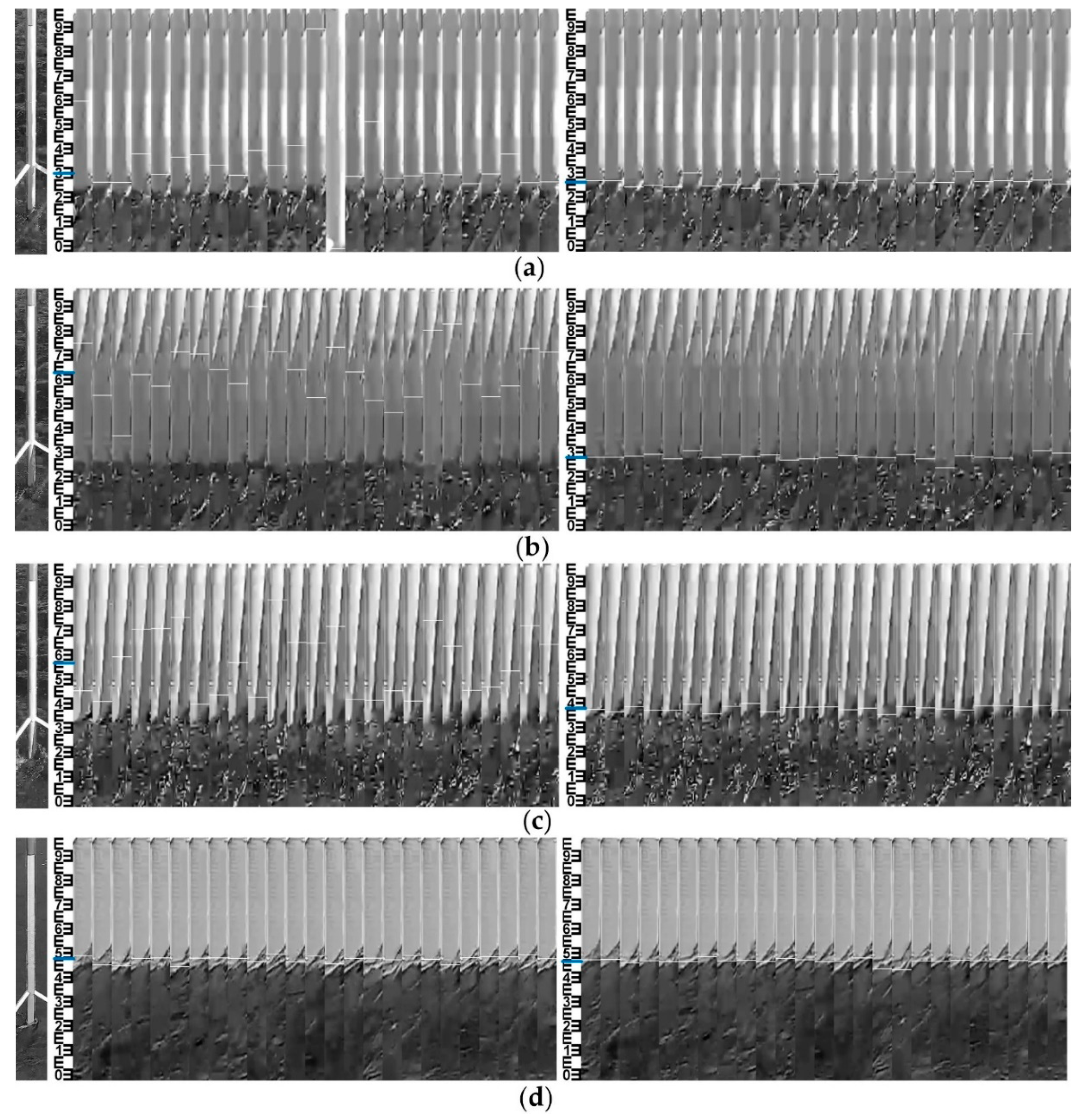

3.2. Basis of Water-Line Detection

3.3. Maximum Mean Difference (MMD) Method

3.3.1. Image Feature Extraction

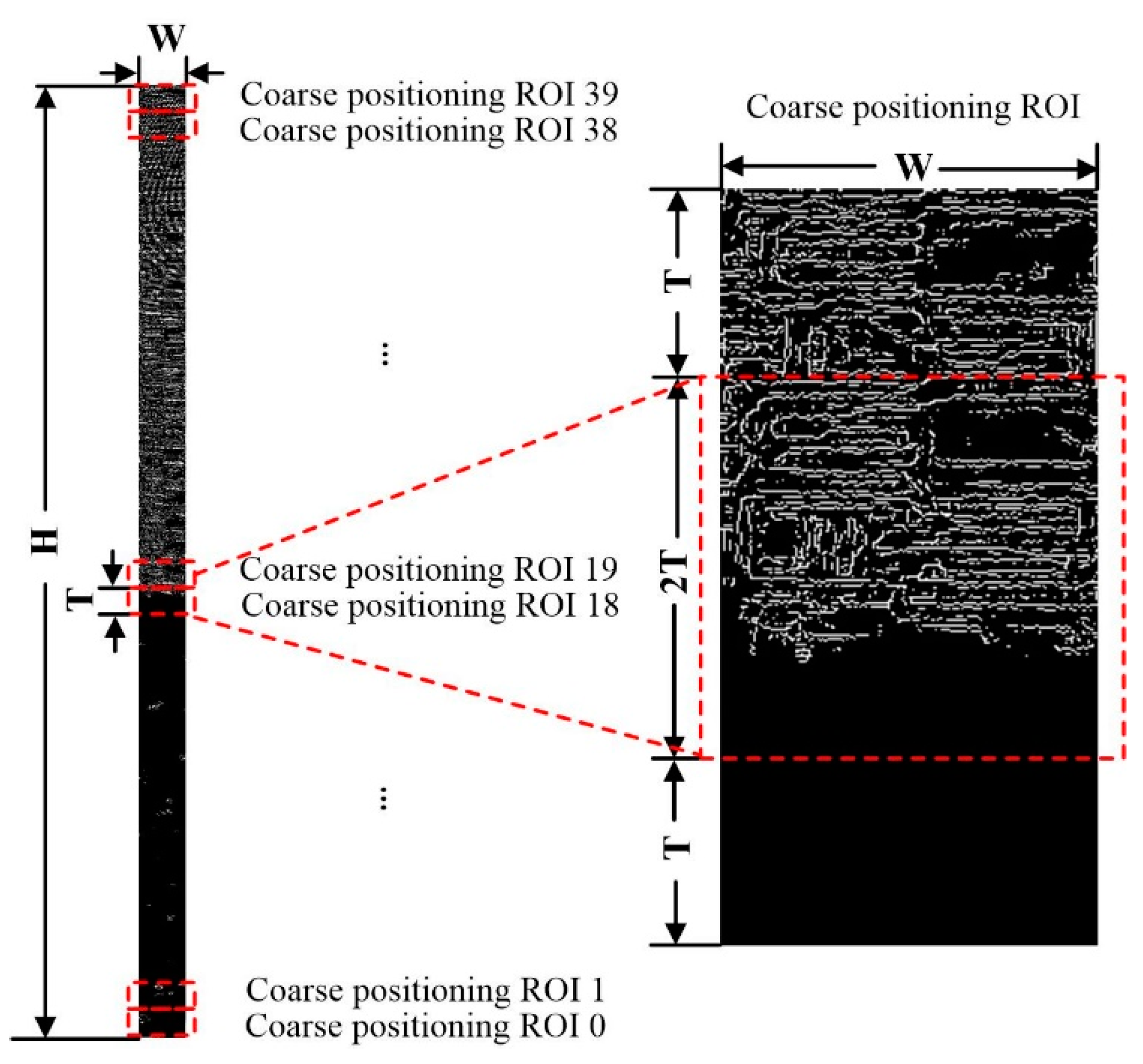

3.3.2. Coarse Positioning of Water Line

3.3.3. Identification of Unfavorable Condition

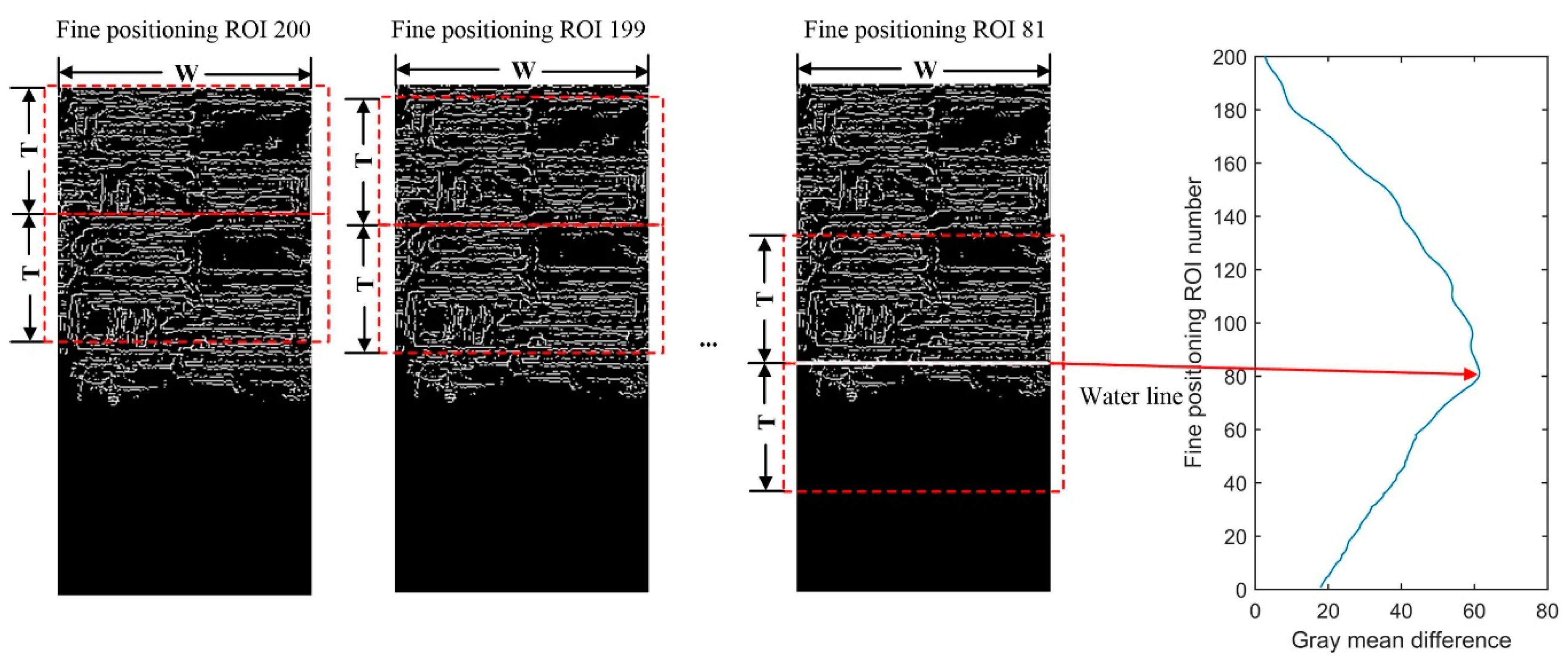

3.3.4. Fine Positioning of Water Line

3.3.5. Determining Coordinate of Water Line

4. Experimental Results

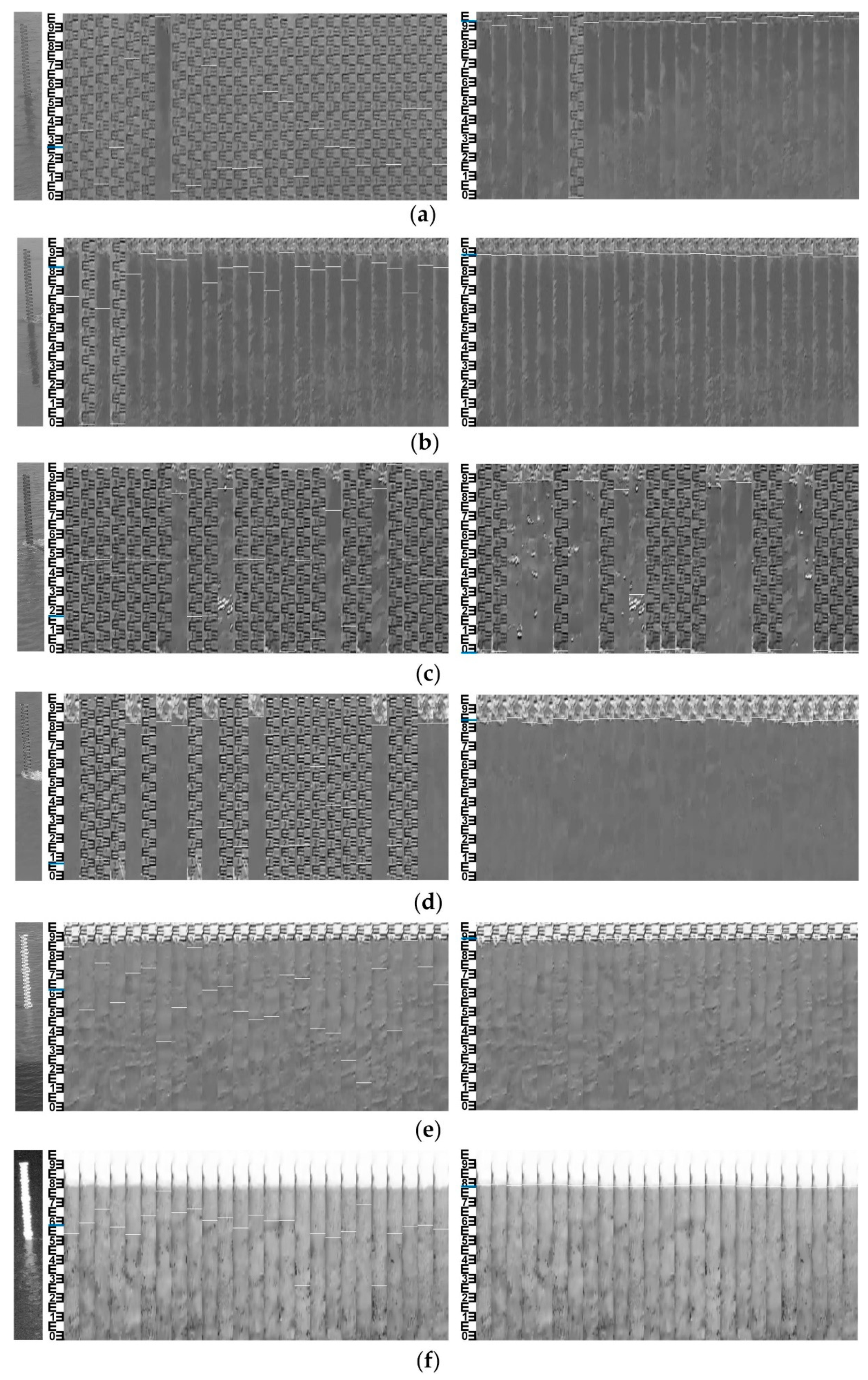

4.1. Experiment in Measurement Site 1

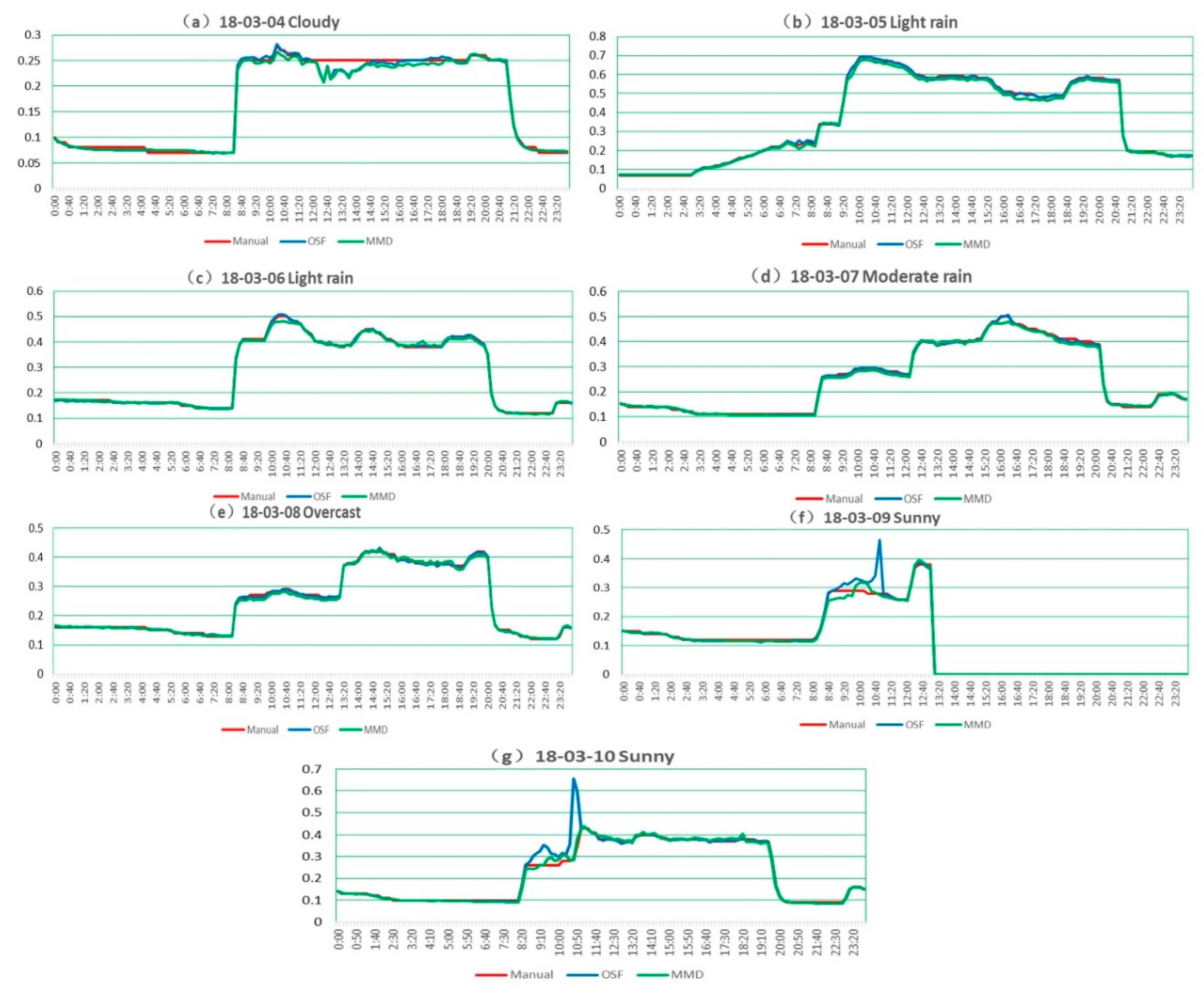

4.2. Experiment in Measurement Site 2

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Illumination Conditions | Dim Light | Water Glare | Artificial Lighting |
|---|---|---|---|
| Gray Image | 2684 | 1898 | 1801 |
| Edge Image | 3992 | 3999 | 3958 |
| Binary Image | 1990 | 0 | 1788 |
| Manual Reading | 1964 | 1906 | 1836 |

| Methods | Otsu | OSF | MMD |
|---|---|---|---|
| RMSE (m) | 0.4867 | 0.0818 | 0.0118 |
| NE>0.1 | 15 | 12 | 0 |
| NE>0.02 | 38 | 40 | 8 |
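The RMSE and NE rows are not defined in this part of the text. Assuming RMSE takes its standard form over $N$ readings, where $h_i$ is the visually measured water level and $h_i^{\mathrm{ref}}$ the corresponding reference reading (stage gauge or manual reading), and assuming that NE>$t$ denotes the number of readings whose absolute error exceeds $t$ metres, the two metrics would read as follows. Both are interpretations, not definitions taken from the authors.

$$
\mathrm{RMSE}=\sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(h_i-h_i^{\mathrm{ref}}\right)^{2}},
\qquad
\mathrm{NE}_{>t}=\#\left\{\, i \;:\; \left|h_i-h_i^{\mathrm{ref}}\right|>t \,\right\}
$$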

| Illumination Conditions | Dim Light | Shadow Projection | Water Glare | Direct Sunlight | Lateral Sunlight | Artificial Lighting |
|---|---|---|---|---|---|---|
| Coarse Positioning ROI Number (Gray Image) | 20 | 19 | 20 | 19 | 19 | 18 |
| Coarse Positioning ROI Number (Edge Image) | 20 | 19 | 19 | 18 | 19 | 39 |
| Coarse Positioning ROI Number | 20 | 19 | 20 | 19 | 19 | 18 |
| Fine Positioning ROI Number (Gray Image) | 129 | 112 | 87 | 133 | 84 | 94 |
| Fine Positioning ROI Number (Edge Image) | 137 | 99 | 199 | 158 | 105 | 21 |
| Fine Positioning ROI Number | 137 | 99 | 87 | 133 | 84 | 94 |
| MMD Method Water Level Result (m) | 9.729 | 9.690 | 9.782 | 9.644 | 9.694 | 9.588 |
| Stage Gauge Result (m) | 9.74 | 9.80 | 9.82 | 9.75 | 9.69 | 9.60 |
| Absolute Error (m) | 0.011 | 0.110 | 0.038 | 0.106 | 0.004 | 0.012 |
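As an arithmetic check on the table above, this short Python sketch recomputes the absolute-error row as |MMD result - stage gauge result| and then summarizes the six readings with an RMSE and two threshold counts. The counts assume that NE>t means "number of readings with absolute error above t metres"; that reading of NE, and the helper names `absolute_errors`, `rmse`, and `count_exceeding`, are illustrative assumptions, not definitions or code from the authors.

```python
import math

# Values transcribed from the table (six illumination conditions).
conditions = ["Dim Light", "Shadow Projection", "Water Glare",
              "Direct Sunlight", "Lateral Sunlight", "Artificial Lighting"]
mmd_level = [9.729, 9.690, 9.782, 9.644, 9.694, 9.588]  # MMD method result, m
gauge_level = [9.74, 9.80, 9.82, 9.75, 9.69, 9.60]      # stage gauge reading, m


def absolute_errors(measured, reference):
    """Absolute error |measured - reference| per paired reading, rounded to 1 mm."""
    return [round(abs(m - r), 3) for m, r in zip(measured, reference)]


def rmse(measured, reference):
    """Standard root-mean-square error between paired readings."""
    n = len(measured)
    return math.sqrt(sum((m - r) ** 2 for m, r in zip(measured, reference)) / n)


def count_exceeding(errors, threshold):
    """Number of readings whose absolute error exceeds `threshold`.

    Assumed meaning of the NE>t rows; not defined in the source text.
    """
    return sum(1 for e in errors if e > threshold)


errors = absolute_errors(mmd_level, gauge_level)
for name, err in zip(conditions, errors):
    print(f"{name:20s} {err:.3f} m")
print(f"RMSE    = {rmse(mmd_level, gauge_level):.4f} m")
print(f"NE>0.1  = {count_exceeding(errors, 0.1)}")
print(f"NE>0.02 = {count_exceeding(errors, 0.02)}")
```

Running it reproduces the absolute-error row (0.011, 0.110, 0.038, 0.106, 0.004, 0.012 m), an RMSE of about 0.065 m for these six readings, 2 readings above 0.1 m, and 3 above 0.02 m.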

| Date | 18–03–04 | 18–03–05 | 18–03–06 | 18–03–07 | 18–03–08 | 18–03–09 | 18–03–10 |
|---|---|---|---|---|---|---|---|
| Weather | Cloudy | Light Rain | Light Rain | Moderate Rain | Overcast | Sunny | Sunny |
| Wind Scale | Scale 1 | Scale 5 | Scale 3 | Scale 2 | Scale 1 | Scale 1 | Scale 2 |

| Methods | Metric | 18–03–04 | 18–03–05 | 18–03–06 | 18–03–07 | 18–03–08 | 18–03–09 | 18–03–10 |
|---|---|---|---|---|---|---|---|---|
| MMD | RMSE (m) | 0.009 | 0.012 | 0.006 | 0.008 | 0.006 | 0.011 | 0.009 |
| MMD | NE>0.1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| MMD | NE>0.02 | 7 | 10 | 3 | 4 | 0 | 8 | 7 |
| OSF | RMSE (m) | 0.008 | 0.004 | 0.003 | 0.004 | 0.004 | 0.018 | 0.040 |
| OSF | NE>0.1 | 0 | 0 | 0 | 0 | 0 | 1 | 2 |
| OSF | NE>0.02 | 7 | 3 | 0 | 1 | 0 | 10 | 13 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, Z.; Zhou, Y.; Liu, H.; Zhang, L.; Wang, H. Visual Measurement of Water Level under Complex Illumination Conditions. Sensors 2019, 19, 4141. https://doi.org/10.3390/s19194141
