Improved Traffic Sign Detection and Recognition Algorithm for Intelligent Vehicles

Abstract

1. Introduction

2. Traffic Sign Detection

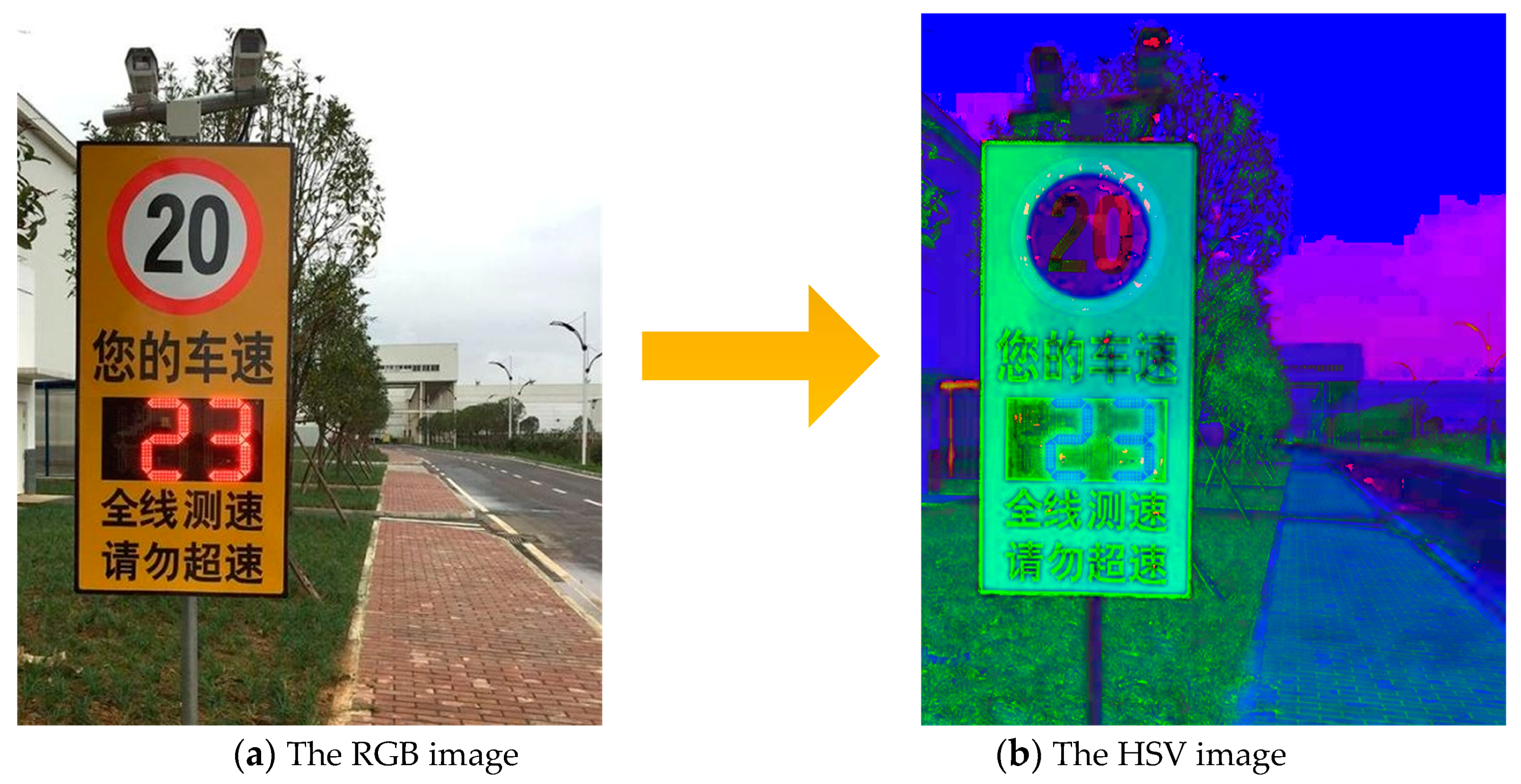

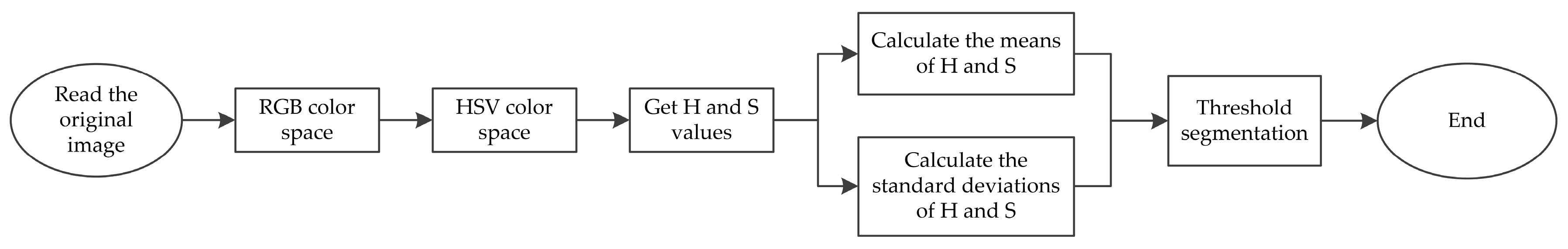

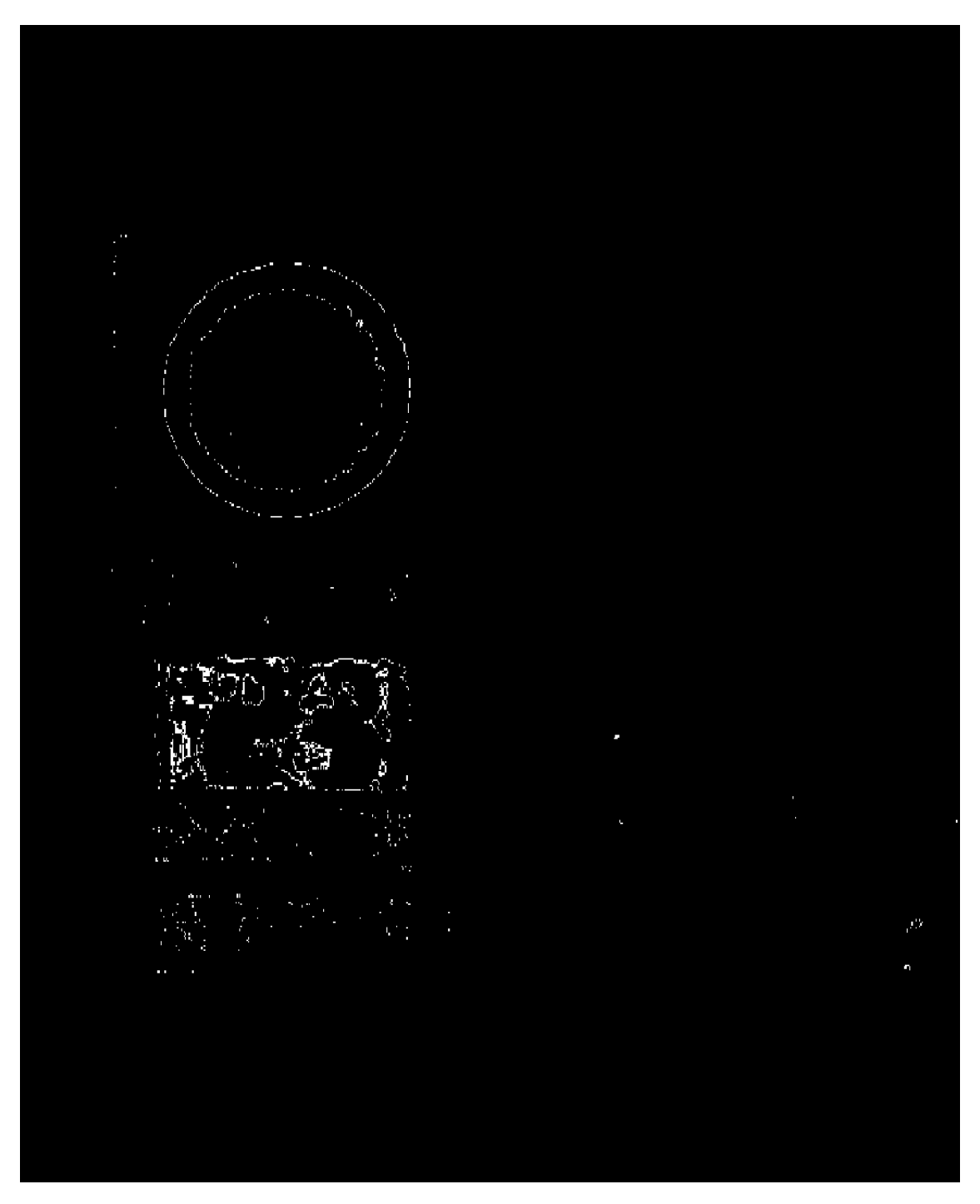

2.1. Traffic Sign Segmentation Based on the HSV Color Space

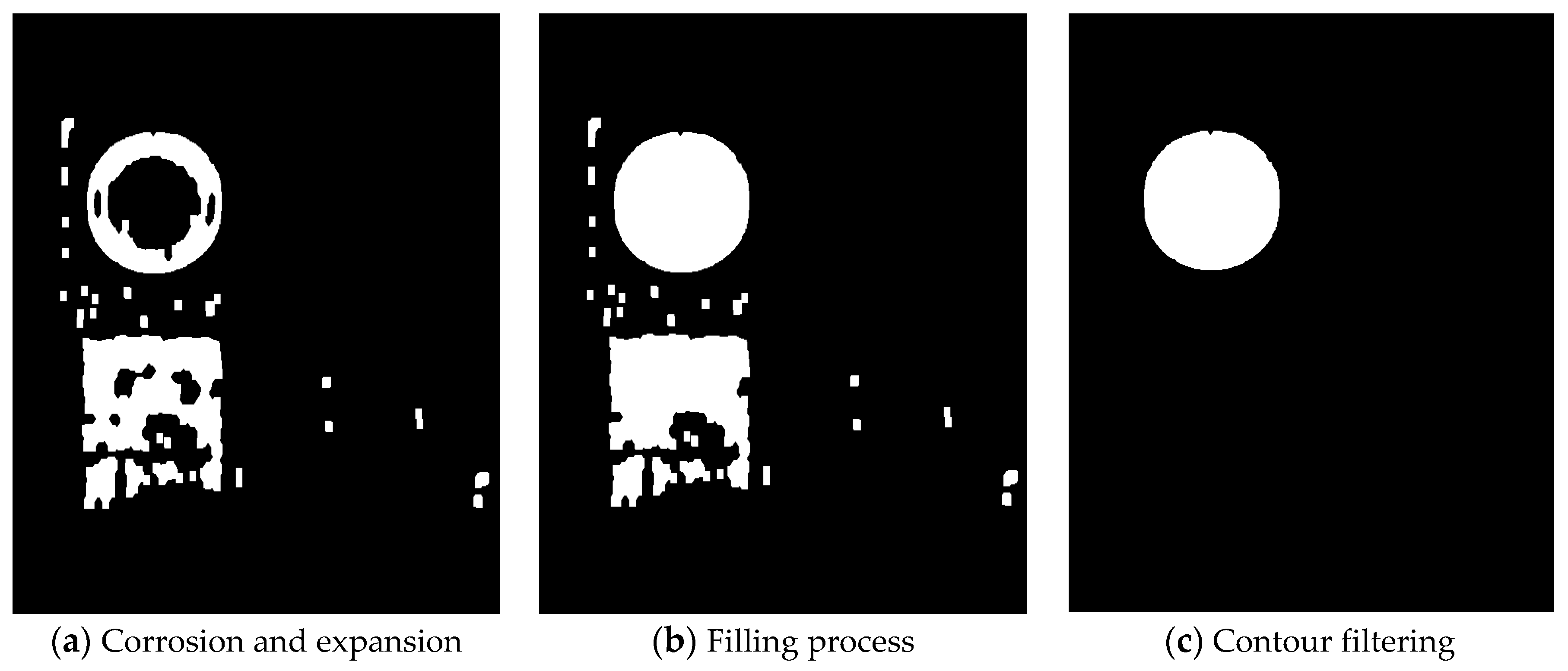

2.2. Traffic Sign Detection Based on the Shape Features

3. Improved LeNet-5 CNN Model

3.1. Deficiency Analysis of Classical LeNet-5 Network Model

- (1) The interference background in a traffic sign training image is much more complicated than that in an image of a single digit. The original convolutional kernel does not perform well in feature extraction. Consequently, the extracted features are not adequate for accurate classification by the subsequent classifier.

- (2) The traffic sign training images cover many different classes, and the dataset is large. Gradient dispersion easily occurs during network training, and the generalization ability is markedly reduced.

- (3) The size of the ROI in the input traffic sign training image varies, and the effective features obtained by the current network model are insufficient to meet the requirements of accurate traffic sign recognition.

- (4) The learning rate and the number of training iterations are not adjusted accordingly, and the relevant parts are not rationally optimized, which leads to over-fitting during training.

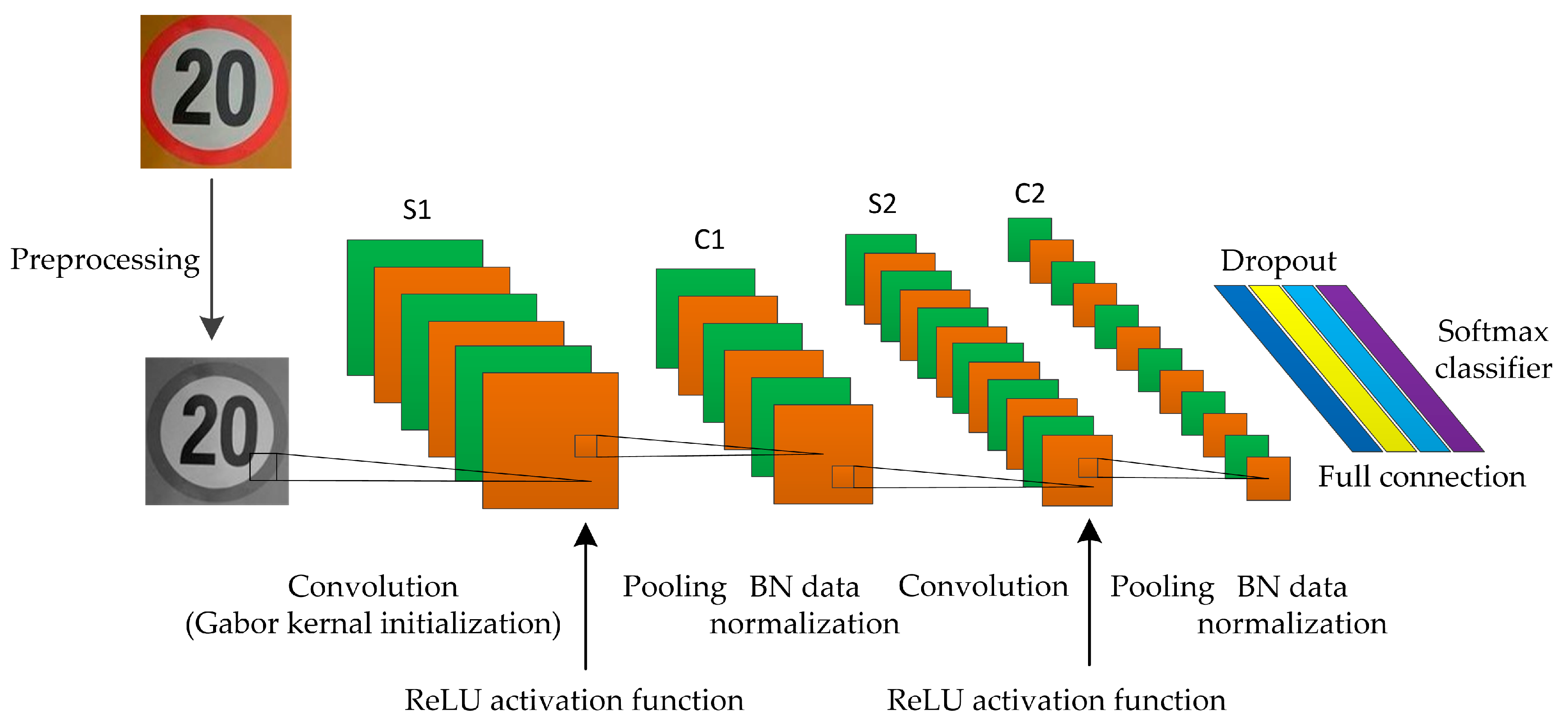

3.2. Improved LeNet-5 Network Model

3.2.1. Image Preprocessing

- (1) Edge cropping. Edge cropping is a particularly important step in image preprocessing. Some background regions near the image edges are unrelated to the traffic sign, and these regions can account for approximately 10% of the entire image. The bounding box coordinates are used for proportional cropping to obtain the ROI. Removing the interference region reduces redundant information and speeds up network training.

- (2) Image enhancement. The recognition results for the same type of traffic sign differ significantly under different illumination conditions. Therefore, the noise interference caused by illumination changes must be reduced or removed via image enhancement. A direct grey-scale transformation method is used to adjust the grey values of the original image with a transformation function, which presents the details of the ROI clearly and blurs the interference area. Thus, this method effectively improves the image quality and reduces the computational load of the training network.

- (3) Size normalization. The same type of traffic sign may appear at different sizes. Training images of different sizes yield different feature dimensions during CNN training, which complicates the subsequent classification and recognition. In this paper, all images are normalized to a uniform size of 32 × 32.
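The three preprocessing steps above can be sketched in plain Python. This is a minimal illustration and not the authors' implementation: the function names, the BT.601 luma weights, the linear contrast stretch (standing in for the unspecified transformation function) and the nearest-neighbour resize are all assumptions made for the example.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]        # (R, G, B), each channel in 0..255
Image = List[List[Pixel]]           # image stored as rows of pixels
GreyImage = List[List[float]]


def crop_roi(image: Image, box: Tuple[int, int, int, int]) -> Image:
    """Edge cropping: keep the bounding box (x1, y1, x2, y2); x2 and y2 are exclusive."""
    x1, y1, x2, y2 = box
    return [row[x1:x2] for row in image[y1:y2]]


def to_grey(image: Image) -> GreyImage:
    """RGB to grey with the common BT.601 luma weights (assumed, not from the paper)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in image]


def stretch_contrast(grey: GreyImage) -> GreyImage:
    """Linear grey-level transformation mapping [min, max] onto [0, 255] (assumed)."""
    lo = min(min(row) for row in grey)
    hi = max(max(row) for row in grey)
    if hi == lo:                    # flat image: nothing to stretch
        return [[0.0 for _ in row] for row in grey]
    scale = 255.0 / (hi - lo)
    return [[(v - lo) * scale for v in row] for row in grey]


def resize_nearest(grey: GreyImage, size: int = 32) -> GreyImage:
    """Size normalization to size x size by nearest-neighbour sampling."""
    h, w = len(grey), len(grey[0])
    return [[grey[(i * h) // size][(j * w) // size] for j in range(size)]
            for i in range(size)]


def preprocess(image: Image, box: Tuple[int, int, int, int], size: int = 32) -> GreyImage:
    """Crop, enhance and normalize one training image."""
    return resize_nearest(stretch_contrast(to_grey(crop_roi(image, box))), size)


if __name__ == "__main__":
    # Synthetic 50 x 40 test image and a hypothetical bounding box.
    img = [[(x * 5 % 256, y * 3 % 256, 128) for x in range(50)] for y in range(40)]
    out = preprocess(img, (5, 4, 45, 36))
    print(len(out), len(out[0]))
```

A real pipeline would typically use an image library with interpolated resizing; nearest-neighbour sampling is used here only to keep the sketch dependency-free.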

3.2.2. Improved LeNet-5 Network Model

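- The mean of the training batch data: $\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i$, where $m$ is the number of samples $x_i$ in the batch.

- The variance of the training batch data: $\sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m} (x_i - \mu_B)^2$.

- Normalization: $\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \varepsilon}}$, where $\varepsilon$ is a small positive number used to avoid division by 0.

- Scale transformation and offset: $y_i = \gamma \hat{x}_i + \beta$.

- The learning parameters $\gamma$ and $\beta$ are returned.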
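The batch normalization steps listed above can be illustrated for a single feature over one mini-batch. The sketch below follows the standard BN forward pass; the function name `batch_norm` and the default values of `gamma`, `beta` and `eps` are assumptions chosen for the example, not values taken from the paper.

```python
import math
from typing import List


def batch_norm(x: List[float], gamma: float = 1.0, beta: float = 0.0,
               eps: float = 1e-5) -> List[float]:
    """Forward pass of batch normalization for one feature over a mini-batch."""
    m = len(x)
    mean = sum(x) / m                                  # batch mean
    var = sum((v - mean) ** 2 for v in x) / m          # batch variance (biased)
    inv_std = 1.0 / math.sqrt(var + eps)               # eps avoids division by 0
    # Normalize, then apply the learnable scale (gamma) and offset (beta).
    return [gamma * (v - mean) * inv_std + beta for v in x]


if __name__ == "__main__":
    print(batch_norm([1.0, 2.0, 3.0, 4.0]))
```

During training, `gamma` and `beta` would be updated by back-propagation together with the network weights; here they are fixed inputs.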

4. Traffic Sign Recognition Experiment

4.1. Experimental Environment

4.2. Traffic Sign Recognition Experiment

4.2.1. Traffic Sign Dataset

4.2.2. Traffic Sign Classification and Recognition Experiment

- (1) The training set samples are preprocessed, the artificial dataset is generated, and the dataset is shuffled.

- (2) The Gabor kernel is used as the initial convolutional kernel with a size of 5 × 5, and ReLU is used as the activation function.

- (3) The training set samples are propagated forward through the network model, and a series of parameters are set. BN is used for data normalization after each pooling layer, and the Adam method is used as the optimizer. The parameters are set as follows: , , and . The dropout parameter is set to 0.5 in the fully-connected layers, and the Softmax function is used as the output classifier.

- (4) The gradient of the loss function is calculated, and the parameters, such as the network weights and offsets, are updated by back-propagation.

- (5) The error between the real and the predicted value of the sample is calculated. When the error falls below the set threshold or the maximum number of training iterations is reached, training is stopped and step (6) is executed; otherwise, step (1) is repeated for the next network iteration.

- (6) The classification test is conducted on the network model. The categories of the traffic signs in the GTSRB are predicted and compared with the real categories. The classification prediction results are counted, and the correct prediction rate is calculated.
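Step (2) initializes the convolutional kernels from Gabor kernels. The sketch below builds a real-valued 5 × 5 Gabor kernel from the standard Gabor formula (a Gaussian envelope multiplied by a cosine carrier). The parameter values (`sigma`, `lambd`, `gamma`, `psi`) and the choice of six evenly spaced orientations are hypothetical, picked only to illustrate the idea; the source does not state the values the authors used.

```python
import math
from typing import List


def gabor_kernel(size: int = 5, sigma: float = 2.0, theta: float = 0.0,
                 lambd: float = 4.0, gamma: float = 0.5,
                 psi: float = 0.0) -> List[List[float]]:
    """Real part of a Gabor filter sampled on a size x size grid centred at 0."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate the coordinates by the orientation theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2.0 * sigma ** 2))
            carrier = math.cos(2.0 * math.pi * xr / lambd + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


# Hypothetical bank: one kernel per first-layer feature map, six orientations.
bank = [gabor_kernel(theta=k * math.pi / 6) for k in range(6)]

if __name__ == "__main__":
    for row in bank[0]:
        print(" ".join(f"{v:+.3f}" for v in row))
```

In a framework-based model these arrays would be copied into the first convolutional layer's weights before training, after which they are updated like any other weights.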

- (1) Several images are randomly selected from the testing set samples and, after preprocessing, are input into the trained network model.

- (2) The recognition results are output by the network model, which reports the traffic sign meaning with the highest probability.

- (3) The output results are compared with the actual reference meanings, and the statistical recognition results are obtained.

- (4) After all the sampled images have been tested, the accurate recognition rate of traffic signs is calculated.
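The testing steps above amount to taking the class with the highest Softmax probability and counting how often it matches the reference label. The following is a small sketch of that bookkeeping; the helper names `softmax`, `predict` and `recognition_rate` and the toy logits are illustrative assumptions, not part of the original experiment.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable Softmax over one vector of class scores."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits: Sequence[float]) -> int:
    """Index of the class with the highest Softmax probability."""
    probs = softmax(logits)
    return max(range(len(probs)), key=probs.__getitem__)


def recognition_rate(predicted: Sequence[int], actual: Sequence[int]) -> float:
    """Percentage of test images whose predicted class equals the reference class."""
    correct = sum(1 for p, a in zip(predicted, actual) if p == a)
    return 100.0 * correct / len(actual)


if __name__ == "__main__":
    # Toy network outputs for three images and their reference labels.
    outputs = [[0.1, 2.0, -1.0], [3.0, 0.2, 0.1], [0.0, 0.1, 0.05]]
    labels = [1, 0, 2]
    preds = [predict(o) for o in outputs]
    print(preds, recognition_rate(preds, labels))
```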

4.2.3. Statistics and Analysis of Experimental Results

4.3. Performance Comparison of Recognition Algorithms

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Eichberger, A.; Wallner, D. Review of recent patents in integrated vehicle safety, advanced driver assistance systems and intelligent transportation systems. Recent Pat. Mech. Eng. 2010, 3, 32–44.

2. Campbell, S.; Naeem, W.; Irwin, G.W. A review on improving the autonomy of unmanned surface vehicles through intelligent collision avoidance manoeuvres. Annu. Rev. Control 2012, 36, 267–283.

3. Olaverri-Monreal, C. Road safety: Human factors aspects of intelligent vehicle technologies. In Proceedings of the 6th International Conference on Smart Cities and Green ICT Systems, SMARTGREENS 2017 and 3rd International Conference on Vehicle Technology and Intelligent Transport Systems (VEHITS), Porto, Portugal, 22–24 April 2017; pp. 318–332.

4. Luo, Y.; Gao, Y.; You, Z.D. Overview research of influence of in-vehicle intelligent terminals on drivers’ distraction and driving safety. In Proceedings of the 17th COTA International Conference of Transportation Professionals: Transportation Reform and Change-Equity, Inclusiveness, Sharing, and Innovation (CICTP), Shanghai, China, 7–9 July 2017; pp. 4197–4205.

5. Andreev, S.; Petrov, V.; Huang, K.; Lema, M.A.; Dohler, M. Dense moving fog for intelligent IoT: Key challenges and opportunities. IEEE Commun. Mag. 2019, 57, 34–41.

6. Yang, J.; Coughlin, J.F. In-vehicle technology for self-driving cars: Advantages and challenges for aging drivers. Int. J. Automot. Technol. 2014, 15, 333–340.

7. Yoshida, H.; Omae, M.; Wada, T. Toward next active safety technology of intelligent vehicle. J. Robot. Mechatron. 2015, 27, 610–616.

8. Zhang, Z.J.; Li, W.Q.; Zhang, D.; Zhang, W. A review on recognition of traffic signs. In Proceedings of the 2014 International Conference on E-Commerce, E-Business and E-Service (EEE), Hong Kong, China, 1–2 May 2014; pp. 139–144.

9. Gudigar, A.; Chokkadi, S.; Raghavendra, U. A review on automatic detection and recognition of traffic sign. Multimed. Tools Appl. 2016, 75, 333–364.

10. Zhu, H.; Yuen, K.V.; Mihaylova, L.; Leung, H. Overview of environment perception for intelligent vehicles. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2584–2601.

11. Yang, J.; Kong, B.; Wang, B. Vision-based traffic sign recognition system for intelligent vehicles. Adv. Intell. Syst. Comput. 2014, 215, 347–362.

12. Shi, J.H.; Lin, H.Y. A vision system for traffic sign detection and recognition. In Proceedings of the 26th IEEE International Symposium on Industrial Electronics (ISIE), Edinburgh, UK, 18–21 June 2017; pp. 1596–1601.

13. Phu, K.T.; Lwin Oo, L.L. Traffic sign recognition system using feature points. In Proceedings of the 12th International Conference on Research Challenges in Information Science (RCIS), Nantes, France, 29–31 May 2018; pp. 1–6.

14. Wali, S.B.; Abdullah, M.A.; Hannan, M.A.; Hussain, A.; Samad, S.A.; Ker, P.J.; Mansor, M.B. Vision-based traffic sign detection and recognition systems: Current trends and challenges. Sensors 2019, 19, 2093.

15. Wang, G.Y.; Ren, G.H.; Jiang, L.H.; Quan, T.F. Hole-based traffic sign detection method for traffic signs with red rim. Vis. Comput. 2014, 30, 539–551.

16. Hechri, A.; Hmida, R.; Mtibaa, A. Robust road lanes and traffic signs recognition for driver assistance system. Int. J. Comput. Sci. Eng. 2015, 10, 202–209.

17. Lillo-Castellano, J.M.; Mora-Jiménez, I.; Figuera-Pozuelo, C.; Rojo-Álvarez, J.L. Traffic sign segmentation and classification using statistical learning methods. Neurocomputing 2015, 153, 286–299.

18. Xiao, Z.T.; Yang, Z.J.; Geng, L.; Zhang, F. Traffic sign detection based on histograms of oriented gradients and boolean convolutional neural networks. In Proceedings of the 2017 International Conference on Machine Vision and Information Technology (CMVIT), Singapore, 17–19 February 2017; pp. 111–115.

19. Guan, H.Y.; Yan, W.Q.; Yu, Y.T.; Zhong, L.; Li, D.L. Robust traffic-sign detection and classification using mobile LiDAR data with digital images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1715–1724.

20. Sun, Z.L.; Wang, H.; Lau, W.S.; Seet, G.; Wang, D.W. Application of BW-ELM model on traffic sign recognition. Neurocomputing 2014, 128, 153–159.

21. Qian, R.Q.; Zhang, B.L.; Yue, Y.; Wang, Z.; Coenen, F. Robust Chinese traffic sign detection and recognition with deep convolutional neural network. In Proceedings of the 2015 11th International Conference on Natural Computation (ICNC), Zhangjiajie, China, 15–17 August 2015; pp. 791–796.

22. He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 29th IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

23. Yuan, Y.; Xiong, Z.T.; Wang, Q. An incremental framework for video-based traffic sign detection, tracking, and recognition. IEEE Trans. Intell. Transp. Syst. 2017, 18, 1918–1929.

24. Kumar, A.D.; Karthika, K.; Parameswaran, L. Novel deep learning model for traffic sign detection using capsule networks. arXiv 2018, arXiv:1805.04424.

25. Yuan, Y.; Xiong, Z.T.; Wang, Q. VSSA-NET: vertical spatial sequence attention network for traffic sign detection. IEEE Trans. Image Process. 2019, 28, 3423–3434.

26. Li, Y.; Ma, L.F.; Huang, Y.C.; Li, J. Segment-based traffic sign detection from mobile laser scanning data. In Proceedings of the 38th Annual IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Valencia, Spain, 22–27 July 2018; pp. 4607–4610.

27. Pandey, P.; Kulkarni, R. Traffic sign detection using Template Matching technique. In Proceedings of the 4th International Conference on Computing, Communication Control and Automation (ICCUBEA), Pune, India, 16–18 August 2018; pp. 1–6.

28. Banharnsakun, A. Multiple traffic sign detection based on the artificial bee colony method. Evol. Syst. 2018, 9, 255–264.

29. Zhu, Y.Y.; Zhang, C.Q.; Zhou, D.Y.; Wang, X.G.; Bai, X.; Liu, W.Y. Traffic sign detection and recognition using fully convolutional network guided proposals. Neurocomputing 2016, 214, 758–766.

30. Huang, Z.Y.; Yu, Y.L.; Gu, J.; Liu, H.P. An efficient method for traffic sign recognition based on extreme learning machine. IEEE Trans. Cybern. 2017, 47, 920–933.

31. Promlainak, S.; Woraratpanya, K.; Kuengwong, J.; Kuroki, Y. Thai traffic sign detection and recognition for driver assistance. In Proceedings of the 7th ICT International Student Project Conference (ICT-ISPC), Nakhon Pathom, Thailand, 11–13 July 2018; pp. 1–5.

32. Jose, A.; Thodupunoori, H.; Nair, B.B. A novel traffic sign recognition system combining Viola-Jones framework and deep learning. In Proceedings of the International Conference on Soft Computing and Signal Processing (ICSCSP), Hyderabad, India, 22–23 June 2018; pp. 507–517.

33. Liu, Q.; Zhang, N.Y.; Yang, W.Z.; Wang, S.; Cui, Z.C.; Chen, X.Y.; Chen, L.P. A review of image recognition with deep convolutional neural network. In Proceedings of the 13th International Conference on Intelligent Computing (ICIC), Liverpool, UK, 7–10 August 2017; pp. 69–80.

34. Rawat, W.; Wang, Z.H. Deep convolutional neural networks for image classification: A comprehensive review. Neural Comp. 2017, 29, 2352–2449.

35. El-Sawy, A.; El-Bakry, H.; Loey, M. CNN for handwritten arabic digits recognition based on LeNet-5. In Proceedings of the 2nd International Conference on Advanced Intelligent Systems and Informatics (AISI), Cairo, Egypt, 24–26 October 2016; pp. 565–575.

36. Wei, G.F.; Li, G.; Zhao, J.; He, A.X. Development of a LeNet-5 gas identification CNN structure for electronic noses. Sensors 2019, 19, 217.

37. Liu, H.P.; Liu, Y.L.; Sun, F.C. Traffic sign recognition using group sparse coding. Inf. Sci. 2014, 266, 75–89.

38. Huang, L.L. Traffic sign recognition using perturbation method. In Proceedings of the 6th Chinese Conference on Pattern Recognition (CCPR), Changsha, China, 17–19 November 2014; pp. 518–527.

39. Gonzalez-Reyna, S.E.; Avina-Cervantes, J.G.; Ledesma-Orozco, S.E.; Cruz-Aceves, I. Eigen-gradients for traffic sign recognition. Math. Probl. Eng. 2013, 2013, 364305.

40. Mathias, M.; Timofte, R.; Benenson, R.; Van Gool, L. Traffic sign recognition—How far are we from the solution? In Proceedings of the 2013 International Joint Conference on Neural Networks (IJCNN), Dallas, TX, USA, 4–9 August 2013; pp. 1–8.

41. Vashisth, S.; Saurav, S. Histogram of Oriented Gradients based reduced feature for traffic sign recognition. In Proceedings of the 2018 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Bangalore, India, 19–22 September 2018; pp. 2206–2212.

42. Natarajan, S.; Annamraju, A.K.; Baradkar, C.S. Traffic sign recognition using weighted multi-convolutional neural network. IET Intel. Transp. Syst. 2018, 12, 1396–1405.

| Color | H | S | V |
|---|---|---|---|
| Red | | | |
| Yellow | | | |
| Blue | | | |

| Layer Number | Type | Number of Feature Maps | Convolutional Kernel Size | Feature Map Size | Number of Neurons |
|---|---|---|---|---|---|
| 1 | Convolutional Layer | 6 | 5 × 5 | 28 × 28 | 4704 |
| 2 | Pooling Layer | 6 | 2 × 2 | 14 × 14 | 1176 |
| 3 | Convolutional Layer | 12 | 5 × 5 | 10 × 10 | 1200 |
| 4 | Pooling Layer | 12 | 2 × 2 | 5 × 5 | 300 |
| 5 | Fully-connected Layer | 120 | 1 × 1 | 1 × 1 | 120 |
| 6 | Fully-connected Layer | 84 | 1 × 1 | 1 × 1 | 84 |
| 7 | Output Layer | 43 | - | - | 43 |
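The feature map sizes and neuron counts in the table above follow from simple arithmetic on a 32 × 32 input. The sketch below reproduces them under two assumptions that are consistent with the table but not stated in it: the convolutions use stride 1 without padding, and the pooling windows do not overlap. The function names are illustrative.

```python
from typing import List, Tuple


def conv_output_size(size: int, kernel: int, stride: int = 1) -> int:
    """Side length after an unpadded ("valid") convolution."""
    return (size - kernel) // stride + 1


def pool_output_size(size: int, window: int) -> int:
    """Side length after non-overlapping pooling."""
    return size // window


def trace_feature_maps(input_size: int = 32) -> List[Tuple[str, int, int, int]]:
    """Return (layer type, feature maps, map side length, neurons) for layers 1-4."""
    layers = [("conv", 6, 5), ("pool", 6, 2), ("conv", 12, 5), ("pool", 12, 2)]
    size = input_size
    rows = []
    for kind, maps, k in layers:
        size = conv_output_size(size, k) if kind == "conv" else pool_output_size(size, k)
        rows.append((kind, maps, size, maps * size * size))
    return rows


if __name__ == "__main__":
    for kind, maps, size, neurons in trace_feature_maps():
        print(f"{kind:4s} maps={maps:2d} size={size}x{size} neurons={neurons}")
```

For example, the first convolution maps 32 to 32 − 5 + 1 = 28, so six 28 × 28 maps hold 6 × 784 = 4704 neurons, matching the first row.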

| Sequence Number | Traffic Sign Type | Number of Test Images | TP | FN | Accurate Recognition Rate (%) | Average Processing Time (ms/frame) |
|---|---|---|---|---|---|---|
| 1 | Speed Limit | 1000 | 997 | 3 | 99.70 | 5.4 |
| 2 | Danger | 1000 | 999 | 1 | 99.90 | 5.8 |
| 3 | Mandatory | 1000 | 997 | 3 | 99.70 | 5.2 |
| 4 | Prohibitory | 1000 | 998 | 2 | 99.80 | 4.9 |
| 5 | Derestriction | 1000 | 994 | 6 | 99.40 | 6.4 |
| 6 | Unique | 1000 | 1000 | 0 | 100.00 | 4.7 |
| Total | - | 6000 | 5985 | 15 | 99.75 | 5.4 |
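The totals in the table above can be recomputed from the per-class rows, taking the accurate recognition rate as TP / (TP + FN) and the overall processing time as the mean of the per-class times. The snippet is only a consistency check on the published numbers; the variable and function names are illustrative.

```python
# Per-class results copied from the table: (TP, FN, average time in ms/frame).
results = {
    "Speed Limit": (997, 3, 5.4),
    "Danger": (999, 1, 5.8),
    "Mandatory": (997, 3, 5.2),
    "Prohibitory": (998, 2, 4.9),
    "Derestriction": (994, 6, 6.4),
    "Unique": (1000, 0, 4.7),
}


def rate_from_counts(tp: int, fn: int) -> float:
    """Accurate recognition rate in percent: TP / (TP + FN)."""
    return 100.0 * tp / (tp + fn)


total_tp = sum(tp for tp, _, _ in results.values())
total_fn = sum(fn for _, fn, _ in results.values())
overall_rate = rate_from_counts(total_tp, total_fn)
mean_time_ms = sum(t for _, _, t in results.values()) / len(results)

if __name__ == "__main__":
    for name, (tp, fn, _) in results.items():
        print(f"{name:14s} {rate_from_counts(tp, fn):6.2f} %")
    print(f"Total          {overall_rate:6.2f} %  {mean_time_ms:.1f} ms/frame")
```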

| Serial Number | Method | Accurate Recognition Rate (%) | Average Processing Time (ms/frame) | System Environment |
|---|---|---|---|---|
| 1 | Multilayer Perceptron [39] | 95.90 | 5.4 | Intel Core i5 processor, 4 GB RAM |
| 2 | INNLP + INNC [40] | 98.53 | 47 | Quad-Core AMD Opteron 8360 SE, CPU |
| 3 | GF+HE+HOG+PCA [41] | 98.54 | 22 | Intel Core i5 processor @2.50 GHz, 4 GB RAM |
| 4 | Weighted Multi-CNN [42] | 99.59 | 25 | NVIDIA GeForce GTX 1050 Ti GPU, Intel i5 CPU |
| Ours | Proposed Method | 99.75 | 5.4 | Intel(R) Core(TM) i5-6500 CPU @3.20 GHz |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cao, J.; Song, C.; Peng, S.; Xiao, F.; Song, S. Improved Traffic Sign Detection and Recognition Algorithm for Intelligent Vehicles. Sensors 2019, 19, 4021. https://doi.org/10.3390/s19184021
