Automatic Regularization of TomoSAR Point Clouds for Buildings Using Neural Networks

Abstract

1. Introduction

2. Methods

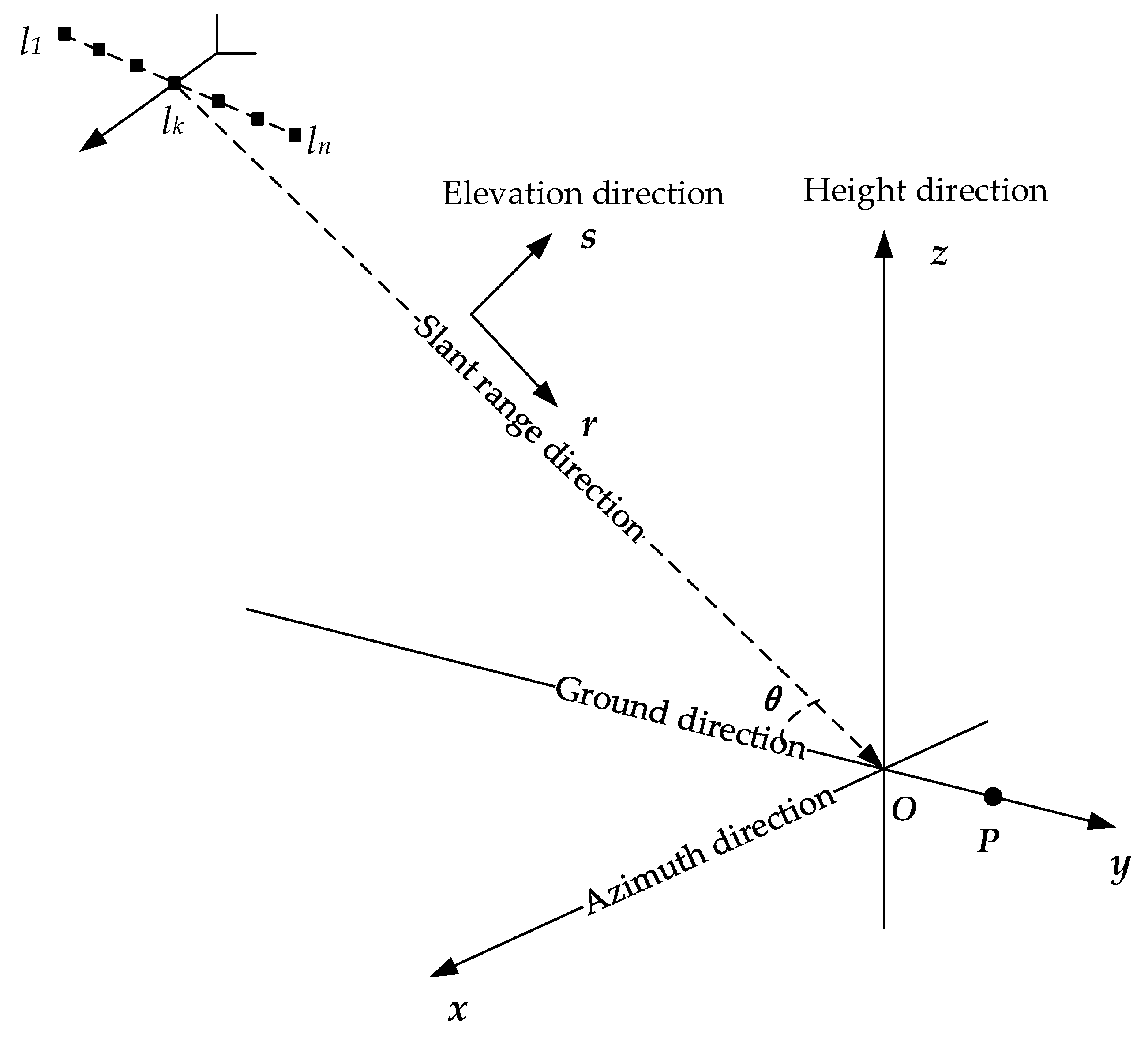

2.1. SAR Tomography

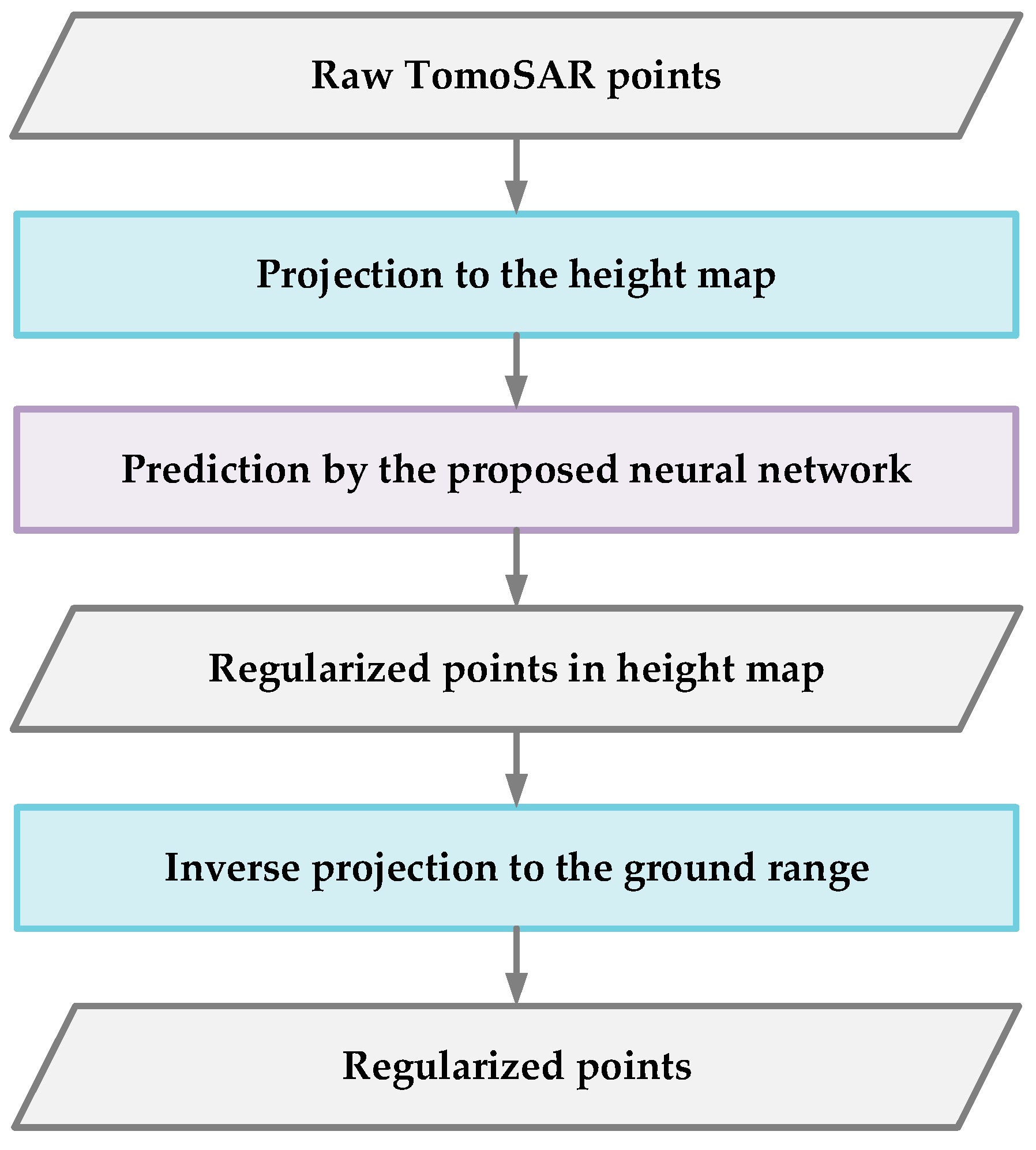

2.2. Processing Procedure

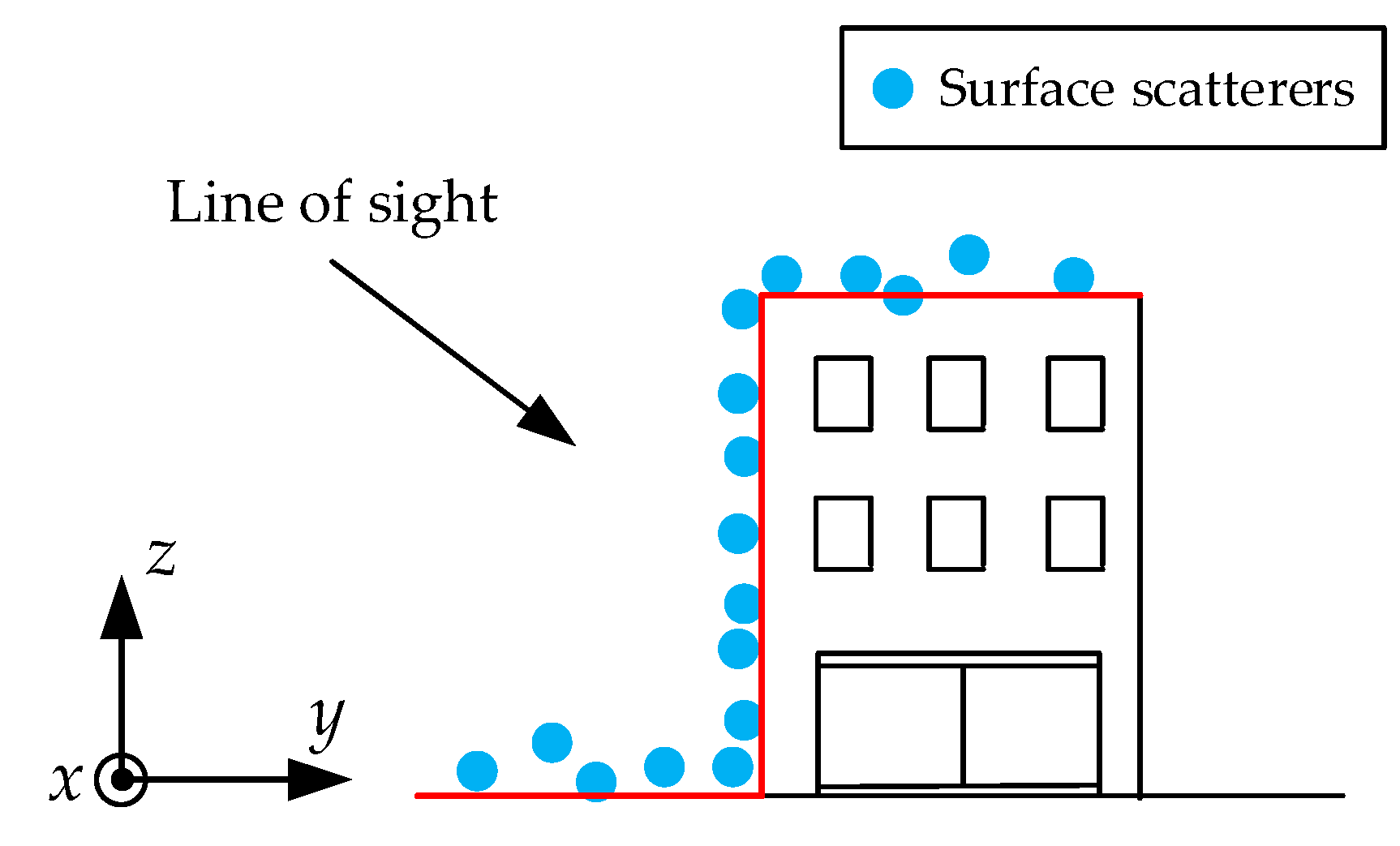

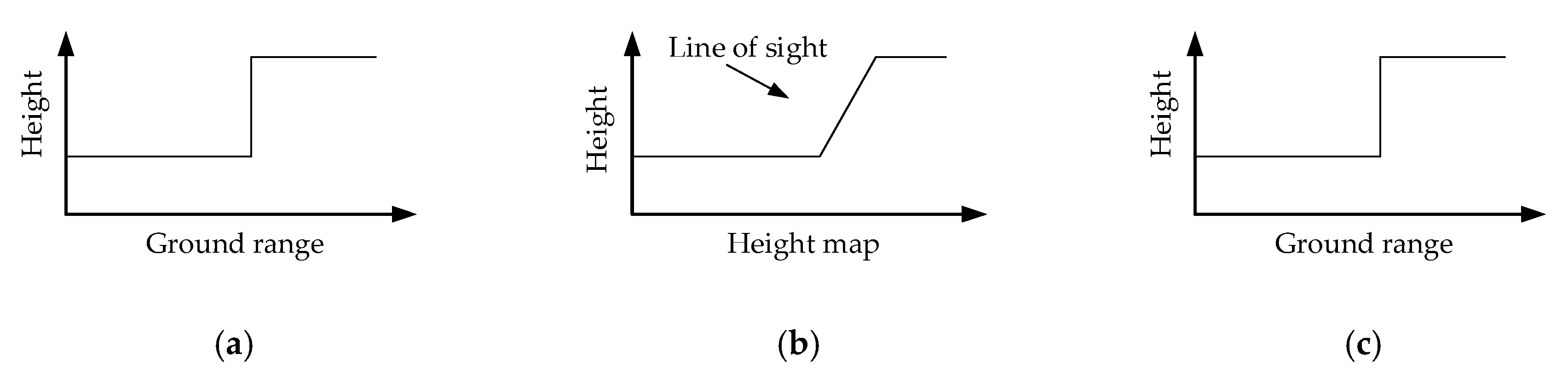

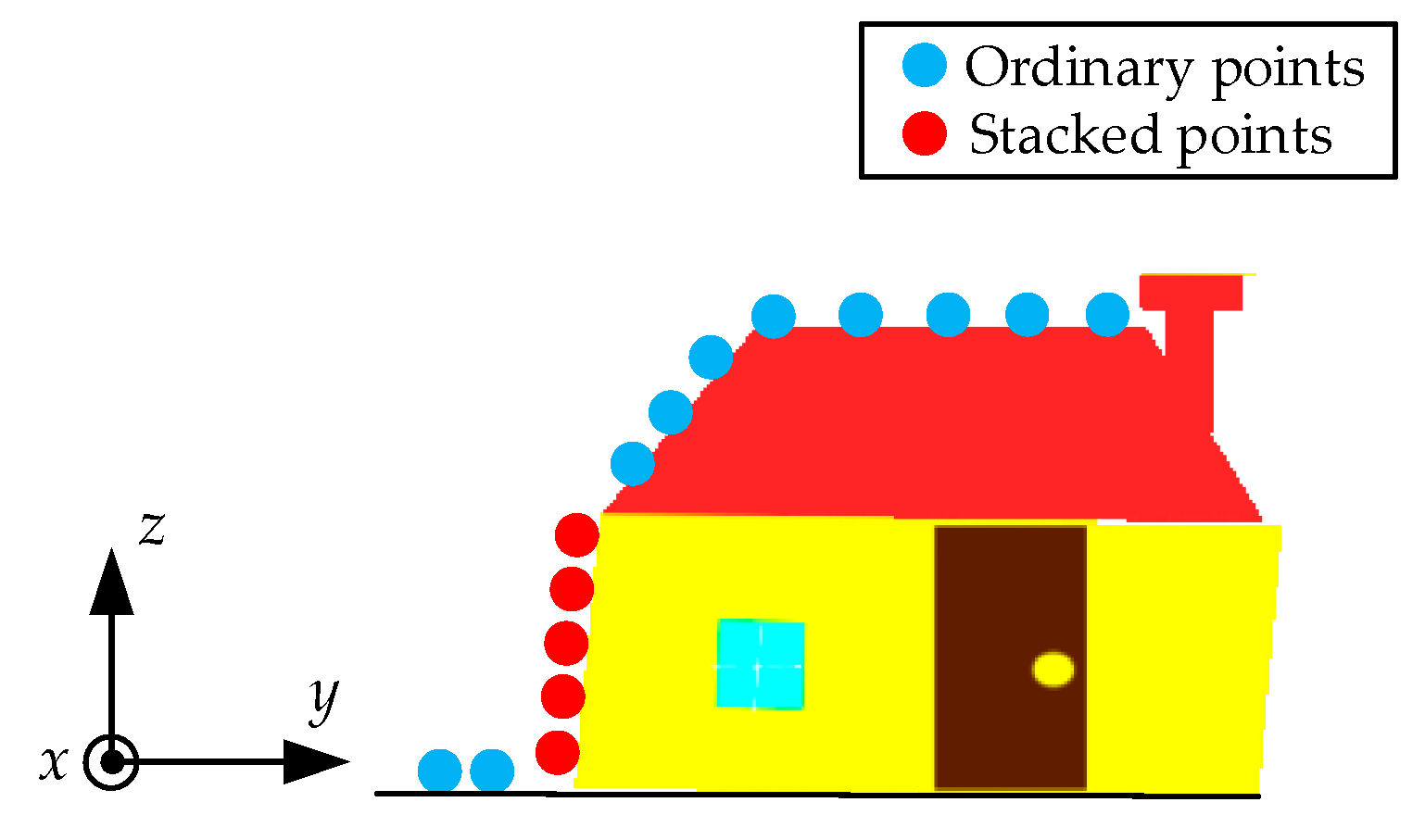

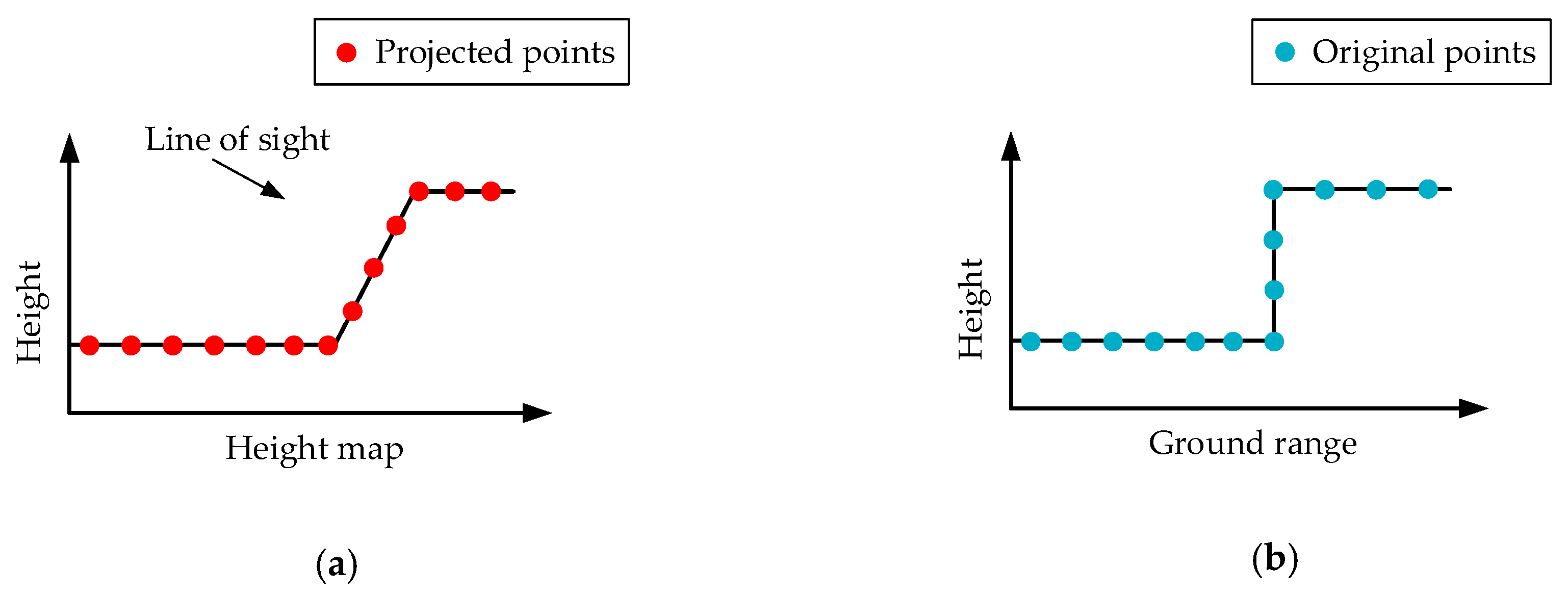

2.3. Projection

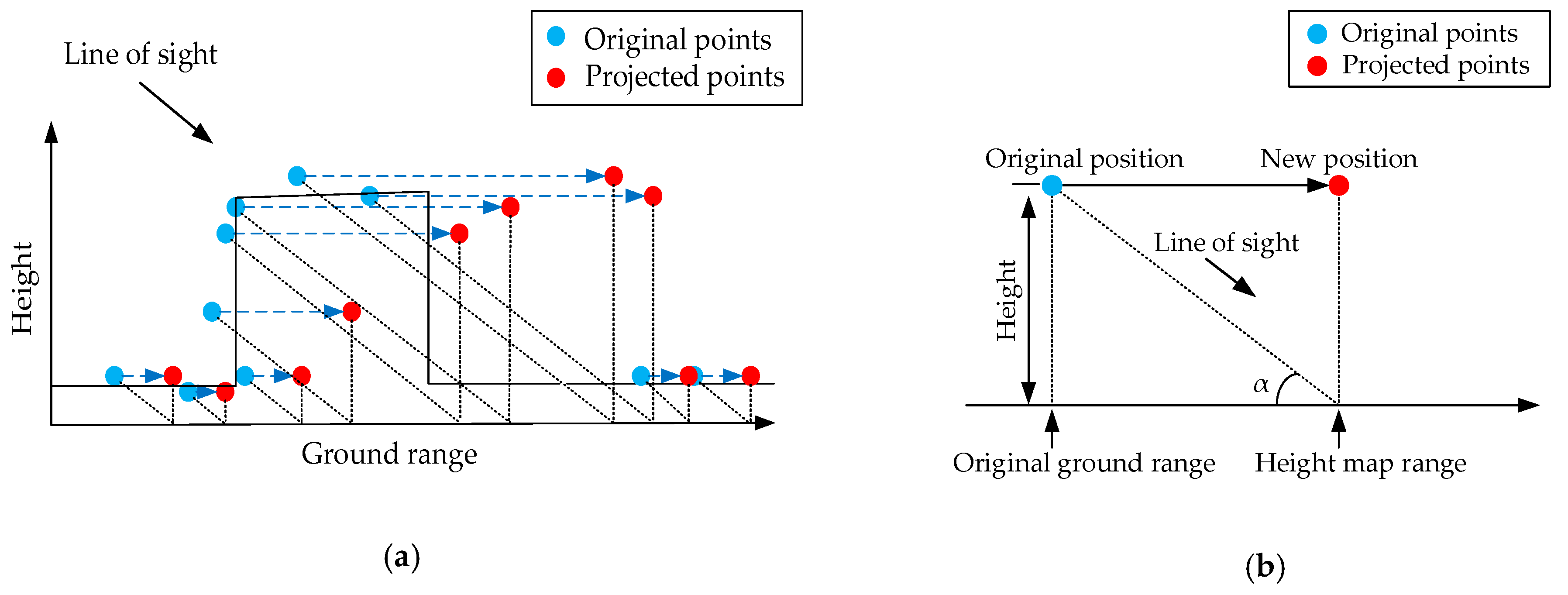

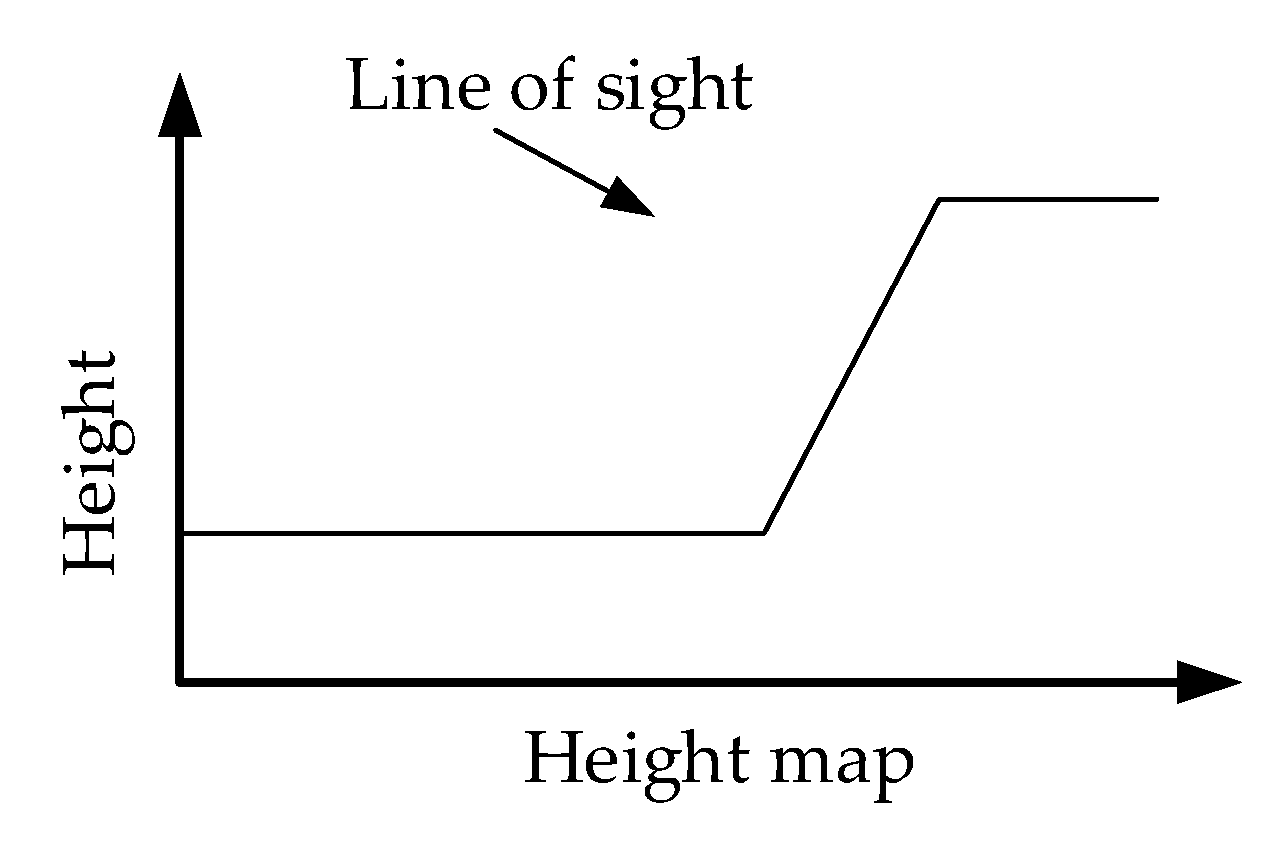

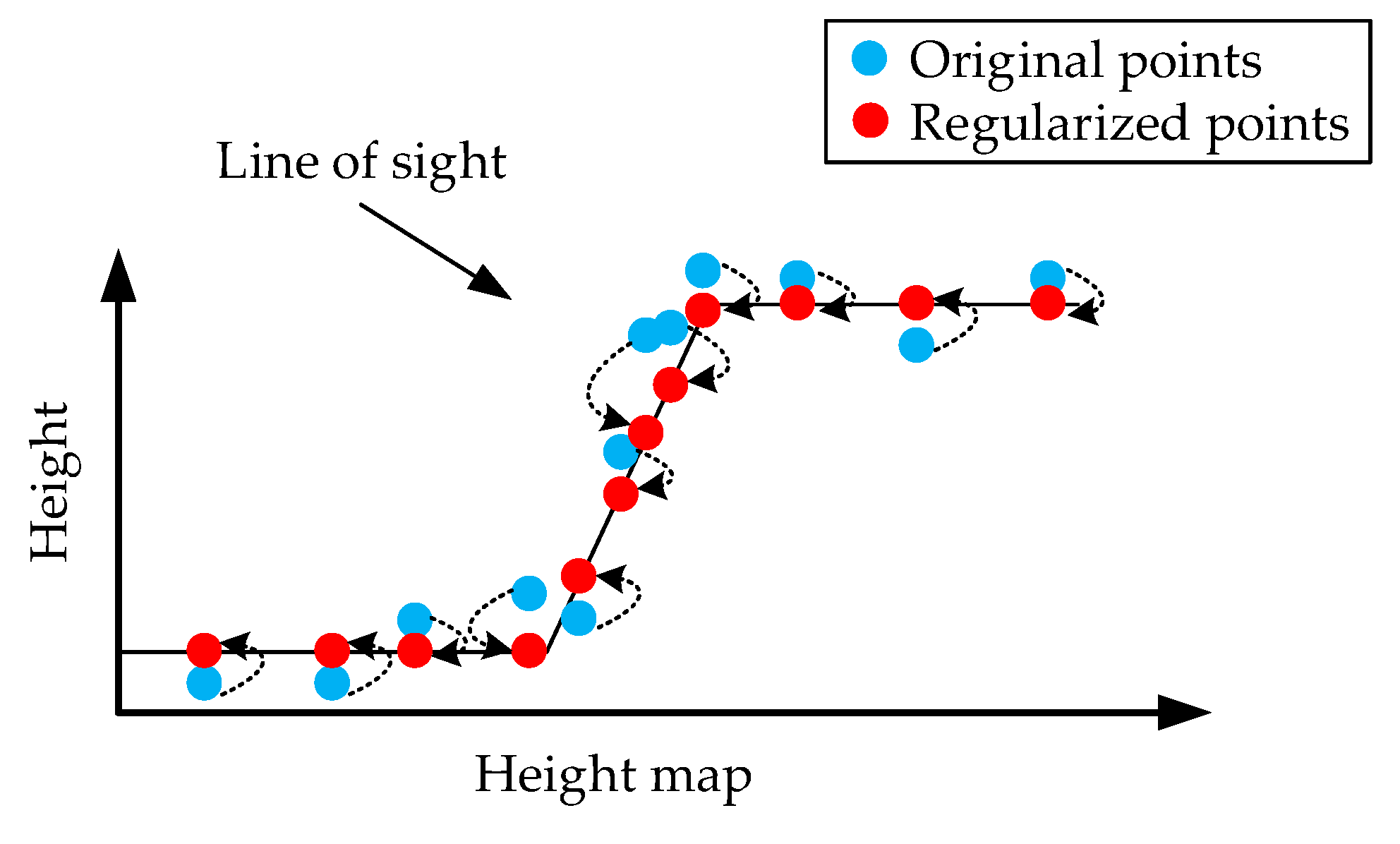

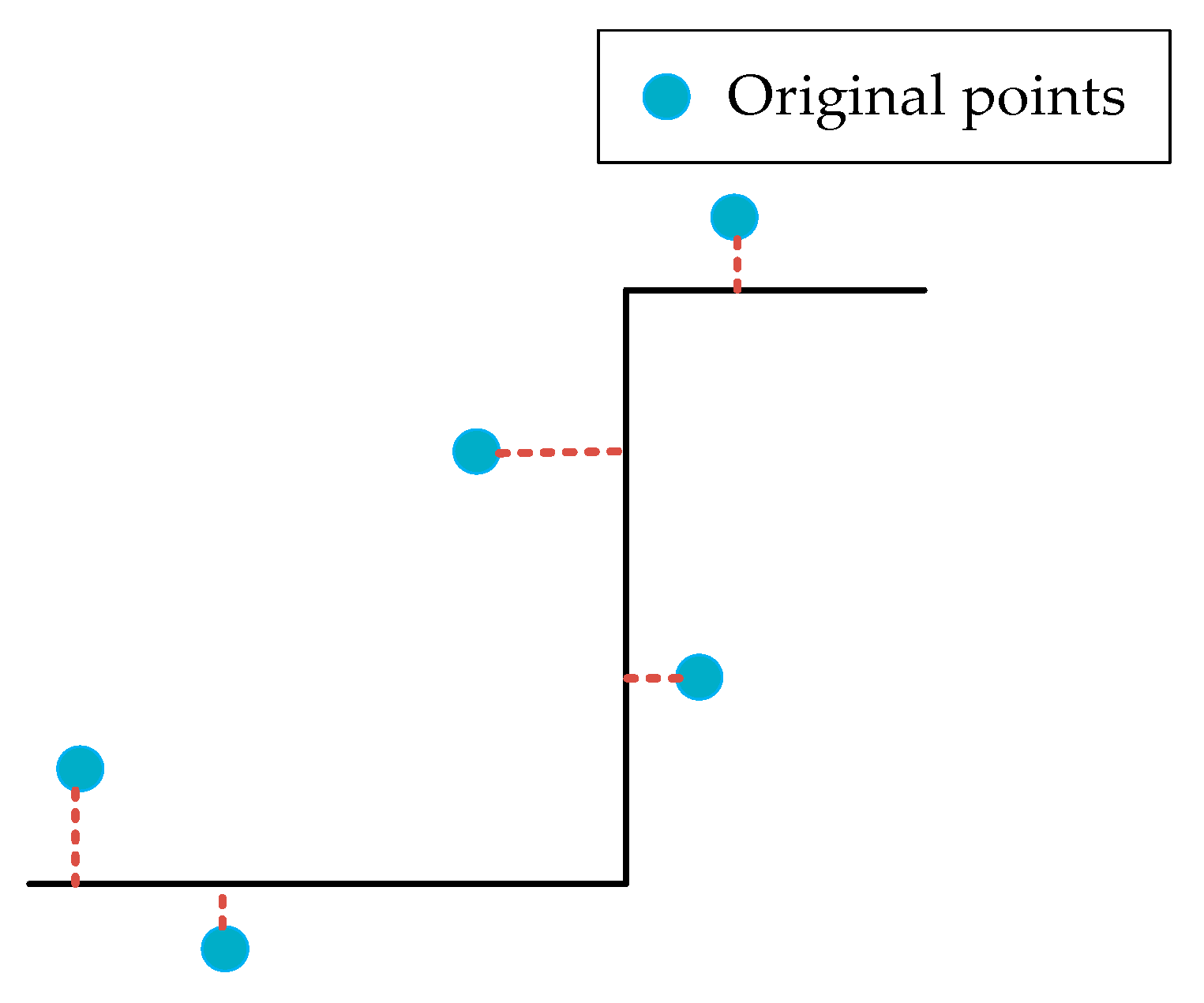

- First, the height map ranges of the scatterers are computed from the triangle relationship between the look-down angle and the original scatterer heights (see Figure 6b), as given by Equation (1). The inputs are the original ground range of the scatterer, the original height of the scatterer, and the look-down angle; the output is the projected height map range of the scatterer. The azimuth is unchanged in this projection.

- Then, keeping the heights unchanged, the scatterers are converted to the height map with the calculated height map ranges. Thus, the height map coordinate of every scatterer becomes (azimuth, height map range, height).

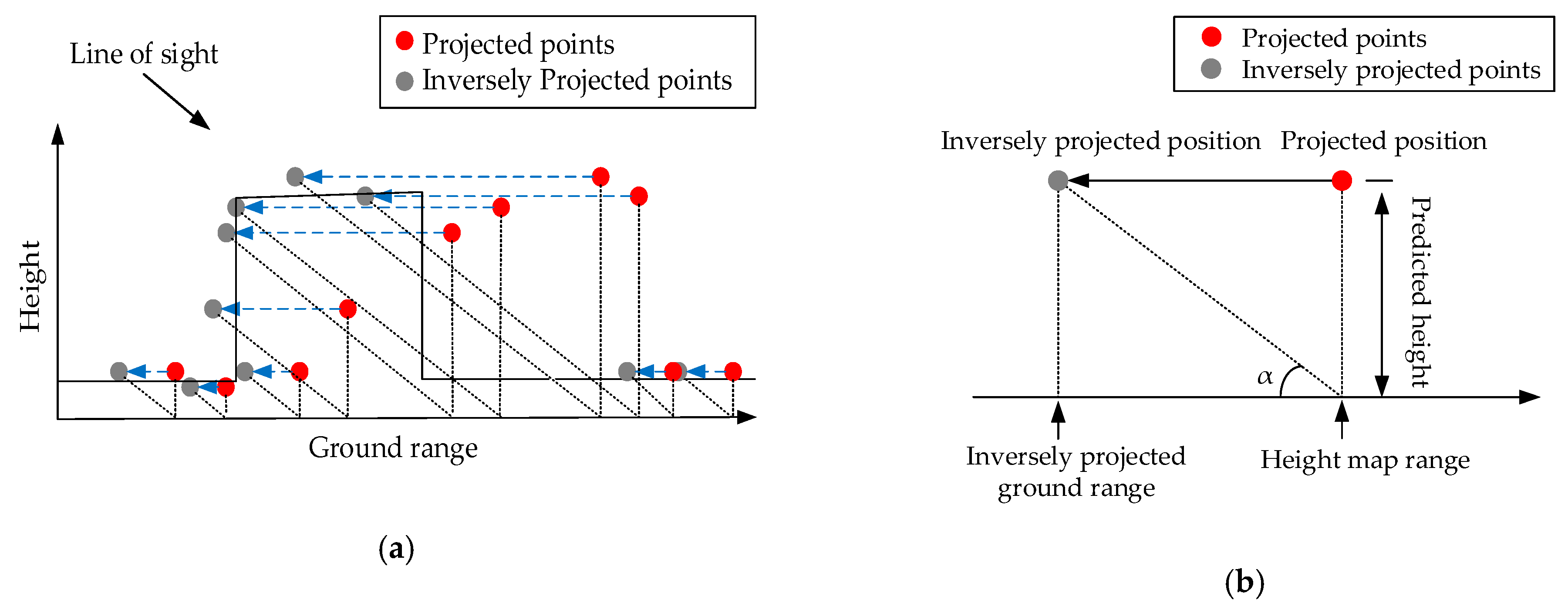

- First, the new ground ranges of the scatterers are computed from the triangle relationship between the look-down angle and the predicted heights, as given by Equation (2). The inputs are the projected height map range of the scatterer, the predicted height of the scatterer, and the look-down angle; the output is the inversely projected ground range. The azimuth is still unchanged in this projection.

- Then, keeping the predicted heights unchanged, the scatterers are converted back from the height map with the calculated ground ranges. Thus, the coordinate of every scatterer becomes (azimuth, inversely projected ground range, predicted height).
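The forward and inverse projections can be illustrated with a small sketch. It assumes that the look-down angle is measured from the vertical and that a scatterer is shifted toward the sensor by height / tan(angle); this sign and angle convention is an assumption for illustration and may differ from Equations (1) and (2). The function names are hypothetical.

```python
import numpy as np

def to_height_map_range(ground_range, height, look_down_angle):
    """Forward projection (assumed form): shift each scatterer by height / tan(angle)."""
    return ground_range - height / np.tan(look_down_angle)

def to_ground_range(height_map_range, predicted_height, look_down_angle):
    """Inverse projection (assumed form): undo the shift using the predicted height."""
    return height_map_range + predicted_height / np.tan(look_down_angle)

# A vertical facade: five scatterers at the same ground range, different heights.
theta = np.deg2rad(45.0)                 # illustrative look-down angle
ground_range = np.full(5, 10.0)
height = np.linspace(0.0, 8.0, 5)        # 0, 2, 4, 6, 8

# In the height map, the facade becomes a slanted line, so the height is a
# single-valued function of the height map range.
hm_range = to_height_map_range(ground_range, height, theta)

# Projecting back with the same heights recovers the original ground ranges.
recovered = to_ground_range(hm_range, height, theta)
```

Under these assumptions, the vertical facade occupies a distinct height map range for every height, which is what allows a regression from (azimuth, range) to height; the azimuth is never touched by either function.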

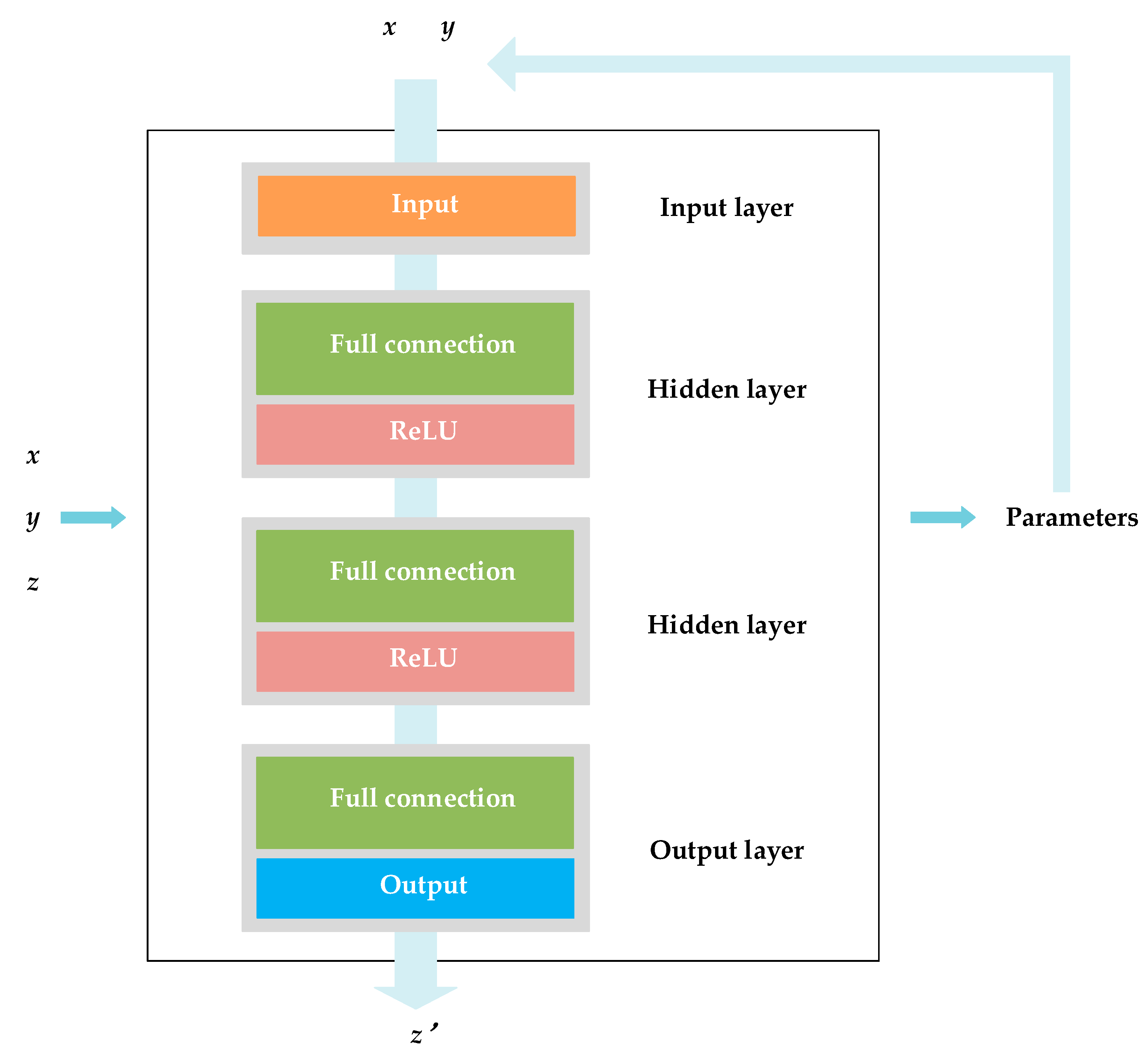

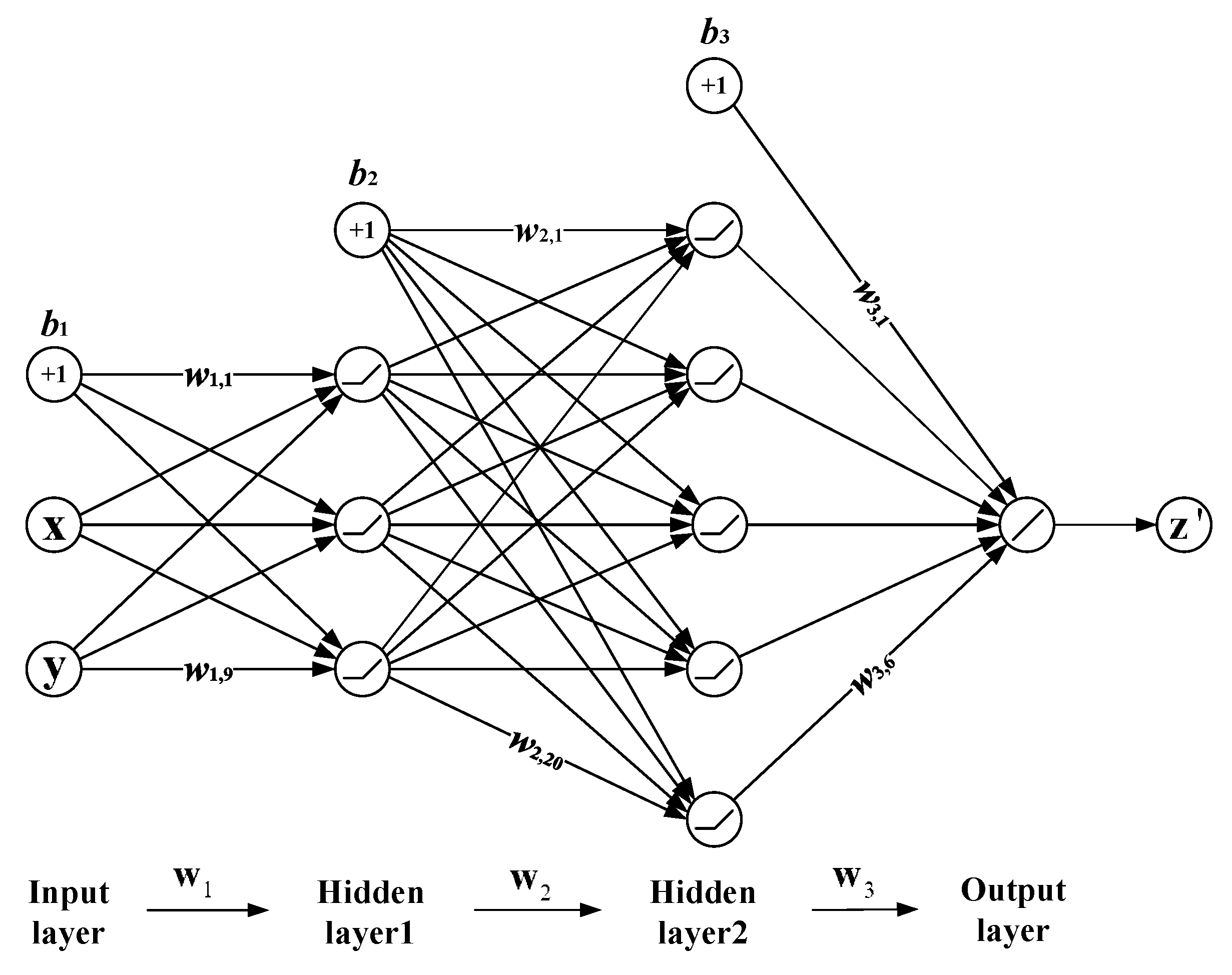

2.4. Neural Network Architecture

In each neuron, the inputs are weighted and combined by a summation, a bias is added, and the ReLU function is applied. Through training, the weights and biases were obtained, so the network model was determined. The trained net can represent the distorted z-type building structure. Then, via the trained neural network, the predicted point heights z′ are acquired by regression: with the azimuth-ranges (x, y) as the input, the network gives the predicted heights of the corresponding scatterers using the computed net parameters.
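As a minimal sketch of how ReLU neurons with weights and biases can represent a z-type profile, the following forward pass maps azimuth-ranges (x, y) to heights z′. The two hidden neurons and all parameter values are hand-picked for illustration; they are not the trained parameters or the layer sizes of the network described here.

```python
import numpy as np

def relu(a):
    return np.maximum(a, 0.0)

def predict_heights(xy, W1, b1, W2, b2):
    """Forward pass: z' = ReLU(xy @ W1 + b1) @ W2 + b2."""
    hidden = relu(xy @ W1 + b1)   # weighted summation, bias, then ReLU
    return hidden @ W2 + b2       # linear output neuron for regression

# Hypothetical parameters: both hidden neurons depend only on the range y.
W1 = np.array([[0.0, 0.0],    # weights on azimuth x
               [1.0, 1.0]])   # weights on range y
b1 = np.array([-1.0, -2.0])
W2 = np.array([10.0, -10.0])
b2 = 0.0

# Three scatterers (x, y): one on the ground, one on the slanted facade, one on the roof.
xy = np.array([[0.0, 0.5],
               [0.0, 1.5],
               [5.0, 2.5]])
z_pred = predict_heights(xy, W1, b1, W2, b2)   # heights 0, 5 and 10
```

With these values the output is flat at 0 for y below 1, rises linearly between y = 1 and y = 2, and is flat at 10 beyond, which is a piecewise-linear ground, facade, and roof profile. A trained network would obtain such weights and biases from the data instead of by hand.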

3. Results

3.1. Simulation Data

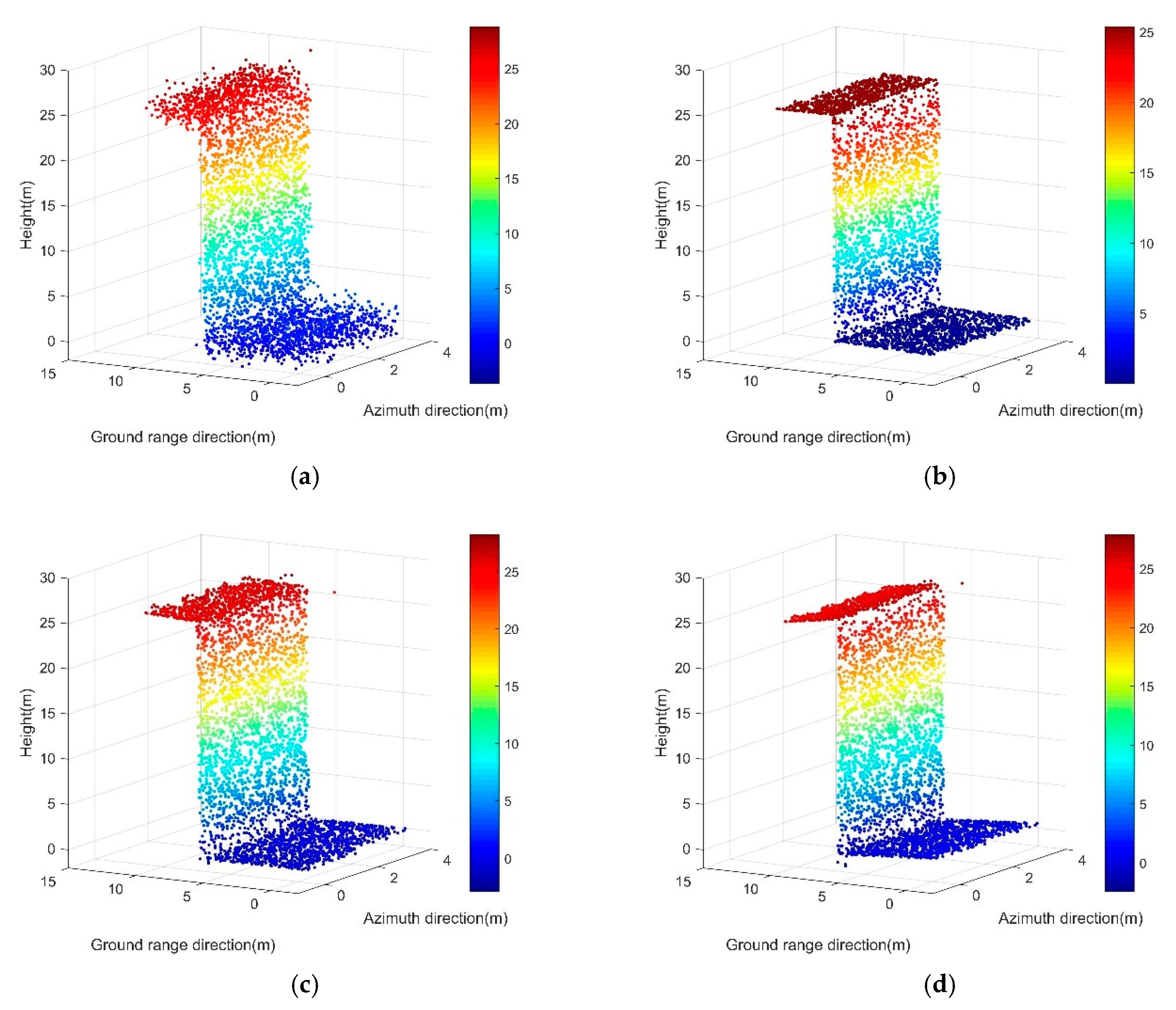

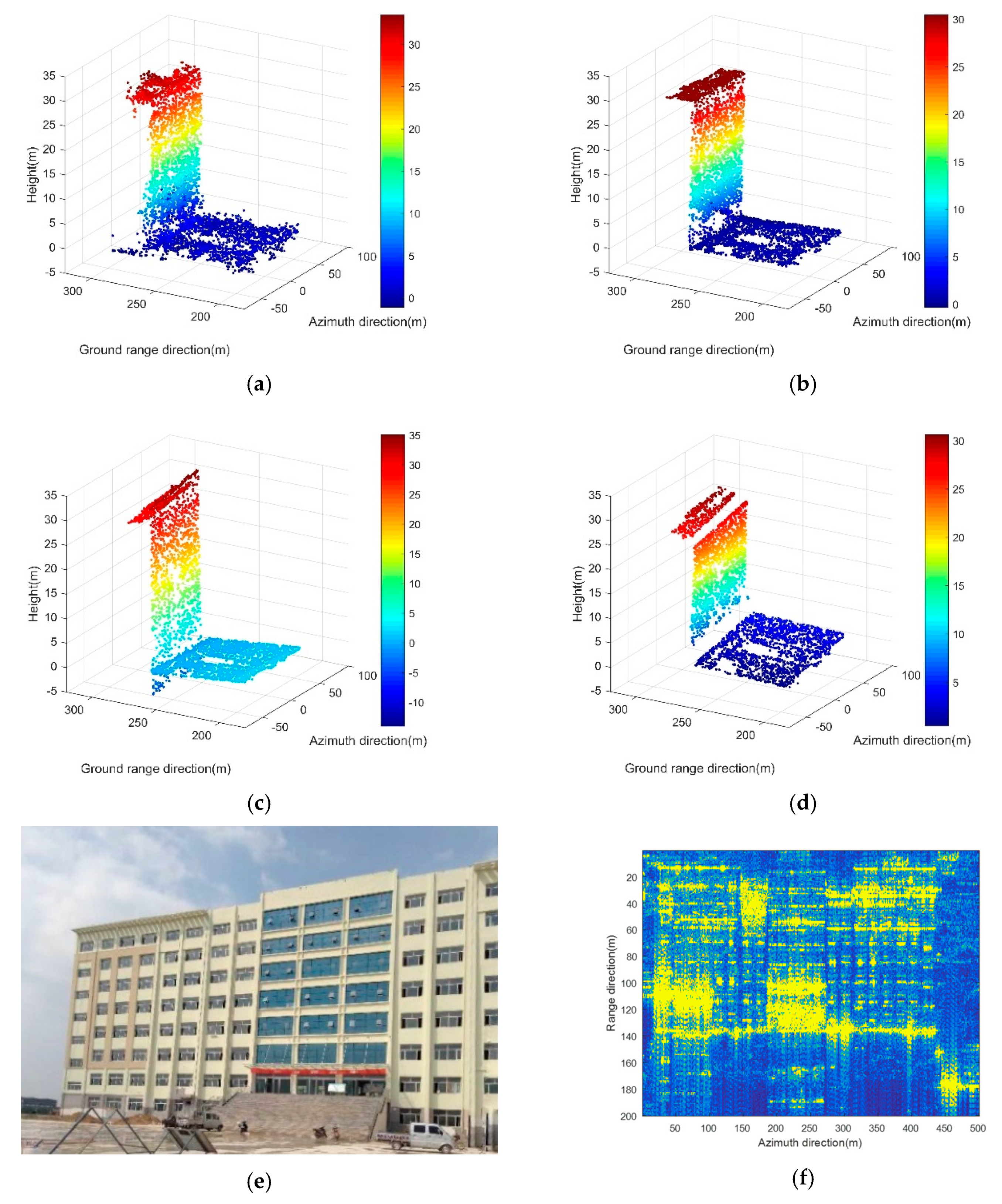

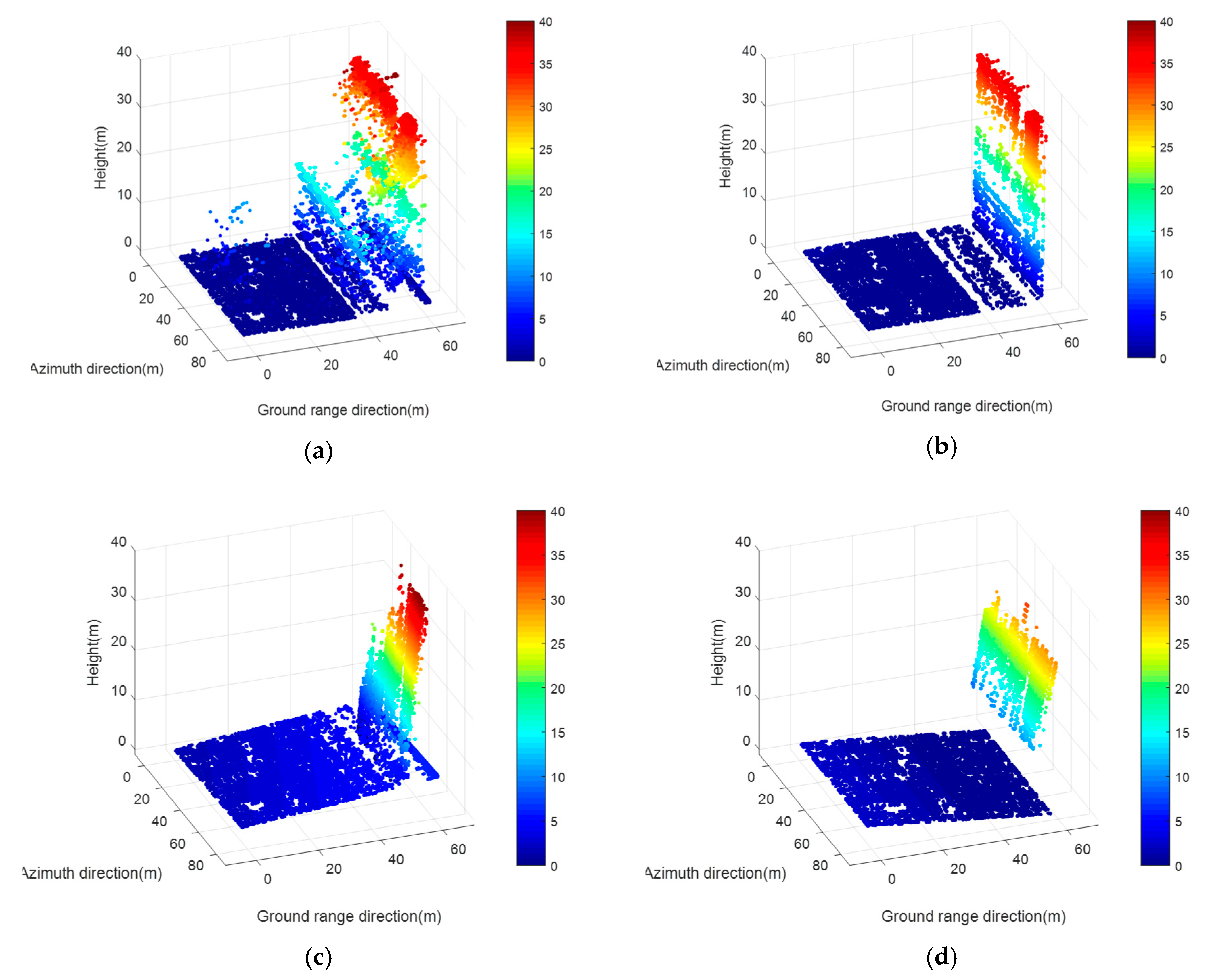

3.1.1. Single Building Facade

3.1.2. Single Building Corner

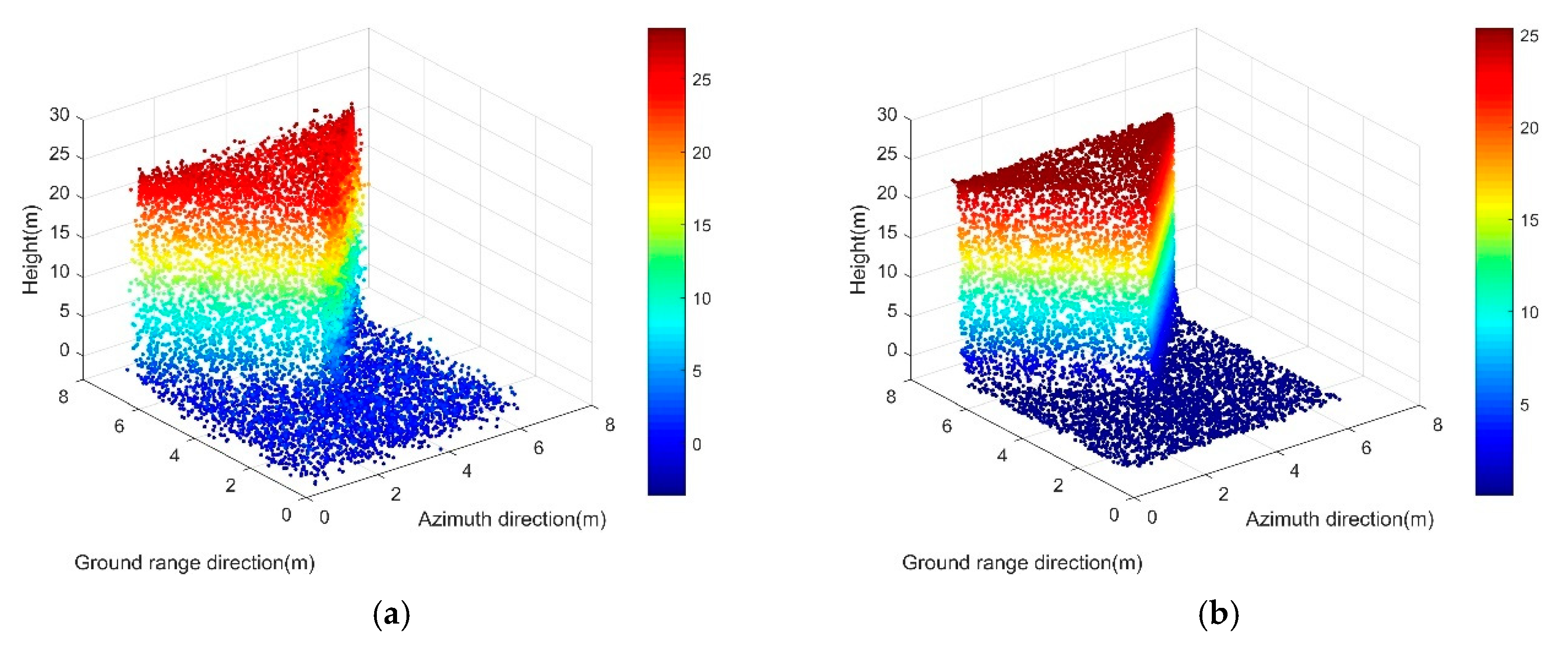

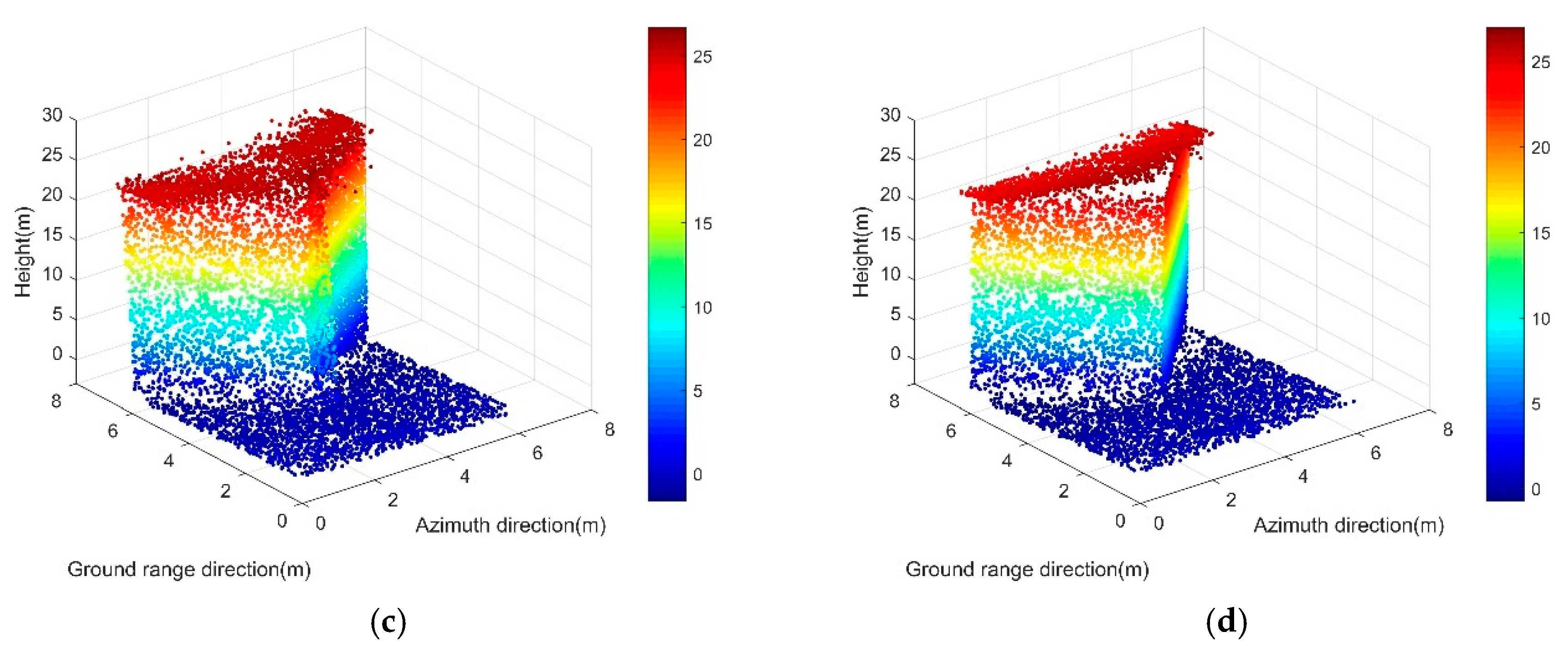

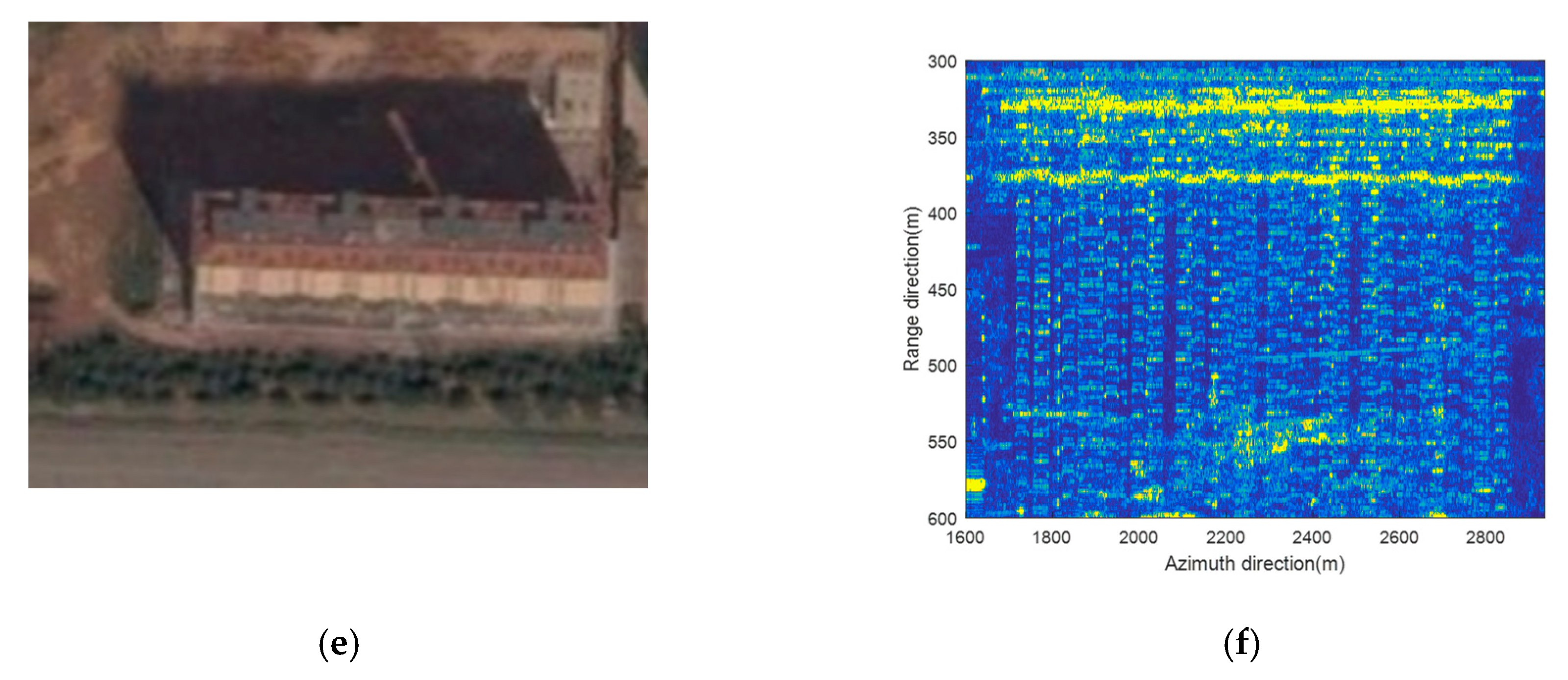

3.2. Experimental TomoSAR Data

- (1) The planes in the RANSAC and TomoSeed results are dislocated, with large gaps between the roof, facade, and ground. In contrast, our method produced smoother and more continuous corners.

- (2) In the TomoSeed results, the three planes (the roof, facade, and ground) were slanted. In the RANSAC results, the ground rose and the facade was mistakenly lengthened. In contrast, our method preserved the building structure more precisely.

4. Discussion

- (1) The most significant advantage of our method is its high level of automation. The proposed neural network represents the relationship between the azimuth-ground ranges and the heights of the scatterers, which raises the automation level of 3-D building structure extraction. In comparison, both RANSAC and TomoSeed must first segment the building data and then fit planes part by part; they cannot process all the data at once. Furthermore, our method does not rely on complex parameter adjustment or prior knowledge of the target buildings. Once the network structure is determined, the parameters are computed automatically.

- (2) The high regularization precision of our method is also noteworthy. Because the method performs a 3-D surface regression, it approximately extracts the global features of the building structures, which makes it robust against local noise. In contrast, both RANSAC and TomoSeed have to carry out plane fitting for every separated part, and this mechanism loses the global features. Their results therefore tend to be influenced by local noise, so the planes tend to be dislocated, slanted, or incorrectly sized.

- (3) Last but not least, our method is faster than RANSAC and TomoSeed. With the Adam optimization algorithm, our method converged rapidly, so the prediction loss was minimized quickly and the 3-D regularization of urban buildings was achieved within a short time. By contrast, the plane fitting procedure in RANSAC and TomoSeed is not optimized, so it is very time-consuming. Moreover, as our method does not need to separate the data, it saves the time otherwise spent on data segmentation and manual parameter adjustment.
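To make the role of Adam concrete, the sketch below trains a one-hidden-layer ReLU network on synthetic height map data with a hand-written Adam update. The network size, learning rate, step count, and the synthetic ramp-shaped data are all assumptions for illustration, not the settings or data used in the experiments; `train_with_adam` is a hypothetical helper name.

```python
import numpy as np

def train_with_adam(xy, z, hidden=16, steps=1000, lr=0.01, seed=0):
    """Fit z ~ net(xy) with a one-hidden-layer ReLU network trained by Adam."""
    rng = np.random.default_rng(seed)
    params = {
        "W1": rng.normal(0.0, 0.5, (xy.shape[1], hidden)),
        "b1": np.zeros(hidden),
        "W2": rng.normal(0.0, 0.5, (hidden, 1)),
        "b2": np.zeros(1),
    }
    # Running estimates of the first and second gradient moments.
    first = {k: np.zeros_like(p) for k, p in params.items()}
    second = {k: np.zeros_like(p) for k, p in params.items()}
    beta1, beta2, eps = 0.9, 0.999, 1e-8
    losses = []
    for t in range(1, steps + 1):
        # Forward pass and mean squared error.
        pre = xy @ params["W1"] + params["b1"]
        hid = np.maximum(pre, 0.0)
        err = hid @ params["W2"] + params["b2"] - z
        losses.append(float(np.mean(err ** 2)))
        # Backward pass.
        d_out = 2.0 * err / len(z)
        d_pre = (d_out @ params["W2"].T) * (pre > 0)
        grads = {"W1": xy.T @ d_pre, "b1": d_pre.sum(axis=0),
                 "W2": hid.T @ d_out, "b2": d_out.sum(axis=0)}
        # Adam update with bias-corrected moment estimates.
        for k in params:
            first[k] = beta1 * first[k] + (1 - beta1) * grads[k]
            second[k] = beta2 * second[k] + (1 - beta2) * grads[k] ** 2
            m_hat = first[k] / (1 - beta1 ** t)
            v_hat = second[k] / (1 - beta2 ** t)
            params[k] -= lr * m_hat / (np.sqrt(v_hat) + eps)
    return params, losses

# Synthetic scatterers: azimuth in [0, 1], height map range in [0, 3];
# the height is a noisy ground-facade-roof ramp along the range.
data_rng = np.random.default_rng(1)
xy = np.column_stack([data_rng.uniform(0, 1, 200), data_rng.uniform(0, 3, 200)])
z = np.clip(xy[:, 1:2] - 1.0, 0.0, 1.0) + data_rng.normal(0.0, 0.05, (200, 1))

params, losses = train_with_adam(xy, z)
```

The whole point cloud is fitted in one pass of the training loop, without any prior segmentation into planes, and the recorded `losses` show how quickly the prediction loss falls for a given configuration.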

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Method | Precision | Time |
|---|---|---|
| RANSAC | 0.2615 m | 40.2 s |
| TomoSeed | 0.1961 m | 9 min 38 s |
| Proposed | 0.1784 m | 8.77 s |

| Method | Precision | Time |
|---|---|---|
| RANSAC | 1.7384 m | 50.94 s |
| TomoSeed | 1.6420 m | 6 min 43 s |
| Proposed | 0.1896 m | 24.49 s |

| Method | Time |
|---|---|
| RANSAC | 48.83 s |
| TomoSeed | 1 min 18 s |
| Proposed | 14.47 s |

| Method | Time |
|---|---|
| RANSAC | 33.78 s |
| TomoSeed | 18 min 35 s |
| Proposed | 13.94 s |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhou, S.; Li, Y.; Zhang, F.; Chen, L.; Bu, X. Automatic Regularization of TomoSAR Point Clouds for Buildings Using Neural Networks. Sensors 2019, 19, 3748. https://doi.org/10.3390/s19173748