Multi-Biometric System Based on Cutting-Edge Equipment for Experimental Contactless Verification

Abstract

1. Introduction

Related Literature

- EER (Equal Error Rate)

- FAR (False Acceptance Rate)

- FRR (False Rejection Rate)

- FIR (Far Infrared)

- NIR (Near Infrared)

- ROI (Region of Interest: a point or region of interest in the image)
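As a minimal sketch of how the three error rates above relate, the following Python computes FAR and FRR at a decision threshold and approximates the EER by sweeping thresholds. The score convention (a distance, where a lower value means a better match), the function names, and the sample scores are assumptions made for illustration; they are not taken from the system described in this paper.

```python
from typing import Sequence, Tuple


def far_frr(genuine: Sequence[float], impostor: Sequence[float],
            threshold: float) -> Tuple[float, float]:
    """Return (FAR, FRR) for distance scores; accept when score <= threshold."""
    # FAR: fraction of impostor attempts that are wrongly accepted.
    far = sum(s <= threshold for s in impostor) / len(impostor)
    # FRR: fraction of genuine attempts that are wrongly rejected.
    frr = sum(s > threshold for s in genuine) / len(genuine)
    return far, frr


def approximate_eer(genuine: Sequence[float],
                    impostor: Sequence[float]) -> Tuple[float, float]:
    """Try every observed score as a threshold and return (eer, threshold)
    at the point where FAR and FRR are closest to each other."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, t)
    return best[1], best[2]


# Hypothetical distance scores, for illustration only.
genuine_scores = [0.10, 0.15, 0.20, 0.30, 0.55]
impostor_scores = [0.40, 0.60, 0.70, 0.80, 0.90]

eer, eer_threshold = approximate_eer(genuine_scores, impostor_scores)
print(f"EER ~ {eer:.2f} at threshold {eer_threshold:.2f}")
```

Lowering the threshold reduces FAR at the cost of a higher FRR, and raising it does the opposite; the EER is the operating point at which the two rates coincide.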

2. Problem Definition

- Contact with pins: This method fixes the hand during scanning by means of a set of pins that define the position of the individual fingers. The hand is laid on a flat surface that contrasts with the surroundings. The fixed position makes evaluation rather simple, but the method is less comfortable for the user and can introduce unwanted deformations. In the past, contact with pins was used mainly in research identification systems; today, it also finds commercial application. In the study in Reference [35], the authors used five pins to fix the position of the hand before scanning it from the top and from the side. Sixteen geometric characteristics were used for the evaluation, which yielded an EER of 6%.

- Contact without pins: In this case, the hand is laid freely on a contrasting surface or scanner. Without pins, the hand can move freely and eventually settles into its natural shape, which eliminates unwanted deformations [36]. The researchers in [36] used a flatbed scanner with no pins fixed to it. They evaluated the size and geometry of the fingertips, reaching an FRR of 11.1% and a low FAR of 2.2%. These values differ significantly from those obtained with pins (FRR of 4% and FAR of 4.9%). Because of the importance attached to FAR in security applications, contact without pins is considered the most suitable method for them.

- Contactless scanning: This method requires neither pins nor a surface on which to lay the hand and is by far the most user-friendly of the three techniques. The hand is scanned with a standard 2D camera or a 3D digitizer. Reference [37] scanned the hand in front of a camera in open surroundings. The evaluation was anchored at the hand's center of gravity, around which concentric circles intersecting the fingers were constructed. The author measured the fingers along these circles, so that the result consisted of finger sizes measured at 124 points. The FRR and FAR were 54.3% and 8.6%, respectively; hand movement and the inclination of the hand relative to the camera plane caused problems. Movement toward and away from the camera often distorts the size of the hand in the captured image significantly. Instability of the surroundings is another problem of contactless scanning that affects the measurement.
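The concentric-circle measurement described for contactless scanning can be sketched as follows. This is an illustrative reconstruction under stated assumptions, not the procedure of Reference [37]: it assumes an already segmented binary hand mask, and the function names `centroid` and `arc_widths_on_circle`, the sampling density, and the synthetic test mask are all hypothetical.

```python
import numpy as np


def centroid(mask: np.ndarray) -> tuple:
    """Center of gravity (row, col) of the foreground pixels of a binary mask."""
    rows, cols = np.nonzero(mask)
    return rows.mean(), cols.mean()


def arc_widths_on_circle(mask, center, radius, samples=720):
    """Sample the mask along a circle around `center` and return the arc length
    (in pixels) of every contiguous foreground run the circle crosses."""
    angles = np.linspace(0.0, 2.0 * np.pi, samples, endpoint=False)
    r = np.rint(center[0] + radius * np.sin(angles)).astype(int)
    c = np.rint(center[1] + radius * np.cos(angles)).astype(int)
    # Samples that fall outside the image count as background.
    inside = (r >= 0) & (r < mask.shape[0]) & (c >= 0) & (c < mask.shape[1])
    hit = np.zeros(samples, dtype=bool)
    hit[inside] = mask[r[inside], c[inside]] > 0
    if hit.all() or not hit.any():
        return []
    # Rotate so the sequence starts on background; no run then wraps around.
    hit = np.roll(hit, -int(np.argmin(hit)))
    edges = np.diff(hit.astype(int))
    starts = np.nonzero(edges == 1)[0] + 1   # first sample of each run
    ends = np.nonzero(edges == -1)[0] + 1    # one past the last sample
    if len(ends) < len(starts):              # last run reaches the array end
        ends = np.append(ends, samples)
    arc_per_sample = 2.0 * np.pi * radius / samples
    return [float((e - s) * arc_per_sample) for s, e in zip(starts, ends)]


# Synthetic mask with two bar-shaped "fingers", 11 px and 7 px wide.
demo = np.zeros((201, 201), dtype=np.uint8)
demo[95:106, 100:201] = 1
demo[100:201, 97:104] = 1
widths = arc_widths_on_circle(demo, (100.0, 100.0), radius=50.0)
print([round(w, 1) for w in widths])
```

Repeating the measurement for several radii yields a vector of finger widths. Because the widths are in pixels, they scale with the distance between hand and camera, which is the distortion problem noted above.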

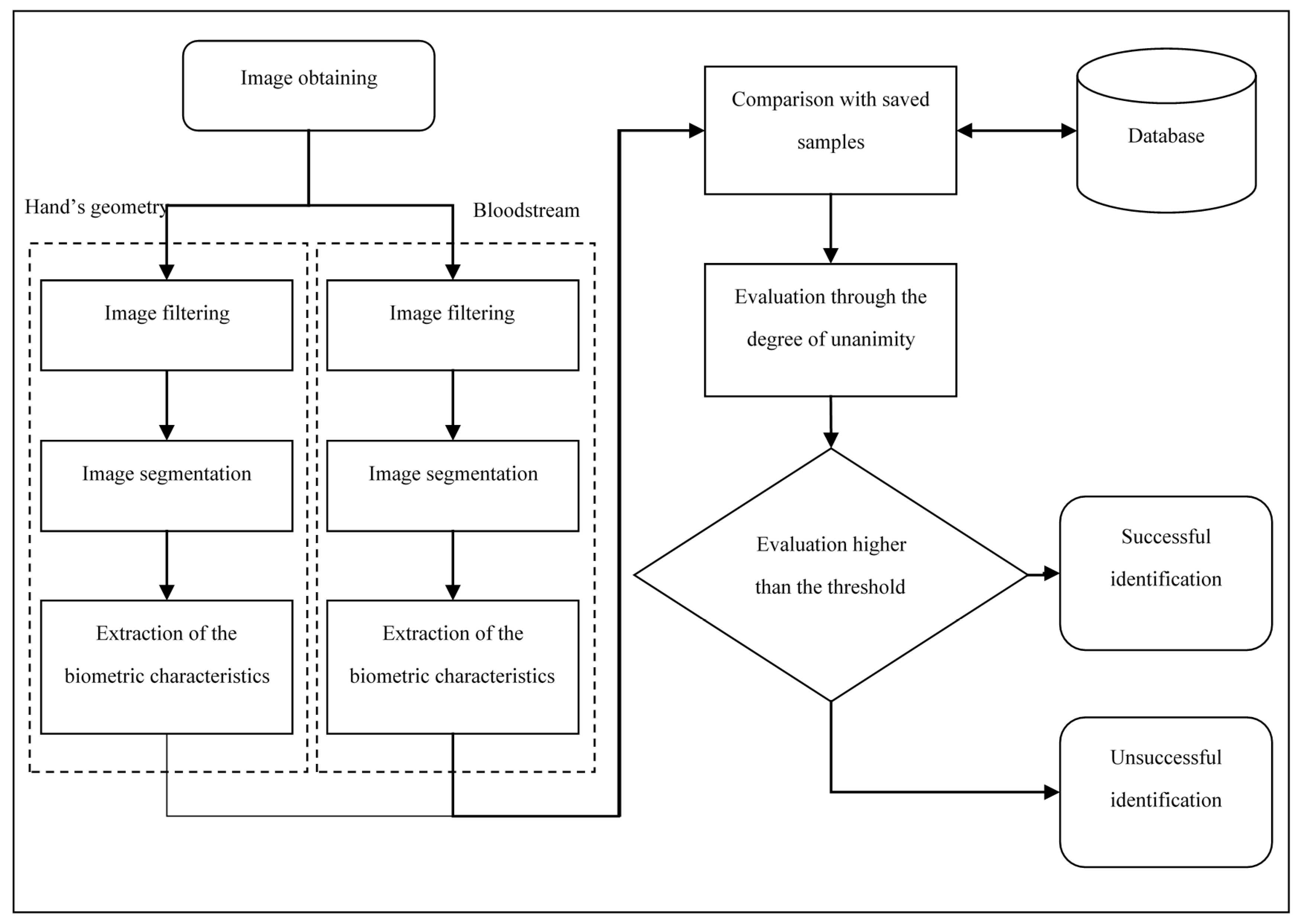

3. System Description

3.1. Hand Geometry

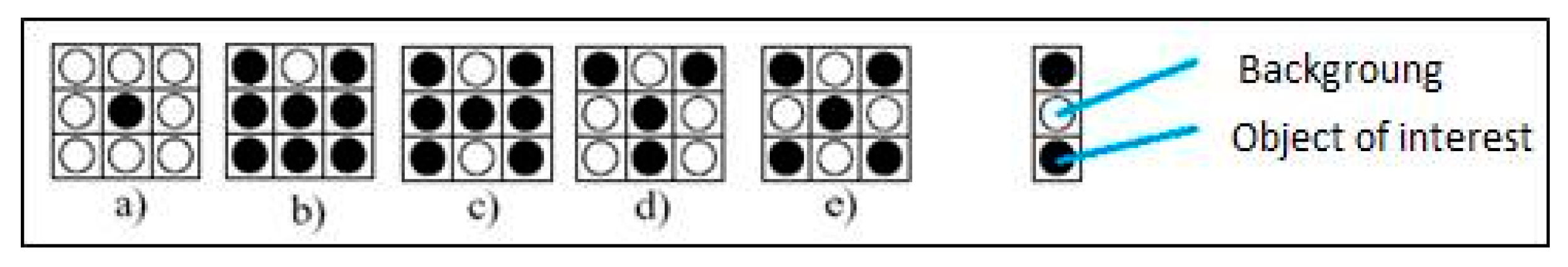

3.1.1. Image Pre-Processing

3.1.2. Image Segmentation

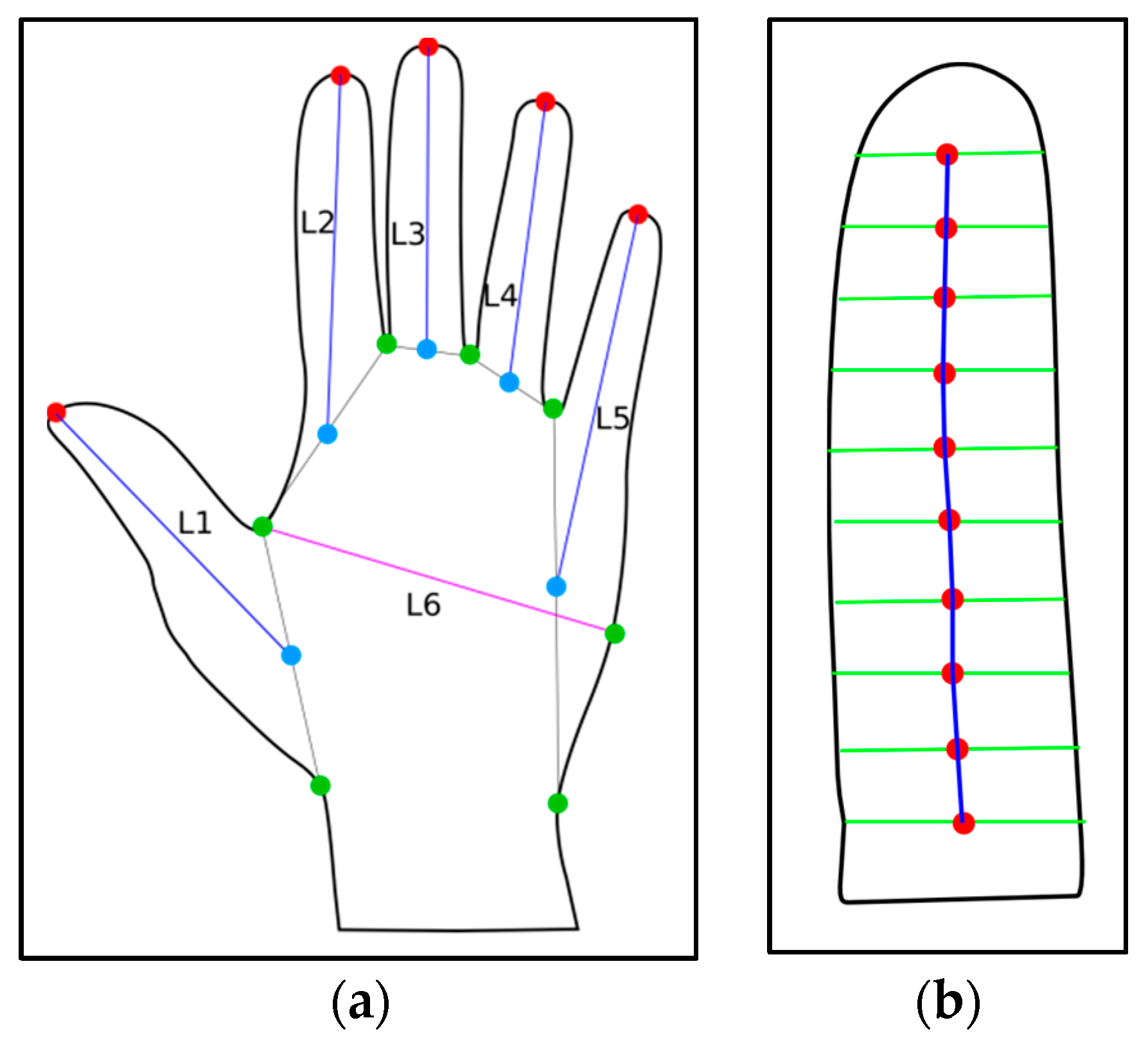

3.1.3. Definition of Biometric Characteristics

3.1.4. Extraction of Biometric Characteristics

3.2. Bloodstream

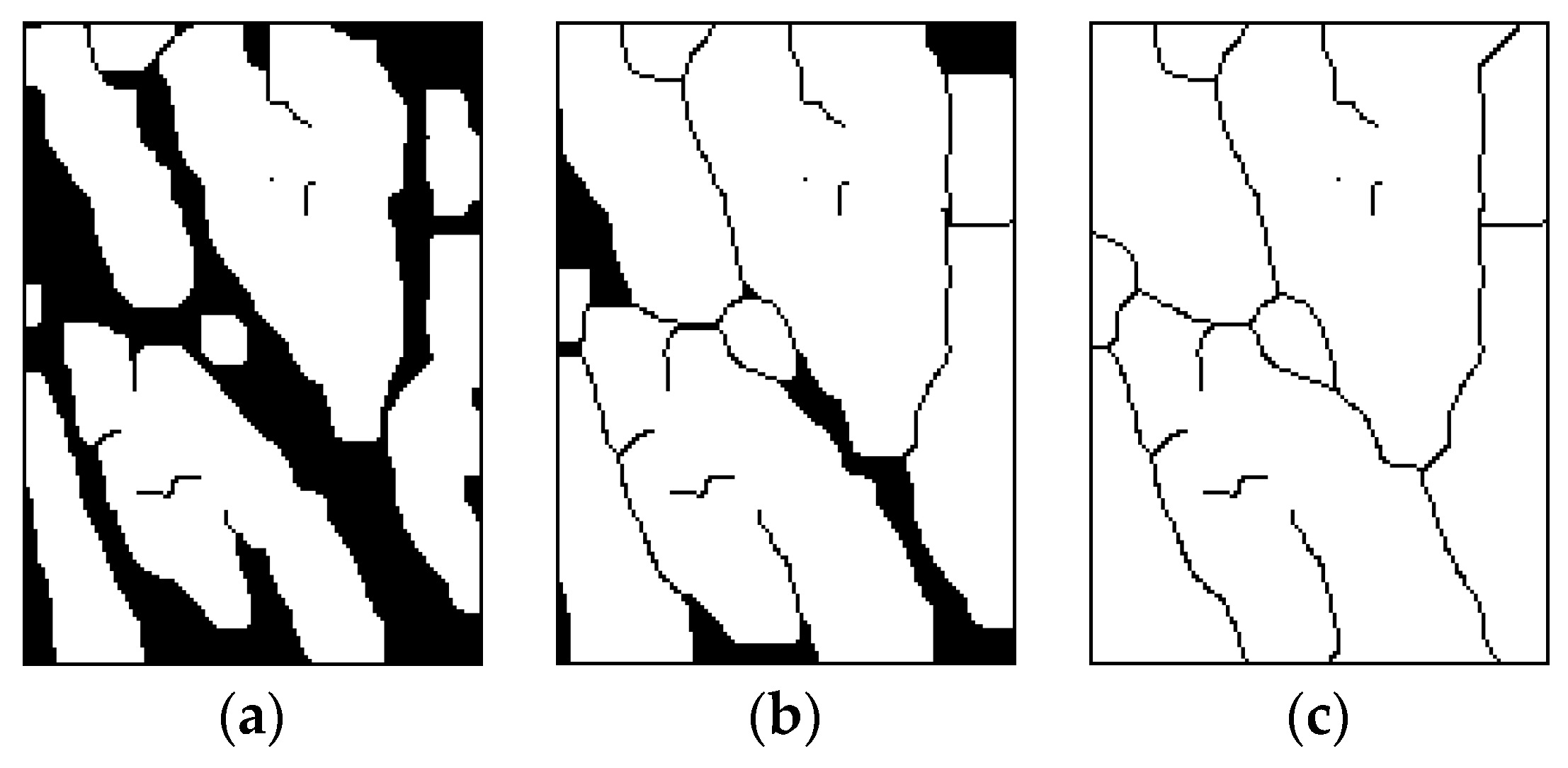

3.2.1. Image Pre-Processing

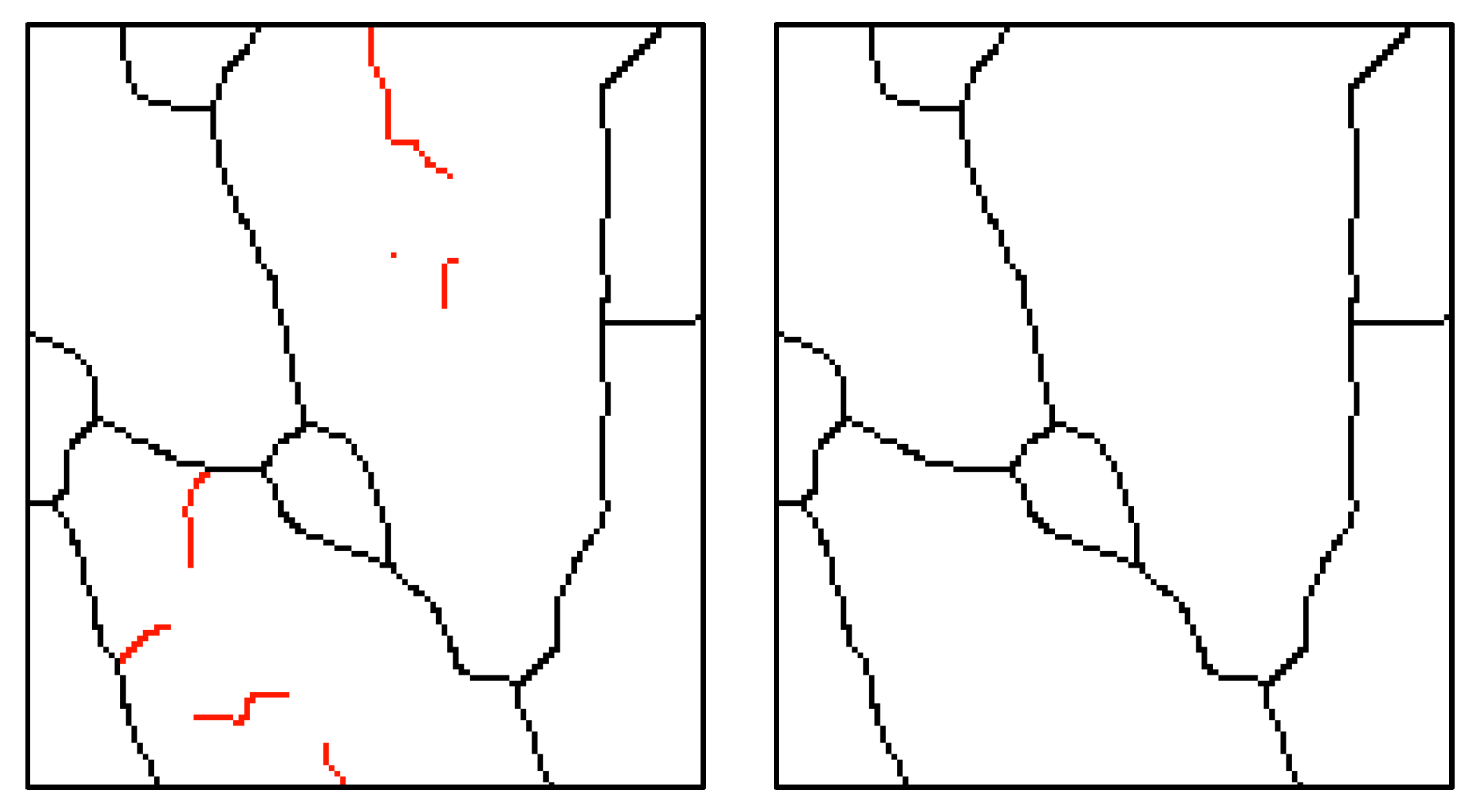

3.2.2. Image Segmentation

3.2.3. Definition of Biometric Characteristics

3.2.4. Extraction of Biometric Characteristics

3.3. Evaluation Using a Degree of Unanimity

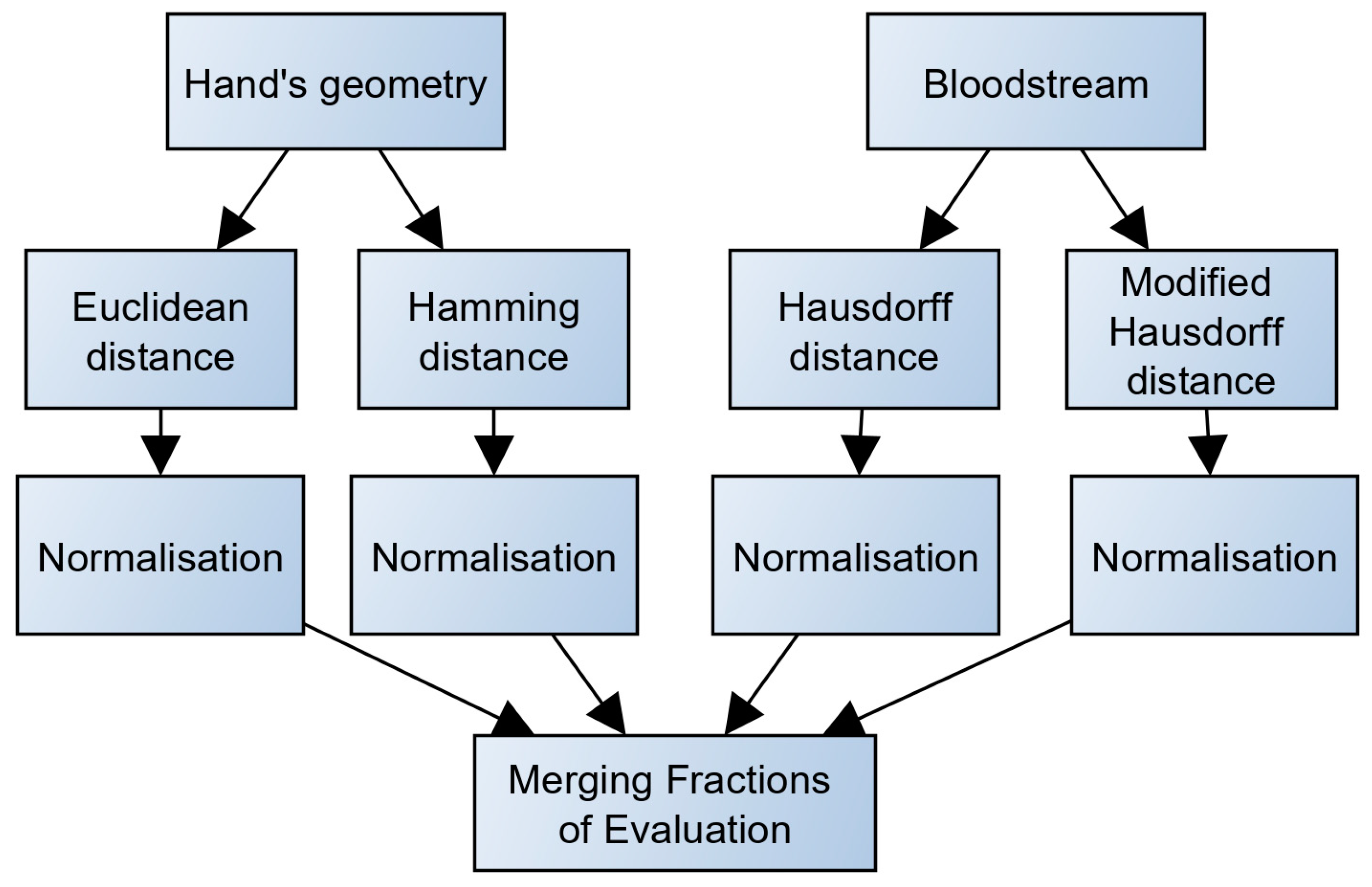

3.3.1. Calculation of Fractional Metrics Evaluation

- Euclidean distance: This is the straight-line distance between two points in N-dimensional space. It is computed as the square root of the sum of the squared differences of the corresponding coordinates.

- Hamming distance: This is another metric for computing a score for score-level fusion. Hamming distance originates from information theory. For two strings of the same length, it gives the number of positions at which they differ; in other words, the number of substitutions needed to change one string into the other. In their study, the researchers [6] generalized the Hamming distance into a form suitable for evaluating the similarity of biometric data. The authors suggested a comparison based on the number of non-matching biometric characteristics. The resulting metric does not measure the size of the deviation, as the Euclidean distance does. Rather, it counts the individual biometric characteristics whose deviation between the testing data and the template exceeds the root mean square (RMS) error of that characteristic. The RMS error is defined for each feature during template generation. This error is used on the presumption that the characteristics of one user would never be completely identical across multiple acquisitions, and the values of a given characteristic are presumed to follow a normal distribution.

- Hausdorff distance [58] is another method useful for a score-level fusion calculation. It determines the distance between two sets of points in a metric space. In simple terms, two sets are close to each other if every point of one set lies near some point of the other. The Hausdorff distance (HV) is the largest of the distances obtained by joining each point of one set to its nearest point in the other set, and vice versa. If the two sets of points are similar, HV has a low value. Since the biometric characteristic of the bloodstream consists of sets of points, their positions in the image are essential. In this experimental set-up, HV provides a score for score-level fusion that naturally compares the similarity of shapes. HV is, however, sensitive to distant values (outliers). The oriented HV between the point sets A and B is the maximum, over all points a in A, of the distance from a to its nearest point b in B.

- Modified Hausdorff distance: As mentioned earlier, HV is very sensitive to distant values. Even a few points of the testing set that lie far from the template points cause a large increase in HV, regardless of how similar the two sets otherwise are. To address this weakness, researchers [58] examined many different modifications of HV. Their analysis showed that the modified HV (hereafter MHV) suppresses the problem of distant values. In contrast to the oriented HV, the MHV is defined in a non-oriented form.
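The four metrics above can be sketched in Python as follows. The formulas are the commonly stated textbook forms and are an assumption here; they may differ in detail from the authors' own equations. In particular, the MHV below uses the widely used mean-of-minima variant, and the function names and sample data are hypothetical.

```python
import numpy as np


def euclidean_distance(x, y):
    """d = sqrt(sum_i (x_i - y_i)^2) for two N-dimensional feature vectors."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    return float(np.sqrt(np.sum((x - y) ** 2)))


def generalized_hamming(sample, template_value, template_rms):
    """Count the features whose deviation from the template value exceeds
    the RMS error stored for that feature in the template."""
    dev = np.abs(np.asarray(sample, float) - np.asarray(template_value, float))
    return int(np.sum(dev > np.asarray(template_rms, float)))


def _nearest_distances(a, b):
    """For each point of A, the distance to its nearest point of B."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    pairwise = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2)
    return pairwise.min(axis=1)


def directed_hausdorff(a, b):
    """Oriented HV: max over a in A of (min over b in B of ||a - b||)."""
    return float(_nearest_distances(a, b).max())


def hausdorff(a, b):
    """Non-oriented HV: the larger of the two oriented distances."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


def modified_hausdorff(a, b):
    """MHV (assumed mean-of-minima form): the maximum over points is replaced
    by an average, which damps the influence of a few distant points."""
    return max(float(_nearest_distances(a, b).mean()),
               float(_nearest_distances(b, a).mean()))


# Template points and a test set that matches except for one distant point.
template = [(0, 0), (1, 0), (2, 0)]
test_set = template + [(2, 10)]

print(hausdorff(template, test_set))           # 10.0
print(modified_hausdorff(template, test_set))  # 2.5
```

In this example, a single outlying point raises HV to 10, whereas MHV rises only to 2.5, which illustrates why the modified form is less sensitive to distant values.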

3.3.2. Normalization of a Fractional Metric

3.3.3. Merging Fractions of Evaluation

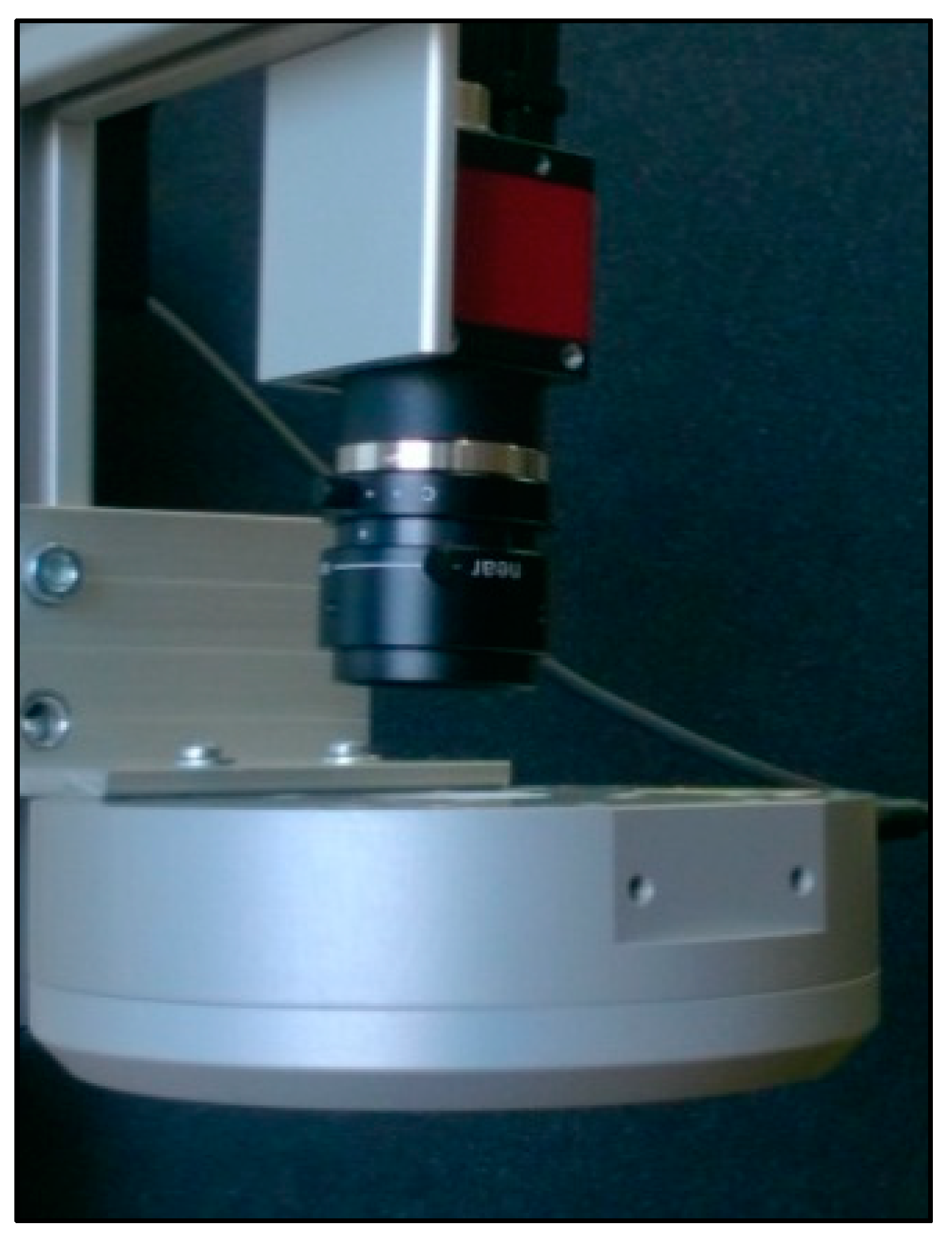

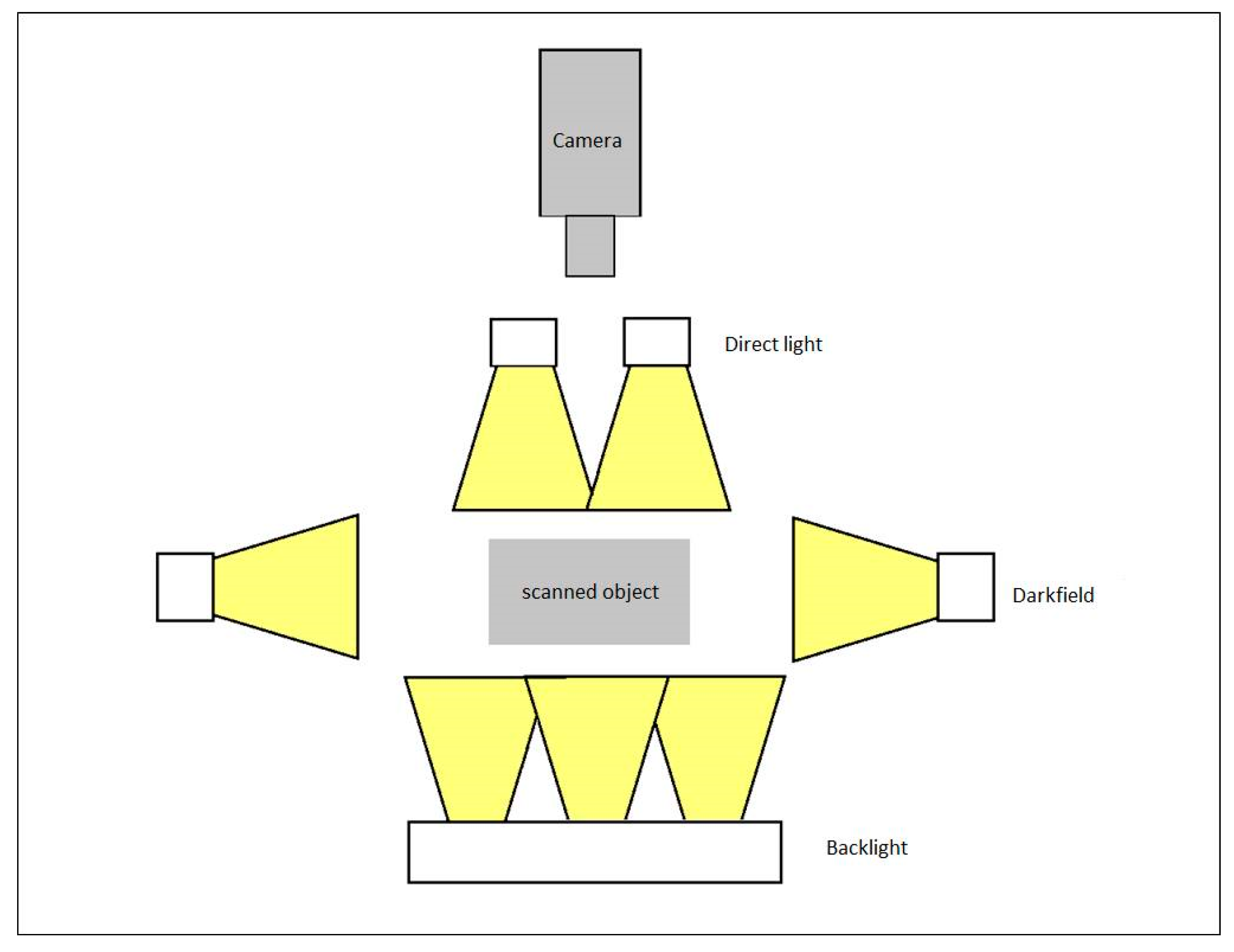

3.4. Image Scanning

3.4.1. Proposal of the Scanning Device

- Sufficient intensity

- Homogeneous lighting of the scene

- Consistency of the light intensity over time (to guard against depletion)

3.4.2. Components of the Scanning Device

4. Results: Testing of Developed Application

4.1. Testing Methodology

- OS: Windows 10, 64-bit

- CPU: Intel Core i5-5257U with a frequency of 2.7 GHz (maximum turbo frequency of 3.1 GHz)

- RAM: 8 GB

4.2. Tests of the Experimental System Speed

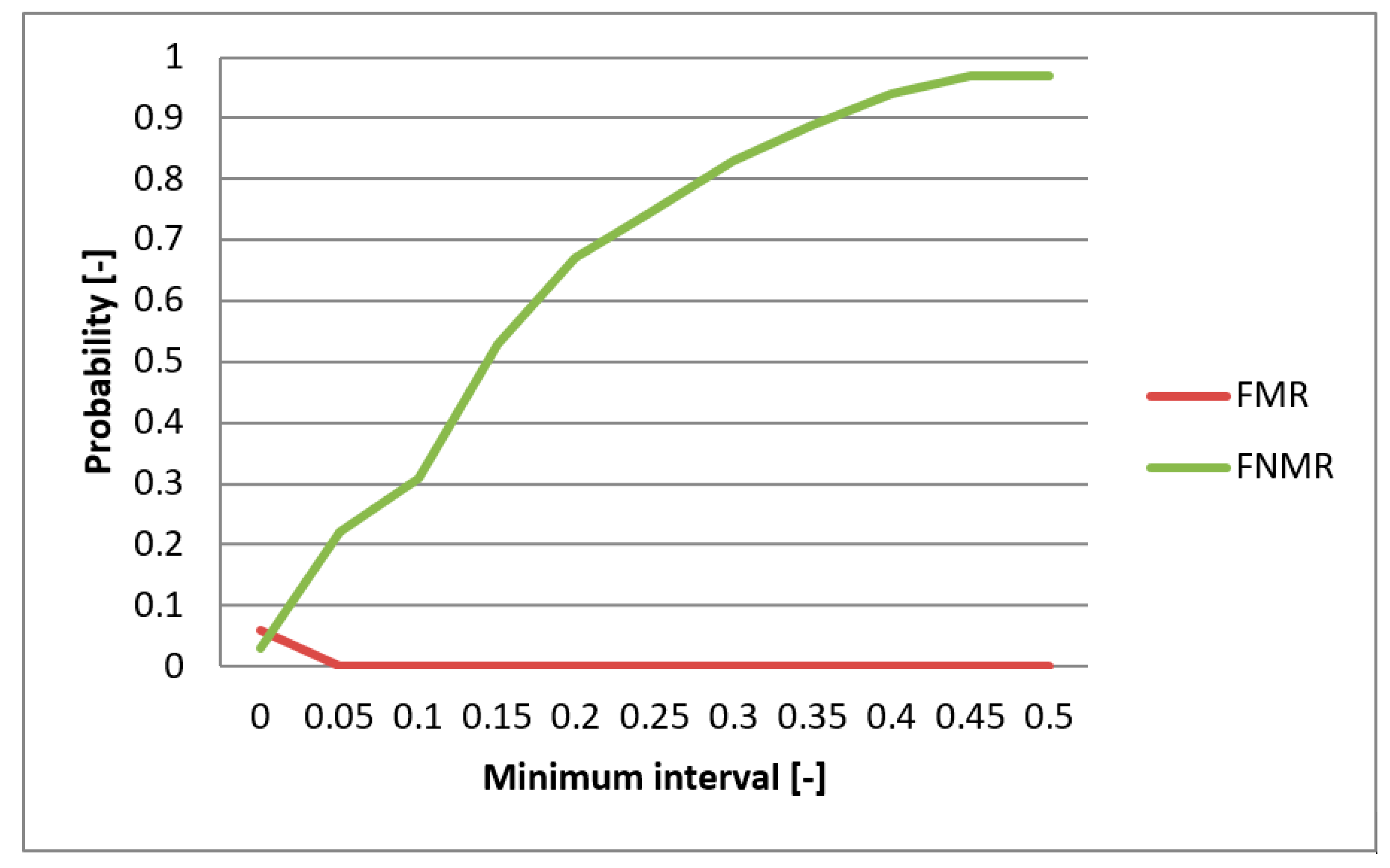

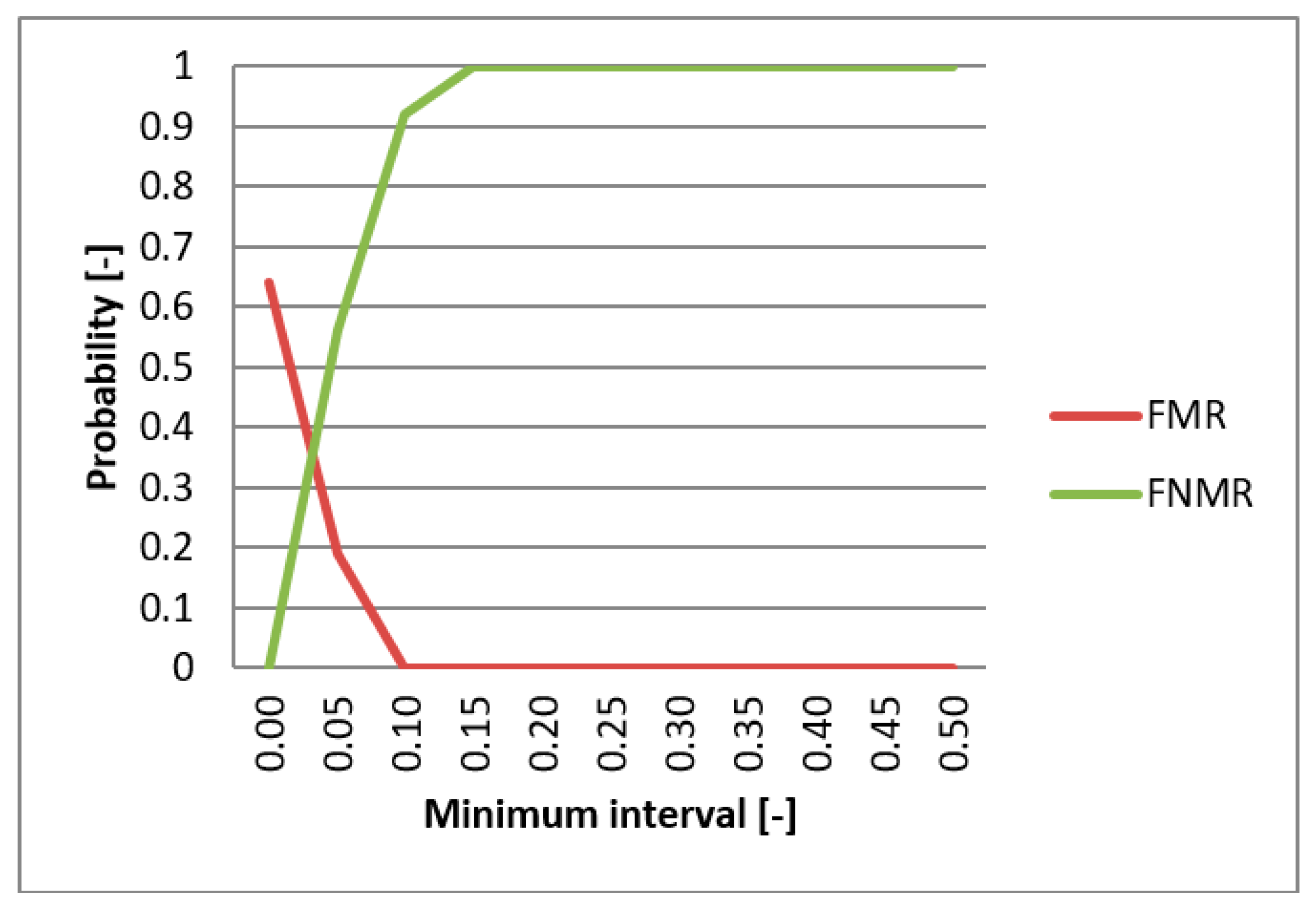

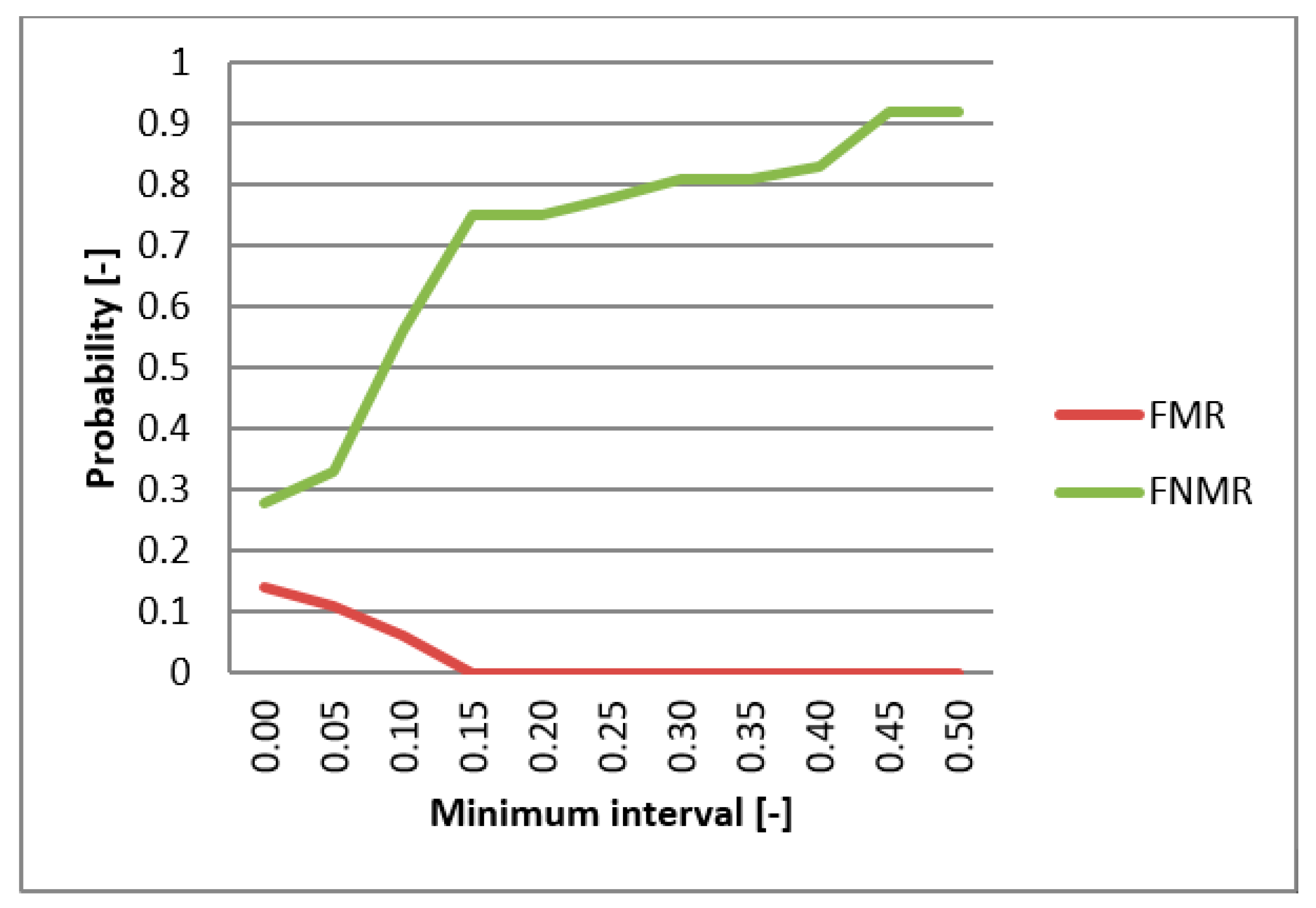

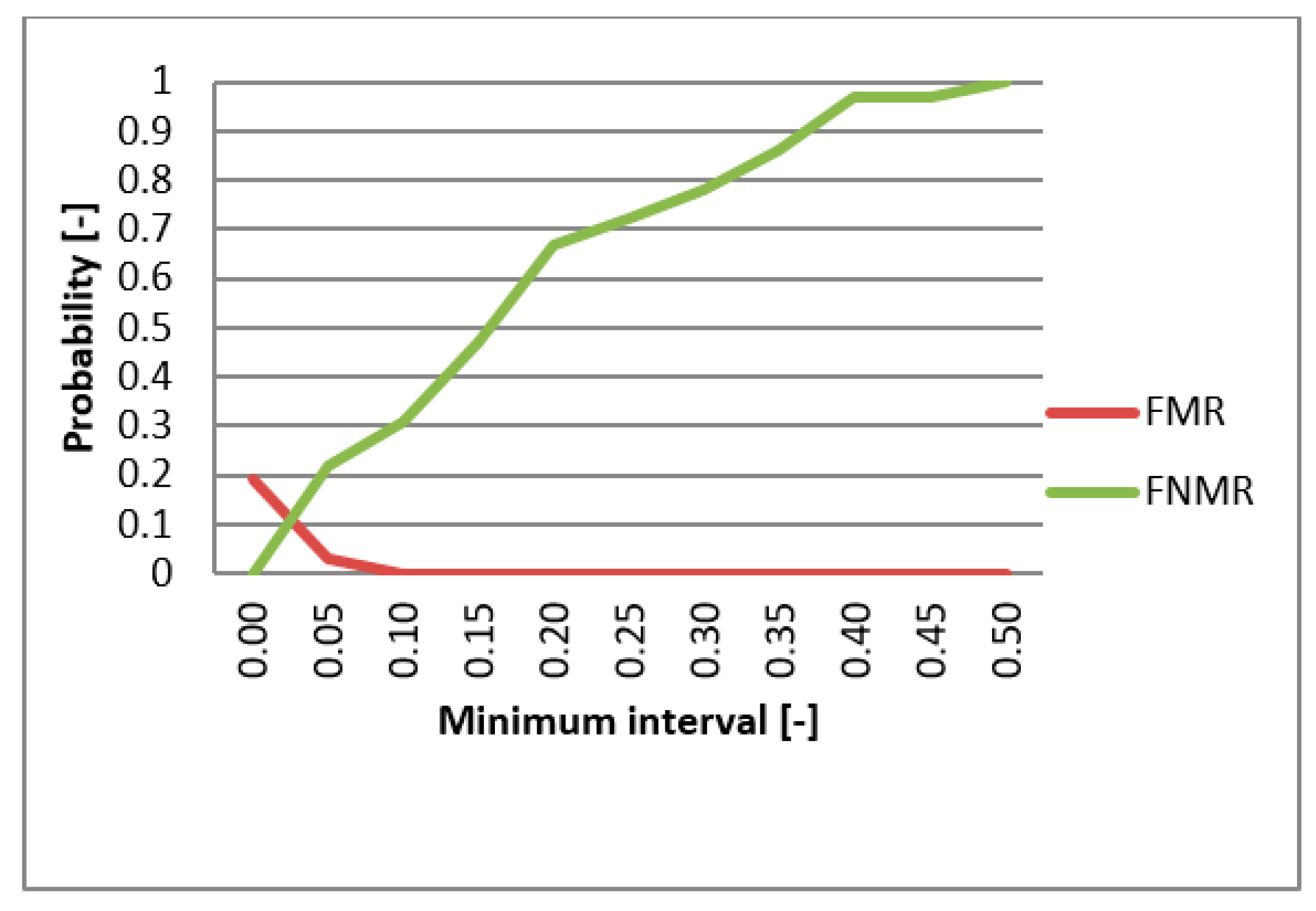

4.3. Results

5. Discussion

6. Conclusions, Limitations, and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- [1] Abo-Zahhad, M.; Ahmed, S.M.; Abbas, S.N. A Novel Biometric Approach for Human Identification and Verification Using Eye Blinking Signal. IEEE Signal Process. Lett. 2015, 22, 876–880.

- [2] Zhu, H.; Zhang, Y.; Wang, X. A Novel one-time identity-password authenticated scheme based on biometrics for e-coupon system. Int. J. Netw. Secur. 2016, 18, 401–409.

- [3] Luque-Baena, R.M.; Elizondo, D.; López-Rubio, E.; Palomo, E.J.; Watson, T. Assessment of geometric features for individual identification and verification in biometric hand systems. Expert Syst. Appl. 2013, 40, 3580–3594.

- [4] Gupta, S.; Buriro, A.; Crispo, B. Demystifying Authentication Concepts in Smartphones: Ways and Types to Secure Access. Mob. Inf. Syst. 2018, 2018, 1–16.

- [5] Alpar, O.; Krejcar, O. Frequency and Time Localization in Biometrics: STFT vs. CWT. In Proceedings of the International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems, Montreal, QC, Canada, 25–28 June 2018; Volume 10868 LNAI, pp. 722–728.

- [6] Sanchez-Reillo, R.; Sanchez-Avila, C.; González-Marcos, A. Biometric identification through hand geometry measurements. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1168–1171.

- [7] Gaikwad, A.N.; Pasalkar, N.B. Biometric Person Identification—Methods, Advances and Performance Evaluation. IETE Tech. Rev. 2004, 21, 211–217.

- [8] Zhang, J.; Cai, L.; Zhang, S. Malicious Cognitive User Identification Algorithm in Centralized Spectrum Sensing System. Future Internet 2017, 9, 79.

- [9] Zheng, H.; Van Hulle, C.A.; Rathouz, P.J. Comparing Alternative Biometric Models with and without Gene-by-Measured Environment Interaction in Behavior Genetic Designs: Statistical Operating Characteristics. Behav. Genet. 2015, 45, 480–491.

- [10] Hand-based biometrics. Biom. Technol. Today 2003, 11, 9–11.

- [11] Alpar, O.; Krejcar, O. A Comparative Study on Chrominance Based Methods in Dorsal Hand Recognition: Single Image Case. In Proceedings of the International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems, Montreal, QC, Canada, 25–28 June 2018; Volume 10868 LNAI, pp. 711–721.

- [12] Yörük, E.; Dutaǧaci, H.; Sankur, B. Hand biometrics. Image Vis. Comput. 2006, 24, 483–497.

- [13] Asem, M.M.; Oveisi, I.S.; Janbozorgi, M. Blood vessel segmentation in modern wide-field retinal images in the presence of additive Gaussian noise. J. Med. Imaging 2018, 5, 1.

- [14] Tan, G.J.; Sulong, G.; Rahim, M.S.M. Writer identification: A comparative study across three world major languages. Forensic Sci. Int. 2017, 279, 41–52.

- [15] Renukalatha, S.; Suresh, K.V. A review on biomedical image analysis. Biomed. Eng. Appl. Basis Commun. 2018, 30, 1830001.

- [16] O’Gorman, L.; Schuckers, S.; Derakhshani, R.; Hornak, L.; Xia, X.; D’Amour, M. Spoof Detection for Biometric Sensing Systems. WO0124700 (A1), 12 April 2001.

- [17] Waluś, M.; Bernacki, K.; Konopacki, J. Impact of NIR wavelength lighting in image acquisition on finger vein biometric system effectiveness. Opto Electron. Rev. 2017, 25, 263–268.

- [18] Guennouni, S.; Mansouri, A.; Ahaitouf, A. Biometric Systems and Their Applications. In Eye Tracking and New Trends; IntechOpen: London, UK, 2019.

- [19] Rinaldi, A. Biometrics’ new identity—measuring more physical and biological traits. EMBO Rep. 2016, 17, 22–26.

- [20] Wang, L.; Leedham, G.; Cho, D.S.-Y. Minutiae feature analysis for infrared hand vein pattern biometrics. Pattern Recognit. 2008, 41, 920–929.

- [21] Jain, A.; Hong, L.; Kulkarni, Y. A Multimodal Biometric System Using Fingerprint, Face, and Speech. In Proceedings of the 2nd Int’l Conference on Audio-and Video-based Biometric Person Authentication, Washington, DC, USA, 22–24 March 1999; pp. 182–187.

- [22] Abhishek, K.; Yogi, A. A Minutiae Count Based Method for Fake Fingerprint Detection. Procedia Comput. Sci. 2015, 58, 447–452.

- [23] Han, J.; Bhanu, B. Gait Recognition by Combining Classifiers Based on Environmental Contexts. In Proceedings of the International Conference on Audio- and Video-Based Biometric Person Authentication, Rye Brook, NY, USA, 20–22 July 2005; pp. 416–425.

- [24] Zhang, J.; Liu, H.; Ding, D.; Xiao, J. A robust probabilistic collaborative representation based classification for multimodal biometrics. In Proceedings of the Ninth International Conference on Graphic and Image Processing (ICGIP 2017), Qingdao, China, 14–16 October 2017; Volume 10615, p. 106151F.

- [25] Barra, S.; de Marsico, M.; Nappi, M.; Narducci, F.; Riccio, D. A hand-based biometric system in visible light for mobile environments. Inf. Sci. 2019, 479, 472–485.

- [26] Engel, E.; Kovalev, I.V.; Ermoshkina, A. The biometric-based module of smart grid system. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2015; Volume 94, p. 012007.

- [27] Park, G.; Kim, S. Hand Biometric Recognition Based on Fused Hand Geometry and Vascular Patterns. Sensors 2013, 13, 2895–2910.

- [28] Wu, S.-Q.; Song, W.; Jiang, L.-J.; Xie, S.-L.; Pan, F.; Yau, W.-Y.; Ranganath, S. Infrared Face Recognition by Using Blood Perfusion Data. In Proceedings of the International Conference on Audio- and Video-Based Biometric Person Authentication, Rye Brook, NY, USA, 20–22 July 2005; pp. 320–328.

- [29] Ribaric, S.; Fratric, I. A biometric identification system based on eigenpalm and eigenfinger features. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1698–1709.

- [30] Wang, Y.; Shark, L.-K.; Zhang, K. Personal identification based on multiple keypoint sets of dorsal hand vein images. IET Biom. 2014, 3, 234–245.

- [31] Saadat, F.; Nasri, M. A multibiometric finger vein verification system based on score level fusion strategy. In Proceedings of the 2015 International Congress on Technology, Communication and Knowledge (ICTCK), Mashhad, Iran, 11–12 November 2015; pp. 501–507.

- [32] Veluchamy, S.; Karlmarx, L. System for multimodal biometric recognition based on finger knuckle and finger vein using feature-level fusion and k-support vector machine classifier. IET Biom. 2017, 6, 232–242.

- [33] Kang, B.J.; Park, K.R. Multimodal biometric method based on vein and geometry of a single finger. IET Comput. Vis. 2010, 4, 209–217.

- [34] Kumar, A.; Prathyusha, K.V. Personal Authentication Using Hand Vein Triangulation and Knuckle Shape. IEEE Trans. Image Process. 2009, 18, 2127–2136.

- [35] Jain, A.K.; Duta, N. Deformable matching of hand shapes for user verification. In Proceedings of the 1999 International Conference on Image Processing (Cat. 99CH36348), Kobe, Japan, 24–28 October 1999; Volume 2, pp. 857–861.

- [36] Wong, R.L.N.; Shi, P. Peg-free hand geometry recognition using hierarchical geometry and shape matching. In Proceedings of the IAPR Workshop on Machine Vision Applications, Nara, Japan, 11–13 December 2002; pp. 281–284.

- [37] Kang, W.; Liu, Y.; Wu, Q.; Yue, X. Contact-free palm-vein recognition based on local invariant features. PLoS ONE 2014, 9, e97548.

- [38] Van Tilborg, H.C.A.; Jajodia, S. Encyclopedia of Cryptography and Security, 2nd ed.; Springer: Boston, MA, USA, 2011.

- [39] Kim, W.; Song, J.M.; Park, K.R. Multimodal biometric recognition based on convolutional neural network by the fusion of finger-vein and finger shape using near-infrared (NIR) camera sensor. Sensors 2018, 18, 2296.

- [40] Kirimtat, A.; Krejcar, O. Parametric Variations of Anisotropic Diffusion and Gaussian High-Pass Filter for NIR Image Preprocessing in Vein Identification. In Proceedings of the International Conference on Bioinformatics and Biomedical Engineering, Granada, Spain, 25–27 April 2018; Volume 10814, pp. 212–220.

- [41] Robles, F.E.; Chowdhury, S.; Wax, A. Assessing hemoglobin concentration using spectroscopic optical coherence tomography for feasibility of tissue diagnostics. Biomed. Opt. Express 2010, 1, 310–317.

- [42] Mesicek, J.; Krejcar, O.; Selamat, A.; Kuca, K. A recent study on hardware accelerated Monte Carlo modeling of light propagation in biological tissues. In Proceedings of the International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems, Morioka, Japan, 2–4 August 2016; Volume 9799, pp. 493–502.

- [43] Mesicek, J.; Zdarsky, J.; Dolezal, R.; Krejcar, O.; Kuca, K. Simulations of light propagation and thermal response in biological tissues accelerated by graphics processing unit. In Proceedings of the International Conference on Computational Collective Intelligence, Halkidiki, Greece, 28–30 September 2016; Volume 9876 LNCS, pp. 242–251.

- [44] Jain, A.; Hong, L. Biometrics: Techniques for personal identification. In Proceedings of the SPIE—The International Society for Optical Engineering, Wuhan, China, 21–23 October 1998; Volume 3545, pp. 2–3.

- [45] Wayman, J.L. Biometric Verification/Identification/Authentication/Recognition: The Terminology. In Encyclopedia of Biometrics; Li, S.Z., Jain, A., Eds.; Springer: Boston, MA, USA, 2009; pp. 153–157.

- [46] Mayhew, S. Explainer: Verification vs. Identification Systems. Biometric Update. 1 June 2012. Available online: https://www.biometricupdate.com/201206/explainer-verification-vs-identification-systems (accessed on 26 July 2019).

- [47] Biometric Security Devices. Biometric Verification Vs Biometric Identification Systems. Biometric Security Devices. 2019. Available online: https://www.biometric-security-devices.com/biometric-verification.html (accessed on 26 July 2019).

- [48] Alpar, O.; Krejcar, O. Detection of Irregular Thermoregulation in Hand Thermography by Fuzzy C-Means. In Proceedings of the International Conference on Bioinformatics and Biomedical Engineering, Granada, Spain, 25–27 April 2018; Volume 10814, pp. 255–265.

- [49] Kolda, L.; Krejcar, O. Biometric hand vein estimation using bloodstream filtration and fuzzy c-means. In Proceedings of the IEEE International Conference on Fuzzy Systems, Naples, Italy, 9–12 July 2017.

- [50] Manjón, J.V.; Thacker, N.A.; Lull, J.J.; Garcia-Martí, G.; Martí-Bonmatí, L.; Robles, M. Multicomponent MR Image Denoising. Int. J. Biomed. Imaging 2009, 2009.

- [51] Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Feitosa, R.Q.; van der Meer, F.; van der Werff, H.; van Coillie, F.; et al. Geographic Object-Based Image Analysis—Towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191.

- [52] Oyebode, K.O. Improved thresholding method for cell image segmentation based on global homogeneity information. J. Telecommun. Electron. Comput. Eng. 2018, 10, 13–16.

- [53] Yang, Y.; Stafford, P.; Kim, Y. Segmentation and intensity estimation for microarray images with saturated pixels. BMC Bioinform. 2011, 12, 462.

- [54] Liu, L.; Xie, Z.; Yang, C. A novel iterative thresholding algorithm based on plug-and-play priors for compressive sampling. Future Internet 2017, 9, 24.

- [55] Manish, R.; Venkatesh, A.; Ashok, S.D. Machine Vision Based Image Processing Techniques for Surface Finish and Defect Inspection in a Grinding Process. Mater. Today Proc. 2018, 5, 12792–12802.

- [56] Zhang, T.Y.; Suen, C.Y. A fast parallel algorithm for thinning digital patterns. Commun. ACM 1984, 27, 236–239.

- [57] Guo, C.; Ngo, D.; Ahadi, S.; Doub, W.H. Evaluation of an Abbreviated Impactor for Fine Particle Fraction (FPF) Determination of Metered Dose Inhalers (MDI). AAPS PharmSciTech 2013, 14, 1004–1011.

- [58] Zhou, Z.-Q.; Wang, B. A modified Hausdorff distance using edge gradient for robust object matching. In Proceedings of the 2009 International Conference on Image Analysis and Signal Processing (IASP 2009), Linhai, China, 11–12 April 2009; pp. 250–254.

- [59] Ross, A.; Jain, A.K. Fusion Techniques in Multibiometric Systems. In Face Biometrics for Personal Identification; Hammoud, R.I., Abidi, B.R., Abidi, M.A., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; pp. 185–212.

- [60] Ríos-Sánchez, B.; Arriaga-Gómez, M.F.; Guerra-Casanova, J.; de Santos-Sierra, D.; de Mendizábal-Vázquez, I.; Bailador, G.; Sanchez-Avila, C. gb2s μ MOD: A MUltiMODal biometric video database using visible and IR light. Inf. Fusion 2016, 32, 64–79.

- [61] Moini, A.; Madni, A.M. Leveraging Biometrics for User Authentication in Online Learning: A Systems Perspective. IEEE Syst. J. 2009, 3, 469–476.

- [62] Marasco, E.; Sansone, C. An Experimental Comparison of Different Methods for Combining Biometric Identification Systems. Inf. Secur. Appl. 2011, 6979, 255–264.

- [63] Kirimtat, A.; Krejcar, O.; Selamat, A. A Mini-review of Biomedical Infrared Thermography (B-IRT). In Proceedings of the International Work-Conference on Bioinformatics and Biomedical Engineering, Granada, Spain, 8–10 May 2019; Volume 11466, pp. 99–110.

- [64] Zurek, P.; Cerny, M.; Prauzek, M.; Krejcar, O.; Penhaker, M. New approaches for continuous non invasive blood pressure monitoring. In Proceedings of the XII Mediterranean Conference on Medical and Biological Engineering and Computing 2010, Chalkidiki, Greece, 27–30 May 2010; Volume 29, pp. 228–231.

- [65] Mambou, S.; Krejcar, O.; Kuca, K.; Selamat, A. Novel Human Action Recognition in RGB-D Videos Based on Powerful View Invariant Features Technique. Stud. Comput. Intell. 2018, 769, 343–353.

- [66] Maresova, P.; Sobeslav, V.; Krejcar, O. Cost–benefit analysis–evaluation model of cloud computing deployment for use in companies. Appl. Econ. 2017, 49, 521–533.

- [67] Isah, S.S.; Selamat, A.; Ibrahim, R.; Krejcar, O. An investigation of information granulation techniques in cybersecurity. Stud. Comput. Intell. 2020, 830, 151–163.

- [68] De-Santos-Sierra, A.; Sánchez-Ávila, C.; Del Pozo, G.B.; Guerra-Casanova, J. Unconstrained and Contactless Hand Geometry Biometrics. Sensors 2011, 11, 10143–10164.

| Study Reference | Year | Combined Biometric Methods | Sample Size | Equal Error Rate (%) |
|---|---|---|---|---|
| Current work | 2019 | HG and FV | 40 | 5 |
| [27] | 2013 | FV and HG | 100 | 0.06 |
| [29] | 2019 | PP and FG | 237 | 58 |
| [30] | 2014 | HG and FV | 204 | 0.02 |
| [31] | 2015 | MFV | 106 | 0.08 |
| [32] | 2017 | FK and FV | 100 | 0.35 |
| [33] | 2010 | FV and FG | 102 | 0.075 |
| [34] | 2009 | FV and KS | 100 | 1.14 |

| Component | Seller/Manufacturer | Amount (in €) |
|---|---|---|
| CCD camera GuppyPRO F-031B | Allied Vision Technologies GmbH | 600 |
| Objective VCN 1.4/4.5 f = 4.5 mm | Vision & Control GmbH | 150 |
| IR filter | Heliopan Lichtfilter-Technik Summer GmbH & Co KG | 40 |
| Lighting SFD 42/12 IR | Vision & Control GmbH | 700 |
| Total | | 1490 |

| Men | Women |
|---|---|
| 80% | 20% |

| 20–29 | 30–39 | 40–49 | 50–60 |
|---|---|---|---|
| 20% | 50% | 20% | 10% |

| Program Step | Hand Geometry: Average Time [ms] | Hand Geometry: Maximum Deviation [ms] | Bloodstream: Average Time [ms] | Bloodstream: Maximum Deviation [ms] |
|---|---|---|---|---|
| Image pre-adaptation | 1.25 | 14.75 | 3.5 | 8.5 |
| Segmentation | 1.08 | 8.9 | 152.7 | 70.3 |
| Extraction of characteristics | 667.5 | 168.5 | 8.83 | 3.26 |
| Calculation of the degree of differentiation and allocation | 0.97 | 3 | 1 | 1.9 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kolda, L.; Krejcar, O.; Selamat, A.; Kuca, K.; Fadeyi, O. Multi-Biometric System Based on Cutting-Edge Equipment for Experimental Contactless Verification. Sensors 2019, 19, 3709. https://doi.org/10.3390/s19173709
