Practical Inter-Floor Noise Sensing System with Localization and Classification

Abstract

1. Introduction

2. Related Works

2.1. Noise Level Measurement

2.2. Sound Source Localization

2.3. Sound Classification

3. Implementation of the Inter-Floor Noise Sensing System

3.1. Measurement of Noise Level

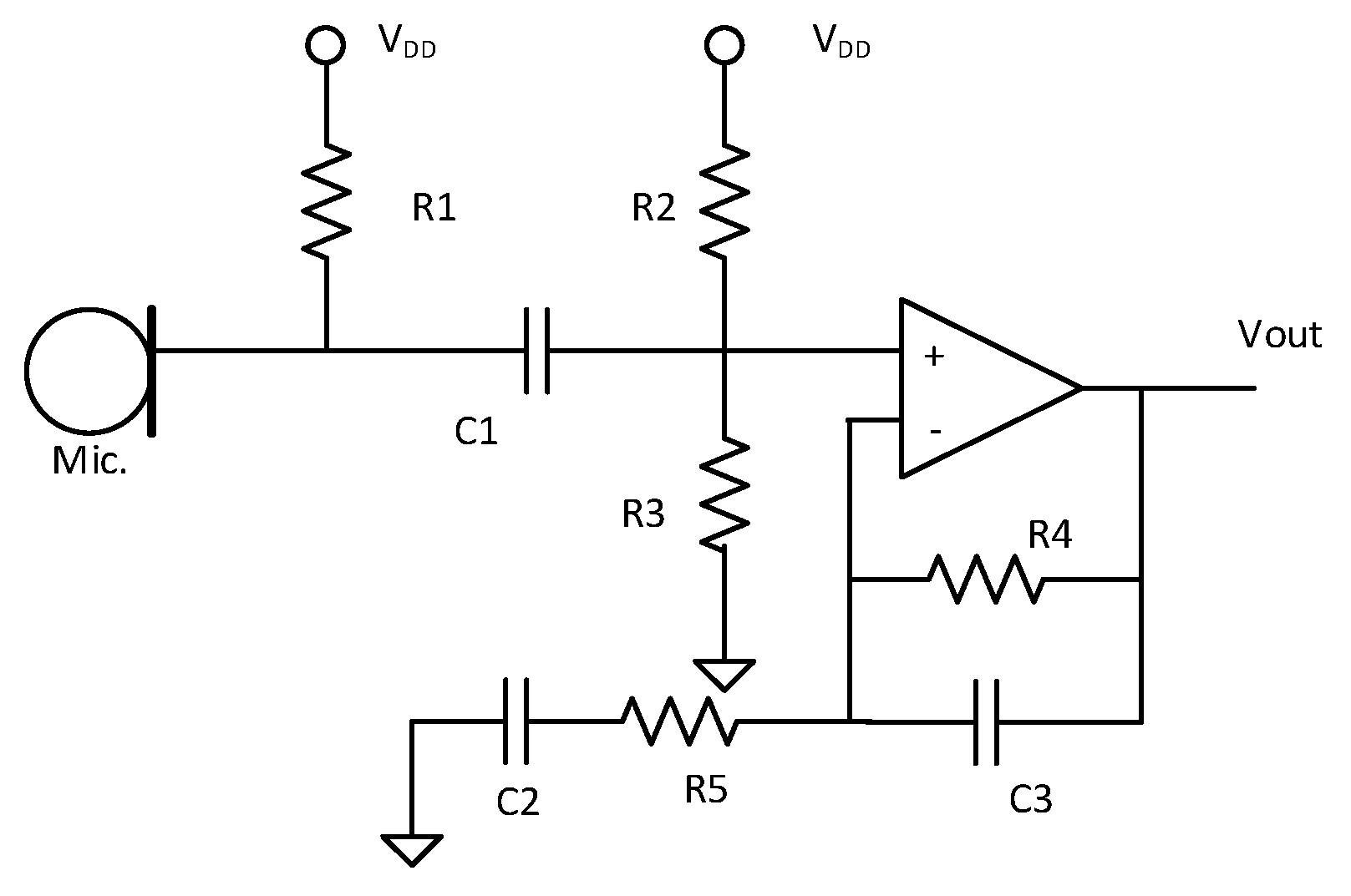

3.1.1. Amplifying Circuit

3.1.2. Sampling and FFT
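
A minimal sketch of a radix-2 Cooley-Tukey FFT applied to one sampled frame, in plain Python. The sample rate (8 kHz) and frame length (64 samples) are illustrative assumptions, not the system's actual settings. A production implementation would normally use an optimized library FFT and a window function.

```python
import cmath
import math
from typing import List, Sequence


def fft(x: Sequence[complex]) -> List[complex]:
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    if n % 2 != 0:
        raise ValueError("frame length must be a power of two")
    even = fft(x[0::2])  # DFT of the even-indexed samples
    odd = fft(x[1::2])   # DFT of the odd-indexed samples
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def bin_frequencies(n: int, fs: float) -> List[float]:
    """Centre frequency (Hz) of each bin from DC up to Nyquist."""
    return [k * fs / n for k in range(n // 2 + 1)]


if __name__ == "__main__":
    fs = 8000.0  # assumed sample rate (Hz)
    n = 64       # assumed frame length (power of two)
    tone = [math.sin(2 * math.pi * 1000.0 * t / fs) for t in range(n)]
    mags = [abs(c) for c in fft(tone)[: n // 2 + 1]]
    peak = max(range(len(mags)), key=mags.__getitem__)
    print(bin_frequencies(n, fs)[peak])  # 1000.0
```

With these assumed values each bin is 8000 / 64 = 125 Hz wide, so a 1 kHz tone falls exactly in bin 8.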

3.1.3. A-Weighting
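
A-weighting approximates the frequency sensitivity of human hearing. The sketch below evaluates the analytic A-weighting curve from IEC 61672-1, R_A(f) plus the +2.00 dB offset that brings the correction to about 0 dB at 1 kHz, and uses it to scale the per-bin power of an FFT frame. Applying the weighting bin by bin in the frequency domain is an assumption here, and the function names are hypothetical.

```python
import math
from typing import List, Sequence

# Pole frequencies (Hz) of the IEC 61672-1 A-weighting curve
F1, F2, F3, F4 = 20.6, 107.7, 737.9, 12194.0
A1000_OFFSET_DB = 2.0  # normalises the curve to about 0 dB at 1 kHz


def a_weighting_gain(f: float) -> float:
    """Linear A-weighting amplitude gain at frequency f (Hz), about 1.0 at 1 kHz."""
    f2 = f * f
    r_a = (F4 ** 2 * f2 * f2) / (
        (f2 + F1 ** 2)
        * math.sqrt((f2 + F2 ** 2) * (f2 + F3 ** 2))
        * (f2 + F4 ** 2)
    )
    return r_a * 10.0 ** (A1000_OFFSET_DB / 20.0)


def a_weighting_db(f: float) -> float:
    """A-weighting correction in dB; -inf at DC, where the gain is zero."""
    g = a_weighting_gain(f)
    return 20.0 * math.log10(g) if g > 0.0 else float("-inf")


def apply_a_weighting(powers: Sequence[float], freqs: Sequence[float]) -> List[float]:
    """Scale each bin's power |X_k|^2 by the squared A-weighting gain."""
    return [p * a_weighting_gain(f) ** 2 for p, f in zip(powers, freqs)]
```

For reference, the curve gives roughly -19.1 dB at 100 Hz and -2.5 dB at 10 kHz, so low-frequency energy is strongly attenuated.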

3.1.4. Time-Weighting
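
Time weighting smooths the squared pressure with an exponential averager; IEC 61672-1 defines the Fast (125 ms) and Slow (1 s) time constants. A minimal discrete-time sketch, assuming a one-pole recursive filter on p² with per-sample decay factor exp(-1/(τ·fs)); the choice between Fast and Slow is left as a parameter.

```python
import math
from typing import Iterable, List

FAST_TAU_S = 0.125  # IEC 61672-1 "Fast" time constant (s)
SLOW_TAU_S = 1.0    # IEC 61672-1 "Slow" time constant (s)


def exponential_time_weighting(samples: Iterable[float], fs: float, tau: float) -> List[float]:
    """Running time-weighted mean-square pressure from a one-pole low-pass on p^2."""
    alpha = math.exp(-1.0 / (tau * fs))  # per-sample decay factor
    out: List[float] = []
    acc = 0.0
    for p in samples:
        # Unity DC gain: a steady p^2 input converges to p^2
        acc = alpha * acc + (1.0 - alpha) * p * p
        out.append(acc)
    return out
```

After one time constant of a steady input the output reaches 1 - e^-1, about 63% of the final mean-square value; the Slow setting therefore reacts about eight times more sluggishly than Fast.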

3.1.5. Computing SPL
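
The sound pressure level follows from the mean-square pressure relative to the 20 µPa reference, L_p = 10 log10(p_rms² / p0²). This sketch assumes the samples are already calibrated to pascals, i.e. microphone sensitivity and amplifier gain have been divided out; an A-weighted, time-weighted mean-square value can be passed to `spl_from_mean_square` in the same way.

```python
import math
from typing import Sequence

P_REF = 20e-6  # reference sound pressure, 20 µPa


def spl_from_mean_square(mean_square_pa2: float) -> float:
    """Sound pressure level in dB re 20 µPa from a mean-square pressure in Pa^2."""
    if mean_square_pa2 <= 0.0:
        return float("-inf")
    return 10.0 * math.log10(mean_square_pa2 / (P_REF ** 2))


def spl_from_samples(pressure_pa: Sequence[float]) -> float:
    """Energy-averaged SPL of a block of calibrated pressure samples (Pa)."""
    mean_square = sum(p * p for p in pressure_pa) / len(pressure_pa)
    return spl_from_mean_square(mean_square)
```

A sine wave of 1 Pa RMS corresponds to about 94 dB SPL, the level produced by a common acoustic calibrator, which is a convenient end-to-end check.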

3.2. Localization of Noise Source

3.2.1. Introduction to Time Difference of Arrival

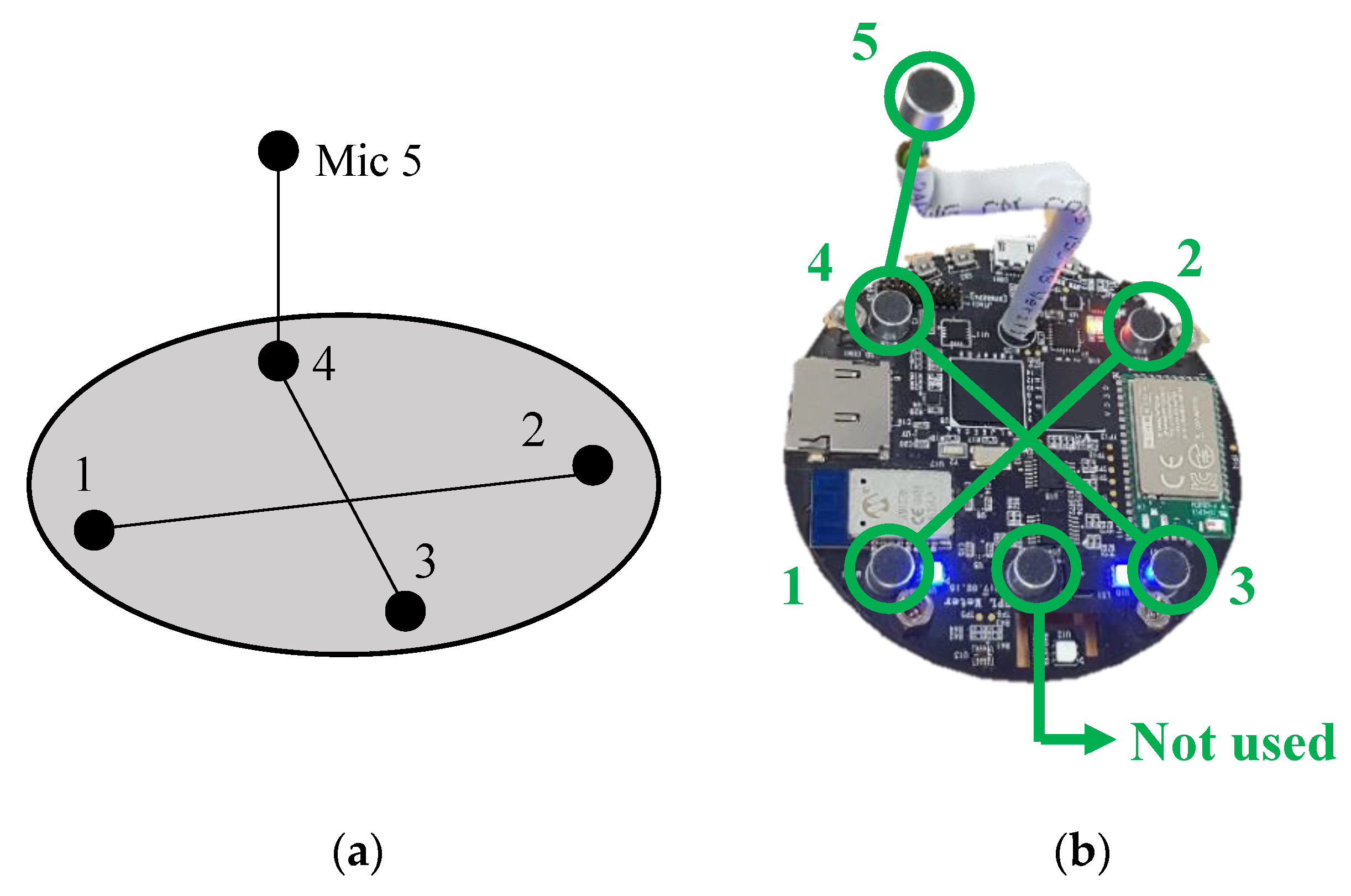
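
The time difference of arrival between two microphones can be estimated by finding the lag that maximizes their cross-correlation. The sketch below uses plain time-domain cross-correlation over a bounded lag range, chosen here for clarity as an assumption; phase-weighted variants such as GCC-PHAT are usually more robust in reverberant rooms. Multiplying the resulting delay by the speed of sound gives the path-length difference.

```python
from typing import Sequence


def estimate_delay(ref: Sequence[float], sig: Sequence[float], max_lag: int) -> int:
    """Lag (samples) maximizing the cross-correlation of sig against ref.

    A positive result means `sig` arrives later than `ref`.
    """
    n = min(len(ref), len(sig))
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                score += ref[i] * sig[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def delay_seconds(lag_samples: int, fs: float) -> float:
    """Convert a lag in samples to seconds at sample rate fs (Hz)."""
    return lag_samples / fs
```

Bounding `max_lag` by the microphone spacing (a physical source can produce at most spacing / c seconds of delay) keeps the search short and rejects implausible correlation peaks.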

3.2.2. Microphone Array Structure

3.2.3. Estimation of Azimuth and Elevation

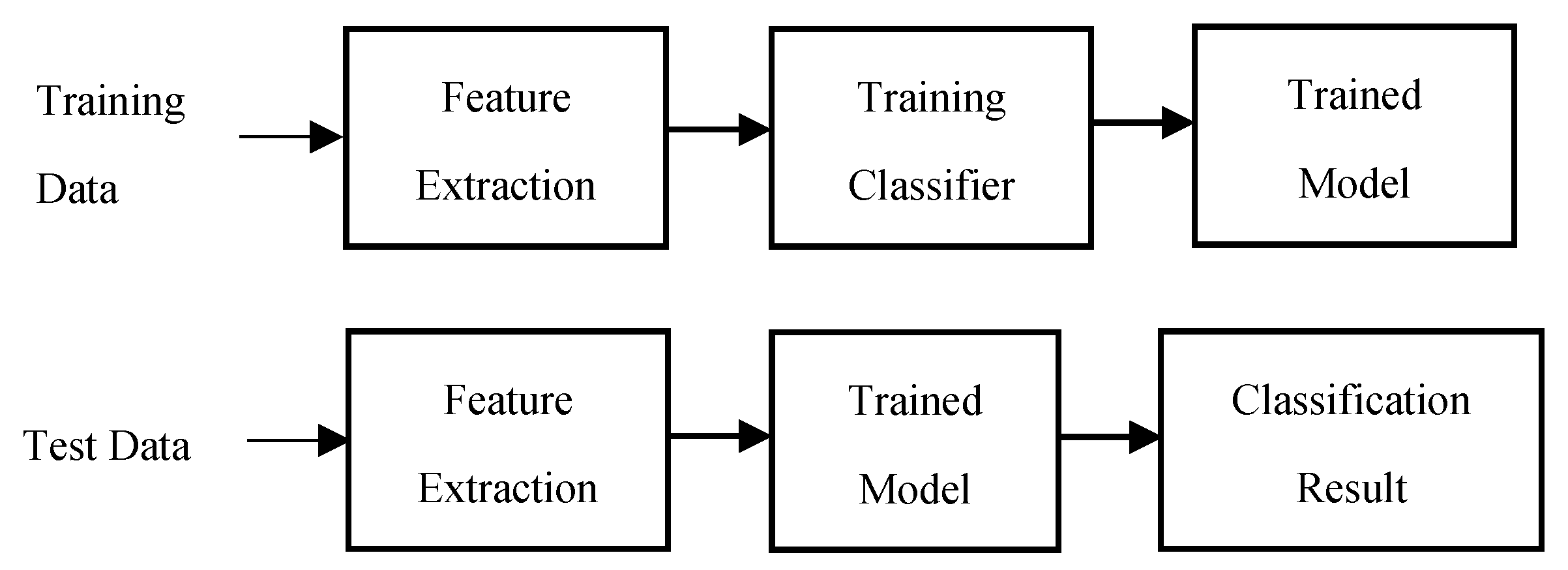
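
Under a far-field (plane-wave) assumption, delays measured along independent axes determine the arrival direction. The sketch below assumes a hypothetical geometry, a reference microphone at the origin and one microphone at the same spacing along each of the +x, +y and +z axes, and a speed of sound of 343 m/s; the actual array in Section 3.2.2 may differ, in which case the projection step changes accordingly.

```python
import math
from typing import Tuple

SPEED_OF_SOUND = 343.0  # m/s, assumed (air at about 20 °C)


def direction_from_delays(tau_x: float, tau_y: float, tau_z: float,
                          spacing: float, c: float = SPEED_OF_SOUND) -> Tuple[float, float]:
    """Far-field azimuth and elevation (degrees) from three TDOAs.

    Hypothetical geometry: reference mic at the origin and one mic `spacing`
    metres along each of +x, +y, +z. Each tau is (arrival at the axis mic)
    minus (arrival at the reference mic), in seconds.
    """
    # A plane wave from unit direction u reaches a mic at position r earlier
    # by (r . u) / c, so tau_axis = -spacing * u_axis / c.
    ux = -c * tau_x / spacing
    uy = -c * tau_y / spacing
    uz = -c * tau_z / spacing
    azimuth = math.degrees(math.atan2(uy, ux))
    elevation = math.degrees(math.atan2(uz, math.hypot(ux, uy)))
    return azimuth, elevation
```

With an assumed 0.1 m spacing the largest possible delay is 0.1 / 343 ≈ 0.29 ms, only about 4.7 samples at 16 kHz, so integer-sample delays yield a coarse angle grid; sub-sample interpolation or wider spacing improves the resolution.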

3.3. Classification of Noise Types

3.3.1. Training Dataset

3.3.2. Training Process
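
A k-nearest-neighbour (k-NN) classifier is one simple way to assign a noise class to a feature vector, for example a vector of spectral or zero-crossing features. The sketch below assumes feature vectors have already been extracted and uses Euclidean distance with majority voting; the feature set, the value of k and the tie-breaking rule are assumptions, not necessarily those of the system described here.

```python
import math
from collections import Counter
from typing import Sequence, Tuple


def knn_predict(train: Sequence[Tuple[Sequence[float], str]],
                query: Sequence[float], k: int = 3) -> str:
    """Majority vote among the k training vectors closest (Euclidean) to query."""
    if not 1 <= k <= len(train):
        raise ValueError("k must be between 1 and the training-set size")
    # Sort training examples by distance to the query and keep the k nearest
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    top = max(votes.values())
    # Ties go to the tied label whose member is closest to the query
    return next(label for _, label in nearest if votes[label] == top)
```

Because Euclidean distance mixes all features, they are usually normalized (e.g. to zero mean and unit variance) so that no single feature dominates the vote.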

4. Experimental Results

4.1. Measurement of Noise Level

4.2. Localization of Noise Source

4.3. Classification of Noise Type

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Class | Accuracy (%) |
|---|---|
| Clock alarm | 40 |
| Crying baby | 100 |
| Dog barking | 80 |
| Door knock | 80 |
| Footsteps | 80 |
| Vacuum cleaner | 70 |
| Washing machine | 80 |
| Average | 75.7 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Son, J.; Kyung, C.-M.; Cho, H. Practical Inter-Floor Noise Sensing System with Localization and Classification. Sensors 2019, 19, 3633. https://doi.org/10.3390/s19173633
