1. Introduction

Vehicle emission are a major factor in urban air pollution, and car ownership continuously increases every year [

1]. Thus, it is essential that we use available measures to monitor and control vehicle emissions. Generally, these measures consist of chassis and engine dynamometer tests, road-tunnel measurements, portable emission measurement systems (PEMS), plume chasing measurements, and optical remote sensing systems (RSSs). Chassis and engine dynamometer testing cannot reflect the real emission levels in on-road driving conditions [

2], and road-tunnel methods are subject to geographical and environmental conditions [

3]. PEMS and plume chasing measurement can precisely determine vehicle emissions, but PEMS take considerable time to install and uninstall these systems to transfer them between vehicles, and plume chasing measurements limit the speed and minimum distance for safety; these approaches are not suitable for monitoring a large number of vehicles. Further, their high price must be taken into consideration [

4,

5]. RSSs adopt non-dispersive infrared technology to detect CO, CO

2, HC, and they use middle-infrared laser spectrum technology to detect NO; thus, RSSs can be used to perform non-contact on-road measurements [

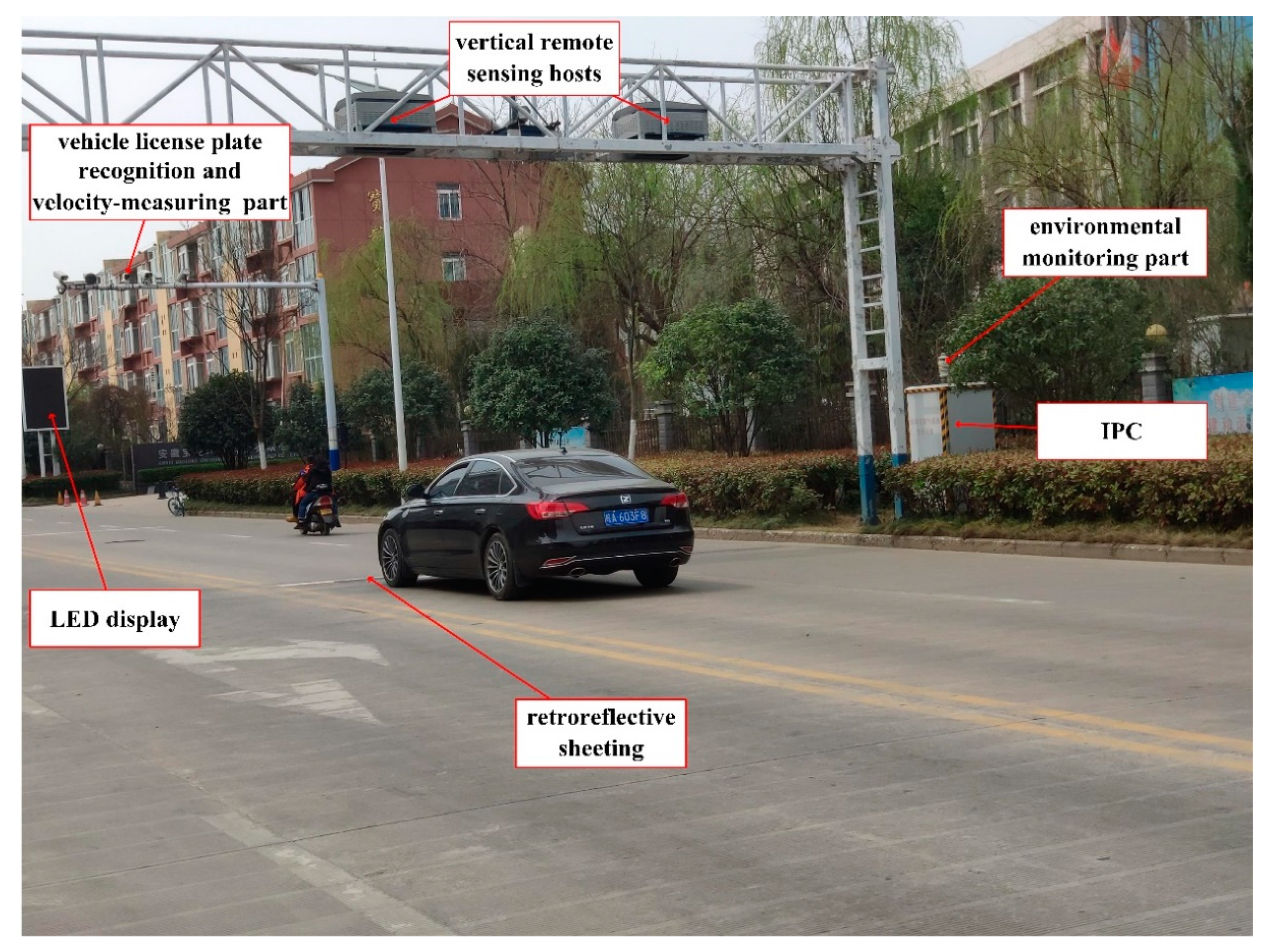

6]. An RSS can be installed on any road, rendering it a feasible and real-time measurement system for law enforcement departments to detect on-road high-emitting vehicles, where it is not viable to use the other three methods.

Many researchers have conducted studies with RSSs. Stedman and Bishop, who invented the RSS and developed it through a series of studies, were its pioneers [7]. Kang et al. proposed a two-step location strategy, combining depth-first search with a greedy strategy, to find the minimum set of roads on which to place traffic emission monitors, based on a digraph modeled from the traffic network [8]. Huang et al. studied the mechanism and applications of RSSs, including a case study from Hong Kong. Their studies showed that the accuracy of remote sensing screening programs, and the number of vehicles they affect, depend strongly on the cut-points, and that using fixed, conservative cut-points in absolute concentrations (% or ppm) may be inappropriate [9]. Bernard et al. carried out extensive RSS research in Europe and used a laboratory limit to distinguish high-emitting vehicles [10,11]. Zhang et al. used a long short-term memory (LSTM) network to forecast vehicle emissions from multi-day RSS observations [12]. Although many studies in different research fields have used RSSs [13], little research has been carried out on automatically detecting on-road high-emitting vehicles with this technology.

High-emitting and low-emitting vehicles are usually classified by fixed cut-off concentrations of the measured pollutants. However, the values set for these cut-points lack a scientific basis [14]. RSS measurements are highly sensitive to many environmental factors, such as geographical and meteorological conditions, air quality, wind, humidity, and temperature, so the cut-off points between high-emitting and low-emitting vehicles vary among sites, times, and RSS equipment. To solve this problem, we propose a novel adaptive method that establishes cut-points and recognizes high-emitting vehicles quickly and automatically. The system combines data analysis with clustering and classification methods from machine learning and applies them to the remote sensing monitoring of on-road high-emitting vehicles.

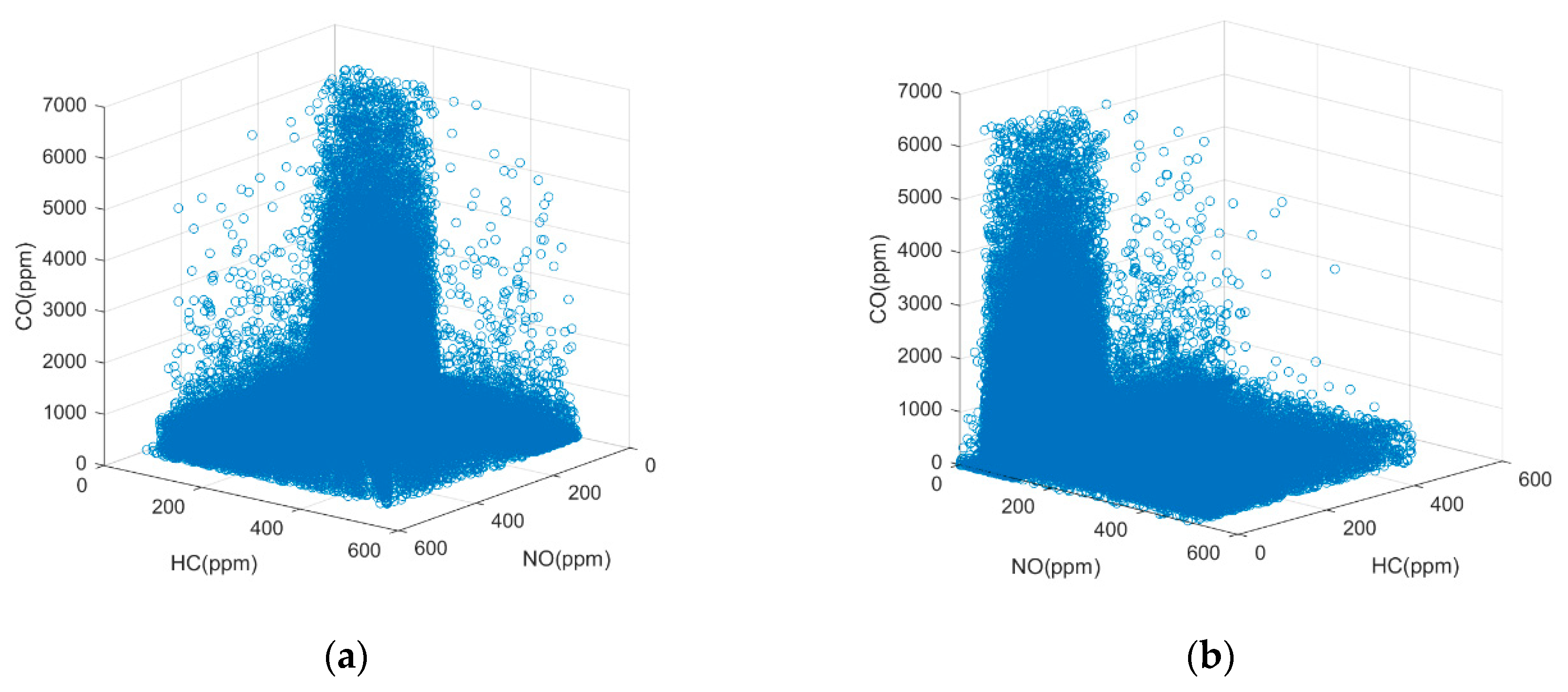

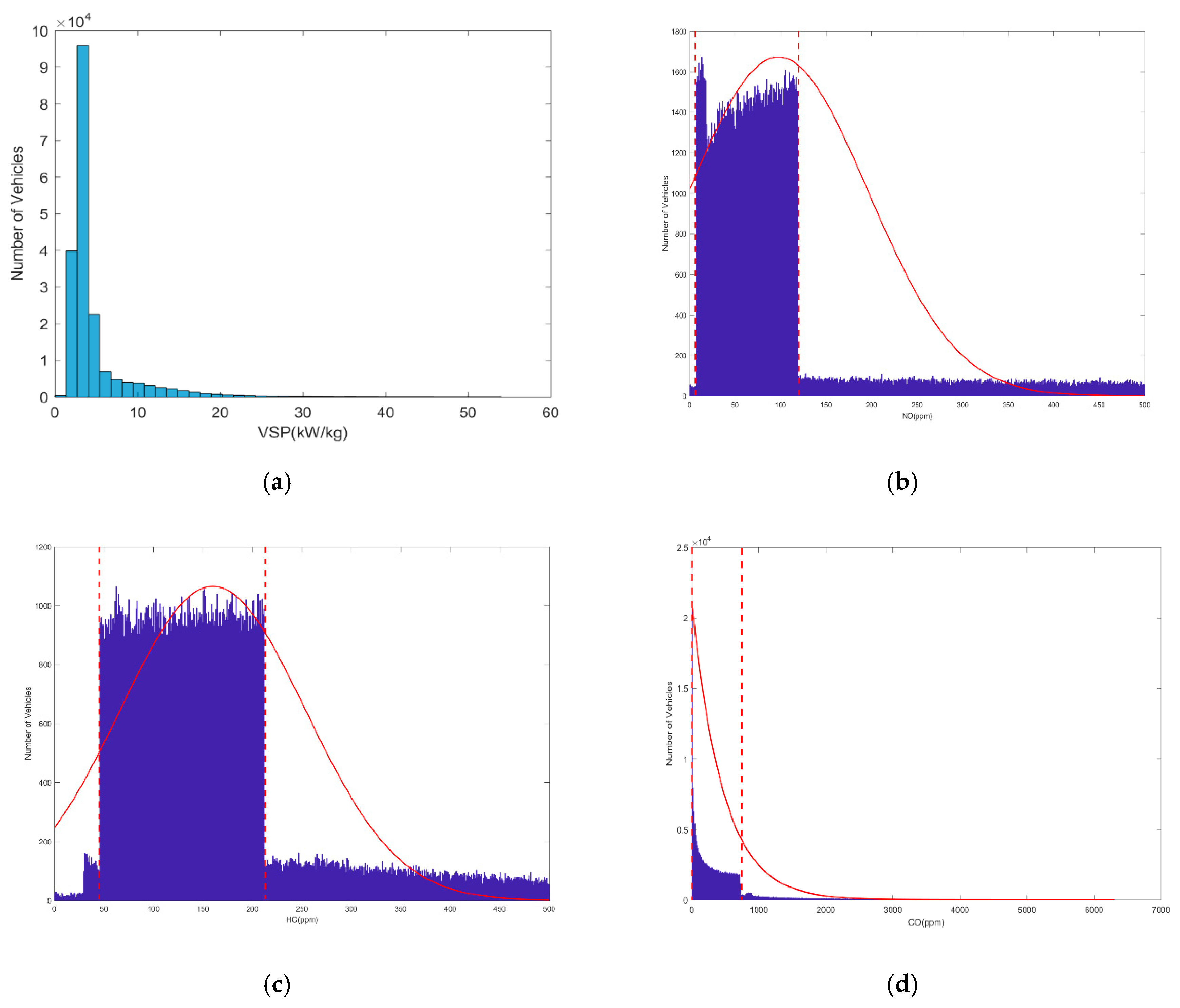

First, 192,097 vehicle emission datasets, comprising the measured gas concentrations, were collected by RSSs over 8 days. Second, three-dimensional and histogram statistical analyses were used to examine the emission relationships. Third, an adaptive clustering algorithm was developed to rapidly label the most recent 10,000 emission datasets and divide them into different high-emitting and low-emitting zones. Finally, new vehicles passing through the RSS were classified automatically and quickly into the corresponding zone using a cluster database and a nearest-neighbor classifier.

The core idea of our proposed algorithm is adaptive clustering. Clustering methods in unsupervised learning generally fall into five types: hierarchical-based, density-based, grid-based, model-based, and partition-based clustering. Hierarchical-based clustering includes Balanced Iterative Reducing and Clustering using Hierarchies (BIRCH) [15], Clustering Using REpresentatives (CURE) [16], RObust Clustering using linKs (ROCK) [17], and Chameleon [18]. These methods aggregate the data (bottom-up) or divide it (top-down) into a series of nested subsets that form a tree structure. The hierarchical approach has two major drawbacks: first, its time complexity is high; second, because each merge or split is made greedily and never revisited, a mistake made at one step propagates to all subsequent steps. Density-based clustering, which includes Density-Based Spatial Clustering of Applications with Noise (DBSCAN) [19], Ordering Points To Identify the Clustering Structure (OPTICS) [20], Distribution-Based Clustering of Large Spatial Databases (DBCLASD) [21], and DENsity-based CLUstEring (DENCLUE) [22], can divide data into clusters of arbitrary shape according to density, connectivity, and boundaries, but it is extremely sensitive to its two input parameters. Grid-based clustering divides the data space into grids and computes the density of each grid to identify high-density grids; adjacent high-density grids are then merged into clusters. WaveCluster [23] and STatistical INformation Grid (STING) [24] are typical examples of this approach. Model-based clustering optimizes the fit between the given data and an assumed model, which is either statistical or based on a neural network; the Gaussian Mixture Model (GMM) [25] and Self-Organizing Maps (SOM) [26] are representative of these two types, respectively. Partition-based clustering iteratively relocates data points among clusters with a heuristic algorithm until an optimization criterion is met. There are many partitioning algorithms, such as K-Means, K-Means++ [27], kernel K-Means [28], K-Medoids [29], K-Modes [30], and Fuzzy C-Means (FCM) [31]. K-Means++ reduces the sensitivity to the initial centers, and K-Medoids reduces the sensitivity to outliers. K-Modes handles categorical data and kernel K-Means handles non-convex clusters, neither of which standard K-Means can do. FCM is a soft clustering method that assigns fuzzy memberships, whereas K-Means makes hard assignments.

The RSS data in this study are produced quickly and in real time, and they consist of a large number of measured concentrations. Given the above advantages and disadvantages of the clustering methods, our approach uses a partition-based method. The most typical partition-based method, K-means, is efficient on large datasets and has low time and space requirements. However, K-means is sensitive to outliers and to the selection of the initial K centers. The adaptive method proposed in this paper, AFR-OHV (automatic and fast recognition of on-road high-emitting vehicles), is designed to solve these two problems.

The remainder of this paper is organized as follows. In Section 2, the emission datasets of the RSS are analyzed. Our proposed method is introduced in detail in Section 3. The experimental results and discussion are provided in Section 4, and the paper is concluded in Section 5.

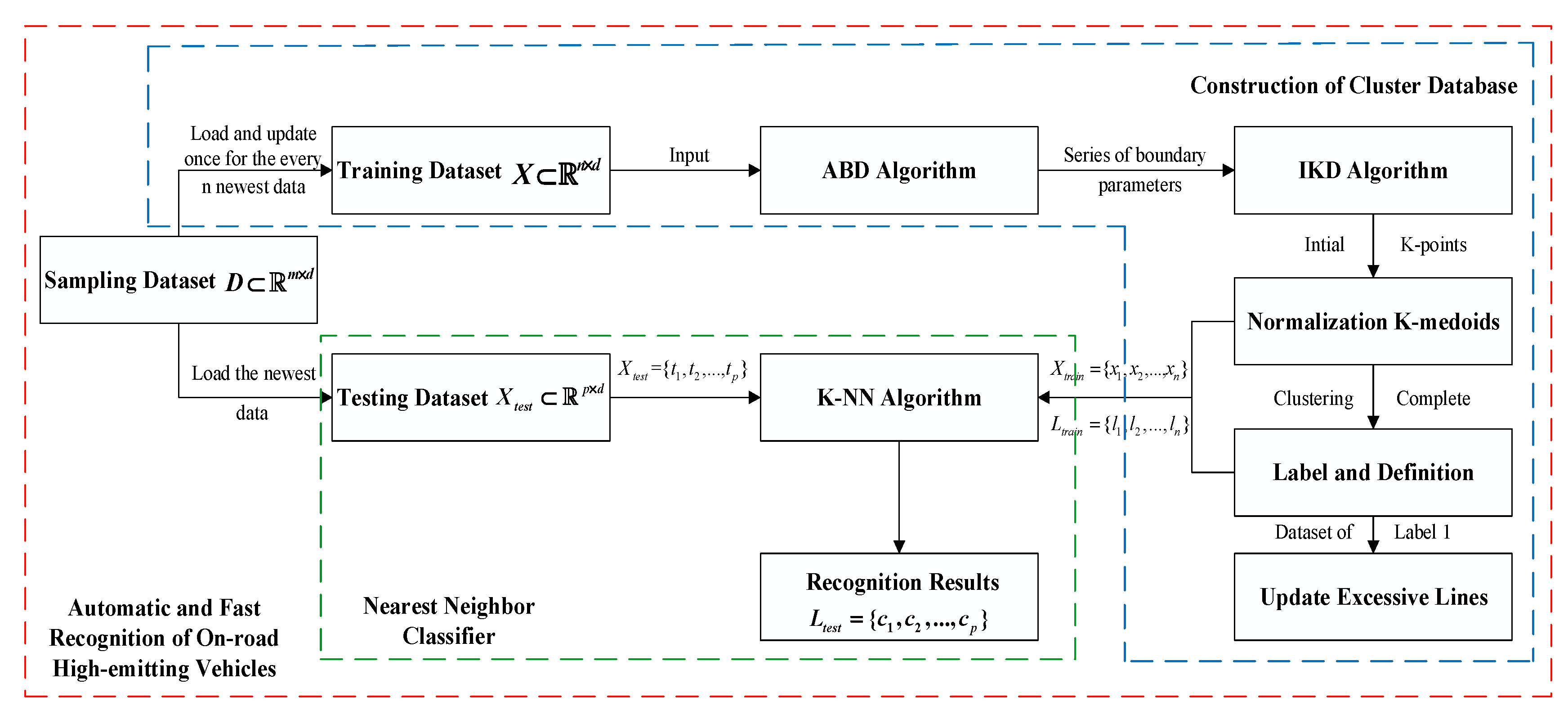

3. Methods

This paper proposes an automatic and fast recognition method that detects on-road high-emitting vehicles using the emission relationships analyzed above. The proposed method is illustrated in Figure 4. The training dataset is loaded from the sampling dataset and updated after every n new samples, and the automatic boundary detection (ABD) and initial K-center determination (IKD) methods are applied to determine the initial positions of the K center points. Next, the training dataset is normalized so that the different emission gases carry the same weight, and it is clustered with K-medoids. The resulting clusters are then labeled and defined, and the data labeled “1” are extracted to update the cut-points between the high-emitting and low-emitting zones of each emission gas. These processes construct the cluster database of our method, and its outputs, the training data and their labels, are passed to the nearest-neighbor classifier to recognize the testing dataset automatically and quickly. The individual sub-algorithms are described in the following subsections.
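The rolling update of the training dataset can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the class `SlidingTrainingWindow` and its parameters `window_size` (how many of the most recent samples are kept, for example the 10,000 mentioned in the introduction) and `update_every` (the n new samples after which the cluster database is rebuilt) are hypothetical names.

```python
from collections import deque


class SlidingTrainingWindow:
    """Hypothetical rolling training buffer for (NO, HC, CO) samples.

    Keeps only the newest `window_size` samples and reports when
    `update_every` new samples have arrived since the last rebuild.
    """

    def __init__(self, window_size, update_every):
        # deque with maxlen drops the oldest sample automatically when full.
        self.buffer = deque(maxlen=window_size)
        self.update_every = update_every
        self._since_update = 0

    def add(self, sample):
        """Append one sample; return True when the cluster database is due for a rebuild."""
        self.buffer.append(sample)
        self._since_update += 1
        if self._since_update >= self.update_every:
            self._since_update = 0
            return True
        return False

    def training_set(self):
        """Return the current window, oldest sample first."""
        return list(self.buffer)
```

A caller would push each new reading through `add` and rerun boundary detection, center initialization, clustering, and labeling on `training_set()` whenever `add` returns `True`.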

3.1. Automatic Boundary Detection

First, automatic boundary detection (ABD) is proposed to improve the adaptability of the high-emitting recognition algorithm; it is detailed in Algorithm 1. ABD loads the most recent n datasets into the database of the IPC. The choice of n, and the tests used to optimize the clustering speed, are discussed in the experimental section.

Because this paper focuses on the concentrations of NO, HC, and CO emissions, the feature dimension of the datasets is 3. Owing to the properties of partition-based clustering, the method can also be applied to datasets with more feature dimensions. In Algorithm 1, three library functions are called: one rounds a value up, one takes the maximum of an array, and one computes the histogram of an array over a given number of equal-width intervals.

| Algorithm 1. ABD Algorithm |
|---|
| Input:: m 3-dimensional emission datasets; : the concentrations of NO, HC, CO |
| : the number of datasets that can be loaded in the main memory |
| Output:: the max concentration of NO, HC, CO; |
| : the upper boundary values of NO, HC, CO; |
| : the lower boundary values of NO, HC, CO; |
| 1: load from |
| 2: for 1 to 3 do |
| 3: for 1 to n do |
| 4: |
| 5: |
| 6: end for |
| 7: for 1 to do |
| 8: |
| 9: end for |
| 10: if , then |
| 11: |
| 12: |
| 13: else |
| 14: |
| 15: |
| 16: end if |
| 17: end for |

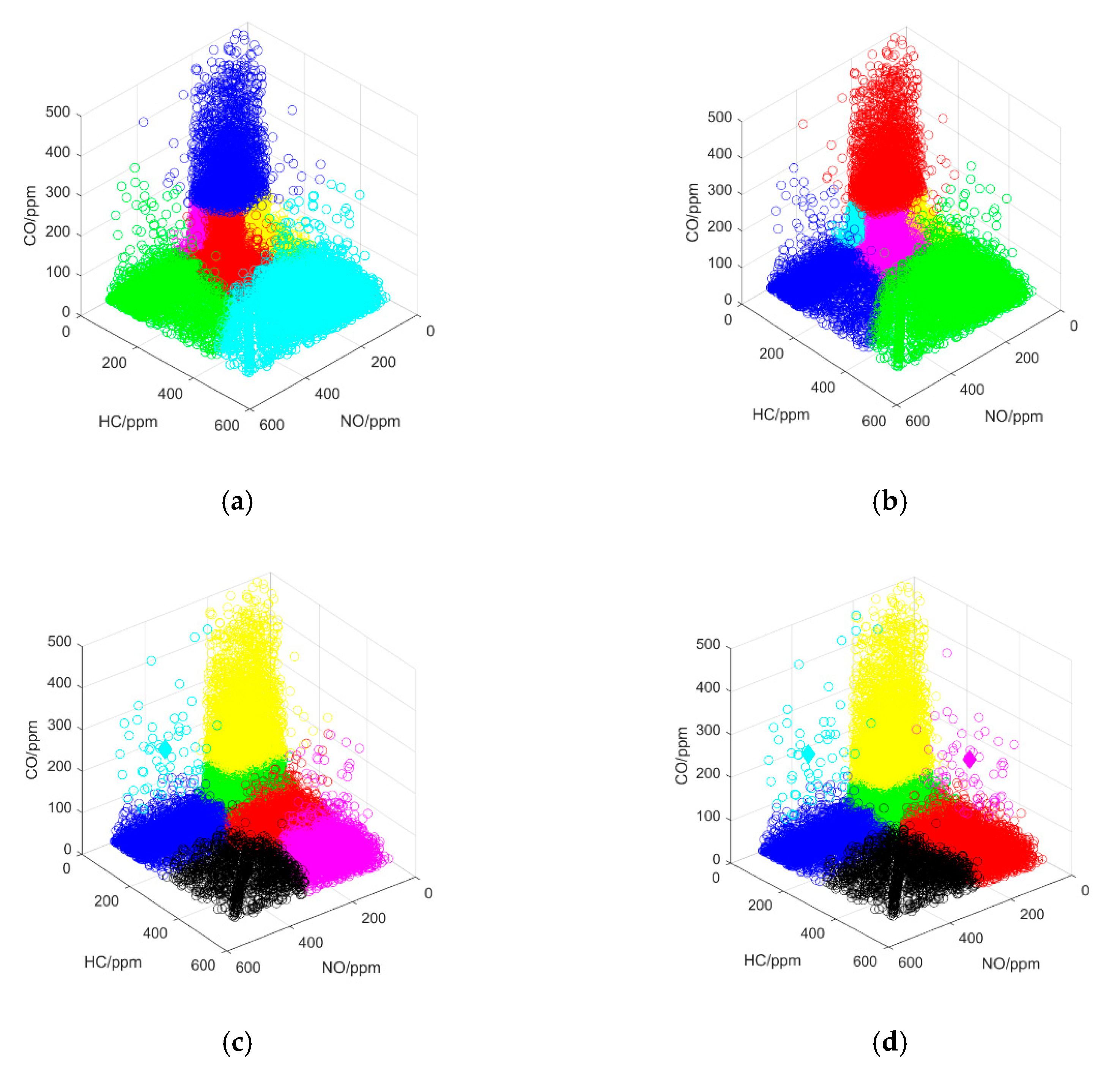

Figure 3b–d shows an example of the results computed by Algorithm 1, where n is the maximum number of samples. The automatically detected boundaries are indicated by the red dotted lines.
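Because the step-by-step formulas of Algorithm 1 are not recoverable here, the sketch below only illustrates the general idea of histogram-based boundary detection on the most recent samples. The boundary rule is an assumption made for illustration: starting from the most populated histogram bin, it searches upward for the first bin whose count falls below a fraction `ratio` of the peak count, and takes that bin's edges as the lower and upper boundaries. The function name `detect_boundaries` and the parameters `num_bins` and `ratio` are hypothetical.

```python
def detect_boundaries(samples, num_bins=50, ratio=0.05):
    """Return per-gas maxima, upper boundaries and lower boundaries.

    samples: sequence of (NO, HC, CO) tuples from the most recent window.
    The sparse-bin rule below is an illustrative assumption, not Algorithm 1.
    """
    maxima, uppers, lowers = [], [], []
    for g in range(len(samples[0])):
        column = [s[g] for s in samples]
        c_max = max(column)
        lower = upper = c_max  # fallback when no sparse bin is found
        if c_max > 0:
            width = c_max / num_bins  # equal-width intervals over [0, c_max]
            counts = [0] * num_bins
            for v in column:
                # Clamp so negative readings land in bin 0 and c_max in the last bin.
                idx = min(max(int(v / width), 0), num_bins - 1)
                counts[idx] += 1
            peak = max(range(num_bins), key=counts.__getitem__)
            threshold = ratio * counts[peak]
            # Scan upward from the peak for the first sparsely populated bin.
            for j in range(peak + 1, num_bins):
                if counts[j] < threshold:
                    lower, upper = j * width, (j + 1) * width
                    break
        maxima.append(c_max)
        uppers.append(upper)
        lowers.append(lower)
    return maxima, uppers, lowers
```

With this assumed rule, the lower boundary marks where the dense low-concentration region ends and the upper boundary marks where the sparse high-concentration tail begins, which is the kind of split the red dotted lines in Figure 3b–d indicate.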

3.2. Initial K-Center Determination

After the maximum and boundary concentrations of each emission gas are established, the proposed method applies the initial K-center determination (IKD) algorithm, which is detailed in Algorithm 2.

The IKD algorithm first calculates the center values of the high- and low-emission zones of each gas and then forms matrix A, which contains all of these center values. At the end of IKD, a library function returns the binary value of each index, taken from low to high, and these binary values are used to generate the initial center points automatically. Since the ABD and IKD methods are consecutive calculation steps, we combine them into a single process termed automatic detection of initial K-centers (ADIK).

| Algorithm 2. IKD Algorithm |
|---|
| Input:: the max concentration of NO, HC, CO |
| : the upper-boundary values of NO, HC, CO |
| : the lower-boundary values of NO, HC, CO. |
| Output:: 3-dimensional initial points |
| 1: for 1 to 3 do |
| 2: |
| 3: |
| 4: end for |
| 5: define matrix |
| 6: for 1 to do |
| 7: |
| 8: |
| 9: end for |
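A sketch of the IKD idea follows, under two assumptions not stated in the text: the low-zone center of each gas is taken as the midpoint of [0, lower boundary], and the high-zone center as the midpoint of [upper boundary, maximum]. Each index k = 0, 1, ..., 7 is written in binary, and each bit selects the low (0) or high (1) center of the corresponding gas from matrix A, which yields 2^3 = 8 initial centers for the three gases. The bit order (first bit for NO, then HC, then CO) and the function name `initial_centers` are hypothetical.

```python
def initial_centers(maxima, uppers, lowers):
    """Build 2**d initial centers from per-gas low/high zone centers (sketch)."""
    d = len(maxima)
    # Matrix A: row 0 = assumed low-zone centers, row 1 = assumed high-zone centers.
    A = [
        [lowers[g] / 2 for g in range(d)],
        [(uppers[g] + maxima[g]) / 2 for g in range(d)],
    ]
    centers = []
    for k in range(2 ** d):  # indices from low to high
        bits = format(k, f"0{d}b")  # e.g. k = 4, d = 3 -> "100"
        # Bit g selects the low (0) or high (1) zone center of gas g.
        centers.append(tuple(A[int(b)][g] for g, b in enumerate(bits)))
    return centers
```

Under this ordering, index 0 combines the low-zone centers of all three gases and index 7 combines all the high-zone centers, so every low/high combination starts with its own center.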

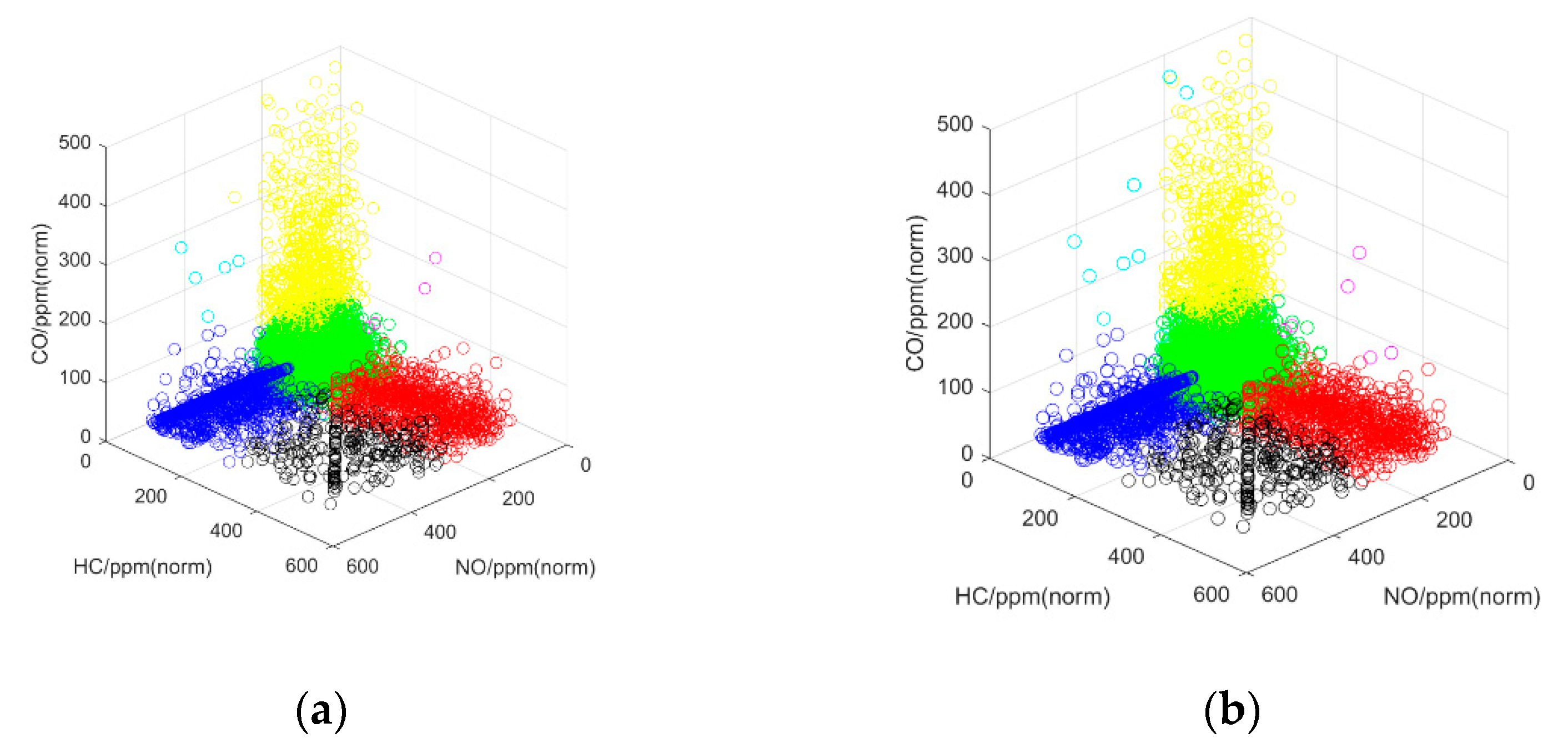

3.3. Normalized K-Medoids

By running the ADIK algorithm, we acquire the initial positions of the K center points. To give the NO, HC, and CO emission data the same weight, a normalization method is applied to each gas before clustering.
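Since the normalization equation itself is not recoverable here, the sketch below assumes min-max scaling of each gas to [0, 1], using the minimum and maximum of the current training window; the original may use a different formula. The function names `fit_min_max` and `apply_min_max` are hypothetical.

```python
def fit_min_max(samples):
    """Per-feature minimum and maximum over the training window (assumed scheme)."""
    d = len(samples[0])
    lo = [min(s[g] for s in samples) for g in range(d)]
    hi = [max(s[g] for s in samples) for g in range(d)]
    return lo, hi


def apply_min_max(points, lo, hi):
    """Scale every feature to [0, 1]; a constant feature is mapped to 0.0."""
    return [
        tuple(
            (p[g] - lo[g]) / (hi[g] - lo[g]) if hi[g] > lo[g] else 0.0
            for g in range(len(p))
        )
        for p in points
    ]
```

The same `lo` and `hi` would be applied to the initial centers as well, since Algorithm 3 takes normalized initial points as input, so that centers and samples share one scale.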

K-medoids is then used to cluster the emission data. K-means and K-medoids differ in how the central point $c_k$ of each class is selected:

$$c_k^{\text{K-means}} = \frac{1}{|C_k|}\sum_{x \in C_k} x, \qquad c_k^{\text{K-medoids}} = \arg\min_{y \in C_k} \sum_{x \in C_k} \lVert x - y \rVert,$$

where $C_k$ is the dataset of class $k$. Compared with K-means, selecting the central point with K-medoids effectively reduces the influence of outliers on the clustering results, although it also increases the total running time of the algorithm. The detailed calculation process of K-medoids is shown in Algorithm 3.

In Algorithm 3, a library function returns an array containing copies of A along the row and column dimensions. The running time of Algorithm 3 depends largely on the size of the clustering datasets and on the initial positions of the center points, as shown in the experimental section.

| Algorithm 3. K-Medoids algorithm |
|---|
| Input:: n 3D normalized emission datasets extracted from the database |
| : normalized initial points |
| - convergence threshold |
| Output:: 3-dimensional final K points |
| —indicates the class to which belongs; |
| : iterations of algorithm |
| 1: for 1 to 100 do |
| 2: for 1 to n do |
| 3: |
| 4: |
| 5: |
| 6: end for |
| 7: for 1 to do |
| 8: |
| 9: |
| 10: for 1 to do |
| 11: |
| 12: end for |
| 13: |
| 14: |
| 15: end for |
| 16: if |
| 17: break |
| 18: end if |
| 19: |
| 20: end for |
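The loop structure of Algorithm 3 (at most 100 iterations, an assignment step over the n samples, a medoid update for each cluster, and a convergence test against a threshold) can be sketched as the alternating K-medoids below. The function name, the use of Euclidean distance, the shift-based convergence test, and the handling of empty clusters are assumptions of this sketch, not details confirmed by the text.

```python
import math


def k_medoids(points, centers, tol=1e-6, max_iter=100):
    """Alternating K-medoids: returns (final centers, class of each point, iterations)."""
    centers = list(centers)
    labels = [0] * len(points)
    it = 0
    for it in range(1, max_iter + 1):
        # Assignment step: each point joins the cluster of its nearest center.
        labels = [
            min(range(len(centers)), key=lambda k: math.dist(p, centers[k]))
            for p in points
        ]
        # Update step: the new center is the member with the smallest total
        # distance to all other members of its cluster (the medoid).
        new_centers = []
        for k, c in enumerate(centers):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if not members:
                new_centers.append(c)  # keep an empty cluster's center unchanged
                continue
            new_centers.append(
                min(members, key=lambda y: sum(math.dist(x, y) for x in members))
            )
        # Convergence test: stop once no center moves more than `tol`.
        shift = max(math.dist(a, b) for a, b in zip(centers, new_centers))
        centers = new_centers
        if shift < tol:
            break
    return centers, labels, it
```

Cluster indices here are 0-based, and how they map to the labels of Table 1 is not reproduced in this sketch. Each medoid update costs on the order of |C_k|^2 distance evaluations, which is consistent with the text's observation that K-medoids is slower than K-means.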

3.4. Label and Definition

After clustering is finished, the different clusters of emission data are labeled according to the clustering results described in the previous subsection. In this way, the unlabeled samples in the training datasets are transformed into labeled samples. The labels and definitions of the results are shown in Table 1.

3.5. Nearest Neighbor Classifier

Once the clustered datasets have been established and labeled, the K-NN algorithm, which is shown in Algorithm 4, is applied to rapidly detect high-emitting vehicles.

| Algorithm 4. K-NN algorithm |
|---|
| Input:—n 3-dimensional training emission datasets |
| —m 3-dimensional testing emission datasets |
| —the labels of training emission datasets |
| —initial parameters of K-NN |
| Output:—the labels of testing emission datasets |
| 1: for 1 to do |
| 2: |
| 3: |
| 4: |
| 5: |
| 6: |
| 7: end for |

K-NN calculates the Euclidean distance between a testing sample and every training sample and selects the training samples closest to the testing sample. The label that appears most frequently among these nearest training samples is assigned to the testing sample.
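A minimal sketch of this K-NN step, using Euclidean distance and a majority vote, is shown below. The function name `knn_predict` and the default `k=5` are hypothetical; the number of neighbors used in the paper is not stated in this section.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, test_x, k=5):
    """Label each test sample by majority vote among its k nearest training samples."""
    predictions = []
    for q in test_x:
        # Indices of the k training samples with the smallest Euclidean distance to q.
        nearest = sorted(range(len(train_x)), key=lambda i: math.dist(q, train_x[i]))[:k]
        votes = Counter(train_y[i] for i in nearest)
        predictions.append(votes.most_common(1)[0][0])
    return predictions
```

Because the `Counter` is filled in distance order, a tie between labels is resolved in favor of the tied label that appears first in the distance-sorted neighbor list. Sorting all training samples costs O(n log n) per query, which is acceptable for a window of about 10,000 samples; a spatial index would be an alternative for larger windows.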

3.6. Update Cut-Points of Excessive Emissions

Because the data labeled “1” are defined as the “No Excessive Emissions” zone, they can be extracted to update the cut-points between the high-emitting and low-emitting zones. In the proposed approach, the maximum concentration of each emission gas within the data labeled “1” is taken as that gas's cut-point, and the cut-points are updated after every n new input samples.
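Updating the cut-points from the data labeled “1” reduces to a per-gas maximum, as sketched below. The function name `update_cut_points` and the convention of passing labels as integers, with 1 meaning “No Excessive Emissions”, are assumptions for illustration.

```python
def update_cut_points(samples, labels, clean_label=1):
    """Cut-point of each gas = its maximum concentration among samples labeled `clean_label`."""
    clean = [s for s, lab in zip(samples, labels) if lab == clean_label]
    if not clean:
        return None  # no "No Excessive Emissions" samples in this window
    return [max(s[g] for s in clean) for g in range(len(clean[0]))]
```

Returning `None` for an empty zone lets a caller keep the previous cut-points instead of overwriting them with meaningless values.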

5. Conclusions

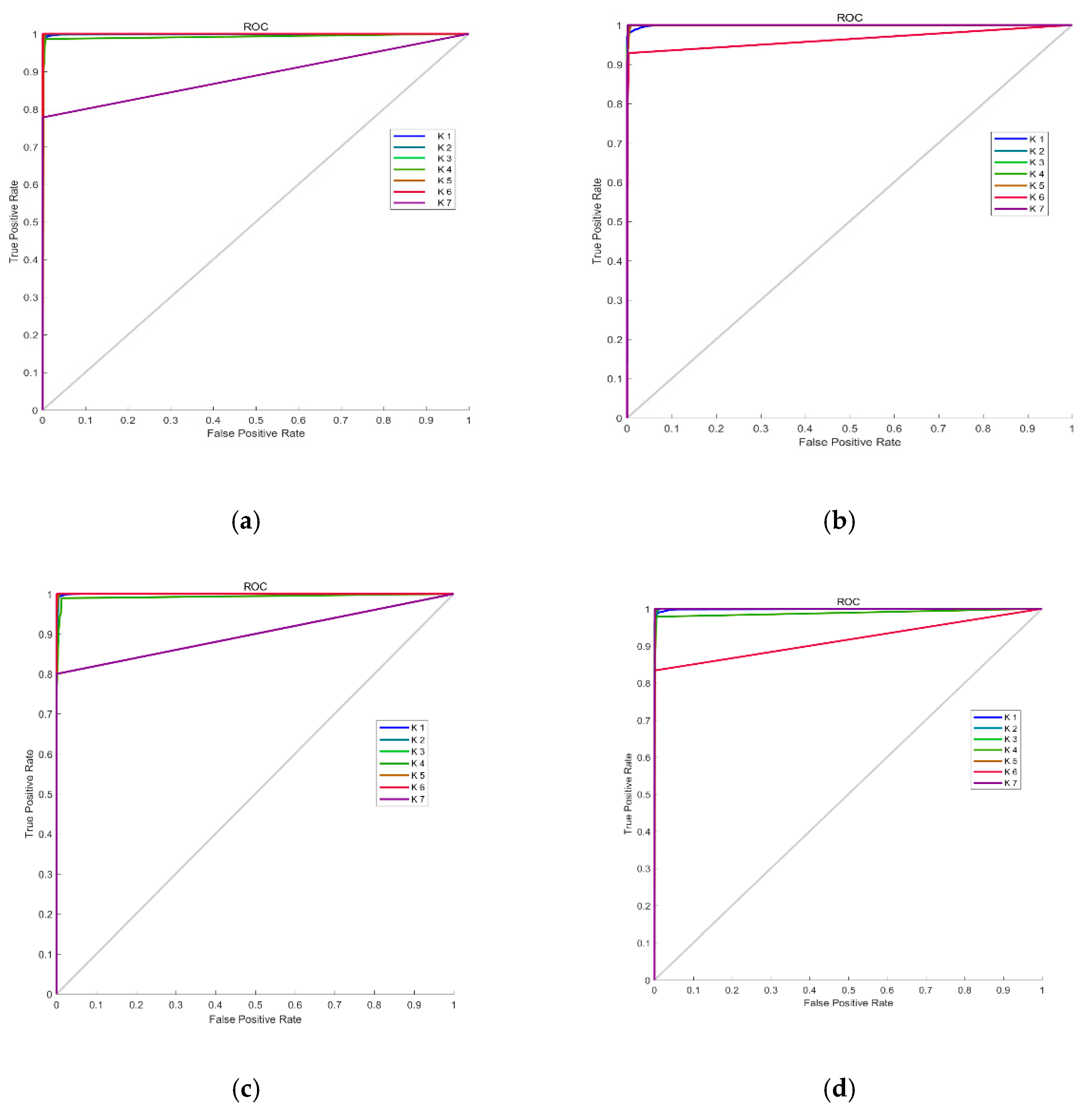

This paper proposes a method for the automatic and fast recognition of on-road high-emitting vehicles, called AFR-OHV. The first step of AFR-OHV adaptively determines the initial clustering centers according to the distribution of the most recently input RSS data, which counteracts the effects of environmental change to some extent. The second step applies normalized K-medoids clustering to the RSS data. The clustered data are then labeled and divided into defined emission zones to construct a clustering database, and the cut-points are updated automatically. The last step recognizes high-emitting vehicles passing through the RSS with a nearest-neighbor classifier and updates the clustering database.

As reported in the experimental section, the performance of the method was verified using real data collected by RSSs from December 2018 to January 2019 on Xueyuan Road, Shijiazhuang City, Hebei Province, China, and Yangqiao Road, Hefei City, Anhui Province, China. Different clustering methods were compared, and the experimental results show that the running time, DBI, and DVI of our method were superior to those of three other methods, namely ADIK + K-means, K-medoids, and K-means. Our classifier also achieved better PRE, REC, and AUC performance indexes. Finally, the rates of vehicles exceeding the emission standards were calculated from multiple emission datasets collected by RSSs at two different geographical locations. These rates provide reference values for law enforcement departments to establish evaluation criteria for on-road high-emitting vehicles detected by remote sensing systems.

A limitation of this work is that, when optical remote sensing systems developed by different research institutions or companies are used to detect on-road high-emitting vehicles, the distributions of the emission datasets may differ significantly. In future work, we will investigate transfer learning and meta-learning to improve our learning method, with the objective that a model trained on data from one set of optical remote sensing systems can be applied effectively to other optical remote sensing systems. In addition, we will investigate multi-RSS networking on adjacent streets to further reduce monitoring error and improve recognition accuracy.