Real-Time Water Surface Object Detection Based on Improved Faster R-CNN

Abstract

1. Introduction

2. Related Work

3. Water Surface Object Detection Method

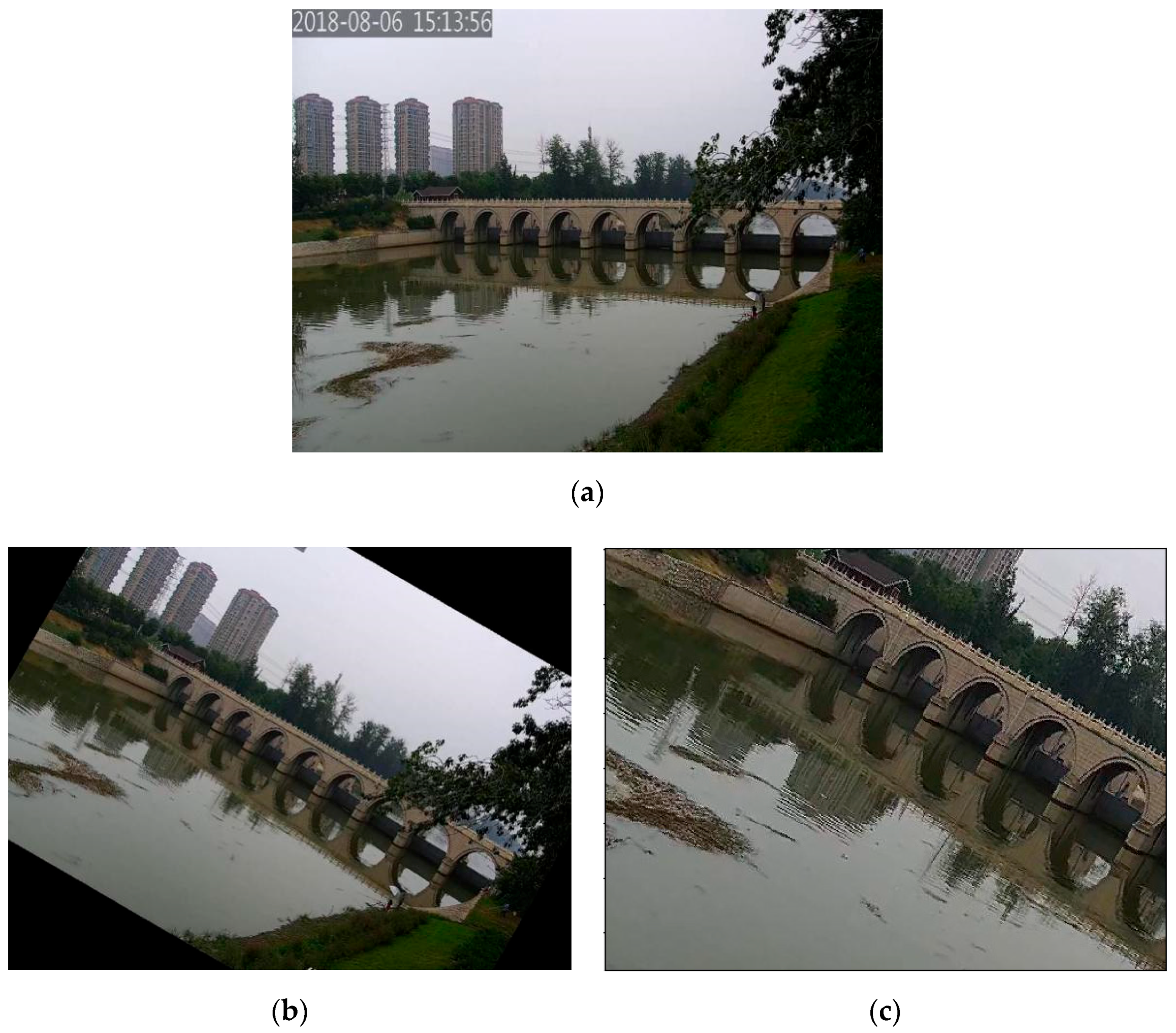

3.1. Image Preprocessing

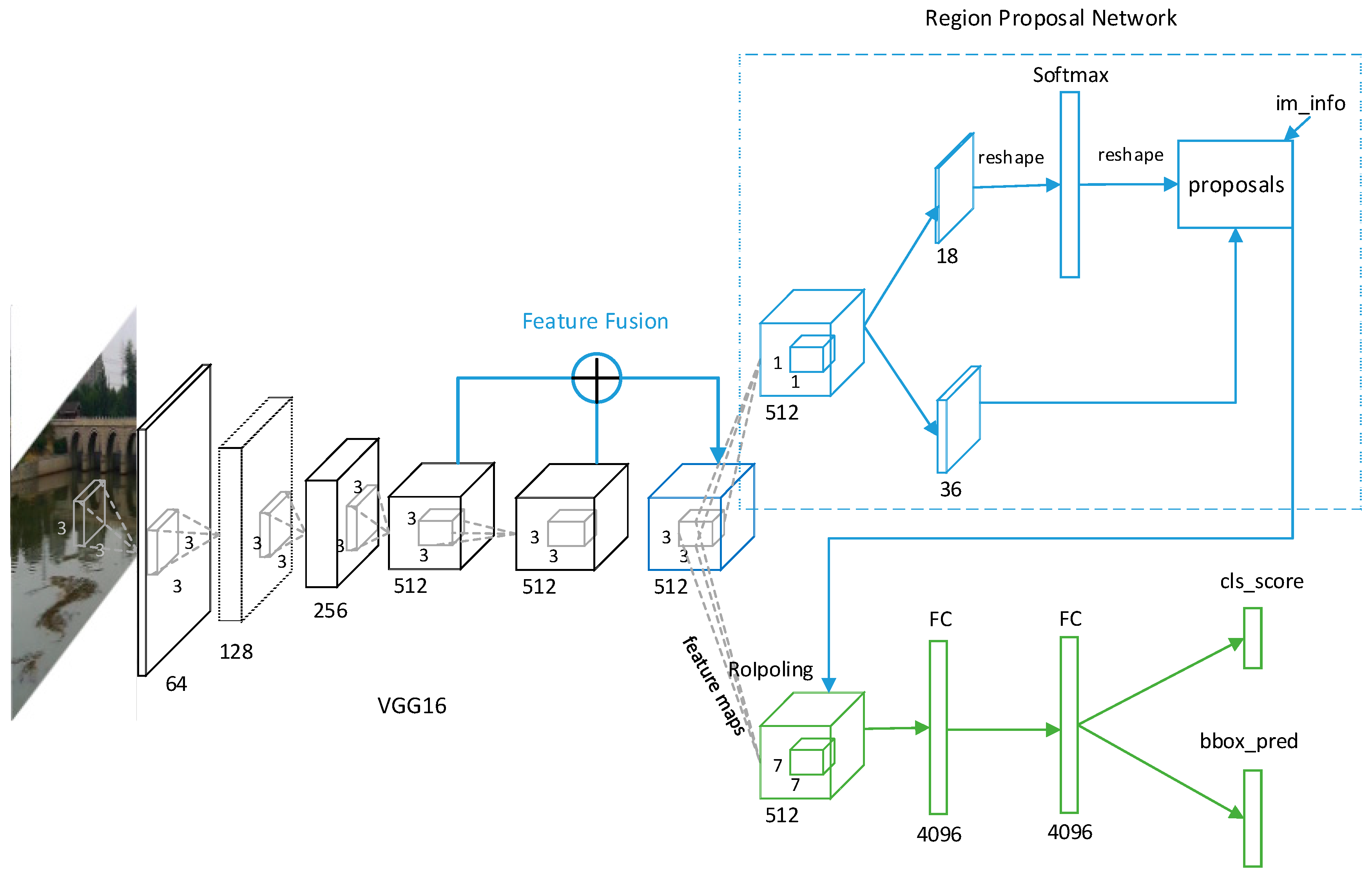

3.2. Scale-Aware Network Model Based on Faster R-CNN

3.2.1. Receptive Field

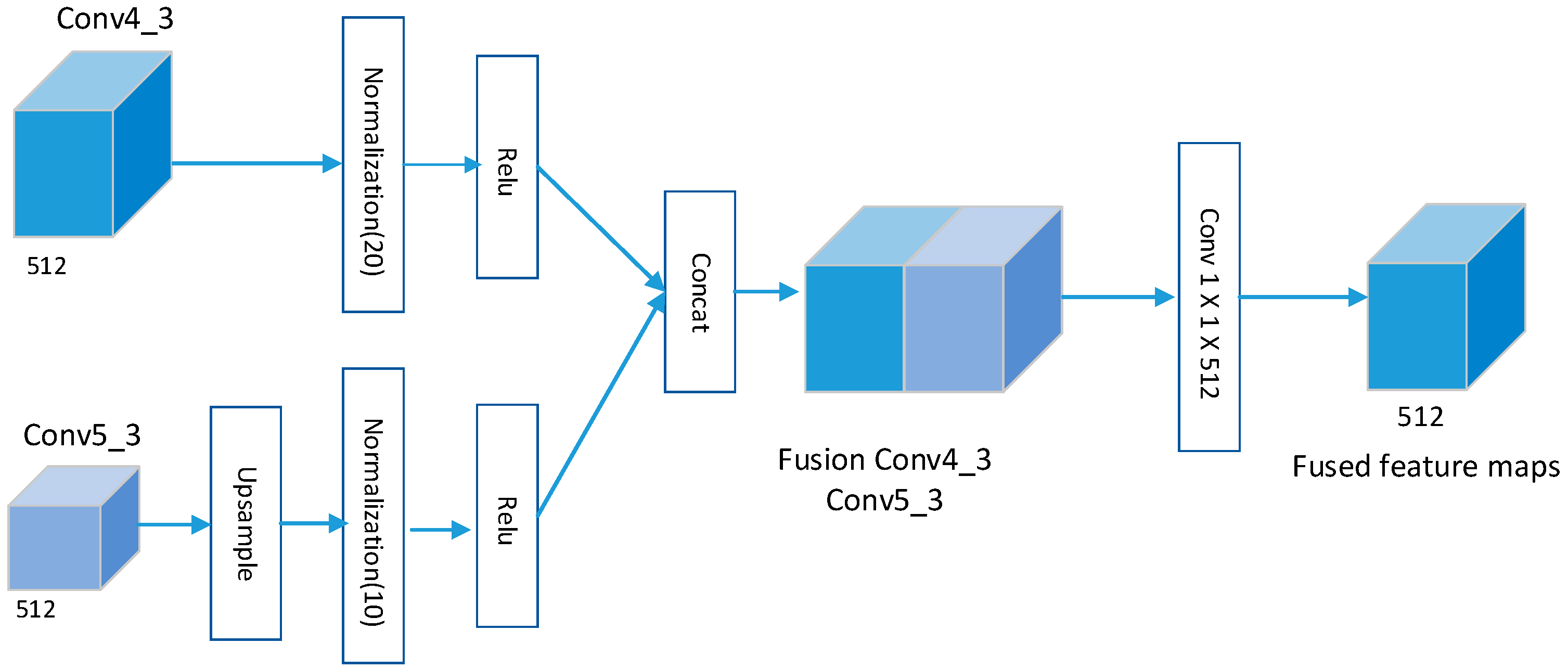

3.2.2. Feature Fusion Layer

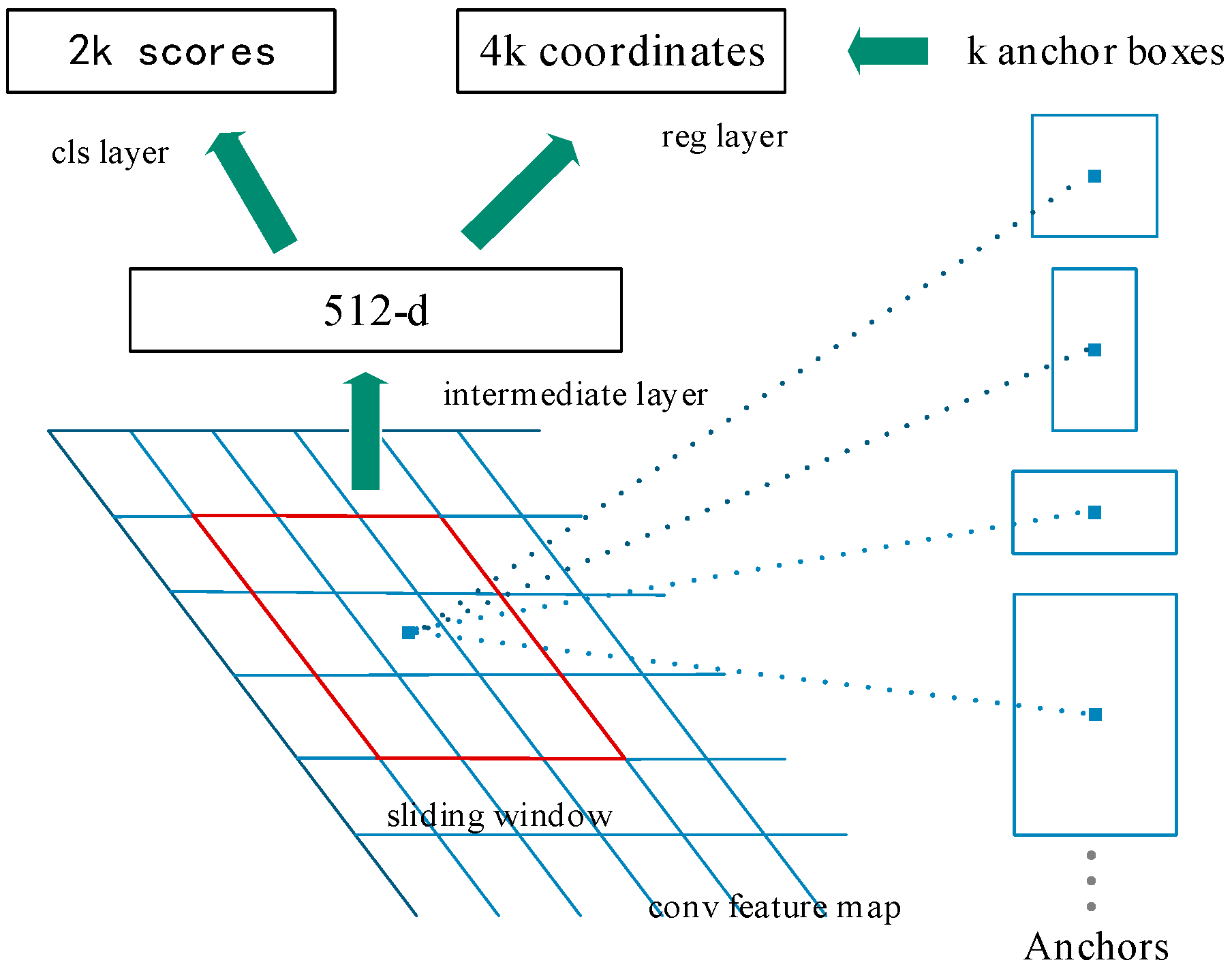

3.2.3. Region Proposal Network

3.2.4. Loss Function

3.3. Dataset Construction

3.4. Training

- Step 1: Train the RPN. The RPN is initialized with the parameters of a network pre-trained for ImageNet classification and is then trained end to end.

- Step 2: Train Fast R-CNN. The proposals generated in Step 1 are used to fine-tune Fast R-CNN end to end.

- Step 3: Fine-tune the RPN. The RPN is initialized from the Fast R-CNN obtained in Step 2 and fine-tuned while the parameters of the shared convolutional layers are kept fixed.

- Step 4: Fine-tune Fast R-CNN. The region proposals obtained in Step 3 are used to fine-tune the fully connected layers of Fast R-CNN, while the shared convolutional layers remain fixed.
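
The four steps above differ mainly in which parameters are updated and which proposals are consumed. The following is a minimal sketch of that schedule as plain data, not the authors' training code. The group names `conv_backbone`, `rpn_head`, and `rcnn_fc`, the `Stage` class, and the helper `not_updated` are hypothetical names introduced for illustration. Where the list does not state an initialization, the field is left as `None`.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional

# Hypothetical names for the three parameter groups of the detector.
BACKBONE, RPN_HEAD, RCNN_FC = "conv_backbone", "rpn_head", "rcnn_fc"
ALL_GROUPS = frozenset({BACKBONE, RPN_HEAD, RCNN_FC})


@dataclass(frozen=True)
class Stage:
    step: int
    network: str                   # network trained in this step
    trainable: FrozenSet[str]      # parameter groups updated in this step
    init_from: Optional[str]       # weight source, None if not stated in the text
    proposals_from: Optional[int]  # step whose RPN supplies the region proposals


SCHEDULE = (
    # Step 1: RPN trained end to end from ImageNet-initialized weights.
    Stage(1, "RPN", frozenset({BACKBONE, RPN_HEAD}), "ImageNet", None),
    # Step 2: Fast R-CNN fine-tuned end to end on the Step 1 proposals.
    Stage(2, "Fast R-CNN", frozenset({BACKBONE, RCNN_FC}), None, 1),
    # Step 3: RPN fine-tuned from the Step 2 network, convolutional layers fixed.
    Stage(3, "RPN", frozenset({RPN_HEAD}), "Step 2 Fast R-CNN", None),
    # Step 4: only the fully connected layers updated, using the Step 3 proposals.
    Stage(4, "Fast R-CNN", frozenset({RCNN_FC}), None, 3),
)


def not_updated(stage: Stage) -> FrozenSet[str]:
    """Parameter groups that receive no gradient update in this step."""
    return ALL_GROUPS - stage.trainable


for stage in SCHEDULE:
    print(
        f"Step {stage.step} ({stage.network}): "
        f"update {', '.join(sorted(stage.trainable))}; "
        f"leave {', '.join(sorted(not_updated(stage)))} unchanged"
    )
```

In a real framework, the `trainable` set would typically translate into enabling or disabling gradient updates for the corresponding layers before each step.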

4. Experiments

4.1. Setting

4.2. Evaluation Criterion

4.2.1. MAP

4.2.2. Detection Speed

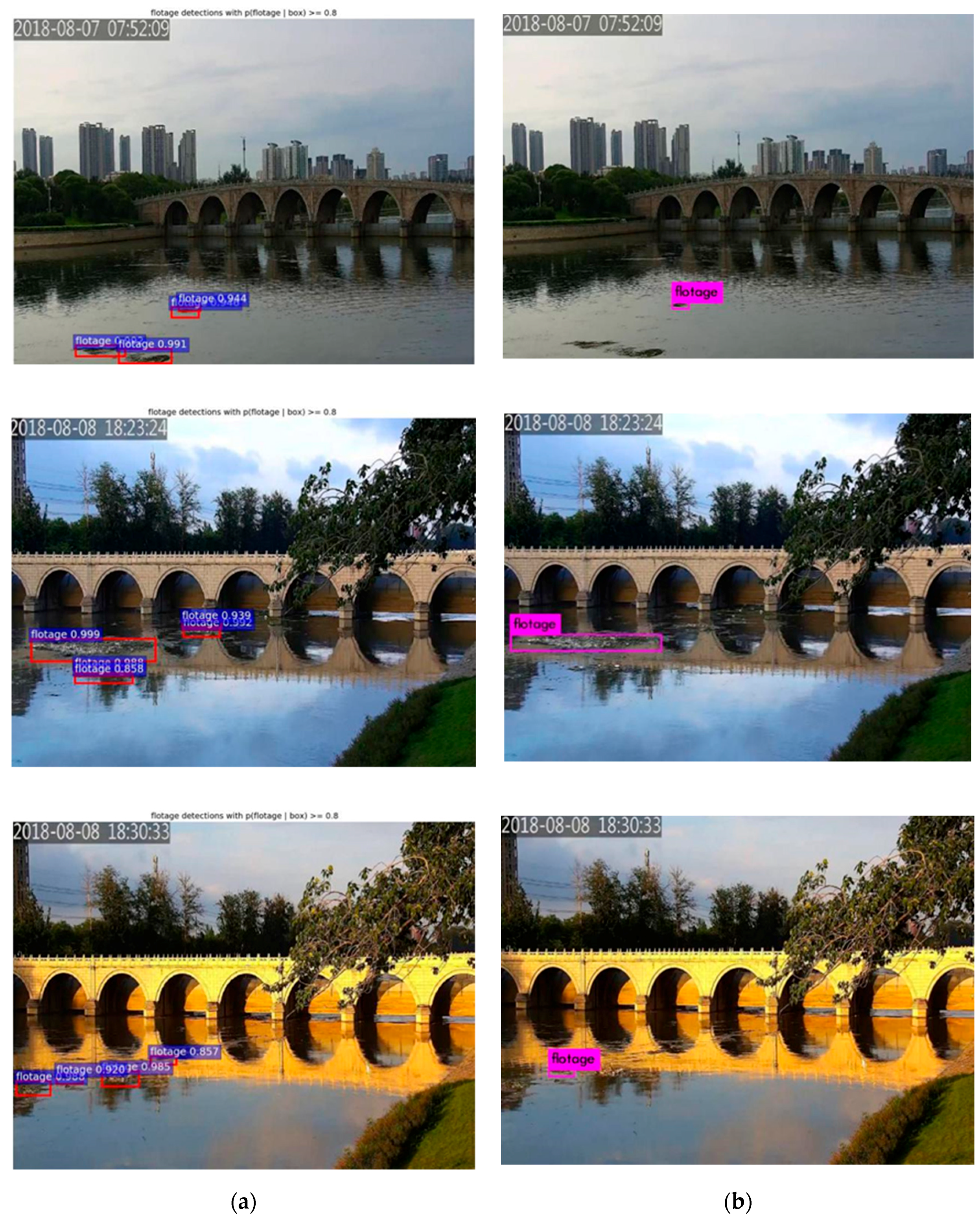

4.3. Analysis

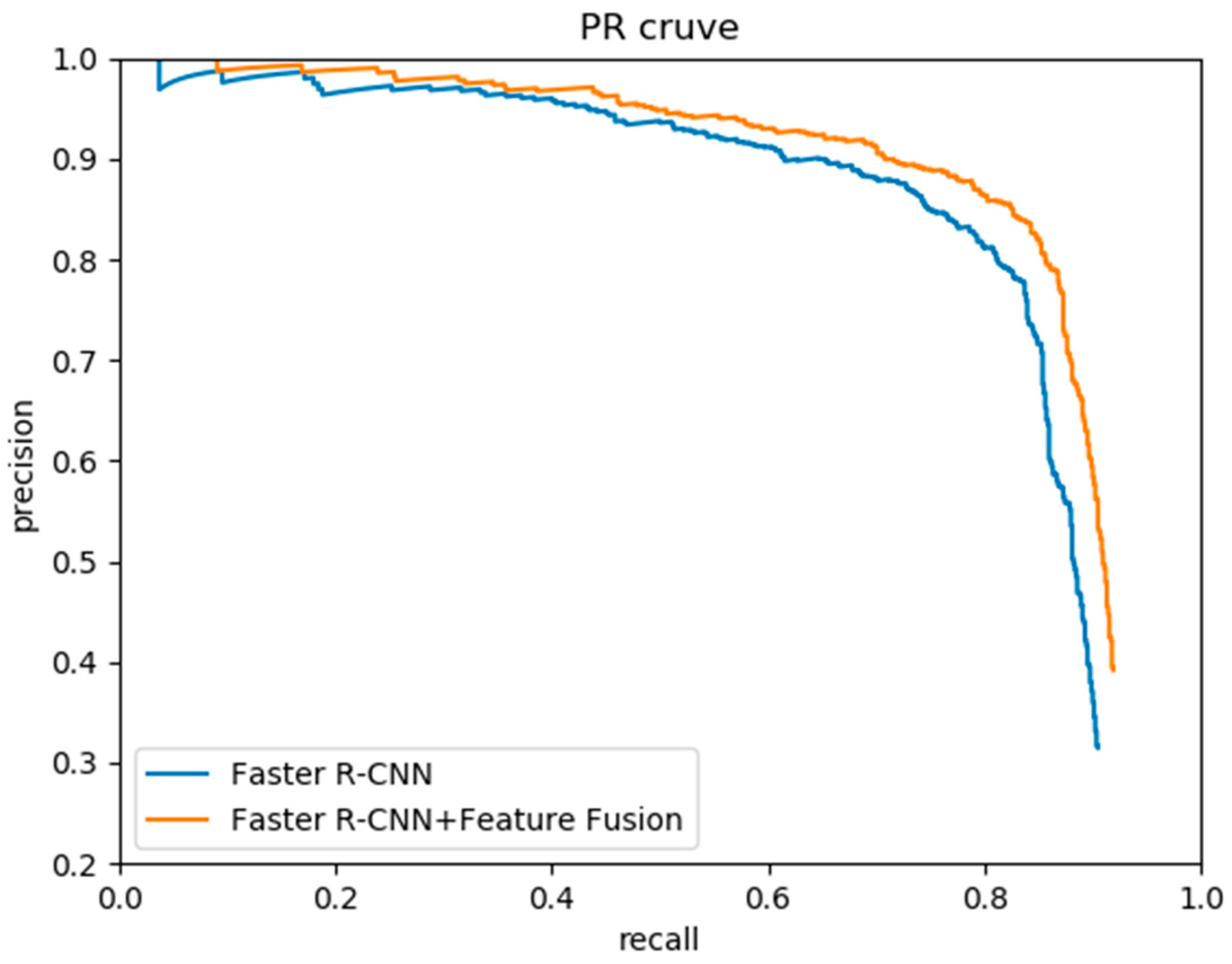

4.3.1. The Effect of Feature Fusion

4.3.2. Experiments on the Different Anchor Settings

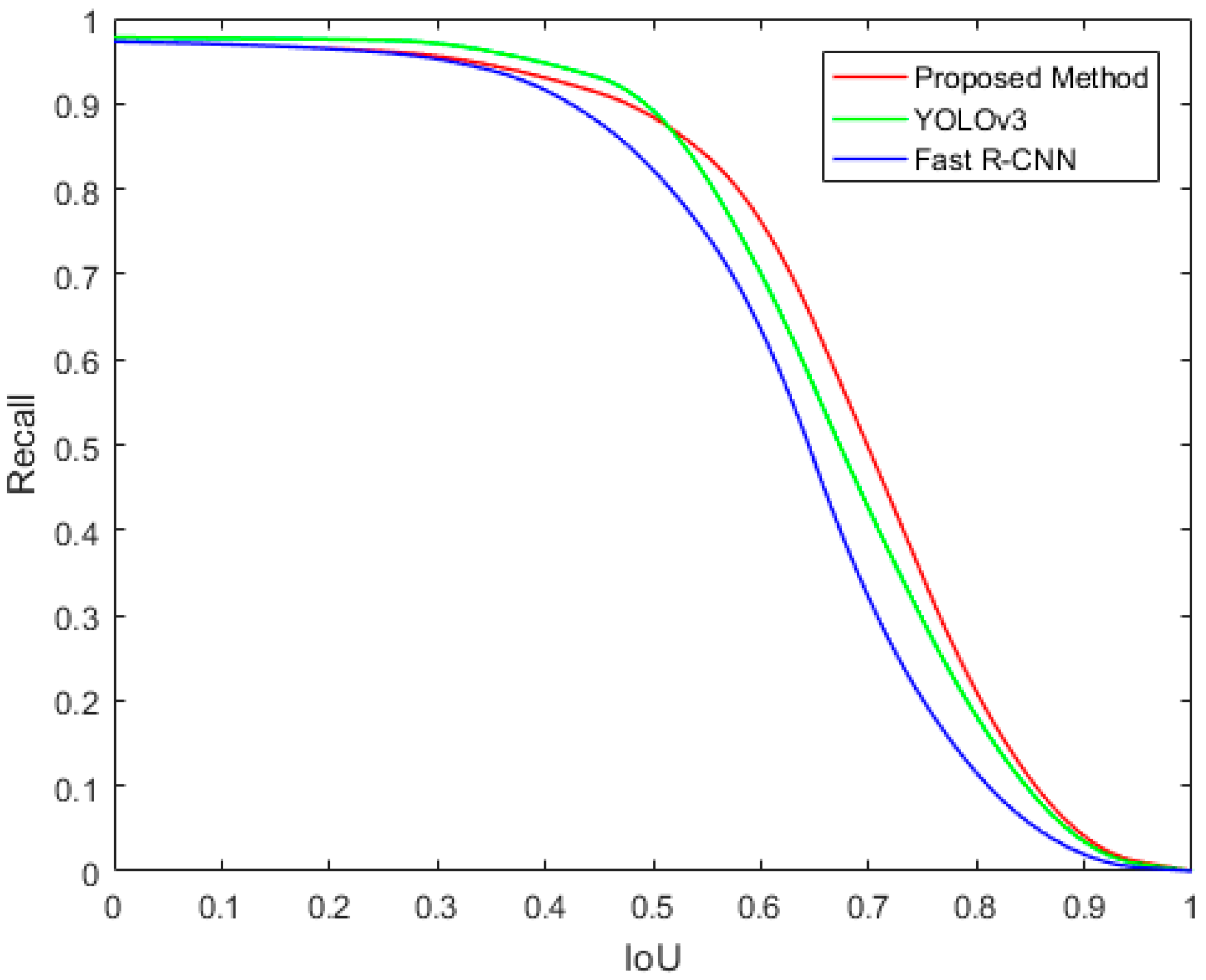

4.3.3. Comparison with Other Methods

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Image | Original | | | | |
|---|---|---|---|---|---|
| Contrast | 25,092 | 24,983 | 25,965 | 25,357 | 24,399 |

| Layer | Receptive field (RF) |
|---|---|
| conv1_2 | 5 |
| conv2_2 | 14 |
| conv3_3 | 40 |
| conv4_3 | 92 |
| conv5_3 | 196 |
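
The receptive-field values in the table above can be reproduced with the standard layer-by-layer recurrence: each layer enlarges the receptive field by (kernel − 1) times the accumulated stride. The sketch below assumes a VGG16-style backbone (3×3 convolutions with stride 1, 2×2 max pooling with stride 2), which is inferred from the layer names and not stated in the table. The function names are hypothetical.

```python
def vgg16_layers():
    """(name, kernel, stride) for the assumed VGG16 layers up to conv5_3."""
    layers = []
    for block, n_convs in enumerate([2, 2, 3, 3, 3], start=1):
        for i in range(1, n_convs + 1):
            layers.append((f"conv{block}_{i}", 3, 1))  # 3x3 conv, stride 1
        if block < 5:
            layers.append((f"pool{block}", 2, 2))      # 2x2 max pool, stride 2
    return layers


def receptive_fields(layers):
    """Receptive field (in input pixels) after each layer."""
    rf, jump = 1, 1  # jump = distance in input pixels between adjacent outputs
    result = {}
    for name, kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
        result[name] = rf
    return result


rf = receptive_fields(vgg16_layers())
for name in ["conv1_2", "conv2_2", "conv3_3", "conv4_3", "conv5_3"]:
    print(name, rf[name])
# conv1_2 5
# conv2_2 14
# conv3_3 40
# conv4_3 92
# conv5_3 196
```

These are theoretical receptive fields; the effective receptive field of a trained network is generally smaller.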

| Model | MAP | Speed (FPS) |
|---|---|---|
| Faster R-CNN | 81.2% | 13 |
| Faster R-CNN + Feature Fusion | 83.7% | 11 |

| Settings | Anchor Scales | Aspect Ratios | MAP |
|---|---|---|---|
| 1 scale, 1 ratio | 64² | 1:2 | 79.5% |
| | 128² | 1:2 | 78.9% |
| 1 scale, 3 ratios | 64² | {1:2, 2:5, 1:4} | 81.8% |
| | 128² | {1:2, 2:5, 1:4} | 81.4% |
| 4 scales, 1 ratio | {32², 64², 128², 256²} | 1:2 | 81.7% |
| | {32², 64², 128², 256²} | 2:5 | 82.0% |
| 3 scales, 3 ratios | {128², 256², 512²} | {1:1, 1:2, 2:1} | 81.2% |
| 3 scales, 3 ratios | {64², 128², 256²} | {1:2, 2:5, 1:4} | 82.6% |
| 4 scales, 3 ratios | {32², 64², 128², 256²} | {1:2, 2:5, 1:4} | 83.7% |
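
To make the anchor settings in the table above concrete, the following sketch enumerates the anchor shapes for the best-performing configuration (4 scales, 3 ratios). It assumes that each scale is an anchor area in pixels and that a ratio a:b means height:width; the table does not state the convention, so the orientation may be the reverse. The function `make_anchors` is a hypothetical helper, and the sketch only computes anchor shapes, not their placement over the feature map.

```python
import math


def make_anchors(scales, ratios):
    """Return (width, height) pairs; scale s yields anchors of area s * s."""
    anchors = []
    for s in scales:
        for h_part, w_part in ratios:
            r = h_part / w_part   # assumed convention: height / width
            w = s / math.sqrt(r)  # keeps w * h == s * s
            h = s * math.sqrt(r)
            anchors.append((w, h))
    return anchors


SCALES = (32, 64, 128, 256)          # side lengths for areas 32², 64², 128², 256²
RATIOS = ((1, 2), (2, 5), (1, 4))    # assumed height:width

anchors = make_anchors(SCALES, RATIOS)
print(len(anchors), "anchors per feature-map location")
for w, h in anchors:
    print(f"{w:7.1f} x {h:6.1f}")
```

Under this assumption every anchor is wider than it is tall, and each feature-map location carries 12 anchors, compared with 9 for the 3-scale, 3-ratio settings in the table.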

| Method | MAP | Speed (FPS) |
|---|---|---|
| Fast R-CNN | 75.3% | 4 |
| YOLOv3 | 78.6% | 35 |
| Proposed method | 83.7% | 11 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, L.; Zhang, Y.; Zhang, Z.; Shen, J.; Wang, H. Real-Time Water Surface Object Detection Based on Improved Faster R-CNN. Sensors 2019, 19, 3523. https://doi.org/10.3390/s19163523
