In this part, we use the common algorithms of text categorization to evaluate our models. The text representation models we proposed are unsupervised, but in order to prove their validity, experiments are carried out using already labeled data sets.

4.1. Corpus

There are various corpora for text classification. This paper selects public corpora in Chinese and English, which are also used to train the word vectors. These data sets are not very large, but they all have practical value. In realistic applications, although a large amount of text can be obtained when the scope is unrestricted, it is often necessary to collect data on a specific topic within a certain period of time. For example, if reviews are collected for one particular product of a brand, the amount of data in a single category may be small. Therefore, it is still necessary to test the method of this paper on data sets of ordinary scale.

Amazon_6 [44] is a corpus for sentiment analysis tasks, which is used here for classification. It has six categories: cameras, laptops, mobile phones, tablets, TV, and video surveillance. The classes differ in size in the original corpus, and this imbalance remains after preprocessing. For example, the tablet category has only 896 texts, while the camera category has 6819 texts. We select the camera and mobile phone categories for the balanced experiment. To keep the data balanced, we sort the texts by length and keep the shorter ones, 4000 in total, so that there are 2000 texts in each category and 17,565 distinct words are involved. The other four classes contain fewer texts than the camera and mobile phone classes. To test the effect of our method on an unbalanced but realistic data set, we experiment with the short texts in these four classes. Since the data are stored in ‘txt’ files, short texts here are those whose files are smaller than 50 KB. The total number of texts in this experiment is 5705, and the vocabulary size is 48,926.
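The balancing step described above can be sketched as follows. This is a minimal illustration, not the authors' preprocessing code: the function name `shortest_per_category`, the dictionary layout of the corpus, and the toy texts are all assumptions, and length is measured in characters rather than file size.

```python
def shortest_per_category(corpus, per_category):
    """Keep the `per_category` shortest texts of each category.

    `corpus` maps a category name to a list of texts (a hypothetical layout).
    Length is measured in characters here, which is an assumption.
    """
    balanced = {}
    for category, texts in corpus.items():
        # sorted() is stable, so texts of equal length keep their original order.
        balanced[category] = sorted(texts, key=len)[:per_category]
    return balanced


# Toy data standing in for two review categories.
toy_corpus = {
    "camera": ["great lens", "ok", "the battery drains far too quickly", "sharp"],
    "mobile phone": ["fast", "screen cracked after one week", "good value"],
}

balanced = shortest_per_category(toy_corpus, per_category=2)

# The vocabulary is the set of distinct words in the retained texts.
vocabulary = {
    word
    for texts in balanced.values()
    for text in texts
    for word in text.split()
}
```

With the paper's setting, `per_category` would be 2000, giving 4000 texts across the two categories.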

FudanNLP [45] is a corpus for Chinese text classification released by Fudan University, China. It contains 20 categories, and the number of texts differs between categories. To keep the data balanced, this paper chooses three categories (computer, economy, and sports) and selects 500 texts from each for the experiment. In addition, as with the Amazon_6 corpus, we use the remaining 17 classes for the unbalanced experiment. For a comparable amount of information, Chinese articles usually take up less space than English ones, so the small portion of texts larger than 15 KB was excluded. A total of 4117 texts were included in this experiment, and the vocabulary size was 78,634. The number of texts varies widely across the 17 categories. For example, there are only 59 texts in the transport class but 617 in the space class. Nevertheless, because of the large number of classes, the vocabulary is still large.

ChnSentiCorp [46] is a sentiment analysis corpus released by the Beijing Institute of Technology. It contains data in the fields of laptops and books. Each field contains 4000 short texts labeled with positive or negative sentiment, with 2000 texts in each class. All the data are used in the experiments. The vocabulary size is 8257 for the laptop field and 22,230 for the book field.

In summary, this paper selects data sets in both Chinese and English, covering general classification and sentiment classification. The corpora for sentiment classification differ from general texts: they usually contain subjective emotional expressions and less content of a general character. They may also differ from general corpora in how they are labeled: positive and negative polarities can be labeled within each field, which is unnecessary for general text data sets. Thus, this paper selected these different data sets for the experiments to demonstrate the applicability of the models.

4.4. Experimental Results and Discussion

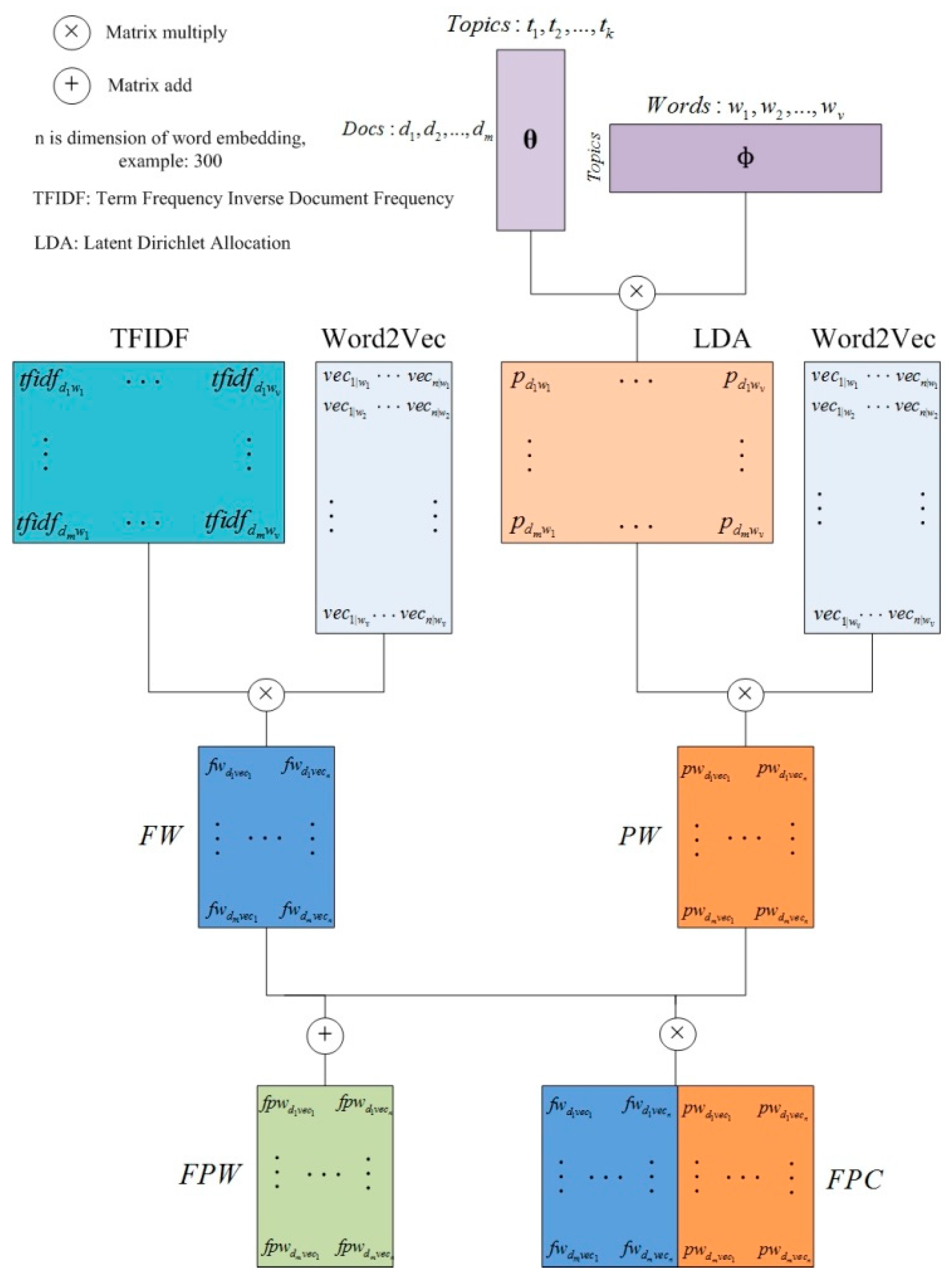

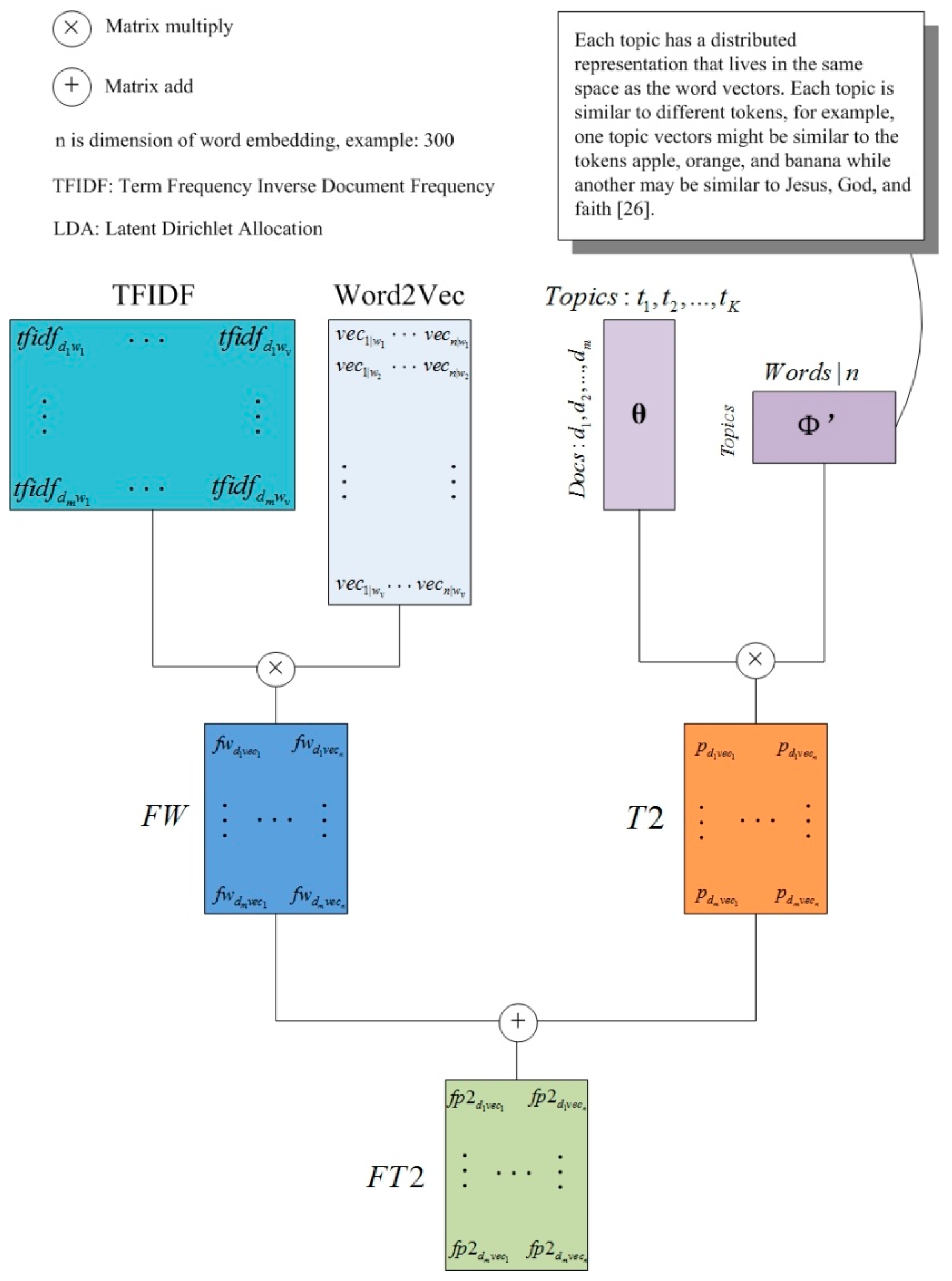

This part gives the experimental results and the corresponding analysis. First, we analyze a single set of experiments performed on each corpus, and then give an overall discussion. The baseline methods are TF–IDF, LDA, and Word2Vec. Word2Vec refers to the method of simply adding the vectors of words to represent an entire document. The results of the different dimensions are averaged and presented in tables. The best results in each column are shown in bold.
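As an illustration of the Word2Vec baseline described above, a document vector can be formed by summing the vectors of its words. The sketch below uses a hand-made toy embedding table; the function name `document_vector`, the three-dimensional vectors, and the choice to skip out-of-vocabulary words are assumptions made for the example, not details taken from the experiments.

```python
def document_vector(tokens, embeddings, dim):
    """Represent a document as the sum of its word vectors.

    `embeddings` maps a word to a list of `dim` floats. Words missing from
    the table are skipped, which is an assumption of this sketch.
    """
    total = [0.0] * dim
    for token in tokens:
        vector = embeddings.get(token)
        if vector is None:
            continue
        for i, value in enumerate(vector):
            total[i] += value
    return total


# Toy 3-dimensional embeddings; trained word vectors would have
# a few hundred dimensions.
toy_embeddings = {
    "good": [1.0, 0.5, 0.0],
    "camera": [0.0, 1.0, -1.0],
    "lens": [0.5, 0.5, 0.5],
}

doc = document_vector(["good", "camera", "lens", "unknown"], toy_embeddings, dim=3)
```

Because the sum ignores word order and weights every word equally, it serves as a simple reference point against which weighted or topic-aware representations can be compared.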

The experimental results on the Amazon_6 corpus are shown in Table 1 and Table 2. The results are good overall, and the models proposed in this paper achieve somewhat higher accuracy and F1-value than the baselines.

Table 1 shows that the FPW model maintains a relatively high accuracy, with the FPC model close behind. Since the FPC model joins the two matrices FW and PW, its actual vector dimensions are larger than those marked in the table: 400, 600, 1000, and 1600, respectively. Compared at approximately equal dimensions, the FPW model is still better, and it needs vectors of only a few hundred dimensions to achieve this.
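The dimension counts quoted for FPC follow from joining two matrices of equal width side by side. The sketch below assumes that the join is a row-wise concatenation and that the nominal dimensions are 200, 300, 500, and 800 (half of the FPC sizes stated above); the helper `concatenate_rows` and the placeholder matrices are hypothetical.

```python
def concatenate_rows(fw_matrix, pw_matrix):
    """Join two document-by-feature matrices row by row.

    Each document's FW vector is followed by its PW vector, so the
    width of the result is the sum of the two widths.
    """
    if len(fw_matrix) != len(pw_matrix):
        raise ValueError("both matrices must describe the same documents")
    return [fw_row + pw_row for fw_row, pw_row in zip(fw_matrix, pw_matrix)]


# Nominal dimensions assumed for this sketch.
table_dimensions = [200, 300, 500, 800]

fpc_dimensions = []
for dim in table_dimensions:
    fw = [[0.0] * dim for _ in range(2)]  # placeholder FW vectors, 2 documents
    pw = [[1.0] * dim for _ in range(2)]  # placeholder PW vectors, 2 documents
    fpc = concatenate_rows(fw, pw)
    fpc_dimensions.append(len(fpc[0]))
```

Under these assumptions, an FPC vector is always twice as wide as the FW or PW vector it is built from, which is why a comparison at equal nominal dimension favors FPC in terms of available features.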

Table 2 gives the results on the Amazon_6 corpus with SVM. FPW and FPC perform very well overall. The results of FPW are stable and the best, and the global maximum appears in the 500-dimensional experiment of FPW. The PW model improves on the baseline LDA, but FW is slightly worse than TF–IDF, which may occur on some corpora. The classification decision function of SVM is determined by only a few support vectors, not by the dimensions of the vector space. After the dimensions of the vector space are reduced, each new vector incorporates more information, but this may also make classification harder to some extent. However, after the vector information of PW is further integrated, a better classification effect can still be obtained.

At the same time, in this set of experiments, P2 loses a great deal of information because the low-probability part of the LDA matrix is cut off, and its results are poor. However, after it is combined with word embeddings, there is an obvious improvement. This suggests that the information obtained by introducing more vectors helps the vector representation of texts. The same is evident in the SVM experiments on the following corpora.
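To make the information loss concrete, the sketch below truncates a single topic distribution by keeping only its largest entries. It is an illustration only: the way P2 is actually constructed is defined elsewhere in the paper, and the function `truncate_low_probabilities`, the `keep` parameter, and the toy distribution are assumptions.

```python
def truncate_low_probabilities(distribution, keep):
    """Zero all but the `keep` largest entries of a probability vector."""
    ranked = sorted(
        range(len(distribution)),
        key=lambda i: distribution[i],
        reverse=True,
    )
    kept = set(ranked[:keep])
    return [p if i in kept else 0.0 for i, p in enumerate(distribution)]


# Toy topic distribution for one item; the values sum to 1.
topic_probs = [0.5, 0.25, 0.125, 0.125]

truncated = truncate_low_probabilities(topic_probs, keep=2)

# Probability mass that the truncated vector no longer carries.
lost_mass = 1.0 - sum(truncated)
```

In this toy case a quarter of the probability mass disappears, which gives a rough sense of why a truncated topic representation can benefit from being combined with another source of information.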

Table 3 and Table 4 below use the data from the other four categories of Amazon_6. This set of experiments involves an unbalanced data set with a few more categories.

In this set of experiments, the KNN classifier achieves its best results with the PW model. The accuracy of FPW in the 500 and 800 dimensions exceeds the baseline methods, and FPW and FPC both exceed the baseline methods on F1-macro. Since the values of the vectors obtained by TF–IDF and Word2Vec are both positive and negative, it may happen that FW is slightly worse than TF–IDF or Word2Vec. The vectors produced by LDA are only positive, so in most experiments PW performs better than LDA or Word2Vec. The same situation can be observed in the next experiment. In addition, P2 is also effective, and the impact of data imbalance is not very large. The results of the FP2 model are not what we expected, but it still improves on the P2 model.

Table 4 shows that FPW performance is the best for SVM, followed by FPC. The effect of FW is much lower than the baseline methods, which may be related to the imbalance of the data. The effect of P2 is also poor. It can be seen that SVM does not adapt to the model truncated by topic modeling in this corpus. However, the full PW still performs well, exceeding all baseline methods. The results of the two classifiers above show that the method of this paper can also achieve a certain improvement effect on the unbalanced data.

In the work of Zhao [1] mentioned in Section 2, the Amazon_6 corpus is also used. To balance the data, Zhao randomly selected 1500 texts in the 5 classes that contain more than 1500 texts, with a total vocabulary of 10,790, and experimented with the SVM classifier. The fuzzy bag-of-words model proved very effective, with a classification accuracy of 92% to 93%, which was 1% to 2% higher than the baselines TF (BoW) and LDA. This paper does not select texts randomly, and its vocabulary is slightly larger. However, we also use LDA as a baseline, and we use TF–IDF, which is better than TF, as a benchmark. The above two sets of experiments show that the method of this paper achieves a similar increase of about 1% to 2.5%.

Table 5 and Table 6 give the experimental results of the next set of experiments, performed on the FudanNLP corpus.

Table 5 shows that LDA is effective, while TF–IDF is only average. Therefore, most of the better results appear in PW, which means that the combination of LDA and Word2Vec gives better results. The best accuracy and F1-macro values of our models exceed the baseline methods, but they come from different methods. Subsequent experiments show that this happens with FudanNLP more than once but not with the other data sets, so we speculate that it is related to the data set.

In experiments with SVM on the FudanNLP corpus, FPW and FPC still achieve better results, and the overall results are better than the baseline methods. The global maximum value appears in the 300-dimensional experimental result of FPC. Similar to the results of experiments on Amazon_6, the effect of FW decreases slightly, but PW gets better results. The P2 model is the worst, but FP2 can significantly improve accuracy. In the experiments on P2 and FP2, the benefit of introducing more information can be seen obviously.

Table 7 and Table 8 show the results of the unbalanced experiments on the FudanNLP corpus. There are 17 categories involved and the data is unbalanced. Apart from TF–IDF on the KNN classifier, the baseline methods LDA and Word2Vec both show a significant decline compared with the previous experiment. However, our models still show improvement.

Among the results of the KNN classifier, FPW and FPC are the best, and most of their results exceed the baseline methods. Neither FW nor PW exceeds the baseline methods, but their combination makes significant progress. P2 and FP2 both give average results, but they are still acceptable in terms of accuracy. The F1-macro values are mediocre, which may be caused by the large number of categories.
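The gap between accuracy and F1-macro on unbalanced data can be illustrated with a small example. The sketch below implements both measures directly from their standard definitions; the class names echo the corpus, but the labels and predictions are invented toy values, not experimental data.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_macro(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denominator = 2 * tp + fp + fn
        # A class that is never predicted correctly contributes an F1 of 0.
        scores.append(2 * tp / denominator if denominator else 0.0)
    return sum(scores) / len(scores)


# Toy unbalanced test set: 8 texts of a large class, 2 of a small class.
y_true = ["space"] * 8 + ["transport"] * 2
# A classifier that always predicts the large class.
y_pred = ["space"] * 10

acc = accuracy(y_true, y_pred)
f1 = f1_macro(y_true, y_pred)
```

Here the accuracy is 0.8 while F1-macro is about 0.44, because the small class counts as much as the large one in the macro average. With many small classes, a few poorly recognized categories can pull F1-macro down even when accuracy remains acceptable.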

In the experiments conducted with SVM, FPW and FPC exceed the baseline methods most of the time. Word2Vec itself achieves good results, and TF–IDF also performs well on F1-macro. The effect of P2 is still mediocre, so SVM remains unsuitable for such incomplete topic models. With the LDA method, SVM is often not as good as KNN, which is related to the classifier. SVM classification depends on the support vectors. For text representation models based on topic models, it is relatively difficult to find the support vectors at the class boundaries, whereas KNN more easily captures the center of each category, so the vectors close to a center are assigned to one class.

In the work of Zhang [53], the FudanNLP corpus was also used, with methods with and without normalization, and the accuracy reached 54% and 79.6%, respectively. This data set is extremely unbalanced, so it is not easy to obtain better classification results. The results of the models of this paper are acceptable: taking accuracy as the standard, they reach about 83%.

Table 9 and Table 10 present the experimental results for the laptop field of the ChnSentiCorp corpus. Since this data set is labeled with positive and negative emotional polarities within each field, we experiment on each field separately.

The corpus of laptops has 4000 texts and a vocabulary of 7892. In this set of experiments, the performance of each model is stable. FPW is the best, FPC is second, and PW is third. This may be related to the data in the corpus itself. There is a gap between the TF–IDF baseline and LDA. As in the FudanNLP experiment with 1500 texts in three categories, the FW model shows a significant improvement in classification.

In the results of SVM on the laptop corpus shown in Table 10, FPC performs better overall. FPW achieves good results and is better than the baseline methods. In addition to the improvement of PW over LDA, FW also improves on TF–IDF in most dimensions. The FP2 model also yields mostly better results than the baseline methods. SVM is always effective for the vector space models constructed by TF–IDF. Compared with the KNN results, FW is not much better than TF–IDF here, but it still obtains some improvement.

Table 11 and Table 12 present the experimental results for the book field of ChnSentiCorp. The data in the book field is special. When people evaluate books, they may write about the specific content of the books, so even if the texts are purely positive, their content may differ.

The effect of TF–IDF is only average, and LDA works better, but the models we proposed obtain more significant results and raise the accuracy to nearly 90%. Among the baseline approaches, unlike in the previous Amazon_6 and FudanNLP experiments, Word2Vec performs slightly worse. In most cases, Word2Vec is the best of the baseline methods or second only to the best, but this time it is only slightly better than LDA. This occasional situation does not affect the validity of Word2Vec.

In the book field of ChnSentiCorp, it is interesting that the best results of SVM in the different dimensions are consistent with those of KNN: they come from FW and FP2. The global maximum comes from the 200-dimensional experiment of FW. As with the conclusions obtained with KNN, the dimensions of the vectors do not have to be very large. Although neither FPW nor FPC achieves the highest value, all their results are better than the baseline.

Table 12 shows that FW yields better results than the baseline methods in all dimensions, but PW shows a lower accuracy than LDA in most dimensions. It can be seen that feature weighting and topic models have different modeling ideas for texts. When FW obtains good vector representations, PW may not get better results, and vice versa. Different classifiers perform quite differently on TF–IDF and LDA.

In the experiments of this paper, KNN obtains useful classification results for the models established by LDA, but not for the vectors obtained by TF–IDF. SVM is the reverse: it always gets reliable results for TF–IDF and ordinary ones for LDA. These phenomena have a lot to do with the differences between classifiers and corpora. However, a satisfactory vector representation model can obtain satisfactory results on different classifiers, as with our FW model in the last set of experiments and the FPW and FPC models proposed here. The related experiments on P2 and FP2 lead to similar conclusions on different classifiers.

Also using the ChnSentiCorp corpus, Zhai [54] proposed a text representation method that extracts different kinds of features. Since this is a corpus for sentiment analysis, Zhai’s approach extracts features that are related to sentiment tendencies. In addition, features such as substrings, substring groups, and key substrings are extracted. Finally, a highest accuracy of 91.9% is obtained with the SVM classifier, which is a very good result [55]. In this paper, without such specific feature extraction, the accuracy is comparable or even higher.

By comparing our models with different methods on different corpora, it can be seen that the method of this paper improves accuracy by 1% to 4%. The design of our method differs from the existing methods in several ways. Compared with the FBoW model [1], our models introduce the LDA topic model, which enables them to describe texts from the perspective of topic modeling. Compared with Zhang’s method [53], the method of this paper does not need to deal with the problem of mixed Chinese and English, such as word categorization. Compared with Zhai’s method [54], our method does not set special rules for feature extraction on sentiment analysis data, yet it matches Zhai’s method in performance.

From the above experiments as a whole, we can also draw some additional conclusions. Firstly, the dimensions of the vectors do not need to be large. The 200- and 300-dimensional settings often give the best results. Secondly, for some special corpora, the FW or PW model alone may give good results, but in most cases, text representation models that combine feature extraction, word vectors, and topic models are more effective. Thirdly, P2 has relatively poor accuracy because some of the information calculated by LDA is deleted. However, after it is combined with the FW model, even without the word vectors, it often gets good results.