Abstract

The IoT describes a development field where new approaches and trends are in constant change. In this scenario, new devices and sensors are offering higher precision in everyday life in an increasingly less invasive way. In this work, we propose the use of spatial-temporal features by means of fuzzy logic as a general descriptor for heterogeneous sensors. This fuzzy sensor representation is highly efficient and enables devices with low computing power to perform learning and evaluation tasks in activity recognition using light and efficient classifiers. To show the methodology’s potential in real applications, we deploy an intelligent environment where new UWB location devices, inertial objects, wearable devices, and binary sensors are interconnected and describe daily human activities. We then apply the proposed fuzzy logic-based methodology to obtain spatial-temporal features that fuse the data from the heterogeneous sensor devices. A case study developed in the UJAmI Smart Lab of the University of Jaen (Jaen, Spain) shows the encouraging performance of the methodology when recognizing the activity of an inhabitant using efficient classifiers.

1. Introduction

Activity Recognition (AR) comprises models able to detect human actions and their goals in smart environments in order to provide assistance. Such methods have increasingly been adopted in smart homes [1] and healthcare applications [2], aiming both to improve the quality of care services and to allow people to stay independent in their own homes for as long as possible [3]. AR has thus become an open field of research in which approaches based on different types of sensors have been proposed [4]. In early work, binary sensors were proposed as suitable devices for describing daily human activities within a smart environment setting [5,6]. More recently, wearable devices have been used to analyze activities and gestures in AR [7].

Furthermore, recent paradigms such as edge computing [8] or fog computing [9] place data and services within the devices where the data are collected, providing virtualized resources and location-based services at the edge of mobile networks [10]. In this new perspective on the Internet of Things (IoT) [11], the focus shifts from cloud computing with centralized processing [12] to collaborative networks in which smart objects interact with each other and cooperate with their neighbors to reach common goals [13,14]. In particular, fog computing has had a great impact on both ambient devices [15] and wearable devices [16].

In this context, this work presents a methodology for activity recognition that: (i) integrates interconnected IoT devices that share environmental data, and (ii) learns from heterogeneous sensor data using a fuzzy approach that extracts spatial-temporal features. The outcome is the recognition of daily activities by means of an efficient and lightweight model, which could be included in future generations of smart objects.

The remainder of the paper is structured as follows: the following subsection reviews works related to our proposal, emphasizing the main novelties we propose. Section 2 presents the proposed methodology for learning daily activities from heterogeneous sensors in a smart environment. Section 3 introduces a case study to show the utility and applicability of the proposed model for AR in the smart environment of the University of Jaen. Finally, in Section 4, conclusions and ongoing works are discussed.

1.1. Related Works

Connectivity plays an important role in Internet of Things (IoT) solutions [17]. Fog computing approaches require the real-time distribution of collaborative information and knowledge [18], providing a scalable approach in which data from heterogeneous sensors are distributed to dynamic subscribers in real time. In this contribution, smart objects are defined as both sources and targets of information using a publish-subscribe model [19]. In the proposed methodology, we define a fog computing approach to: (i) distribute and aggregate information from sensors, described by spatial-temporal features with fuzzy logic, using middleware based on the publish-subscribe model; and (ii) learn from the distributed sensor features with efficient classifiers, which enable AR within IoT devices.

In turn, in the context of intelligent environments, a new generation of non-invasive devices is being combined with traditional sensors. For example, new location devices based on UWB reach extremely high accuracy in indoor contexts [20], improving on previous indoor positioning systems based on BLE devices [21]. At the same time, inertial sensors in wearable devices have been shown to enhance activity recognition [22]. These devices coexist with traditional binary sensors, which have been widely used to describe daily user activities, from early AR works [23] to more recent literature [24]. Such heterogeneous deployments require integrating several sources (wearable, binary, and location sensors) in smart environments [25] to enable rich AR by means of sensor fusion [26].

Traditionally, the features used to describe sensors under data-driven approaches have depended on the type of sensor, whether accelerometers [27] or binary sensors [28]. In previous works, deep learning has also been shown to be a suitable approach in AR to describe heterogeneous features from sensors in smart environments [5,6,29]. However, it is proving hard to include learning capabilities in miniature boards or mobile devices integrated in smart objects [30]. First, deep learning requires huge amounts of data [31]. Second, training deep learning models, and in some cases even evaluating them, on low-computing boards requires adapting the models and using costly high-performance embedded boards. In line with this, we highlight the work in [32], where new forms of model compression were proposed in several areas to deploy deep neural networks on embedded processors such as the ARM Cortex-M3. Advancement across a range of interdependent areas, including hardware, systems, and learning algorithms, is still necessary [33].

To bring general-purpose feature extraction from sensors to low-computing devices, we propose using spatial-temporal feature extraction based on fuzzy logic with minimal human configuration, together with light and efficient classifiers. On the one hand, fuzzy logic has been proposed for sensor fusion [34] and for the aggregation of heterogeneous data in distributed architectures [35]. For example, fuzzy temporal windows have increased performance on several datasets [5,6,29] by extracting several temporal features from sensors, and they have been shown to be a suitable representation for AR from binary [36] and wearable [37] sensors.

On the other hand, other efficient classifiers have been successfully proposed for AR [38] on devices with limited computing power. For example, decision trees, k-nearest neighbors, and support vector machines have enabled AR in ubiquitous devices by processing the sensors embedded in mobile devices [39,40].

Taking this research background into consideration, we defined the following key points to include in our approach, addressing the limitations of previous models:

- To share and distribute data from environmental, wearable, binary, and location sensors among devices using open-source middleware based on MQTT [17].

- To extract spatial features from sensors using fuzzy logic by means of fuzzy scales [41] with multi-granular modeling [42].

- To extract temporal features in the short- and middle-term using incremental fuzzy temporal windows [5].

- To learn from a small amount of data, avoiding the dependence of deep learning on large amounts of data [31].

- To evaluate the performance of AR with efficient and lightweight classifiers [40], which are compatible with computing in miniature boards [38].

2. Methodology

In this section, we present the proposed methodology for learning daily activities from heterogeneous sensors in a smart environment. As the main aim of this work is to integrate and learn from sensor information in real time, we first describe the sensors formally. A given sensor $s^r$ provides information through a data stream $S^r = \{\bar{s}^r_0, \dots, \bar{s}^r_i, \dots\}$, where $\bar{s}^r_i = (v^r_i, t_i)$ represents a measurement $v^r_i$ of the sensor at timestamp $t_i$. Under real-time conditions, $t^*$ represents the current time and $S^r[t^*]$ the status of the data stream at this point in time.

In this work, in order to increase scalability and modularity in the deployment of sensors, each sensor publishes its data stream in real time, independently of the other sensors. For this, a collecting rate $\Delta t$ is defined so that the data stream is described at a constant rate over time:

$$t_{i+1} - t_i = \Delta t, \quad \forall i$$

Further details on the real-time deployment of sensors are presented in Section 2.1, where a new generation of smart objects and devices is interconnected using publish-subscribe-based middleware.

Next, in order to relate the data stream to the activities performed by the inhabitant, the information from the sensor streams must be described with a set of features $X = \{x_1, \dots, x_{|X|}\}$, where a given feature $x_j$ is defined by a function $f_j$ that aggregates the values of a sensor stream $S^r$ at the current time $t^*$:

$$x_j(t^*) = f_j(S^r, t^*)$$

Since our model is based on a data-driven supervised approach, the features that describe the sensors are related to a given label $y(t^*)$ at each time $t^*$:

$$\{x_1(t^*), \dots, x_{|X|}(t^*)\} \rightarrow y(t^*)$$

where $y(t^*) \in Y$ takes a discrete value from the set of activities $Y$ and identifies the labeled activity performed by the inhabitant at time $t^*$.

In Section 2.2, we describe a formal methodology based on fuzzy logic to obtain spatial-temporal features to fuse the data from the heterogeneous sensors.

Next, we describe the technical and methodological aspects.

2.1. Technical Approach

In this section, we present sensors and smart objects that have been recently proposed as non-invasive data sources for describing daily human activities, followed by the middleware used to interconnect these different devices.

2.1.1. Smart Object and Devices

As mentioned in Section 1.1, the aim of this work is to enable interaction between new generations of smart objects. In this section, we present the sensors and smart objects that have recently been proposed as non-invasive data sources for describing daily human activities. These devices were included in the case study presented in this work, deployed at the UJAmI Smart Lab of the University of Jaen (Jaen, Spain) [25] (http://ceatic.ujaen.es/ujami/en/smartlab).

First, in order to obtain data on certain objects for AR, we included an inertial miniature board in some daily-use objects to describe their movement and orientation. To collect this information, we attached a Tactigon board [43] to each object; the board collects inertial data from its accelerometer and sends them in real time over BLE. Figure 1 shows the integration of the inertial miniature board in some objects for prototyping purposes.

Figure 1.

Prototyping of smart objects (a cup, a toothbrush, and a fork), whose orientation and movement data are collected and sent in real time by an inertial miniature board (The Tactigon).

Second, we acquired indoor location data by means of UWB devices, which offer high performance with a location accuracy on the order of centimeters, using wearable devices carried by the inhabitants of the smart environment [44]. In this work, we integrated Decawave’s EVK1000 device [45], configured with at least three anchors located in the environment and one tag for each inhabitant.

Third, since combining inertial sensors from wearable devices on the user enhances activity recognition [22], we collected inertial information from a wristband device worn by the inhabitant. For this, we developed an application deployed on a Polar M600, which runs Android Wear [46]. The application sends data from the accelerometer in real time through a WiFi connection.

Fourth, we included binary sensors in some static objects that the inhabitant interacts with while performing daily activities, such as the microwave or the bed. For this purpose, we integrated SmartThings devices [47] in the UJAmI Smart Lab, which transmit events such as pressure on a mat or the opening and closing of a door through the Z-Wave protocol. These four types of sensors represent a new trend of high-capability devices for AR. In the next section, we describe the architecture that connects these heterogeneous sources and distributes sensor data in real time.

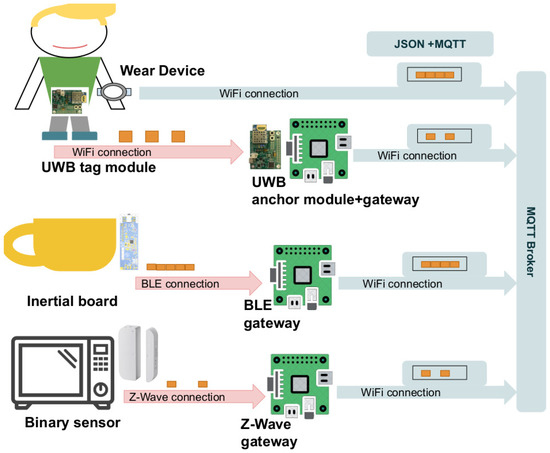

2.1.2. Middleware for Connecting Heterogeneous Devices

In this section, we describe a distributed architecture for IoT devices using MQTT, in which aggregated data from sensors are shared under the publish-subscribe model. The development of an architecture to collect and distribute information from heterogeneous sensors in smart environments has become a key aspect, and the integration of IoT devices remains a prolific research field due to the lack of standardization [48]. Here, we present the middleware deployed at the UJAmI Smart Lab of the University of Jaen (Spain) [25], based on the following points:

- We include connectivity for devices in a transparent way, including BLE, TCP, and Z-Wave protocols.

- The data collected from heterogeneous devices (without WiFi capabilities) are sent to a given gateway, which reads the raw data in a given protocol, aggregates them, and sends them by MQTT under TCP.

- The representation of data includes the timestamp for when the data were collected together with the given value of the sensor. The messages in MQTT describe the data in JSON format, a lightweight, text-based, language-independent data interchange format [49].
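As a minimal sketch of the message representation described above, the following Python snippet serializes one reading as a JSON payload that carries the collection timestamp together with the sensor value. The field names (`sensor`, `timestamp`, `value`) and the topic scheme `ujami/<type>/<sensor>` are hypothetical, chosen only for illustration; an MQTT client library such as paho-mqtt would then publish the payload on the topic.

```python
import json
import time
from typing import Optional, Union

def build_sensor_message(sensor_id: str, value: Union[int, float],
                         timestamp: Optional[float] = None) -> str:
    """Serialize one reading as JSON with its collection timestamp and value.

    The field names are illustrative assumptions, not the lab's actual schema.
    """
    if timestamp is None:
        timestamp = time.time()  # gateway clock, seconds since the epoch
    return json.dumps({"sensor": sensor_id, "timestamp": timestamp, "value": value})

def topic_for(sensor_type: str, sensor_id: str) -> str:
    # Hypothetical topic scheme: one topic per sensor, grouped by sensor type.
    return f"ujami/{sensor_type}/{sensor_id}"

payload = build_sensor_message("microwave", 1, timestamp=1000.0)
print(topic_for("binary", "microwave"), payload)
# ujami/binary/microwave {"sensor": "microwave", "timestamp": 1000.0, "value": 1}
```

Keeping one topic per sensor lets each subscriber pick only the streams it needs, which matches the per-sensor topics used by the gateways described below.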

Next, we describe the specific configuration for each sensor deployed in this work. First, the inertial data from the miniature boards located in smart objects are sent under BLE in raw format at a frequency close to 100 samples per second. A Raspberry Pi is configured as a BLE gateway, reading information from the Tactigon boards, aggregating the inertial data into one-second batches and sending a JSON message in MQTT on a given topic for each sensor.
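The gateway step that turns roughly 100 Hz raw inertial samples into one-second batches can be sketched as follows. This is an illustrative assumption about the batching logic (grouping by the whole second of each sample's timestamp), not the code running on the Raspberry Pi, and the JSON schema is hypothetical.

```python
import json
from collections import defaultdict
from typing import Dict, List, Tuple

Sample = Tuple[float, float, float, float]  # (timestamp in s, ax, ay, az)

def batch_by_second(samples: List[Sample]) -> Dict[int, List[Sample]]:
    """Group raw inertial samples into one-second batches keyed by whole second."""
    batches: Dict[int, List[Sample]] = defaultdict(list)
    for sample in samples:
        batches[int(sample[0])].append(sample)  # assumes non-negative timestamps
    return dict(batches)

def batch_to_json(sensor_id: str, second: int, batch: List[Sample]) -> str:
    # One message per sensor and second; the schema is a hypothetical example.
    return json.dumps({
        "sensor": sensor_id,
        "timestamp": second,
        "values": [[ax, ay, az] for _, ax, ay, az in batch],
    })

samples = [(i / 100, 0.0, 0.0, 1.0) for i in range(250)]  # 2.5 s at 100 Hz
batches = batch_by_second(samples)
print({second: len(batch) for second, batch in batches.items()})  # {0: 100, 1: 100, 2: 50}
```

Batching reduces the message rate on the broker from about 100 messages per second per board to one, at the cost of up to one second of latency.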

Second, location data from the Decawave UWB devices are collected by a gateway through a USB connection, at a frequency close to 1 sample per second for each tag device. The open-source software (https://www.decawave.com/software/) from Decawave was used to read the information and then publish a JSON message in MQTT with the location on a given topic for each tag.

Third, an Android Wear application was developed in order to collect the information from the inertial sensors of the smart wristband devices. The application obtains acceleration samples at a frequency close to 100 per second, collecting a batch of aggregated samples and publishing a JSON message in MQTT on a given topic for each wearable device.

Fourth, a Raspberry Pi is configured as a Z-Wave gateway using a Z-Wave card connected to its GPIO and the software available at https://z-wave.me/. In this way, the Raspberry Pi gateway is connected to the SmartThings devices in real time, receiving the raw data and translating them into JSON format to be published in real time using MQTT on a topic that identifies the device.

In Figure 2, we show the architecture of the hardware devices and software components that configure the middleware for distributing the heterogeneous data from sensors in real time with MQTT in JSON format.

Figure 2.

Architecture for connecting heterogeneous devices. Binary, location, and inertial board sensors send raw data to gateways, which collect, aggregate, and publish data with MQTT in JSON format. The Android Wear application collects, aggregates, and publishes the data directly using MQTT through WiFi connection.

2.2. Fuzzy Fusion of Sensor Spatial-Temporal Features

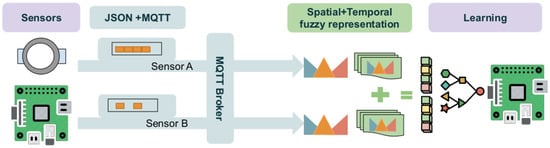

After detailing the technical configuration of the devices and middleware involved in this work, we present the methodology used to extract spatial-temporal features and to represent the heterogeneous sensor data homogeneously using fuzzy logic, so that learning and evaluation tasks in activity recognition can be performed with light and efficient classifiers.

The proposed methodology is based on the following stages:

- Describing the spatial representation of sensors by means of fuzzy linguistic scales, which provide high interpretability and require minimal expert knowledge, by means of ordered terms.

- Aggregating and describing the temporal evolution of the terms from linguistic scales by means of fuzzy temporal windows including a middle-to-short temporal evaluation.

- Predicting AR from the fused sensor features by means of light classifiers, which can be trained and evaluated in devices with low computing power.

In Figure 3, we show the components involved in fusing the spatial-temporal features of heterogeneous sensors.

Figure 3.

Fuzzy fusion of spatial-temporal features of sensors: (i) data from the heterogeneous sensors are distributed in real time; (ii) fuzzy logic processes spatial-temporal features; (iii) a light and efficient classifier learns activities from the features.

2.2.1. Spatial Features with Fuzzy Scales

In this section, we detail how data from heterogeneous sensors are described using fuzzy scales, requiring minimal expert knowledge. A fuzzy scale $L^r$ of granularity $g$ describes the values of an environmental sensor $s^r$ and is defined by the terms $L^r = \{l^r_1, \dots, l^r_g\}$. The terms within the fuzzy linguistic scale (i) are naturally and equally ordered within the domain of discourse of the sensor data stream, the interval $[V^r_{\min}, V^r_{\max}]$, and (ii) fulfill the principle of overlapping to ensure a smooth transition [50].

Each term $l^r_j$ is characterized by a triangular membership function $\mu_{l^r_j}$, as detailed in Appendix A. Therefore, the terms $L^r$, which describe the sensor $s^r$, configure the fuzzy spatial features of the sensor from the values $v^r_i$:

$$L^r(v^r_i) = \{\mu_{l^r_1}(v^r_i), \dots, \mu_{l^r_g}(v^r_i)\}$$

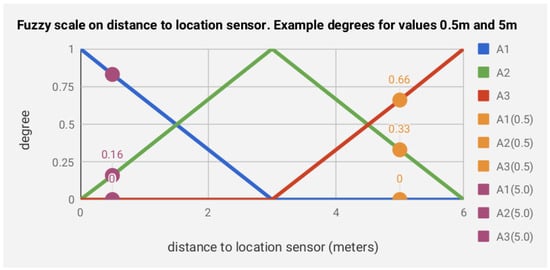

To illustrate how fuzzy linguistic scales describe sensor values, Figure 4 shows an example for a location sensor. In the example, the location sensor $s$ measures the distance in meters to the inhabitant, up to a maximum of 6 meters (we omit the sensor superscript for simplicity), and its sensor stream is evaluated at two points in time. First, we describe a fuzzy scale of granularity $g$, which determines the membership functions of its terms. Second, from the values of the sensor stream, which give the distances to the location sensor, we compute the degree of each term in the fuzzy scale.
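A minimal Python sketch of a fuzzy linguistic scale follows. It assumes $g$ equally spaced triangular terms whose neighbors overlap so that the degrees of any value sum to one; this parameterization is our assumption of a typical uniform scale and may differ in detail from Appendix A. The usage mirrors the spirit of the Figure 4 example with a hypothetical granularity $g = 4$ over a 0 to 6 m domain and a hypothetical distance of 2.5 m.

```python
from typing import List

def triangular(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership with left foot a, peak b and right foot c."""
    if x == b:
        return 1.0
    if x <= a or x >= c:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)

def fuzzy_scale_degrees(v: float, v_min: float, v_max: float, g: int) -> List[float]:
    """Degrees of v in g equally spaced, overlapping triangular terms on [v_min, v_max]."""
    if g < 2:
        raise ValueError("granularity g must be at least 2")
    step = (v_max - v_min) / (g - 1)
    v = min(max(v, v_min), v_max)  # clamp readings outside the domain of discourse
    return [triangular(v, p - step, p, p + step)
            for p in (v_min + j * step for j in range(g))]

# Hypothetical example: distance of 2.5 m, g = 4 terms peaking at 0, 2, 4 and 6 m.
print(fuzzy_scale_degrees(2.5, 0.0, 6.0, 4))  # [0.0, 0.75, 0.25, 0.0]
```

Each reading thus becomes $g$ degrees in $[0, 1]$ whatever its physical unit, which is what allows location, acceleration, and binary sensors to share one representation.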

Figure 4.

Example of the fuzzy scale defined on the distance to the location sensor, with example degrees for the values evaluating the distances.

2.2.2. Temporal Features with Fuzzy Temporal Windows

In this section, we propose the use of multiple Fuzzy Temporal Windows (FTW) and fuzzy aggregation methods [35] to enable a short- and middle-term representation [5,6] of the temporal evolution of the degrees of the terms $l^r_j$.

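The FTWs are described straightforwardly according to the distance from the current time $t^*$ to a given timestamp $t_i$, $\Delta t_i = t^* - t_i$, using the membership function $T_k(\Delta t_i)$. Therefore, a given FTW $T_k$ is defined by the values $[L_1, L_2, L_3, L_4]$, which determine a trapezoidal membership function (see Appendix C), as:

$$
T_k(\Delta t_i) =
\begin{cases}
0, & \Delta t_i \le L_1 \text{ or } \Delta t_i \ge L_4 \\
\dfrac{\Delta t_i - L_1}{L_2 - L_1}, & L_1 < \Delta t_i < L_2 \\
1, & L_2 \le \Delta t_i \le L_3 \\
\dfrac{L_4 - \Delta t_i}{L_4 - L_3}, & L_3 < \Delta t_i < L_4
\end{cases}
$$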

Next, the aggregation degree of the term $l^r_j$ within the temporal window $T_k$ of a sensor $s^r$ is computed using a max-min operator [35] (detailed in Appendix B):

$$T_k \cup l^r_j(t^*) = \max_{t_i} \min\left(T_k(t^* - t_i),\ \mu_{l^r_j}(v^r_i)\right)$$

This value $T_k \cup l^r_j(t^*)$ represents the aggregation degree of the FTW $T_k$ over the degrees of the term $l^r_j$ at a given time $t^*$.
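The trapezoidal FTW and the max-min aggregation above can be sketched in Python as follows. The stream is assumed to be a list of (timestamp, degree) pairs, where each degree is the membership of a sensor value in one linguistic term; the window matches the [1 s, 2 s, 4 s, 5 s] example used in Figure 5, while the degrees and times in the usage are hypothetical.

```python
from typing import Iterable, Tuple

def trapezoid(dt: float, l1: float, l2: float, l3: float, l4: float) -> float:
    """Membership of the elapsed time dt = t* - t_i in the FTW [l1, l2, l3, l4]."""
    if l2 <= dt <= l3:
        return 1.0
    if l1 < dt < l2:
        return (dt - l1) / (l2 - l1)
    if l3 < dt < l4:
        return (l4 - dt) / (l4 - l3)
    return 0.0

def ftw_aggregate(stream: Iterable[Tuple[float, float]], t_now: float,
                  window: Tuple[float, float, float, float]) -> float:
    """Max-min aggregation of one term's degrees over one FTW at time t_now."""
    best = 0.0
    for t_i, degree in stream:
        # min: a term degree counts only as much as its position inside the window
        best = max(best, min(trapezoid(t_now - t_i, *window), degree))
    return best

# Hypothetical degrees of one term at t_i = 5..10 s, evaluated at t* = 10.5 s.
stream = [(5, 0.9), (6, 0.6), (7, 0.4), (8, 0.3), (9, 1.0), (10, 1.0)]
print(ftw_aggregate(stream, 10.5, (1, 2, 4, 5)))  # 0.5
```

In this example, the full activation at 9 s falls on the rising edge of the window and contributes only 0.5, and the one at 10 s lies outside it, which is how an FTW weights recent and older evidence differently.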

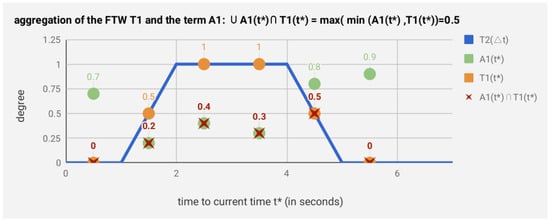

Figure 5 provides a graphical example of the use of an FTW to aggregate the degrees of a term $l_j$ in the sensor stream (we omit the sensor superscript for simplicity). First, we define an FTW $T_k$ as [1 s, 2 s, 4 s, 5 s], in seconds. Second, we compute the degree of the temporal window $T_k(\Delta t_i)$ for each sample; the aggregation degree $T_k \cup l_j(t^*)$, computed by the max-min operator, determines the value of the spatial-temporal feature defined by the pair $(l_j, T_k)$. Therefore, we define a feature for each pair of a fuzzy term and an FTW of a sensor stream at the current time $t^*$.

Figure 5.

Example of temporal aggregation with the FTW [1 s, 2 s, 4 s, 5 s] (in seconds) over the degrees of the term $l_j$. The aggregation degree $T_k \cup l_j(t^*)$ is determined by the max-min operator and defines a fuzzy spatial-temporal feature of the sensor stream.

3. Results

In this section, we describe the experimental setup and results of a case study developed at the UJAmI Smart Lab of the University of Jaen (Spain), which were gathered in order to evaluate the proposed methodology for AR.

3.1. Experimental Setup

The devices described in Section 2.1.1 were previously deployed at the UJAmI Smart Lab of the University of Jaen. The middleware based on MQTT and JSON messages integrated: (i) Decawave UWB location devices, (ii) Tactigon inertial devices, (iii) SmartThings binary sensors, (iv) wearable devices (Polar M600) with Android Wear, and (v) Raspberry Pi gateways. The middleware allowed us to collect data from environmental sensors in real time: location and acceleration data from the inhabitant; acceleration data from three smart objects (a cup, a toothbrush, and a fork); and binary activation from nine static objects (bathroom faucet, toilet flush, bed, kitchen faucet, microwave, TV, phone, closet, and main door).

In the case study, 5 scenes were collected while the inhabitant performed 10 activities: sleep, toileting, prepare lunch, eat, watch TV, phone, dressing, toothbrush, drink water and enter-leave house. A scene consisted of a coherent sequence of human actions in daily life, such as: waking up, preparing breakfast, and getting dressed to leave home. In the 5 scenes, a total of 842 samples for each one of the 26 sensors were recorded in one-second time-steps. Due to the high inflow of data from the inertial sensors, which were configured to 50 Hz, we aggregated the data in one-second batches within the gateways. Other sensors sent the last single value for each one-second step from the gateways where they were connected. In Table 1, we provide a description of the case scenes and in Table 2 the frequency of activities.
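For sensors that are not batched, keeping the last value received within each one-second step can be sketched as below. Carrying the previous value forward when no new reading arrives in a step is our assumption (it suits binary sensors that only report changes), not a detail stated for the gateways; the readings are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

def last_value_per_step(readings: Sequence[Tuple[float, float]], t_start: float,
                        n_steps: int, step: float = 1.0) -> List[Optional[float]]:
    """Resample irregular (timestamp, value) readings to fixed steps of `step` seconds."""
    ordered = sorted(readings)
    out: List[Optional[float]] = []
    idx, last = 0, None
    for k in range(n_steps):
        step_end = t_start + (k + 1) * step
        # consume every reading that arrived before the end of this step
        while idx < len(ordered) and ordered[idx][0] < step_end:
            last = ordered[idx][1]
            idx += 1
        out.append(last)  # None until the first reading arrives
    return out

# Hypothetical microwave door events (timestamp in s, state).
print(last_value_per_step([(0.2, 0), (0.7, 1), (2.4, 0)], 0.0, 4))  # [1, 1, 0, 0]
```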

Table 1.

Sequence of activities in the case scenes.

Table 2.

Frequency (number of time-steps) for each activity and scene.

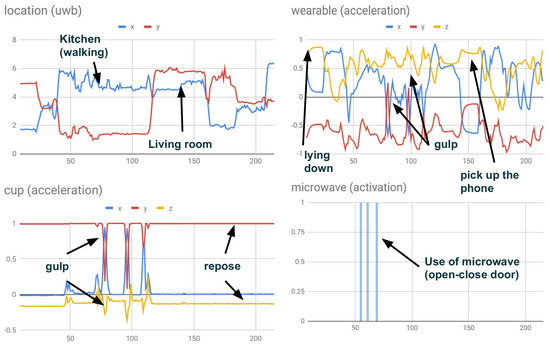

The information from all sensors was distributed in real time by means of MQTT messages and topics in one-second time-steps. An MQTT subscriber collected the sensor data streamed on the topics and recorded them in a database. Data collection was managed through MQTT messaging, which allowed us to start or stop recording in the database in real time. Note that, at the same point in time, different boards or computers could report different timestamps, since their clocks were not necessarily synchronized. To synchronize all the devices (within the one-second interval), we took the timestamp of the first value of each sensor after the initial message that started data collection as the reference time $t^r_0$ for that sensor. All subsequent timestamps of each sensor were then computed relative to its starting time $t^r_0$. Some examples of data collected from different sources are shown in Figure 6.
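The per-sensor clock alignment described above can be sketched as follows: each sensor's timestamps are re-expressed relative to its own first timestamp, so unsynchronized clocks on different boards line up to within the one-second step. The dictionary layout, sensor names, and values are hypothetical.

```python
from typing import Dict, List, Tuple

def align_to_reference(messages: Dict[str, List[Tuple[float, float]]]
                       ) -> Dict[str, List[Tuple[int, float]]]:
    """Map each sensor's (timestamp, value) pairs to (whole seconds since t0, value)."""
    aligned: Dict[str, List[Tuple[int, float]]] = {}
    for sensor, readings in messages.items():
        ordered = sorted(readings)
        if not ordered:
            aligned[sensor] = []
            continue
        t0 = ordered[0][0]  # reference time: first value received after the start message
        aligned[sensor] = [(int(t - t0), v) for t, v in ordered]
    return aligned

# Two boards whose clocks disagree by roughly 948 s.
raw = {"uwb_tag": [(1000.25, 2.1), (1001.25, 2.0)], "cup": [(52.5, 0.1), (53.75, 0.4)]}
print(align_to_reference(raw))
# {'uwb_tag': [(0, 2.1), (1, 2.0)], 'cup': [(0, 0.1), (1, 0.4)]}
```

The approach assumes that all sensors deliver their first value at about the same moment after the start message; any offset in that first delivery becomes a constant misalignment bounded by the step size.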

Figure 6.

Data from heterogeneous sensors. The top-left shows the location in meters from a UWB device. The top-right shows acceleration from a wearable device. The bottom-left shows acceleration in the inhabitant’s cup. The bottom-right shows the activation of the microwave. Some inhabitant behaviors and the impact on sensors are indicated in the timelines.

During the case study, an external observer labeled the timeline in real time with the activity carried out by the inhabitant. For training and evaluation, cross-validation was carried out over the 5 scenes (each scene was used in turn for testing and the others for training). The evaluation of AR was performed in streaming mode, every second, under real-time conditions and without explicit segmentation of the activities. Next, we merged all time-steps from the 5 test folds into a full test timeline, which was analyzed with the metrics. The metrics used to evaluate the models were precision, recall, and F1-score, computed for each activity. In addition, we allowed an error margin of one second, since the human labeling of the scenes may be slightly displaced at this time resolution.
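The per-activity metrics with a one-second tolerance can be sketched as below; the cross-validation loop over scenes is omitted. The exact matching rule is our assumption: a predicted second counts as correct if the same activity is labeled within plus or minus the margin, and a labeled second counts as recovered if that activity is predicted within the margin. The timelines are hypothetical.

```python
from typing import Dict, Sequence

def per_class_scores(y_true: Sequence[str], y_pred: Sequence[str],
                     margin: int = 1) -> Dict[str, Dict[str, float]]:
    """Per-activity precision, recall and F1, tolerating label shifts of +/- margin steps."""
    if len(y_true) != len(y_pred):
        raise ValueError("label and prediction timelines must have the same length")
    n = len(y_true)

    def near(seq: Sequence[str], t: int, activity: str) -> bool:
        # True if `activity` occurs in seq within [t - margin, t + margin]
        return activity in seq[max(0, t - margin):min(n, t + margin + 1)]

    scores: Dict[str, Dict[str, float]] = {}
    for activity in sorted(set(y_true) | set(y_pred)):
        predicted = [t for t in range(n) if y_pred[t] == activity]
        labeled = [t for t in range(n) if y_true[t] == activity]
        precision = (sum(near(y_true, t, activity) for t in predicted) / len(predicted)
                     if predicted else 0.0)
        recall = (sum(near(y_pred, t, activity) for t in labeled) / len(labeled)
                  if labeled else 0.0)
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[activity] = {"precision": precision, "recall": recall, "f1": f1}
    return scores

y_true = ["sleep", "sleep", "eat", "eat", "eat"]
y_pred = ["sleep", "eat", "eat", "eat", "eat"]
print(per_class_scores(y_true, y_pred, margin=0)["eat"]["precision"])  # 0.75
print(per_class_scores(y_true, y_pred, margin=1)["eat"]["precision"])  # 1.0
```

The example shows the effect of the margin: a transition predicted one second early is penalized with `margin=0` but accepted with `margin=1`.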

Finally, as light and efficient classifiers, we evaluated kNN, decision tree (C4.5), and SVM, whose implementations in Java and C++ [51,52,53] enable learning and evaluation on miniature boards or mobile devices. We evaluated the approach on a mid-range mobile device (Samsung Galaxy J7), where the classifiers were integrated using Weka [52], and measured the learning time of each classifier.
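Part of kNN's appeal for miniature boards is that training amounts to storing the feature vectors. The sketch below is a plain-Python illustration of that idea with Euclidean distance and majority voting; it is not the Weka implementation used in the experiments, and the value of k and the feature vectors are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence

def knn_predict(train_x: List[Sequence[float]], train_y: List[str],
                x: Sequence[float], k: int = 1) -> str:
    """Majority vote among the k stored samples closest to x (Euclidean distance)."""
    # "Training" a kNN model is just keeping train_x and train_y in memory.
    nearest = sorted(zip((math.dist(xi, x) for xi in train_x), train_y))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

train_x = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [6.0, 5.0]]  # hypothetical features
train_y = ["sleep", "sleep", "eat", "eat"]
print(knn_predict(train_x, train_y, [5.5, 5.0], k=3))  # eat
```

The trade-off is that prediction cost grows with the number of stored samples, which remains manageable for the small datasets targeted in this work.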

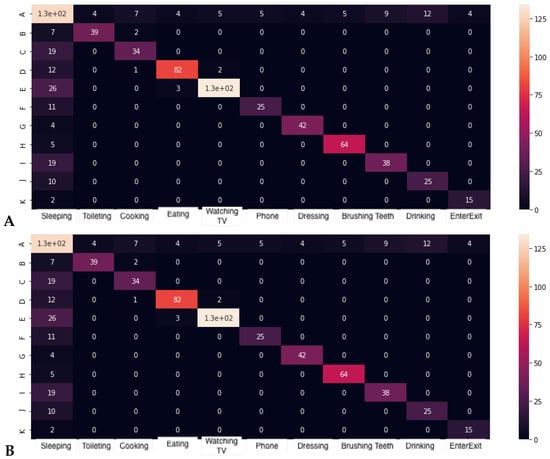

3.2. Baseline Features

In this section, we present the results of baseline features in AR using raw data provided by sensors. Therefore, first we applied the classification of raw data collected by middleware for each second and activity label. Second, in order to evaluate the impact of aggregating raw data in a temporal range, we included an evaluation of several sizes of temporal windows, which summarized sensor data using maximal aggregation. The configurations were: (i) , (ii) , and (iii) , where configure the given temporal window for each evaluation time in the timeline. The number of features corresponds to the number of sensors . Results and learning time in mobile devices for each activity and classifier are shown in Table 3; the confusion matrix with the best configuration and SVM is shown in Figure 7.
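The crisp baseline window, which summarizes each sensor by its maximum over the last steps, can be sketched as follows. The window size is left as a parameter, and the activation values are hypothetical.

```python
from typing import Sequence

def crisp_window_max(values: Sequence[float], t_now: int, size: int) -> float:
    """Maximum of one sensor's per-second values over the `size` steps ending at t_now."""
    if size < 1 or not 0 <= t_now < len(values):
        raise ValueError("need size >= 1 and t_now inside the stream")
    start = max(0, t_now - size + 1)
    return max(values[start:t_now + 1])

activation = [0, 1, 0, 0, 0]  # hypothetical binary sensor, fired at t = 1
print([crisp_window_max(activation, 4, s) for s in (1, 3, 4)])  # [0, 0, 1]
```

With crisp windows, a short activation is either fully seen or fully missed depending on the window size, which is the sensitivity to window size that the fuzzy temporal windows are meant to reduce.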

Table 3.

Results with baseline features: precision (Pre), recall (Rec) and F1-score (F1-sc).

Figure 7.

Confusion matrix for the best classifiers. (A) SVM + in the baseline, (B) KNN + with fuzzy spatial features, and (C) KNN + with fuzzy spatial temporal features.

We can observe that the use of one temporal window with baseline features was only suitable when the window size fit the short-term sensor activation .

3.3. Fuzzy Spatial-Temporal Features

In this section, we detail the extraction of fuzzy spatial-temporal features from the sensors of the case study. First, in order to process the data from the UWB and acceleration sensors (in wearable devices and inertial objects), we applied a normalized linguistic scale with granularity , where the proposed linguistic terms fit naturally ordered within the domain of discourse of the environmental sensor.

The number of features corresponds to the number of sensors times the granularity $g$. The linguistic scale of the UWB location was defined on the domain $[0, 6]$ m, since the smart lab is less than six meters in size, and the linguistic scale of the acceleration data was defined over the domain of normalized angles. Binary sensors, which are represented by the values $\{0, 1\}$ (one in the case of activation), have the same straightforward representation as a fuzzy or crisp value.

In Table 4, we present the results and learning time on a mobile device for each activity and classifier; the confusion matrix for the best configuration with kNN is shown in Figure 7. We note the stability of the results across the different windows compared with the previous results without fuzzy processing.

Table 4.

Results with fuzzy features (spatial): precision (Pre), recall (Rec) and F1-score (F1-sc).

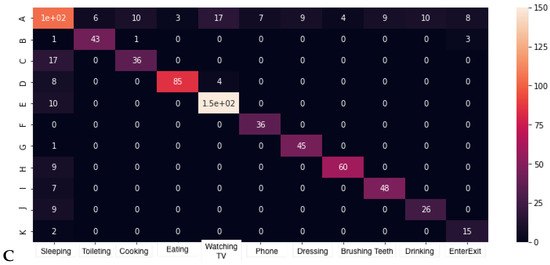

Second, we applied two configurations of FTWs to represent middle- and short-term activation of sensors: (i) and (ii) , where represents a past fuzzy temporal window, a fuzzy temporal window closer to current time , and a delayed temporal window from the current time . The first and second configurations contained a further temporal evaluation of 8 s and 21 s, respectively. The number of features corresponds to the number of sensors times granularity and the number of temporal windows .

Finally, in Table 5, we present the results and learning time on a mobile device for each activity and classifier; the kNN confusion matrix is shown in Figure 7. We note the increase in performance when several fuzzy temporal windows are included, with kNN standing out in learning time, efficiency, and F1-score.

Table 5.

Results with fuzzy features (spatial and temporal): precision (Pre), recall (Rec) and F1-score (F1-sc).

3.4. Representation with Extended Baseline Features

In this section, we evaluate the impact of including an advanced representation of sensors as baseline features. For inertial sensors, we included the aggregation functions maximum, minimum, average, and standard deviation, which have been identified as a strong representation of acceleration data [27]. For binary sensors, we included the last activation of the sensors together with the current activation to represent the last status of the smart environment, which has brought about encouraging results in activity recognition [28,54]. These new features were computed to obtain an extended sensor representation.
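The extended features can be sketched as follows. For inertial sensors, the four statistics are computed per axis over a one-second batch. For binary sensors, the current activation is the raw state itself, so only a "last activation" flag is computed here; interpreting it as marking the sensor(s) whose most recent 0 to 1 transition is the latest is our assumption, and the sensor names and values are hypothetical.

```python
import statistics
from typing import Dict, List, Sequence

def inertial_features(batch: Sequence[float]) -> Dict[str, float]:
    """Max, min, mean and population standard deviation of one axis in a batch."""
    return {
        "max": max(batch),
        "min": min(batch),
        "mean": statistics.fmean(batch),
        "std": statistics.pstdev(batch),
    }

def last_fired(streams: Dict[str, Sequence[int]]) -> List[Dict[str, int]]:
    """Per step, flag with 1 the binary sensor(s) whose latest 0->1 activation is most recent."""
    n_steps = len(next(iter(streams.values())))
    onset = {name: -1 for name in streams}  # -1: not activated yet
    result = []
    for t in range(n_steps):
        for name, states in streams.items():
            previous = states[t - 1] if t > 0 else 0
            if states[t] == 1 and previous == 0:
                onset[name] = t
        latest = max(onset.values())
        result.append({name: int(latest >= 0 and onset[name] == latest) for name in streams})
    return result

print(inertial_features([1.0, 2.0, 3.0])["mean"])  # 2.0
print(last_fired({"bed": [1, 1, 0, 0], "microwave": [0, 0, 1, 0]}))
# [{'bed': 1, 'microwave': 0}, {'bed': 1, 'microwave': 0}, {'bed': 0, 'microwave': 1}, {'bed': 0, 'microwave': 1}]
```

The last-activation flag keeps the microwave marked after its contact closes, so the environment's most recent interaction remains visible to the classifier.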

First, we computed the performance of the extended representation when used as baseline features within one-second windows , which can be compared with Table 3 to see how the results correspond with the non-extended features. Second, we evaluated the impact of applying the fuzzy spatial-temporal methodology to the extended features with the configurations for FTW and , which can be compared with Table 5 to see how the results correspond with the non-extended features. The results with the performance of the extended representation are shown in Table 6.

Table 6.

Results with extended baseline features: precision (Pre), recall (Rec) and F1-score (F1-sc).

3.5. Impact on Selection by Type of Sensor

In this section, we evaluate the impact of selecting a subset of the sensors of the case study on activity recognition. For this, we started with the best configuration, which utilized fuzzy spatial-temporal extended features with FTW . From this configuration, we evaluated four subsets of sensors by type:

- (S1) removing binary (using inertial and location) sensors.

- (S2) removing location (using binary and inertial) sensors.

- (S3) removing inertial (using binary and location) sensors.

- (S4) removing binary and location (using only inertial) sensors.

In Table 7, we show the results of selecting the four subsets of sensors by type.

Table 7.

S1: non-binary (inertial + location) sensors; S2: non-location (binary + inertial); S3: non-inertial (binary + location); S4: only inertial.

3.6. Discussion

Based on the results of the case study, we argue that the use of fuzzy logic to extract spatial-temporal features from heterogeneous sensors constitutes a suitable model for representation and learning purposes in AR. First, the spatial representation based on fuzzy scales increased performance compared with crisp raw values. Second, including multiple fuzzy temporal windows as features, which enables a middle- and short-term representation of sensor data, brought about a relevant increase in performance. Moreover, the two FTW configurations evaluated showed similar results, suggesting that window size is not critical when modeling FTW parameters, unlike with crisp windows and baseline features. Third, fuzzy spatial-temporal features showed encouraging performance on raw sensor data; in addition, we evaluated an extended representation for inertial and binary sensors, which increased performance slightly, by around 1–2%. Fourth, we evaluated the impact of removing some types of sensors from the deployment in the smart lab. The combination of all types of sensors provided the best configuration, and we note that: (i) using only the inertial sensors (the inhabitant's wearable and the smart objects) reduced performance notably; (ii) combining binary sensors with location or inertial sensors came close to the best configuration, which used all of them. Finally, kNN showed encouraging results, together with SVM. The shorter learning time and high F1-score of kNN suggest that it is the best option among the evaluated classifiers for learning AR within miniature boards. Decision trees had lower performance, which we attribute to their weaker handling of continuous data.

4. Conclusions and Ongoing Works

The aim of this work was to describe and fuse the information from heterogeneous sensors in an efficient and lightweight manner, enabling IoT devices to compute spatial-temporal features for AR in a way that can be deployed in fog computing architectures. On the one hand, we performed a case study combining location, inertial, and binary sensors in a smart lab where an inhabitant carried out 10 daily activities. As novel aspects, we integrated inertial sensors in daily objects and high-precision location sensors through MQTT-based middleware. On the other hand, we showed the capability of fuzzy scales and fuzzy temporal windows to enhance the spatial-temporal representation of sensor data. The results showed stable performance with fuzzy temporal windows, which eases the window size selection problem. For spatial features, we applied the same general method based on linguistic scales to fuse and describe heterogeneous sensors. We also evaluated the impact of removing sensors by type (binary, location, and inertial), which indicated which types contributed most to activity recognition in a smart lab setting. Finally, we note the high representativeness of fuzzy logic in describing features, which straightforward and efficient classifiers were able to exploit; among them, kNN stood out.

Author Contributions

Conceptualization: all authors; M.E. and J.M.Q.: methodology, software, and validation.

Funding

This research received funding from the REMIND project (Marie Sklodowska-Curie, EU Framework for Research and Innovation Horizon 2020) under Grant Agreement No. 734355. This contribution was also supported by the Spanish government through the projects RTI2018-098979-A-I00, PI-0387-2018, and CAS17/00292.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| UWB | Ultra-Wideband |
| IoT | Internet of Things |
| BLE | Bluetooth Low Energy |
| MQTT | Message Queuing Telemetry Transport |
| JSON | JavaScript Object Notation |
| AR | Activity Recognition |
| FTW | Fuzzy Temporal Window |

Appendix A. Linguistic Scale of Fuzzy Terms by Means of Triangular Membership Functions

A linguistic scale for a given environmental sensor is defined by (i) interval values and (ii) granularity g. Each term is characterized by a triangular membership function [55], which is defined by the interval values as , where:
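Equation (A1) did not survive text extraction. As a hedged illustration of the idea, the sketch below assumes a common construction: g terms whose peaks are evenly spaced over an interval [lo, hi], where each term TR(a, b, c) rises from the previous peak a to its own peak b and falls to the next peak c. The names `lo`, `hi`, `linguistic_scale`, and `fuzzify` are illustrative and the authors' exact definition may differ.

```python
def triangular(x, a, b, c):
    """Triangular membership TR(a, b, c): 0 outside (a, c), 1 at the peak b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def linguistic_scale(lo, hi, g):
    """Hypothetical scale: g triangles (a, b, c) with peaks evenly spaced over [lo, hi]."""
    if g < 2 or hi <= lo:
        raise ValueError("need g >= 2 and hi > lo")
    step = (hi - lo) / (g - 1)
    peaks = [lo + i * step for i in range(g)]
    # Each triangle spans from the previous peak to the next one.
    return [(p - step, p, p + step) for p in peaks]


def fuzzify(x, scale):
    """Membership degree of x in each term; x is clamped to [first peak, last peak]."""
    x = min(max(x, scale[0][1]), scale[-1][1])
    return [triangular(x, a, b, c) for a, b, c in scale]


scale = linguistic_scale(0.0, 4.0, 3)  # terms peaking at 0, 2, and 4
print(fuzzify(1.0, scale))             # [0.5, 0.5, 0.0]
```

With this construction the degrees of a clamped value always add up to 1, so a raw reading is replaced by a short vector describing how strongly it belongs to each linguistic term.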

Appendix B. Aggregating Fuzzy Temporal Windows and Terms

For a given fuzzy term and a fuzzy temporal window defined over a sensor stream , we define the aggregation in a given current time as:

Using max-min [35] as an operation to model the t-norm and co-norm, we obtain:
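Equations (A2) and (A3) are also missing from this copy. Assuming, as the surrounding text indicates, that min acts as the t-norm and max as the t-conorm, the aggregation of a fuzzy term over an FTW at the current time t* can be sketched as the maximum, over the readings (v_i, t_i) of the stream, of min(μ_term(v_i), μ_FTW(t* − t_i)). The (value, timestamp) stream format and the two example membership functions `high` and `last_two_minutes` are hypothetical.

```python
from typing import Callable, Iterable, Tuple

Membership = Callable[[float], float]


def aggregate_max_min(stream: Iterable[Tuple[float, float]], term: Membership,
                      window: Membership, now: float) -> float:
    """Max over readings of min(term degree of the value, window degree of its age).

    `stream` holds (value, timestamp) pairs; readings later than `now` are ignored
    and an empty stream yields 0.0.
    """
    degrees = (min(term(value), window(now - ts)) for value, ts in stream if ts <= now)
    return max(degrees, default=0.0)


def high(value: float) -> float:
    """Hypothetical fuzzy term: ramps from 0 at 20 to 1 at 30."""
    return min(max((value - 20.0) / 10.0, 0.0), 1.0)


def last_two_minutes(age: float) -> float:
    """Hypothetical FTW: full membership for ages 0-60 s, fading to 0 at 120 s."""
    if age < 0.0 or age >= 120.0:
        return 0.0
    return 1.0 if age <= 60.0 else (120.0 - age) / 60.0


stream = [(30.0, 0.0), (25.0, 100.0), (10.0, 150.0)]
print(aggregate_max_min(stream, high, last_two_minutes, now=160.0))  # 0.5
```

Here the oldest reading is fully "high" but falls outside the window, while the reading at t = 100 is fully inside the window but only half "high", so the aggregated degree is 0.5.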

Appendix C. Representation of Fuzzy Temporal Windows using Trapezoidal Membership Functions

Each FTW is described by a trapezoidal function based on the time interval from a previous time to the current time : and a fuzzy set characterized by a membership function whose shape corresponds to a trapezoidal function. A trapezoidal membership function is defined by a lower limit , an upper limit , a lower support limit , and an upper support limit (refer to Equation (A4)):
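Equation (A4) is likewise missing. The sketch below uses the textbook trapezoid with limits l1 ≤ l2 ≤ l3 ≤ l4, assuming that the lower and upper support limits l2 and l3 bound the plateau of full membership and that l1 and l4 are the outer limits; its input is the elapsed time t* − t between a reading and the current time. The window names and limits in `WINDOWS` are hypothetical examples, not the configurations evaluated in the case study.

```python
def trapezoidal(x: float, l1: float, l2: float, l3: float, l4: float) -> float:
    """Trapezoid: 0 outside [l1, l4], 1 on [l2, l3], linear ramps in between.

    Equal limits (l1 == l2 or l3 == l4) act as vertical edges, so no division by zero.
    """
    if x < l1 or x > l4:
        return 0.0
    if x < l2:
        return (x - l1) / (l2 - l1)
    if x <= l3:
        return 1.0
    return (l4 - x) / (l4 - l3)


def ftw_degrees(event_time: float, now: float, windows: dict) -> dict:
    """Membership of one reading's age (now - event_time) in each named FTW."""
    age = now - event_time
    return {name: trapezoidal(age, *limits) for name, limits in windows.items()}


# Hypothetical short- and middle-term windows, limits in seconds.
WINDOWS = {"short": (0.0, 0.0, 30.0, 60.0), "middle": (30.0, 60.0, 300.0, 600.0)}
print(ftw_degrees(event_time=100.0, now=145.0, windows=WINDOWS))
# {'short': 0.5, 'middle': 0.5}
```

Because neighbouring windows overlap, a reading that is 45 s old contributes partially to both, which is one way fuzzy boundaries reduce the sensitivity to the exact window size.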

References

1. Bravo, J.; Fuentes, L.; de Ipina, D.L. Theme issue: Ubiquitous computing and ambient intelligence. Pers. Ubiquitous Comput. 2011, 15, 315–316.

2. Bravo, J.; Hervas, R.; Fontecha, J.; Gonzalez, I. m-Health: Lessons Learned by m-Experiences. Sensors 2018, 18, 1569.

3. Rashidi, P.; Mihailidis, A. A Survey on Ambient Assisted Living Tools for Older Adults. IEEE J. Biomed. Health Inform. 2013, 17, 579–590.

4. De-la-Hoz, E.; Ariza-Colpas, P.; Medina, J.; Espinilla, M. Sensor-based datasets for Human Activity Recognition—A Systematic Review of Literature. IEEE Access 2018, 6, 59192–59210.

5. Medina-Quero, J.; Zhang, S.; Nugent, C.; Espinilla, M. Ensemble classifier of long short-term memory with fuzzy temporal windows on binary sensors for activity recognition. Expert Syst. Appl. 2018, 114, 441–453.

6. Ali-Hamad, R.; Salguero, A.; Bouguelia, M.H.; Espinilla, M.; Medina-Quero, M. Efficient activity recognition in smart homes using delayed fuzzy temporal windows on binary sensors. IEEE J. Biomed. Health Inform. 2019.

7. Ordoñez, F.J.; Roggen, D. Deep convolutional and LSTM recurrent neural networks for multimodal wearable activity recognition. Sensors 2016, 16, 115.

8. Garcia Lopez, P.; Montresor, A.; Epema, D.; Datta, A.; Higashino, T.; Iamnitchi, A.; Barcellos, M.; Felber, P.; Riviere, E. Edge-centric computing: Vision and challenges. ACM SIGCOMM Comput. Commun. Rev. 2015, 45, 37–42.

9. Bonomi, F.; Milito, R.; Zhu, J.; Addepalli, S. Fog computing and its role in the internet of things. In Proceedings of the First Edition of the MCC Workshop on Mobile Cloud Computing, Helsinki, Finland, 17 August 2012; pp. 13–16.

10. Luan, T.H.; Gao, L.; Li, Z.; Xiang, Y.; Wei, G.; Sun, L. Fog computing: Focusing on mobile users at the edge. arXiv 2015, arXiv:1502.01815.

11. Kopetz, H. Internet of Things. In Real-Time Systems; Springer: New York, NY, USA, 2011; pp. 307–323.

12. Chen, L.W.; Ho, Y.F.; Kuo, W.T.; Tsai, M.F. Intelligent file transfer for smart handheld devices based on mobile cloud computing. Int. J. Commun. Syst. 2015, 30, e2947.

13. Atzori, L.; Iera, A.; Morabito, G. The Internet of Things: A survey. Comput. Netw. 2010, 54, 2787–2805.

14. Kortuem, G.; Kawsar, F.; Sundramoorthy, V.; Fitton, D. Smart objects as building blocks for the internet of things. IEEE Internet Comput. 2010, 14, 44–51.

15. Kim, J.E.; Boulos, G.; Yackovich, J.; Barth, T.; Beckel, C.; Mosse, D. Seamless integration of heterogeneous devices and access control in smart homes. In Proceedings of the 2012 Eighth International Conference on Intelligent Environments, Guanajuato, Mexico, 26–29 June 2012; pp. 206–213.

16. Lara, O.D.; Labrador, M.A. A survey on human activity recognition using wearable sensors. IEEE Commun. Surv. Tutor. 2013, 15, 1192–1209.

17. Luzuriaga, J.E.; Cano, J.C.; Calafate, C.; Manzoni, P.; Perez, M.; Boronat, P. Handling mobility in IoT applications using the MQTT protocol. In Proceedings of the 2015 Internet Technologies and Applications (ITA), Wrexham, UK, 8–11 September 2015; pp. 245–250.

18. Shi, H.; Chen, N.; Deters, R. Combining mobile and fog computing: Using CoAP to link mobile device clouds with fog computing. In Proceedings of the 2015 IEEE International Conference on Data Science and Data Intensive Systems, Sydney, NSW, Australia, 11–13 December 2015; pp. 564–571.

19. Henning, M. A new approach to object-oriented middleware. IEEE Internet Comput. 2004, 8, 66–75.

20. Ruiz, A.R.J.; Granja, F.S. Comparing Ubisense, BeSpoon, and DecaWave UWB location systems: Indoor performance analysis. IEEE Trans. Instrum. Meas. 2017, 66, 2106–2117.

21. Lin, X.Y.; Ho, T.W.; Fang, C.C.; Yen, Z.S.; Yang, B.J.; Lai, F. A mobile indoor positioning system based on iBeacon technology. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milano, Italy, 25–29 August 2015; pp. 4970–4973.

22. Fiorini, L.; Bonaccorsi, M.; Betti, S.; Esposito, D.; Cavallo, F. Combining wearable physiological and inertial sensors with indoor user localization network to enhance activity recognition. J. Ambient Intell. Smart Environ. 2018, 10, 345–357.

23. Singla, G.; Cook, D.J.; Schmitter-Edgecombe, M. Tracking activities in complex settings using smart environment technologies. Int. J. Biosci. Psychiatry Technol. IJBSPT 2009, 1, 25.

24. Yan, S.; Liao, Y.; Feng, X.; Liu, Y. Real time activity recognition on streaming sensor data for smart environments. In Proceedings of the 2016 International Conference on Progress in Informatics and Computing (PIC), Shanghai, China, 23–25 December 2016; pp. 51–55.

25. Espinilla, M.; Martínez, L.; Medina, J.; Nugent, C. The experience of developing the UJAmI Smart lab. IEEE Access 2018, 6, 34631–34642.

26. Hong, X.; Nugent, C.; Mulvenna, M.; McClean, S.; Scotney, B.; Devlin, S. Evidential fusion of sensor data for activity recognition in smart homes. Pervasive Mob. Comput. 2009, 5, 236–252.

27. Espinilla, M.; Medina, J.; Salguero, A.; Irvine, N.; Donnelly, M.; Cleland, I.; Nugent, C. Human Activity Recognition from the Acceleration Data of a Wearable Device. Which Features Are More Relevant by Activities? Proceedings 2018, 2, 1242.

28. Ordonez, F.; de Toledo, P.; Sanchis, A. Activity recognition using hybrid generative/discriminative models on home environments using binary sensors. Sensors 2013, 13, 5460–5477.

29. Quero, J.M.; Medina, M.Á.L.; Hidalgo, A.S.; Espinilla, M. Predicting the Urgency Demand of COPD Patients From Environmental Sensors Within Smart Cities With High-Environmental Sensitivity. IEEE Access 2018, 6, 25081–25089.

30. Rajalakshmi, A.; Shahnasser, H. Internet of Things using Node-RED and Alexa. In Proceedings of the 2017 17th International Symposium on Communications and Information Technologies (ISCIT), Cairns, Australia, 25–27 September 2017; pp. 1–4.

31. Yamashita, T.; Watasue, T.; Yamauchi, Y.; Fujiyoshi, H. Improving Quality of Training Samples Through Exhaustless Generation and Effective Selection for Deep Convolutional Neural Networks. VISAPP 2015, 2, 228–235.

32. Lane, N.D.; Bhattacharya, S.; Mathur, A.; Georgiev, P.; Forlivesi, C.; Kawsar, F. Squeezing deep learning into mobile and embedded devices. IEEE Pervasive Comput. 2017, 16, 82–88.

33. Lane, N.D.; Warden, P. The deep (learning) transformation of mobile and embedded computing. Computer 2018, 51, 12–16.

34. Le Yaouanc, J.M.; Poli, J.P. A fuzzy spatio-temporal-based approach for activity recognition. In International Conference on Conceptual Modeling; Springer: Berlin/Heidelberg, Germany, 2012.

35. Medina-Quero, J.; Martinez, L.; Espinilla, M. Subscribing to fuzzy temporal aggregation of heterogeneous sensor streams in real-time distributed environments. Int. J. Commun. Syst. 2017, 30, e3238.

36. Espinilla, M.; Medina, J.; Hallberg, J.; Nugent, C. A new approach based on temporal sub-windows for online sensor-based activity recognition. J. Ambient Intell. Humaniz. Comput. 2018, 1–13.

37. Banos, O.; Galvez, J.M.; Damas, M.; Guillen, A.; Herrera, L.J.; Pomares, H.; Rojas, I.; Villalonga, C.; Hong, C.S.; Lee, S. Multiwindow fusion for wearable activity recognition. In Proceedings of the International Work-Conference on Artificial Neural Networks, Palma de Mallorca, Spain, 10–12 June 2015; Springer: Cham, Switzerland, 2015; pp. 290–297.

38. Grokop, L.H.; Narayanan, V.U.S. Device Position Estimates from Motion and Ambient Light Classifiers. U.S. Patent No. 9,366,749, 14 June 2016.

39. Akhavian, R.; Behzadan, A.H. Smartphone-based construction workers’ activity recognition and classification. Autom. Constr. 2016, 71, 198–209.

40. Martin, H.; Bernardos, A.M.; Iglesias, J.; Casar, J.R. Activity logging using lightweight classification techniques in mobile devices. Pers. Ubiquitous Comput. 2013, 17, 675–695.

41. Chen, S.M.; Hong, J.A. Multicriteria linguistic decision making based on hesitant fuzzy linguistic term sets and the aggregation of fuzzy sets. Inf. Sci. 2014, 286, 63–74.

42. Morente-Molinera, J.A.; Pérez, I.J.; Ureña, R.; Herrera-Viedma, E. On multi-granular fuzzy linguistic modeling in decision making. Procedia Comput. Sci. 2015, 55, 593–602.

43. The Tactigon. 2019. Available online: https://www.thetactigon.com/ (accessed on 8 August 2019).

44. Zafari, F.; Papapanagiotou, I.; Christidis, K. Microlocation for internet-of-things-equipped smart buildings. IEEE Internet Things J. 2016, 3, 96–112.

45. Kulmer, J.; Hinteregger, S.; Großwindhager, B.; Rath, M.; Bakr, M.S.; Leitinger, E.; Witrisal, K. Using DecaWave UWB transceivers for high-accuracy multipath-assisted indoor positioning. In Proceedings of the 2017 IEEE International Conference on Communications Workshops (ICC Workshops), Paris, France, 21–25 May 2017; pp. 1239–1245.

46. Mishra, S.M. Wearable Android: Android Wear and Google Fit App Development; John Wiley & Sons: Hoboken, NJ, USA, 2015.

47. SmartThings. 2019. Available online: https://www.smartthings.com/ (accessed on 8 August 2019).

48. Al-Qaseemi, S.A.; Almulhim, H.A.; Almulhim, M.F.; Chaudhry, S.R. IoT architecture challenges and issues: Lack of standardization. In Proceedings of the 2016 Future Technologies Conference (FTC), San Francisco, CA, USA, 6–7 December 2016; pp. 731–738.

49. Bray, T. The JavaScript Object Notation (JSON) Data Interchange Format (No. RFC 8259). 2017. Available online: https://buildbot.tools.ietf.org/html/rfc7158 (accessed on 8 August 2019).

50. Markowski, A.S.; Mannan, M.S.; Bigoszewska, A. Fuzzy logic for process safety analysis. J. Loss Prev. Process. Ind. 2009, 22, 695–702.

51. Beck, J. Implementation and Experimentation with C4.5 Decision Trees. Bachelor’s Thesis, University of Central Florida, Orlando, FL, USA, 2007.

52. Hall, M.; Frank, E.; Holmes, G.; Pfahringer, B.; Reutemann, P.; Witten, I.H. The WEKA data mining software: An update. ACM SIGKDD Explor. Newsl. 2009, 11, 10–18.

53. Chang, C.C.; Lin, C.J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. TIST 2011, 2, 27.

54. Kasteren, T.L.; Englebienne, G.; Krose, B.J. An activity monitoring system for elderly care using generative and discriminative models. Pers. Ubiquitous Comput. 2010, 14, 489–498.

55. Chang, D.Y. Applications of the extent analysis method on fuzzy AHP. Eur. J. Oper. Res. 1996, 95, 649–655.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).