Abstract

The stockline, which describes the measured depth of the blast furnace (BF) burden surface over time, is essential for the operator to execute an optimized charging operation. In the harsh BF environment, noise interference and aberrant measurements are the main challenges of stockline detection. In this paper, a novel encoder–decoder architecture consisting of a convolutional neural network (CNN) and a long short-term memory (LSTM) network is proposed, which suppresses noise interference, classifies distorted signals, and regresses the stockline in a learning-based way. By leveraging the LSTM, we are able to model longer sequences of historical measurements for robust stockline tracking. Compared to traditional hand-crafted denoising processing, considerable time and effort can be saved. Experiments are conducted on an actual eight-radar array system in a blast furnace, and the effectiveness of the proposed method is demonstrated on real recorded data.

1. Introduction

Blast furnaces (BFs) are the key reactors of iron and steel smelting, which consume about 70% of the energy (i.e., coal, electricity, fuel oil, and natural gas) in the steel-making process [1,2]. In iron-making, solid raw materials, e.g., iron ore, coke, and limestone, are violently burned and consumed over time, and the charging operation needs to be executed by accurately estimating the current depth of the burden surface. Burden surface monitoring is crucial to ensuring high-quality steel production, as it affects the optimization of charging operations and the utilization ratio of heat and chemical energy [3].

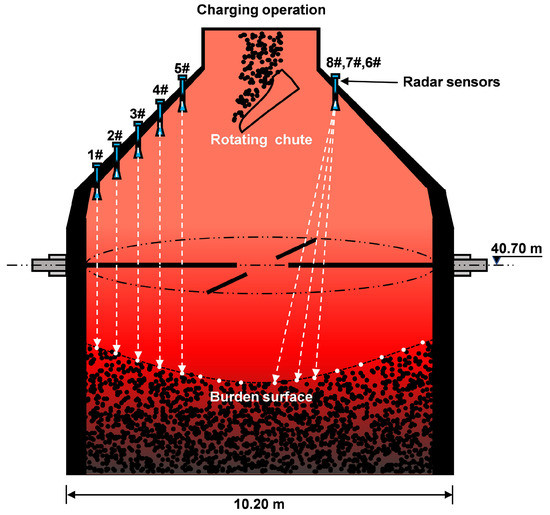

The measurement of the BF burden surface is a longstanding and challenging task because of the harsh in-furnace environment, which is lightless, high-pressure, high-dust, high-humidity, and extremely high in temperature [4]. Owing to its contact-free nature, high precision, and high penetrability, the frequency-modulated continuous wave (FMCW) radar has become widely popular as a pointwise measuring method to locate the burden level in real time [5]. Figure 1 shows the employed eight-radar array system, where the radar sensors are placed in consideration of the practical industrial field. The burden surface is bilaterally symmetrical because of the uniform rotation of the charging chute, so only half of the surface needs to be measured. The trajectory of the measuring points taken by the radar over time is termed the stockline and reflects the depth of the burden surface at every timestamp. By improving the precision of stockline detection, the operator can optimize the burden distribution in a way that is conducive to a stable iron-making process.

Figure 1.

Prototype of the employed eight-radar array system. The frequency-modulated continuous wave (FMCW) radars work in the band of 24 GHz∼26 GHz, with a bandwidth of 1.64 GHz. The radar sensors are placed by scientifically considering the practical industrial field.

Noise interferences are crucial issues in the BF radar system. The radar signals usually suffer heavy low-frequency noises due to strong electromagnetic scattering. At times, the shadows of the rotating chute and falling materials, the influence of natural deviations, instrument errors, fraudulent behaviors, and other unexpected interferences result in the appearance of distorted signals and make stockline detection more challenging.

In the study of BFs, a variety of algorithms for noisy data processing have been developed so far, covering principal component analysis [6], support vector machines (SVM) [7,8], neural network models [9], extreme learning machines [10], etc. Previous works acknowledge that the noise occurring within the BF reactor remains an extremely complex issue. Existing works on stockline detection are reviewed in [5,11,12,13,14,15]. Generally, peak searching (PS) is used to extract the stockline, which corresponds to the maximum amplitude component in the signal spectrum [12]. In actual BF environments, the collected signals are usually corrupted with noise that buries the target features under fraudulent peaks and brings outliers to the stockline. A simple approach to eliminating the noise influence is threshold clipping, of which the adaptive threshold method is an example [13], where the noise outside the thresholds is simply discarded. Effective filtering methods are being developed to exclude the empirical interval of noise distribution, e.g., the infinite impulse response (IIR) filter used in [14] and the windowed finite impulse response (FIR) filter used in [16]. Ongoing efforts are being made to improve noise robustness by taking historical observations into consideration, e.g., Kalman tracking methods [17,18]. Several stockline smoothing methods have been presented to reduce noise fluctuation, e.g., mean shift and spectrum averaging [13]. The CLEAN and clustering algorithms, as reported in [5], can omit false noisy targets on the burden surface but are unsuitable for the task of continuous detection as such. Traditional noise abatement requires domain expertise to construct the feature selector, involving, for example, threshold selection or noise filtering, which limits further performance improvement. Another bottleneck is the limited stockline tracking capability.
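As a rough illustration of the peak searching baseline mentioned above, the following sketch picks the maximum-amplitude bin of a magnitude spectrum, optionally restricted to an index window as a crude form of clipping. The function name `peak_search`, the window arguments, and the toy spectrum are assumptions made for illustration; they are not the configuration used in [12,13].

```python
def peak_search(spectrum, lo=0, hi=None):
    """Return the index of the largest-magnitude bin within [lo, hi)."""
    if hi is None:
        hi = len(spectrum)
    if not 0 <= lo < hi <= len(spectrum):
        raise ValueError("invalid search window")
    best = lo
    for k in range(lo, hi):
        if abs(spectrum[k]) > abs(spectrum[best]):
            best = k
    return best


# Toy spectrum (hypothetical): strong low-frequency noise in bins 0-1,
# the true target echo in bin 4.
toy_spectrum = [9.0, 8.5, 0.3, 0.2, 4.0, 0.4]
unrestricted_peak = peak_search(toy_spectrum)        # lands on the noise
windowed_peak = peak_search(toy_spectrum, lo=2)      # noise bins excluded
```

In this toy case the unrestricted search returns the low-frequency noise bin, while the windowed search recovers the target bin, which is the kind of hand-tuned choice that a learned approach tries to avoid.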

Recently, deep neural networks have made considerable progress on diverse kinds of data processing, such as image, video, speech, and text [19]. The convolutional neural network (CNN) is widely believed to have a powerful learning ability for feature selection and extraction. In addition, with the advantage of long-range memory, the long short-term memory (LSTM) network is broadly used in time-series data modeling. An increasing number of hybrid architectures that combine the CNN and LSTM as an encoder–decoder pair have achieved great success in promoting long-term information learning, such as in image captioning [20], speech signal processing [21,22], sensory signal estimation [23], etc.

In this paper, we propose a novel encoder–decoder architecture for effective stockline detection. The encoder is a one-dimensional convolutional neural network (1D-CNN), and the decoder is a cascade multi-layer LSTM network. Between the encoder and decoder, a binary classifier is constructed to mitigate the negative impacts of distorted signals. The hybrid architecture has an excellent anti-noise learning ability and a long-range stockline tracking ability. Our contributions can be summarized as follows:

- We present a novel encoder–decoder architecture to improve stockline detection, which learns desired features from noisy data adaptively. It saves time and effort compared to traditional hand-crafted denoising processing.

- We present an effective stockline tracking strategy by leveraging the LSTM network to model longer-range historical signals. A large tracking capability brings better robustness to noise randomness.

- We validate the method on actual industrial BF data. In particular, the experiments are carried out on an intact multi-radar scenario rather than a single-radar scenario.

The rest of the paper is organized as follows. In Section 2, the issues of stockline detection and the necessity of the encoder–decoder architecture are described. The proposed algorithm and the loss function are explained in Section 3. We conduct the experiments on actual BF data collected from the eight-radar array system in Section 4. A conclusion is provided in Section 5 to summarize this work.

2. Issue Description and Necessity of Encoder–Decoder Architecture

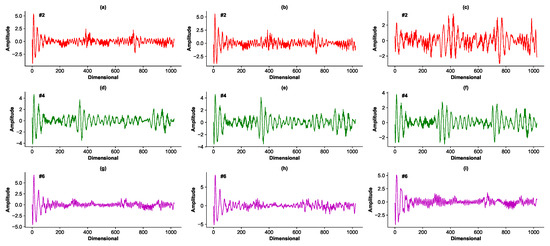

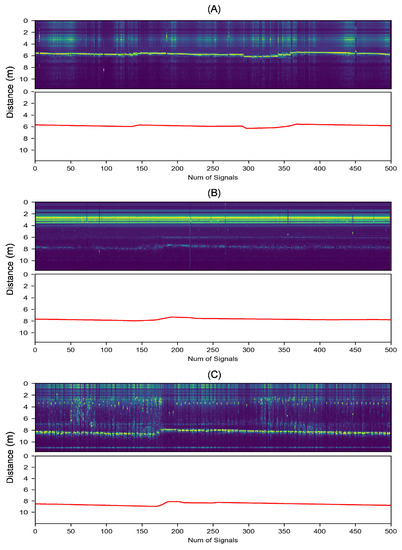

The signals are collected individually and sequentially among different radars. They are 1024-dimensional vectors quantized by a 10-bit analog-to-digital converter. Examples of the input signals are shown in Figure 2. Stocklines are fluctuating curves that reflect the changing depth of the burden surface, as shown in the radar time–frequency spectrum in Figure 3, where the vertical coordinates have been converted from beat frequency to measuring distance.

In this conversion, $d$ is the distance in m, $c = 3 \times 10^{8}$ m/s is the velocity of electromagnetic waves, $B = 1.64$ GHz represents the radar bandwidth, and $f$ is the beat frequency in Hz. More details on the FMCW radar can be found in [24].

Figure 2.

Examples of the input signals. Each signal is 1024-dimensional. (a–c) are examples from radar #2; (d–f) are examples from radar #4; (g–i) are examples from radar #6.

Figure 3.

The time–frequency spectrum and the expectation stockline of different radar signals. (A–C) are from radar #2, #4, and #6, respectively. The horizontal axis represents the number of time series signals, and the vertical axis represents the distance between the radar sensor and the measured point.

Several typical noisy conditions are depicted in Figure 3. Figure 3A shows a condition where the stockline can be detected. In contrast, the stockline in Figure 3B appears to be buried under strong low-frequency noise (the luminous yellow band above the stockline). In Figure 3C, the stockline is so discontinuous that numerous measurements are absent due to the interruption of distorted signals. In general, the data collected close to the furnace center suffer from heavier noise than those collected close to the furnace wall.

As stated in [2,7], the unknown statistical properties of the actual BF noise are always a dilemma for noise abatement. Unlike traditional methods, which perform data filtering that is heuristic, repetitive, and knowledge-based for a multi-radar system, the proposed method circumvents the noise interference in a learning fashion, significantly reducing the time and effort of hand-engineered data filtering. The front CNN is used to extract representative features from the raw signals; the middle classifier is used to separate the fraudulent distorted signals; the trailing LSTM is utilized to capture the dependencies of time-series measurements and decode the features. In this way, an effective encoder–decoder (CNN–LSTM) backbone architecture tailored to stockline detection is presented.

3. Methodology

3.1. Architecture

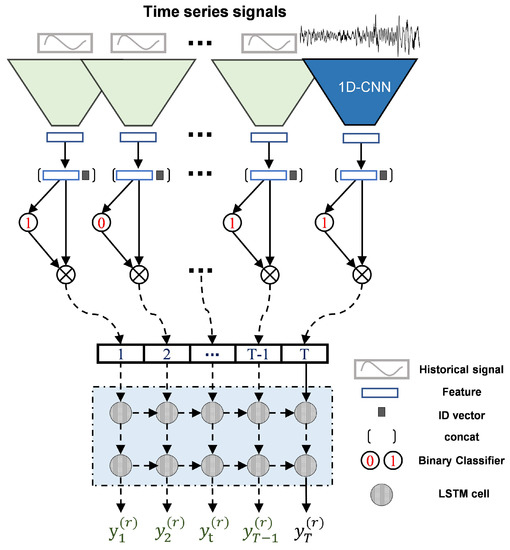

The proposed architecture is shown in Figure 4. It consists of four operations, including CNN feature extraction, radar identification (ID) embedding, distorted signal separation, and temporal feature decoding.

Figure 4.

The proposed encoder–decoder architecture unrolled in time. LSTM: long short-term memory; 1D-CNN: one-dimensional convolutional neural network.

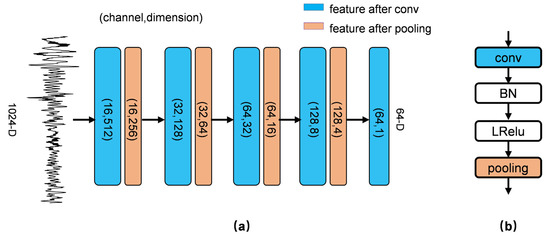

CNN Encoder. A five-layer CNN is constructed as the encoder. It receives the raw signal (1024-D) as input. The structure is shown in Figure 5a.

Figure 5.

(a) The structure of the 1D-CNN encoder. It is composed of 5 convolutional layers and 4 pooling layers. Eight-length convolutional kernels are used at the first four layers, while 4-length kernels are used at the last layer. (b) We perform batch normalization (BN) and a leaky rectified linear unit (LRelu) function after each convolutional layer.

Mathematically, let $X \in \mathbb{R}^{C \times D}$ be the input of the convolutional layer, which is C-channel and D-dimensional. Let $W \in \mathbb{R}^{K \times C \times L}$ be the weight, where K is the number of convolutional kernels and L is the kernel length. The 1D-convolutional calculation can be formulated as

$$Y = W \ast X, \quad Y \in \mathbb{R}^{K \times \lceil D/s \rceil},$$

where the symbol $\ast$ stands for the convolutional operation, s is the sliding stride, and $\lceil \cdot \rceil$ is the ceiling function.
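The shape bookkeeping of the 1D convolution above can be sketched in plain Python. This is a minimal illustration under stated assumptions: zero padding is chosen so that the output length equals the ceiling of D/s, and the sliding product is computed without kernel flipping (cross-correlation), as deep learning frameworks commonly do. The function name `conv1d_same` and the toy values are hypothetical.

```python
import math


def conv1d_same(x, w, stride=1):
    """x: C channels, each a list of length D.
    w: K kernels, each holding C lists of length L.
    Returns K lists of length ceil(D / stride), using zero padding."""
    num_channels, dim = len(x), len(x[0])
    kernel_len = len(w[0][0])
    out_len = math.ceil(dim / stride)
    # Total padding needed so that the last window fits inside the signal.
    pad_total = max((out_len - 1) * stride + kernel_len - dim, 0)
    pad_left = pad_total // 2
    padded = [
        [0.0] * pad_left + list(ch) + [0.0] * (pad_total - pad_left)
        for ch in x
    ]
    y = []
    for kernel in w:
        row = []
        for i in range(out_len):
            start = i * stride
            acc = 0.0
            for c in range(num_channels):
                for l in range(kernel_len):
                    acc += kernel[c][l] * padded[c][start + l]
            row.append(acc)
        y.append(row)
    return y


# One channel, D = 5, one kernel of length L = 3, stride s = 2.
toy_output = conv1d_same([[1, 2, 3, 4, 5]], [[[1, 1, 1]]], stride=2)
```

With D = 5 and s = 2, the output has ceil(5/2) = 3 elements per kernel.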

We apply batch normalization (BN) and nonlinearity after each convolutional layer, as shown in Figure 5b. BN is widely used in neural networks and can speed up convergence and slightly improve performance [25]. Assuming x is the input of BN, we have

$$\mathrm{BN}(x) = \gamma \, \frac{x - \mu}{\sqrt{\sigma^{2} + \epsilon}} + \beta,$$

where $\gamma$ is a scaling factor and $\beta$ is a shifting factor. It is noted that $\beta$ also acts as a bias term to the convolutional layer. $\mu$ is the mean, and $\sigma^{2}$ is the variance. $\epsilon$ is a small positive constant to prevent division by zero.
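A minimal sketch of the batch normalization step for a batch of scalar activations is given below. The default `eps=1e-5` is an assumed value chosen only for illustration, and in practice the scaling and shifting factors are learned rather than fixed.

```python
import math


def batch_norm(xs, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize a batch of scalars to zero mean and unit variance,
    then scale by gamma and shift by beta."""
    n = len(xs)
    mu = sum(xs) / n
    var = sum((x - mu) ** 2 for x in xs) / n
    return [gamma * (x - mu) / math.sqrt(var + eps) + beta for x in xs]


normalized = batch_norm([1.0, 2.0, 3.0])
shifted = batch_norm([1.0, 2.0, 3.0], gamma=2.0, beta=0.5)
```

Because the shifting factor is added after normalization, it plays the same role as a bias on the preceding convolutional layer.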

The leaky rectified linear unit (LRelu) is adopted as nonlinearity [26]. It is

$$f(x) = \begin{cases} x, & x > 0 \\ \alpha x, & x \le 0, \end{cases}$$

where $f(\cdot)$ represents the LRelu function and $\alpha$ is a constant.

Max-pooling layers with a stride of 2 are used in the encoder. After encoding by the CNN, an m-dimensional feature $F$ is extracted, where m is a tunable hyperparameter.
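The nonlinearity and pooling steps can be sketched as follows. The slope `alpha=0.2` and the pooling window of 2 are assumptions made for illustration, and the length calculation further assumes that the convolutions themselves preserve the signal length.

```python
def leaky_relu(x, alpha=0.2):
    """Pass positive inputs through; scale the rest by alpha."""
    return x if x > 0 else alpha * x


def max_pool1d(xs, size=2, stride=2):
    """Keep the maximum of each window of `size` samples."""
    return [max(xs[i:i + size]) for i in range(0, len(xs) - size + 1, stride)]


# Four stride-2 pooling layers applied to a 1024-dimensional signal.
signal = [float(i) for i in range(1024)]
for _ in range(4):
    signal = max_pool1d(signal)
pooled_length = len(signal)
```

Under these assumptions, four pooling layers reduce each channel from 1024 to 1024 / 2^4 = 64 samples.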

ID Embedding. The characteristics of noisy signal data differ from radar to radar. For multi-radar data, we embed the radar ID information into feature F to slightly improve performance. The radar ID is encoded using one-hot coding. Formally, given R radars, the corresponding ID is denoted by $I \in \mathbb{R}^{R}$, $I = [I_1, I_2, \ldots, I_R]$, where $I_i$ is the i-th component of $I$. For the r-th radar, we have

$$I_i^{(r)} = \begin{cases} 1, & i = r \\ 0, & i \ne r. \end{cases}$$

$F^{(r)}$ and $I^{(r)}$ are concatenated together in dimensionality, where the superscript $(r)$ denotes the signal derived from the r-th radar.
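The one-hot ID embedding amounts to appending an R-dimensional indicator vector to the encoded feature. A minimal sketch, with hypothetical function names and a toy two-dimensional feature:

```python
def one_hot(r, num_radars):
    """One-hot code for radar r, with radars numbered 1..num_radars."""
    if not 1 <= r <= num_radars:
        raise ValueError("radar index out of range")
    return [1.0 if i == r else 0.0 for i in range(1, num_radars + 1)]


def embed_id(feature, r, num_radars=8):
    """Concatenate the encoded feature with the one-hot radar ID."""
    return list(feature) + one_hot(r, num_radars)


embedded = embed_id([0.5, -0.1], r=3)
```

For the eight-radar array, the embedded feature is eight elements longer than the encoder output.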

Distorted Signal Separation. We construct a binary classifier using a fully connected layer to classify the normal signals and distorted signals before feeding them into the decoder. The fully connected layer maps the feature into a V-class decision space ($V = 2$ for the 2-class task). Let $W_c \in \mathbb{R}^{V \times M}$ be the weight, with $b_c \in \mathbb{R}^{V}$ as the bias. We have

$$z = W_c F + b_c, \qquad p_j = \frac{\exp(z_j)}{\sum_{v=1}^{V} \exp(z_v)},$$

where $z_j$ is the j-th element of $z$, $M$ is the dimensionality of $F$, and $p_j$ is the predicted probability of category j given by the softmax function. For the binary classification, the expected output is one one-hot label for the normal signals and the other for the distorted signals. The distorted signals carry confusing information and do not make any meaningful contribution to stockline estimation. We mask them with zeros to reduce their negative impact on the next calculation step.
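The classification and masking step can be sketched as a fully connected layer followed by softmax, with the feature zeroed whenever the distorted class wins. Treating class index 1 as "distorted" is an assumption made for this sketch, as are the function names and toy weights.

```python
import math


def softmax(z):
    """Numerically stable softmax over a list of scores."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def classify_and_mask(feature, weight, bias, distorted_class=1):
    """Return class probabilities and the (possibly zero-masked) feature."""
    z = [sum(w * f for w, f in zip(row, feature)) + b
         for row, b in zip(weight, bias)]
    p = softmax(z)
    predicted = max(range(len(p)), key=p.__getitem__)
    if predicted == distorted_class:
        return p, [0.0] * len(feature)
    return p, list(feature)


identity = [[1.0, 0.0], [0.0, 1.0]]
p_normal, kept = classify_and_mask([2.0, 0.5], identity, [0.0, 0.0])
p_distorted, masked = classify_and_mask([0.1, 3.0], identity, [0.0, 0.0])
```

Masking with zeros keeps the sequence length fed to the decoder unchanged while removing the misleading content of the distorted signal.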

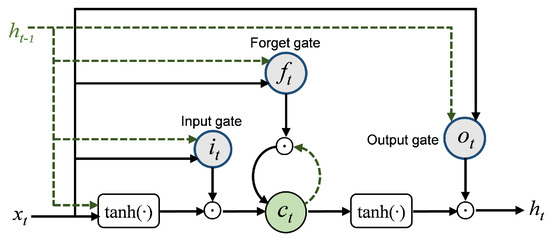

LSTM Decoder. LSTM is an improved variant of recurrent neural networks (RNNs) [27,28]. Let T be the tracking length of the stockline, including historical signals and one current signal. Let the subscript t be the index of the T sequential signals that are fed into the LSTM, so $t = 1, 2, \ldots, T$. LSTM makes use of an effective gate mechanism to control the context information flow, which comprises the input gate $i_t$, the forget gate $f_t$, and the output gate $o_t$. Its inner gate mechanism is shown in Figure 6. The cell state $c_t$ stores the information of each time step, the input gate $i_t$ determines whether to add new information to $c_t$, the forget gate $f_t$ selectively forgets the uninteresting previous information involved in $c_{t-1}$, and together they control the update of the cell state $c_t$. The output gate $o_t$ controls the emission from $c_t$ to the hidden state $h_t$. $h_t$ is mapped to the decoder output $\hat{y}_t = W_o h_t + b_o$, where $W_o$ is the weight matrix and $b_o$ is the bias.

Figure 6.

Diagram of an LSTM cell with its inner memory mechanism, including the input gate $i_t$, forget gate $f_t$, and output gate $o_t$. The dashed line represents the information from the last time step.

The output of the LSTM corresponds to one point of the stockline.
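The gate mechanism can be sketched with a single-layer LSTM in plain Python. This is a minimal illustration assuming the standard LSTM formulation without peephole connections; the parameter layout (a dictionary mapping each gate name to a weight matrix and bias acting on the concatenated input and previous hidden state) is a hypothetical choice, not the layout of the trained model.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def affine(weight, x, bias):
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weight, bias)]


def lstm_step(x, h_prev, c_prev, params):
    """One time step. params[g] = (W, b) for g in 'i', 'f', 'o', 'g'."""
    xh = list(x) + list(h_prev)
    i = [sigmoid(v) for v in affine(params["i"][0], xh, params["i"][1])]
    f = [sigmoid(v) for v in affine(params["f"][0], xh, params["f"][1])]
    o = [sigmoid(v) for v in affine(params["o"][0], xh, params["o"][1])]
    g = [math.tanh(v) for v in affine(params["g"][0], xh, params["g"][1])]
    # Forget part of the old cell state, then add the gated candidate.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # The output gate controls what the cell state emits as hidden state.
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def decode(features, params, w_out, b_out):
    """Run the LSTM over T features and map each hidden state to a scalar."""
    hidden = len(w_out)
    h, c = [0.0] * hidden, [0.0] * hidden  # zero-initialized states
    outputs = []
    for x in features:
        h, c = lstm_step(x, h, c, params)
        outputs.append(sum(w * hk for w, hk in zip(w_out, h)) + b_out)
    return outputs
```

With T input features, `decode` returns T scalar outputs, one per time step, each computed from the hidden state at that step.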

3.2. Loss Function

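Classification loss. The classifier is trained by minimizing the cross-entropy loss. It can be formulated as

$$L_{cls} = -\sum_{j=1}^{V} q_j \log p_j,$$

where $q_j$ is the expected probability of category j and $p_j$ is the probability predicted by the softmax function.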
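For a single sample, the cross-entropy between an expected one-hot label and the predicted probabilities can be computed as in the sketch below. The small constant `eps` is an assumption added here only to guard against the logarithm of zero.

```python
import math


def cross_entropy(target, predicted, eps=1e-12):
    """Cross-entropy between a target distribution and predicted probabilities."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, predicted))


uncertain = cross_entropy([1.0, 0.0], [0.5, 0.5])
confident = cross_entropy([1.0, 0.0], [0.99, 0.01])
```

A prediction that is confident and correct yields a loss near zero, while an undecided prediction on two classes yields about log 2.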

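Regression loss. The output values are regressed to the target values by minimizing their square errors, and the form of regression loss is

$$L_{reg} = \sum_{t=1}^{T} \gamma^{T-t} \left(\hat{y}_t - y_t\right)^{2},$$

where $\hat{y}_t$ and $y_t$ are the output and target values at time step t, and $\gamma$ is the discount factor.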
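One plausible reading of a discounted squared-error loss is sketched below: the squared error at each time step is weighted so that older steps count less than the current one. The exponent form and the default `gamma=0.9` are assumptions made for illustration and may differ from the exact form used in the experiments.

```python
def discounted_squared_error(predictions, targets, gamma=0.9):
    """Sum of squared errors over T steps, weighting step t by gamma**(T - t)."""
    num_steps = len(predictions)
    return sum(
        gamma ** (num_steps - t) * (p - y) ** 2
        for t, (p, y) in enumerate(zip(predictions, targets), start=1)
    )


toy_loss = discounted_squared_error([1.0, 2.0], [0.0, 0.0], gamma=0.5)
```

In the toy call, the earlier error of 1 is weighted by 0.5 and the current error of 4 by 1, which gives a total of 4.5.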

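Joint loss. It is a joint training task of classification and regression. The general way of constructing a joint loss is using a linear weighted sum of each subtask loss. The total loss can be written as

$$L = \lambda_1 L_{cls} + \lambda_2 L_{reg} + L_{2},$$

where $L_{cls}$ and $L_{reg}$ are the classification and regression losses, and $\lambda_1$ and $\lambda_2$ are the weight factors of each subtask. $L_{2}$ is the L2 norm loss.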

When the weight factors are simply preset to fixed values in our experiments, the classification loss tends to steer the training process and damage the regression performance. Thus, we use the strategy of homoscedastic uncertainty to search for suitable weight factors to balance their performance [29,30]. The joint loss is rewritten as

$$L = \frac{1}{\sigma_1^{2}} L_{cls} + \frac{1}{\sigma_2^{2}} L_{reg} + \log \sigma_1^{2} + \log \sigma_2^{2}, \tag{11}$$

where $\sigma_1$ and $\sigma_2$ are two learnable parameters that stand for the observed variances of the subtasks. The bound term $\log \sigma_1^{2} + \log \sigma_2^{2}$ discourages the variances from increasing too much. In implementation, the network is trained to predict the log variance because it is more numerically stable; for instance, letting $s_1 = \log \sigma_1^{2}$, we use $\exp(-s_1)$ to replace $1/\sigma_1^{2}$ in (11), and likewise for $\sigma_2$.
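The log-variance form of the uncertainty-weighted loss can be sketched as follows, following the formulation in [29]. The function name and the toy loss values are hypothetical, and any additional regularization term is left out of this sketch.

```python
import math


def joint_loss(l_cls, l_reg, s1, s2):
    """Uncertainty-weighted sum of two task losses.
    s1 and s2 are log-variances, i.e. s = log(sigma ** 2)."""
    return math.exp(-s1) * l_cls + s1 + math.exp(-s2) * l_reg + s2


equal_weights = joint_loss(2.0, 3.0, 0.0, 0.0)
# For a fixed task loss L, exp(-s) * L + s is minimized at s = log(L).
balanced = joint_loss(2.0, 3.0, math.log(2.0), math.log(3.0))
```

Setting the derivative of exp(-s) L + s with respect to s to zero gives s = log L, so a task with a larger loss is automatically given a smaller weight, while the additive s term prevents the variance from growing without bound.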

4. Experiment

In this section, the experiments are carried out and the experimental setups are provided in detail. The experimental results consist of four parts: performance, scientificity of the architecture, the effect of the tracking strategy, and the running time.

4.1. Experiment Setup

Dataset. The signals are collected from the industrial BF eight-radar array system, as listed in Table 1. The data are divided into a training set, a validation set, and a testing set in a ratio of 3:1:1. The training set is used to train the model, the validation set is used to validate the performance and tune the hyperparameters, and the testing set is used to test its performance. The results are from the testing set by default, unless otherwise stated. The radar signals are normalized to a fixed range in order to eliminate the effect of the amplitude.

Table 1.

The number of normal signals and distorted signals.

Hyperparameters. We adopt an encoder with 5 convolutional layers and a decoder with 3 hidden layers, 72 time steps (i.e., $T = 72$), and 128 hidden nodes. The convolutional layers and fully connected layers are initialized with zero biases and Gaussian-distributed weights. The LSTM cells are zero-initialized. We use the Adam solver [31], which is an improved stochastic gradient descent algorithm, to optimize the model. A dynamic learning rate is used: it starts at 0.001 and decays by a factor of 0.98 every 100 iterations. The model is trained for 20 epochs with a batch size of 120. We apply dropout to the LSTM as regularization [28,32]. When the training is completed, the adaptive parameters of the joint loss have leveled off.
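The learning rate schedule described above can be sketched as a step-wise exponential decay. Whether the decay is applied in discrete steps (as assumed here) or continuously is not stated, so the staircase behavior is an assumption of this sketch.

```python
def learning_rate(step, base=0.001, decay=0.98, every=100):
    """Learning rate after `step` iterations with staircase exponential decay."""
    return base * decay ** (step // every)


initial_rate = learning_rate(0)
rate_after_1000 = learning_rate(1000)
```

Under this assumption the rate stays at 0.001 for the first 100 iterations and has been multiplied by 0.98 ten times after 1000 iterations.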

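Evaluation. We use mean absolute error (MAE) and root mean square error (RMSE) to evaluate the detection performance. Formally, MAE is defined as

$$\mathrm{MAE} = \frac{1}{N} \sum_{n=1}^{N} \left| \hat{y}_n - y_n \right|.$$

The RMSE is defined as

$$\mathrm{RMSE} = \sqrt{\frac{1}{N} \sum_{n=1}^{N} \left( \hat{y}_n - y_n \right)^{2}},$$

where N is the number of evaluated samples, and $\hat{y}_n$ and $y_n$ are the estimated and expected stockline values of the n-th sample.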
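Both error measures are straightforward to compute; a minimal sketch with hypothetical toy values follows.

```python
import math


def mae(predicted, expected):
    """Mean absolute error."""
    return sum(abs(p - e) for p, e in zip(predicted, expected)) / len(predicted)


def rmse(predicted, expected):
    """Root mean square error."""
    squared = sum((p - e) ** 2 for p, e in zip(predicted, expected))
    return math.sqrt(squared / len(predicted))


toy_mae = mae([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
toy_rmse = rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
```

Because the errors are squared before averaging, RMSE penalizes a single large outlier more heavily than MAE does, which is why the two are reported together.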

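We use accuracy, precision, recall, and F1 score to evaluate the classification performance. Accuracy indicates the proportion of correctly classified samples among all samples. Precision indicates the proportion of true positive samples among true positive samples (TPs) and false positive samples (FPs), that is,

$$\mathrm{Precision} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP}}.$$

Recall indicates the proportion of true positive samples among true positive samples and false negative samples (FNs).

$$\mathrm{Recall} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}}.$$

The F1 score is the harmonic mean of precision and recall.

$$F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}.$$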
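The four classification indicators can be computed from the confusion counts, as in this minimal sketch. The counts in the usage line are hypothetical and are not taken from the experiments.

```python
def classification_scores(tp, fp, fn, tn):
    """Return accuracy, precision, recall, and F1 from confusion counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f1


accuracy, precision, recall, f1 = classification_scores(tp=8, fp=2, fn=2, tn=8)
```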

4.2. Results

Different experiments were conducted as shown below. The proposed method was implemented on the TensorFlow framework [33].

Performance. The traditional peak searching method with band-pass FIR filters and Kalman filters was implemented for comparison. The settings of the FIR filters are shown in Table 2, and the Kalman algorithm is introduced in [34]. In addition, a single CNN model and a single LSTM model were constructed as further baselines, and their structures were identical to the corresponding parts of the hybrid model.

Table 2.

Configuration of the FIR filters with the Blackman–Harris windows.

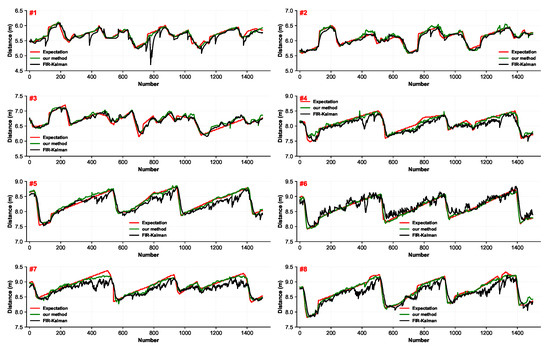

The estimated stocklines of the proposed method and the FIR-Kalman methods are displayed in Figure 7. The regression curves of the proposed method exhibited less fluctuation around the expectation curves. In contrast, the filter-based approaches tended to be influenced by interferences.

Figure 7.

The estimated stocklines of the eight-radar array system measured in the same period.

As shown in Table 3 and Table 4, with an MAE of 0.0432 and an RMSE of 0.0581, the presented architecture is capable of learning features from noisy data and suppressing noise interference. It can be observed that the proposed method significantly outperforms traditional experience-dependent denoising methods. According to the tables, the traditional method without denoising processing is extremely sensitive to heavy noise and achieves a poor performance. In contrast, the proposed method shows itself to be more efficient and robust.

Table 3.

Comparison of the proposed method with different methods on the testing set (mean absolute error, MAE). PS: peak searching.

Table 4.

Comparison of the proposed method with different methods on testing set (root mean square error, RMSE).

The classifier performance is shown in Table 5. With scores of 95.90%, 96.00%, 99.48%, and 97.68% for the accuracy, precision, recall, and F1 score indicators, respectively, it achieves a decent classification result that can classify the distorted signals well.

Table 5.

Classification performance on testing set.

Scientificity of architecture. A series of experiments were carried out to verify the scientificity of such an architecture. First is the validity of such a CNN–LSTM mixed architecture. Two simpler baseline architectures are provided, i.e., a single CNN architecture and a single LSTM architecture, as shown earlier in Table 3 and Table 4. The detached architectures both show worse performances compared to the proposed mixed architecture due to losing the advantages of each. For the simple CNN architecture, it shows a weak performance and is even worse than traditional denoising methods. For the simple LSTM architecture, we observe that LSTM gets into trouble learning the feature from noisy signals without the help of the CNN encoder.

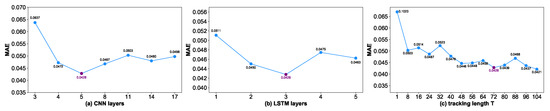

Secondly, we conduct experiments to examine the complexity of the encoder and decoder. The impacts of picking a different number of CNN layers and a different number of LSTM layers are shown in Figure 8a,b, respectively. Based on the experiments, we choose a 5-layer CNN and a 3-layer LSTM as the encoder and decoder for optimal performance. The experimental results also indicate that a deep architecture is not always necessary.

Figure 8.

Performance on the validation set. (a) Performance of selecting different encoder layers. (b) Performance of selecting different decoder layers. (c) Performance of selecting different tracking lengths T.

Thirdly, the stockline tracking length T is examined. The experiments are shown in Figure 8c; as T increases, errors decrease in a general trend. Due to the limited memory of the LSTM network, it is found that increasing the tracking length beyond a certain point brings only a marginal improvement in performance but conspicuously increases computational time, so a moderate $T = 72$ is used.

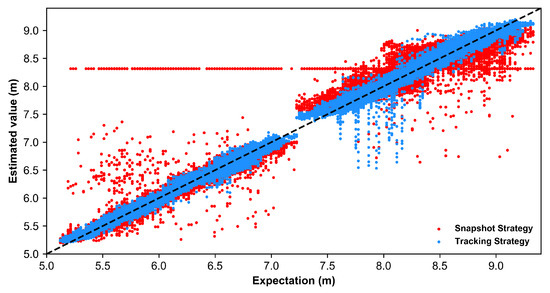

Effect of tracking strategy. To further validate the effect of the stockline tracking ability provided by the LSTM, a snapshot-based model is designed by setting the time step $T = 1$, implying that the network has no visible historical signal. We compare its performance with the tracking-based model (i.e., $T = 72$) under the same training settings. The results are shown in Figure 9, where the black dotted line stands for the expectation, and the model whose distribution is closer to the black line shows a better performance. The snapshot-based strategy, with an MAE of 0.1019 and an RMSE of 0.1854, is more sensitive to the interruptive noises and is even worse than the FIR-Kalman approach. As shown earlier in Figure 8c, a longer tracking capability has a positive effect on performance. The proposed method takes a longer range of the previous stockline into consideration, whereas the Kalman tracking approach only makes use of one previous moment. An effective tracking strategy brings a better tolerance to noise randomness and disturbances.

Figure 9.

The distribution of the estimated values by the snapshot-based model and tracking-based model. As can be seen in the figure, a group of fixed false estimated points (red) occurred at ∼8.3 m, perhaps caused by a fixed noisy target within the blast furnace (BF).
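The difference between the snapshot-based and tracking-based settings comes down to how many past signals each input window contains. The sketch below builds such windows from a sequence of encoded signals; the function name and the toy sequence are hypothetical.

```python
def tracking_windows(signals, tracking_length):
    """Return one window per time step, each holding the current signal
    and the (tracking_length - 1) signals that precede it."""
    t = tracking_length
    return [signals[k - t + 1:k + 1] for k in range(t - 1, len(signals))]


sequence = [0, 1, 2, 3]
snapshot = tracking_windows(sequence, 1)   # no visible history
tracking = tracking_windows(sequence, 3)   # two past signals per window
```

With a tracking length of 1, each window holds only the current signal, so a distorted or noisy measurement cannot be compensated by its neighbors.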

Running time. In addition, the proposed architecture is lightweight: its average computational time is measured in milliseconds, which fully meets the real-time requirements of the industrial process.

5. Conclusions

In this paper, we have successfully developed a hybrid CNN–LSTM architecture for the challenging stockline detection problem. Its effectiveness has been demonstrated by experiments on BF eight-radar array data. The success of the proposed method is attributable to three reasons: its effective learning ability, its ability to classify distorted signals, and its excellent stockline tracking ability.

In the industrial process, most of the monitoring data or variables have close time-series dependencies. The general contribution of this work is that we improve the long-range history learning by leveraging the novel LSTM network. It is a very promising direction to achieve more accurate and robust industrial control, if we can make the most of the context relationship of successive measurements.

In future work, we will dedicate our efforts to the image reconstruction of BF burden surfaces. The inner transparency of the neural network methodology will be investigated, which will allow us to have an in-depth qualitative or quantitative analysis of noise influences.

Author Contributions

Methodology, X.L.; Project administration, J.L.; Resources, X.C.; Validation, M.Z.; Writing—review & editing, Y.L.

Funding

This work was supported by the Natural Science Foundation of Beijing Municipality (No. 4182038), the National Natural Science Foundation of China (No. 61671054), the Fundamental Research Funds for the China Central Universities of USTB (FRF-BR-17-004A, FRF-GF-17-B49), and the Open Project Program of the National Laboratory of Pattern Recognition (No. 201800027).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Guo, Z.; Fu, Z. Current situation of energy consumption and measures taken for energy saving in the iron and steel industry in China. Energy 2010, 35, 4356–4360.

- Zhou, P.; Lv, Y.; Wang, H.; Chai, T. Data-Driven Robust RVFLNs Modeling of a Blast Furnace Iron-Making Process Using Cauchy Distribution Weighted M-Estimation. IEEE Trans. Ind. Electron. 2017, 64, 7141–7151.

- Li, X.L.; Liu, D.X.; Jia, C.; Chen, X.Z. Multi-model control of blast furnace burden surface based on fuzzy SVM. Neurocomputing 2015, 148, 209–215.

- Xu, D.; Li, Z.; Chen, X.; Wang, Z.; Wu, J. A dielectric-filled waveguide antenna element for 3D imaging radar in high temperature and excessive dust conditions. Sensors 2016, 16, 1339.

- Zankl, D.; Schuster, S.; Feger, R.; Stelzer, A.; Scheiblhofer, S.; Schmid, C.M.; Ossberger, G.; Stegfellner, L.; Lengauer, G.; Feilmayr, C.; et al. BLASTDAR—A large radar sensor array system for blast furnace burden surface imaging. IEEE Sens. J. 2015, 15, 5893–5909.

- Zhou, B.; Ye, H.; Zhang, H.; Li, M. Process monitoring of iron-making process in a blast furnace with PCA-based methods. Control. Eng. Pract. 2016, 47, 1–14.

- Gao, C.; Jian, L.; Luo, S. Modeling of the thermal state change of blast furnace hearth with support vector machines. IEEE Trans. Ind. Electron. 2012, 59, 1134–1145.

- Jian, L.; Gao, C. Binary coding SVMs for the multiclass problem of blast furnace system. IEEE Trans. Ind. Electron. 2013, 60, 3846–3856.

- Pettersson, F.; Chakraborti, N.; Saxén, H. A genetic algorithms based multi-objective neural net applied to noisy blast furnace data. Appl. Soft Comput. 2007, 7, 387–397.

- Li, Y.; Zhang, S.; Yin, Y.; Xiao, W.; Zhang, J. A novel online sequential extreme learning machine for gas utilization ratio prediction in blast furnaces. Sensors 2017, 17, 1847.

- Liang, D.; Yu, Y.; Bai, C.; Qiu, G.; Zhang, S. Effect of burden material size on blast furnace stockline profile of bell-less blast furnace. Ironmak. Steelmak. 2009, 36, 217–221.

- Qingwen, H.; Xianzhong, C.; Ping, C. Radar data processing of blast furnace stock-line based on spatio-temporal data association. In Proceedings of the 2015 34th Chinese Control Conference (CCC), Hangzhou, China, 28–30 July 2015; pp. 4604–4609.

- Chen, X.; Wei, J.; Xu, D.; Hou, Q.; Bai, Z. 3-Dimension imaging system of burden surface with 6-radars array in a blast furnace. ISIJ Int. 2012, 52, 2048–2054.

- Chen, A.; Huang, J.; Qiu, J.; Ke, Y.; Zheng, L.; Chen, X. Signal processing for a FMCW material level measurement system. In Proceedings of the 2016 IEEE 13th International Conference on Signal Processing (ICSP), Chengdu, China, 6–10 November 2016; pp. 244–247.

- Malmberg, D.; Hahlin, P.; Nilsson, E. Microwave technology in steel and metal industry, an overview. ISIJ Int. 2007, 47, 533–538.

- Gomes, F.S.; Côco, K.F.; Salles, J.L.F. Multistep forecasting models of the liquid level in a blast furnace hearth. IEEE Trans. Autom. Sci. Eng. 2017, 14, 1286–1296.

- Brännbacka, J.; Saxen, H. Novel model for estimation of liquid levels in the blast furnace hearth. Chem. Eng. Sci. 2004, 59, 3423–3432.

- Wei, J.; Chen, X.; Kelly, J.; Cui, Y. Blast furnace stockline measurement using radar. Ironmak. Steelmak. 2015, 42, 533–541.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436.

- Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhudinov, R.; Zemel, R.; Bengio, Y. Show, attend and tell: Neural image caption generation with visual attention. In Proceedings of the International Conference on Machine Learning (ICML), Lille, France, 6–11 July 2015; pp. 2048–2057.

- Sainath, T.N.; Vinyals, O.; Senior, A.; Sak, H. Convolutional, long short-term memory, fully connected deep neural networks. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, Australia, 19–24 April 2015; pp. 4580–4584.

- Trigeorgis, G.; Ringeval, F.; Brueckner, R.; Marchi, E.; Nicolaou, M.A.; Schuller, B.; Zafeiriou, S. Adieu features? end-to-end speech emotion recognition using a deep convolutional recurrent network. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 5200–5204.

- Chen, Z.; Zhao, R.; Zhu, Q.; Masood, M.K.; Soh, Y.C.; Mao, K. Building occupancy estimation with environmental sensors via CDBLSTM. IEEE Trans. Ind. Electron. 2017, 64, 9549–9559.

- Stove, A.G. Linear FMCW radar techniques. In IEE Proceedings F (Radar and Signal Processing); IET: London, UK, 1992; Volume 139, pp. 343–350.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the International Conference on Machine Learning (ICML), Lille, France, 6–11 July 2015; pp. 448–456.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Zaremba, W.; Sutskever, I.; Vinyals, O. Recurrent neural network regularization. arXiv 2014, arXiv:1409.2329.

- Kendall, A.; Cipolla, R. Geometric loss functions for camera pose regression with deep learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5974–5983.

- Kendall, A.; Gal, Y. What uncertainties do we need in bayesian deep learning for computer vision? In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2017; pp. 5574–5584.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A System for Large-Scale Machine Learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI’16), Savannah, GA, USA, 2–4 November 2016; Volume 16, pp. 265–283.

- Bishop, G.; Welch, G. An introduction to the Kalman filter. Proc. Siggraph Course 2001, 8, 59.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).