1. Introduction

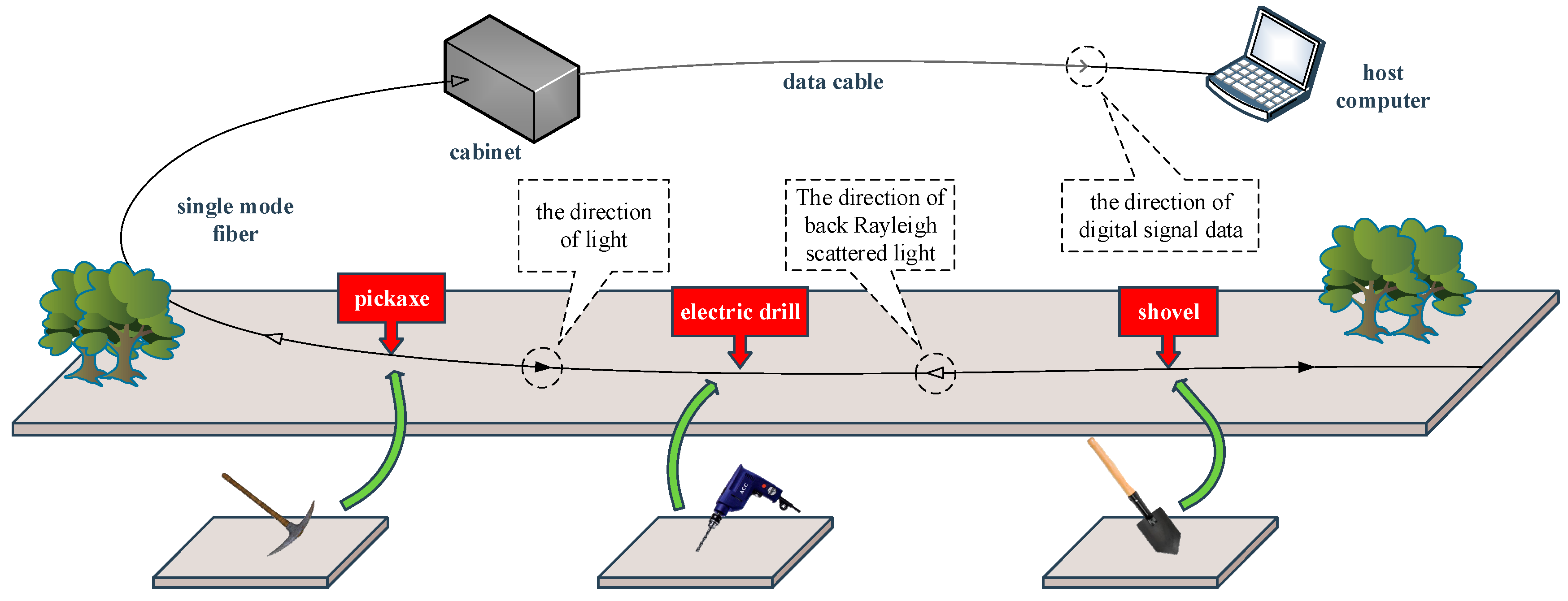

At present, inflammable and explosive resources such as oil and natural gas are mainly transported through pipelines, which is convenient and fast. Meanwhile, problems such as resource waste and environmental pollution caused by pipeline leakage also exist. Therefore, real-time monitoring for pipelines’ status is essential [

1,

2,

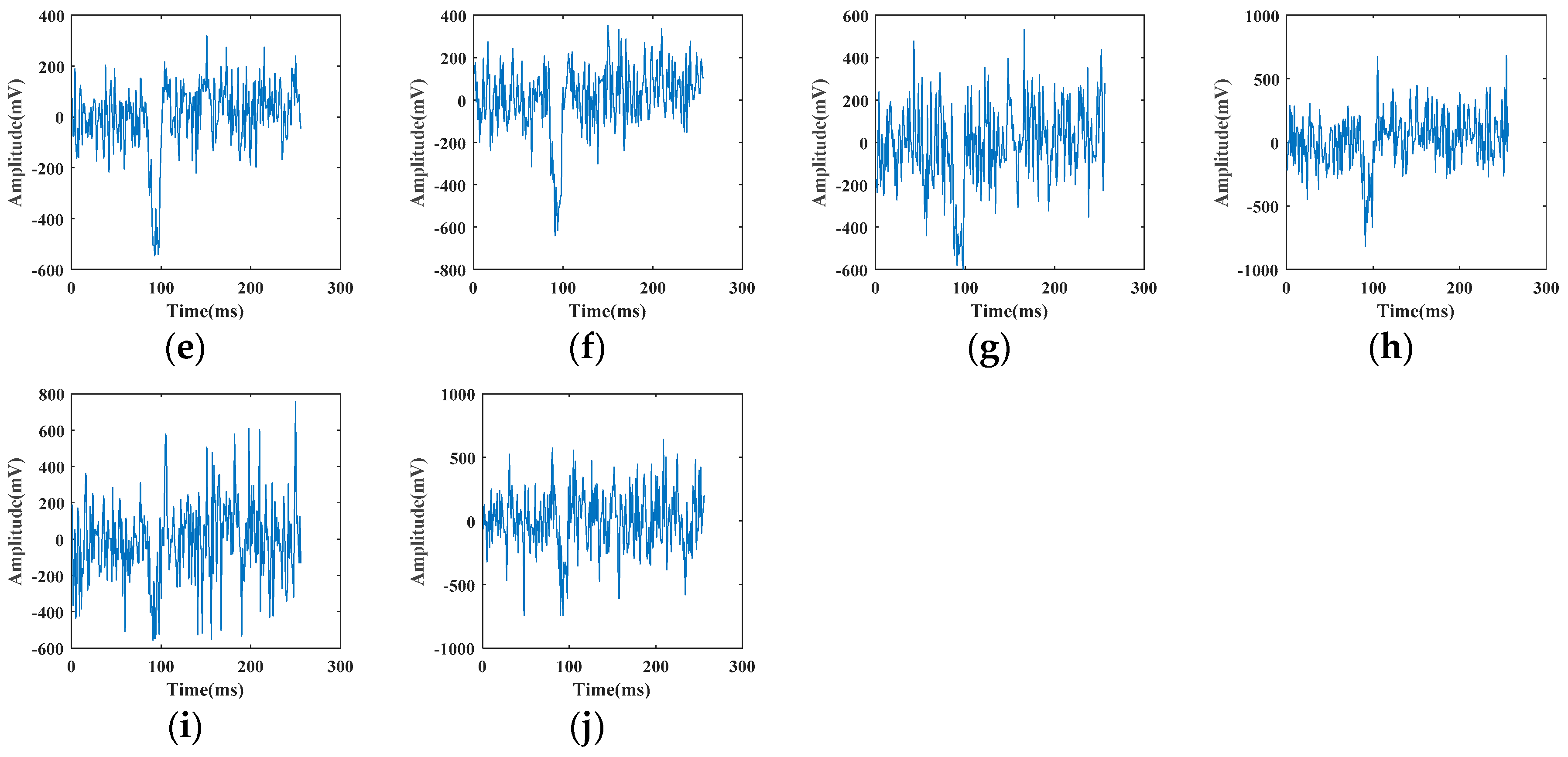

3]. In the process of pipeline monitoring, the research of OFPS mainly focused on monitoring and recognition of vibration signals [

4,

5]. In the field of recognition of optical fiber vibration signal, there are two types of institution at present, reflection and interference. The Mach–Zehnder (MZ) method is an interference method, which can be used to detect sensitive characteristics of vibration signal. In addition, the phase-sensitive optical time-domain reflectometer (Φ-OTDR) is a reflection method, which can be used to detect concurrent vibration signals. Compared with other monitoring methods, optical fiber pre-warning systems (OFPS) based on Φ-OTDR are a very common method of long-distance detection and security protection due to their advantage in design (anti-interference, small additional damage, easy upgrade of back-end, low energy consumption in the field and suitable for long-distance defense) [

6].

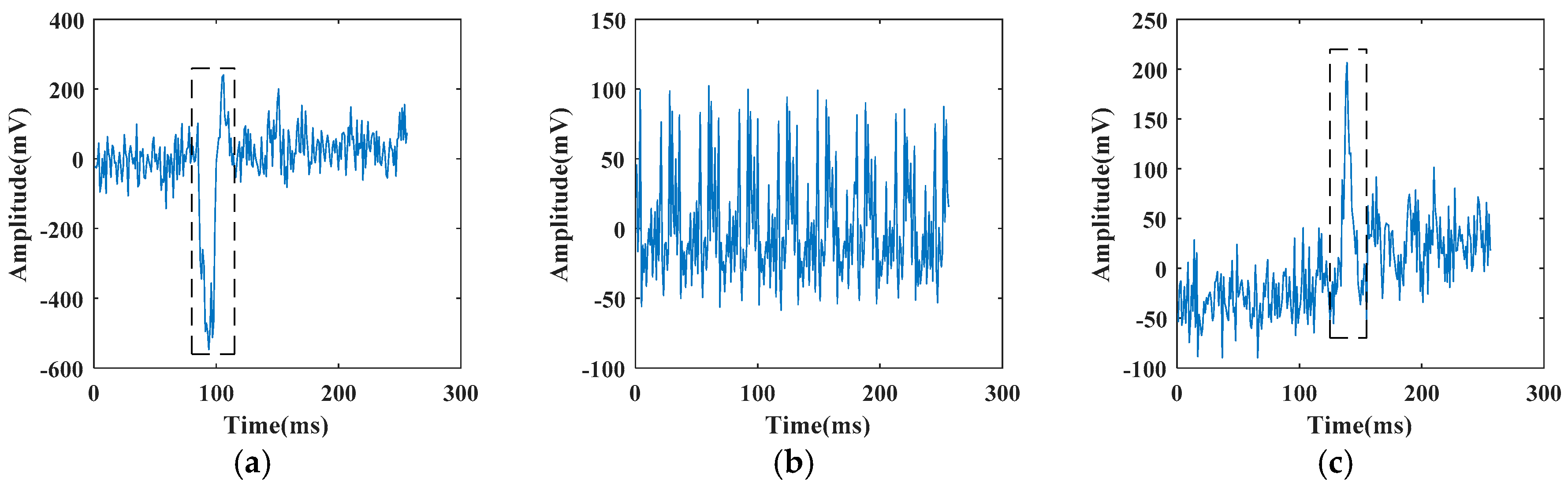

When we choose OFPS based on Φ-OTDR to recognize fiber vibration signals, improving the recognition rate of different vibration signals and of noisy vibration signals is a challenging task. On the one hand, some scholars have approached the problem through traditional feature extraction [7,8,9,10,11,12,13,14]; on the other hand, some scholars have approached it by incorporating neural networks [15,16]. Both lines of work have achieved certain results.

Recognition methods for vibration signals based on neural networks have been studied by many scholars, because they are simple to operate and do not require much preprocessing of the vibration signals. At the same time, neural-network-based vibration signal recognition remains a challenging research field. With the improvement of computing power, neural networks and artificial intelligence will be widely used in the field of optical fiber vibration signal recognition.

The main contribution of this paper is to combine the original SCN with the bootstrap sampling of Bagging and with AdaBoost, and to propose three improved methods, Bootstrap-SCN, AdaBoost-SCN, and AdaBoost-Bootstrap-SCN, for noisy fiber vibration signal recognition in the case of a small sample set. The robustness of the proposed methods is tested by using noisy vibration signals. In the experimental part, we first pretreat the collected signals and superimpose various types of noise. Then, we send the noisy signal sets to five methods (including the original SCN and the random vector functional link (RVFL) network) for training. Finally, we compare the results of the five methods.

The article is divided into the following parts: the second section describes the details of related works; the third section briefly introduces the original SCN, the proposed methods, and the methods for generating various types of noisy signals; the fourth section presents the optical fiber pre-warning system, the original data of fiber vibration signals, and the signal pretreating process; the fifth section shows the experiments and analysis; the sixth section discusses the experimental results; and the last section concludes this study.

2. Related Work

Scholars have done in-depth studies on the recognition technology of optical fiber signals, including instrument improvement, optical fiber signal processing, and classification. Traditional analysis methods are based on the time and frequency domains [7,8,9,10]. Bi et al. [11] and Qiu et al. [12] applied the constant false alarm rate (CFAR) technique, commonly used in radar signal detection, to optical fiber signal processing, and proposed a cell-averaging CFAR (CA-CFAR) algorithm with low computational complexity and strong adaptability. However, the CA-CFAR algorithm is applicable only when the input signals of the detector are independent and identically distributed (IID) Gaussian random variables; otherwise, the ideal effect cannot be achieved. Sun et al. [13] proposed a feature extraction method combining the wavelet energy spectrum with wavelet information entropy, and proved its feasibility in experiments. Yen et al. [14] used the wavelet packet transform to extract time domain information from vibration signals in order to recognize them, and the experimental results showed that this method could effectively distinguish three kinds of intrusion signals. At the same time, some scholars used neural networks to recognize fiber vibration signals. King et al. [15] designed an optical fiber water sensor system by using signal processing methods, a neural network, and the fast Fourier transform (FFT). Makarenko [16] proposed a method for establishing a signal recognition deep learning algorithm in distributed optical fiber monitoring and long-period safety systems. It can recognize seven classes of signals and receives time–space data frames as input. However, if a small sample set is used to train a deep learning network, the network will not be trained adequately, which affects the recognition effect.

It is also necessary to recognize fiber vibration signals in the case of a small sample set, because not only is the acquisition of a large number of fiber vibration signals difficult, but the sample calibration work is also heavy. Once there is a problem in the calibration of samples, it directly affects the training of the classifier. If a deep network is trained on a small sample set, the network cannot be trained effectively. In the field of small networks, Wang et al. [17] proposed an improved classification algorithm that considers multiscale wavelet packet Shannon entropy and uses radial basis function neural networks for classification, with an accuracy rate of 85%. In addition, some new small networks have been created in recent years. Igelnik et al. [18] proposed a three-layer network, the random vector functional link (RVFL) network. Its randomly generated hidden layer node parameters not only improve the generalization performance of the network, but also save a lot of iterative operations. Based on the RVFL network, Wang et al. [19] proposed the stochastic configuration network (SCN), which changes the way hidden layer nodes are generated and makes the size setting of the network more flexible.

At the same time, since OFPS usually works in a complex environment and is susceptible to various disturbances, the acquired fiber vibration signals contain various noises. During the propagation of backscattered light, the remaining types of scattered light generated in the fiber may become the optical noise of the system. Photoelectric detectors based on an avalanche photodiode (APD) module generate random noise during the conversion and amplification process. All these noises overwhelm the fiber signals of the system [20]. Sun et al. [21] used a theoretical model to verify more intuitively the influence of Rayleigh optical noise on the accuracy of system measurements. It can be seen that various types of noise seriously affect the recognition of fiber vibration signals. Therefore, improving the recognition accuracy of fiber vibration signals in a noisy environment is an important research field.

For the recognition of noisy fiber signals, a common method is to use a synchronization overlapping average algorithm to suppress the white noise of the system. Increasing the number of accumulations can effectively raise the signal-to-noise ratio (SNR) and improve the performance of the system [22]. Since the structure of self-noise is similar to that of random noise, and the median filter is an effective method for removing random noise, Qin et al. [23] removed the noise from signals in the three-dimensional time domain with a median filter. In order to eliminate the influence of coherent Rayleigh noise in coherent optical time domain reflectometry (COTDR) systems, Liang et al. [24] proposed a noise reduction method based on timed frequency hopping. This method could effectively suppress the noise in a COTDR system and improve the long-distance sensing performance of the system. In order to reduce the influence of background noise on vibration detection, Ibrahim Ölçer et al. [25] proposed an adaptive time-matching filtering method, which significantly improved the SNR of independent adaptive processing. However, long-distance monitoring requires optical amplification to increase the distance of laser irradiation, which degrades the effect of this method.
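To illustrate why a larger number of accumulations raises the SNR, the following sketch averages repeated noisy copies of a synthetic trace. It is only an illustration: the sine test signal, the noise level, and the function name `averaged_snr_db` are hypothetical and do not come from the cited works. For independent zero-mean noise, averaging N traces is expected to improve the SNR by roughly 10·log10(N) dB.

```python
import numpy as np

def averaged_snr_db(num_traces, noise_std=1.0, n_samples=2048, seed=0):
    """SNR in dB of the mean of `num_traces` noisy copies of one test signal."""
    rng = np.random.default_rng(seed)
    t = np.arange(n_samples)
    signal = np.sin(2 * np.pi * t / 64.0)            # hypothetical clean trace
    noise = rng.normal(0.0, noise_std, size=(num_traces, n_samples))
    averaged = (signal + noise).mean(axis=0)          # synchronous accumulation
    residual = averaged - signal                      # noise left after averaging
    return 10.0 * np.log10(np.mean(signal**2) / np.mean(residual**2))

# Gain from averaging 100 traces instead of 1 (theory: about 20 dB).
gain_db = averaged_snr_db(100) - averaged_snr_db(1)
```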

In the recognition of noisy fiber vibration signals with a small sample set, the original SCN still suffers from low classification accuracy, and the recognition rate is sometimes even lower than 50%. This shows that the trained SCN is not robust enough in this case. Combining models with ensemble learning methods is a common way to address low robustness [26,27]. In the field of ensemble learning, Boosting and Bagging are two representative types of methods. Fernandes et al. [28] used AdaBoost and random weight neural networks (RWNN) in the spectral data processing of grape stems and obtained better classification results than other methods. Asim et al. [29] proposed an earthquake prediction system that combines earthquake prediction indicators with genetic programming and AdaBoost, and obtained better results in earthquake prediction. Likewise, Okujeni et al. [30] used a small share of training samples and a low number of Bagging iterations to generate accurate urban fraction maps. Akila et al. [31] combined the Bagging method with the Risk Induced Bayesian Inference method to form a cost-sensitive weighted voting combiner, which reduced cost by a factor of 1.04–1.5 in an experiment on Brazilian bank data. Wing et al. [32] proposed a bagging-boosting-based semi-supervised multi-hashing method with query-adaptive re-ranking, which outperformed state-of-the-art hashing methods with statistical significance.

3. Theoretical Explanation

3.1. Original SCN

SCNs are a class of randomized neural networks proposed by Wang and Li in [

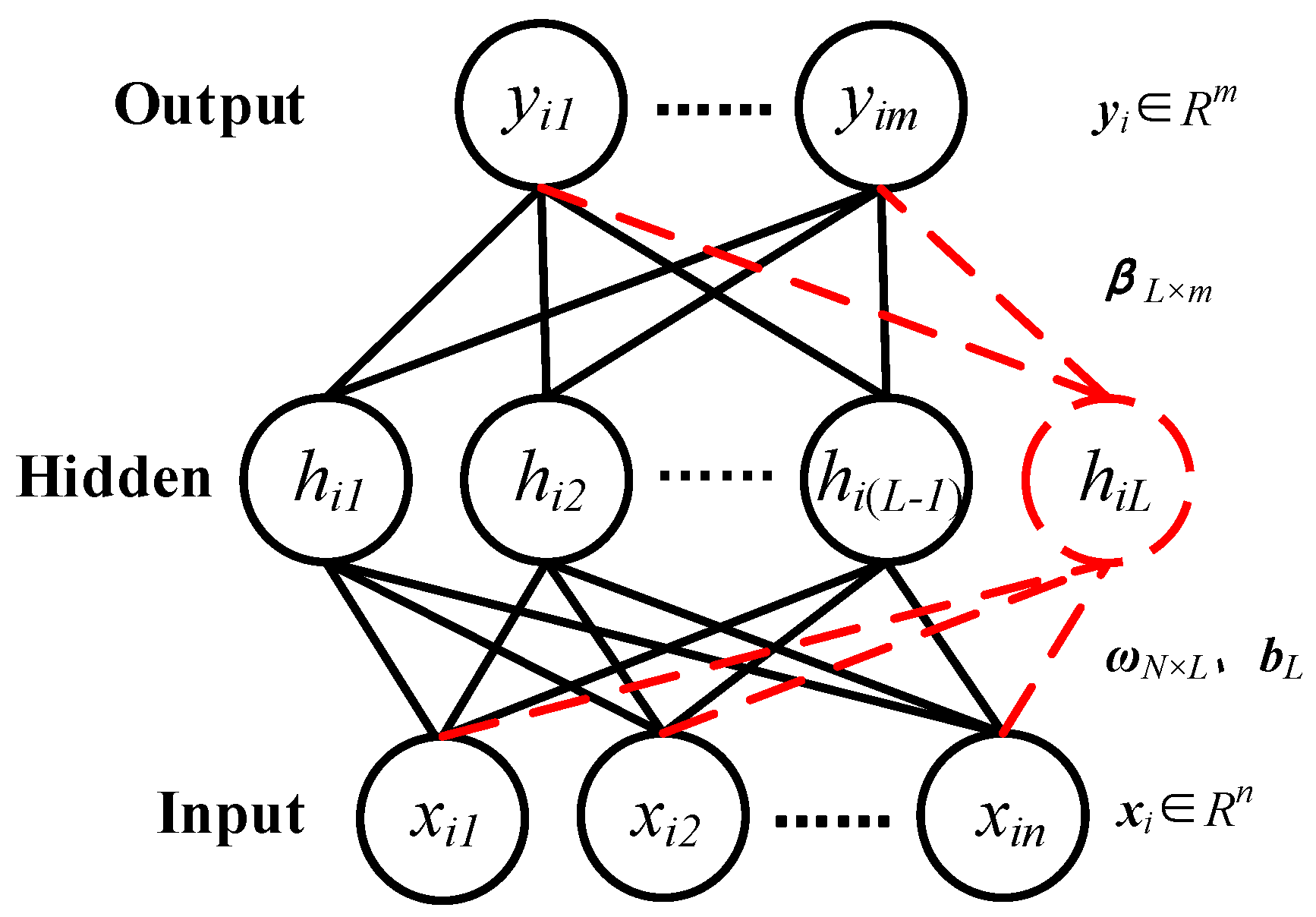

18], which originally contributed to the development of randomized learning techniques. Its structure is shown in

Figure 1.

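From Figure 1, we can see that the input is $X = \{x_1, x_2, \ldots, x_N\}$, $x_i \in \mathbb{R}^n$, and the output is $T = \{t_1, t_2, \ldots, t_N\}$, $t_i \in \mathbb{R}^m$, where $N$ is the number of training samples, $n$ is the dimension of a sample's input, and $m$ is the dimension of a sample's output. Meanwhile, we define the input weight matrix as $W = [w_1, w_2, \ldots, w_L]$ and the bias vector as $b = [b_1, b_2, \ldots, b_L]$, where $L$ is the number of hidden layer nodes. Then, we calculate the output of the hidden layer nodes according to the input of the network, the input weight matrix, and the bias vector. Herein, the output of the $L$th hidden layer node is expressed as

$$h_L(X) = \left[ g(w_L^{T} x_1 + b_L), \ldots, g(w_L^{T} x_N + b_L) \right]^{T},$$

where $g(\cdot)$ is the activation function of the hidden layer, for example the sigmoid function

$$g(z) = \frac{1}{1 + e^{-z}}.$$

The output weight matrix is $\beta = [\beta_1, \beta_2, \ldots, \beta_L]^{T}$, $\beta_j \in \mathbb{R}^m$. Combining the output of the hidden layer $H_L = [h_1, h_2, \ldots, h_L]$ with the output weight matrix $\beta$, we get the corresponding output $Y = H_L \beta$. Meanwhile, we can calculate the current residual matrix $e_L$ of the network according to the output $T$ corresponding to the input $X$:

$$e_L = T - H_L \beta = [e_{L,1}, e_{L,2}, \ldots, e_{L,m}],$$

where $e_{L,q} \in \mathbb{R}^N$ is the residual of the $q$th output dimension.

When adding the $L$th hidden layer node, the constraint condition is expressed as

$$\xi_{L,q} = \frac{\left( e_{L-1,q}^{T} h_L \right)^2}{h_L^{T} h_L} - (1 - r - \mu_L)\, e_{L-1,q}^{T} e_{L-1,q} \geq 0, \tag{6}$$

where $q = 1, 2, \ldots, m$, $r$ is a sequence greater than 0 and less than 1 that varies in the process of finding parameters, $\mu_L \to 0$ as $L \to \infty$, and $\mu_L \leq 1 - r$.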

The training process of SCN is as follows. When adding the $L$th hidden layer node, $w_L$ and $b_L$ are randomly generated within a certain range, $[-\lambda, \lambda]^n$ and $[-\lambda, \lambda]$, and made up into a candidate set of $u$ pairs $(w_L, b_L)$, where $u$ is the number of candidate nodes. Then, the candidate that satisfies (6) and minimizes the training error is selected. If no candidate in the set satisfies the constraint condition, the range is appropriately expanded and the selection is made again, but the extent of this expansion is limited. The network stops training when it meets one of the following three conditions: (1) the number of hidden layer nodes reaches the preset maximum value $L_{max}$; (2) the training error is lower than the preset error value $\epsilon$; (3) no pair $(w_L, b_L)$ that satisfies the condition can be found within the maximum range.

It can be seen from the above description that the difference between SCN and other neural networks lies in its unique training mode. It gives up the traditional iterative method of updating network parameters and instead adds hidden layer nodes under constraints, which makes the scale of the network flexible and controllable and greatly reduces the consumption of computing resources.
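The incremental training described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the sigmoid activation, the candidate ranges in `scales`, the values in `r_values`, the admission test (the inequality commonly given for SCN, with the small sequence term omitted), and the choice of the admissible candidate with the largest residual reduction are all assumptions, and the function names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_scn(X, T, max_nodes=50, n_candidates=100, tol=1e-2,
              scales=(0.5, 1.0, 5.0, 10.0), r_values=(0.9, 0.99, 0.999), seed=0):
    """Add hidden nodes one at a time; output weights come from least squares."""
    rng = np.random.default_rng(seed)
    N, n = X.shape
    W, b = np.empty((n, 0)), np.empty(0)
    H = np.empty((N, 0))
    beta = np.zeros((0, T.shape[1]))
    E = T.copy()                                  # residual with zero hidden nodes
    while H.shape[1] < max_nodes and np.sqrt(np.mean(E**2)) > tol:
        best = None
        for lam in scales:                        # expand the range only if needed
            for r in r_values:
                Wc = rng.uniform(-lam, lam, size=(n, n_candidates))
                bc = rng.uniform(-lam, lam, size=n_candidates)
                Hc = sigmoid(X @ Wc + bc)         # N x u candidate outputs
                # xi[q, k] = <e_q, h_k>^2 / <h_k, h_k> - (1 - r) * ||e_q||^2
                proj = (E.T @ Hc) ** 2 / np.sum(Hc**2, axis=0)
                xi = proj - (1.0 - r) * np.sum(E**2, axis=0)[:, None]
                admissible = np.all(xi >= 0, axis=0)
                if admissible.any():
                    score = np.where(admissible, xi.sum(axis=0), -np.inf)
                    k = int(np.argmax(score))
                    best = (Wc[:, k], bc[k], Hc[:, k])
                    break
            if best is not None:
                break
        if best is None:
            break                                 # stop condition (3): nothing admissible
        W = np.column_stack([W, best[0]])
        b = np.append(b, best[1])
        H = np.column_stack([H, best[2]])
        beta = np.linalg.lstsq(H, T, rcond=None)[0]
        E = T - H @ beta
    return W, b, beta

def predict_scn(X, W, b, beta):
    return sigmoid(X @ W + b) @ beta
```

A call such as `W, b, beta = train_scn(X, T)` with `X` of shape `(N, n)` and one-hot labels `T` of shape `(N, m)` returns the configured network; the predicted class would then be the index of the largest entry in each row of `predict_scn(X, W, b, beta)`.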

3.2. SCN-Based Methods

The robustness of a model refers to its ability to resist or overcome adverse conditions. In these experiments, the better a model copes with a noisy environment, the more robust it is. In order to strengthen the robustness of the original SCN, we combine it with each of two ensemble learning methods separately, and also with both ensemble learning methods at the same time.

3.2.1. Bootstrap-SCN Method

Bagging is a parallel ensemble learning method. Its process is as follows. First, we randomly sample, with replacement, a number of different training subsets from the training set, each containing the same number of samples as the training set; this step is called bootstrap sampling. Then, we use these different training subsets to train corresponding base classifiers of the same model type. Finally, these base classifiers are combined by simple voting or other methods to form the final classifier. Bootstrap sampling is the core step of bagging.

Using bootstrap sampling with a small training set has two benefits. On the one hand, the base classifiers differ more from each other because their training subsets differ. On the other hand, it avoids the problem that arises when the training set is divided into completely disjoint subsets: the subsets become too small, and the base classifiers are not trained well.
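A bootstrap sample of this kind can be drawn in a few lines of NumPy. The sketch below uses a hypothetical helper `bootstrap_sample` and toy data; it draws as many indices as there are samples, with replacement, so some samples appear more than once and others are left out.

```python
import numpy as np

def bootstrap_sample(X, y, rng):
    """Return a subset of the same size as (X, y), drawn with replacement."""
    n = X.shape[0]
    idx = rng.integers(0, n, size=n)      # indices may repeat
    return X[idx], y[idx], idx

rng = np.random.default_rng(0)
X = np.arange(20).reshape(10, 2)          # toy training set: 10 samples, 2 features
y = np.arange(10)
X_sub, y_sub, idx = bootstrap_sample(X, y, rng)
distinct_fraction = np.unique(idx).size / idx.size
```

For large training sets the expected fraction of distinct samples in one bootstrap subset approaches 1 − 1/e, about 0.632, which is why different subsets lead to noticeably different base classifiers.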

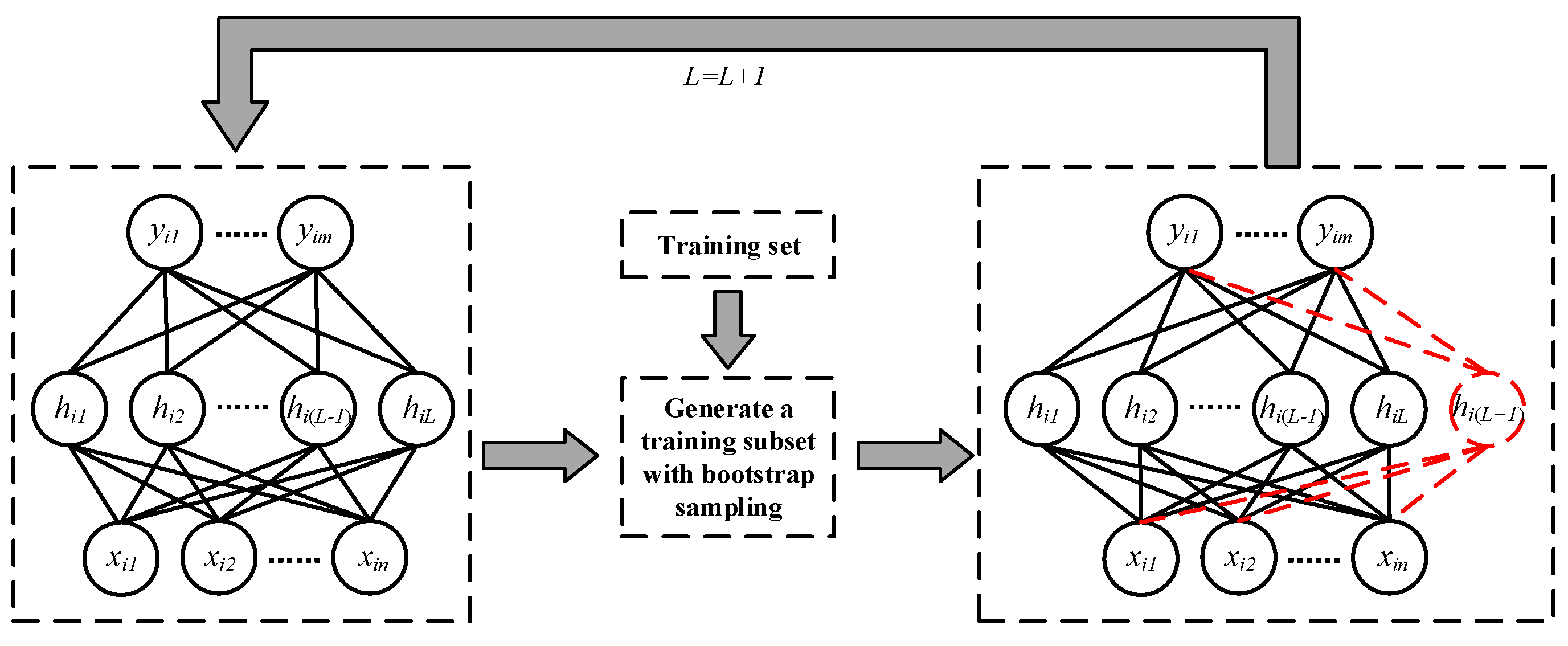

Combining bootstrap sampling with the training process of SCN, we propose the Bootstrap-SCN method. This method generates a training subset by bootstrap sampling before a hidden layer node is added to the SCN. This training subset is used to generate the new hidden layer node. After that, we repeat this step to generate more hidden layer nodes until the end of training. The flow of this method is shown in Figure 2, and the pseudo code is shown in Algorithm 1.

| Algorithm 1: Pseudo code of Bootstrap-SCN method. |
| --- |
| Given training set $D$, training subset by bootstrap sampling $D_{bs}$, SCN model $M$, current and maximum number of hidden layer nodes $L$ and $L_{max}$, training error $e$, expected error tolerance $\epsilon$ |
| Initialize $L$ and $e$; |
| 1: While $L < L_{max}$ AND $e > \epsilon$ do |
| 2: Generate $D_{bs}$ using bootstrap sampling on the basis of $D$; |
| 3: Train $M$ and generate a new hidden layer node with $D_{bs}$; |
| 4: $L = L + 1$; |
| 5: End while |
| Return: $M$. |
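The loop of Algorithm 1 might be sketched as follows. This is a simplified reading, not the authors' code: the candidate node is scored on the bootstrap subset only, the SCN inequality check is replaced by simply taking the candidate that reduces the subset residual most, and the output weights are re-solved on the full training set. The function name, the sigmoid activation, and the fixed range `lam` are assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bootstrap_scn(X, T, max_nodes=30, tol=1e-2, n_candidates=100, lam=5.0, seed=0):
    rng = np.random.default_rng(seed)
    N, n = X.shape
    W, b = np.empty((n, 0)), np.empty(0)
    beta = np.zeros((0, T.shape[1]))
    E = T.copy()                                    # residual on the full training set
    while W.shape[1] < max_nodes and np.sqrt(np.mean(E**2)) > tol:
        idx = rng.integers(0, N, size=N)            # step 2: bootstrap subset
        X_sub, E_sub = X[idx], E[idx]
        Wc = rng.uniform(-lam, lam, size=(n, n_candidates))
        bc = rng.uniform(-lam, lam, size=n_candidates)
        Hc = sigmoid(X_sub @ Wc + bc)
        # step 3: score each candidate by its residual reduction on the subset
        score = ((E_sub.T @ Hc) ** 2 / np.sum(Hc**2, axis=0)).sum(axis=0)
        k = int(np.argmax(score))
        W = np.column_stack([W, Wc[:, k]])          # step 4: one more hidden node
        b = np.append(b, bc[k])
        H = sigmoid(X @ W + b)
        beta = np.linalg.lstsq(H, T, rcond=None)[0]
        E = T - H @ beta
    return W, b, beta
```

Because each node sees a different resampled subset, nodes that fit a few noisy samples by chance are less likely to be selected repeatedly.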

3.2.2. AdaBoost-SCN Method

When we train a network with a small sample set, it is very likely that the network is not trained adequately and that its robustness is poor. AdaBoost can be used to reduce the bias and improve the effect of the network on vibration signal recognition [33]. Meanwhile, the hidden layer nodes of the original SCN are generated one after another, which matches the way AdaBoost trains different base classifiers serially. In this method, we use AdaBoost when generating the hidden layer nodes of SCN, so as to improve the effect of the original SCN in fiber vibration signal recognition.

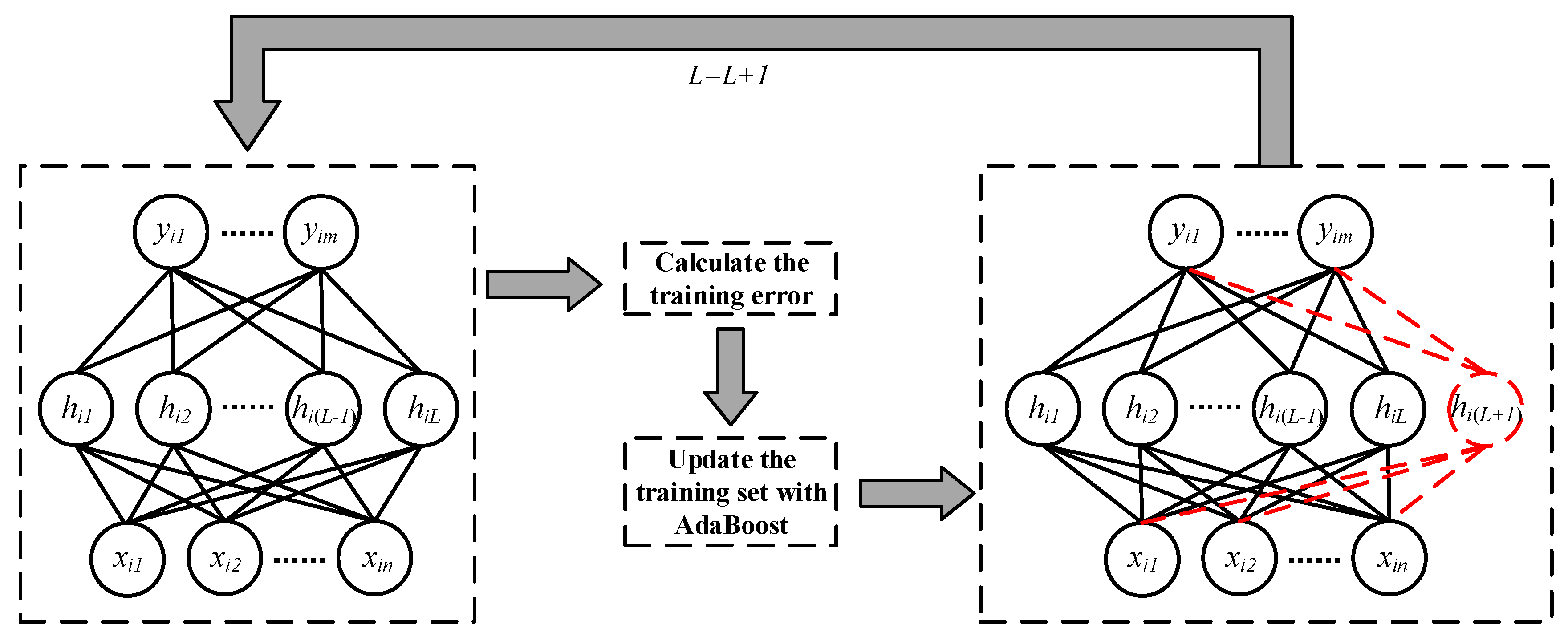

In the process of SCN training, we regard the hidden layer nodes generated in each training round as different classifiers. When the SCN is in its initial state, all samples have the same weight. During training, each time a hidden layer node is added, the sample weights are adjusted according to the training error of the SCN and the recognition results of the samples, and the adjusted weights are used to calculate the training error when the next hidden layer node is added. Then, we repeat this step to generate more hidden layer nodes until the end of training. The flow of this method is shown in Figure 3, and the pseudo code is shown in Algorithm 2. For the sake of simplicity, we do not assign weights to the hidden layer nodes of the trained network, which means all hidden layer nodes have the same weight in the calculation process.

| Algorithm 2: The pseudo code of AdaBoost-SCN method. |

| Given training set , SCN model , current and maximum number of hidden layer nodes L and Lmax, training error, expected error tolerance , weights of training set |

| Initialize and , , ; |

| 1: While AND Do |

| 2: ; |

| 3: ; |

| 4: if then set and abort loop |

| 5: ; |

| 6: ; |

| 7: End while |

| Return. |
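The sample re-weighting that this method relies on can be illustrated with the standard discrete AdaBoost update. The sketch below is an assumption about the form of the update, not a transcription of Algorithm 2: `adaboost_reweight` is a hypothetical helper that takes the current sample weights and a boolean vector saying which samples the current network recognizes correctly.

```python
import numpy as np

def adaboost_reweight(weights, correct):
    """Raise the weights of misrecognized samples and lower the others."""
    weights = np.asarray(weights, dtype=float)
    correct = np.asarray(correct, dtype=bool)
    err = weights[~correct].sum() / weights.sum()      # weighted training error
    if err <= 0.0 or err >= 0.5:
        # Standard AdaBoost aborts here; the weights are returned unchanged.
        return weights / weights.sum(), err, None
    alpha = 0.5 * np.log((1.0 - err) / err)
    new_w = weights * np.exp(np.where(correct, -alpha, alpha))
    return new_w / new_w.sum(), err, alpha

# Five equally weighted samples, the last one misrecognized.
w0 = np.full(5, 0.2)
w1, err, alpha = adaboost_reweight(w0, [True, True, True, True, False])
```

In this example the weighted error is 0.2, and after the update the single misrecognized sample carries half of the total weight, so the next hidden layer node is pushed toward the samples that the current network gets wrong.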

3.2.3. AdaBoost-Bootstrap-SCN Method

According to Section 3.2.1 and Section 3.2.2, the two main ensemble learning methods can be used in the training of SCN and applied to the recognition of fiber vibration signals. From the perspective of the bias-variance decomposition, AdaBoost mainly focuses on reducing the bias of the classifier, while bagging mainly focuses on reducing its variance. However, there is a conflict between bias and variance in the training process of a classifier. When the classifier is not trained enough, it can fit neither the training set nor the testing set well. At this time, the bias of the classifier dominates the classification error rate. As training continues and the classifier is trained too much, it fits the training set well but cannot fit the testing set well. At this time, the variance dominates the classification error rate [34]. Therefore, in order to balance the bias and variance of the classifier, we combine these two ensemble learning methods with SCN simultaneously.

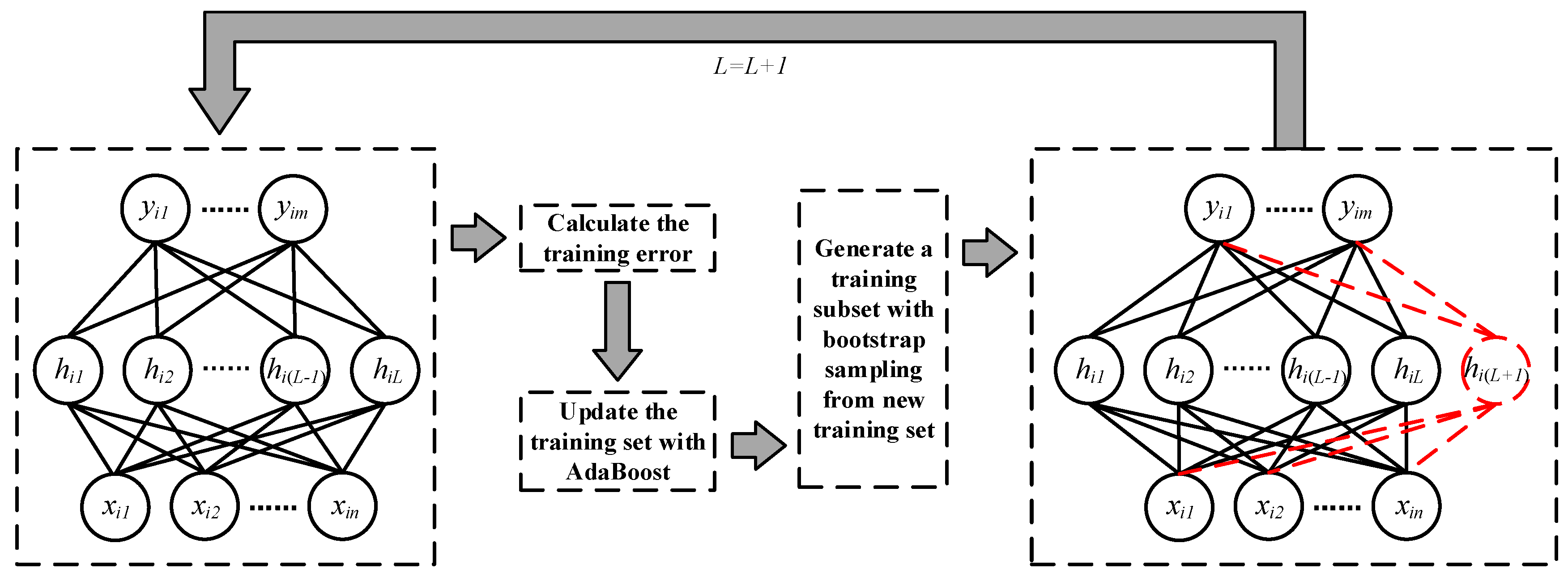

The two ensemble learning methods are combined with SCN as follows. During the training of the SCN, before adding a new hidden layer node, we update the sample weights according to the training error and the recognition results of the current network. Then we use bootstrap sampling to generate a training subset and select the weights that correspond to the samples in this subset. Finally, we use this training subset and the corresponding weights to train the SCN and obtain a new hidden layer node. We repeat this step to generate more hidden layer nodes until the end of training. The flow of this method is shown in Figure 4, and the pseudo code is shown in Algorithm 3. For the sake of simplicity, we do not assign weights to the hidden layer nodes of the trained network, which means all hidden layer nodes have the same weight in the calculation process.

| Algorithm 3: The pseudo code of AdaBoost-Bootstrap-SCN method. |

| Initialize and , , ; |

| 1: While AND do |

| 2: Generate using Bootstrap Sampling on the basis of ; |

| 3: Generate with and ; |

| 4: ; |

| 5: ; |

| 6: if then set and abort loop |

| 7: ; |

| 8: ; |

| 9: Update with to ; |

| 10: End while |

| Return. |
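One possible reading of the weight handling in this combined method is sketched below: the weights of the bootstrap subset are looked up from the full weight vector, a standard AdaBoost factor is computed on the subset, and the updated values are written back to the full training set. The helper name `adaboost_bootstrap_update` and the exact update rule are assumptions; samples that were not drawn keep their previous weight before renormalization.

```python
import numpy as np

def adaboost_bootstrap_update(weights, idx, correct_sub):
    """Update full-set sample weights from results on a bootstrap subset."""
    weights = np.asarray(weights, dtype=float)
    correct_sub = np.asarray(correct_sub, dtype=bool)
    w_sub = weights[idx]                              # weights of the drawn samples
    err = w_sub[~correct_sub].sum() / w_sub.sum()     # weighted error on the subset
    if err <= 0.0 or err >= 0.5:
        return weights / weights.sum(), err
    alpha = 0.5 * np.log((1.0 - err) / err)
    factor = np.exp(np.where(correct_sub, -alpha, alpha))
    new_w = weights.copy()
    # Plain assignment: a sample drawn twice is updated once, not twice.
    new_w[idx] = weights[idx] * factor
    return new_w / new_w.sum(), err

# Six samples; the subset draws samples 0 and 2 twice and skips samples 4 and 5.
weights = np.full(6, 1.0 / 6.0)
idx = np.array([0, 0, 1, 2, 2, 3])
correct_sub = np.array([True, True, True, False, False, True])
new_weights, err = adaboost_bootstrap_update(weights, idx, correct_sub)
```

In practice `idx` would come from a call such as `rng.integers(0, n, size=n)`, and `correct_sub` from comparing the predictions of the current network with the labels of the drawn samples.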

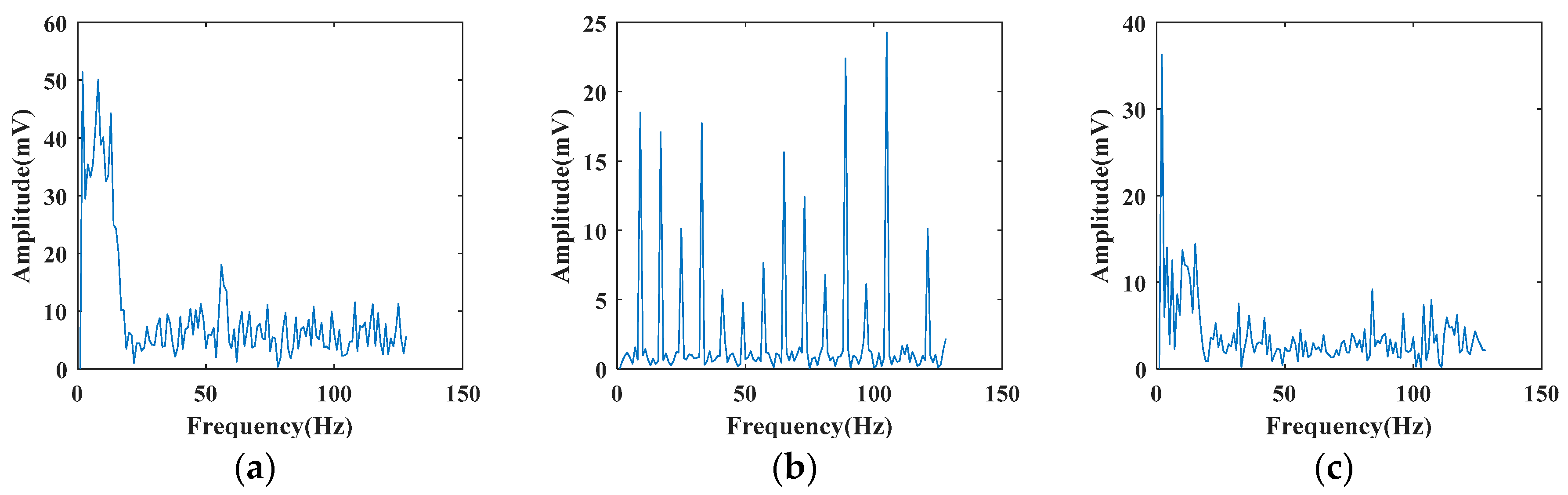

3.3. Simulated Noise

Due to the unique nature of OFPS, it needs to work in complex environments for long periods of time. The various noises also cause some interference to the recognition of the vibration signals of system. In order to eliminate the influence of noises, we need to improve the robustness of classifiers. In this paper, we mainly use four common noises for simulation and verify the effectiveness of the proposed methods.

3.3.1. White Gaussian Noise

White Gaussian noise is a kind of noise whose instantaneous value obeys a Gaussian distribution and whose power spectral density is constant over frequency. It is commonly used to simulate ambient noise because its probability distribution is normal. In order to analyze the influence of noise on signal recognition performance, we combine the pretreated fiber vibration signals with white Gaussian noise to obtain noisy vibration signals

$$x_g(t) = x(t) + k\, n_g(t),$$

where $x(t)$ is the original fiber vibration signal, $k$ is the multiple of superimposed noise, $x_g(t)$ is the fiber vibration signal superimposed with white Gaussian noise, and $n_g(t)$ is the simulated white Gaussian noise. The probability density function of $n_g$ is

$$p(z) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left( -\frac{(z - \mu)^2}{2\sigma^2} \right),$$

where $\mu$ and $\sigma$ are the mean and standard deviation of the noise.

In order to accurately understand the interference of different noise intensities on signal recognition, we use the variable $k$ to control the amplitude of the noise, and the same operation is applied to the subsequent noises.

3.3.2. White Uniform Noise

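White uniform noise refers to noise whose instantaneous value obeys a uniform distribution and whose power spectral density is constant in the whole frequency domain. In this paper, the vibration signal superimposed with white uniform noise is defined as

$$x_u(t) = x(t) + k\, n_u(t),$$

where $x(t)$ is the original fiber vibration signal, $k$ is the multiple of superimposed noise, $x_u(t)$ is the fiber vibration signal superimposed with white uniform noise, and $n_u(t)$ is the simulated white uniform noise. The probability density function of $n_u$ is

$$p(z) = \begin{cases} \dfrac{1}{b - a}, & a \leq z \leq b, \\ 0, & \text{otherwise.} \end{cases}$$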

In this paper, the parameters of white uniform noise are set to: .

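3.3.3. Rayleigh Distributed Noise

Rayleigh distributed noise refers to noise whose instantaneous value obeys a Rayleigh distribution. In this paper, the vibration signal superimposed with Rayleigh distributed noise is defined as

$$x_r(t) = x(t) + k\, n_r(t),$$

where $x(t)$ is the original fiber vibration signal, $k$ is the multiple of superimposed noise, $x_r(t)$ is the fiber vibration signal superimposed with Rayleigh distributed noise, and $n_r(t)$ is the simulated Rayleigh distributed noise. The probability density function of $n_r$ is

$$p(z) = \frac{z}{\sigma^2} \exp\left( -\frac{z^2}{2\sigma^2} \right), \quad z \geq 0.$$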

In this paper, the parameter of Rayleigh distributed noise is set to: .

3.3.4. Exponentially Distributed Noise

Exponentially distributed noise refers to noise whose instantaneous value obeys an exponential distribution. In this paper, the vibration signal superimposed with exponentially distributed noise is defined as

$$x_e(t) = x(t) + k\, n_e(t),$$

where $x(t)$ is the original fiber vibration signal, $k$ is the multiple of superimposed noise, $x_e(t)$ is the fiber vibration signal superimposed with exponentially distributed noise, and $n_e(t)$ is the simulated exponentially distributed noise. The probability density function of $n_e$ is

$$p(z) = \lambda e^{-\lambda z}, \quad z \geq 0.$$

In this paper, the parameter of exponentially distributed noise is set to: .
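The four kinds of noisy signals described in this section could be generated along the following lines. This is a sketch under assumptions: the helper `add_noise`, its default distribution parameters, and the sine test signal are hypothetical and are not the settings used in this paper; only the structure (original signal plus a multiple of simulated noise) follows the text.

```python
import numpy as np

def add_noise(signal, kind, multiple, rng, **params):
    """Return signal + multiple * noise for one of four noise types."""
    size = signal.shape
    if kind == "gaussian":
        noise = rng.normal(params.get("mean", 0.0), params.get("std", 1.0), size=size)
    elif kind == "uniform":
        noise = rng.uniform(params.get("low", -1.0), params.get("high", 1.0), size=size)
    elif kind == "rayleigh":
        noise = rng.rayleigh(params.get("scale", 1.0), size=size)
    elif kind == "exponential":
        # NumPy's scale is the mean of the distribution, i.e. 1 / rate.
        noise = rng.exponential(params.get("scale", 1.0), size=size)
    else:
        raise ValueError(f"unknown noise type: {kind}")
    return signal + multiple * noise

rng = np.random.default_rng(0)
clean = np.sin(2 * np.pi * np.arange(128) / 16.0)     # hypothetical 128-point sample
noisy_sets = {kind: add_noise(clean, kind, 200, rng)
              for kind in ("gaussian", "uniform", "rayleigh", "exponential")}
```

Note that Rayleigh and exponential noise are non-negative, so superimposing them also shifts the mean of the signal, unlike zero-mean Gaussian or symmetric uniform noise.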

5. Numerical Experiment

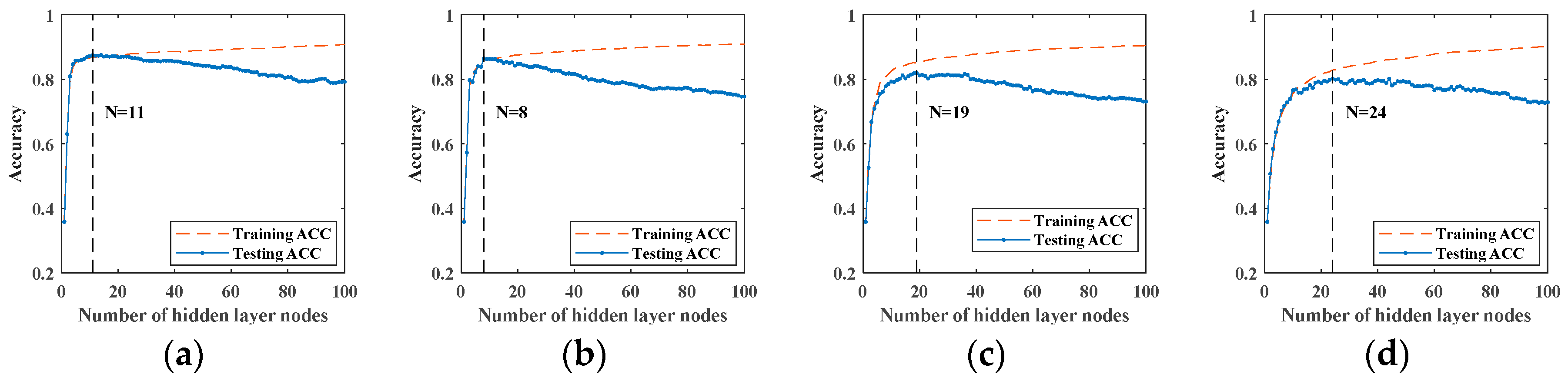

After preparing the fiber vibration signals and the signal sets superimposed with four different noises, we use these noisy signal sets to train SCNs combined with different ensemble learning methods. The recognition effects of the different methods on the noisy signal sets are obtained and compared. In order to eliminate the influence of the random generation of hidden layer nodes on the experimental results, we repeat each experiment and average the experimental results. Here, we set the network to stop training when the number of hidden layer nodes exceeds 100. Meanwhile, the maximum number of hidden layer candidate nodes is set to 100 (one of these candidate nodes is selected when adding a hidden layer node). During the training process, each time a hidden layer node is added, the training and testing accuracy are recorded.

Here, we have four classifiers (the original SCN, Bootstrap-SCN, AdaBoost-SCN, and AdaBoost-Bootstrap-SCN) and different multiples of white Gaussian noise, white uniform noise, Rayleigh distributed noise, and exponentially distributed noise. First, we train the four types of classifiers with the signal sets superimposed with different types and intensities of noise. In order to eliminate the influence of randomness on the experimental results, training and testing are performed 10 times on each classifier for each sample set. Then, the experimental results are averaged to obtain the actual classification effect of the classifier on the current sample set. Afterwards, for each noise intensity we find the position with the highest testing accuracy, and we use these highest testing accuracies to form the testing accuracy curve. We use the same procedure to obtain the results for the other signal sets superimposed with different types and intensities of noise.

Figure 9 shows the average results when signals superimposed with different intensities of white Gaussian noise are sent to the original SCN. The positions of the maximum testing accuracy are also indicated in the figures. Meanwhile, the testing accuracy curve composed of the highest testing accuracies is shown in Figure 10.
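The averaging and peak-picking procedure described above can be written compactly. In the sketch below, `best_average_accuracy` and `accuracy_curve` are hypothetical helper names, and the input is assumed to be an array with one row per repeated run and one column per number of hidden layer nodes.

```python
import numpy as np

def best_average_accuracy(test_acc_runs):
    """Average repeated runs, then locate the node count with the best accuracy."""
    mean_curve = np.asarray(test_acc_runs, dtype=float).mean(axis=0)
    best_nodes = int(np.argmax(mean_curve)) + 1       # node counts start at 1
    return best_nodes, float(mean_curve[best_nodes - 1])

def accuracy_curve(results_by_multiple):
    """Map each noise multiple to its highest averaged testing accuracy."""
    return {k: best_average_accuracy(v)[1]
            for k, v in sorted(results_by_multiple.items())}

# Toy example: two runs, three hidden layer nodes.
runs = [[0.5, 0.9, 0.7],
        [0.7, 0.7, 0.7]]
nodes, acc = best_average_accuracy(runs)              # averaged curve: 0.6, 0.8, 0.7
```

Averaging before taking the maximum matters: taking the maximum of each run first and averaging afterwards would pick a different node count per run and give an optimistic estimate.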

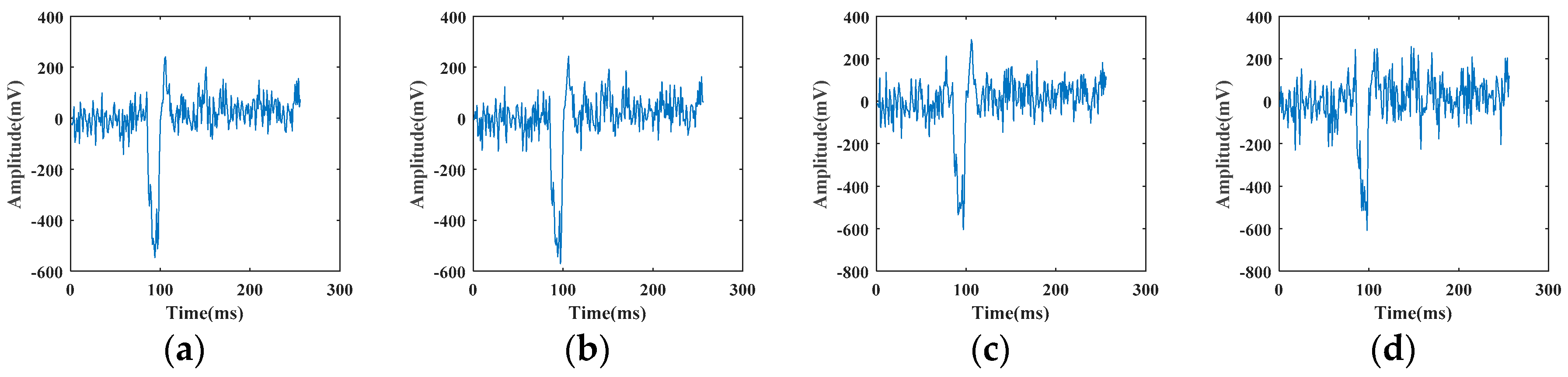

5.1. Recognition with Original SCN

According to the calculation method mentioned above, we first use the original SCN to recognize the vibration signals superimposed with four kinds of noise. The results are shown in Figure 11.

From Figure 11, we can see that the recognition accuracy of the original SCN decreases as the amplitude of the noise increases. By observing the recognition effect for signals with equal multiples of noise, it can be seen that different noises interfere with the signals to different degrees. For white Gaussian noise, the testing accuracy decreases from 0.8746 for noiseless signals to 0.5254 when the multiple of noise is 200. For white uniform noise, the testing accuracy decreases from 0.8582 for noiseless signals to 0.4388 when the multiple of noise is 200. For Rayleigh distributed noise and exponentially distributed noise, the testing accuracy decreases from 0.8657 and 0.8463 for noiseless signals to 0.6045 and 0.6642, respectively, when the multiple of noise is 200.

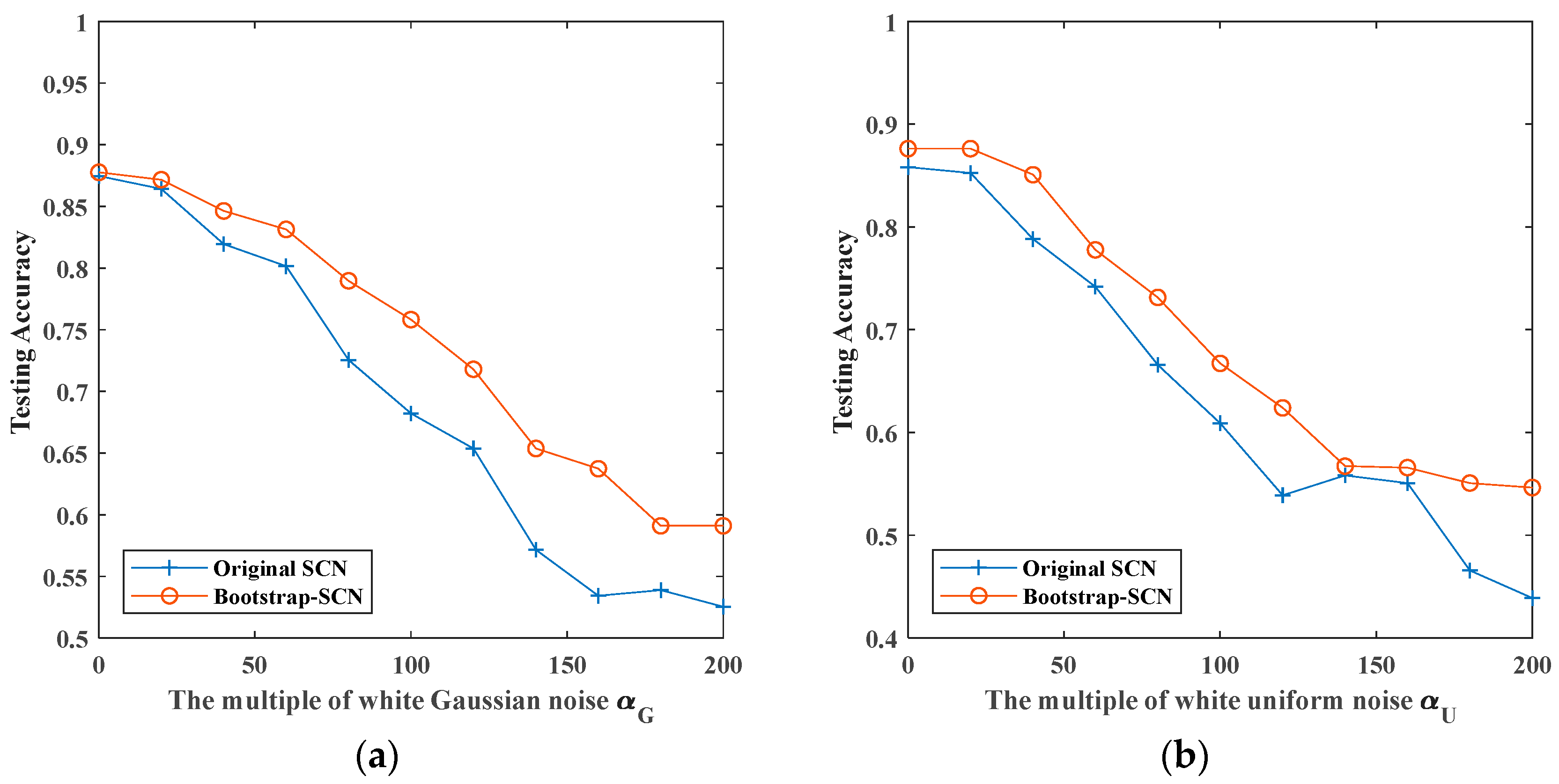

5.2. Recognition with Bootstrap-SCN

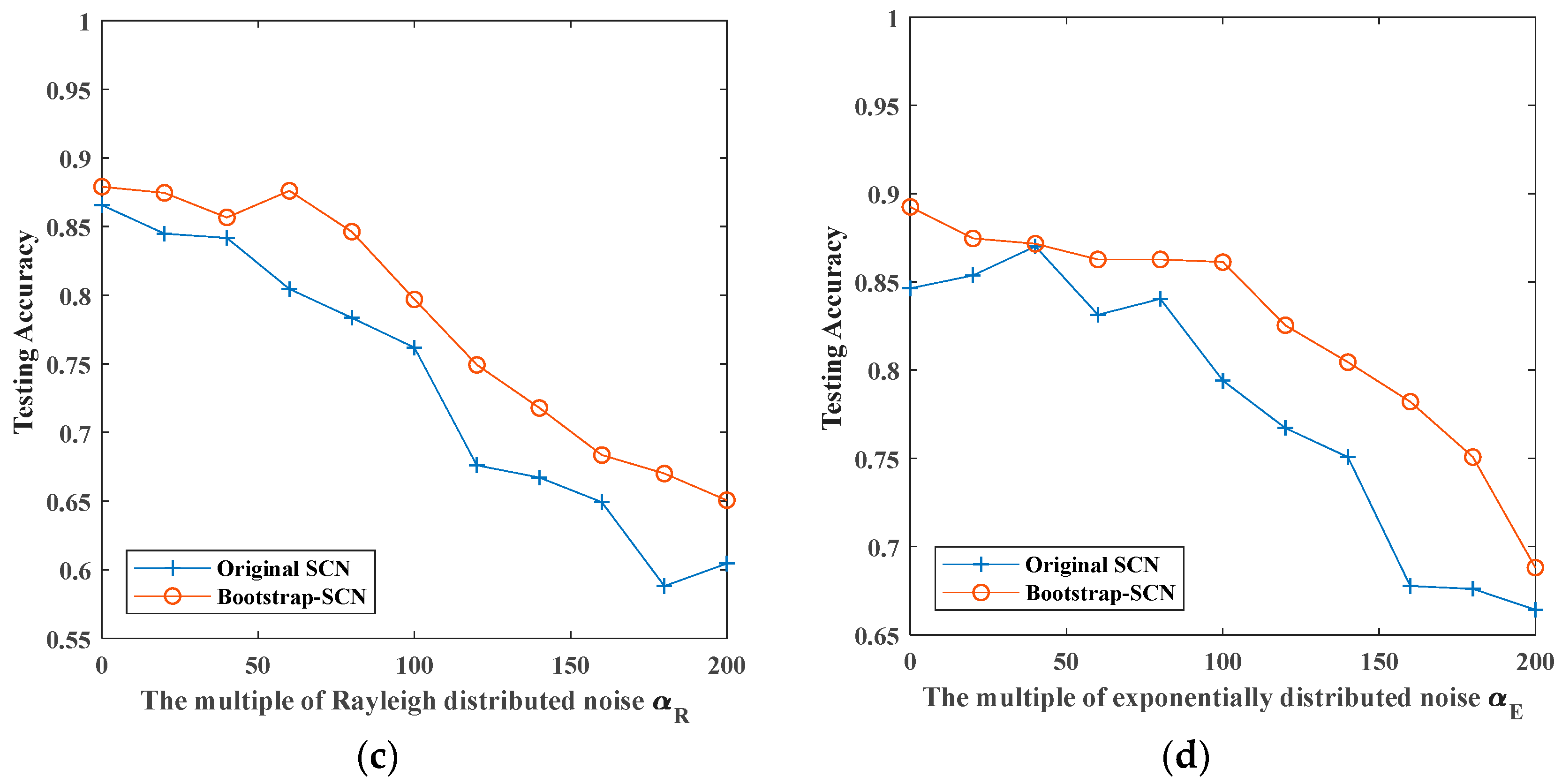

In this section, we combine bootstrap sampling with SCN to recognize noisy vibration signals. The comparison of the recognition accuracy between the original SCN and Bootstrap-SCN is shown in Figure 12.

Figure 12 shows the recognition rates of original SCN and Bootstrap-SCN as the superimposed noises’ multiplier increases. When the multiplier of superimposed noise increases, the recognition rates of both methods decrease. For signals superimposed by white Gaussian noise, the recognition rate of Bootstrap-SCN decreased from 0.8776 of noiseless signals to 0.5910 when the multiple of noises is 200. For signals superimposed by white uniform noise, Rayleigh distributed noise and exponentially distributed noise, the recognition rate of Bootstrap-SCN decreased from 0.8761, 0.8791, and 0.8925 of noiseless signals to 0.5463, 0.6507, and 0.6881 when the multiple of noises is 200.

The above results show that Bootstrap-SCN and the original SCN have similar recognition effects when there is no superimposed noise. When the four kinds of noise are superimposed, the recognition rate of Bootstrap-SCN is slightly higher than that of the original SCN, but the improvement is not obvious.

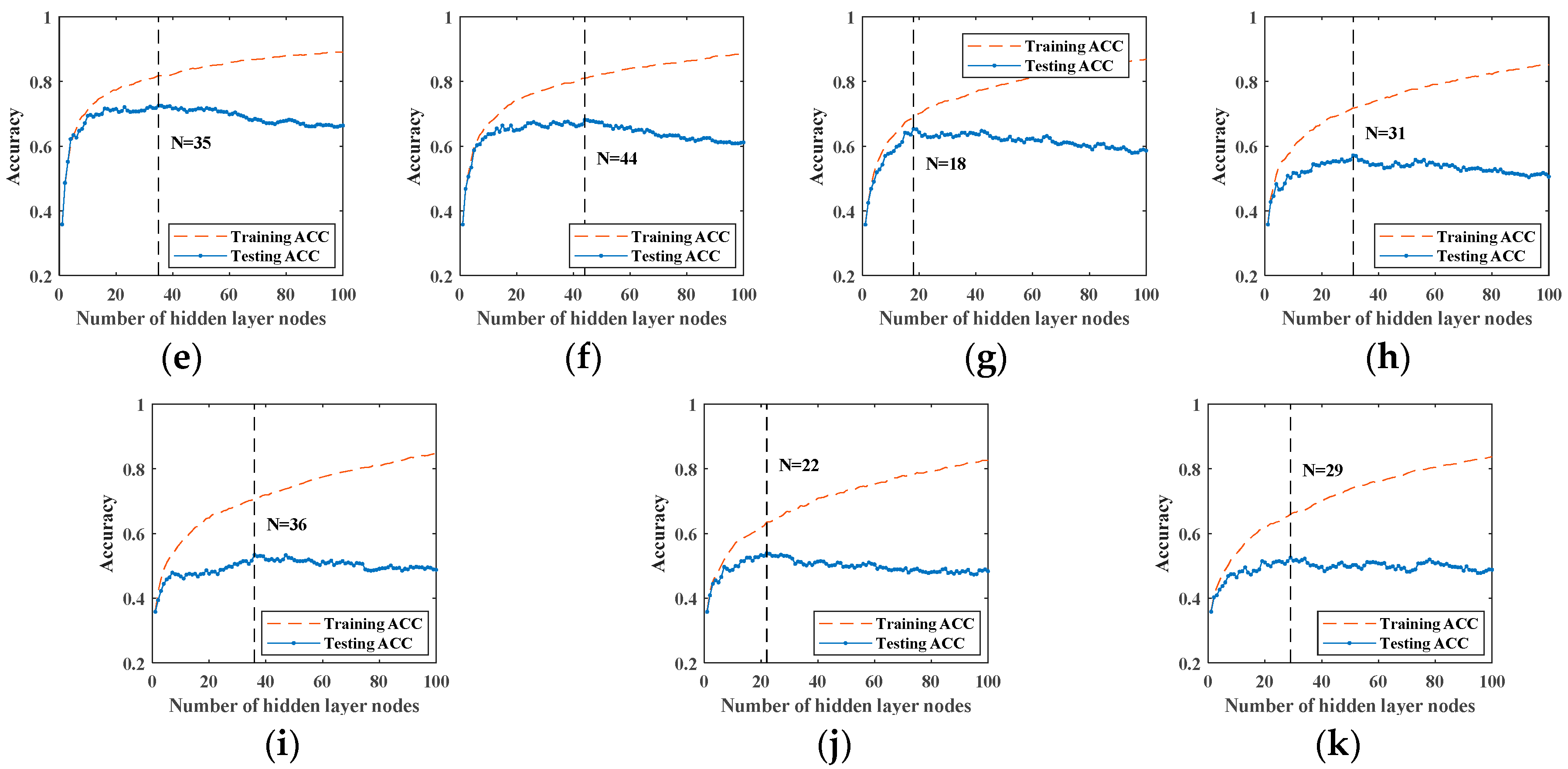

5.3. Recognition with AdaBoost-SCN

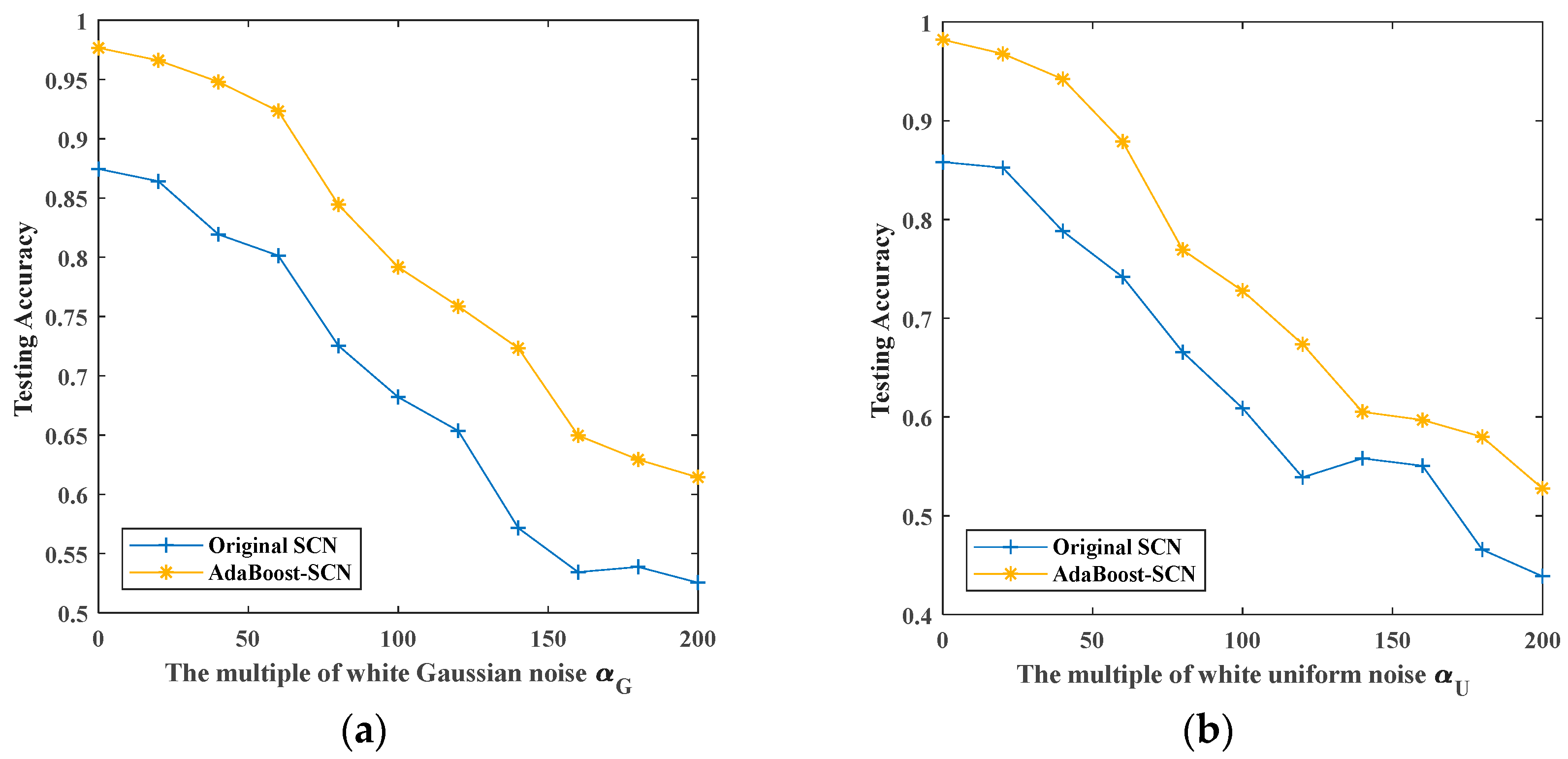

In this part, we use another ensemble learning method to improve the recognition rate of SCN. We combine AdaBoost with SCN to recognize four kinds of noisy signals. The recognition results of noisy signals by the original SCN and AdaBoost-SCN are shown in Figure 13.

Figure 13 shows the recognition rates of four kinds of noisy signals by the original SCN and AdaBoost-SCN as the multiple of superimposed noise increases. For signals superimposed with white Gaussian noise and white uniform noise, the recognition rate of AdaBoost-SCN decreases from 0.9767 and 0.9820 for noiseless signals to 0.6143 and 0.5278, respectively, when the multiple of noise is 200. For signals superimposed with Rayleigh distributed noise and exponentially distributed noise, the recognition rate of AdaBoost-SCN decreases from 0.9729 and 0.9752 for noiseless signals to 0.6759 and 0.7902, respectively, when the multiple of noise is 200. The comparison with the original SCN shows that the recognition accuracy of AdaBoost-SCN is about 0.1 higher than that of the original SCN for the different superimposed noises.

5.4. Recognition with AdaBoost-Bootstrap-SCN

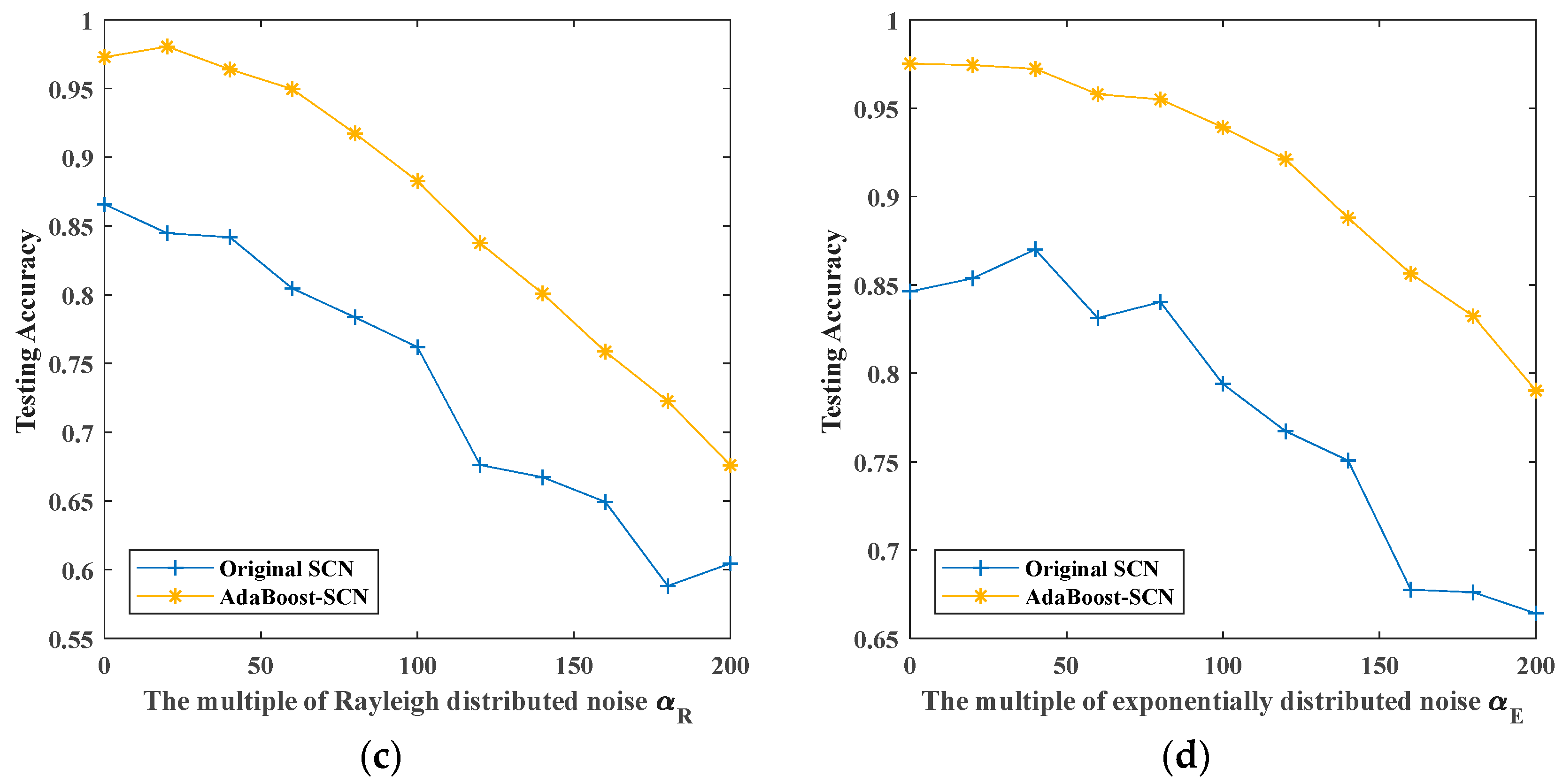

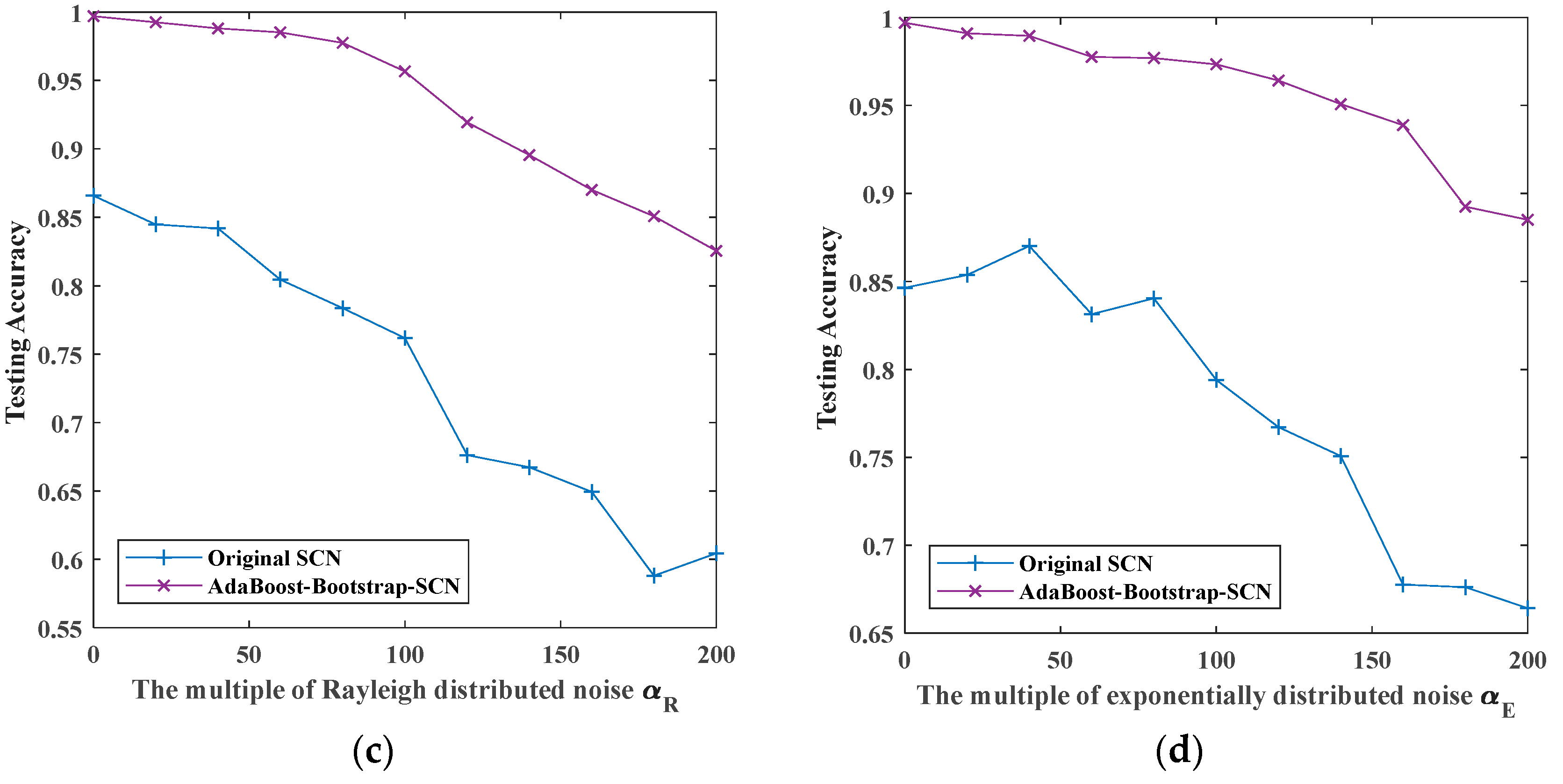

After the above experiments with the two combination methods, we carried out experimental verification of the proposed AdaBoost-Bootstrap-SCN. The recognition rates of noisy signals by the original SCN and AdaBoost-Bootstrap-SCN are shown in Figure 14.

Figure 14 shows the recognition rates of four kinds of noisy signals by the original SCN and AdaBoost-Bootstrap-SCN as the multiple of superimposed noise increases. For signals superimposed with white Gaussian noise and white uniform noise, the recognition rate of AdaBoost-Bootstrap-SCN decreases from 0.9940 and 0.9940 for noiseless signals to 0.7463 and 0.6373, respectively, when the multiple of noise is 200. For signals superimposed with Rayleigh distributed noise and exponentially distributed noise, the recognition rate of AdaBoost-Bootstrap-SCN decreases from 0.9970 and 0.9970 for noiseless signals to 0.8254 and 0.8850, respectively, when the multiple of noise is 200. Compared with the original SCN, AdaBoost-Bootstrap-SCN improves the recognition rate by 0.1–0.15 when no noise is superimposed, and its recognition rate is more than 0.99. Meanwhile, the recognition rate of this method is about 0.2 or more higher than that of the original SCN when the multiple of noise is 200.

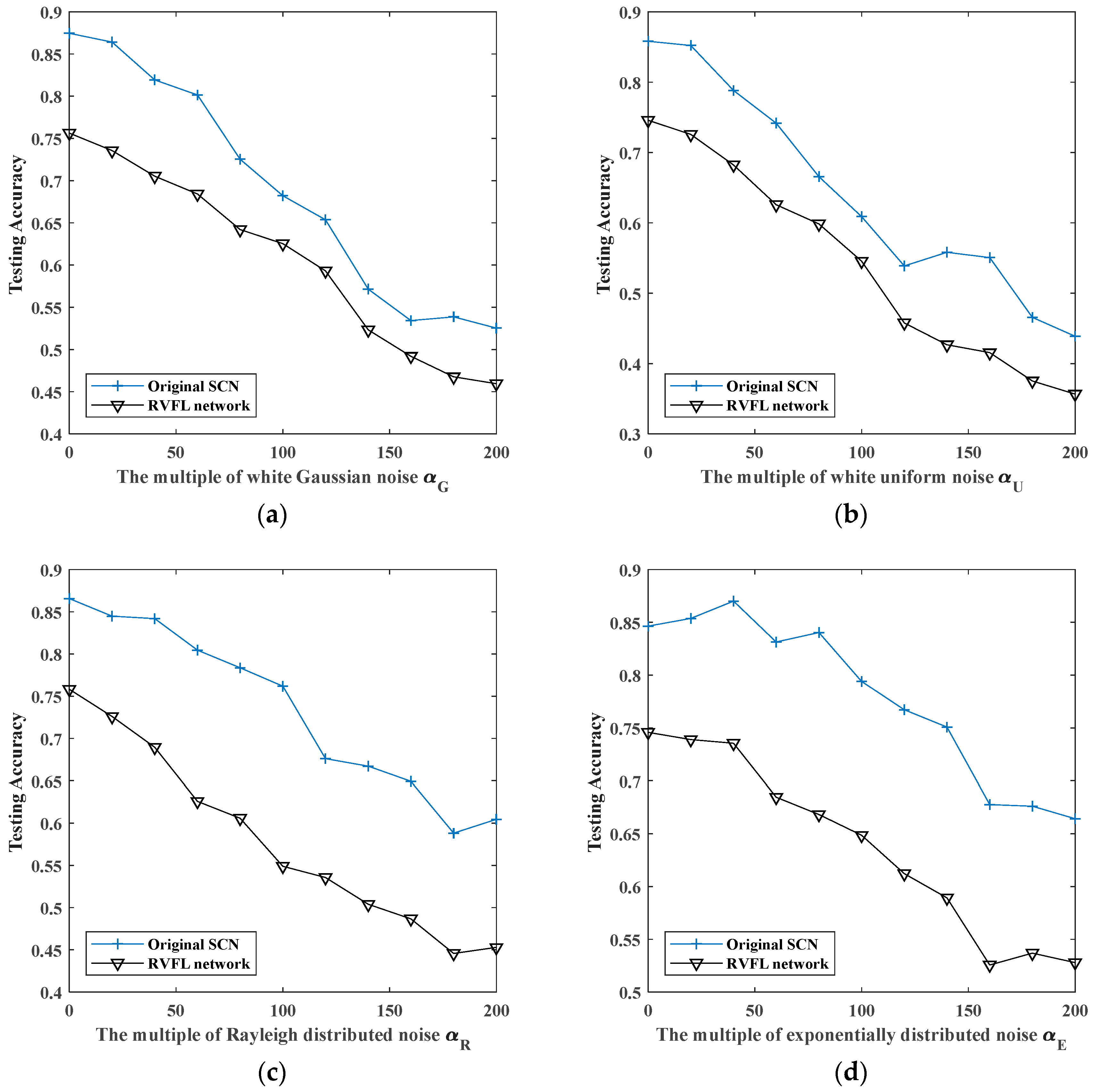

5.5. Comparison with Other Methods

Because the structure of the RVFL network is similar to that of SCN, we use the RVFL network for comparison. The RVFL network has three layers. Its input layer has 128 nodes, which is the same as the dimension of the vibration samples. The number of output layer nodes is the same as the dimension of a sample's label. As the number of hidden layer nodes is a hyper-parameter that needs to be set before network training, we record the experimental results of the RVFL network with different numbers of hidden nodes. In order to eliminate the influence of randomness in network training, we repeat each experiment 10 times and average the experimental results. The condition for stopping training is that the testing error is less than 0.01. The experimental results are as follows.

Figure 15 shows the comparison of recognition effects of RVFL network and original SCN with different noises. We can see that the recognition effect of original SCN is 0.04–0.2 better than that of RVFL network, which is why we choose original SCN as the research object.
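For readers unfamiliar with RVFL networks, the following sketch shows the basic idea: hidden layer parameters are drawn at random once, and only the output weights are solved by least squares. The function names, the sigmoid activation, the weight range `scale`, and the inclusion of direct input-to-output links are assumptions about a typical RVFL network and may differ from the configuration used in this comparison.

```python
import numpy as np

def train_rvfl(X, T, n_hidden, scale=1.0, seed=0):
    """Random hidden layer plus direct links; output weights by least squares."""
    rng = np.random.default_rng(seed)
    W = rng.uniform(-scale, scale, size=(X.shape[1], n_hidden))
    b = rng.uniform(-scale, scale, size=n_hidden)
    H = 1.0 / (1.0 + np.exp(-(X @ W + b)))       # fixed random hidden features
    D = np.hstack([X, H])                        # direct links + hidden outputs
    beta = np.linalg.lstsq(D, T, rcond=None)[0]
    return W, b, beta

def predict_rvfl(X, W, b, beta):
    H = 1.0 / (1.0 + np.exp(-(X @ W + b)))
    return np.hstack([X, H]) @ beta
```

Unlike SCN, the number of hidden nodes `n_hidden` has to be chosen in advance, and no admission test is applied to the random nodes, which is why results are recorded for several values of `n_hidden`.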

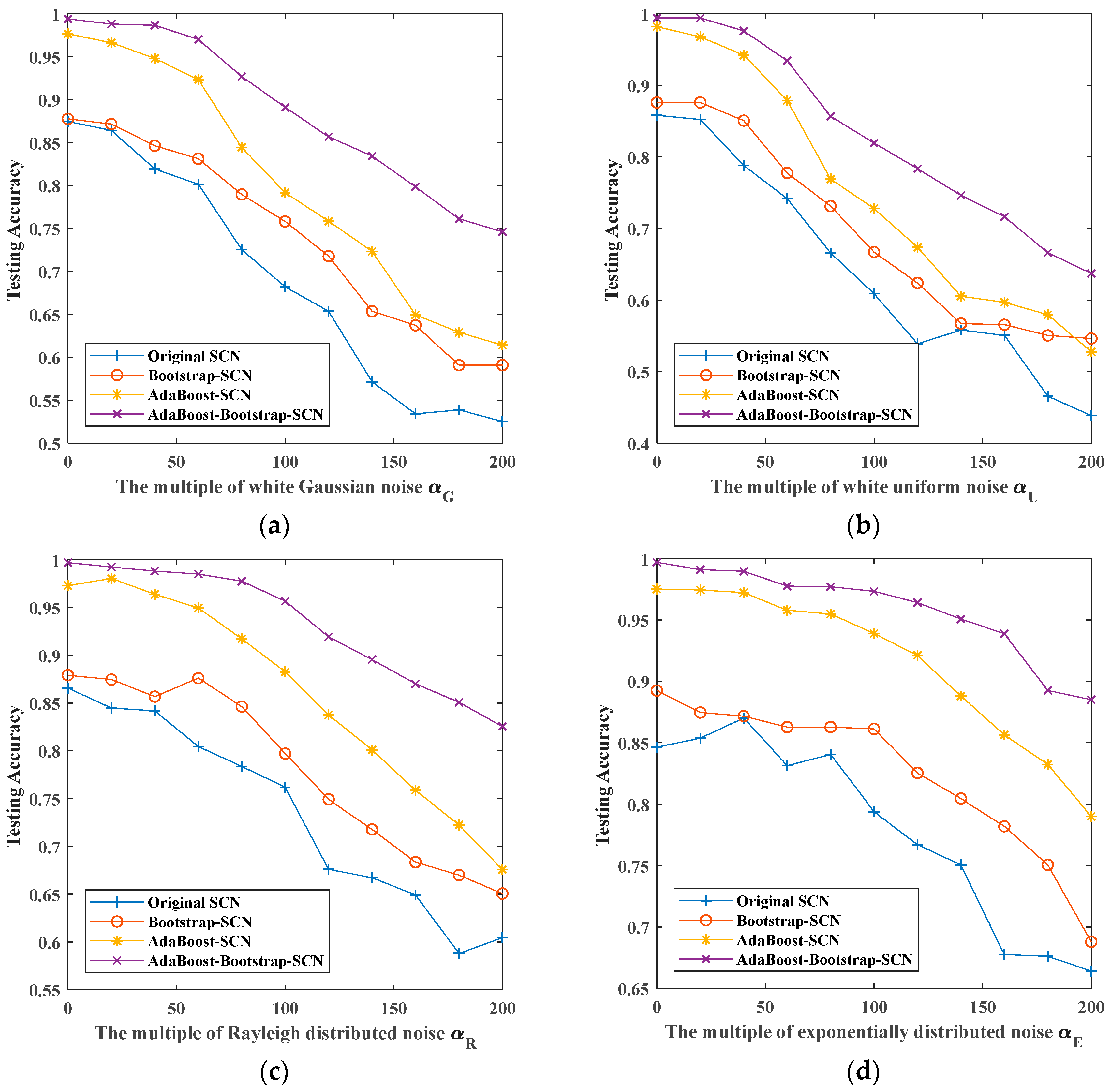

6. Discussion

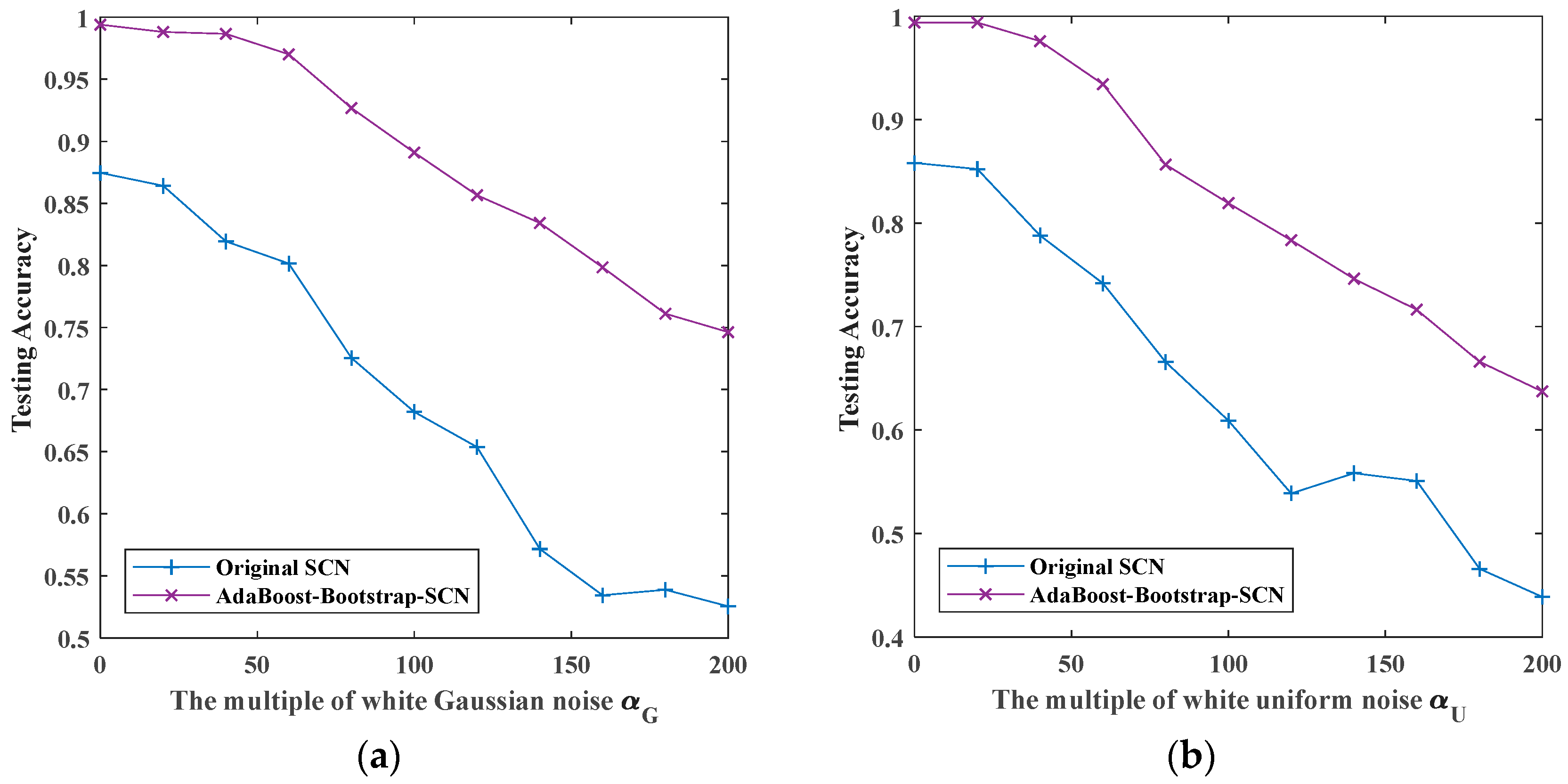

The recognition results of four kinds of noisy signals with the four classifiers are shown in Figure 16 and Table 2. From the figures we can see that when no noise is superimposed, the recognition rates of the original SCN and Bootstrap-SCN are between 0.85 and 0.9, while the recognition rates of AdaBoost-SCN and AdaBoost-Bootstrap-SCN are greater than 0.95, which indicates that the use of AdaBoost can improve the recognition rate of vibration signals both without and with superimposed noise. As the multiple of superimposed noise increases, the recognition effect of each classifier decreases. However, the recognition effect of AdaBoost-Bootstrap-SCN decreases more slowly than that of the other methods. When 200 times the noise is superimposed, the recognition results of Bootstrap-SCN and AdaBoost-SCN show a small increase compared with those of the original SCN, while the recognition results of AdaBoost-Bootstrap-SCN show a great improvement. Meanwhile, AdaBoost-Bootstrap-SCN also improves on AdaBoost-SCN by about 0.1 when 200 times the noise is superimposed.

It can be seen from the above discussion that: (1) after combining bootstrap sampling method with original SCN, the robustness of SCN can be improved slightly; (2) by combining AdaBoost method with SCN, the recognition rate of SCN can be improved over the entire noise range; (3) when bootstrap sampling method and AdaBoost method are combined with SCN simultaneously, AdaBoost-Bootstrap-SCN can improve the robustness of classifier on the basis of AdaBoost-SCN and achieve better recognition results.
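The size of these improvements can be checked directly from the accuracies reported in Sections 5.1 to 5.4 for a noise multiple of 200. The short script below only restates those reported values and subtracts them; the dictionary layout and the helper name `gain_over` are for illustration.

```python
# Testing accuracy at a noise multiple of 200, as reported in Sections 5.1 to 5.4.
acc_200 = {
    "SCN": {"gaussian": 0.5254, "uniform": 0.4388,
            "rayleigh": 0.6045, "exponential": 0.6642},
    "Bootstrap-SCN": {"gaussian": 0.5910, "uniform": 0.5463,
                      "rayleigh": 0.6507, "exponential": 0.6881},
    "AdaBoost-SCN": {"gaussian": 0.6143, "uniform": 0.5278,
                     "rayleigh": 0.6759, "exponential": 0.7902},
    "AdaBoost-Bootstrap-SCN": {"gaussian": 0.7463, "uniform": 0.6373,
                               "rayleigh": 0.8254, "exponential": 0.8850},
}

def gain_over(baseline, method):
    """Accuracy difference (method minus baseline) for each noise type."""
    return {noise: round(acc_200[method][noise] - acc_200[baseline][noise], 4)
            for noise in acc_200[method]}

gain_full = gain_over("SCN", "AdaBoost-Bootstrap-SCN")
gain_vs_adaboost = gain_over("AdaBoost-SCN", "AdaBoost-Bootstrap-SCN")
```

The differences over the original SCN lie between roughly 0.20 and 0.22 for all four noise types, and the differences over AdaBoost-SCN lie between roughly 0.09 and 0.15, consistent with the statements above.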