Vehicle Detection in Aerial Images Using a Fast Oriented Region Search and the Vector of Locally Aggregated Descriptors

Abstract

1. Introduction

- We propose an algorithm for generating ORoIs, called Fast Oriented Region Search, which consists of Edge Boxes NG features re-ranking, vehicle orientation estimation, and region symmetrical refinement. The approach is efficient and accurate, which benefits all subsequent steps.

- By introducing a dense feature extraction approach based on the local steering kernel (LSK) into a VLAD model, we achieve a better representation of the vehicle objects in the image, because LSK is designed to be robust to variations such as illumination changes, noise, and blur. These properties of LSK contribute to the robustness and stability of our method.

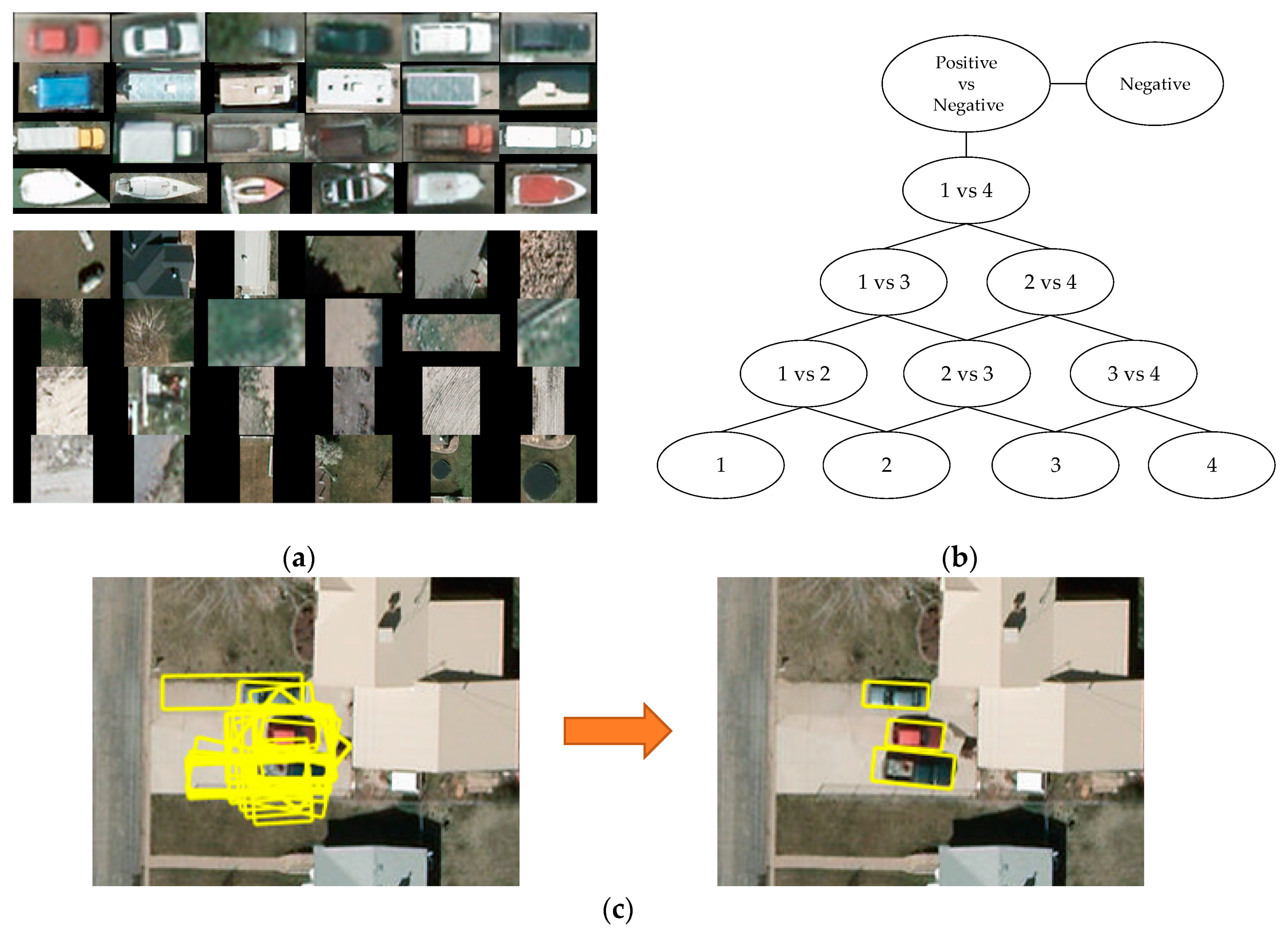

- Given the large variety of vehicle categories and the difficulty posed by imbalanced samples, we optimize the training phase. The classification results are improved by applying a modified directed-acyclic-graph support vector machine (DAG SVM) approach, which is trained with negative samples and multiple categories of vehicle samples.

2. Related Work

3. Proposed Method

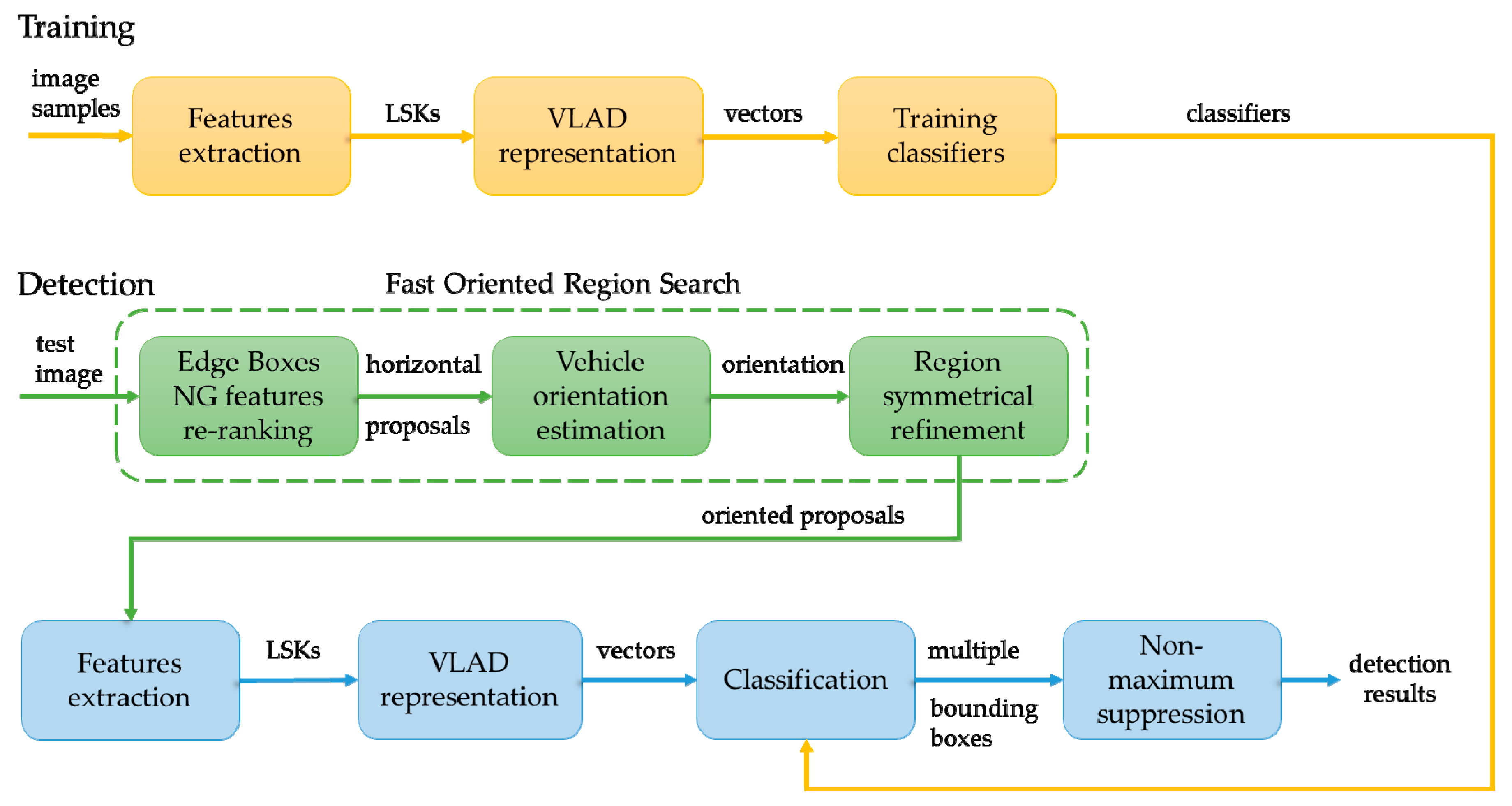

3.1. Overview of the Proposed Method

- (1) For training, we first generated positive and negative samples. Second, the LSK features were computed densely, because dense sampling yields more information than key-point-based sampling during feature extraction. This matters especially for small targets such as vehicles in aerial images, where a patch does not contain enough key-points to extract. We used LSK features because they better capture the characteristics of vehicles under different conditions, even when the target is affected by illumination, noise, and blur. Third, a VLAD representation was constructed by using a K-means algorithm to build a codebook of visual words. After the principal component analysis (PCA) step, VLAD measures and accumulates the distances between codewords and descriptors, producing encoded vectors that characterize the correlation between a descriptor and the visual content. Once all the samples were encoded, we trained the classifiers on the VLAD vectors.
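The encoding step described above can be sketched as follows. This is a minimal pure-Python illustration, not the authors' implementation: the function name `vlad_encode`, the toy two-word codebook, and the final L2 normalisation are assumptions made for the example, and the codebook is taken as given rather than learned with K-means.

```python
import math

def vlad_encode(descriptors, codebook):
    """Encode a set of local descriptors as one L2-normalised VLAD vector."""
    k, dim = len(codebook), len(codebook[0])
    residuals = [[0.0] * dim for _ in range(k)]
    for d in descriptors:
        # Assign the descriptor to its nearest codeword (squared Euclidean distance).
        nearest = min(
            range(k),
            key=lambda i: sum((a - b) ** 2 for a, b in zip(d, codebook[i])),
        )
        # Accumulate the residual (descriptor minus codeword) for that codeword.
        for j in range(dim):
            residuals[nearest][j] += d[j] - codebook[nearest][j]
    # Concatenate the per-codeword residual sums into one vector.
    flat = [v for row in residuals for v in row]
    norm = math.sqrt(sum(v * v for v in flat))
    return [v / norm for v in flat] if norm > 0 else flat

# Toy data (hypothetical): two 2-D codewords and three descriptors.
codebook = [[0.0, 0.0], [10.0, 10.0]]
descriptors = [[1.0, 0.0], [0.0, 1.0], [9.0, 10.0]]
vlad = vlad_encode(descriptors, codebook)
```

The resulting vector has length `k * dim` regardless of how many descriptors a patch produces, which is what lets patches of different sizes share one classifier input format.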

- (2)

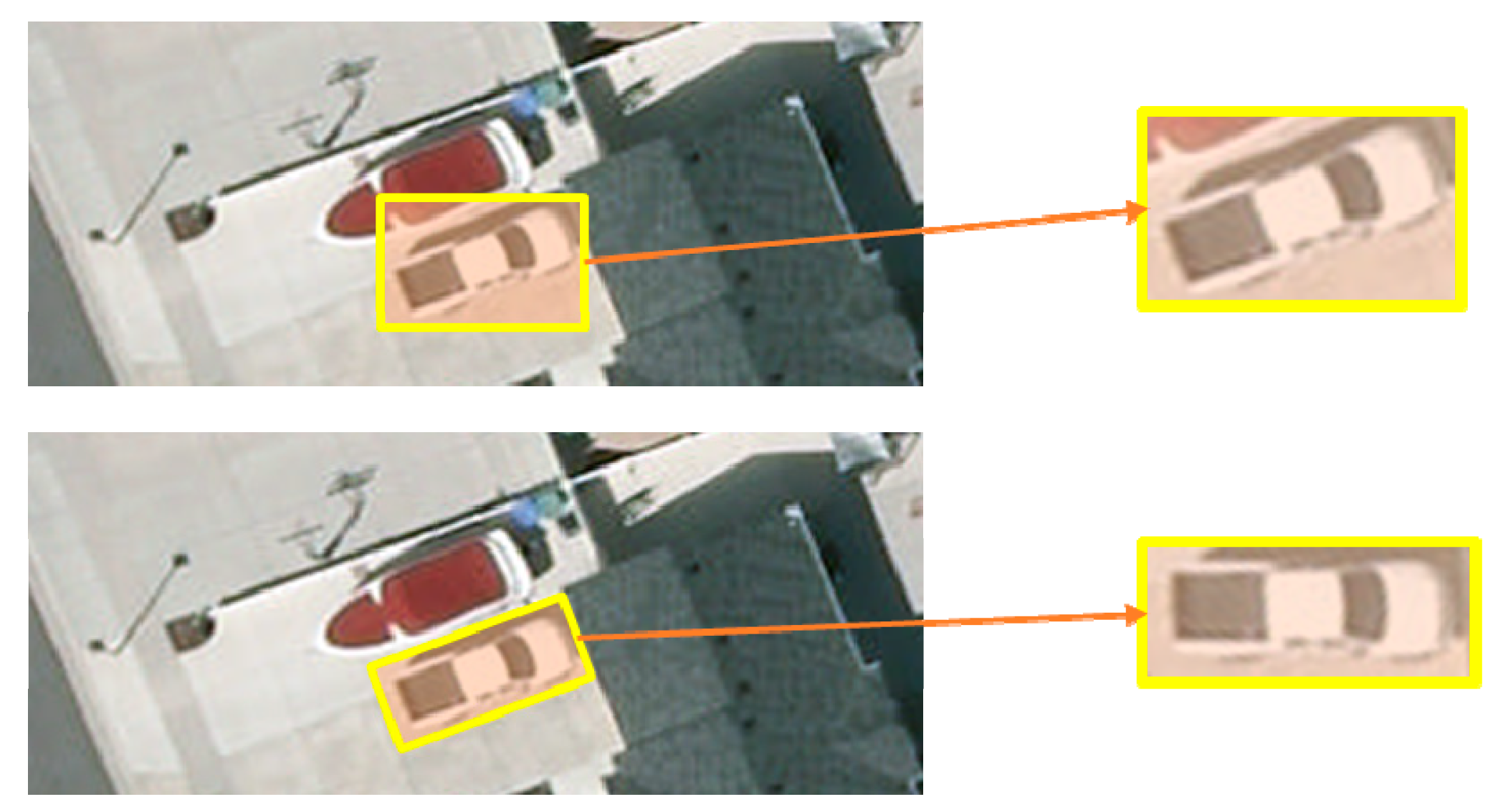

- A Fast Oriented Region Search was first used to generate horizontal bounding box candidates quickly with as few aerial images as possible by applying Edge Boxes NG features re-ranking (EBNR), then calculated the orientations by applying a vehicle orientation estimation. If we simply rotated horizontal bounding boxes to their main directions, the rotated bounding boxes may not be accurately enclosing the objects because the vehicle targets may lie in the rectangular diagonal of boxes. To address this problem, we introduced a fast algorithm to get a point-set of a vehicle targets that could represent its contours. By rotating the points in the set and the corresponding superpixel box, we were able to achieve box refinement. A highlight of this approach is that it only needs to get the superpixel segment graph one time and compute between the bounding boxes. Therefore, this method significantly saves computational time to get oriented proposals efficiently.

- (3) Discriminating the ORoIs is the primary task of the classification stage. We computed the LSK features and encoded them with the codebook built in the training stage. Then, we used the classifiers to recognize the objects in the ORoIs. The classification mechanism was based on ranking scores, and one object was very likely to be surrounded by many oriented bounding boxes. To remove this redundancy and retain the oriented bounding box closest to the ground truth, non-maximum suppression (NMS) was performed. In this way, we could obtain more robust and stable results in some challenging circumstances.
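The suppression step can be illustrated with a greedy NMS sketch. To keep the example short it is deliberately simplified: boxes are axis-aligned `(x1, y1, x2, y2)` tuples, whereas the method above works with oriented boxes, whose overlap would require a rotated-polygon intersection. The function names and the 0.5 overlap threshold are assumptions for illustration only.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best-scoring box, drop boxes that overlap it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep box i only if it does not overlap any already-kept box too strongly.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep

# Toy detections (hypothetical): two overlapping boxes on one object, one elsewhere.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

In this toy case the second box overlaps the first with an IoU of about 0.68, so only the first and third boxes survive.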

3.2. Fast Oriented Region Search

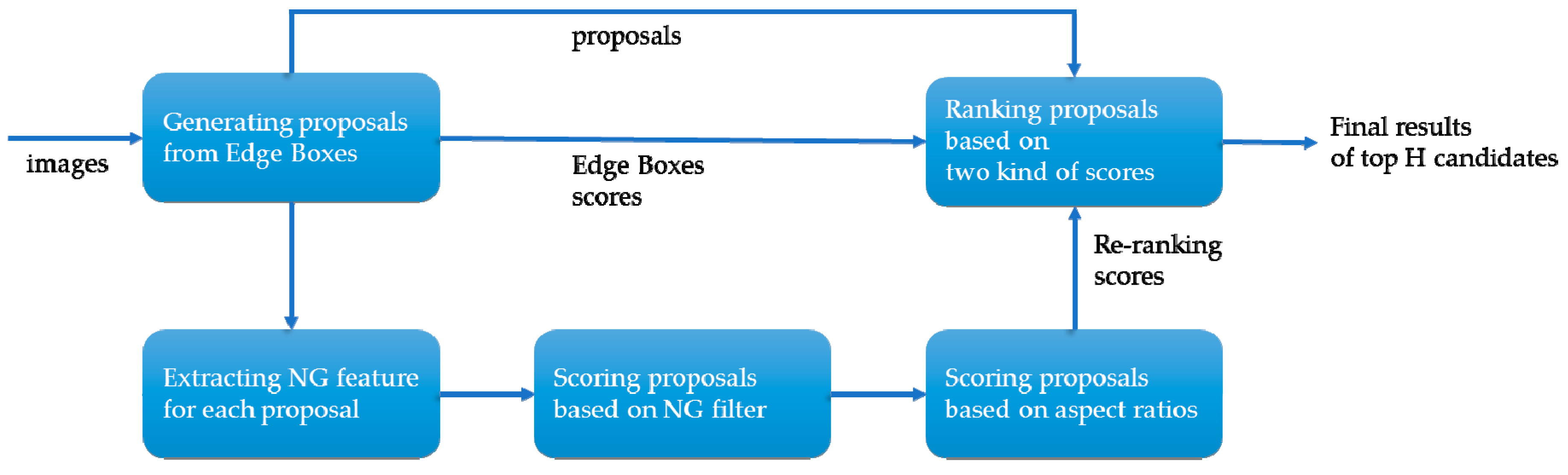

3.2.1. Edge Boxes NG Features Re-Ranking

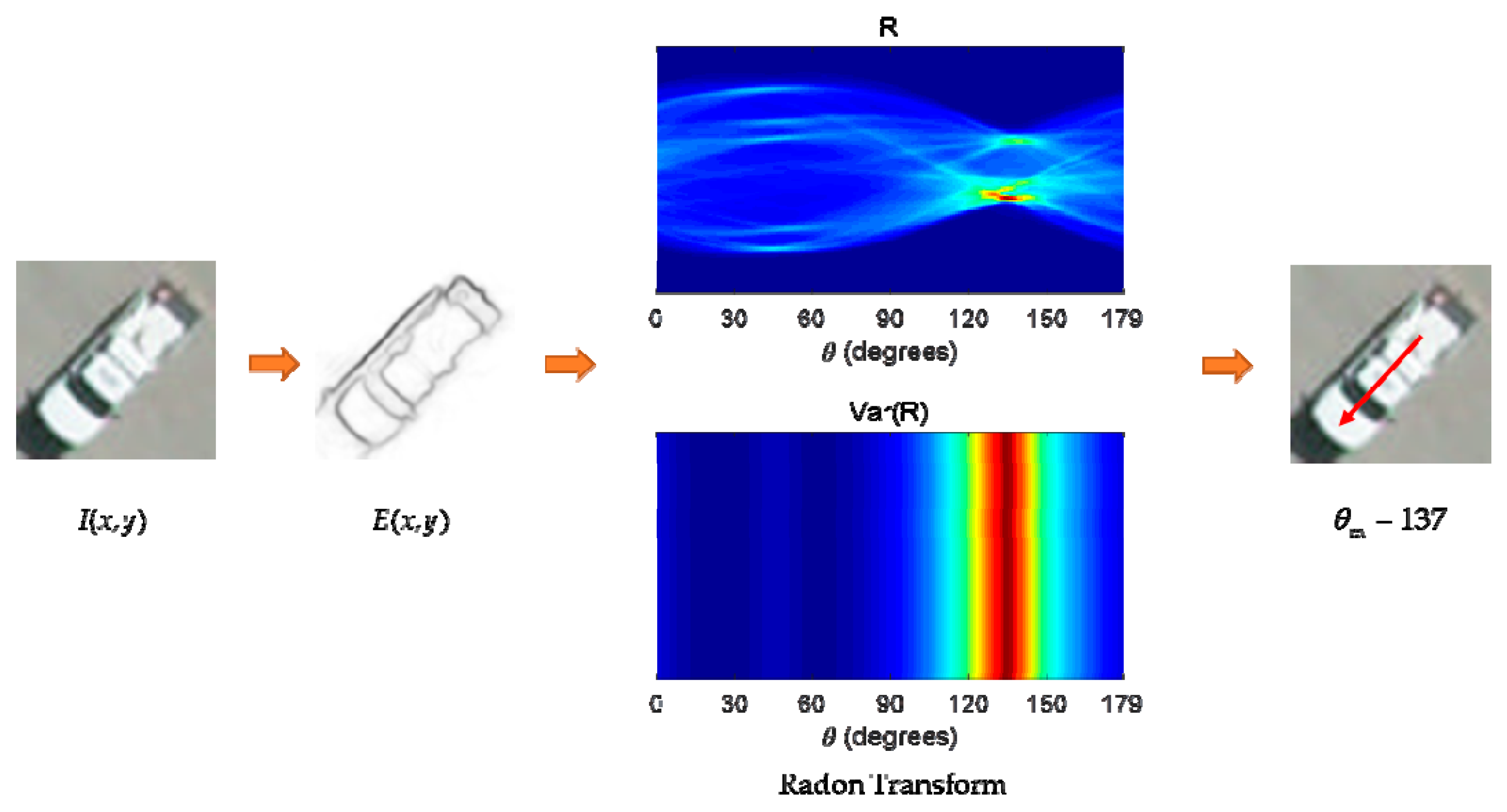

3.2.2. Vehicle Orientation Estimation

3.2.3. Region Symmetrical Refinement
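As a rough illustration of the box refinement outlined in Section 3.1, the sketch below rotates a contour point set into the vehicle's frame, takes the tightest box there, and rotates the corners back. It assumes the orientation `theta` is already known and that contour points are available as `(x, y)` pairs; it does not reproduce the superpixel-based or symmetrical parts of the method, and all names are hypothetical.

```python
import math

def oriented_box(points, theta):
    """Tightest box at orientation theta (radians) enclosing the points; returns 4 corners."""
    c, s = math.cos(theta), math.sin(theta)
    # Rotate the points by -theta so the target box becomes axis-aligned.
    rotated = [(x * c + y * s, -x * s + y * c) for x, y in points]
    xs = [p[0] for p in rotated]
    ys = [p[1] for p in rotated]
    corners = [(min(xs), min(ys)), (max(xs), min(ys)),
               (max(xs), max(ys)), (min(xs), max(ys))]
    # Rotate the corners back by +theta into image coordinates.
    return [(u * c - v * s, u * s + v * c) for u, v in corners]

def side_lengths(corners):
    """Lengths of two adjacent sides of a box given as 4 ordered corners."""
    (x0, y0), (x1, y1), (x2, y2) = corners[0], corners[1], corners[2]
    return math.hypot(x1 - x0, y1 - y0), math.hypot(x2 - x1, y2 - y1)

# Toy contour (hypothetical): a 4 x 2 rectangle rotated by 45 degrees.
theta = math.radians(45)
c, s = math.cos(theta), math.sin(theta)
local = [(-2, -1), (2, -1), (2, 1), (-2, 1)]
contour = [(u * c - v * s, u * s + v * c) for u, v in local]

length, width = side_lengths(oriented_box(contour, theta))

# Area of the horizontal (axis-aligned) box around the same contour, for comparison.
xs = [p[0] for p in contour]
ys = [p[1] for p in contour]
horizontal_area = (max(xs) - min(xs)) * (max(ys) - min(ys))
```

For this toy contour the oriented box has area 8, while the horizontal box around the same points has area 18, which is the kind of slack the overview attributes to vehicles lying along a box diagonal.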

3.3. Feature Definition

3.4. VLAD Representation

3.5. Vehicle Object Category Classifiers
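The introduction states that classification uses a modified DAG SVM trained with negative samples and several vehicle categories. The sketch below shows only the generic decision-DAG evaluation scheme, in which each pairwise test eliminates one candidate class; it does not reproduce the authors' modification. The pairwise rule here is a toy nearest-prototype stand-in for trained binary SVMs, and the class names and prototype values are hypothetical.

```python
def dag_predict(classes, prefers_first, x):
    """Evaluate a decision DAG: each pairwise test rejects one candidate class."""
    candidates = list(classes)
    evaluations = 0
    while len(candidates) > 1:
        a, b = candidates[0], candidates[-1]
        evaluations += 1
        if prefers_first(a, b, x):
            candidates.pop()      # class b is rejected
        else:
            candidates.pop(0)     # class a is rejected
    return candidates[0], evaluations

# Toy stand-in for pairwise SVMs (assumption): 1-D class prototypes.
prototypes = {"background": 0.0, "car": 1.0, "truck": 2.0, "van": 3.0}

def prefers_first(a, b, x):
    """Return True if x is at least as close to class a's prototype as to class b's."""
    return abs(x - prototypes[a]) <= abs(x - prototypes[b])

label, n_tests = dag_predict(list(prototypes), prefers_first, 2.2)
```

With K candidate classes the DAG needs K - 1 pairwise evaluations per sample, so the four-class toy example above uses three tests. Treating background as one more class is one simple way to include negative samples, though it is only an assumption about how the negatives are used.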

4. Experiments and Discussions

4.1. Datasets

4.2. Metric

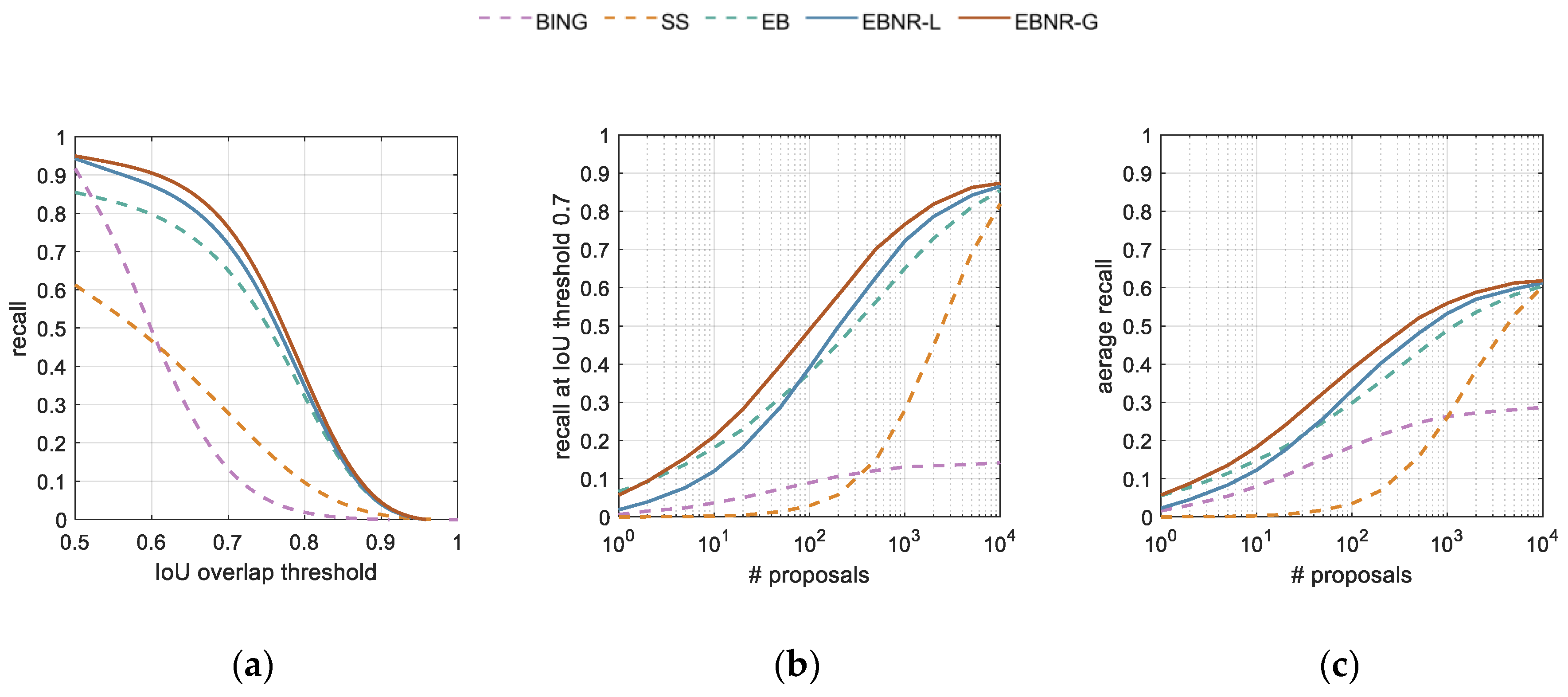

4.3. Proposal Generating Results

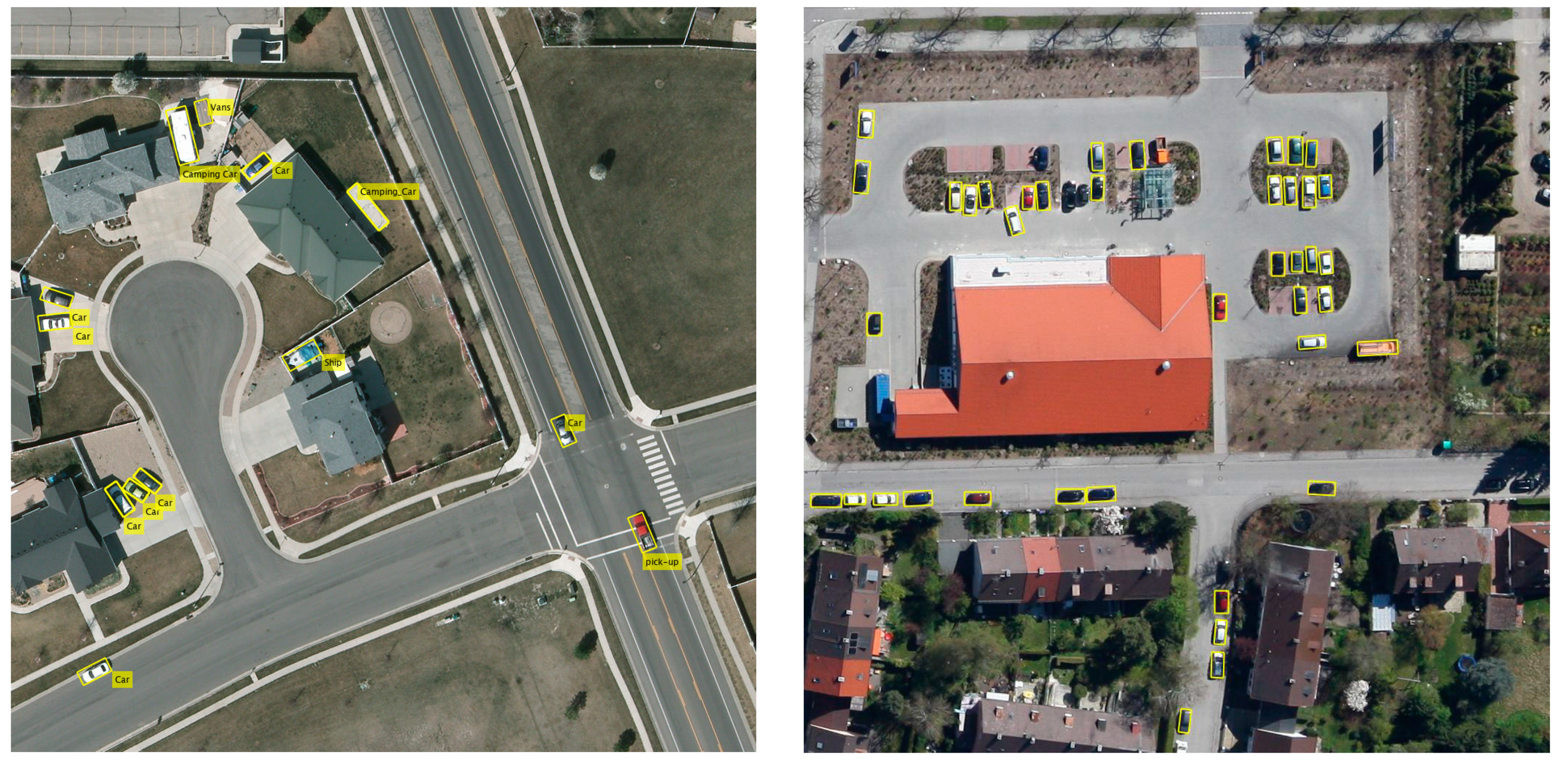

4.4. Vehicle Detection Results

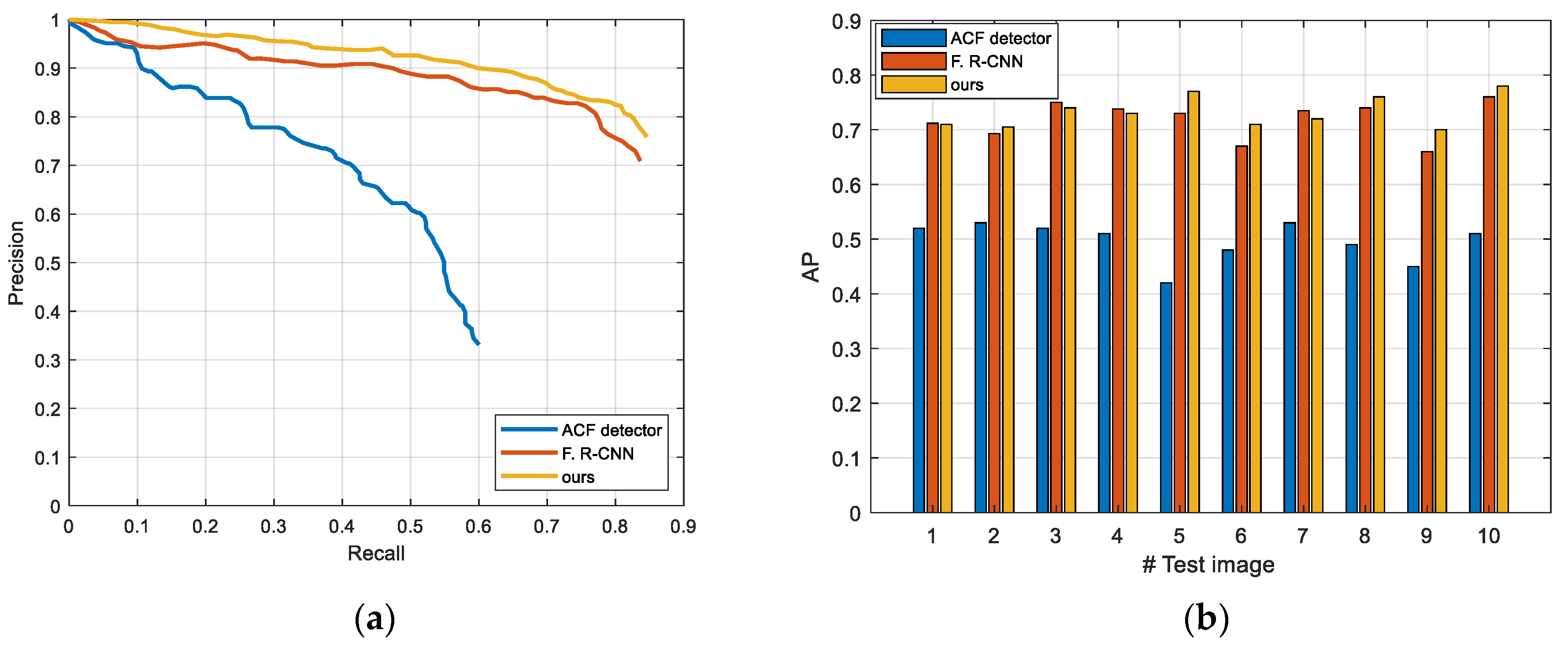

4.4.1. VEDAI Results

4.4.2. Munich 3K Results

4.5. Evaluations and Discussion

4.5.1. Evaluation of Time Complexity

4.5.2. Evaluation of LSK Features

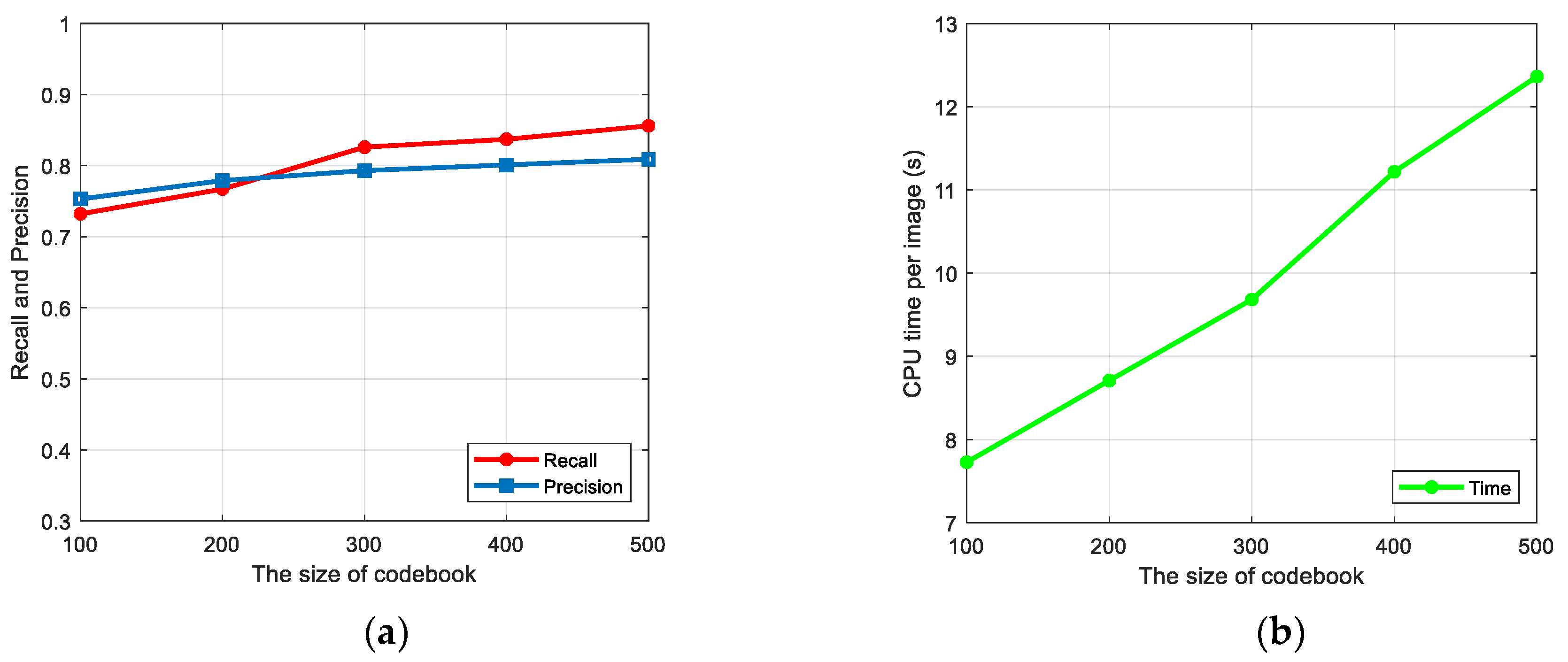

4.5.3. Evaluation of VLAD

4.5.4. Discussion about the Quantitative Examples

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Grabner, H.; Nguyen, T.T.; Gruber, B.; Bischof, H. On-line boosting-based car detection from aerial images. ISPRS J. Photogramm. Remote Sens. 2008, 63, 382–396.

2. Cao, X.; Wu, C.; Lan, J.; Yan, P.; Li, X. Vehicle Detection and Motion Analysis in Low-Altitude Airborne Video Under Urban Environment. IEEE Trans. Circuits Syst. Video Technol. 2011, 21, 1522–1533.

3. Zhao, T.; Nevatia, R. Car detection in low resolution aerial images. Image Vis. Comput. 2003, 21, 693–703.

4. Hinz, S. Integrating Local and Global Features for Vehicle Detection in High Resolution Aerial Imagery. Computer 2003, 34, 119–124.

5. Choi, J.-Y.; Yang, Y.-K. Vehicle Detection from Aerial Images Using Local Shape Information. In Advances in Image and Video Technology; Wada, T., Huang, F., Lin, S., Eds.; LNCS; Springer: Berlin, Heidelberg, Germany, 2009; Volume 5414, pp. 227–236. ISBN 978-3-540-92957-4.

6. Niu, X. A semi-automatic framework for highway extraction and vehicle detection based on a geometric deformable model. ISPRS J. Photogramm. Remote Sens. 2006, 61, 170–186.

7. Kozempel, K.; Reulke, R. Fast Vehicle Detection and Tracking in Aerial Image Bursts. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2009, 38, 175–180.

8. Wei, M.S.; Xing, F.; You, Z. A real-time detection and positioning method for small and weak targets using a 1D morphology-based approach in 2D images. Light Sci. Appl. 2018, 7, 18006–18009.

9. Zheng, Z.; Wang, X.; Zhou, G.; Jiang, L. Vehicle detection based on morphology from highway aerial images. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012; pp. 5997–6000.

10. Zheng, H. Morphological neural network vehicle detection from high resolution satellite imagery. WSEAS Trans. Comput. 2006, 5, 2225–2231.

11. Breckon, T.P.; Barnes, S.E.; Eichner, M.L.; Wahren, K. Autonomous Real-time Vehicle Detection from a Medium Level UAV. In Proceedings of the 24th International Conference on Unmanned Air Vehicle Systems, Bristol, UK, 30 March–1 April 2009; pp. 29.1–29.9.

12. Tuermer, S.; Leitloff, J.; Reinartz, P.; Stilla, U. Automatic Vehicle Detection in Aerial Image Sequences of Urban Areas Using 3D Hog Features. Photogramm. Comput. Vis. Image Anal. 2010, 28, 50–54.

13. Sahli, S.; Ouyang, Y.; Sheng, Y.; Lavigne, D.A. Robust vehicle detection in low-resolution aerial imagery. In Proceedings of The Airborne Intelligence, Surveillance, Reconnaissance (ISR) Systems and Applications VII; Henry, D.J., Ed.; SPIE: Orlando, FL, USA, 2010; Volume 7668, p. 76680G.

14. Leitloff, J.; Hinz, S.; Stilla, U. Vehicle Detection in Very High Resolution Satellite Images of City Areas. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2795–2806.

15. Sharma, G.; Merry, C.J.; Goel, P.; McCord, M. Vehicle detection in 1-m resolution satellite and airborne imagery. Int. J. Remote Sens. 2006, 27, 779–797.

16. Jin, X.; Davis, C.H. Vehicle detection from high-resolution satellite imagery using morphological shared-weight neural networks. Image Vis. Comput. 2007, 25, 1422–1431.

17. Liu, W.; Yamazaki, F.; Vu, T.T. Automated Vehicle Extraction and Speed Determination From QuickBird Satellite Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 4, 75–82.

18. Zhou, H.; Kong, H.; Wei, L.; Creighton, D.; Nahavandi, S. Efficient Road Detection and Tracking for Unmanned Aerial Vehicle. IEEE Trans. Intell. Transp. Syst. 2015, 16, 297–309.

19. Moranduzzo, T.; Melgani, F. A SIFT-SVM method for detecting cars in UAV images. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012; pp. 6868–6871.

20. Moranduzzo, T.; Melgani, F. Automatic Car Counting Method for Unmanned Aerial Vehicle Images. IEEE Trans. Geosci. Remote Sens. 2014, 52, 1635–1647.

21. Moranduzzo, T.; Melgani, F. Detecting Cars in UAV Images With a Catalog-Based Approach. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6356–6367.

22. Luo, H.; Li, J.; Chen, Z.; Wang, C.; Chen, Y.; Cao, L.; Teng, X.; Guan, H.; Wen, C. Vehicle Detection in High-Resolution Aerial Images via Sparse Representation and Superpixels. IEEE Trans. Geosci. Remote Sens. 2015, 54, 103–116.

23. Hosang, J.; Benenson, R.; Dollar, P.; Schiele, B. What Makes for Effective Detection Proposals? IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 814–830.

24. Zitnick, C.L.; Dollár, P. Edge Boxes: Locating Object Proposals from Edges. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); LNCS; Springer: Cham, Switzerland, 2014; Volume 8693, pp. 391–405. ISBN 978-3-319-10601-4.

25. Cheng, M.M.; Zhang, Z.; Lin, W.Y.; Torr, P. BING: Binarized normed gradients for objectness estimation at 300fps. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3286–3293.

26. Uijlings, J.R.R.; van de Sande, K.E.A.; Gevers, T.; Smeulders, A.W.M. Selective Search for Object Recognition. Int. J. Comput. Vis. 2013, 104, 154–171.

27. Zhou, H.; Wei, L.; Creighton, D.; Nahavandi, S. Orientation aware vehicle detection in aerial images. Electron. Lett. 2017, 53, 1406–1408.

28. Chen, X.; Ma, H.; Zhu, C.; Wang, X.; Zhao, Z. Boundary-aware box refinement for object proposal generation. Neurocomputing 2017, 219, 323–332.

29. Felzenszwalb, P.F.; Huttenlocher, D.P. Efficient Graph-Based Image Segmentation. Int. J. Comput. Vis. 2004, 59, 167–181.

30. Takeda, H.; Farsiu, S.; Milanfar, P. Kernel regression for image processing and reconstruction. IEEE Trans. Image Process. 2007, 16, 349–366.

31. Seo, H.J.; Milanfar, P. Training-free, generic object detection using locally adaptive regression kernels. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1688–1704.

32. Jegou, H.; Douze, M.; Schmid, C.; Perez, P. Aggregating local descriptors into a compact image representation. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 3304–3311.

33. Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. Soft-NMS-Improving Object Detection with One Line of Code. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5562–5570.

34. Razakarivony, S.; Jurie, F. Vehicle detection in aerial imagery: A small target detection benchmark. J. Vis. Commun. Image Represent. 2016, 34, 187–203.

35. Leitloff, J.; Rosenbaum, D.; Kurz, F.; Meynberg, O.; Reinartz, P. An operational system for estimating road traffic information from aerial images. Remote Sens. 2014, 6, 11315–11341.

36. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

37. Tang, T.; Zhou, S.; Deng, Z.; Zou, H.; Lei, L. Vehicle detection in aerial images based on region convolutional neural networks and hard negative example mining. Sensors 2017, 17, 336.

38. Liu, K.; Mattyus, G. Fast Multiclass Vehicle Detection on Aerial Images. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1938–1942.

39. Ammour, N.; Alhichri, H.; Bazi, Y.; Benjdira, B.; Alajlan, N.; Zuair, M. Deep learning approach for car detection in UAV imagery. Remote Sens. 2017, 9, 312.

In the column headers below, the number in parentheses is the number of proposals.

| Method | AR (500) | 70%-Recall (500) | ABO (500) | AR (1000) | 70%-Recall (1000) | ABO (1000) | AR (2000) | 70%-Recall (2000) | ABO (2000) | Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|
| BING | 24.7 | 12.2 | 59.7 | 26.3 | 13.1 | 60.7 | 27.2 | 13.4 | 61.3 | 0.07 |
| SS | 15.7 | 15.0 | 43.4 | 26.1 | 27.9 | 56.6 | 38.4 | 44.8 | 66.6 | 19 |
| EB | 43.2 | 56.3 | 67.0 | 48.9 | 65.0 | 71.5 | 53.6 | 72.9 | 74.7 | 0.3 |
| EBNR-L | 48.1 | 62.8 | 72.5 | 53.3 | 72.2 | 75.3 | 57.0 | 78.6 | 77.0 | 0.36 |
| EBNR-G | 52.1 | 70.2 | 73.9 | 55.9 | 76.6 | 76.0 | 58.8 | 81.9 | 77.4 | 0.36 |

| Method | Boa | Cam | Car | Pic | Tra | Tru | Van |
|---|---|---|---|---|---|---|---|
| SVM + HOG | 32.2 | 33.4 | 55.4 | 48.6 | 7.4 | 32.5 | 40.6 |
| DPM | 26.1 | 41.9 | 60.5 | 52.3 | 33.8 | 34.3 | 36.3 |
| F. R-CNN | 66.2 | 72.7 | 77.7 | 74.8 | 54.4 | 66.7 | 69.9 |
| Our Model | 60.3 | 74.5 | 79.7 | 77.6 | 39.5 | 69.7 | 72.4 |

| Method | GT | TP | FP | Recall | Precision |
|---|---|---|---|---|---|
| ACF detector | 5892 | 3078 | 2143 | 52.2% | 58.9% |
| F. R-CNN | 5892 | 4487 | 976 | 76.2% | 82.1% |
| Our Model | 5892 | 4719 | 1006 | 80.1% | 82.4% |
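The recall and precision columns above appear to follow from the GT, TP, and FP counts. The sketch below assumes the standard definitions, recall = TP / GT and precision = TP / (TP + FP). That assumption is inferred from the numbers rather than stated in the table, and the function names are illustrative.

```python
def recall(tp, gt):
    """Fraction of ground-truth objects that were detected."""
    return tp / gt

def precision(tp, fp):
    """Fraction of detections that are correct."""
    return tp / (tp + fp)

# (GT, TP, FP) counts copied from the table above.
rows = {
    "ACF detector": (5892, 3078, 2143),
    "F. R-CNN": (5892, 4487, 976),
    "Our Model": (5892, 4719, 1006),
}

# Percentages rounded to one decimal place, as (recall, precision) pairs.
results = {
    name: (round(100 * recall(tp, gt), 1), round(100 * precision(tp, fp), 1))
    for name, (gt, tp, fp) in rows.items()
}
```

Under these definitions the F. R-CNN and Our Model rows reproduce the tabulated percentages exactly. The ACF detector's precision comes out at about 58.95%, which matches the tabulated 58.9% to within rounding.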

| Method | Recall | Precision | Time Per Image (CPU) | Programming Language |
|---|---|---|---|---|
| Moranduzzo [21] | 65.8% | 53.1% | 44.8 s | Matlab |
| Ammour [39] | 79.4% | 80.8% | 64.6 s | N/A |
| Our Method | 82.6% | 79.3% | 10.3 s | C++ & Matlab |

| Method | Recall | Precision |
|---|---|---|
| LSK | 71.7% | 67.1% |
| LSK + BoW | 77.4% | 72.8% |
| LSK + VLAD | 82.6% | 79.3% |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, C.; Ding, Y.; Zhu, M.; Xiu, J.; Li, M.; Li, Q. Vehicle Detection in Aerial Images Using a Fast Oriented Region Search and the Vector of Locally Aggregated Descriptors. Sensors 2019, 19, 3294. https://doi.org/10.3390/s19153294
